Context Firewall

In today's digital landscape, the importance of data security cannot be overstated. Organizations across various sectors are constantly striving to protect sensitive information from malicious actors. One key element in this endeavor is the implementation of context firewalls.

In this blog post, we will delve into the concept of context firewalls, their benefits, their challenges, and how businesses can use this security measure effectively.

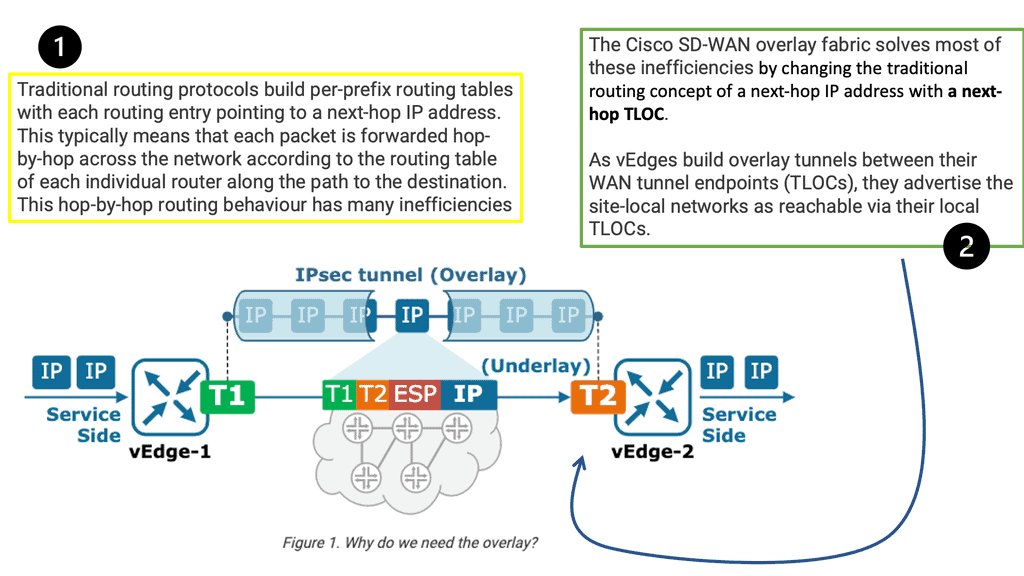

A context firewall is a sophisticated cybersecurity measure that goes beyond traditional firewalls. While traditional firewalls focus on blocking specific network ports or IP addresses, context firewalls take into account the context and content of network traffic. They analyze the data flow, examining the behavior and intent behind network requests, ensuring a more comprehensive security approach.

Context firewalls play a crucial role in enhancing digital security by providing advanced threat detection and prevention capabilities. By examining the context and content of network traffic, they can identify and block malicious activities, including data exfiltration attempts, unauthorized access, and insider attacks. This proactive approach helps defend against both known and unknown threats, adding an extra layer of protection to your digital assets.

The advantages of context firewalls are multi-fold. Firstly, they enable granular control over network traffic, allowing administrators to define specific policies based on context. This ensures that only legitimate and authorized activities are allowed, reducing the risk of unauthorized access or data breaches.

Secondly, context firewalls provide real-time visibility into network traffic, empowering security teams to identify and respond swiftly to potential threats. Lastly, these firewalls offer advanced analytics and reporting capabilities, aiding in compliance efforts and providing valuable insights into network behavior.

Matt Conran

Highlights: Context Firewall

The Role of Firewalling

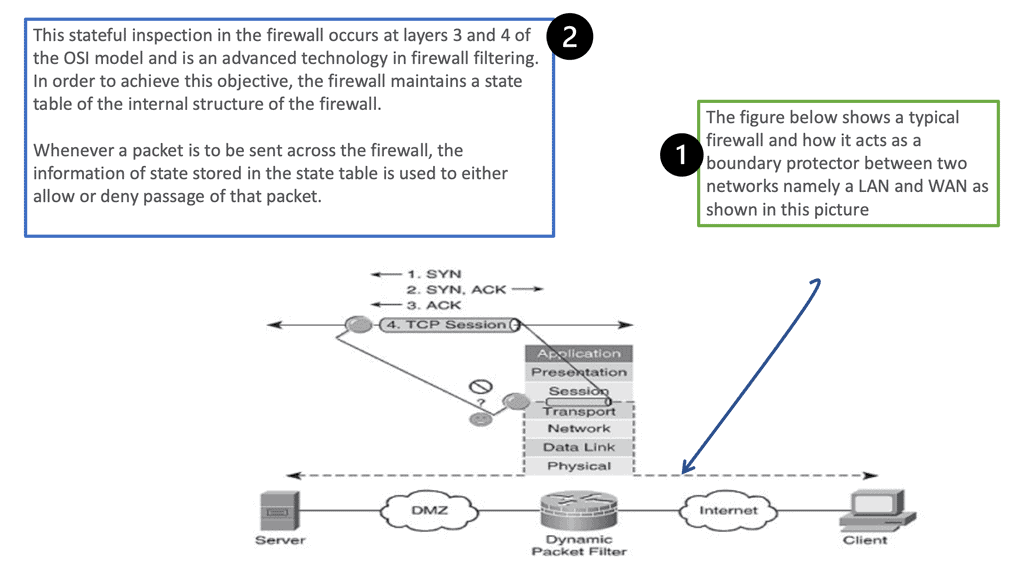

Firewalls protect inside networks from unauthorized access from outside networks. Firewalls can also separate inside networks from one another, for example, by keeping a human resources network apart from user networks.

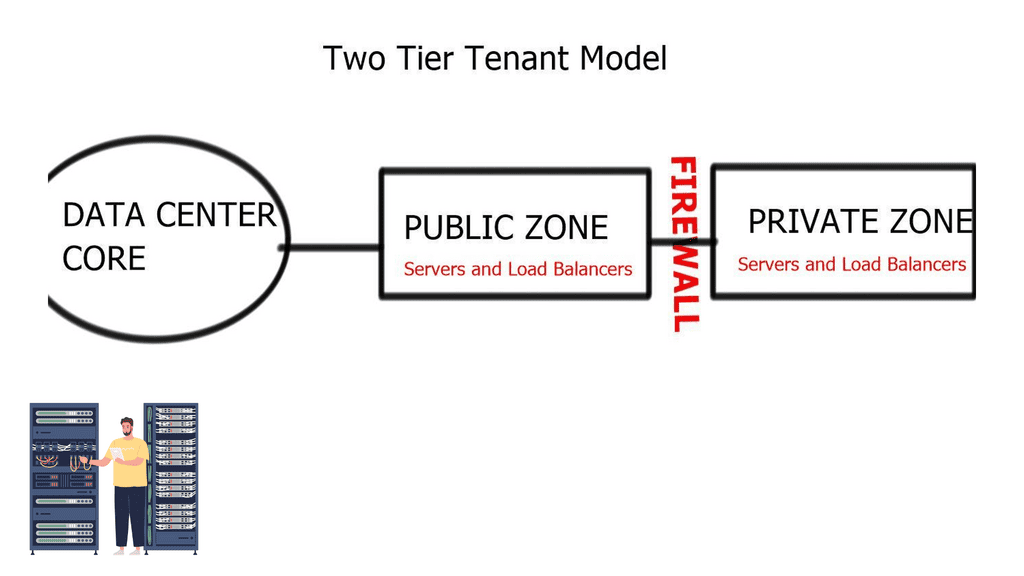

Demilitarized zones (DMZs) are networks behind firewalls that allow outside users to access network resources such as web or FTP servers. A firewall only allows limited access to the DMZ, but since the DMZ only contains the public servers, an attack there will only affect the servers and not the rest of the network.

Additionally, you can restrict access to outside networks (for example, the Internet) by allowing only specific addresses out, requiring authentication, or coordinating with an external URL filtering server.

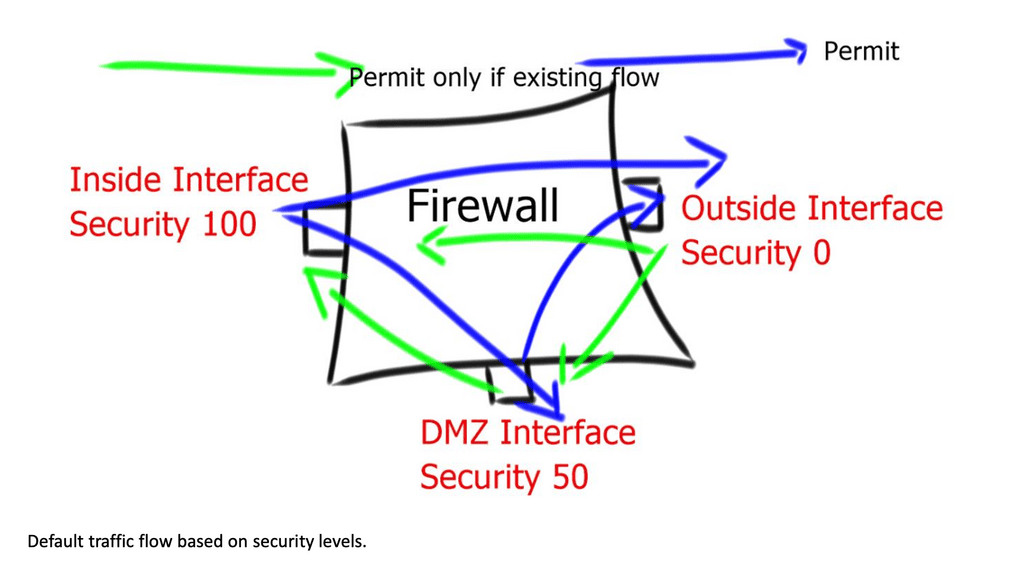

Three types of networks are connected to a firewall: the outside network, the inside network, and a DMZ, which permits limited access to outside users. These terms are used in a general sense because the security appliance can be configured with many interfaces that have different security policies, including many inside interfaces, many DMZs, and even many outside interfaces.

Understanding Multi-Context Firewalls

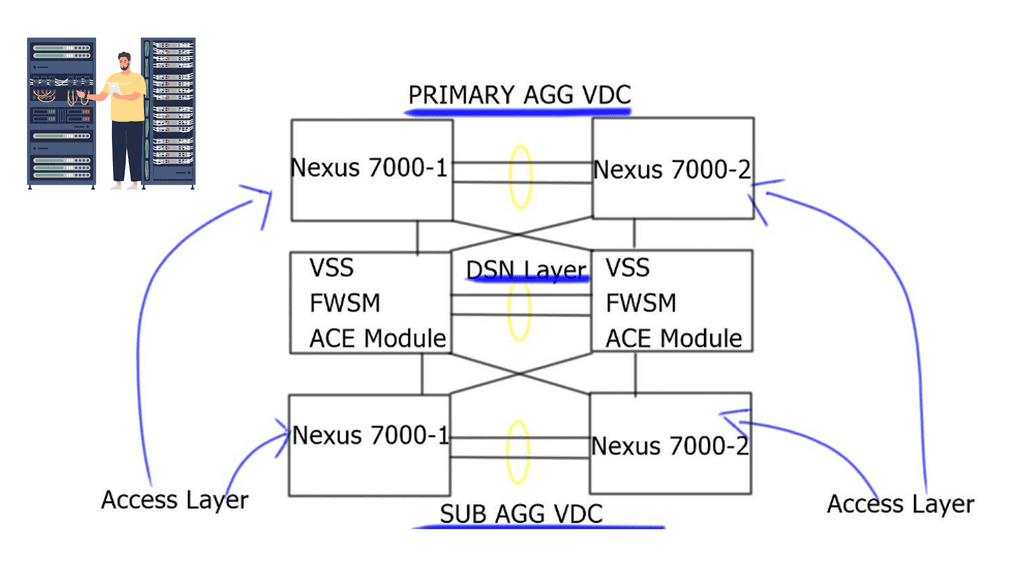

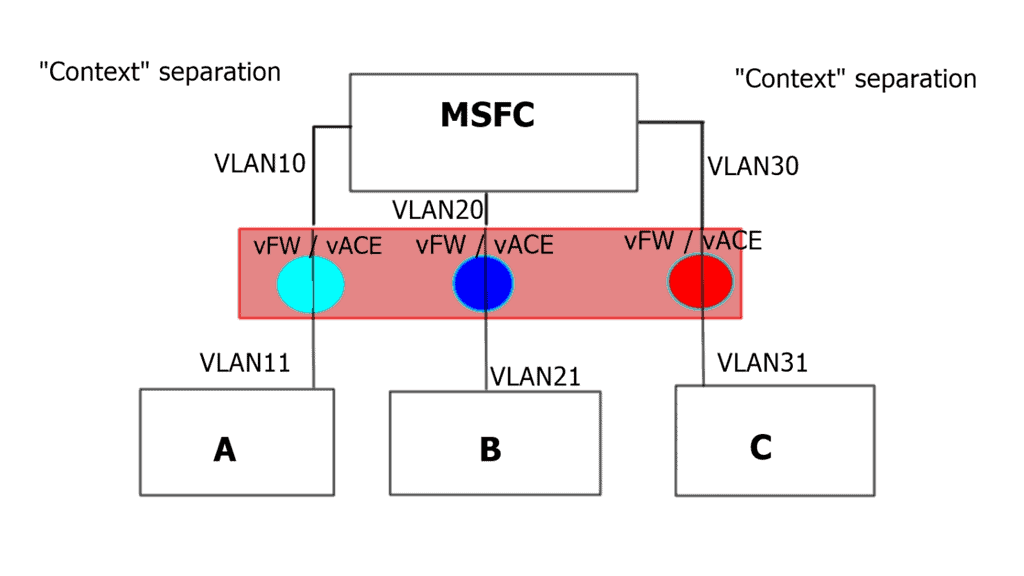

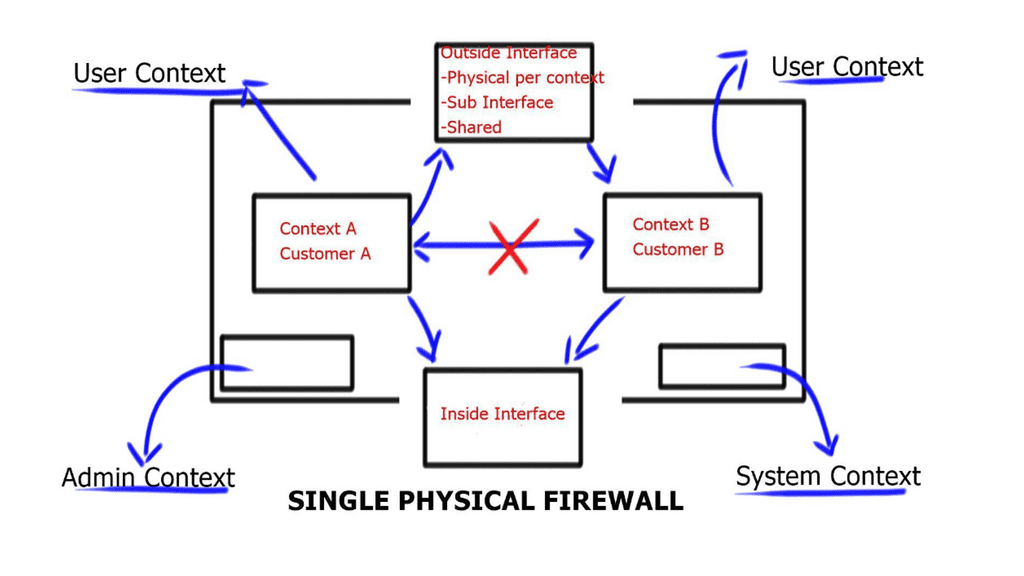

A multi-context firewall is a security device that creates multiple virtual firewalls within a single physical firewall appliance. Each virtual firewall, known as a context, operates independently, with its own security policies, interfaces, and routing tables. This segregation enables organizations to consolidate their network security infrastructure while maintaining strong isolation between network segments.

By creating separate contexts for different departments, business units, or even customers, organizations can ensure that traffic flows are strictly controlled and isolated. This segmentation limits lateral movement in case of a breach, reducing the potential impact on the rest of the network.

Security Context

By partitioning a single security appliance, you can create multiple security contexts. Each context has its own security policy, interfaces, and administrators, so having multiple contexts is similar to having multiple standalone devices. Multiple context mode supports routing tables, firewall features, intrusion prevention systems, and management. In older ASA software releases, dynamic routing protocols and VPNs are not supported in multiple context mode; later releases added support for some of them, so check the feature support for your version.

Context Firewall Operation

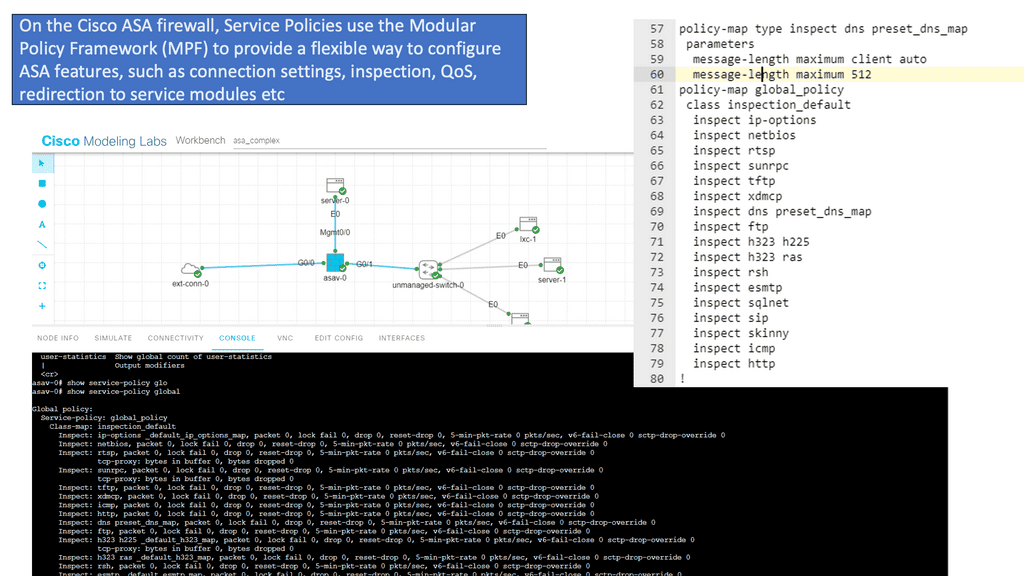

A context firewall is a security system designed to protect a computer network from malicious attacks. It blocks, monitors, and filters network traffic based on predetermined rules. Multiple context mode divides a Cisco Adaptive Security Appliance (ASA) into multiple logical devices, known as security contexts.

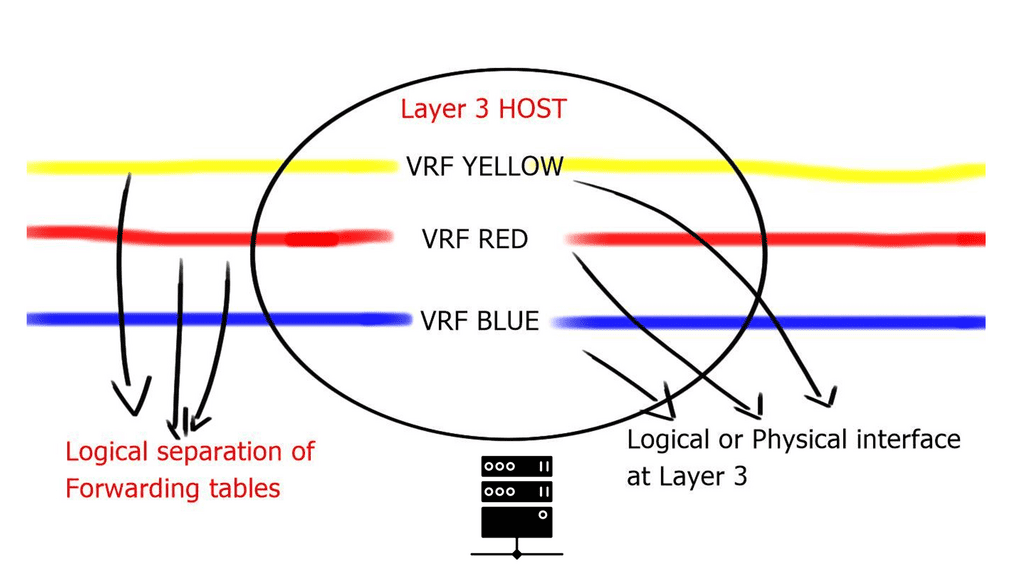

Each security context acts like a separate device and operates independently of the others. It has its own security policies and interfaces, similar to Virtual Routing and Forwarding (VRF) instances on routers. In effect, each context acts as a virtual firewall. The context firewall offers independent data planes (one for each security context), but a single control plane controls all of the individual contexts.

Use Cases

Typical use cases include large enterprises that require additional ASAs, and hosting environments where service providers sell security services (a managed firewall service) to many customers, with one context per customer. In summary, the ASA is a stateful inspection firewall that supports software virtualization using firewall contexts. Every context has its own routing, filtering/inspection, and address translation rules, and can have IPS sensors assigned to it.

When would you use multiple security contexts?

- Your network requires more than one ASA, but you have only one physical ASA and need additional firewall instances.

- You are a large service provider offering security services and must provide each customer with its own security context.

- An enterprise must apply distinct security policies for each department or user group, each in its own security context. This may be required for compliance and regulatory reasons.

Related: Before you proceed, you may find the following posts helpful:

- Virtual Device Context

- Virtual Data Center Design

- Distributed Firewalls

- ASA Failover

- OpenShift Security Best Practices

- Network Configuration Automation


Back to Basics: The firewall

Highlighting the firewall

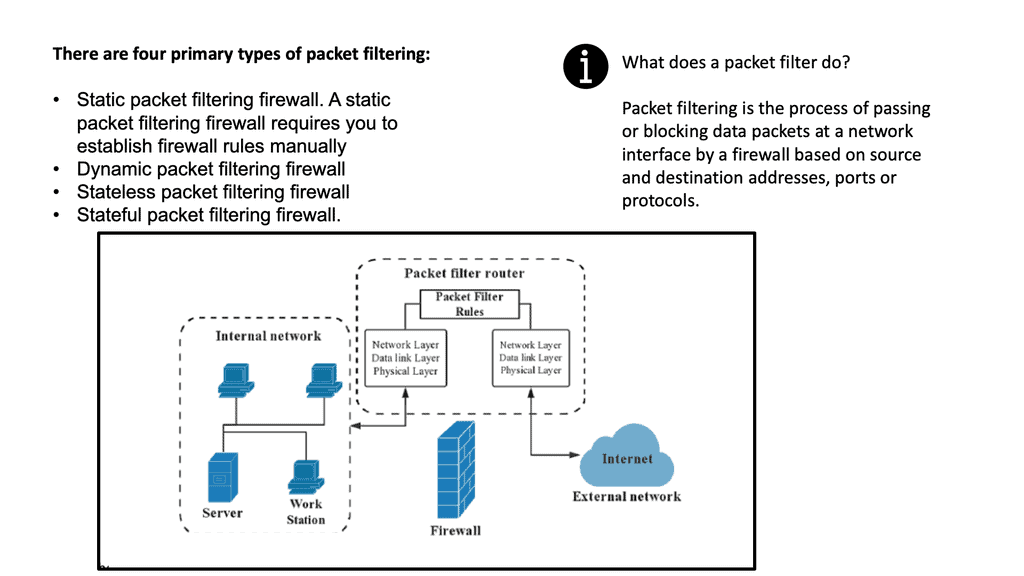

A firewall is a hardware or software (virtual firewall) filtering device that implements a network security policy and protects the network against external attacks. A packet is a unit of information routed from one point to another over the network. The packet header contains a wealth of data, such as the source and destination addresses, protocol type, and packet size. Because the firewall acts as a filtering device, it watches for traffic that fails to comply with its rules by examining the contents of the packet header.

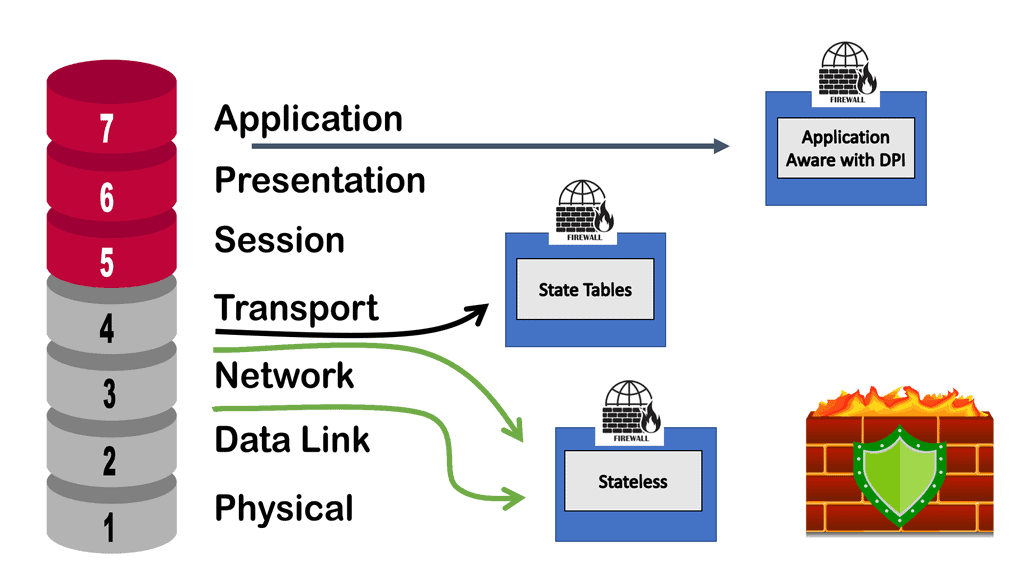

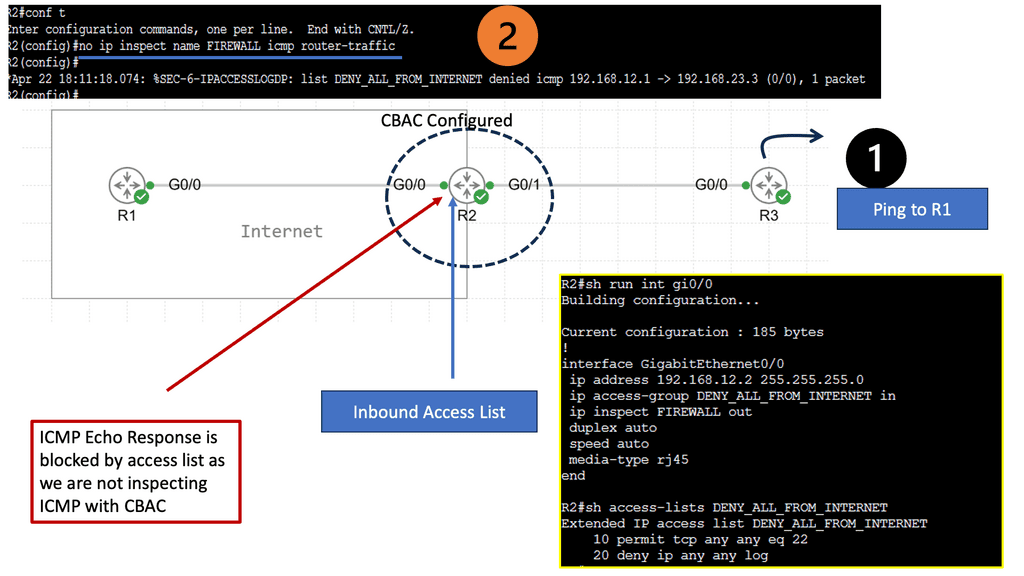

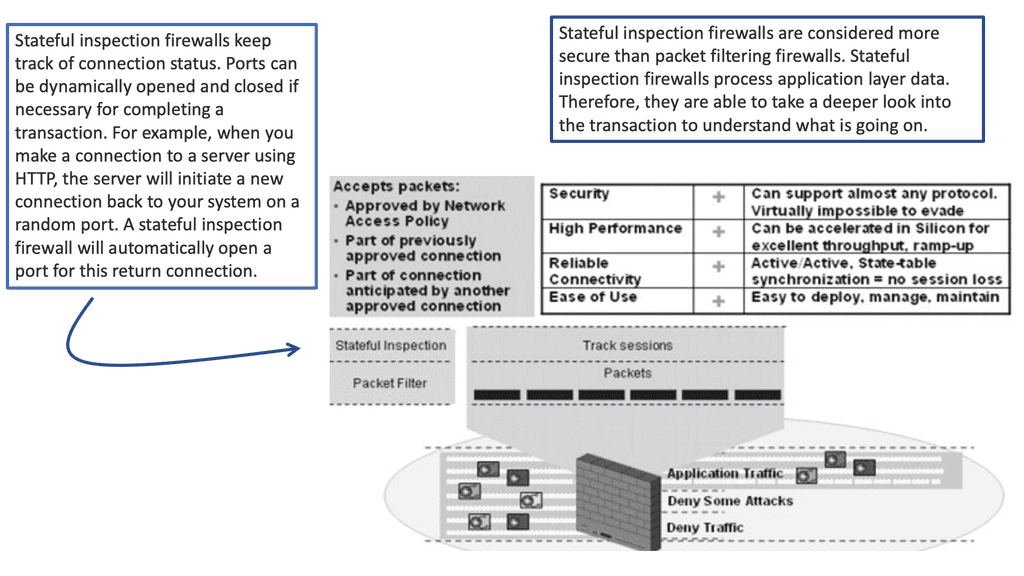

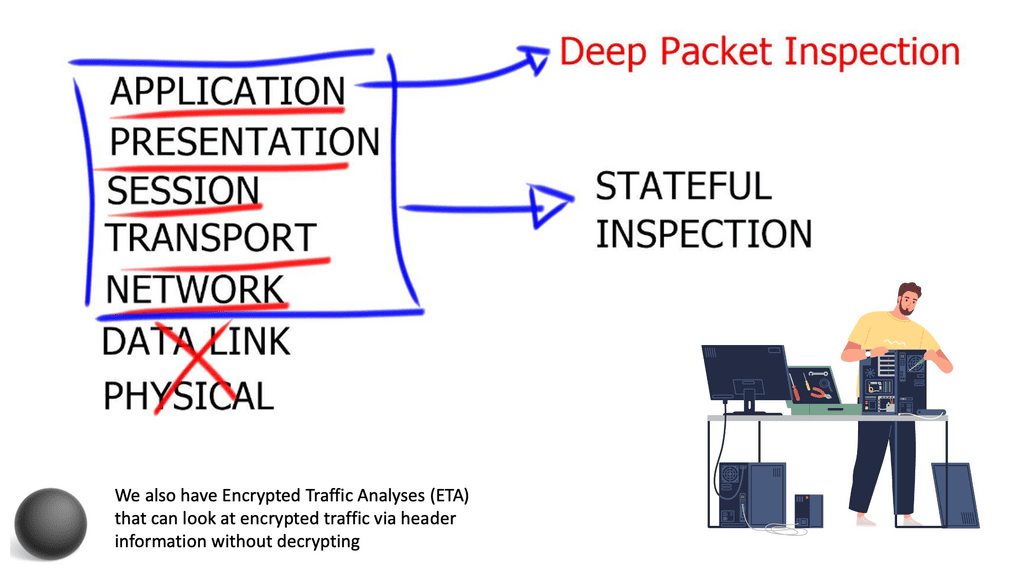

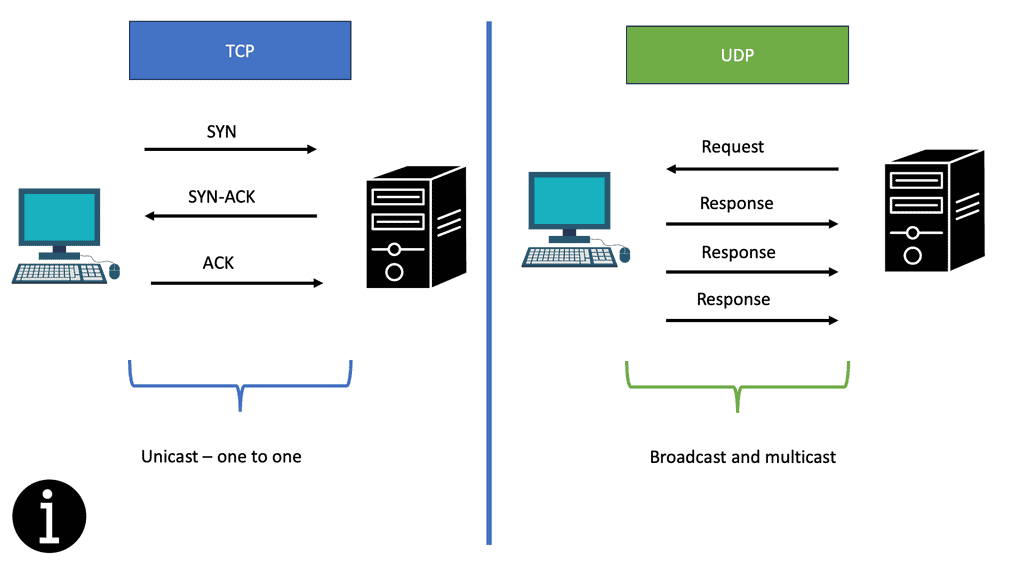

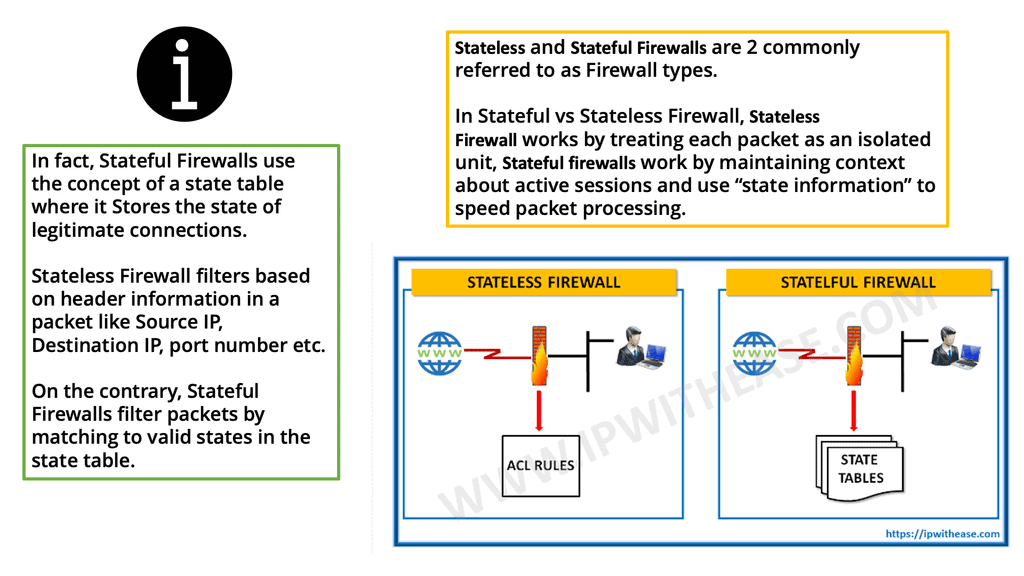

Firewalls can concentrate on the packet header, the packet payload, or both, and possibly other attributes, depending on the firewall type. Most firewalls focus on only one of these. The most common filtering focus is the packet’s header, with the packet’s payload a close second. The following diagram shows the two main firewall categories: stateless and stateful firewalls.

A stateful firewall is a type of firewall technology that helps protect network security. It keeps track of the state of network connections and allows or denies traffic based on predetermined rules. Stateful firewalls inspect incoming and outgoing data packets and can detect malicious traffic, such as packets that do not belong to any established connection. Some can also learn which traffic is regular for a particular environment and block traffic that does not conform to expected patterns.

A stateless firewall is a network security device that monitors and controls incoming and outgoing network traffic based on predetermined security rules. It does this without keeping any record or “state” of past or current network connections. Controlling traffic based on source and destination IP addresses, ports, and protocols can also prevent unauthorized access to the network.

Stateful vs. Stateless Firewall

Stateful Firewall:

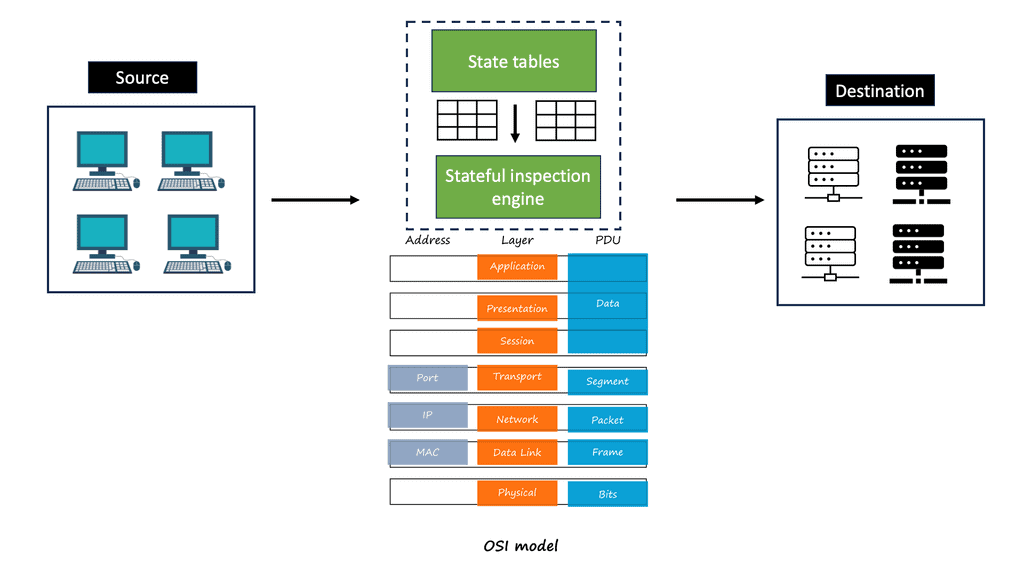

A stateful firewall, also known as a dynamic packet filtering firewall, operates mainly at the OSI model’s network and transport layers (Layers 3 and 4). Unlike stateless firewalls, which inspect individual packets in isolation, stateful firewalls maintain knowledge of the connection state and context of network traffic. This means that stateful firewalls make decisions based not only on the characteristics of individual packets but also on the history of previous packets exchanged within a session.

How Stateful Firewalls Work:

Stateful firewalls keep track of the state of network connections by creating a state table, also known as a stateful inspection table. This table stores information about established connections, including the source and destination IP addresses, port numbers, sequence numbers, and other relevant data. By comparing incoming packets against the information in the state table, stateful firewalls can determine whether a packet is part of an established session or a new connection attempt.
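The state-table lookup described above can be sketched in a few lines of Python. This is a toy model under stated assumptions: a connection is identified only by its 5-tuple, only inside hosts may open connections, and TCP flags, sequence numbers, and timeouts are ignored. The `Packet` and `StatefulFilter` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # e.g. "tcp" or "udp"

    def reply_direction(self) -> "Packet":
        # The same connection seen from the other direction.
        return Packet(self.dst_ip, self.dst_port, self.src_ip, self.src_port, self.protocol)


class StatefulFilter:
    """Toy stateful filter (hypothetical): inside hosts may open connections,
    and traffic from outside is allowed only if it belongs to a tracked one."""

    def __init__(self) -> None:
        self.state_table: set = set()  # 5-tuples of connections opened from inside

    def outbound(self, pkt: Packet) -> bool:
        # A new connection from the inside is recorded in the state table.
        self.state_table.add(pkt)
        return True

    def inbound(self, pkt: Packet) -> bool:
        # Permit only the reply direction of a connection already in the table.
        return pkt.reply_direction() in self.state_table
```

Reply traffic is permitted only because the outbound request created a table entry; an unsolicited packet from outside finds no matching entry and is dropped.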

Advantages of Stateful Firewalls:

1. Enhanced Security: Stateful firewalls offer a higher level of security by understanding the context and state of network traffic. This enables them to detect and block suspicious or unauthorized activities more effectively.

2. Better Performance: Once a connection is recorded in the state table, later packets in that session can be matched against the table entry rather than evaluated against the full rule set. This speeds up the handling of established traffic.

3. Granular Control: Stateful firewalls provide administrators with fine-grained control over network traffic by allowing them to define rules based on network states, such as allowing or blocking specific types of connections.

Stateless Firewall:

Stateless firewalls, also known as packet filtering firewalls, operate at the network and transport layers (Layers 3 and 4). In contrast to stateful firewalls, they examine individual packets based on predefined rules and criteria without considering the context or history of the network connections.

How Stateless Firewalls Work:

Stateless firewalls analyze incoming packets based on criteria such as source and destination IP addresses, port numbers, and protocol types. Each packet is evaluated independently, without referencing the packets before or after. If a packet matches a rule in the firewall’s rule set, it is allowed or denied based on the specified action.
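The per-packet rule matching described above can be sketched as an ordered rule list with an implicit deny at the end. The rule fields, the first-match order, and the `Rule`/`evaluate` names are assumptions for illustration, not any particular product's syntax.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    action: str              # "permit" or "deny"
    src: str                 # source network in CIDR notation
    dst: str                 # destination network in CIDR notation
    protocol: str            # "tcp", "udp", or "any"
    dst_port: Optional[int]  # None matches any destination port


def matches(rule: Rule, src_ip: str, dst_ip: str, protocol: str, dst_port: int) -> bool:
    # Only header fields are compared; nothing about earlier packets is consulted.
    return (
        ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.src)
        and ipaddress.ip_address(dst_ip) in ipaddress.ip_network(rule.dst)
        and rule.protocol in ("any", protocol)
        and rule.dst_port in (None, dst_port)
    )


def evaluate(rules: List[Rule], src_ip: str, dst_ip: str, protocol: str, dst_port: int) -> str:
    for rule in rules:
        if matches(rule, src_ip, dst_ip, protocol, dst_port):
            return rule.action  # the first matching rule decides
    return "deny"               # implicit deny when nothing matches
```

Because no state is kept, a stateless filter also needs explicit rules to permit return traffic.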

Advantages of Stateless Firewalls:

1. Simplicity: Stateless firewalls are relatively simple in design and operation, making them easy to configure and manage.

2. Speed: Stateless firewalls can process packets quickly since they do not require the overhead of maintaining a state table or inspecting packet history.

3. Scalability: Stateless firewalls are highly scalable as they do not store any connection-related information. This allows them to handle high traffic volumes efficiently.

Next-generation Firewalls

Next-generation firewalls (NGFWs) carry out the most intelligent filtering. They are a type of advanced cybersecurity solution designed to protect networks and systems from malicious threats.

They provide an extra layer of protection beyond traditional firewalls by incorporating features such as deep packet inspection, application control, intrusion prevention, and malware protection. With deep packet inspection, NGFWs analyze the contents of network traffic and observe traffic patterns.

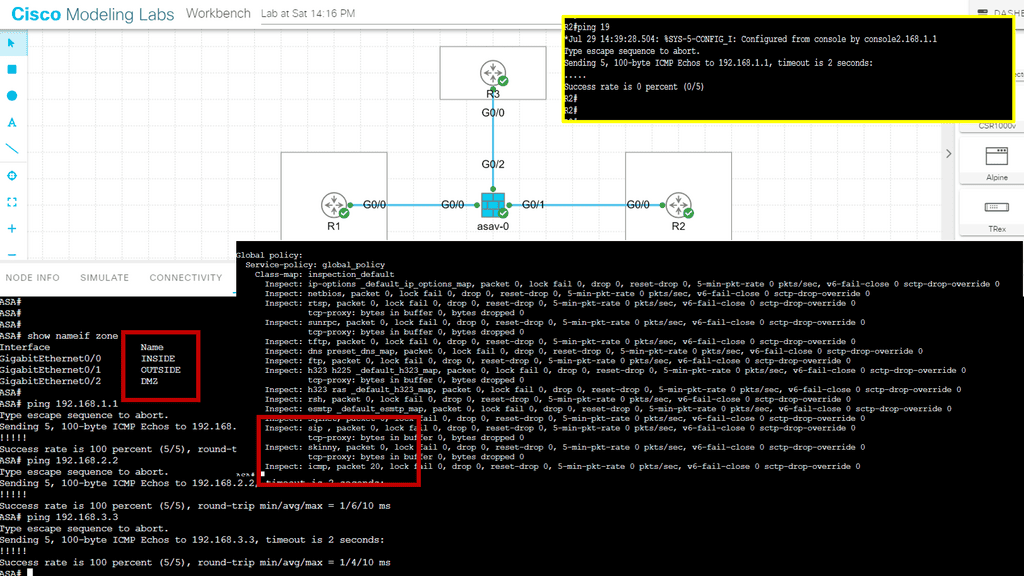

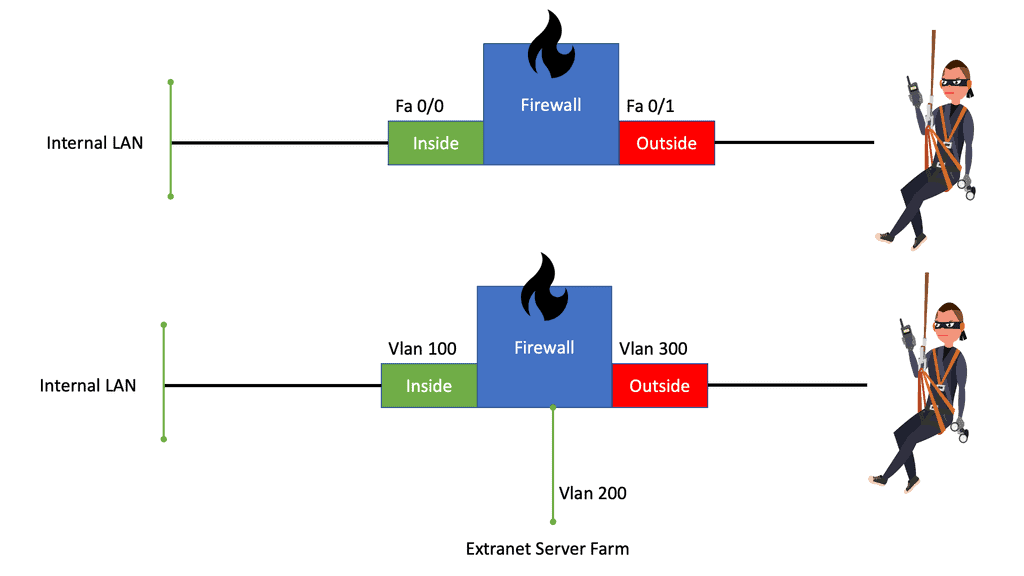

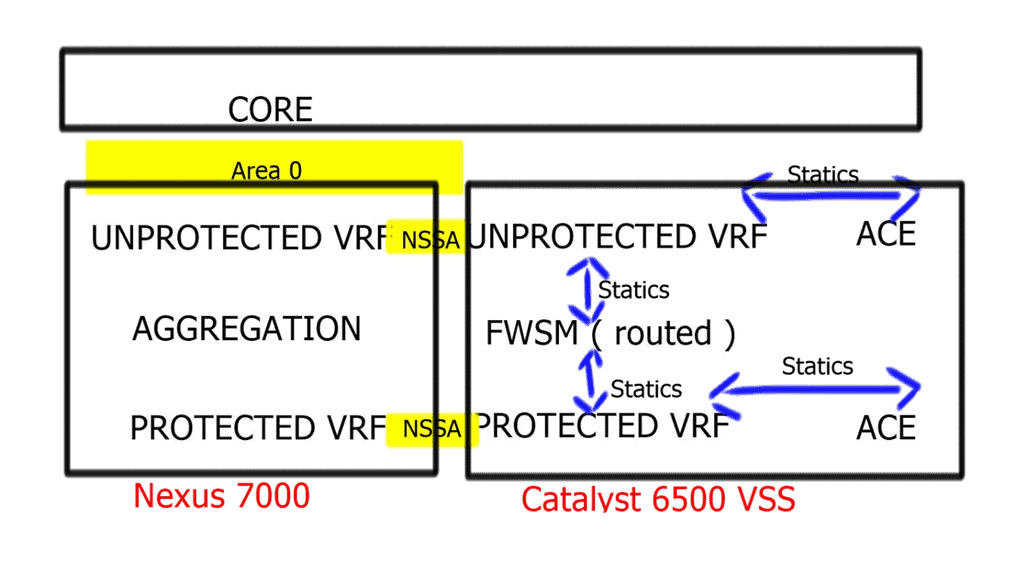

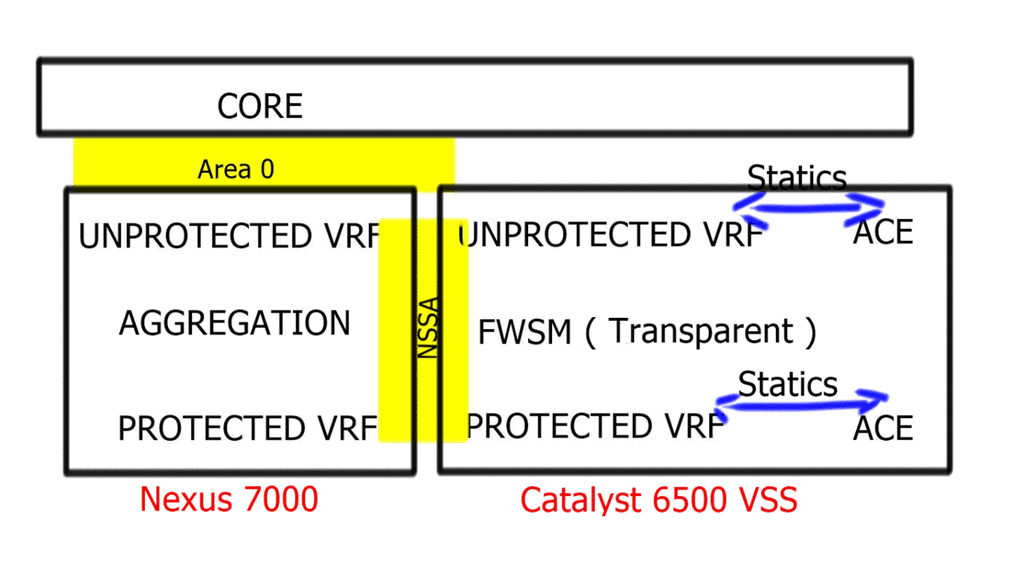

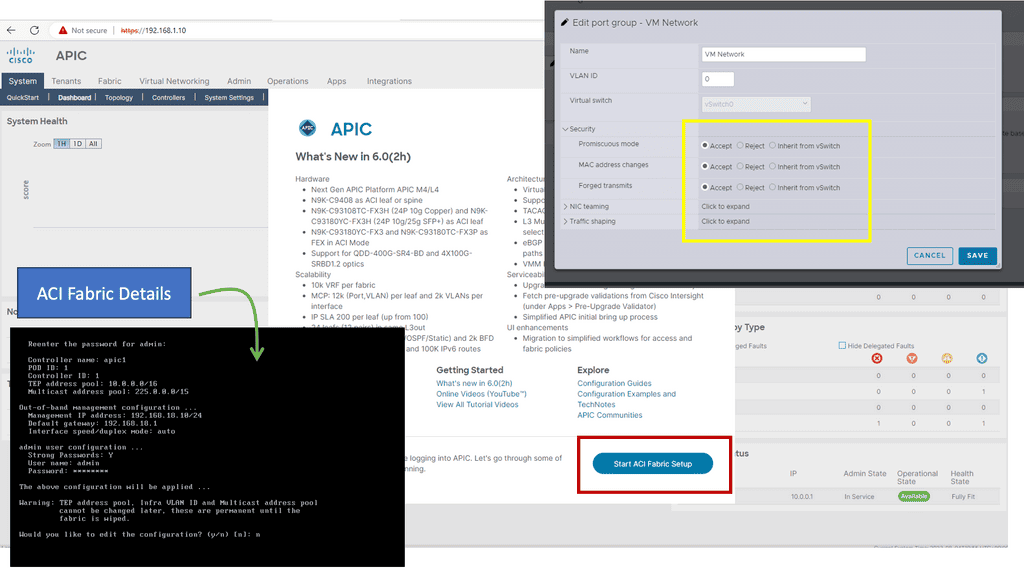

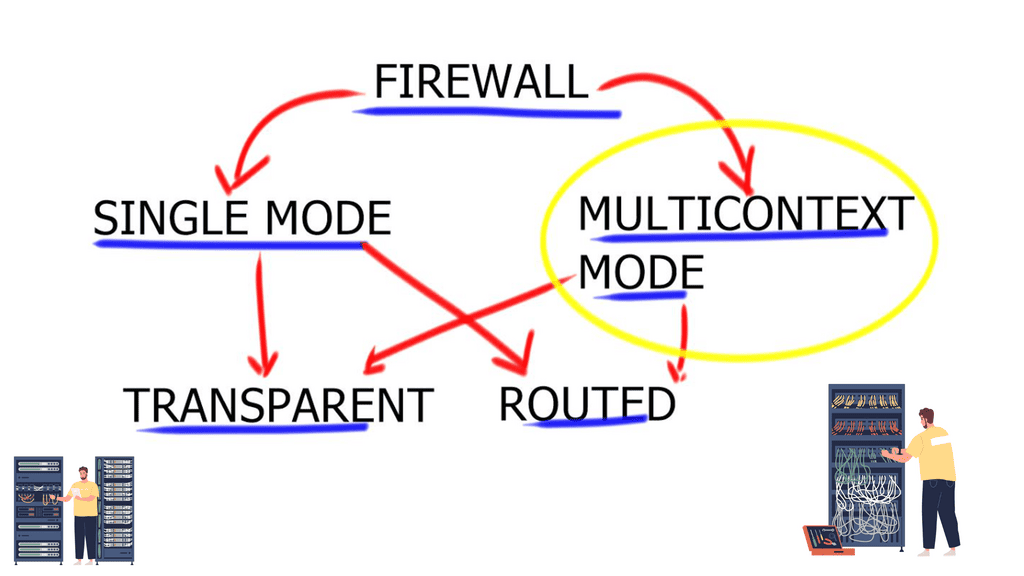

This capability allows NGFWs to detect and block malicious packets before they enter the system and cause harm. The following diagram shows the different ways a firewall can be deployed. The focus of this post is multi-context mode. An example is Cisco Secure Firewall.

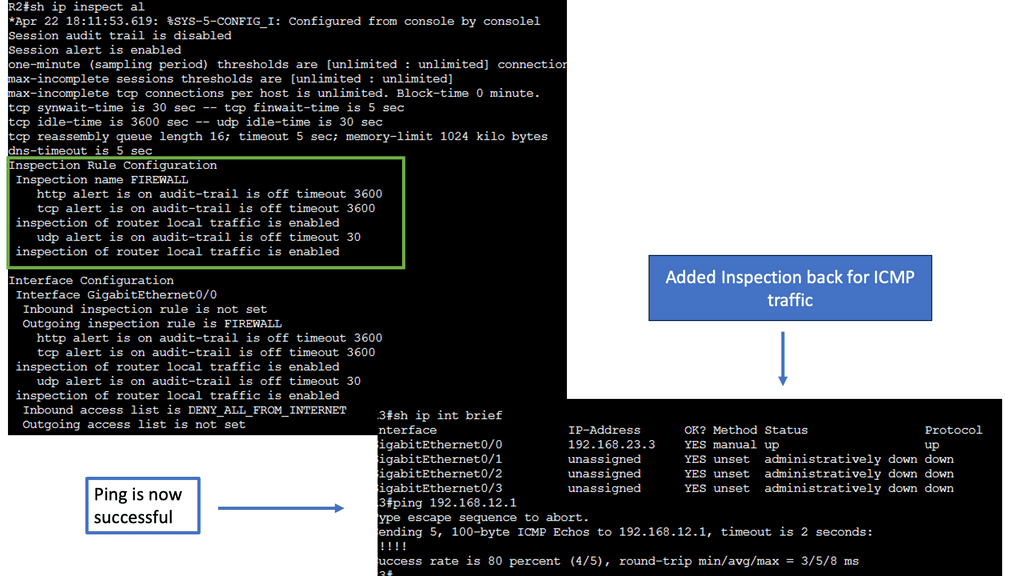

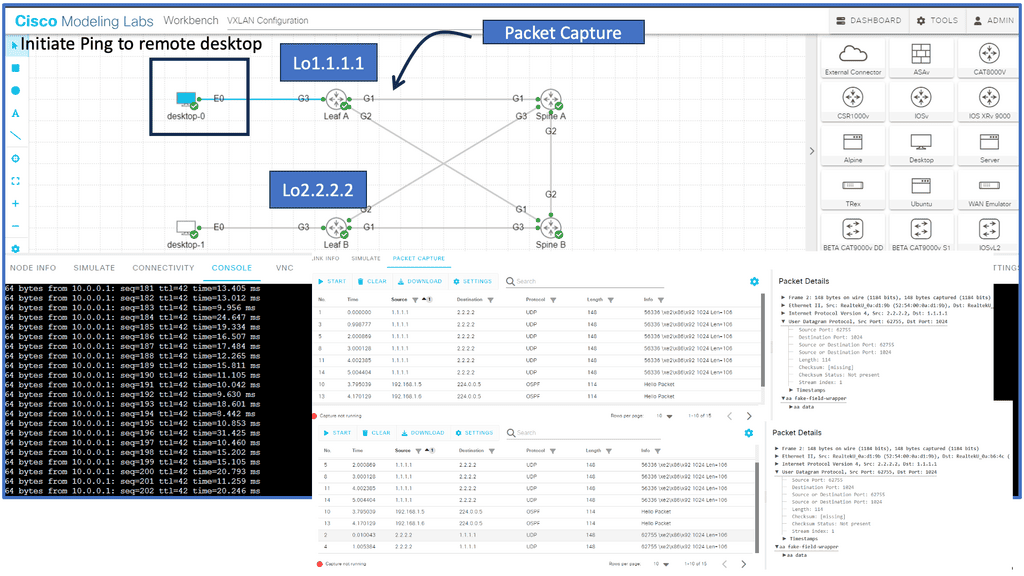

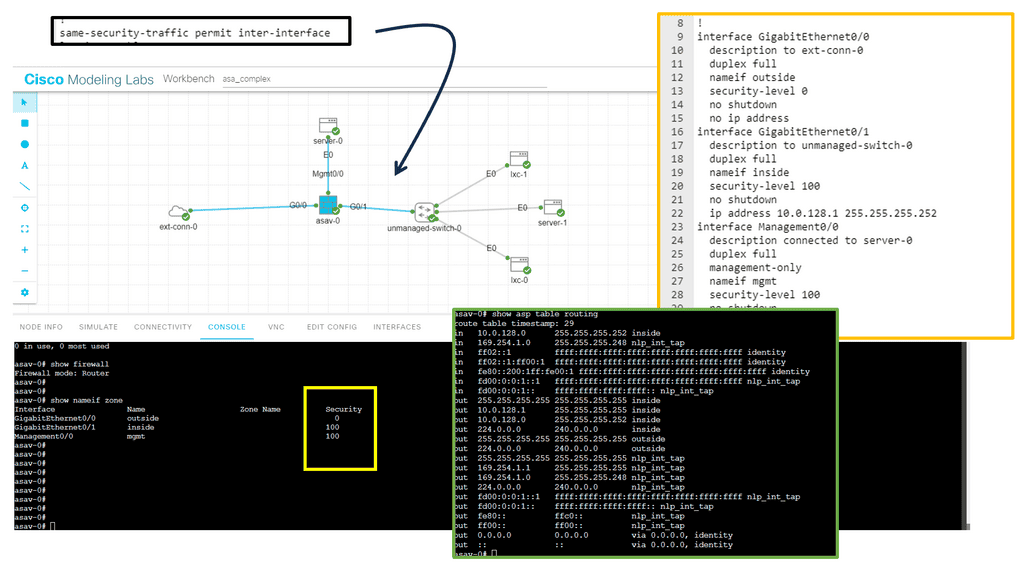

1st Lab Guide: ASA Basics.

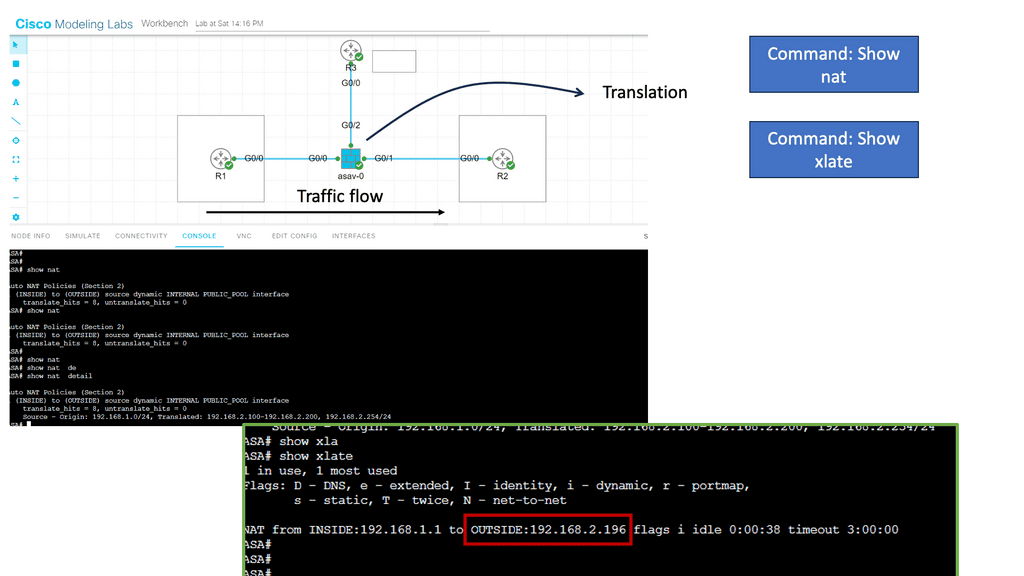

In the following lab guide, the ASA is working in routed mode. In routed mode, the ASA is considered a router hop in the network, and each interface that you want to route between is on a different subnet. You can share Layer 3 interfaces between contexts.

Traditionally, a firewall is a routed hop and acts as a default gateway for hosts that connect to one of its screened subnets. On the other hand, a transparent firewall is a Layer 2 firewall that acts like a “bump in the wire” or a “stealth firewall” and is not seen as a router hop to connected devices.

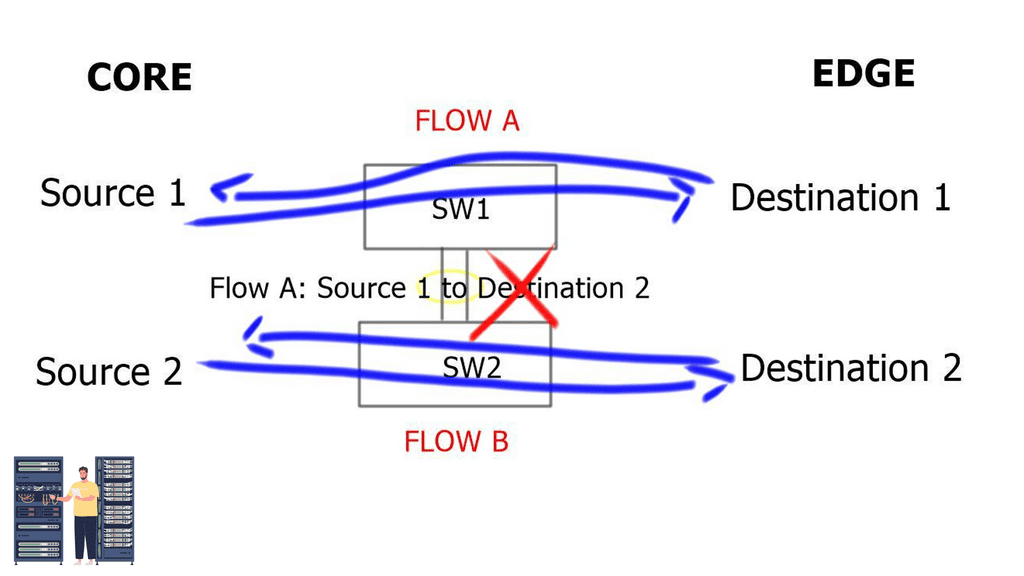

The ASA considers the state of a packet when deciding to permit or deny the traffic. One enforced parameter is that packets belonging to an existing flow must arrive on the interface the ASA associated with that flow; the ASA drops traffic for an existing flow that enters a different interface. Take note of the command same-security-traffic permit inter-interface, which permits traffic between interfaces configured with the same security level.
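The two checks mentioned in the lab can be sketched as follows, under simplifying assumptions: security levels run from 0 to 100, no access lists are applied, and flows are identified by a hypothetical string ID mapped to the interface on which the next packet of that flow is expected. The function names are illustrative, not ASA commands. The sketch reflects the ASA defaults: traffic from a higher to a lower security level is allowed, traffic from lower to higher needs an explicit rule, and traffic between equal levels is blocked unless same-security-traffic permit inter-interface is configured.

```python
from typing import Dict


def default_permit(ingress_level: int, egress_level: int,
                   same_security_inter_interface: bool = False) -> bool:
    """Simplified ASA default for a new connection when no access rule applies."""
    if ingress_level > egress_level:
        return True                           # higher to lower: allowed by default
    if ingress_level == egress_level:
        return same_security_inter_interface  # blocked unless the command is configured
    return False                              # lower to higher: needs an explicit rule


def accept_existing_flow(flow_interfaces: Dict[str, str], flow_id: str,
                         arrival_interface: str) -> bool:
    """A packet of a known flow is accepted only on the interface recorded for
    that flow (hypothetical bookkeeping); arriving anywhere else means a drop."""
    expected = flow_interfaces.get(flow_id)
    return expected is not None and expected == arrival_interface
```

In this model, asymmetric routing shows up as a reply that arrives on the "wrong" interface and is dropped even though the flow itself is valid.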

Context Firewall Types

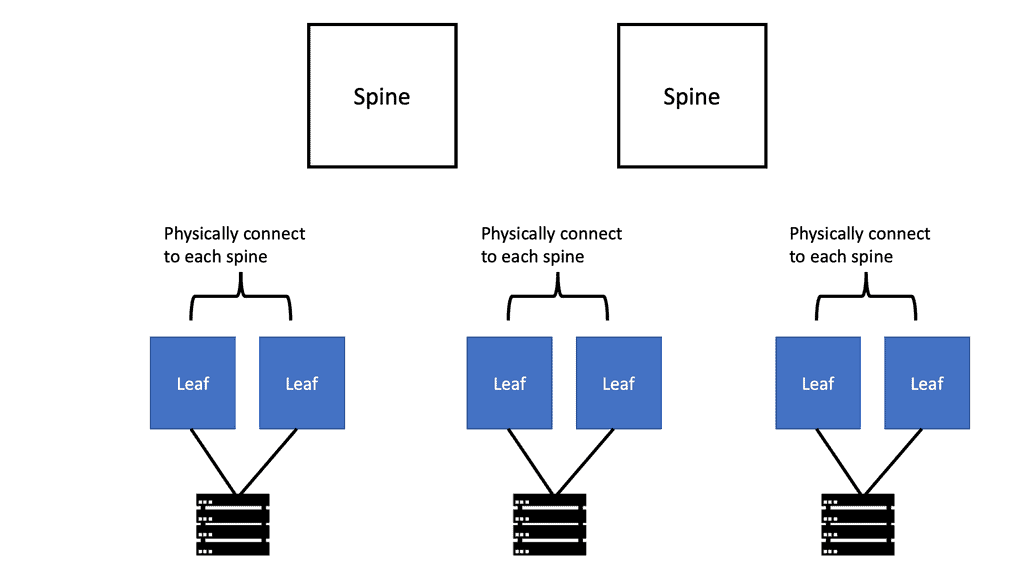

Contexts are generally helpful when different security policies must be applied to different traffic flows. For example, the firewall might protect multiple customers or departments in the same organization. Other virtualization technologies, such as VLANs or VRFs, are expected to be used alongside firewall contexts; however, firewall contexts differ significantly from the VRFs seen on IOS routers.

Context Configuration Files

Context Configurations

For each context, the ASA includes a configuration that identifies the security policy, interfaces, and settings that can be configured. Context configurations can be stored in flash memory or downloaded from a TFTP, FTP, or HTTP(S) server.

System configuration

A system administrator adds and manages contexts by configuring each context's configuration location, allocated interfaces, and other operating parameters in the system configuration. Like a single-mode configuration, the system configuration is the startup configuration, and it identifies basic ASA settings. The system configuration has no network interfaces or network settings of its own; when the system needs to access network resources (such as downloading contexts from a server), it uses the admin context. The one exception is a specialized failover interface for failover traffic.

Admin context configuration

The admin context is just like any other context, except that users who log into it have system administrator rights and can access the system and all other contexts. No other restrictions are associated with the admin context, and it can be used like any other context. However, because logging into the admin context grants administrator privileges over all contexts, you may need to restrict access to it to appropriate users.

The admin context configuration must reside in flash memory, not on remote storage. When you switch from single to multiple mode, the admin context is configured in an internal flash memory file called admin.cfg. If you do not want to use admin.cfg as the admin context, you can designate a different one.

Steps: Turning a firewall into multiple context mode:

To convert the firewall to multiple context mode, enter the global configuration command mode multiple while logged in through the console port. The command forces the mode change and reloads the appliance, converting the existing running configuration into the admin context. You can also do this remotely, but you risk losing your connection to the box.

If you connect to the appliance on the console port, you are logging in to the system context; the sole purpose of this context is to define other contexts and allocate resources to them.

| Context | Purpose |
|---|---|
| System context | Used for console access. Create new contexts and assign interfaces to each context. |
| Admin context | Used for remote access, either Telnet or SSH. Supports the changeto command for moving between contexts. |
| User context | Where the user-defined contexts (virtual firewalls) live. |

Your first step should be to define the admin context; this special context allows logging into the firewall remotely (via SSH, Telnet, or HTTPS). Configure it first, because the firewall won’t let you create other contexts before you designate the admin context with the global command admin-context <name>.

Then you can define additional contexts if needed using the command context <name> and allocate physical interfaces to the contexts using the context-level command allocate-interface <physical-interface> [<logical-name>].
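The ordering described above (admin context first, then other contexts, then interface allocation) can be modeled as simple bookkeeping. This is a hypothetical sketch, not a Cisco API: the `SystemContext` class and its methods only mirror the commands named in the text, and the interface names are illustrative.

```python
from typing import Dict, List, Optional


class SystemContext:
    """Hypothetical model of the system context's bookkeeping; not a Cisco API."""

    def __init__(self, physical_interfaces: List[str]) -> None:
        self.physical_interfaces = set(physical_interfaces)
        self.admin_context: Optional[str] = None
        self.contexts: Dict[str, Dict[str, str]] = {}  # context -> {physical: logical name}

    def set_admin_context(self, name: str) -> None:
        # Mirrors "admin-context <name>": this must be designated first.
        self.contexts.setdefault(name, {})
        self.admin_context = name

    def create_context(self, name: str) -> None:
        # Mirrors "context <name>".
        if self.admin_context is None:
            raise RuntimeError("designate the admin context before creating other contexts")
        self.contexts.setdefault(name, {})

    def allocate_interface(self, context: str, physical: str, logical_name: str = "") -> None:
        # Mirrors "allocate-interface <physical-interface> [<logical-name>]".
        if context not in self.contexts:
            raise KeyError(f"unknown context {context!r}")
        if physical not in self.physical_interfaces:
            raise ValueError(f"unknown interface {physical!r}")
        # Interfaces are not consumed from a pool: the same one may be shared.
        self.contexts[context][physical] = logical_name or physical

    def contexts_on(self, physical: str) -> List[str]:
        # Which contexts share a given physical interface?
        return sorted(name for name, ifaces in self.contexts.items() if physical in ifaces)
```

Allocating the same physical interface to two contexts is what makes it a shared interface, which is why the ASA then needs a way to classify incoming packets.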

Each firewall context is assigned the following:

| Item | Description |
|---|---|
| Interfaces | Physical interfaces or 802.1Q subinterfaces. Interfaces can also be shared between contexts. |
| Resource limits | Number of connections, hosts, and xlates (address translations). |
| Firewall policy | Separate MPF inspections, NAT translations, and so on for each context. |

In multiple context mode, many security contexts act independently. When several contexts share a single interface, the ASA must determine which context each inbound packet belongs to. Three options exist for classifying incoming packets:

| Method | How it works |
|---|---|
| Unique interfaces | One-to-one pairing with either a physical link or subinterfaces (VLAN tags). |
| Shared interface | A unique virtual MAC address per context, either auto-generated or set manually. |
| NAT configuration | Not common. |

ASA Packet Classification

Packet classification also works differently in multi-context firewalls. Because interfaces can be shared between contexts in multiple context mode, the ASA must determine which context each packet should be sent to.

The ASA categorizes packets based on three criteria:

- Unique interfaces – 1:1 pairing with a physical link or sub-interfaces (VLAN tags)

- Unique MAC addresses – each context on a shared interface is assigned a unique virtual MAC address, so the ASA can classify packets by destination MAC address. This alleviates routing issues, although shared interfaces complicate firewall management.

- NAT configuration: If unique MAC addresses are disabled, the ASA uses the mapped addresses in the NAT configuration to classify packets.
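One way to picture the classifier is as a series of lookups. The sketch below is a simplification: the `Classifier` class, the lookup order, and the sample interface names, MACs, and addresses are hypothetical. On a real ASA, NAT-based classification is used when unique MAC addresses are disabled, rather than as a per-packet fallback as modeled here.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Classifier:
    """Simplified model of the three classification criteria (hypothetical)."""
    unique_interfaces: Dict[str, str] = field(default_factory=dict)  # interface -> context
    shared_macs: Dict[str, str] = field(default_factory=dict)        # lowercase virtual MAC -> context
    nat_mapped: Dict[str, str] = field(default_factory=dict)         # NAT mapped IP -> context

    def classify(self, ingress_interface: str, dst_mac: str, dst_ip: str) -> Optional[str]:
        # 1. An interface dedicated to one context identifies the context directly.
        if ingress_interface in self.unique_interfaces:
            return self.unique_interfaces[ingress_interface]
        # 2. On a shared interface, the destination MAC address selects the context.
        context = self.shared_macs.get(dst_mac.lower())
        if context is not None:
            return context
        # 3. Otherwise fall back to NAT mapped addresses; None means unclassified.
        return self.nat_mapped.get(dst_ip)
```

Note that the IP address is consulted only in the last step, which is why overlapping address space is harmless with unique interfaces or unique MACs.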

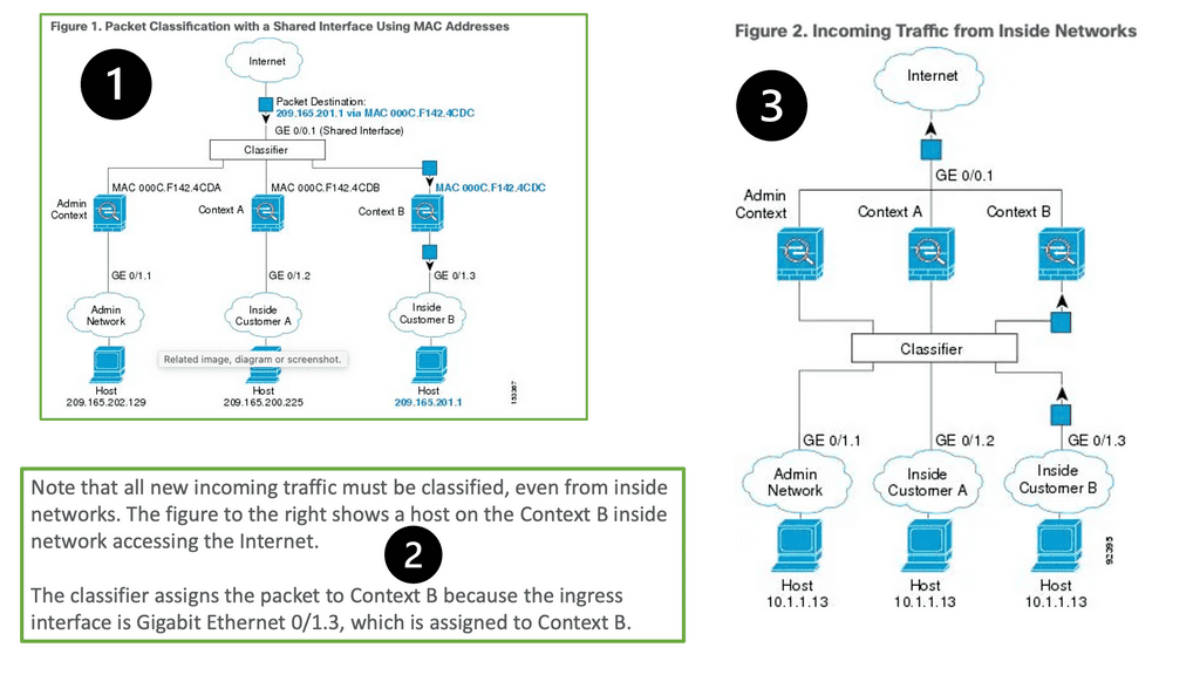

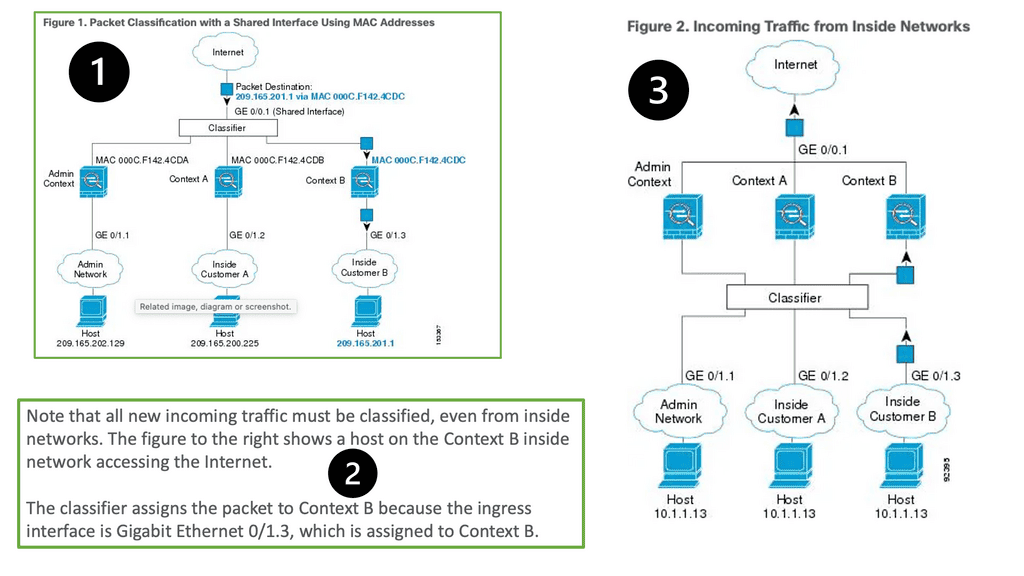

Starting with Point 1, the following figure shows multiple contexts sharing an outside interface. The classifier assigns the packet to Context B because Context B includes the MAC address to which the router sends the packet.

Firewall context interface details

Unique interfaces are self-explanatory: each security context has its own interfaces, for example, GE 0/0.1 for the admin context, GE 0/0.2 for Context A, and GE 0/0.3 for Context B. Unique interfaces are best practice, but they also require unique routing and IP addressing, because each VLAN has its own subnet. Transparent firewalls must use unique interfaces.

With shared interfaces, the ASA classifies packets by each context's MAC address, so upstream and downstream routers can send packets to a specific context. Every security context that shares an interface requires a unique MAC address.

The MAC address can be auto-generated (the default behavior) or configured manually, and manual MAC address assignments take precedence. This lets many contexts share the same outside interface while each has a unique MAC address. Use the mac-address auto command in the system context, or configure the address manually with the mac-address command under the interface. Finally, shared interfaces can also be classified using Network Address Translation (NAT), with NAT translations configured per context; this is a less common approach.
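The precedence rule and the uniqueness requirement can be sketched as below. The `demo_auto_mac` generator is a hypothetical stand-in that numbers locally administered addresses by context position; it is not the ASA's actual auto-generation scheme, and the context names and addresses are illustrative.

```python
from typing import Callable, Dict, List


def demo_auto_mac(index: int) -> str:
    # Hypothetical stand-in for auto-generation: locally administered MACs
    # numbered by context position. This is NOT Cisco's real generation scheme.
    return f"02:00:00:00:00:{index:02x}"


def effective_macs(contexts: List[str], manual: Dict[str, str],
                   auto_generate: Callable[[int], str]) -> Dict[str, str]:
    """Resolve the MAC each context uses on one shared interface."""
    result: Dict[str, str] = {}
    for index, name in enumerate(contexts, start=1):
        # A manually configured MAC takes precedence over an auto-generated one.
        result[name] = manual.get(name) or auto_generate(index)
    # Classification by MAC only works if no two contexts end up with the same address.
    if len(set(result.values())) != len(result):
        raise ValueError("every context sharing an interface needs a unique MAC address")
    return result
```

The final check matters most when mixing manual and automatic assignment, since a manual address can collide with one that was generated for another context.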

Addressing scheme

The addressing scheme in each context is arbitrary when you use shared or unique interfaces. For example, you can configure the 10.0.0.0/8 address space in both context A and context B, because the ASA does not use the IP address to classify the traffic; it uses the MAC address or the physical link. The problem is that the same addressing cannot be used if NAT is used for incoming packet classification. The recommended approach is to classify with unique interfaces, not NAT.
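The NAT limitation can be illustrated by looking for mapped addresses that more than one context claims. This is a hypothetical sketch: `nat_tables` is an assumed per-context set of NAT mapped addresses, and the context and interface names are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Set


def ambiguous_mapped_addresses(nat_tables: Dict[str, Set[str]]) -> Dict[str, List[str]]:
    """nat_tables maps each context to the NAT mapped addresses it owns.
    Returns every mapped address claimed by more than one context: a
    NAT-based classifier cannot tell which context such a packet belongs to."""
    owners: Dict[str, List[str]] = defaultdict(list)
    for context, mapped in nat_tables.items():
        for ip in mapped:
            owners[ip].append(context)
    return {ip: sorted(ctxs) for ip, ctxs in owners.items() if len(ctxs) > 1}


def classify_by_interface(interface_map: Dict[str, str], ingress: str) -> str:
    # Interface-based classification never looks at IP addresses,
    # so both contexts may reuse 10.0.0.0/8 without conflict.
    return interface_map[ingress]
```

With unique interfaces the lookup key is the ingress interface, so identical addressing in two contexts never produces an ambiguous result.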

Routing between contexts

As with route leaking between VRFs, routing between contexts is accomplished by hairpinning traffic out of one context and into another, using static routes that point to the relevant next hops. Contexts can also be cascaded behind a shared firewall: the default route of one context points to the inside interface of another context.
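A cascade can be modeled as per-context static routing tables, where a next hop may be another context. This is a hypothetical sketch: the `ROUTES` table, the context names, and the "internet" hop are illustrative, and it tracks only the logical path, not the physical or shared interfaces that carry traffic between contexts.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple

# Hypothetical cascade: contextA's default route points at the inside interface
# of a shared "edge" context, whose own default route leads out to "internet".
# A next hop of None means the destination is directly connected.
ROUTES: Dict[str, List[Tuple[str, Optional[str]]]] = {
    "contextA": [("10.1.0.0/16", None), ("0.0.0.0/0", "edge")],
    "edge": [("172.16.0.0/24", None), ("0.0.0.0/0", "internet")],
}


def lookup(context: str, dst: str) -> Optional[str]:
    """Longest-prefix match in one context's own static routing table."""
    addr = ipaddress.ip_address(dst)
    candidates = [(ipaddress.ip_network(prefix), hop) for prefix, hop in ROUTES[context]
                  if addr in ipaddress.ip_network(prefix)]
    if not candidates:
        raise LookupError(f"no route to {dst} in {context}")
    return max(candidates, key=lambda c: c[0].prefixlen)[1]


def trace(context: str, dst: str, max_hops: int = 8) -> List[str]:
    """Follow next hops from context to context until the packet is delivered
    locally or leaves the firewall (a next hop that is not a context)."""
    path = [context]
    for _ in range(max_hops):
        hop = lookup(path[-1], dst)
        if hop is None:
            return path          # directly connected in the current context
        path.append(hop)
        if hop not in ROUTES:
            return path          # handed to a device outside the firewall
    raise RuntimeError("routing loop suspected")
```

Each context consults only its own table, which is why inter-context reachability has to be built explicitly with static routes.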

Firewall context resource limitations

By default, all security contexts belong to the default class and share the appliance's resources; the control plane is not divided between them. The default class sets no limits on most resources, so problems can arise when one security context consumes too many resources and denies connections to other contexts. To prevent this, assign security contexts to resource classes that set upper limits.

The default class has the following limits:

| Resource | Default class limit |
|---|---|
| Telnet sessions | 5 sessions |
| SSH sessions | 5 sessions |
| IPsec sessions | 5 sessions |
| MAC addresses | 65,535 entries |
| VPN site-to-site tunnels | 0 sessions |
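Why resource classes help can be sketched with a single connection limit. This is a hypothetical model, not ASA syntax: `ResourceClass`, `max_conns`, `SecurityContext`, and the context names are illustrative. As on the ASA, the class limit applies to each member context individually, so the sketch counts connections per context.

```python
class ResourceClass:
    """Hypothetical resource class: a connection cap applied to each member context."""

    def __init__(self, name: str, max_conns: int) -> None:
        self.name = name
        self.max_conns = max_conns


class SecurityContext:
    def __init__(self, name: str, rclass: ResourceClass) -> None:
        self.name = name
        self.rclass = rclass
        self.active_conns = 0

    def open_connection(self) -> bool:
        # Deny new connections once this context reaches its class limit,
        # so one busy context cannot starve the others.
        if self.active_conns >= self.rclass.max_conns:
            return False
        self.active_conns += 1
        return True
```

A context that hits its cap is refused further connections, while another context in the same class still has its own full allowance.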

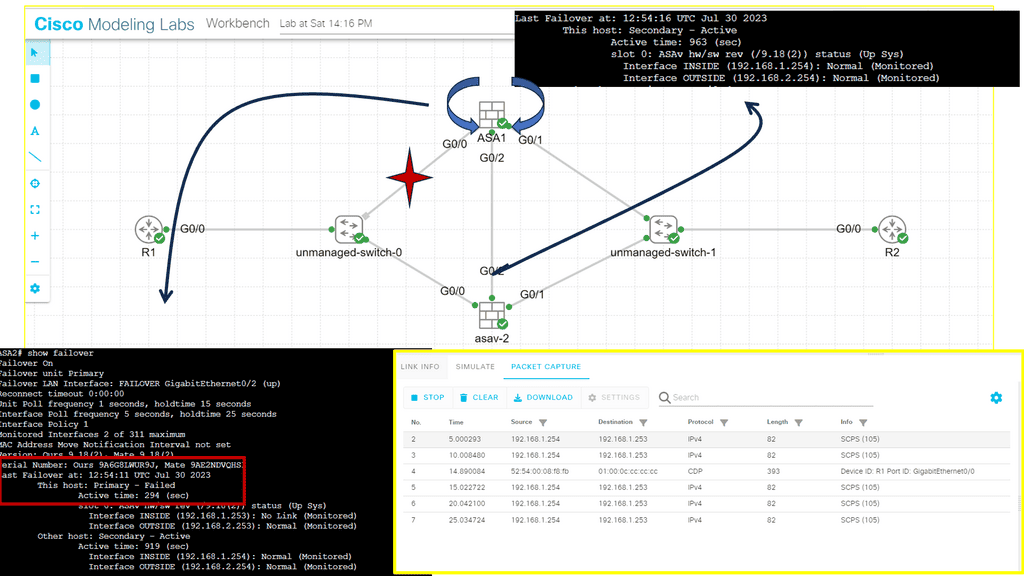

Active/active failover:

Multiple context mode offers Active/Active failover on a per-context basis: the primary unit forwards traffic for one set of contexts, and the secondary unit forwards traffic for another. Security contexts are divided logically into failover groups, with a maximum of two failover groups. Both units forward traffic at the same time, but for any given context there is only one active forwarding path: for example, one ASA is active for Context A while the second ASA is the standby for Context A, and the roles are reversed for Context B.
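The per-context roles can be modeled with two failover groups. This is a hypothetical sketch, not ASA configuration: it assumes group 1 is active on the primary unit and group 2 on the secondary, and that a failed unit's groups move to its peer. `FailoverPair` and the context names are illustrative.

```python
from typing import Dict


class FailoverPair:
    """Hypothetical model of Active/Active failover with two failover groups."""

    def __init__(self, group_of_context: Dict[str, int]) -> None:
        self.group_of_context = group_of_context           # context -> failover group (1 or 2)
        self.active_unit = {1: "primary", 2: "secondary"}  # group -> unit currently active

    def fail(self, unit: str) -> None:
        # When a unit fails, its peer takes over every group the failed unit was running.
        peer = "secondary" if unit == "primary" else "primary"
        for group, owner in self.active_unit.items():
            if owner == unit:
                self.active_unit[group] = peer

    def forwarding_unit(self, context: str) -> str:
        # Exactly one unit forwards for a given context at any time.
        return self.active_unit[self.group_of_context[context]]
```

Before a failure, Context A and Context B are forwarded by different units; after one unit fails, the surviving unit forwards for both.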

2nd Lab Guide: ASA Failover.

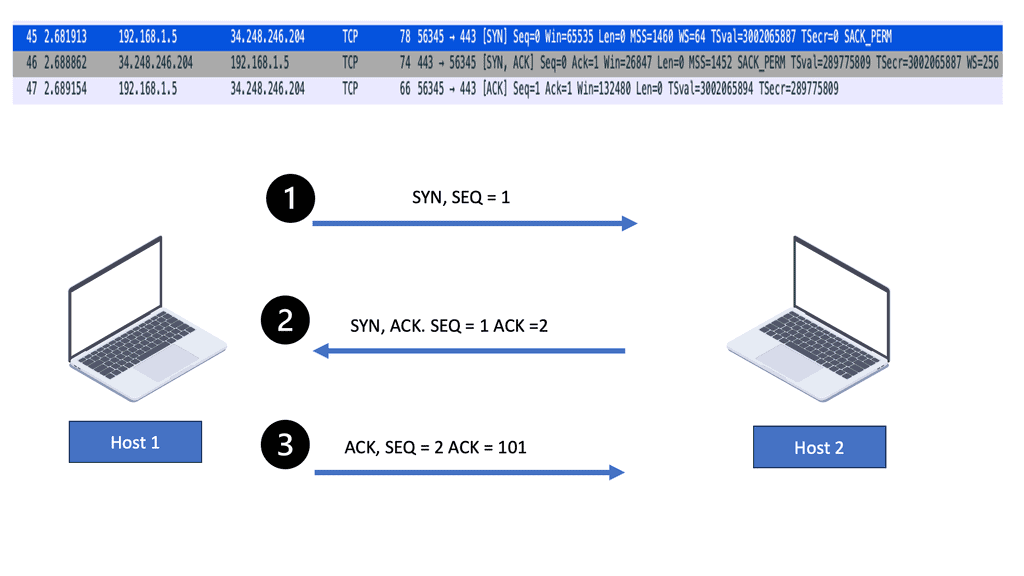

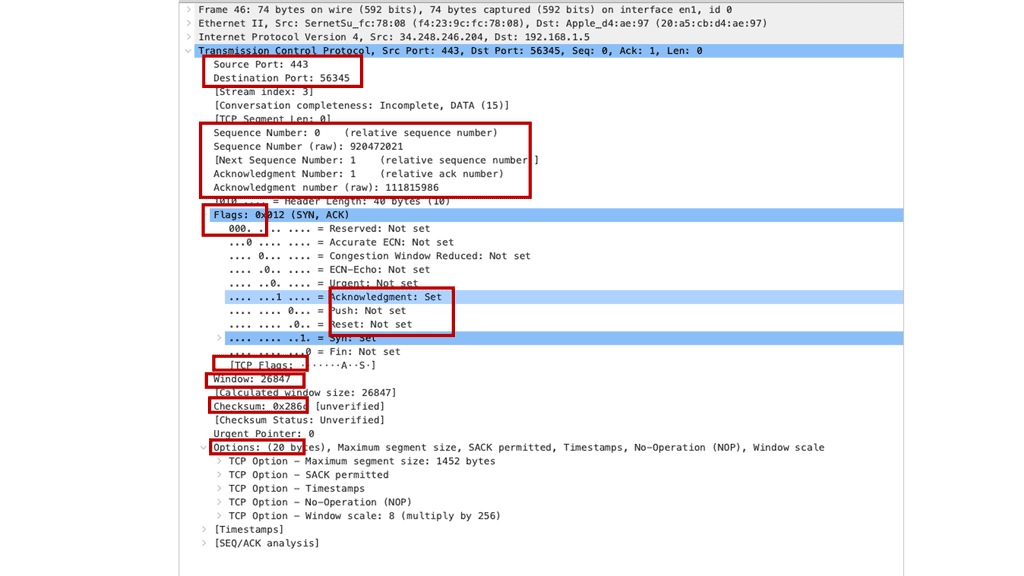

The following lab has two ASAs, ASA1 and ASA2, connected by a failover link. ASA1 is the primary, and ASA2 is the backup. Failover occurs only when there is an issue; in this case, the links from ASA1 to the switch went down, triggering the failover event. In the packet capture, notice that the protocol used between the ASAs is shown as SCPS.

Closing Comments on Context Firewall

Context firewalls provide several advantages over traditional firewalls. By inspecting the content of the network traffic, they can identify and block unauthorized access attempts, malicious code, and other potential threats. This proactive approach significantly enhances the security posture of an organization or an individual, reducing the risk of data breaches and unauthorized access.

Context firewalls are particularly effective in protecting against advanced persistent threats (APTs) and targeted attacks. These sophisticated cyber attacks often exploit application vulnerabilities or employ social engineering techniques to gain unauthorized access. By analyzing the context of network traffic, context firewalls can detect and block such attacks, minimizing the potential damage.

Key Features of Context Firewalls:

Context firewalls have various features that augment their effectiveness in securing the digital environment. Some notable features include:

1. Deep packet inspection: Context firewalls analyze the content of individual packets to identify potential threats or unauthorized activities.

2. Application awareness: They understand the specific protocols and applications being used, allowing them to apply tailored security policies.

3. User behavior analysis: Context firewalls can detect anomalies in user behavior, which can indicate potential insider threats or compromised accounts.

4. Content filtering: They can restrict access to specific websites or block certain types of content, ensuring compliance with organizational policies and regulations.

5. Threat intelligence integration: Context firewalls can leverage threat intelligence feeds to stay updated on the latest known threats and patterns of attack, enabling proactive protection.

Context firewalls provide organizations and individuals with a robust defense against increasing cyber threats. By analyzing network traffic content and applying security policies based on specific contexts, context firewalls offer enhanced protection against advanced threats and unauthorized access attempts.

With their deep packet inspection, application awareness, user behavior analysis, content filtering, and threat intelligence integration capabilities, context firewalls play a vital role in safeguarding our digital environment. As the cybersecurity landscape continues to evolve, investing in context firewalls should be a priority for anyone seeking to secure their digital assets effectively.

Summary: Context Firewall

In today’s digital age, ensuring the security and privacy of sensitive data has become increasingly crucial. One effective solution that has emerged is the context firewall. This blog post delved into context firewalls, their benefits, implementation, and how they can enhance data security in various domains.

Understanding Context Firewalls

Context firewalls serve as an advanced layer of protection against unauthorized access to sensitive data. Unlike traditional firewalls that filter traffic based on IP addresses or ports, context firewalls consider additional contextual information such as user identity, device type, location, and time of access. This context-aware approach allows for more granular control over data access, significantly reducing the risk of security breaches.

Benefits of Context Firewalls

Implementing a context firewall brings forth several benefits. Firstly, it enables organizations to enforce fine-grained access control policies, ensuring that only authorized users and devices can access specific data resources. Secondly, context firewalls enhance the overall visibility and monitoring capabilities, providing real-time insights into data access patterns and potential threats. Lastly, context firewalls facilitate compliance with industry regulations by offering more robust security measures.

Implementing a Context Firewall

The implementation of a context firewall involves several steps. First, organizations need to identify the context parameters relevant to their specific data environment. This includes factors such as user roles, device types, and location. Once the context parameters are defined, organizations can configure the firewall rules accordingly. Additionally, integrating the context firewall with existing infrastructure and security systems is essential for seamless operation.
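The steps above (identify the context parameters, then write rules against them) can be sketched as a default-deny policy check. The attributes (role, device type, location, time of access), the `PolicyRule`/`decide` names, and the sample values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import time
from typing import FrozenSet, List


@dataclass(frozen=True)
class AccessRequest:
    role: str
    device: str    # e.g. "managed-laptop" or "personal-phone"
    location: str  # e.g. "hospital" or "remote"
    at: time       # time of access


@dataclass(frozen=True)
class PolicyRule:
    resource: str
    roles: FrozenSet[str]
    devices: FrozenSet[str]
    locations: FrozenSet[str]
    start: time
    end: time

    def allows(self, req: AccessRequest) -> bool:
        # Every context attribute must satisfy the rule.
        return (req.role in self.roles and req.device in self.devices
                and req.location in self.locations and self.start <= req.at <= self.end)


def decide(rules: List[PolicyRule], resource: str, req: AccessRequest) -> bool:
    # Default deny: grant access only if some rule for this resource matches.
    return any(r.resource == resource and r.allows(req) for r in rules)
```

Default deny keeps the policy safe when a new context value (for example, an unknown device type) appears that no rule anticipates.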

Context Firewalls in Different Domains

The versatility of context firewalls allows them to be utilized across various domains. In the healthcare sector, context firewalls can restrict access to sensitive patient data based on factors such as user roles and location, ensuring compliance with privacy regulations like HIPAA. In the financial industry, context firewalls can help prevent fraudulent activities by implementing strict access controls based on user identity and transaction patterns.

Conclusion:

In conclusion, the implementation of a context firewall can significantly enhance data security in today’s digital landscape. By considering contextual information, organizations can strengthen access control, monitor data usage, and comply with industry regulations more effectively. As technology continues to advance, context firewalls will play a pivotal role in safeguarding sensitive information and mitigating security risks.