OpenShift Networking

OpenShift, developed by Red Hat, is a leading container platform that enables organizations to streamline their application development and deployment processes. With its robust networking capabilities, OpenShift provides a secure and scalable environment for running containerized applications. This blog post will explore the critical aspects of OpenShift networking and how it can benefit your organization.

OpenShift networking is built on top of Kubernetes networking and extends its capabilities to provide a flexible and scalable networking solution for containerized applications. It offers various networking options to meet the diverse needs of different organizations.

Load balancing and service discovery are essential aspects of OpenShift networking. In this section, we will explore how OpenShift handles load balancing across pods using services. We will discuss the various load balancing algorithms available and highlight the importance of service discovery in ensuring seamless communication between microservices within an OpenShift cluster.

OpenShift offers different networking models to suit diverse deployment scenarios. We will explore the three main models: Overlay Networking, Host Networking, and VXLAN Networking. Each model has its advantages and considerations, and we'll highlight the use cases where they shine.

OpenShift provides several advanced networking features that enhance performance, security, and flexibility. We'll dive into topics like Network Policies, Service Mesh, Ingress Controllers, and Load Balancing. Understanding and utilizing these features will empower you to optimize your OpenShift networking environment.

Matt Conran

Highlights: OpenShift Networking

**The Basics of OpenShift Networking**

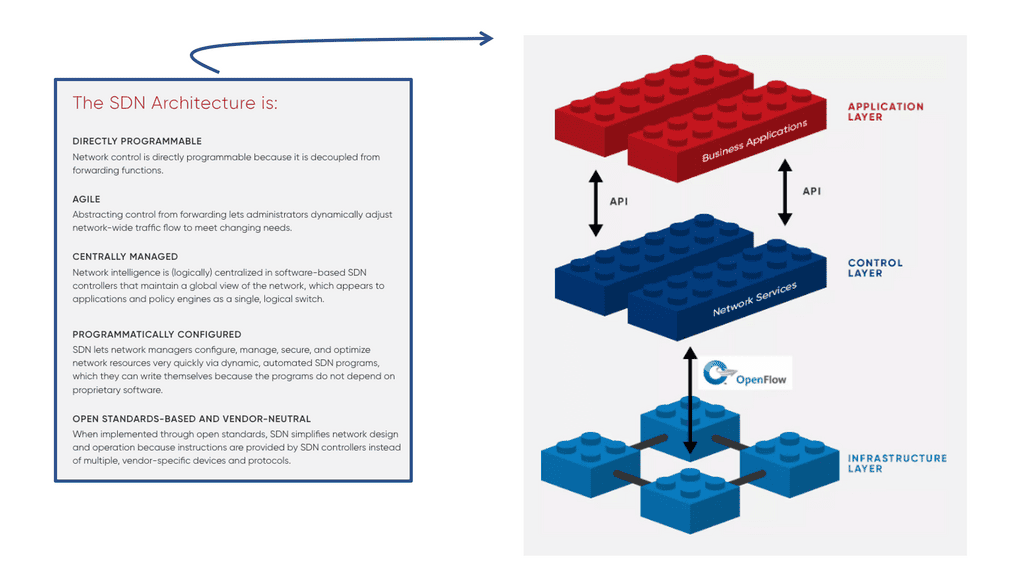

OpenShift networking is built on the Kubernetes foundation, with enhancements that cater to enterprise needs. At its core, OpenShift uses the concept of Software Defined Networking (SDN) to manage container communication. This allows developers to focus on building applications rather than worrying about the complexities of network configurations. The SDN provides a virtual network layer, enabling pods to communicate without relying on the physical network infrastructure.

**Key Components and Concepts**

To navigate OpenShift networking, it is essential to understand its key components. The primary elements include Pods, Services, Routes, and Ingress Controllers. Pods are the smallest deployable units; each Pod receives its own cluster-internal IP address, which it uses to communicate with other Pods. Services act as a stable endpoint for accessing Pods, even as those Pods are scaled or replaced. Routes and Ingress Controllers manage external access, allowing users to interact with the applications hosted within the cluster. These components work in harmony to provide a comprehensive networking solution.

**Security and Policies in OpenShift Networking**

Security is a paramount concern in any networking model, and OpenShift addresses this with robust security policies. Network policies in OpenShift allow administrators to control traffic flow to and from pods, enhancing the security posture of applications. By defining rules that specify which pods can communicate, administrators can create a micro-segmented network environment. This granular control helps in mitigating potential threats and reducing the attack surface.

**Advanced Networking Features**

OpenShift offers advanced networking features that cater to diverse use cases. One such feature is the Multi-Cluster Networking capability, allowing applications to span across multiple OpenShift clusters. This is particularly beneficial for organizations that operate in hybrid or multi-cloud environments. Additionally, OpenShift supports network plug-ins that extend its capabilities, such as integrating with third-party solutions for monitoring and managing network traffic.

Overview – OpenShift Networking

OpenShift networking provides a robust and scalable network infrastructure for applications running on the platform. It allows containers and pods to communicate with each other and external systems and services. The networking model in OpenShift is based on the Kubernetes networking model, providing a standardized and flexible approach.

Software-Defined Networking (SDN) is a crucial component of OpenShift Networking, enabling dynamic, programmatically efficient network configuration. SDN abstracts the traditional networking hardware, providing a flexible network fabric that can adapt to the needs of your applications. SDN operates within OpenShift, enabling improved scalability, enhanced security, and simplified network management.

Key Initial Considerations:

A. OpenShift Container Platform

OpenShift Container Platform (formerly known as OpenShift Enterprise) or OCP is Red Hat’s offering for the on-premises private platform as a service (PaaS). OpenShift is based on the Origin open-source project and is a Kubernetes distribution. The foundation of the OpenShift Container Platform is based on Kubernetes and, therefore, shares some of the same networking technology along with some enhancements.

B. Kubernetes: Orchestration Layer

Kubernetes is the leading container orchestrator, and OpenShift builds on containers with Kubernetes as the orchestration layer. All of these elements sit on an SDN layer that glues everything together by providing an abstraction layer. SDN creates the cluster-wide network. The glue that connects all the dots is the overlay network that operates over an underlay network.

C. OpenShift Networking & Plugins

When it comes to container orchestration, networking plays a pivotal role in ensuring connectivity and communication between containers, pods, and services. OpenShift Networking provides a robust framework that enables efficient and secure networking within the OpenShift cluster. By leveraging various networking plugins and technologies, it facilitates seamless communication between applications and allows load balancing, service discovery, and more.

The Architecture of OpenShift Networking

OpenShift’s networking model is built upon the foundation of Kubernetes, but it takes things a step further with its own enhancements bringing better networking and security capabilities than the default Kubernetes model. The architecture consists of several key components, including the OpenShift SDN (Software Defined Networking), network policies, and service mesh capabilities.

The OpenShift SDN abstracts the underlying network infrastructure, allowing developers to focus on application logic rather than network configurations. This abstraction enables greater flexibility and simplifies the deployment process.

**Network Policies: Securing Your Cluster**

One of the standout features of OpenShift networking is the implementation of network policies. These policies allow administrators to define how pods communicate with each other and with the outside world. By leveraging network policies, teams can enforce security boundaries, ensuring that only authorized traffic is allowed to flow within the cluster. This is particularly crucial in multi-tenant environments where different teams might share the same OpenShift cluster but require isolated communication channels.
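As a rough illustration of such a policy, the sketch below builds a Kubernetes NetworkPolicy manifest as a Python dictionary and prints it as JSON. The namespace, policy name, labels, and port (`shop`, `allow-frontend-to-backend`, `app: frontend`, `app: backend`, 8080) are hypothetical, and the `allows` helper is a deliberately simplified model of label matching within one namespace, not the full NetworkPolicy semantics.

```python
import json


def backend_ingress_policy(namespace: str = "shop") -> dict:
    """Allow only pods labelled app=frontend to reach app=backend on TCP 8080."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-frontend-to-backend", "namespace": namespace},
        "spec": {
            # The pods this policy protects.
            "podSelector": {"matchLabels": {"app": "backend"}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # The pods allowed to connect, and on which port.
                    "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                    "ports": [{"protocol": "TCP", "port": 8080}],
                }
            ],
        },
    }


def allows(policy: dict, source_labels: dict, port: int) -> bool:
    """Simplified check: does any ingress rule match the source labels and port?"""
    for rule in policy["spec"]["ingress"]:
        port_ok = any(p["port"] == port for p in rule["ports"])
        for peer in rule["from"]:
            wanted = peer["podSelector"]["matchLabels"]
            labels_ok = all(source_labels.get(k) == v for k, v in wanted.items())
            if port_ok and labels_ok:
                return True
    return False


if __name__ == "__main__":
    print(json.dumps(backend_ingress_policy(), indent=2))
```

Once a policy selects a pod, traffic that no rule allows is dropped, which is why the helper returns `False` for any source that does not match.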

**Service Mesh: Enhancing Microservices Communication**

As organizations increasingly adopt microservices architectures, managing service-to-service communication becomes a challenge. OpenShift addresses this with its service mesh capabilities, primarily through the integration of Istio. A service mesh adds an additional layer of security, observability, and resilience to your microservices. It provides features like advanced traffic management, circuit breaking, and telemetry collection, all of which are essential for maintaining a healthy and efficient microservices ecosystem.

**Navigating Through OpenShift’s Route and Ingress**

Routing is another fundamental aspect of OpenShift networking. OpenShift’s routing layer allows developers to expose their applications to external users. This can be achieved through both routes and ingress resources. Routes are specific to OpenShift and provide a simple way to map external URLs to services within the cluster. On the other hand, ingress resources, which are part of Kubernetes, offer more advanced configurations and are particularly useful when you need to define complex routing rules.

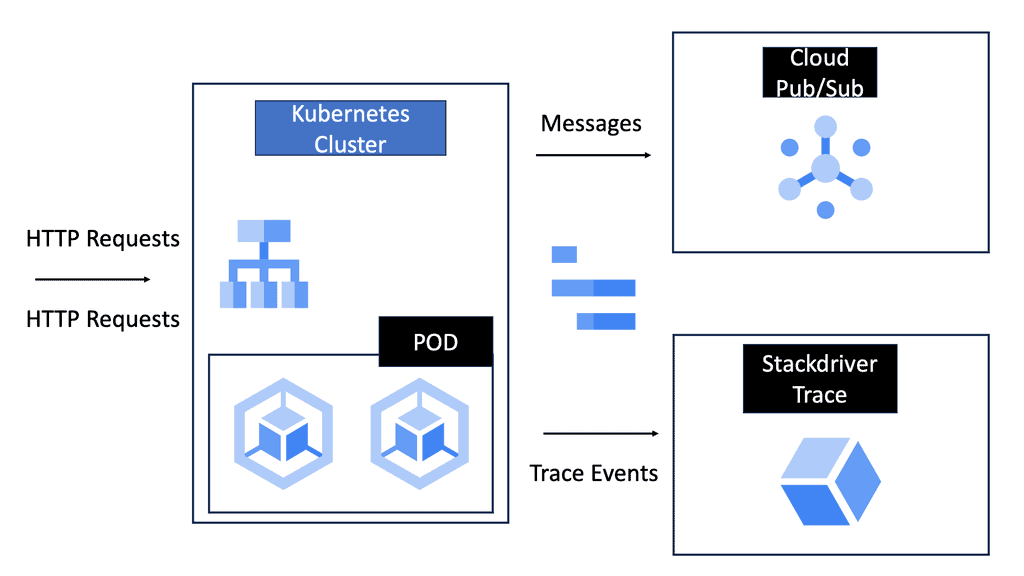

Example Technology: Cloud Service Mesh

### The Core Components of a Service Mesh

At its heart, a service mesh consists of two fundamental components: the data plane and the control plane. The data plane is responsible for managing the actual data transfer between services, often through a network of proxies. These proxies intercept requests and responses, enabling smooth traffic flow and providing essential features like load balancing and retries. In contrast, the control plane oversees the configuration and management of the proxies, ensuring that policies are consistently applied across the network.

### Benefits of Implementing a Service Mesh

Implementing a cloud service mesh offers numerous advantages. Primarily, it enhances the observability of microservices by providing detailed insights into traffic patterns, latency, and service health. Additionally, it strengthens security with features like mutual TLS for encrypted communications and fine-grained access controls. Service mesh also improves resilience by offering built-in capabilities for circuit breaking and fault injection, which help to identify and mitigate potential failures proactively.
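To make circuit breaking more concrete, here is a minimal sketch of the idea in plain Python. In a service mesh this logic lives in the proxy rather than in application code; the class name, thresholds, and injectable clock below are assumptions made for illustration only.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # failures before opening
        self.reset_after = reset_after              # cool-down in seconds
        self.clock = clock                          # injectable for testing
        self.failures = 0
        self.opened_at = None                       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call rejected")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure during the trial re-opens the circuit.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

Rejecting calls while the circuit is open gives a failing backend time to recover instead of piling more requests onto it.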

Key Considerations: OpenShift Networking

1. Network Namespace Isolation:

OpenShift networking leverages network namespaces to achieve isolation between different projects, or namespaces, on the platform. Each project has its virtual network, ensuring that containers and pods within a project can communicate securely while isolated from other projects.

2. Service Discovery and OpenShift Load Balancer:

OpenShift networking provides service discovery and load-balancing mechanisms to facilitate communication between various application components. Services act as stable endpoints, allowing containers and pods to connect to them using DNS or environment variables. The built-in OpenShift load balancer ensures that traffic is distributed evenly across multiple instances of a service, improving scalability and reliability.

3. Ingress and Egress Network Policies:

OpenShift networking allows administrators to define ingress and egress network policies to control network traffic flow within the platform. Ingress policies specify rules for incoming traffic, allowing or denying access to specific services or pods. Egress policies, on the other hand, regulate outgoing traffic from pods, enabling administrators to restrict access to external systems or services.

4. Network Plugins and Providers:

OpenShift networking supports various network plugins and providers, allowing users to choose the networking solution that best fits their requirements. Some popular options include Open vSwitch (OVS), Flannel, Calico, and Multus. These plugins provide additional capabilities such as network isolation, advanced routing, and security features.

5. Network Monitoring and Troubleshooting:

OpenShift provides robust monitoring and troubleshooting tools to help administrators track network performance and resolve issues. The platform integrates with monitoring systems like Prometheus, allowing users to collect and analyze network metrics. Additionally, OpenShift provides logging and debugging features to aid in identifying and resolving network-related problems.

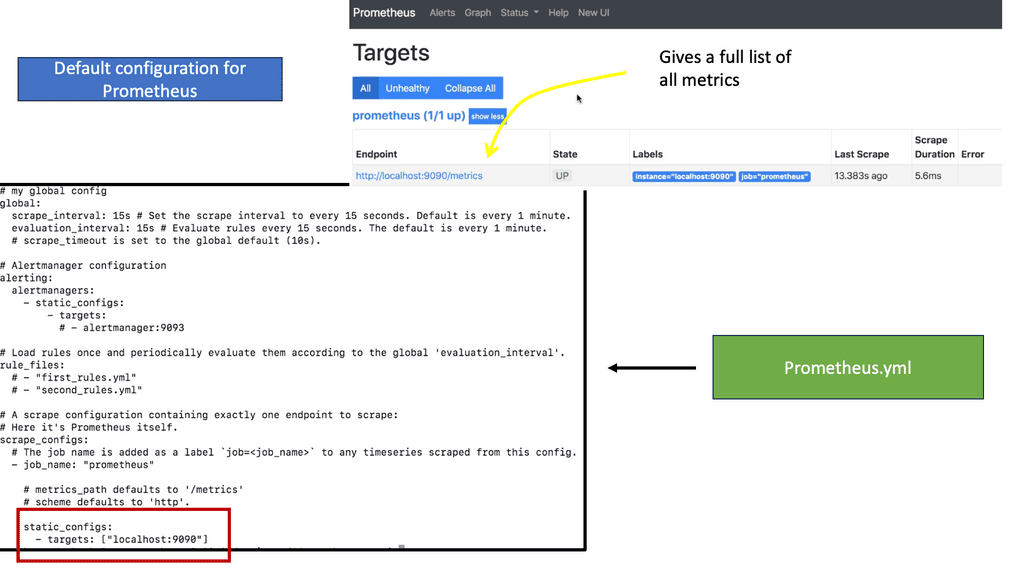

Example Technology: Prometheus Pull Approach

**The Basics: What is the Prometheus Pull Approach?**

Prometheus utilizes a pull-based data collection model, meaning it periodically scrapes metrics from configured endpoints. This contrasts with the push model, where monitored systems send the data to a central server. The pull approach allows Prometheus to control the rate of data collection and manage the load on both the server and clients efficiently. This decoupling of data collection from data processing is a key feature that offers significant flexibility and control.
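The pull loop can be sketched in a few lines. This is a toy model, not Prometheus itself: each target is a plain Python callable standing in for an HTTP GET on a `/metrics` endpoint, the target names are hypothetical, and the parser handles only simple `name value` lines without timestamps.

```python
from typing import Callable, Dict


def parse_metrics(text: str) -> Dict[str, float]:
    """Parse simple 'name value' lines, skipping comments and blank lines."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, value = line.rsplit(" ", 1)
        samples[name] = float(value)
    return samples


def scrape_all(targets: Dict[str, Callable[[], str]]) -> Dict[str, Dict[str, float]]:
    """Pull metrics from every target; the collector decides when to ask."""
    results = {}
    for name, fetch in targets.items():
        try:
            results[name] = parse_metrics(fetch())
            results[name]["up"] = 1.0   # scrape succeeded
        except Exception:
            results[name] = {"up": 0.0}  # scrape failed: target is down
    return results
```

Because the collector initiates every scrape, a failed pull doubles as a health signal, which is what the `up` value records here.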

**Advantages of the Pull Model**

One of the primary advantages of the pull model is that it enables dynamic service discovery. Prometheus can automatically adjust to changes in the infrastructure, such as scaling up or down, by discovering new targets as they come online. This eliminates the need for manual configuration and reduces the risk of stale or missing data. Additionally, the pull model simplifies security configurations, as it requires fewer open ports and allows for more fine-grained access control.

OpenShift Networking Features

OpenShift Networking offers many features that empower developers and administrators to build and manage scalable applications. Some notable features include:

1. SDN Integration: OpenShift seamlessly integrates with Software-Defined Networking (SDN) solutions, allowing for flexible network configurations and efficient traffic routing.

2. Multi-tenancy Support: With OpenShift Networking, you can create isolated network zones, enabling multiple teams or projects to coexist within the same cluster while maintaining secure communication.

3. Service Load Balancing: OpenShift Networking incorporates built-in load balancing capabilities, distributing incoming traffic across multiple instances of a service, thus ensuring high availability and optimal performance.

**Key Challenges: Traditional data center**

Several challenges with traditional data center networks prove they cannot support today’s applications, such as microservices and containers. Therefore, we need a new set of networking technologies built into OpenShift SDN to deal adequately with today’s landscape changes.

– Firstly, traditional networks tightly couple all the networking and infrastructure components. With traditional data center networking, Layer 4 is coupled with the network topology at fixed network points and lacks the flexibility to support today’s containerized applications, which are more agile than traditional monolithic applications.

– Secondly, containers are short-lived and constantly spun up and down. Assets that support the application, such as IP addresses, firewalls, policies, and the overlay networks that glue the connectivity together, are continually recycled. These changes bring a lot of agility and business benefits, but they contrast sharply with a traditional network, which is relatively static and where changes happen every few months.

OpenShift networking – Two layers

- In the case of OpenShift itself deployed in the virtual environment, the physical network equipment directly determines the underlying network topology. OpenShift does not control this level, which provides connectivity to OpenShift masters and nodes.

- OpenShift SDN plugin determines the virtual network topology. At this level, applications are connected, and external access is provided.

OpenShift uses an overlay network based on VXLAN to enable containers to communicate with each other. Layer 2 (Ethernet) frames can be transferred across Layer 3 (IP) networks using the Virtual Extensible Local Area Network (VXLAN) protocol.

Whether the communication is limited to pods within the same project or completely unrestricted depends on the SDN plugin being used. The network topology remains the same regardless of which plugin is used. OpenShift makes its internal SDN plugins available out-of-the-box and for integration with third-party SDN frameworks.

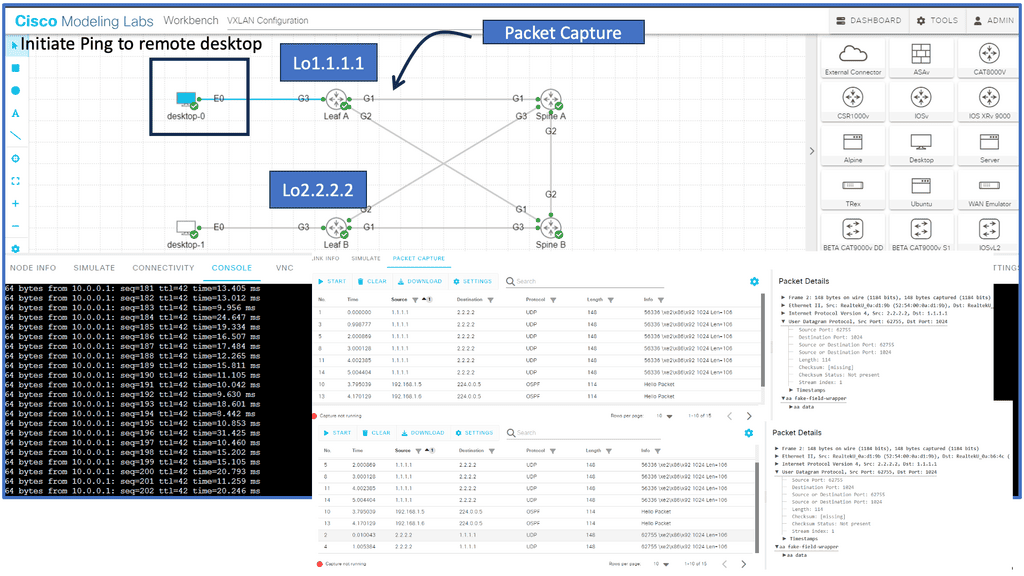

Example Overlay Technology: VXLAN

VXLAN is a network virtualization technology that extends Layer 2 networks over a Layer 3 infrastructure. This is achieved by encapsulating Ethernet frames within UDP packets, allowing them to traverse Layer 3 networks seamlessly. The primary goal of VXLAN is to address the limitations of traditional VLANs, particularly their scalability issues.

**Advantages of VXLAN**

One of the standout features of VXLAN is its capacity to support up to 16 million unique identifiers, vastly exceeding the 4096 limit of VLANs. This scalability makes it ideal for large-scale data centers and cloud environments. Additionally, VXLAN enhances network flexibility by enabling the creation of isolated virtual networks over a shared physical infrastructure, thereby optimizing resource utilization and improving security.
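The numbers follow directly from the header layout: a VLAN ID is 12 bits wide, while the VXLAN Network Identifier (VNI) is 24 bits wide. The sketch below packs the 8-byte VXLAN header defined in RFC 7348 and prepends it to an inner Ethernet frame. The function names are hypothetical, and the outer UDP, IP, and Ethernet headers that a real VTEP would add are omitted.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned destination port for VXLAN
VLAN_ID_BITS = 12       # 2**12 = 4096 VLAN IDs
VNI_BITS = 24           # 2**24 = 16,777,216 VXLAN segments


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags, 24 reserved bits, VNI, 8 reserved bits."""
    if not 0 <= vni < 2 ** VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # 'I' flag set: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix a Layer 2 frame with the VXLAN header (the UDP payload)."""
    return vxlan_header(vni) + inner_frame


if __name__ == "__main__":
    print(2 ** VLAN_ID_BITS, 2 ** VNI_BITS)
    print(vxlan_header(42).hex())
```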

OpenShift Networking Plugins

### Understanding OpenShift Networking Plugins

OpenShift networking plugins are essential for managing network traffic within a cluster. They determine how pods communicate with each other and with external networks. The choice of networking plugin can significantly impact the performance and security of your applications. OpenShift supports several plugins, each with distinct features, allowing administrators to tailor their network configuration to specific needs.

### Popular Networking Plugins in OpenShift

1. **OVN-Kubernetes**: This plugin is widely used for its scalability and support for network policies. It provides a flexible, secure, and scalable network solution that is ideal for complex deployments. OVN-Kubernetes integrates seamlessly with Kubernetes, offering enhanced network isolation and simplified network management.

2. **Calico**: Known for its simplicity and efficiency, Calico provides high-performance network connectivity. It excels in environments that require fine-grained network policies and is particularly popular in microservices architectures. Calico’s support for IPv6 and integration with Kubernetes NetworkPolicy API makes it a versatile choice.

3. **Flannel**: A simpler alternative, Flannel is suitable for users who prioritize ease of setup and basic networking functionality. It uses a flat network model, which is straightforward to configure and manage, making it a good choice for smaller clusters or those in the early stages of development.

### Choosing the Right Plugin

Selecting the appropriate networking plugin for your OpenShift environment depends on several factors, including your organization’s security requirements, scalability needs, and existing infrastructure. For instance, if your primary concern is network isolation and security, OVN-Kubernetes might be the best fit. On the other hand, if you need a lightweight solution with minimal overhead, Flannel could be more suitable.

### Implementing and Managing Networking Plugins

Once you’ve chosen a networking plugin, the next step is implementation. This involves configuring the plugin within your OpenShift cluster and ensuring it aligns with your network policies. Regular monitoring and management are crucial to maintaining optimal performance and security. Utilizing OpenShift’s built-in tools and dashboards can greatly aid in this process, providing visibility into network traffic and potential bottlenecks.


OpenShift Networking

Networking Overview

1. Pod Networking: In OpenShift, containers are encapsulated within pods, the smallest deployable units. Each pod has its own IP address and can communicate with other pods within the same project or across different projects. This enables seamless communication and collaboration between applications running on different pods.

2. Service Networking: OpenShift introduces the concept of services, which act as stable endpoints for accessing pods. Services provide a layer of abstraction, allowing applications to communicate with each other without worrying about the underlying infrastructure. With service networking, you can easily expose your applications to the outside world and manage traffic efficiently.

3. Ingress and Egress: OpenShift provides a robust routing infrastructure through its built-in Ingress Controller. It lets you define rules and policies for accessing your applications from outside the cluster. To ensure seamless connectivity, you can configure routing paths, load balancing, SSL termination, and other advanced features.

4. Network Policies: OpenShift enables fine-grained control over network traffic through network policies. You can define rules to allow or deny communication between pods based on their labels and namespaces. This helps enforce security measures and isolate sensitive workloads from unauthorized access.

5. Multi-Cluster Networking: OpenShift allows you to connect multiple clusters, creating a unified networking fabric. This enables you to distribute your applications across different clusters, improving scalability and fault tolerance. OpenShift’s intuitive interface makes managing and monitoring your multi-cluster environment easy.

6. Network Policies and Security: One key aspect of OpenShift Networking is its support for network policies. These policies allow administrators to define fine-grained rules and access controls, ensuring secure communication between different components within the cluster. With OpenShift Networking, organizations can enforce policies that restrict traffic flow, implement encryption mechanisms, and safeguard sensitive data from unauthorized access.

7. Service Discovery and Load Balancing: Service discovery and load balancing are crucial for maintaining high availability and optimal performance in a dynamic container environment. OpenShift Networking offers robust mechanisms for service discovery, allowing containers to locate and communicate with one another seamlessly. Additionally, it provides load-balancing capabilities to distribute incoming traffic efficiently across multiple instances, ensuring optimal resource utilization and preventing bottlenecks.

8. Network Plugin Options: OpenShift Networking offers a range of network plugin options, allowing organizations to select the most suitable solution for their specific requirements. Whether it is the native OpenShift SDN (Software-Defined Networking) plugin, the popular Flannel plugin, or the versatile Calico plugin, OpenShift provides flexibility and compatibility with different network architectures and setups.

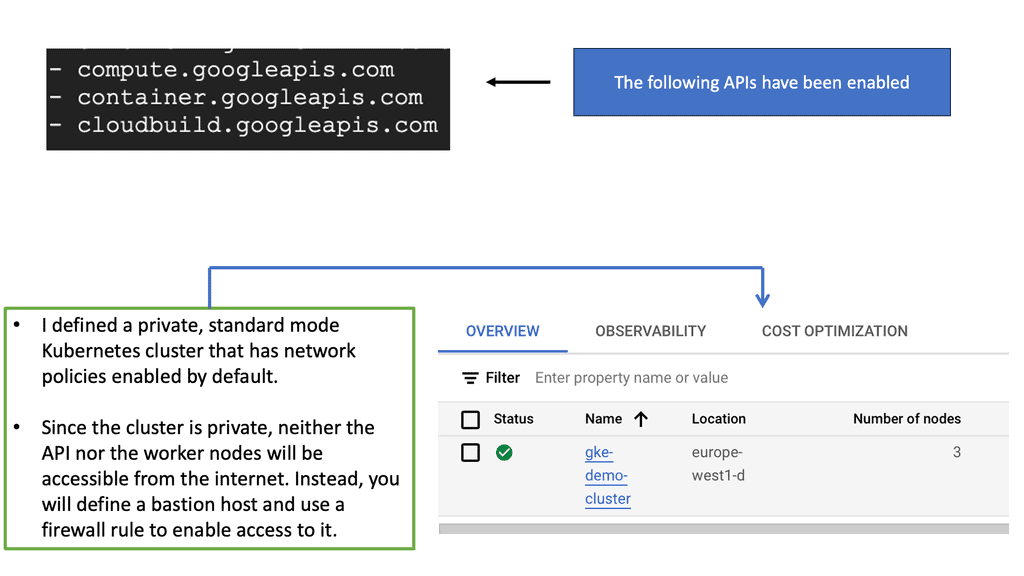

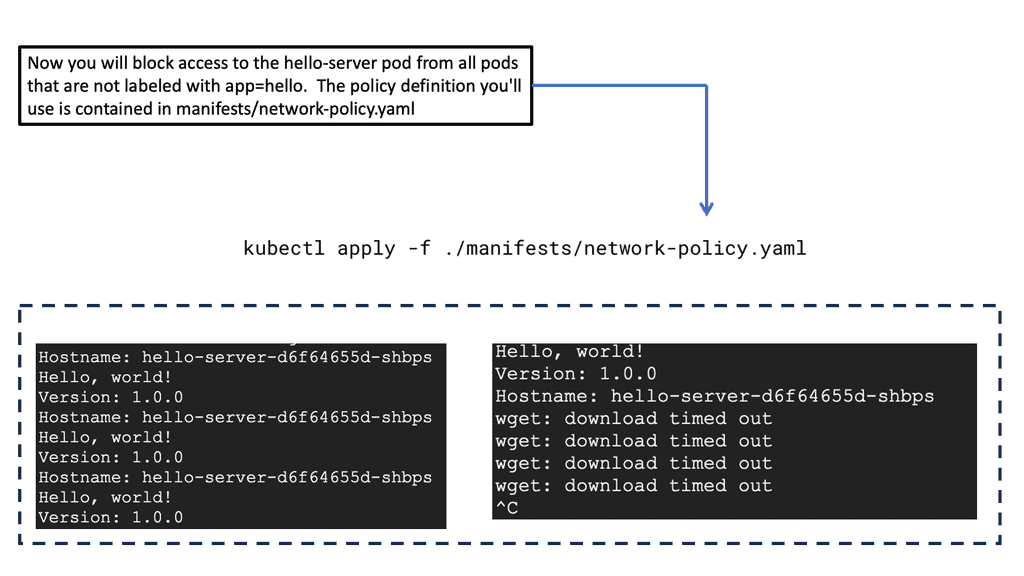

Example Network Policy Technology: GKE Kubernetes

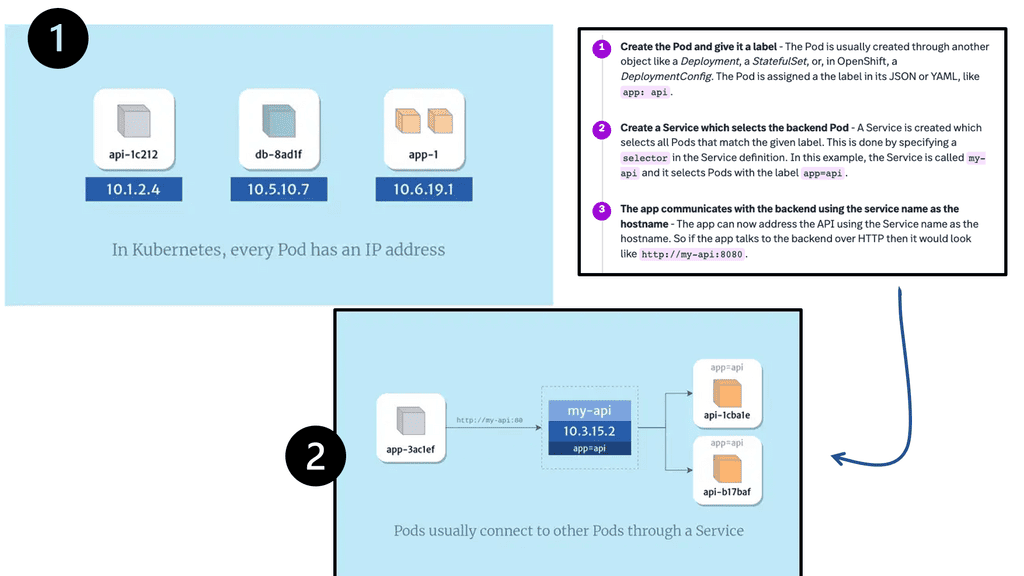

Pod Networking

Each pod in Kubernetes is assigned an IP address from an internal network that allows pods to communicate with each other. As a result, all containers within a pod behave as if they were on the same host. Giving each pod its own IP address means pods can be treated like physical hosts or virtual machines in terms of port allocation, networking, naming, service discovery, load balancing, application configuration, and migration.

Creating links between pods is unnecessary, and pods should not normally talk to each other directly by IP address. Instead, create a service and interact with the pods through it.

OpenShift Container Platform DNS

When running multiple services, such as a frontend and a backend, environment variables are created for user names, service IPs, and more so that the frontend pods can communicate with the backend services. If the service is deleted and recreated, it may be assigned a new IP address, and the frontend pods must be recreated to pick up the updated value of the service IP environment variable.

To ensure that the IP address for the backend service is generated correctly and that it can be passed to the frontend pods as an environment variable, the backend service must be created before any frontend pods.

To avoid these constraints, the OpenShift Container Platform has a built-in DNS, so a service can be reached by its DNS name as well as by its service IP and port. OpenShift Container Platform supports split DNS by running SkyDNS on the master, which answers DNS queries for services. By default, the master listens on port 53.
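The difference between the two discovery mechanisms can be sketched as follows. Kubernetes injects `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT` variables into pods started after the service exists, and service DNS names follow the `<service>.<namespace>.svc.<cluster-domain>` pattern. The service name `backend`, the namespace `shop`, and the `cluster.local` domain used here are assumptions for illustration.

```python
import os


def service_env_names(service: str) -> tuple:
    """Names of the environment variables injected for a service."""
    prefix = service.upper().replace("-", "_")
    return f"{prefix}_SERVICE_HOST", f"{prefix}_SERVICE_PORT"


def service_dns_name(service: str, namespace: str,
                     cluster_domain: str = "cluster.local") -> str:
    """DNS name that stays valid even if the service IP changes."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def backend_address(service: str, namespace: str, default_port: int, env=None):
    """Prefer injected variables if present; otherwise fall back to DNS."""
    env = os.environ if env is None else env
    host_var, port_var = service_env_names(service)
    if host_var in env and port_var in env:
        return env[host_var], int(env[port_var])
    return service_dns_name(service, namespace), default_port


if __name__ == "__main__":
    print(backend_address("backend", "shop", 8080))
```

The DNS path has no ordering requirement: the frontend can start before the backend service exists and still resolve it later.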

Pod Network & Pod Communication

As a general rule that holds for all Kubernetes clusters, an IP address is assigned to each Pod. While Pods can communicate directly with each other by addressing their IP addresses, it is recommended that they use Services instead. A Service is a set of Pods accessed through a single, fixed DNS name or IP address. The majority of Kubernetes applications use Services to communicate. Since Pods can be restarted frequently, addressing them directly by name or IP is highly brittle. Instead, use a Service to reach another Pod.

Simple pod-to-pod communication

The first thing to understand is how Pods communicate within Kubernetes. Kubernetes provides IP addresses for each Pod. IP addresses are used to communicate between pods at a very primitive level. Therefore, you can directly address another Pod using its IP address whenever needed.

A Pod has the same characteristics as a virtual machine (VM), which has an IP address, exposes ports, and interacts with other VMs on the network via IP address and port.

What is the communication mechanism between the front-end pod and the back-end pod? In a web application architecture, a front-end application is expected to talk to a backend, an API, or a database. In Kubernetes, the front and back end would be separated into two Pods.

The front end could be configured to communicate directly with the back end via its IP address. However, a front end would still need to know the backend’s IP address, which can be tricky when the Pod is restarted or moved to another node. Using a Service can make our solution less brittle.

Because the app still communicates with the API pods via the Service, which has a stable IP address, if the Pods die or need to be restarted, this won’t affect the app.

How do containers in the same Pod communicate?

Sometimes, you may need to run multiple containers in the same Pod. Containers in the same Pod share one IP address, so they can use localhost to communicate with each other. For example, a container in a Pod can use the address localhost:8080 to communicate with another container in the Pod that listens on port 8080.

Because the IP address is shared and communication occurs on localhost, two containers in the same Pod cannot listen on the same port. For instance, you wouldn’t be able to have two containers in the same Pod that both expose port 8080, so make sure the containers use different ports.
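The port clash can be reproduced on any single machine, because two processes on one host share a loopback interface in much the same way that two containers share a Pod's network namespace. The sketch below is an analogy under that assumption, not a Pod; the function name is hypothetical.

```python
import socket


def second_bind_fails() -> bool:
    """Bind two TCP sockets to the same loopback port; report whether the second fails."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind(("127.0.0.1", port))  # same address and port as 'first'
        except OSError:
            return True   # address already in use
        return False
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    print("second bind failed:", second_bind_fails())
```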

In Kubernetes, pods can communicate with each other in a few different ways:

- Containers in the same Pod can connect using localhost and the port number exposed by the other container.

- A container in a Pod can connect to another Pod using its IP address. To find the IP address of a Pod, you can use oc get pods -o wide.

- A container can connect to another Pod through a Service. A Service has an IP address and usually a DNS name, such as my-service.

OpenShift and Pod Networking

When you initially deploy OpenShift, a private pod network is created. Each pod in your OpenShift cluster is assigned an IP address on the pod network, which is used to communicate with each pod across the cluster.

The pod network spans all nodes in your cluster and is extended to each new application node as it is added to the cluster. The pod network’s IP address range must not overlap with any network that OpenShift might need to communicate with. OpenShift’s internal network routing follows the same rules as any other network, and multiple destinations for the same IP address lead to confusion.
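A quick way to check for such a clash before installation is to compare the planned pod network range against the networks the cluster must reach. The sketch below uses Python's standard `ipaddress` module; the CIDR ranges are illustrative values, not a recommendation.

```python
import ipaddress


def conflicting_networks(pod_cidr: str, external_cidrs: list) -> list:
    """Return the external networks whose address range overlaps the pod network."""
    pod_net = ipaddress.ip_network(pod_cidr)
    return [
        cidr for cidr in external_cidrs
        if pod_net.overlaps(ipaddress.ip_network(cidr))
    ]


if __name__ == "__main__":
    # Hypothetical pod network and corporate networks.
    clashes = conflicting_networks(
        "10.128.0.0/14",
        ["10.130.0.0/16", "192.168.10.0/24", "10.0.0.0/8"],
    )
    print(clashes)
```

Any range returned by the helper would be unreachable from pods, since traffic to it would be routed inside the pod network instead.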

**Endpoint Reachability**

Not only have endpoints changed, but so have the ways we reach them. The application stack previously had very few components, maybe just a cache, a web server, and a database. The most common network service simply allowed a source to reach an application endpoint, or used a load-balancing algorithm to spread traffic across several endpoints.

A simple round-robin or a load balancer that measured load was standard. Essentially, the sole purpose of the network was to provide endpoint reachability. However, changes inside the data center are driving networks and network services toward becoming more integrated with the application.
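Both approaches fit in a few lines. The sketch below shows a round-robin picker and a load-aware picker that chooses the endpoint with the fewest active connections; the class names and endpoint labels are hypothetical, and real load balancers add health checks and weighting on top.

```python
import itertools


class RoundRobin:
    """Hand out endpoints in a fixed rotating order."""

    def __init__(self, endpoints):
        self._cycle = itertools.cycle(list(endpoints))

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the endpoint that currently has the fewest active connections."""

    def __init__(self, endpoints):
        self.active = {endpoint: 0 for endpoint in endpoints}

    def pick(self):
        # Ties go to the endpoint that was registered first.
        endpoint = min(self.active, key=self.active.get)
        self.active[endpoint] += 1
        return endpoint

    def release(self, endpoint):
        """Call when a connection to the endpoint finishes."""
        self.active[endpoint] -= 1


if __name__ == "__main__":
    rr = RoundRobin(["pod-a", "pod-b", "pod-c"])
    print([rr.pick() for _ in range(5)])
```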

Nowadays, the network function no longer exists solely to satisfy endpoint reachability; it is fully integrated with the application. In the case of Red Hat’s OpenShift, the network is represented as a Software-Defined Networking (SDN) layer. SDN means different things to different vendors, so let me clarify what it means in terms of OpenShift.

Highlighting software-defined network (SDN)

When you examine traditional networking devices, you see the control and forwarding planes shared on a single device. The concept of SDN separates these two planes, i.e., the control and forwarding planes are decoupled. They can now reside on different devices, bringing many performance and management benefits.

The benefits of network integration and decoupling make it much easier for the applications to be divided into several microservice components driving the microservices culture of application architecture. You could say that SDN was a requirement for microservices.

Challenges to Docker Networking

Port mapping and NAT

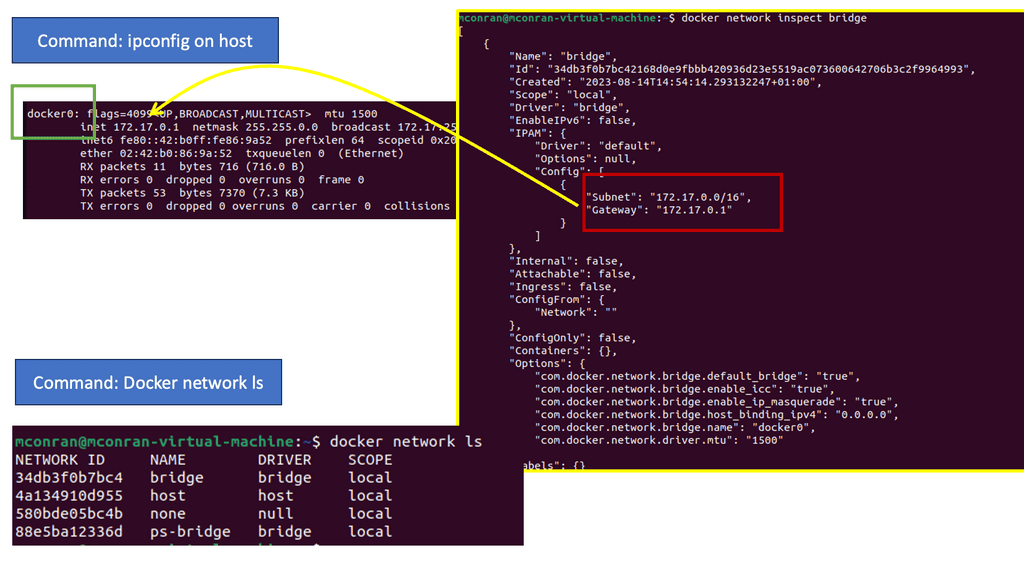

Docker containers have been around for a while, but networking had significant drawbacks when they first came out. For example, Docker containers connect to a bridge on the node where the Docker daemon is running, and that design brings its own challenges.

To allow network connectivity between those containers and any endpoint external to the node, we need to do some port mapping and Network Address Translation (NAT). This adds complexity.

Port Mapping and NAT have been around for ages. Introducing these networking functions will complicate container networking when running at scale. It is perfectly fine for 3 or 4 containers, but the production network will have many more endpoints. The origins of container networking are based on a simple architecture and primarily a single-host solution.
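A toy model of the host's port-mapping table shows where the complexity comes from: every published container port needs its own unique host port, and someone has to keep track of the assignments. The class name and addresses below are hypothetical and only mimic the bookkeeping, not the actual NAT rules on the host.

```python
class PortMapper:
    """Minimal model of host-port to container-address mappings on one node."""

    def __init__(self):
        self._table = {}  # host_port -> (container_ip, container_port)

    def publish(self, host_port: int, container_ip: str, container_port: int) -> None:
        """Reserve a host port for a container; each host port can be used once."""
        if host_port in self._table:
            raise ValueError(f"host port {host_port} is already mapped")
        self._table[host_port] = (container_ip, container_port)

    def translate(self, host_port: int) -> tuple:
        """Destination NAT: where does traffic arriving on this host port go?"""
        return self._table[host_port]


if __name__ == "__main__":
    mapper = PortMapper()
    # Two web containers both listen on port 80 internally,
    # so they must be published on different host ports.
    mapper.publish(8080, "172.17.0.2", 80)
    mapper.publish(8081, "172.17.0.3", 80)
    print(mapper.translate(8081))
```

With three or four containers the table is easy to manage by hand; with hundreds of short-lived containers across many hosts it is not.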

Docker at scale: Orchestration layer

The core building blocks of containers, such as namespaces and control groups, are battle-tested. Although the Docker engine manages containers by leveraging Linux kernel resources, it is limited to a single host operating system. Once you get past three hosts, networking is hard to manage. Everything needs to be spun up in a particular order, and maintaining consistent network connectivity and security regardless of workload mobility becomes a challenge.

This led to an orchestration layer. Just as a container is an abstraction over the physical machine, the container orchestration framework is an abstraction over the network. This brings us to the Kubernetes networking model, which OpenShift takes advantage of and enhances; for example, the OpenShift Route construct exposes applications for external access.

The Kubernetes model: Pod networking

As we discussed, the Kubernetes networking model was developed to simplify Docker container networking, which had drawbacks. It introduced the concept of the Pod and Pod networking, allowing multiple containers inside a Pod to share a network namespace and IP address. They can communicate with each other via IPC or localhost.

Nowadays, we place a single container into a pod, which acts as a boundary layer for any cluster parameters directly affecting the container. So, we run deployment against pods rather than containers.

In OpenShift, we can assign networking and security parameters to Pods that will affect the container inside. When an app is deployed on the cluster, each Pod gets an IP assigned, and each Pod could have different applications.

For example, Pod 1 could have a web front end, and Pod 2 could be a database, so the Pods need to communicate. For this, we need a network and IP addresses. By default, Kubernetes allocates an internal IP address to each Pod for the applications running within it. Pods and their containers can reach each other over the network, but clients outside the cluster cannot access internal cluster resources by default. With Pod networking, every Pod must be able to communicate with every other Pod in the cluster without Network Address Translation (NAT).

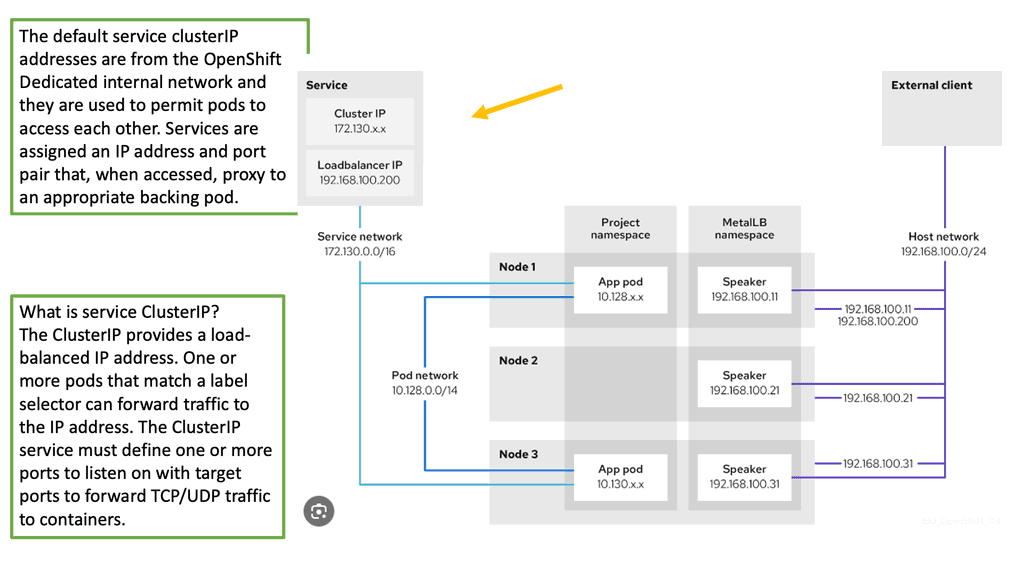

A typical service type: ClusterIP

The most common service IP address type is “ClusterIP.” ClusterIP is a persistent virtual IP address used for load-balancing traffic internal to the cluster. Services of this type cannot be accessed directly from outside the cluster; there are other service types for that requirement.

The ClusterIP service type handles East-West traffic, since the traffic originates from Pods running in the cluster and goes to a service IP backed by Pods that also run in the cluster.

Then, to enable external access to the cluster, we need to expose the services that the Pod or Pods represent, and this is done with an OpenShift Route that provides a URL. So, we have a service in front of the Pod or group of Pods, and by default it is for internal access only. A URL-based route then gives the internal service external access.
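The relationship between a ClusterIP service and a Route can be sketched as two small manifests. The Python below builds them as plain dictionaries and prints them as JSON, which could then be fed to a tool such as `oc apply -f -`. The service name `frontend`, the `app` label, the ports, and the hostname are hypothetical; treat this as an illustrative sketch rather than a recommended configuration.

```python
import json


def cluster_ip_service(name, app_label, port, target_port):
    """Internal-only service: a stable virtual IP in front of matching Pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def route_for(service, host):
    """Route that exposes an existing service under an external hostname."""
    name = service["metadata"]["name"]
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            "host": host,
            "to": {"kind": "Service", "name": name},
            "port": {"targetPort": service["spec"]["ports"][0]["targetPort"]},
        },
    }


# Hypothetical names and ports, used only for this example.
service = cluster_ip_service("frontend", "frontend", port=80, target_port=8080)
route = route_for(service, "frontend.apps.example.com")

print(json.dumps(service, indent=2))
print(json.dumps(route, indent=2))
```

The Route does not point at Pods directly. It references the service by name, and the service's selector decides which Pods receive the traffic.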

Using an OpenShift Load Balancer

Get Traffic into the Cluster

If you do not need a specific external IP address, OpenShift Container Platform clusters can be accessed externally through an OpenShift load balancer service. The OpenShift load balancer allocates unique IP addresses from configured pools. A load balancer has a single edge router IP, which can be a virtual IP (VIP), but it is still a single machine for initial load balancing. How many OpenShift load balancers are there in OpenShift?

Two load balancers

The solution supports several load balancer configuration options. Use the playbooks to configure two load balancers for highly available production deployments, or use the playbooks to configure a single load balancer, which is helpful for proof-of-concept deployments. Deploy the solution using your OpenShift load balancer.

This process involves the following:

- The administrator performs the prerequisites.

- The developer creates a project and service if the service to be exposed does not exist.

- The developer exposes the service to create a route.

- The developer creates the load balancer service.

- The network administrator configures networking to the service.
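As a rough illustration of the "unique IP addresses from configured pools" idea and of the load balancer service created in the steps above, the following Python sketch pairs a toy address pool with a `LoadBalancer`-type service manifest. The `ExternalIPPool` class, the `192.0.2.0/29` documentation range, and the service names are hypothetical and exist only for this example; in a real cluster the platform or cloud provider performs the allocation.

```python
import ipaddress
import json


class ExternalIPPool:
    """Toy allocator: hands out each address in a CIDR at most once."""

    def __init__(self, cidr):
        self._free = iter(ipaddress.ip_network(cidr).hosts())
        self.assigned = {}

    def allocate(self, service_name):
        # A service keeps the address it was given the first time.
        if service_name not in self.assigned:
            self.assigned[service_name] = str(next(self._free))
        return self.assigned[service_name]


def load_balancer_service(name, app_label, port):
    """Service manifest that asks the platform for an external load balancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


pool = ExternalIPPool("192.0.2.0/29")  # assumed pool, documentation range
frontend_lb = load_balancer_service("frontend-lb", "frontend", 8080)
frontend_ip = pool.allocate("frontend-lb")
api_ip = pool.allocate("api-lb")

print(json.dumps(frontend_lb, indent=2))
print(f"frontend-lb external IP: {frontend_ip}")
print(f"api-lb external IP: {api_ip}")
```

Each service receives a distinct address from the pool, and asking again for the same service returns the address it already holds.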

OpenShift load balancer: Different OpenShift SDN networking modes

OpenShift security best practices

So, depending on your OpenShift SDN configuration, you can tailor the network topology differently. You can have free-for-all Pod connectivity, similar to a flat network, or something stricter, with different security boundaries and restrictions. Free-for-all Pod connectivity between all projects might be good for a lab environment.

For production networks with multiple projects, however, you may need to tailor the network with segmentation, which can be done with one of the OpenShift SDN plugins. We will get to this in a moment.

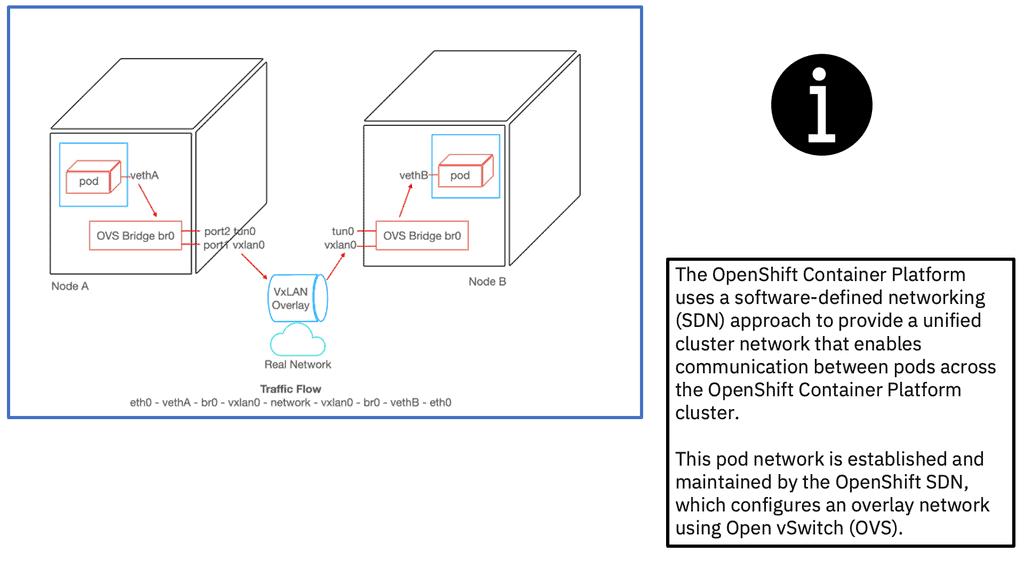

OpenShift networking does this with an SDN layer that enhances Kubernetes networking with a virtual network spanning all the nodes. With the OpenShift SDN, this Pod network is established and maintained as an overlay network built with Open vSwitch (OVS).

The OpenShift SDN plugin

We mentioned that you could tailor the virtual network topology to suit your networking requirements. The OpenShift SDN plugin and the SDN mode you select determine this. With the default OpenShift SDN, several modes are available.

The SDN mode you choose governs how connectivity between applications is managed and how external access to them is provided.

Some modes are more fine-grained than others. How are all these plugins enabled? OpenShift Container Platform (OCP) networking relies on the Kubernetes CNI model, supporting several plugins by default as well as several commercial SDN implementations, including Cisco ACI.

The native plugins rely on the Open vSwitch virtual switch and offer alternative ways of providing segmentation: either the VXLAN virtual network ID (VNID) or Kubernetes NetworkPolicy objects.

We have, for example:

- ovs-subnet

- ovs-multitenant

- ovs-networkpolicy

Choosing the right plugin depends on your security and control goals. As SDNs take over networking, third-party vendors are developing programmable network solutions, and Red Hat has tightly integrated OpenShift with products from such providers. According to Red Hat, the following solutions are production-ready:

- Nokia Nuage

- Cisco Contiv

- Juniper Contrail

- Tigera Calico

- VMWare NSX-T

ovs-subnet plugin

After OpenShift is installed, this plugin is enabled by default. As a result, pods can connect to each other across the entire cluster without limitations, so traffic flows freely between them. This may be undesirable in large multitenant environments where security is a top priority.

ovs-multitenant plugin

Security is usually a lower priority in PoCs and sandboxes but becomes paramount when large enterprises have diverse teams and project portfolios, especially when third parties develop specific applications. A multitenant plugin like ovs-multitenant is an excellent choice if simply separating projects is all you need.

This plugin sets up flow rules on the br0 bridge to ensure that only traffic between pods with the same VNID is permitted, unlike the ovs-subnet plugin, which passes all traffic across all pods. It assigns the same VNID to all pods within a project and keeps VNIDs unique across projects.
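The difference between the two plugins comes down to a single check on the VNID. The sketch below models that decision in Python. It is a simplified model, not how the flow rules on br0 are actually written, and the `MultitenantSDN` class, the project names, and the starting VNID value are invented for illustration.

```python
from itertools import count


class MultitenantSDN:
    """Simplified model of ovs-multitenant: one VNID per project."""

    def __init__(self):
        self._next_vnid = count(start=1)  # assumed starting value
        self.vnid_by_project = {}

    def vnid(self, project):
        # Every pod in a project shares the project's VNID.
        if project not in self.vnid_by_project:
            self.vnid_by_project[project] = next(self._next_vnid)
        return self.vnid_by_project[project]

    def allowed(self, src_project, dst_project):
        # Traffic passes only when both pods carry the same VNID.
        return self.vnid(src_project) == self.vnid(dst_project)


def subnet_allowed(src_project, dst_project):
    """Model of ovs-subnet: a flat network where all pod traffic passes."""
    return True


sdn = MultitenantSDN()
print(sdn.allowed("team-a", "team-a"))     # same project
print(sdn.allowed("team-a", "team-b"))     # different projects
print(subnet_allowed("team-a", "team-b"))  # flat network
```

In this model, pods in `team-a` can talk to each other, traffic from `team-a` to `team-b` is dropped under the multitenant rules, and the flat ovs-subnet model lets everything through.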

ovs-networkpolicy plugin

While the ovs-multitenant plugin provides a simple and largely adequate means for managing access between projects, it does not allow granular control over access. When that is needed, the ovs-networkpolicy plugin can be used to create custom NetworkPolicy objects that, for example, restrict traffic entering or leaving the network.
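As an illustration of what such a policy might look like, the Python sketch below defines a NetworkPolicy manifest as a dictionary and a deliberately simplified evaluator for it. The labels (`app: frontend`, `app: database`), the port, and the policy name are hypothetical, and the evaluator only understands `matchLabels` pod selectors within a single namespace; the real enforcement is done by the SDN plugin, not by code like this.

```python
# Hypothetical policy: only frontend pods may reach database pods on TCP 5432.
policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "allow-frontend-to-db"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "database"}},
        "policyTypes": ["Ingress"],
        "ingress": [
            {
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}


def labels_match(selector, labels):
    """True when every matchLabels entry is present on the pod."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(key) == value for key, value in wanted.items())


def ingress_allowed(policy, src_labels, dst_labels, port):
    """Simplified check of one policy against one connection attempt."""
    spec = policy["spec"]
    if not labels_match(spec["podSelector"], dst_labels):
        # The policy does not select the destination pod, so this
        # sketch treats the connection as unrestricted.
        return True
    for rule in spec["ingress"]:
        from_ok = any(
            labels_match(peer["podSelector"], src_labels) for peer in rule["from"]
        )
        port_ok = any(entry["port"] == port for entry in rule["ports"])
        if from_ok and port_ok:
            return True
    return False


print(ingress_allowed(policy, {"app": "frontend"}, {"app": "database"}, 5432))
print(ingress_allowed(policy, {"app": "batch"}, {"app": "database"}, 5432))
```

Compared with the all-or-nothing project separation of ovs-multitenant, the rule here is scoped to specific pods and a specific port.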

Egress routers

In OpenShift, routers direct ingress traffic from external clients to services, which then forward it to pods. OpenShift also offers a reverse type of router for forwarding egress traffic from pods to external networks. Unlike ingress routers, egress routers are implemented using Squid instead of HAProxy. Egress routers can be helpful in the following situations:

- Masking different external resources used by several applications behind a single global resource. For example, applications may be built by pulling dependencies from different mirrors, while collaboration between their development teams is rather loose. So, instead of getting them to use the same mirror, an operations team can set up an egress router to intercept all traffic directed to those mirrors and redirect it to the same site.

- Redirecting all suspicious requests for specific sites to an audit system for further analysis.

OpenShift supports the following types of egress routers:

- redirect for redirecting traffic to a specific destination IP

- http-proxy for proxying HTTP, HTTPS, and DNS traffic

Summary: OpenShift Networking

In the ever-evolving world of cloud computing, OpenShift has emerged as a robust application development and deployment platform. One crucial aspect that makes it stand out is its networking capabilities. In this blog post, we delved into the intricacies of OpenShift networking, exploring its key components, features, and benefits.

Understanding OpenShift Networking Fundamentals

OpenShift networking operates on a robust and flexible architecture that enables efficient communication between various components within a cluster. It utilizes a combination of software-defined networking (SDN) and network overlays to create a scalable and resilient network infrastructure.

Exploring Networking Models in OpenShift

OpenShift offers different networking models to suit various deployment scenarios. The most common models include Single-Stacked Networking, Dual-Stacked Networking, and Multus CNI. Each model has advantages and considerations, allowing administrators to choose the most suitable option for their specific requirements.

Deep Dive into OpenShift SDN

At the core of OpenShift networking lies the Software-Defined Networking (SDN) solution. It provides the necessary tools and mechanisms to manage network traffic, implement security policies, and enable efficient communication between Pods and Services. We will explore the inner workings of OpenShift SDN, including its components like the SDN controller, virtual Ethernet bridges, and IP routing.

Network Policies in OpenShift

To ensure secure and controlled communication between Pods, OpenShift implements Network Policies. These policies define rules and regulations for network traffic, allowing administrators to enforce fine-grained access controls and segmentation. We will discuss the concept of Network Policies, their syntax, and practical examples to showcase their effectiveness.

Conclusion: OpenShift’s networking capabilities play a crucial role in enabling seamless communication and connectivity within a cluster. By understanding the fundamentals, exploring different networking models, and harnessing the power of SDN and Network Policies, administrators can leverage OpenShift’s networking features to build robust and scalable applications.

In conclusion, OpenShift networking opens up a world of possibilities for developers and administrators, empowering them to create resilient and interconnected environments. By diving deep into its intricacies, one can unlock the full potential of OpenShift networking and maximize the efficiency of their applications.
