Container Networking

Containerization has revolutionized the way we develop, deploy, and manage applications. Organizations have gained newfound flexibility and scalability by encapsulating applications in lightweight, isolated containers. However, as the number of containers increases, so does the networking complexity among them. This blog post will explore container networking, its challenges, solutions, and best practices.

Container networking refers to the communication and connectivity between containers within a distributed system. Unlike traditional monolithic applications, containers are designed to be ephemeral and can be dynamically created, scaled, and destroyed. This dynamic nature necessitates a flexible and efficient networking infrastructure to facilitate seamless communication between containers, regardless of their physical location.

Container networking is the foundation upon which communication between containers and the outside world is established. It allows containers to connect with each other, with other services, and with external networks. In this section, we will cover the fundamental concepts of container networking, including network namespaces, bridges, and virtual Ethernet devices.

There are various networking models and architectures to consider when working with containers. From host networking to overlay networks, each model offers different benefits and trade-offs. We will explore these models in detail, discussing their use cases, advantages, and potential limitations.

While container networking brings flexibility and scalability, it also introduces certain challenges. In this section, we will address common obstacles faced when dealing with container networking, such as IP address management, network isolation, and service discovery. We will provide insights into overcoming these challenges and offer practical solutions.

To ensure smooth and efficient container networking, it is crucial to follow best practices. We will share a set of guidelines and recommendations for implementing container networking effectively. From choosing the appropriate network driver to configuring network security policies, these best practices will empower you to optimize your container networking infrastructure.

Matt Conran

Highlights: Container Networking

Understanding Container Networking

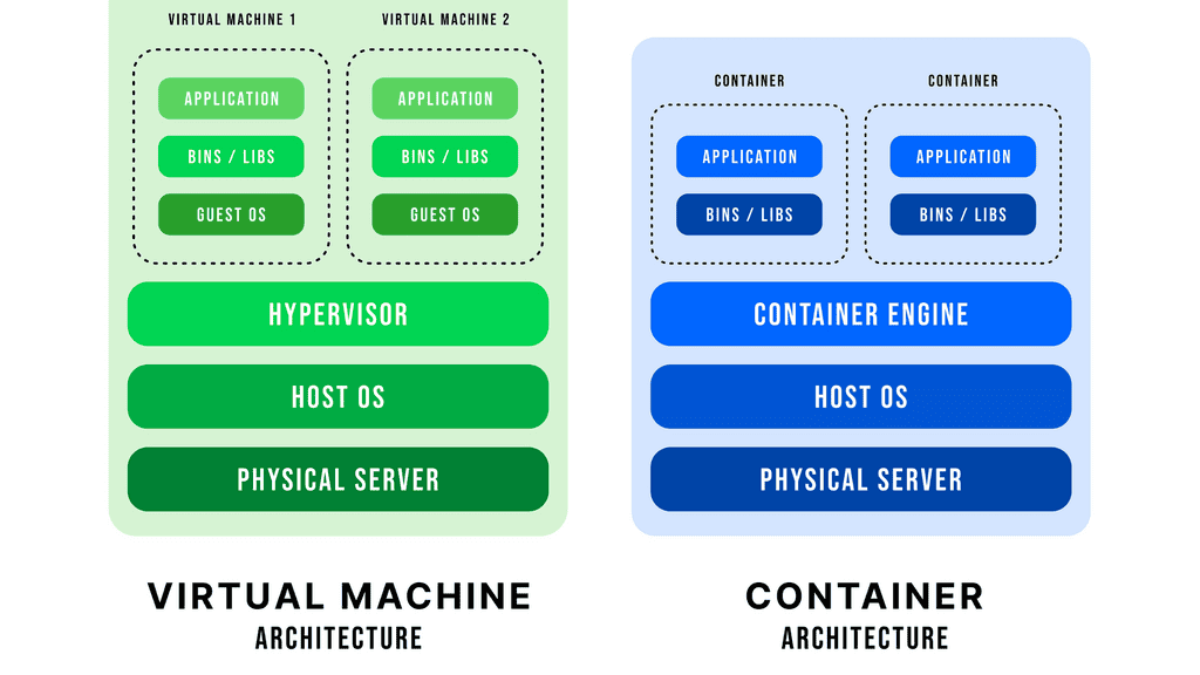

Container networking refers to the process of establishing communication between containers and external networks. Unlike traditional networking methods, container networking provides isolation, scalability, and flexibility, making it ideal for modern application architectures. We can achieve better resource utilization and application performance by encapsulating applications and their dependencies within containers.

Container networking serves as the bridge that connects containers to each other and to external networks. It facilitates communication, data exchange, and resource sharing. This section will delve into the foundational concepts of container networking, covering topics such as network namespaces, virtual Ethernet devices, and bridge networks.

Key Points To Consider:

– Scalability and Resource Optimization: Container networking enables unprecedented scalability by allowing applications to be broken down into smaller, independent containers. These containers can be easily replicated and distributed across a cluster of machines, ensuring efficient resource utilization. With container networking, organizations can effortlessly scale their applications based on demand without incurring unnecessary costs or compromising performance.

– Enhanced Security and Isolation: One of the key advantages of container networking is the built-in security and isolation it offers. Each container operates within its own isolated environment, preventing any potential vulnerabilities from affecting other containers or the underlying host system. Container networking allows for the implementation of fine-grained access controls and network policies, ensuring that sensitive data and critical services remain safeguarded.

– Seamless Communication and Service Discovery: Container networking facilitates seamless communication between containers within and across different hosts. Containers can be connected through virtual networks, enabling them to exchange data and interact with each other effortlessly. Moreover, container orchestration platforms provide built-in service discovery mechanisms, allowing containers to locate and communicate easily with other services in the cluster, further simplifying the development and deployment process.

– Flexibility and Portability: Container networking offers unparalleled flexibility and portability, making it an ideal choice for modern application development. Containers can be easily moved or migrated between hosts, irrespective of the underlying infrastructure. This portability eliminates the need for tedious system configurations, making deployments swift and hassle-free. Furthermore, container networking enables developers to encapsulate the entire application stack, ensuring consistency across different environments, from development to production.

Connecting Containers

Container networking refers to establishing communication channels between containers and external resources, such as other containers, host machines, or the Internet. It allows containers to exchange data and access necessary services while maintaining isolation and security. By comprehending the basics of container networking, we can unlock its potential for building scalable and resilient applications.

Multiple networking models are available for containers, each with its advantages and use cases. We will explore three common models:

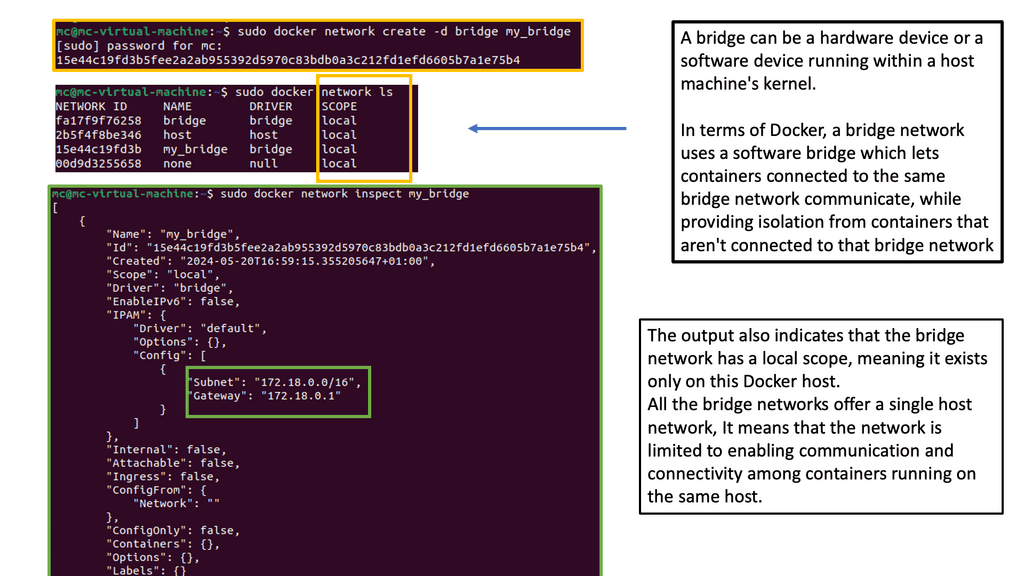

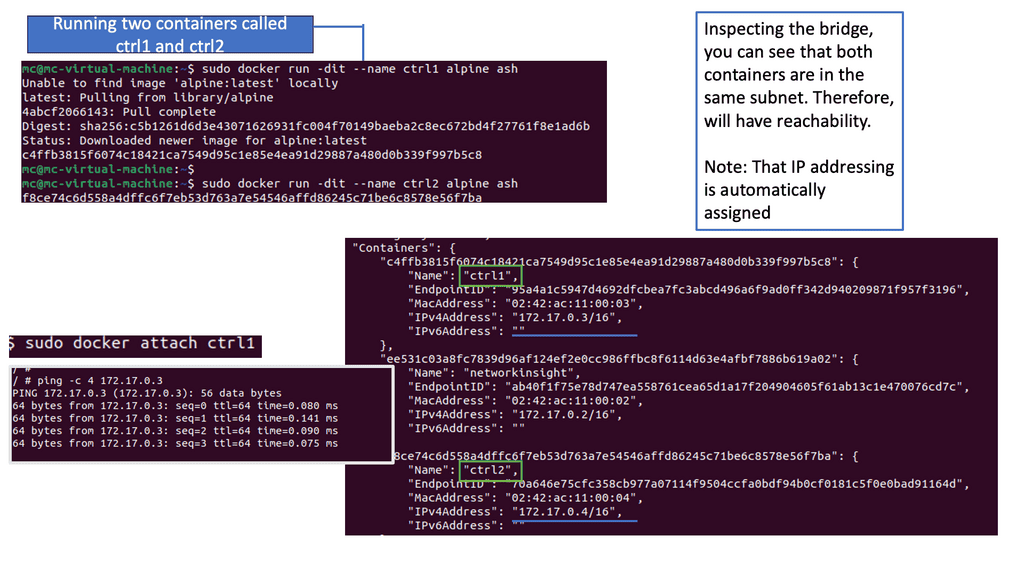

1. Bridge Networking: This model creates a bridge network interface on the host machine, enabling containers to communicate with each other through the bridge. It provides automatic DNS resolution and IP address assignment but lacks direct connectivity to the host network.

2. Overlay Networking: Overlay networks facilitate container communication on different hosts or multiple data centers. By encapsulating container traffic within virtual networks, overlay networking ensures seamless connectivity and flexibility, but it may introduce additional overhead.

3. Host Networking: This model allows containers to share the host network stack, leveraging its IP address and network interfaces. Host networking offers maximum performance but compromises container isolation and may lead to port conflicts.

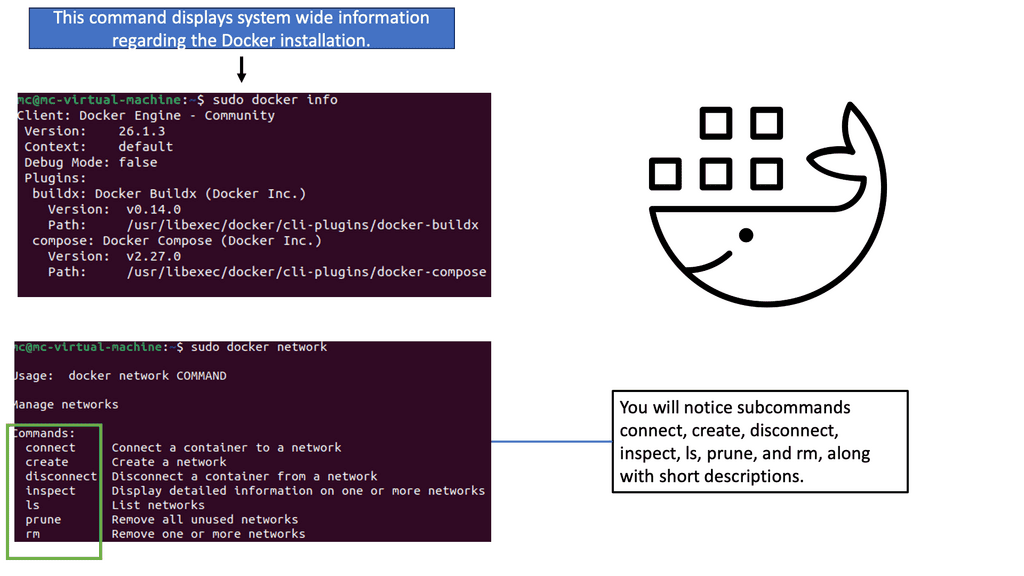

Example: Docker Networking

GKE Network Policies

Why Network Policies Matter

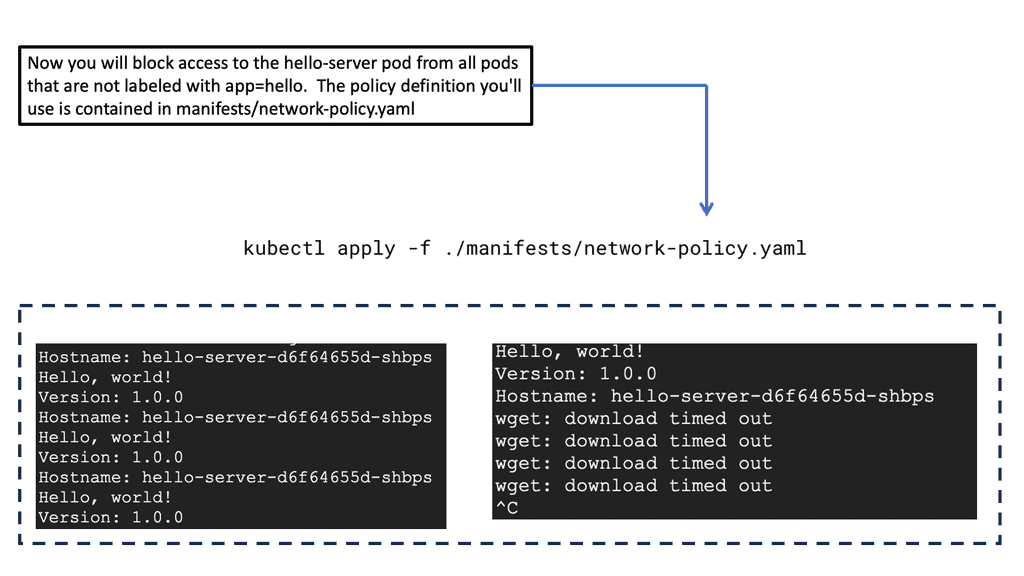

The importance of network policies cannot be overstated. In a typical Kubernetes cluster, all pods can communicate with each other by default. While this might be convenient for development, it poses a significant security risk in production environments. Network policies provide a way to enforce rules that dictate which pods can communicate with each other. This level of control is crucial in maintaining a secure and robust microservices architecture. By implementing well-defined network policies, you can prevent potential attacks, such as lateral movement within the cluster, thus fortifying your application’s security posture.

### Crafting Effective Network Policies

Creating effective network policies requires a thorough understanding of your application’s architecture and communication patterns. Start by mapping out the data flow between your services. Identify which services need to communicate and which ones should be isolated. Use this information to define network policies that permit only the required traffic. When crafting these policies, it’s beneficial to follow the principle of least privilege—allow only what is necessary and deny everything else by default. This approach not only minimizes the attack surface but also simplifies policy management over time.
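
To make the least-privilege idea concrete, here is a minimal Python sketch of how ingress rules could be evaluated. It is a simplified model under stated assumptions, not the Kubernetes implementation: the `Policy` class, the `ingress_allowed` helper, and the label values are all hypothetical, and matching is done on pod labels only.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Simplified ingress policy: selects pods by label and lists allowed sources."""
    pod_selector: dict                               # labels a pod must carry to be selected
    allow_from: list = field(default_factory=list)   # label selectors of allowed sources


def matches(selector: dict, labels: dict) -> bool:
    """A selector matches when every key/value pair is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def ingress_allowed(src_labels: dict, dst_labels: dict, policies: list) -> bool:
    """Return True if traffic from the source pod to the destination pod is permitted.

    A pod that no policy selects accepts all traffic. Once a policy selects it,
    only sources that are explicitly allowed get through.
    """
    selecting = [p for p in policies if matches(p.pod_selector, dst_labels)]
    if not selecting:
        return True  # no policy selects the destination: default allow
    return any(
        matches(source, src_labels)
        for policy in selecting
        for source in policy.allow_from
    )


# Hypothetical labels, for illustration only.
frontend = {"app": "frontend"}
backend = {"app": "backend"}
database = {"app": "db"}

policies = [Policy(pod_selector={"app": "db"}, allow_from=[{"app": "backend"}])]

print(ingress_allowed(backend, database, policies))    # backend may reach the database
print(ingress_allowed(frontend, database, policies))   # frontend is denied
print(ingress_allowed(frontend, backend, policies))    # backend is unselected, so open
```

A policy with an empty `pod_selector` and no `allow_from` entries selects every pod and allows nothing, which is the default-deny starting point for a least-privilege setup.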

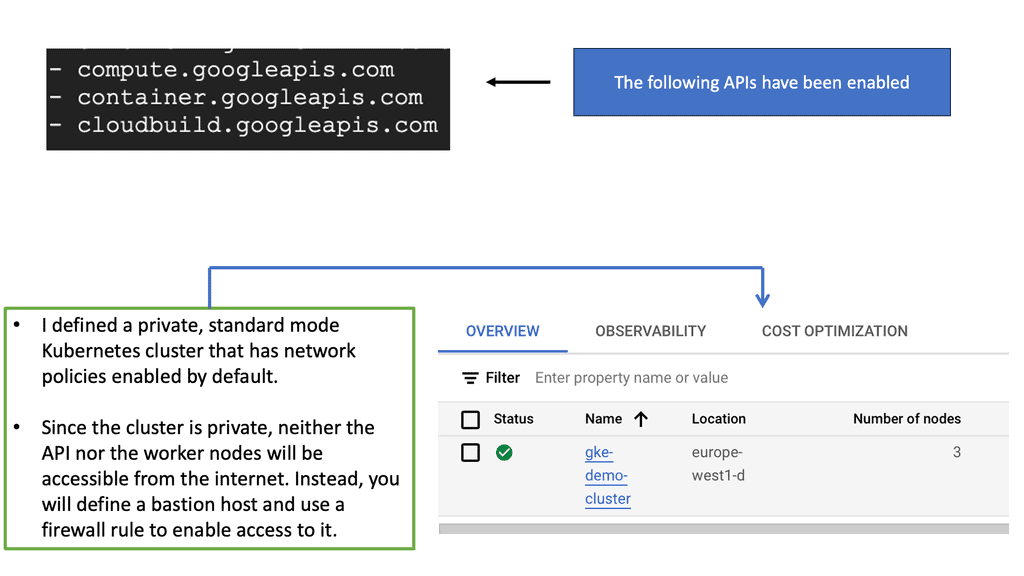

### Implementing Network Policies in GKE

Implementing network policies in GKE involves defining policy resources using YAML configuration files. These files specify the allowed ingress and egress rules for your pods. Begin by enabling network policy enforcement on your GKE cluster. Once enabled, you can apply your custom network policies using the `kubectl` command-line tool. It’s essential to test these policies in a controlled environment before deploying them to production. Regular audits and updates to your network policies are also crucial to adapt to changes in your application’s architecture and security requirements.
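
As a rough sketch of what such policy resources contain, the Python snippet below builds two `NetworkPolicy` manifests as dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The helper names, the namespace `prod`, the labels, and the port are assumptions made for illustration; only the manifest fields themselves come from the Kubernetes `networking.k8s.io/v1` API.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Build a NetworkPolicy that selects every pod and allows no ingress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector matches all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules listed, so all ingress is denied
        },
    }


def allow_ingress(namespace, name, target_labels, source_labels, port) -> dict:
    """Build a NetworkPolicy that lets pods with source_labels reach target pods on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical namespace, labels, and port.
deny_all = default_deny_ingress("prod")
allow_db = allow_ingress("prod", "backend-to-db", {"app": "db"}, {"app": "backend"}, 5432)

print(json.dumps(deny_all, indent=2))
print(json.dumps(allow_db, indent=2))
```

Saving each JSON document to a file and applying it with `kubectl apply -f <file>` would be one way to try this in a test cluster that has network policy enforcement enabled.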

Understanding Docker’s Default Networking

– Docker’s default networking is based on a bridge network driver that creates a virtual network interface on the host machine. This bridge acts as a gateway, enabling containers to communicate with each other and the host. By default, Docker assigns IP addresses from a predefined range to containers, facilitating seamless connectivity within the network.

– One of the fundamental aspects of Docker’s default networking is container-to-container communication. Containers within the same bridge network can effortlessly communicate with each other using their respective IP addresses or container names. This opens up endless possibilities for building complex, interconnected systems composed of microservices.

– While container-to-container communication is vital, Docker also provides mechanisms to connect containers with the external world. We can expose services running inside containers to the host machine or the entire network by mapping container ports to host ports. This allows seamless integration of Dockerized applications with external systems.

– In addition to the default bridge network, Docker offers advanced networking techniques such as overlay networks. Overlay networks allow containers to communicate across multiple Docker hosts, enabling the creation of distributed systems and facilitating scalability. Understanding these advanced networking options expands the possibilities of using Docker in complex scenarios.

Container Orchestration

**Understanding Container Networking**

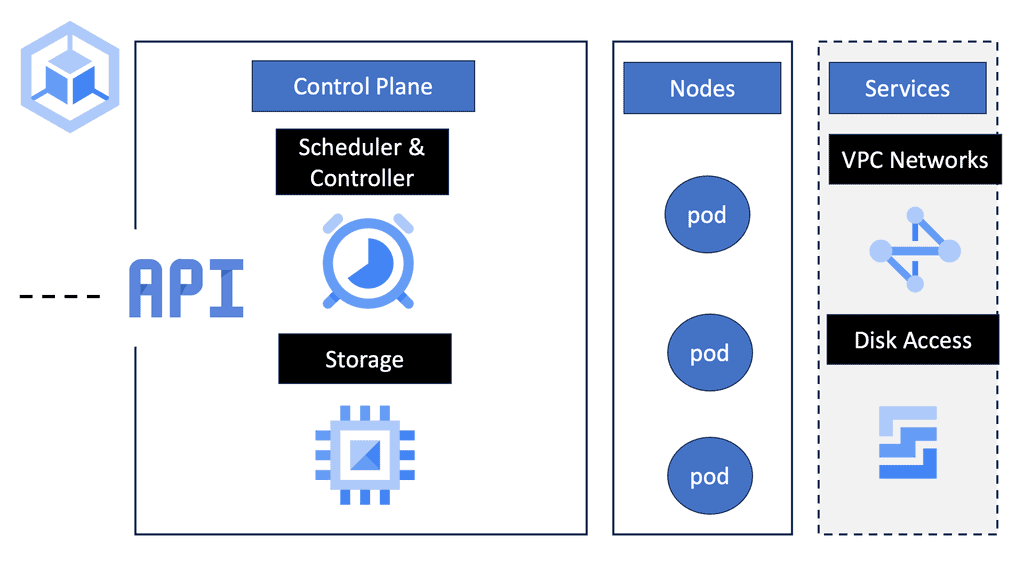

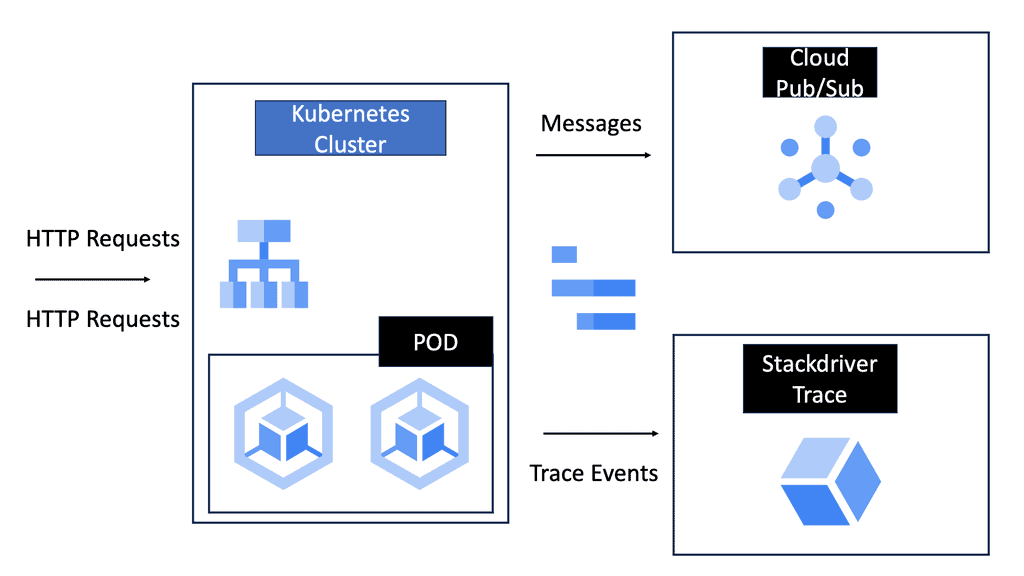

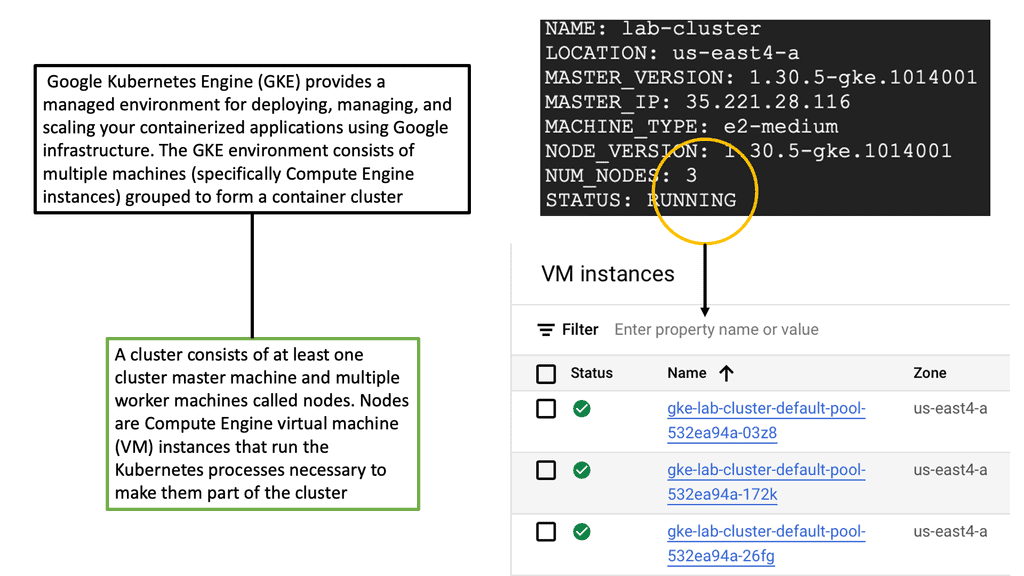

Container networking is a critical aspect of application deployment in GKE. It involves the communication between containers, nodes, and external services. In GKE, this process is streamlined with the integration of Kubernetes networking policies and the use of Google Cloud’s Virtual Private Cloud (VPC). These components work together to provide a secure and efficient networking environment, where each container can communicate with others while maintaining isolation and security.

**The Role of Kubernetes Networking Policies**

Kubernetes networking policies are essential for managing traffic flow within a GKE cluster. These policies define how pods communicate with each other and with external endpoints. By specifying rules for ingress and egress traffic, developers can fine-tune the security and performance of their applications. In GKE, networking policies are implemented using YAML configurations, providing a flexible and scalable approach to managing container networks.

**Integrating Google Cloud’s Virtual Private Cloud (VPC)**

Google Cloud’s VPC plays a pivotal role in enhancing the networking capabilities of GKE. With VPC, users can create isolated networks within Google Cloud, allowing for fine-grained control over IP address ranges, subnets, and routing. This integration ensures that containers within a GKE cluster can securely communicate with other Google Cloud services, on-premises resources, and the internet, while maintaining compliance with organizational security policies.

**Optimizing Performance with Container Networking**

Optimizing container networking in GKE involves balancing performance and security. By leveraging features like Network Endpoint Groups (NEGs) and Cloud Load Balancing, developers can ensure high availability and low latency for their applications. Additionally, monitoring tools provided by Google Cloud, such as Stackdriver, offer insights into network performance, enabling proactive management and troubleshooting of networking issues.

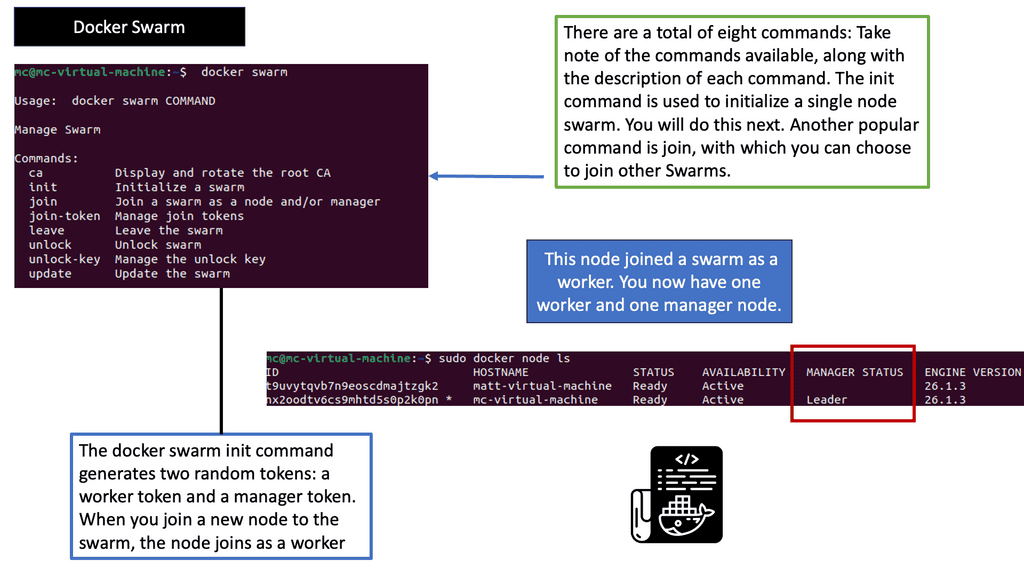

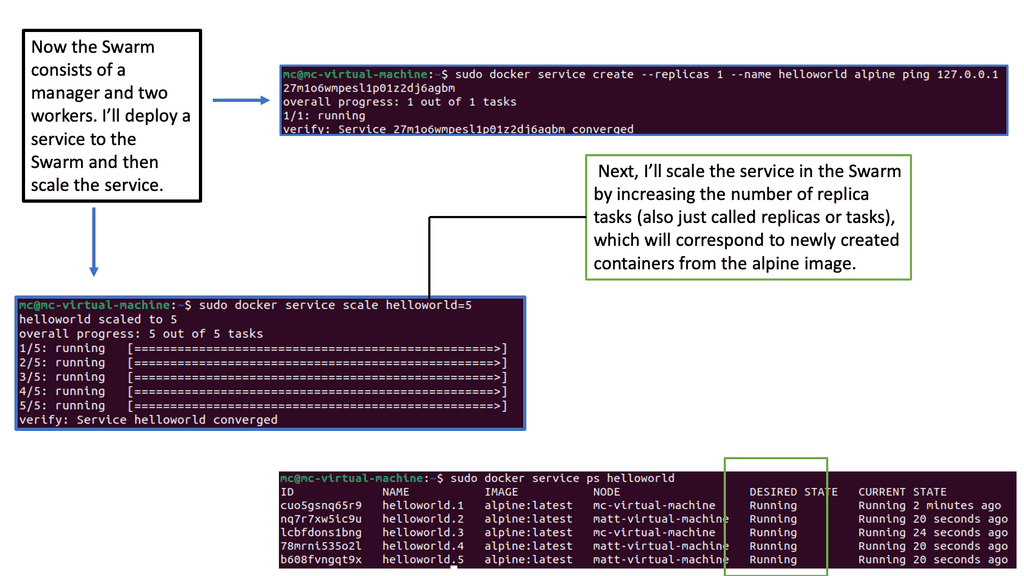

Understanding Docker Swarm

At its core, Docker Swarm is a native clustering and orchestration solution for Docker containers. It enables the creation of a swarm, a group of Docker nodes that work together in a distributed system. Each node in the swarm can run multiple containers, forming a resilient and scalable infrastructure. By abstracting away the complexity of managing individual containers, Docker Swarm empowers developers and operators to focus on their applications’ logic rather than infrastructure intricacies.

Docker Swarm offers a plethora of features that streamline container deployment and management. Automatic load balancing, service discovery, and rolling updates are just a few of the capabilities that make Swarm an attractive choice for container orchestration. Additionally, Swarm provides fault tolerance, ensuring high availability even in the face of node failures. Its intuitive command-line interface and integration with Docker CLI make it easy to adopt and incorporate into existing workflows.

Benefits and Advantages

The advantages of Docker Swarm are manifold. Firstly, Swarm allows horizontal scaling, enabling applications to handle increased workloads effortlessly. Scaling up or down can be achieved seamlessly without downtime or disruptions. Furthermore, Swarm promotes fault tolerance through its replication and distribution mechanisms, ensuring that applications remain highly available even when faced with failures.

With built-in service discovery and load balancing, Swarm simplifies deploying and managing microservices architectures. Additionally, Swarm integrates well with other Docker tools and services, such as Docker Compose and Docker Registry, further enhancing its versatility.

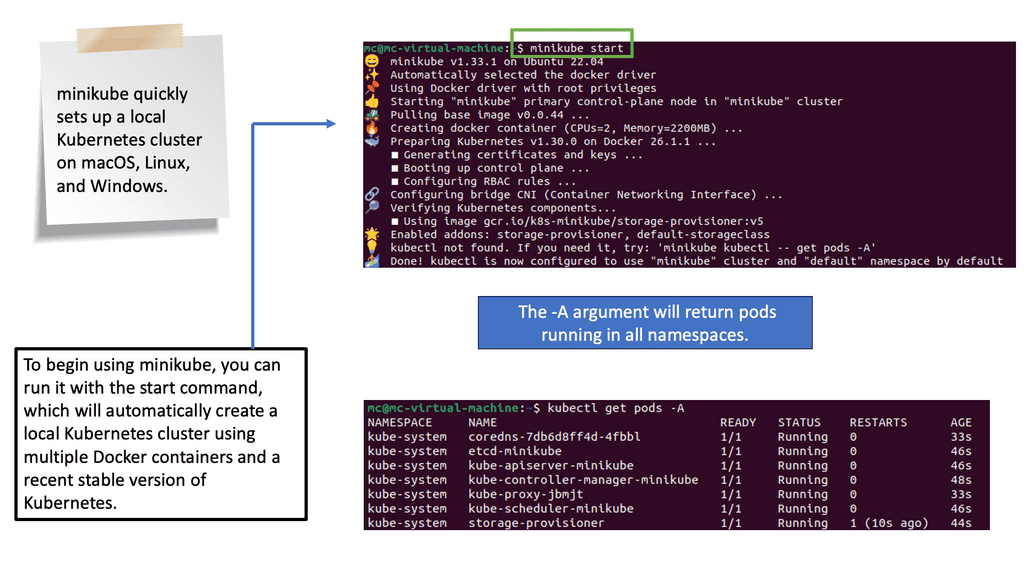

What is Minikube?

Minikube is a lightweight, open-source tool that enables developers to run a single-node Kubernetes cluster locally. It provides a simplified way to set up and manage a Kubernetes environment on your machine, allowing developers to experiment, test, and develop applications without needing a full-scale production cluster. With Minikube, developers can replicate the production environment on their local machines, saving time and effort during development.

Example OpenShift: Network Services

The most common network service allows a source to reach an application endpoint. Nowadays, the network function no longer solely satisfies endpoint reachability; it is fully integrated into the application. In the case of OpenShift networking, the Route and Service constructs provide both reachability and an abstraction layer for application access.

In the past, applications had three standard components: cache, web server, and database. Applications look very different now. Several services interact, are completely decoupled into units, and are packaged in containers; all are mobile and may move around.

Container Networking and the CNI

Running a container requires a host. On-premises data centers may use physical machines such as bare-metal servers, or virtual machines may be used in the cloud.

The Docker daemon and client interact with a container registry to pull and push container images, and they let you start, stop, pause, and inspect containers. Modern containers often comply with the Open Container Initiative (OCI) specifications, and Docker is not the only option. Kubernetes and other alternatives to Docker can also work with OCI-compliant containers.

Hosts and containers have a 1:N relationship. Typically, one host runs several containers. Facebook reports running 10 to 40 containers per host, depending on the machine’s beefiness.

You will likely have to deal with networking whether you use a single host or a cluster:

A single-host deployment almost always requires connecting to other containers on the same host; for example, WildFly might need to connect to a database.

During multi-host deployments, you must consider how containers communicate inside and between hosts. Your design decisions will likely be influenced by performance and security concerns. An Apache Spark or Apache Kafka cluster generally requires multiple hosts when a single host’s capacity is insufficient or for resilience reasons.

In a nutshell, Docker offers four single-host networking modes:

- Bridge mode

This is the default network driver for apps running in standalone containers.

- Host mode

It is also used for standalone containers, removing network isolation from the host.

- Container mode

It lets you reuse another container’s network namespace. Used in Kubernetes.

- No networking

It disables Docker networking support and allows you to set up custom networking.
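
The four modes map onto values of the `--network` option of `docker run`. The short Python sketch below only builds the command lines and does not run Docker. The helper name `docker_run_args`, the image `nginx`, and the container name `db` are placeholders chosen for illustration.

```python
import shlex


def docker_run_args(image, mode="bridge", share_with=None):
    """Build a `docker run` argument list for one of the four networking modes."""
    if mode == "container":
        if not share_with:
            raise ValueError("container mode needs the name or ID of the container to join")
        network = f"container:{share_with}"  # reuse another container's network namespace
    elif mode in ("bridge", "host", "none"):
        network = mode
    else:
        raise ValueError(f"unknown networking mode: {mode}")
    return ["docker", "run", "--detach", "--network", network, image]


# Print one command line per mode; nothing is executed.
for mode, peer in [("bridge", None), ("host", None), ("container", "db"), ("none", None)]:
    print(shlex.join(docker_run_args("nginx", mode, peer)))
```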

Knowledge Check: Container Security

Understanding Namespaces

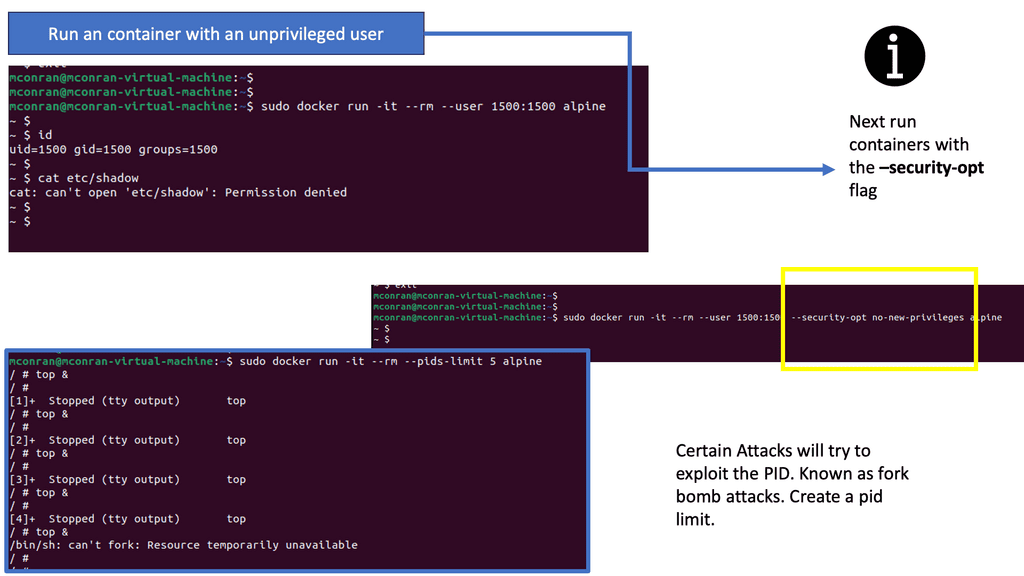

Namespaces are a fundamental building block for achieving resource isolation within a Linux environment. They can virtualize system resources like process IDs, network interfaces, and file systems. By creating separate namespaces for different processes or groups of processes, we can ensure that each entity operates in its own isolated environment, oblivious to other processes outside its namespace.

While namespaces focus on resource isolation, control groups take the concept further by enabling resource management. Control groups, commonly known as cgroups, allow administrators to allocate and limit system resources such as CPU, memory, and I/O for specific processes or groups of processes. This fine-grained control enhances system performance, prevents resource starvation, and ensures fair distribution among entities.

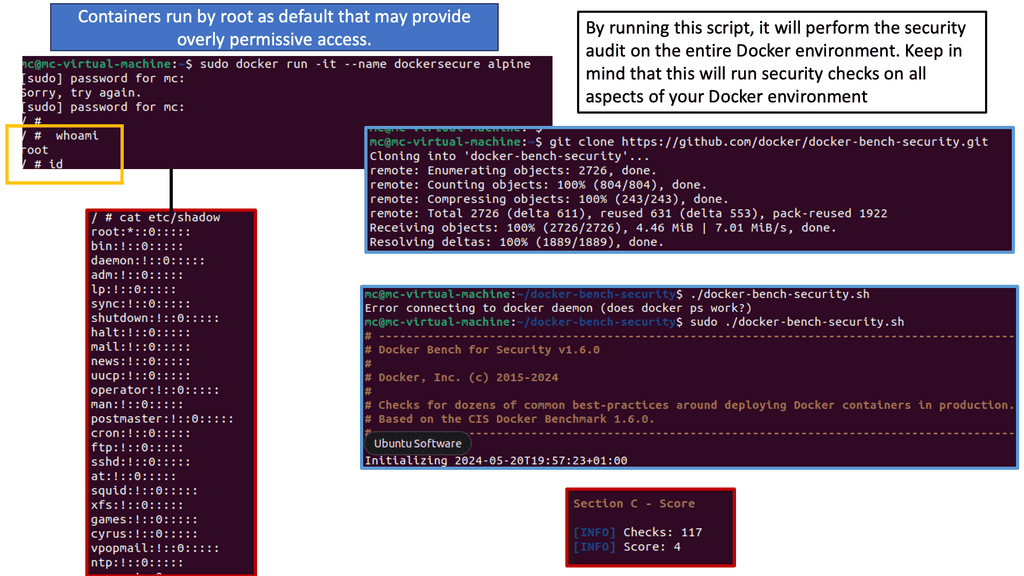

**Network Security for Docker**

Securing the network connectivity of your Docker environment is essential to protect against potential attacks. Consider implementing these practices:

– Utilize Docker’s network security features, such as network segmentation and access control lists (ACLs).

– Enable and enforce firewall rules to control inbound and outbound traffic to and from containers.

– Consider using encrypted communication protocols (e.g., HTTPS) for containerized applications.

**Docker Host Security**

Securing the underlying Docker host is paramount for overall container security. Here are a few tips to enhance host security:

– Regularly update the host operating system and Docker daemon to patch known vulnerabilities.

– Employ strong access control measures to limit administrative privileges on the host.

– Implement intrusion detection and prevention systems to monitor and detect any unauthorized activities on the host.

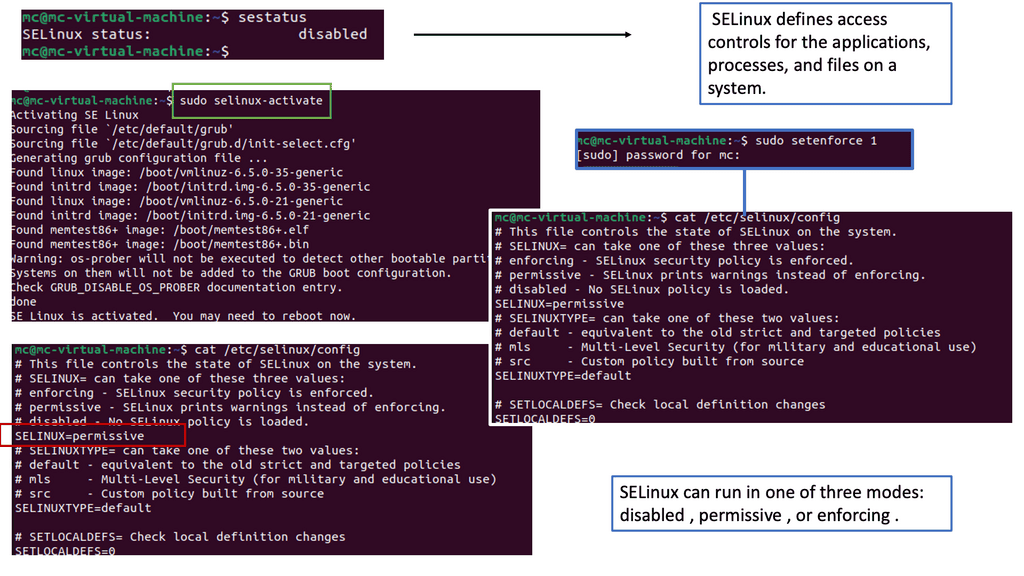

**Understanding SELinux**

SELinux is a mandatory access control (MAC) system that enforces fine-grained policies to restrict access and actions within a Linux system. It defines rules and labels for processes, files, and network resources, ensuring that only authorized activities are allowed.

When SELinux is enabled, it actively enforces access control policies on Docker containers and their associated network resources. SELinux labels define and implement rules regarding network communication, preventing unauthorized access or tampering.

One critical benefit of SELinux in Docker networking is its ability to mitigate network-based attacks. By leveraging SELinux’s access control capabilities, containers are isolated and protected from potential network threats. Unauthorized network interactions are blocked, reducing the attack surface and enhancing overall security.

Container Networking

Docker Networking

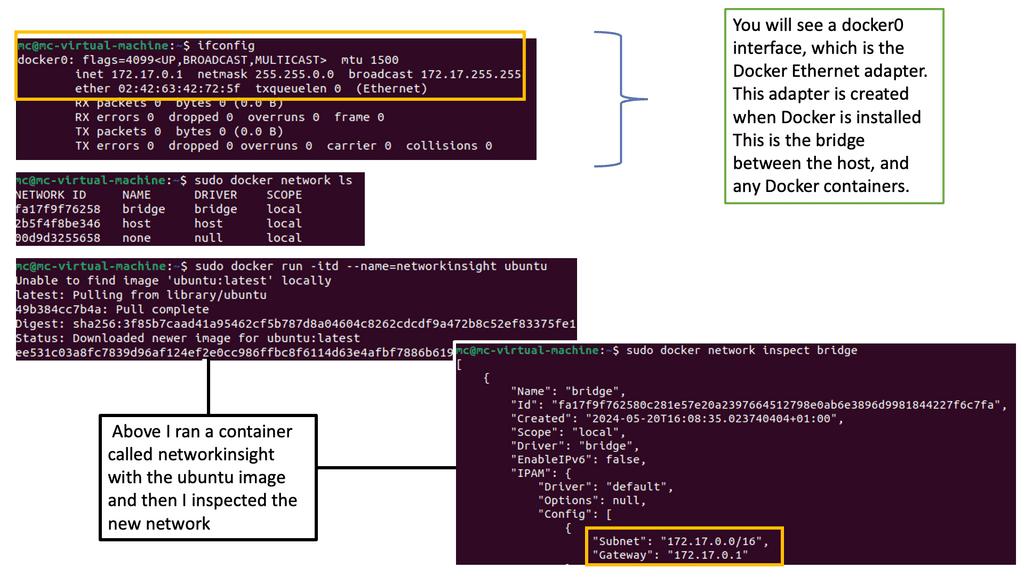

By default, the Docker networking model uses a virtual bridge network, defined per host, which acts as a private network to which containers attach. Each container is allocated a private IP address, which means containers running on different machines cannot communicate with each other directly.

In this case, you will have to map host ports to container ports and then proxy the traffic to reach across nodes with Docker. Therefore, it is up to the administrator to avoid port clashes between containers. Kubernetes networking handles this differently.

**Challenges in Container Networking**

Container networking presents several challenges that must be addressed to ensure optimal performance and reliability. Some of the key challenges include:

- Network Isolation: Containers should be isolated from each other to prevent unauthorized access and potential security breaches.

- IP Address Management: Containers are assigned unique IP addresses, which can quickly become challenging to manage as the number of containers grows.

- Scalability: As the container ecosystem expands, the networking infrastructure must scale effortlessly to accommodate the increasing number of containers.

- Service Discovery: Containers need a reliable mechanism to discover and communicate with other services within the network, especially in a microservices architecture.
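
To illustrate the IP address management challenge from the list above, here is a small Python sketch of an allocator that hands out addresses from a subnet and reclaims them when containers go away. The class name, the subnet `172.18.0.0/29`, and the convention of reserving the first host address for the gateway are assumptions for illustration, not a description of how any particular runtime implements IPAM.

```python
import ipaddress


class SubnetAllocator:
    """Toy IPAM: leases addresses from one subnet, keyed by container ID."""

    def __init__(self, cidr: str):
        self.network = ipaddress.ip_network(cidr)
        hosts = list(self.network.hosts())  # fine for small subnets; large ones need a lazier scheme
        self.gateway = hosts[0]             # assumption: first usable address is the gateway
        self._free = hosts[1:]
        self._leases = {}

    def allocate(self, container_id: str):
        """Return the container's address, leasing a new one if needed."""
        if container_id in self._leases:
            return self._leases[container_id]
        if not self._free:
            raise RuntimeError(f"address pool {self.network} is exhausted")
        address = self._free.pop(0)
        self._leases[container_id] = address
        return address

    def release(self, container_id: str) -> None:
        """Return a container's address to the pool when the container is destroyed."""
        address = self._leases.pop(container_id, None)
        if address is not None:
            self._free.append(address)


allocator = SubnetAllocator("172.18.0.0/29")
print(allocator.gateway)           # address reserved for the gateway
print(allocator.allocate("web"))   # first lease
print(allocator.allocate("db"))    # second lease
allocator.release("web")           # the address becomes available again
```

Even this toy version shows why the problem grows with scale: a /29 runs out after five containers, and short-lived containers make leases churn constantly.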

**Solutions and Best Practices**

To overcome these challenges, several solutions and best practices have emerged in the realm of container networking:

1. Container Network Interface (CNI): CNI is a specification that defines how container runtimes interact with networking plugins. It enables easy integration of various networking solutions into container orchestration platforms like Kubernetes.

2. Overlay Networking: Overlay networks create a virtual network that spans multiple hosts, allowing containers to communicate seamlessly, regardless of physical location. Technologies like VXLAN, GRE, and WireGuard are commonly used for overlay networking.

3. Network Policies: Network policies define the rules and restrictions for incoming and outgoing traffic between containers. By implementing network policies, organizations can enforce security and control network traffic flow within their containerized environments.

4. Service Mesh: Service mesh technologies, such as Istio and Linkerd, provide advanced networking capabilities, including traffic management, load balancing, and observability. They enhance the resilience and reliability of containerized applications by offloading complex networking tasks from individual services.
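
To show what the encapsulation behind overlay networking (item 2 above) looks like, the following Python sketch builds and parses the 8-byte VXLAN header defined in RFC 7348. It covers only the VXLAN header; in a real overlay the outer UDP, IP, and Ethernet headers are added by the host's network stack. The function names and the sample VNI are hypothetical.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value
VXLAN_UDP_PORT = 4789        # IANA-assigned destination UDP port for VXLAN
VXLAN_HEADER_LEN = 8


def vxlan_encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header for the given network ID."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits). Word 2: VNI (24 bits) + reserved (8 bits).
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet: bytes):
    """Split a VXLAN payload into (vni, inner_frame)."""
    if len(packet) < VXLAN_HEADER_LEN:
        raise ValueError("packet shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", packet[:VXLAN_HEADER_LEN])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return vni_word >> 8, packet[VXLAN_HEADER_LEN:]


# Hypothetical VNI and a dummy inner frame.
packet = vxlan_encapsulate(42, b"inner-ethernet-frame")
vni, frame = vxlan_decapsulate(packet)
print(vni, frame)
```

The extra headers are the "additional overhead" of overlays: every container packet grows by the VXLAN header plus the outer UDP, IP, and Ethernet headers.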

Service Mesh & Networking

### What is a Cloud Service Mesh?

A Cloud Service Mesh is designed to handle the complex communication needs between various microservices within a cloud-native application. It provides a unified way to secure, connect, and observe services without the need to modify the application code. By abstracting the network logic from the business logic, a service mesh ensures that services can communicate seamlessly and securely, regardless of the underlying infrastructure.

### The Role of Container Networking

Container networking refers to the methods and protocols used to enable communication between containerized applications. Containers, which package applications and their dependencies, need an efficient way to communicate to ensure smooth operation. This is where Cloud Service Mesh comes into play. It provides advanced networking capabilities such as load balancing, traffic management, and secure communication channels specifically designed for containers. By integrating with container orchestrators like Kubernetes, a service mesh can automate and optimize these networking tasks.

### Key Benefits of Using a Cloud Service Mesh

1. **Enhanced Security**: A service mesh can handle encryption and secure communication between services, ensuring that data remains protected as it travels across the network.

2. **Observability**: It provides insights into service performance and operational metrics, making it easier to diagnose issues and optimize performance.

3. **Traffic Management**: With features like traffic splitting, retries, and circuit breaking, a service mesh allows more granular control over how traffic flows between services.

4. **Resilience**: By managing retries and failovers, a service mesh can improve the overall resilience of applications, ensuring they remain available even during partial failures.
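
The retry and circuit-breaking behavior mentioned in the list above can be sketched in a few lines of Python. This is a deliberately simplified model: the class and function names are hypothetical, the breaker counts consecutive failures only, and it has no timed "half-open" recovery state, which production meshes typically add.

```python
class CircuitOpenError(Exception):
    """Raised when the breaker refuses to call a failing upstream."""


class CircuitBreaker:
    """Opens after a number of consecutive failures; a success resets the count."""

    def __init__(self, failure_threshold: int = 3):
        self.failure_threshold = failure_threshold
        self.failures = 0

    @property
    def open(self) -> bool:
        return self.failures >= self.failure_threshold

    def call(self, func):
        if self.open:
            raise CircuitOpenError("upstream marked unhealthy, failing fast")
        try:
            result = func()
        except Exception:
            self.failures += 1
            raise
        self.failures = 0
        return result


def call_with_retries(breaker: CircuitBreaker, func, attempts: int = 3):
    """Retry a call through the breaker, stopping early once the breaker opens."""
    last_error = None
    for _ in range(attempts):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise
        except Exception as exc:
            last_error = exc
    raise last_error


# Hypothetical upstream that fails twice, then recovers.
state = {"calls": 0}


def flaky_upstream():
    state["calls"] += 1
    if state["calls"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


breaker = CircuitBreaker(failure_threshold=3)
print(call_with_retries(breaker, flaky_upstream, attempts=3))
```

In a service mesh this logic lives in the proxy next to each service rather than in application code, which is what allows the application itself to stay unmodified.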

### Challenges and Considerations

While the benefits are compelling, implementing a Cloud Service Mesh is not without its challenges. Complexity in setup and management, potential performance overhead, and the need for specialized knowledge are some of the hurdles that organizations might face. It’s essential to evaluate whether the benefits outweigh these challenges in the context of your specific use case.

### Real-World Use Cases

Several leading organizations have already adopted Cloud Service Mesh to streamline their container networking. For instance, companies in the finance and healthcare sectors leverage service meshes to ensure secure and compliant communication between microservices. Meanwhile, tech giants use it to manage massive, distributed systems with ease, ensuring high availability and optimal performance.

Container Networking: A Different Application Philosophy

Computing is distributed over multiple elements, and they all interact arbitrarily. Network integration allows the application to be divided into several microservice components. Microservices will enable the application to be packaged into pieces and deployed on different hosts or even different cloud providers.

The application stack no longer belongs to a single server. Small, composable units enhance application replication and fault tolerance services. Containers and the ability to interconnect them make all this possible.

Containers offer a single-purpose environment. They are a bunch of lightweight namespaces and processes sharing a common kernel. Typically, you don’t run a full stack in a single container.

Ideally, there is only one process per container, which makes them very lightweight. VMs with guest O/S are resource-heavy; containers are a far better option if the application can be containerized.

However, containers present the network with an utterly different type of endpoint. Unlike virtual machines, containers arrive and disappear quickly, on a timescale measured in milliseconds rather than seconds or minutes. This speed comes from their lightweight nature. Some containerized applications live only for the length of a single transaction. The infrastructure and network must be pre-built to support this type of endpoint.

Despite containerization’s advantages, remember that container security still matters: Docker security options should be enabled at each layer of your defense.

Introducing Docker Network Types

Docker networking comes with several Docker network types and setups. At the time of writing, the latest release was Docker version 1.10, which brought enhancements including linking with user-defined networks. There are other solutions available to enhance Docker networking functionality.

Docker is pluggable and allows ecosystem partners to plug into Docker networking. Project Calico offers a pure IP-based solution that follows the same principles as the Internet: every host is an IP router. Calico uses a Felix agent and the BIRD BGP daemon. This would be a clean option if the application only needs Layer 3 connectivity.

Weave is another solution that provides an overlay and aims to meet multi-data center requirements. Each host in a Weave network thinks it belongs to one large switched fabric. The physical locations are abstracted, and all hosts have reachability to each other. A multi-data center solution must concern itself with metrics other than endpoint reachability.

Container Networking with Linux Kernel and User Namespaces

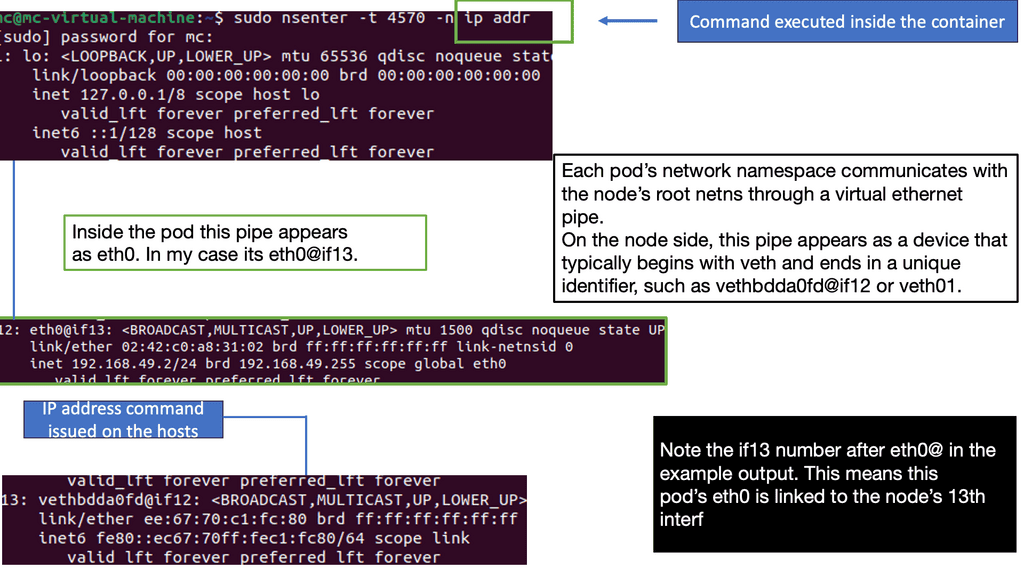

Several unique resources, such as network interfaces and file systems, appear isolated inside each container even though the containers share the Linux kernel. Global resources are abstracted to appear unique per container, an abstraction made available using Linux namespaces.

Namespaces initially provided resource isolation for the first Linux containers project, offering a process virtualization solution. They do not create additional operating system instances on the host but instead use a single system with resource isolation.

FreeBSD Jails are similar, providing resource isolation while running a single kernel instance. Mount namespaces were the first type of Linux namespace, arriving in 2002 with kernel 2.4.19. User namespaces emerged with kernel 3.8.

The Different Namespaces

Containers have namespaces for each type of resource. We have six namespaces.

- Mount namespace makes the container feel like it has its own filesystem.

- UTS namespace offers individual hostnames and domain names.

- User namespace provides isolation between the user and group IDs.

- IPC namespace isolates message queue systems.

- PID namespace offers different PIDs inside the container.

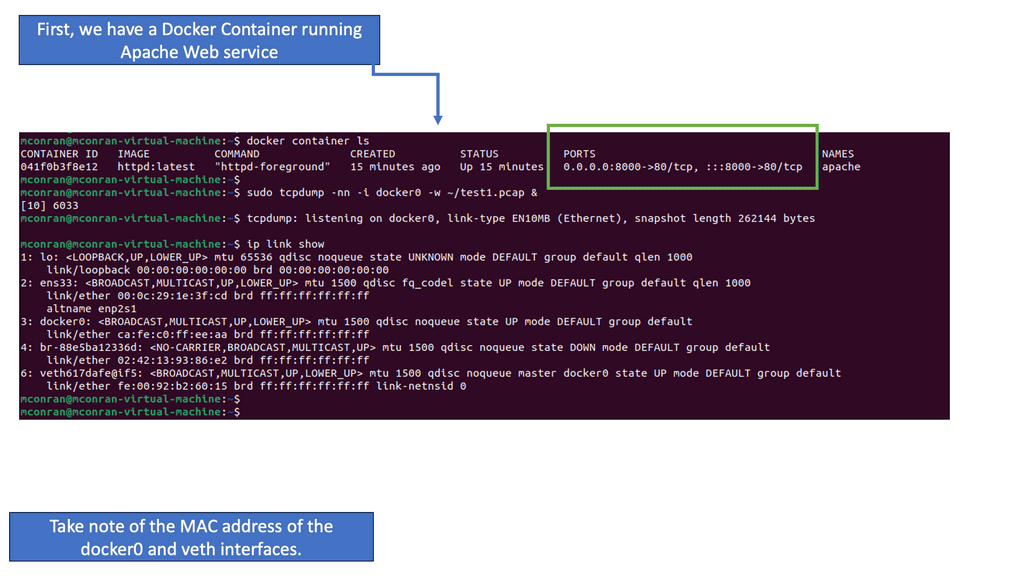

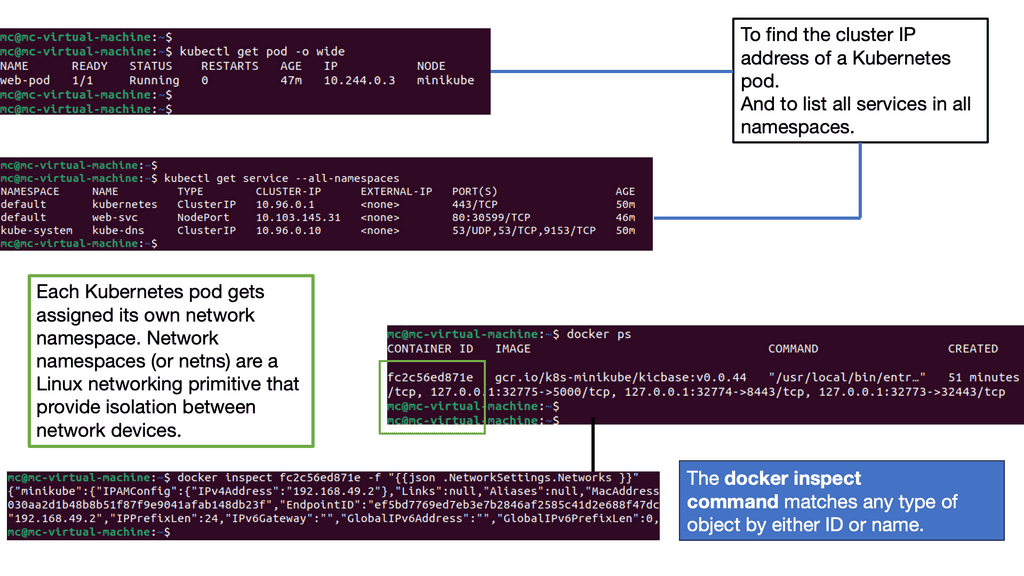

Finally, the network namespace gives the container a separate network stack. When you issue the docker ps command, you will see what ports are bound; these ports are on the namespace network interface.
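
On a Linux host you can see which namespaces a process belongs to by reading the symbolic links under `/proc/<pid>/ns`. The Python sketch below does this for the six namespace types listed above. The function name is hypothetical, and on systems without `/proc` (or without permission to read it) each entry simply comes back as `None`.

```python
import os

# Names used under /proc/<pid>/ns for the six namespace types discussed above.
NAMESPACE_TYPES = ["mnt", "uts", "user", "ipc", "pid", "net"]


def namespace_ids(pid="self"):
    """Return a mapping of namespace type to its identifier for one process."""
    ids = {}
    for ns_type in NAMESPACE_TYPES:
        path = f"/proc/{pid}/ns/{ns_type}"
        try:
            # The link target has the form "<type>:[<inode number>]".
            ids[ns_type] = os.readlink(path)
        except OSError:
            ids[ns_type] = None  # not Linux, or no permission to inspect this process
    return ids


for ns_type, ident in namespace_ids().items():
    print(f"{ns_type:5} {ident}")
```

Two processes share a namespace when the identifiers match. Running this on the host and again inside a container would typically show different values for most of the types, which is the isolation described above.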

Docker Networking and Docker Network Types

Installing Docker creates three networks: bridge, host, and none. You cannot delete these networks, and by default you interact only with the bridge network. There is also the option to create user-defined networks and custom plugins.

Network plugins (the libnetwork project) extend Docker networking to support additional features such as ipvlan or macvlan. User-defined networks can take the form of bridge or overlay networks.

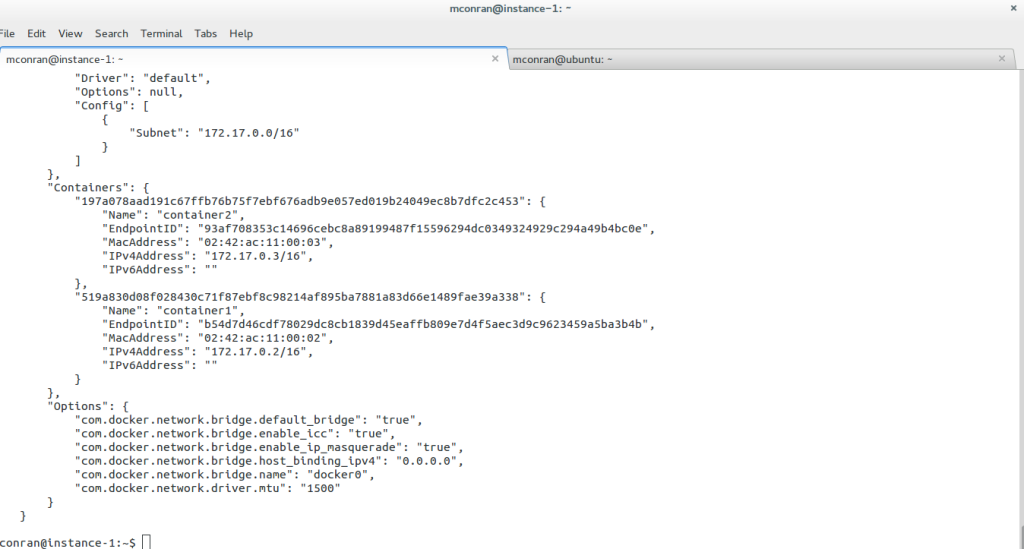

Bridge networks have a single-host local scope, and overlay networks have a multi-host global scope. The diagram below displays the default bridge and the corresponding attached containers. The driver is “default,” meaning it has local scope.

The user-defined bridge is similar to the default docker0 bridge. Containers on the same host can be attached to it and communicate with each other. Containers are not reachable from outside by default, but you can expose services with port mappings.

The user-defined overlay networking feature enables multi-host networking using libnetwork’s VXLAN-based overlay driver and Docker’s libkv library. The overlay function requires a valid key-value store.

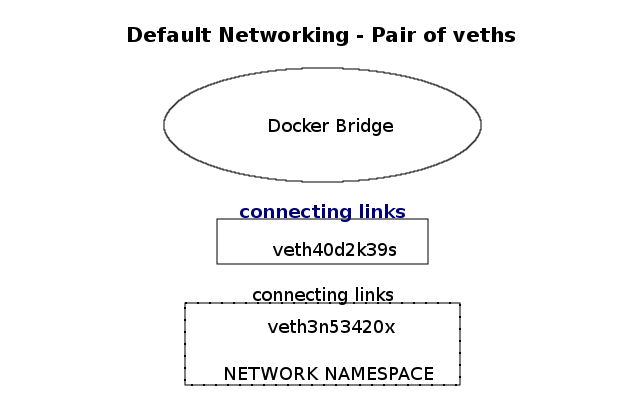

The Docker libkv library supports Consul, Etcd, and ZooKeeper. With Docker default networking, a veth pair is created: one end sits inside the container’s network namespace and the other end sits outside it on the host. The host ends are all connected via the docker0 bridge. Using Linux namespaces, the veth end inside the container is mapped to appear as eth0.

Container Networking, Port Mapping, and Traffic Flow

Docker containers can communicate with each other if they are on the same machine and thus connected to the same virtual bridge. Containers can also connect to multiple networks at the same time. By default, containers on different machines cannot reach each other. For communication across nodes, containers must be allocated ports on the machine’s IP address, which are then proxied to the containers.

Port mapping provides access to the container from the outside. Docker allocates a host port for DNAT in the range 49153 to 65535. This additional functionality continues to use the default docker0 bridge but adds iptables rules for the DNAT.

When you spin up a container with a port mapping, the docker ps command shows the mapping, for example, from host port 8080 to container port 80. iptables sets up the mapping between host port 8080 and the IP address and port assigned to the container.
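
The lookup that a port mapping performs can be modeled as a small table keyed by host port. The Python sketch below is a toy model of that idea, not iptables itself: the `PortMapper` class and the container addresses are hypothetical. It also shows why two containers cannot publish the same host port.

```python
class PortMapper:
    """Toy DNAT table: host port -> (container IP, container port)."""

    def __init__(self):
        self._rules = {}

    def publish(self, host_port: int, container_ip: str, container_port: int) -> None:
        """Register a mapping, refusing host ports that are already taken."""
        if host_port in self._rules:
            raise ValueError(f"host port {host_port} is already mapped")
        self._rules[host_port] = (container_ip, container_port)

    def translate(self, dst_port: int):
        """Return the container endpoint for an incoming destination port, or None."""
        return self._rules.get(dst_port)


mapper = PortMapper()
mapper.publish(8080, "172.17.0.2", 80)   # host 8080 -> first web container, port 80

print(mapper.translate(8080))            # rewritten destination for traffic to host:8080
print(mapper.translate(9090))            # nothing published on this port

try:
    mapper.publish(8080, "172.17.0.3", 80)   # a second container wants the same host port
except ValueError as err:
    print(err)
```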

The problem with Docker is that you may have to coordinate ports and plenty of NAT. NAT was designed to address the shortage of IPv4 addresses and was only meant to be used for a short period. It is so ingrained in people’s minds we still see it come out in fresh designs.

Ports and NAT are problematic at scale and expose users to cluster-level issues outside their control. This can lead to port conflicts and many complexities in scheduling.
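A small sketch shows why host ports become a scheduling concern. The allocator below is hypothetical (it is not a Docker or Kubernetes API) and borrows the 49153 to 65535 dynamic range quoted earlier purely as an example.

```python
from typing import Set


class PortInUseError(Exception):
    """Raised when a requested host port is already taken."""


class HostPortAllocator:
    """Tracks which ports on a single host are claimed by containers."""

    def __init__(self, dynamic_range: range = range(49153, 65536)) -> None:
        self._dynamic_range = dynamic_range
        self._in_use: Set[int] = set()

    def claim(self, port: int) -> int:
        """Claim a specific host port, failing if another container has it."""
        if port in self._in_use:
            raise PortInUseError(f"host port {port} is already mapped")
        self._in_use.add(port)
        return port

    def claim_dynamic(self) -> int:
        """Claim the first free port from the dynamic range."""
        for port in self._dynamic_range:
            if port not in self._in_use:
                self._in_use.add(port)
                return port
        raise PortInUseError("dynamic port range exhausted")


if __name__ == "__main__":
    host = HostPortAllocator()
    host.claim(8080)          # first web container gets 8080
    try:
        host.claim(8080)      # second one cannot share the same host port
    except PortInUseError as err:
        print(err)
    print(host.claim_dynamic())  # falls back to a dynamically chosen port
```

Two containers that both want host port 8080 cannot land on the same machine, so a scheduler either has to track ports per host or hand out dynamic ports that clients must then discover.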

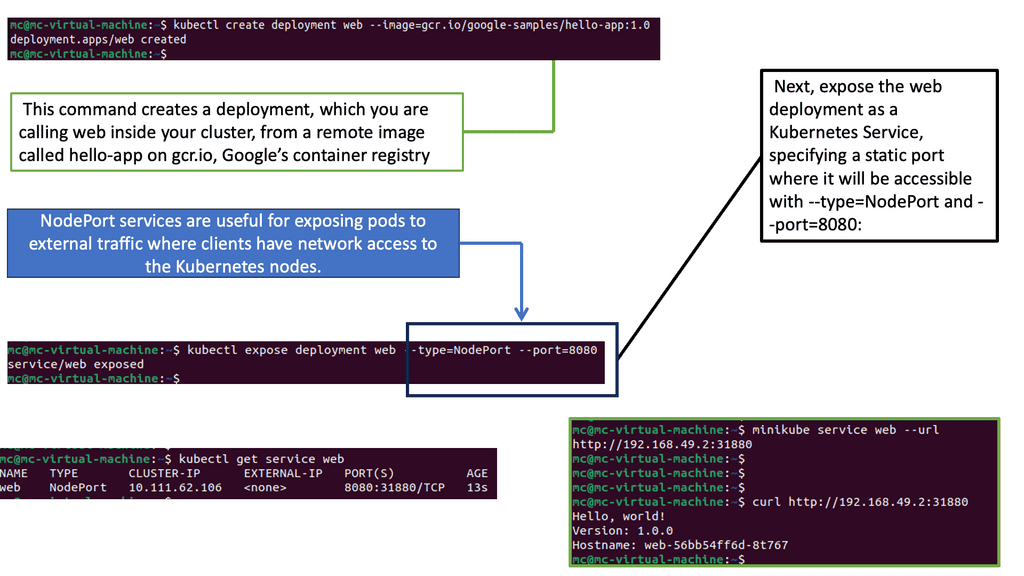

Kubernetes

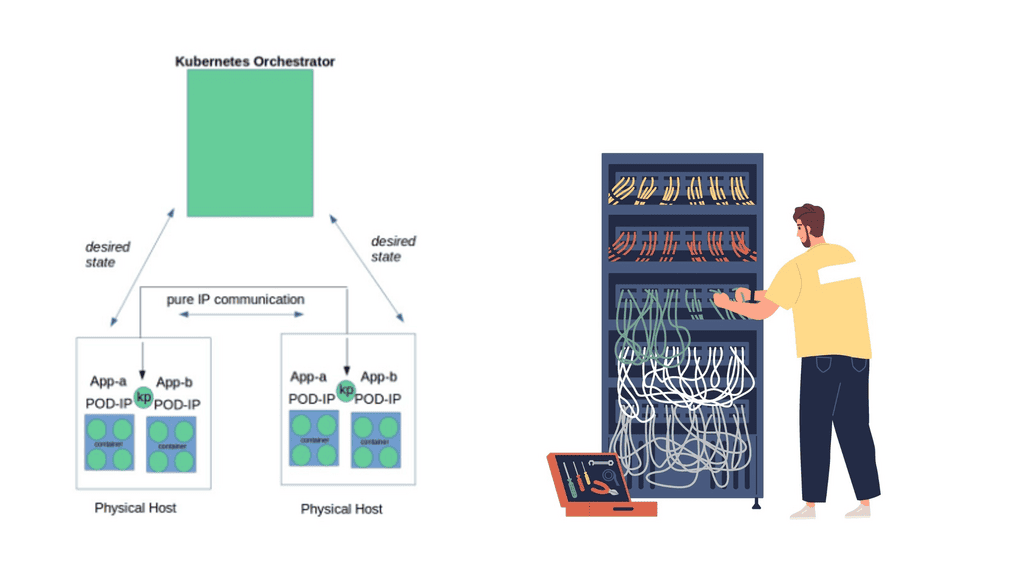

Kubernetes Pod-to-Pod networking does not rely on NAT. Instead, it assigns IP addresses at the Pod level. Remember that containers within a Pod share a network namespace, including its IP address. This means containers within a Pod can all reach each other’s ports on localhost. The unique-IP-per-Pod model makes finding and configuring services in Kubernetes much easier.

Kubernetes Networking 101: IP-per-pod-model

Kubernetes networking has two fundamental abstractions: Pods and Services. Pods are essentially the atomic units of scheduling in Kubernetes. They represent a group of tightly integrated containers that share resources and fate. An example of an application container grouping in a Pod might be a file puller and a web server.

Frontend and backend tiers usually fall outside this category, as they can be scaled separately. Containers in a Pod share a network namespace and talk to each other over localhost.
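The localhost behavior can be imitated inside a single Python process. In this sketch, two threads stand in for the two containers of a Pod; the function names are invented for the example, and a real Pod shares a kernel network namespace rather than a process.

```python
import socket
import threading


def serve_once(listener: socket.socket) -> None:
    """Stand-in for the web server container: answer a single connection."""
    conn, _ = listener.accept()
    with conn:
        request = conn.recv(1024)
        conn.sendall(b"served:" + request)


def fetch_over_localhost(port: int, payload: bytes) -> bytes:
    """Stand-in for the sidecar container: reach the server on localhost."""
    with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
        client.sendall(payload)
        return client.recv(1024)


def demo() -> bytes:
    # Bind to port 0 so the OS picks any free port on the loopback interface.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    port = listener.getsockname()[1]

    server = threading.Thread(target=serve_once, args=(listener,), daemon=True)
    server.start()
    try:
        return fetch_over_localhost(port, b"index.html")
    finally:
        server.join(timeout=5)
        listener.close()


if __name__ == "__main__":
    print(demo())
```

Because both sides see the same loopback interface, neither needs to know the other's IP address, which is the property that containers in a Pod rely on.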

Pods are assigned a private IP that is routable within the internal fabric. With default Docker networking, a container does not get an IP that other hosts can route to; you must go through the host and expose a port. This is not a great idea, as port allocation can lead to conflicts and operational complexity.

With Kubernetes, all containers talk to each other, even across nodes, without NAT. The entire solution is a NAT-less, flat address space. Pods can talk to Pods without any translation. Because each Pod has its own IP, applications can listen on well-known port numbers, so there is no need for a service discovery system such as DNS-SD, Consul, or Etcd just to locate dynamically assigned ports.

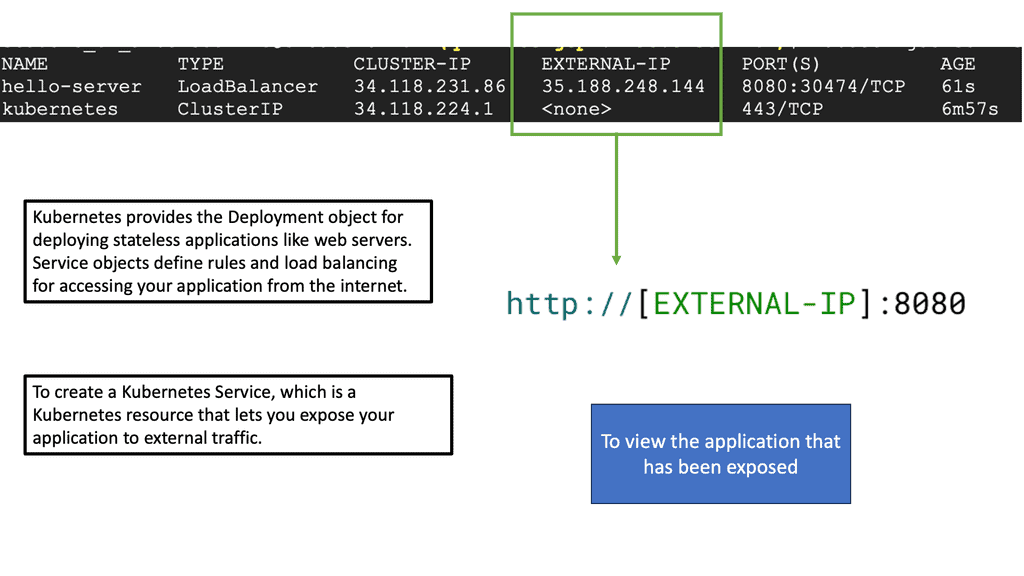

Understanding Pod Networking

At the heart of Kubernetes networking lies the concept of pods. Pods are the basic building blocks of any Kubernetes cluster, encapsulating one or more containers that work together. To ensure seamless communication between pods, Kubernetes assigns each pod a unique IP address and exposes it to other pods within the cluster.

While pods enable communication within a cluster, services take it further by providing a stable endpoint for accessing a set of pods. Kubernetes offers several service types, including ClusterIP, NodePort, and LoadBalancer, each with its own use cases and its own role in service discovery.

Container network and services

The second abstraction is Services. A Service is similar to a load balancer: it is a group of Pods that acts as one. It is usually better to reference the Service’s IP address rather than a Pod’s. This is because Pods can go away, but Services are more durable.

A typical flow would be something like this: a client in the cluster looks up the IP for a particular service and sends traffic to it. The Kubernetes node that the client is running on performs an iptables DNAT. Instead of going to the service IP, the traffic is rerouted to kube-proxy, a proxy running on every Kubernetes node.

Kube-proxy programs iptables rules to trap access to service IPs and redirect them to the backends using round-robin load balancing. It also watches the API server to determine which Pods are active and ready to serve requests.
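The redirect-and-balance behavior described above can be sketched as a simplified model. The class below is hypothetical and ignores ports, protocols, and the actual iptables machinery; the service and Pod IPs are example values.

```python
from typing import Iterable, List


class ServiceProxy:
    """Toy model of a per-node proxy for one Service IP."""

    def __init__(self, service_ip: str) -> None:
        self.service_ip = service_ip
        self._backends: List[str] = []  # IPs of Pods that are ready
        self._next = 0                  # round-robin cursor

    def set_ready_backends(self, pod_ips: Iterable[str]) -> None:
        """Called whenever the watched set of ready Pods changes."""
        self._backends = list(pod_ips)

    def route(self, dst_ip: str) -> str:
        """Return the address the traffic is actually delivered to."""
        if dst_ip != self.service_ip:
            return dst_ip  # not a Service IP: leave the destination alone
        if not self._backends:
            raise RuntimeError("no ready backends for service")
        backend = self._backends[self._next % len(self._backends)]
        self._next += 1
        return backend


if __name__ == "__main__":
    proxy = ServiceProxy("10.96.0.10")
    proxy.set_ready_backends(["10.244.1.5", "10.244.2.7"])
    print([proxy.route("10.96.0.10") for _ in range(3)])
    # A Pod disappears; the Service IP stays the same for clients.
    proxy.set_ready_backends(["10.244.2.7"])
    print(proxy.route("10.96.0.10"))
```

The client only ever addresses the stable Service IP, while the set of backends behind it can change as Pods come and go.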

Several implementations support the IP-per-pod model, including Google Compute Engine, Flannel, Calico, and OVS with GRE/VXLAN. Open vSwitch connects Pods on different hosts with GRE or VXLAN. A Linux bridge replaces the docker0 bridge, and traffic to and from Pods is encapsulated. Flannel may also be used with Kubernetes.

Flannel creates an overlay network and gives a subnet to each host. Flannel can be used on cloud providers that cannot offer an entire /24 to each host. Flannel’s agent, flanneld, runs on each host and controls the IP assignment. Calico, already mentioned, is also an IP-based solution that relies on traditional BGP.
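The per-host subnet idea is easy to illustrate with Python's standard ipaddress module. This is a sketch of the concept only, not Flannel's actual allocation logic; the 10.244.0.0/16 cluster range, the /24 per-node size, and the node names are assumptions chosen for the example.

```python
import ipaddress
from typing import Dict, List


def assign_node_subnets(
    cluster_cidr: str, nodes: List[str], node_prefix: int = 24
) -> Dict[str, ipaddress.IPv4Network]:
    """Carve the cluster range into one non-overlapping subnet per node."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    # zip() stops at the shorter input, so only as many subnets as nodes are used.
    return dict(zip(nodes, subnets))


if __name__ == "__main__":
    leases = assign_node_subnets("10.244.0.0/16", ["node-a", "node-b"])
    for node, subnet in leases.items():
        # Indexing a network yields the address at that offset within it.
        print(node, subnet, "first Pod IP could be", subnet[2])
```

Because every node owns a distinct slice of one flat range, a Pod IP identifies both the Pod and the node that hosts it, and no address translation is needed between nodes.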

Closing Points on Container Networking

Container networking refers to the methods and protocols that enable containers to communicate with each other, with other applications, and with external networks. Unlike traditional virtual machines, containers share the host’s operating system kernel but operate in isolated environments. This isolation necessitates specialized networking solutions to facilitate connectivity. From simple bridge networks to more complex overlay networks, container networking offers a variety of options to suit different needs and environments.

1. **Bridge Networks**: The default networking option for many container platforms, bridge networks allow containers on the same host to communicate with each other. This setup is ideal for simple applications or when all containers are on a single machine.

2. **Overlay Networks**: Perfect for multi-host deployments, overlay networks create a virtual network that spans across multiple machines. This type of network is essential for scaling applications across different infrastructure and provides a level of abstraction that simplifies network management.

3. **Host Networks**: In this configuration, containers share the host’s network stack. This can lead to improved performance since there’s no network address translation (NAT) overhead, but it also means less isolation compared to other networking types.

4. **Macvlan Networks**: These networks assign a unique MAC address to each container, allowing them to appear as physical devices on the network. This is useful for legacy applications that require direct network access.

Several tools and technologies have emerged to facilitate container networking, each offering unique features and capabilities:

- **Docker Networking**: Docker provides built-in networking capabilities that cater to a range of use cases, from simple bridge networks to more robust overlay networks.

- **Kubernetes Networking**: As one of the most popular container orchestration platforms, Kubernetes offers powerful networking features through its network plugins and service mesh integrations.

- **Cilium**: Leveraging eBPF technology, Cilium provides advanced networking and security capabilities, making it a popular choice for Kubernetes environments.

- **Weave Net**: A simple yet effective solution, Weave Net offers automatic network creation and service discovery, making it easier to manage container networks.

Summary: Container Networking

Container networking is fundamental to modern software development and deployment, enabling seamless communication and connectivity between containers. In this blog post, we delved into the intricacies of container networking, exploring key concepts and best practices to simplify connectivity and enhance scalability.

Understanding Container Networking Basics

Container networking involves establishing communication channels between containers, allowing them to exchange data and interact. We explored the underlying principles and technologies that facilitate container networking, such as bridge networks, overlay networks, and network namespaces.

Container Networking Models

Depending on your application’s specific requirements, you can choose from various container networking models. We discussed popular models like host networking, bridge networking, and overlay networking, highlighting their strengths and use cases. Understanding these models will empower you to make informed decisions regarding your container networking architecture.

Networking Drivers and Plugins

Container runtimes like Docker provide networking drivers and plugins to enhance container networking capabilities. We looked at popular networking drivers, such as bridge, macvlan, and overlay, along with the benefits and considerations of each, and at third-party networking plugins that enable advanced features like network security, load balancing, and service discovery.

Best Practices for Container Networking

To ensure efficient and reliable container networking, it is essential to follow best practices. Critical recommendations include proper network segmentation, optimizing network performance, implementing security measures, and monitoring network traffic. These practices will help you maximize the potential of your containerized applications.

Challenges and Solutions

Container networking can present challenges like network congestion, scalability issues, and inter-container communication complexities. Techniques like service meshes, container orchestration frameworks, and software-defined networking (SDN) can help overcome these obstacles effectively.

Conclusion

Container networking is a critical component of modern application development and deployment. You can build robust and scalable containerized environments by understanding the basics, exploring various models, leveraging appropriate drivers and plugins, following best practices, and overcoming challenges. Embracing the power of container networking allows you to unlock the full potential of your applications, enabling efficient communication and seamless scalability.
