LISP Hybrid Cloud Implementation

In today's rapidly evolving technological landscape, hybrid cloud solutions have emerged as a game-changer for businesses seeking flexibility, scalability, and cost-effectiveness. One of the most intriguing aspects of hybrid cloud architecture is its potential when combined with LISP (Locator/Identifier Separation Protocol). In this blog post, we will delve into the concept of LISP hybrid cloud and explore its advantages, use cases, and potential impact on the future of cloud computing.

LISP, short for Locator/Identifier Separation Protocol, is a network architecture that separates an endpoint's identity, its Endpoint Identifier (EID), from its location, its Routing Locator (RLOC). This separation enables efficient mobility, scalability, and flexibility in networks, making it a good fit for hybrid cloud environments. By decoupling the endpoint's identity from its location, LISP can simplify network management and help improve the performance and security of the hybrid cloud infrastructure.

Enhanced Scalability: LISP Hybrid Cloud Implementation helps businesses scale their network infrastructure with minimal disruption. With LISP, endpoints can be moved dynamically across different locations without changing their identity, which suits businesses with evolving needs.

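Improved Performance: By decoupling the endpoint identity from its location, LISP Hybrid Cloud Implementation can reduce routing complexity: endpoints move without renumbering, and routers resolve locations on demand only for the destinations they actually send to. This can translate into better network performance, lower latency, and an improved user experience, although the actual gains depend on the topology.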

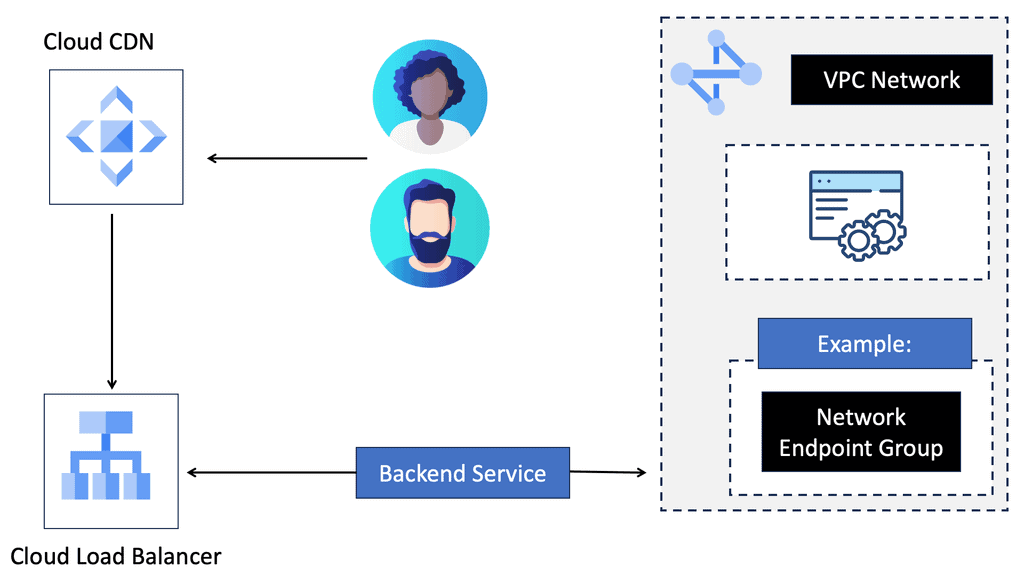

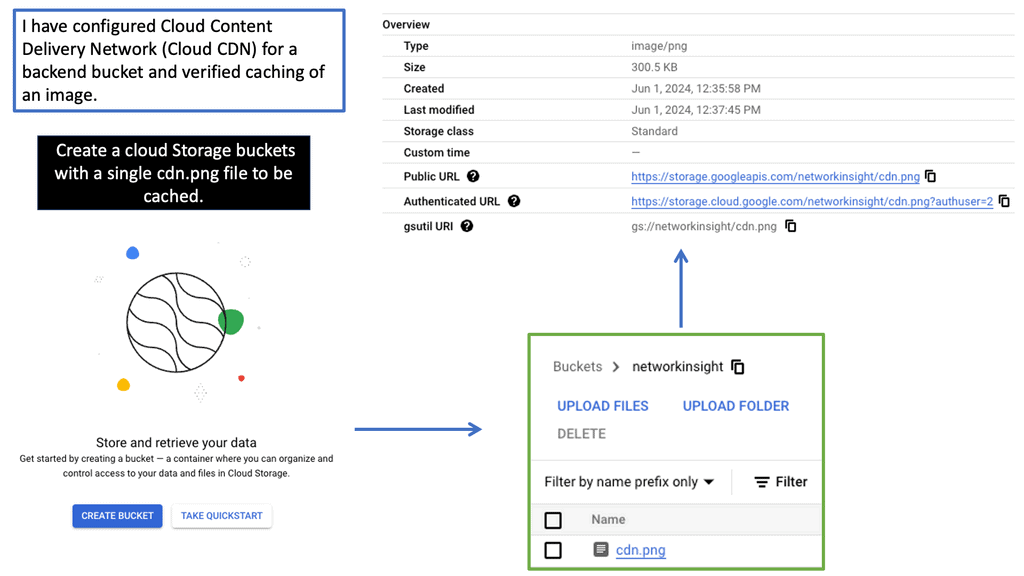

Seamless Multicloud Integration: One of the key advantages of LISP Hybrid Cloud Implementation is its compatibility with multicloud environments. It simplifies the integration and management of multiple cloud providers, enabling businesses to leverage the strengths of different clouds while maintaining a unified network architecture.

Assessing Network Requirements: Before implementing LISP Hybrid Cloud, it is essential to assess your organization's specific network requirements. Understanding factors such as scalability needs, mobility requirements, and multicloud integration goals will help in designing an effective implementation strategy.

To ensure a successful LISP Hybrid Cloud Implementation, partnering with an experienced provider is crucial. Look for a provider that has expertise in LISP and a track record of implementing hybrid cloud solutions. They can guide you through the implementation process, address any challenges, and provide ongoing support.

Conclusion: LISP Hybrid Cloud Implementation offers a powerful solution for businesses seeking scalability, performance, and multicloud integration. By leveraging the benefits of LISP, organizations can optimize their network infrastructure, enhance user experience, and future-proof their IT strategy. Embracing LISP Hybrid Cloud Implementation can pave the way for a more agile, efficient, and competitive business landscape.

Matt Conran

Highlights: LISP Hybrid Cloud Implementation

Understanding LISP

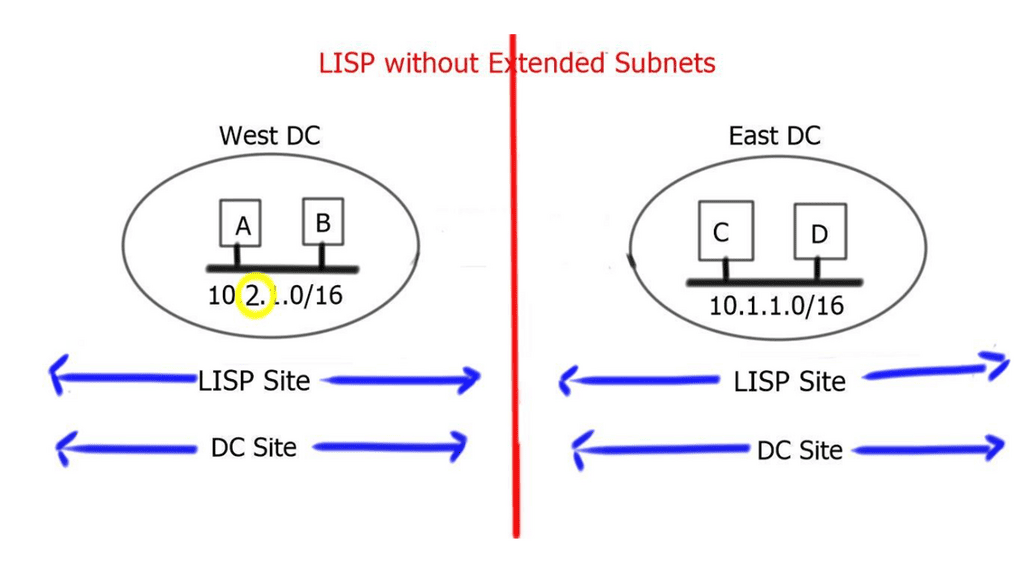

LISP, short for Locator/ID Separation Protocol, is a network architecture that separates the device’s identity (ID) from its location (Locator). By decoupling these two elements, LISP enables greater scalability, improved mobility, and enhanced security. This protocol has gained significant traction in recent years and forms the foundation of LISP hybrid cloud.

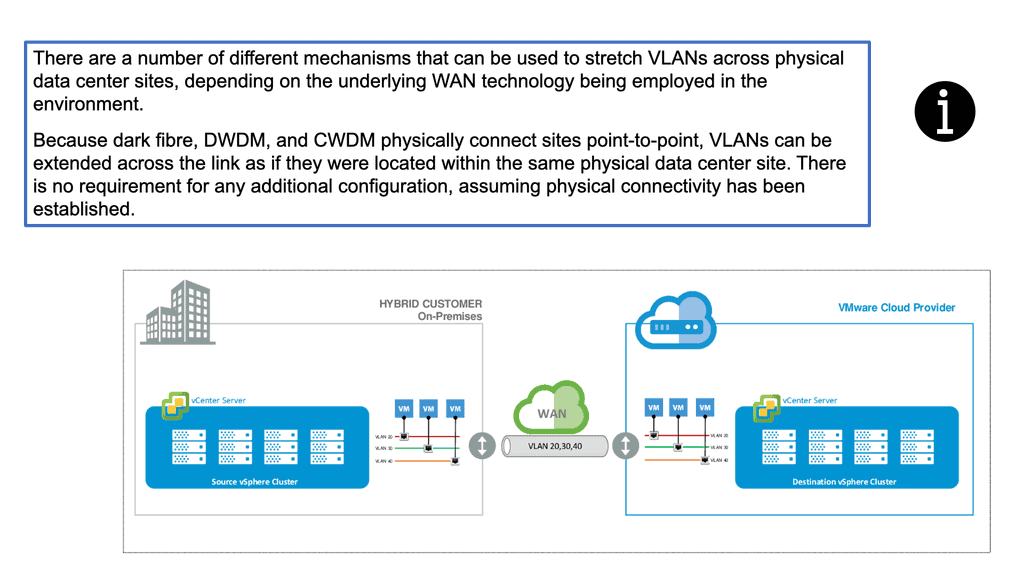

Hybrid cloud computing combines the use of both public and private cloud infrastructures, allowing organizations to leverage the benefits of both worlds. By seamlessly integrating on-premises resources with public cloud services, businesses can achieve enhanced flexibility, scalability, and cost-efficiency. This hybrid approach serves as an ideal platform for deploying LISP infrastructure, creating a potent combination.

**Endpoint identifiers and routing locators**

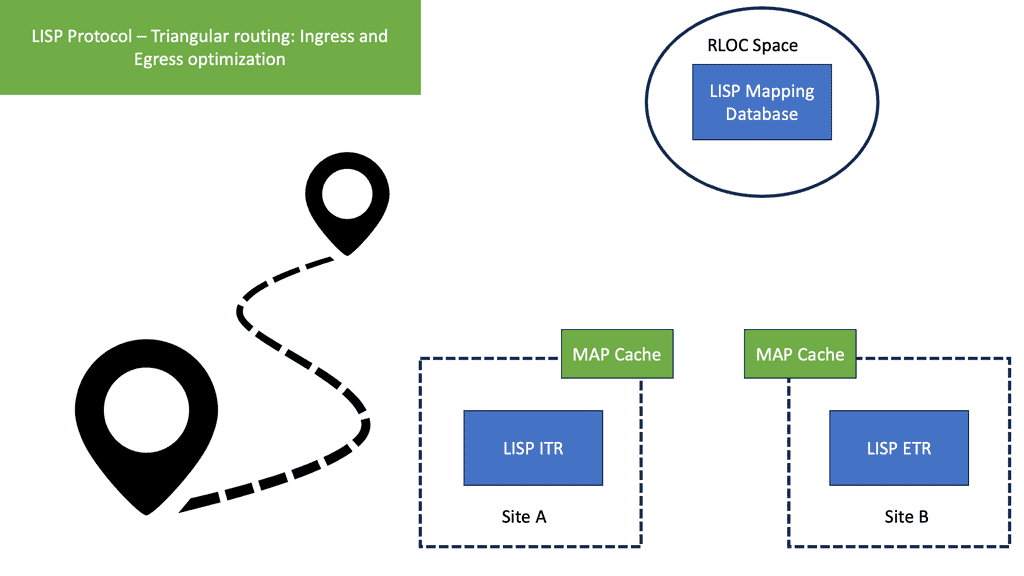

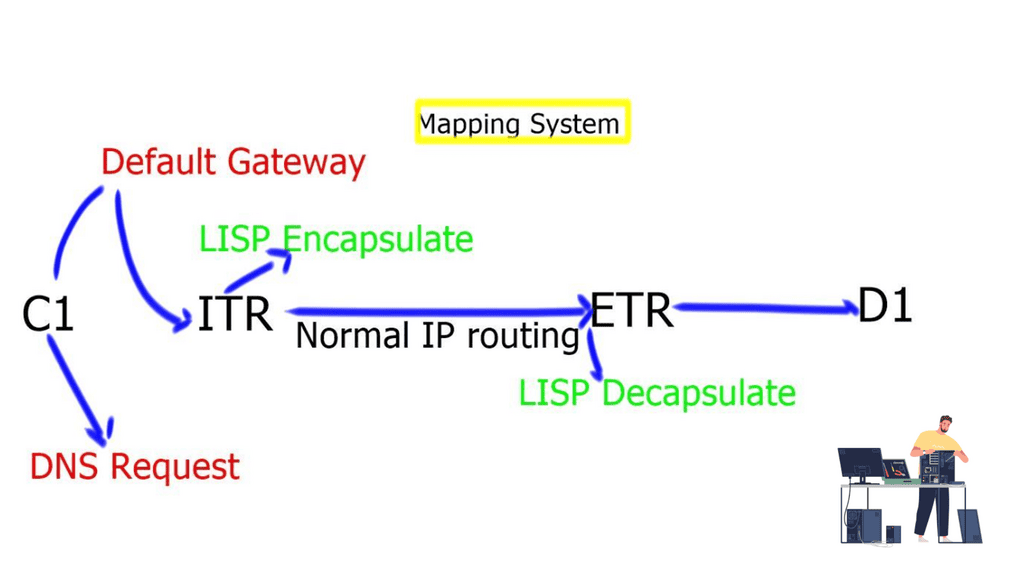

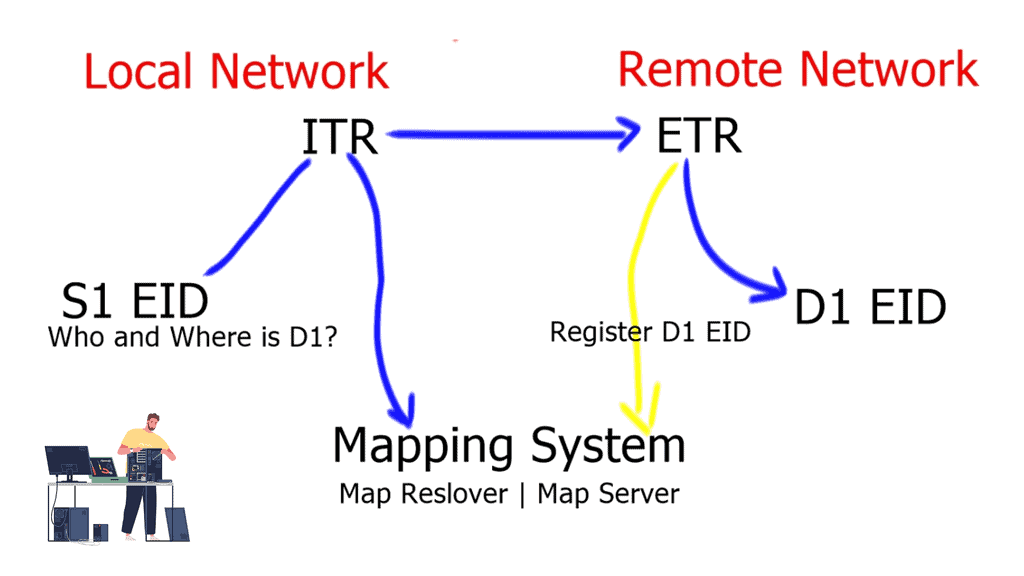

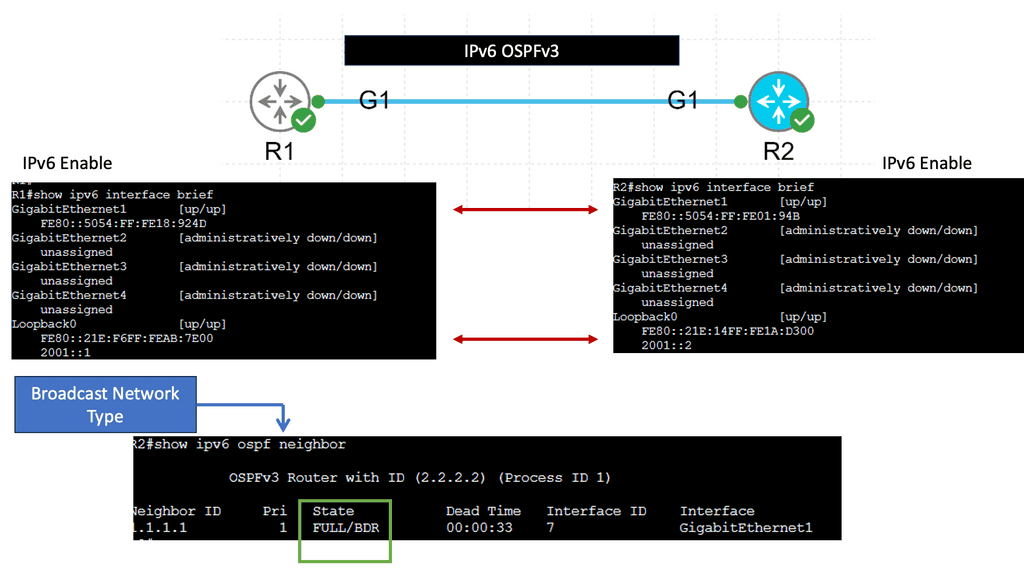

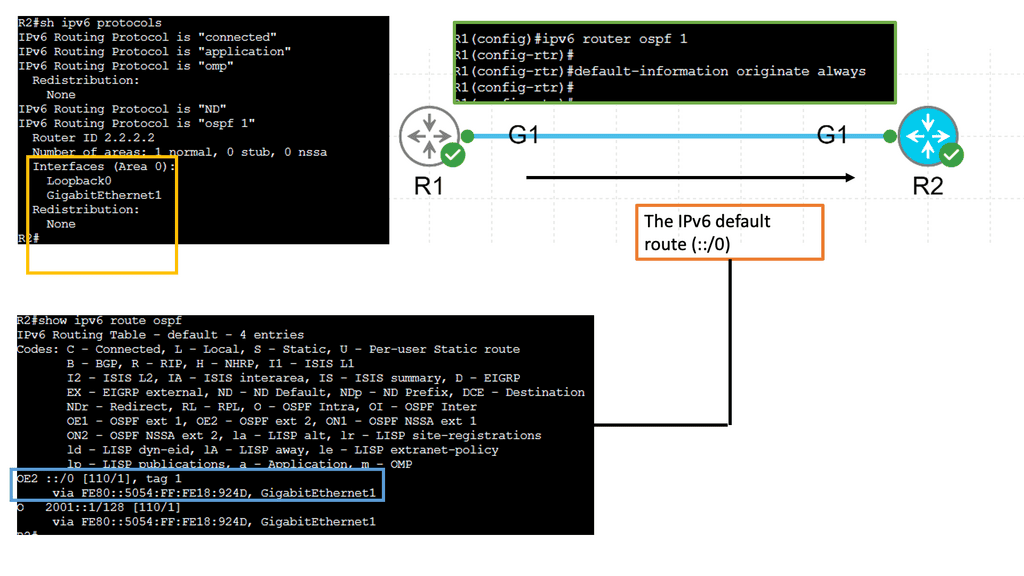

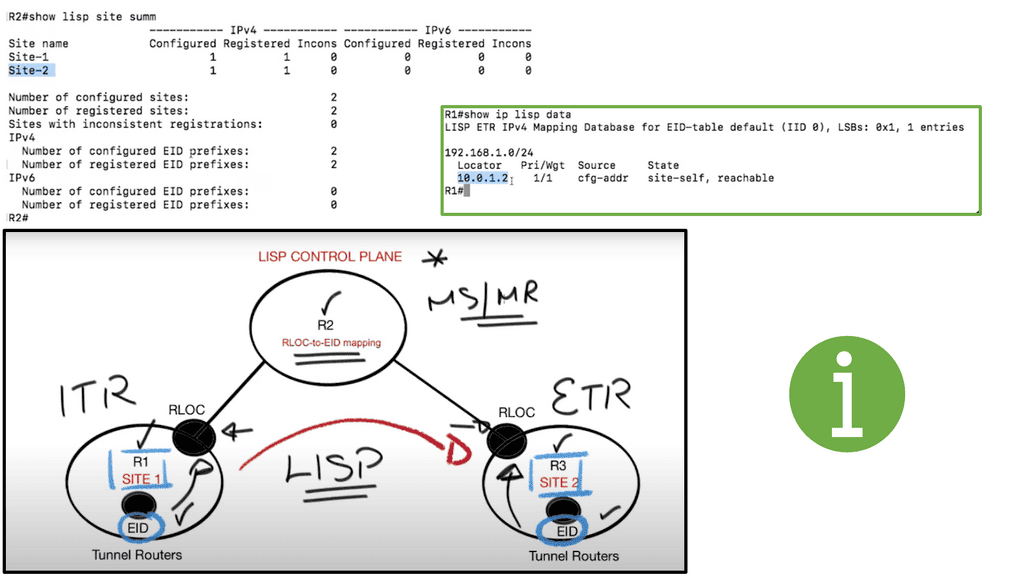

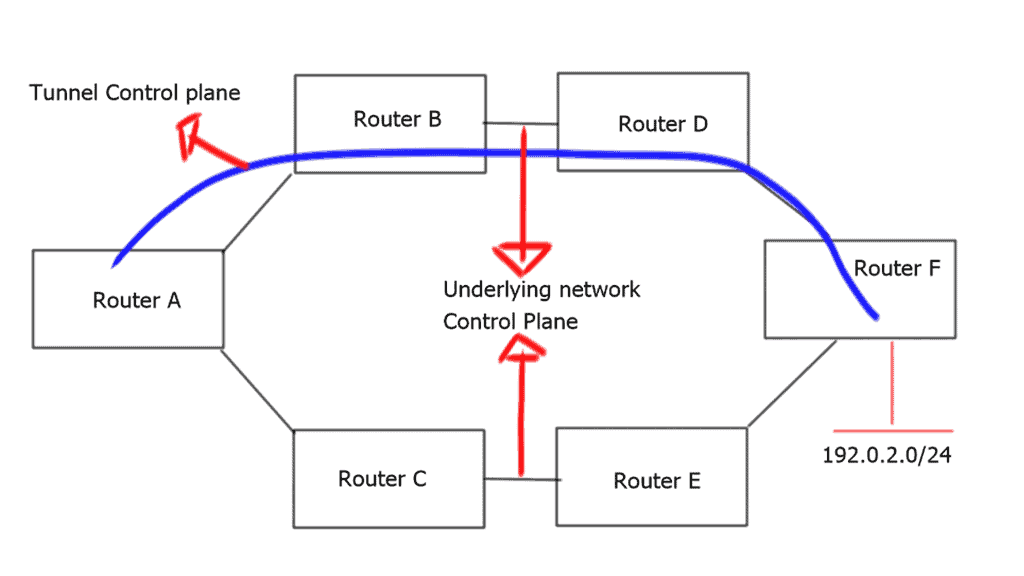

A device’s IPv4 or IPv6 address both identifies it and indicates its location. Today, an Internet host is assigned a different IPv4 or IPv6 address whenever it moves from one location to another, because a single address is overloaded with both location and identity semantics. LISP separates location from identity through the RLOC and the EID: the RLOC is the IP address of the egress tunnel router (ETR), and the EID is the host’s own IP address.

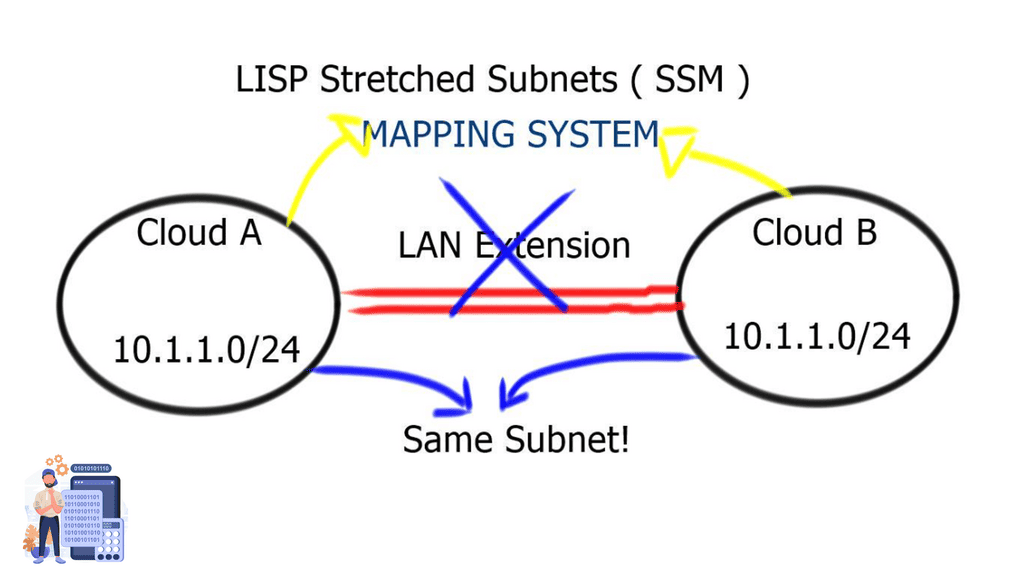

With LISP, a device’s identity does not change when its location changes. The device keeps its IPv4 or IPv6 address when it moves from one location to another; what changes dynamically is the site tunnel router (xTR), and therefore the RLOC, that serves it. A mapping system tracks this relationship so that the host’s identity is preserved across moves: as part of its distributed architecture, LISP provides an EID-to-RLOC mapping service that maps EIDs to RLOCs.
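To make the EID-to-RLOC mapping idea concrete, here is a minimal Python sketch of a mapping database. It is a simplified, hypothetical model, not a real LISP implementation: the class and method names are invented for illustration, the addresses are private or documentation examples, and a real mapping system is distributed across map-servers and map-resolvers rather than held in one dictionary. It shows the key property described above: when a host moves, only the RLOC registered for it changes, while its EID stays the same.

```python
import ipaddress
from typing import Dict, Optional, Union

IPNetwork = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]
IPAddress = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


class MappingDatabase:
    """Hypothetical single-node stand-in for the LISP EID-to-RLOC mapping system."""

    def __init__(self) -> None:
        # EID prefix -> RLOC of the xTR currently serving that prefix.
        self._mappings: Dict[IPNetwork, IPAddress] = {}

    def register(self, eid_prefix: str, rloc: str) -> None:
        # Re-registering the same prefix simply replaces the old RLOC.
        self._mappings[ipaddress.ip_network(eid_prefix)] = ipaddress.ip_address(rloc)

    def resolve(self, eid: str) -> Optional[IPAddress]:
        """Return the RLOC for a host EID using longest-prefix match."""
        addr = ipaddress.ip_address(eid)
        # `in` is False when the address and prefix are different IP versions.
        matches = [prefix for prefix in self._mappings if addr in prefix]
        if not matches:
            return None
        best = max(matches, key=lambda prefix: prefix.prefixlen)
        return self._mappings[best]


if __name__ == "__main__":
    db = MappingDatabase()
    db.register("10.1.1.0/24", "192.0.2.1")      # subnet served by the enterprise site
    print(db.resolve("10.1.1.20"))               # VM-B before the move: 192.0.2.1
    db.register("10.1.1.20/32", "198.51.100.1")  # VM-B moves to the cloud, keeps its EID
    print(db.resolve("10.1.1.20"))               # now 198.51.100.1
    print(db.resolve("10.1.1.10"))               # other hosts still map to 192.0.2.1
```

Registering a more specific /32 host entry for the moved VM, rather than rewriting the whole subnet, is one simple way to let a single host move while its neighbors stay put.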

Advantages of LISP in Hybrid Cloud:

1. Improved Scalability: LISP’s ability to separate the identifier from the location allows for easier scaling of hybrid cloud environments. With LISP, organizations can effortlessly add or remove resources without disrupting the overall network architecture, ensuring seamless expansion as business needs evolve.

2. Enhanced Flexibility: LISP’s inherent flexibility enables organizations to distribute workloads across cloud environments, including public, private, and on-premises infrastructure. This flexibility empowers businesses to optimize resource utilization and leverage the benefits of different cloud providers, resulting in improved performance and cost-efficiency.

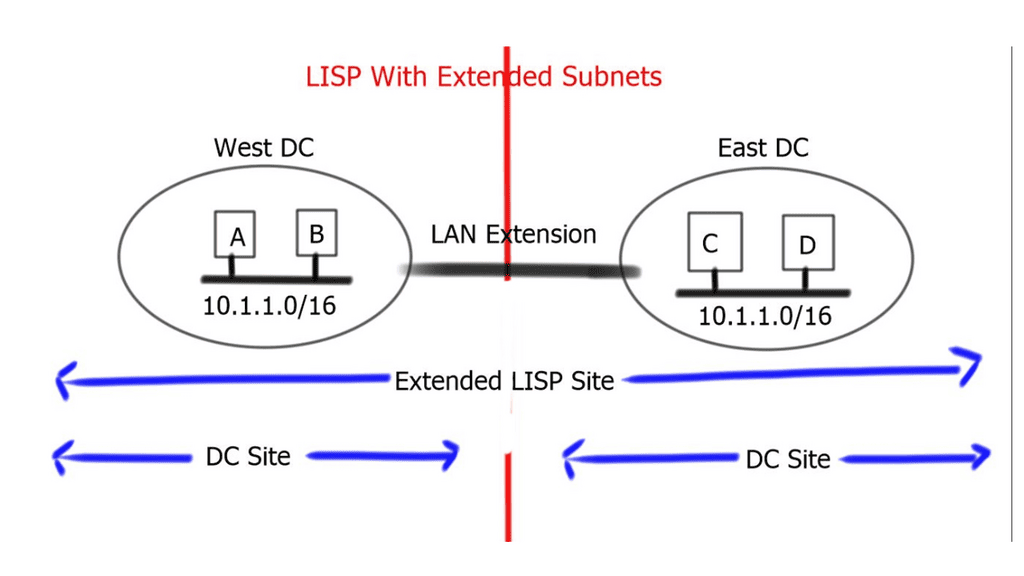

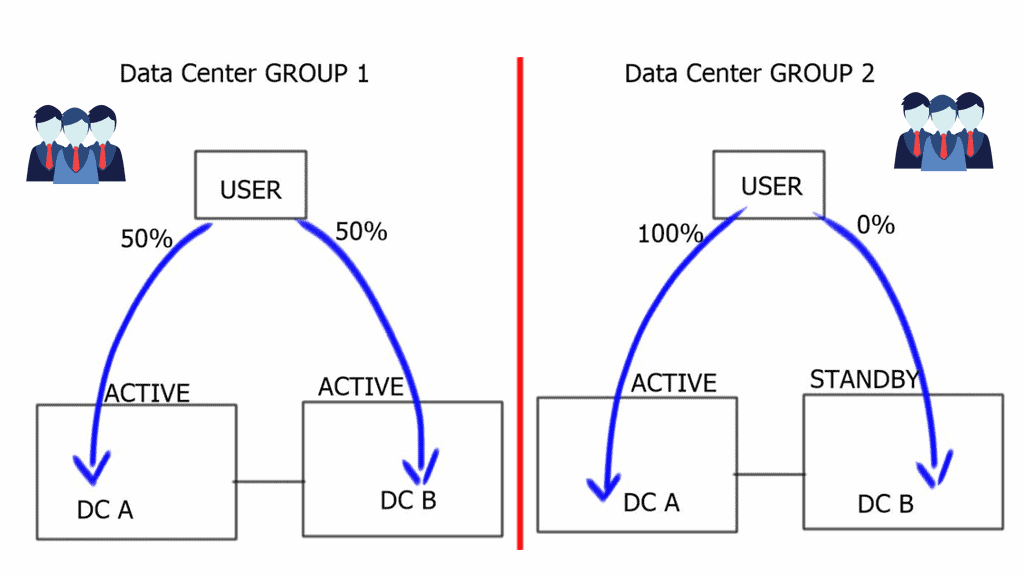

3. Efficient Mobility: Hybrid cloud environments often require seamless mobility, allowing applications and services to move between cloud providers or data centers. LISP’s mobility capabilities enable smooth migration of workloads, ensuring continuous availability and reducing downtime during transitions.

4. Enhanced Security: LISP can contribute to security in hybrid cloud environments, mainly in combination with other mechanisms. LISP encapsulation on its own does not encrypt traffic; confidentiality and integrity across diverse cloud infrastructures come from running the overlay over a protected transport such as IPsec, as in the deployment described later in this post. LISP’s support for virtual private network segmentation can also help keep traffic from different groups of endpoints apart.

Use Cases of LISP in Hybrid Cloud:

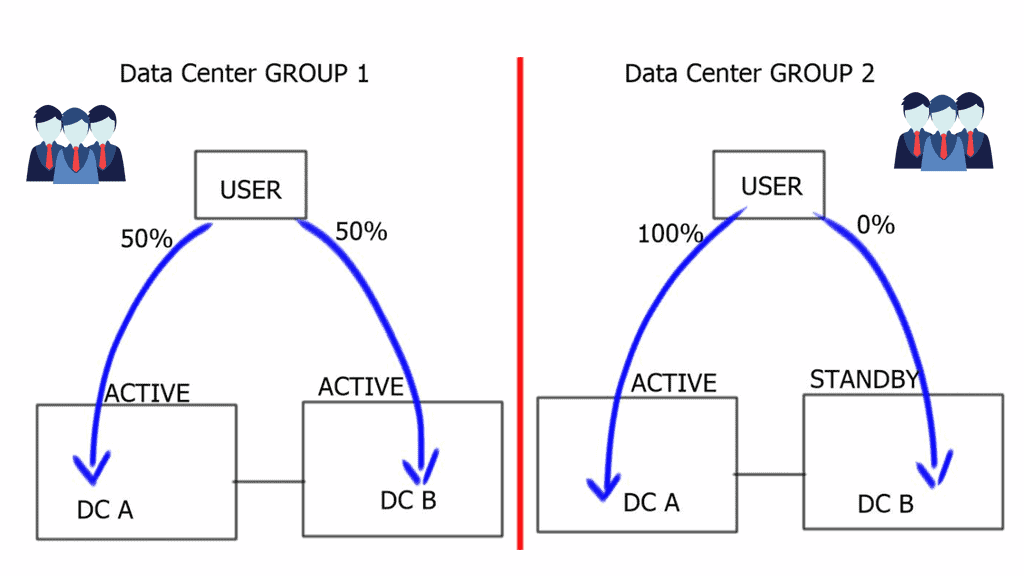

1. Disaster Recovery: LISP’s mobility and scalability make it an excellent choice for implementing disaster recovery solutions in hybrid cloud environments. By leveraging LISP, organizations can seamlessly replicate critical workloads across multiple cloud providers or data centers, ensuring business continuity during a disaster.

2. Cloud Bursting: LISP’s flexibility enables organizations to draw on additional resources from public cloud providers during peak demand periods. With LISP, businesses can extend their on-premises infrastructure to the public cloud, helping maintain performance while keeping costs under control.

3. Multi-Cloud Deployments: LISP’s ability to abstract the underlying network infrastructure simplifies the management of multi-cloud deployments. Organizations can efficiently distribute workloads across cloud providers by utilizing LISP, avoiding vendor lock-in, and maximizing resource utilization.

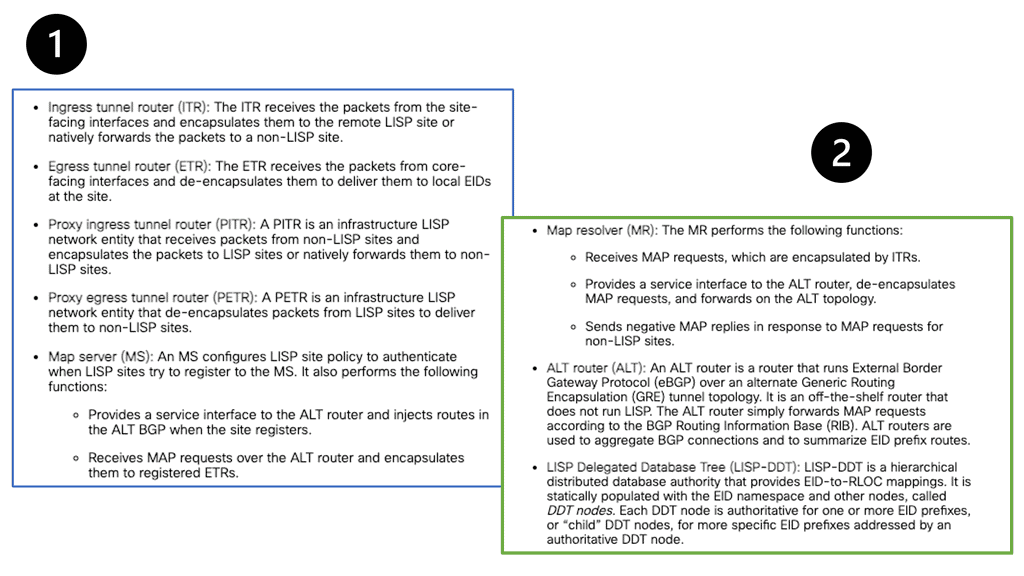

LISP Components

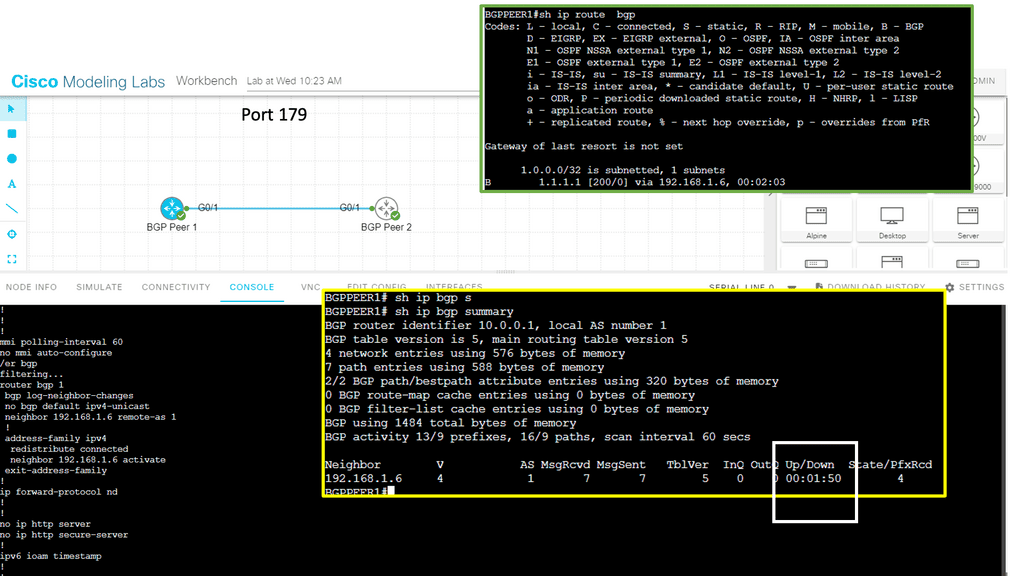

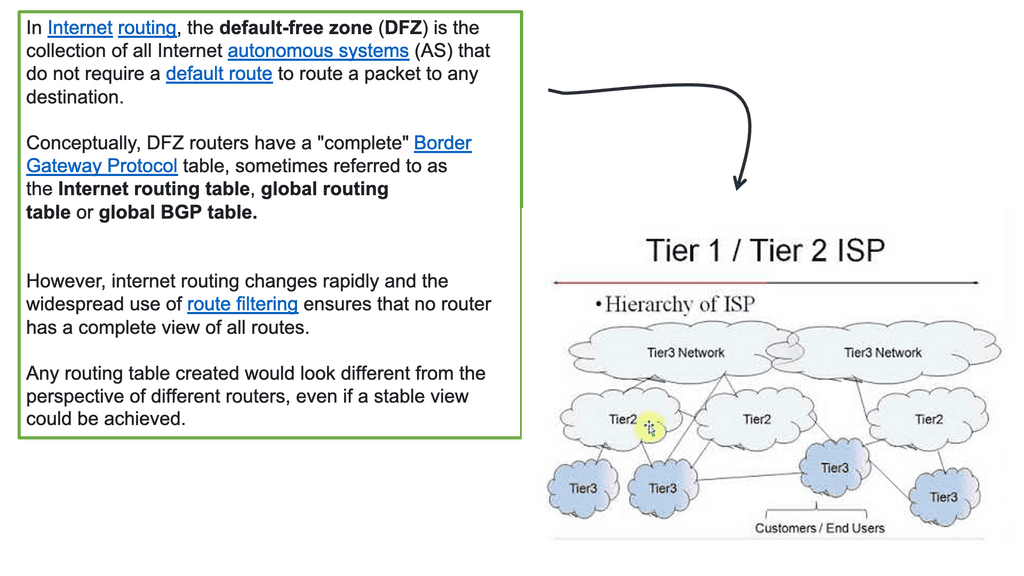

In addition to separating device identity from location, the Locator/ID Separation Protocol (LISP) architecture can reduce operational expenses (opex) by providing a Border Gateway Protocol (BGP)–free multihoming network. It supports multiple address families (AFs), provides a highly scalable virtual private network (VPN) solution, and enables host mobility in data centers. Understanding LISP’s architecture and how it works is essential to understanding how all these benefits and functionalities are achieved.
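BGP-free multihoming works because a LISP mapping can list several RLOCs for one EID prefix, each carrying a priority (lower is preferred, 255 means "do not use for unicast") and a weight (how traffic is shared among RLOCs of equal priority). The sketch below is a simplified, hypothetical model of how a remote ITR could use those two fields; the class and function names are invented, and real implementations also track RLOC reachability.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Locator:
    rloc: str
    priority: int  # lower value is preferred; 255 means "do not use for unicast"
    weight: int    # relative share of traffic among locators with the same priority


def pick_rloc(locators: List[Locator], rng: random.Random) -> Optional[str]:
    """Choose an RLOC for a multihomed site (simplified model of ITR behavior)."""
    usable = [loc for loc in locators if loc.priority != 255]
    if not usable:
        return None
    best = min(loc.priority for loc in usable)
    tier = [loc for loc in usable if loc.priority == best]
    weights = [loc.weight for loc in tier]
    if sum(weights) == 0:
        # All weights zero: this sketch falls back to an equal split.
        return rng.choice(tier).rloc
    return rng.choices(tier, weights=weights, k=1)[0].rloc


if __name__ == "__main__":
    site = [
        Locator("192.0.2.1", priority=1, weight=50),     # uplink via ISP A
        Locator("198.51.100.1", priority=1, weight=50),  # uplink via ISP B
        Locator("203.0.113.1", priority=2, weight=100),  # backup, used only if tier 1 is gone
    ]
    rng = random.Random(7)
    print(pick_rloc(site, rng))
```

Because the site advertises its uplinks in the mapping system rather than through BGP, changing the traffic split is a matter of re-registering different priorities or weights.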

LISP Architecture

RFC 6830 (whose content has since been revised in RFC 9300 and RFC 9301) defines LISP as a routing and addressing architecture for the Internet Protocol. The LISP routing architecture addresses scalability, multihoming, traffic engineering, and mobility problems. On today’s Internet, a single 32-bit (IPv4) or 128-bit (IPv6) address combines location and identity semantics. LISP separates location from identity: the network-layer locator (the RLOC) can change, but the network-layer identifier (the EID) does not.

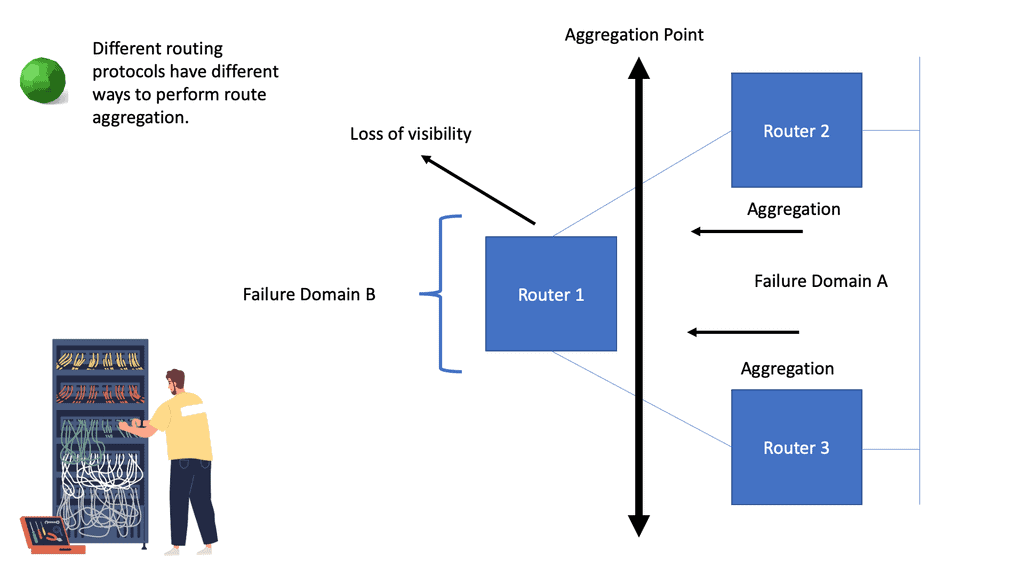

LISP thus separates end-user device identifiers from the routing locators that others use to reach them. In the LISP routing architecture, devices are identified by their endpoint identifiers (EIDs), and their locations are given by routing locators (RLOCs).
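The EID/RLOC split is visible in the packet format: the original packet, addressed from EID to EID, travels unchanged inside an outer IP/UDP header addressed from RLOC to RLOC, with an 8-byte LISP header in between (UDP port 4341 is the LISP data port). The sketch below builds only that 8-byte LISP data header, with the nonce-present (N) flag set and no Instance ID, and represents the outer headers as plain fields rather than real IP/UDP bytes. The helper names are hypothetical.

```python
import os
import struct
from dataclasses import dataclass

LISP_DATA_PORT = 4341  # UDP destination port used for LISP-encapsulated data
N_BIT = 0x80           # "nonce present" flag in the first byte of the LISP header


def lisp_data_header(nonce: int) -> bytes:
    """Build an 8-byte LISP data header: flags + 24-bit nonce, then a zeroed second word."""
    if not 0 <= nonce < 1 << 24:
        raise ValueError("nonce must fit in 24 bits")
    first_word = (N_BIT << 24) | nonce
    # The second word (Instance ID / Locator-Status-Bits) is left at zero
    # because neither the I flag nor the L flag is set.
    return struct.pack("!II", first_word, 0)


@dataclass
class LispPacket:
    outer_src: str     # RLOC of the encapsulating ITR
    outer_dst: str     # RLOC of the decapsulating ETR
    udp_dst_port: int
    lisp_header: bytes
    inner: bytes       # original EID-to-EID packet, carried unchanged


def encapsulate(inner: bytes, itr_rloc: str, etr_rloc: str) -> LispPacket:
    """ITR side: wrap the original packet for transport between RLOCs."""
    nonce = int.from_bytes(os.urandom(3), "big")  # random 24-bit nonce
    return LispPacket(itr_rloc, etr_rloc, LISP_DATA_PORT, lisp_data_header(nonce), inner)


def decapsulate(packet: LispPacket) -> bytes:
    """ETR side: strip the outer headers and hand back the original packet."""
    if packet.udp_dst_port != LISP_DATA_PORT:
        raise ValueError("not a LISP data packet")
    return packet.inner
```

Note that nothing in `encapsulate` touches the inner packet: the EIDs inside it are exactly what the hosts sent, and only the outer RLOC addresses reflect where the destination currently is.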


LISP Hybrid Cloud Implementation

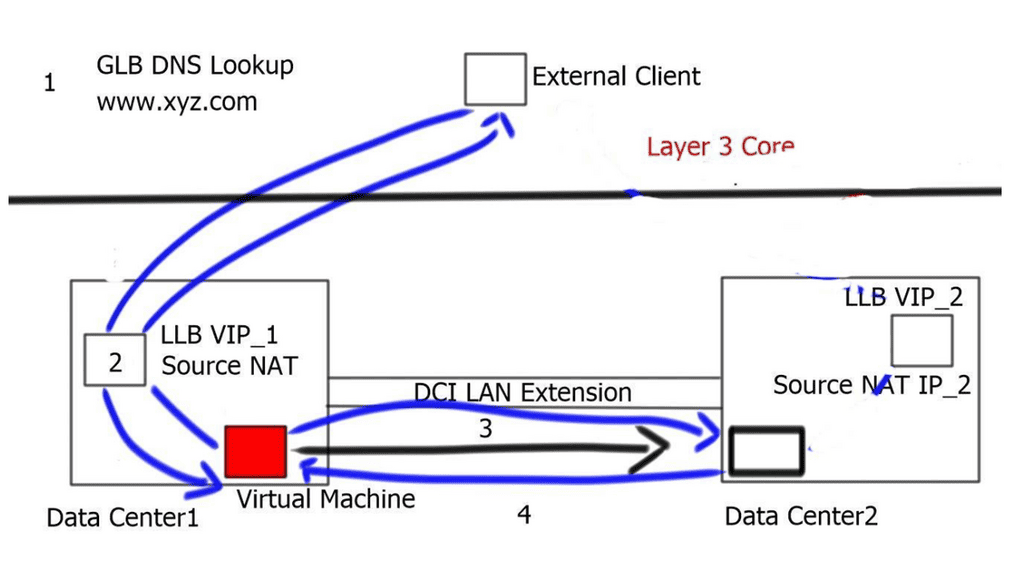

Critical Points and Traffic Flows

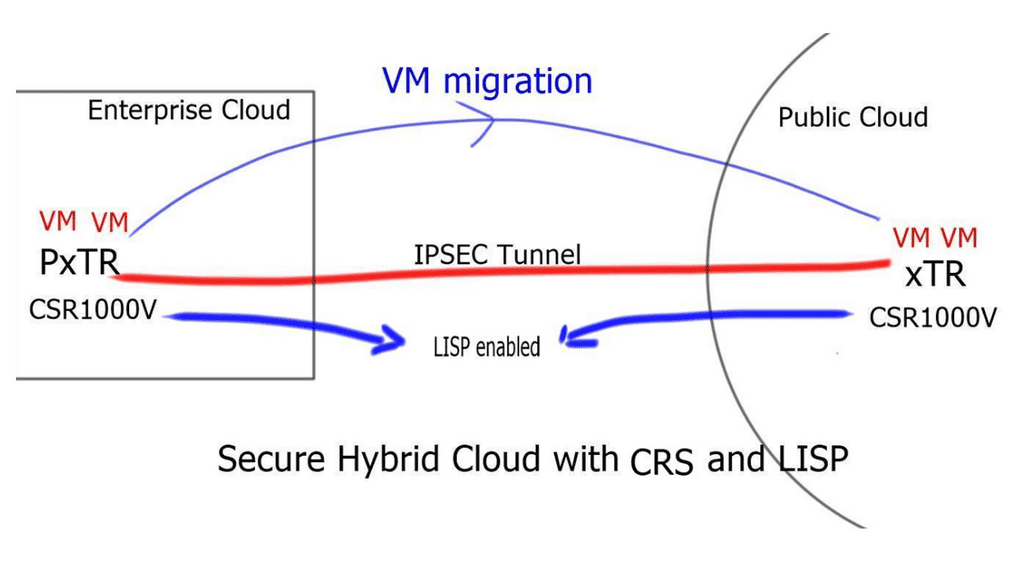

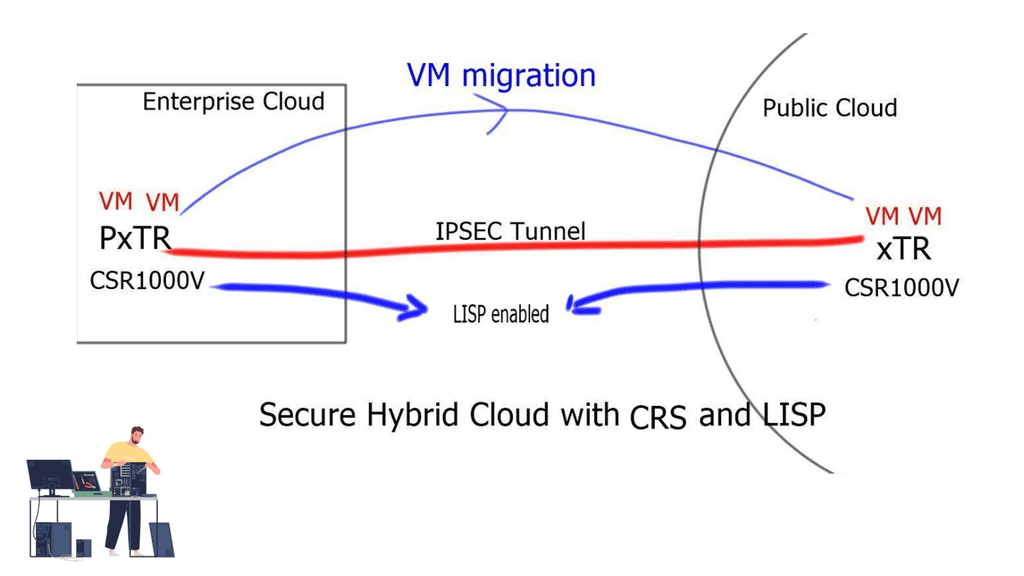

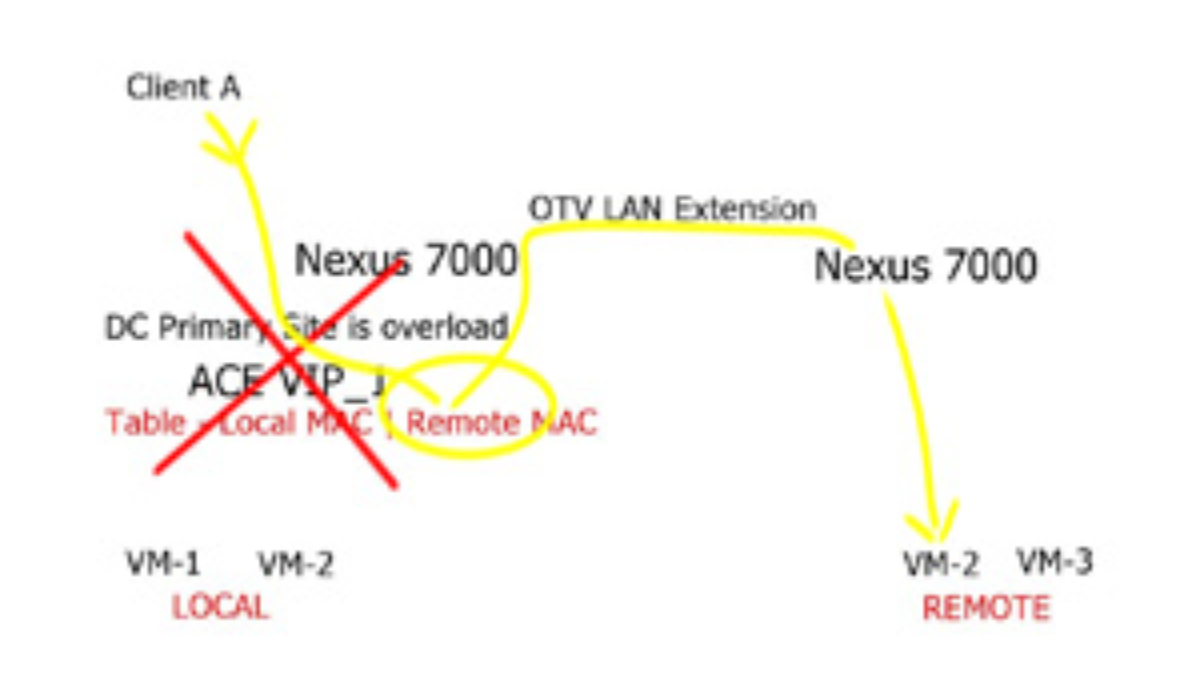

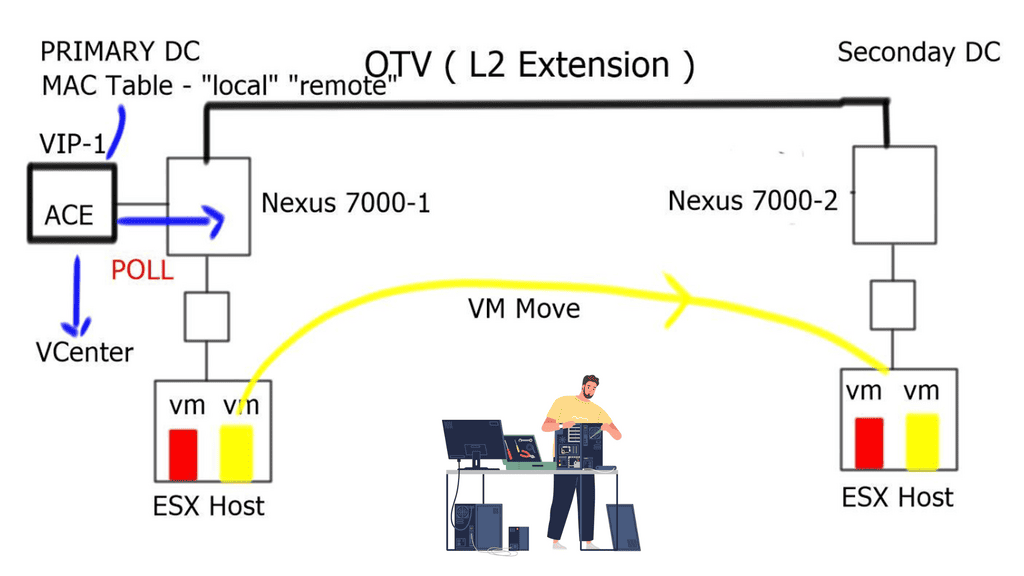

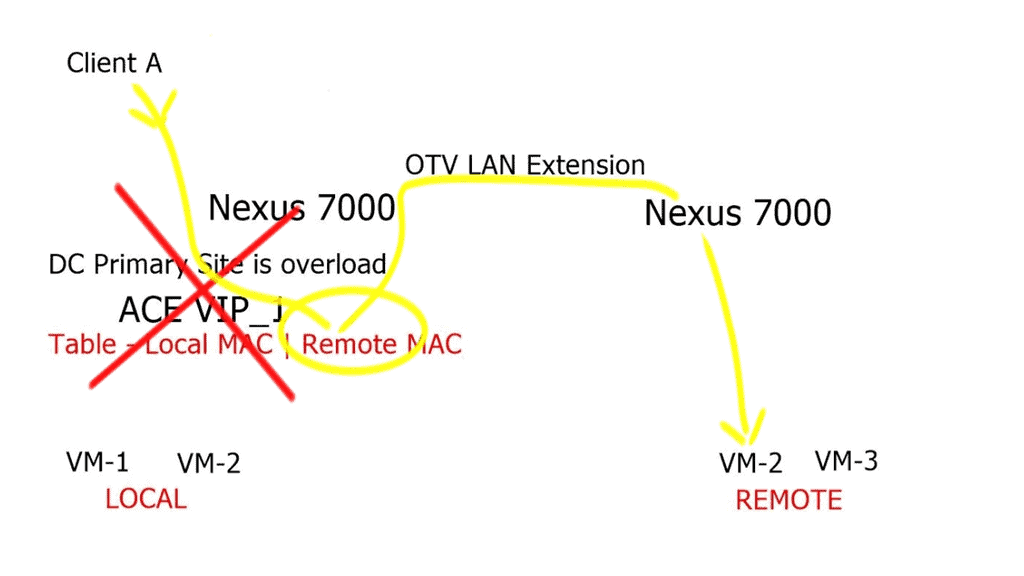

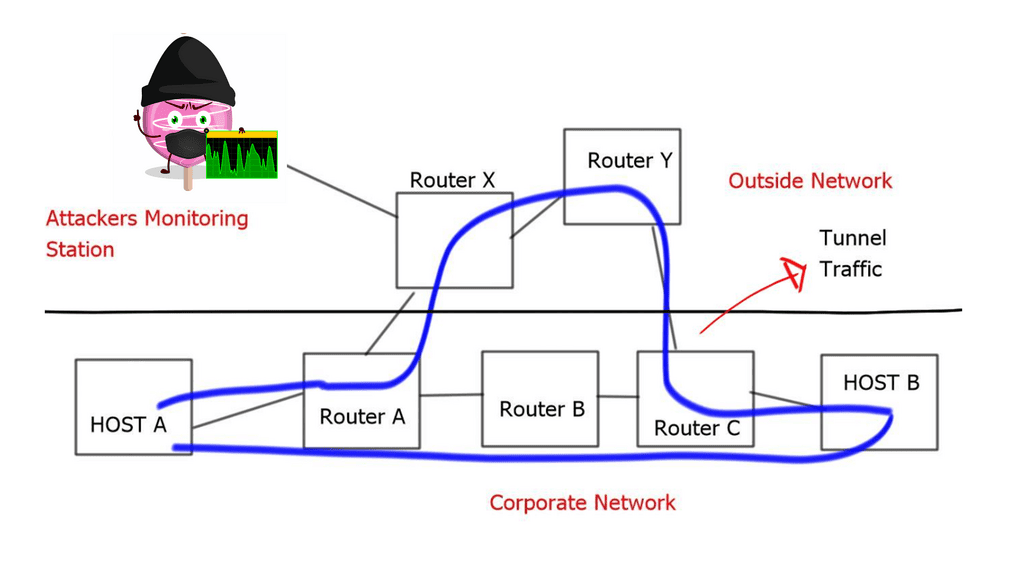

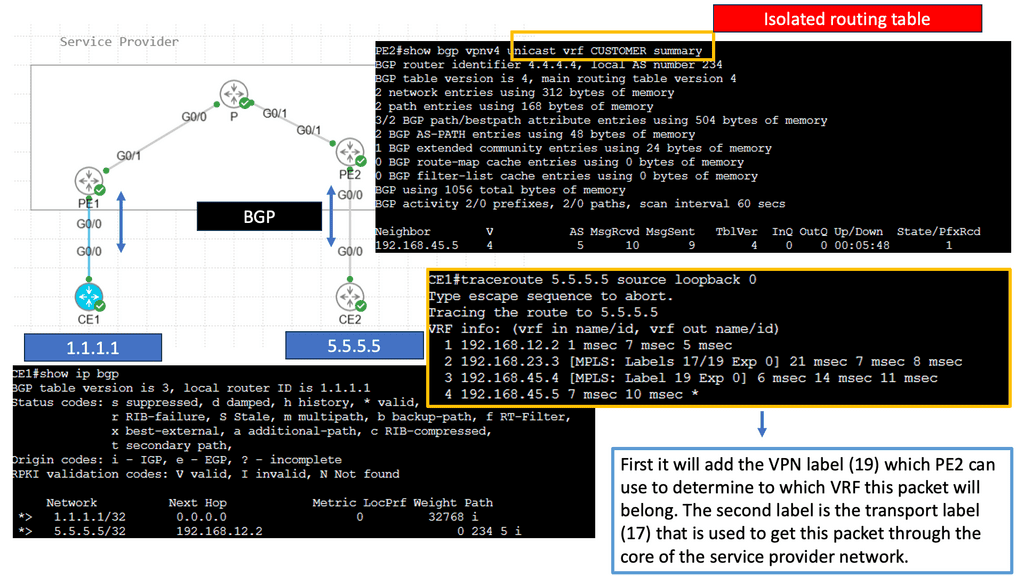

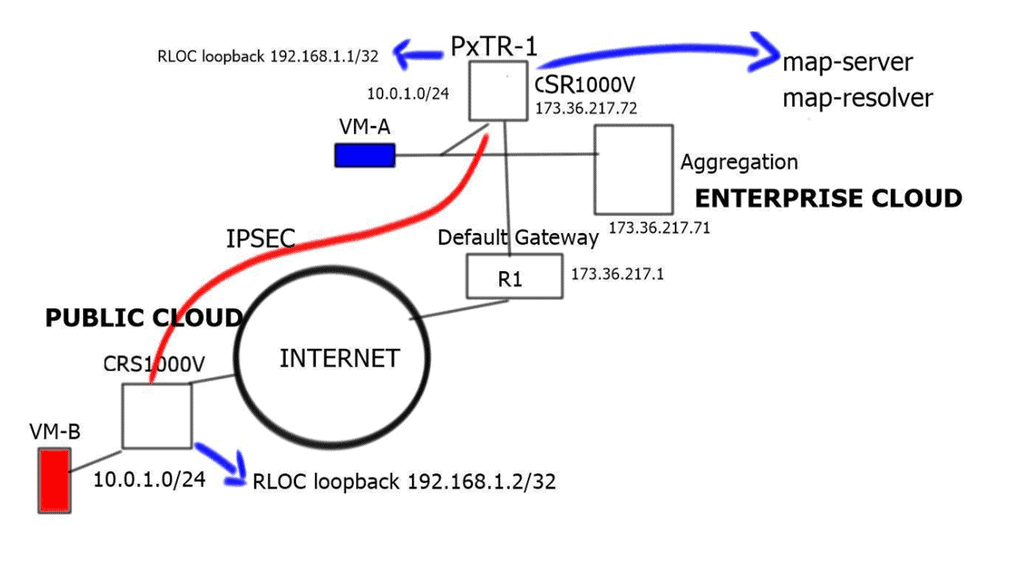

- The enterprise LISP-enabled router (PxTR-1) can be either physical or virtual. The ASR 1000 and selected ISR models support Locator/ID Separation Protocol (LISP) functions in the physical world, and the CSR1000V does so in the virtual world.

- The CSR or ASR/ISR acts as a PxTR with both Ingress Tunnel Router (ITR) and Egress Tunnel Router (ETR) functions. The LISP-enabled router acts as a PxTR so that non-LISP sites, such as the branch office, can reach the mobile servers once they have moved to the cloud; the “P” stands for proxy. The ITR and ETR functions handle LISP encapsulation and decapsulation, depending on the direction of the traffic flow: the ITR encapsulates, and the ETR decapsulates.

- The PxTR-1 (Proxy Tunnel Router) does not need to be in the regular forwarding path and does not have to be the default gateway for the servers that require mobility between sites. However, it does require an interface in the same subnet as the servers that require mobility. This can be either a physical interface or a subinterface.

- The PxTR-1 can detect a server’s EID (its IP address) by listening for the Address Resolution Protocol (ARP) requests a server may send at boot time, or by actively sending Internet Control Message Protocol (ICMP) requests to those servers.

- The PxTR-1 uses Proxy-ARP for both intra-subnet and inter-subnet communication.

- The PxTR-1 sends proxy-ARP replies on behalf of nonlocal servers (such as VM-B in the public cloud), inserting its own MAC address for any such EID.

- An IPsec tunnel, with routing enabled over it, provides reachability for the RLOC address space. The IPsec tunnel endpoints are the PxTR-1 and the xTR-1.
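The EID detection and proxy-ARP behavior in the list above can be sketched as a small state machine. This is a hypothetical Python model, not a router API: the class and method names are invented, and it assumes that PxTR-1 answers only for EIDs it already knows to be remote (tracked here in a `remote_eids` set), which is one conservative reading of "any EID" above. The MAC address comes from the documentation range.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class ProxyXtr:
    """Hypothetical model of PxTR-1's server-mobility interface (not a real API)."""
    mac: str
    remote_eids: Set[str] = field(default_factory=set)  # EIDs known to live in the cloud
    local_eids: Set[str] = field(default_factory=set)   # EIDs detected on this subnet

    def detect_local_eid(self, ip: str) -> None:
        # Triggered by seeing a server's ARP request or its reply to an ICMP probe.
        self.local_eids.add(ip)
        self.remote_eids.discard(ip)  # a host seen locally is no longer remote

    def on_arp_request(self, sender_ip: str, target_ip: str) -> Optional[str]:
        """Return the MAC address to answer with, or None to stay silent."""
        self.detect_local_eid(sender_ip)  # the ARP sender is a local server
        if target_ip in self.remote_eids:
            return self.mac  # proxy-ARP: PxTR-1 answers for the nonlocal server
        return None          # a local target answers for itself


if __name__ == "__main__":
    pxtr = ProxyXtr(mac="00:00:5e:00:53:01", remote_eids={"10.1.1.20"})
    # VM-A (10.1.1.10) ARPs for VM-B (10.1.1.20), which has moved to the cloud.
    print(pxtr.on_arp_request("10.1.1.10", "10.1.1.20"))
```

Staying silent for local targets matters: if PxTR-1 answered for a server that is still on the subnet, it would pull that server's traffic through itself for no reason.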

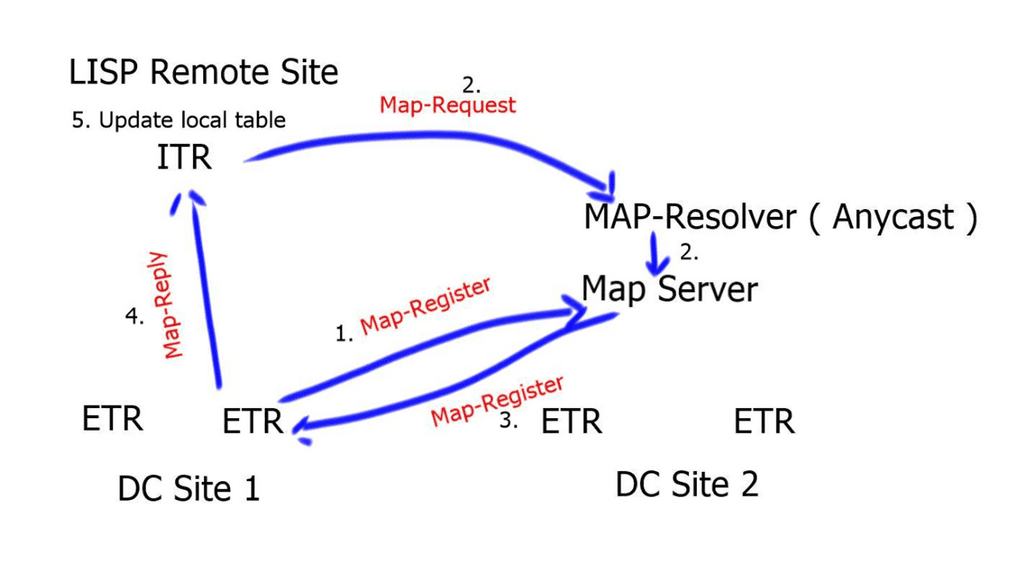

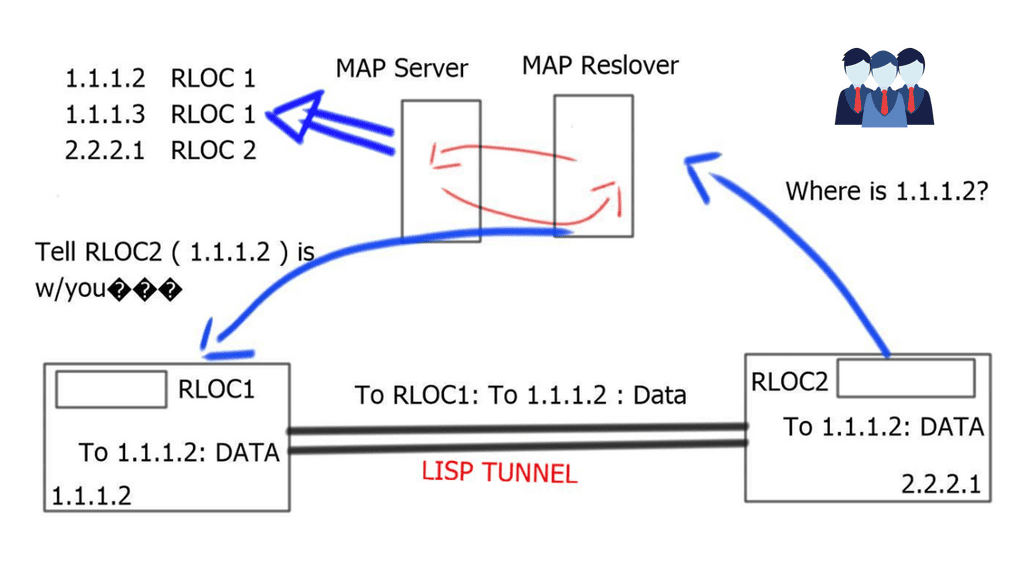

LISP hybrid cloud: The map-server and map-resolver

In this design, the map-server and map-resolver functions are enabled on the PxTR-1, although they could instead be hosted on other devices in the private cloud. For large deployments, design redundancy into the LISP mapping system with redundant map-server and map-resolver devices. These functions can also be split across separate devices, i.e., the map-server on one device and the map-resolver on another. Anycast addressing can be used for the map-resolver so that LISP sites reach the topologically closest resolver.
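The redundancy advice above can be illustrated with a toy model in Python. Everything here is hypothetical: the names are invented, anycast is approximated by picking the live resolver with the lowest `distance` (in a real network, routing delivers the anycast request), and the resolver is assumed to be able to query any live map-server. It shows that losing one map-server and the closest map-resolver still leaves lookups working.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class MapServer:
    name: str
    up: bool = True
    registrations: Dict[str, str] = field(default_factory=dict)  # EID -> RLOC


@dataclass
class MapResolver:
    name: str
    distance: int  # stand-in for routing distance; anycast delivers to the closest
    up: bool = True


def register(eid: str, rloc: str, servers: List[MapServer]) -> None:
    """An ETR registers with every live map-server so that any one can answer."""
    for server in servers:
        if server.up:
            server.registrations[eid] = rloc


def lookup(eid: str, resolvers: List[MapResolver],
           servers: List[MapServer]) -> Optional[Tuple[str, Optional[str]]]:
    """Return (resolver used, RLOC), or None if no resolver is reachable."""
    live = [r for r in resolvers if r.up]
    if not live:
        return None
    resolver = min(live, key=lambda r: r.distance)  # closest live resolver wins
    for server in servers:
        if server.up and eid in server.registrations:
            return resolver.name, server.registrations[eid]
    return resolver.name, None  # resolver reachable, but no mapping registered
```

With one map-server and one map-resolver on the PxTR-1, as in the small design above, a single device failure removes the whole mapping system; the lists here are what redundancy adds.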

Public cloud deployment

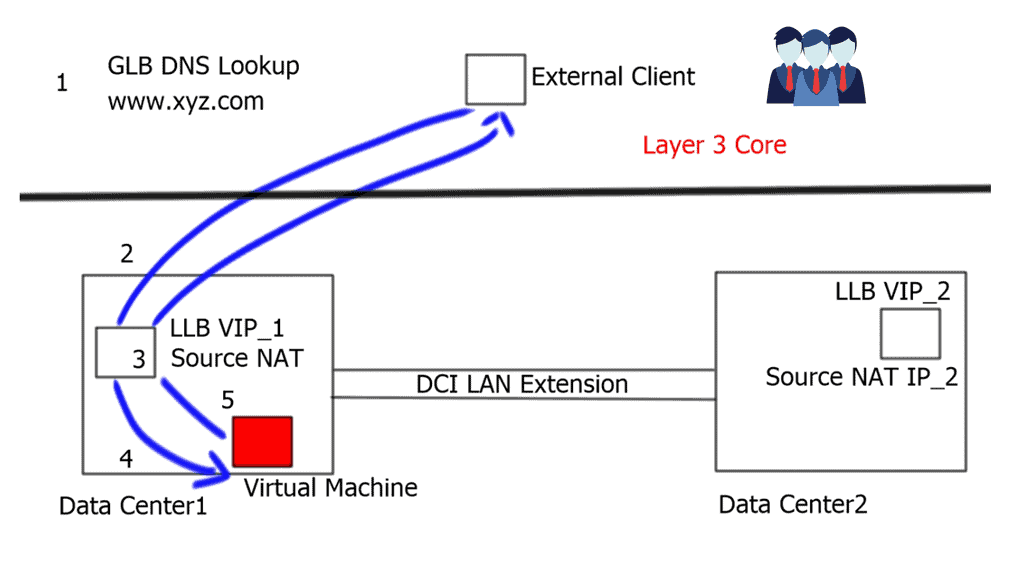

- Unlike the PxTR-1 in the enterprise domain, the xTR-1 in the Public Cloud must be in the regular data forwarding path and acts as the default gateway.

- At the cloud site, the xTR-1 acts as both the ETR and the ITR. For flows from the enterprise domain to the public cloud, the xTR-1 performs ETR functions.

- For return traffic from the cloud to the enterprise, the xTR-1 acts as an ITR.

- For a destination with no known mapping, the xTR-1 LISP-encapsulates the traffic and forwards it to the RLOC at the enterprise site.
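The xTR-1's ITR-side decision for traffic leaving a cloud VM can be summarized in a few lines. This Python function is a hypothetical sketch: its name and parameters are invented, it treats the map-cache as an exact-match dictionary (real map-caches hold prefixes), and it covers only the ITR role, not decapsulation in the ETR role.

```python
from typing import Dict, Set, Tuple


def xtr1_itr_decision(dst_eid: str, local_eids: Set[str],
                      map_cache: Dict[str, str], enterprise_rloc: str) -> Tuple[str, str]:
    """Decide how xTR-1 forwards a packet from a cloud VM (hypothetical model)."""
    if dst_eid in local_eids:
        return "forward-locally", dst_eid       # destination is another cloud VM
    rloc = map_cache.get(dst_eid)
    if rloc is not None:
        return "lisp-encapsulate", rloc         # mapping known: use the cached RLOC
    return "lisp-encapsulate", enterprise_rloc  # unknown destination: send to enterprise
```

Defaulting unknown destinations to the enterprise RLOC keeps the cloud site simple: the enterprise PxTR-1, which has normal routing toward non-LISP sites, handles everything the cloud side cannot resolve.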

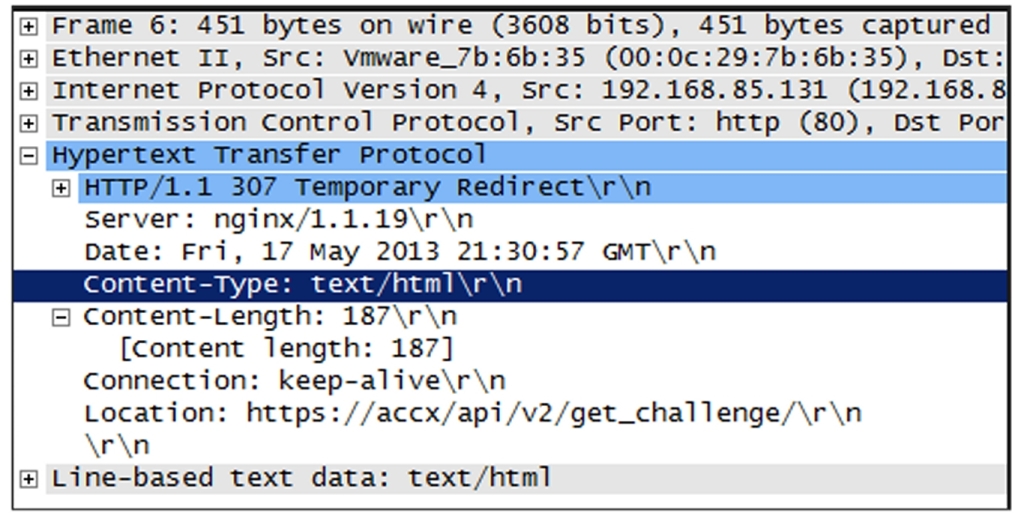

Packet walk: Enterprise to public cloud

- Virtual Machine A in the enterprise space wants to communicate and opens a session with Virtual Machine B in the public cloud space.

- VM-A sends an ARP request for VM-B. This is used to find the MAC address of VM-B.

- The PxTR-1, which has an interface connected to VM-A (the server mobility interface), receives this request and replies with its own MAC address. This is the PxTR-1’s proxy-ARP feature, used because VM-B is not directly connected.

- VM-A receives the PxTR-1’s MAC address via ARP and forwards its traffic for VM-B to the PxTR-1.

- As this is a new connection, the PxTR-1 does not have a LISP mapping in its cache for the remote VM. This triggers the LISP control plane, and the PxTR-1 sends a map request to the LISP mapping system ( map-resolver and map-server ).

- The LISP mapping system, which in this design runs locally on the PxTR-1, replies with the EID-to-RLOC mapping, showing that VM-B is located at the public cloud site.

- Finally, the PxTR-1 LISP-encapsulates the traffic and sends it to the xTR-1 at the remote site.
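The map-cache behavior in this packet walk (miss on the first packet, Map-Request, then cached forwarding) can be sketched as follows. The class is hypothetical, and the sketch simplifies one point on purpose: it calls the mapping system synchronously, whereas real ITRs resolve asynchronously, and implementations differ in whether they drop, queue, or forward packets while a Map-Request is outstanding.

```python
from typing import Callable, Dict, List, Optional


class PxtrItr:
    """Hypothetical model of PxTR-1's ITR role in the enterprise-to-cloud packet walk."""

    def __init__(self, map_request: Callable[[str], Optional[str]]) -> None:
        self.map_request = map_request       # asks the mapping system for an RLOC
        self.map_cache: Dict[str, str] = {}  # EID -> RLOC learned from Map-Replies
        self.events: List[str] = []

    def forward(self, dst_eid: str) -> Optional[str]:
        """Return the RLOC the packet is encapsulated to, or None if unresolved."""
        rloc = self.map_cache.get(dst_eid)
        if rloc is None:
            self.events.append(f"cache miss for {dst_eid}: Map-Request sent")
            rloc = self.map_request(dst_eid)
            if rloc is None:
                self.events.append(f"no mapping for {dst_eid}")
                return None
            self.map_cache[dst_eid] = rloc
        self.events.append(f"encapsulate to {rloc}")
        return rloc


if __name__ == "__main__":
    # Stand-in mapping system: VM-B (10.1.1.20) is registered at the cloud xTR-1.
    itr = PxtrItr(lambda eid: {"10.1.1.20": "198.51.100.1"}.get(eid))
    itr.forward("10.1.1.20")  # first packet of the session: miss, then Map-Request
    itr.forward("10.1.1.20")  # later packets: served from the map-cache
    print(itr.events)
```

Only the first packet of the session triggers the control plane; subsequent packets for VM-B are encapsulated straight from the map-cache.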

Packet walk: Non-LISP site to public cloud

- An end host in a non-LISP site wants to open a connection with VM-B.

- Traditional routing attracts the traffic to the enterprise site, where it is passed to the local default gateway.

- The local default gateway sends an ARP request to find the MAC address of VM-B.

- The PxTR-1 performs proxy-ARP, responds to the ARP request, and inserts its MAC address for the remote VM-B.

- Traffic is then LISP encapsulated and sent to the remote Public Cloud, where VM-B is located.

Summary: LISP Hybrid Cloud Implementation

In the ever-evolving landscape of cloud computing, one technology has been making waves and transforming how organizations manage their infrastructure: LISP Hybrid Cloud. This innovative approach combines the benefits of the Locator/ID Separation Protocol (LISP) and the flexibility of hybrid cloud architectures. This blog post explored the key features, advantages, implementation strategies, and use cases of LISP Hybrid Cloud.

Understanding LISP Hybrid Cloud

LISP, originally designed to improve the scalability of the Internet’s routing infrastructure, has now found its application in the cloud world. LISP Hybrid Cloud leverages the principles of LISP to extend a network seamlessly across multiple cloud environments, including public, private, and hybrid clouds. By decoupling an endpoint’s identity from its location, LISP Hybrid Cloud provides enhanced mobility, scalability, and security.

Benefits of LISP Hybrid Cloud

Enhanced Mobility: With LISP Hybrid Cloud, virtual machines and applications can be moved across different cloud environments without complex network reconfigurations. This flexibility enables organizations to optimize resource utilization and implement dynamic workload management strategies.

Improved Scalability: LISP Hybrid Cloud efficiently scales network infrastructure by separating the endpoint’s identity from its location. This decoupling enables the seamless addition or removal of cloud resources while maintaining connectivity and minimizing disruptions.

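Enhanced Security: By separating an endpoint’s identity from its location, LISP Hybrid Cloud adds a layer of indirection: an EID alone does not reveal where a resource is currently attached, which can make it harder for attackers to target specific endpoints. This obscuring of location is not a substitute for encryption or access control, which in this design come from mechanisms such as the IPsec tunnel between sites.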

Implementing LISP Hybrid Cloud

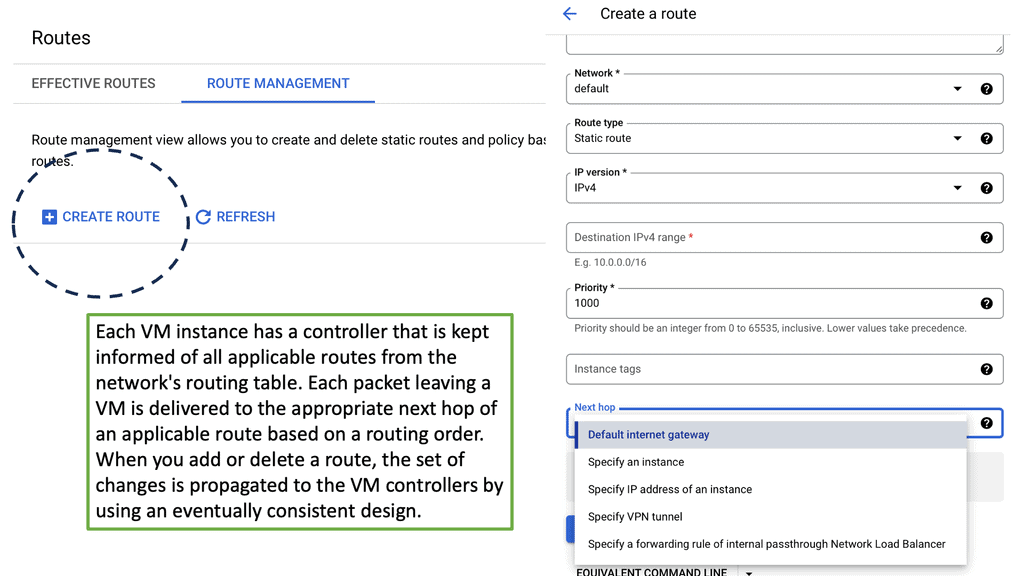

Infrastructure Requirements: Implementing LISP Hybrid Cloud requires a LISP-enabled network infrastructure, which includes LISP-capable routers (xTRs and PxTRs) and a mapping system (map-servers and map-resolvers). Organizations must ensure compatibility with their existing network equipment or consider upgrading to LISP-compatible devices.

Configuration and Management: Proper configuration of the LISP Hybrid Cloud involves establishing LISP overlays, mapping systems, and policies. Organizations should also consider automation and orchestration tools to streamline the deployment and management of their LISP Hybrid Cloud architecture.

Use Cases of LISP Hybrid Cloud

Disaster Recovery and Business Continuity: LISP Hybrid Cloud enables organizations to replicate their critical workloads across multiple cloud environments, ensuring business continuity during a disaster or service disruption.

Multi-Cloud Deployments: LISP Hybrid Cloud simplifies the deployment and management of applications across multiple cloud providers. It enables organizations to leverage the strengths of different clouds while maintaining seamless connectivity and workload mobility.

Conclusion:

LISP Hybrid Cloud offers a transformative approach to cloud networking, combining the power of LISP with the flexibility of hybrid cloud architectures. Organizations can achieve enhanced mobility, scalability, and security by decoupling an endpoint’s identity from its location. As the cloud landscape continues to evolve, LISP Hybrid Cloud presents a compelling solution for organizations looking to optimize their infrastructure and embrace the full potential of hybrid cloud environments.