SDN Data Center

Data centers play a crucial role in storing and managing vast amounts of information. Traditional data centers, however, have faced challenges in terms of scalability, flexibility, and efficiency. Enter Software-Defined Networking (SDN), an approach that is reshaping how data centers are built and run. In this blog post, we will explore the concept of SDN, its benefits, and its potential to change data centers as we know them.

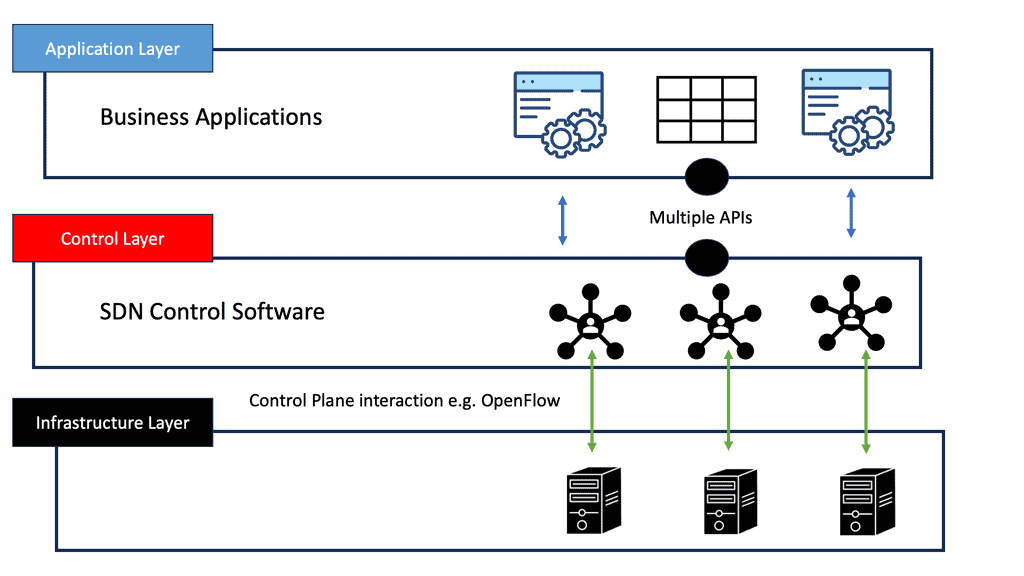

In SDN, the functions of network nodes (switches, routers, bare metal servers, etc.) are abstracted so they can be managed globally and coherently. A single controller, the SDN controller, manages the whole system by detaching each network device's decision-making part (control plane) from its operational part (data plane).

The name "Software Defined" comes from this controller, which enables "network programmability." In 2009, Stanford University and its research center (ONRC) published the first OpenFlow specifications, one of the protocols used by SDN controllers. The Open Networking Foundation (ONF) was then founded in March 2011 to promote the concept and the development of OpenFlow.

Traditional data center networks often face challenges such as complex configurations, limited scalability, and lack of agility. SDN technology addresses these issues by introducing a software-based approach to network management. With SDN, data center operators can automate network provisioning, streamline operations, and achieve greater scalability. Moreover, SDN enables network virtualization, allowing multiple virtual networks to coexist on a shared physical infrastructure, leading to improved resource utilization.

Security is a top priority for data centers, and SDN brings notable advancements in this domain. With its centralized control, SDN provides a holistic view of the network, enabling enhanced security policies and threat detection mechanisms. By dynamically allocating resources and isolating traffic, SDN mitigates potential security breaches. Additionally, SDN facilitates network resilience through features like automatic traffic rerouting, load balancing, and real-time network monitoring.

The applications of SDN in data centers are vast and varied. One notable use case is network virtualization, which allows data center operators to create isolated virtual networks for different tenants or applications. This enhances resource allocation and provides better network performance. SDN also enables efficient load balancing across servers, optimizing resource utilization and improving application delivery. Furthermore, SDN facilitates the deployment of network services, such as firewalls and intrusion detection systems, in a more agile and scalable manner.

Matt Conran

Highlights: SDN Data Center

**The Architecture of SDN**

– At the heart of SDN lies its unique architecture, which comprises three main components: the application layer, the control layer, and the infrastructure layer. The application layer is responsible for delivering network services to the users. The control layer, often referred to as the SDN controller, acts as the brain of the network, making intelligent decisions and managing data flow.

– Finally, the infrastructure layer consists of the physical network devices that execute the commands of the SDN controller. This separation of roles allows for unprecedented control over the network, optimizing performance and resource allocation.

**Benefits of Implementing SDN in Data Centers**

– One of the most significant advantages of SDN is its ability to enhance network agility and flexibility. With SDN, network administrators can programmatically manage, configure, and optimize network resources in real-time. This leads to improved efficiency and reduced operational costs.

– Additionally, SDN supports automation, which minimizes human intervention and the potential for error. It also bolsters security by enabling faster detection and mitigation of threats through centralized control.

**Challenges Faced in SDN Deployment**

– Despite its numerous benefits, the deployment of SDN in data centers is not without challenges. The transition from traditional networking to SDN requires significant investment in both time and resources. There is also a steep learning curve associated with understanding and implementing SDN technologies.

– Furthermore, interoperability with existing systems can pose issues, necessitating careful planning and execution. Organizations must weigh these factors against the potential long-term gains of adopting SDN.

What is SDN:

With SDN, the functions of network nodes (switches, routers, bare-metal servers, etc.) are abstracted, which allows them to be managed globally and coherently. An SDN controller manages the entire system by separating each device's decision-making part (the control plane) from its operational part (the data plane).

“Network programmability” is enabled by the software-defined controller. The first OpenFlow specifications came out of Stanford University and its research center (ONRC) in 2009, and OpenFlow became one of the protocols used by SDN controllers. March 2011 saw the founding of the Open Networking Foundation (ONF), a non-profit organization dedicated to promoting and developing OpenFlow.

Why do we need it?

IT teams are responsible for building and managing IT infrastructure and applications, but they should also serve key business drivers for their organization, such as these:

- Affordability

- Growth

- Adaptability

- Ability to scale

- A secure environment.

As we know, non-SDN networks in the data center space have many drawbacks and present many operational challenges to modern IT infrastructures. In addition to these challenges, organisations from diverse industries raised new demands for SDN.

Google Cloud Data Centers

What is Google Network Connectivity Center?

Google Network Connectivity Center (NCC) is a comprehensive network management solution designed to unify and simplify the connectivity experience. It serves as a centralized hub for managing and orchestrating network connectivity, providing a holistic view of an organization’s network. By leveraging NCC, businesses can ensure efficient and secure data flow between their on-premises infrastructure, cloud environments, and remote locations.

#### Centralized Management

One of the standout features of NCC is its centralized management capability. It allows network administrators to monitor and control multiple network connections from a single interface. This centralization reduces complexity and enhances operational efficiency, making it easier to identify and resolve connectivity issues swiftly.

#### Automation and Orchestration

NCC integrates powerful automation and orchestration tools, which streamline network operations. Automated workflows can be configured to handle routine tasks, reducing the manual effort required and minimizing the risk of human error. This ensures that network operations remain consistent and reliable.

#### Enhanced Security

Security is a top priority for any network management solution, and NCC is no exception. It offers robust security features such as encryption, access control, and threat detection. These features help safeguard the integrity and confidentiality of data as it moves across different network segments.

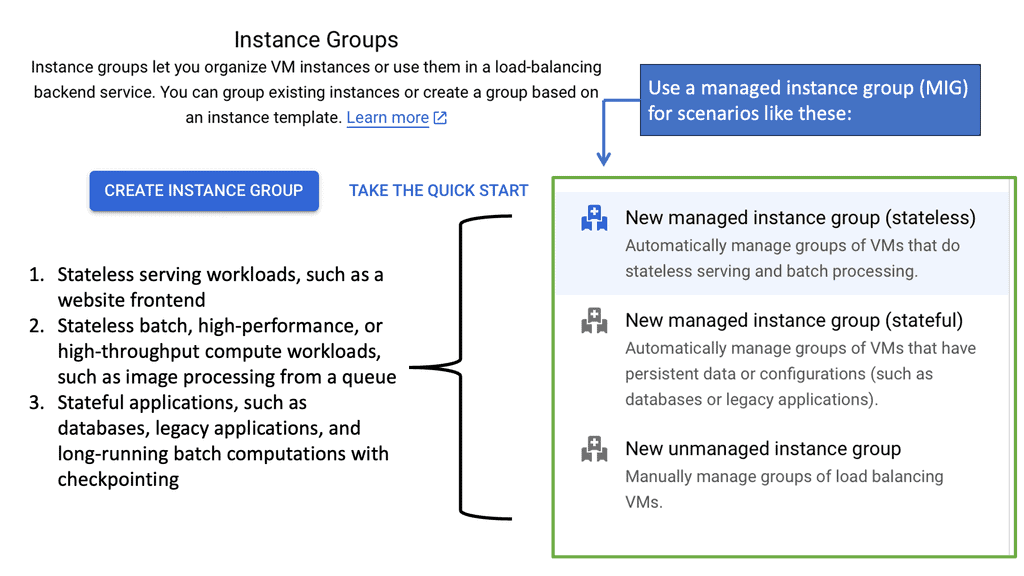

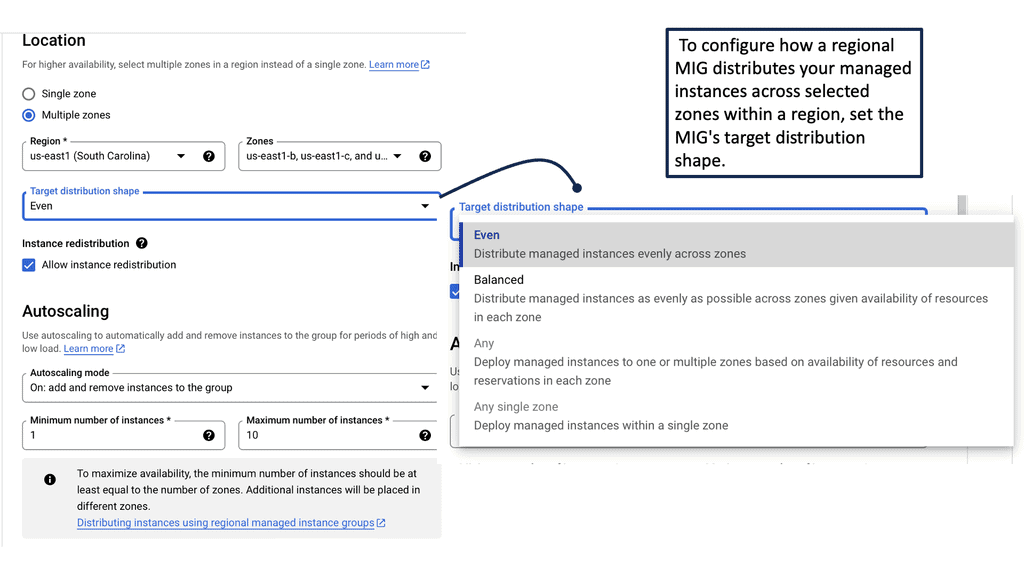

**What Are Managed Instance Groups?**

Managed Instance Groups are a powerful feature of Google Cloud that allows you to manage a group of identical virtual machine (VM) instances. These groups are designed to provide automated, scalable, and resilient VM operations. By using templates, you can define configurations for your instances, ensuring consistency and control across your infrastructure. Whether you’re running a web application or a large-scale computational workload, MIGs can help you maintain optimal performance and availability.

**The Benefits of Using Managed Instance Groups**

One of the primary benefits of Managed Instance Groups is their ability to automatically scale your infrastructure based on demand. This means you can dynamically add or remove instances in response to traffic patterns, reducing costs during low-demand periods and ensuring capacity during peak times. Additionally, MIGs integrate with Google Cloud load balancing, which distributes incoming traffic across your instances and enhances application reliability and performance.
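To make demand-based scaling more concrete, here is a minimal sketch of a proportional scaling rule. The function name `recommended_size`, the formula, and the default bounds are assumptions for illustration only; this is not Google Cloud's actual autoscaler logic.

```python
import math


def recommended_size(current_size: int, avg_utilization_pct: int,
                     target_utilization_pct: int,
                     min_size: int = 1, max_size: int = 10) -> int:
    """Hypothetical rule: scale the group in proportion to load vs. target."""
    if current_size <= 0 or target_utilization_pct <= 0:
        raise ValueError("current size and target utilization must be positive")
    # Enough instances so that average utilization falls back to the target.
    raw = math.ceil(current_size * avg_utilization_pct / target_utilization_pct)
    # Clamp to the configured bounds of the group.
    return max(min_size, min(max_size, raw))


# Four instances running at 90% against a 60% target: grow to six.
print(recommended_size(4, 90, 60))
```

The same rule shrinks the group when utilization drops well below the target, which is where the cost savings during quiet periods come from.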

**How to Set Up Managed Instance Groups on Google Cloud**

Setting up a Managed Instance Group in Google Cloud is straightforward. First, you’ll need to create an instance template, which specifies the machine type, image, and other instance properties. Then, you can create a Managed Instance Group using this template, defining parameters such as the number of instances and the scaling policy. Google Cloud provides an intuitive interface and comprehensive documentation to guide you through this process, making it accessible even for those new to cloud computing.

**Best Practices for Optimizing Managed Instance Groups**

To get the most out of your Managed Instance Groups, it’s essential to follow best practices. Start by defining clear scaling policies that align with your application’s needs. Regularly update your instance templates to incorporate the latest software updates and patches. Additionally, monitor your instance group’s performance using Google Cloud’s monitoring tools, allowing you to make data-driven decisions and optimize resource allocation.

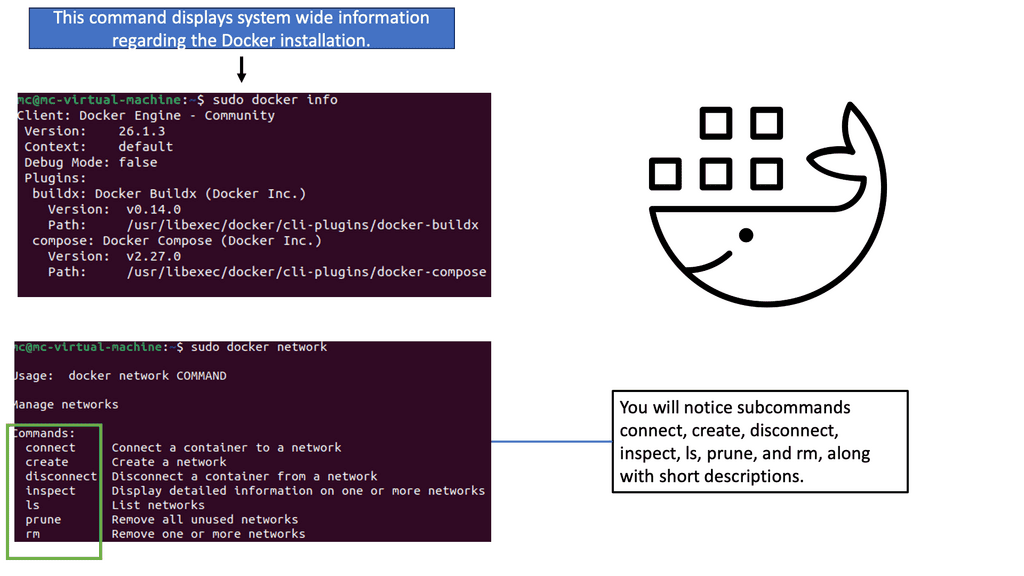

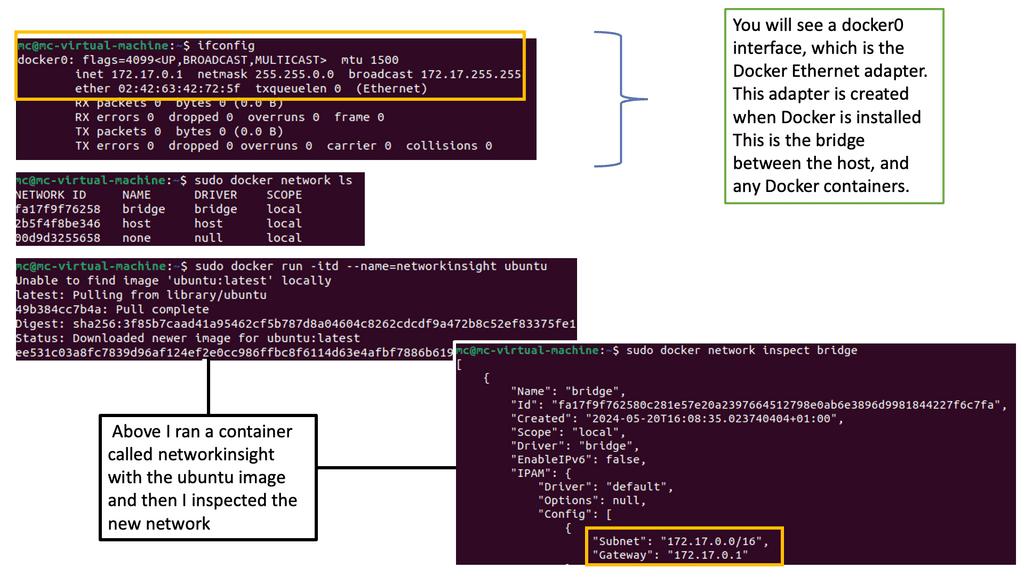

Understanding Container Networking Fundamentals

Container networking revolves around enabling communication between containers, as well as establishing connections with external networks. It involves various components such as virtual bridges, network namespaces, and IP routing. By understanding these fundamentals, developers and system administrators can harness the full potential of container networking to create robust and scalable applications.

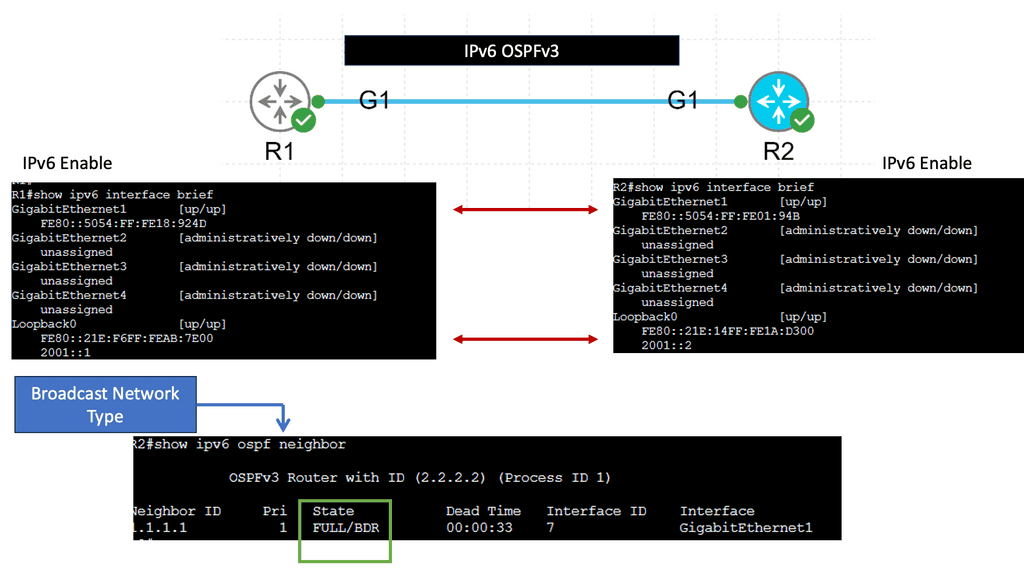

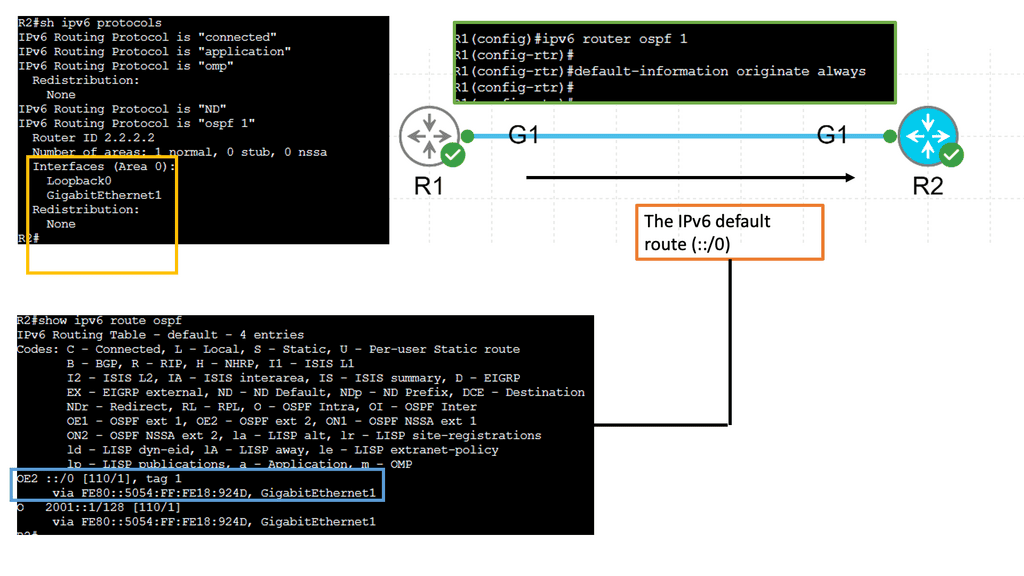

Example IPv6: SDN Data Center

OSPFv3, which stands for Open Shortest Path First version 3, is an enhanced version of OSPF designed specifically for IPv6 networks. It serves as a dynamic routing protocol that enables routers to exchange information and determine the most efficient paths for packet forwarding. Unlike its predecessor, OSPFv2, OSPFv3 fully supports the IPv6 addressing scheme, making it an essential component of modern network infrastructures.

One notable feature of OSPFv3 is its support for multiple address families, allowing for the simultaneous routing of IPv6, IPv4, and other address families. This flexibility is crucial in transitioning networks from IPv4 to IPv6 while ensuring backward compatibility. Furthermore, OSPFv3 utilizes link-local IPv6 addresses for neighbor discovery and communication, simplifying configuration and improving network scalability.
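As a small illustration of the link-local point, the sketch below uses Python's standard `ipaddress` module to check whether an address could serve as an OSPFv3 neighbor source address. The helper name `is_link_local_v6` and the sample addresses are illustrative assumptions.

```python
import ipaddress


def is_link_local_v6(addr: str) -> bool:
    """True only for IPv6 link-local addresses (fe80::/10)."""
    ip = ipaddress.ip_address(addr)
    return ip.version == 6 and ip.is_link_local


for candidate in ("fe80::1", "2001:db8::1", "169.254.1.1"):
    print(candidate, is_link_local_v6(candidate))
```

Because every IPv6 interface has a link-local address automatically, adjacencies can form before any global addressing is planned.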

**The Value of SDN**

Beyond the OpenFlow protocol itself, software-defined networking (SDN) represents a broader paradigm shift. In the last few years, the idea of separating the data plane, which runs in hardware ASICs on network switches, from the control plane, which runs on a central controller, has gained traction. This effort aims to develop standardized APIs, such as OpenFlow, that expose rich functionality from the hardware to the controller. For an entire data center cluster made up of different types of switches to be uniformly programmed to enforce a specific policy, SDN needs programmatic interfaces that switch vendors support. At its simplest, the data plane acts as a set of “dumb” devices that merely program their hardware based on the controller’s directions.

- SDN Controllers

SDN controllers serve as the brains of an SDN data center. They are responsible for managing and orchestrating network traffic flow. Through a centralized control plane, SDN controllers provide a unified network view, allowing administrators to implement policies, configure devices, and monitor traffic. These controllers are the driving force behind the agility and programmability offered by SDN data centers.

- OpenFlow Protocol

The OpenFlow protocol is at the heart of SDN data centers. It enables communication between the SDN controller and network devices such as switches and routers. By separating the control plane from the data plane, OpenFlow allows administrators to control network traffic flow directly, making it easier to implement dynamic and granular network policies. The protocol facilitates the flexibility and adaptability of SDN data centers.

- SDN Switches

SDN switches play a crucial role in SDN data centers by forwarding network packets based on instructions received from the SDN controller. These switches are programmable and provide a level of intelligence that traditional switches lack. SDN switches can implement traffic engineering, Quality of Service (QoS) policies, and security measures. Their programmability and centralized management make SDN switches an integral part of SDN data centers.

- Network Virtualization

One of the critical advantages of SDN data centers is network virtualization. By abstracting the underlying physical network infrastructure, SDN enables the creation of virtual networks. These virtual networks can be customized, isolated, and securely provisioned, providing flexibility and scalability to meet the dynamic demands of modern applications. Network virtualization is a game-changer for SDN data centers, offering enhanced resource utilization and simplified network management.
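The controller and switch roles described above can be sketched as a toy model: a controller pushes match/action rules, and each switch only looks packets up in its flow table. The class names, field names, and action strings here are hypothetical and only loosely inspired by OpenFlow; they are not the protocol's actual message format.

```python
from dataclasses import dataclass, field


@dataclass
class FlowRule:
    priority: int
    match: dict   # header fields that must all match, e.g. {"dst_ip": "10.0.0.2"}
    action: str   # illustrative action string, e.g. "output:2" or "drop"


@dataclass
class Switch:
    name: str
    flow_table: list = field(default_factory=list)

    def install(self, rule: FlowRule) -> None:
        # Keep the table ordered so the highest priority is checked first.
        self.flow_table.append(rule)
        self.flow_table.sort(key=lambda r: r.priority, reverse=True)

    def forward(self, packet: dict) -> str:
        for rule in self.flow_table:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return "send_to_controller"  # table miss: ask the control plane


class Controller:
    """Central control plane: one policy, pushed to every switch."""

    def __init__(self, switches):
        self.switches = list(switches)

    def push_policy(self, rule: FlowRule) -> None:
        for switch in self.switches:
            switch.install(rule)


leaf1, leaf2 = Switch("leaf1"), Switch("leaf2")
controller = Controller([leaf1, leaf2])
controller.push_policy(FlowRule(10, {"dst_ip": "10.0.0.2"}, "output:2"))
controller.push_policy(FlowRule(100, {"dst_ip": "10.0.0.2", "tcp_dst": 23}, "drop"))
print(leaf1.forward({"dst_ip": "10.0.0.2", "tcp_dst": 23}))
```

The switches hold no policy logic of their own, which is the sense in which the data plane is "dumb": changing behavior means changing what the controller pushes.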

**Scalability**

As server port density increased, data centers grew faster than traditional networks could keep up. Limits on the number of MAC addresses, links left inactive, and constraints on transporting multicast streams all became obstacles. As needs evolved, the ability to grow the infrastructure became more than a “nice to have.” With SDN controllers and standardized off-the-shelf switches, adding new switches and configuring them became quick and easy.

To maximize throughput, all links on the switches must be utilized. Local networks have long relied on spanning trees, which block some links to prevent loops. With the phenomenal growth of server density, this limitation has been addressed with multipathing techniques such as Multi-Chassis EtherChannel (MEC) and ECMP (Equal Cost Multi-Path) in CLOS architectures.
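A minimal sketch of how ECMP can spread flows over equal-cost links: hash the flow's 5-tuple and use the result to pick a next hop. Real switches use vendor-specific hardware hash functions; SHA-256 and the name `ecmp_next_hop` are stand-ins chosen for illustration.

```python
import hashlib


def ecmp_next_hop(flow: tuple, next_hops: list) -> str:
    """Pick one equal-cost next hop for a flow (src, dst, proto, sport, dport)."""
    if not next_hops:
        raise ValueError("no next hops available")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    # Same flow -> same hash -> same link, so packets stay in order.
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


spines = ["spine1", "spine2", "spine3", "spine4"]
flow = ("10.0.1.10", "10.0.2.20", "tcp", 49152, 443)
print(ecmp_next_hop(flow, spines))
```

Hashing per flow rather than per packet keeps each conversation on one path while different conversations land on different links.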

Virtualization is one of the abstraction capabilities brought by SDN. Compute and storage on servers were virtualized first, and the network industry followed with its own virtualization movement, running multiple isolated virtual networks on shared infrastructure. SDN has since been developed in several variants at different layers.

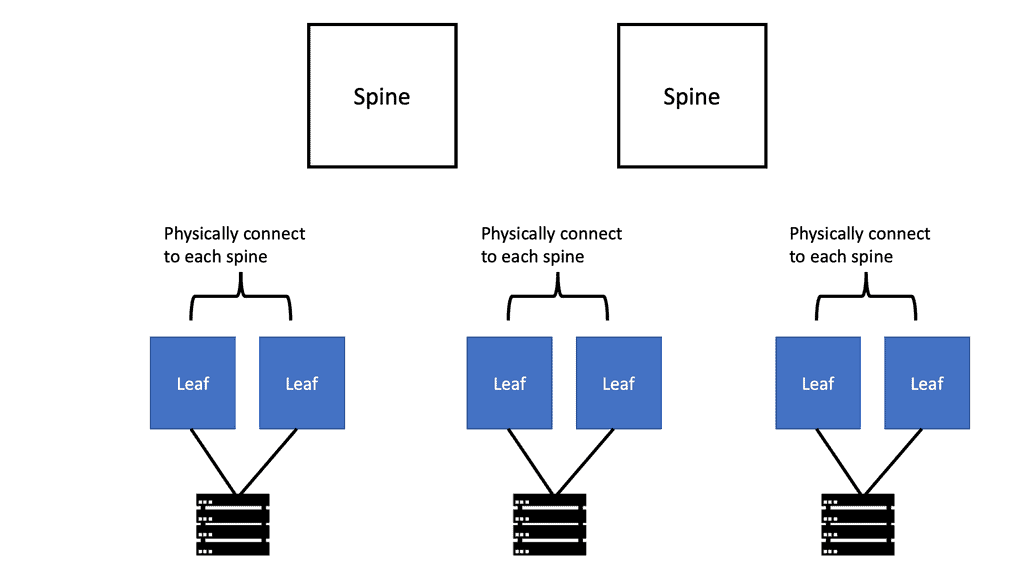

CLOS-based architectures

In recent years, high-speed network switches have made CLOS-based architectures extremely popular. The CLOS topology has a simple rule: switches at tier x should only be connected to switches at tier x-1 and x+1 and never to other switches at the same tier. In this topology, redundancy provides high resilience, fault tolerance, and traffic load sharing.

Due to the many redundant paths between any two switches, network resources can be utilized efficiently. CLOS-based architectures can be built with little or no oversubscription, and the resulting high bisection bandwidth may be advantageous for some applications. Additionally, the relatively simple topology removes the need for the separate core and aggregation layers inherent in traditional three-tier architectures, which helps when troubleshooting traffic.
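The tier rule and the oversubscription idea can be checked with a little arithmetic. The sketch below builds the links of a two-tier leaf-spine fabric and computes a leaf's oversubscription ratio; the function names and the port counts and speeds are assumed example values.

```python
def leaf_spine_links(leaves: list, spines: list) -> list:
    """Every leaf connects to every spine, never to another leaf."""
    return [(leaf, spine) for leaf in leaves for spine in spines]


def oversubscription_ratio(downlinks: int, downlink_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Server-facing bandwidth divided by fabric-facing bandwidth on one leaf."""
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)


links = leaf_spine_links(["leaf1", "leaf2", "leaf3"], ["spine1", "spine2"])
print(len(links))                              # 3 leaves x 2 spines = 6 links
print(oversubscription_ratio(48, 10, 6, 40))   # 480G down / 240G up = 2.0
```

A ratio of 1.0 means the leaf can forward all server traffic into the fabric at line rate; anything above that is oversubscribed by that factor.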

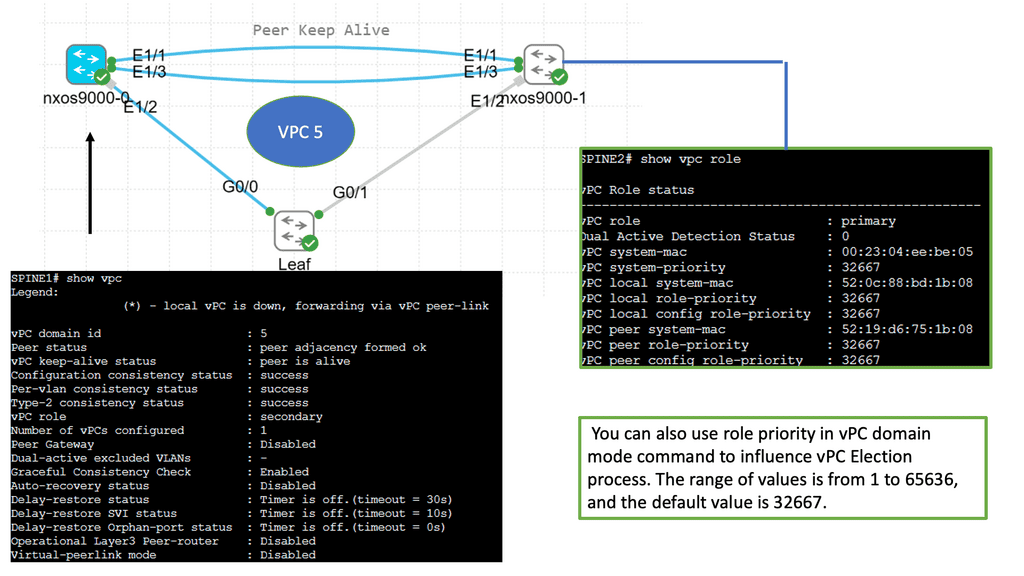

Example Technology: Nexus and VPC

Understanding Nexus Virtual Port Channel

At its core, Nexus vPC is a feature that allows two Nexus switches to appear as a single logical entity to a connected device. This enables redundancy, load balancing, and seamless failover. A port channel that spans both switches lets them share the traffic load and act as a unified system. This avoids the blocked links that Spanning Tree Protocol would otherwise impose and improves performance and resiliency.

The benefits of deploying Nexus vPC are manifold. First and foremost, it enhances network availability by providing active-active links between switches. In the event of a link failure, traffic seamlessly fails over to the remaining links, minimizing downtime. Additionally, vPC enables load balancing across the links, optimizing bandwidth utilization and improving overall network performance. This feature is particularly valuable in data centers with high traffic demands.

What problems do we have, and what are we doing about them? Ask yourself: Are data centers ready and available for today’s applications and tomorrow’s emerging data center applications? Businesses and applications are putting pressure on networks to change, ushering in a new era of data center design. From 1960 to 1985, we started with mainframes and supported a customer base of about one million users.

Example: ACI Cisco

Cisco ACI, short for Application Centric Infrastructure, is a software-defined networking (SDN) solution developed by Cisco Systems. It provides a holistic approach to managing and automating network infrastructure, bringing agility, scalability, and security together in one framework.

Cisco ACI combines physical and virtual elements, creating a unified and programmable network fabric that simplifies operations and accelerates application deployment. By abstracting network policies from the underlying infrastructure, it enables policy-driven automation and policy-based security across the entire network.

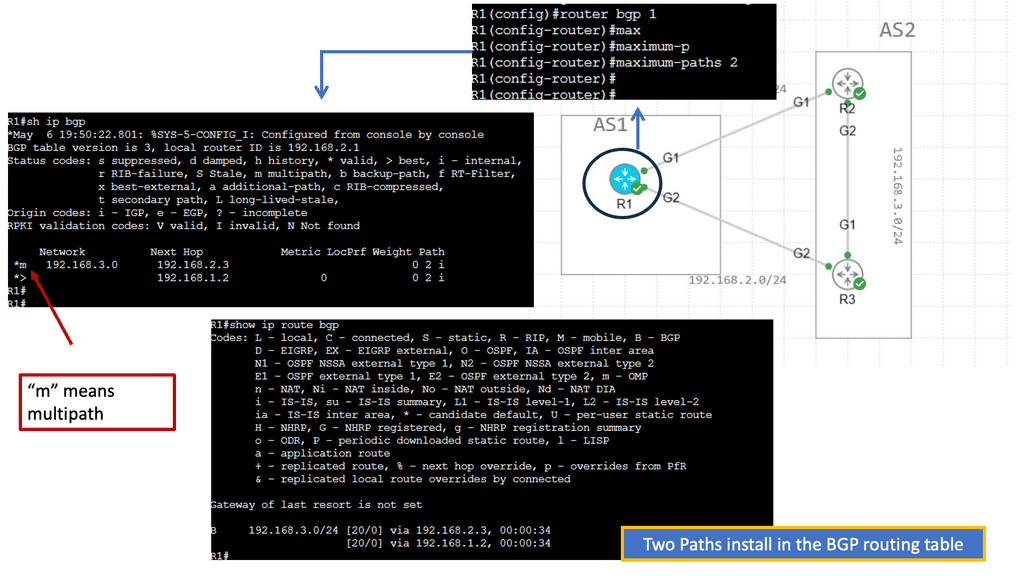

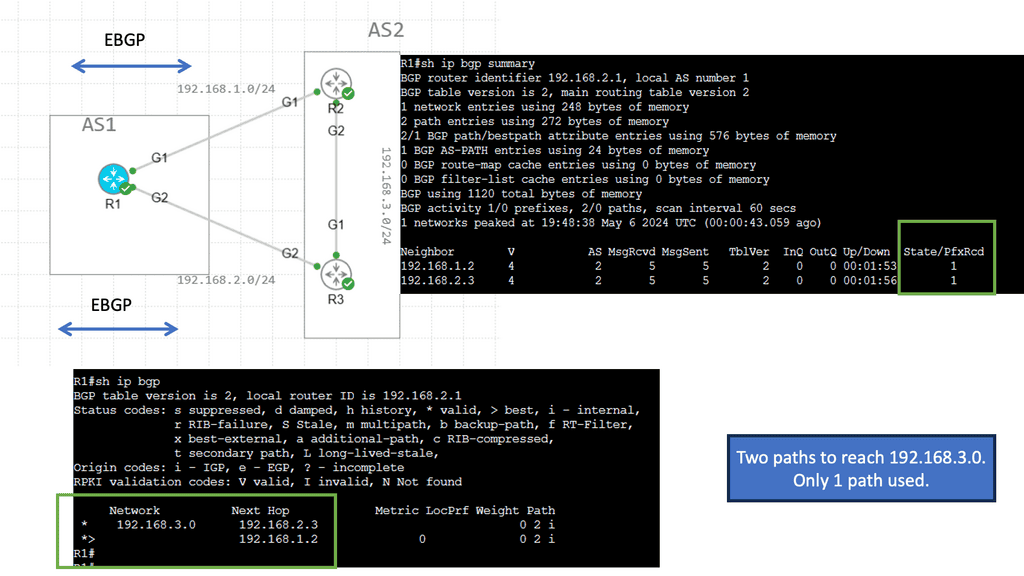

Example Technology: BGP in the data center

Understanding BGP Multipath

BGP Multipath is a feature that enables the installation of multiple paths for the same destination prefix in the routing table. By default, BGP selects a single best path per prefix; with BGP Multipath, several equally good paths can be used simultaneously. This feature enhances network resiliency, load balancing, and routing efficiency.

Load Balancing: BGP Multipath distributes traffic across multiple paths, preventing congestion on a single path and optimizing bandwidth utilization. This load-balancing mechanism enhances network performance and reduces bottlenecks.

Fault Tolerance: BGP Multipath increases network resilience and fault tolerance by providing redundancy. In the event of a link failure or congestion, traffic can be rerouted through the alternative paths, maintaining connectivity.

Improved Convergence: Because multiple paths are already installed, traffic can keep flowing over the remaining paths when one fails, without waiting for a new best path to be selected. This improves network responsiveness.
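The selection idea can be sketched as follows: rank the candidate paths, then keep every path that ties with the best one, up to a configured maximum. This is a deliberately simplified model. Real BGP best-path selection has more steps (origin, eBGP versus iBGP, IGP metric, and others), and the `Path` fields and `max_paths` default here are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    next_hop: str
    local_pref: int
    as_path_len: int
    med: int


def _rank(path: Path) -> tuple:
    # Higher local preference wins, then shorter AS path, then lower MED.
    return (-path.local_pref, path.as_path_len, path.med)


def multipath_set(paths: list, max_paths: int = 4) -> list:
    """Return all paths that tie with the best path, capped at max_paths."""
    if not paths:
        return []
    best = min(_rank(p) for p in paths)
    return [p for p in paths if _rank(p) == best][:max_paths]


candidates = [
    Path("10.0.0.1", 100, 2, 0),
    Path("10.0.0.2", 100, 2, 0),
    Path("10.0.0.3", 100, 3, 0),   # longer AS path: not equal cost
]
print([p.next_hop for p in multipath_set(candidates)])
```

With `max_paths=1` the function degenerates to ordinary single best-path behavior, which mirrors how the feature is typically an opt-in setting.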

Security in SDN Data Centers

Example Technology: Nexus and MAC ACLs

Understanding MAC ACLs

MAC ACLs, or Media Access Control Access Control Lists, are powerful tools that allow network administrators to filter traffic based on source or destination MAC addresses. By defining specific rules, administrators can permit or deny traffic at Layer 2 and enhance network security and performance.

Nexus 9000 MAC ACLs offer several advantages over traditional access control methods. Firstly, they provide granular control at the MAC address level, enabling administrators to restrict or allow access to specific devices. Additionally, MAC ACLs can be dynamically applied to VLANs, making them highly scalable and adaptable to evolving network environments.

Configuring MAC ACLs on the Nexus 9000 is straightforward. Administrators can define ACL rules using the command-line interface (CLI) or the graphical user interface (GUI). By specifying the MAC addresses, action (permit/deny), and optional parameters, administrators can create custom access control policies tailored to their network requirements.
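To show how such rules are evaluated, here is a minimal model of a MAC ACL: rules are checked in order, the first match decides, and anything unmatched is denied. This is a conceptual sketch in Python, not Nexus CLI syntax; the class and function names are hypothetical, and wildcard masks are left out for brevity.

```python
from dataclasses import dataclass


def normalize_mac(mac: str) -> str:
    """Strip separators so 00:11:22:33:44:55 and 0011.2233.4455 compare equal."""
    digits = "".join(ch for ch in mac.lower() if ch in "0123456789abcdef")
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return digits


@dataclass(frozen=True)
class MacAclRule:
    action: str        # "permit" or "deny"
    src: str = "any"   # a MAC address, or "any"
    dst: str = "any"

    def matches(self, src_mac: str, dst_mac: str) -> bool:
        pairs = ((self.src, src_mac), (self.dst, dst_mac))
        return all(want == "any" or normalize_mac(want) == normalize_mac(got)
                   for want, got in pairs)


def evaluate(rules: list, src_mac: str, dst_mac: str) -> str:
    for rule in rules:               # first matching rule wins
        if rule.matches(src_mac, dst_mac):
            return rule.action
    return "deny"                    # implicit deny at the end of the list


acl = [MacAclRule("deny", src="00:11:22:33:44:55"), MacAclRule("permit")]
print(evaluate(acl, "0011.2233.4455", "aa:bb:cc:dd:ee:ff"))
```

Rule order matters: placing the catch-all permit first would make the deny rule unreachable.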

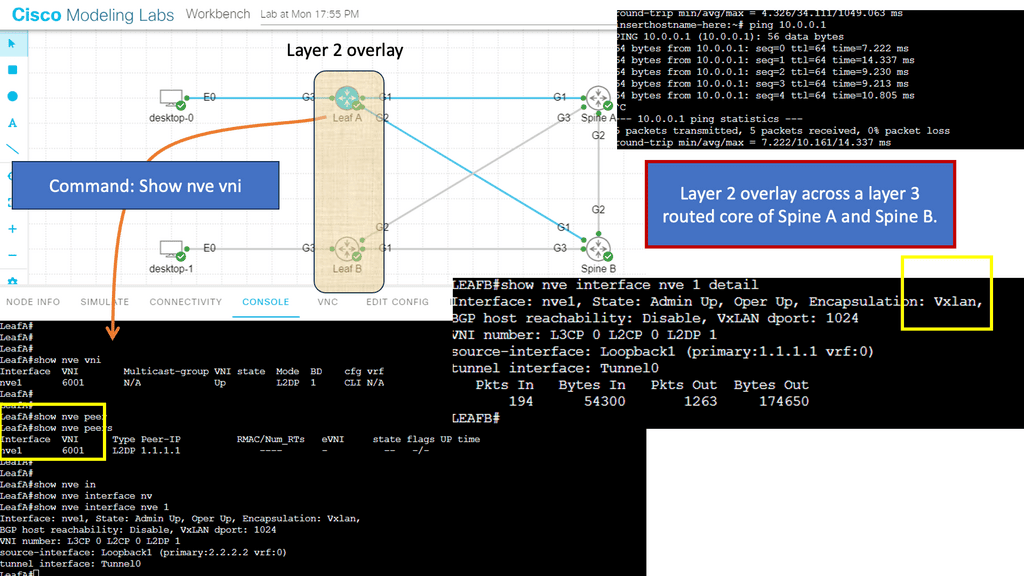

VXLAN Overlays

**Scalability and Agility**

With the increasing demands of modern business applications, scalability and agility are paramount. Cisco ACI offers a highly scalable architecture that can adapt to changing network requirements. By leveraging a spine-leaf topology and VXLAN overlays, Cisco ACI provides a flexible and scalable foundation that can seamlessly grow to accommodate evolving business needs.

VXLAN, at its core, is an encapsulation protocol that enables the creation of virtualized networks over existing Layer 3 infrastructure. It extends Layer 2 segments over Layer 3 networks, facilitating scalable and flexible network virtualization. By using a 24-bit VXLAN Network Identifier (VNI), it overcomes the ID limit of traditional VLANs, allowing a far larger number of virtual networks to coexist.

**Benefits of VXLAN**

- Enhanced Scalability and Flexibility: VXLAN addresses the limitations of VLANs, which are restricted to a maximum of 4096 unique IDs by the 12-bit VLAN ID field. With VXLAN's 24-bit identifier, the pool of available IDs expands to roughly 16 million virtual networks. This scalability empowers organizations to meet the demands of modern applications and dynamic workloads.

- Improved Network Segmentation: VXLAN enables efficient network segmentation by isolating traffic within virtual networks. This segmentation enhances security, simplifies network management, and provides a more robust framework for multi-tenancy environments. By leveraging VXLAN, organizations can better control and isolate their network traffic.

- Seamless Network Extension and Migration: VXLAN facilitates seamless network extension and migration across data centers, campuses, or cloud environments. By encapsulating Layer 2 frames within Layer 3 packets, VXLAN enables the creation of virtual networks that span geographically dispersed locations. This capability simplifies workload mobility, disaster recovery, and data center consolidation efforts.
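The ID-space difference is easy to see in the header itself. The sketch below packs and parses the 8-byte VXLAN header as laid out in RFC 7348 (one flags byte, 24 reserved bits, a 24-bit VNI, 8 reserved bits). The function names are illustrative, and the outer Ethernet, IP, and UDP headers are omitted.

```python
import struct

VNI_VALID_FLAG = 0x08      # the "I" bit: VNI field is valid
MAX_VNI = 2**24 - 1        # 24-bit identifier


def build_vxlan_header(vni: int) -> bytes:
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags in the top byte. Word 2: VNI in the top three bytes.
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VNI_VALID_FLAG:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


header = build_vxlan_header(5000)
print(header.hex(), parse_vni(header))
print(2**12, 2**24)   # VLAN ID space vs. VNI space
```

The header adds only 8 bytes, but together with the outer headers it increases packet size, which is why underlay MTU usually needs attention in VXLAN designs.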

Example Technology: VXLAN Flood and Learn

The Basics of Flood and Learn

As the name suggests, VXLAN Flood and Learn involves flooding network traffic to learn MAC (Media Access Control) addresses. In traditional Ethernet networks, switches use MAC address tables to determine the destination of incoming frames. However, in VXLAN environments, the location of virtual machines and hosts keeps changing due to mobility and dynamic provisioning. Flood and Learn addresses this challenge by flooding frames with unknown destinations to the other tunnel endpoints in the same VXLAN segment, allowing the switches to learn which MAC addresses sit behind which endpoint.

VXLAN Flood and Learn offers several benefits and finds applications in various scenarios. One such application is in data center environments with virtualized networks. It enables seamless communication between virtual machines across different hosts without requiring manual MAC address configuration. VXLAN Flood and Learn also facilitates network mobility, making it suitable for dynamic workloads and cloud environments.
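The flood-then-learn behavior can be modeled in a few lines. In this sketch a tunnel endpoint floods to every peer in the segment when the destination MAC is unknown and sends to a single peer once the address has been learned. The `Vtep` class, its methods, and the addresses are hypothetical, and the transport (multicast or head-end replication) is not modeled.

```python
class Vtep:
    """Toy VXLAN tunnel endpoint with a per-segment MAC table."""

    def __init__(self, ip: str, flood_list: dict):
        self.ip = ip
        self.flood_list = flood_list   # VNI -> list of remote endpoint IPs
        self.mac_table = {}            # (VNI, MAC) -> remote endpoint IP

    def learn(self, vni: int, src_mac: str, src_vtep: str) -> None:
        # Called for every received frame: remember where the sender lives.
        self.mac_table[(vni, src_mac)] = src_vtep

    def destinations(self, vni: int, dst_mac: str) -> list:
        known = self.mac_table.get((vni, dst_mac))
        if known is not None:
            return [known]                         # unicast to one endpoint
        return list(self.flood_list.get(vni, []))  # unknown: flood the segment


vtep = Vtep("10.1.1.1", {5000: ["10.1.1.2", "10.1.1.3"]})
print(vtep.destinations(5000, "aa:aa:aa:aa:aa:01"))   # flooded to both peers
vtep.learn(5000, "aa:aa:aa:aa:aa:01", "10.1.1.3")
print(vtep.destinations(5000, "aa:aa:aa:aa:aa:01"))   # now a single peer
```

If a virtual machine moves, the next frame it sends simply overwrites the table entry, which is how this approach copes with mobility without manual configuration.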

Example: Software-defined data centers

To offer computing and network services to many clients, software-defined data centers (SDDCs) use virtualization technologies to separate hardware infrastructure into virtual machines. All computing, storage, and networking resources can be abstracted and represented as software in a virtualized data center. If those resources are sold as a service, anybody can access them.

SDDCs include software-defined networking (SDN) and virtual machines. In addition to Citrix, KVM, OpenDaylight, OpenStack, OpenFlow, Red Hat, and VMware, many other open and proprietary software platforms exist for virtualizing computing resources.

The advantage of SDDC is that clients do not have to build their own infrastructure. They can meet their computing, networking, and storage needs by renting resources from the cloud. It is advantageous for software companies or service providers to have centralized data centers because they can serve many clients simultaneously. Hardware and storage costs are plummeting, a significant factor driving SDDC and cloud computing. Infrastructure as a Service (IaaS) becomes more economical as these resources become cheaper, making it more advantageous to build data centers on a large scale.

Example: Open Networking Foundation

We also have the Open Networking Foundation (ONF), which leverages SDN principles, employs open-source platforms, and defines standards to build and operate open networking. The ONF’s portfolio includes several areas, such as mobile, broadband, and data centers running on white box hardware.

Recap on SDN Principles

SDN Defined:

SDN is an innovative approach to networking that separates the control plane from the data plane, providing a centralized and programmable network architecture. SDN enables dynamic and agile network management by decoupling network control and forwarding functions.

1. Centralized Control:

SDN leverages a central controller that acts as the brain of the network, making intelligent decisions about traffic forwarding, network policies, and resource allocation. This centralized control enhances network visibility and simplifies management tasks.

At its core, SDN centralized control refers to a network architecture in which a central controller governs the behavior of the entire network. Unlike traditional networking models, where intelligence is distributed across different network devices, SDN Centralized Control consolidates control into a single entity. This central controller acts as the brain of the network, making global decisions and orchestrating network flows.

SDN Centralized Control offers many advantages. First, it gives network administrators a holistic view of the entire network, simplifying management and troubleshooting processes. With a centralized controller, administrators can configure and monitor network devices from a single control point, saving time and effort.

2. Programmability:

One of the critical principles of SDN is its programmability. Network administrators can dynamically control and configure the network behavior by utilizing open interfaces and standard protocols like OpenFlow. This programmability empowers network operators to tailor the network to specific needs and applications.

SDN programmability is the ability to control and manipulate network behavior through software-based programming interfaces. It allows network administrators to dynamically configure and manage network resources, making networks more adaptable and responsive to changing business needs. By separating the control plane from the data plane, SDN programmability enables centralized management and control of network infrastructure, leading to simplified operations and increased efficiency.

SDN programmability empowers network administrators to respond to changing demands and quickly adapt network configurations. It allows for the creation of virtual networks, enabling the seamless segmentation and isolation of network traffic. This flexibility allows organizations to optimize network resources and support diverse applications and services.

Traditionally, scaling network infrastructure has been a complex and time-consuming task. SDN programmability simplifies the scaling process by automating the provisioning and deployment of network resources. This scalability ensures that network performance remains optimal even during peak usage periods.

3. Abstraction:

SDN abstracts the underlying network infrastructure, providing a simplified and logical view of the network. By abstracting complex network details, SDN enables higher-level automation, easier troubleshooting, and more efficient resource utilization.

SDN abstraction is the process of separating the underlying network infrastructure from the control logic that governs it. By abstracting the network resources, administrators can interact with the network at a higher level of abstraction, making it easier to manage and automate complex tasks. This abstraction layer provides a simplified, centralized network view independent of the underlying hardware and protocols.

SDN abstraction offers unprecedented flexibility by decoupling network control from the underlying infrastructure. It enables dynamic control and reconfiguration of network resources, allowing for rapid adaptation to changing requirements.

With SDN abstraction, complex network configurations can be managed through a single, intuitive interface. Administrators can define network policies and services without getting involved in the low-level details of network devices.

Abstraction simplifies network management, making it easier to scale the network infrastructure. By automating tasks and reducing the manual effort required, SDN abstraction improves operational efficiency and reduces the risk of human errors.

Google Cloud Data Centers

Understanding Network Tiers

Network tiers, in simple terms, are a hierarchical structure that categorizes the quality, performance, and cost of network connections. Google Cloud offers two main tiers: Premium Tier and Standard Tier. Let’s explore each tier in detail.

The Premium Tier is designed for businesses that demand the utmost in performance, reliability, and low latency. Leveraging Google’s vast global network infrastructure, the Premium Tier ensures optimized routing, reduced congestion, and enhanced end-user experience. Whether your application requires lightning-fast response times or handles mission-critical workloads, the Premium Tier is tailored to meet your needs.

For organizations seeking a cost-effective network solution without compromising on quality, the Standard Tier is an excellent choice. With competitive pricing, this tier offers reliable connectivity while prioritizing affordability. It serves as a viable option for applications that are less latency-sensitive or require less bandwidth.

Understanding VPC Peerings

VPC Peering serves as a bridge between two VPC networks, allowing them to communicate as if they were part of the same network. It establishes a private and encrypted connection between VPC networks, ensuring data privacy and security. With VPC Peering, you can extend your network’s reach, enabling collaboration and data sharing across different VPCs.

Enhanced Security: By utilizing VPC Peerings, you can establish secure connections between VPC networks without exposing your services to the public internet. This helps mitigate potential security risks and ensures your data remains protected.

Improved Performance: VPC Peerings enable low-latency and high-throughput communication between VPC networks. This allows for faster data transfer and reduces network bottlenecks, enhancing overall application performance.

Simplified Network Architecture: VPC Peerings eliminate the need for complex VPN configurations or costly dedicated connections. They simplify your network architecture by providing seamless connections and communication between VPC networks.

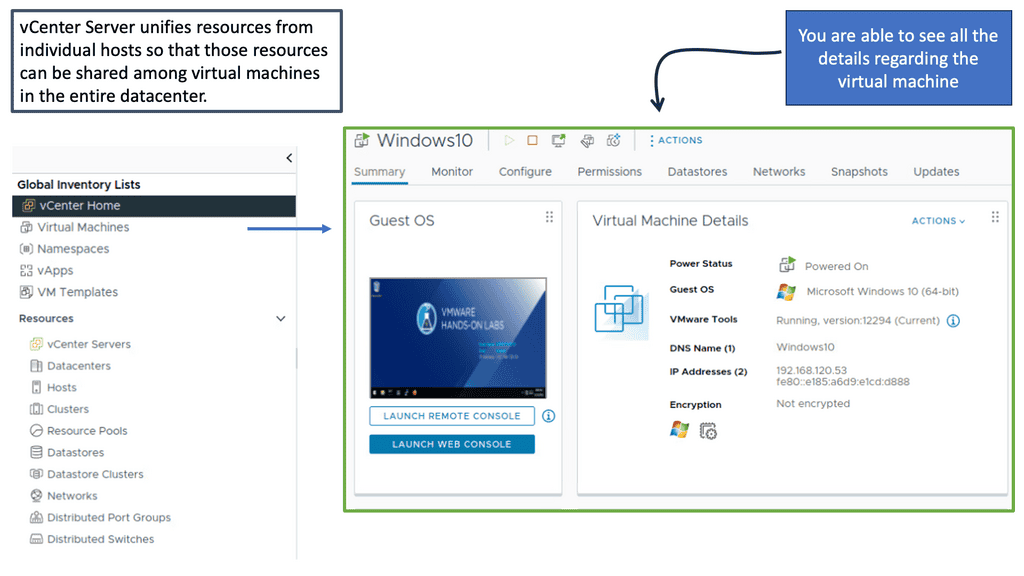

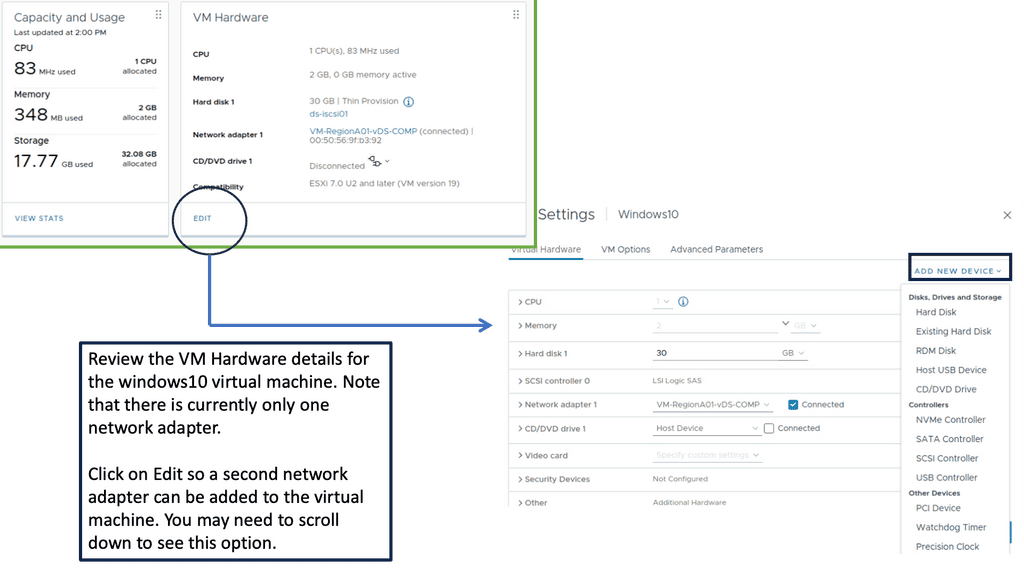

vCenter Server

**Seamless Management of Virtual Environments**

One of the most compelling features of vCenter Server is its ability to provide a single pane of glass for managing your entire virtual environment. This centralized control allows administrators to monitor resource allocation, optimize performance, and ensure high availability across multiple virtual machines (VMs). With vCenter Server, you can easily create, configure, and manage VMs, clusters, and data stores, ensuring that your infrastructure is always running smoothly.

**Enhanced Security and Compliance**

In today’s digital age, security is more critical than ever. vCenter Server includes robust security features designed to protect your virtual environment. From role-based access control (RBAC) to secure boot and encrypted vMotion, vCenter Server ensures that your data remains protected. Additionally, it offers compliance tools that help you adhere to industry standards and regulations, making it easier to pass audits and avoid potential fines.

**Automation and Orchestration**

Why spend countless hours on repetitive tasks when you can automate them? vCenter Server supports a variety of automation tools, including vRealize Orchestrator and PowerCLI, which allow you to script and automate routine operations. This not only saves time but also reduces the risk of human error, improving overall efficiency. With built-in automation features, you can schedule tasks such as VM provisioning, backups, and updates, freeing up your IT team to focus on more strategic initiatives.

**Scalability and Flexibility**

As your business grows, so does your need for a scalable and flexible IT infrastructure. vCenter Server is designed to scale seamlessly with your organization. Whether you’re managing a small cluster of VMs or an extensive data center, vCenter Server can handle it all. Its flexible architecture supports hybrid cloud environments, allowing you to extend your on-premises infrastructure to the cloud effortlessly. This scalability ensures that you can meet changing business demands without significant disruptions.


SDN Data Center

The Future of Data Centers

Exploring Software-Defined Networking (SDN)

In recent years, the rapid advancement of technology has given rise to various innovative solutions transforming how data centers operate. One such revolutionary technology is Software-Defined Networking (SDN), which has garnered significant attention and is set to reshape the landscape of data centers as we know them. In this blog post, we will delve into the fundamentals of SDN and explore its potential to revolutionize data center architecture.

SDN is a networking paradigm that separates the control plane from the data plane, enabling centralized control and programmability of network infrastructure. Unlike traditional network architectures, where network devices make independent decisions, SDN offers a centralized management approach, providing administrators with a holistic view and control over the entire network.

**The Benefits of SDN in Data Centers**

Enhanced Network Flexibility and Scalability:

SDN allows data center administrators to allocate network resources dynamically based on real-time demands. Scaling up or down becomes seamless with SDN, resulting in improved flexibility and agility. This capability is crucial in today’s data-driven environment, where rapid scalability is essential to meeting growing business demands.

Simplified Network Management:

SDN abstracts the complexity of network management by centralizing control and offering a unified view of the network. This simplification enables more efficient troubleshooting, faster service provisioning, and streamlined network management, ultimately reducing operational costs and increasing overall efficiency.

Increased Network Security:

By offering a centralized control plane, SDN enables administrators to implement stringent security policies consistently across the entire data center network. SDN’s programmability allows for dynamic security measures, such as traffic isolation and malware detection, making it easier to respond to emerging threats.

SDN and Network Virtualization:

SDN and network virtualization are closely intertwined, as SDN provides the foundation for implementing network virtualization in data centers. By decoupling network services from physical infrastructure, virtualization enables the creation of virtual networks that can be customized and provisioned on demand. SDN’s programmability further enhances network virtualization by allowing the rapid deployment and management of virtual networks.

Back to Basics: SDN Data Center

From 1985 to 2009, we moved to the personal computer, client/server model, and LAN/Internet model, supporting a customer base of hundreds of millions. Since 2009, the industry has changed completely. We have various platforms (mobile, social, big data, and cloud) with billions of users, and it is estimated that the new IT industry will be worth 4.8T. All of these are forcing us to examine the existing data center topology.

SDN data center architecture is an architectural model that adds a level of abstraction to the functions of network nodes (switches, routers, bare metal servers, etc.) so they can be managed globally and coherently. So, with an SDN topology, we have a central place to work with a disparate network of various devices and device types.

We will discuss the SDN topology in more detail shortly. At its core, SDN enables the entire network to be centrally controlled, or ‘programmed,’ using a software SDN application layer. The significant advantage of SDN is that it allows operators to manage the whole network consistently, regardless of the underlying network technology.

Statistics don’t lie.

The customer has changed and is making us change our data center topology. Content is expected to double over the next two years, and emerging markets may overtake mature markets. An estimated 5,200 GB of data per person was expected to be created in 2020. These demands and trends put a lot of strain on the network: the amount of content created, and how we serve and control it, poses new challenges to data networks.

Knowledge check: the software-defined data center market

The software-defined data center market is considerable. In terms of revenue, it was estimated at $43.178 billion in 2020 and is projected to grow to $120.3 billion by 2025, representing a CAGR of 22.4%.

Knowledge Check for SDN data center architecture and SDN Topology.

Software-Defined Networking (SDN) simplifies computer network management and operation. It is an approach to network management and architecture that enables administrators to manage network services centrally using software-defined policies. In addition, the SDN data center architecture enables greater visibility and control over the network by separating the control plane from the data plane. By managing networks centrally, administrators can control routing, traffic management, and security. With global visibility, they can create and manage network policies efficiently and quickly apply them to all devices.

The Value: SDN Topology

An SDN topology separates the control plane from the data plane that runs on the physical network devices. This allows for better network management and configuration flexibility, and a well-configured control plane can create a more efficient and scalable network.

The SDN topology has three layers: the control plane, the data plane, and the physical network. The control plane controls the data plane, which carries the data packets. The control plane is also responsible for setting up virtual networks, configuring network devices, and managing the overall SDN topology.
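To make the split concrete, here is a minimal sketch of the idea, not any real controller’s API. The `Controller` and `Switch` classes, the prefix, and the port name are all hypothetical, and the flow table does a simple exact match rather than a real longest-prefix lookup.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Data plane: forwards using only the rules it has been given."""
    name: str
    flow_table: dict = field(default_factory=dict)  # destination -> output port

    def forward(self, destination: str) -> str:
        # No local decision-making: an unknown destination is simply dropped.
        return self.flow_table.get(destination, "drop")


class Controller:
    """Control plane: holds the network-wide view and programs the switches."""

    def __init__(self) -> None:
        self.switches = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def install_rule(self, switch_name: str, destination: str, out_port: str) -> None:
        self.switches[switch_name].flow_table[destination] = out_port


controller = Controller()
leaf1 = Switch("leaf1")  # hypothetical device name
controller.register(leaf1)
controller.install_rule("leaf1", "10.0.2.0/24", "uplink1")

print(leaf1.forward("10.0.2.0/24"))  # uplink1
print(leaf1.forward("10.0.9.0/24"))  # drop
```

The switch never decides anything on its own; every forwarding decision is pushed down from the one place that sees the whole network.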

A personal network impact assessment report

I recently approved a network impact assessment for various data center network topologies. One of my customers was looking at rate-limiting the current data transfer over the WAN (Wide Area Network) to 9.5 Mbps, moving 34 GB of data in a 10-hour off-prime-time window. Due to application and service changes, this customer plans to triple that volume over the next 12 months.

The result is a WAN upgrade and a change in the scope of DR (Disaster Recovery). Big Data, Applications, Social Media, and Mobility force architects to rethink how they engineer networks. We should concentrate more on scale, agility, analytics, and management.
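A quick back-of-the-envelope check shows why tripling the volume forces the upgrade. This is a rough sketch that assumes decimal units (1 GB = 10^9 bytes) and ignores protocol overhead; the function names are mine.

```python
def transfer_hours(gigabytes: float, mbps: float) -> float:
    """Hours needed to move `gigabytes` at `mbps` (megabits per second)."""
    bits = gigabytes * 1e9 * 8
    return bits / (mbps * 1e6) / 3600


def required_mbps(gigabytes: float, window_hours: float) -> float:
    """Rate needed to move `gigabytes` within `window_hours`."""
    bits = gigabytes * 1e9 * 8
    return bits / (window_hours * 3600) / 1e6


current = transfer_hours(34, 9.5)      # today's volume at today's rate limit
tripled = transfer_hours(34 * 3, 9.5)  # tripled volume, same rate limit
needed = required_mbps(34 * 3, 10)     # rate needed to keep the 10-hour window

print(f"{current:.1f} h today, {tripled:.1f} h after tripling, {needed:.1f} Mbps needed")
```

Under these assumptions, today’s 34 GB fits in roughly 8 hours, but the tripled volume would need about 24 hours at the same rate, or close to 23 Mbps to stay inside the 10-hour window.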

SDN Data Center Architecture: The 80/20 traffic rule

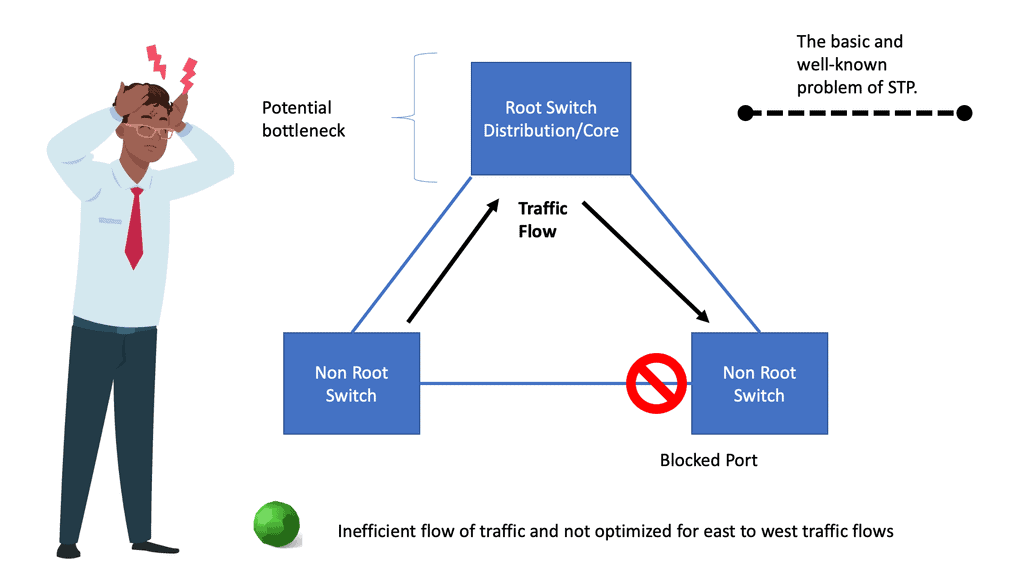

The data center design was based on the 80/20 traffic pattern rule with Spanning Tree Protocol (802.1D), where we have a root, and all bridges build a loop-free path to that root. This results in half the ports forwarding and half in a blocking state, which wastes bandwidth, even though we can load balance by forwarding one set of VLANs on one uplink and another set of VLANs on the secondary uplink.

We still face the stability and scalability problems of having large Layer 2 domains in the data center design. Spanning tree is not a routing protocol; it’s a loop prevention protocol, and because its failures can have disastrous consequences, it should be limited to small data center segments.

| SDN Data Center | Data Center Stability |
| --- | --- |
| Layer 2 to the Core layer | |
| STP blocks redundant links | |
| Manual pruning of VLANs for redundancy design | |
| Rely on STP convergence for topology changes | |
| Efficient and stable design | |

Data Center Topology: The Shifting Traffic Patterns

The traffic patterns have shifted, and the architecture needs to adapt. Before, we designed for 80% of traffic leaving the DC, while now, a lot of traffic goes east to west and stays within the DC. The original traffic pattern led us to a typical data center design with access, distribution, and core layers, based on Layer 2 leading to Layer 3 transport. The “route where you can” approach was adopted because Layer 3 adds stability to Layer 2 by controlling broadcast and flooding domains.

The most popular data center architecture in deployment today was designed around very different requirements, and the business is now looking for large Layer 2 domains to support functions such as vMotion. We need to meet the challenge of future data center applications, and as new apps come out with unique requirements, it isn’t easy to make adequate changes to the network due to the protocol stack used. One way to overcome this is with overlay networking and VXLAN.

The Issues with Spanning Tree

The problem is that we rely on spanning tree, which was useful before but is past its prime. The original author of spanning tree is now the author of TRILL (a replacement for STP). STP (Spanning Tree Protocol) was never a routing protocol to determine the best path; it was designed to provide a loop-free path. STP is also a fail-open protocol (as opposed to a Layer 3 protocol, which fails closed).

One of spanning tree’s most significant weaknesses is that it fails open. If a port does not receive a BPDU (Bridge Protocol Data Unit), the switch assumes it is not connected to another switch and starts forwarding on that port. Combining a fail-open paradigm with a flooding paradigm can be disastrous.

STP vs. Routing: Blocking Links

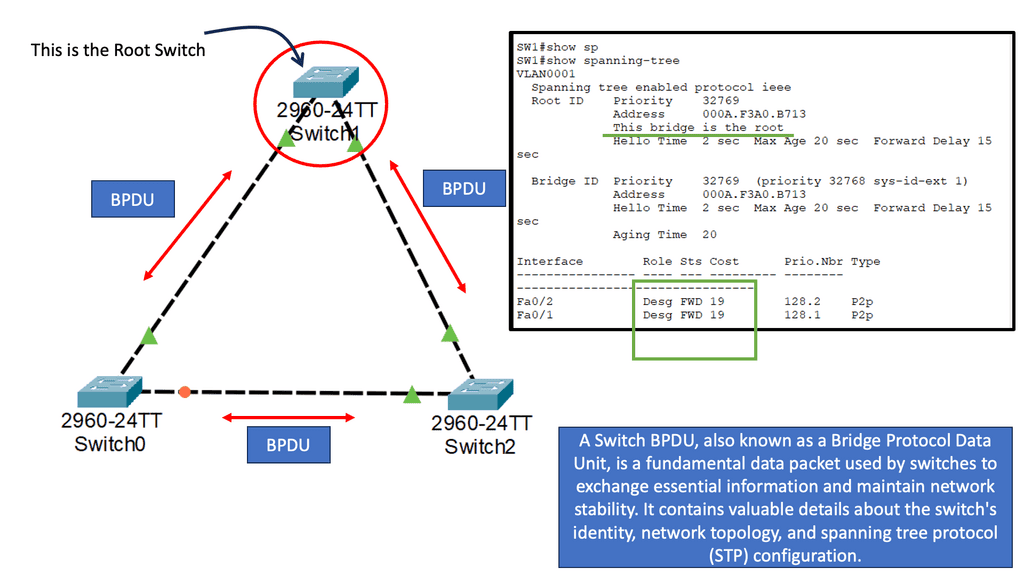

Next, let’s address the Spanning Tree Protocol on a network of 3 switches. STP is there to help, but in some cases, it blocks specific ports based on the default configuration or because the administrator forces traffic to go a certain way. Either way, you can lose bandwidth. It is easy to demonstrate this by looking at the three switches in the diagram. You would want all of these links in a forwarding state, but with STP, one of the links is blocked to prevent loops.

Since spanning tree is enabled, all our switches will send a special frame to each other called a BPDU (Bridge Protocol Data Unit). Spanning tree needs two pieces of information from this BPDU: the MAC address and the priority. Together, the MAC address and priority make up the bridge ID.

The spanning tree requires the bridge ID for its calculation. Let me explain how it works:

- First, a spanning tree will elect a root bridge; this root bridge will have the best “bridge ID.”

- The switch with the lowest bridge ID is the best one.

- The priority is 32768 by default, but we can change this value.

So, who will become the root bridge? In our example, SW1 will become the root bridge! The bridge ID is made up of priority and MAC address. Since all switches have the same priority, the MAC address will be the tiebreaker. SW1 has the lowest MAC address, thus the best bridge ID, and will become the root bridge. The ports on our root bridge are always designated, which means they are forwarding.
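The election above boils down to picking the lowest (priority, MAC address) pair. Here is a minimal sketch of that comparison; the MAC addresses are made up for illustration, and real switches also fold the VLAN ID into the priority field, which is left out here.

```python
def bridge_id(priority: int, mac: str) -> tuple:
    """Bridge ID as a comparable tuple: priority first, MAC as the tiebreaker."""
    return (priority, int(mac.replace(":", ""), 16))


def elect_root(switches: dict) -> str:
    """Return the name of the switch with the lowest bridge ID."""
    return min(switches, key=lambda name: bridge_id(*switches[name]))


# Hypothetical MAC addresses; all switches keep the default priority.
switches = {
    "SW1": (32768, "00:00:00:00:00:0a"),
    "SW2": (32768, "00:00:00:00:00:0b"),
    "SW3": (32768, "00:00:00:00:00:0c"),
}
print(elect_root(switches))  # SW1: same priority, lowest MAC wins

# Lowering the priority on SW3 overrides the MAC tiebreaker.
switches["SW3"] = (4096, "00:00:00:00:00:0c")
print(elect_root(switches))  # SW3
```

This is also why leaving every switch at the default priority is risky: the root ends up being whichever box happens to have the lowest MAC address, not the one you would choose.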

Above, you see that SW1 has been elected as the root bridge, and the “D” on the interfaces stands for designated.

Now that we have agreed on the root bridge, the next step for all our “non-root” bridges (so that’s every switch that is not the root) is to find the shortest path to the root bridge! The port that offers the shortest path to the root bridge is called the “root port.” Take a look at my example:
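As a rough sketch of root port selection on a three-switch triangle, each non-root switch can pick the port with the lowest total path cost to the root. The port names are hypothetical, 19 is the classic 802.1D cost of a 100 Mbps link, and the real protocol’s tiebreakers (sender bridge ID, port ID) are left out.

```python
import math

ROOT = "SW1"

# (switch, local port) -> (neighbor, link cost). Port names are hypothetical.
LINKS = {
    ("SW2", "Fa0/1"): ("SW1", 19),
    ("SW2", "Fa0/2"): ("SW3", 19),
    ("SW3", "Fa0/1"): ("SW1", 19),
    ("SW3", "Fa0/2"): ("SW2", 19),
}


def find_root_ports(links: dict, root: str):
    """Return ({switch: root port}, {switch: cost to root}) for non-root switches."""
    switches = {sw for sw, _ in links} | {nbr for nbr, _ in links.values()}
    cost = {sw: math.inf for sw in switches}
    cost[root] = 0
    root_port = {}
    # Repeat enough passes for costs to settle across the whole topology.
    for _ in range(len(switches)):
        for (sw, port), (nbr, link_cost) in links.items():
            if sw == root:
                continue
            candidate = cost[nbr] + link_cost
            if candidate < cost[sw]:
                cost[sw] = candidate
                root_port[sw] = port
    return root_port, cost


root_port, cost = find_root_ports(LINKS, ROOT)
print(root_port["SW2"], root_port["SW3"])  # Fa0/1 Fa0/1
```

Both SW2 and SW3 choose their direct link to SW1 (cost 19) over the detour through each other (cost 38), which leaves the SW2 to SW3 link as the candidate for blocking.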

VPC for Nexus Data Centers

Port States:

If you have played with some Cisco switches before, you might have noticed that every time you plugged in a cable, the LED above the interface was orange and, after a while, became green. What is happening at this moment is that the spanning tree is determining the state of the interface; this is what happens as soon as you plug in a cable:

- The port is in listening mode for 15 seconds. In this phase, it will receive and send BPDUs but not learn MAC addresses or transmit data.

- The port is in learning mode for 15 seconds. We are still sending and receiving BPDUs, but now the switch will also learn MAC addresses. There is still no data transmission, though.

- Now we go into forwarding mode, and finally, we can transmit data!
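The timeline above can be sketched as a small lookup. It uses the 15-second timers from the list and ignores variants such as Rapid Spanning Tree or edge-port features; the function and table names are mine.

```python
FORWARD_DELAY = 15  # seconds spent in each of listening and learning

# What a port does in each state, as described in the list above.
CAPABILITIES = {
    "listening":  {"bpdus": True, "learn_macs": False, "forward_data": False},
    "learning":   {"bpdus": True, "learn_macs": True,  "forward_data": False},
    "forwarding": {"bpdus": True, "learn_macs": True,  "forward_data": True},
}


def port_state(seconds_since_link_up: float) -> str:
    """State of a port a given number of seconds after the cable is plugged in."""
    if seconds_since_link_up < FORWARD_DELAY:
        return "listening"
    if seconds_since_link_up < 2 * FORWARD_DELAY:
        return "learning"
    return "forwarding"


for t in (0, 15, 30):
    state = port_state(t)
    print(t, state, CAPABILITIES[state]["forward_data"])
```

So a freshly connected port carries no user traffic for the first 30 seconds, which matches the orange-then-green LED behavior.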

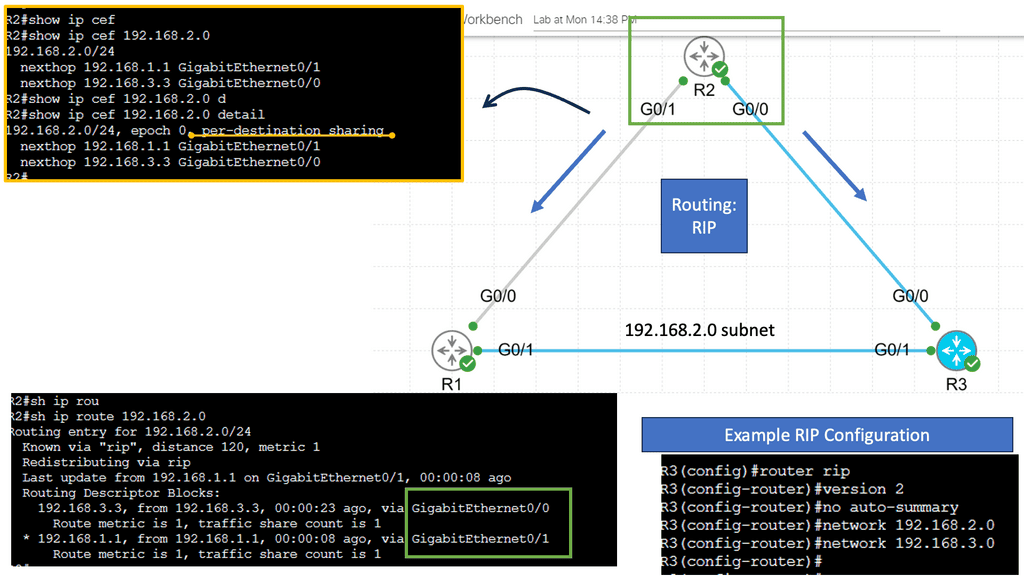

How does this compare to routing? With Layer 3, packets carry a TTL, so a looping packet is eventually discarded, and loops stay contained as long as there is no complicated route redistribution at different points in the network topology. Let’s look at the following example, which uses RIP.

RIP is a distance vector routing protocol and the simplest one. We’ll start by paying attention to the distance vector class. What does the name distance vector mean?

- Distance: How far away? In the routing world, we use metrics.

- Vector: Which direction? In the routing world, we care about which interface and the next router’s IP address to send the packet to.
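To tie distance and vector together, here is a simplified sketch of how a distance vector router merges a neighbor’s advertisement into its own table. Router names, interfaces, and prefixes are hypothetical, and real RIP adds timers, split horizon, and updates from the current next hop, none of which are modeled here.

```python
INFINITY = 16  # RIP treats a hop count of 16 as unreachable


def process_update(table: dict, neighbor: str, interface: str, advertised: dict) -> bool:
    """Merge a neighbor's advertised routes; return True if the table changed."""
    changed = False
    for prefix, hops in advertised.items():
        metric = hops + 1  # one extra hop to reach the neighbor
        if metric >= INFINITY:
            continue
        current = table.get(prefix)
        if current is None or metric < current["metric"]:
            # Distance = metric; vector = next hop and outgoing interface.
            table[prefix] = {"metric": metric, "next_hop": neighbor, "interface": interface}
            changed = True
    return changed


table = {}
process_update(table, "R2", "Gi0/1", {"10.2.0.0/24": 1, "10.3.0.0/24": 2})
process_update(table, "R3", "Gi0/2", {"10.3.0.0/24": 1})

print(table["10.3.0.0/24"])  # now 2 hops via R3 instead of 3 hops via R2
```

The router never sees the full topology; it only trusts what each neighbor says about how far away a network is and remembers which direction the best answer came from.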

Notice below we are not blocking ports. Instead, we are load balancing.

Analysis:

Cisco Express Forwarding (CEF) supports load sharing per packet or per destination (actually per source/destination IP address pair) without performance degradation; without CEF, per-packet load sharing requires process switching. Even though there is no performance impact on the router, per-packet load sharing almost always results in out-of-order packets. Packet reordering can reduce TCP throughput in high-speed environments, although per-packet load sharing improves per-flow throughput in low-speed/few-flow scenarios. Applications that cannot survive out-of-order packet delivery, for example, Fast Sequenced Transport for SNA over IP or voice/video streams, may also suffer.

Use the ip load-sharing per-packet interface configuration command to configure per-packet load-sharing (the default is per destination). This command must be configured on all outgoing interfaces over which traffic is load-shared.
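The difference between the two modes can be illustrated with a small sketch. It is not CEF’s actual hashing algorithm; the CRC32 hash, interface names, and addresses are stand-ins chosen for illustration.

```python
import itertools
import zlib

PATHS = ["Gi0/1", "Gi0/2"]  # hypothetical equal-cost outgoing interfaces


def per_destination(src: str, dst: str) -> str:
    """Same source/destination pair always maps to the same path (order preserved)."""
    key = f"{src}->{dst}".encode()
    return PATHS[zlib.crc32(key) % len(PATHS)]


_round_robin = itertools.cycle(PATHS)


def per_packet() -> str:
    """Each packet takes the next path in turn, regardless of flow."""
    return next(_round_robin)


flow = ("10.0.0.1", "10.0.1.1")
print({per_destination(*flow) for _ in range(4)})  # a single interface
print([per_packet() for _ in range(4)])            # alternates between interfaces
```

Per-destination mode keeps every packet of a flow on one link, so ordering is preserved; per-packet mode spreads load more evenly but lets packets of the same flow race each other over different links.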

STP has a bad reputation

STP, in theory, prevents bridging loops. Many reasons contribute to STP’s lousy reputation in practice.

You must accept that design choice if you prefer plug-and-pray networking over proper routing protocols. There is little we can do in this situation. To use alternate paths, you need an appropriate routing protocol, regardless of whether you’re routing on layer 2 (TRILL, SPB) or layer 3 (IP). Forward-on behavior is one of the main problems with STP. All links forward traffic until BPDUs block some of them.

A forwarding loop is almost certain to occur if a device drops BPDUs or if a switch loses its control plane (for example, due to a memory leak).

Design a Scalable Data Center Topology

To overcome the limitation, some are now trying to route (Layer 3) the entire way to the access layer, which has its problems, too, as some applications require L2 to function, e.g., clustering and stateful devices. However, people still like Layer 3 because of the stability around routing. You have an actual path-based routing protocol managing the network, not a loop-prevention protocol like STP. Routing also doesn’t fail open, and it limits loops with the TTL (Time to Live) field in the IP header.

Routing converges quickly around a failure, which improves stability. We also have ECMP (Equal-Cost Multi-Path) to help with scaling and with moving to scale-out topologies. This allows the network to grow at a lower cost. Scale-out is better than scale-up.

Whether your network is small or large, a routed network has clear advantages over a Layer 2 network. However, how we interface with the network is also cumbersome, and it is estimated that 70% of network failures are due to human error. The risk of changes to the production network leads to cautious changes, slowing processes to a crawl.

In summary, the problems we have faced so far:

STP-based Layer 2 has stability challenges; it fails open. Traditional bridging is controlled flooding, not forwarding, so it shouldn’t be considered as stable as a routing protocol. Some applications require Layer 2, but people still prefer Layer 3. The network infrastructure must be flexible enough to adapt to new applications/services, legacy applications/services, and organizational structures.

There is never enough bandwidth, and we cannot predict future application-driven requirements, so a better solution would be to have a flexible network infrastructure. Inflexibility slows down the deployment of new services and applications and restricts innovation.

The infrastructure needs to be flexible for the data center applications, not the other way around. It must also be agile enough not to be a bottleneck or barrier to deployment and innovation.

What are the new options moving forward?

Layer 2 fabrics (open standard TRILL) change how the network works and enable a large routed Layer 2 network. A Layer 2 fabric, for example, Cisco FabricPath, is Layer 2, but it acts more like Layer 3 as it’s a routing protocol-managed topology. As a result, there is improved stability and faster convergence. It also supports massive scale-out (up to 32 load-balanced forwarding paths versus a single forwarding path with Spanning Tree).

VXLAN: Overlay networking

Suppose you already have a Layer 3 core and must support Layer 2 end to end. In that case, you could go for an encapsulated overlay (VXLAN, NVGRE, STT, or a design with Generic Routing Encapsulation). You keep the stability of a Layer 3 core and the familiarity of Layer 2, and you can service Layer 2 end to end, with VXLAN, for example, using UDP port numbers as network entropy. Depending on the design option, the overlay builds an L2 tunnel over an L3 core.
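For VXLAN specifically, the encapsulation can be sketched in a few lines. The example below builds the 8-byte VXLAN header and derives a UDP source port from a hash of the inner flow, which is what gives the underlay its entropy for load balancing. The CRC32 hash and the flow string are illustrative choices of mine, not what any particular switch uses.

```python
import struct
import zlib

VXLAN_UDP_PORT = 4789  # IANA-assigned UDP destination port for VXLAN


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags (I bit set), reserved bits, and a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # "I" flag: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


def entropy_source_port(inner_flow: bytes) -> int:
    """Map a hash of the inner flow into the dynamic port range 49152-65535."""
    return 49152 + zlib.crc32(inner_flow) % 16384


header = vxlan_header(5000)
sport = entropy_source_port(b"10.0.0.1->10.0.1.1:tcp:443")  # hypothetical inner flow

print(header.hex())           # 0800000000138800
print(sport, VXLAN_UDP_PORT)  # varying source port, fixed destination port
```

Because different inner flows produce different outer source ports, ECMP in the Layer 3 core can spread overlay traffic across links even though every tunnel runs between the same pair of endpoints.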

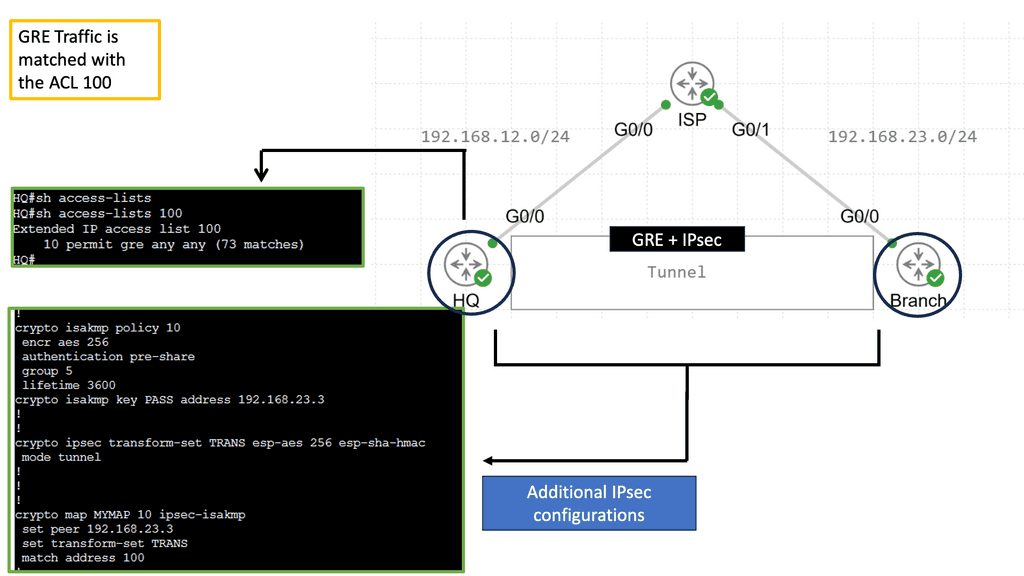

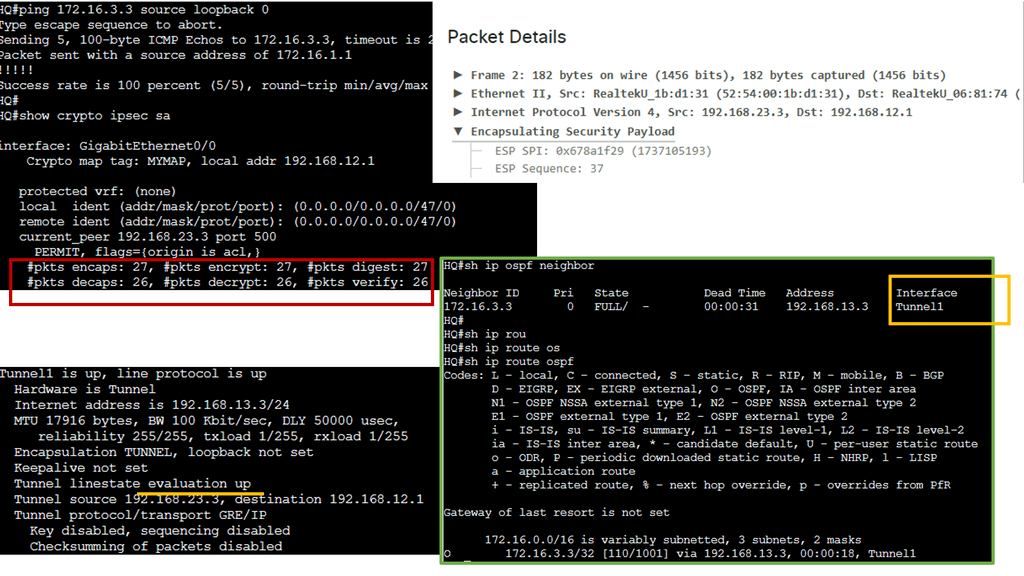

Example: Encrypted GRE with IPsec

Understanding Encrypted GRE

GRE, or Generic Routing Encapsulation, is a network protocol commonly used to encapsulate and transport different network layer protocols over an IP network. It provides a virtual point-to-point connection, allowing the transmission of data between different sites or networks. However, without encryption, the data transmitted through GRE is vulnerable to interception and unauthorized access. This is where encrypted GRE with IPSec comes into play.
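As a rough illustration of the encapsulation step, the sketch below builds the 4-byte base GRE header (no checksum, key, or sequence number) and prepends it to a payload. The payload bytes are a placeholder, and the outer IP header and any IPsec protection are deliberately left out.

```python
import struct

GRE_PROTO_IPV4 = 0x0800  # EtherType carried in the GRE protocol-type field


def gre_encapsulate(inner_packet: bytes, protocol: int = GRE_PROTO_IPV4) -> bytes:
    """Prepend a basic GRE header to an inner packet."""
    # First 16 bits are flags and version, all zero for a basic header.
    header = struct.pack("!HH", 0, protocol)
    return header + inner_packet


frame = gre_encapsulate(b"inner-ip-packet")  # placeholder payload, not a real IP packet
print(frame[:4].hex())  # 00000800
```

Note that nothing in this header protects the payload, which is why the inner packet is readable by anyone on the path unless encryption is added.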

IPSec, or Internet Protocol Security, is a suite of protocols used to secure IP communications by authenticating and encrypting the data packets. It provides a secure tunnel between two endpoints, ensuring the transmitted data’s confidentiality, integrity, and authenticity. By combining IPSec with GRE, organizations can create a safe and private communication channel over an untrusted network.

a. Enhanced Data Privacy: With encrypted GRE and IPSec, organizations can ensure the privacy of their data while transmitting it over public or untrusted networks. The encryption algorithms used in IPSec provide high security, making it extremely difficult for unauthorized parties to decipher the transmitted information.

b. Secure Communication: Encrypted GRE with IPSec establishes a secure tunnel between endpoints, protecting the integrity of the data. It prevents tampering, replay attacks, and other malicious activities, ensuring the information reaches its destination without any unauthorized modifications.

c. Flexibility and Compatibility: Encrypted GRE with IPSec can be implemented across various network environments, making it a versatile solution. It is compatible with different operating systems, routers, and firewalls, allowing organizations to integrate it seamlessly into their existing network infrastructure.

Back to VXLAN

A use case for this is when you have two devices that need to exchange state at L2 or require vMotion. VMs cannot migrate across L3 as they need to stay in the same VLAN to keep the TCP sessions intact. Software-defined networking is changing the way we interact with the network.

It provides faster deployment, improved control, and more direct application and service integration. With a centralized controller, you can view this as a policy-focused network.

Many prominent vendors will push a converged infrastructure framework (server, storage, networking, and centralized management), all from one vendor and closely linking hardware and software (HP, Dell, Oracle). Other vendors will offer a software-defined data center in which physical hardware is virtualized, centrally managed, and treated as abstracted resource pools that can be dynamically provisioned and configured (Microsoft).

Summary: SDN Data Center

In the dynamic landscape of technology, data centers play a crucial role in storing, processing, and delivering digital information. Traditional data centers have limitations, but the emergence of Software-Defined Networking (SDN) has revolutionized how data centers operate. In this blog post, we delved into the world of SDN data centers, exploring their benefits, key components, and potential implications.

Understanding SDN

SDN, in essence, separates the control plane from the data plane, enabling centralized network management through software. Unlike traditional networks, where network devices make individual decisions, SDN allows for a more programmable and flexible infrastructure. By abstracting the network’s control, SDN empowers administrators to manage and orchestrate their data centers dynamically.

Key Components of SDN Data Centers

It is crucial to grasp the critical components of SDN data centers to comprehend their inner workings. The SDN architecture comprises three fundamental elements: the Application Layer, Control Layer, and Infrastructure Layer. The Application Layer houses the software applications that utilize the network services, while the Control Layer handles network-wide decisions and policies. Lastly, the Infrastructure Layer comprises the physical and virtual network devices that forward data packets.

Advantages of SDN Data Centers

The adoption of SDN in data centers brings forth a myriad of advantages. Firstly, SDN enables network programmability, allowing administrators to configure and manage their networks through software interfaces. This flexibility reduces manual configuration efforts and enhances overall efficiency. Secondly, SDN data centers boast improved scalability, as the centralized control plane simplifies network expansion and resource allocation. Additionally, SDN enhances network security by enabling fine-grained control and real-time threat detection.

Potential Implications and Challenges

While SDN data centers offer numerous benefits, addressing potential implications and challenges is crucial. One concern is the potential risk of a single point of failure in the centralized control plane. Network disruptions or software vulnerabilities could significantly impact the entire data center. Moreover, transitioning from traditional networks to SDN requires careful planning, as it involves reconfiguring the existing infrastructure and training network administrators to adapt to the new paradigm.

Conclusion:

In conclusion, Software-Defined Networking (SDN) has paved the way for a new era of data centers. By separating the control and data planes, SDN empowers administrators to manage their networks programmatically, leading to enhanced flexibility, scalability, and security. Despite the challenges and potential implications, SDN data centers hold immense potential for transforming the way we architect and operate modern data centers.
