Hello, I recently completed a three-part package for Uniken. Part 1 can be found here – Matt Conran & Uniken. Stay tuned for Part 2 and Part 3!

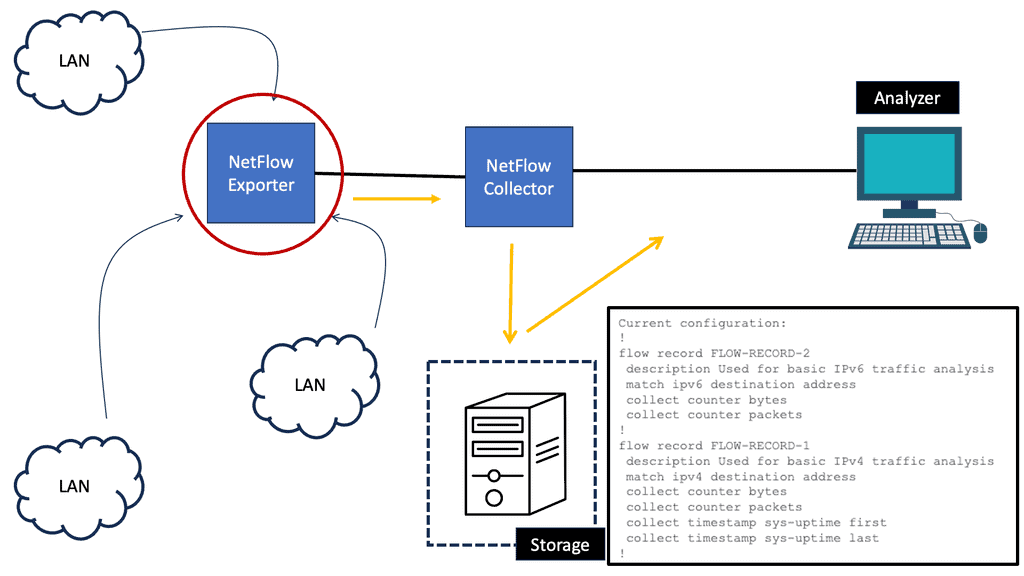

Paessler – NetFlow for Cybersecurity

Paessler purchased a three-part article package on NetFlow for Cybersecurity. The three posts relate to how NetFlow can be used to battle the ongoing threats posed by cybercriminals. Kindly click the links: Part 1 – Matt Conran Paessler, Part 2 – Matt Conran Outbound DDoS, Part 3 – Matt Conran Cyberhunter.

Aviatrix Hybrid Cloud – Active Directory

Aviatrix, a hybrid cloud networking specialist, used a three-part blog package to formulate a solution brief – ActiveDirectoryTechBriefWhitePaperR1

Nominum Security Report

I had the pleasure of contributing to Nominum’s Security Report. Kindly click on the link to register and download – Matt Conran with Nominum

“Nominum Data Science just released a new Data Science and Security report that investigates the largest threats affecting organizations and individuals, including ransomware, DDoS, mobile device malware, IoT-based attacks and more. Below is an excerpt:

October 21, 2016, was a day many security professionals will remember. Internet users around the world couldn’t access their favorite sites like Twitter, Paypal, The New York Times, Box, Netflix, and Spotify, to name a few. The culprit: a massive Distributed Denial of Service (DDoS) attack against a managed Domain Name System (DNS) provider not well-known outside technology circles. We were quickly reminded how critical the DNS is to the internet as well as its vulnerability. Many theorize that this attack was merely a Proof of Concept, with far bigger attacks to come”

NS1 – Adding Intelligence to the Internet

I recently completed a two-part guest post for the DNS-based company NS1. It discusses Internet challenges and introduces the NS1 traffic management solution, Pulsar. For Part 1, kindly click – Matt Conran with NS1, and for Part 2, kindly click – Matt Conran with NS1 Traffic Management.

“Application and service delivery over the public Internet is subject to various network performance challenges. This is because the Internet comprises different fabrics, connection points, and management entities, all of which are dynamic, creating unpredictable traffic paths and unreliable conditions. While there is an inherent lack of visibility into end-to-end performance metrics, for the most part, the Internet works, and packets eventually reach their final destination. In this post, we’ll discuss key challenges affecting application performance and examine the birth of new technologies, multi-CDN designs, and how they affect DNS. Finally, we’ll look at Pulsar and our real-time telemetry engine developed specifically for overcoming many performance challenges by adding intelligence at the DNS lookup stage.”

SD WAN Tutorial: Nuage Networks

Nuage Networks

The following post details Nuage Networks and its response to SD-WAN. Part 2 can be found here – Nuage Network and SD-WAN. It’s a 24/7 connected world, and traffic diversity puts the Wide Area Network (WAN) edge to the test. Today’s applications should not be hindered by underlying network issues or a poorly designed WAN. Instead, the business requires designers to find a better way to manage the WAN by adding intelligence via an SD-WAN overlay with improved flow management, visibility, and control.

The role of the WAN has changed from providing basic inter-site connectivity to adapting technology to meet the demands of business applications. It must proactively manage flows over all available paths, regardless of transport type. Business requirements should drive today’s networks, and the business should dictate the direction of flows, not the limitations of a routing protocol. The remainder of the post covers Nuage Networks and its services as a foundation for an SD-WAN tutorial.


The building blocks of the WAN have remained stagnant while the application environment has shifted dramatically. Sure, speeds and feeds have increased, but the same architectural choices that were best practice 10 or 15 years ago are still being applied, hindering business evolution. So how will the traditional WAN edge keep up with new application requirements?

Nuage SD WAN

The Nuage Networks SD-WAN solution challenges this space and overcomes existing WAN limitations by bringing intelligence to routing at the application level. Policy decisions are now made by a central platform that has full WAN and data center visibility. A transport-agnostic WAN optimizes the network and the decisions you make about it. In the eyes of Nuage, “every packet counts,” and mission-critical applications are always available on protected premium paths.

Routing Protocols at the WAN Edge

Routing protocols make forwarding decisions based on the destination, hop by hop. This limits the number of paths application traffic can take. Paths are further limited by loop prevention: routing protocols will not take a path that could potentially result in a forwarding loop. Combine this with the traditional forwarding paradigms of primitive WAN designs, and the result is a network that cannot match today’s application requirements. We need more granular ways to forward traffic.

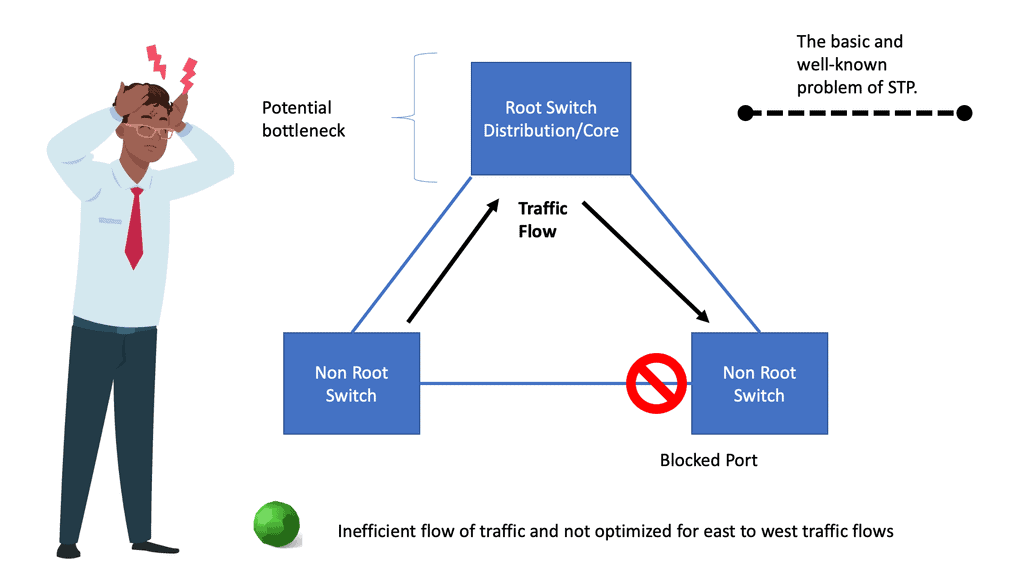

Routing for the WAN has always been complex. BGP selects a single best path, and ECMP provides some options for path selection. Solutions like Dynamic Multipoint VPN (DMVPN) operate with multiple control planes that are hard to design and operate. It’s painful to configure QoS policies on a per-link basis and to design WAN solutions that incorporate multiple failure scenarios. The WAN is the most complex module of any network, yet it is critical because it acts as the gateway to other networks, such as the branch LAN and the data center.

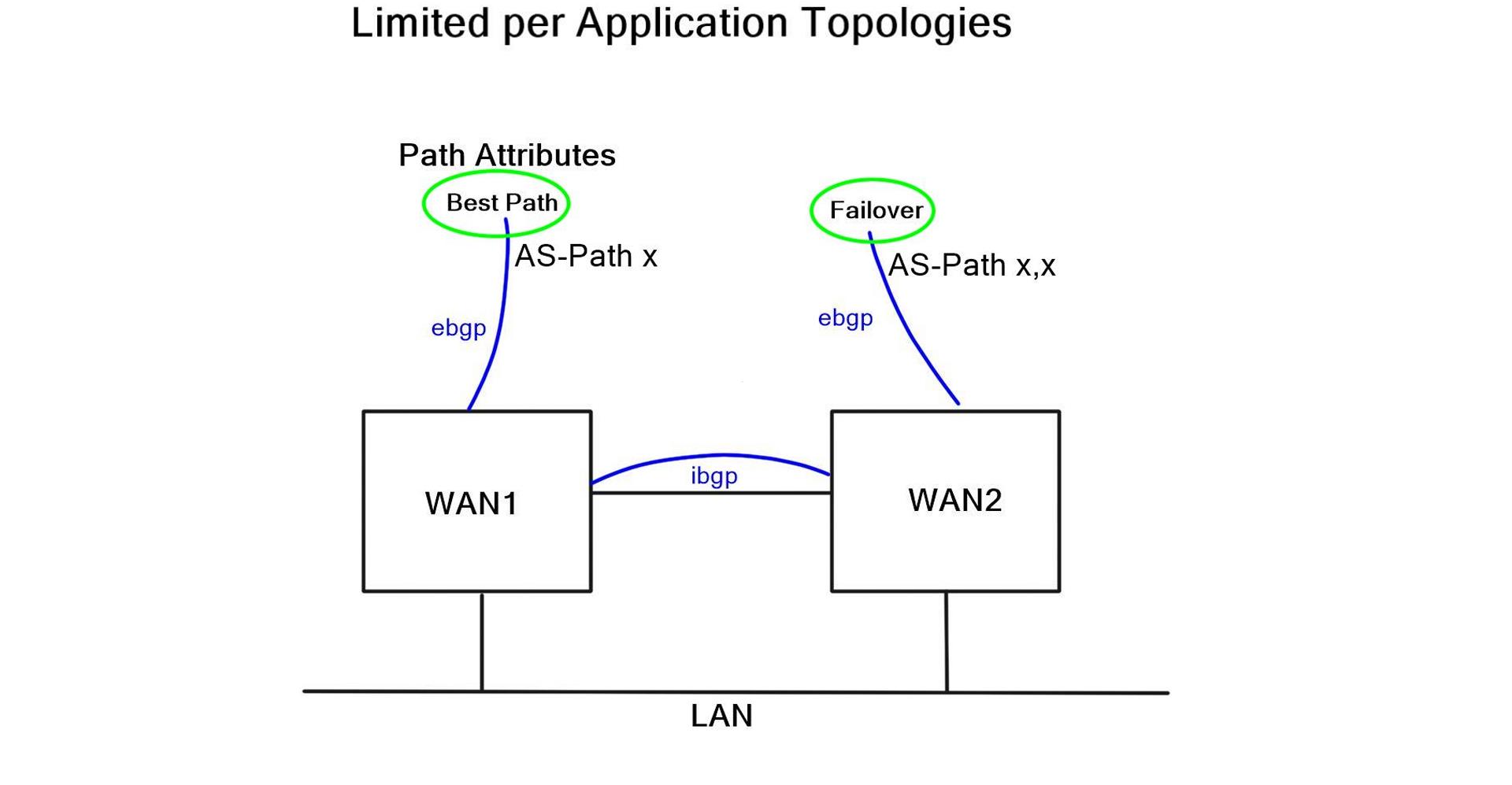

Best path & failover only.

At the network edge, where there are two possible exit paths, it is often desirable to choose a path based on a specific business characteristic. For example, select a link for web traffic based on its historical jitter, or use premium links for mission-critical applications. The granularity of exit path selection should be flexible and based on business and application requirements. Criteria for exit points should be application-independent, allowing end-to-end network segmentation.
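To make the idea of business-driven exit selection concrete, here is a minimal sketch. The two exits, their jitter and loss figures, and the 30 ms threshold are all hypothetical, and the policy table is an illustration of the concept, not how Nuage or any other vendor implements it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ExitPath:
    name: str
    jitter_ms: float  # hypothetical historical jitter on this exit
    loss_pct: float   # hypothetical measured packet loss
    premium: bool     # e.g. a premium circuit reserved for critical apps


# Hypothetical business policy: which exits each application class may use.
POLICY: Dict[str, Callable[[ExitPath], bool]] = {
    "mission-critical": lambda p: p.premium,
    "web": lambda p: not p.premium and p.jitter_ms < 30.0,
}


def select_exit(app_class: str, paths: List[ExitPath]) -> str:
    allowed = POLICY.get(app_class, lambda p: True)  # unknown class: any exit
    candidates = [p for p in paths if allowed(p)] or paths  # fall back to any exit
    # Among the permitted exits, prefer the lowest loss, then the lowest jitter.
    return min(candidates, key=lambda p: (p.loss_pct, p.jitter_ms)).name


paths = [
    ExitPath("mpls", jitter_ms=4.0, loss_pct=0.0, premium=True),
    ExitPath("internet", jitter_ms=18.0, loss_pct=0.3, premium=False),
]
print(select_exit("mission-critical", paths))  # mpls
print(select_exit("web", paths))               # internet
```

The point of the design is that the decision key is the application class and a business rule, not the destination prefix a routing protocol would use.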

External policy-based protocol

BGP is an external policy-based protocol commonly used to control path selection. BGP peers with other BGP routers to exchange Network Layer Reachability Information (NLRI). Its flexible policy-oriented approach and outbound traffic engineering offer tailored control for that network slice. As a result, it offers more control than an Interior Gateway Protocol (IGP) and reduces network complexity in large networks. These factors have made BGP the de facto WAN edge routing protocol.

However, the path attributes that influence BGP do not consider any specifically tailored characteristics, such as unique metrics, transit performance, or transit brownouts. When BGP receives multiple paths to the same destination, it runs the best path algorithm to decide which path to install in the IP routing table; when no other policy differentiates the paths, this selection typically comes down to AS-Path length. Unfortunately, AS-Path length is not an efficient measure of end-to-end transit. It misses the shape of the network, which can result in the selection of long paths or paths experiencing packet loss.
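A minimal sketch of why AS-Path length can pick the wrong exit. It models only the AS_PATH length step of the BGP decision process, assuming all earlier attributes (weight, local preference, and so on) are equal; the ISPs, private AS numbers, and RTT/loss measurements are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BgpPath:
    via: str
    as_path: List[int]
    rtt_ms: float    # hypothetical measured round-trip time
    loss_pct: float  # hypothetical measured packet loss


def shortest_as_path(paths: List[BgpPath]) -> str:
    # Simplification: only the AS_PATH length tie-breaker, nothing else.
    return min(paths, key=lambda p: len(p.as_path)).via


def performance_aware(paths: List[BgpPath]) -> str:
    # What the application would prefer: least loss, then lowest RTT.
    return min(paths, key=lambda p: (p.loss_pct, p.rtt_ms)).via


paths = [
    BgpPath("ISP-A", as_path=[64500, 64510], rtt_ms=180.0, loss_pct=2.0),
    BgpPath("ISP-B", as_path=[64501, 64502, 64510], rtt_ms=40.0, loss_pct=0.0),
]
print(shortest_as_path(paths))   # ISP-A
print(performance_aware(paths))  # ISP-B
```

BGP picks ISP-A because it is one AS hop shorter, even though it is the lossy, high-latency path.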

The traditional WAN

A traditional WAN routes traffic down one path and, by default, has no awareness of what’s happening at the application level (packet loss, jitter, retransmissions). There have been many attempts to enhance the WAN’s behavior. For example, SLA steering based on enhanced object tracking polls a metric such as Round Trip Time (RTT).

These methods are popular and widely implemented, but failover is triggered only when a configured metric threshold is crossed. All these extra configuration parameters make the WAN more complex; they simply act as band-aids for a network that is under increasing pressure.
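The threshold-based behavior described above can be sketched generically as follows. The tracked object, the 200 ms threshold, and the "three consecutive bad samples" rule are hypothetical stand-ins chosen for illustration; this is not IOS IP SLA syntax or its exact timing behavior.

```python
from typing import List


def track_states(rtt_samples_ms: List[float], threshold_ms: float,
                 down_after: int = 3) -> List[str]:
    """Hypothetical tracked object: goes 'down' after `down_after`
    consecutive RTT samples above the threshold, 'up' on the next good sample."""
    states, over = [], 0
    for rtt in rtt_samples_ms:
        over = over + 1 if rtt > threshold_ms else 0
        states.append("down" if over >= down_after else "up")
    return states


def chosen_path(state: str) -> str:
    # Static failover: primary while the tracked object is up, else backup.
    return "primary" if state == "up" else "backup"


samples = [50, 60, 250, 260, 270, 80]
states = track_states(samples, threshold_ms=200)
print([chosen_path(s) for s in states])
# ['primary', 'primary', 'primary', 'primary', 'backup', 'primary']
```

Two bad samples are tolerated before failover, and loss or jitter never enter the decision, which is exactly the kind of blind spot the text describes.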

“Nuage Networks sponsors this post. All thoughts and opinions expressed are the author’s.”

DMVPN Phases | DMVPN Phase 1 2 3

Highlighting DMVPN Phase 1 2 3

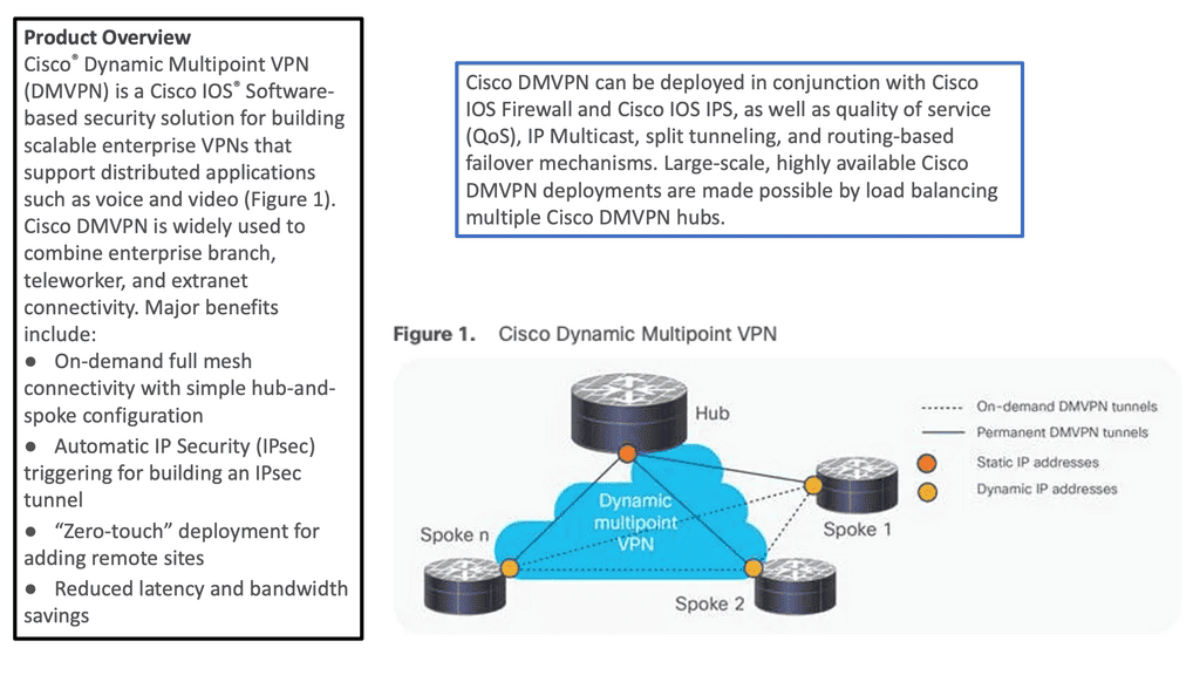

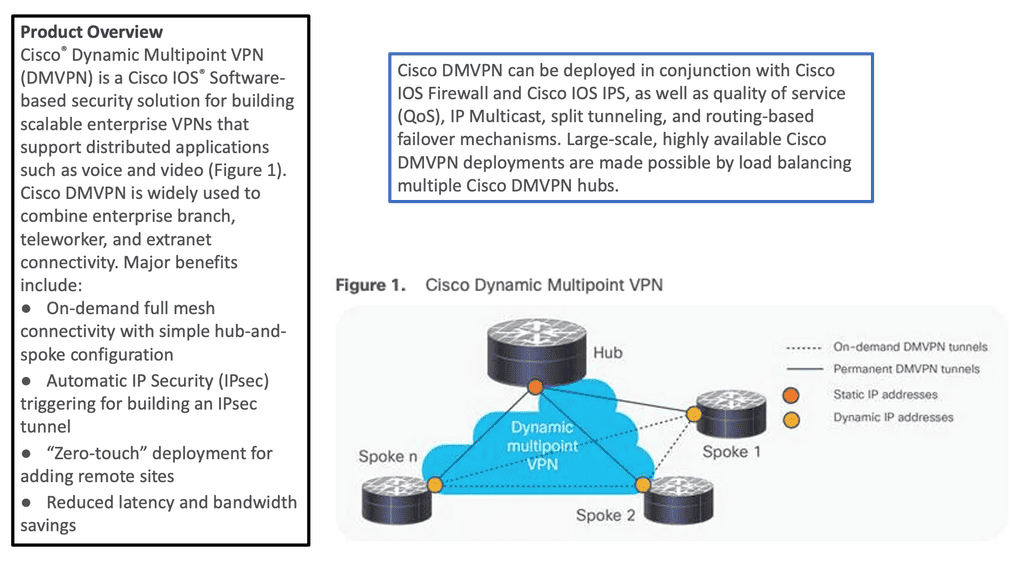

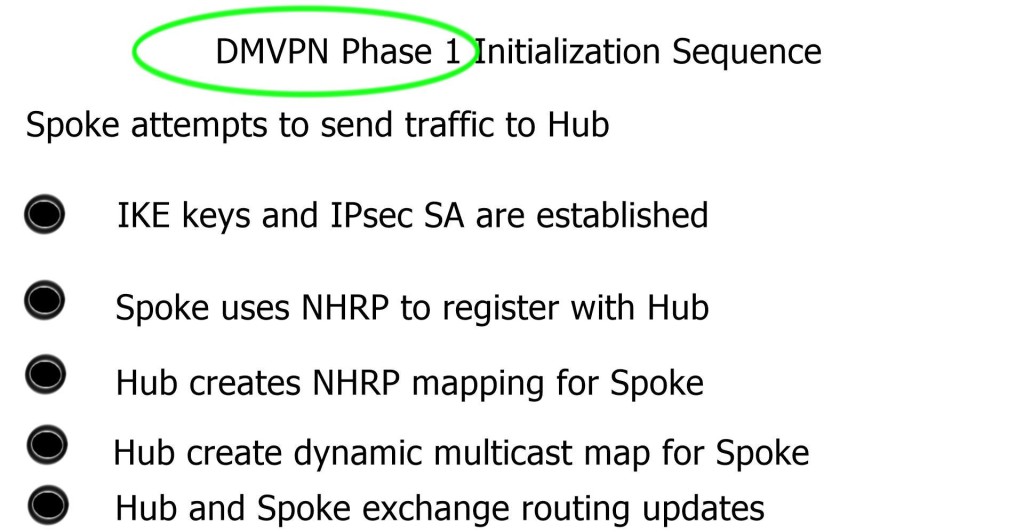

Dynamic Multipoint Virtual Private Network (DMVPN) is a form of dynamic virtual private network (VPN) that allows a mesh of VPNs without needing to pre-configure all tunnel endpoints, i.e., spokes. Tunnels on spokes are established on demand based on traffic patterns, without repeated configuration on hubs or spokes. The design depends on the DMVPN phase selected: Phase 1, 2, or 3.

- Point-to-multipoint Layer 3 overlay VPN

In its simplest form, DMVPN is a point-to-multipoint Layer 3 overlay VPN enabling a logical hub-and-spoke topology that supports direct spoke-to-spoke communications, depending on the DMVPN design selected (DMVPN Phases: Phase 1, Phase 2, and Phase 3). The DMVPN phase selection significantly affects routing protocol configuration and how it works over the logical topology. From a routing point of view, there are clear parallels between Frame Relay routing and DMVPN routing.

- Dynamic routing capabilities

DMVPN is one of the most scalable technologies when building large IPsec-based VPN networks with dynamic routing functionality. It seems simple, but you could encounter interesting design challenges when your deployment has more than a few spoke routers. This post will help you understand the DMVPN phases and their configurations.


DMVPN allows the creation of full-mesh GRE or IPsec tunnels with a simple configuration template. From a provisioning point of view, DMVPN is simple.


- A key point: Video on the DMVPN Phases

The following video discusses the DMVPN phases. The demonstration is performed with Cisco Modeling Labs, and I go through a few different types of topologies, comparing the configurations for DMVPN Phase 1 and DMVPN Phase 3. There is also some on-the-fly troubleshooting that should help deepen your understanding of DMVPN.

Back to basics with DMVPN.

- Highlighting DMVPN

DMVPN is a Cisco solution providing a scalable VPN architecture.

- Introduction to DMVPN technologies

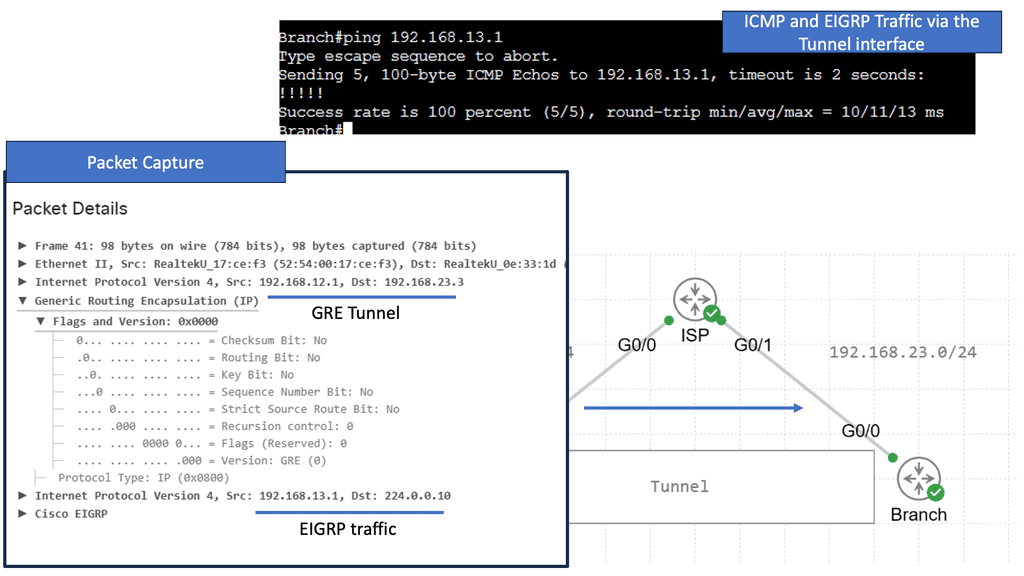

DMVPN uses industry-standard technologies (NHRP, GRE, and IPsec) to build the overlay network. DMVPN uses Generic Routing Encapsulation (GRE) for tunneling, Next Hop Resolution Protocol (NHRP) for on-demand forwarding and mapping information, and IPsec to provide a secure overlay network that addresses the deficiencies of site-to-site VPN tunnels while providing full-mesh connectivity.

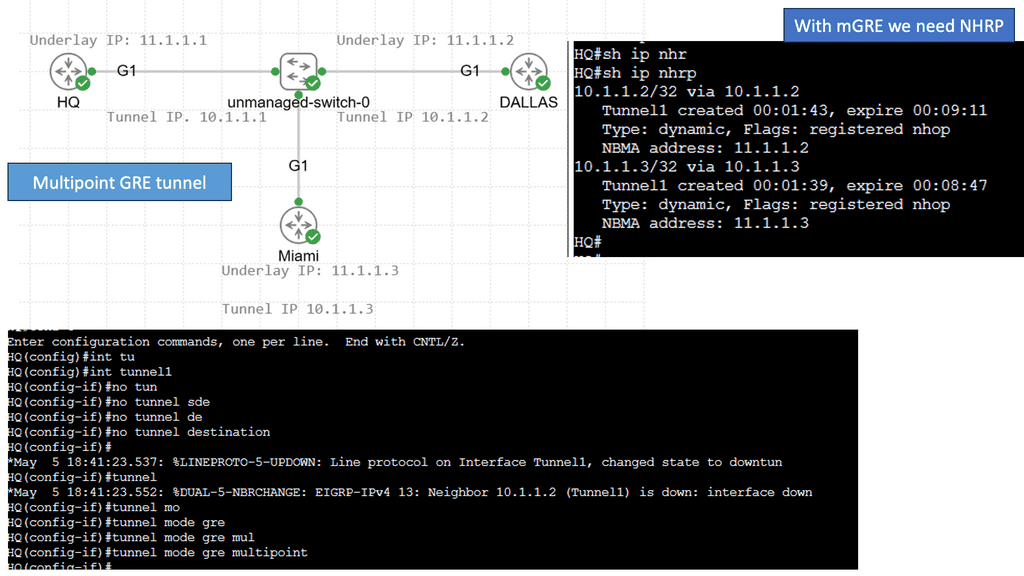

In particular, DMVPN uses Multipoint GRE (mGRE) encapsulation and supports dynamic routing protocols, eliminating many of the support issues associated with other VPN technologies. The DMVPN network is classified as an overlay network because the GRE tunnels are built on top of existing transports, also known as the underlay network.

DMVPN Is a Combination of 4 Technologies:

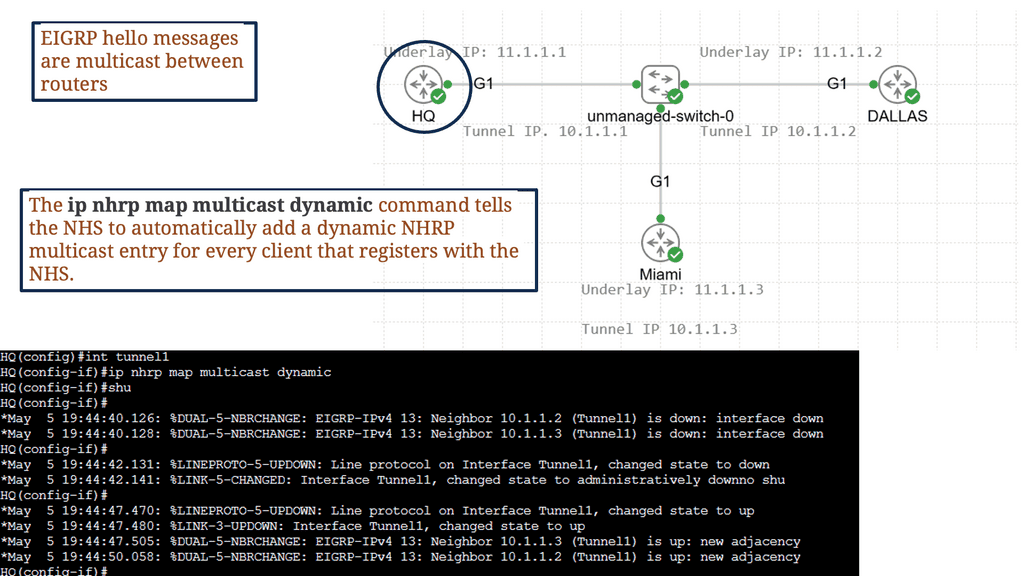

mGRE: Conceptually, GRE tunnels behave like point-to-point serial links, whereas mGRE behaves like a LAN, so many neighbors are reachable over the same interface. The “m” in mGRE stands for multipoint.

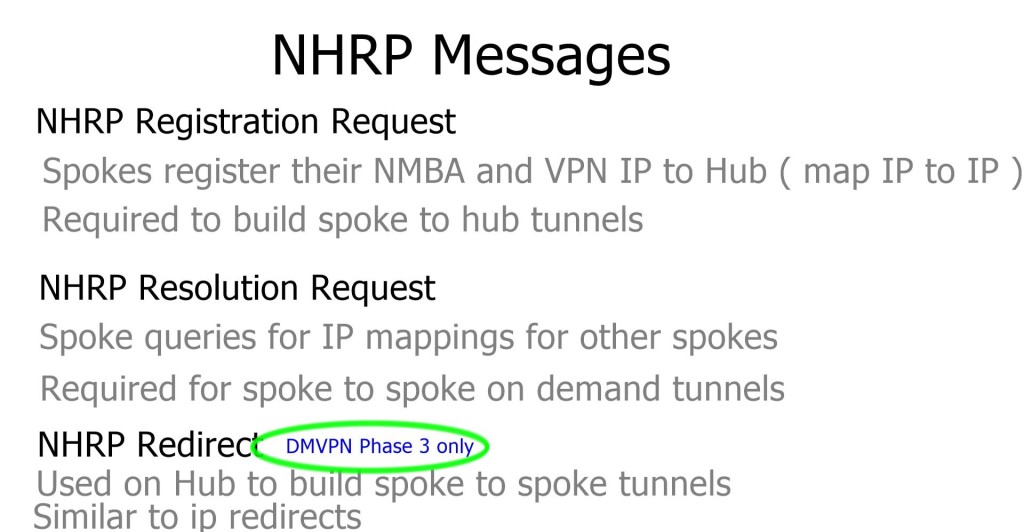

Dynamic Next Hop Resolution Protocol (NHRP) with Next Hop Server (NHS): LAN environments use Address Resolution Protocol (ARP) to determine the MAC address of a neighbor (inverse ARP for Frame Relay). With mGRE, NHRP replaces ARP. NHRP binds the logical IP address on the tunnel to the physical IP address used on the outgoing link (the tunnel source).

The resolution process answers the question: if you want to send traffic to tunnel destination X, which physical address does X resolve to? DMVPN binds IP to IP, whereas ARP binds a destination IP address to a destination MAC address.

- A key point: Lab guide on Next Hop Resolution Protocol (NHRP)

NHRP is a resolution protocol rather than a routing protocol: it resolves the next-hop address used for packet forwarding in a network. Instead of relying only on static mappings, NHRP learns tunnel-to-physical address mappings dynamically, so it adapts as spokes join the network or change their physical addresses. It works with a client-server model: the DMVPN hub is the Next Hop Server (NHS), and the spokes are the Next Hop Clients (NHCs).

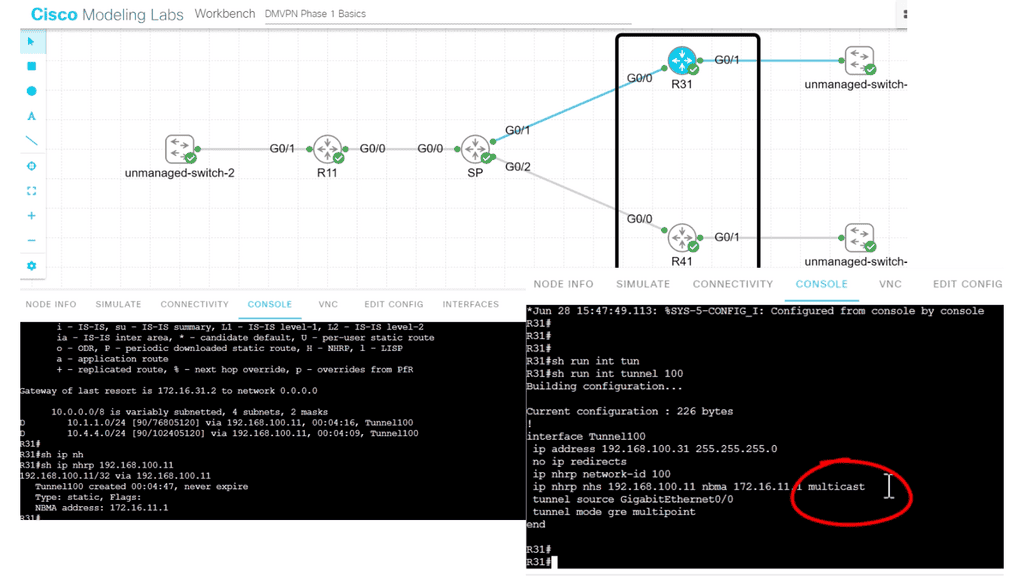

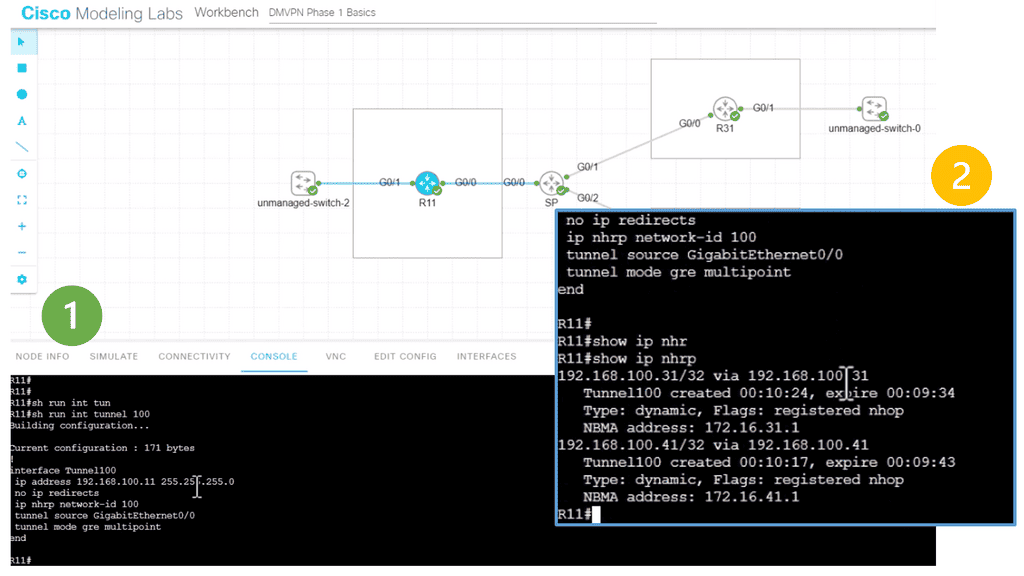

In the following lab topology, we have R11 as the hub with two spokes, R31 and R41. The spokes need to explicitly configure the next hop server (NHS) information with the command ip nhrp nhs 192.168.100.11 nbma 172.16.11.1 multicast. Notice the “multicast” keyword at the end of the configuration line; it allows multicast traffic to be sent to the hub.

As the routing protocol over the DMVPN tunnel, I am running EIGRP, which requires multicast hellos to form EIGRP neighbor relationships. BGP forms neighbor relationships over unicast TCP sessions, so with BGP you would not need the “multicast” keyword.

IPsec tunnel protection and IPsec fault tolerance: DMVPN is a routing technique not directly related to encryption. IPsec is optional and used primarily over public networks. Potential designs exist for DMVPN in public networks with GETVPN, which allows the grouping of tunnels to a single Security Association ( SA ).

Routing: Designers implement DMVPN without IPsec on MPLS-based networks to improve convergence, as DMVPN acts independently of the service provider’s routing policy. The sites only need IP connectivity to each other to form a DMVPN network: you must be able to ping the tunnel endpoints and route IP between the sites. End customers decide on the routing policy, not the service provider, which offers more flexibility than sites connected by MPLS, where the service provider determines the routing protocol policies.

Map IP to IP: “If you want to reach my private address, GRE-encapsulate the packet and send it to my public address.” Spokes build this mapping on the hub through the spoke registration process.

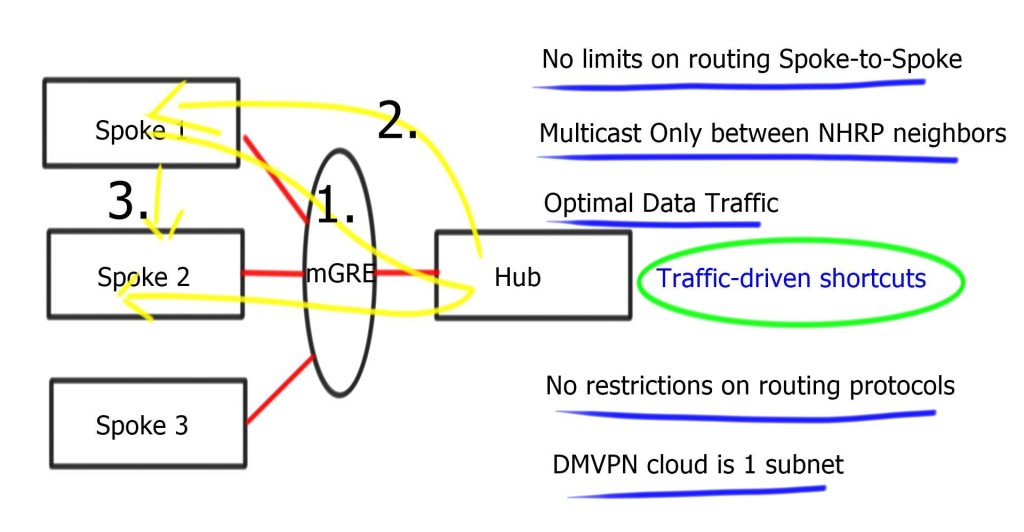

DMVPN Phases Explained

DMVPN Phases: DMVPN phase 1 2 3

The DMVPN phase you select influences spoke-to-spoke traffic patterns, supported routing designs, and scalability.

- DMVPN Phase 1: All traffic flows through the hub. The hub is used in the network’s control and data plane paths.

- DMVPN Phase 2: Allows spoke-to-spoke tunnels. Spoke-to-spoke communication does not need the hub in the actual data plane. Spoke-to-spoke tunnels are on-demand based on spoke traffic triggering the tunnel. Routing protocol design limitations exist. The hub is used for the control plane but, unlike phase 1, not necessarily in the data plane.

- DMVPN Phase 3: Improves the scalability of Phase 2. We can use any routing protocol with any setup. NHRP “redirects” and “shortcuts” take care of traffic flows.

- A key point: Video on DMVPN

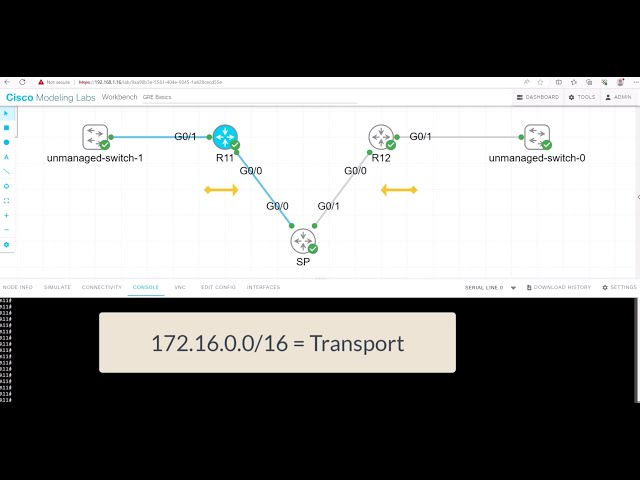

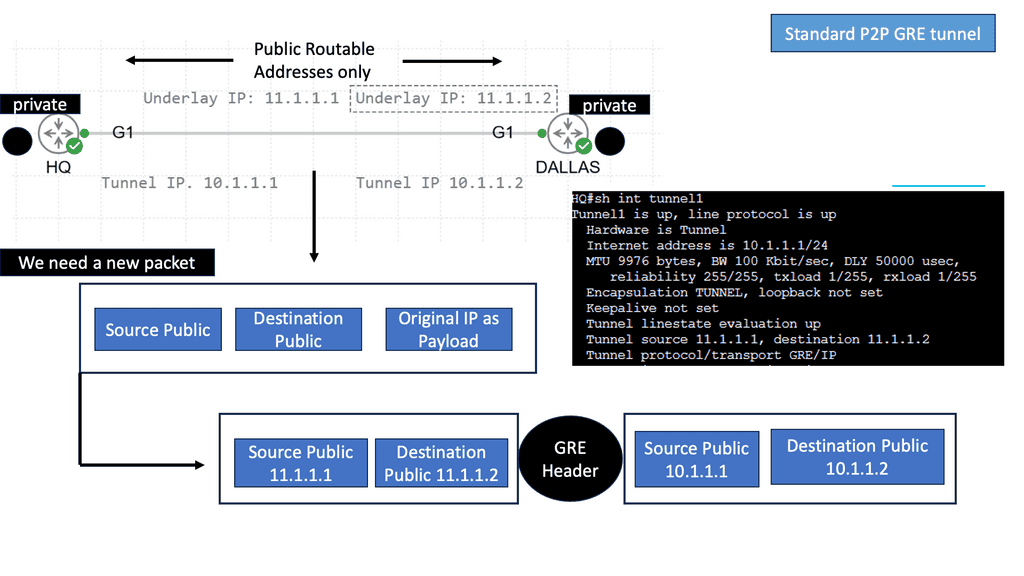

In the following video, we will start with the core block of DMVPN, GRE. Generic Routing Encapsulation (GRE) is a tunneling protocol developed by Cisco Systems that can encapsulate a wide variety of network layer protocols inside virtual point-to-point links or point-to-multipoint links over an Internet Protocol network.

We will then add the DMVPN configuration parameters. Depending on the DMVPN phase you want to implement, DMVPN can be enabled with just a few commands. Of course, the underlay must already be in place.

As you know, DMVPN operates as an overlay that sits on top of an existing underlay network. This demonstration goes through DMVPN Phase 1, which was the starting point of DMVPN, and touches on DMVPN Phase 3. We will look at the various DMVPN and NHRP configuration parameters along with the show commands.

The DMVPN Phases

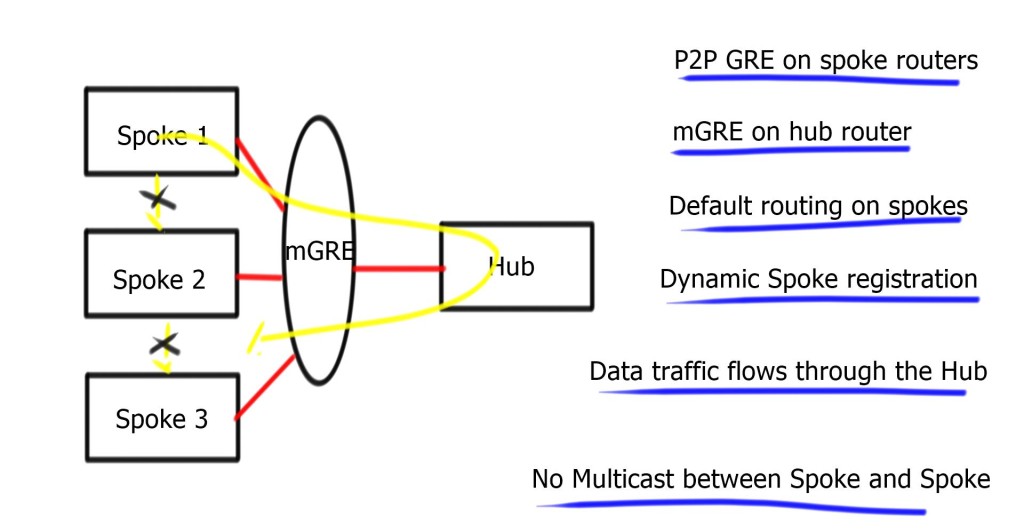

DMVPN Phase 1

- Phase 1 consists of mGRE on the hub and point-to-point GRE tunnels on the spoke.

The hub can reach any spoke over the tunnel interface, but spokes can only communicate through the hub; there is no direct spoke-to-spoke communication. A spoke only needs to reach the hub, so a host route to the hub is required. This is perfect for a default route design from the hub. You can design with any routing protocol, as long as you set the next hop to the hub device.

Multicast (the routing protocol control plane) is exchanged between the hub and the spokes, not spoke-to-spoke.

On the spokes, configure TCP MSS adjustment to help in environments where Path MTU Discovery is broken. The MSS must be 40 bytes lower than the IP MTU (a 20-byte IP header plus a 20-byte TCP header): ip mtu 1400 and ip tcp adjust-mss 1360. The router rewrites the maximum segment size option in TCP SYN packets, so even if Path MTU Discovery does not work, TCP sessions are at least unaffected.
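The arithmetic behind these numbers can be checked with a short sketch. It assumes IPv4 without IP or TCP options and uses the GRE header sizes from RFC 2784 and RFC 2890; the IPsec overhead is left as a parameter because it depends on the transform set, so any value you pass for it is an assumption.

```python
IPV4_HEADER = 20   # bytes, no IP options
TCP_HEADER = 20    # bytes, no TCP options
GRE_HEADER = 4     # basic GRE header (RFC 2784)
GRE_KEY = 4        # optional key field (RFC 2890)


def tcp_mss(ip_mtu: int) -> int:
    """Largest TCP payload that fits in one IP packet of ip_mtu bytes."""
    return ip_mtu - IPV4_HEADER - TCP_HEADER


def gre_payload_mtu(physical_mtu: int, with_key: bool = False,
                    ipsec_overhead: int = 0) -> int:
    """Room left for the inner IP packet after the outer IPv4 + GRE headers.
    ipsec_overhead is an assumption: it depends on the transform set."""
    outer = IPV4_HEADER + GRE_HEADER + (GRE_KEY if with_key else 0)
    return physical_mtu - outer - ipsec_overhead


print(tcp_mss(1400))                         # 1360, matching ip tcp adjust-mss 1360
print(gre_payload_mtu(1500))                 # 1476
print(gre_payload_mtu(1500, with_key=True))  # 1472
```

Plain GRE alone would allow an inner MTU of 1476 bytes; setting ip mtu 1400 leaves extra headroom for the IPsec encapsulation.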

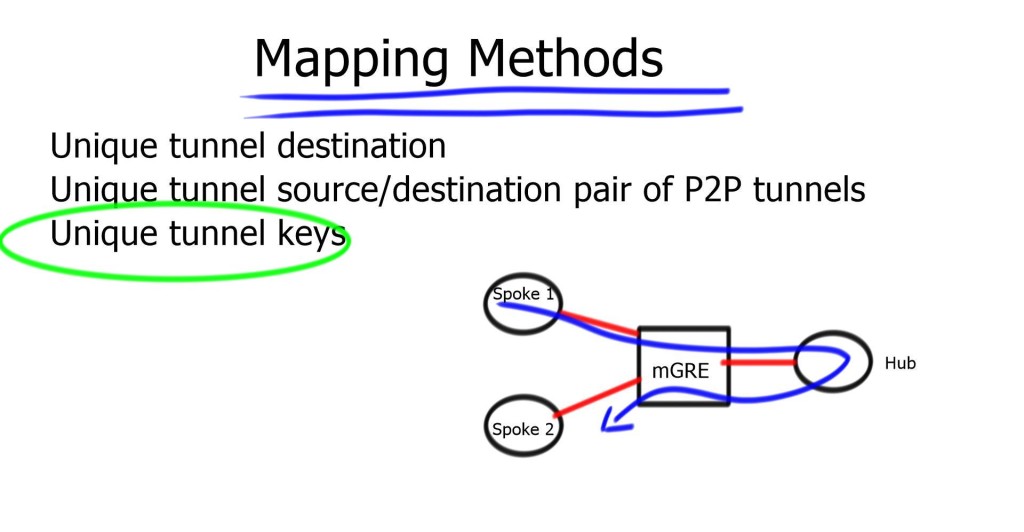

- A key point: Tunnel keys

Tunnel keys are optional for hubs with a single tunnel interface. They can be used for parallel tunnels, usually in conjunction with VRF-lite designs. With two tunnels between the hub and a spoke, the hub cannot determine which tunnel an incoming packet belongs to based on the source or destination IP address alone. Tunnel keys identify tunnels and help map incoming GRE packets to multiple tunnel interfaces.
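A conceptual sketch of key-based demultiplexing, assuming the GRE key extension from RFC 2890 (K bit 0x2000, 32-bit key after the base header). The tunnel names and outer addresses are hypothetical, and the lookup table is a model of the idea, not how IOS stores tunnel state.

```python
import struct
from typing import Dict, Optional, Tuple

GRE_FLAG_CHECKSUM = 0x8000  # C bit
GRE_FLAG_KEY = 0x2000       # K bit (RFC 2890)
PROTO_IPV4 = 0x0800


def build_gre_header(key: Optional[int] = None, proto: int = PROTO_IPV4) -> bytes:
    flags = GRE_FLAG_KEY if key is not None else 0
    header = struct.pack("!HH", flags, proto)
    if key is not None:
        header += struct.pack("!I", key)
    return header


def parse_gre_key(header: bytes) -> Optional[int]:
    flags, _proto = struct.unpack_from("!HH", header, 0)
    if not flags & GRE_FLAG_KEY:
        return None
    offset = 4
    if flags & GRE_FLAG_CHECKSUM:
        offset += 4  # checksum + reserved field sits before the key
    (key,) = struct.unpack_from("!I", header, offset)
    return key


# Hypothetical hub: two parallel tunnels with identical outer addresses.
TUNNELS: Dict[Tuple[str, str, Optional[int]], str] = {
    ("203.0.113.31", "198.51.100.11", 100): "Tunnel100",
    ("203.0.113.31", "198.51.100.11", 200): "Tunnel200",
}


def select_tunnel(src: str, dst: str, gre_header: bytes) -> Optional[str]:
    """Map an incoming GRE packet to a tunnel by (source, destination, key)."""
    return TUNNELS.get((src, dst, parse_gre_key(gre_header)))


print(select_tunnel("203.0.113.31", "198.51.100.11", build_gre_header(200)))  # Tunnel200
print(select_tunnel("203.0.113.31", "198.51.100.11", build_gre_header()))     # None
```

Without a key, the source and destination pair is identical for both tunnels, so there is nothing left to tell them apart.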

Tunnel keys on the 6500 and 7600: The hardware cannot use tunnel keys because it cannot look that deep into the packet. The CPU switches all incoming traffic, so performance drops by a factor of 100. To overcome this, use a different tunnel source for each parallel tunnel. If you have a static configuration and the network is stable, you could use a “hold-time” and “registration timeout” measured in hours rather than the 60-second default.

In carrier Ethernet and cable networks, the spoke IP address is assigned by DHCP and can change regularly. Also, in xDSL environments, PPPoE sessions can be cleared, and spokes get a new IP address. Therefore, non-unique NHRP registration works well here.
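A simplified model of why unique registrations cause trouble when the spoke address changes. It assumes an NHS table keyed by tunnel IP that refuses to overwrite an entry registered with the unique flag while it is still valid; entry expiry (the hold time) is not modeled, and the addresses are hypothetical.

```python
from typing import Dict, Tuple


class NhsRegistrations:
    """Simplified NHS registration table:
    tunnel IP -> (NBMA IP, registered-with-unique-flag)."""

    def __init__(self) -> None:
        self.entries: Dict[str, Tuple[str, bool]] = {}

    def register(self, tunnel_ip: str, nbma_ip: str, unique: bool = True) -> bool:
        existing = self.entries.get(tunnel_ip)
        if existing is not None:
            old_nbma, old_unique = existing
            if old_nbma != nbma_ip and old_unique:
                return False  # rejected until the old entry expires (not modeled)
        self.entries[tunnel_ip] = (nbma_ip, unique)
        return True


nhs = NhsRegistrations()
nhs.register("192.168.100.31", "203.0.113.10")         # unique (default)
print(nhs.register("192.168.100.31", "203.0.113.99"))  # False: new DHCP address rejected

nhs.register("192.168.100.41", "203.0.113.20", unique=False)
print(nhs.register("192.168.100.41", "203.0.113.77", unique=False))  # True: mapping updated
```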

Routing Protocol

Routing for Phase 1 is simple. Summarization and default routing at the hub are allowed. The hub always sets itself as the next hop for routes advertised to the spokes. Because every spoke must first communicate with the hub anyway, sending the spokes all the routing information makes no sense; send them a default route instead.

Be careful with recursive routing: a spoke can sometimes advertise its physical address over the tunnel. The hub then attempts to send DMVPN packets to the spoke via the tunnel itself, resulting in tunnel flaps.

DMVPN phase 1 OSPF routing

The recommended design uses a routing protocol other than OSPF over DMVPN, but you can extend the OSPF domain by placing the DMVPN network in a separate OSPF area. It is possible to have one big area, but with a large number of spokes, try to minimize the topology information the spokes have to process.

In a redundant setup, a spoke runs two tunnels to redundant hubs, i.e., Tunnel 1 to Hub 1 and Tunnel 2 to Hub 2. Design the tunnel interfaces to be in the same non-backbone area. Placing them in separate areas causes the spoke to become an Area Border Router (ABR). Every OSPF ABR must have a link to Area 0, which results in complex OSPF virtual-link configuration and additional, unnecessary Shortest Path First (SPF) runs.

Make sure the SPF algorithm does not consume too many spoke resources. If the spoke is a high-end router with a powerful CPU, you need not worry about SPF running on it. Usually, however, spokes are low-end routers, and maintaining efficient resource levels is critical. Consider designing the DMVPN area as a stub or totally stubby area. This design prevents changes (for example, prefix additions) in the non-DMVPN part of the network from causing full or partial SPF runs.

Low-end spoke routers can handle 50 routers in a single OSPF area.

Configure the OSPF point-to-multipoint network type: it is mandatory on the hub and recommended on the spokes. GRE tunnels on the spokes use the OSPF point-to-point network type by default, and the timers need to match for the OSPF adjacency to come up.

OSPF is hierarchical by design and does not scale well over a flat hub-and-spoke DMVPN. OSPF over DMVPN is fine if you have relatively few spoke sites, i.e., below 100.

DMVPN phase 1 EIGRP routing

On the hub, disable split horizon and perform summarization. Then, deploy EIGRP leak maps for redundant remote sites: where two routers at a remote site connect to the DMVPN, leak maps specify which routes can leak to each redundant spoke.

Deploy spokes as stub routers. Without stub routing, whenever a change occurs (for example, a prefix is lost), the hub will query all spokes for path information.

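It is essential to specify the interface bandwidth, as EIGRP uses it both in its metric calculation and to pace its own packets on the interface.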

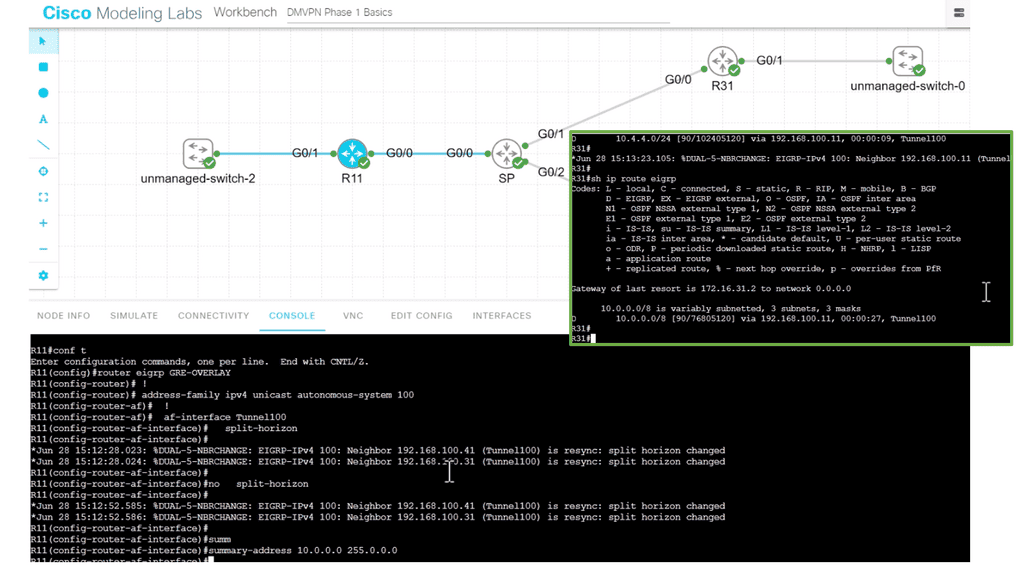

- A key point: Lab guide with DMVPN phase 1 EIGRP.

In the following lab guide, I show how to turn split horizon on and off at the hub site, R11. When split horizon is on, the spokes only see the routes behind R11 (in this case, only one route); they do not see routes from the other spokes. In addition, I have performed summarization at the hub site. Notice how the spokes only see the summary route.

Turning split horizon on together with summarization does not affect spoke reachability, because the hub advertises the summary route. So, if you are performing summarization at the hub site, R11, you can leave split horizon turned on.
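A simplified model of what the hub advertises out its tunnel in the lab described above. The prefixes are hypothetical (the lab addressing is only shown in the screenshots), and the model only captures two rules: split horizon suppresses routes learned on the tunnel, and a configured summary replaces its component routes and is advertised while at least one component exists.

```python
import ipaddress
from typing import Dict, Optional, Set

# Hypothetical hub (R11) routing table: prefix -> interface it was learned on.
HUB_TABLE: Dict[str, str] = {
    "10.1.11.0/24": "LAN",      # behind the hub itself
    "10.1.31.0/24": "Tunnel0",  # learned from spoke R31
    "10.1.41.0/24": "Tunnel0",  # learned from spoke R41
}


def advertised_on_tunnel(table: Dict[str, str], split_horizon: bool,
                         summary: Optional[str] = None) -> Set[str]:
    """Routes the hub would advertise out Tunnel0 toward the spokes."""
    summary_net = ipaddress.ip_network(summary) if summary else None
    adverts: Set[str] = set()
    has_component = False
    for prefix, learned_on in table.items():
        net = ipaddress.ip_network(prefix)
        if summary_net is not None and net.subnet_of(summary_net):
            has_component = True
            continue  # component route suppressed by the summary
        if split_horizon and learned_on == "Tunnel0":
            continue  # not advertised back out the interface it was learned on
        adverts.add(prefix)
    if summary_net is not None and has_component:
        adverts.add(str(summary_net))  # the summary is originated by the hub itself
    return adverts


print(advertised_on_tunnel(HUB_TABLE, split_horizon=True))
# {'10.1.11.0/24'}
print(advertised_on_tunnel(HUB_TABLE, split_horizon=True, summary="10.1.0.0/16"))
# {'10.1.0.0/16'}
```

Because the summary is a locally originated route, split horizon never blocks it, which is why reachability survives with split horizon left on.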

DMVPN phase 1 BGP routing

EBGP is recommended. The hub must set next-hop-self on all BGP neighbors. To save resources and configuration steps, you can use policy templates. Avoid unnecessary routing updates to the spokes by filtering BGP updates or by advertising only a default route to the spoke devices.

In recent IOS releases, we have dynamic BGP neighbors. Configure the range on the hub with the command bgp listen range 192.168.0.0/24 peer-group spokes. Inbound BGP sessions are accepted if the source IP address is within the specified range of 192.168.0.0/24.
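The acceptance rule is simply a prefix-membership check on the session's source address, as this small sketch shows. The range and peer-group name come from the command above; the sample source addresses are hypothetical, and the sketch ignores other checks a real BGP speaker performs (session limits, authentication, and so on).

```python
import ipaddress
from typing import Dict, Optional

# Mirrors: bgp listen range 192.168.0.0/24 peer-group spokes
LISTEN_RANGES: Dict[ipaddress.IPv4Network, str] = {
    ipaddress.IPv4Network("192.168.0.0/24"): "spokes",
}


def accept_session(source_ip: str) -> Optional[str]:
    """Return the peer group for an inbound BGP session, or None to reject it."""
    addr = ipaddress.IPv4Address(source_ip)
    for network, peer_group in LISTEN_RANGES.items():
        if addr in network:
            return peer_group
    return None


print(accept_session("192.168.0.31"))  # spokes
print(accept_session("10.0.0.1"))      # None
```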

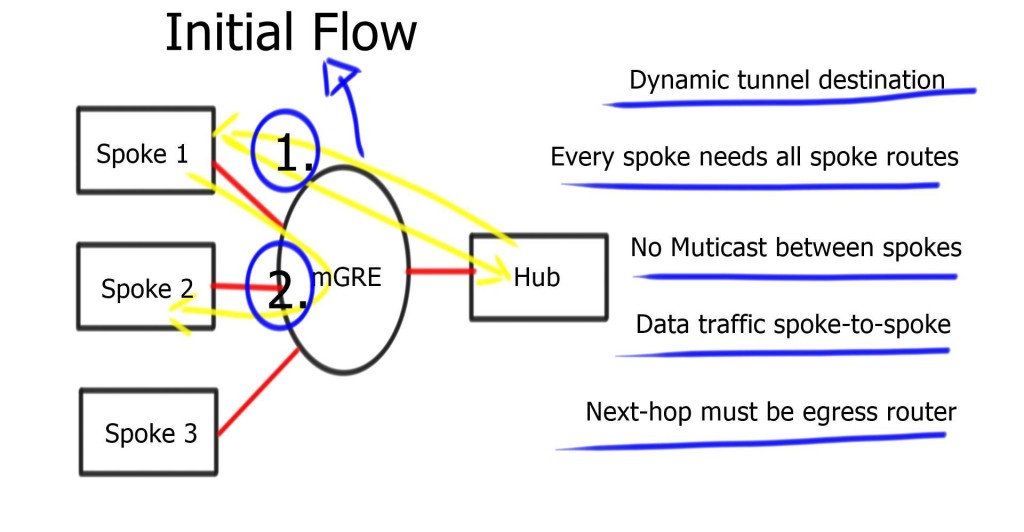

DMVPN Phase 2

Phase 2 uses mGRE on both the hub and the spokes, permitting on-demand spoke-to-spoke tunnels. No changes are needed on the hub router; on the spokes, change the tunnel mode to multipoint GRE with tunnel mode gre multipoint. Tunnel keys are mandatory when multiple tunnels share the same source interface.

Multicast traffic still flows between the hub and spoke only, but data traffic can now flow from spoke to spoke.

DMVPN Packet Flows and Routing

DMVPN phase 2 packet flow

- For the initial packet flow, even though the routing table displays the other spoke as the next hop, all packets are sent to the hub router; the shortcut is not yet established.

- The spokes send NHRP requests to the hub, asking for the IP address of the other spokes.

- The reply is received and stored in the NHRP dynamic cache on the spoke router.

- The spokes then attempt to set up IKE and IPsec sessions with the other spokes directly.

- Once IKE and IPsec become operational, the NHRP entry is also operational, and the CEF table is modified so that spokes can send traffic directly to each other.

The process is unidirectional: reverse traffic from the other spoke triggers the same mechanism. However, the spokes do not establish two unidirectional IPsec sessions; they establish only one.
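The packet flow above can be modeled as a toy simulation. The hub NBMA address comes from the lab (172.16.11.1); the spoke tunnel and NBMA addresses are hypothetical. The sketch collapses the asynchronous NHRP exchange into a synchronous call and assumes IKE/IPsec to the other spoke comes up immediately, so it only shows the "first packet via the hub, later packets direct" behavior.

```python
from typing import Dict, Optional


class Hub:
    """NHS: holds tunnel IP -> NBMA IP mappings learned from spoke registrations."""

    def __init__(self, nbma_ip: str) -> None:
        self.nbma_ip = nbma_ip
        self.registrations: Dict[str, str] = {}

    def resolve(self, tunnel_ip: str) -> Optional[str]:
        return self.registrations.get(tunnel_ip)


class Spoke:
    def __init__(self, tunnel_ip: str, nbma_ip: str, hub: Hub) -> None:
        self.tunnel_ip = tunnel_ip
        self.hub = hub
        self.nhrp_cache: Dict[str, str] = {}
        hub.registrations[tunnel_ip] = nbma_ip  # registration with the NHS

    def send(self, next_hop: str) -> str:
        """Return the NBMA address this packet is actually sent to."""
        if next_hop in self.nhrp_cache:
            return self.nhrp_cache[next_hop]  # shortcut in place: direct to the spoke
        # No shortcut yet: forward via the hub and ask the NHS to resolve.
        reply = self.hub.resolve(next_hop)
        if reply is not None:
            # Assumption: IKE/IPsec to the other spoke comes up immediately.
            self.nhrp_cache[next_hop] = reply
        return self.hub.nbma_ip


hub = Hub("172.16.11.1")
r31 = Spoke("192.168.100.31", "172.16.31.1", hub)
r41 = Spoke("192.168.100.41", "172.16.41.1", hub)
print(r31.send("192.168.100.41"))  # 172.16.11.1 (first packet via the hub)
print(r31.send("192.168.100.41"))  # 172.16.41.1 (direct spoke-to-spoke)
```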

There are more routing protocol restrictions with Phase 2 than with DMVPN Phases 1 and 3. For example, summarization and default routing are NOT allowed at the hub, and the hub always preserves the next hop on spokes. Spokes need specific routes to each other’s networks.

DMVPN phase 2 OSPF routing

The OSPF broadcast network type is recommended. Ensure the hub is the Designated Router (DR): you will have a disaster if a spoke becomes the DR, because spokes do not form adjacencies with each other. For that reason, set the OSPF priority on the spokes to zero.
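A simplified sketch of the DR election rule that makes priority zero on the spokes necessary: priority 0 is ineligible, the highest priority wins, and ties are broken by the highest router ID. The router IDs are hypothetical, and the sketch deliberately ignores BDR election and the fact that a real OSPF DR is not preempted once elected.

```python
import ipaddress
from typing import List, Optional, Tuple

Router = Tuple[str, int, str]  # (name, OSPF priority, router ID)


def elect_dr(routers: List[Router]) -> Optional[str]:
    """Simplified DR election: priority 0 is ineligible; highest priority wins,
    ties broken by highest router ID. Ignores BDR election and non-preemption."""
    eligible = [r for r in routers if r[1] > 0]
    if not eligible:
        return None
    best = max(eligible, key=lambda r: (r[1], int(ipaddress.IPv4Address(r[2]))))
    return best[0]


default_priorities = [("R11", 1, "1.1.1.11"), ("R31", 1, "3.3.3.31"), ("R41", 1, "4.4.4.41")]
spokes_at_zero = [("R11", 1, "1.1.1.11"), ("R31", 0, "3.3.3.31"), ("R41", 0, "4.4.4.41")]
print(elect_dr(default_priorities))  # R41: a spoke wins on router ID
print(elect_dr(spokes_at_zero))      # R11: only the hub is eligible
```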

Because of the configured static or dynamic NHRP multicast maps, OSPF multicast packets are delivered to the hub only, so OSPF neighbor relationships form only between the hub and the spokes.

The spoke router needs all routes from all other spokes, so the hub cannot use default routing.

DMVPN phase 2 EIGRP routing

No changes are needed on the spokes. On the hub only, add no ip next-hop-self eigrp (followed by the EIGRP AS number) and disable EIGRP split horizon so that updates propagate between spokes.

Do not use summarization: if you configure summarization, the specific routes will not arrive at the other spokes, resulting in spoke-to-spoke traffic going through the hub.

DMVPN phase 2 BGP routing

Remove next-hop-self on the hub routers so that the originating spoke is preserved as the next hop.

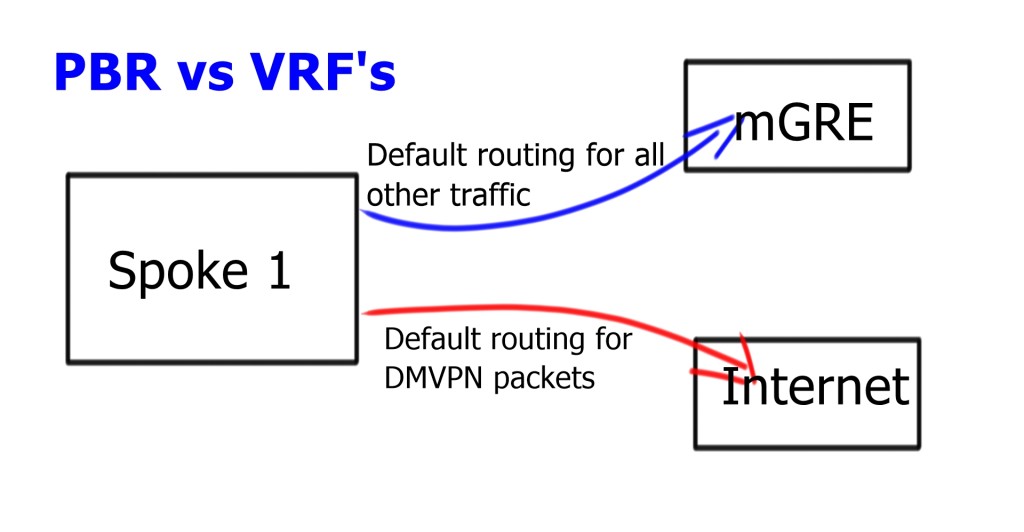

Split default routing

Split default routing may be used if you need default routing toward the hub, for example, in a central firewall design where you want all traffic to pass through the firewall before proceeding to the Internet. The problem is that Phase 2 allows spoke-to-spoke traffic: even though the data traffic follows a default route pointing to the hub, the spoke also needs a default route pointing to the Internet to reach the other spokes’ tunnel endpoints.

This requires two routing perspectives: one for GRE and IPsec packets and another for data traversing the enterprise WAN. It is possible to configure Policy Based Routing (PBR), but only as a temporary measure, as PBR can run into bugs and is difficult to troubleshoot. Split routing with VRFs is much cleaner. The routing tables for different VRFs may each contain a default route, and routing in one VRF does not affect routing in another VRF.
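A minimal sketch of the two routing perspectives: two independent tables, each with its own default route, queried with longest-prefix match. The VRF names, next hops, and addresses are hypothetical; on IOS the outer GRE/IPsec lookup is typically moved into the transport VRF with the tunnel vrf command, which this sketch does not attempt to reproduce.

```python
import ipaddress
from typing import List, Optional, Tuple


class Vrf:
    """A routing table with longest-prefix-match lookup."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.routes: List[Tuple[ipaddress.IPv4Network, str]] = []

    def add(self, prefix: str, next_hop: str) -> None:
        self.routes.append((ipaddress.IPv4Network(prefix), next_hop))

    def lookup(self, destination: str) -> Optional[str]:
        addr = ipaddress.IPv4Address(destination)
        matches = [r for r in self.routes if addr in r[0]]
        if not matches:
            return None
        return max(matches, key=lambda r: r[0].prefixlen)[1]


# Enterprise data: default route via the hub (central firewall design).
enterprise = Vrf("global")
enterprise.add("0.0.0.0/0", "Tunnel0 -> hub")
enterprise.add("10.1.41.0/24", "Tunnel0 -> spoke R41")

# Transport: GRE/IPsec outer packets use the ISP default route.
internet = Vrf("INTERNET")
internet.add("0.0.0.0/0", "ISP gateway 203.0.113.1")

print(enterprise.lookup("192.0.2.80"))     # Tunnel0 -> hub
print(enterprise.lookup("10.1.41.10"))     # Tunnel0 -> spoke R41
print(internet.lookup("198.51.100.41"))    # ISP gateway 203.0.113.1
```

The two default routes never compete, because each lookup is confined to its own table.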

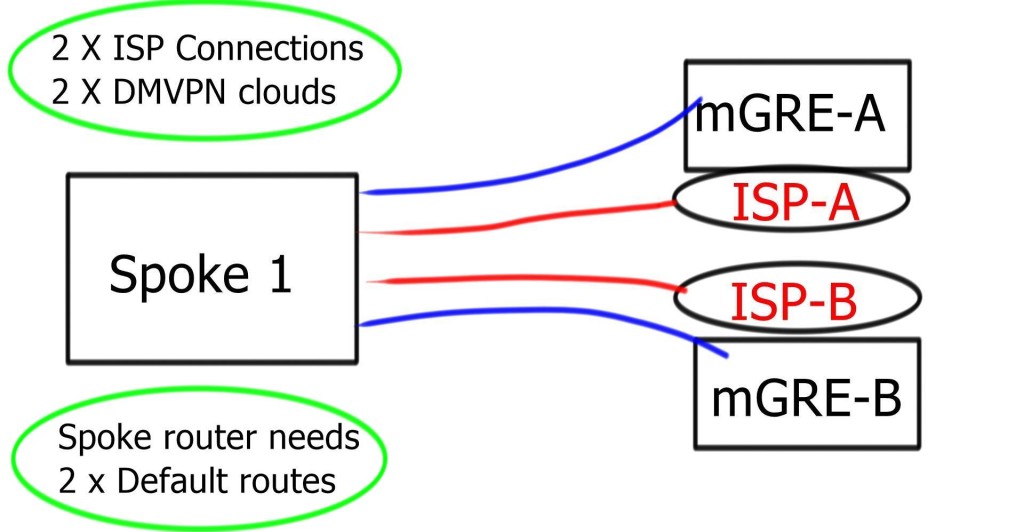

Multi-homed remote site

To complicate matters, a multi-homed spoke needs two default routes (0.0.0.0/0), one for each DMVPN hub network. Now, we have two default routes in the same INTERNET VRF. We need a mechanism to tell us which one to use and for which DMVPN cloud.

Even if the tunnel source for mGRE-B is the ISP-B address, the routing table could send the traffic to ISP-A. ISP-A may perform uRPF (Unicast Reverse Path Forwarding) to prevent address spoofing, which results in packet drops.

The problem is that the outgoing link (ISP-A or ISP-B) selection depends on Cisco Express Forwarding (CEF) hashing, which you cannot influence. The outgoing packet must therefore use the correct outgoing link based on its source IP address, not its destination IP address. The solution is tunnel route-via, which is effectively policy routing for GRE. To make this work with IPsec, configure two VRFs, one for each ISP.
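A conceptual contrast between hash-based and source-based egress selection. The ISP addresses are hypothetical, crc32 is only a stand-in for per-flow hashing (it is not the CEF algorithm), and the uRPF check is simplified to "the source must be the address that ISP assigned".

```python
import zlib
from typing import Dict

# Hypothetical local addresses assigned by each ISP.
UPLINKS: Dict[str, str] = {"ISP-A": "203.0.113.2", "ISP-B": "198.51.100.2"}


def hash_choice(src: str, dst: str) -> str:
    """Stand-in for per-flow hashing across two equal default routes.
    (crc32 is an illustration only, not the real CEF algorithm.)"""
    links = sorted(UPLINKS)
    return links[zlib.crc32(f"{src}->{dst}".encode()) % len(links)]


def route_via_choice(src: str, dst: str) -> str:
    """Source-based choice: leave through the ISP that owns the tunnel source."""
    for isp, local_ip in UPLINKS.items():
        if local_ip == src:
            return isp
    return hash_choice(src, dst)


def passes_isp_urpf(isp: str, src: str) -> bool:
    """Simplified uRPF at the ISP edge: the source must be that ISP's address."""
    return UPLINKS[isp] == src


src_b = UPLINKS["ISP-B"]  # mGRE-B uses the ISP-B address as its tunnel source
for dst in ("192.0.2.10", "192.0.2.11", "192.0.2.12"):
    via_hash = hash_choice(src_b, dst)
    via_source = route_via_choice(src_b, dst)
    print(dst, via_hash, passes_isp_urpf(via_hash, src_b), via_source)
```

Whenever the hash lands on ISP-A, the uRPF check fails; the source-based choice always stays on ISP-B.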

DMVPN Phase 3

Phase 3 uses mGRE on both the hub and the spokes and allows on-demand spoke-to-spoke tunnels. The difference is that when the hub receives traffic from one spoke destined for another, it can send an NHRP redirect telling the originating spoke to update its routing table.

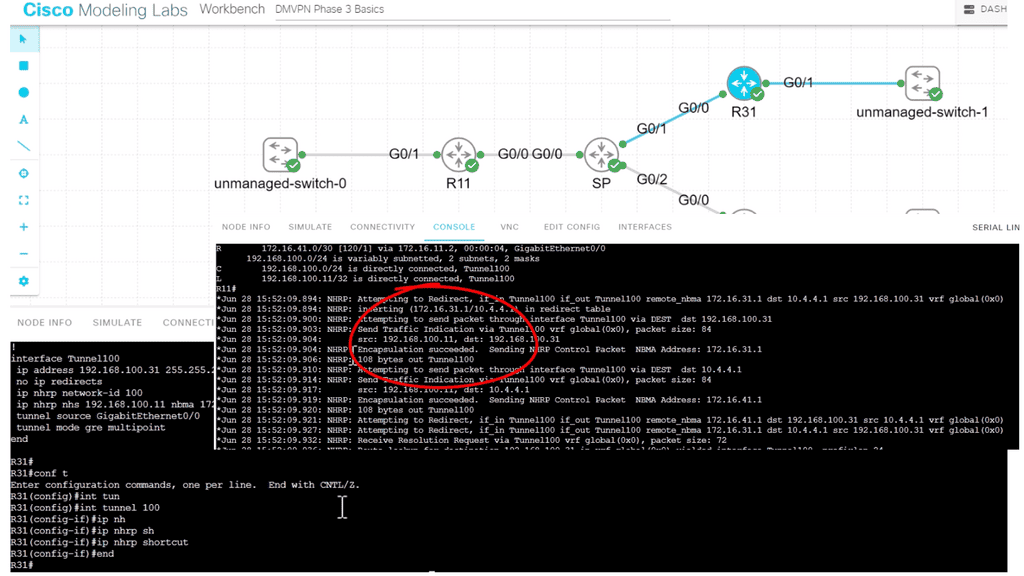

- A key point: Lab on DMVPN Phase 3 configuration

The following lab configuration shows an example of DMVPN Phase 3. The command tunnel mode gre multipoint is configured on both the hub and the spokes. This contrasts with DMVPN Phase 1, where we must explicitly configure the tunnel destination on the spokes. Notice the output of show ip nhrp: we have two spokes, dynamically learned via NHRP registration, with the flags “registered nhop.” However, this is only part of the picture for DMVPN Phase 3. We need additional configuration to enable dynamic spoke-to-spoke tunnels, which is discussed next.

Phase 3 redirect features

The Phase 3 DMVPN configuration for the hub router adds the interface command ip nhrp redirect. This command checks the flow of packets on the tunnel interface and sends a redirect message to the source spoke router when it detects packets hairpinning out of the DMVPN cloud.

Hairpinning means traffic is received on and sent out of interfaces in the same cloud (identified by the NHRP network ID); for instance, packets come in and go out of the same tunnel interface. The Phase 3 DMVPN configuration for spoke routers uses the mGRE tunnel interface with the command ip nhrp shortcut.

Note: Placing ip nhrp shortcut and ip nhrp redirect on the same DMVPN tunnel interface has no adverse effects.

Phase 3 allows spoke-to-spoke communication even with default routing: although the routing table points to the hub, traffic flows directly between the spokes once the shortcut is in place. There are no limits on routing.

“Traffic-driven redirect”: the hub notices that a spoke is sending it data destined for another spoke and sends a redirect back, telling the spoke to use that other spoke. The redirect informs the sender of a better path. The spoke then installs this shortcut and initiates IPsec with the other spoke. Use ip nhrp redirect on hub routers and ip nhrp shortcut on spoke routers.
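A toy model of the redirect and shortcut roles. The interface names, NHRP network IDs, prefixes, and R41's NBMA address are hypothetical, and the NHRP resolution exchange that follows a redirect is collapsed into a single assumed result. It shows the key Phase 3 property: the default route to the hub stays, but a more specific shortcut wins the lookup.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical NHRP network IDs per hub interface (None = not a DMVPN interface).
NHRP_NETWORK_ID: Dict[str, Optional[int]] = {"Tunnel0": 1, "GigabitEthernet0/1": None}


@dataclass
class Packet:
    src: str
    dst: str
    in_interface: str


def hub_redirect(packet: Packet, out_interface: str) -> Optional[dict]:
    """ip nhrp redirect, simplified: if the packet hairpins (in and out of
    the same NHRP network), tell the sender a better path exists."""
    in_id = NHRP_NETWORK_ID.get(packet.in_interface)
    if in_id is not None and in_id == NHRP_NETWORK_ID.get(out_interface):
        return {"type": "traffic-indication", "to": packet.src, "about": packet.dst}
    return None


class SpokeRib:
    """ip nhrp shortcut, simplified: install a more specific route."""

    def __init__(self) -> None:
        self.routes = {ipaddress.IPv4Network("0.0.0.0/0"): "hub"}

    def install_shortcut(self, prefix: str, nbma_ip: str) -> None:
        self.routes[ipaddress.IPv4Network(prefix)] = nbma_ip

    def lookup(self, destination: str) -> str:
        addr = ipaddress.IPv4Address(destination)
        best = max((n for n in self.routes if addr in n), key=lambda n: n.prefixlen)
        return self.routes[best]


r31 = SpokeRib()
print(r31.lookup("10.1.41.10"))  # hub (only the default route exists)
redirect = hub_redirect(Packet("10.1.31.10", "10.1.41.10", "Tunnel0"), "Tunnel0")
if redirect is not None:
    # Assumption: resolution toward R41 returns its LAN prefix and NBMA address.
    r31.install_shortcut("10.1.41.0/24", "172.16.41.1")
print(r31.lookup("10.1.41.10"))  # 172.16.41.1 (direct; the default still points to the hub)
```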

There are no restrictions on the routing protocol or on which routes the spokes receive. Summarization and default routing are allowed. The next hop is always the hub.

- A key point: Lab guide on DMVPN Phase 3

In the following lab guide, I have the command ip nhrp shortcut on the spoke, R31, and the redirect command on the hub, R11. We don’t see the actual command on the hub, but we do see that R11 is sending a “Traffic Indication” message to the spokes. This is sent when spoke-to-spoke traffic is initiated, informing the spokes that a better, more optimal path exists that does not go through the hub.


How-to: Fabric Extenders & VPC

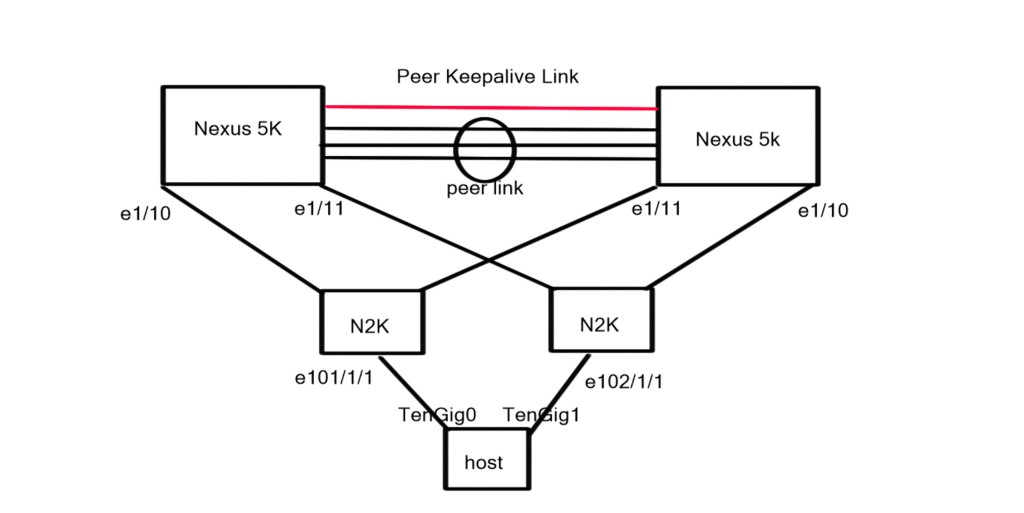

Topology Diagram

The topology diagram depicts two Nexus 5Ks acting as parent switches with physical connections to two downstream Nexus 2Ks (FEXs) acting as the 10G physical termination points for the connected servers.

Part 1. Connecting the FEX to the Parent Switch:

The FEX and the parent switch use periodic Satellite Discovery Protocol (SDP) messages to discover and register with one another.

When you initially log on to the Nexus 5K, you can see that the OS does not recognise the FEX, even though two FEXs are cabled correctly to the parent switch. Because the FEX is recognised as a remote line card, you would expect to see it in the output of the “show module” command.

    N5K3# sh module
    Mod Ports  Module-Type                       Model                  Status
    --- -----  --------------------------------  ---------------------- ------------
    1    40    40x10GE/Supervisor                N5K-C5020P-BF-SUP      active *
    2    8     8x1/2/4G FC Module                N5K-M1008              ok

    Mod  Sw              Hw      World-Wide-Name(s) (WWN)
    ---  --------------  ------  --------------------------------------------------
    1    5.1(3)N2(1c)    1.3     --
    2    5.1(3)N2(1c)    1.0     93:59:41:08:5a:0c:08:08 to 00:00:00:00:00:00:00:00

    Mod  MAC-Address(es)                         Serial-Num
    ---  --------------------------------------  ----------
    1    0005.9b1e.82c8 to 0005.9b1e.82ef        JAF1419BLMA
    2    0005.9b1e.82f0 to 0005.9b1e.82f7        JAF1411AQBJ

After we issue the “feature fex” command, we observe the FEX sending SDP messages to the parent switch (RX), but we don’t see the parent switch sending SDP messages to the FEX (TX).

Notice that in the output below there are only “fex: Sdp-Rx” messages.

    N5K3# debug fex pkt-trace
    N5K3# 2014 Aug 21 09:51:57.410701 fex: Sdp-Rx: Interface: Eth1/11, Fex Id: 0, Ctrl Vntag: -1, Ctrl Vlan: 1
    2014 Aug 21 09:51:57.410729 fex: Sdp-Rx: Refresh Intvl: 3000ms, Uid: 0x4000ff2929f0, device: Fex, Remote link: 0x20000080
    2014 Aug 21 09:51:57.410742 fex: Sdp-Rx: Vendor: Cisco Systems Model: N2K-C2232PP-10GE Serial: FOC17100NHX
    2014 Aug 21 09:51:57.821776 fex: Sdp-Rx: Interface: Eth1/10, Fex Id: 0, Ctrl Vntag: -1, Ctrl Vlan: 1
    2014 Aug 21 09:51:57.821804 fex: Sdp-Rx: Refresh Intvl: 3000ms, Uid: 0x2ff2929f0, device: Fex, Remote link: 0x20000080
    2014 Aug 21 09:51:57.821817 fex: Sdp-Rx: Vendor: Cisco Systems Model: N2K-C2232PP-10GE Serial: FOC17100NHU

The FEX appears as “Discovered”, but no FEX host interfaces appear when you issue “show interface brief”.

Command: show fex [chassis_id [detail]]: Displays information about a specific Fabric Extender chassis ID.

Command: show interface brief: Display interface information and connection status for each interface.

    N5K3# sh fex
      FEX         FEX           FEX                       FEX
    Number    Description      State            Model            Serial
    ------------------------------------------------------------------------
    ---       --------       Discovered      N2K-C2232PP-10GE   SSI16510AWF
    ---       --------       Discovered      N2K-C2232PP-10GE   SSI165204YC
    N5K3#
    N5K3# show interface brief
    --------------------------------------------------------------------------------
    Ethernet      VLAN   Type Mode   Status  Reason                   Speed     Port
    Interface                                                                   Ch #
    --------------------------------------------------------------------------------
    Eth1/1        1      eth  access down    SFP validation failed    10G(D) --
    Eth1/2        1      eth  access down    SFP validation failed    10G(D) --
    Eth1/3        1      eth  access up      none                     10G(D) --
    Eth1/4        1      eth  access up      none                     10G(D) --
    Eth1/5        1      eth  access up      none                     10G(D) --
    Eth1/6        1      eth  access down    Link not connected       10G(D) --
    Eth1/7        1      eth  access down    Link not connected       10G(D) --
    Eth1/8        1      eth  access down    Link not connected       10G(D) --
    Eth1/9        1      eth  access down    Link not connected       10G(D) --
    Eth1/10       1      eth  fabric down    FEX not configured       10G(D) --
    Eth1/11       1      eth  fabric down    FEX not configured       10G(D) --
    Eth1/12       1      eth  access down    Link not connected       10G(D) --
    snippet removed

The fabric interface Ethernet1/10 shows as DOWN with the reason “FEX not configured”.

| N5K3# sh int Ethernet1/10 Ethernet1/10 is down (FEX not configured) Hardware: 1000/10000 Ethernet, address: 0005.9b1e.82d1 (bia 0005.9b1e.82d1) MTU 1500 bytes, BW 10000000 Kbit, DLY 10 usec reliability 255/255, txload 1/255, rxload 1/255 Encapsulation ARPA Port mode is fex-fabric auto-duplex, 10 Gb/s, media type is 10G Beacon is turned off Input flow-control is off, output flow-control is off Rate mode is dedicated Switchport monitor is off EtherType is 0x8100 snippet removed |

To enable the parent switch to discover the FEX, issue “switchport mode fex-fabric” under the connected interface. As you can see, the parent switch is still not sending any SDP messages, but it is discovering the FEX.

The next step is to assign the FEX a logical number under the interface so that we can start configuring the FEX host interfaces. Once this is complete, we run “debug fex pkt-trace” again and see the parent switch both sending (Tx) and receiving (Rx) SDP messages.

Command: “fex associate chassis_id”: Associates a Fabric Extender (FEX) with a fabric interface. To disassociate the Fabric Extender, use the “no” form of this command.

In the “debug fex pkt-trace” output, you can see that the parent switch is now sending Tx SDP messages to the fully discovered FEX.

| N5K3(config)# int Ethernet1/10 N5K3(config-if)# fex associate 101 N5K3# debug fex pkt-trace N5K3# 2014 Aug 21 10:00:33.674605 fex: Sdp-Tx: Interface: Eth1/10, Fex Id: 101, Ctrl Vntag: 0, Ctrl Vlan: 4042 2014 Aug 21 10:00:33.674633 fex: Sdp-Tx: Refresh Intvl: 3000ms, Uid: 0xc0821e9b0500, device: Switch, Remote link: 0x1a009000 2014 Aug 21 10:00:33.674646 fex: Sdp-Tx: Vendor: Model: Serial: ———- 2014 Aug 21 10:00:33.674718 fex: Sdp-Rx: Interface: Eth1/10, Fex Id: 0, Ctrl Vntag: 0, Ctrl Vlan: 4042 2014 Aug 21 10:00:33.674733 fex: Sdp-Rx: Refresh Intvl: 3000ms, Uid: 0x2ff2929f0, device: Fex, Remote link: 0x20000080 2014 Aug 21 10:00:33.674746 fex: Sdp-Rx: Vendor: Cisco Systems Model: N2K-C2232PP-10GE Serial: FOC17100NHU 2014 Aug 21 10:00:33.836774 fex: Sdp-Rx: Interface: Eth1/11, Fex Id: 0, Ctrl Vntag: -1, Ctrl Vlan: 1 2014 Aug 21 10:00:33.836803 fex: Sdp-Rx: Refresh Intvl: 3000ms, Uid: 0x4000ff2929f0, device: Fex, Remote link: 0x20000080 2014 Aug 21 10:00:33.836816 fex: Sdp-Rx: Vendor: Cisco Systems Model: N2K-C2232PP-10GE Serial: FOC17100NHX 2014 Aug 21 10:00:36.678624 fex: Sdp-Tx: Interface: Eth1/10, Fex Id: 101, Ctrl Vntag: 0, Ctrl Vlan: 4042 2014 Aug 21 10:00:36.678664 fex: Sdp-Tx: Refresh Intvl: 3000ms, Uid: 0xc0821e9b0500, device: Switch, Remote snippet removed |

FEX 101 now changes from “DISCOVERED” to “ONLINE”. You may also see an additional FEX, with serial number SSI165204YC, still listed as “DISCOVERED” rather than “ONLINE”. This is because we have not yet explicitly configured it under the other fabric interface (Eth1/11).

| N5K3# sh fex FEX FEX FEX FEX Number Description State Model Serial ———————————————————————— 101 FEX0101 Online N2K-C2232PP-10GE SSI16510AWF — ——– Discovered N2K-C2232PP-10GE SSI165204YC N5K3# N5K3# show module fex 101 FEX Mod Ports Card Type Model Status. — — —– ———————————- —————— ———– 101 1 32 Fabric Extender 32x10GE + 8x10G Module N2K-C2232PP-10GE present FEX Mod Sw Hw World-Wide-Name(s) (WWN) — — ————– —— ———————————————– 101 1 5.1(3)N2(1c) 4.4 — FEX Mod MAC-Address(es) Serial-Num — — ————————————– ———- 101 1 f029.29ff.0200 to f029.29ff.021f SSI16510AWF |
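The “show fex” output now mixes an associated FEX (with a number and description) and an unassociated one (dashes in both columns). The Python sketch below parses those rows so unassociated FEXs can be listed by serial number. `parse_show_fex` and `unassociated_serials` are hypothetical helpers; they assume the five columns are whitespace-separated, as in the sample re-flowed from the output above.

```python
def parse_show_fex(output: str) -> list[dict]:
    """Parse the data rows of 'show fex' (Number, Description, State, Model, Serial)."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # Skip the "FEX FEX FEX FEX" line, the column header and the dashed rule.
        if len(fields) != 5 or fields[0] == "Number":
            continue
        number, description, state, model, serial = fields
        rows.append({
            # An unassociated FEX shows dashes instead of a number/description.
            "number": int(number) if number.isdigit() else None,
            "description": None if set(description) == {"-"} else description,
            "state": state,
            "model": model,
            "serial": serial,
        })
    return rows


def unassociated_serials(rows: list[dict]) -> list[str]:
    """Serial numbers of FEXs that have no 'fex associate' number yet."""
    return [row["serial"] for row in rows if row["number"] is None]


sample = """\
  FEX         FEX           FEX                       FEX
Number    Description      State            Model            Serial
------------------------------------------------------------------------
101        FEX0101                Online    N2K-C2232PP-10GE   SSI16510AWF
---          --------       Discovered      N2K-C2232PP-10GE   SSI165204YC
"""

rows = parse_show_fex(sample)
print([row["number"] for row in rows if row["state"] == "Online"])  # [101]
print(unassociated_serials(rows))  # ['SSI165204YC']
```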

Issuing “show interface brief” now reveals new interfaces: the FEX host interfaces. The output below shows that only one of them, Eth101/1/1, is up, because only one end host (server) is connected to the FEX.

| N5K3# show interface brief ——————————————————————————– Ethernet VLAN Type Mode Status Reason Speed Port Interface Ch # ——————————————————————————– Eth1/1 1 eth access down SFP validation failed 10G(D) — Eth1/2 1 eth access down SFP validation failed 10G(D) — snippet removed ——————————————————————————– Port VRF Status IP Address Speed MTU ——————————————————————————– mgmt0 — up 192.168.0.53 100 1500 ——————————————————————————– Ethernet VLAN Type Mode Status Reason Speed Port Interface Ch # ——————————————————————————– Eth101/1/1 1 eth access up none 10G(D) — Eth101/1/2 1 eth access down SFP not inserted 10G(D) — Eth101/1/3 1 eth access down SFP not inserted 10G(D) — Eth101/1/4 1 eth access down SFP not inserted 10G(D) — Eth101/1/5 1 eth access down SFP not inserted 10G(D) — Eth101/1/6 1 eth access down SFP not inserted 10G(D) — snippet removed |

| N5K3# sh run int eth1/10 interface Ethernet1/10 switchport mode fex-fabric fex associate 101 |

The fabric interfaces do not run a spanning tree instance, while the host interfaces run BPDU guard and BPDU filter by default. The fabric interfaces do not run spanning tree because they act as backplane point-to-point interfaces.

By default, the FEX host interfaces send out a small number of BPDUs at start-up (the output below shows 11 sent and 0 received on Eth101/1/1).

| N5K3# sh spanning-tree interface Ethernet1/10 No spanning tree information available for Ethernet1/10 N5K3# N5K3# N5K3# sh spanning-tree interface Eth101/1/1 Vlan Role Sts Cost Prio.Nbr Type —————- —- — ——— ——– ——————————– VLAN0001 Desg FWD 2 128.1153 Edge P2p N5K3# N5K3# sh spanning-tree interface Eth101/1/1 detail Port 1153 (Ethernet101/1/1) of VLAN0001 is designated forwarding Port path cost 2, Port priority 128, Port Identifier 128.1153 Designated root has priority 32769, address 0005.9b1e.82fc Designated bridge has priority 32769, address 0005.9b1e.82fc Designated port id is 128.1153, designated path cost 0 Timers: message age 0, forward delay 0, hold 0 Number of transitions to forwarding state: 1 The port type is edge Link type is point-to-point by default Bpdu guard is enabled Bpdu filter is enabled by default BPDU: sent 11, received 0 |


Issue the commands below to determine the transceiver type of the fabric ports and which host ports sit behind each fabric interface.

Command: “show interface fex-fabric”: Displays all fabric interfaces and the Fabric Extenders connected to them.

Command: “show fex detail”: Shows detailed information about all FEXs, including recent log messages related to each FEX.

| N5K3# show interface fex-fabric Fabric Fabric Fex FEX Fex Port Port State Uplink Model Serial ————————————————————— 101 Eth1/10 Active 3 N2K-C2232PP-10GE SSI16510AWF — Eth1/11 Discovered 3 N2K-C2232PP-10GE SSI165204YC N5K3# N5K3# N5K3# show interface Ethernet1/10 fex-intf Fabric FEX Interface Interfaces ————————————————— Eth1/10 Eth101/1/1 N5K3# N5K3# show interface Ethernet1/10 transceiver Ethernet1/10 transceiver is present type is SFP-H10GB-CU3M name is CISCO-TYCO part number is 1-2053783-2 revision is N serial number is TED1530B11W nominal bitrate is 10300 MBit/sec Link length supported for copper is 3 m cisco id is — cisco extended id number is 4 N5K3# show fex detail FEX: 101 Description: FEX0101 state: Online FEX version: 5.1(3)N2(1c) [Switch version: 5.1(3)N2(1c)] FEX Interim version: 5.1(3)N2(1c) Switch Interim version: 5.1(3)N2(1c) Extender Serial: SSI16510AWF Extender Model: N2K-C2232PP-10GE, Part No: 73-12533-05 Card Id: 82, Mac Addr: f0:29:29:ff:02:02, Num Macs: 64 Module Sw Gen: 12594 [Switch Sw Gen: 21] post level: complete pinning-mode: static Max-links: 1 Fabric port for control traffic: Eth1/10 FCoE Admin: false FCoE Oper: true FCoE FEX AA Configured: false Fabric interface state: Eth1/10 – Interface Up. State: Active Fex Port State Fabric Port Eth101/1/1 Up Eth1/10 Eth101/1/2 Down None Eth101/1/3 Down None Eth101/1/4 Down None snippet removed Logs: 08/21/2014 10:00:06.107783: Module register received 08/21/2014 10:00:06.109935: Registration response sent 08/21/2014 10:00:06.239466: Module Online S |

Next, we enable the second FEX, which is connected to fabric interface Eth1/11.

| N5K3(config)# int et1/11 N5K3(config-if)# switchport mode fex-fabric N5K3(config-if)# fex associate 102 N5K3(config-if)# end N5K3# sh fex FEX FEX FEX FEX Number Description State Model Serial ———————————————————————— 101 FEX0101 Online N2K-C2232PP-10GE SSI16510AWF 102 FEX0102 Online N2K-C2232PP-10GE SSI165204YC N5K3# show fex detail FEX: 101 Description: FEX0101 state: Online FEX version: 5.1(3)N2(1c) [Switch version: 5.1(3)N2(1c)] FEX Interim version: 5.1(3)N2(1c) Switch Interim version: 5.1(3)N2(1c) Extender Serial: SSI16510AWF Extender Model: N2K-C2232PP-10GE, Part No: 73-12533-05 Card Id: 82, Mac Addr: f0:29:29:ff:02:02, Num Macs: 64 Module Sw Gen: 12594 [Switch Sw Gen: 21] post level: complete pinning-mode: static Max-links: 1 Fabric port for control traffic: Eth1/10 FCoE Admin: false FCoE Oper: true FCoE FEX AA Configured: false Fabric interface state: Eth1/10 – Interface Up. State: Active Fex Port State Fabric Port Eth101/1/1 Up Eth1/10 Eth101/1/2 Down None Eth101/1/3 Down None Eth101/1/4 Down None Eth101/1/5 Down None Eth101/1/6 Down None snippet removed Logs: 08/21/2014 10:00:06.107783: Module register received 08/21/2014 10:00:06.109935: Registration response sent 08/21/2014 10:00:06.239466: Module Online Sequence 08/21/2014 10:00:09.621772: Module Online FEX: 102 Description: FEX0102 state: Online FEX version: 5.1(3)N2(1c) [Switch version: 5.1(3)N2(1c)] FEX Interim version: 5.1(3)N2(1c) Switch Interim version: 5.1(3)N2(1c) Extender Serial: SSI165204YC Extender Model: N2K-C2232PP-10GE, Part No: 73-12533-05 Card Id: 82, Mac Addr: f0:29:29:ff:00:42, Num Macs: 64 Module Sw Gen: 12594 [Switch Sw Gen: 21] post level: complete pinning-mode: static Max-links: 1 Fabric port for control traffic: Eth1/11 FCoE Admin: false FCoE Oper: true FCoE FEX AA Configured: false Fabric interface state: Eth1/11 – Interface Up. 
State: Active Fex Port State Fabric Port Eth102/1/1 Up Eth1/11 Eth102/1/2 Down None Eth102/1/3 Down None Eth102/1/4 Down None Eth102/1/5 Down None snippet removed Logs: 08/21/2014 10:12:13.281018: Module register received 08/21/2014 10:12:13.283215: Registration response sent 08/21/2014 10:12:13.421037: Module Online Sequence 08/21/2014 10:12:16.665624: Module Online |

Part 2. Fabric Interface Redundancy

Static pinning is when you pin a number of host ports to a fabric port. If the fabric port goes down, so do the host ports that are pinned to it. This is useful when you do not want a fabric link failure to increase oversubscription in the network.

Once the host ports shut down because of a fabric port failure, a correctly configured server should fail over to its secondary NIC.

The “pinning max-links” command divides the number of host interfaces by the number specified in the command. The result is how many host interfaces are pinned to each fabric interface, and therefore how many host interfaces go down if that fabric interface fails.
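As a rough illustration of that division, the Python sketch below models static pinning for a 32-port FEX (the N2K-C2232PP in this lab shows 32 host ports). It is a simplified model, not NX-OS logic: it assumes the host ports are split into equal, contiguous blocks assigned to the fabric ports in the order given, and the four fabric port names in the second example are hypothetical. The lab itself runs with max-links 1 (see “Max-links: 1” in the “show fex detail” output), so every host port on FEX 101 depends on Eth1/10.

```python
def pin_host_ports(num_host_ports: int, fabric_ports: list[str],
                   max_links: int) -> dict[str, list[int]]:
    """Simplified static-pinning model: equal, contiguous blocks per fabric port."""
    if not 1 <= max_links <= len(fabric_ports):
        raise ValueError("max_links must be between 1 and the number of fabric ports")
    if num_host_ports % max_links:
        raise ValueError("this model assumes the host ports divide evenly")
    per_link = num_host_ports // max_links  # host ports / max-links
    return {
        fabric: list(range(i * per_link + 1, (i + 1) * per_link + 1))
        for i, fabric in enumerate(fabric_ports[:max_links])
    }


def host_ports_lost(pinning: dict[str, list[int]], failed_fabric: str) -> list[int]:
    """Host ports that go down with a failed, statically pinned fabric port."""
    return pinning.get(failed_fabric, [])


# The lab FEX: max-links 1 and a single fabric port.
lab = pin_host_ports(32, ["Eth1/10"], 1)
print(len(host_ports_lost(lab, "Eth1/10")))  # 32

# Hypothetical FEX with four fabric ports and max-links 4: 32 / 4 = 8 per link.
four = pin_host_ports(32, ["Eth1/17", "Eth1/18", "Eth1/19", "Eth1/20"], 4)
print(host_ports_lost(four, "Eth1/18"))  # [9, 10, 11, 12, 13, 14, 15, 16]
```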

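Now we shut down fabric interface Eth1/10. Because FEX 101 uses static pinning with max-links 1 and Eth1/10 is its only fabric interface, FEX 101 goes offline and its host interface Eth101/1/1 changes to DOWN. FEX 102, connected through Eth1/11, remains online with Eth102/1/1 up.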

| Enter configuration commands, one per line. End with CNTL/Z. N5K3(config)# int et1/10 N5K3(config-if)# shu N5K3(config-if)# N5K3(config-if)# end N5K3# sh fex detail FEX: 101 Description: FEX0101 state: Offline FEX version: 5.1(3)N2(1c) [Switch version: 5.1(3)N2(1c)] FEX Interim version: 5.1(3)N2(1c) Switch Interim version: 5.1(3)N2(1c) Extender Serial: SSI16510AWF Extender Model: N2K-C2232PP-10GE, Part No: 73-12533-05 Card Id: 82, Mac Addr: f0:29:29:ff:02:02, Num Macs: 64 Module Sw Gen: 12594 [Switch Sw Gen: 21] post level: complete pinning-mode: static Max-links: 1 Fabric port for control traffic: FCoE Admin: false FCoE Oper: true FCoE FEX AA Configured: false Fabric interface state: Eth1/10 – Interface Down. State: Configured Fex Port State Fabric Port Eth101/1/1 Down Eth1/10 Eth101/1/2 Down None Eth101/1/3 Down None snippet removed Logs: 08/21/2014 10:00:06.107783: Module register received 08/21/2014 10:00:06.109935: Registration response sent 08/21/2014 10:00:06.239466: Module Online Sequence 08/21/2014 10:00:09.621772: Module Online 08/21/2014 10:13:20.50921: Deleting route to FEX 08/21/2014 10:13:20.58158: Module disconnected 08/21/2014 10:13:20.61591: Offlining Module 08/21/2014 10:13:20.62686: Module Offline Sequence 08/21/2014 10:13:20.797908: Module Offline FEX: 102 Description: FEX0102 state: Online FEX version: 5.1(3)N2(1c) [Switch version: 5.1(3)N2(1c)] FEX Interim version: 5.1(3)N2(1c) Switch Interim version: 5.1(3)N2(1c) Extender Serial: SSI165204YC Extender Model: N2K-C2232PP-10GE, Part No: 73-12533-05 Card Id: 82, Mac Addr: f0:29:29:ff:00:42, Num Macs: 64 Module Sw Gen: 12594 [Switch Sw Gen: 21] post level: complete pinning-mode: static Max-links: 1 Fabric port for control traffic: Eth1/11 FCoE Admin: false FCoE Oper: true FCoE FEX AA Configured: false Fabric interface state: Eth1/11 – Interface Up. 
State: Active Fex Port State Fabric Port Eth102/1/1 Up Eth1/11 Eth102/1/2 Down None Eth102/1/3 Down None Eth102/1/4 Down None snippet removed Logs: 08/21/2014 10:12:13.281018: Module register received 08/21/2014 10:12:13.283215: Registration response sent 08/21/2014 10:12:13.421037: Module Online Sequence 08/21/2014 10:12:16.665624: Module Online |

Port channels can be used instead of static pinning between the parent switch and the FEX, so that all host ports remain active if a fabric interface fails. However, the remaining fabric bandwidth to the parent switch is then shared by all the host ports, which increases oversubscription.
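A worked example makes the trade-off concrete. The Python sketch below assumes 32 host ports at 10G (as on the N2K-C2232PP) and a hypothetical four 10G fabric links. Before any failure the ratio is 320/40 = 8:1 in both designs. After one failure, static pinning loses the 8 pinned host ports, leaving 240/30 = 8:1, while a fabric port channel keeps all 32 host ports on three links, giving 320/30 ≈ 10.7:1.

```python
from fractions import Fraction

HOST_GBPS = 10    # N2K-C2232PP host ports are 10GE
FABRIC_GBPS = 10  # fabric uplinks are 10G


def oversubscription(active_host_ports: int, active_fabric_links: int) -> Fraction:
    """Host-facing bandwidth divided by fabric (uplink) bandwidth."""
    return Fraction(active_host_ports * HOST_GBPS, active_fabric_links * FABRIC_GBPS)


def after_one_fabric_failure(host_ports: int, fabric_links: int, mode: str) -> Fraction:
    """Oversubscription after one fabric link fails.

    'static': the failed link's pinned host ports go down with it.
    'port-channel': every host port stays up and shares the surviving links.
    """
    if mode == "static":
        pinned_per_link = host_ports // fabric_links
        return oversubscription(host_ports - pinned_per_link, fabric_links - 1)
    if mode == "port-channel":
        return oversubscription(host_ports, fabric_links - 1)
    raise ValueError(f"unknown mode: {mode}")


print(oversubscription(32, 4))                         # 8
print(after_one_fabric_failure(32, 4, "static"))        # 8
print(after_one_fabric_failure(32, 4, "port-channel"))  # 32/3
```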

Part 3. Fabric Extender Topologies

Straight-Through: The FEX is connected to a single parent switch. Servers connecting to the FEX can leverage an active-active data plane by using host vPC.

Shutting down the peer link causes ALL vPC member ports on the secondary peer to be disabled. For this reason, it is better to use a dual-homed topology.

Dual Homed: Connecting a single FEX to two parent switches.

In an active-active design, a single parent switch failure does not affect the host interfaces, because both vPC peers have separate control planes and manage the FEX separately.

For the remainder of the post, we are going to look at dual-homed FEX connectivity with host vPC.

Full configuration:

| N5K1: feature lacp feature vpc feature fex ! vlan 10 ! vpc domain 1 peer-keepalive destination 192.168.0.52 ! interface port-channel1 switchport mode trunk spanning-tree port type network vpc peer-link ! interface port-channel10 switchport access vlan 10 vpc 10 ! interface Ethernet1/1 switchport access vlan 10 spanning-tree port type edge speed 1000 ! interface Ethernet1/3 - 5 switchport mode trunk spanning-tree port type network channel-group 1 mode active ! interface Ethernet1/10 switchport mode fex-fabric fex associate 101 ! interface Ethernet101/1/1 switchport access vlan 10 channel-group 10 mode on N5K2: feature lacp feature vpc feature fex ! vlan 10 ! vpc domain 1 peer-keepalive destination 192.168.0.51 ! interface port-channel1 switchport mode trunk spanning-tree port type network vpc peer-link ! interface port-channel10 switchport access vlan 10 vpc 10 ! interface Ethernet1/2 switchport access vlan 10 spanning-tree port type edge speed 1000 ! interface Ethernet1/3 - 5 switchport mode trunk spanning-tree port type network channel-group 1 mode active ! interface Ethernet1/11 switchport mode fex-fabric fex associate 102 ! interface Ethernet102/1/1 switchport access vlan 10 channel-group 10 mode on |

The FEX does not support LACP, so configure the port-channel mode as “on”.
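Before looking at the switches, the two peer configurations can be sanity-checked offline. The Python sketch below is a hypothetical checker, not a Cisco tool. It assumes each peer's configuration is available as running-config text with sub-commands indented under their “interface” line, and it checks two things: the port channel carries the same vPC number on both peers, and every member uses mode “on”, following the rule stated above. The sample configs are trimmed from the full configuration shown earlier.

```python
import re
from typing import Optional


def parse_interfaces(config: str) -> dict[str, list[str]]:
    """Group config lines into {interface name: [indented sub-commands]}."""
    blocks: dict[str, list[str]] = {}
    current = None
    for line in config.splitlines():
        if line.startswith("interface "):
            current = line.split(None, 1)[1].strip()
            blocks[current] = []
        elif line.startswith(" ") and current is not None:
            blocks[current].append(line.strip())
        else:
            current = None  # "!" or any unindented line ends the block
    return blocks


def host_vpc(config: str, pc_id: int) -> tuple[dict[str, str], Optional[str]]:
    """Return ({member interface: channel-group mode}, vpc number) for one peer."""
    blocks = parse_interfaces(config)
    members = {}
    for intf, cmds in blocks.items():
        for cmd in cmds:
            m = re.fullmatch(r"channel-group (\d+) mode (\S+)", cmd)
            if m and int(m.group(1)) == pc_id:
                members[intf] = m.group(2)
    vpc = None
    for cmd in blocks.get(f"port-channel{pc_id}", []):
        m = re.fullmatch(r"vpc (\d+)", cmd)  # ignores "vpc peer-link"
        if m:
            vpc = m.group(1)
    return members, vpc


def check_host_vpc(peer_a: str, peer_b: str, pc_id: int) -> list[str]:
    """List problems with a host vPC built from FEX host ports on two peers."""
    members_a, vpc_a = host_vpc(peer_a, pc_id)
    members_b, vpc_b = host_vpc(peer_b, pc_id)
    problems = []
    if vpc_a is None or vpc_a != vpc_b:
        problems.append(f"vpc number mismatch: {vpc_a} vs {vpc_b}")
    if not members_a or not members_b:
        problems.append("port-channel has no members on one peer")
    for intf, mode in [*members_a.items(), *members_b.items()]:
        if mode != "on":
            problems.append(f"{intf}: channel-group mode is '{mode}', expected 'on'")
    return problems


N5K1 = """\
interface port-channel10
  switchport access vlan 10
  vpc 10
!
interface Ethernet101/1/1
  switchport access vlan 10
  channel-group 10 mode on
"""
N5K2 = N5K1.replace("Ethernet101/1/1", "Ethernet102/1/1")

print(check_host_vpc(N5K1, N5K2, 10))  # []
```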

The first step is to check the vPC peer link and the general vPC parameters.

Command: “show vpc brief”: Displays the vPC domain ID, the peer-link status, the keepalive message status, whether the configuration consistency check is successful, and whether the peer link formed (or why it failed to form).

Command: “show vpc peer-keepalive”: Displays the destination IP address of the vPC peer keepalive messages. The command also displays the send and receive status, as well as the time since the last update from the peer in seconds and milliseconds.

| N5K3# sh vpc brief Legend: (*) – local vPC is down, forwarding via vPC peer-link vPC domain id : 1 Peer status : peer adjacency formed ok vPC keep-alive status : peer is alive Configuration consistency status: success Per-vlan consistency status : success Type-2 consistency status : success vPC role : primary Number of vPCs configured : 1 Peer Gateway : Disabled Dual-active excluded VLANs : – Graceful Consistency Check : Enabled vPC Peer-link status ——————————————————————— id Port Status Active vlans — —- —— ————————————————– 1 Po1 up 1,10 vPC status —————————————————————————- id Port Status Consistency Reason Active vlans —— ———– —— ———– ————————– ———– 10 Po10 up success success 10 N5K3# show vpc peer-keepalive vPC keep-alive status : peer is alive –Peer is alive for : (1753) seconds, (536) msec –Send status : Success –Last send at : 2014.08.21 10:52:30 130 ms –Sent on interface : mgmt0 –Receive status : Success –Last receive at : 2014.08.21 10:52:29 925 ms –Received on interface : mgmt0 –Last update from peer : (0) seconds, (485) msec vPC Keep-alive parameters –Destination : 192.168.0.54 –Keepalive interval : 1000 msec –Keepalive timeout : 5 seconds –Keepalive hold timeout : 3 seconds –Keepalive vrf : management –Keepalive udp port : 3200 –Keepalive tos : 192 |
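The healthy values to look for in “show vpc brief” are the ones in the output above: peer adjacency formed, peer alive, and “success” for each consistency check. The Python sketch below compares those fields against that baseline. The expected strings are copied from this lab's output; `vpc_problems` is a hypothetical helper, and it assumes the output was pasted with one “key : value” pair per line.

```python
from typing import Optional

# Healthy values, taken from the "show vpc brief" output in this lab.
EXPECTED = {
    "Peer status": "peer adjacency formed ok",
    "vPC keep-alive status": "peer is alive",
    "Configuration consistency status": "success",
    "Per-vlan consistency status": "success",
    "Type-2 consistency status": "success",
}


def parse_fields(output: str) -> dict[str, str]:
    """Collect 'key : value' lines, splitting on the first colon."""
    fields = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def vpc_problems(output: str) -> dict[str, Optional[str]]:
    """Return {field: actual value} for fields that differ from EXPECTED."""
    fields = parse_fields(output)
    return {key: fields.get(key) for key, want in EXPECTED.items()
            if fields.get(key) != want}


sample = """\
vPC domain id                   : 1
Peer status                     : peer adjacency formed ok
vPC keep-alive status           : peer is alive
Configuration consistency status: success
Per-vlan consistency status     : success
Type-2 consistency status       : success
vPC role                        : primary
"""

print(vpc_problems(sample))  # {}
```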

The peer-link trunk should be forwarding, and VLAN 10 must be active and forwarding on it. Take note of any VLANs that are err-disabled on the trunk.

| N5K3# sh interface trunk ——————————————————————————- Port Native Status Port Vlan Channel ——————————————————————————– Eth1/3 1 trnk-bndl Po1 Eth1/4 1 trnk-bndl Po1 Eth1/5 1 trnk-bndl Po1 Po1 1 trunking — ——————————————————————————– Port Vlans Allowed on Trunk ——————————————————————————– Eth1/3 1-3967,4048-4093 Eth1/4 1-3967,4048-4093 Eth1/5 1-3967,4048-4093 Po1 1-3967,4048-4093 ——————————————————————————– Port Vlans Err-disabled on Trunk ——————————————————————————– Eth1/3 none Eth1/4 none Eth1/5 none Po1 none ——————————————————————————– Port STP Forwarding ——————————————————————————– Eth1/3 none Eth1/4 none Eth1/5 none Po1 1,10 ——————————————————————————– Port Vlans in spanning tree forwarding state and not pruned ——————————————————————————– Eth1/3 — Eth1/4 — Eth1/5 — Po1 — ——————————————————————————– Port Vlans Forwarding on FabricPath ——————————————————————————– N5K3# sh spanning-tree vlan 10 VLAN0010 Spanning tree enabled protocol rstp Root ID Priority 32778 Address 0005.9b1e.82fc This bridge is the root Hello Time 2 sec Max Age 20 sec Forward Delay 15 sec Bridge ID Priority 32778 (priority 32768 sys-id-ext 10) Address 0005.9b1e.82fc Hello Time 2 sec Max Age 20 sec Forward Delay 15 sec Interface Role Sts Cost Prio.Nbr Type —————- —- — ——— ——– ——————————– Po1 Desg FWD 1 128.4096 (vPC peer-link) Network P2p Po10 Desg FWD 1 128.4105 (vPC) Edge P2p Eth1/1 Desg FWD 4 128.129 Edge P2p |
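The trunk check above comes down to three VLAN lists per port: allowed, err-disabled and STP forwarding. The Python sketch below expands NX-OS style VLAN lists such as “1-3967,4048-4093” and tests whether VLAN 10 is usable on Po1, using the values from the output above. The helper names are hypothetical, and treating “none” and “--” as empty lists is an assumption based on how those columns print here.

```python
def expand_vlans(spec: str) -> set[int]:
    """Expand a VLAN list such as '1-3967,4048-4093' into a set of VLAN IDs.

    'none' and '--' (printed for empty columns) expand to an empty set.
    """
    vlans: set[int] = set()
    spec = spec.strip()
    if spec in ("none", "--", ""):
        return vlans
    for part in spec.split(","):
        low, _, high = part.partition("-")
        vlans.update(range(int(low), int(high or low) + 1))
    return vlans


def vlan_usable_on_trunk(vlan: int, allowed: str, err_disabled: str,
                         stp_forwarding: str) -> bool:
    """Allowed, not err-disabled, and in the STP forwarding state."""
    return (vlan in expand_vlans(allowed)
            and vlan not in expand_vlans(err_disabled)
            and vlan in expand_vlans(stp_forwarding))


# Po1 columns from the "show interface trunk" output above.
print(vlan_usable_on_trunk(10, "1-3967,4048-4093", "none", "1,10"))  # True
```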

Check the port-channel database to determine the status of each port channel.

| N5K3# show port-channel database port-channel1 Last membership update is successful 3 ports in total, 3 ports up First operational port is Ethernet1/3 Age of the port-channel is 0d:00h:13m:22s Time since last bundle is 0d:00h:13m:18s Last bundled member is Ethernet1/5 Ports: Ethernet1/3 [active ] [up] * Ethernet1/4 [active ] [up] Ethernet1/5 [active ] [up] port-channel10 Last membership update is successful 1 ports in total, 1 ports up First operational port is Ethernet101/1/1 Age of the port-channel is 0d:00h:13m:20s Time since last bundle is 0d:00h:02m:42s Last bundled member is Ethernet101/1/1 Time since last unbundle is 0d:00h:02m:46s Last unbundled member is Ethernet101/1/1 Ports: Ethernet101/1/1 [on] [up] * |

To run reachability tests, create an SVI on the first parent switch and ping from it. You must first enable SVIs with “feature interface-vlan”. We create the SVI in VLAN 10 because we need an interface in that VLAN to source our pings.

| N5K3# conf t Enter configuration commands, one per line. End with CNTL/Z. N5K3(config)# fea feature feature-set N5K3(config)# feature interface-vlan N5K3(config)# int vlan 10 N5K3(config-if)# ip address 10.0.0.3 255.255.255.0 N5K3(config-if)# no shu N5K3(config-if)# N5K3(config-if)# N5K3(config-if)# end N5K3# ping 10.0.0.3 PING 10.0.0.3 (10.0.0.3): 56 data bytes 64 bytes from 10.0.0.3: icmp_seq=0 ttl=255 time=0.776 ms 64 bytes from 10.0.0.3: icmp_seq=1 ttl=255 time=0.504 ms 64 bytes from 10.0.0.3: icmp_seq=2 ttl=255 time=0.471 ms 64 bytes from 10.0.0.3: icmp_seq=3 ttl=255 time=0.473 ms 64 bytes from 10.0.0.3: icmp_seq=4 ttl=255 time=0.467 ms — 10.0.0.3 ping statistics — 5 packets transmitted, 5 packets received, 0.00% packet loss round-trip min/avg/max = 0.467/0.538/0.776 ms N5K3# ping 10.0.0.10 PING 10.0.0.10 (10.0.0.10): 56 data bytes Request 0 timed out 64 bytes from 10.0.0.10: icmp_seq=1 ttl=127 time=1.874 ms 64 bytes from 10.0.0.10: icmp_seq=2 ttl=127 time=0.896 ms 64 bytes from 10.0.0.10: icmp_seq=3 ttl=127 time=1.023 ms 64 bytes from 10.0.0.10: icmp_seq=4 ttl=127 time=0.786 ms — 10.0.0.10 ping statistics — 5 packets transmitted, 4 packets received, 20.00% packet loss round-trip min/avg/max = 0.786/1.144/1.874 ms N5K3# |

Do the same tests on the second Nexus 5K.

| N5K4(config)# int vlan 10 N5K4(config-if)# ip address 10.0.0.4 255.255.255.0 N5K4(config-if)# no shu N5K4(config-if)# end N5K4# ping 10.0.0.10 PING 10.0.0.10 (10.0.0.10): 56 data bytes Request 0 timed out 64 bytes from 10.0.0.10: icmp_seq=1 ttl=127 time=1.49 ms 64 bytes from 10.0.0.10: icmp_seq=2 ttl=127 time=1.036 ms 64 bytes from 10.0.0.10: icmp_seq=3 ttl=127 time=0.904 ms 64 bytes from 10.0.0.10: icmp_seq=4 ttl=127 time=0.889 ms — 10.0.0.10 ping statistics — 5 packets transmitted, 4 packets received, 20.00% packet loss round-trip min/avg/max = 0.889/1.079/1.49 ms N5K4# ping 10.0.0.13 PING 10.0.0.13 (10.0.0.13): 56 data bytes Request 0 timed out Request 1 timed out Request 2 timed out Request 3 timed out Request 4 timed out — 10.0.0.13 ping statistics — 5 packets transmitted, 0 packets received, 100.00% packet loss N5K4# ping 10.0.0.3 PING 10.0.0.3 (10.0.0.3): 56 data bytes Request 0 timed out 64 bytes from 10.0.0.3: icmp_seq=1 ttl=254 time=1.647 ms 64 bytes from 10.0.0.3: icmp_seq=2 ttl=254 time=1.298 ms 64 bytes from 10.0.0.3: icmp_seq=3 ttl=254 time=1.332 ms 64 bytes from 10.0.0.3: icmp_seq=4 ttl=254 time=1.24 ms — 10.0.0.3 ping statistics — 5 packets transmitted, 4 packets received, 20.00% packet loss round-trip min/avg/max = 1.24/1.379/1.647 ms |
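When repeating these tests from both parent switches, it can help to reduce each run to its summary line. The Python sketch below parses the NX-OS ping summary shown above and marks a target as unreachable when no replies come back. The summary strings are copied from the N5K4 output; `ping_result` is a hypothetical helper.

```python
import re

SUMMARY = re.compile(
    r"(\d+) packets transmitted, (\d+) packets received, ([\d.]+)% packet loss"
)


def ping_result(output: str) -> tuple[int, int, float]:
    """Return (sent, received, loss percent) from a ping summary line."""
    m = SUMMARY.search(output)
    if m is None:
        raise ValueError("no ping summary found")
    return int(m.group(1)), int(m.group(2)), float(m.group(3))


# Summary lines from the N5K4 tests above.
results = {
    "10.0.0.10": "5 packets transmitted, 4 packets received, 20.00% packet loss",
    "10.0.0.13": "5 packets transmitted, 0 packets received, 100.00% packet loss",
    "10.0.0.3": "5 packets transmitted, 4 packets received, 20.00% packet loss",
}
for target, text in results.items():
    sent, received, loss = ping_result(text)
    status = "unreachable" if received == 0 else "reachable"
    print(f"{target}: {received}/{sent} replies ({loss:.0f}% loss) -> {status}")
```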

Shut down one member of the host port channel (Eth101/1/1 in the output below) and the ping test still succeeds, since the other link in the port-channel bundle remains up.

| N5K3# conf t Enter configuration commands, one per line. End with CNTL/Z. N5K3(config)# int Eth101/1/1 N5K3(config-if)# shu N5K3(config-if)# end N5K3# N5K3# N5K3# ping 10.0.0.3 PING 10.0.0.3 (10.0.0.3): 56 data bytes 64 bytes from 10.0.0.3: icmp_seq=0 ttl=255 time=0.659 ms 64 bytes from 10.0.0.3: icmp_seq=1 ttl=255 time=0.515 ms 64 bytes from 10.0.0.3: icmp_seq=2 ttl=255 time=0.471 ms 64 bytes from 10.0.0.3: icmp_seq=3 ttl=255 time=0.466 ms 64 bytes from 10.0.0.3: icmp_seq=4 ttl=255 time=0.465 ms — 10.0.0.3 ping statistics — 5 packets transmitted, 5 packets received, 0.00% packet loss round-trip min/avg/max = 0.465/0.515/0.659 ms |

If you would like to further your knowledge of vPC and how it relates to data center topologies, and more specifically to Cisco’s Application Centric Infrastructure (ACI), you can check out my training courses on Cisco ACI: Course 1: Design and Architect Cisco ACI, Course 2: Implement Cisco ACI, and Course 3: Troubleshooting Cisco ACI.