Network Security Components

In today's interconnected world, network security plays a crucial role in protecting sensitive data and ensuring the smooth functioning of digital systems. A strong network security framework consists of various components that work together to mitigate risks and safeguard valuable information. In this blog post, we will explore some of the essential components that contribute to a robust network security infrastructure.

Network security encompasses a range of strategies and technologies aimed at preventing unauthorized access, data breaches, and other malicious activities. It involves securing both hardware and software components of a network infrastructure. By implementing robust security measures, organizations can mitigate risks and ensure the confidentiality, integrity, and availability of their data.

Network security components form the backbone of any robust network security system. By implementing a combination of firewalls, IDS, VPNs, SSL/TLS, access control systems, antivirus software, DLP systems, network segmentation, SIEM systems, and well-defined security policies, organizations can significantly enhance their network security posture and protect against evolving cyber threats.

Matt Conran

Highlights: Network Security Components

Value of Network Security

– Network security is essential to any company or organization’s data management strategy. It is the process of protecting data, computers, and networks from unauthorized access and malicious attacks. Network security involves various technologies and techniques, such as firewalls, encryption, authentication, and access control.

Example: Firewalls help protect a network from unauthorized access by preventing outsiders from connecting to it. Encryption protects data from being intercepted by malicious actors. Authentication verifies a user’s identity, and access control manages who has access to a network and their access type.

Network security components extend from the endpoints to the network edge, whether in a public or private cloud. Policy and controls are enforced at each network security layer, giving adequate control over, and visibility into, threats that may seek to access, modify, or break a network and its applications.

-Firstly, network security is provided from the network: your IPS/IDS, virtual firewalls, and distributed firewall technologies.

-Secondly, some network security, known as endpoint security, protects the end applications themselves. Of course, you can’t have one without the other, but if I had to pick a favorite, it would be endpoint security.

Personal Note: Remember that in many of the security architectures I see in consultancies, the network security layers are distinct. There may even be a different team looking after each component. This has been the case for a while, but there needs to be some integration between the layers of security to keep up with the changes in the security landscape.

**Network Security Layers**

Designing and implementing a network security architecture means combining different technologies that work at different network security layers in your infrastructure, spanning on-premises and the cloud. So, we can have separate point systems operating at each network security layer, or we can look for an approach where the network security devices work together holistically. These are the two options.

Whichever path of security design you opt for, you will have the same network security components carrying out their security function, either virtual or physical, or a combination of both.

There will be a platform-based or individual point solution approach. Some traditional security functionality, such as firewalls that have been around for decades, is still widely used, along with new ways to protect, especially regarding endpoint protection.

Firewalls –

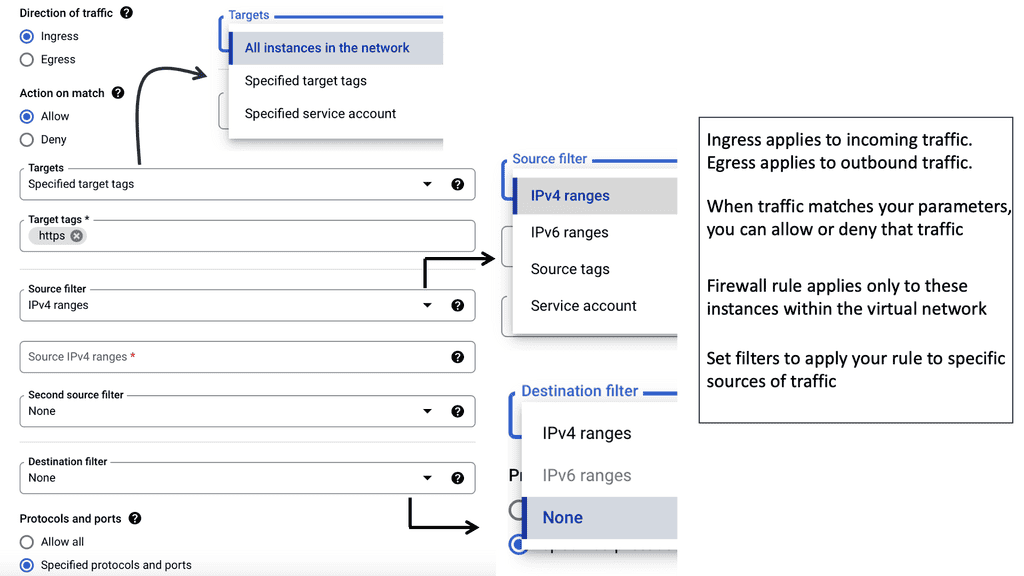

A. Firewalls: Firewalls serve as the first line of defense by monitoring and controlling incoming and outgoing network traffic. They act as filters, scrutinizing data packets and determining whether they should be allowed or blocked based on predefined security rules. Firewalls provide an essential barrier against unauthorized access, preventing potential intrusions and mitigating risks.

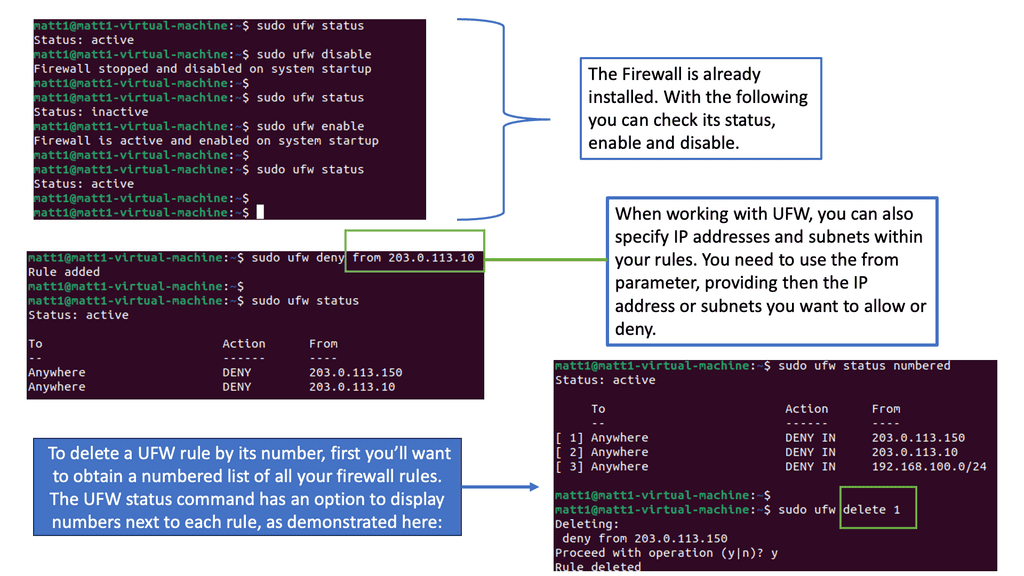

–Understanding UFW

UFW, short for Uncomplicated Firewall, is a user-friendly front-end for managing netfilter firewall rules in Linux. It provides a simplified interface for creating, managing, and enforcing firewall rules to protect your network from unauthorized access and potential threats. Whether you are a beginner or an experienced user, UFW offers a straightforward approach to network security.

To start with UFW, ensure it is installed on your Linux system. Ubuntu ships with UFW installed (but inactive by default); on other distributions, you can install it with the package manager. Once installed, configuring UFW involves setting default policies, defining rules for incoming and outgoing traffic, and allowing specific ports or services. The basic sequence is to set the default policies, allow the services you need, and then enable the firewall.
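As a minimal sketch of those steps, the Python snippet below turns a small policy description into ufw commands: default policies first, then allowed ports, then `ufw enable`. The `POLICY` dictionary and the `build_ufw_commands` helper are hypothetical; the script only prints the commands so you can review them before running them with root privileges.

```python
# Hypothetical policy description; adjust to your own requirements.
POLICY = {
    "default_incoming": "deny",
    "default_outgoing": "allow",
    "allow": ["22/tcp", "443/tcp"],  # e.g. SSH and HTTPS
}


def build_ufw_commands(policy: dict) -> list:
    """Translate the policy into ufw invocations (lists of arguments)."""
    commands = [
        ["ufw", "default", policy["default_incoming"], "incoming"],
        ["ufw", "default", policy["default_outgoing"], "outgoing"],
    ]
    for rule in policy.get("allow", []):
        commands.append(["ufw", "allow", rule])
    # Enable last, once the SSH rule exists, so a remote session is not cut off.
    commands.append(["ufw", "enable"])
    return commands


if __name__ == "__main__":
    for cmd in build_ufw_commands(POLICY):
        print("sudo " + " ".join(cmd))
```

Enabling the firewall only after the SSH rule exists avoids locking yourself out of a remote machine; afterwards, `sudo ufw status verbose` shows the active rule set.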

Intrusion Detection Systems –

B. Intrusion Detection Systems (IDS): Intrusion Detection Systems are designed to detect and respond to suspicious or malicious activities within a network. By monitoring network traffic patterns and analyzing anomalies, IDS can identify potential threats that may bypass traditional security measures. These systems act as vigilant sensors, alerting administrators to potential breaches and enabling swift action to protect network assets.

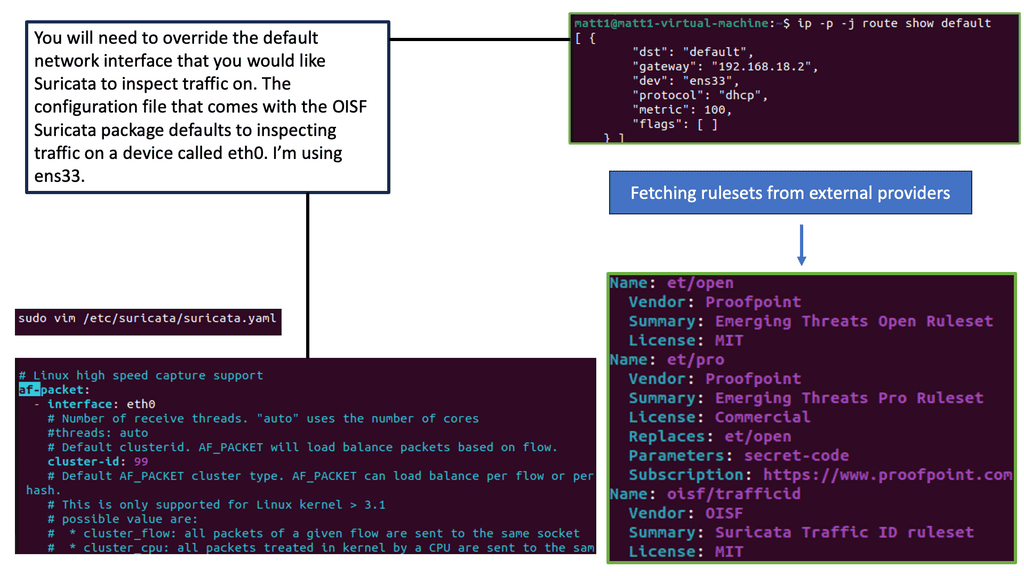

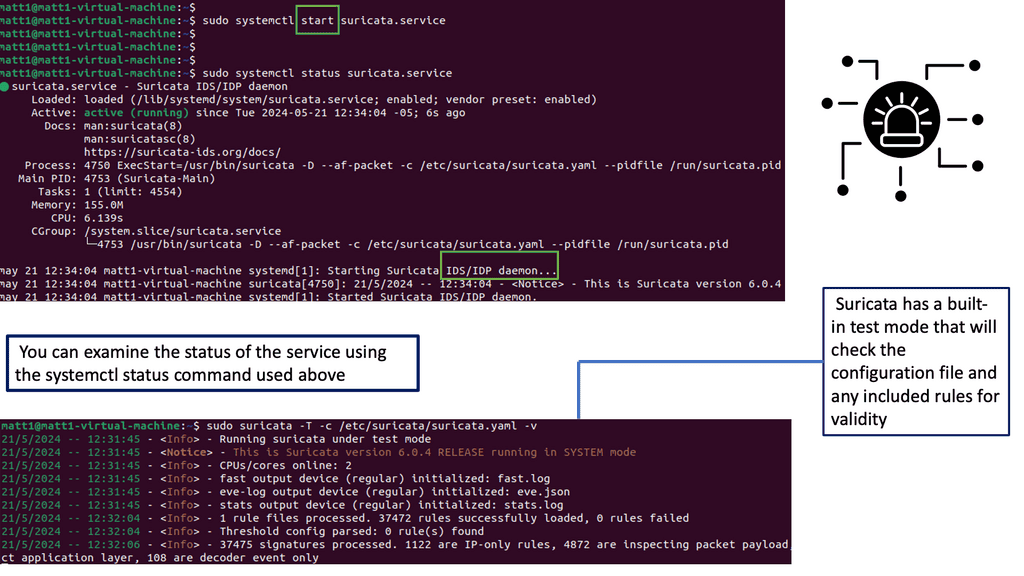

–Understanding Suricata IPS/IDS

Suricata is an open-source intrusion detection and prevention (IDS/IPS) engine, developed by the Open Information Security Foundation (OISF), designed to detect and mitigate potential network intrusions proactively. By analyzing network traffic in real time, it identifies and responds to suspicious activities, helping prevent unauthorized access and data breaches.

Suricata offers a wide array of features that enhance network security. Its threat intelligence capabilities allow it to detect both known and emerging threats. By combining signature-based detection with protocol and behavioral analysis, it can identify malicious patterns and behaviors, providing an extra layer of defense against evolving cyber threats.
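Signature matching needs a rule per known threat; the behavioral side looks for suspicious patterns instead. The sketch below is a toy illustration of that idea, not how Suricata works internally: it flags any source that touches more than a threshold of distinct destination ports within a sliding time window, a crude port-scan detector. The event format, threshold, and window are hypothetical.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Tuple

# Hypothetical flow event: (timestamp in seconds, source IP, destination port).
Event = Tuple[float, str, int]


def detect_port_scans(events: Iterable[Event], window: float = 10.0,
                      max_ports: int = 20) -> List[str]:
    """Flag sources that hit more than `max_ports` distinct ports within `window` seconds."""
    recent: Dict[str, Deque[Tuple[float, int]]] = defaultdict(deque)
    flagged: List[str] = []
    for ts, src, port in sorted(events):
        q = recent[src]
        q.append((ts, port))
        # Forget events that have slid out of the time window.
        while ts - q[0][0] > window:
            q.popleft()
        if len({p for _, p in q}) > max_ports and src not in flagged:
            flagged.append(src)  # alert once per source
    return flagged


scan = [(i * 0.1, "203.0.113.5", 1000 + i) for i in range(50)]  # 50 ports in 5 s
normal = [(i * 1.0, "198.51.100.7", 443) for i in range(50)]    # one port, repeatedly
print(detect_port_scans(scan + normal))  # ['203.0.113.5']
```

A fixed threshold like this is easy to evade with slow scans, which is one reason production engines combine behavioral rules with signatures.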

Virtual Private Networks –

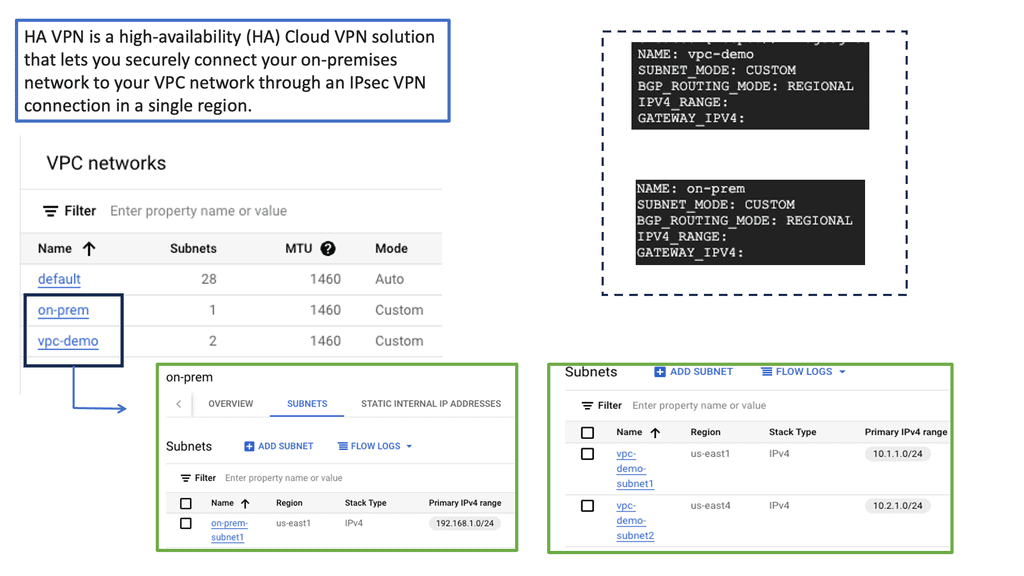

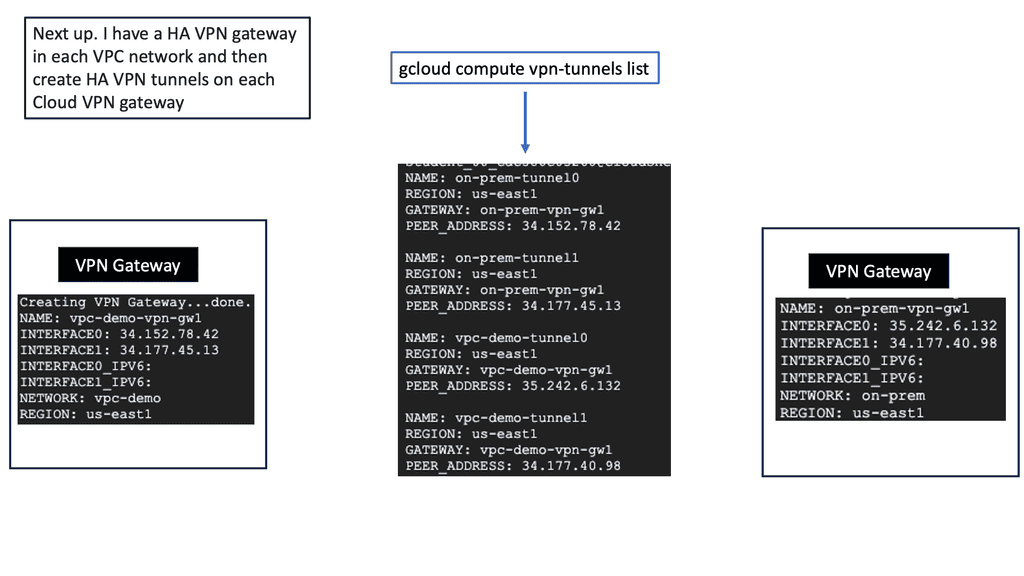

C. Virtual Private Networks (VPNs): VPNs provide a secure and encrypted connection between remote users or branch offices and the main (head-office) network. By establishing a private tunnel over a public network, VPNs ensure confidentiality and protect sensitive data from eavesdropping or interception. With the proliferation of remote work, VPNs have become indispensable in maintaining secure communication channels.

Access Control Systems

D. Access Control Systems: Access Control Systems regulate and manage user access to network resources. Through authentication, authorization, and accounting (AAA) mechanisms, these systems ensure that only authorized individuals and devices can gain entry to specific data or systems. Implementing robust access control measures minimizes the risk of unauthorized access and helps maintain the principle of least privilege.

Encryption –

E. Encryption: Encryption converts plaintext into ciphertext, rendering it unreadable to unauthorized parties. By encrypting data in transit and at rest, organizations can protect their sensitive information from interception or theft. Robust encryption algorithms and secure key management practices form the foundation of data protection.

Core Activity: Mapping the Network

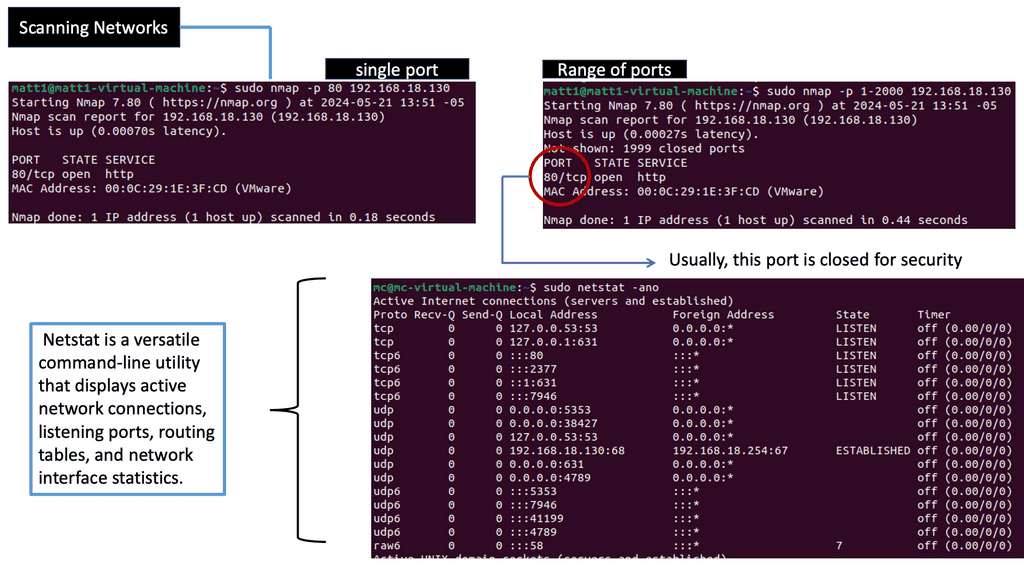

Network Scanning

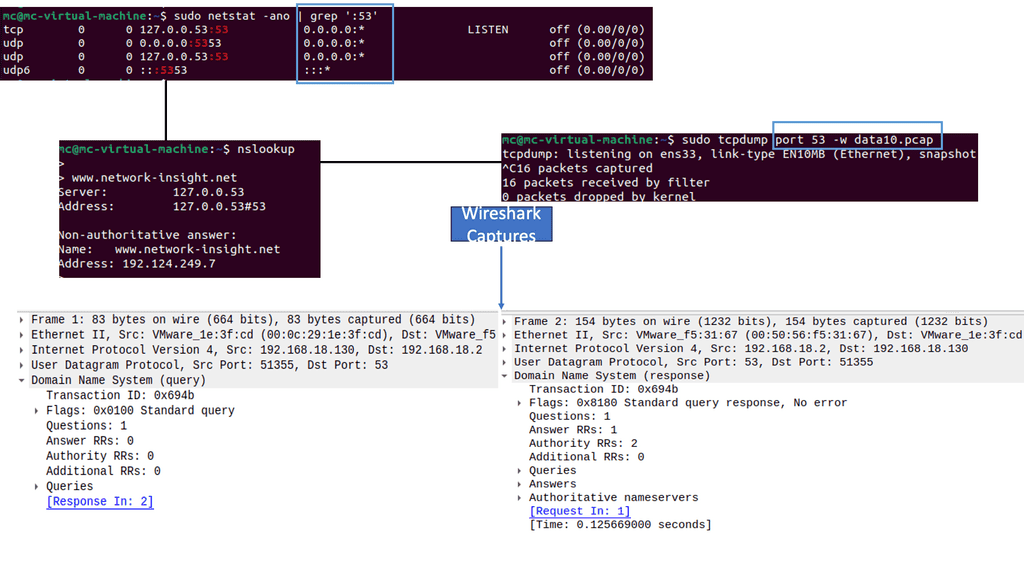

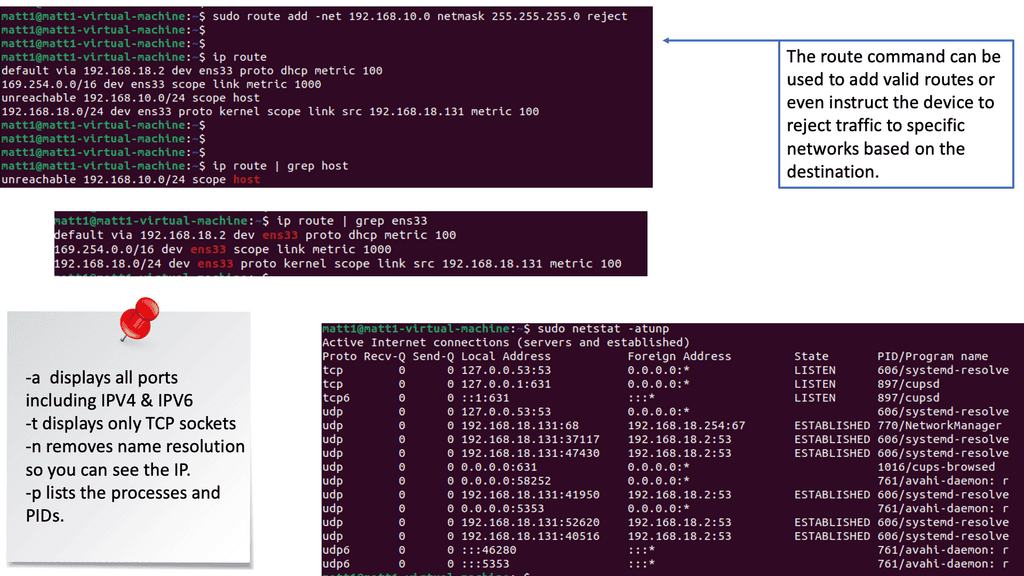

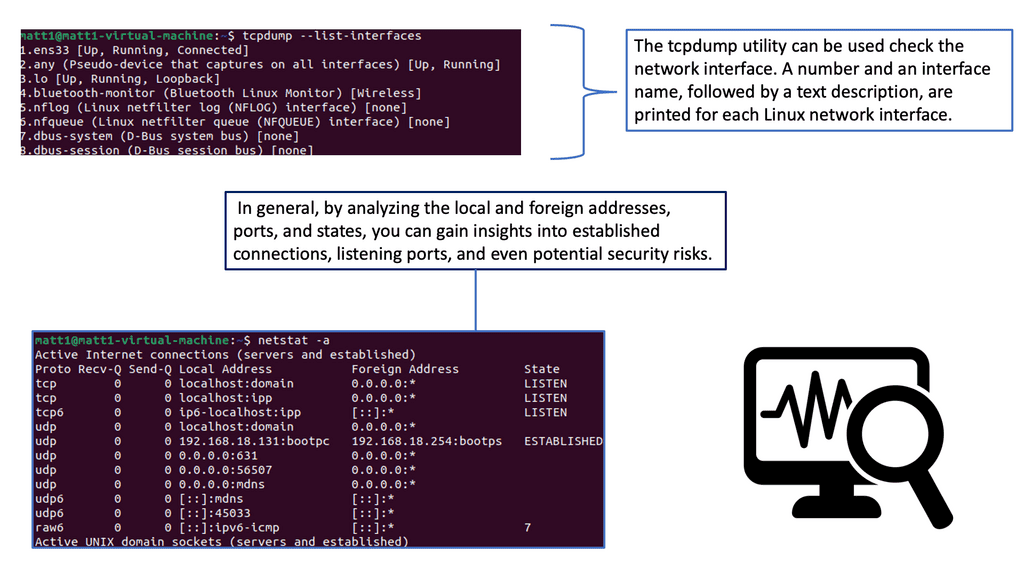

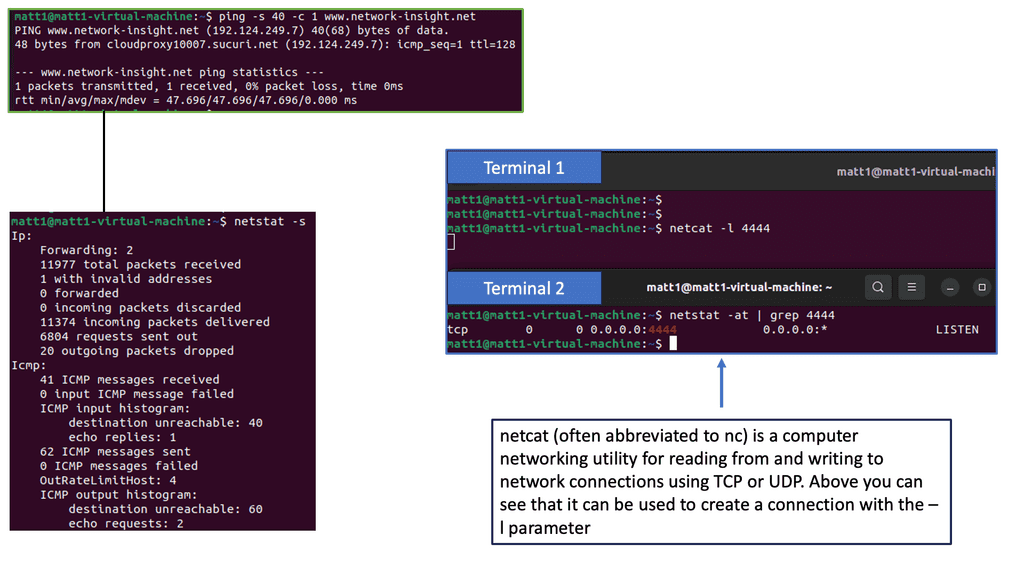

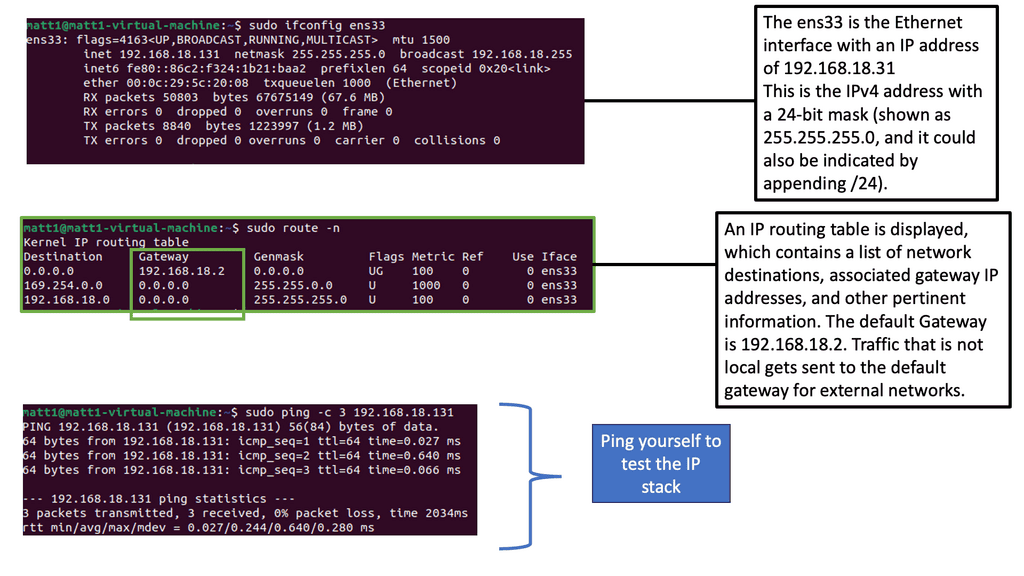

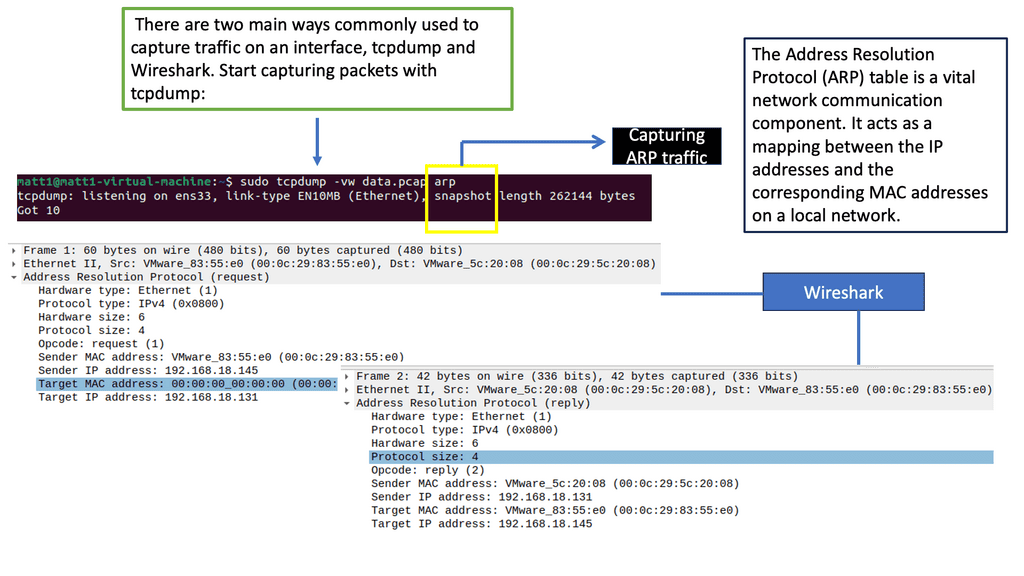

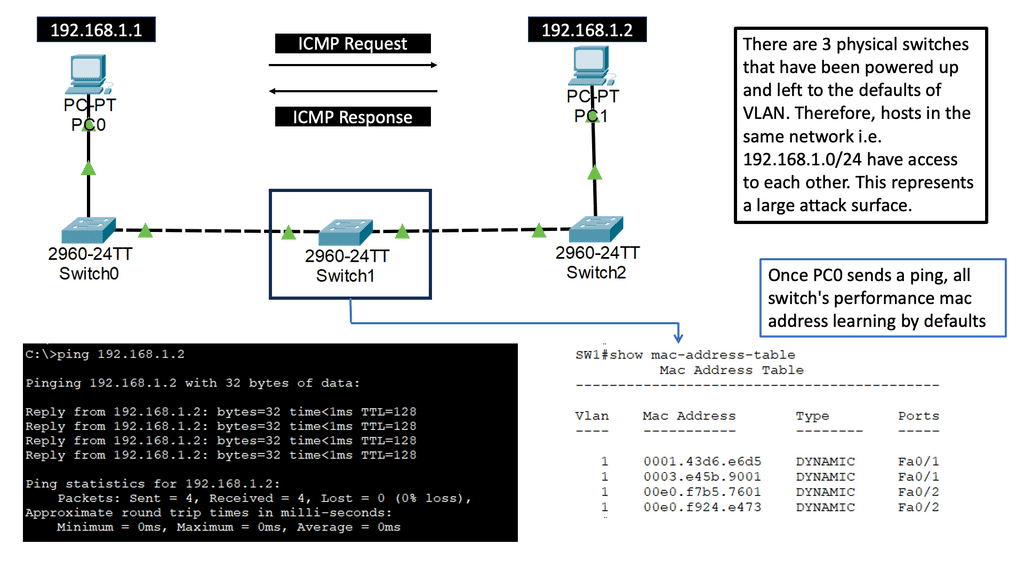

Network scanning is the systematic process of identifying active hosts, open ports, and services within a network. It is a reconnaissance technique for mapping out the network’s architecture and ascertaining its vulnerabilities. Network scanners can gather valuable information about the network’s structure and potential entry points using specialized tools and protocols like ICMP, TCP, and UDP.
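The simplest form of port scanning is a TCP connect scan: attempt a full TCP handshake on each port and record which ones accept. The sketch below uses only Python's standard `socket` module; the `scan_ports` name and the timeout value are illustrative choices. Dedicated scanners such as Nmap go much further (SYN scans, UDP scans, OS fingerprinting). Scan only hosts you are authorized to test.

```python
import socket
from typing import Iterable, List


def scan_ports(host: str, ports: Iterable[int], timeout: float = 0.5) -> List[int]:
    """Return the ports on `host` that complete a TCP handshake."""
    open_ports: List[int] = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            try:
                # connect_ex returns 0 on success instead of raising.
                if sock.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # timeouts and other socket errors count as "not open"
    return open_ports
```

For example, `scan_ports("127.0.0.1", range(20, 1025))` lists the well-known ports listening on your own machine. A refused connection and a silently dropped one both count as "not open" here; a real scanner distinguishes "closed" from "filtered".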

Scanning Techniques

Various network scanning techniques are employed by security professionals and hackers alike. Port scanning, for instance, focuses on identifying open ports and services, providing insights into potential attack vectors. Vulnerability scanning, on the other hand, aims to uncover weaknesses and misconfigurations in network devices and software. Other notable methods include network mapping, OS fingerprinting, and packet sniffing, each serving a unique purpose in network security.

Benefits:

Network scanning offers a plethora of benefits and finds applications in various domains. Firstly, it aids in proactive network defense by identifying vulnerabilities before malicious actors exploit them. Additionally, network scanning facilitates compliance with industry regulations and standards, ensuring the network meets necessary security requirements. Moreover, it assists in troubleshooting network issues, optimizing performance, and enhancing overall network management.

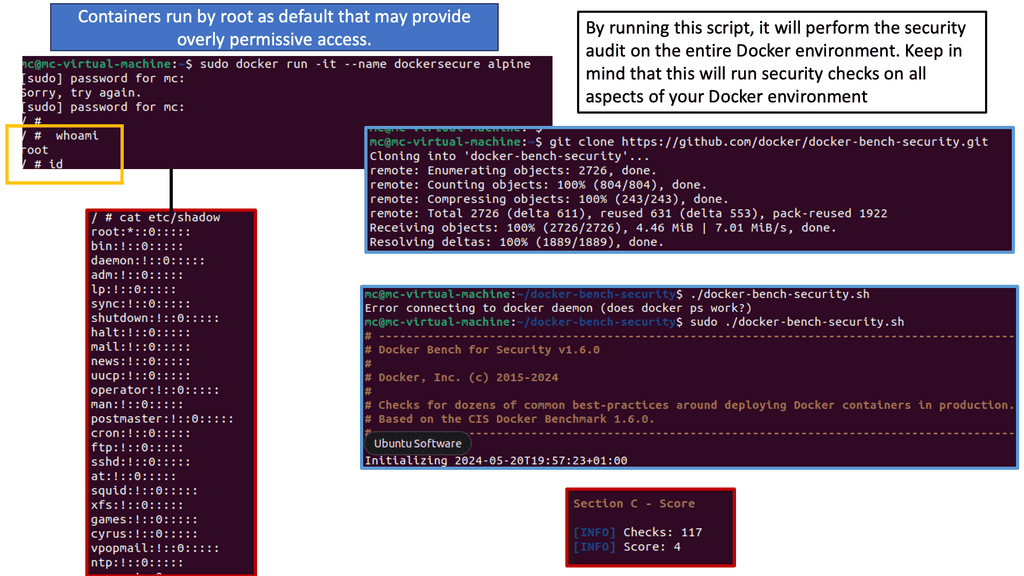

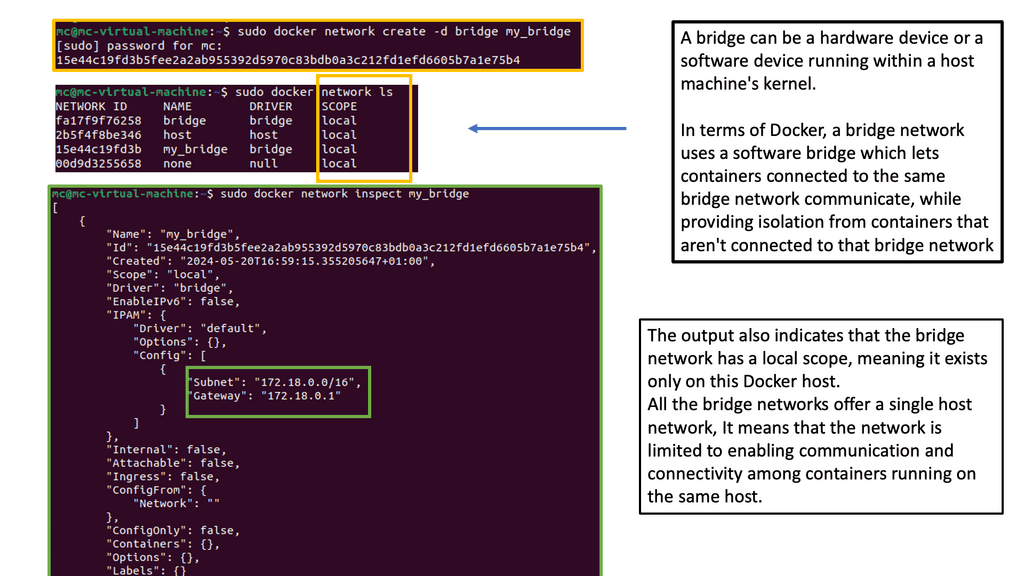

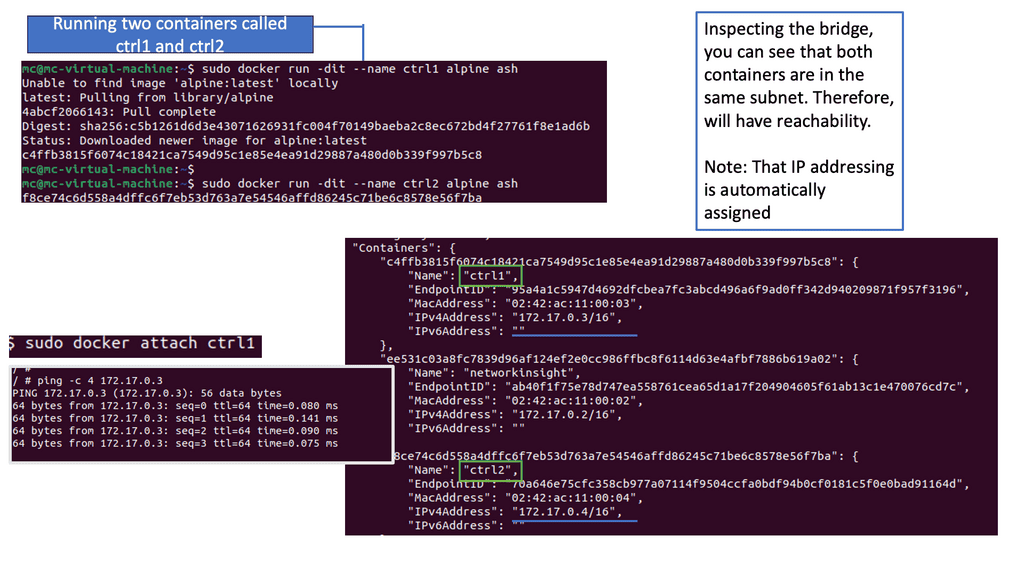

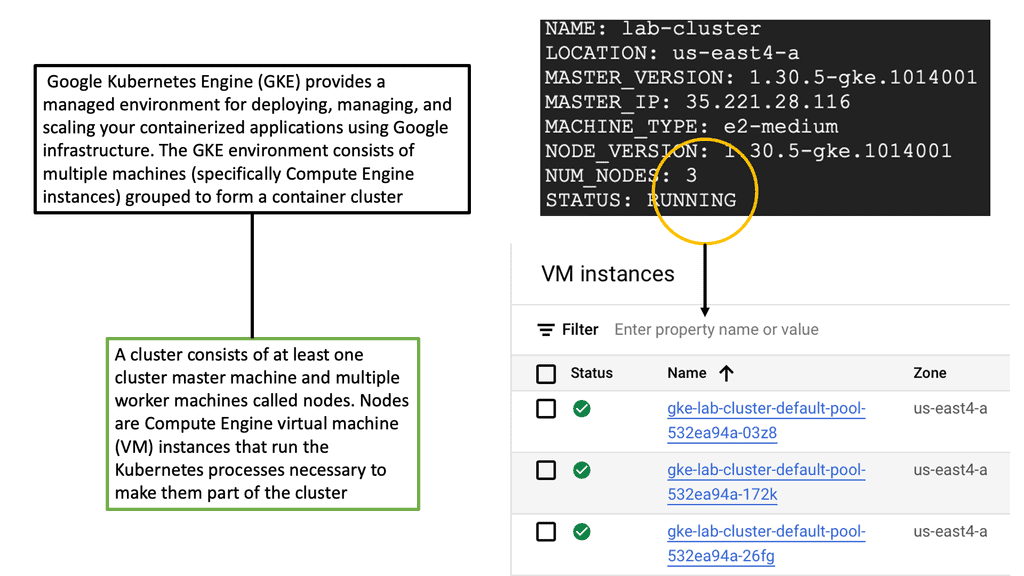

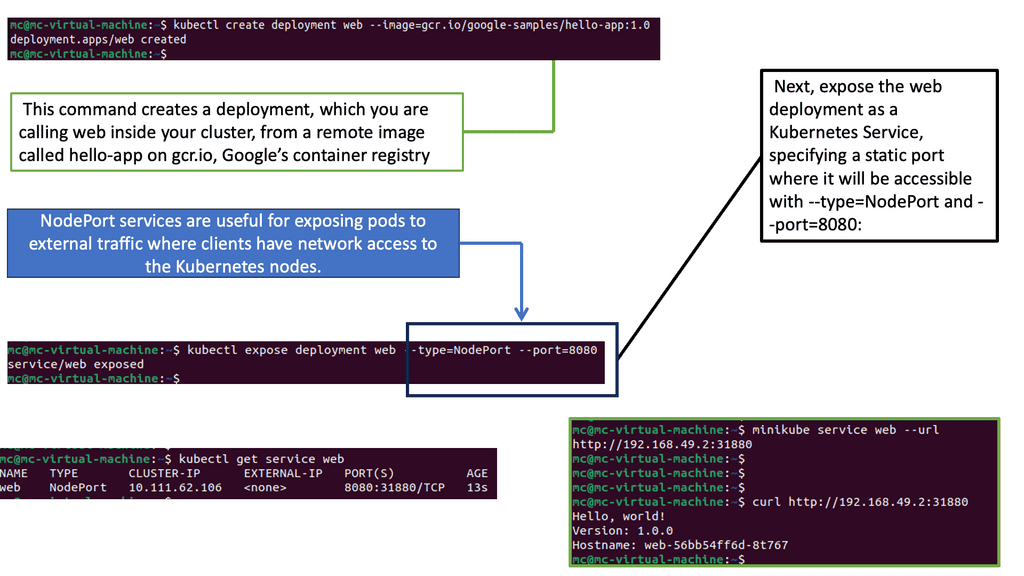

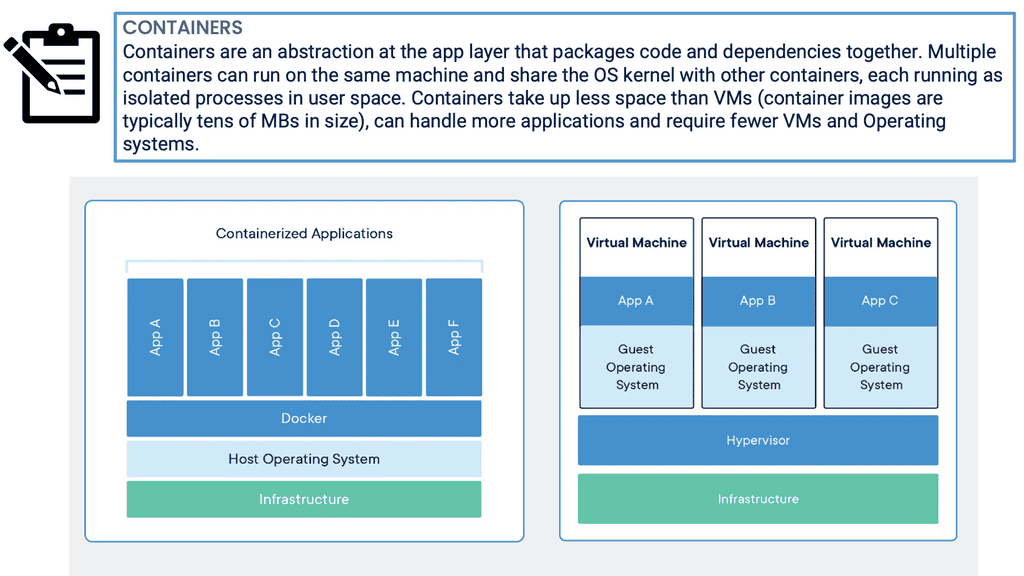

**Container Security Component – Docker Bench**

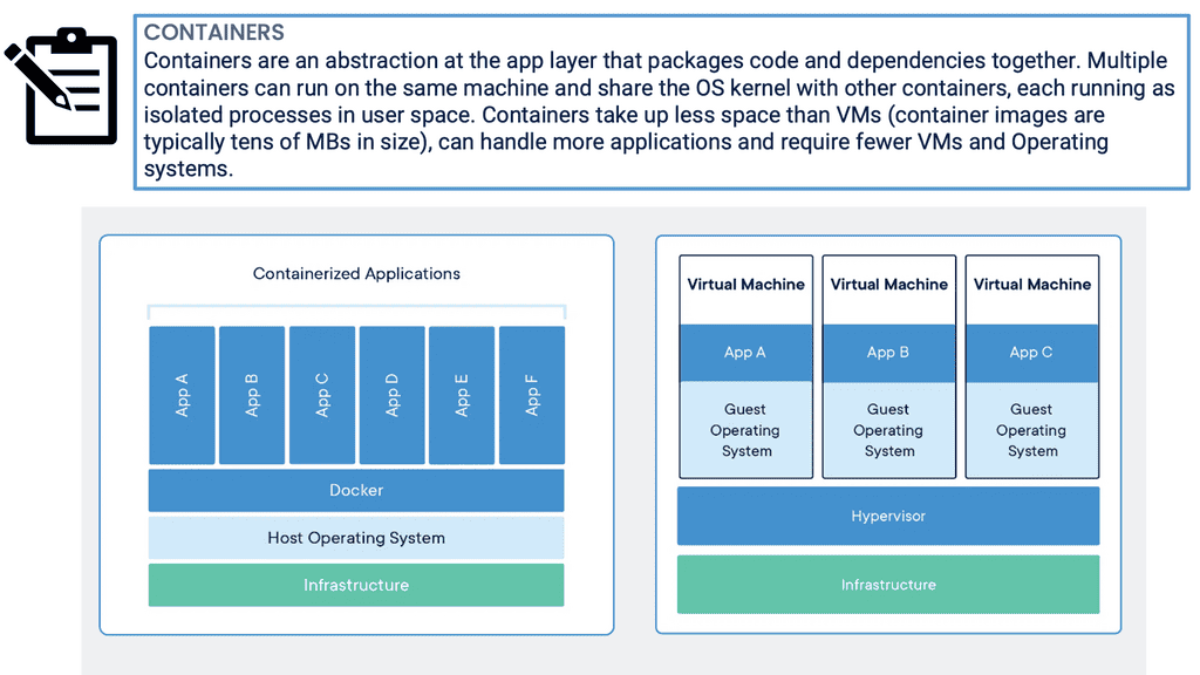

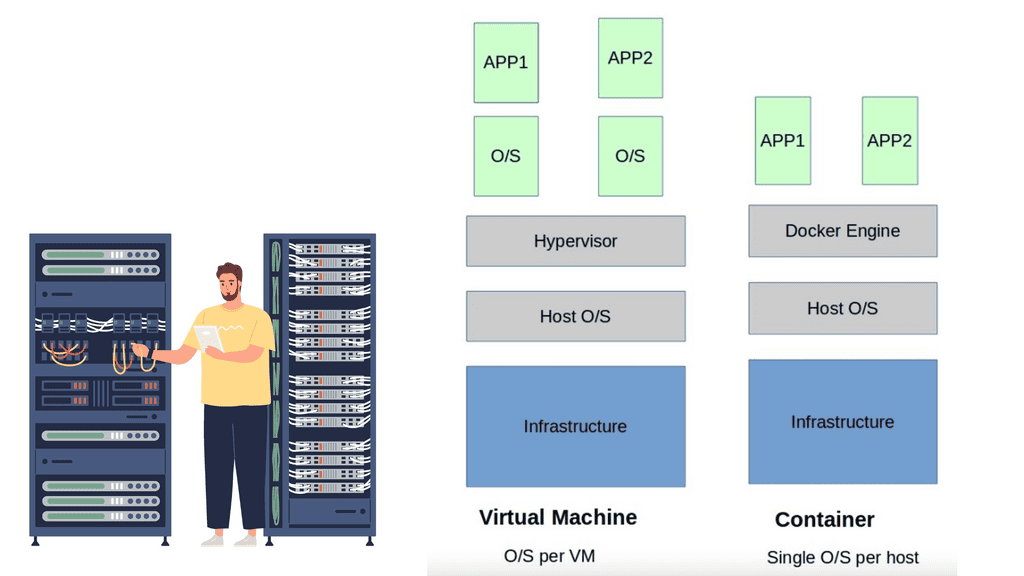

A Key Point: Understanding Container Isolation

Understanding container isolation is crucial to Docker security. Docker uses Linux kernel features such as namespaces and control groups (cgroups) to isolate containers from each other and from the host system: namespaces limit what a container can see, and cgroups limit the resources it can consume. This isolation lets containers run alongside each other while limiting the impact of a vulnerability in any one of them.

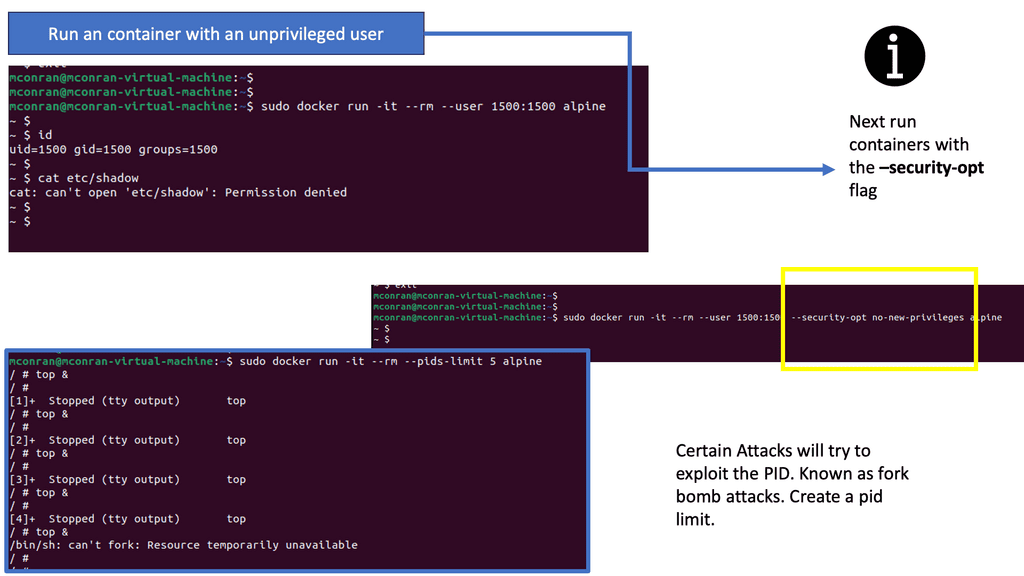

- Limit Container Privileges

One of the fundamental principles of Docker security is limiting container privileges. Unless the image or the run command specifies another user, processes in a Docker container run as root, which can be a significant security risk. It is therefore advisable to create and run containers with only the privileges necessary for their intended purpose. Following this principle limits the potential damage even if a container is compromised.
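As a minimal sketch of least privilege in practice, the helper below assembles a `docker run` command that runs as a non-root UID, drops all Linux capabilities, blocks privilege escalation, and mounts the root filesystem read-only. The flags are standard `docker run` options; the helper name, the default UID, and the image name are placeholders. It only builds the argument list, so you can inspect it or pass it to `subprocess.run`.

```python
from typing import List, Optional


def hardened_run_args(image: str, user: str = "10001:10001",
                      add_caps: Optional[List[str]] = None) -> List[str]:
    """Build `docker run` arguments that follow least privilege."""
    args = [
        "docker", "run", "--rm",
        "--user", user,                              # do not run as root inside the container
        "--cap-drop", "ALL",                         # start from zero Linux capabilities
        "--security-opt", "no-new-privileges:true",  # block setuid-style escalation
        "--read-only",                               # immutable root filesystem
    ]
    # Add back only the capabilities the workload genuinely needs.
    for cap in add_caps or []:
        args += ["--cap-add", cap]
    args.append(image)
    return args


if __name__ == "__main__":
    print(" ".join(hardened_run_args("example/app:1.0", add_caps=["NET_BIND_SERVICE"])))
```

Starting from `--cap-drop ALL` and adding capabilities back one at a time makes every privilege an explicit decision rather than an inherited default.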

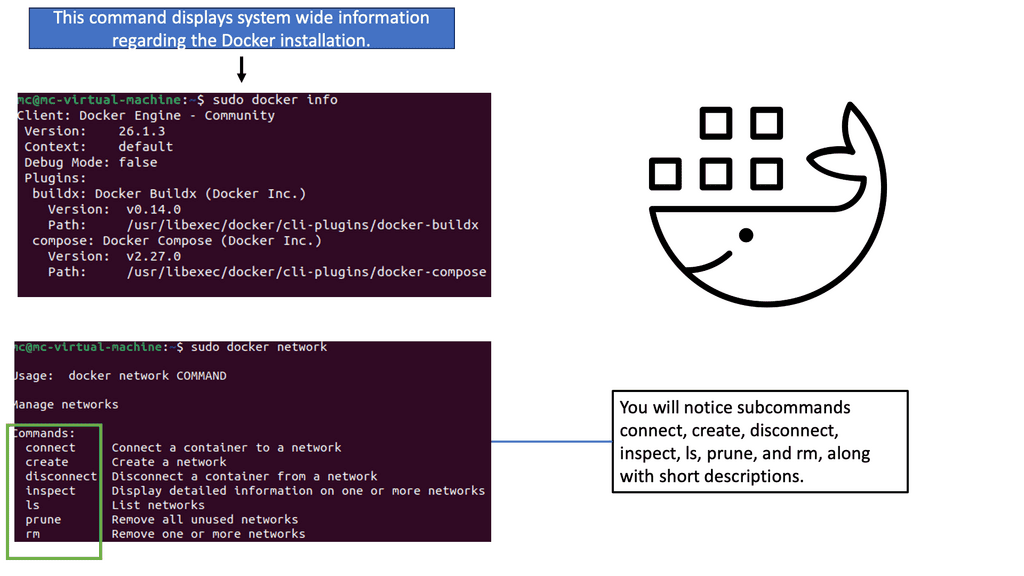

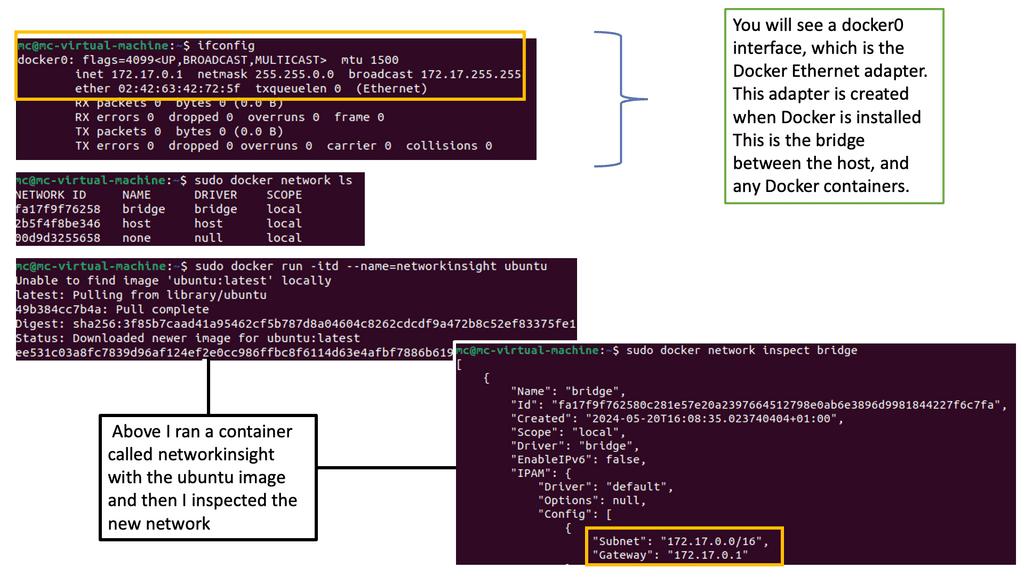

- Docker Bench Security

Docker Bench Security is an open-source script maintained under Docker's GitHub organization. It checks a Docker deployment against dozens of common best practices, based on the CIS Docker Benchmark, providing a standardized way to evaluate Docker security configurations. By running it, you can identify potential security vulnerabilities and misconfigurations in your Docker environment.

- Running Docker Bench

To run Docker Bench Security, clone the official repository from GitHub, change into the cloned directory, and run the docker-bench-security.sh script it provides (typically with root privileges). Docker Bench Security will then perform a series of security checks on your Docker installation and produce a detailed report highlighting any potential security issues.
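The report can be long, so it helps to summarize it. The sketch below counts result tags in a saved report, assuming each check line starts with a tag such as `[PASS]`, `[WARN]`, `[INFO]`, or `[NOTE]`; that is the format we have seen, but verify it against the version you run. The `summarize` helper is hypothetical, and it strips terminal color codes in case they were captured.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

ANSI = re.compile(r"\x1b\[[0-9;]*m")                      # terminal color codes, if present
TAG = re.compile(r"^\[(PASS|WARN|INFO|NOTE)\]\s*(.*)$")   # assumed line format


def summarize(lines: Iterable[str]) -> Tuple[Counter, List[str]]:
    """Count result tags and collect the WARN findings for follow-up."""
    counts: Counter = Counter()
    warnings: List[str] = []
    for raw in lines:
        m = TAG.match(ANSI.sub("", raw).strip())
        if not m:
            continue  # headers, blank lines, and other free text
        tag, text = m.groups()
        counts[tag] += 1
        if tag == "WARN":
            warnings.append(text)
    return counts, warnings
```

You can pass an open file directly, for example `summarize(open("bench.log"))` for a report you saved as bench.log (a hypothetical file name), and triage the WARN list first.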

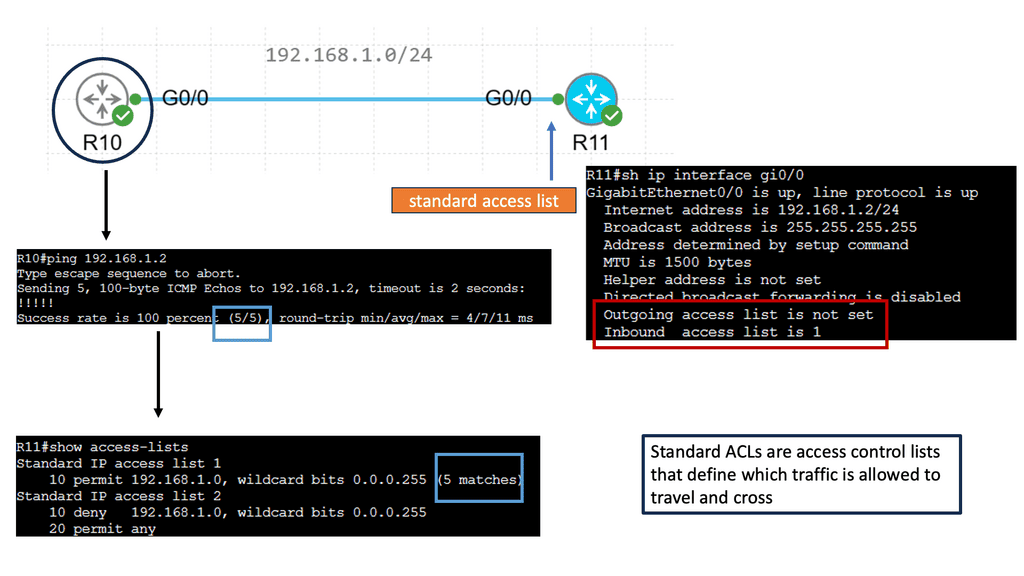

Access Lists for IPv4 & IPv6

IPv4 Standard Access Lists

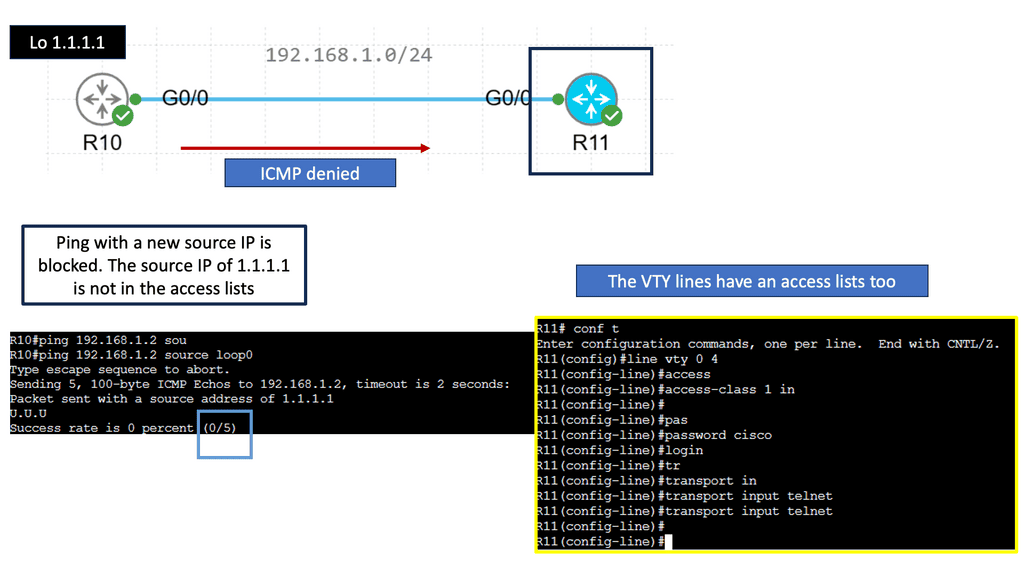

Standard access lists are fundamental components of network security. They enable administrators to filter traffic based on source IP addresses, offering a basic level of control. Network administrators can allow or deny specific traffic flows by carefully crafting access control entries (ACEs) within the standard ACL.

Implementing Access Lists

Implementing standard access lists brings several advantages to network security. Firstly, they provide a simple and efficient way to restrict access to specific network resources. Administrators can mitigate potential threats and unauthorized access attempts by selectively permitting or denying traffic based on source IP addresses. Standard access lists can also help optimize network performance by reducing unnecessary traffic flows.
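The behavior of a standard ACL can be sketched in a few lines: entries are checked top-down, the first match wins, only the source address is compared, and anything that matches no entry hits the implicit deny at the end. This sketch uses Python's `ipaddress` module with prefix notation instead of Cisco wildcard masks (a wildcard of 0.0.0.255 corresponds to /24); the entries themselves are hypothetical.

```python
import ipaddress
from typing import List, Tuple

# (action, source network) entries, evaluated top-down like a standard ACL.
ACL: List[Tuple[str, str]] = [
    ("deny", "192.168.10.50/32"),   # block one specific host
    ("permit", "192.168.10.0/24"),  # allow the rest of that subnet
]


def evaluate_standard_acl(acl: List[Tuple[str, str]], source: str) -> str:
    """Return the action of the first entry whose network contains the source."""
    src = ipaddress.ip_address(source)
    for action, network in acl:
        if src in ipaddress.ip_network(network):
            return action  # first match wins; later entries are never consulted
    return "deny"  # implicit deny at the end of every ACL
```

Order matters: with the two entries swapped, the /24 permit would match 192.168.10.50 first and the host-specific deny would never be reached.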

ACL Best Practices

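Following best practices when configuring standard access lists is crucial to achieving maximum effectiveness. First, place a standard ACL as close to the destination of the traffic as possible. Because a standard ACL matches only the source address, placing it near the source would block that source from every destination, not just the one you intend to protect. (Extended ACLs, which can also match destination, protocol, and port, are the ones placed close to the source, where they drop unwanted traffic early and avoid unnecessary processing.)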

Second, administrators should carefully plan and document the desired traffic filtering policies before implementing the ACL. This ensures clarity and makes future modifications easier. Lastly, regular monitoring and auditing of the ACL’s functionality is essential to maintaining a secure network environment.

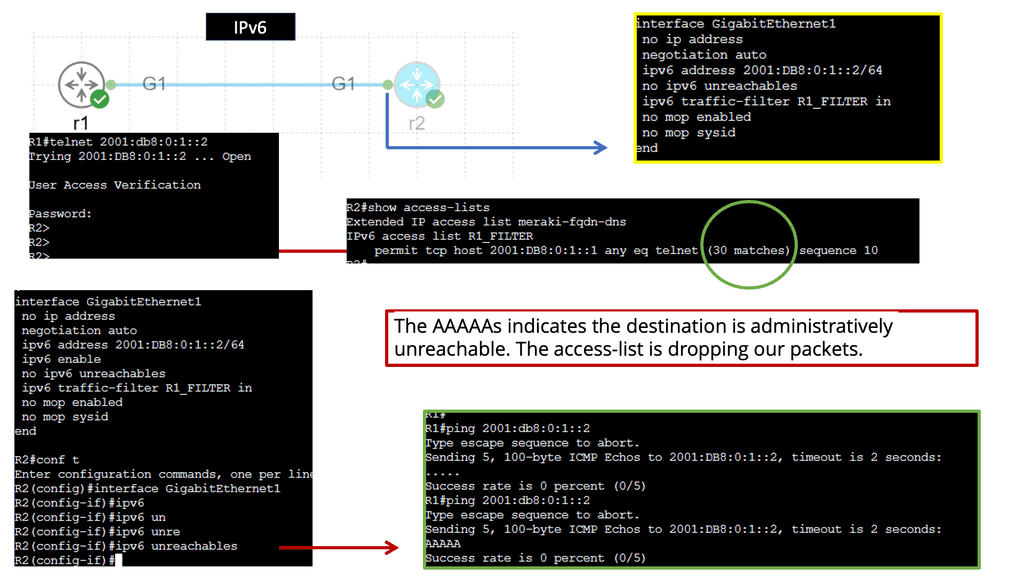

Understanding IPv6 Access-lists

IPv6 access lists are a fundamental part of network security architecture. They filter and control the flow of traffic based on specific criteria. Unlike their IPv4 counterparts, IPv6 access lists are designed to handle the larger address space provided by IPv6. They enable network administrators to define rules that determine which packets are allowed or denied access to a network.

Standard & Extended ACLs

IPv6 access lists can be categorized into two main types: standard and extended. Standard access lists are based on the source IPv6 address and allow or deny traffic accordingly. Extended access lists, on the other hand, consider additional parameters such as destination addresses, protocols, and port numbers. This flexibility makes extended access lists more powerful, but also more complex to configure. Note that on Cisco IOS, IPv6 ACLs are always named and can match on both source and destination, so in practice they behave much like IPv4 extended named ACLs.

Configuring IPv6 access lists

To configure IPv6 access lists, administrators use commands specific to their network devices, such as routers or switches (on Cisco IOS, for example, ipv6 access-list defines the list and ipv6 traffic-filter applies it to an interface). This involves defining access list entries, specifying permit or deny actions, and applying the access list to the desired interface. Proper configuration requires a clear understanding of the network topology and security requirements.
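To illustrate how an extended IPv6 entry differs from a source-only one, the sketch below matches on source prefix, destination prefix, protocol, and destination port, again with first-match semantics and an implicit deny. The entries use the 2001:db8::/32 documentation prefix and are hypothetical. On Cisco IOS, IPv6 ACLs also implicitly permit neighbor discovery (NS/NA) messages before the final implicit deny; this sketch omits that.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Entry:
    action: str            # "permit" or "deny"
    src: str               # source prefix
    dst: str               # destination prefix
    proto: Optional[str]   # "tcp", "udp", or None for any protocol
    dport: Optional[int]   # destination port, or None for any port


ACL6: List[Entry] = [
    Entry("permit", "2001:db8:10::/48", "2001:db8:20::80/128", "tcp", 443),
    Entry("deny", "2001:db8:10::/48", "2001:db8:20::/48", None, None),
]


def evaluate_ipv6_acl(acl: List[Entry], src: str, dst: str,
                      proto: str, dport: Optional[int] = None) -> str:
    s, d = ipaddress.IPv6Address(src), ipaddress.IPv6Address(dst)
    for e in acl:
        if s not in ipaddress.IPv6Network(e.src):
            continue
        if d not in ipaddress.IPv6Network(e.dst):
            continue
        if e.proto is not None and e.proto != proto:
            continue
        if e.dport is not None and e.dport != dport:
            continue
        return e.action  # first matching entry decides
    return "deny"  # implicit deny (ND exceptions omitted in this sketch)
```

HTTPS to the one web server is permitted, while any other traffic from that /48 to the destination /48 falls through to the explicit deny.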

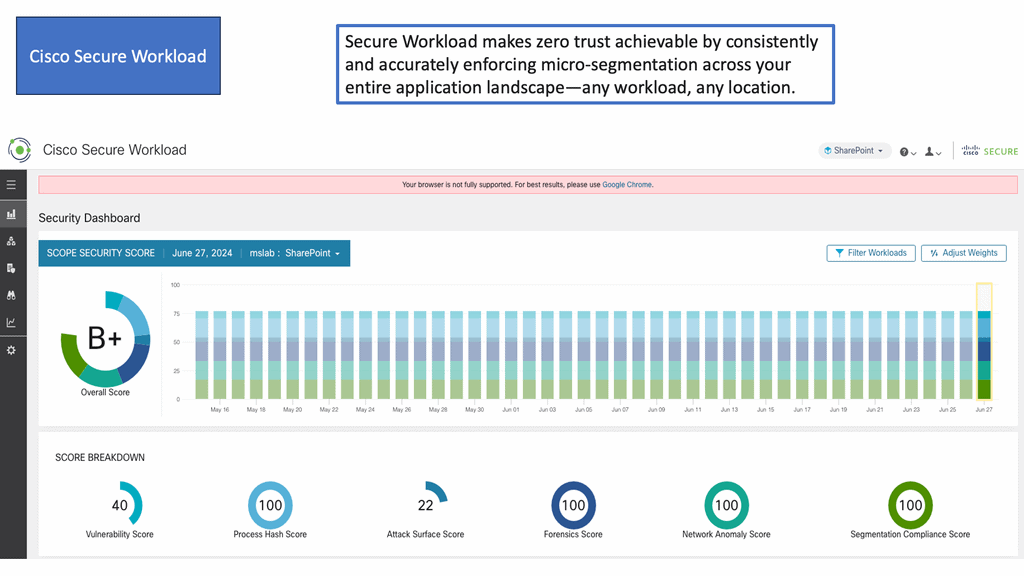

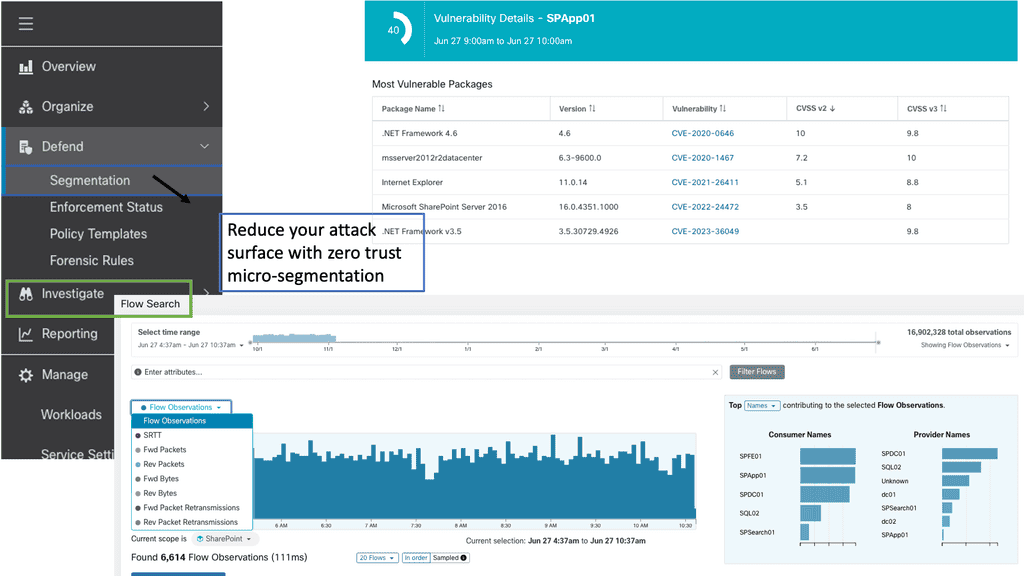

Example Product: Cisco Secure Workload

#### What is Cisco Secure Workload?

Cisco Secure Workload, formerly known as Tetration, is an advanced security platform that provides workload protection across on-premises, hybrid, and multi-cloud environments. It offers a unified approach to securing your applications by delivering visibility, security policy enforcement, and threat detection. By leveraging machine learning and behavioral analysis, Cisco Secure Workload helps protect your network against known and unknown threats.

#### Key Features of Cisco Secure Workload

1. **Comprehensive Visibility**:

One of the standout features of Cisco Secure Workload is its ability to provide complete visibility into your network traffic. This includes real-time monitoring of all workloads, applications, and their interdependencies, allowing you to identify vulnerabilities and potential threats promptly.

2. **Automated Security Policies**:

Cisco Secure Workload enables automated policy generation and enforcement, ensuring that your security measures are consistently applied across all environments. This reduces the risk of human error and helps keep your network compliant with industry standards and regulations.

3. **Advanced Threat Detection**:

Using advanced machine learning algorithms, Cisco Secure Workload can detect anomalous behavior and potential threats in real-time. This proactive approach allows you to respond to threats before they can cause significant damage to your network.

4. **Scalability and Flexibility**:

Whether your organization is operating on-premises, in the cloud, or in a hybrid environment, Cisco Secure Workload is designed to scale with your needs. It provides a flexible solution that can adapt to the unique requirements of your network architecture.

#### Benefits of Implementing Cisco Secure Workload

1. **Enhanced Security Posture**:

By providing comprehensive visibility and automated policy enforcement, Cisco Secure Workload helps you maintain a robust security posture. This minimizes the risk of data breaches and ensures that your sensitive information remains protected.

2. **Operational Efficiency**:

The automation capabilities of Cisco Secure Workload streamline your security operations, reducing the time and effort required to manage and enforce security policies. This allows your IT team to focus on more strategic initiatives.

3. **Cost Savings**:

By preventing security incidents and reducing the need for manual intervention, Cisco Secure Workload can lead to significant cost savings for your organization. Additionally, its scalability ensures that you only pay for the resources you need.

#### How to Implement Cisco Secure Workload

1. **Assessment and Planning**:

Begin by assessing your current network infrastructure and identifying the specific security challenges you face. This will help you determine the best way to integrate Cisco Secure Workload into your existing environment.

2. **Deployment**:

Deploy Cisco Secure Workload across your on-premises, cloud, or hybrid environment. Ensure that all critical workloads and applications are covered to maximize the effectiveness of the platform.

3. **Policy Configuration**:

Configure security policies based on the insights gained from the platform’s visibility features. Automate policy enforcement to ensure consistent application across all environments.

4. **Monitoring and Optimization**:

Continuously monitor your network using Cisco Secure Workload’s real-time analytics and threat detection capabilities. Regularly review and optimize your security policies to adapt to the evolving threat landscape.

Network Security Components

The Issue with Point Solutions

The security landscape is constantly evolving, and to have any chance of keeping up, security solutions must evolve too. This requires a more focused approach that continually develops security in line with today’s and tomorrow’s threats. The answer is not to keep buying point solutions that are not integrated, but to invest continuously in keeping the detection algorithms accurate and complete. With point solutions, by contrast, changing the firewall may mean buying a new physical or virtual device.

**Complex and scattered**

This kind of continuous improvement is impossible with point solutions that have complex integration points scattered throughout the network domain. It’s far more beneficial to update one algorithm than to update many point solutions dispersed throughout the network. Each point solution addresses a single issue and requires a considerable amount of integration. You must keep adding components to the stack, increasing management overhead and complexity, not to mention license costs.

Would you like to buy a car or all the parts?

Let’s say you are shopping for a new car. Would you prefer to build it yourself from all the different parts or buy a car that is already built? Security, as it has traditionally been delivered, is the box of parts.

So I have to add this part here, and that part there, and none of these parts connect. Each component must be carefully integrated with another. It’s your job to support, manage, and build the stack over time. For this, you must be an expert in all the different parts.

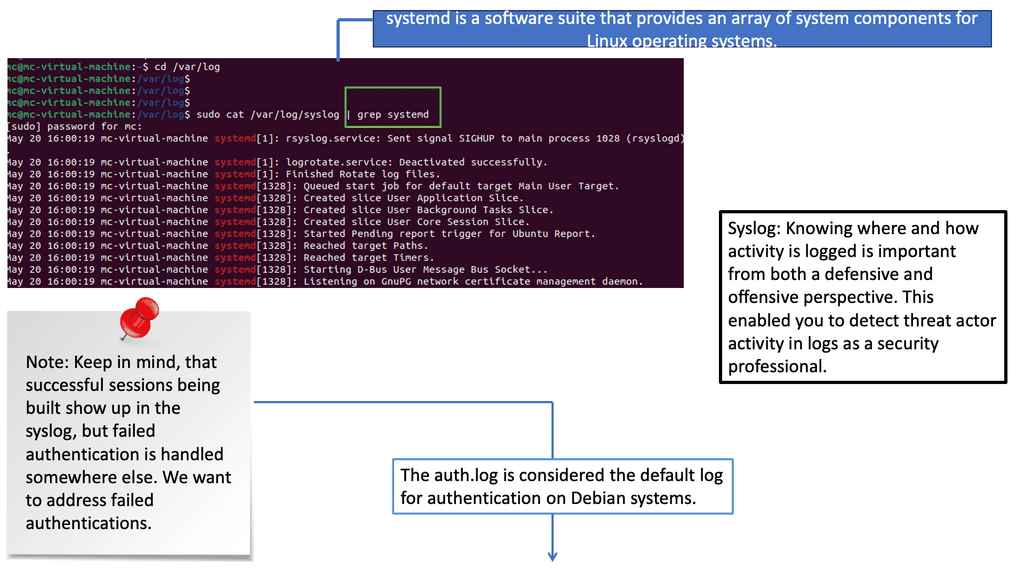

**Example: Log management**

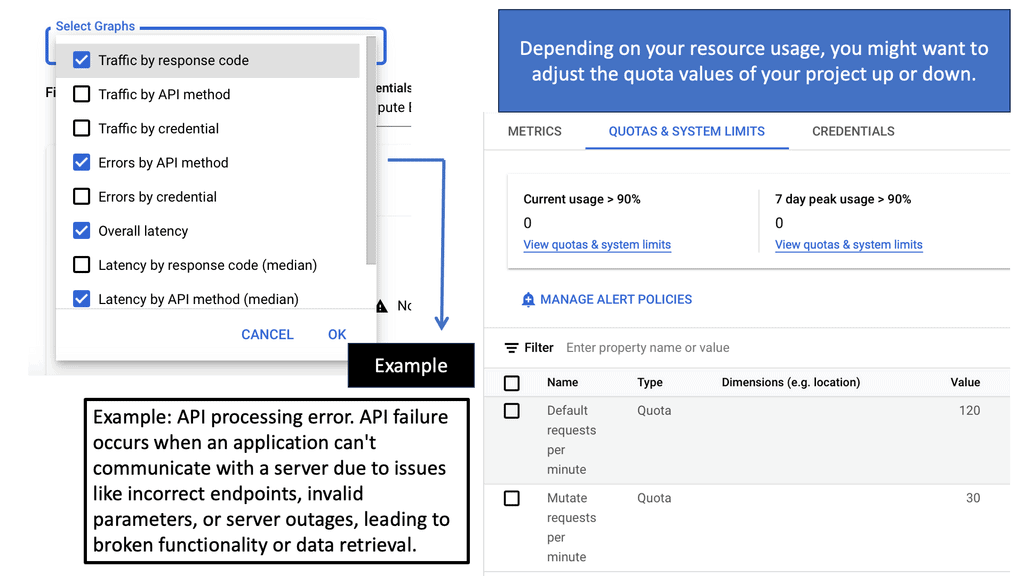

Let’s examine a log management system that needs to integrate numerous event sources, such as firewalls, proxy servers, and endpoint detection and response solutions. We also have the SIEM, which collects logs from multiple systems. It is challenging to deploy and requires a great deal of work to integrate with existing systems. How do logs get into the SIEM when a device is offline?

How do you normalize the data, write the rules to detect suspicious activity, and investigate whether alerts are legitimate? Considering the investment required, the results you gain from a SIEM are often poor, and considerable resources are needed to implement it successfully.
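To make the normalization problem concrete, here is a minimal sketch with two hypothetical log formats, a text firewall line and a JSON authentication event, mapped onto one common schema, followed by a simple detection rule (repeated failed logins from one source). Real SIEMs use far richer parsers and schemas; the formats, field names, and threshold here are assumptions.

```python
import json
import re
from collections import Counter
from typing import Dict, List, Optional

# Hypothetical firewall format: "<time> DENY src=<ip> dst=<ip> dport=<port>"
FW = re.compile(r"^(\S+) (ALLOW|DENY) src=(\S+) dst=(\S+) dport=(\d+)$")


def normalize(line: str) -> Optional[Dict[str, str]]:
    """Map either log format onto {time, source, action, src_ip}."""
    m = FW.match(line)
    if m:
        ts, action, src, _dst, _port = m.groups()
        return {"time": ts, "source": "firewall", "action": action.lower(), "src_ip": src}
    try:
        event = json.loads(line)  # hypothetical JSON auth event
    except json.JSONDecodeError:
        return None  # unknown format; a real pipeline would queue it for review
    if not isinstance(event, dict) or not all(k in event for k in ("ts", "result", "ip")):
        return None
    return {"time": event["ts"], "source": "auth",
            "action": "login_" + event["result"], "src_ip": event["ip"]}


def failed_login_alerts(records: List[Dict[str, str]], threshold: int = 3) -> List[str]:
    """Detection rule: alert on sources with at least `threshold` failed logins."""
    fails = Counter(r["src_ip"] for r in records if r["action"] == "login_failure")
    return [ip for ip, n in fails.items() if n >= threshold]
```

Only once both sources share the `src_ip` and `action` fields can a single rule correlate them; every additional source format needs its own parser, which is where much of the integration effort goes.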

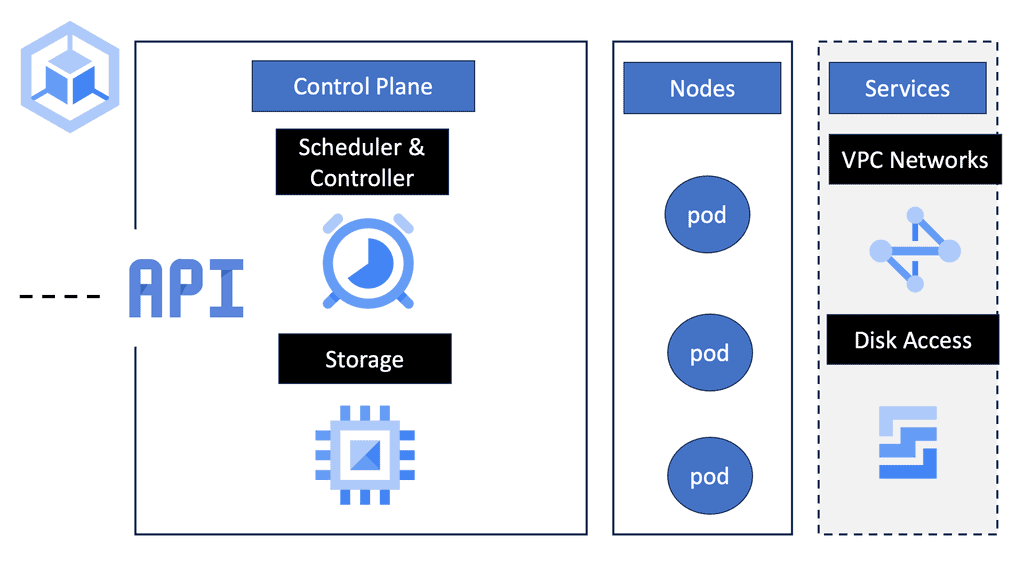

**Changes in perimeter location and types**

We also know this new paradigm spreads the perimeter, potentially increasing the attack surface with many new entry points. For example, if you are protecting a microservices environment, each unit of work represents a business function that needs security. So we now have many entry points to cover, moving security closer to the endpoint.

Network Security Components – The Starting Point:

Enforcement with network security layers: So, we need a multi-layered approach to network security that can implement security controls at different points and network security layers. With this approach, we are ensuring a robust security posture regardless of network design.

Therefore, the network design should become irrelevant to security. The network design can change; for example, adding a different cloud should not affect the security posture. The remainder of the post will discuss the standard network security components.

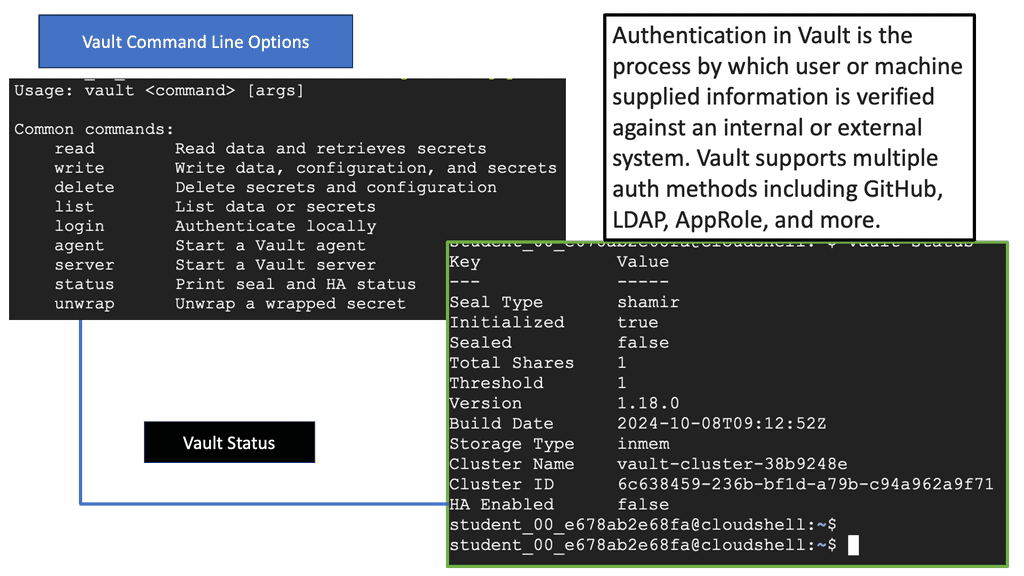

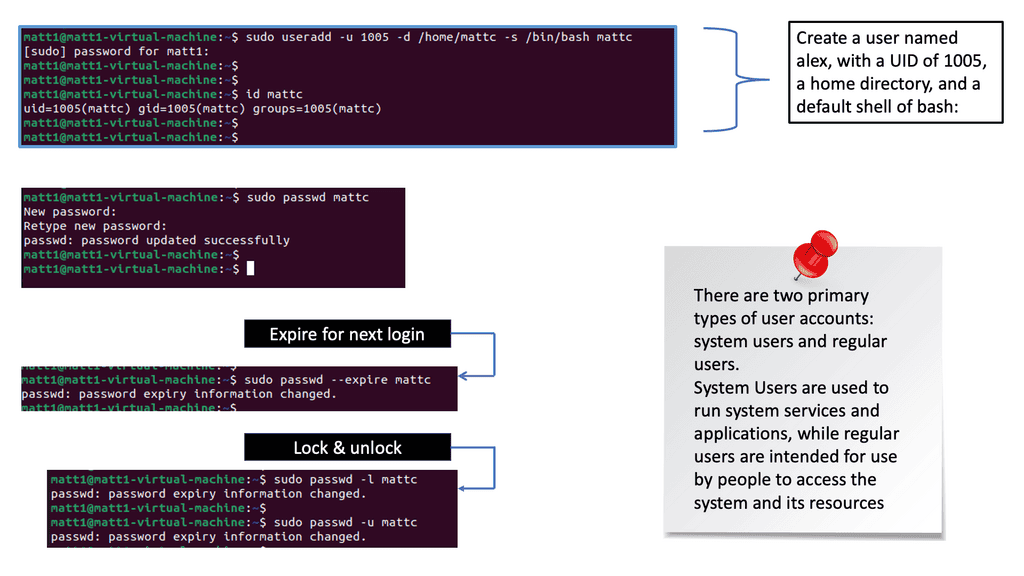

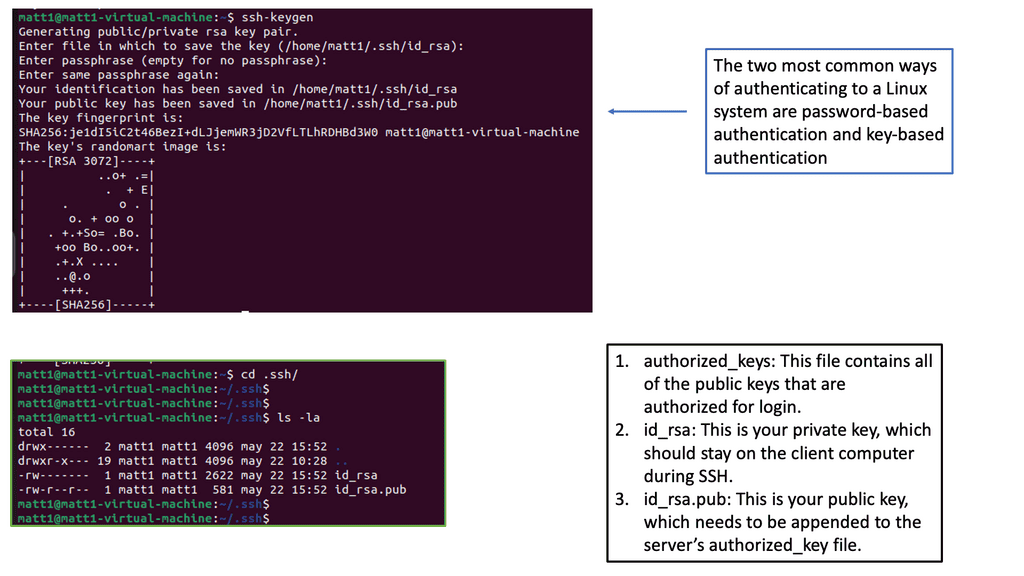

Understanding Identity Management

**The Role of Authentication**

Authentication is the process of verifying an individual or entity’s identity. It serves as a gatekeeper, granting access only to authorized users. Businesses and organizations can protect against unauthorized access and potential security breaches by confirming a user’s authenticity. In an era of rising cyber threats, weak authentication measures can leave individuals and organizations vulnerable to attacks.

Strong authentication is a crucial defense mechanism, ensuring only authorized users can access sensitive information or perform critical actions. It prevents unauthorized access, data breaches, identity theft, and other malicious activities.

There are several widely used authentication methods, each with its strengths and weaknesses. Here are a few examples:

1. Password-based authentication: This is the most common method where users enter a combination of characters as their credentials. However, it is prone to vulnerabilities such as weak passwords, password reuse, and phishing attacks.

2. Two-factor authentication (2FA): This method adds an extra layer of security by requiring users to provide a second form of authentication, such as a unique code sent to their mobile device. It significantly reduces the risk of unauthorized access.

3. Biometric authentication: Leveraging unique physical or behavioral traits like fingerprints, facial recognition, or voice patterns, biometric authentication offers a high level of security and convenience. However, it may raise privacy concerns and be susceptible to spoofing attacks.
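On the server side, password-based authentication should never compare against stored plaintext. A common pattern, sketched here with Python's standard library, is to store a random per-user salt plus a slow PBKDF2 hash and to compare in constant time at login. The iteration count is an illustrative assumption; follow current guidance (OWASP publishes recommendations) for production values, or use a dedicated password-hashing scheme such as scrypt or Argon2.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 600_000  # illustrative work factor; tune for your hardware and guidance


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key) to store instead of the password."""
    salt = os.urandom(16)  # a unique random salt defeats precomputed tables
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_key)
```

Hashing does not fix weak or reused passwords, which is why the second factors described next matter.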

Enhancing Authentication with Multi-factor Authentication (MFA)

Multi-factor authentication (MFA) combines multiple authentication factors to strengthen security further. By utilizing a combination of something the user knows (password), something the user has (smartphone or token), and something the user is (biometric data), MFA provides an additional layer of protection against unauthorized access.
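A common "something the user has" factor is a time-based one-time password (TOTP), defined in RFC 6238: an authenticator app and the server share a secret, and each derives a short code from the current 30-second time step. The sketch below implements the RFC's default HMAC-SHA1 variant with the standard library; secret provisioning, clock-drift windows, and rate limiting are left out.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP using HMAC-SHA1."""
    counter = int((time.time() if at is None else at) // step)
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str) -> bool:
    # Constant-time comparison; a real server would also accept +/- one time step.
    return hmac.compare_digest(totp(secret), submitted)


# RFC 6238 test vector: ASCII secret "12345678901234567890", T = 59 s, 8 digits.
print(totp(b"12345678901234567890", at=59, digits=8))  # 94287082
```

Because the code depends on a secret stored on the device, a stolen password alone is no longer enough to log in.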

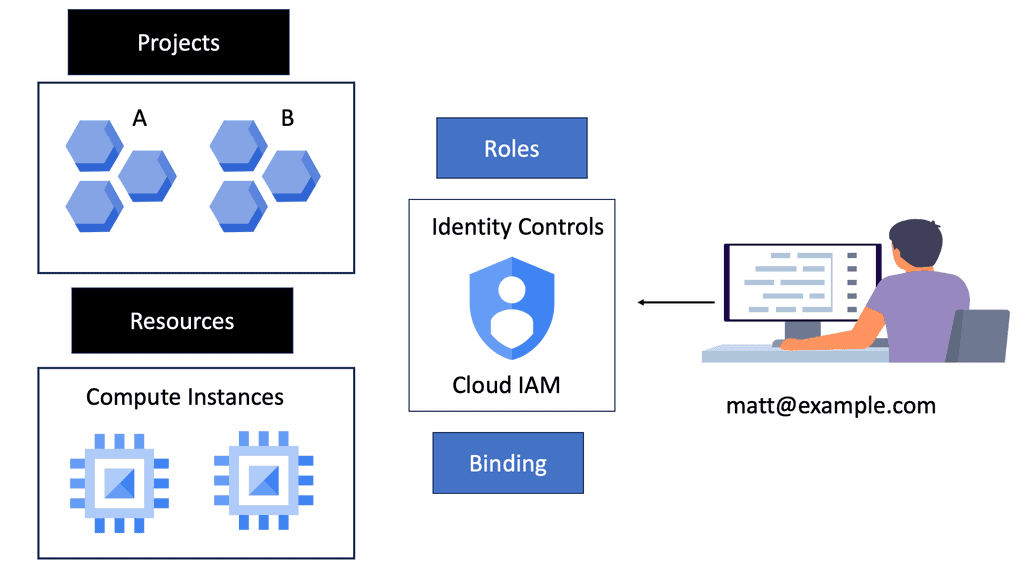

**The Role of Authorization**

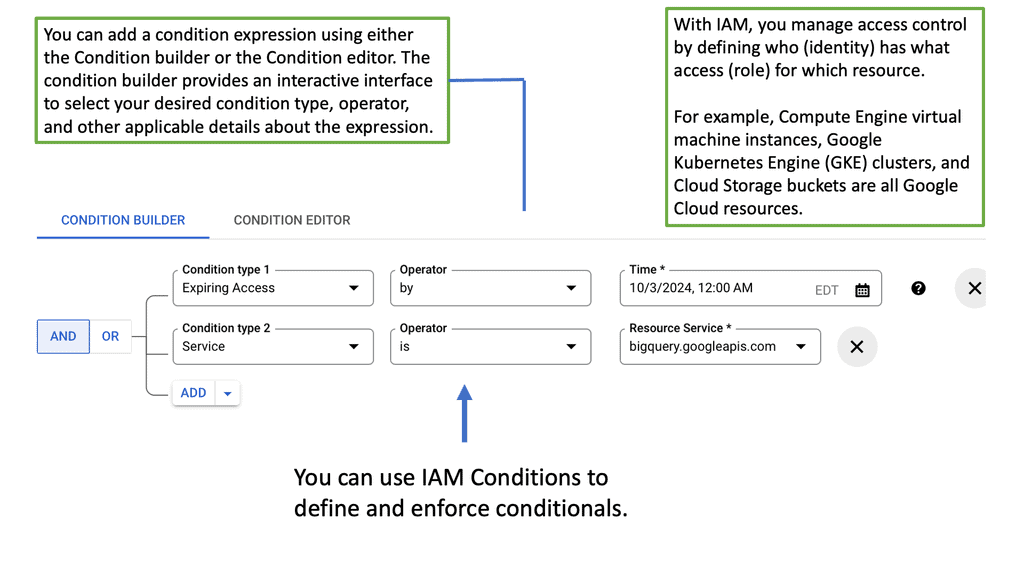

Authorization is the gatekeeper of access control. It determines who has the right to access specific resources within a system. By setting up rules and permissions, organizations can define which users or groups can perform certain actions, view specific data, or execute particular functions. This layer of security ensures that only authorized individuals can access sensitive information, reducing the risk of unauthorized access or data breaches.

A. Granular Access Control: One key benefit of authorization is the ability to apply granular access control. Rather than providing unrestricted access to all resources, organizations can define fine-grained permissions based on roles, responsibilities, and business needs. This ensures that individuals only have access to the resources required to perform their tasks, minimizing the risk of accidental or deliberate data misuse.

B. Role-Based Authorization: Role-based authorization is a widely adopted approach that simplifies access control management. By assigning roles to users, organizations can streamline granting and revoking access rights. Roles can be structured hierarchically, allowing for easy management of permissions across various levels of the organization. This enhances security and simplifies administrative tasks, as access rights can be managed at a group level rather than individually.

C. Authorization Policies and Enforcement: To enforce authorization effectively, organizations must establish robust policies that govern access control. These policies define the rules and conditions for granting or denying resource access. They can be based on user attributes, such as job title or department, and on contextual factors, such as time of day or location. By implementing a comprehensive policy framework, organizations can ensure access control aligns with their security requirements and regulatory obligations.
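The three ideas above, granular permissions, hierarchical roles, and context-aware policy, can be sketched together. In this minimal example, which is not any particular product's model, roles inherit permissions from their parent role, and a contextual condition (business hours for administrative actions) is checked on top of the role check. Role names, permissions, and the hours rule are hypothetical.

```python
from datetime import datetime
from typing import Dict, Optional, Set, Tuple

# Role -> (parent role, directly granted permissions). Hypothetical hierarchy.
ROLES: Dict[str, Tuple[Optional[str], Set[str]]] = {
    "employee": (None, {"read:wiki"}),
    "engineer": ("employee", {"read:repo", "write:repo"}),
    "admin": ("engineer", {"manage:users"}),
}


def permissions(role: str) -> Set[str]:
    """Collect a role's permissions, including everything it inherits."""
    perms: Set[str] = set()
    current: Optional[str] = role
    while current is not None:
        parent, granted = ROLES[current]
        perms |= granted
        current = parent
    return perms


def is_authorized(role: str, permission: str, when: datetime) -> bool:
    # Contextual rule (assumption): administrative actions only from 08:00 to 18:00.
    if permission.startswith("manage:") and not 8 <= when.hour < 18:
        return False
    return permission in permissions(role)
```

Granting an engineer a new permission means editing one role entry, and every admin inherits it automatically.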

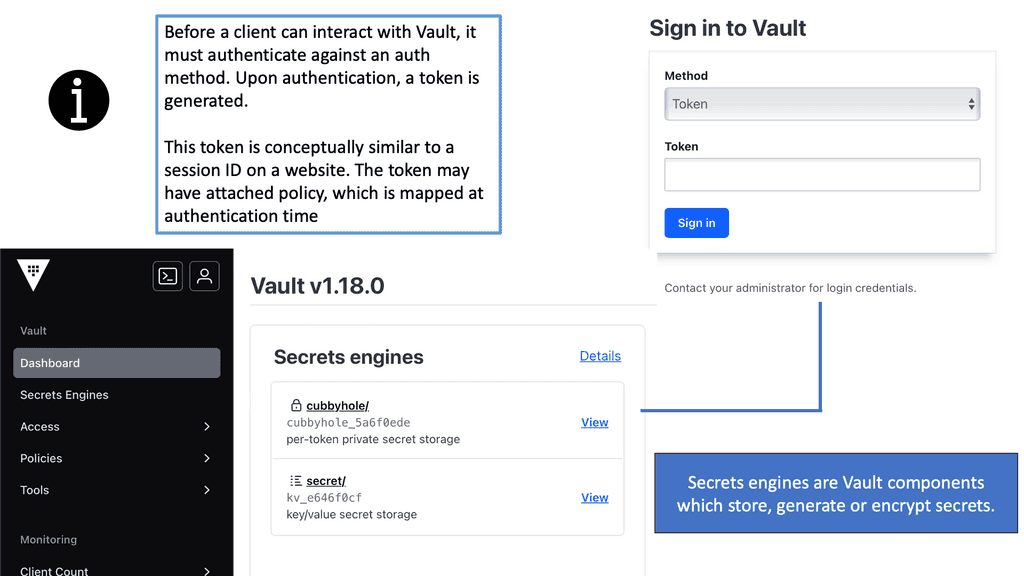

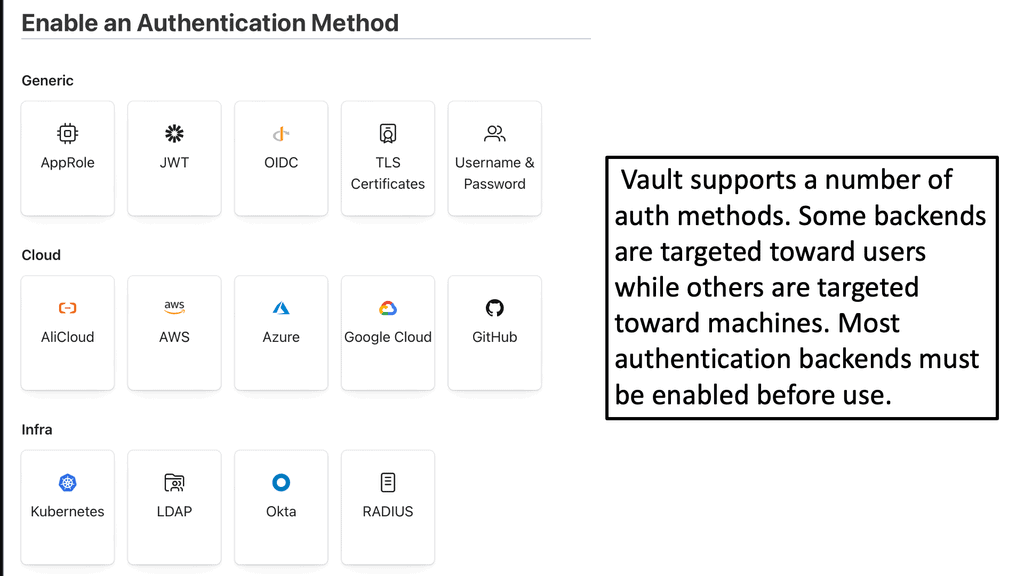

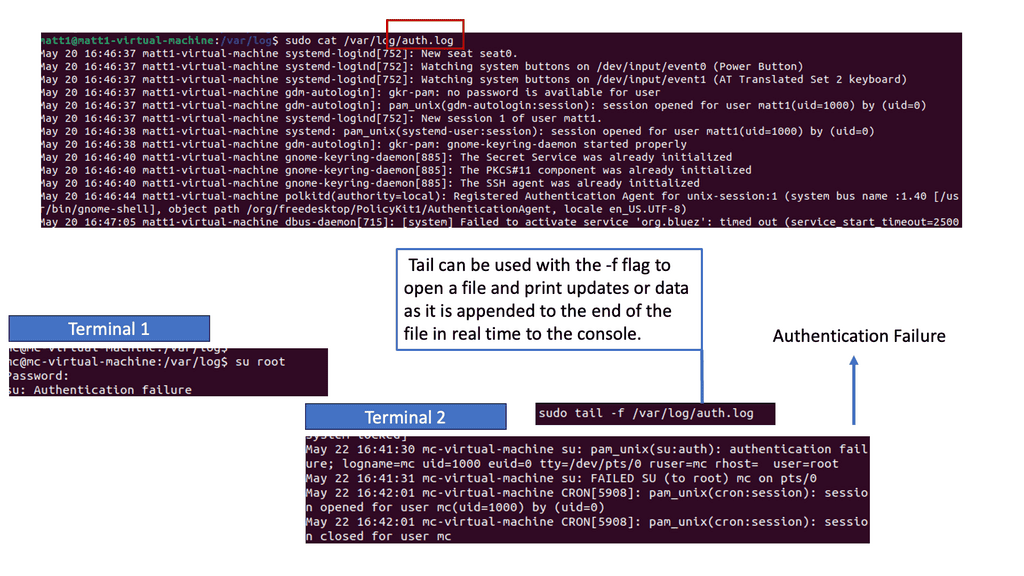

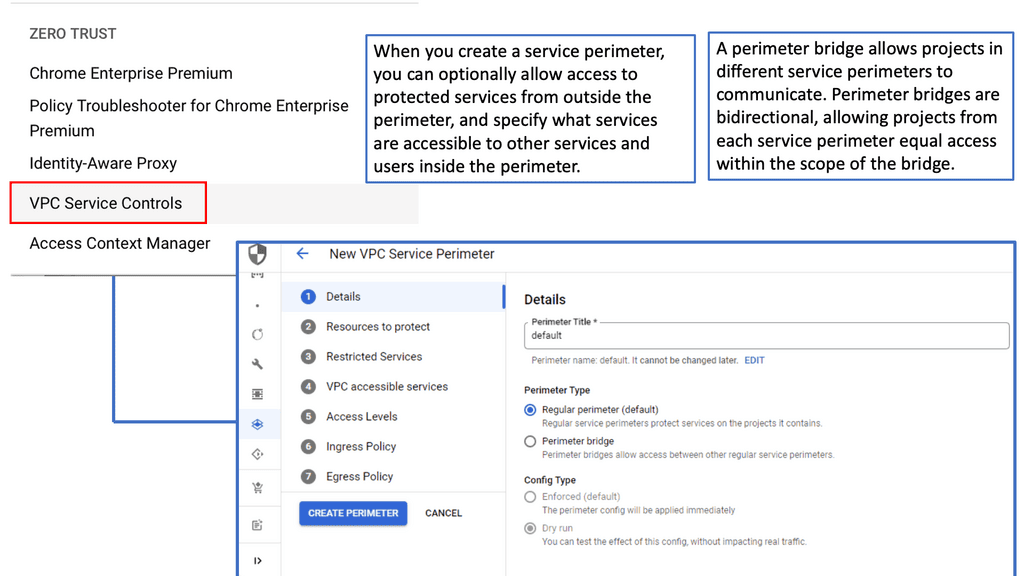

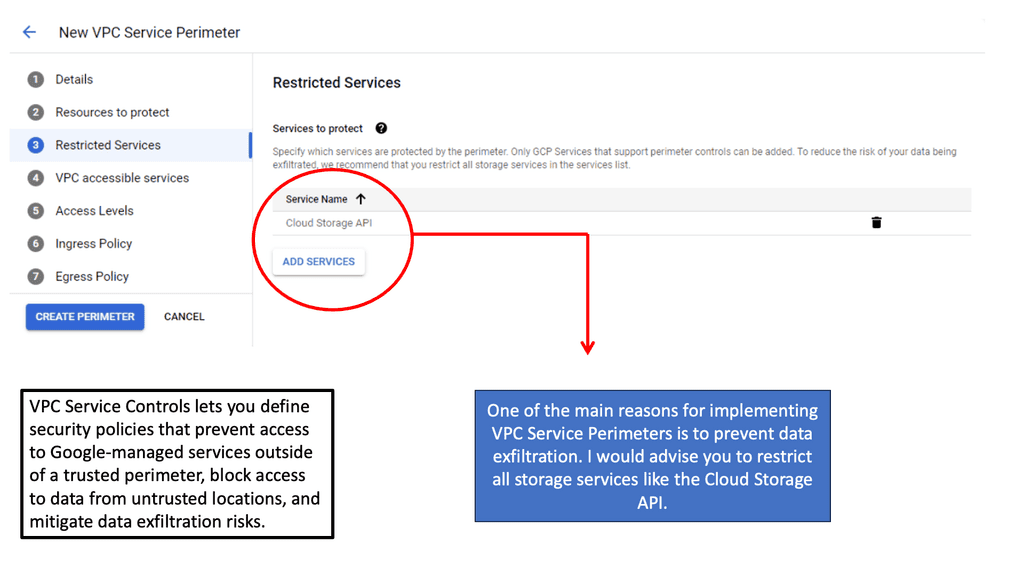

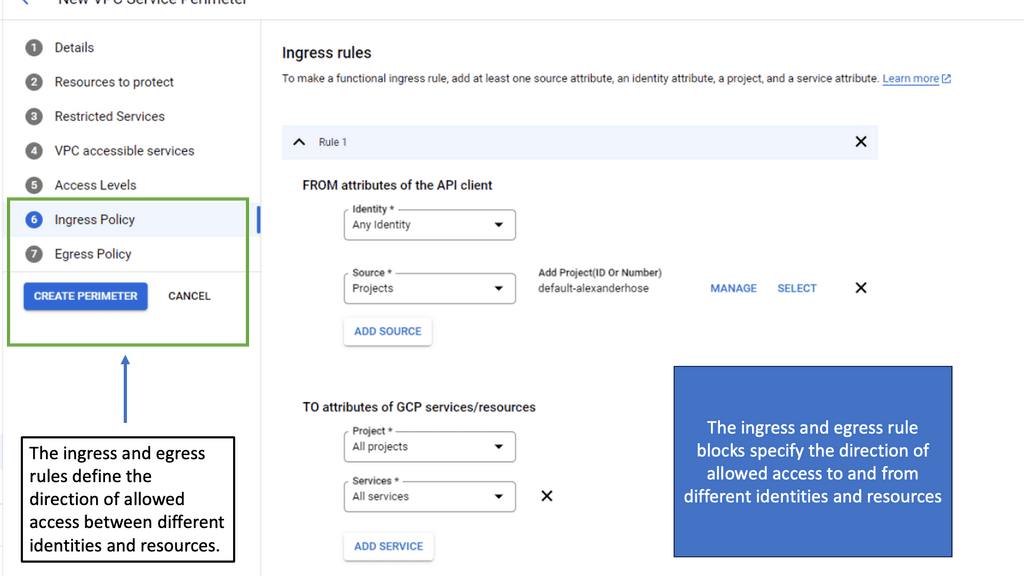

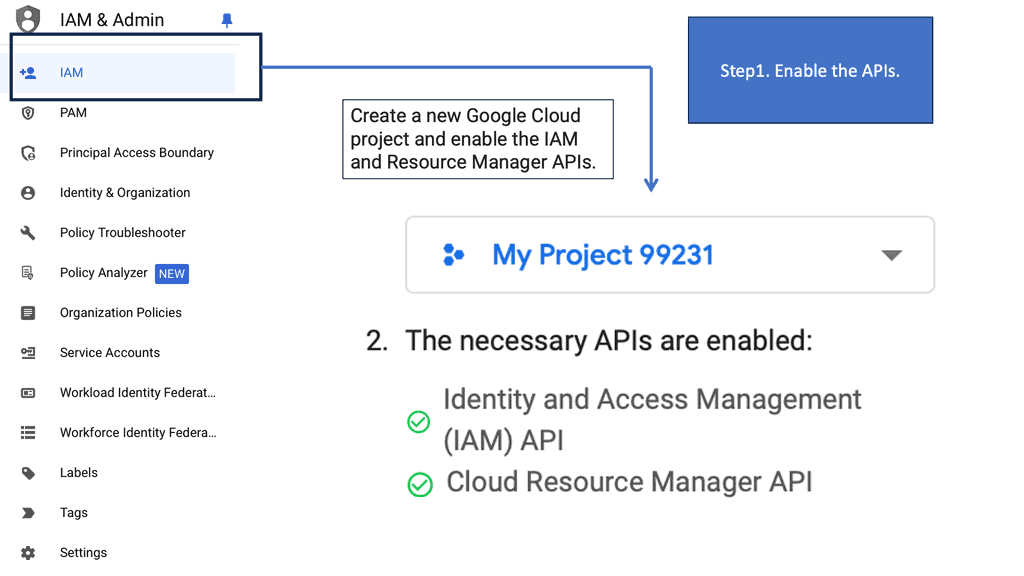

**Step 1: Access control**

Firstly, we need some access control. This is the first step to security. Bad actors are not picky about location when launching an attack. An attack can come from literally anywhere and at any time. Therefore, network security starts with access control carried out with authentication, authorization, accounting (AAA), and identity management.

Authentication proves that the person or service is who they say they are. Authorization allows them to carry out tasks related to their role. Identity management is all about managing the attributes associated with the user, group of users, or another identity that may require access. The following figure shows an example of access control. More specifically, network access control.

Identity-centric access control

It is best to base identity on logical attributes, such as multi-factor authentication (MFA), a transport layer security (TLS) certificate, the application service, or a logical label/tag. Be careful when using labels/tags across security domains.

Policies are then based on these logical attributes rather than on the IP addresses you may have used in the past. This results in an identity-centric design built around the user identity, not the IP address.
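A minimal sketch of identity-centric policy: rules reference workload labels instead of IP addresses, so a workload keeps its policy when its address changes. The label keys and values and the `allowed` helper are hypothetical; where labels come from (orchestration metadata, certificates, and so on) depends on the platform.

```python
from typing import Dict, List

# Rules reference labels, never IP addresses. Hypothetical labels and ports.
RULES: List[dict] = [
    {"from": {"app": "web"}, "to": {"app": "orders"}, "port": 8443},
    {"from": {"app": "orders"}, "to": {"app": "db", "env": "prod"}, "port": 5432},
]


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A selector matches when every key/value it lists is present on the workload."""
    return all(labels.get(k) == v for k, v in selector.items())


def allowed(src_labels: Dict[str, str], dst_labels: Dict[str, str], port: int) -> bool:
    """Allow only if some rule matches both endpoints and the port; otherwise deny."""
    return any(
        matches(rule["from"], src_labels)
        and matches(rule["to"], dst_labels)
        and rule["port"] == port
        for rule in RULES
    )
```

Because the rule says `app=web` rather than an address, a web workload rescheduled onto a new IP keeps exactly the same access with no rule change.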

Once the initial security controls are passed, a firewall ensures that users can access only the services they are allowed to. These devices decide who gets access to which parts of the network. Depending on the design, the network is divided into different zones or micro-segments; micro-segmentation is more granular than traditional segmentation.

Dynamic access control

Access control is the most critical component of an organization’s cybersecurity protection. For too long, access control has been based on static entitlements. Now, we need dynamic access control, with decisions made in real time. Access control must support an agile IT approach with dynamic workloads across multiple cloud environments.

A pivotal point of access control is that it is dynamic and real-time, constantly assessing and determining the risk level, thereby preventing unauthorized access and threats such as a UDP scan. We also have zero trust network design tools, such as single packet authorization (SPA), that keep the network dark until all required security controls are passed. Once they are passed, access is granted.
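The idea behind SPA can be sketched as follows: the client sends one packet carrying a timestamp, a random nonce, and an HMAC computed with a pre-shared key, and the server grants access only if the HMAC verifies, the timestamp is fresh, and the nonce has not been seen before. Invalid packets get no reply, so the service stays dark. This is a simplified illustration under those assumptions, not the wire format of any SPA implementation such as fwknop; real implementations also encrypt the payload and then open a temporary firewall rule for the client.

```python
import hashlib
import hmac
import os
import struct
import time
from typing import Optional, Set

KEY = os.urandom(32)  # pre-shared key (in practice provisioned out of band)
MAX_AGE = 30          # seconds a packet stays valid (assumption)


def build_spa_packet(key: bytes, now: Optional[float] = None) -> bytes:
    """Client side: timestamp (8 bytes) + nonce (16 bytes) + HMAC-SHA256 (32 bytes)."""
    ts = int(time.time() if now is None else now)
    body = struct.pack(">Q", ts) + os.urandom(16)
    return body + hmac.new(key, body, hashlib.sha256).digest()


class SpaServer:
    def __init__(self, key: bytes) -> None:
        self.key = key
        self.seen: Set[bytes] = set()  # used nonces; a real server would expire old ones

    def authorize(self, packet: bytes, now: Optional[float] = None) -> bool:
        if len(packet) != 56:
            return False  # drop silently: the server never replies
        body, tag = packet[:24], packet[24:]
        expected = hmac.new(self.key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return False  # wrong key or tampered packet
        ts = struct.unpack(">Q", body[:8])[0]
        current = time.time() if now is None else now
        if abs(current - ts) > MAX_AGE:
            return False  # stale or future-dated packet
        nonce = body[8:]
        if nonce in self.seen:
            return False  # replayed packet
        self.seen.add(nonce)
        return True  # here a real server would open the port for this client only
```

The freshness window and nonce set together stop an attacker who captures a valid packet from replaying it later.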

**Step 2: The firewall design locations**

A firewalling strategy can offer your environment different firewall types, capabilities, and levels of defense in depth. Each firewall type, positioned in a different part of the infrastructure, forms a security layer, providing defense in depth and a robust security architecture. At a high level, there are two firewalling types: internal firewalling, which can be distributed among the workloads, and border-based firewalling.

Firewalling: Different network security layers

The different firewall types offer capabilities ranging from basic packet filters, reflexive ACLs, and stateful inspection to next-generation features such as micro-segmentation and dynamic access control. They can be physical or virtualized.
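The step from a plain packet filter to stateful inspection (or a reflexive ACL) can be sketched in a few lines: outbound connections create state entries, and inbound packets are admitted only if they are the reverse of a tracked flow. This toy sketch ignores TCP flags, timeouts, and sequence numbers, all of which real stateful firewalls track; the class and method names are hypothetical.

```python
from typing import Set, Tuple

# (protocol, src_ip, src_port, dst_ip, dst_port)
Flow = Tuple[str, str, int, str, int]


class StatefulFilter:
    def __init__(self) -> None:
        self.state: Set[Flow] = set()

    def outbound(self, flow: Flow) -> bool:
        """Inside hosts may initiate; remember the flow so replies can return."""
        self.state.add(flow)
        return True

    def inbound(self, flow: Flow) -> bool:
        """Allow inbound traffic only if it is the reverse of a tracked flow."""
        proto, src, sport, dst, dport = flow
        return (proto, dst, dport, src, sport) in self.state
```

A stateless packet filter would need a standing rule permitting inbound traffic from port 443 to every high port to achieve the same result, which is far broader than admitting only the replies to connections the inside host actually opened.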

Firewalls purpose-built for a particular role should not be repurposed to carry out functions intended for a different firewall type. The following diagram lists the different firewall types; around nine firewall types work at various layers in the network.

Example: Firewall security policy

A firewall is an essential part of an organization’s comprehensive security policy. A security policy defines the goals, objectives, and procedures of security, all of which can be implemented with a firewall. There are many different firewalling modes and types.

Generally, however, firewalls can focus on the packet header, the packet payload (the actual data the packet carries), or both, as well as on the session’s content, the establishment of a circuit, and possibly other assets. Most firewalls concentrate on only one of these. The most common filtering focus is the packet’s header, with the packet’s payload a close second.

Firewalls come in various sizes and flavors. The most typical firewall is a dedicated system or appliance that sits in the network and segments an “internal” network from the “external” Internet. The other common type is the host-based firewall, which runs on an individual system and protects only that host.

The primary difference between these two types of firewalls is the number of hosts the firewall protects. Within the network firewall type, the primary classifications of devices include the following:

- Packet-filtering firewalls (stateful and nonstateful)

- Circuit-level gateways

- Application-level gateways

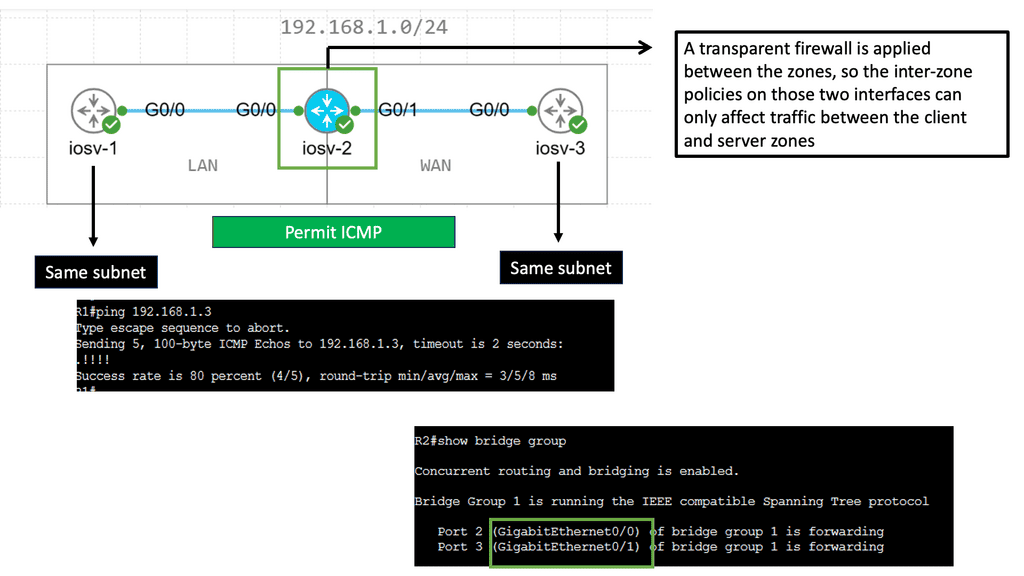

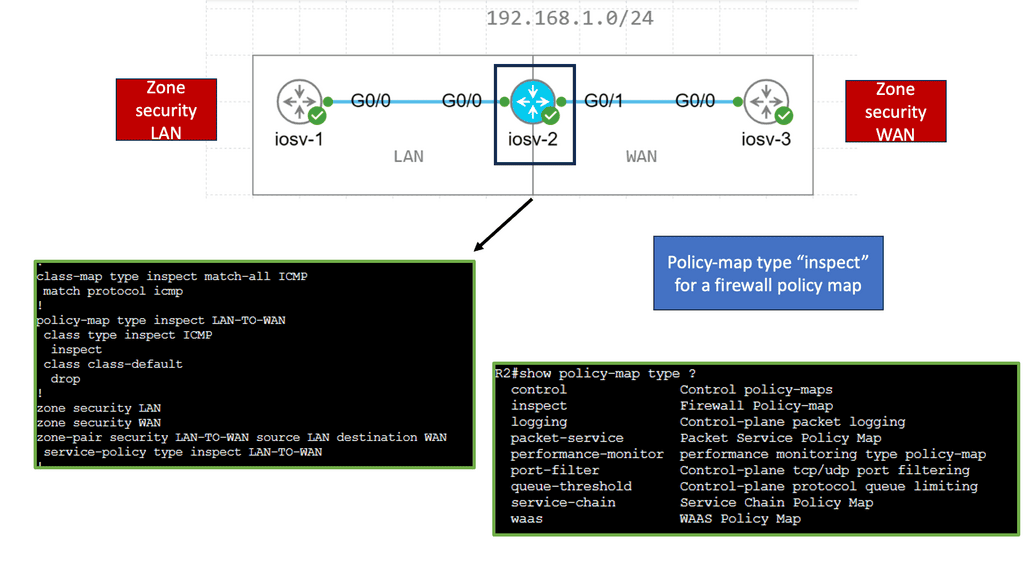

Zone-Based Firewall (Transparent Mode)

Understanding Zone-Based Firewall

Zone-Based Firewall, or ZBFW, is a security feature embedded within Cisco IOS routers. It provides a highly flexible and granular approach to network traffic control, allowing administrators to define security zones and apply policies accordingly. Unlike traditional ACL-based firewalls, ZBFW operates based on zones rather than interfaces, enabling efficient traffic management and advanced security controls.

Transparent mode is a distinctive feature of Zone-Based Firewall that allows seamless integration into existing network infrastructures without requiring a change in the IP addressing scheme. In this mode, the firewall acts as a “bump in the wire,” transparently intercepting and inspecting traffic between different zones while maintaining the original IP addresses. This makes it ideal for organizations looking to enhance network security without significant network reconfiguration.
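The zone logic described above can be modeled in a few lines. The sketch below is a simplified model rather than full IOS behavior, following ZBFW's default rules: traffic between interfaces in the same zone passes, traffic between different zones is dropped unless a zone-pair policy allows it, traffic between a zone member and a non-member is dropped, and traffic between two non-member interfaces flows as usual. Interface names, zones, and the policy are hypothetical, and the self zone (traffic to or from the router itself) is left out.

```python
from typing import Dict, Optional, Set, Tuple

# Interface -> zone (None means the interface is not a zone member).
ZONES: Dict[str, Optional[str]] = {
    "Gi0/0": "INSIDE",
    "Gi0/1": "INSIDE",
    "Gi0/2": "OUTSIDE",
    "Gi0/3": None,
}

# Zone-pair policies: (source zone, destination zone) -> protocols to inspect.
ZONE_PAIRS: Dict[Tuple[str, str], Set[str]] = {
    ("INSIDE", "OUTSIDE"): {"http", "https", "dns"},
}


def zbfw_decision(in_if: str, out_if: str, protocol: str) -> str:
    src, dst = ZONES.get(in_if), ZONES.get(out_if)
    if src is None and dst is None:
        return "pass"     # neither interface is in a zone
    if src is None or dst is None:
        return "drop"     # zone member <-> non-member is always dropped
    if src == dst:
        return "pass"     # intra-zone traffic is allowed by default
    if protocol in ZONE_PAIRS.get((src, dst), set()):
        return "inspect"  # allowed and tracked statefully, so replies can return
    return "drop"         # inter-zone default: deny
```

Because the policy is written per zone pair, adding another inside interface only requires assigning it to INSIDE; no rules change.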

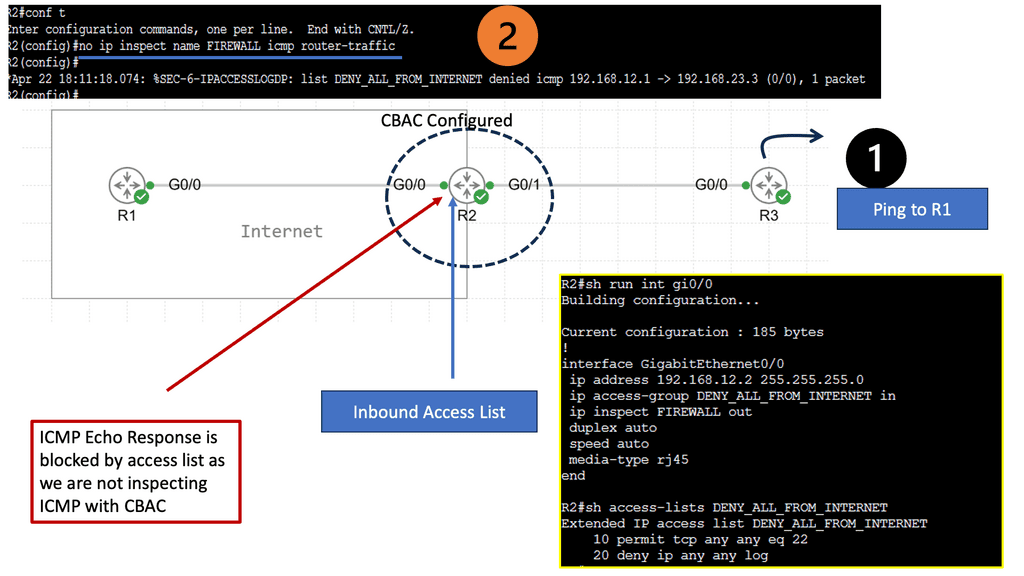

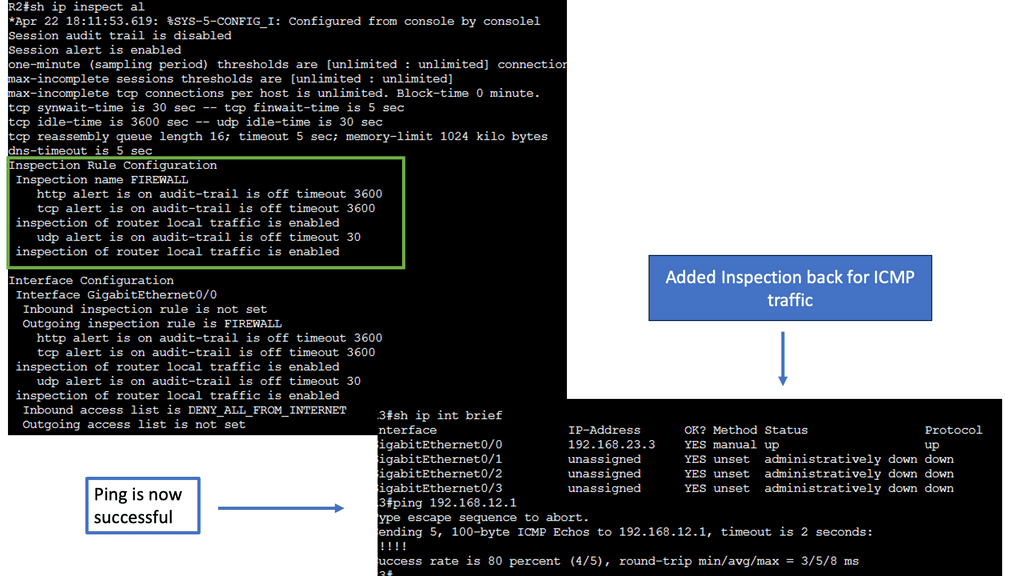

CBAC – Context-Based Access Control Firewall

Understanding CBAC Firewall

– CBAC Firewall, short for Context-Based Access Control Firewall, is a stateful inspection firewall operating at the OSI model’s application layer. Unlike traditional packet-filtering firewalls, CBAC Firewall provides enhanced security by dynamically analyzing the context and content of network traffic. This allows it to make intelligent decisions, granting or denying access based on the state and characteristics of the communication.

– CBAC Firewall offers a range of powerful features that make it a preferred choice for network security. Firstly, it supports session-based inspection, enabling it to track the state of network connections and only allow traffic that meets specific criteria. This reduces the risk of unauthorized access and helps protect against various attacks, including session hijacking and IP spoofing.

– Furthermore, the CBAC Firewall excels at protocol anomaly detection. By monitoring network traffic and comparing it against predefined rules, it can identify suspicious behavior and take appropriate action. Whether detecting excessive data transfer or unusual port scanning, the CBAC Firewall enhances your network’s ability to identify potential threats and respond proactively.
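Session-based inspection can be illustrated with a small session table. The Python sketch below is a simplification: outbound connections from the trusted side are recorded, and an inbound packet is permitted only if it is the reply to a recorded session that has not idled out, which mirrors the way CBAC opens temporary return paths for inspected traffic. The `Flow` and `StatefulInspector` names and the 30-second idle timeout are hypothetical; real CBAC also tracks TCP state and application-layer details that are omitted here.

```python
import time
from typing import Dict, NamedTuple, Optional


class Flow(NamedTuple):
    proto: str
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int

    def reversed(self) -> "Flow":
        """The same flow seen from the other direction (i.e. the reply)."""
        return Flow(self.proto, self.dst_ip, self.dst_port, self.src_ip, self.src_port)


class StatefulInspector:
    """Hypothetical, simplified CBAC-style session tracking."""

    def __init__(self, idle_timeout: float = 30.0) -> None:
        self.idle_timeout = idle_timeout
        self.sessions: Dict[Flow, float] = {}  # outbound flow -> last-seen time

    def outbound(self, flow: Flow, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        self.sessions[flow] = now  # record the session, opening a return path
        return True

    def inbound(self, flow: Flow, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        original = flow.reversed()
        last_seen = self.sessions.get(original)
        if last_seen is None:
            return False  # unsolicited inbound traffic is denied
        if now - last_seen > self.idle_timeout:
            del self.sessions[original]  # session idled out; close the return path
            return False
        self.sessions[original] = now
        return True


fw = StatefulInspector(idle_timeout=30.0)
request = Flow("tcp", "10.0.0.5", 51000, "203.0.113.10", 443)
fw.outbound(request, now=0.0)
print(fw.inbound(request.reversed(), now=1.0))    # True: reply to a tracked session
print(fw.inbound(Flow("tcp", "198.51.100.7", 4444, "10.0.0.5", 51000), now=1.0))  # False
print(fw.inbound(request.reversed(), now=100.0))  # False: session idled out
```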

**Additional Firewalling Types**

- Internal Firewalls

Internal firewalls inspect traffic higher up the application stack and can support different types of firewall context. They operate at the workload level, creating secure micro-perimeters with application-based security controls. Their policies are application-centric and purpose-built for firewalling east-west traffic, applying Layer 7 controls with a stateful firewall at each workload.

- Virtual firewalls and VM NIC firewalling

I often see virtualized firewalls here; the rise of internal virtualization in the network has introduced the world of virtual firewalls. Virtual firewalls are internal firewalls distributed close to the workloads. One example is the VM NIC firewall. In a virtualized environment, the VM NIC firewall is a packet-filtering solution inserted between a virtual machine’s (VM’s) virtual network interface card (vNIC) and the hypervisor’s virtual switch. All traffic that goes in and out of the VM has to pass through the virtual firewall.

- Web application firewalls (WAF)

We could use web application firewalls (WAFs) as application-level firewalls. These devices are similar to reverse proxies: they can terminate sessions and initiate new sessions to the internal hosts. WAFs have been around for quite some time, protecting web applications by inspecting HTTP traffic.

They also have the additional capability to inspect payloads for malicious content, which lets them identify destructive behavior patterns better than a simple VM NIC firewall can.

WAFs are good at detecting static and dynamic threats. They protect against common web attacks, such as SQL injection and cross-site scripting, by matching patterns against the HTTP traffic. Detecting these active threats is the primary value a WAF brings.
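The pattern-matching idea can be sketched with regular expressions. The two signatures below are hypothetical and deliberately naive: real WAF rule sets are much larger, normalize many more encodings, and are tuned to limit false positives (for example, the XSS pattern here would also flag a harmless parameter named `onion=`). The sketch only shows the shape of the technique: decode the request, then match it against known attack patterns.

```python
import re
from typing import List
from urllib.parse import unquote_plus

# Hypothetical, deliberately naive signatures for illustration only.
WAF_SIGNATURES = {
    # Classic tautology ("' OR '1'='1") or UNION-based SQL injection.
    "sqli": re.compile(r"'\s*(or|and)\s+[\w']+\s*=\s*[\w']+|union\s+select", re.IGNORECASE),
    # Script tags, javascript: URLs, or inline event handlers such as onerror=.
    "xss": re.compile(r"<\s*script\b|javascript:|\bon\w+\s*=", re.IGNORECASE),
}


def inspect_query(query_string: str) -> List[str]:
    """Decode a URL query string and return the names of matching signatures."""
    decoded = unquote_plus(query_string)
    return [name for name, pattern in WAF_SIGNATURES.items() if pattern.search(decoded)]


print(inspect_query("id=1' OR '1'='1"))                        # ['sqli']
print(inspect_query("q=%3Cscript%3Ealert(1)%3C%2Fscript%3E"))  # ['xss']
print(inspect_query("q=network+security"))                     # []
```

Decoding before matching matters: without it, a URL-encoded `%3Cscript%3E` would slip past the `<script` pattern.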

**Step3: Understanding Encryption**

Encryption is a method of encoding data so that only authorized parties can access and understand it. It transforms plain text into a scrambled form called ciphertext using complex algorithms and an encryption key.

Encryption is a robust shield that protects our data from unauthorized access and potential threats. It ensures that even if data falls into the wrong hands, it remains unreadable and useless without the corresponding decryption key.

Various encryption algorithms are used to secure data, each with its own strengths and characteristics. From the widely used symmetric Advanced Encryption Standard (AES) to asymmetric RSA, these algorithms employ different mathematical techniques to encrypt and decrypt information.
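To show what these mathematical techniques look like for an asymmetric algorithm, here is textbook RSA with the tiny, well-known example primes p = 61 and q = 53. This is for illustration only: real RSA uses keys of 2048 bits or more plus randomized padding such as OAEP, and in practice you would use a vetted cryptography library rather than writing RSA yourself.

```python
from math import gcd

# Textbook RSA with deliberately tiny primes: insecure, for illustration only.
p, q = 61, 53
n = p * q                    # modulus, shared by both keys (3233)
phi = (p - 1) * (q - 1)      # Euler's totient of n (3120)
e = 17                       # public exponent; must be coprime with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)          # private exponent: modular inverse of e (Python 3.8+)


def encrypt(m: int) -> int:
    """c = m^e mod n, using the public key (e, n)."""
    return pow(m, e, n)


def decrypt(c: int) -> int:
    """m = c^d mod n, using the private key (d, n)."""
    return pow(c, d, n)


ciphertext = encrypt(65)
print(d, ciphertext, decrypt(ciphertext))  # 2753 2790 65
```

Anyone can encrypt with the public pair (e, n), but recovering the message requires d, which is easy to compute only if you know the factors p and q of n.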

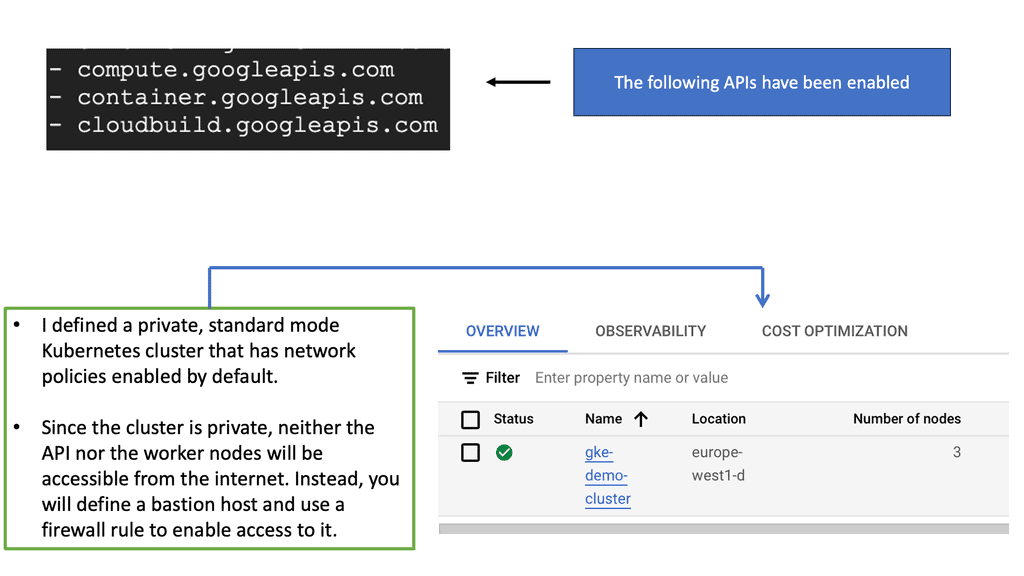

**Step4: Network Segmentation**

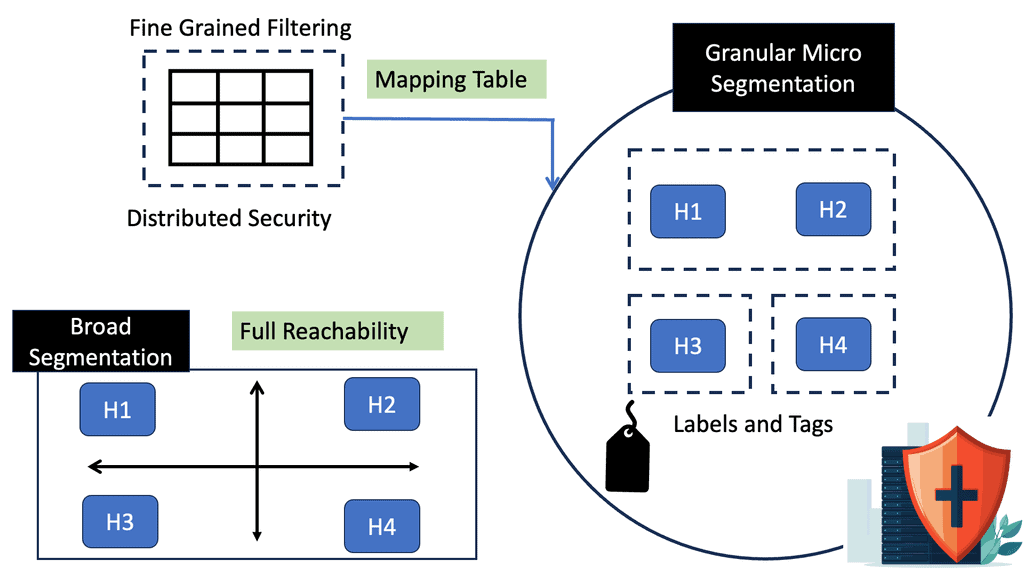

Macro segmentation

The firewall monitors and controls incoming and outgoing network traffic based on predefined security rules. It establishes a barrier between the trusted network and the untrusted network, commonly inspecting Layers 3 and 4 at the network’s edge. In addition, to reduce hairpinning and re-architecture, we have internal firewalls. We can also run an IPS/IDS or antivirus (AV) on an edge firewall.

In the classic definition, the edge firewall performs access control and segmentation based on IP subnets, known as macro segmentation. Macro segmentation is another term for traditional network segmentation. It is still the most prevalent segmentation technique in most networks and can have benefits and drawbacks.

Same segment, same sensitivity level

It is easy to implement, but it assumes that all endpoints in the same segment have, or should have, the same security level and can talk freely, as defined by security policy. There will always be endpoints of similar security levels, and for them macro segmentation is a perfect choice. Why introduce complexity when you do not need to?

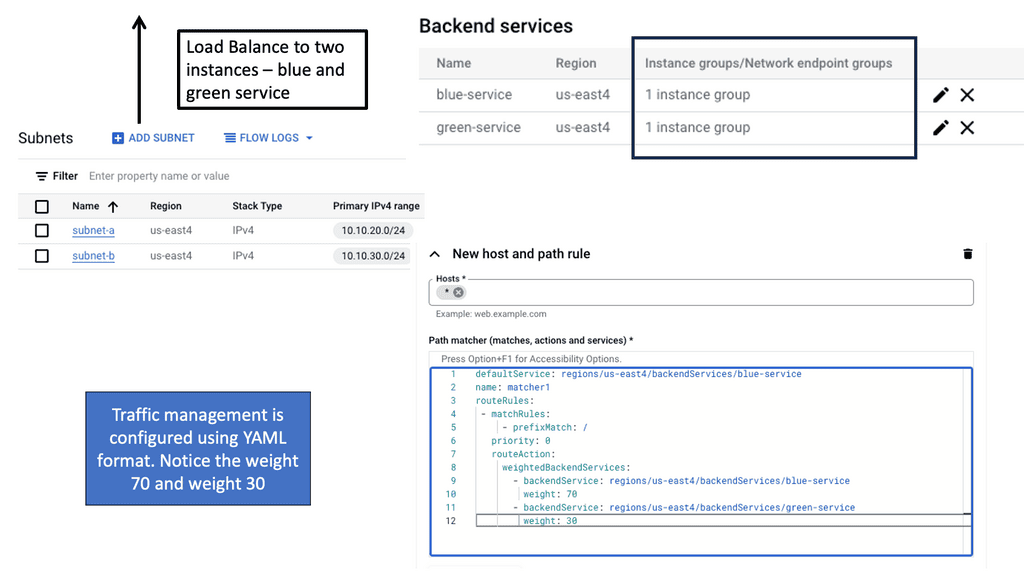

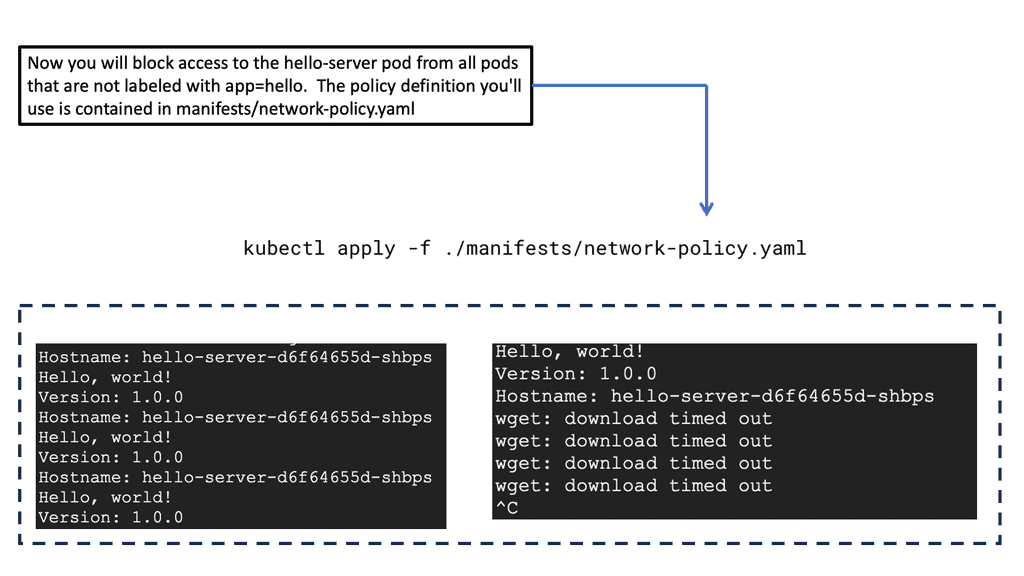

Micro-segmentation

The same edge firewall can be used to perform more granular segmentation, known as micro-segmentation. In this case, the firewall works at a finer granularity, logically dividing the data center into distinct security segments down to the individual workload level, then defining security controls and delivering services for each unique segment. Each endpoint has its own segment and can’t talk outside that segment without a policy. Alternatively, a dedicated internal firewall can perform the micro-segmentation.
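The difference between the two approaches shows up clearly when the same flows are evaluated under each policy model. In this Python sketch, the workload names, addresses, subnet, and the PostgreSQL port 5432 are all hypothetical. Under macro segmentation, any two workloads in the same subnet may talk; under micro-segmentation, each workload is its own segment and only explicitly allowed (source, destination, port) flows pass.

```python
import ipaddress
from typing import Dict, Optional, Set, Tuple

# Hypothetical inventory: workload name -> IP address (all in one subnet).
WORKLOADS: Dict[str, str] = {"web-1": "10.1.1.10", "web-2": "10.1.1.11", "db-1": "10.1.1.20"}

# Macro segmentation: policy is keyed on IP subnets.
SEGMENTS = {"prod": ipaddress.ip_network("10.1.1.0/24")}


def segment_of(ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(ip)
    for name, net in SEGMENTS.items():
        if addr in net:
            return name
    return None


def macro_allows(src: str, dst: str) -> bool:
    """Same segment -> talk freely (no inter-segment rules are defined here)."""
    seg = segment_of(WORKLOADS[src])
    return seg is not None and seg == segment_of(WORKLOADS[dst])


# Micro-segmentation: every workload is its own segment; default deny with
# explicit (source, destination, port) allow rules.
MICRO_RULES: Set[Tuple[str, str, int]] = {("web-1", "db-1", 5432), ("web-2", "db-1", 5432)}


def micro_allows(src: str, dst: str, port: int) -> bool:
    return (src, dst, port) in MICRO_RULES


for src, dst, port in [("web-1", "db-1", 5432), ("web-1", "web-2", 22), ("db-1", "web-1", 5432)]:
    print(src, "->", dst, port, "macro:", macro_allows(src, dst), "micro:", micro_allows(src, dst, port))
```

All three flows are allowed under the macro model because the workloads share a subnet, while the micro model allows only the web-to-database flow on port 5432.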

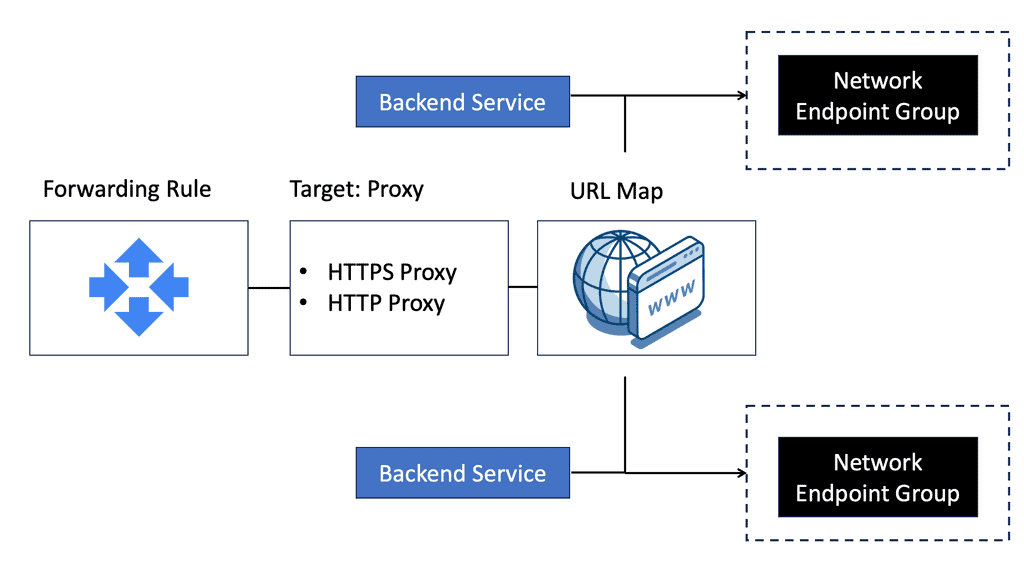

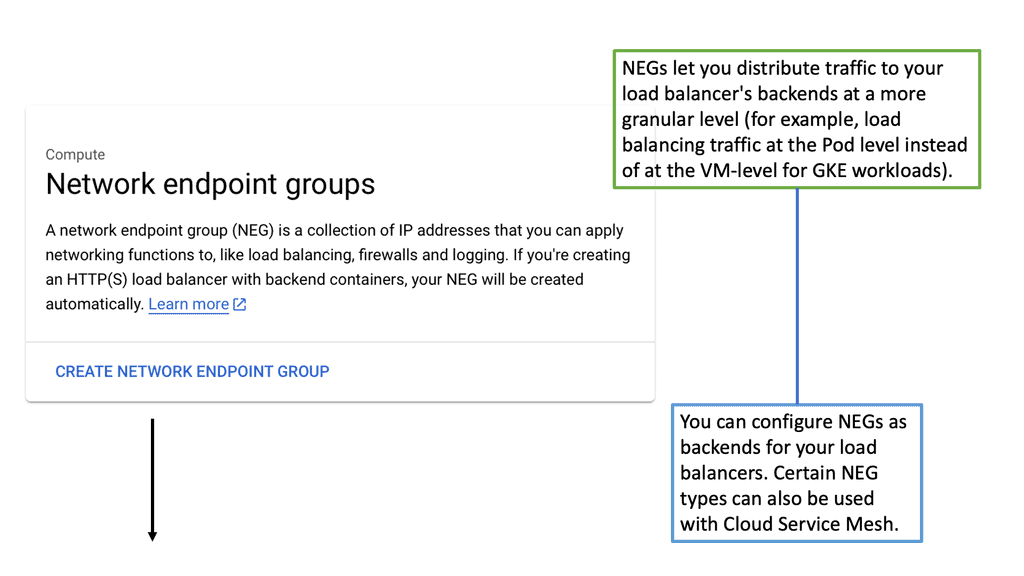

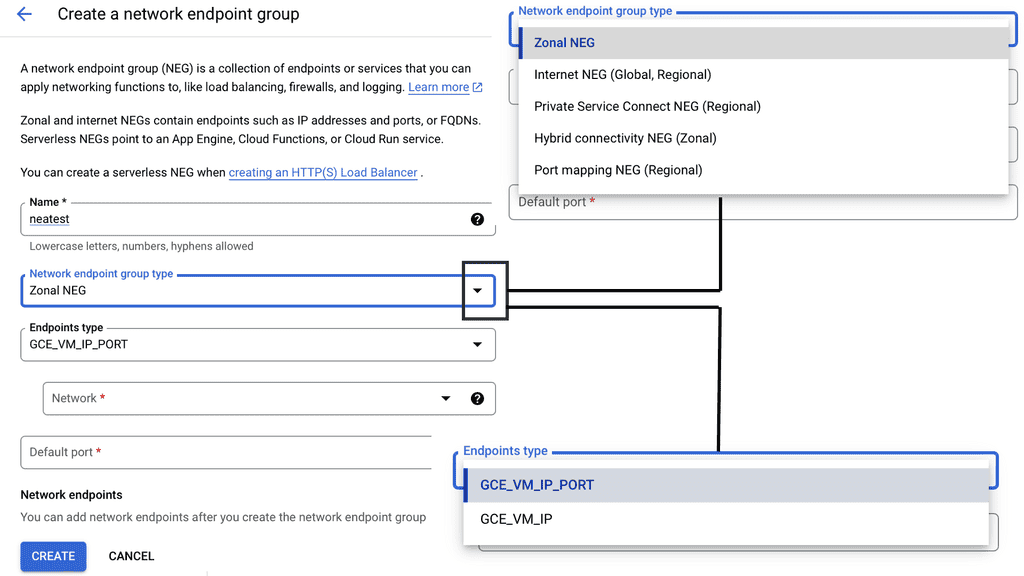

Example: Network Endpoint Groups

**Types of Network Endpoint Groups**

Google Cloud offers different types of network endpoint groups (NEGs) tailored to various use cases:

1. **Zonal NEGs**: These are used for grouping VM instances within a specific zone. Zonal NEGs are ideal for applications that require low latency and high availability within a particular geographic area.

2. **Internet NEGs**: Useful for including endpoints that are reachable over the internet, such as external IP addresses. Internet NEGs allow you to incorporate externally hosted services into your Google Cloud network seamlessly.

3. **Serverless NEGs**: Designed for serverless applications, these NEGs enable you to integrate Google Cloud services such as Cloud Functions and Cloud Run into your network architecture. This is perfect for modern applications leveraging microservices.

**Benefits of Using NEGs in Google Cloud**

NEGs offer several advantages for managing and optimizing network resources:

1. **Scalability**: NEGs facilitate easy scaling of applications by allowing you to add or remove endpoints based on demand, ensuring your services remain responsive and efficient.

2. **Load Balancing**: By integrating NEGs with Google Cloud Load Balancing, you can distribute traffic efficiently across multiple endpoints, improving application performance and reliability.

3. **Flexibility**: Different NEG types can be used behind the same load balancer (for example, through separate backend services), so you can combine endpoints such as VMs and serverless functions in hybrid architectures that meet your specific needs.

4. **Simplified Management**: With NEGs, managing network resources becomes more straightforward, as you can handle groups of endpoints collectively rather than individually, saving time and reducing complexity.

**Implementing NEGs in Your Cloud Infrastructure**

To implement NEGs in your Google Cloud environment, follow these general steps:

1. **Identify Your Endpoints**: Determine which endpoints you need to group together based on their roles and requirements.

2. **Create the NEG**: Use the Google Cloud Console or the gcloud command-line tool to create a NEG, specifying the type and endpoints you wish to include.

3. **Configure Load Balancing**: Integrate your NEG with a load balancer to ensure optimal traffic distribution and application performance.

4. **Monitor and Adjust**: Continuously monitor the performance of your NEGs and make adjustments as needed to maintain efficiency and responsiveness.

Example: Cisco ACI and micro-segmentation

One micro-segmentation solution is Endpoint Groups (EPGs) in Cisco ACI networks. ACI policy is based on contracts, which have subjects and filters that restrict traffic and enforce the policy. Traffic is unrestricted within an EPG by default; however, an ACI contract is needed for traffic to cross EPGs.
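The EPG and contract relationship can be modeled as follows. This is a simplified, hypothetical sketch rather than the ACI object model or API: an EPG that consumes a contract may initiate traffic to an EPG that provides it, provided one of the contract’s subjects contains a matching filter (reduced here to a protocol and destination port). The EPG names, contract name, and port are made up, and return-traffic handling is not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

Filter = Tuple[str, int]  # (protocol, destination port): a simplified filter entry


@dataclass
class Contract:
    name: str
    subjects: Dict[str, List[Filter]] = field(default_factory=dict)  # subject -> filters

    def permits(self, proto: str, port: int) -> bool:
        return any((proto, port) in filters for filters in self.subjects.values())


@dataclass
class Fabric:
    epg_of: Dict[str, str]          # endpoint -> EPG
    provides: Dict[str, Set[str]]   # EPG -> contracts it provides
    consumes: Dict[str, Set[str]]   # EPG -> contracts it consumes
    contracts: Dict[str, Contract]

    def allowed(self, src: str, dst: str, proto: str, port: int) -> bool:
        src_epg, dst_epg = self.epg_of[src], self.epg_of[dst]
        if src_epg == dst_epg:
            return True  # intra-EPG traffic is unrestricted by default
        # The consumer (source) reaches the provider (destination) only via a shared contract.
        shared = self.consumes.get(src_epg, set()) & self.provides.get(dst_epg, set())
        return any(self.contracts[c].permits(proto, port) for c in shared)


fabric = Fabric(
    epg_of={"web-vm1": "WEB", "web-vm2": "WEB", "app-vm1": "APP"},
    provides={"APP": {"web-to-app"}},
    consumes={"WEB": {"web-to-app"}},
    contracts={"web-to-app": Contract("web-to-app", {"http-alt": [("tcp", 8080)]})},
)
print(fabric.allowed("web-vm1", "app-vm1", "tcp", 8080))  # True: contract permits it
print(fabric.allowed("web-vm1", "app-vm1", "tcp", 22))    # False: no matching filter
print(fabric.allowed("web-vm1", "web-vm2", "tcp", 22))    # True: same EPG
```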

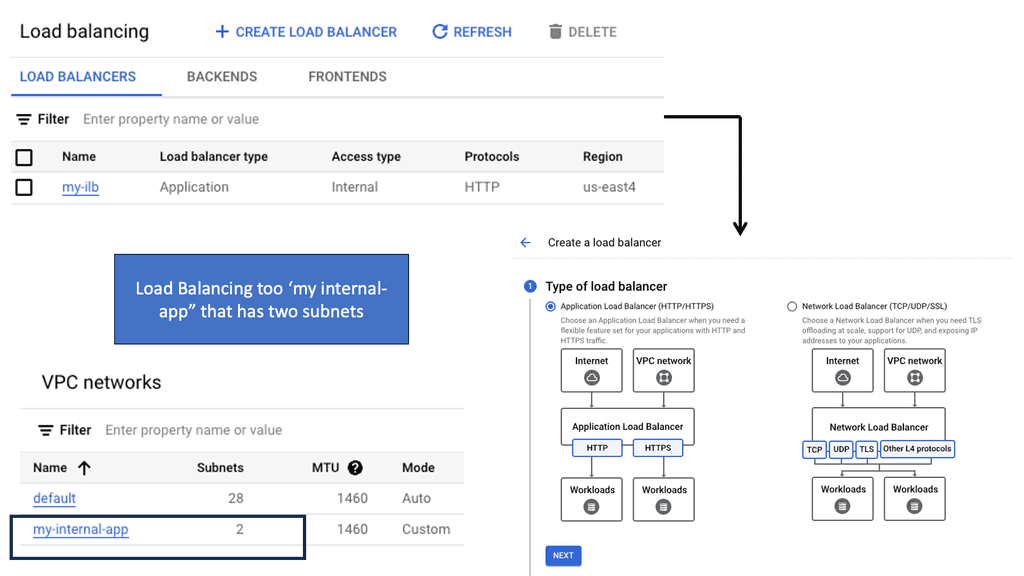

**Step5: Load Balancing**

Understanding Load Balancing

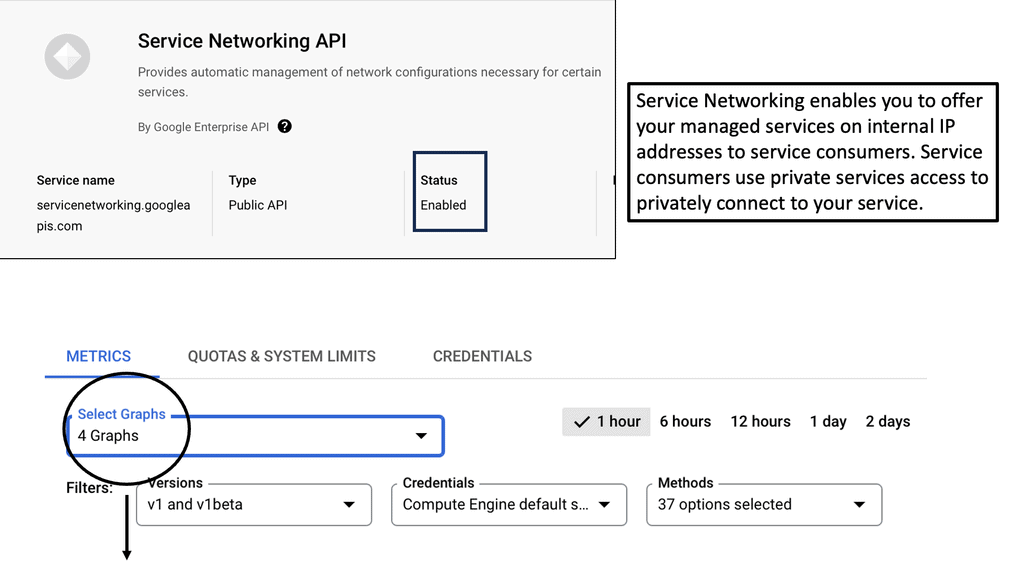

Load balancing is the process of distributing incoming network traffic across multiple servers or resources. It helps avoid congestion, optimize resource utilization, and enhance overall system performance. It also acts as a crucial mechanism for handling traffic spikes, preventing any single server from becoming overwhelmed.

Various load-balancing strategies are available, each suited for different scenarios and requirements. Let’s explore a few popular ones:

A. Round Robin: This strategy distributes incoming requests among the available servers in turn, cycling through the list so that each server receives an equal share. It is simple to implement and provides a basic level of load balancing.

B. Least Connection Method: With this strategy, incoming requests are directed to the server with the fewest active connections at any given time. It ensures that heavily loaded servers receive fewer requests, optimizing overall performance.

C. Weighted Round Robin: In this strategy, servers are assigned different weights, indicating their capacity to handle traffic. Servers with higher weights receive more incoming requests, allowing for better resource allocation.
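The three strategies can be sketched as small Python functions. The server names and weights are hypothetical. For weighted round robin, the sketch uses a "smooth" variant that interleaves picks instead of sending a burst of consecutive requests to the heaviest server; a simpler variant that repeats each server according to its weight would also satisfy the description above.

```python
import itertools
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Hand requests to servers in a fixed cyclic order."""
    return itertools.cycle(servers)


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server with the fewest active connections (ties go to the first listed)."""
    return min(active, key=lambda s: active[s])


def weighted_round_robin(weights: Dict[str, int]) -> Iterator[str]:
    """Smooth weighted round robin: picks are proportional to weight and interleaved."""
    current = {s: 0 for s in weights}
    total = sum(weights.values())
    while True:
        for s, w in weights.items():
            current[s] += w                 # every server earns its weight each round
        chosen = max(current, key=lambda s: current[s])
        current[chosen] -= total            # the winner pays back the total weight
        yield chosen


print(list(itertools.islice(round_robin(["s1", "s2", "s3"]), 4)))  # ['s1', 's2', 's3', 's1']
print(least_connections({"s1": 4, "s2": 1, "s3": 1}))              # s2
print(list(itertools.islice(weighted_round_robin({"a": 5, "b": 1, "c": 1}), 7)))
# ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

Over every seven picks, server `a` (weight 5) is chosen five times and `b` and `c` once each, matching the weights.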

Load balancers can be hardware-based or software-based, depending on the specific needs of an infrastructure. Let’s explore the two main types:

Hardware Load Balancers: These are dedicated physical appliances specializing in load balancing. They offer high performance, scalability, and advanced features like SSL offloading and traffic monitoring.

Software Load Balancers: These are software-based solutions that can be deployed on standard servers or virtual machines. They provide flexibility and cost-effectiveness and are often customizable to suit specific requirements.

**Scaling the Load Balancer**

A load balancer is a device or software component that acts as a reverse proxy and distributes network or application traffic across several servers. This allows organizations to ensure that their resources are used efficiently and that no single server is overburdened. It can also improve the performance, scalability, and availability of running applications.

Load balancing and load balancer scaling refer to efficiently distributing incoming network traffic across a group of backend servers, also known as a server farm or pool. A load balancer also has some security capability: it can absorb many attacks, such as volumetric DDoS attacks. Here, we can have an elastic load balancer running in software.

It can run in front of a web property and load balance between the various front ends, i.e., the web servers. If it detects an attack, it can apply specific mitigation techniques. So, it performs a security function beyond load balancing.
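The specific techniques vary by product. One common example, assumed here rather than taken from any particular load balancer, is per-client rate limiting with a token bucket: each client IP gets a bucket that refills at a steady rate, and requests are rejected once the bucket is empty. The rates, capacities, and class names below are hypothetical, and timestamps are passed in explicitly (in real use you would read `time.monotonic()`).

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TokenBucket:
    rate: float       # tokens refilled per second (sustained request rate)
    capacity: float   # maximum tokens (largest burst allowed)
    tokens: float
    last: float       # time of the last refill, in seconds

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class PerClientRateLimiter:
    """Hypothetical front-end protection: one token bucket per client IP."""

    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_ip: str, now: float) -> bool:
        if client_ip not in self.buckets:
            # New clients start with a full bucket.
            self.buckets[client_ip] = TokenBucket(self.rate, self.capacity, self.capacity, now)
        return self.buckets[client_ip].allow(now)


limiter = PerClientRateLimiter(rate=5.0, capacity=10.0)
burst = [limiter.allow("198.51.100.9", now=0.0) for _ in range(15)]
print(burst.count(True), burst.count(False))  # 10 5
```

A token bucket tolerates short legitimate bursts (up to `capacity`) while capping the sustained rate, which is why it suits traffic in front of web servers better than a hard per-second counter.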

**Step6: The IDS**

Traditionally, an IDS consists of a sensor installed on the network that monitors traffic for a set of defined signatures. The signatures are downloaded every day and applied to network traffic. Traditional IDS systems do not learn from behaviors or from other network security devices over time. They only look at a specific point in time, lacking an overall picture of what’s happening on the network.

**Analyse Individual Packets**

They operate on an island of information, examining only individual packets and trying to ascertain whether there is a threat. This approach results in many false positives that cause alert fatigue. Also, when a trigger does occur, there is no copy of the network traffic to investigate. Without this, how do you know the next stage of events? Working with an IDS, security professionals are left unsure of what to do next.

A key point: IPS/IDS

An intrusion detection system (IDS) is a security system that monitors and detects unauthorized access to a computer or network. It also monitors communication traffic from the system for suspicious or malicious activity and alerts the system administrator when it finds any. An IDS aims to identify and alert the system administrator of any malicious activities or attempts to gain unauthorized access to the system.

**IDS – Hardware or Software Solution**

An IDS can be a hardware solution, a software solution, or a combination of both. It can detect various malicious activities, such as viruses, worms, and malware, as well as attempts to access the system, steal data, or change passwords. Additionally, an IDS can detect other activities that are not considered standard.

**Detection Techniques**

The IDS uses various techniques to detect intrusion. These techniques include signature-based detection, which compares the incoming traffic against a database of known attacks; anomaly-based detection, which looks for any activity that deviates from normal operations; and heuristic detection, which uses a set of rules to detect suspicious activity.
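Two of these techniques are easy to sketch. In the Python below, signature-based detection searches a payload for known byte patterns, and anomaly-based detection flags an observation that sits more than three standard deviations from a learned baseline (a simple z-score test). The signature set, the threshold of 3.0, and the baseline numbers are hypothetical; heuristic detection is not shown.

```python
import re
import statistics
from typing import List

# Hypothetical signature database: name -> byte pattern of a known attack.
IDS_SIGNATURES = {
    "path-traversal": re.compile(rb"\.\./\.\./"),
    "shellshock": re.compile(rb"\(\)\s*\{\s*:;\s*\};"),
}


def signature_matches(payload: bytes) -> List[str]:
    """Signature-based detection: compare a payload against known attack patterns."""
    return [name for name, sig in IDS_SIGNATURES.items() if sig.search(payload)]


def is_anomalous(baseline: List[float], observed: float, threshold: float = 3.0) -> bool:
    """Anomaly-based detection: flag values more than `threshold` standard
    deviations from the baseline mean (a simple z-score test)."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return observed != mean
    return abs(observed - mean) / stdev > threshold


print(signature_matches(b"GET /../../etc/passwd HTTP/1.1"))  # ['path-traversal']
connections_per_minute = [100, 110, 95, 105, 98, 102]          # hypothetical baseline
print(is_anomalous(connections_per_minute, 104))               # False
print(is_anomalous(connections_per_minute, 900))               # True
```

The trade-off is visible even at this scale: the signature check can only catch patterns someone has already written down, while the anomaly check catches anything unusual, including legitimate spikes, which is one source of the false positives described above.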

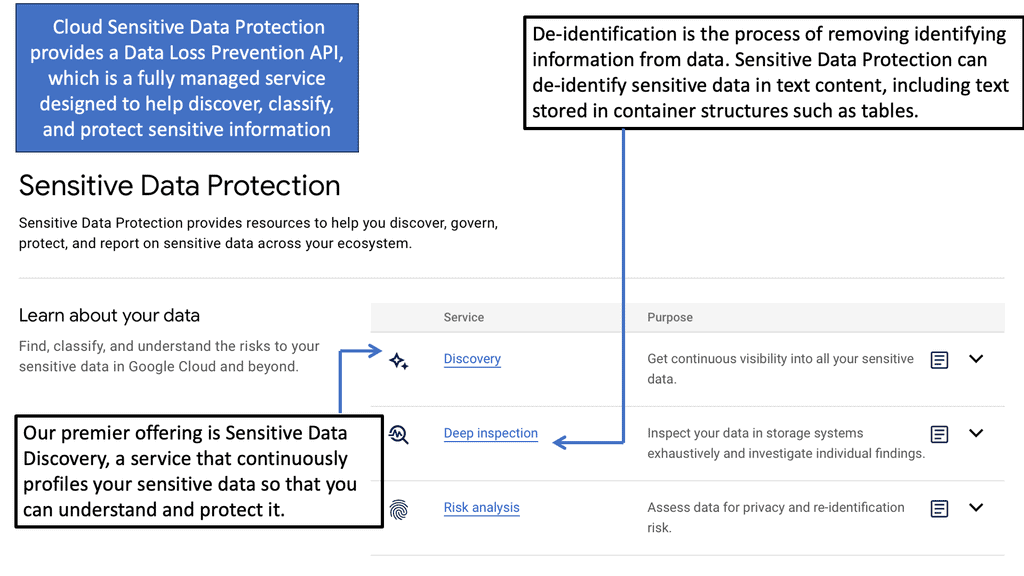

Example: Sensitive Data Protection

Challenge: Firewalls and static rules

Firewalls use static rules to limit network access, but they don’t monitor for malicious activity. An IPS/IDS examines network traffic flows to detect and prevent vulnerability exploits. The classic IPS/IDS is typically deployed behind the firewall and does protocol analysis and signature matching on various parts of the data packet.

Protocol analysis is, in some sense, a compliance check against the protocol’s publicly declared specification: basic protocol checks catch someone abusing some of the tags. The IPS/IDS then uses signatures to prevent known attacks. For example, an IPS/IDS can use a signature to block SQL injection attempts.

Example: Firewalling based on Tags

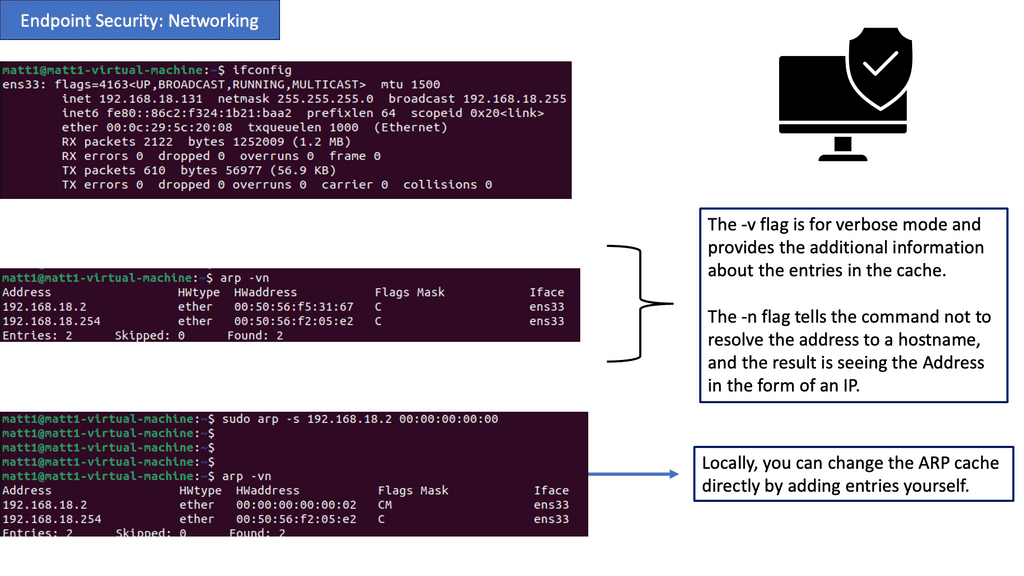

**Step7: Endpoint Security**

Move security to the workload

Like the application-based firewalls, the IPS/IDS functionality at each workload ensures comprehensive coverage without blind spots. So, as you can see, the security functions are moving much closer to the workloads, bringing the perimeter from the edge to the workload.

Endpoint security is an integral part of any organization’s security strategy. It protects endpoints like laptops, desktops, tablets, and smartphones from malicious activity. Endpoint security also protects data stored on devices and the device itself from malicious code or activity.

Endpoint Security Tools

Endpoint security includes various measures, including antivirus and antimalware software, application firewalls, device control, and patch management. Antivirus and antimalware software detect and remove malicious code from devices. Application firewalls protect endpoints by monitoring incoming and outgoing network traffic and blocking suspicious activity.

Device control ensures that only approved devices can be used on the network. Finally, patch management ensures that devices are up-to-date with the latest security patches.

Network detection and response

Next, we have network detection and response (NDR) solutions. NDR solutions are designed to detect cyber threats on corporate networks using machine learning and data analytics. They can help you discover evidence, on the network and in the cloud, of malicious activity that is in progress or has already occurred.

Some analysts promote NDR tools as “next-gen IDS.” One significant difference between NDR and older IDS tools is that NDR tools use multiple machine learning (ML) techniques to identify normal baselines and anomalous traffic, rather than static rules or IDS signatures, which have trouble handling dynamic threats. The following figure shows an example of a typical attack lifecycle.

**Step8: Anti-malware gateway**

Anti-malware gateway products have a particular job. They inspect downloads: the gateway takes the file and tries to open it in a sandbox to test whether it contains anything malicious. However, the bad actors who develop malware test it against these systems before releasing it, so the gateways often lag one step behind. Anti-malware gateways are also limited in scope and focus on nothing but malware.

Endpoint detection and response (EDR) solutions look for evidence and effects of malware that may have slipped past endpoint protection platform (EPP) products. EDR tools also detect malicious insider activities such as data exfiltration attempts, left-behind accounts, and open ports. Endpoint security has the best opportunity to detect many threats and comes closest to providing a holistic offering. It is probably the best point solution, but remember, it is still just a point solution.

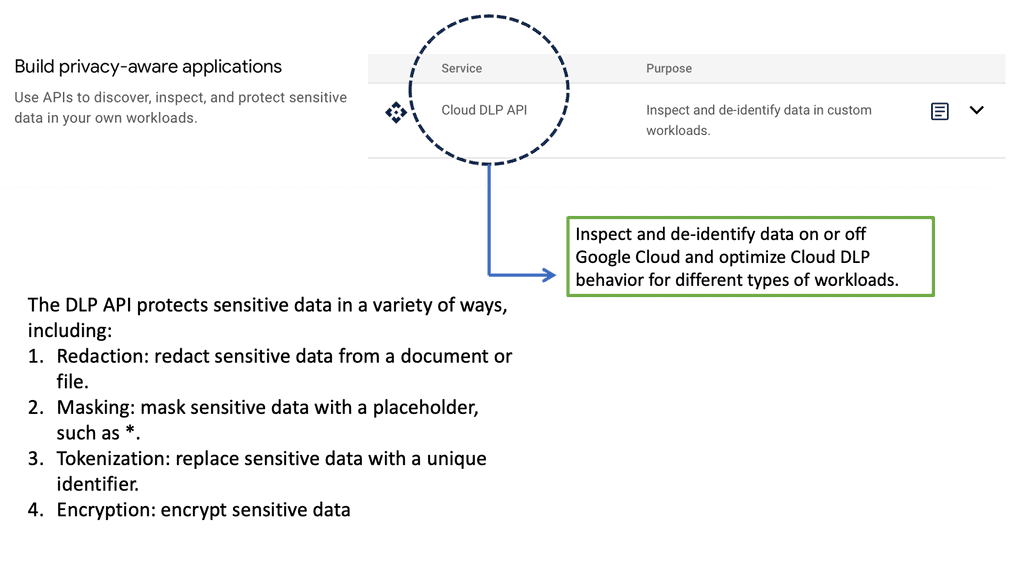

**DLP security**

By monitoring the machine and its processes, endpoint security is there for the long haul instead of assessing a file on a once-off basis. It can see when malware is executing and then enforce DLP. Data Loss Prevention (DLP) solutions are security tools that help organizations ensure that sensitive data such as Personally Identifiable Information (PII) or Intellectual Property (IP) does not leave the corporate network or reach a user without access. However, endpoint security does not take sophisticated use cases into account. For example, it doesn’t care what you print or which Google Drive files you share.
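A typical DLP content rule looks for structured sensitive data. As an illustration (a hypothetical rule, not any product’s detector), the Python below finds payment-card-like numbers and keeps only those that pass the Luhn checksum, which cuts down false positives from arbitrary digit strings. The 13 to 16 digit range and the allowed separators (spaces and hyphens) are assumptions.

```python
import re
from typing import List

# Candidate card numbers: 13-16 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:       # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> List[str]:
    """Return Luhn-valid, card-like numbers found in text (hypothetical DLP rule)."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


text = "Card: 4111 1111 1111 1111, ref 4111 1111 1111 1112."
print(find_card_numbers(text))  # ['4111111111111111']
```

A real DLP engine would then decide what to do with a hit (block, quarantine, or alert), typically based on where the data is going.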

**Endpoint security and correlation**

In general, endpoint security does not do any correlation. For example, let’s say there is a .exe that connects to the database; there is nothing on the endpoint to say whether it is a malicious connection. Endpoint security finds it hard to distinguish malicious from legitimate activity unless there is a signature. Again, it is the best point solution, but it is not a managed service and does not have a holistic view.

**Security controls from the different vendors**

As a final note, consider how you may have to administer security controls from different vendors. How do you utilize the security controls from each vendor, and more importantly, how do you use them alongside one another? For example, Palo Alto Networks uses App-ID, a patented traffic classification system available only in Palo Alto Networks firewalls.

Other vendors’ devices in the network will not support this feature. This raises the question: How do I utilize next-generation features from one vendor alongside devices that don’t support them? Your network needs the ability to support features from one product across the entire network and then consolidate them into one. How do I use all the next-generation features without being tied to one vendor?

**Use of a packet broker**

However, it would be better to change an algorithm once and have it affect all the firewalls in your network. That would be an example of an advanced platform controlling all your infrastructure. Another typical example is a packet broker that sits in the middle of all these tools: it fetches data from the network and endpoints and then sends it to our existing security tools. Essentially, this ensures that there are no blind spots in the network.

This packet broker tool should support any workload and be able to send information to any existing security tool. We are then bringing information from the network into our existing security tools and adopting a network-centric approach to security.
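At its core, the broker’s job is filtering and replication: each packet is copied to every tool that needs it. The Python sketch below models only that fan-out step. The tool names and filter rules are hypothetical, and real packet brokers add functions such as deduplication, packet slicing, and load balancing across tool instances that are not shown.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Packet = Dict[str, object]  # simplified packet metadata, e.g. {"proto": "tcp", "dst_port": 443}


@dataclass
class Tool:
    name: str
    wants: Callable[[Packet], bool]               # this tool's traffic filter
    received: List[Packet] = field(default_factory=list)


class PacketBroker:
    """Hypothetical broker: replicate each packet to every tool whose filter matches."""

    def __init__(self, tools: List[Tool]) -> None:
        self.tools = tools

    def forward(self, packet: Packet) -> List[str]:
        delivered = []
        for tool in self.tools:
            if tool.wants(packet):
                tool.received.append(dict(packet))  # each tool gets its own copy
                delivered.append(tool.name)
        return delivered


broker = PacketBroker([
    Tool("ids", lambda p: True),                               # sees everything
    Tool("waf", lambda p: p.get("dst_port") in (80, 443)),     # web traffic only
    Tool("dns-analytics", lambda p: p.get("dst_port") == 53),  # DNS only
])
print(broker.forward({"proto": "tcp", "dst_port": 443}))  # ['ids', 'waf']
print(broker.forward({"proto": "udp", "dst_port": 53}))   # ['ids', 'dns-analytics']
```

Because the broker, not each tool, taps the network, adding a new security tool means adding one entry to the list rather than re-cabling or re-spanning the network.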

Summary: Network Security Components

This blog post delved into the critical components of network security, shedding light on their significance and how they work together to protect our digital realm.

Firewalls – The First Line of Defense

Firewalls are the first line of defense against potential threats. Acting as gatekeepers, they monitor incoming and outgoing network traffic, analyzing data packets to determine their legitimacy. By enforcing predetermined security rules, firewalls prevent unauthorized access and protect against malicious attacks.

Intrusion Detection Systems (IDS) – The Watchful Guardians

Intrusion Detection Systems play a crucial role in network security by detecting and alerting against suspicious activities. IDS monitors network traffic patterns, looking for any signs of unauthorized access, malware, or unusual behavior. With their advanced algorithms, IDS helps identify potential threats promptly, allowing for swift countermeasures.

Virtual Private Networks (VPNs) – Securing Data in Transit

Virtual Private Networks establish secure connections over public networks like the Internet. VPNs create a secure tunnel by encrypting data traffic, preventing eavesdropping and unauthorized interception. This secure communication layer is vital when accessing sensitive information remotely or connecting branch offices securely.

Access Control Systems – Restricting Entry

Access Control Systems are designed to manage user access to networks, systems, and data. Through authentication and authorization mechanisms, these systems ensure that only authorized individuals can gain entry. Organizations can minimize the risk of unauthorized access and data breaches by implementing multi-factor authentication and granular access controls.

Security Information and Event Management (SIEM) – Centralized Threat Intelligence

SIEM systems provide a centralized platform for monitoring and managing security events across an organization’s network. SIEM enables real-time threat detection, incident response, and compliance management by collecting and analyzing data from various security sources. This holistic approach to security empowers organizations to stay one step ahead of potential threats.

Conclusion:

Network security is a multi-faceted discipline that relies on a combination of robust components to protect against evolving threats. Firewalls, IDS, VPNs, access control systems, and SIEM collaborate to safeguard our digital realm. By understanding these components and implementing a comprehensive network security strategy, organizations can fortify their defenses and ensure the integrity and confidentiality of their data.