Software Defined Internet Exchange

In today's digital era, where data is the lifeblood of every organization, the importance of a reliable and efficient internet connection cannot be overstated. As businesses increasingly rely on cloud-based applications and services, the demand for high-performance internet connectivity has skyrocketed. To meet this growing need, a revolutionary technology known as Software Defined Internet Exchange (SD-IX) has emerged as a game-changer in the networking world. In this blog post, we will delve into the concept of SD-IX, its benefits, and its potential to revolutionize how we connect to the internet.

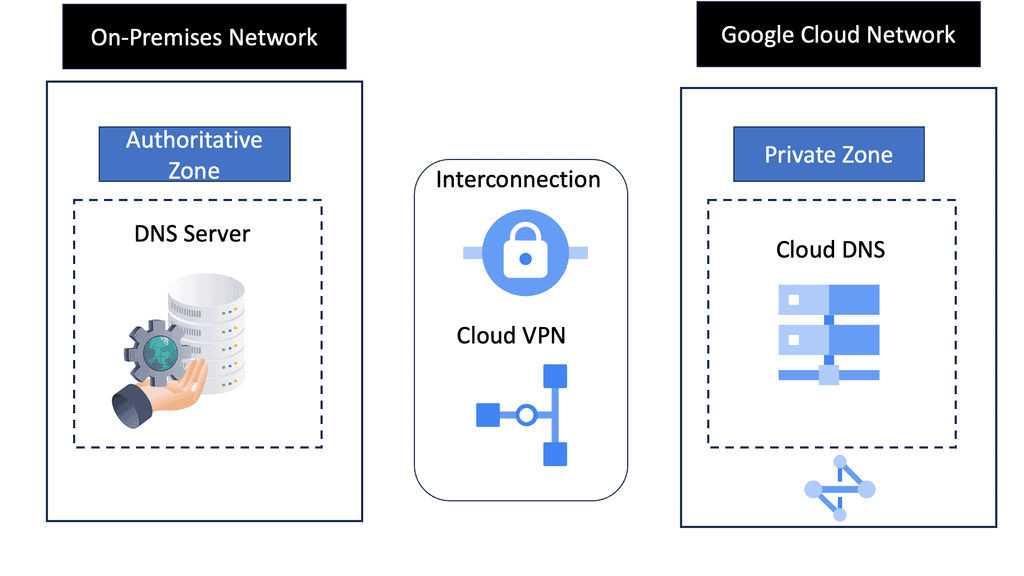

Software Defined Internet Exchange, or SD-IX, allows organizations to dynamically connect to multiple Internet service providers (ISPs) through a centralized platform. Traditionally, internet traffic is exchanged through physical interconnections between ISPs, resulting in limited flexibility and control. SD-IX eliminates these limitations by virtualizing the interconnection process, enabling organizations to establish direct, secure, and scalable connections with multiple ISPs.

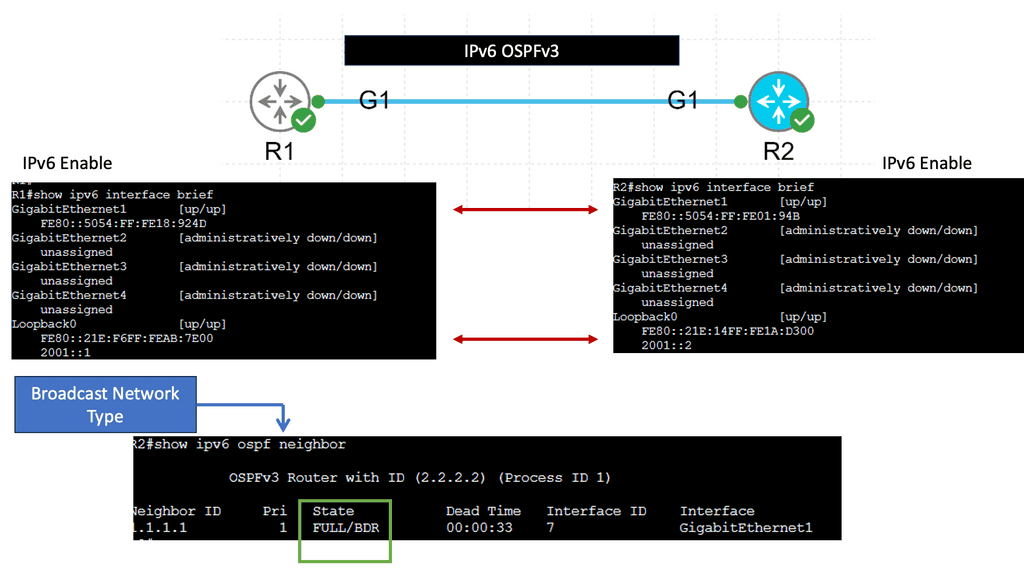

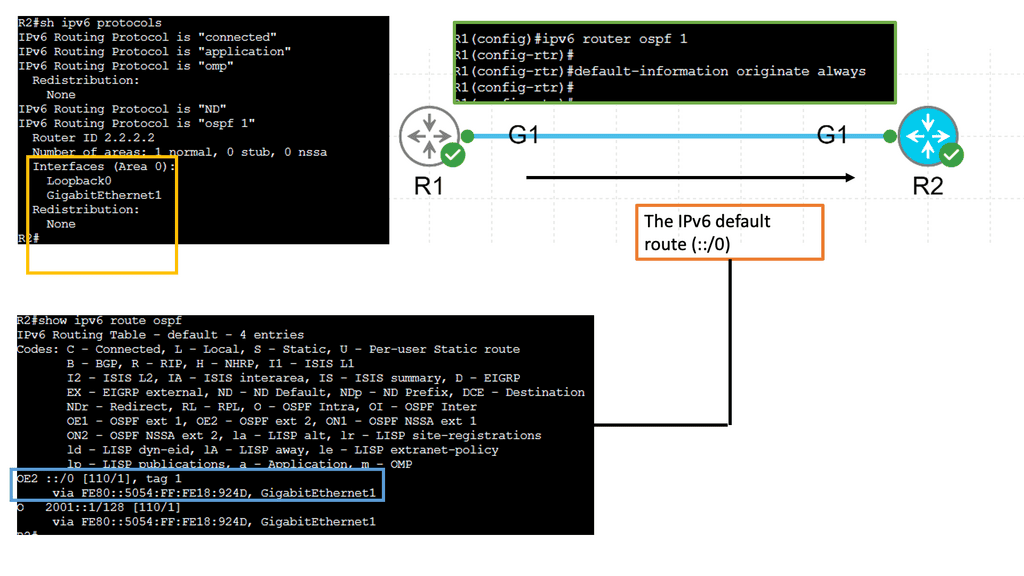

SD-IX Defined: Software Defined Internet Exchange, or SD-IX, is a cutting-edge technology that enables dynamic and automated interconnection between networks. Unlike traditional methods that rely on physical infrastructure, SD-IX leverages software-defined networking (SDN) principles to create virtualized interconnections, providing flexibility, scalability, and enhanced control.

Enhanced Performance: One of the prominent advantages of SD-IX is its ability to optimize network performance. By utilizing intelligent routing algorithms and traffic engineering techniques, SD-IX reduces latency, improves packet delivery, and enhances overall network efficiency. This translates into faster and more reliable connectivity for businesses and end-users alike.

Flexibility and Scalability: SD-IX offers unparalleled flexibility and scalability. With its virtualized nature, organizations can easily adjust their network connections, add or remove services, and scale their infrastructure as needed. This agility empowers businesses to adapt to changing demands, optimize their network resources, and accelerate their digital transformation initiatives.

Cost Efficiency: By leveraging SD-IX, organizations can significantly reduce their network costs. Traditional methods often require expensive physical interconnections and complex configurations. SD-IX eliminates the need for such costly infrastructure, replacing it with virtualized interconnections that can be provisioned and managed efficiently. This cost-saving aspect makes SD-IX an attractive option for businesses of all sizes.

Driving Innovation: SD-IX is poised to drive innovation in the networking landscape. Its ability to seamlessly connect disparate networks, whether cloud providers, content delivery networks, or internet service providers, opens up new possibilities for collaboration and integration. This interconnected ecosystem paves the way for novel services, improved user experiences, and accelerated digital innovation.

Enabling Edge Computing: As the demand for low-latency applications and services grows, SD-IX plays a crucial role in enabling edge computing. By bringing data centers closer to the edge, SD-IX reduces latency and enhances the performance of latency-sensitive applications. This empowers businesses to leverage emerging technologies like IoT, AI, and real-time analytics, unlocking new opportunities and use cases.

Software Defined Internet Exchange (SD-IX) represents a significant leap forward in the world of connectivity. With its virtualized interconnections, enhanced performance, flexibility, and cost efficiency, SD-IX is poised to reshape the networking landscape. As organizations strive to meet the ever-increasing demands of a digitally connected world, embracing SD-IX can unlock new realms of possibilities and propel them towards a future of seamless connectivity.

Matt Conran

Highlights: Software Defined Internet Exchange

Understanding Software-Defined Internet Exchange

a) SD-IX is a cutting-edge technology that enables dynamic and flexible interconnection between networks. Unlike traditional internet exchange points (IXPs), SD-IX leverages software-defined networking (SDN) principles to create virtualized exchange environments. By abstracting the physical infrastructure, SD-IX allows on-demand network connections, enhanced scalability, and simplified network management.

b) Internet exchanges are physical locations where multiple Internet service providers (ISPs), content delivery networks (CDNs), and network operators connect their networks to exchange Internet traffic. By establishing direct connections, IXPs enable efficient and cost-effective data transfer between various networks, enhancing internet performance and reducing latency.

**How Internet Exchanges Work**

Internet Exchanges typically consist of high-speed switches and routers deployed in data centers. These devices provide the necessary connectivity between participating networks, facilitating traffic exchange.

To join an Internet Exchange, networks must adhere to specific peering policies and agreements. These guidelines dictate the terms of traffic exchange, including technical requirements, traffic ratios, and network security measures.

**Internet Exchange Points Around the World**

1) Numerous Internet Exchange Points (IXPs) are located worldwide, with some of the most prominent ones including DE-CIX in Frankfurt, AMS-IX in Amsterdam, and LINX in London. These IXPs are critical hubs for global internet connectivity, enabling networks from different regions to exchange traffic.

2) Beyond the major global IXPs, regional and national Internet Exchange Points cater to specific geographic areas. These local IXPs further improve network performance by facilitating regional traffic exchange and reducing the need for long-haul data transfer.

3) As the demand for high-performance and reliable internet connectivity continues to grow, SD-IX is poised to play a pivotal role in shaping the future of networking. By virtualizing the interconnection process and giving organizations more control and flexibility over their network connections, SD-IX helps businesses optimize their network performance, enhance security, and reduce costs. With its ability to scale on demand and reroute traffic, SD-IX is well suited to the evolving needs of cloud-based applications, IoT devices, and emerging technologies such as edge computing.

4) Software Defined Internet Exchange represents a paradigm shift in how organizations connect to the Internet. By virtualizing the interconnection process and providing enhanced performance, reliability, cost efficiency, scalability, and security, SD-IX offers a compelling solution for businesses seeking to optimize their network infrastructure. As the digital landscape continues to evolve, SD-IX is set to change the way we connect to the internet, enabling organizations to stay ahead of the curve.

Key SD-IX Considerations:

– Enhanced Performance and Latency Reduction: SD-IX brings networks closer to end-users by establishing globally distributed points of presence (PoPs). This proximity reduces latency and improves application performance, resulting in a superior user experience.

– Seamless Network Scalability: With SD-IX, organizations can quickly scale their network resources up or down based on demand. This agility empowers businesses to adapt rapidly to changing network requirements, ensuring optimal performance and cost-efficiency.

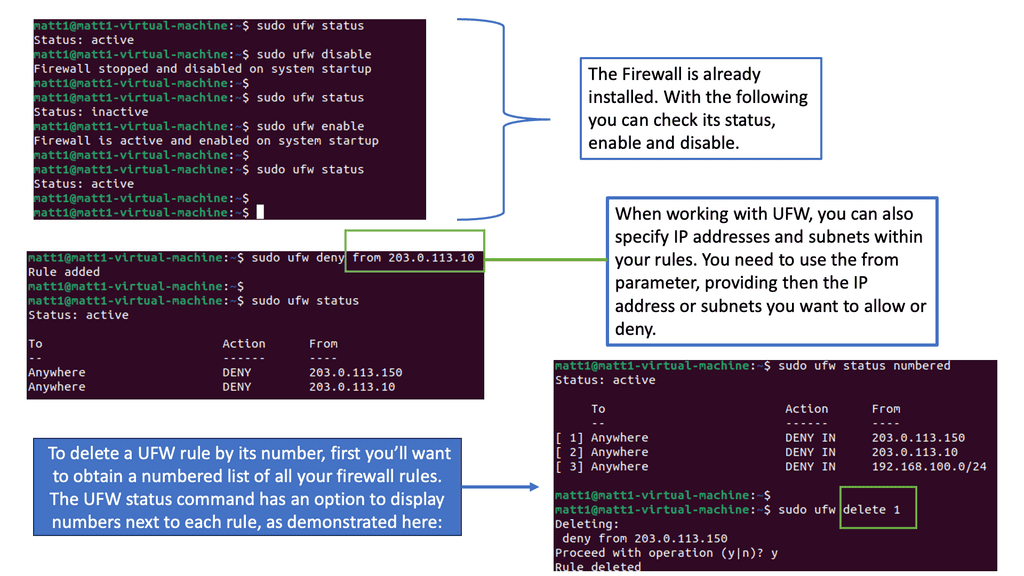

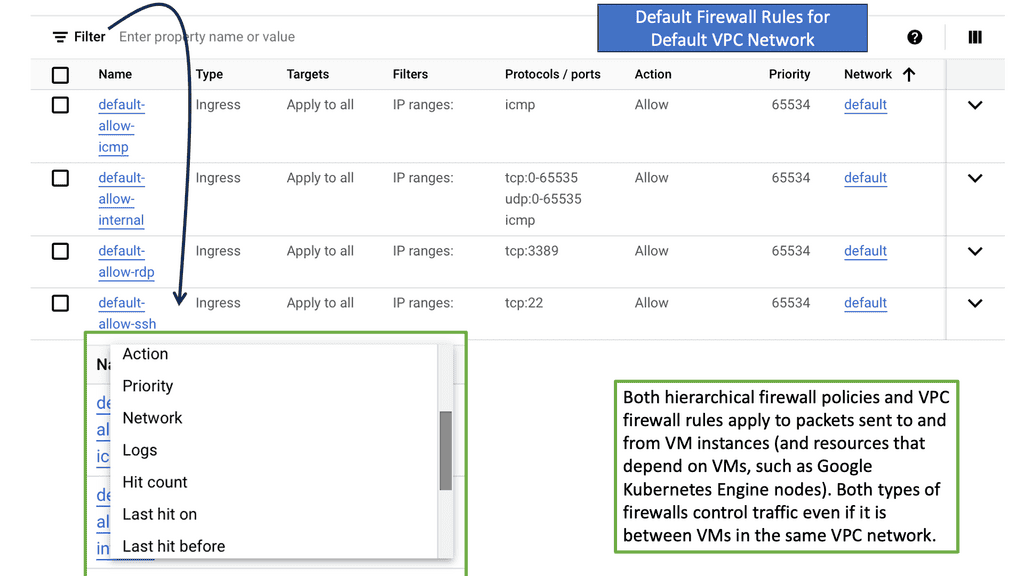

– Simplified Network Management: Traditional IXPs often require complex physical infrastructure and manual configurations. SD-IX simplifies network management by providing a centralized control plane, allowing administrators to automate provisioning, traffic engineering, and policy enforcement.

– Cloud Service Providers: SD-IX enables providers to establish direct and secure customer connections. This direct access bypasses the public internet, ensuring better security, lower latency, and improved data transfer speeds.

– Content Delivery Networks (CDNs): CDNs can leverage SD-IX to optimize content delivery by strategically placing their PoPs closer to end-users. This reduces latency, minimizes bandwidth costs, and enhances content delivery performance.

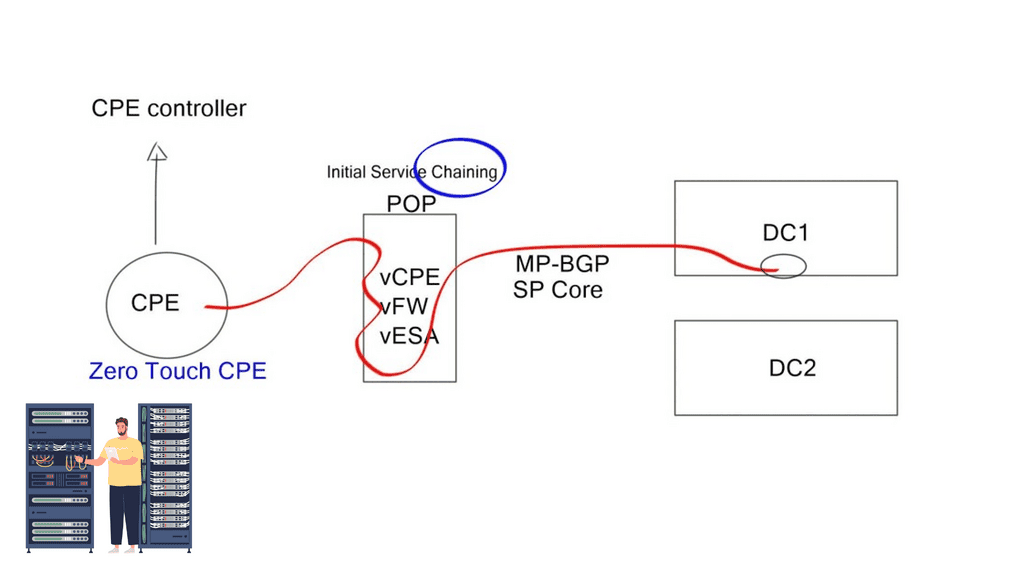

– Enterprises and Multi-Cloud Connectivity: Enterprises can benefit from SD-IX by establishing private connections between their networks and multiple cloud service providers. This enables secure, high-performance multi-cloud connectivity, facilitating seamless data transfer and workload migration.

Understanding SD-IX

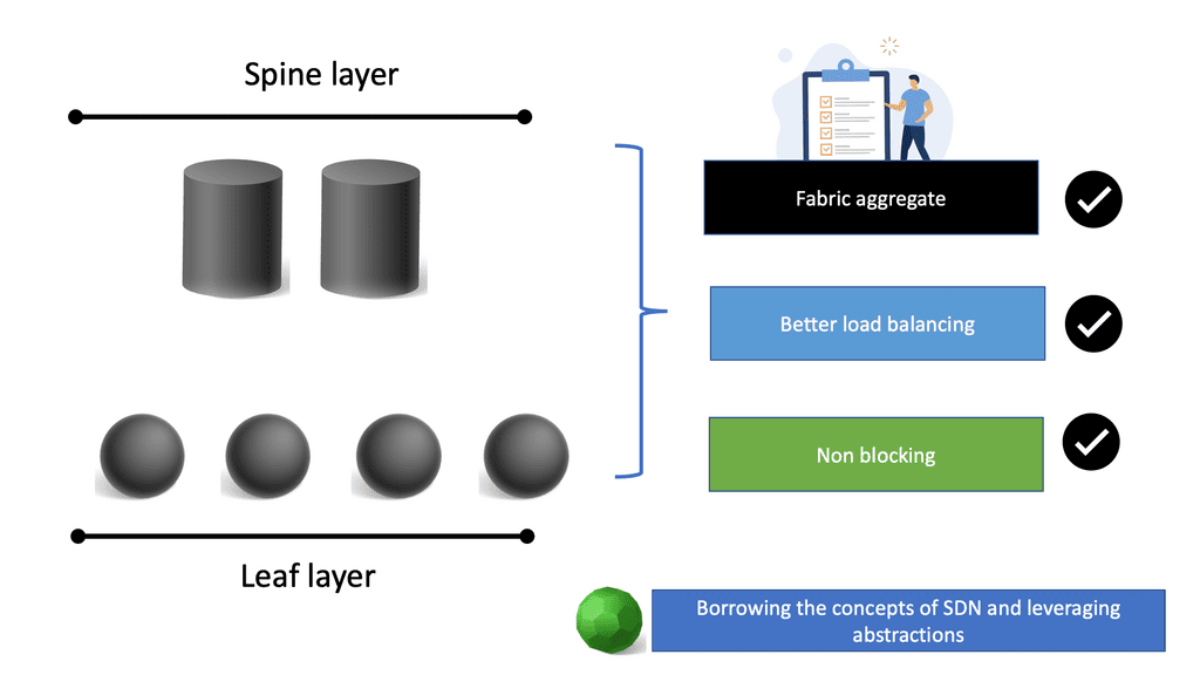

At its core, SD-IX is an architectural framework enabling the dynamic and automated internet traffic exchange between networks. Unlike traditional methods that rely on physical infrastructure, SD-IX leverages software-defined networking (SDN) principles to create a virtualized exchange ecosystem. By decoupling the control plane from the data plane, SD-IX brings flexibility, agility, and scalability to internet exchange.

One of SD-IX’s critical advantages is its ability to provide enhanced performance through optimized routing. By leveraging intelligent algorithms and real-time analytics, SD-IX can intelligently direct traffic along the most efficient paths, reducing latency and improving overall network performance. Moreover, SD-IX offers improved scalability, allowing networks to dynamically adjust their capacity based on demand, ensuring seamless connectivity even during peak usage.

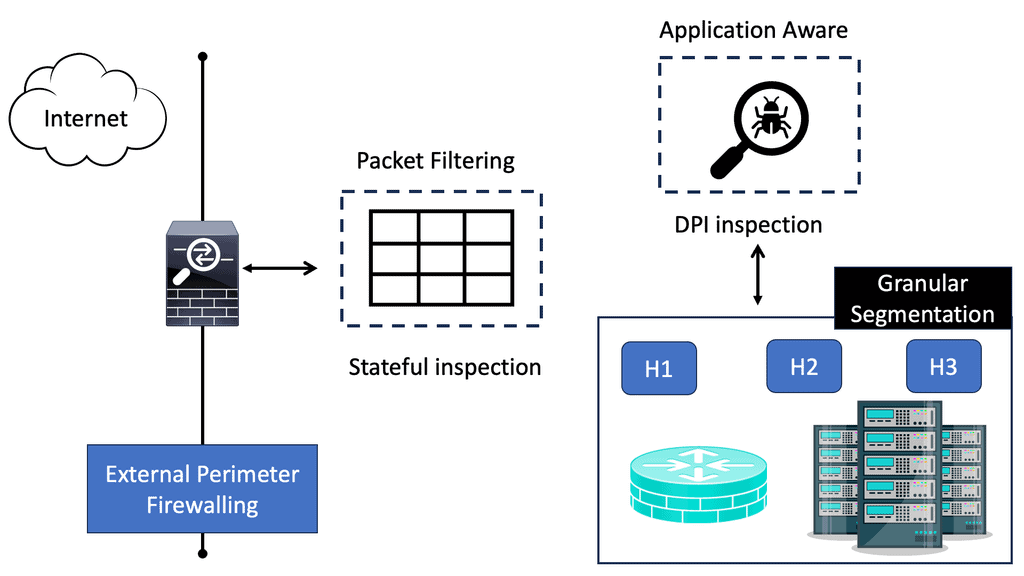

Security and Privacy Advancements

SD-IX brings significant advancements in an era where data security and privacy are of the utmost concern. With the ability to implement granular access control policies and encryption mechanisms, SD-IX ensures secure data transmission across networks. SD-IX’s centralized management and monitoring capabilities enable network administrators to detect and mitigate potential security threats in real-time, bolstering overall network security.

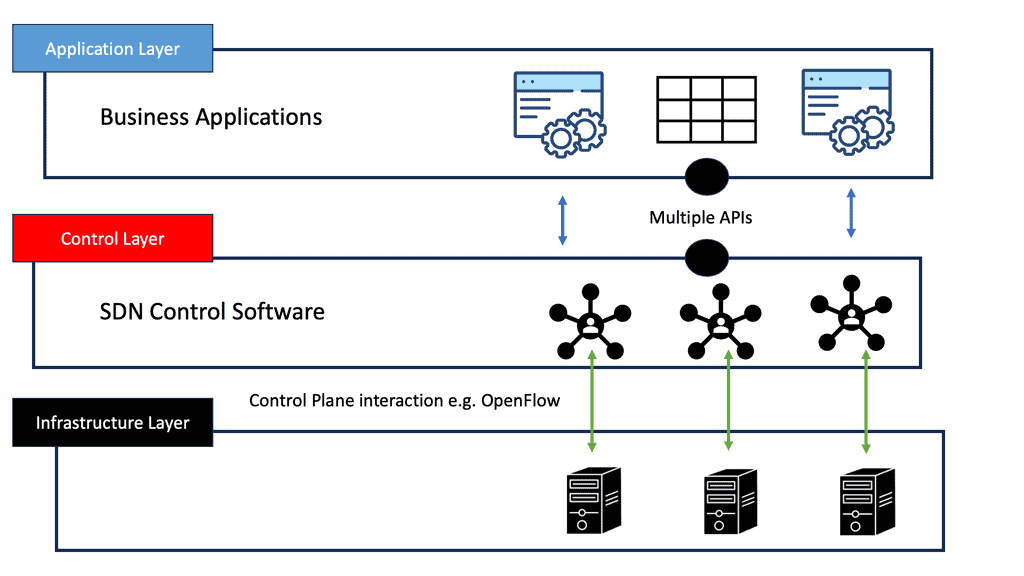

Software-defined networks

A software-defined network (SDN) optimizes and simplifies network operations by closely tying applications and network services, whether real or virtual. By establishing a logically centralized network control point (typically an SDN controller), the control point orchestrates, mediates, and facilitates communication between applications that wish to interact with network elements and network elements that want to communicate information with those applications. The controller exposes and abstracts network functions and operations through modern, application-friendly, bidirectional programmatic interfaces.

As a result, software-defined, software-driven, and programmable networks have a rich and complex history and various challenges and solutions to those challenges. Because of the success of technologies that preceded them, software-defined, software-driven, and programmable networks are now possible. IP, BGP, MPLS, and Ethernet are the fundamental elements of most networks worldwide.

Control and Data Plane Separation

SDN’s early proponents advocated separating a network device’s control and data planes as a potential advantage. Network operators benefit from this separation through centralized or semi-centralized programmatic control. It is also economically attractive: the control plane, usually a complex piece of software to configure and control, can be consolidated into a few places and run on less expensive, so-called commodity hardware.

One of SDN’s most controversial tenets is separating control and data planes. It’s not a new concept, but the contemporary way of thinking puts a twist on it: how far the control plane should be from the data plane, how many instances are needed for resiliency and high availability, and whether 100% of the control plane can be moved beyond a few inches are all intensely debated. There are many possible control planes, ranging from the simplest, the fully distributed, to the semi- and logically centralized, to the strictly centralized.

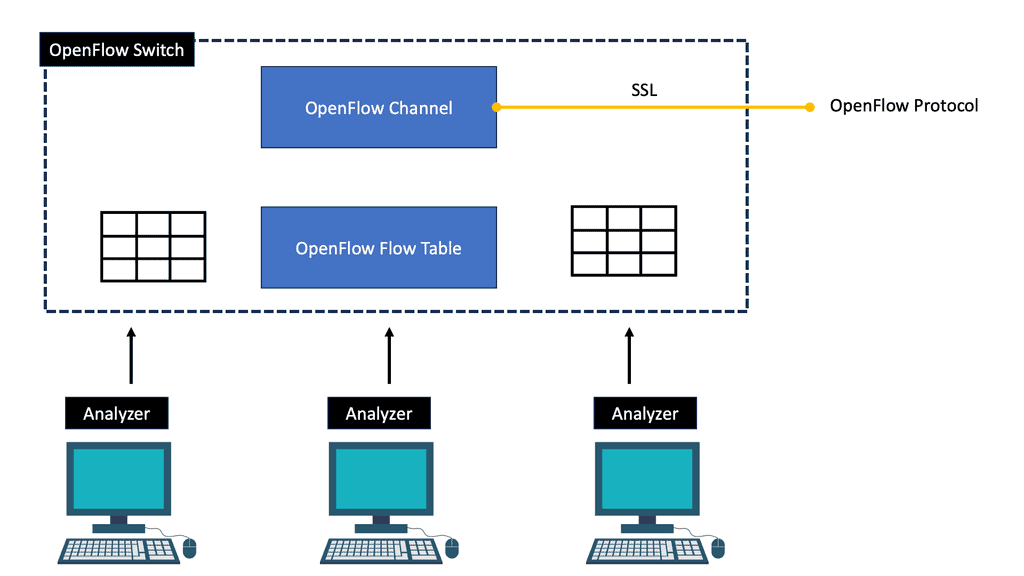

OpenFlow Matching

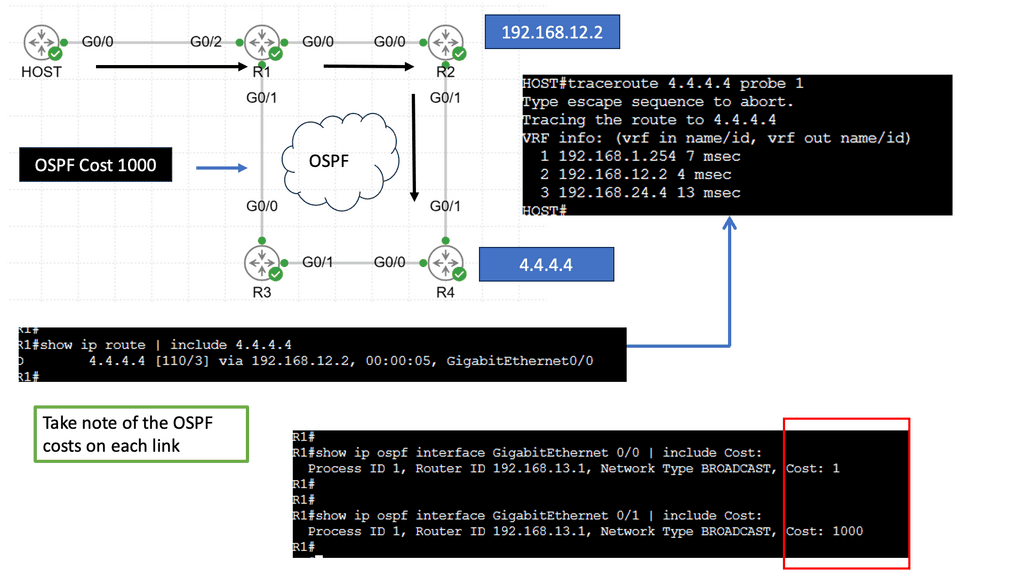

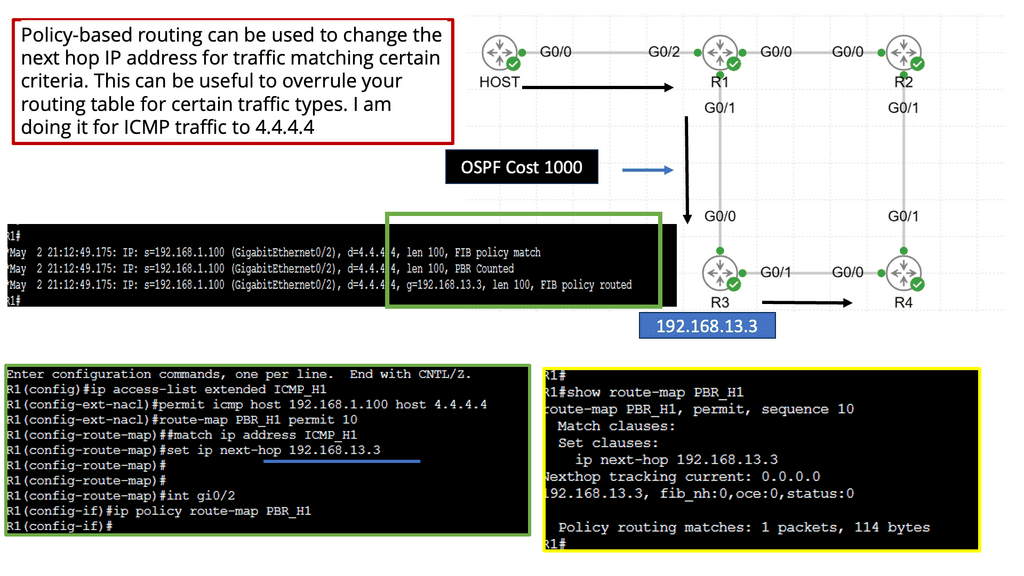

With OpenFlow, the forwarding path is determined more precisely (by matching fields in the packet) than with traditional routing protocols, because the tables OpenFlow supports match on more than just the destination address. Using the source address to determine the next routing hop, for example, is similar to the granularity offered by policy-based routing (PBR).

In the same way that OpenFlow would do many years later, PBR permits network administrators to forward traffic based on “nontraditional” attributes, such as the source address of a packet. However, it took network vendors quite some time to offer equivalent performance for PBR-forwarded traffic, and the final result was very vendor-specific.
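To make the difference from destination-only forwarding concrete, here is a minimal sketch of a multi-field flow table in plain Python. It is not an OpenFlow implementation; the `FlowEntry` class, the `lookup` function, and the addresses and ports are all hypothetical names and values chosen for illustration. The only idea it borrows is that an entry can match on several header fields at once and that the highest-priority match wins.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class FlowEntry:
    """One row of a simplified flow table. A field left as None is a wildcard."""
    priority: int
    out_port: int
    src_net: Optional[str] = None
    dst_net: Optional[str] = None
    ip_proto: Optional[int] = None
    dst_port: Optional[int] = None

    def matches(self, pkt: dict) -> bool:
        if self.src_net and ip_address(pkt["src"]) not in ip_network(self.src_net):
            return False
        if self.dst_net and ip_address(pkt["dst"]) not in ip_network(self.dst_net):
            return False
        if self.ip_proto is not None and pkt["proto"] != self.ip_proto:
            return False
        if self.dst_port is not None and pkt["dport"] != self.dst_port:
            return False
        return True


def lookup(table, pkt):
    """Return the output port of the highest-priority matching entry, or None."""
    for entry in sorted(table, key=lambda e: e.priority, reverse=True):
        if entry.matches(pkt):
            return entry.out_port
    return None


FLOW_TABLE = [
    # TCP port 80 traffic from one source block is steered to port 2.
    FlowEntry(priority=200, out_port=2, src_net="10.1.0.0/16", ip_proto=6, dst_port=80),
    # Everything else towards this destination prefix uses port 1.
    FlowEntry(priority=100, out_port=1, dst_net="203.0.113.0/24"),
]

web = {"src": "10.1.2.3", "dst": "203.0.113.9", "proto": 6, "dport": 80}
dns = {"src": "10.1.2.3", "dst": "203.0.113.9", "proto": 17, "dport": 53}
print(lookup(FLOW_TABLE, web))  # 2
print(lookup(FLOW_TABLE, dns))  # 1
```

Both packets share the same destination, yet they leave on different ports, which a destination-only lookup could not express.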

Example Technology: Policy Based Routing

**How Policy-Based Routing Works**

At its core, policy-based routing operates by applying a series of rules to incoming packets. These rules, defined by network administrators, determine the next hop for packets based on criteria such as source or destination IP address, protocol type, or even application-level data. Unlike conventional routing protocols that rely solely on destination IP addresses to make decisions, PBR provides the ability to consider a broader set of parameters, thus enabling more granular control over network traffic flows.
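The rule evaluation described above can be sketched in a few lines of Python. This is an illustration of the idea only, not any vendor's PBR syntax: the rule format, the `pbr_next_hop` function, and all addresses are assumptions. Rules are checked in order, the first match sets the next hop, and unmatched packets fall back to a default that stands in for normal destination-based routing.

```python
from ipaddress import ip_address, ip_network

# Stand-in for the result of normal destination-based routing (hypothetical).
DEFAULT_NEXT_HOP = "192.0.2.1"

# Hypothetical policy rules, evaluated top to bottom; the first match wins.
# A "proto" of None means "any protocol".
POLICY_RULES = [
    {"src": "10.10.0.0/16", "proto": "tcp", "next_hop": "192.0.2.254"},
    {"src": "10.20.0.0/16", "proto": None, "next_hop": "192.0.2.253"},
]


def pbr_next_hop(pkt, rules=POLICY_RULES, default=DEFAULT_NEXT_HOP):
    """Pick a next hop from source address and protocol, not just destination."""
    for rule in rules:
        if ip_address(pkt["src"]) not in ip_network(rule["src"]):
            continue
        if rule["proto"] is not None and rule["proto"] != pkt["proto"]:
            continue
        return rule["next_hop"]
    return default  # no policy matched: use ordinary routing


print(pbr_next_hop({"src": "10.10.1.1", "dst": "198.51.100.7", "proto": "tcp"}))  # 192.0.2.254
print(pbr_next_hop({"src": "10.10.1.1", "dst": "198.51.100.7", "proto": "udp"}))  # 192.0.2.1
print(pbr_next_hop({"src": "10.20.5.5", "dst": "198.51.100.7", "proto": "udp"}))  # 192.0.2.253
```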

**Benefits of Implementing Policy-Based Routing**

One of the primary advantages of PBR is its ability to optimize network performance. By directing traffic along paths that make the most sense for specific types of data, network operators can reduce congestion and improve response times. Additionally, PBR can enhance security by allowing sensitive data to be routed over secure, encrypted pathways while less critical data takes a different route. This capability is particularly valuable in environments where network resources are shared across multiple departments or where specific compliance requirements must be met.

**Challenges and Considerations**

Despite its benefits, policy-based routing is not without challenges. The complexity of configuring and maintaining PBR rules can be daunting, especially in large networks with diverse requirements. Careful planning and ongoing management are essential to ensure that PBR implementations remain effective and do not introduce unintended routing behaviors. Moreover, network administrators must keep an eye on the broader network architecture to ensure that PBR policies align with overall network goals and do not conflict with other routing protocols in use.

**Use Cases: Real-World Applications of Policy-Based Routing**

Policy-based routing finds its place in a variety of real-world applications. In enterprise networks, PBR is often used to prioritize business-critical applications or to implement cost-saving measures by routing traffic over less expensive links when possible. It also plays a significant role in multi-tenant environments, where different customers or departments may require distinct levels of service. Additionally, PBR is instrumental in hybrid cloud environments, where data flows between on-premises infrastructure and cloud services must be managed efficiently.

**The Role of SDN Solutions**

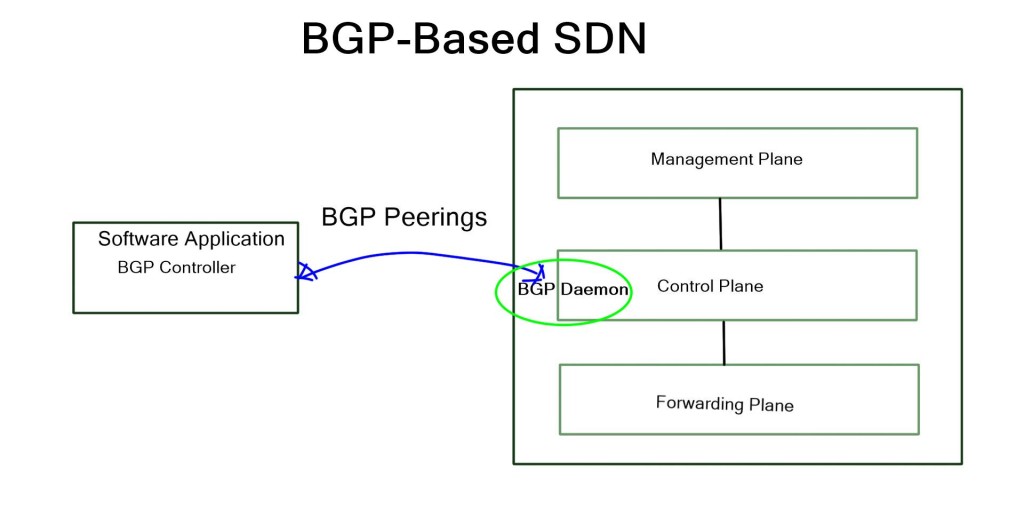

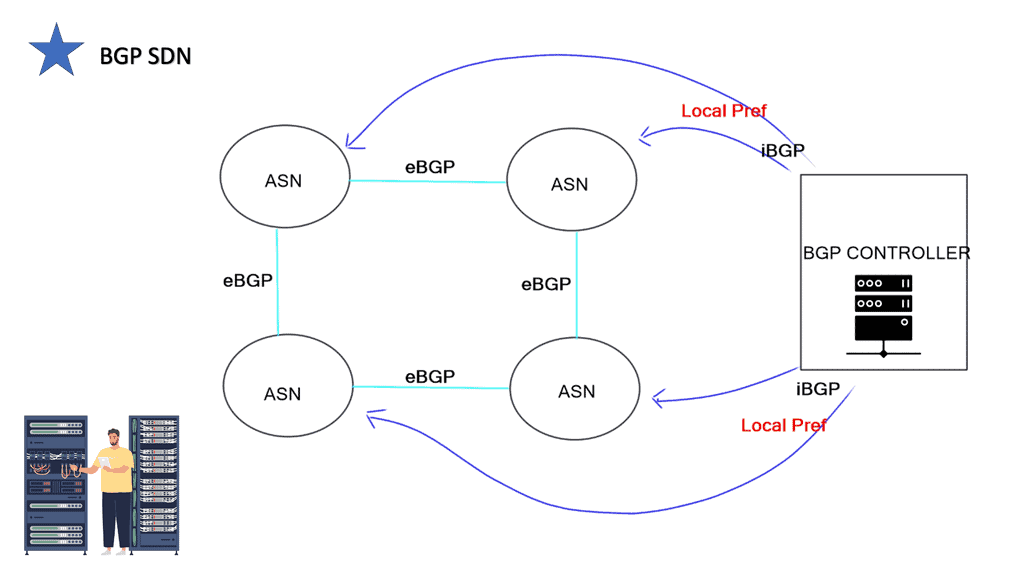

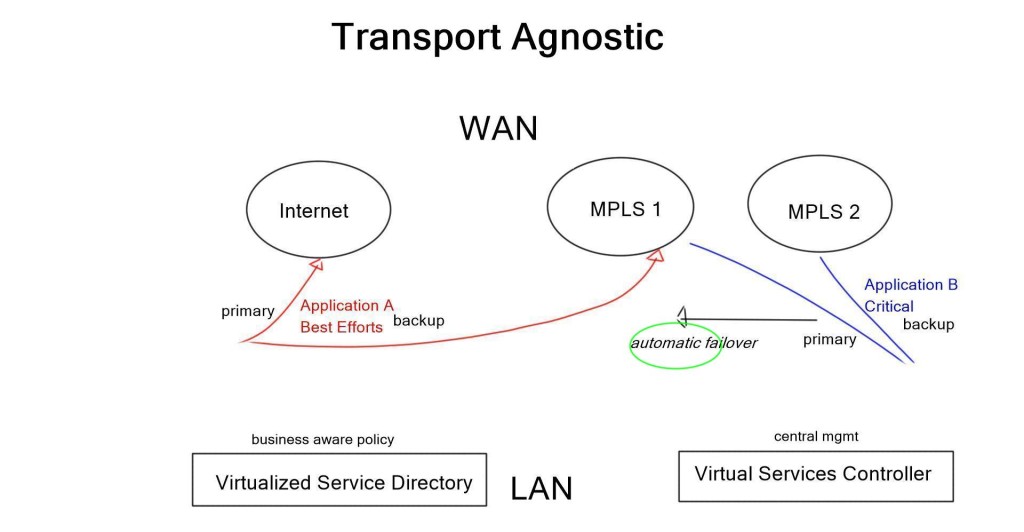

Most existing SDN solutions are aimed at cellular core networks, enterprises, and the data center. However, at the WAN edge, SD-WAN and WAN SDN are leading a solid path, with many companies offering a BGP SDN solution augmenting natural Border Gateway Protocol (BGP) IP forwarding behavior with a controller architecture, optimizing both inbound and outbound Internet-bound traffic. So, how can we use these existing SDN mechanisms to enhance BGP for interdomain routing at Internet Exchange Points (IXP)?

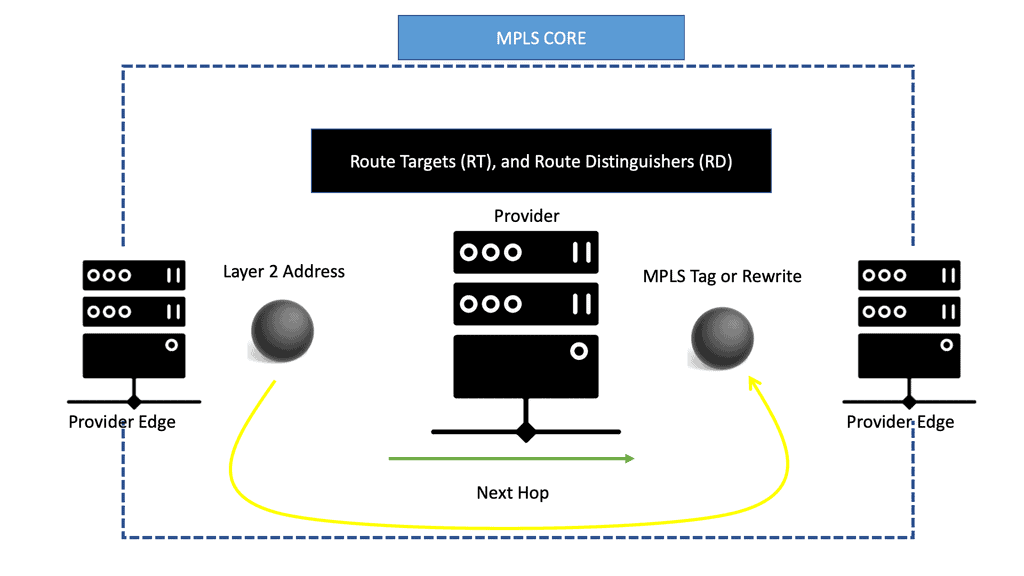

**The Role of IXPs**

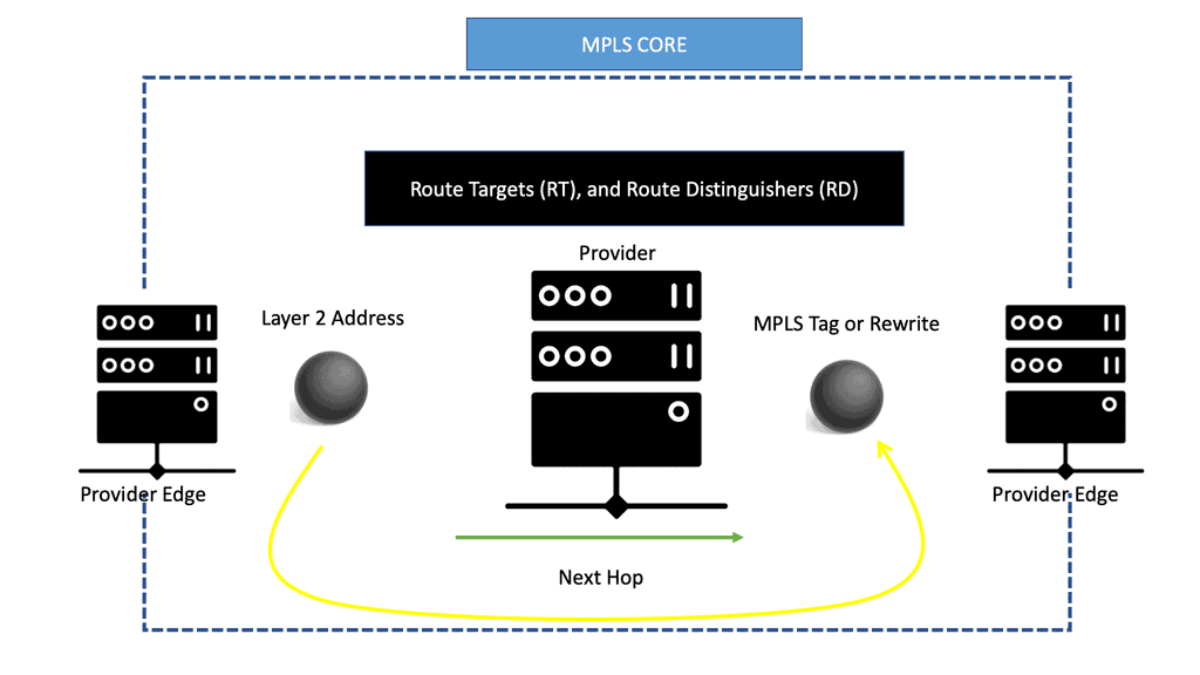

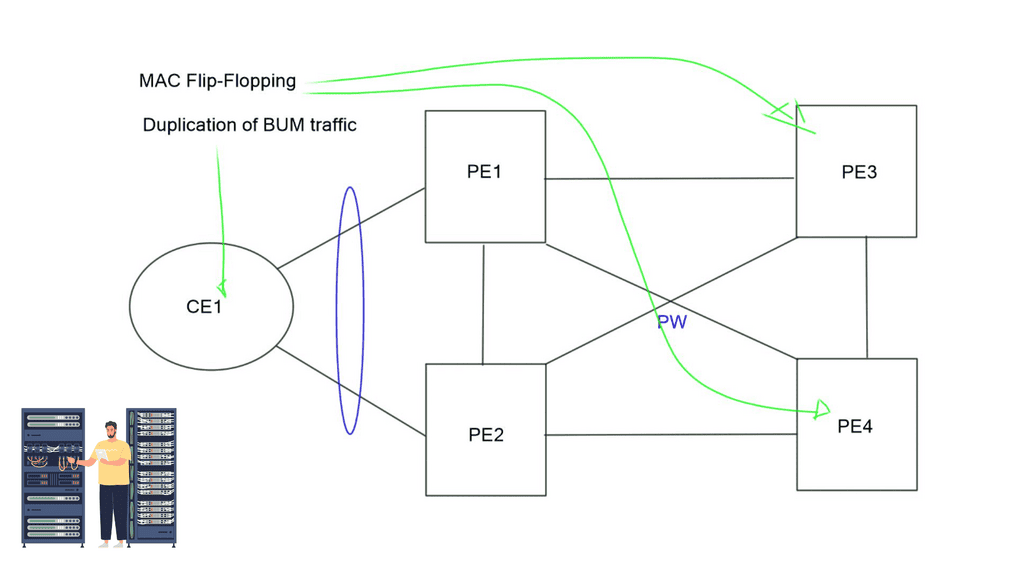

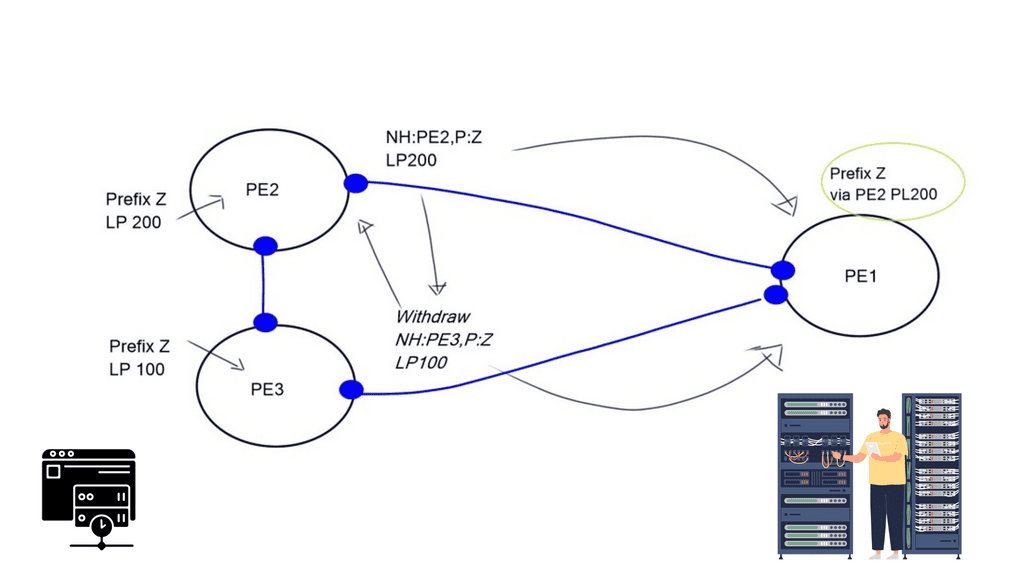

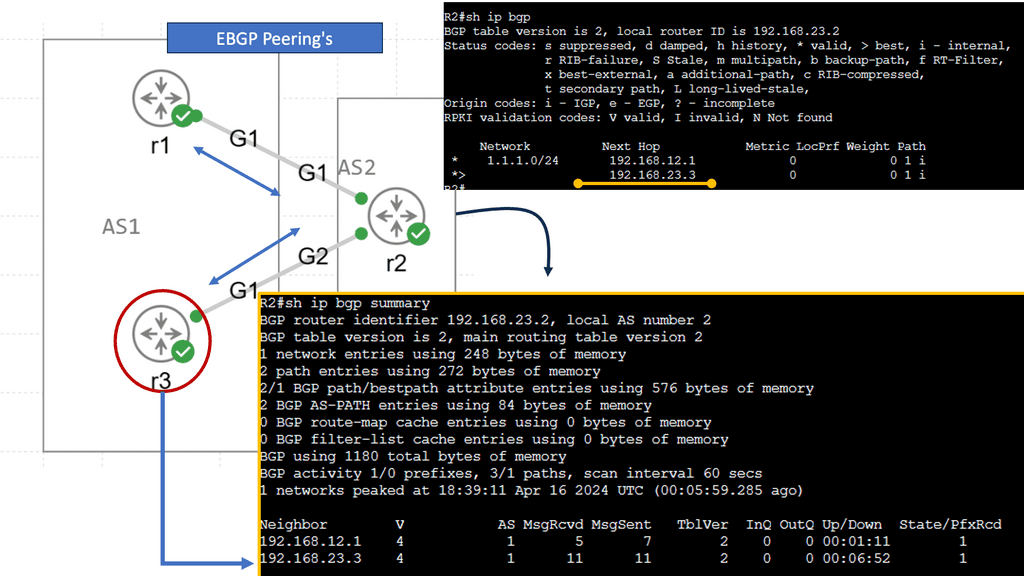

IXPs are locations where networks from multiple providers meet to exchange traffic using BGP routing. Each participating AS exchanges BGP routes by peering over eBGP with a BGP route server, and the traffic itself is forwarded to the other member ASes over a shared Layer 2 fabric. The shared Layer 2 fabric provides the data plane forwarding of packets, while the BGP route server acts as the control plane that exchanges routing information.


Software Defined Internet Exchange

An Internet exchange point (IXP) is a physical location through which Internet infrastructure companies such as Internet Service Providers (ISPs) and CDNs connect. These locations exist on the “edge” of different networks and allow network providers to share transit outside their network.

IXPs will run BGP. Also, it is essential to understand that Internet exchange point participants often require that the BGP NEXT_HOP specified in UPDATE messages be that of the peer’s IP address, as a matter of policy.

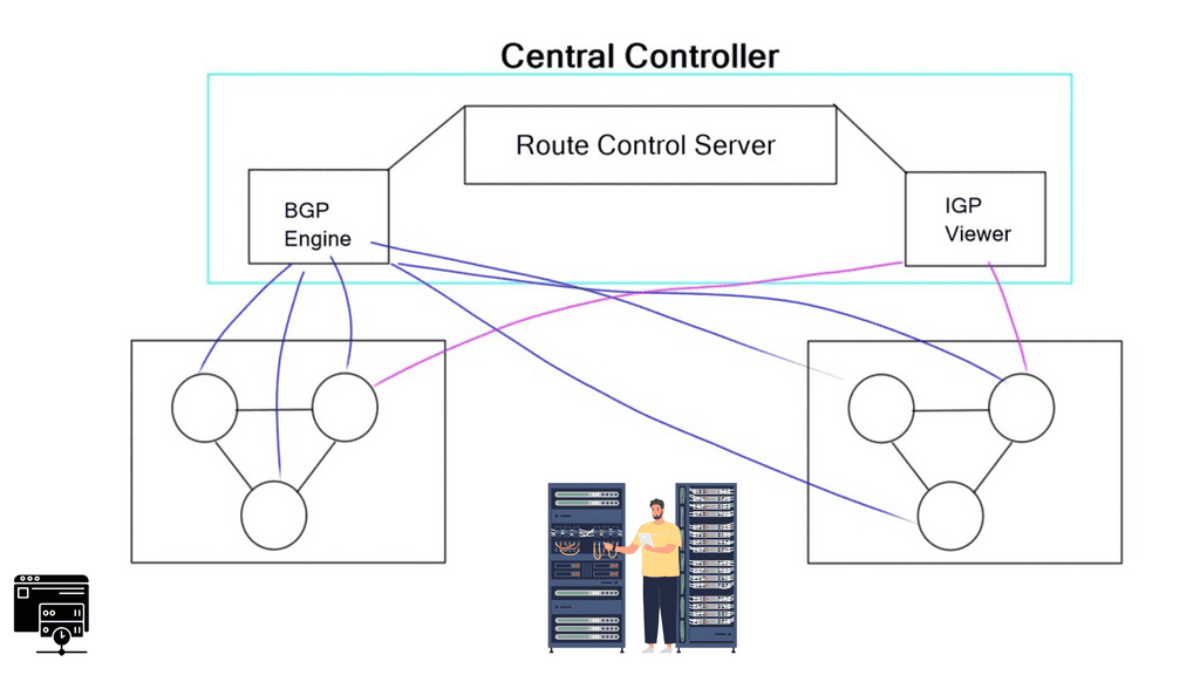

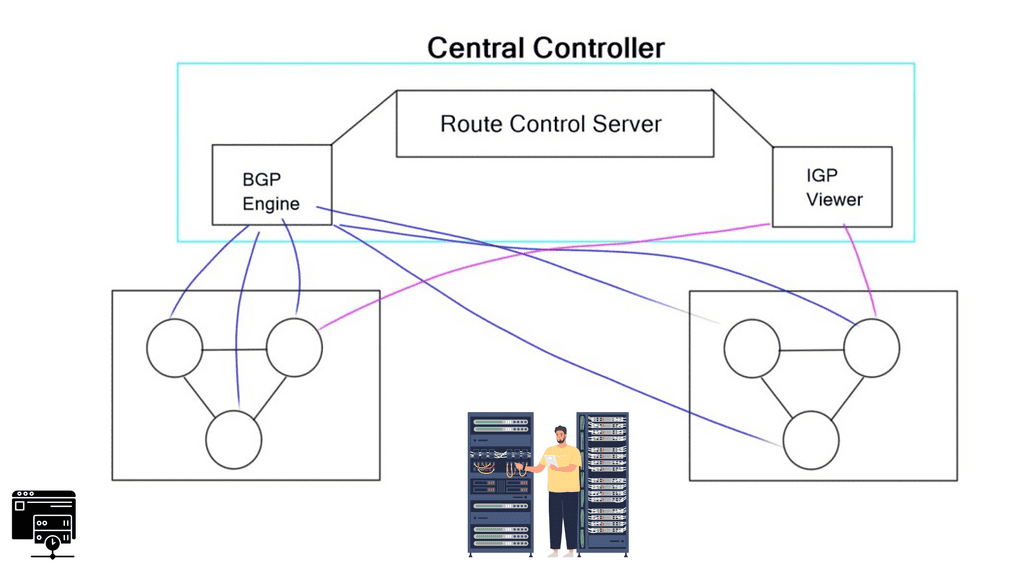

Route Server

A route server provides an alternative to full eBGP peering between participating AS members, enabling network traffic engineering. It’s a control plane device and does not participate in data plane forwarding. There are currently around 300 IXPs worldwide. Because of their simple architecture and flat networks, IXPs are good locations to deploy SDN.
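As a rough illustration of the route server's role, the sketch below models it as a pure control plane object: it collects routes from members and re-advertises them to everyone else with the next hop left untouched, so traffic flows directly between members over the fabric. The `RouteServer` class, its method names, and the AS numbers and addresses are hypothetical; a real route server also applies filtering and per-peer policy, which is omitted here.

```python
class RouteServer:
    """Toy route server: relays routes between members, never forwards packets."""

    def __init__(self):
        self._routes = {}  # member AS -> list of (prefix, next_hop)

    def receive(self, member_as, prefix, next_hop):
        """Store a route announced by a member."""
        self._routes.setdefault(member_as, []).append((prefix, next_hop))

    def advertise_to(self, member_as):
        """Routes sent to one member: everyone else's, with NEXT_HOP unchanged."""
        out = []
        for origin_as in sorted(self._routes):
            if origin_as == member_as:
                continue
            for prefix, next_hop in self._routes[origin_as]:
                out.append((prefix, next_hop, origin_as))
        return out


def full_mesh_sessions(members):
    """Number of bilateral eBGP sessions needed without a route server."""
    return members * (members - 1) // 2


rs = RouteServer()
rs.receive(65001, "198.51.100.0/24", "192.0.2.11")
rs.receive(65002, "203.0.113.0/24", "192.0.2.12")
rs.receive(65003, "198.18.0.0/24", "192.0.2.13")

print(rs.advertise_to(65001))
print(full_mesh_sessions(100))  # 4950 sessions, versus 100 with a route server
```

Because the next hop stays that of the originating member, the route server stays out of the data path, which matches the description above.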

Forwarding across the fabric happens at Layer 2 with no routing involved, which leaves plenty of room for innovation. IXPs are usually run by small teams, making innovation easy to introduce. Fear is one of the primary emotions that inhibits innovation, and one thing that creates fear is loss of service.

This is significant for IXP networks, as they may carry over 5 terabits of traffic per second (Tbps). IXPs are major connecting points, and even a slight outage can have a significant ripple effect.

- A key point. Internet Exchange Design

SDX, a software-defined internet exchange, is an SDN solution based on the combined efforts of Princeton and UC Berkeley. It aims to address IXP pain points (listed below) by deploying additional SDN controllers and OpenFlow-enabled switches. It doesn’t try to replace the entire classical IXP architecture with something new but rather augments existing designs with a controller-based solution, enhancing IXP traffic engineering capabilities. However, the risks associated with open-source dependencies shouldn’t be ignored.

Challenges: Software Defined Internet Exchange: IXP Pain Points

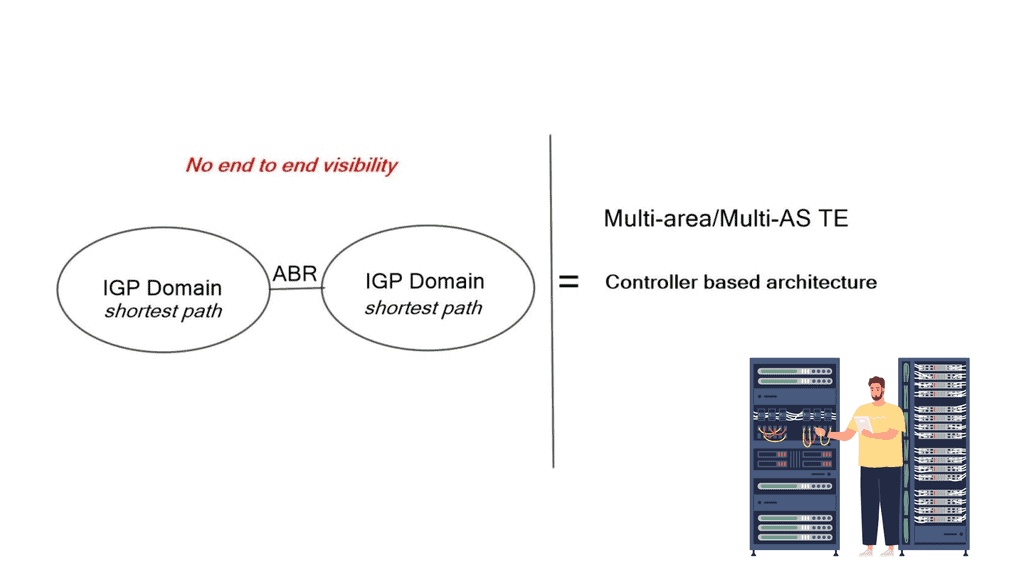

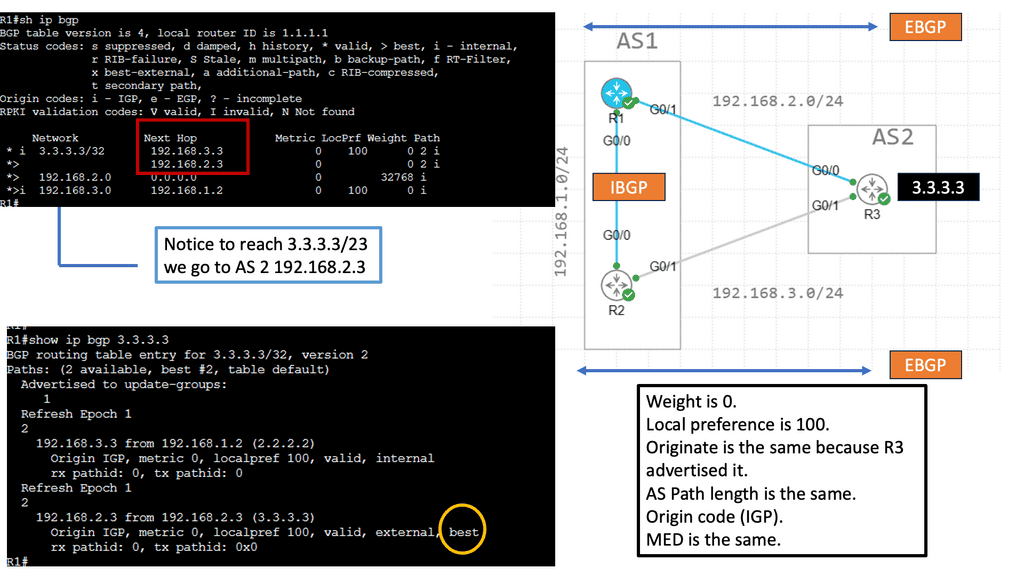

BGP is great for scalability and reducing complexity but severely limits how networks deliver traffic over the Internet. One tricky thing to do with BGP is good inbound TE. The issue is that IP routing is destination-based, so your neighbor decides where traffic enters the network. It’s not your decision.

The forwarding mechanism is based on the destination IP prefix. A device forwards all packets with the same destination address to the same next hop, and the connected neighbor decides.
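A minimal sketch of destination-based forwarding, assuming a hypothetical forwarding table (`FIB`) and made-up addresses, shows why the sender has so little say. The lookup takes only the destination address as input and picks the longest matching prefix, so every packet to the same destination gets the same next hop regardless of who sent it or which application it belongs to.

```python
from ipaddress import ip_address, ip_network

# Hypothetical forwarding table: destination prefix -> next hop.
FIB = {
    "0.0.0.0/0": "192.0.2.1",
    "203.0.113.0/24": "192.0.2.2",
    "203.0.113.128/25": "192.0.2.3",
}


def next_hop(dst, fib=FIB):
    """Longest-prefix match on the destination address only."""
    addr = ip_address(dst)
    candidates = [ip_network(prefix) for prefix in fib if addr in ip_network(prefix)]
    if not candidates:
        return None
    best = max(candidates, key=lambda net: net.prefixlen)
    return fib[str(best)]


print(next_hop("203.0.113.200"))  # 192.0.2.3 (matches the /25)
print(next_hop("203.0.113.5"))    # 192.0.2.2 (matches the /24)
print(next_hop("198.51.100.1"))   # 192.0.2.1 (default route)
```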

The main pain points for IXP networks:

As already mentioned, routing is based on the destination IP prefix. BGP selects and exports routes for destination prefixes only. It doesn’t match other criteria in the packet header, such as source IP address or port number. Therefore, it cannot help with application steering, which would be helpful in IXP networks.

Secondly, you can only influence direct neighbors. There is no end-to-end control, and it’s hard to influence neighbors that you are not peering with. Some BGP attributes don’t carry across multiple ASes; others may be interpreted differently among vendors. We also use a lot of de-aggregation for TE. Everyone is doing this, which is why we have the problem of 540,000 prefixes on the Internet. De-aggregation and multihoming create lots of scalability challenges.

Finally, there is an indirect expression of policy. Local Preference (LP) and Multi-Exit Discriminator (MED) are indirect and often ineffective mechanisms for traffic engineering. We should have better inbound and outbound TE capabilities. MED, AS path prepending, and Local Preference are widely used attributes for TE, but they are not the ultimate solution.

They are inflexible because they can only influence routing decisions based on destination prefixes; you cannot match on source IP or application type. They are also complex, involving intense configuration on multiple network devices. The inbound techniques all rely on influencing how the remote party decides to enter your AS, and if the remote party does not honor them, TE becomes unpredictable.
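The unpredictability can be shown with a heavily simplified model of BGP best-path selection. The sketch below compares only three attributes (highest LOCAL_PREF, then shortest AS_PATH, then lowest MED) and skips the rest of the real decision process, including the rule that MED is normally compared only between routes from the same neighboring AS. The `Route` class, the link names, and the AS number are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    via: str            # which link the remote AS would use to reach us
    local_pref: int     # set by the remote AS, not by us
    as_path: tuple      # we can lengthen this with prepending
    med: int = 0


def best_route(routes):
    """Simplified selection: highest LOCAL_PREF, shortest AS_PATH, lowest MED."""
    return min(routes, key=lambda r: (-r.local_pref, len(r.as_path), r.med))


# AS 65001 prepends on link-B, hoping inbound traffic arrives over link-A.
as_announced = [
    Route("203.0.113.0/24", "link-A", local_pref=100, as_path=(65001,)),
    Route("203.0.113.0/24", "link-B", local_pref=100, as_path=(65001, 65001, 65001)),
]
print(best_route(as_announced).via)  # link-A: prepending worked

# The remote AS applies its own LOCAL_PREF, which is evaluated first.
remote_override = [
    Route("203.0.113.0/24", "link-A", local_pref=100, as_path=(65001,)),
    Route("203.0.113.0/24", "link-B", local_pref=200, as_path=(65001, 65001, 65001)),
]
print(best_route(remote_override).via)  # link-B: prepending is ignored
```

The second case is the point of the paragraph above: the announcing AS can only hint, and the neighbor's local policy has the final word.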

SDX: Software-Defined Internet Exchange

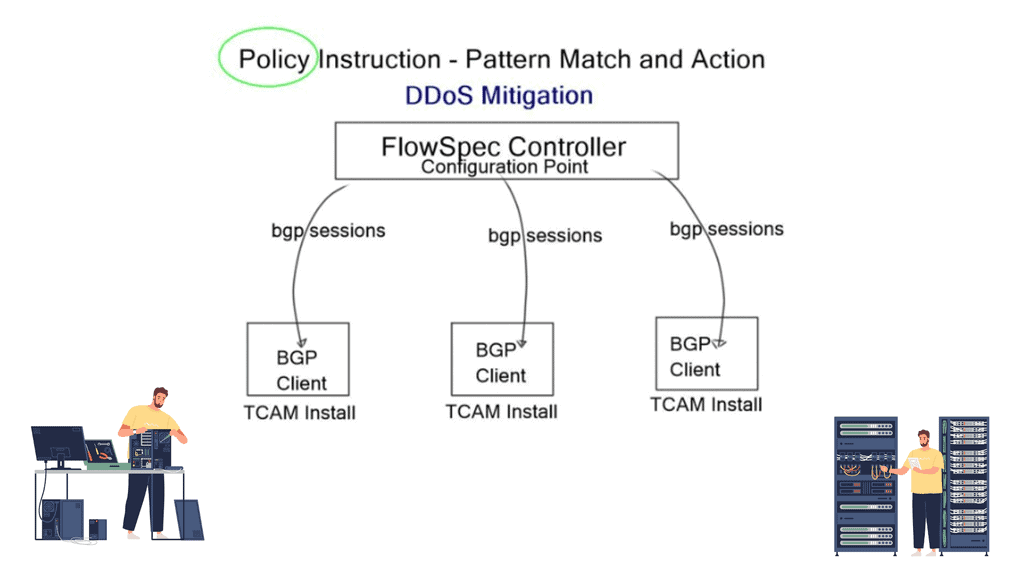

The SDX solution proposed by Laurent is a Software-Defined Internet Exchange. As previously mentioned, it consists of a controller-based architecture with OpenFlow 1.3-enabled physical switches. It aims to solve the pain points of BGP at the edge using SDN.

Transport SDN offers direct control over packet-processing rules that match on multiple header fields (not just destination prefixes) and perform various actions (not just forwarding), offering direct control over the data path. SDN enables the network to execute a broader range of decisions concerning end-to-end traffic delivery.

How does it work?

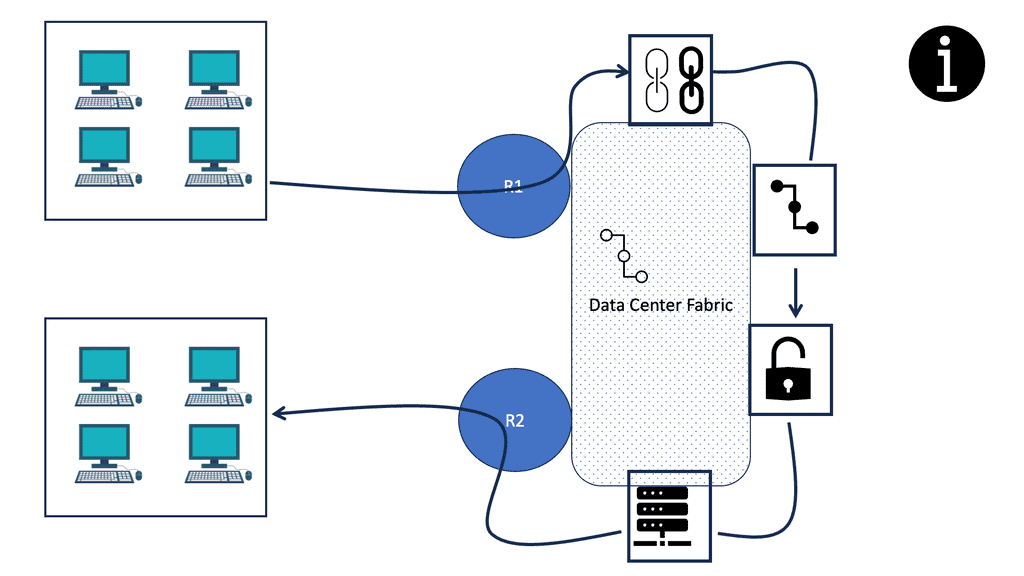

With SDX, the IXP fabric is replaced with OpenFlow-enabled switches, and network traffic engineering is based on granular OpenFlow rules. It’s more predictable as it does not rely on third-party neighbors to decide the entry. OpenFlow rules can match on many packet header fields, so they’re much more flexible than existing TE mechanisms. An SDN-enabled data plane enables networks to have optimal WAN traffic with application steering capabilities.

The existing route server has not been modified, but now we can push SDN rules into the fabric without requiring classical BGP tricks (local preference, MED, AS prepend). The solution matches the destination MAC address, not the destination IP prefix, and uses an ARP proxy to convert the IP prefixes to MAC addresses.
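One way to picture the ARP proxy step is the sketch below. It is an assumption-laden illustration, not the SDX code: the `ArpProxy` class, the address range used for virtual next hops, and the MAC numbering scheme are all invented here. The idea it tries to capture is that a group of prefixes is advertised with one virtual next-hop IP, the proxy answers ARP requests for that IP with a virtual MAC, and the fabric can then match on that single destination MAC instead of on every prefix.

```python
from ipaddress import ip_address


class ArpProxy:
    """Maps each group of prefixes to one virtual next hop (VNH) and virtual MAC."""

    def __init__(self, first_vnh="172.16.255.1"):
        self._first = int(ip_address(first_vnh))
        self._vnh_by_group = {}  # frozenset of prefixes -> VNH IP
        self._vmac_by_vnh = {}   # VNH IP -> virtual MAC

    def assign(self, prefixes):
        """Return the VNH for a prefix group, allocating one on first use."""
        group = frozenset(prefixes)
        if group not in self._vnh_by_group:
            index = len(self._vnh_by_group)
            vnh = str(ip_address(self._first + index))
            # 02:... is a locally administered MAC; this toy scheme only
            # covers a small number of groups.
            vmac = "02:00:00:00:00:%02x" % (index + 1)
            self._vnh_by_group[group] = vnh
            self._vmac_by_vnh[vnh] = vmac
        return self._vnh_by_group[group]

    def arp_reply(self, vnh):
        """Answer an ARP request for a VNH with its virtual MAC (None if unknown)."""
        return self._vmac_by_vnh.get(vnh)


proxy = ArpProxy()
vnh_a = proxy.assign(["198.51.100.0/24", "203.0.113.0/24"])
vnh_b = proxy.assign(["192.0.2.0/24"])
print(vnh_a, proxy.arp_reply(vnh_a))  # 172.16.255.1 02:00:00:00:00:01
print(vnh_b, proxy.arp_reply(vnh_b))  # 172.16.255.2 02:00:00:00:00:02
```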

The participants define the forwarding policies, and the controller’s role is to compile the forwarding entries into the fabric. The SDX controller implementation has two main pipelines: a policy compiler based on Pyretic and a route server based on ExaBGP. The policy compiler accepts input policies (custom route advertisements) written in Pyretic from individual participants and BGP routes from the route server. This produces forwarding rules that implement the policies.
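The compilation step can be approximated in plain Python. To be clear, this is not Pyretic and not the SDX compiler; the data structures, the `compile_policy` function, and the participant names are hypothetical. It shows one plausible reading of the paragraph above: a participant's policy is combined with the BGP routes from the route server, so a rule that forwards to another participant is only installed for prefixes that participant actually advertises.

```python
# Routes known to the route server: participant -> prefixes it advertises.
BGP_ROUTES = {
    "B": ["198.51.100.0/24"],
    "C": ["198.51.100.0/24", "203.0.113.0/24"],
}

# Participant A's outbound policy: match fields -> preferred egress participant.
POLICY_A = [
    {"match": {"dport": 80}, "fwd": "B"},
    {"match": {"dport": 443}, "fwd": "C"},
]


def compile_policy(policy, routes):
    """Expand each clause into per-prefix rules, restricted to prefixes
    the target participant advertises."""
    rules = []
    for clause in policy:
        target = clause["fwd"]
        for prefix in routes.get(target, []):
            match = dict(clause["match"], dst=prefix)
            rules.append({"match": match, "fwd": target})
    return rules


RULES = compile_policy(POLICY_A, BGP_ROUTES)
for rule in RULES:
    print(rule)
```

Port 80 traffic is steered to B only for 198.51.100.0/24, because B has no route for 203.0.113.0/24; installing a rule for that prefix would send traffic to a network that cannot deliver it.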

SDX Controller

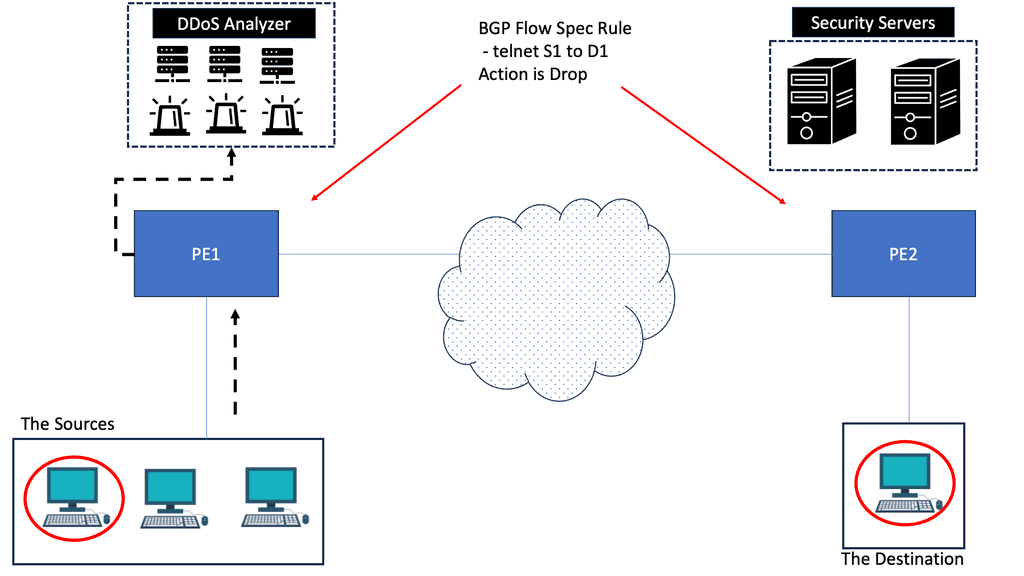

The SDX controller combines the policies from multiple member ASes into one policy for the physical switch implementation. The controller is like an optimizing compiler, compiling down the policy and reducing the number of forwarding rules by using virtual next hops. There are other potential design alternatives to SDX, such as BGP FlowSpec. But in this case, BGP FlowSpec would have to be supported by all participating member AS edge devices.

Closing Points on Software Defined Internet Exchange

At its core, SDX is an evolution of traditional Internet Exchange Points (IXPs), which are critical nodes in the internet’s infrastructure, allowing different networks to interconnect. Traditional IXPs are hardware-driven, requiring physical switches and routers to manage traffic between networks. SDX, on the other hand, leverages the principles of SDN to introduce a software layer that enhances flexibility and control over these exchanges. This software-defined approach allows for dynamic configuration and management of network policies, enabling more efficient and tailored data traffic handling.

One of the primary benefits of SDX is its capacity for greater agility and adaptability in managing network traffic. Unlike traditional IXPs, SDX can quickly respond to changing network demands, optimizing the flow of data in real time. This adaptability is particularly beneficial for handling peak traffic periods or unexpected surges, ensuring that data exchanges remain smooth and uninterrupted. Additionally, SDX provides enhanced security features, as the software layer can be programmed to detect and mitigate potential threats more effectively than conventional hardware solutions.

The implications of adopting SDX are vast and varied. For internet service providers, SDX offers the potential to provide more personalized services to their customers, adjusting bandwidth and routing protocols based on individual needs. Enterprises can benefit from SDX by gaining more control over their data exchanges, optimizing their network performance, and reducing operational costs. Furthermore, SDX is particularly advantageous for emerging technologies like the Internet of Things (IoT) and 5G networks, where the ability to efficiently handle large volumes of data is crucial.

Despite its many advantages, the transition to SDX is not without its challenges. Implementing SDX requires significant changes to existing network infrastructures, which can be costly and complex. Moreover, the shift to a software-centric model necessitates a new skill set for IT professionals, who must be adept in both networking and software development. There is also the consideration of interoperability, as networks must ensure that their SDX solutions can work seamlessly with other networks and legacy systems.

Summary: Software Defined Internet Exchange

In today’s fast-paced digital world, seamless connectivity is necessary for businesses and individuals. As technology advances, traditional Internet exchange models face scalability, flexibility, and cost-effectiveness limitations. However, a groundbreaking solution has emerged – software-defined internet exchange (SD-IX). In this blog post, we will delve into the world of SD-IX, exploring its benefits, functionalities, and potential to revolutionize how we connect online.

Understanding SD-IX

SD-IX, at its core, is a virtualized network infrastructure that enables the dynamic and efficient exchange of internet traffic between multiple parties. Unlike traditional physical exchange points, SD-IX leverages software-defined networking (SDN) principles to provide a more agile and scalable solution. By separating the control and data planes, SD-IX empowers organizations to manage their network traffic with enhanced flexibility and control.

The Benefits of SD-IX

Enhanced Performance and Latency Reduction: SD-IX brings the exchange points closer to end-users, reducing the distance data travels. This proximity results in lower latency and improved network performance, enabling faster application response times and better user experience.

Scalability and Agility: Traditional exchange models often struggle to keep up with the ever-increasing demands for bandwidth and connectivity. SD-IX addresses this challenge by providing a scalable architecture that can adapt to changing network requirements. Organizations can easily add or remove connections, adjust bandwidth, and optimize network resources on-demand, all through a centralized interface.

Cost-Effectiveness: With SD-IX, organizations can avoid the costly investments in building and maintaining physical infrastructure. By leveraging virtualized network components, businesses can save costs while benefiting from enhanced connectivity and performance.

Use Cases and Applications

- Multi-Cloud Connectivity

SD-IX facilitates seamless connectivity between multiple cloud environments, allowing organizations to distribute workloads and resources efficiently. By leveraging SD-IX, businesses can build a robust and resilient multi-cloud architecture, ensuring high availability and optimized data transfer between cloud platforms.

- Hybrid Network Integration

For enterprises with a mix of on-premises infrastructure and cloud services, SD-IX serves as a bridge, seamlessly integrating these environments. SD-IX enables secure and efficient communication between different network domains, empowering organizations to leverage the advantages of both on-premises and cloud-based resources.

Conclusion:

In conclusion, software-defined Internet exchange (SD-IX) presents a transformative solution to the challenges faced by traditional exchange models. With its enhanced performance, scalability, and cost-effectiveness, SD-IX is poised to revolutionize how we connect and exchange data in the digital age. As businesses continue to embrace the power of SD-IX, we can expect a new era of connectivity that empowers innovation, collaboration, and seamless digital experiences.