OpenContrail

In today's fast-paced world, where cloud computing and virtualization have become the norm, the need for efficient and flexible networking solutions has never been greater. OpenContrail, an open-source software-defined networking (SDN) solution, has emerged as a powerful tool. This blog post explores the capabilities, benefits, and significance of OpenContrail in revolutionizing network management and delivering enhanced connectivity in the cloud era.

OpenContrail, initially developed by Juniper Networks, is an open-source SDN platform offering comprehensive network capabilities for cloud environments. It provides a scalable and flexible network infrastructure that enables automation, network virtualization, and secure multi-tenancy across distributed cloud deployments.

OpenContrail, an open-source network virtualization platform, is designed to simplify the management and orchestration of virtual networks. Built on well-established technologies such as OpenStack and SDN, it provides a comprehensive set of tools and APIs to create and manage virtualized network services. With OpenContrail, organizations can achieve greater scalability, security, and performance while reducing operational complexities.

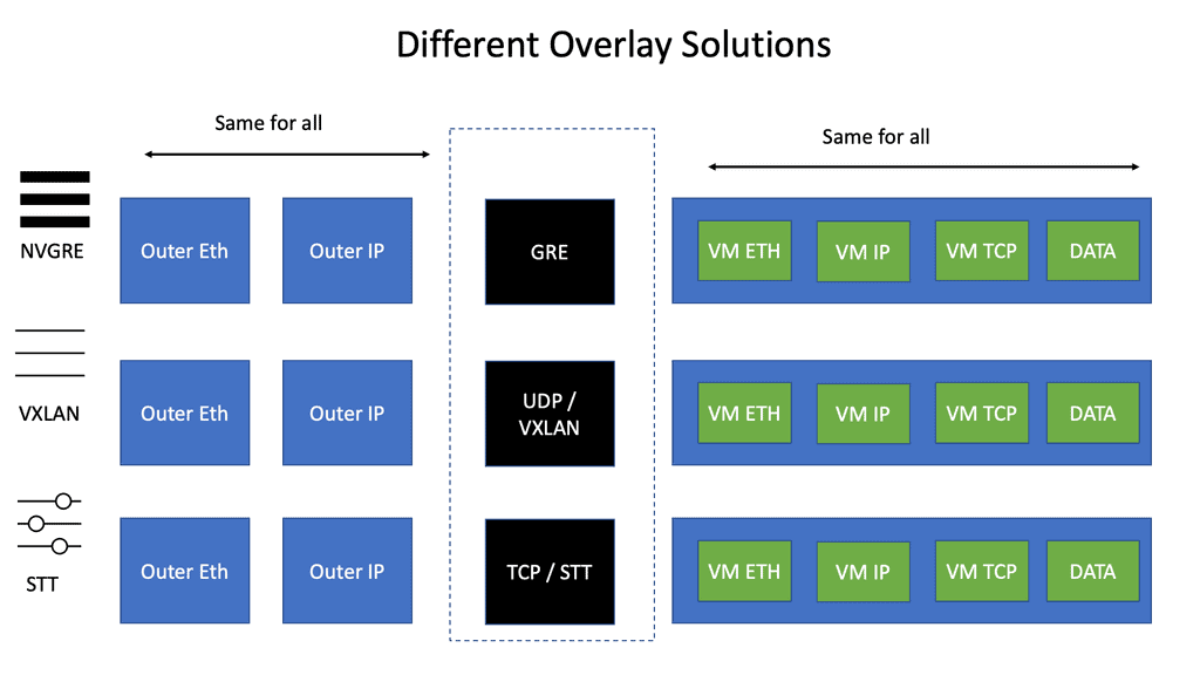

Virtual Network Overlays: OpenContrail leverages virtual network overlays to create isolated and secure network segments, allowing for seamless multi-tenancy and network segmentation.

Network Policy and Security: It offers fine-grained network policies to control traffic flow, implement access control, and enforce security measures at the virtual network level.

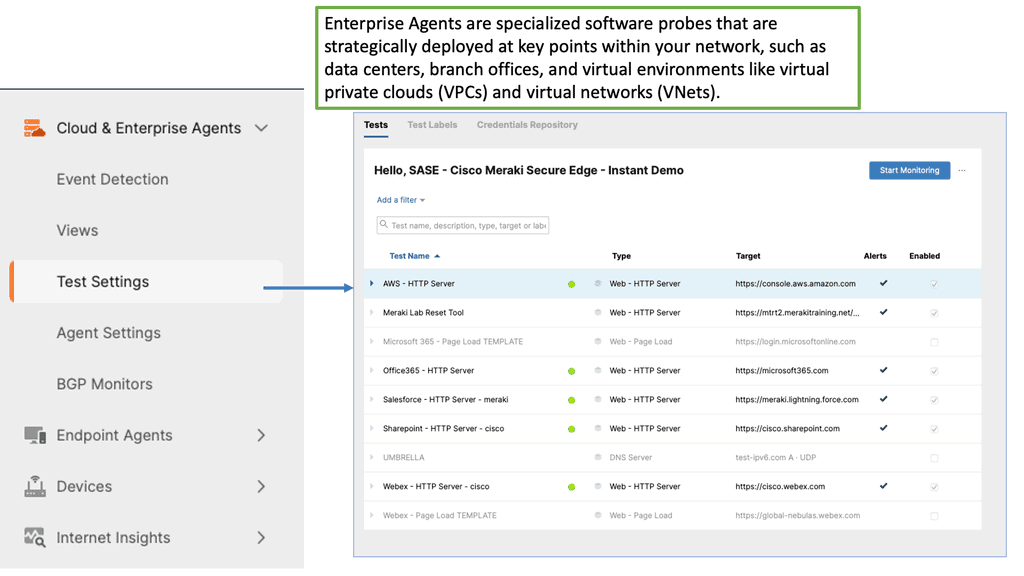

Analytics and Monitoring: OpenContrail provides advanced analytics and monitoring capabilities, allowing administrators to gain insights into network performance, troubleshoot issues, and optimize resource allocation.

Cloud Service Providers: OpenContrail empowers cloud service providers to deliver scalable and secure network services to their customers. It enables seamless provisioning of virtual networks, ensuring high-performance connectivity and efficient resource utilization.

Enterprise Networks: Enterprises can leverage OpenContrail to build agile and flexible network infrastructures. It simplifies network management, enables seamless integration with existing infrastructure, and provides enhanced security measures.

Internet of Things (IoT): With the proliferation of IoT devices, OpenContrail offers a robust solution for managing and securing large-scale IoT deployments. It enables efficient communication between devices, ensures data privacy, and provides centralized control over IoT network resources.

OpenContrail proves to be a groundbreaking solution in the realm of network virtualization. Its feature-rich architecture, open-source nature, and diverse real-world applications make it an invaluable tool for organizations seeking to optimize network performance, enhance security, and embrace the future of virtualized networks.

Matt Conran

Highlights: OpenContrail

Understanding OpenContrail

OpenContrail is an open-source software-defined networking (SDN) solution that enables the creation and management of virtual networks. It provides a scalable and flexible networking platform that simplifies network provisioning, enhances security, and optimizes network performance. By leveraging OpenContrail, organizations can effectively address the challenges posed by traditional networking approaches.

**Key Features and Benefits**

OpenContrail offers a wide range of powerful features that set it apart from traditional networking solutions. One of its key features is network virtualization, which allows the creation of isolated virtual networks within a physical network infrastructure.

This enables organizations to achieve greater agility and scalability, as well as efficient resource utilization. Additionally, OpenContrail provides advanced security measures, including micro-segmentation, that help protect sensitive data and prevent unauthorized access.

**Use Cases and Industry Applications**

OpenContrail is versatile and can be applied across various industries and use cases. In the telecommunications sector, it supports network slicing and virtual network functions (VNFs), crucial for deploying 5G networks. Enterprises use OpenContrail to create agile and scalable cloud environments, facilitating faster application deployment and improving overall operational efficiency.

Additionally, OpenContrail’s robust security features make it a preferred choice for sectors that require stringent data protection measures, such as finance and healthcare. By providing micro-segmentation and advanced threat detection, OpenContrail helps organizations safeguard their sensitive information.

Open-source network virtualization platform

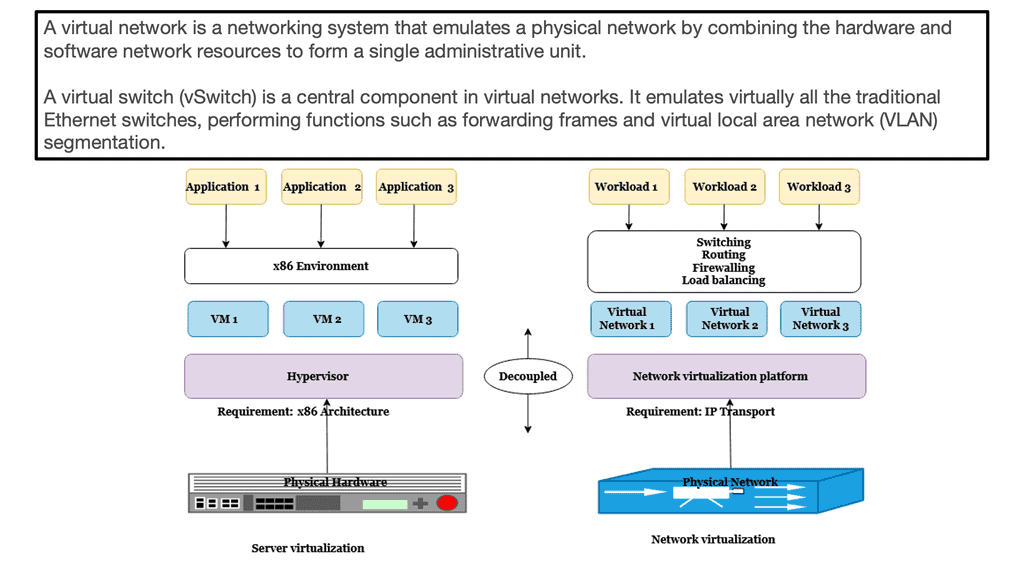

OpenContrail is an open-source network virtualization platform that enables the creation of virtual networks overlaying physical infrastructure. It provides a scalable and flexible solution for managing network resources, improving security, and enhancing overall network performance. By decoupling the network control plane from the data plane, OpenContrail brings a new level of agility and efficiency to network operations.

1. Virtual Network Creation: OpenContrail allows the creation of virtual networks, each with its own isolated environment, policies, and routing tables. This enables organizations to achieve multi-tenancy and securely isolate their applications and workloads.

2. Network Automation and Orchestration: With OpenContrail, network provisioning and management become automated and orchestrated. This reduces manual configuration efforts and brings more consistency and reliability to network operations.

3. Enhanced Security: OpenContrail provides advanced security features such as micro-segmentation, distributed firewalling, and traffic isolation. These capabilities ensure that applications and data remain protected and isolated, even in complex and dynamic network environments.

Understanding OpenContrail components

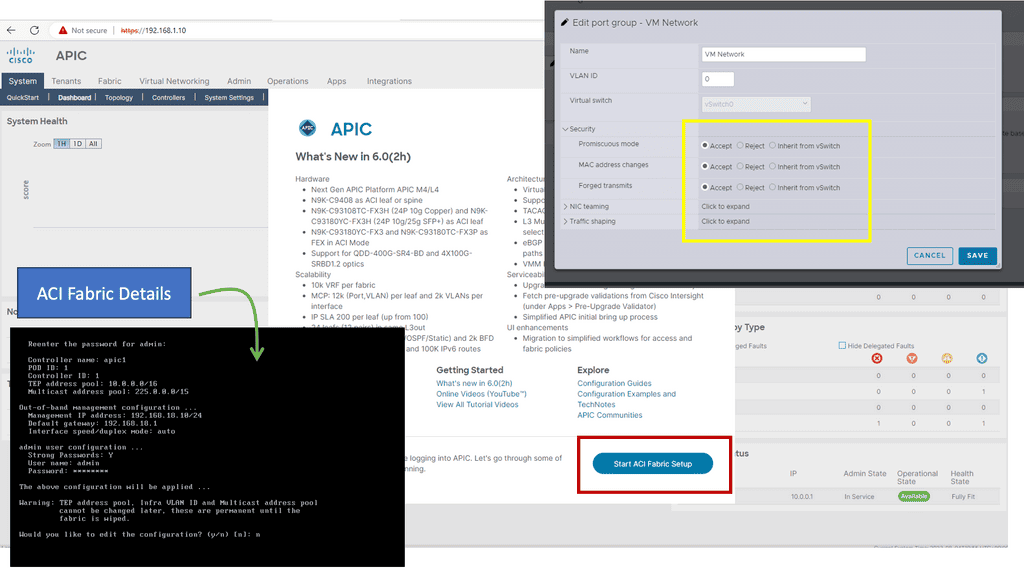

Controller Node: At the heart of OpenContrail lies the Controller Node, which acts as the brain of the network. It is responsible for managing and orchestrating all the network services, including configuration, control, and analytics. Through its intuitive and user-friendly interface, network administrators can easily define and enforce policies, monitor network performance, and troubleshoot issues.

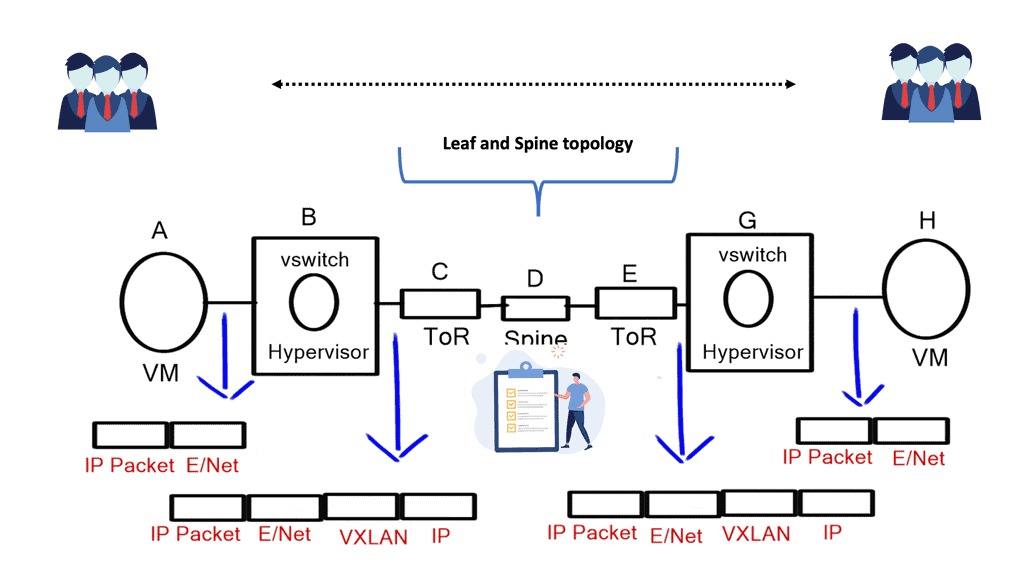

vRouter: The vRouter, short for virtual router, is a critical component of OpenContrail that ensures efficient packet forwarding within the network. By combining the power of virtualization and routing, the vRouter enables seamless communication between virtual machines and physical hosts. It provides advanced networking capabilities, such as firewalling, NAT, and VPN, while ensuring high performance and scalability.

Analytics Node: To gain valuable insights into network behavior and performance, OpenContrail incorporates an Analytics Node. This component collects and analyzes network data, generating comprehensive reports and metrics. Network operators can leverage this information to optimize network utilization, identify bottlenecks, and proactively address potential issues. The Analytics Node plays a crucial role in ensuring the reliability and efficiency of the entire network infrastructure.

Web User Interface: OpenContrail offers a user-friendly Web User Interface (UI) that simplifies network management and configuration. With its intuitive design and powerful functionalities, network administrators can easily define network topologies, set up policies, and monitor network performance in real time. The Web UI provides a centralized platform for managing the entire network infrastructure, making deploying, scaling, and maintaining OpenContrail deployments easier.

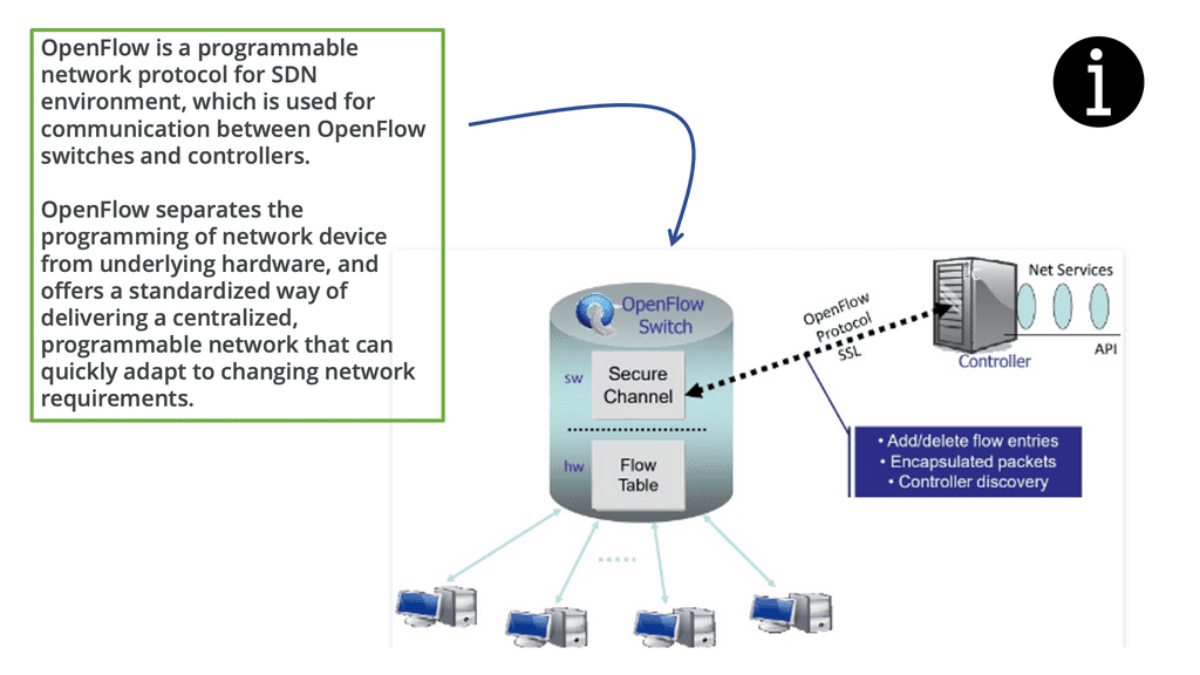

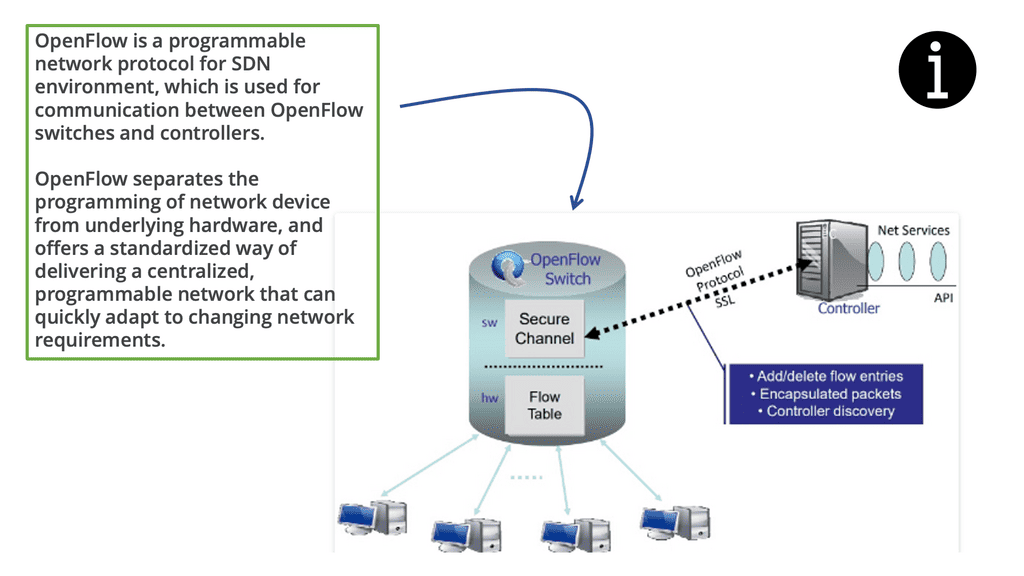

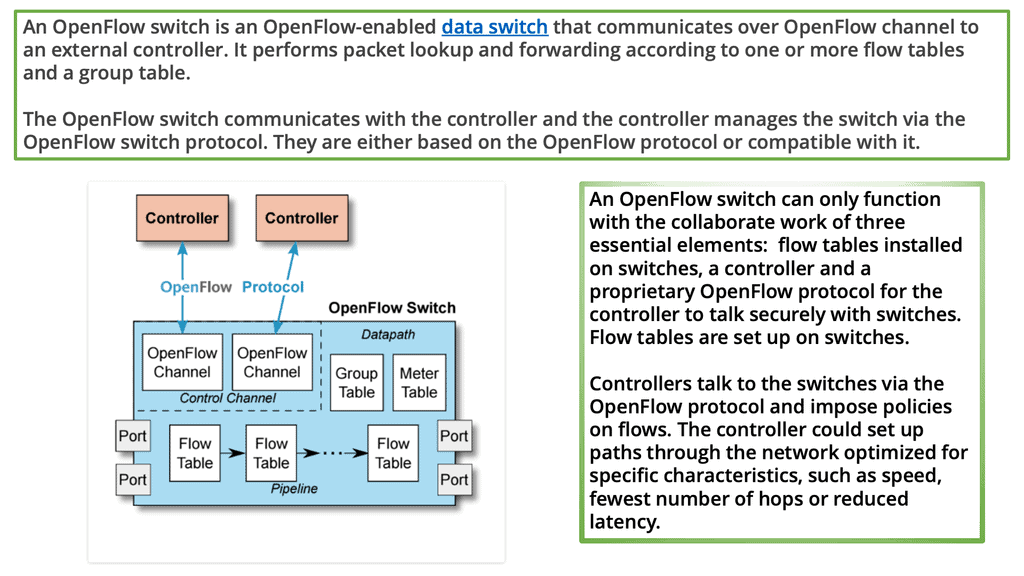

The traditional network vs. SDN network

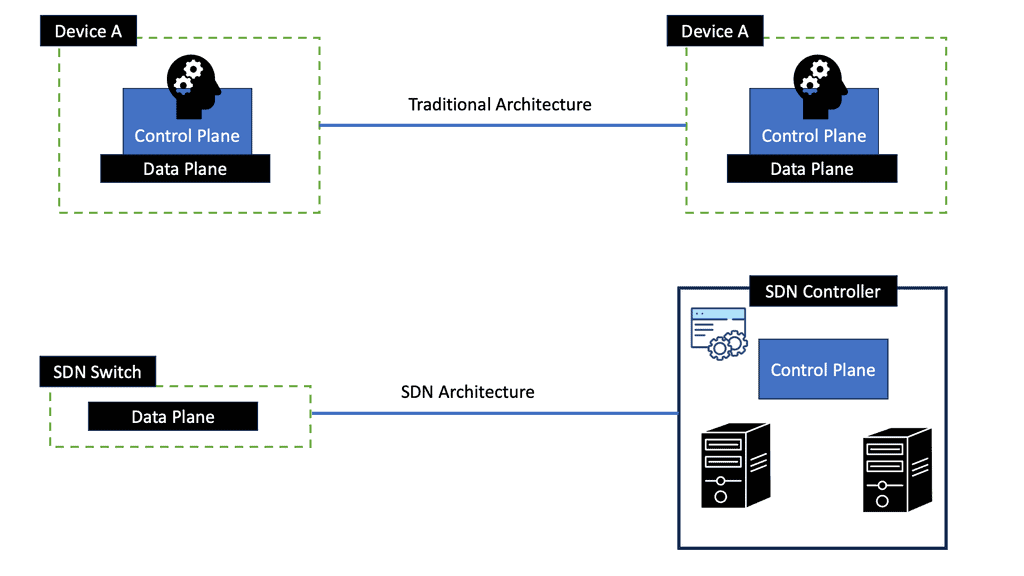

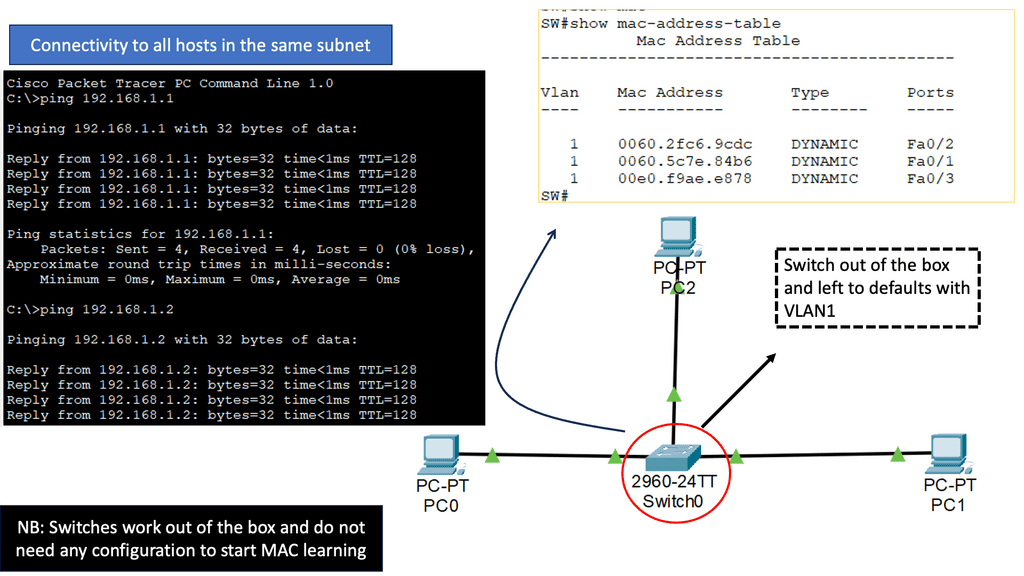

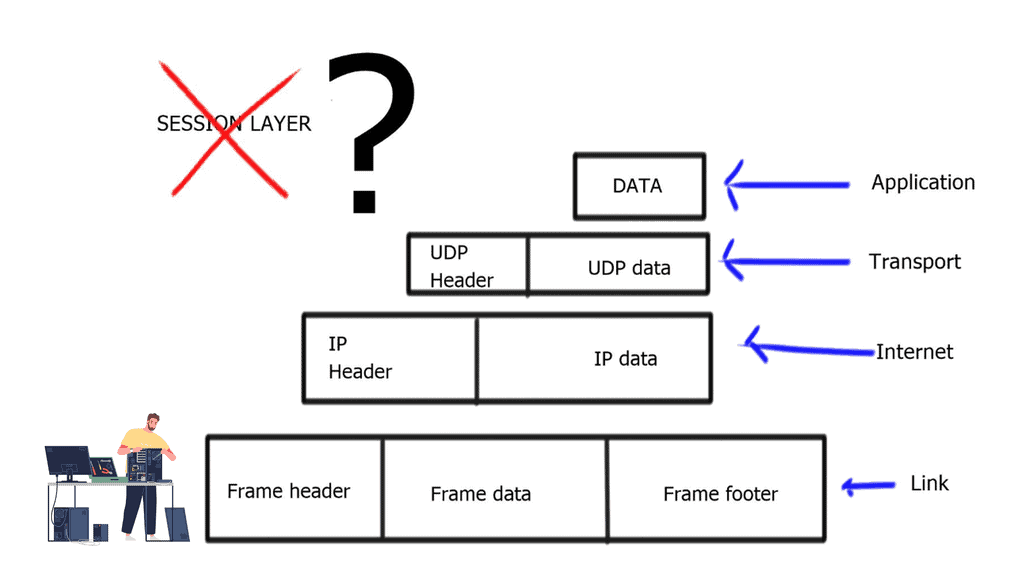

In a traditional network, each switch/router must be programmed individually because applications are loaded on each device. These applications could include a load balancer, intrusion detection, monitoring, or Voice over IP (VoIP). Each switch/router decides where to route packets based on its own local logic as traffic flows through the network. Changing applications or flows in this network means reprogramming each switch/router in turn.

A traditional network includes both a control plane and a forwarding plane. There are also applications loaded on each device, which must be configured separately.

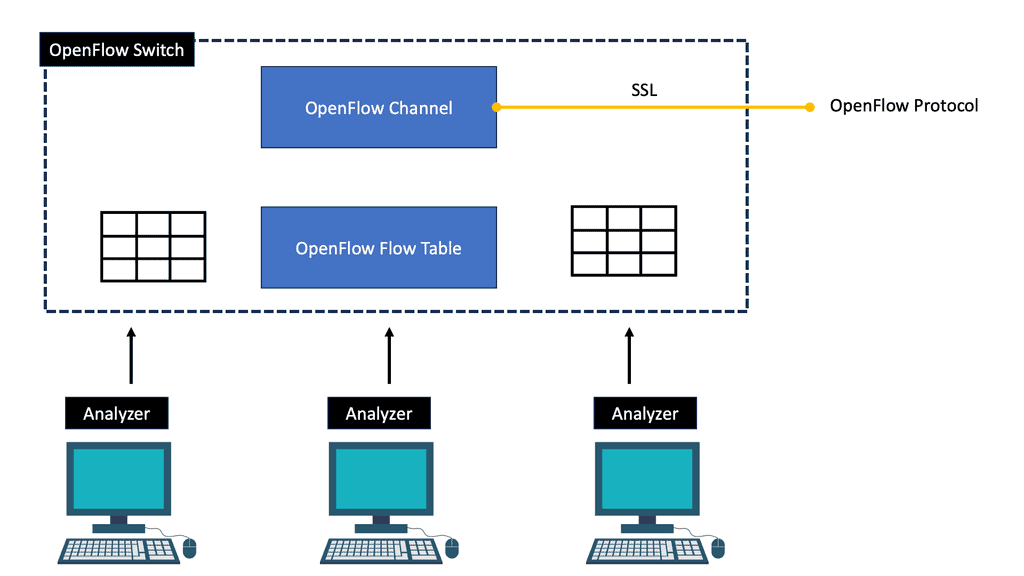

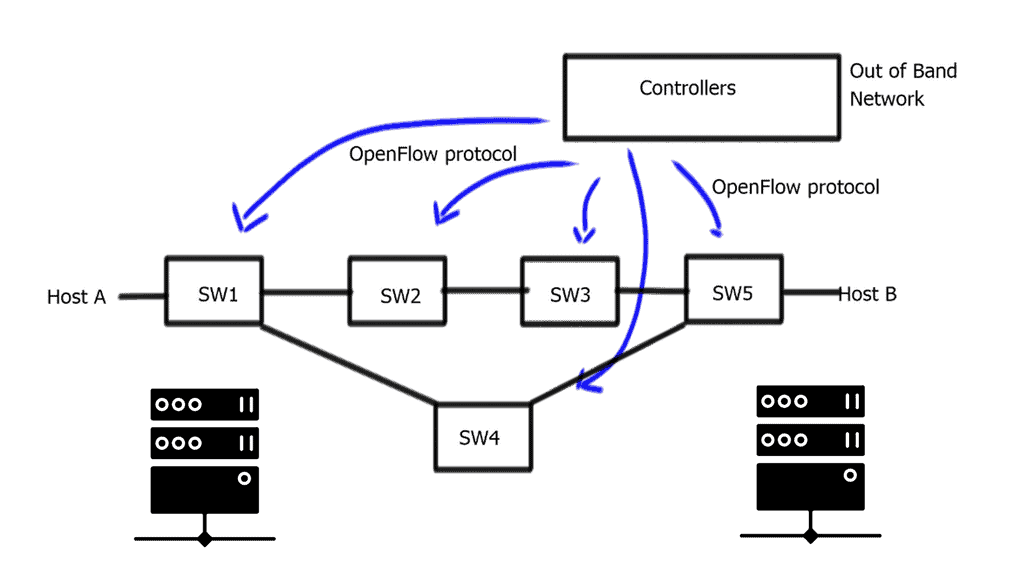

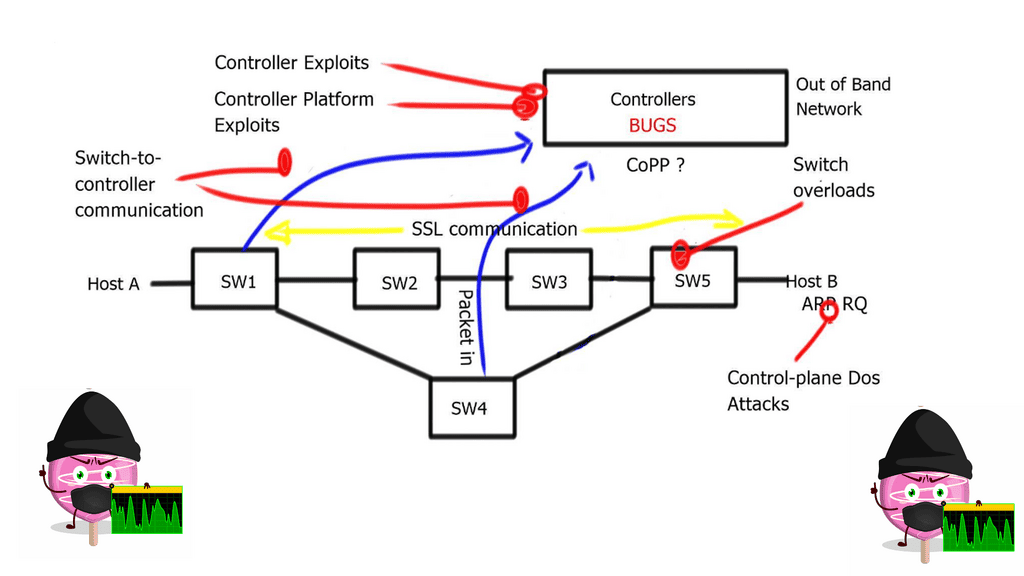

In an SDN network, the switch/router itself carries no applications or intelligence. Centralizing control of all devices makes the network programmable. A controller interfaces with the applications, whose logic is then applied across the network. Traffic flows are supervised by the centralized controller, which distributes and manages a flow table for each switch/router. Flow tables are flexible and can match on several factors.

The flow table also collects statistics, which are fed up to the controller. This improves both visibility and control of the network because issues are immediately reported to the controller, which, in turn, can make immediate adjustments across the entire network.
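The controller and flow-table relationship described above can be sketched in a few lines of Python. This is a minimal illustration, not OpenContrail code: the class names `FlowEntry`, `Switch`, and `Controller`, the match fields, and the action strings are all hypothetical.

```python
from dataclasses import dataclass, field, replace


@dataclass
class FlowEntry:
    """One row of a flow table: match fields, an action, and counters."""
    match: dict          # hypothetical match fields, e.g. {"dst_port": 80}
    action: str          # hypothetical action string, e.g. "forward:port2"
    priority: int = 0
    packets: int = 0     # statistics later read by the controller
    byte_count: int = 0


@dataclass
class Switch:
    """A forwarding element with no local intelligence, only a flow table."""
    name: str
    flow_table: list = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        self.flow_table.append(entry)
        # Highest priority first, so the most important rule is checked first.
        self.flow_table.sort(key=lambda e: e.priority, reverse=True)

    def handle(self, packet: dict) -> str:
        for entry in self.flow_table:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                entry.packets += 1
                entry.byte_count += packet.get("size", 0)
                return entry.action
        return "send_to_controller"  # table miss: ask the controller


class Controller:
    """Central point that programs every switch and reads back statistics."""

    def __init__(self) -> None:
        self.switches = {}

    def register(self, switch: Switch) -> None:
        self.switches[switch.name] = switch

    def push_flow(self, entry: FlowEntry) -> None:
        # Each switch gets its own copy so counters are kept per device.
        for switch in self.switches.values():
            switch.install(replace(entry))

    def collect_stats(self) -> dict:
        return {
            name: [(e.action, e.packets, e.byte_count) for e in sw.flow_table]
            for name, sw in self.switches.items()
        }
```

Changing a flow now means one call to `push_flow` on the controller rather than touching every device, and `collect_stats` gives the controller the per-flow counters it needs to react.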

The role of the VM

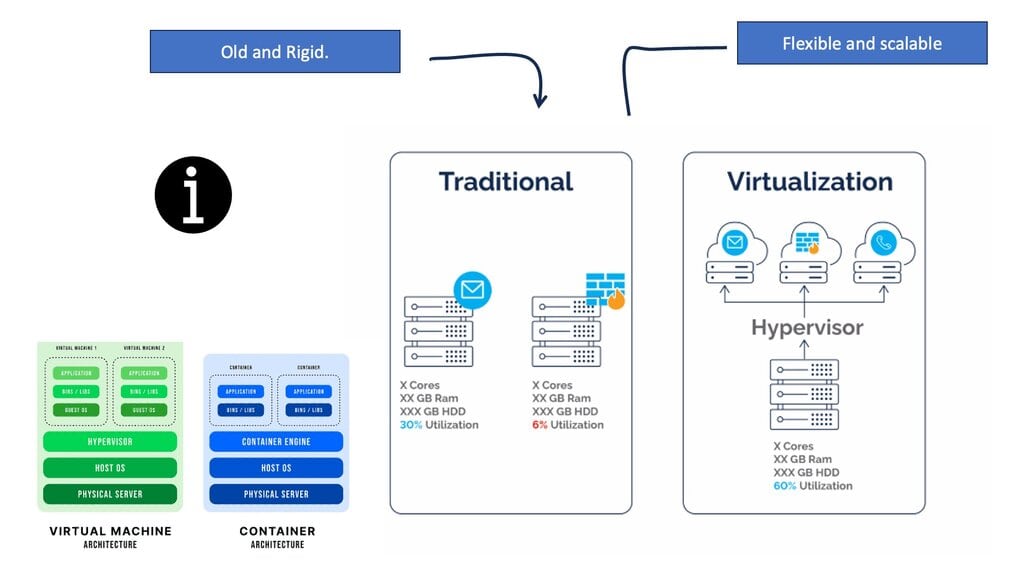

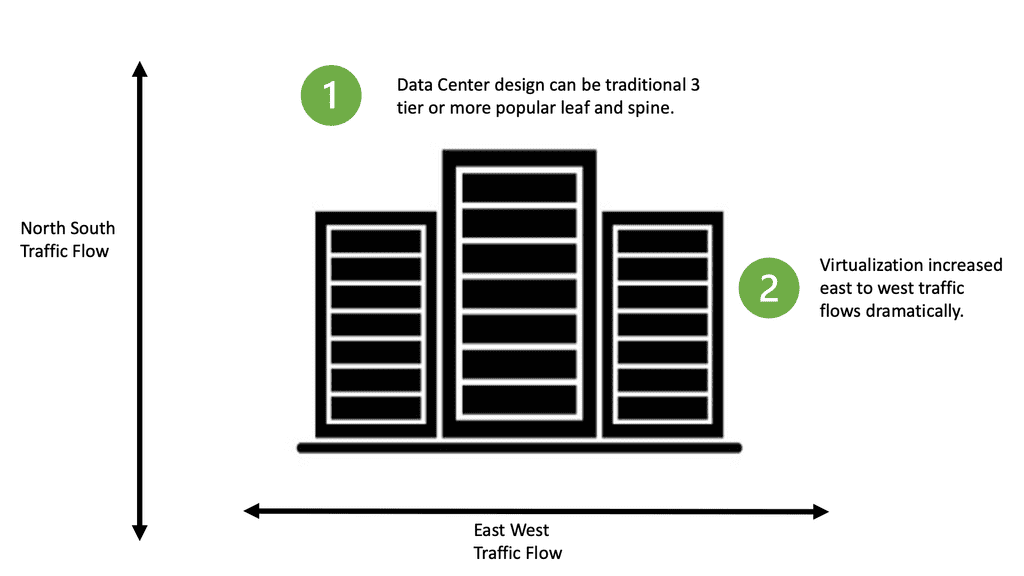

Virtual machines have been around for a long time, but computing workloads are now spread across several other form factors as well. Add Docker containers and bare-metal servers, and networking becomes more interesting. Network challenges arise when all these components require communication within the same subnet, access to Internet gateways, and Layer 3 MPLS/VPNs.

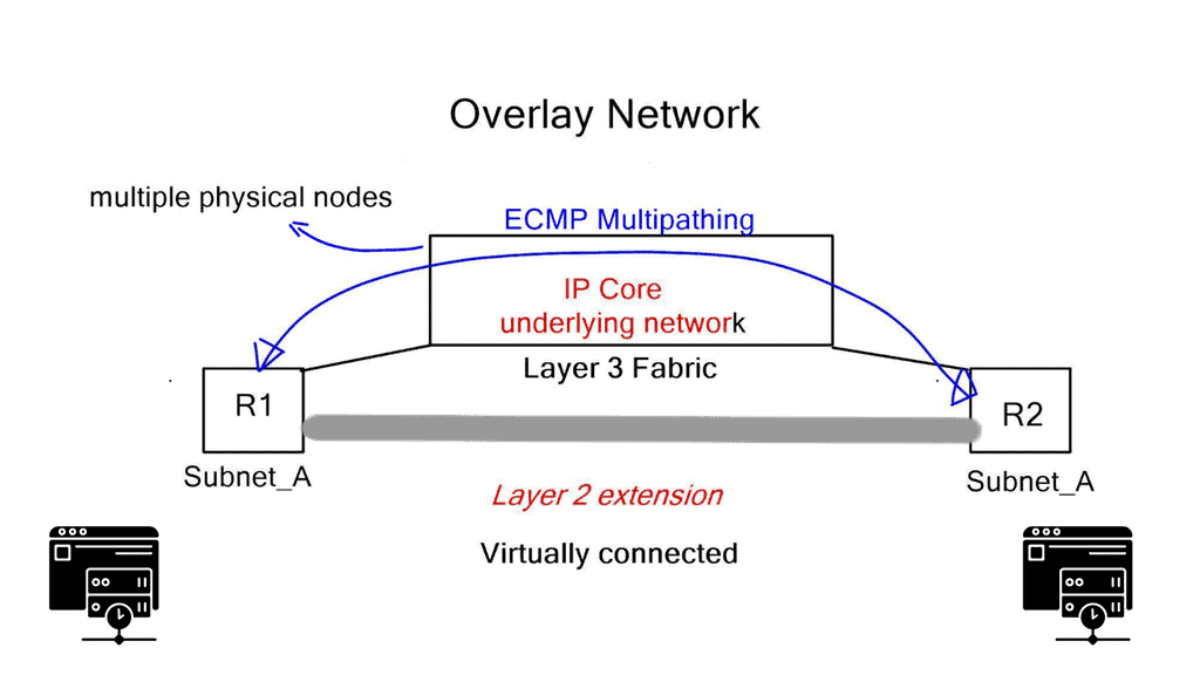

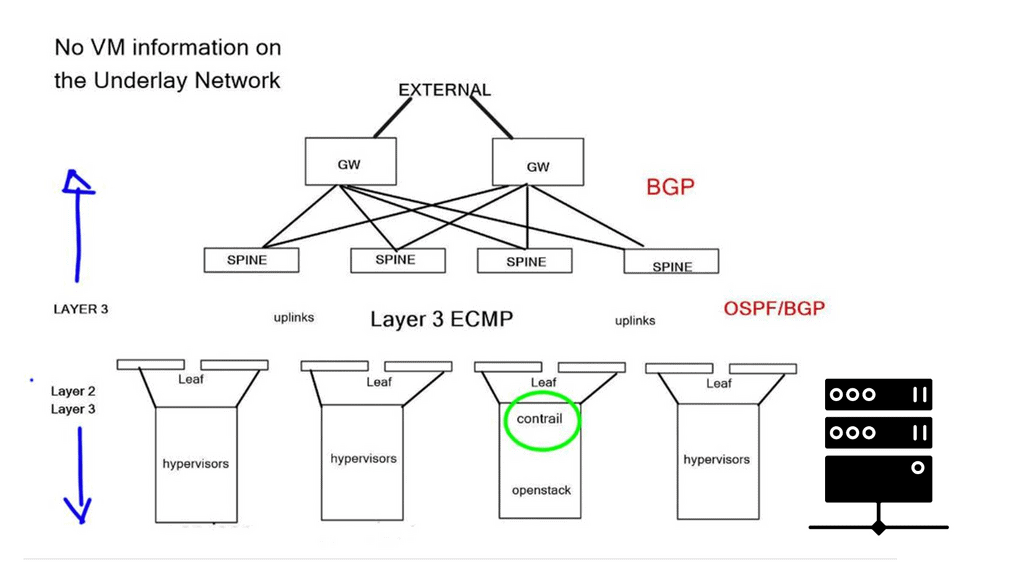

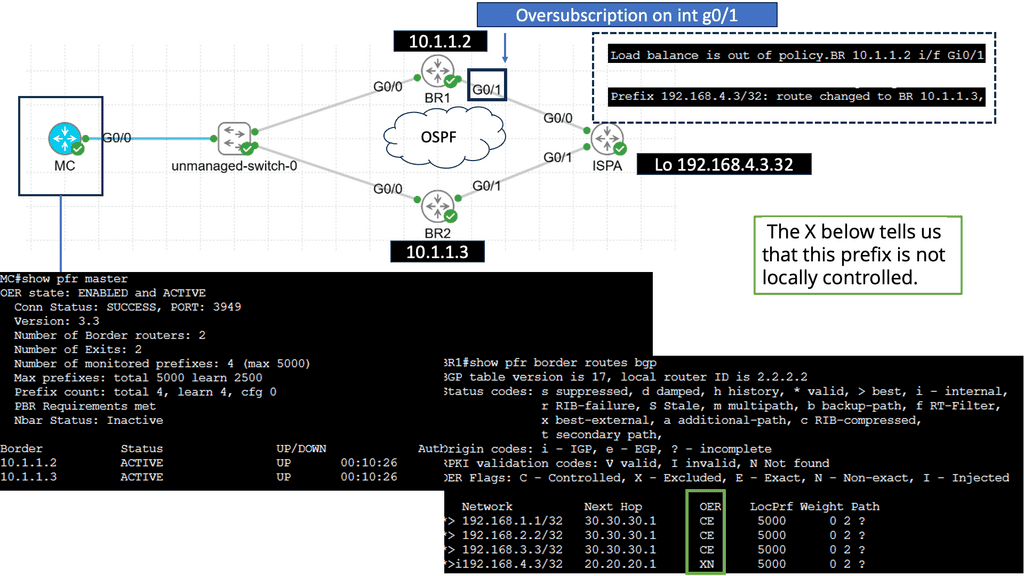

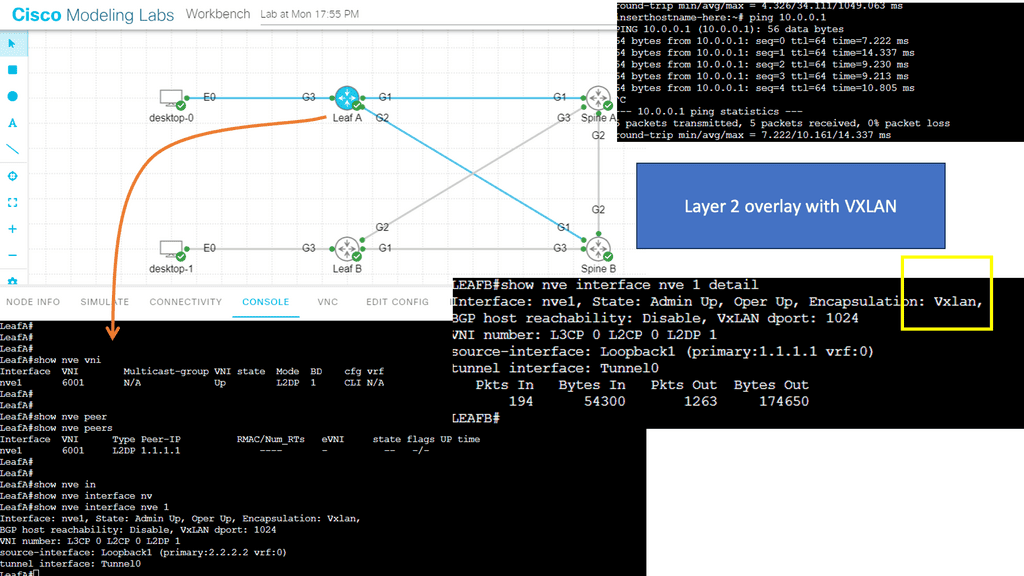

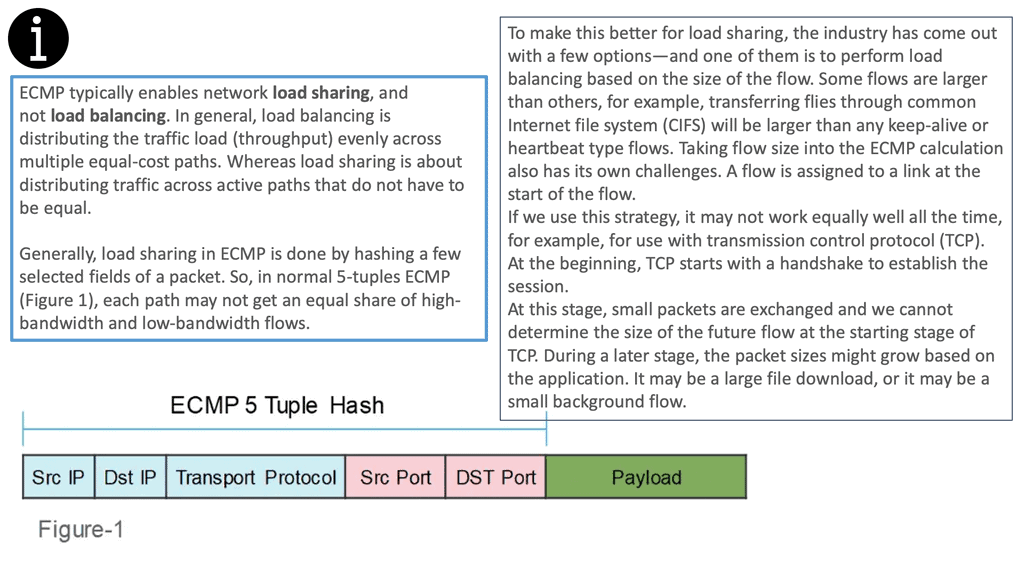

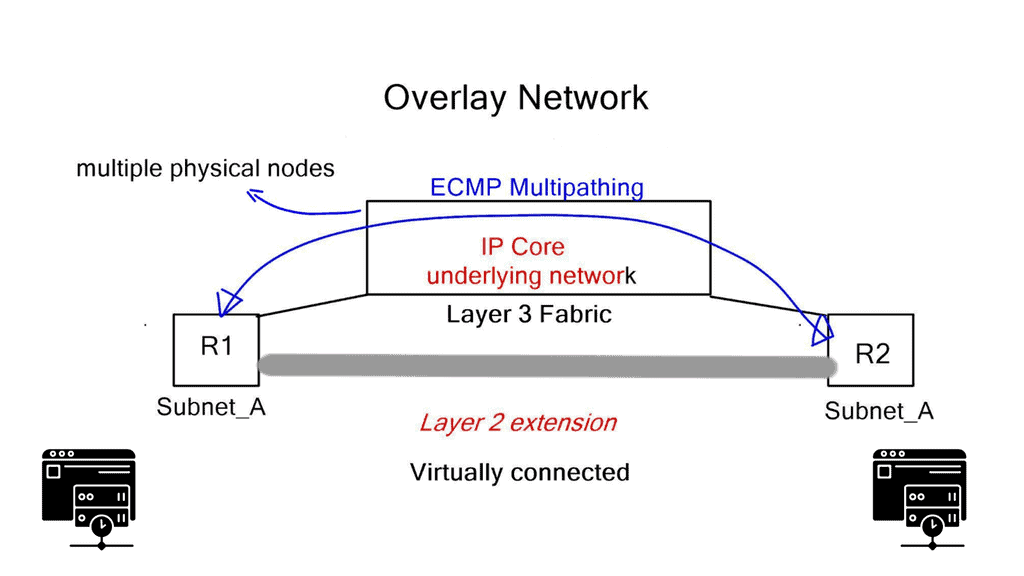

As a result, data center networks are moving towards IP underlay fabrics and Layer 2 overlays. Layer 3 data plane forwarding uses efficient equal-cost multi-path (ECMP) routing, but Layer 2 has no multipathing by default. Now, similar to an SD-WAN overlay approach, we can connect dispersed Layer 2 segments and leverage all the good features of the IP underlay. To provide Layer 2 overlays and network virtualization, Juniper has introduced an SDN platform called OpenContrail, in direct competition with


Highlights: OpenContrail

Key Features and Benefits:

Network Virtualization:

OpenContrail leverages network virtualization techniques to provide isolated virtual networks within a shared physical infrastructure. It offers a logical abstraction layer, enabling the creation of virtual networks that operate independently, complete with their own routing, security, and quality of service policies. This approach allows for the efficient utilization of resources, simplified network management, and improved scalability.

Secure Multi-Tenancy:

OpenContrail’s security features ensure tenants’ data and applications remain isolated and protected from unauthorized access. It employs micro-segmentation to enforce strict access control policies at the virtual machine level, reducing the risk of lateral movement within the network. Additionally, OpenContrail integrates with existing security solutions, enabling the implementation of comprehensive security measures.
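As a rough illustration of micro-segmentation, the sketch below checks traffic between workloads against an explicit allow list and denies everything else. The `Workload` fields, the tier labels, and the rule set are hypothetical and are not taken from OpenContrail’s policy model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    tenant: str
    tier: str  # hypothetical label such as "web", "app", or "db"


# Hypothetical allow list: (source tier, destination tier, destination port).
ALLOW_RULES = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}


def is_allowed(src: Workload, dst: Workload, port: int) -> bool:
    """Default deny: traffic passes only if a rule explicitly permits it."""
    if src.tenant != dst.tenant:
        return False  # tenants stay isolated from each other
    return (src.tier, dst.tier, port) in ALLOW_RULES
```

Because the check is made per workload rather than per subnet, a compromised web server cannot reach the database directly even if both sit in the same tenant network.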

Intelligent Automation:

OpenContrail automates various network provisioning, configuration, and management tasks, reducing manual intervention and minimizing human errors. Its programmable API and centralized control plane simplify the deployment of complex network topologies, accelerate service delivery, and enhance overall operational efficiency.

Scalability and Flexibility:

OpenContrail’s architecture is designed to scale seamlessly, supporting distributed cloud deployments across multiple locations. It offers a highly flexible solution that can adapt to changing network requirements, allowing administrators to dynamically allocate resources, establish new connectivity, and respond to evolving business needs.

OpenContrail in Practice:

OpenContrail has gained significant traction among cloud providers, service providers, and enterprises seeking to build robust, scalable, and secure networks. Its open-source nature has facilitated its adoption, encouraging collaboration, innovation, and customization. OpenContrail’s community-driven development model ensures continuous improvement and the availability of new features and enhancements.

Highlighting Juniper’s OpenContrail

OpenContrail is an open-source network virtualization platform. The commercial controller and the open-source product are identical; they share the same checksum on the binary image. Maintenance and support are the only difference. Juniper open-sourced the product to fit into the open ecosystem, something a closed product could not have done.

OpenContrail offers features similar to VMware NSX, can apply service chaining and high-level security policies, and provides connections to Layer 3 VPNs for WAN integration. OpenContrail works with any hardware, but integration with Juniper’s product sets offers additional rich analytics for the underlay network.

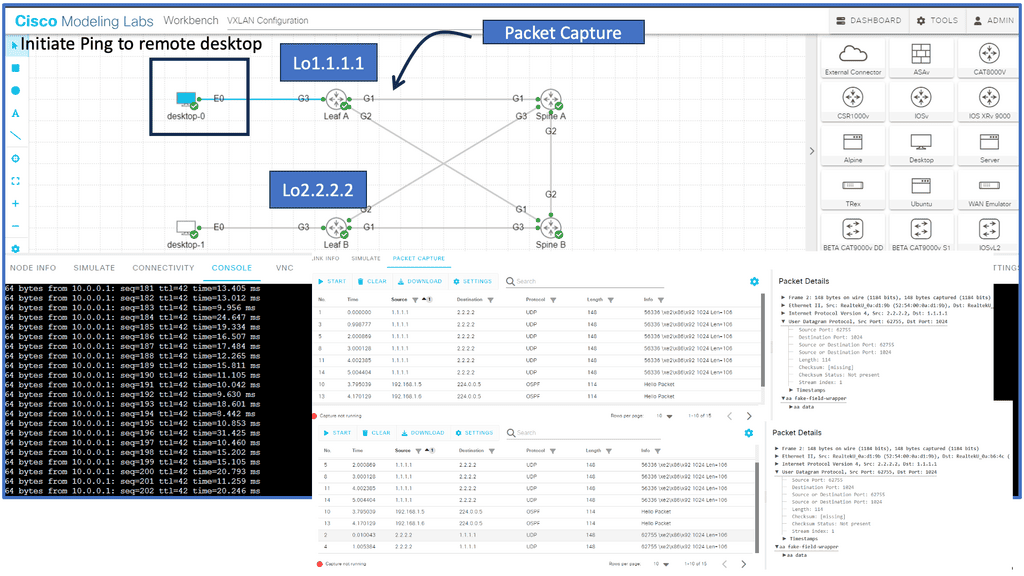

Visibility into both the underlay and the overlay network is essential for troubleshooting. You need to look beyond the first header of the packet and deeper into the tunnel to fully understand what is happening.

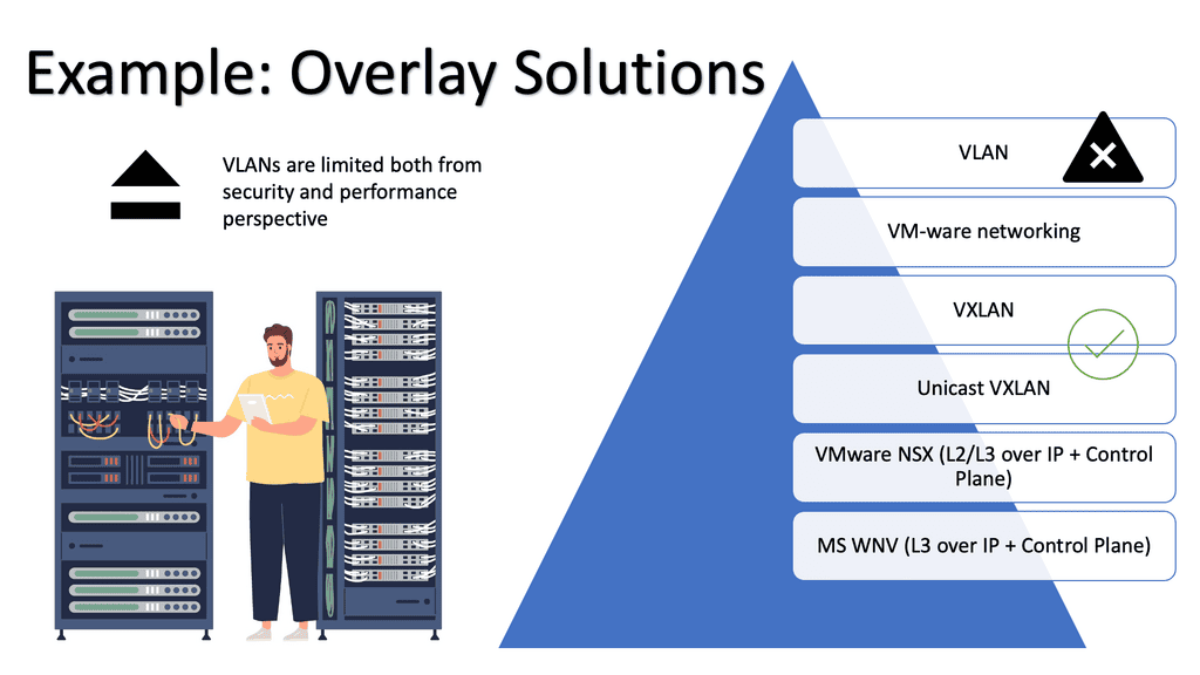

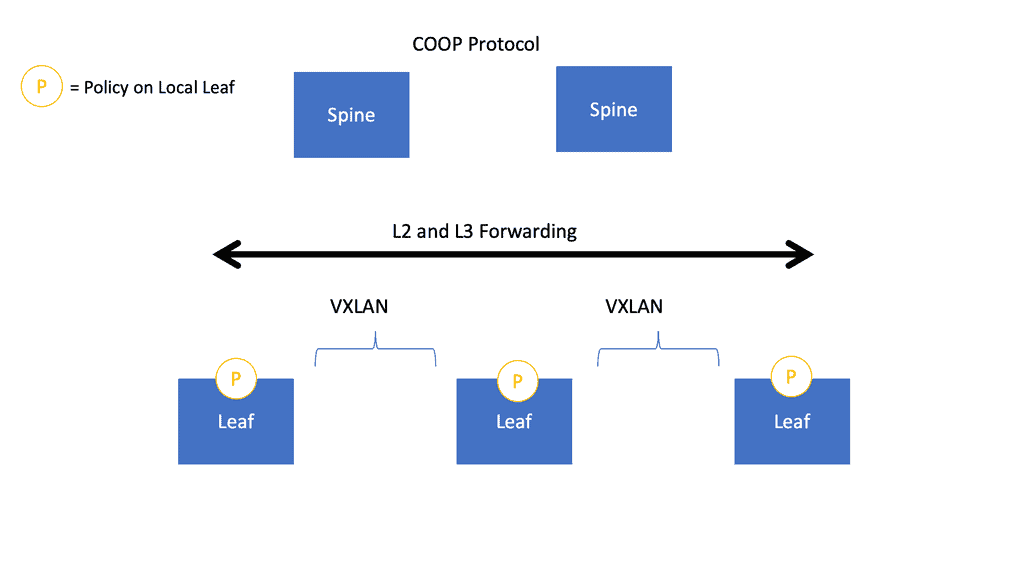

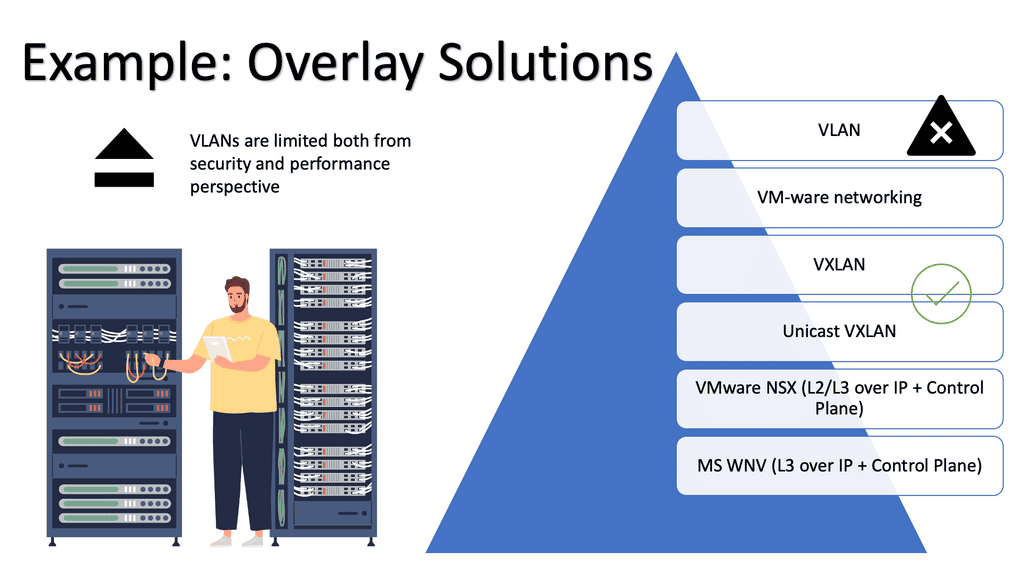

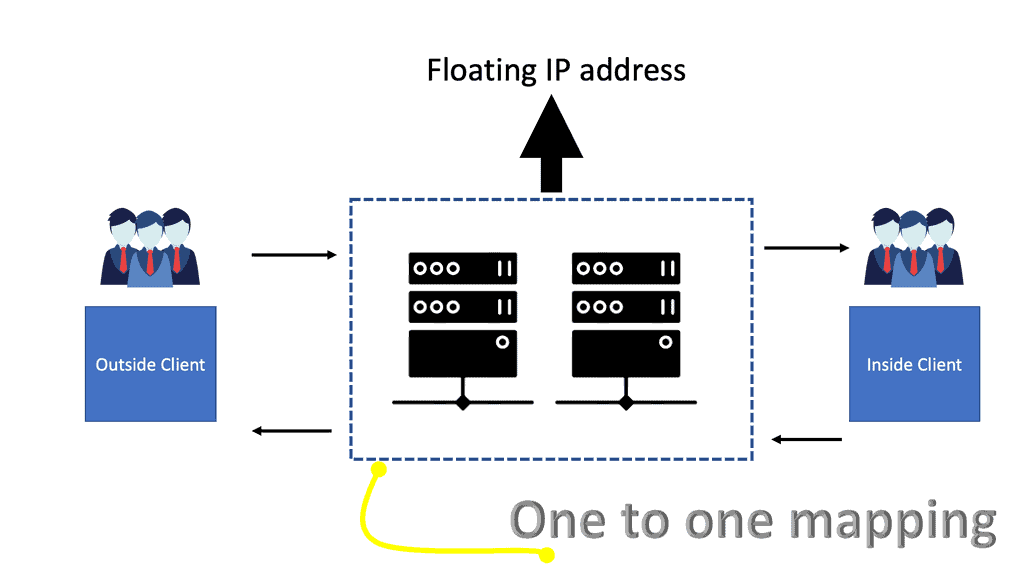

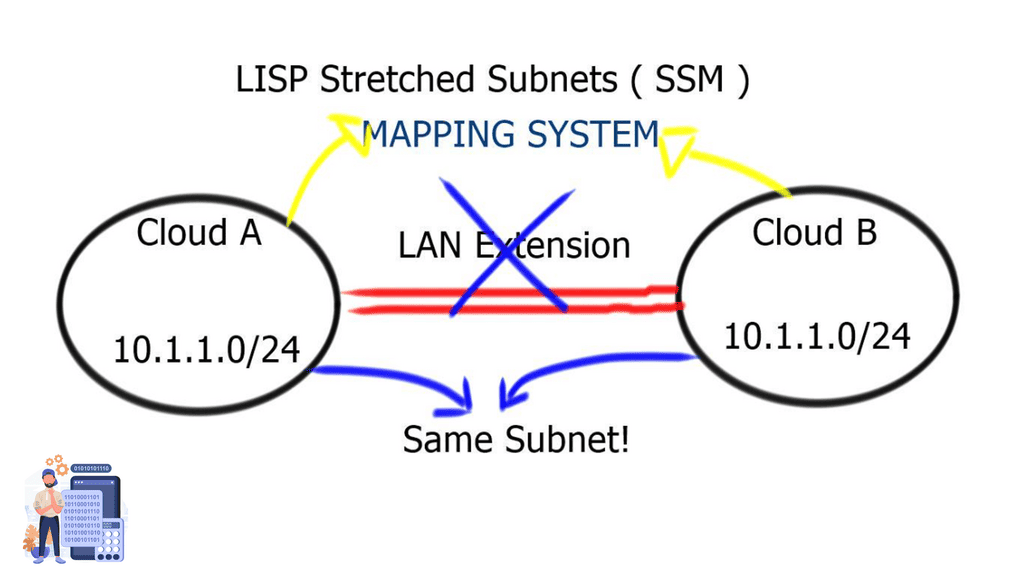

Network virtualization – Isolated networks

With a cloud architecture, network virtualization gives the illusion that each tenant has a separate isolated network. Virtual networks are independent of physical network location or state, and nodes within the physical underlay can fail without disrupting the overlay tenant. A tenant may be a customer or a department, depending on whether it’s a public or private cloud.

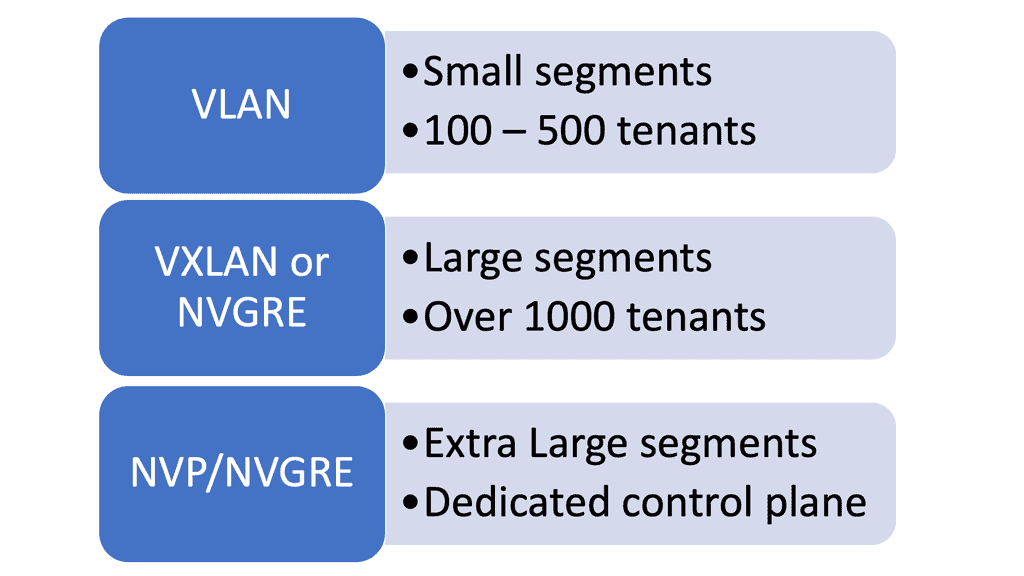

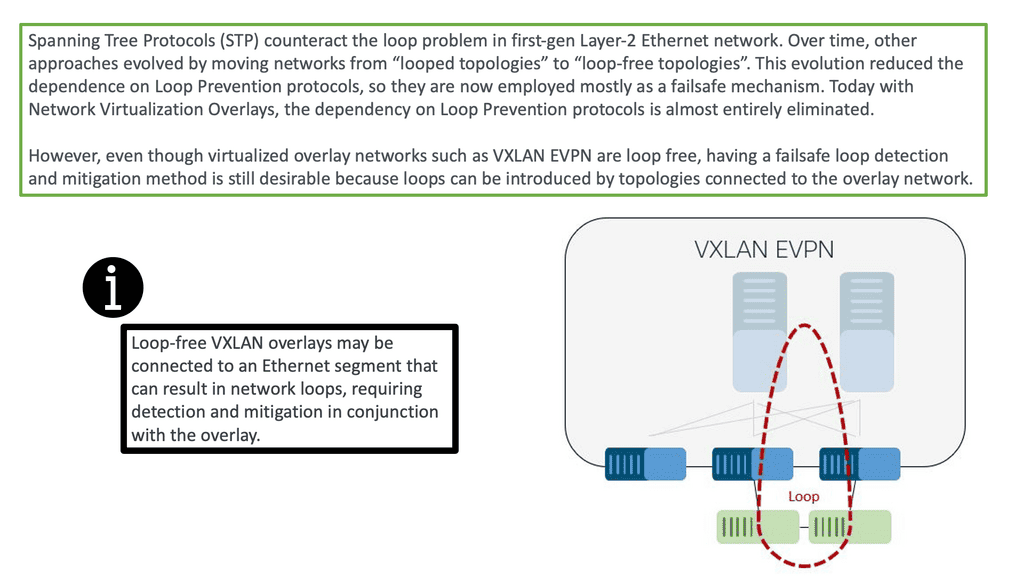

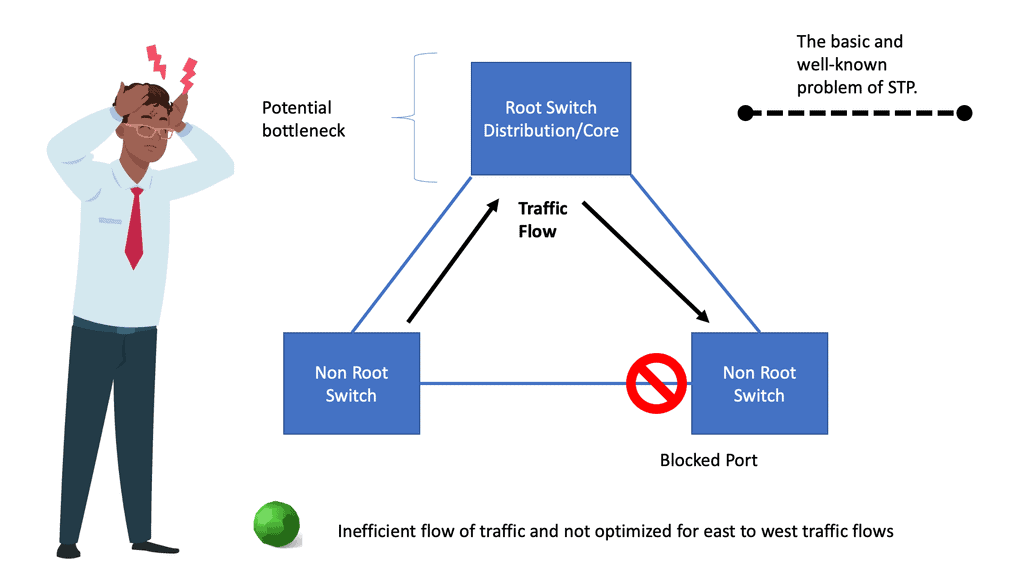

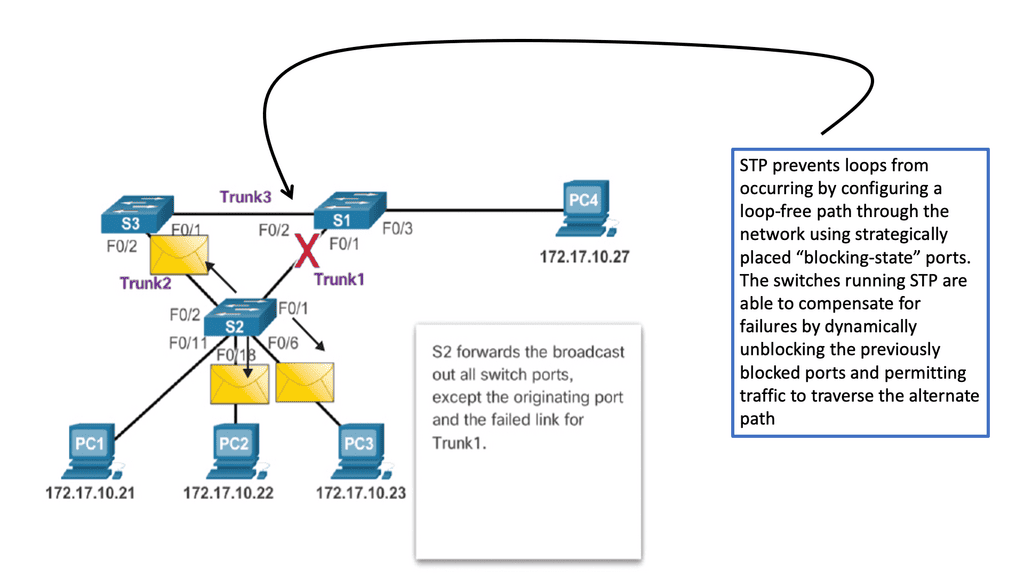

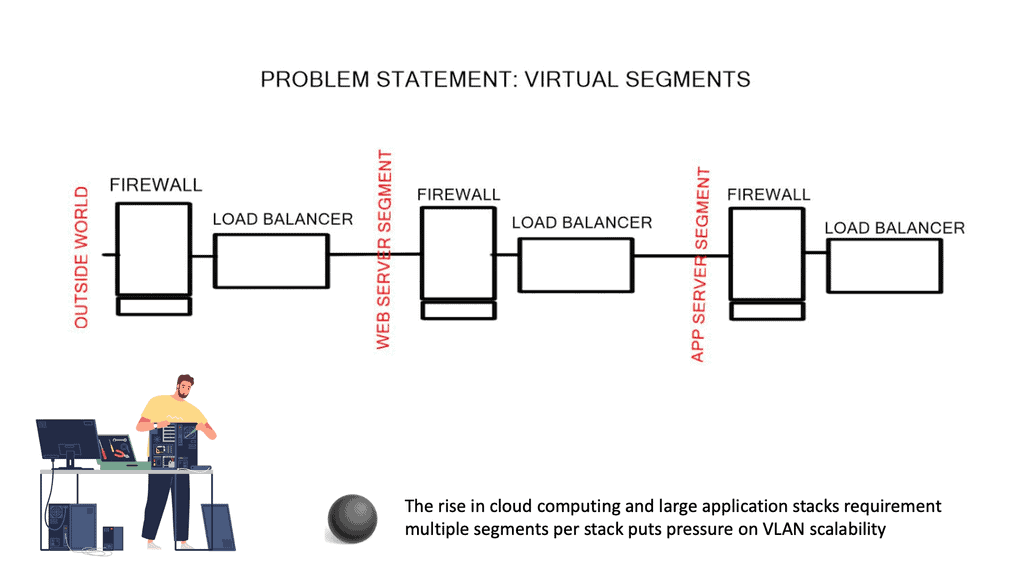

The virtual network sits on top of a physical network, the same way the compute virtual machines sit on top of a physical server. Virtual networks are not created with VLANs; Contrail uses a virtual overlay network system for multi-tenancy and cross-tenant communication. Many problems exist with large-scale VLAN deployments for multi-tenancy in today’s networks.

VLANs introduce a lot of state into the physical network, and the Spanning Tree Protocol (STP) brings its own well-documented problems. There are technologies (TRILL, SPB) to overcome these challenges, but they add complexity to the design of the network.

Service Chaining

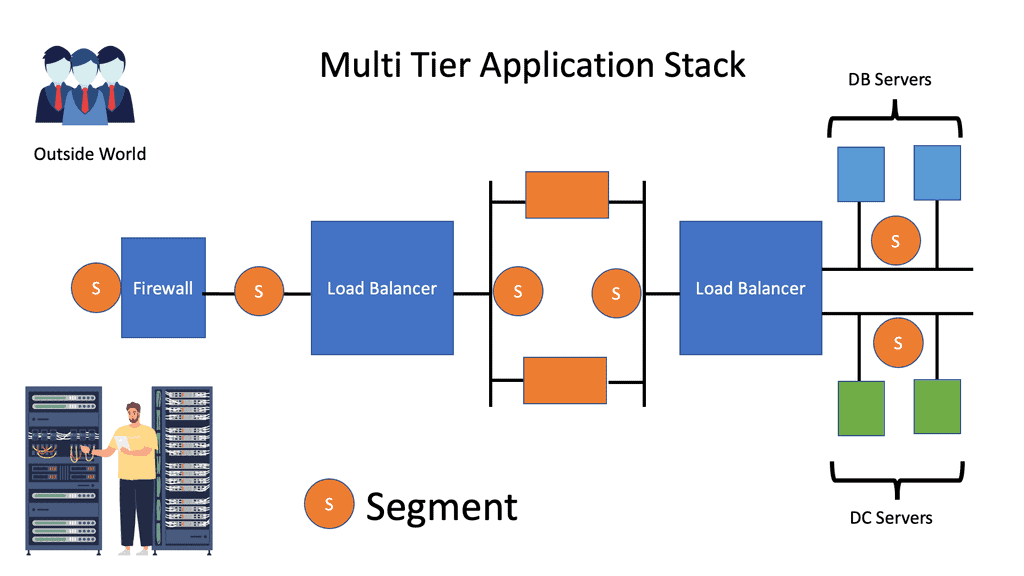

Customers require the ability to apply policy at virtual network boundaries. Policies may include ACLs and stateless firewalls provided within the virtual switch. However, once you require more complicated policy between virtual networks, you need a more sophisticated form of policy control and orchestration called service chaining. Service chaining inserts intelligent services into the traffic path between one tenant and another.

For example, if a customer requires content caching and stateful services, you must introduce additional service appliances and force next-hop traffic through these appliances. Once you deploy a virtual appliance, you need a scale-out architecture.
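Forcing next-hop traffic through a sequence of appliances can be pictured as an ordered list of hops. The sketch below is a simplified model with hypothetical function names and service names; it is not how OpenContrail represents a chain internally.

```python
def build_chain_hops(src_vn: str, dst_vn: str, services: list) -> list:
    """Return the ordered (from, to) hops for traffic from src_vn to dst_vn."""
    stops = [src_vn, *services, dst_vn]
    return list(zip(stops, stops[1:]))


def next_hop(current: str, hops: list) -> str:
    """Look up where traffic leaving `current` must be sent next."""
    for origin, target in hops:
        if origin == current:
            return target
    raise KeyError(f"{current} is not part of this chain")


# Hypothetical chain: a stateful firewall followed by a content cache.
hops = build_chain_hops("vn-red", "vn-blue", ["firewall", "cache"])
```

With an empty service list the same function degenerates to a direct hop between the two virtual networks, which is the case a simple ACL in the virtual switch already covers.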

The ability to scale out

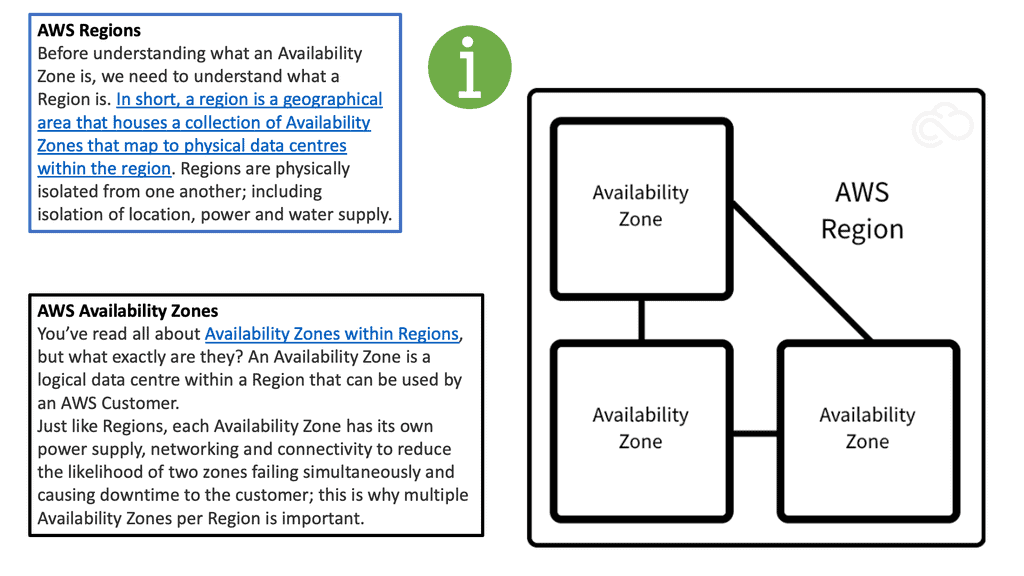

Scale-out is the ability to instantiate multiple physical and virtual machine instances and load balance traffic across them. Customers may also require the ability to connect with different tenants in dispersed geographic locations or to workloads in a remote private or public cloud. Usually, people build a private cloud for normal load and then burst into a public cloud when demand peaks.
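One common way to load balance across scaled-out instances is to hash each flow’s identifying fields so that every packet of a flow lands on the same instance. The sketch below assumes a 5-tuple flow key and hypothetical instance names; the source does not say which balancing method Contrail uses.

```python
import hashlib


def pick_instance(flow: tuple, instances: list) -> str:
    """Map a flow (e.g. its 5-tuple) to one instance, stable per flow."""
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(instances)
    return instances[index]


# Hypothetical pool of three firewall instances.
instances = ["fw-1", "fw-2", "fw-3"]
flow = ("10.0.0.5", "10.0.1.9", 6, 49152, 443)  # src, dst, proto, sport, dport
chosen = pick_instance(flow, instances)
```

Hashing keeps stateful appliances consistent for a given flow, but a plain modulo remaps many flows when the pool size changes; consistent hashing is the usual refinement if that matters.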

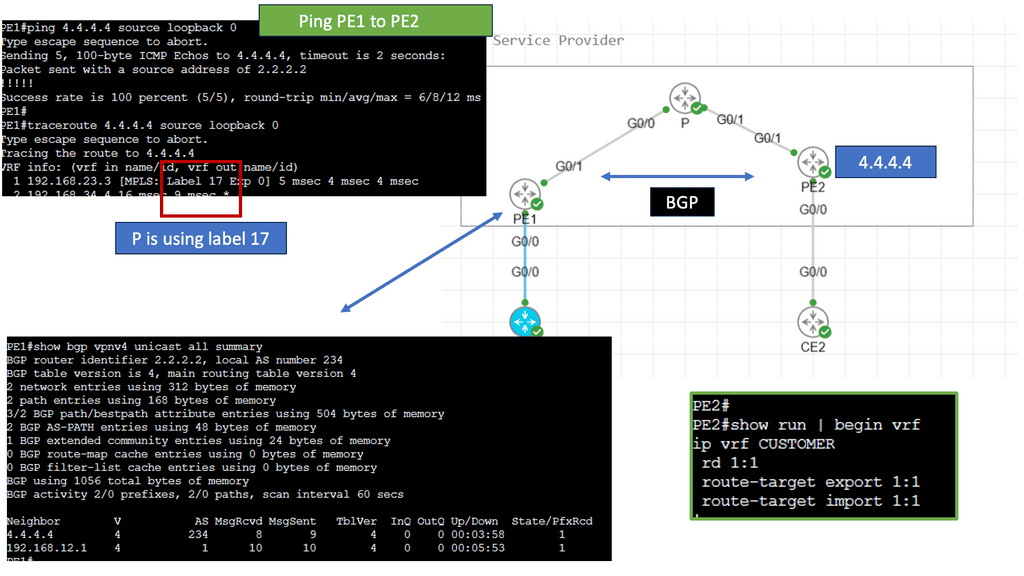

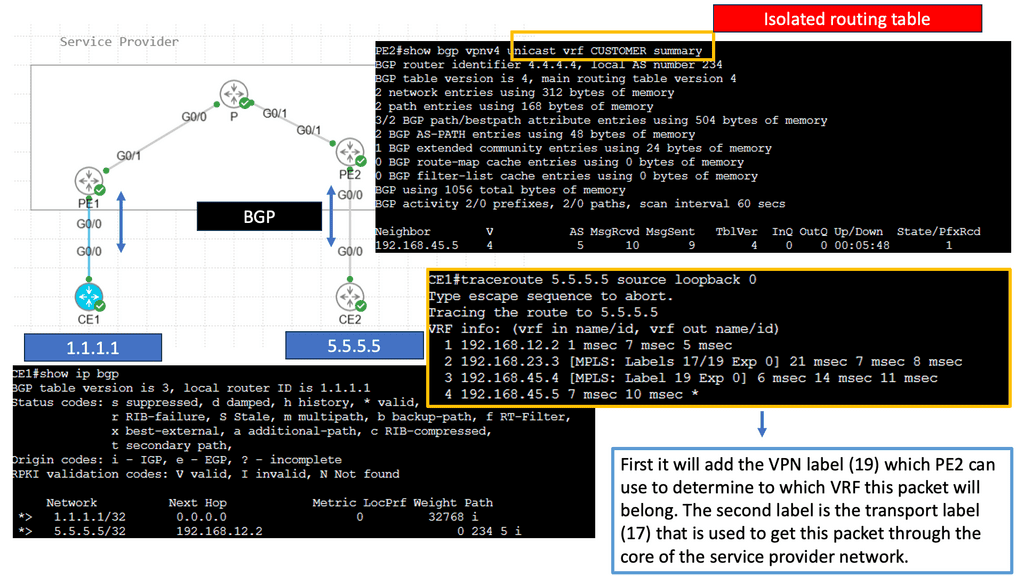

Juniper has implemented a virtual networking architecture that meets these requirements. It is based on a well-known technology, MPLS Layer 3 VPN, which is the basis of Juniper’s designs.

Virtual Network Implementation

A – MPLS Overlay

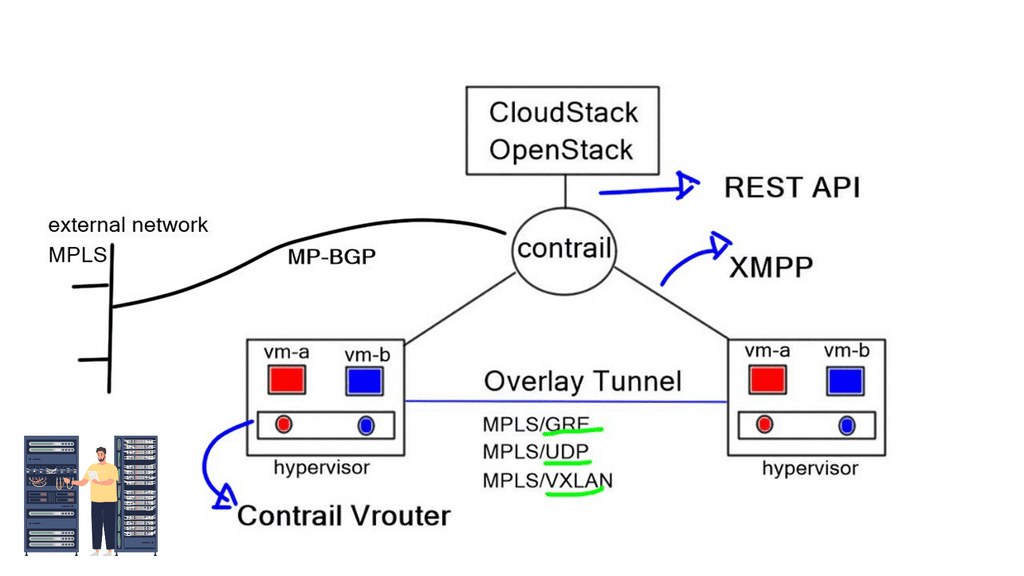

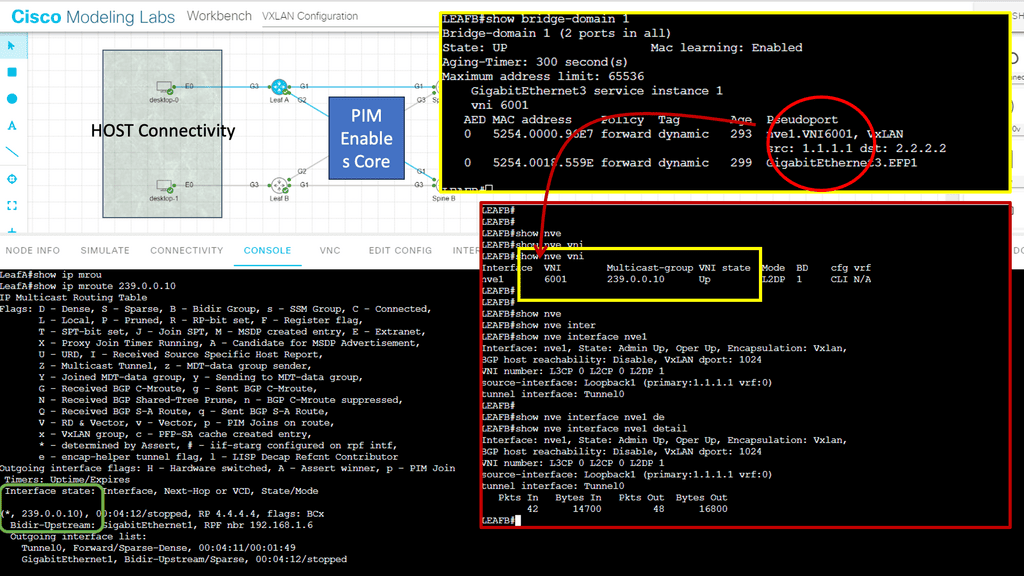

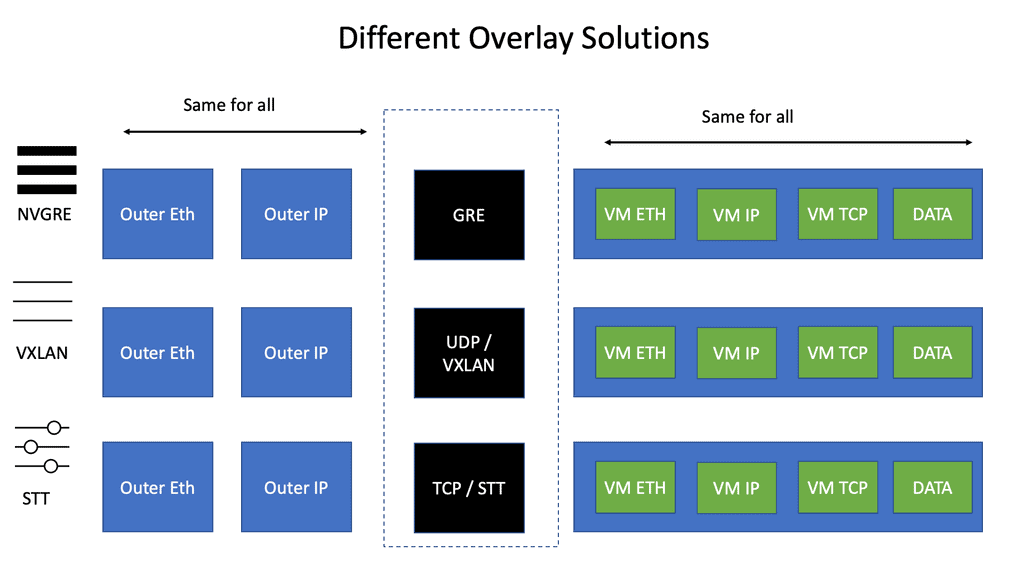

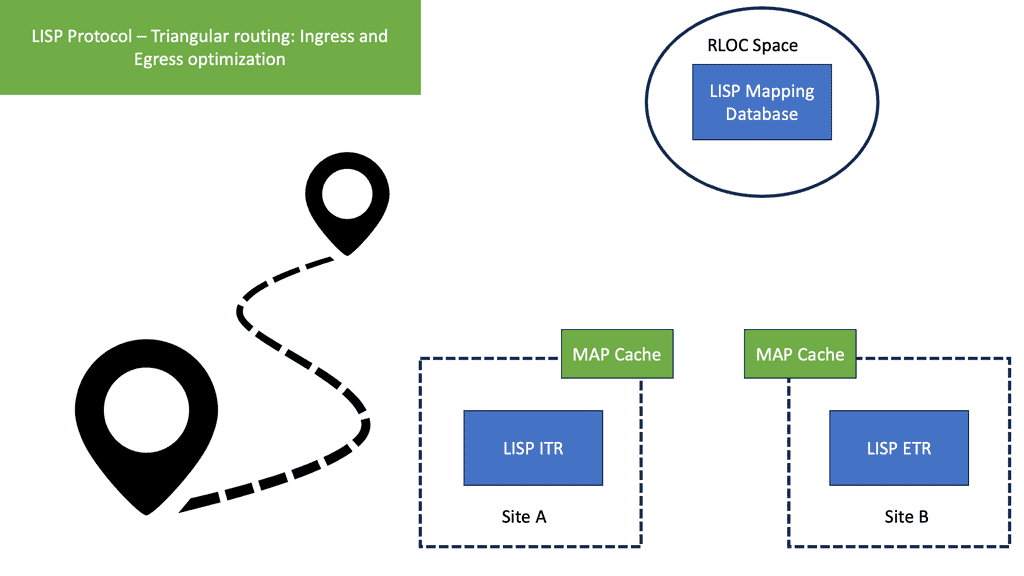

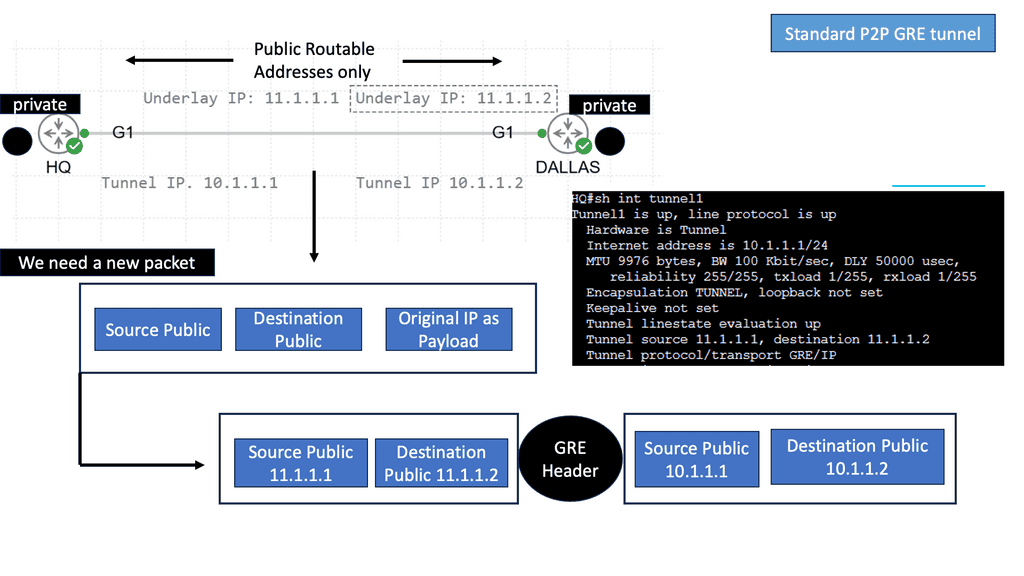

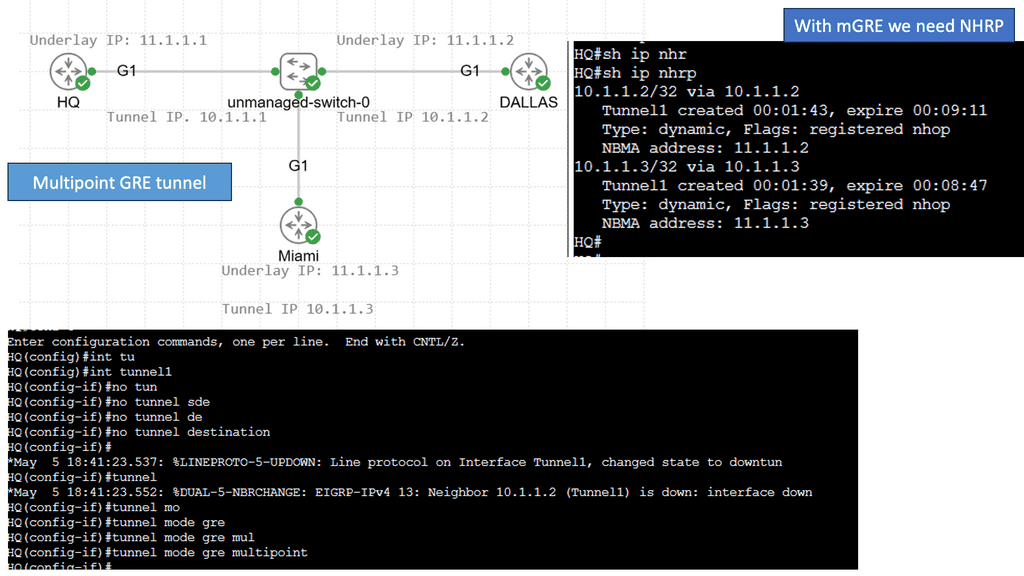

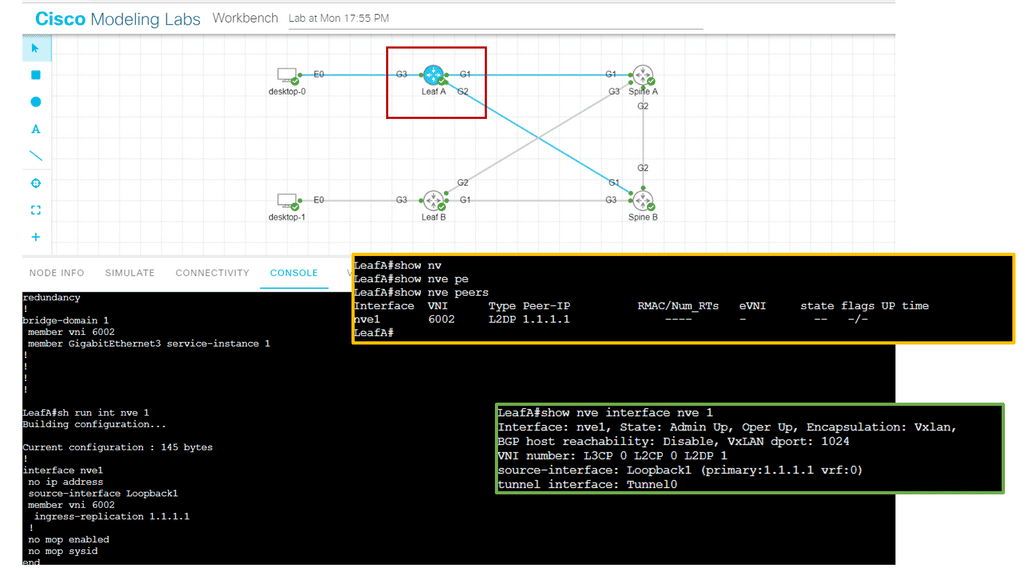

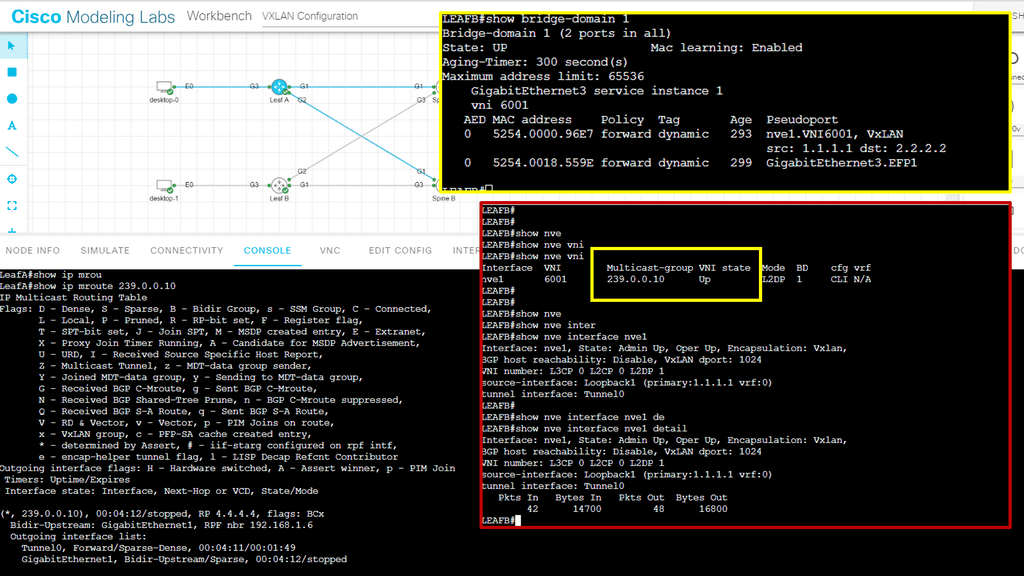

The SDN controller is responsible for the networking aspects of virtualization. To create a virtual network, you call the Northbound API and issue an instruction that attaches the VM to the VN. The networking responsibilities are delegated from CloudStack or OpenStack to Contrail. The Contrail SDN controller automatically creates the overlay tunnel between virtual machines. The overlay can use MPLS-over-GRE, MPLS-over-UDP, or VXLAN encapsulation.

L3VPN for routed traffic and EVPN for bridged traffic

Juniper’s OpenContrail is still a pure MPLS/VPN overlay, using L3VPN for routed traffic and EVPN for bridged traffic. Traffic forwarded between end nodes carries one MPLS label (the VPN label), and several encapsulation methods are available to carry the labeled traffic across the IP fabric. As mentioned above, these include MPLS-over-GRE, a traditional encapsulation mechanism, and MPLS-over-UDP, a variation of MPLS-over-GRE that replaces the GRE header with a UDP header. MPLS-over-VXLAN uses the VXLAN packet format but stores the MPLS label in the VXLAN Network Identifier (VNI) field.
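To make the header layouts concrete, the sketch below packs a single MPLS label stack entry (20-bit label, 3-bit traffic class, bottom-of-stack bit, 8-bit TTL) and an 8-byte VXLAN header with a 24-bit VNI. The function names are hypothetical, and outer IP, GRE, and UDP headers are left out for brevity.

```python
import struct


def mpls_label_entry(label: int, tc: int = 0, bottom: bool = True, ttl: int = 64) -> bytes:
    """Pack one 32-bit MPLS label stack entry."""
    if not 0 <= label < 2**20:
        raise ValueError("MPLS label must fit in 20 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)


def parse_mpls_label(entry: bytes) -> int:
    """Extract the 20-bit label from a packed label stack entry."""
    (word,) = struct.unpack("!I", entry)
    return word >> 12


def vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header carrying `vni`."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    flags = 0x08  # I flag set: the VNI field is valid
    return struct.pack("!II", flags << 24, vni << 8)


vpn_label = 299776                    # hypothetical VPN label value
gre_or_udp_payload_prefix = mpls_label_entry(vpn_label)
vxlan_prefix = vxlan_header(vpn_label)  # label carried in the VNI field
```

A 20-bit MPLS label always fits inside the 24-bit VNI field, which is what makes the VXLAN variant possible without changing the packet format.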

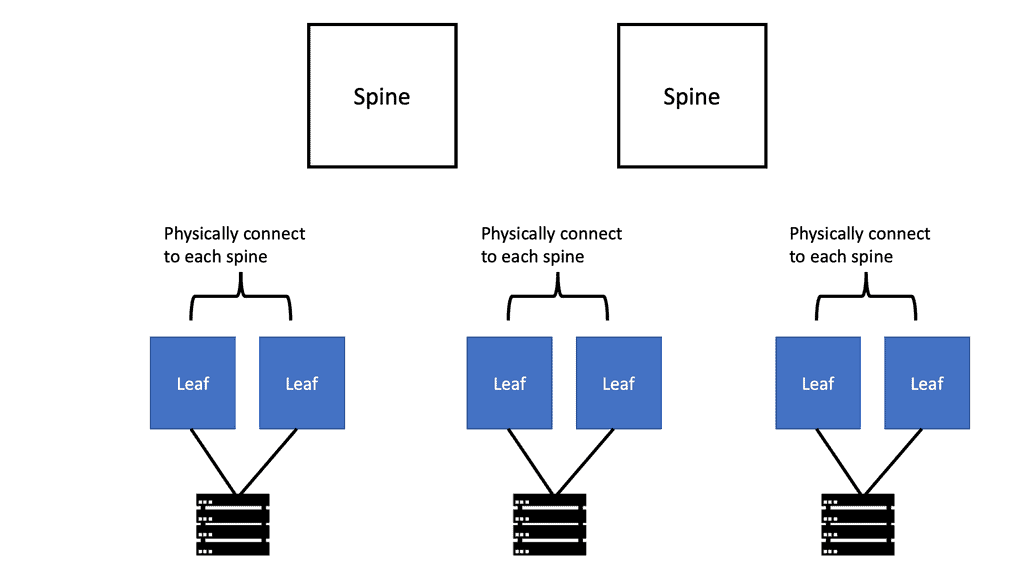

B – The forwarding plane

The forwarding plane takes the packet from the VM and hands it to the vRouter, which does a lookup and determines whether the destination is on a remote host. If it is, the vRouter encapsulates the packet and sends it across the tunnel. The underlay that sits between the workloads forwards based on the tunnel source and destination only.

The underlay holds no state belonging to end hosts: no VM, MAC address, or IP state. This type of architecture gives the core a cleaner and more precise role. As a general best practice, keeping state in the core is poor design.
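The lookup-then-encapsulate step can be sketched as a per-virtual-network routing table with longest-prefix match. The `VRouter` class below is an illustrative model with hypothetical names and return values, not the actual vRouter implementation.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Route:
    prefix: ipaddress.IPv4Network
    next_hop: str  # "local", or the underlay IP of the remote host
    label: int     # VPN label carried inside the tunnel


class VRouter:
    def __init__(self, underlay_ip: str) -> None:
        self.underlay_ip = underlay_ip
        self.vrfs = {}  # one routing table per virtual network

    def add_route(self, vn: str, prefix: str, next_hop: str, label: int) -> None:
        route = Route(ipaddress.ip_network(prefix), next_hop, label)
        self.vrfs.setdefault(vn, []).append(route)

    def forward(self, vn: str, dst_ip: str) -> dict:
        dst = ipaddress.ip_address(dst_ip)
        matches = [r for r in self.vrfs.get(vn, []) if dst in r.prefix]
        if not matches:
            return {"action": "drop"}
        best = max(matches, key=lambda r: r.prefix.prefixlen)  # longest match
        if best.next_hop == "local":
            return {"action": "deliver_local", "label": best.label}
        # Remote destination: the underlay only ever sees these outer addresses.
        return {
            "action": "encapsulate",
            "outer_src": self.underlay_ip,
            "outer_dst": best.next_hop,
            "label": best.label,
        }
```

Because each virtual network has its own table, two tenants can use the same address range, and the fabric between hosts never learns either tenant’s addresses.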

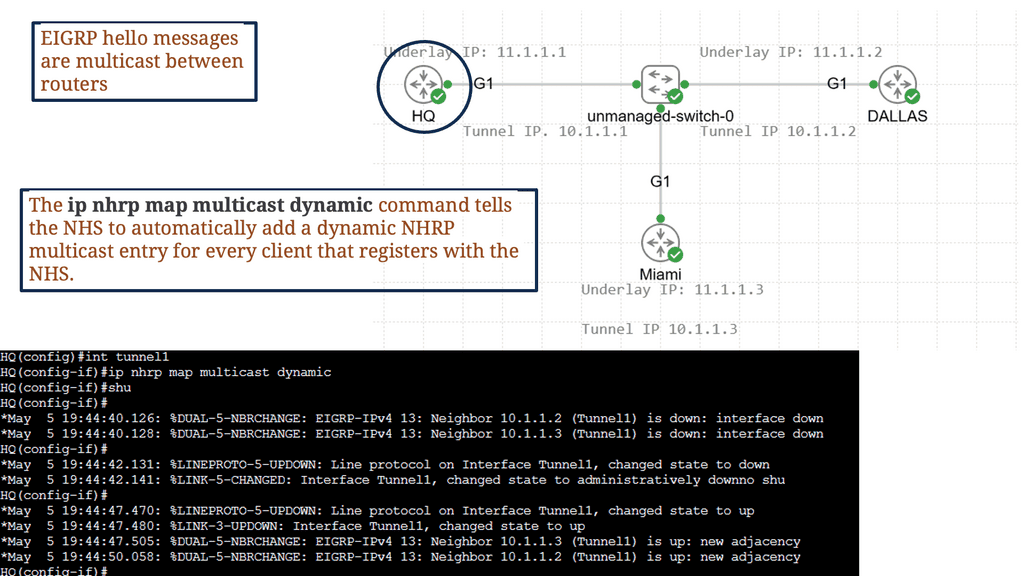

C – Northbound and southbound interfaces

To implement policy and service chaining, use the Northbound Interface and express your policy at a high level. For example, you may require HTTP or NAT and force traffic via load balancers or virtual firewalls. Contrail does this automatically and issues instructions to the vRouter, forcing traffic to the correct virtual appliance. In addition, it can create all the necessary routes and tunnels, steering traffic through the proper sequence of virtual machines.

Contrail achieves this automatically with southbound protocols, such as XMPP (Extensible Messaging and Presence Protocol) or BGP. XMPP is a communications protocol based on XML (Extensible Markup Language).

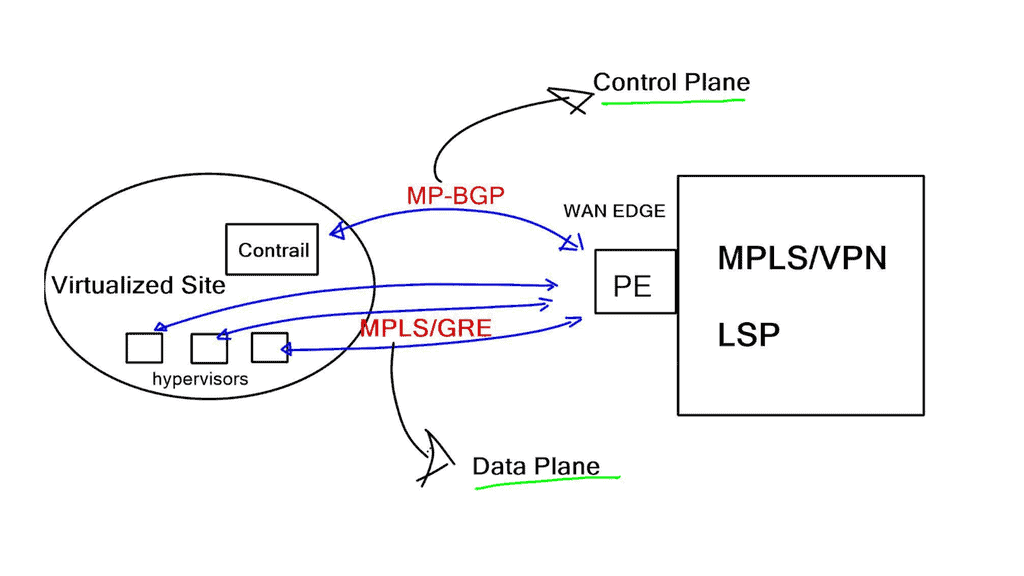

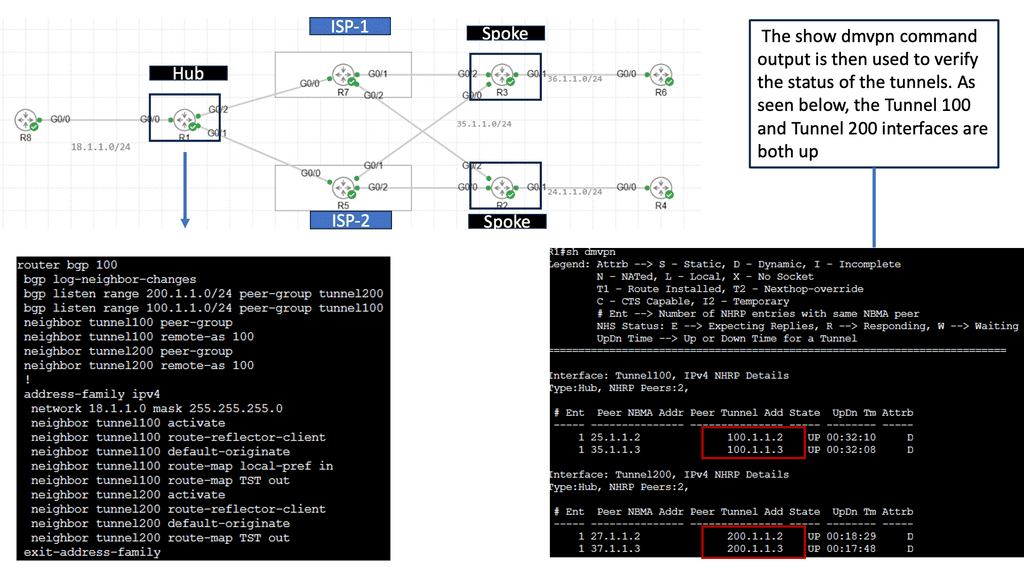

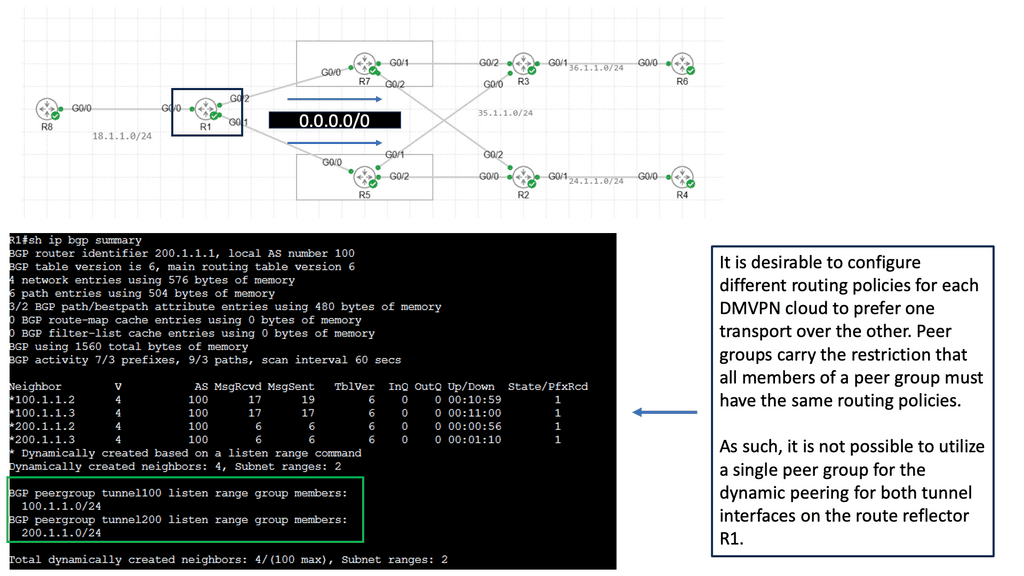

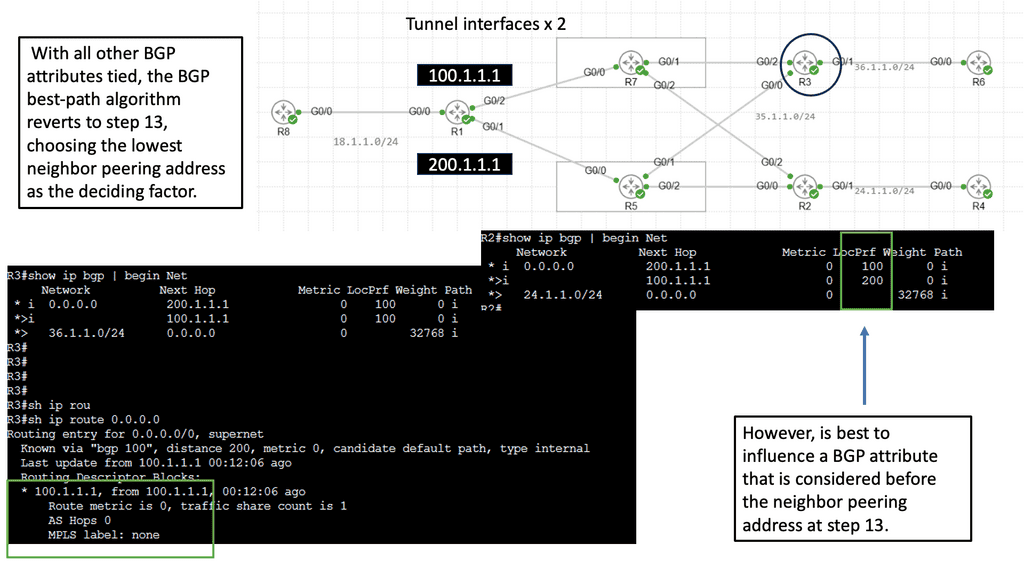

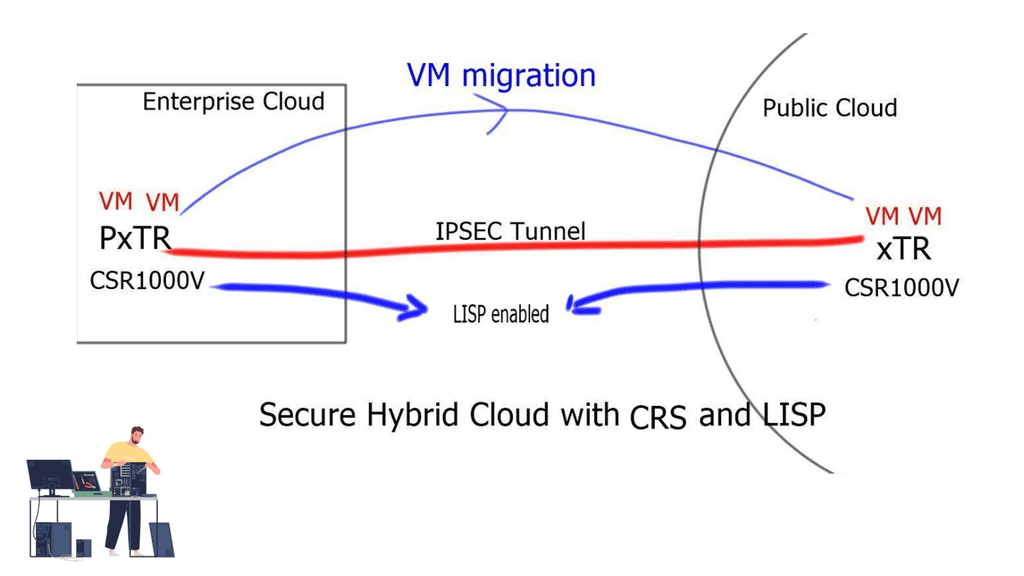

WAN Integration

Juniper’s OpenContrail can connect virtual networks to an external Layer 3 MPLS VPN for WAN integration. In addition, the controller can peer with gateway routers over BGP. The data plane uses MPLS-over-GRE, and the control plane speaks MP-BGP.

Contrail communicates directly with PE routers, exchanging VPNv4 routes with MP-BGP and using MPLS-over-GRE encapsulation to pass IP traffic between hypervisor hosts and PE routers. Using standards-based protocols lets you choose any hardware appliance as the gateway node.

This combination of data plane and control plane makes integration with an MPLS/VPN backbone a simple task. First, establish MP-BGP sessions between the controllers and the PE routers. Then use an Inter-AS Option B design with next-hop-self to create a clear demarcation point.
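The VPNv4 exchange with the PE routers relies on route targets to decide which routes enter which virtual network. The sketch below shows that import filter in isolation; the class name, addresses, labels, and route-target values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vpnv4Route:
    rd: str                   # route distinguisher, e.g. "64512:10"
    prefix: str               # IPv4 prefix made unique by the RD
    next_hop: str             # PE router or hypervisor host address
    label: int                # VPN label to push toward next_hop
    route_targets: frozenset  # extended communities attached on export


def import_routes(routes: list, import_targets: set) -> list:
    """Keep only routes carrying at least one wanted route target."""
    return [r for r in routes if r.route_targets & import_targets]


# Hypothetical routes learned from a PE router over MP-BGP.
learned = [
    Vpnv4Route("64512:10", "172.16.1.0/24", "198.51.100.1", 301, frozenset({"target:64512:100"})),
    Vpnv4Route("64512:20", "172.16.1.0/24", "198.51.100.1", 302, frozenset({"target:64512:200"})),
]
red_vn_routes = import_routes(learned, {"target:64512:100"})
```

The two learned routes share the same IPv4 prefix but differ in route distinguisher and route target, so each tenant’s virtual network imports only its own copy.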

OpenContrail has emerged as a game-changer in software-defined networking, empowering organizations to build agile, secure, and scalable networks in the cloud era. With its advanced features, such as network virtualization, secure multi-tenancy, intelligent automation, and scalability, OpenContrail offers a comprehensive solution that addresses the complex networking challenges of modern cloud environments.

As the demand for efficient and flexible network management continues to rise, OpenContrail provides a compelling option for organizations looking to optimize their network infrastructure and unlock the full potential of the cloud.

Summary: OpenContrail

OpenContrail is a powerful open-source software-defined networking (SDN) solution for network management and connectivity. This post explored its key features, benefits, and use cases and showed how it helps organizations build robust and scalable networks.

Understanding OpenContrail

OpenContrail, developed by Juniper Networks, is an open-source SDN controller that provides network virtualization and automation capabilities. It is a single control point for managing and orchestrating network resources, enabling organizations to simplify network operations and enhance flexibility. By decoupling the network control plane from the underlying physical infrastructure, OpenContrail brings agility and scalability to modern networks.

Key Features of OpenContrail

OpenContrail offers a wide range of features, making it a preferred choice for network administrators. Some notable features include:

1. Virtual Network Overlay: OpenContrail creates virtual network overlays, allowing multiple virtual networks to coexist on a shared physical infrastructure. This isolation ensures enhanced security and enables efficient resource utilization.

2. Policy-Driven Automation: With policy-driven automation, network administrators can define and enforce network policies and access controls across the infrastructure. OpenContrail simplifies the management and enforcement of complex policies, reducing operational overhead.

3. Analytics and Monitoring: OpenContrail provides extensive analytics and monitoring capabilities, offering real-time insights into network traffic, performance, and security. These insights help administrators optimize network resources and troubleshoot issues effectively.

Use Cases of OpenContrail

OpenContrail finds applications in various use cases across industries. Some prominent use cases include:

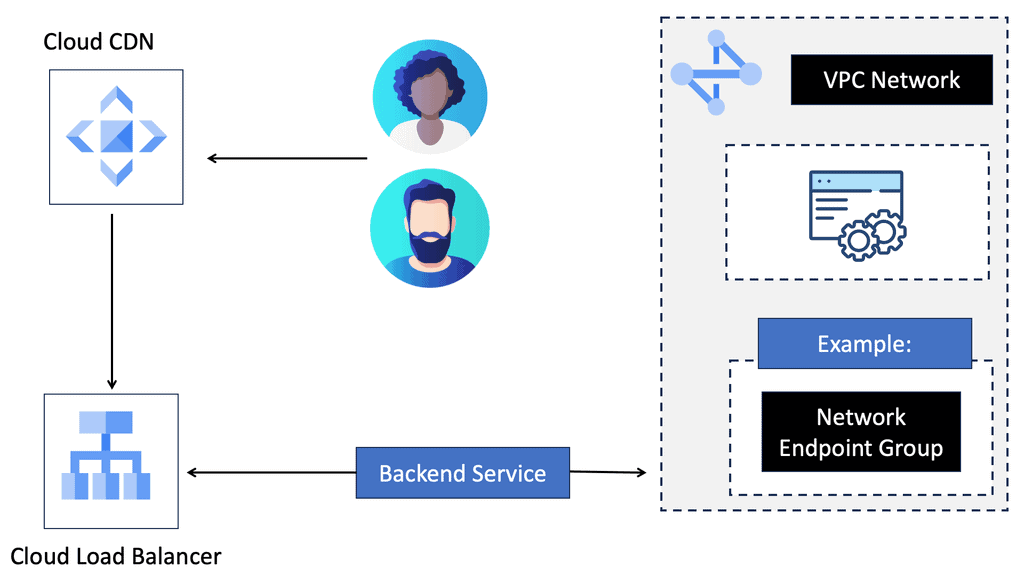

1. Cloud Infrastructure: OpenContrail enables cloud service providers to build and manage scalable and secure cloud infrastructures. It facilitates seamless integration with popular cloud platforms and offers rich networking capabilities.

2. Data Centers: OpenContrail simplifies network management in data center environments. It provides dynamic workload placement, automated provisioning, and seamless connectivity between virtual machines and containers, ensuring efficient resource utilization.

3. Multi-Cloud Networking: OpenContrail supports multi-cloud networking, allowing organizations to connect and manage multiple cloud environments securely. It provides seamless connectivity, consistent policies, and centralized control across cloud providers.

Conclusion:

OpenContrail presents a game-changing solution for organizations seeking to enhance their networking capabilities. With its rich feature set, including virtual network overlays, policy-driven automation, and advanced analytics, OpenContrail empowers organizations to build scalable, secure, and agile networks. Whether it’s cloud infrastructure, data centers, or multi-cloud networking, OpenContrail is a reliable and versatile SDN solution.