SD WAN Overlay

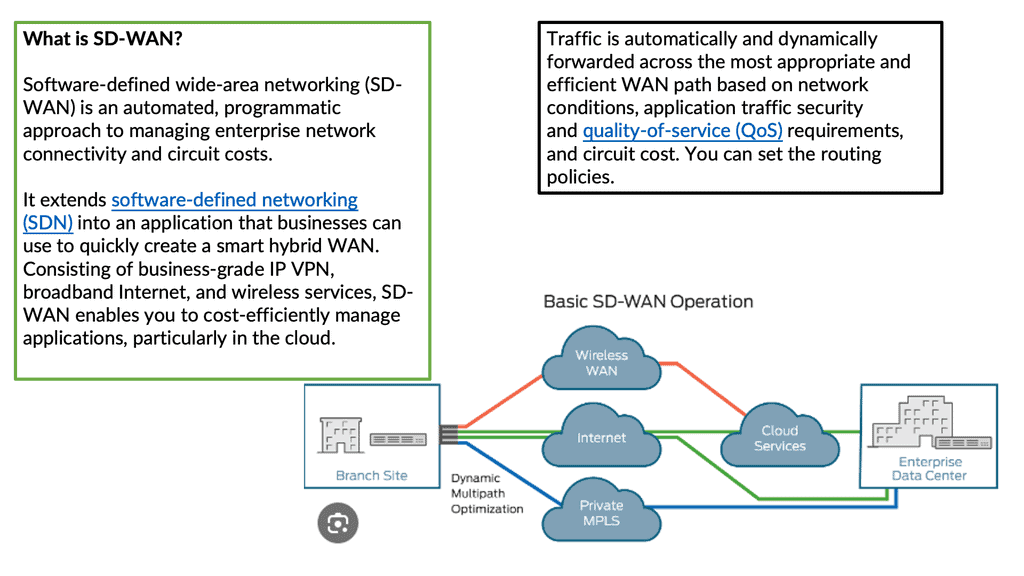

In today's digital age, businesses rely on seamless and secure network connectivity to support their operations. Traditional Wide Area Network (WAN) architectures often struggle to meet the demands of modern companies due to their limited bandwidth, high costs, and lack of flexibility. A revolutionary SD-WAN (Software-Defined Wide Area Network) overlay has emerged to address these challenges, offering businesses a more efficient and agile network solution. This blog post will delve into SD-WAN overlay, exploring its benefits, implementation, and potential to transform how businesses connect.

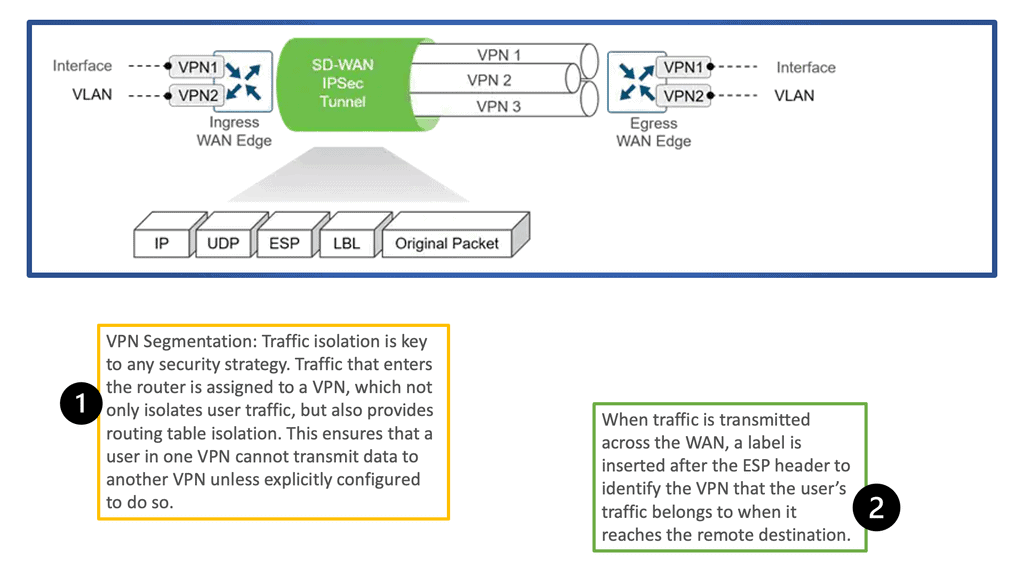

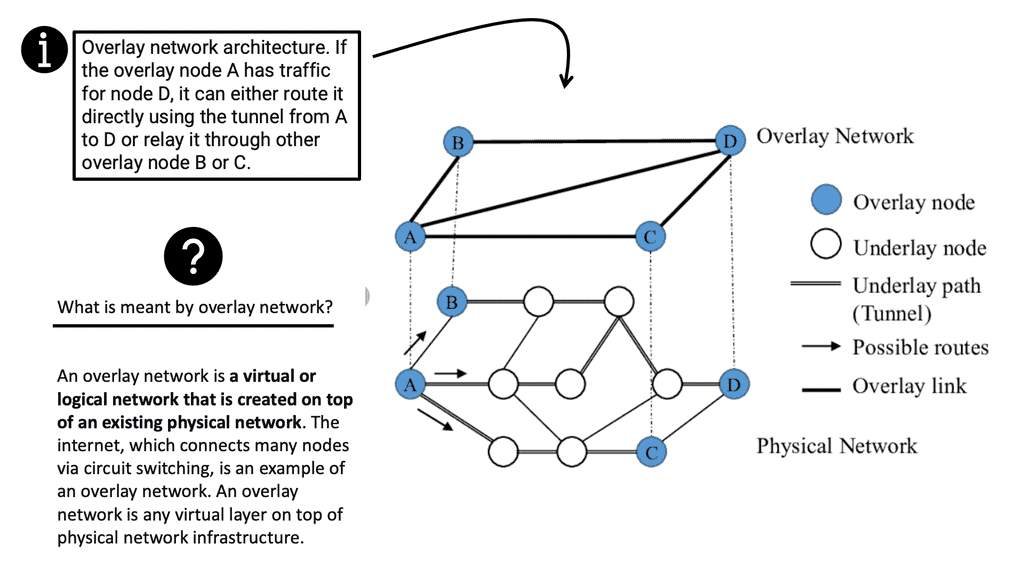

SD-WAN employs the concepts of overlay networking. Overlay networking is a virtual network architecture that allows for the creation of multiple logical networks on top of an existing physical network infrastructure. It involves the encapsulation of network traffic within packets, enabling data to traverse across different networks regardless of their physical locations. This abstraction layer provides immense flexibility and agility, making overlay networking an attractive option for organizations of all sizes.

- Scalability: One of the key advantages of overlay networking is its ability to scale effortlessly. By decoupling the logical network from the underlying physical infrastructure, organizations can rapidly deploy and expand their networks without disruption. This scalability is particularly crucial in cloud environments or scenarios where network requirements change frequently.

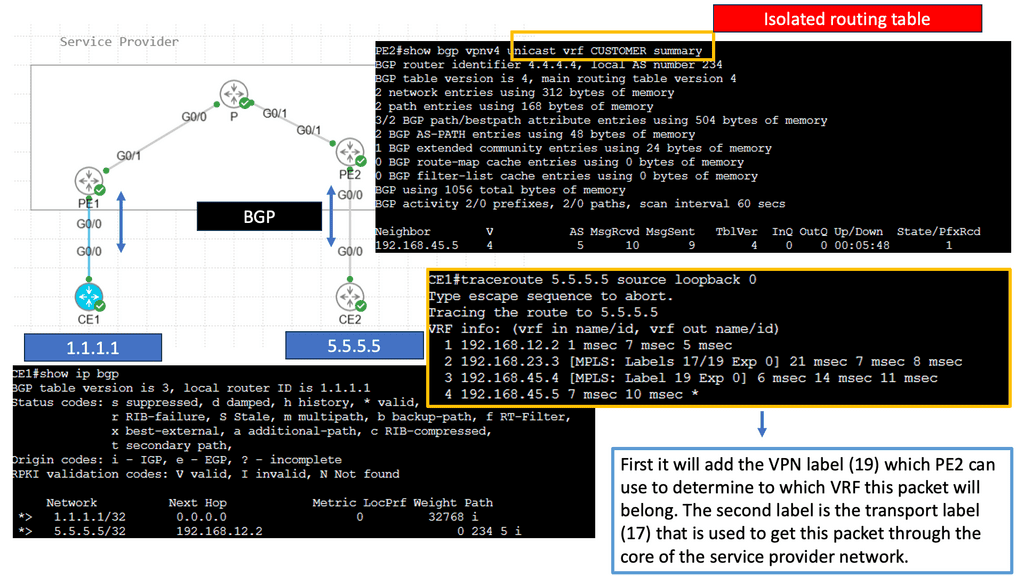

- Security and Isolation: Overlay networks provide enhanced security by isolating different logical networks from each other. This isolation ensures that data traffic remains segregated and prevents unauthorized access to sensitive information. Additionally, overlay networks can implement advanced security measures such as encryption and access control, further fortifying network security.

Matt Conran

Highlights: SD WAN Overlay

Understanding Overlay Networking

Overlay networking is a revolutionary approach to network design that enables the creation of virtual networks on top of existing physical networks. By abstracting the underlying infrastructure, overlay networks provide a flexible and scalable solution to meet the dynamic demands of modern applications and services. Whether in cloud environments, data centers, or even across geographically dispersed locations, overlay networking opens up a world of possibilities.

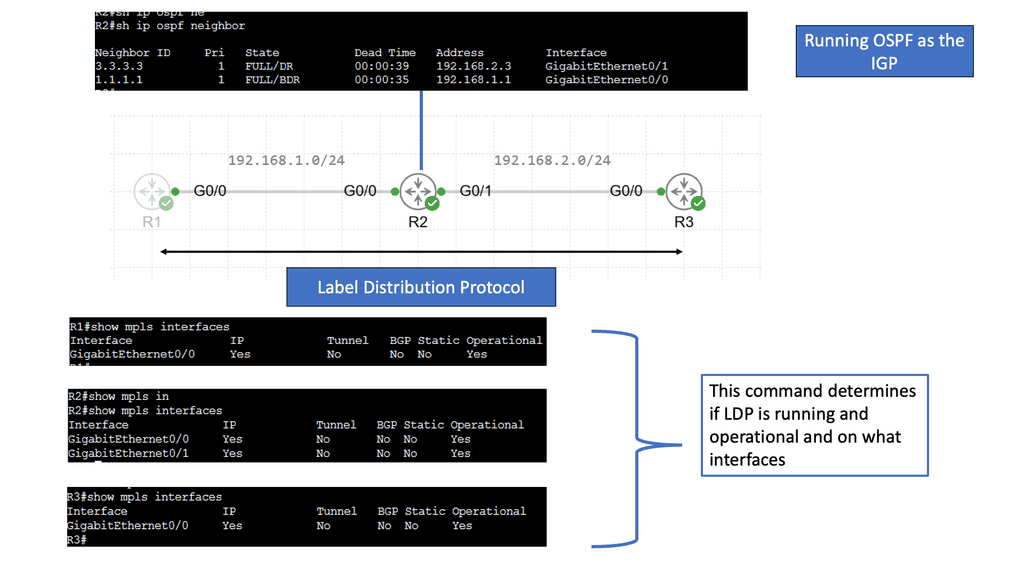

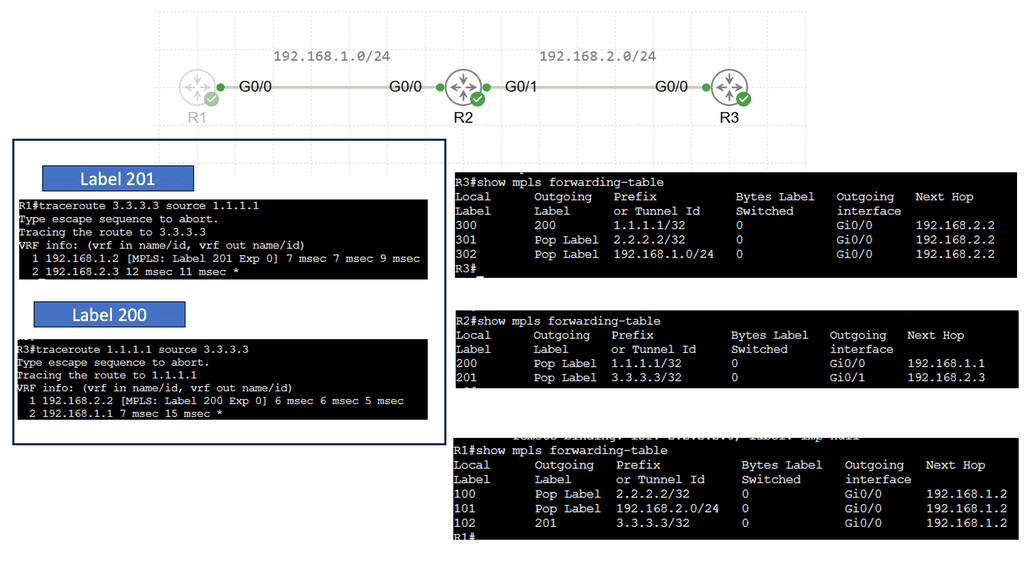

Overlay networks act as a virtual layer on the physical network infrastructure. They enable the creation of logical connections independent of the physical network topology. In the context of SD-WAN, overlay networks facilitate the seamless integration of multiple network connections, including MPLS, broadband, and LTE, into a unified and efficient network.

The advantages of overlay networking are manifold:

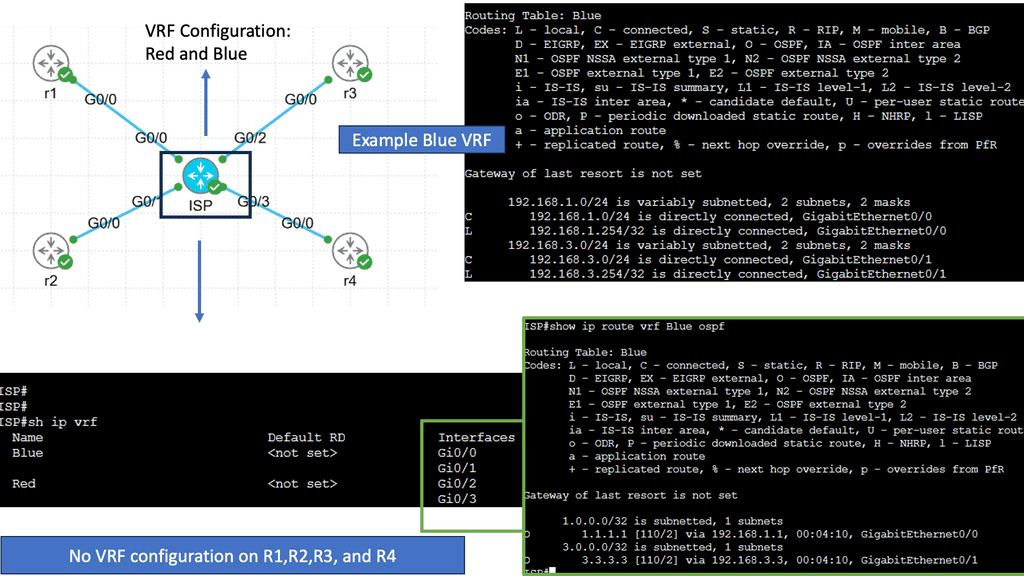

– First, it allows for seamless network segmentation, enabling different applications or user groups to operate in isolation while sharing the same physical infrastructure. This enhances security and simplifies network management.

– Secondly, overlay networks facilitate deploying advanced network services such as load balancing, firewalling, and encryption without complex changes to the underlying network infrastructure. This abstraction level empowers organizations to adapt and respond rapidly to evolving business needs.

**So, what exactly is an SD-WAN overlay?**

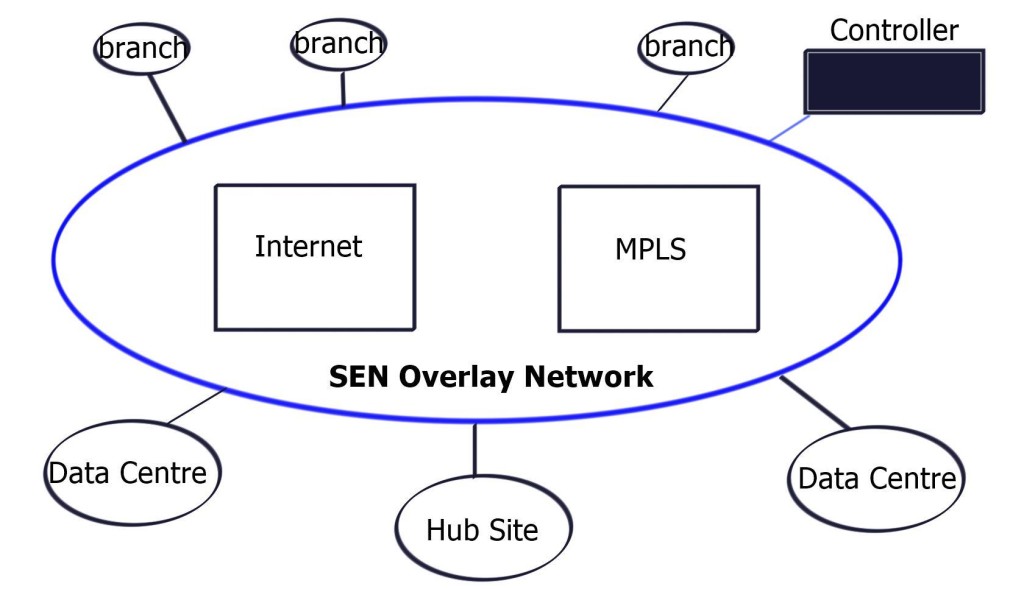

In simple terms, it is a virtual layer added to the existing network infrastructure. These network overlays connect different locations, such as branch offices, data centers, and the cloud, by creating a secure and reliable network.

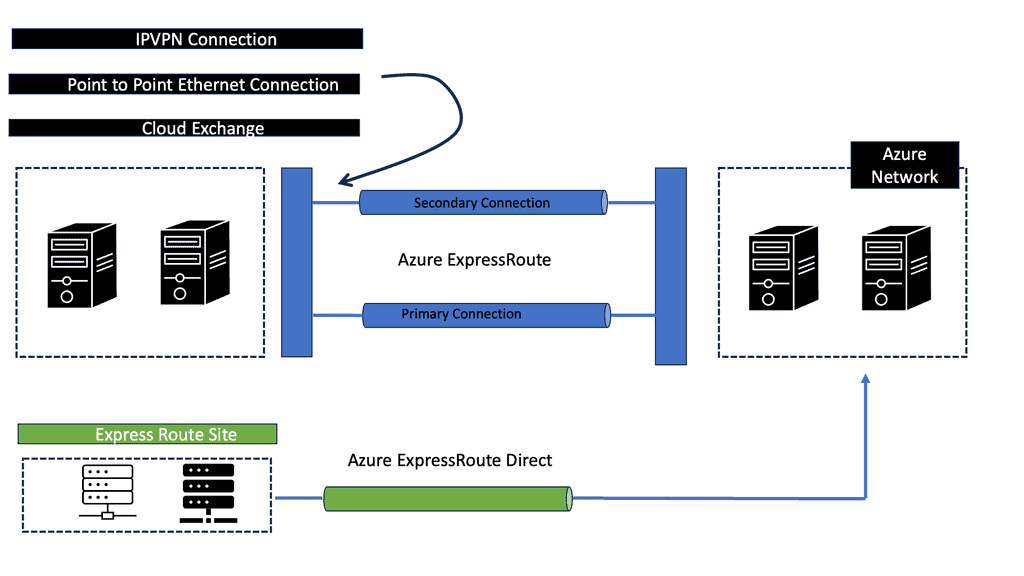

1. Tunnel-Based Overlays:

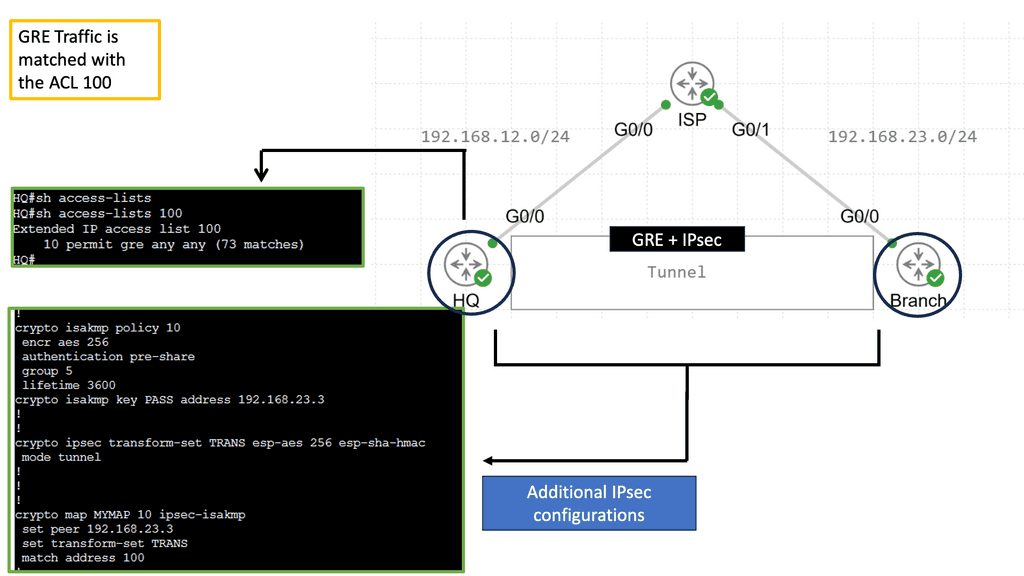

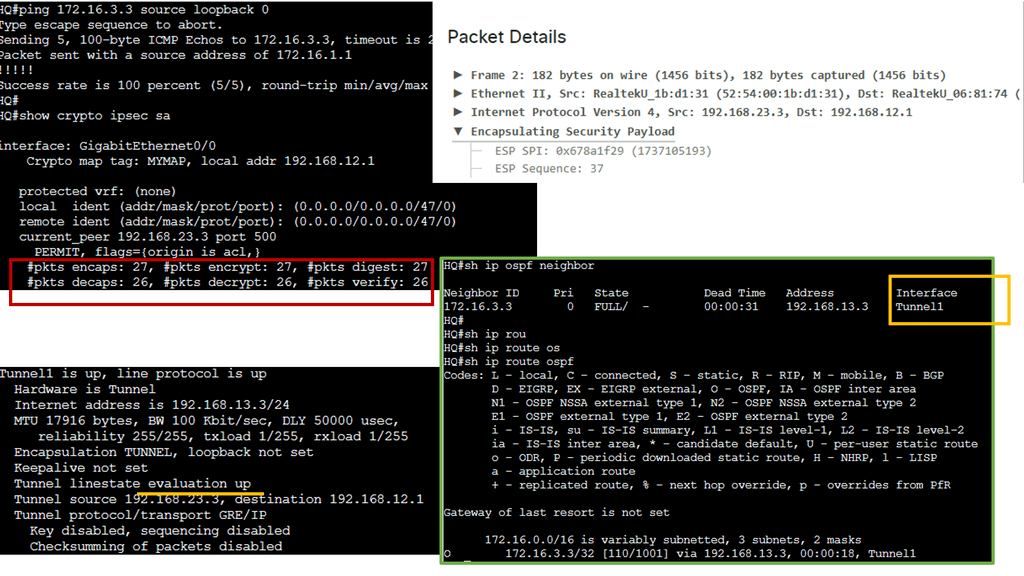

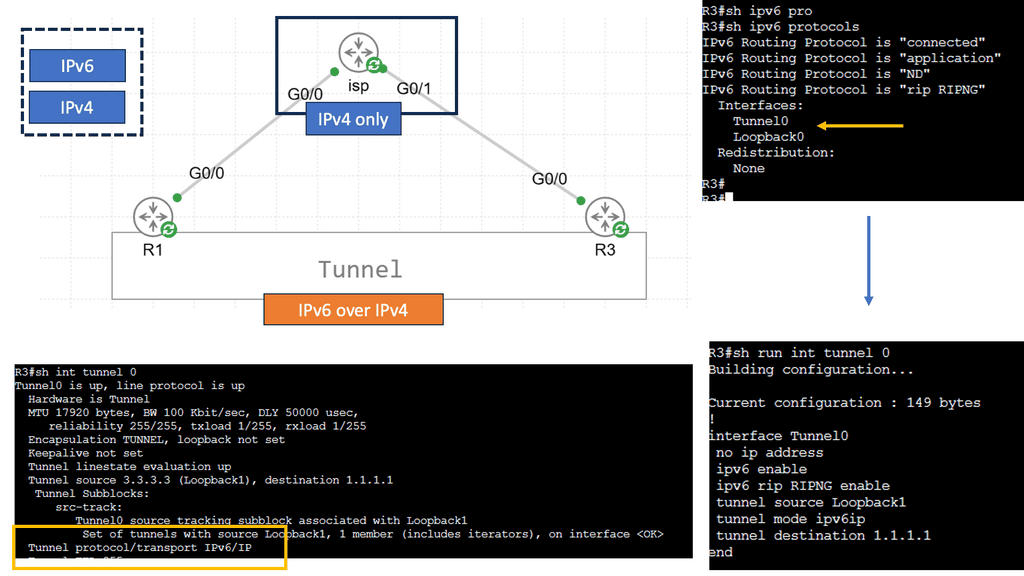

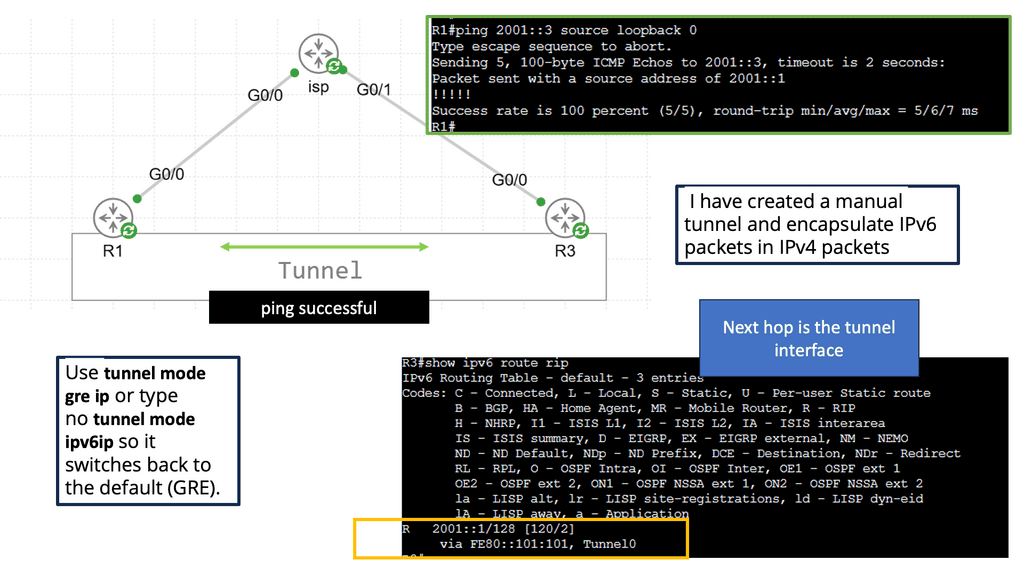

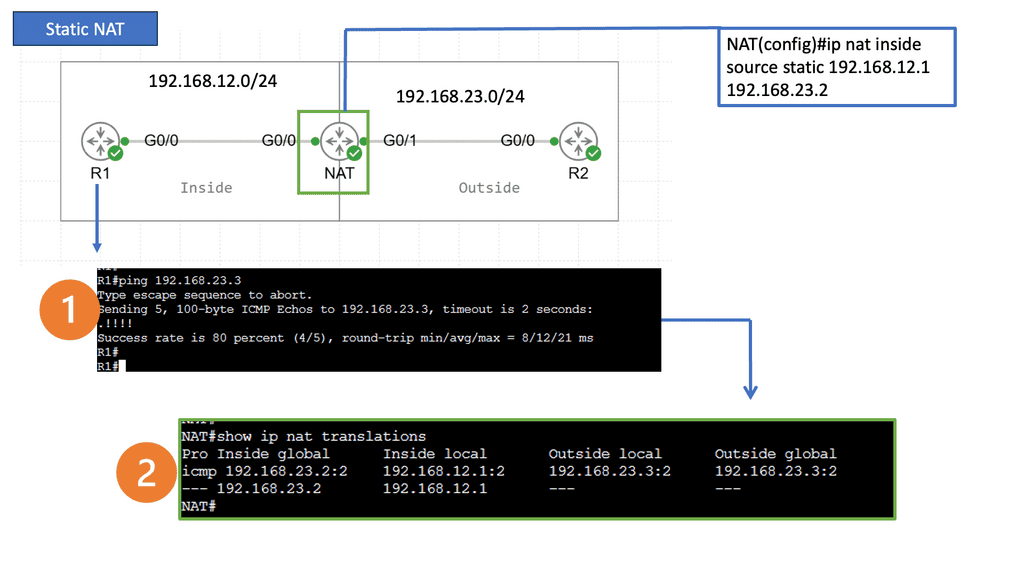

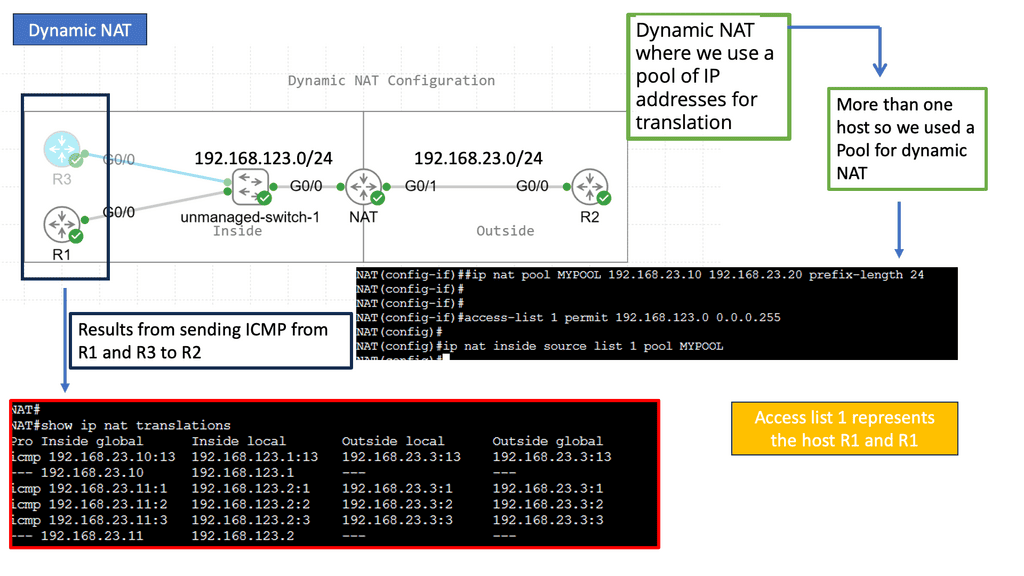

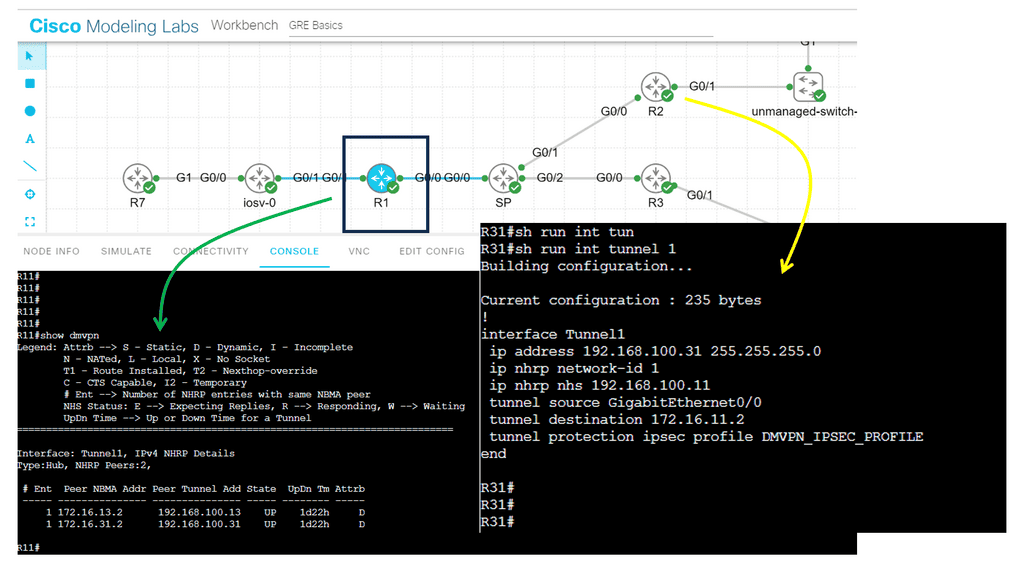

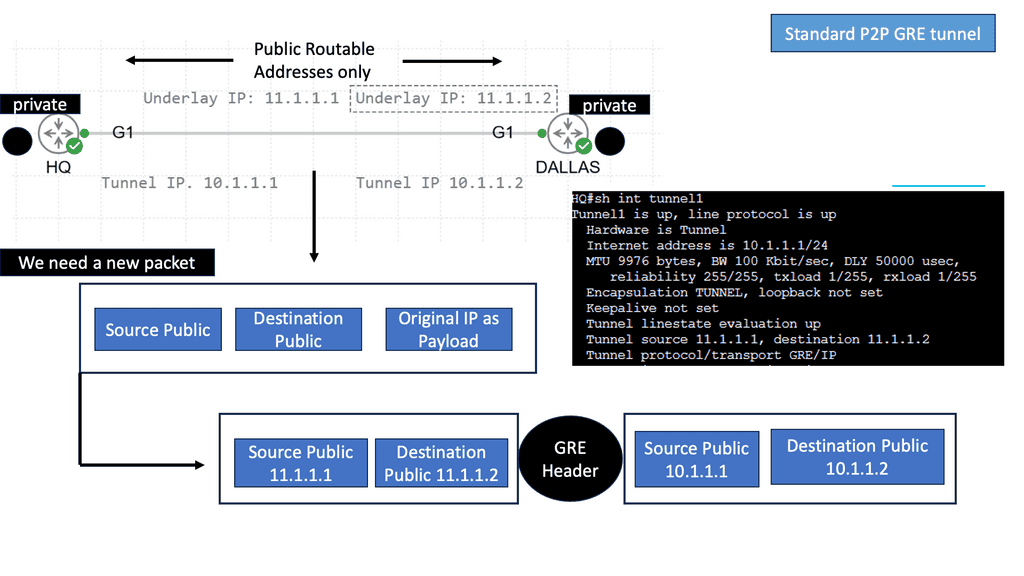

One of the most common types of SD-WAN overlay is the tunnel-based overlay. This approach encapsulates network traffic within a virtual tunnel, allowing it to traverse multiple networks securely. Tunnel-based overlays are typically implemented using IPsec, GRE (Generic Routing Encapsulation), or a combination of the two. GRE provides the encapsulation but no encryption of its own, so IPsec is used where confidentiality is required. Together they provide a secure and reliable connection between the SD-WAN edge devices.
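To make the idea of encapsulation concrete, here is a minimal sketch of wrapping an inner packet in a basic GRE header. It assumes the simplest 4-byte header (no checksum, key, or sequence number) and leaves out the outer IP header and any IPsec protection; the function names and the placeholder payload are illustrative only.

```python
import struct

GRE_PROTO_IPV4 = 0x0800  # protocol type value for an IPv4 payload


def gre_encapsulate(inner_packet: bytes, protocol: int = GRE_PROTO_IPV4) -> bytes:
    """Prepend a basic 4-byte GRE header (no optional fields) to the inner packet."""
    flags_and_version = 0x0000  # all optional-field flags off, version 0
    header = struct.pack("!HH", flags_and_version, protocol)
    return header + inner_packet


def gre_decapsulate(gre_packet: bytes) -> tuple[int, bytes]:
    """Return (protocol, inner_packet) from a basic GRE packet."""
    flags_and_version, protocol = struct.unpack("!HH", gre_packet[:4])
    if flags_and_version != 0:
        raise ValueError("this sketch only handles the basic header with no options")
    return protocol, gre_packet[4:]


# Placeholder bytes standing in for a real inner IP packet (an assumption).
inner = b"example inner IP packet"
tunnelled = gre_encapsulate(inner)
protocol, recovered = gre_decapsulate(tunnelled)
print(len(tunnelled) - len(inner))  # GRE overhead in bytes
print(recovered == inner)
```

In a real deployment the tunnel endpoint would also add an outer IP header addressed to the remote edge device, and IPsec would encrypt the result when confidentiality is needed.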

Example Technology: IPsec and GRE

Organizations can achieve enhanced network security and improved connectivity by combining GRE and IPsec. The integration allows for creating secure tunnels between networks, ensuring that data transmitted between them remains protected from potential threats. This combination also enables the establishment of Virtual Private Networks (VPNs), providing secure remote access and seamless connectivity for geographically dispersed teams.

By encrypting GRE tunnels, IPsec provides secure VPN tunnels. This approach offers many benefits, including support for dynamic IGP routing protocols, non-IP protocols, and IP multicast. Furthermore, the headend IPsec termination points can support QoS policies and deterministic routing metrics.

There is built-in redundancy due to the pre-established primary and backup GRE over IPsec tunnels. Static IP addresses are required for the headend site, but dynamic IP addresses are permitted for the remote sites. To differentiate primary tunnels from backup tunnels, routing metrics can be modified slightly to favor one or the other.

2. Segment-Based Overlays:

Segment-based overlays are designed to segment the network traffic based on specific criteria such as application type, user group, or location. This allows organizations to prioritize critical applications and allocate network resources accordingly. By segmenting the traffic, SD-WAN can optimize the performance of each application and ensure a consistent user experience. Segment-based overlays are particularly beneficial for businesses with diverse network requirements.
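As a rough illustration of segmentation by application type, user group, or location, the sketch below assigns a flow to a segment using an ordered rule list. The rule values and segment names are hypothetical and do not come from any particular SD-WAN product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    application: str
    user_group: str
    site: str


# Hypothetical rules, checked in order: (flow field, value to match, segment name)
SEGMENT_RULES = [
    ("application", "voip", "voice"),
    ("user_group", "finance", "finance"),
    ("site", "guest-wifi", "guest"),
]
DEFAULT_SEGMENT = "default"


def assign_segment(flow: Flow) -> str:
    """Return the first matching segment, or the default segment if nothing matches."""
    for field, value, segment in SEGMENT_RULES:
        if getattr(flow, field) == value:
            return segment
    return DEFAULT_SEGMENT


print(assign_segment(Flow("voip", "sales", "branch-1")))
print(assign_segment(Flow("web", "finance", "hq")))
print(assign_segment(Flow("web", "sales", "branch-1")))
```

Because the rules are evaluated in order, a voice call from a finance user lands in the voice segment here; a real design would need to decide which criterion takes precedence.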

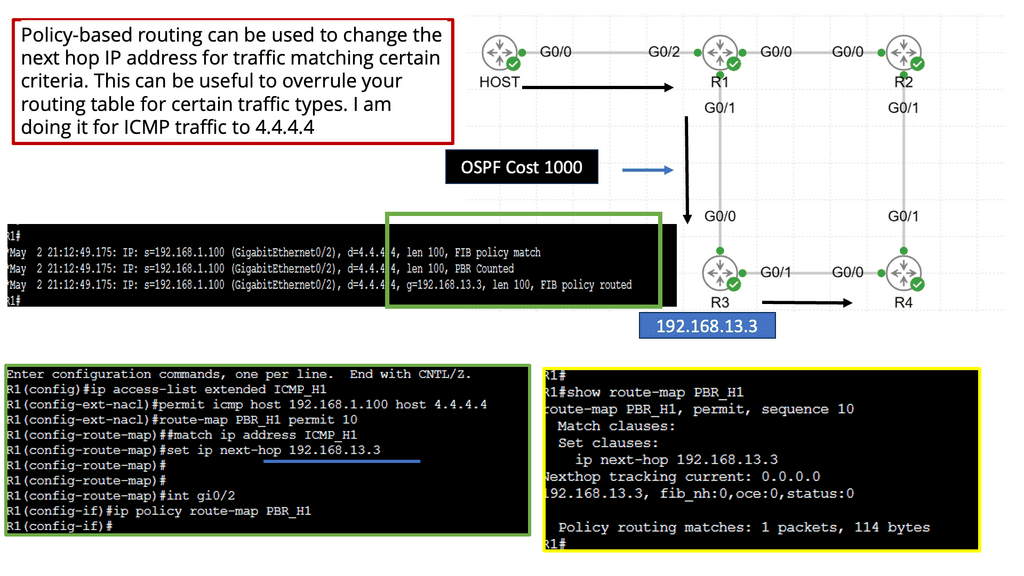

3. Policy-Based Overlays:

Policy-based overlays enable organizations to define rules and policies that govern the behavior of the SD-WAN network. These overlays use intelligent routing algorithms to dynamically select the most optimal path for network traffic based on predefined policies. By leveraging policy-based overlays, businesses can ensure efficient utilization of network resources, minimize latency, and improve overall network performance.
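A policy of this kind can be pictured as an ordered preference list per application class. The following sketch assumes hypothetical application classes and transport names, and simply returns the first preferred transport that is currently up.

```python
# Hypothetical policies: application class -> transports in order of preference.
POLICIES = {
    "voice": ["mpls", "broadband", "lte"],
    "backup": ["broadband", "lte"],
}
DEFAULT_ORDER = ["broadband", "mpls", "lte"]


def select_transport(app_class, link_up):
    """Return the first preferred transport that is up, or None if none is available."""
    for transport in POLICIES.get(app_class, DEFAULT_ORDER):
        if link_up.get(transport, False):
            return transport
    return None


link_state = {"mpls": False, "broadband": True, "lte": True}
print(select_transport("voice", link_state))   # falls back from mpls to broadband
print(select_transport("backup", link_state))
```

Keeping the policy as data rather than code is the point: changing how an application is treated means editing a table, not reconfiguring each device.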

4. Hybrid Overlays:

Hybrid overlays combine the benefits of both public and private networks. This type of overlay allows organizations to utilize multiple network connections, including MPLS, broadband, and LTE, to create a robust and resilient network infrastructure. Hybrid overlays intelligently route traffic through the most suitable connection based on application requirements, network availability, and cost. Businesses can achieve high availability, cost-effectiveness, and improved application performance by leveraging hybrid overlays.

5. Cloud-Enabled Overlays:

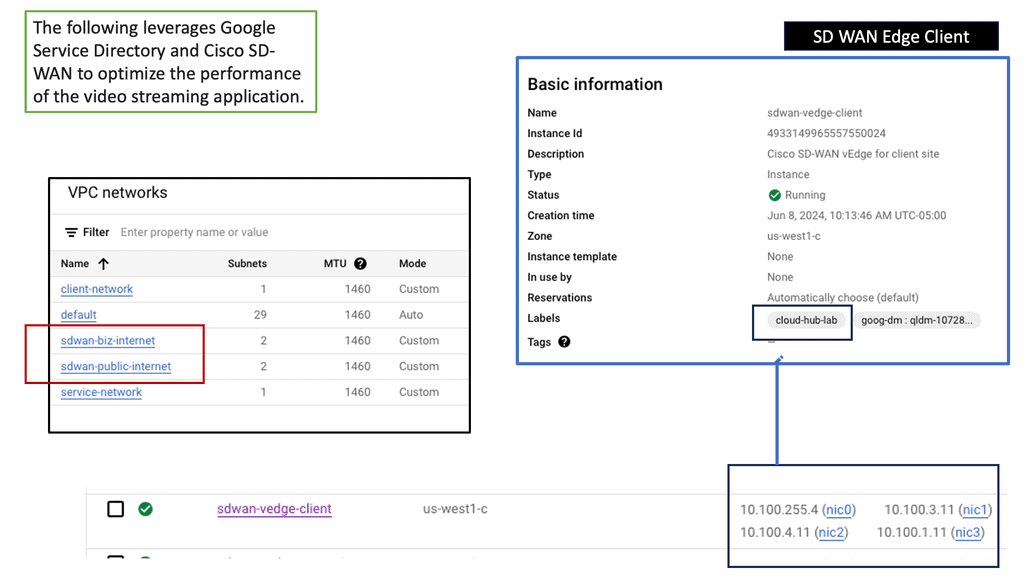

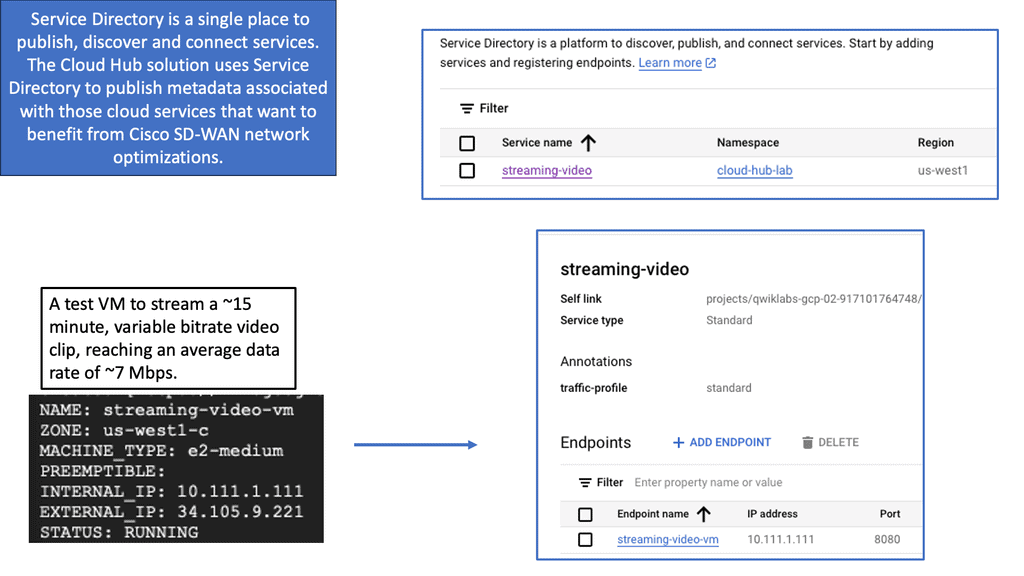

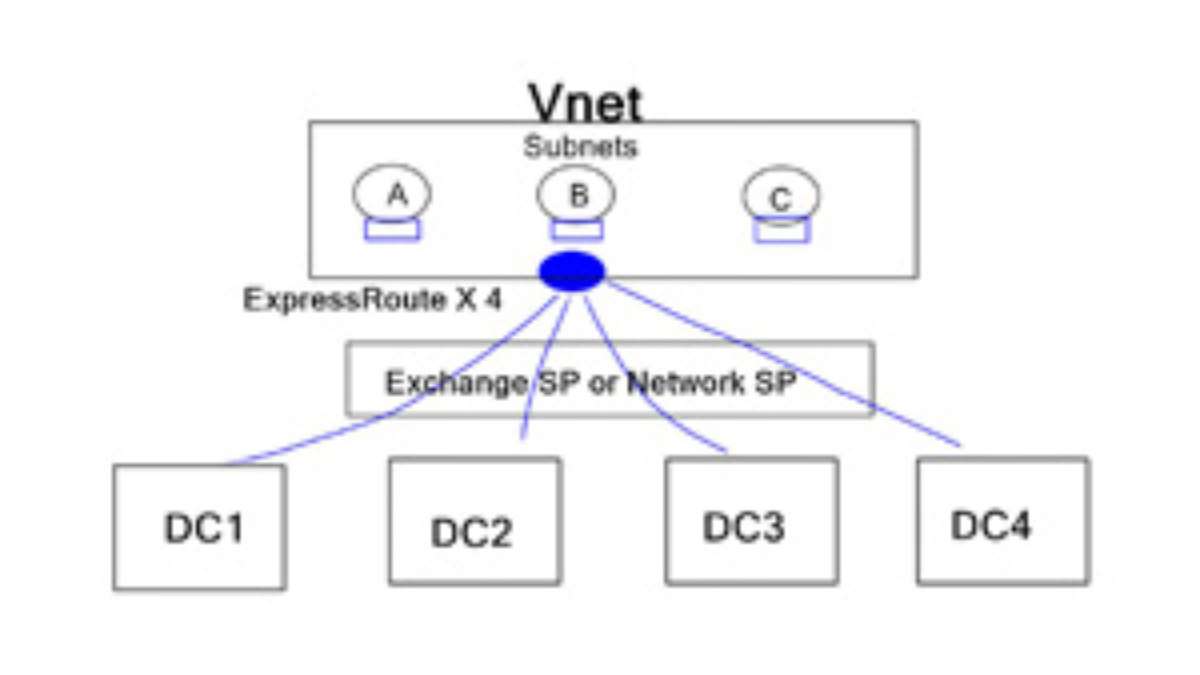

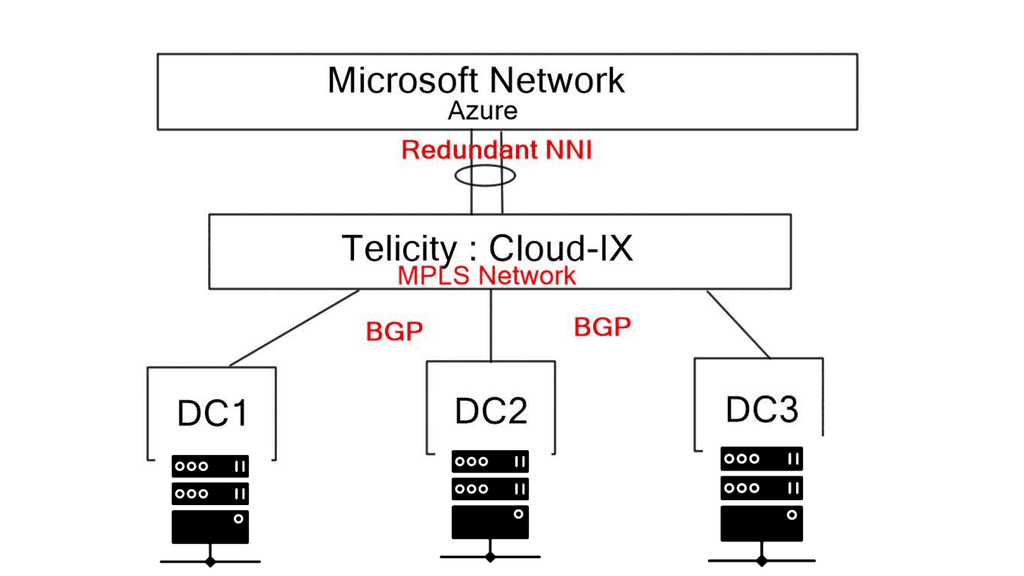

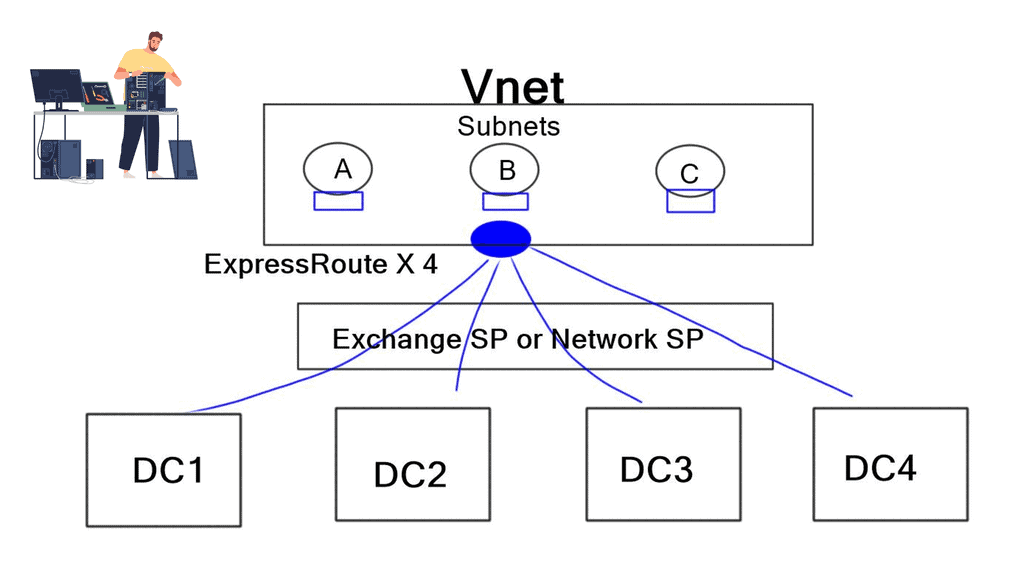

As more businesses adopt cloud-based applications and services, seamless connectivity to cloud environments becomes crucial. Cloud-enabled overlays provide direct and secure connectivity between the SD-WAN network and cloud service providers. These overlays ensure optimized performance for cloud applications by minimizing latency and providing efficient data transfer. Cloud-enabled overlays simplify the management and deployment of SD-WAN in multi-cloud environments, making them an ideal choice for businesses embracing cloud technologies.

**Challenge: The traditional network**

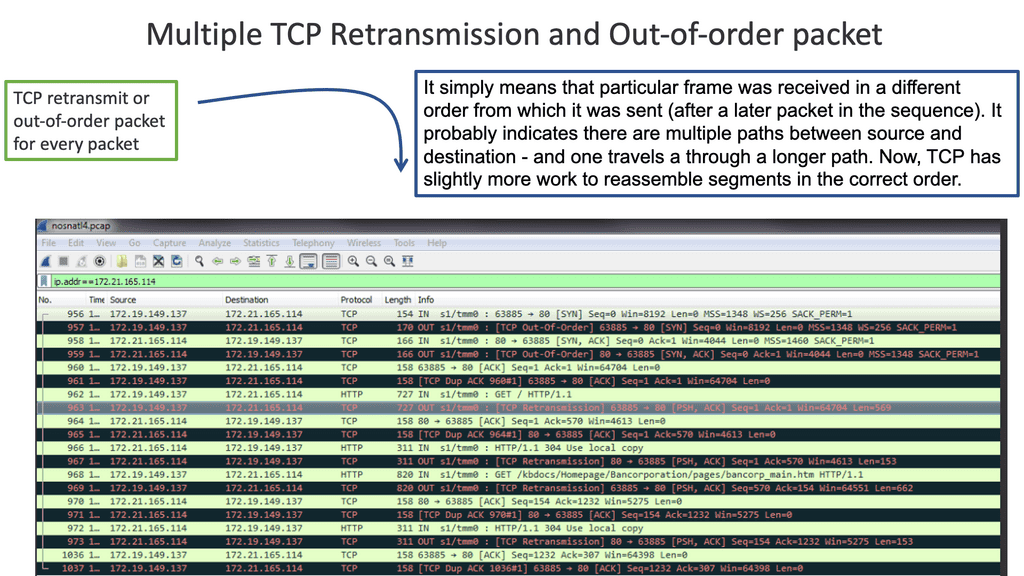

The networks we depend on for business are sensitive to many factors that can result in a slow and unreliable experience. One is latency, the time between a data packet being sent and received. A related measure is round-trip time, the time it takes for a packet to be sent and for its reply to arrive.

We can also experience jitter, the variation in delay between data packets, which disrupts the steady sending and receiving of packets. Fixed-bandwidth networks can also experience congestion. For example, with five people sharing the same Internet link, each could experience a stable and swift network. Add another 20 or 30 people onto the same link, and the experience will be markedly different.
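These measures are easy to compute from a handful of samples. The sketch below uses made-up delay values and an assumed 100 Mbps link, and it treats jitter as the average absolute change in delay between consecutive packets, which is one common simplification.

```python
from statistics import mean


def mean_jitter(delays_ms):
    """Average absolute change in delay between consecutive packets."""
    diffs = [abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])]
    return mean(diffs) if diffs else 0.0


def fair_share_mbps(link_mbps, users):
    """Bandwidth per user if the link is shared evenly (an idealized assumption)."""
    return link_mbps / users


# Illustrative one-way delay samples in milliseconds (not measured data).
one_way_delays_ms = [20, 22, 19, 30, 21]
print(mean(one_way_delays_ms))         # average latency: 22.4
print(mean_jitter(one_way_delays_ms))  # 6.25

# Five users on an assumed 100 Mbps link, then 25 more join.
print(fair_share_mbps(100, 5))                 # 20.0
print(round(fair_share_mbps(100, 5 + 25), 2))  # 3.33
```

The even split is only a rough guide, since real traffic is bursty, but it shows why the same link feels very different with 30 users than with five.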

Google Cloud & SD WAN

SD WAN Cloud Hub

Google Cloud is renowned for its robust infrastructure, scalability, and advanced services. By integrating SD-WAN with Google Cloud, businesses can tap into this powerful ecosystem. They gain access to Google’s global network, enabling optimized routing and lower latency. Additionally, the scalability and flexibility of Google Cloud allow organizations to adapt to changing network demands seamlessly.

Key Advantages:

When SD-WAN Cloud Hub is integrated with Google Cloud, it unlocks a host of advantages. Firstly, it enables organizations to seamlessly connect their branch offices, data centers, and cloud resources, providing a unified network fabric. This integration optimizes traffic flow and enhances application performance, ensuring a consistent user experience.

Key Features and Benefits:

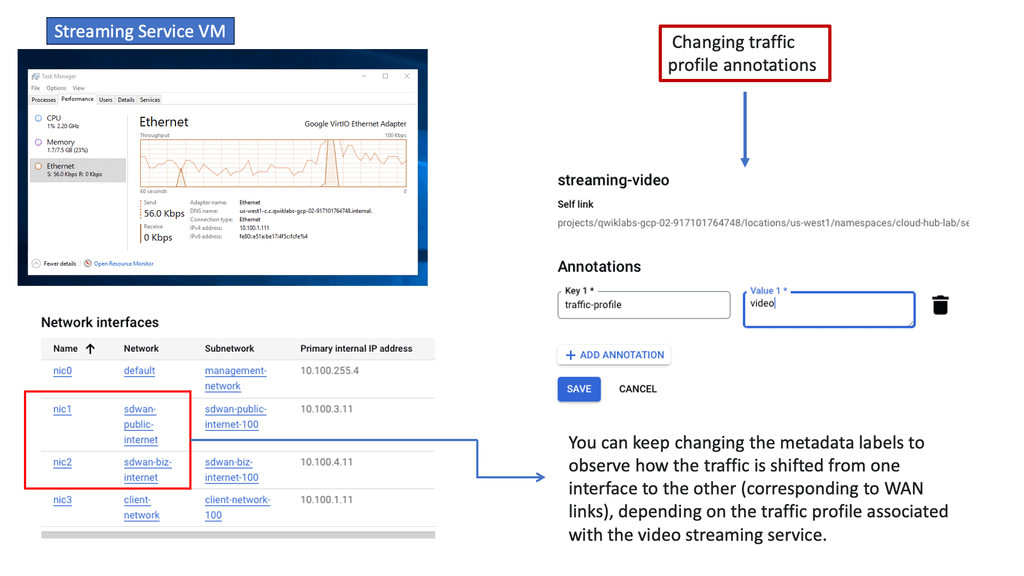

Intelligent Traffic Steering: SD-WAN Cloud Hub with Google Cloud allows organizations to intelligently steer traffic based on application requirements, network conditions, and security policies. This dynamic traffic routing ensures optimal performance and minimizes latency.

Simplified Network Management: The centralized management platform of SD-WAN Cloud Hub simplifies network configuration, monitoring, and troubleshooting. Integration with Google Cloud provides a unified view of the entire network infrastructure, streamlining operations and reducing complexity.

Enhanced Security: SD-WAN Cloud Hub leverages Google Cloud’s security features, such as Cloud Armor and Cloud Identity-Aware Proxy, to protect network traffic and ensure secure communication between branches, data centers, and the cloud.

VPN and Overlay technologies

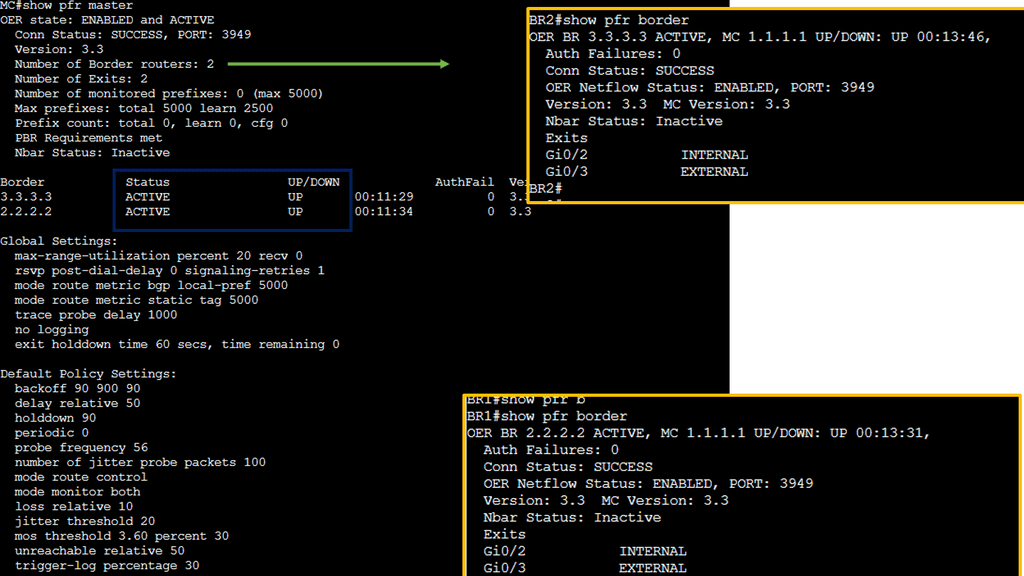

Performance-Based Routing & DMVPN

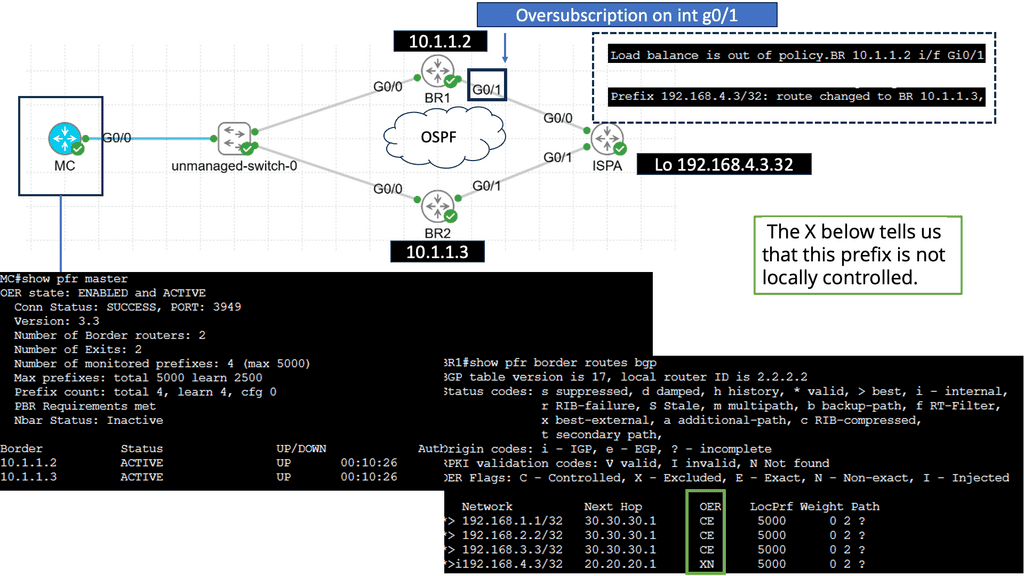

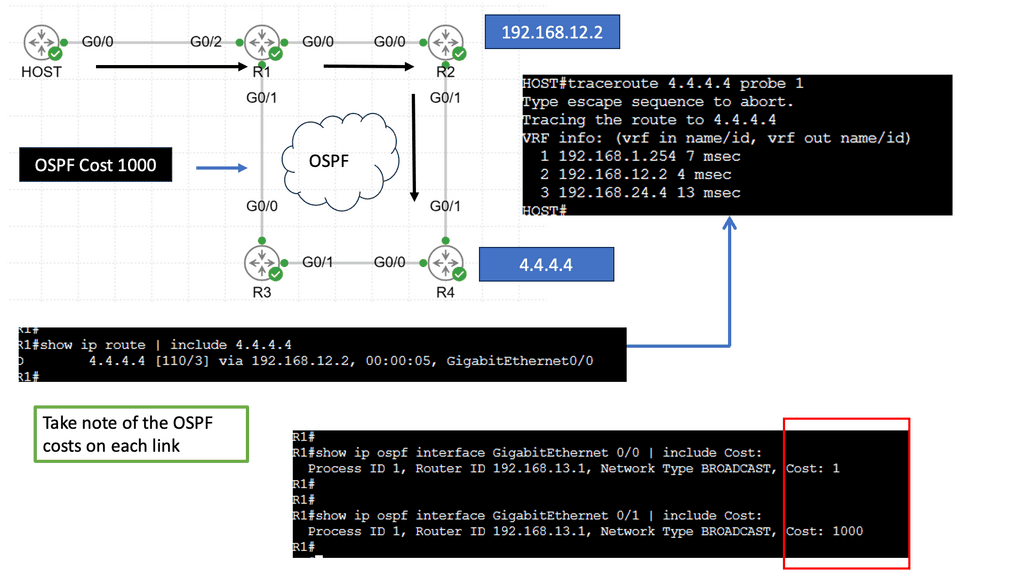

Performance-based routing is a dynamic routing technique that goes beyond traditional static routing. It leverages real-time data and network monitoring to make intelligent routing decisions based on latency, bandwidth availability, and network congestion. By constantly analyzing network conditions, performance-based routing algorithms can adapt and reroute traffic to the most efficient paths, ensuring speedy and reliable data transmission.
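The decision logic can be sketched in a few lines. The example below is not any vendor's algorithm: the thresholds, path names, and metric values are assumptions chosen to show how a path is re-selected when measurements change.

```python
from dataclasses import dataclass


@dataclass
class PathMetrics:
    name: str
    latency_ms: float
    loss_pct: float
    utilization_pct: float


def pick_path(paths, max_latency_ms=150.0, max_loss_pct=1.0, max_utilization_pct=90.0):
    """Prefer paths within all thresholds; among them choose the lowest latency."""
    healthy = [
        p for p in paths
        if p.latency_ms <= max_latency_ms
        and p.loss_pct <= max_loss_pct
        and p.utilization_pct <= max_utilization_pct
    ]
    candidates = healthy or list(paths)  # if nothing is healthy, take the lowest latency anyway
    return min(candidates, key=lambda p: p.latency_ms)


# Illustrative measurements: MPLS is congested and broadband is lossy.
paths = [
    PathMetrics("mpls", latency_ms=40, loss_pct=0.1, utilization_pct=95),
    PathMetrics("broadband", latency_ms=25, loss_pct=2.5, utilization_pct=40),
    PathMetrics("lte", latency_ms=60, loss_pct=0.5, utilization_pct=30),
]
print(pick_path(paths).name)  # lte

# Congestion on MPLS clears, so traffic moves back to the lower-latency path.
paths[0].utilization_pct = 50
print(pick_path(paths).name)  # mpls
```

A production system would also smooth the measurements and add hysteresis so that traffic does not flap between paths on every sample.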

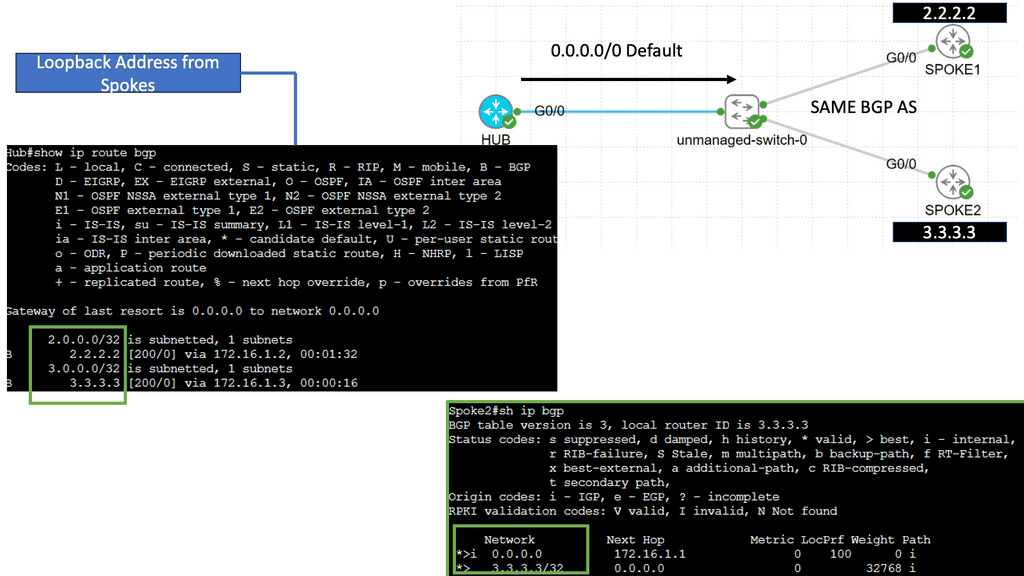

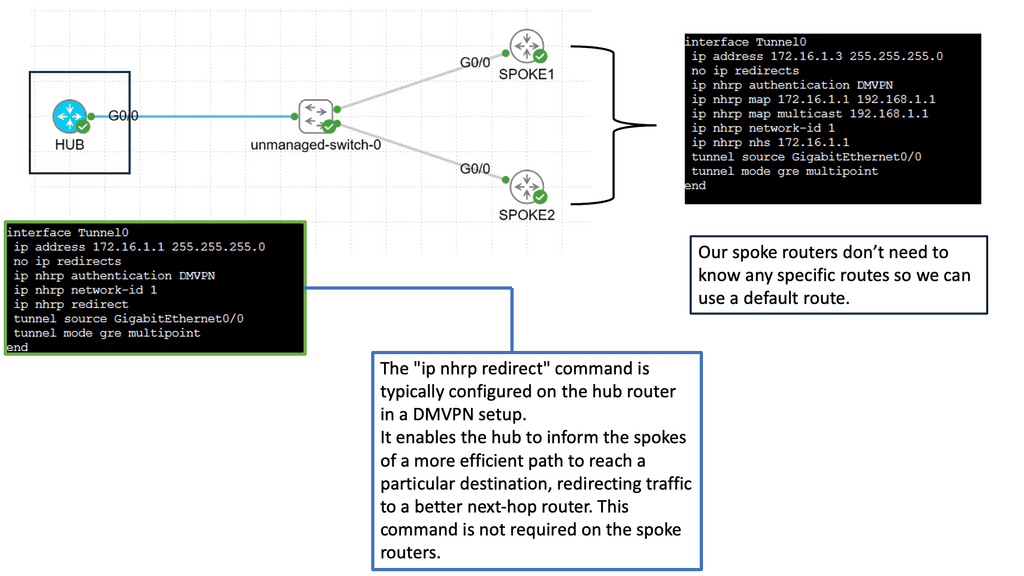

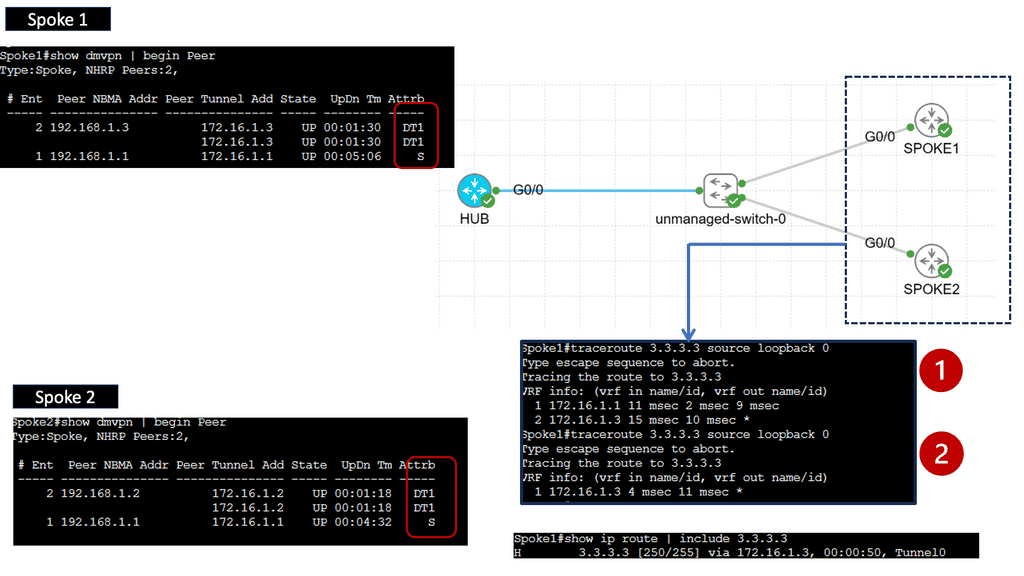

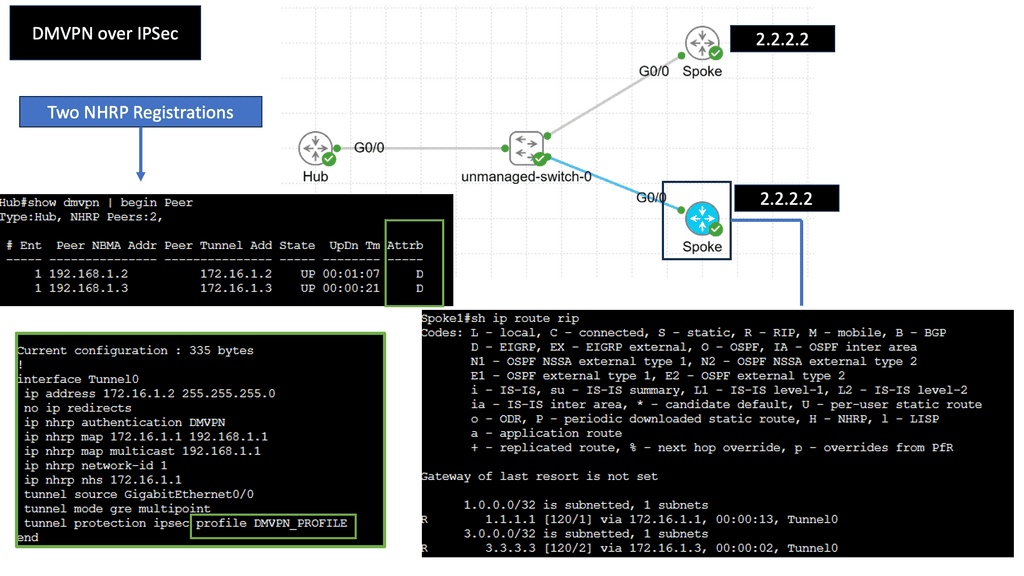

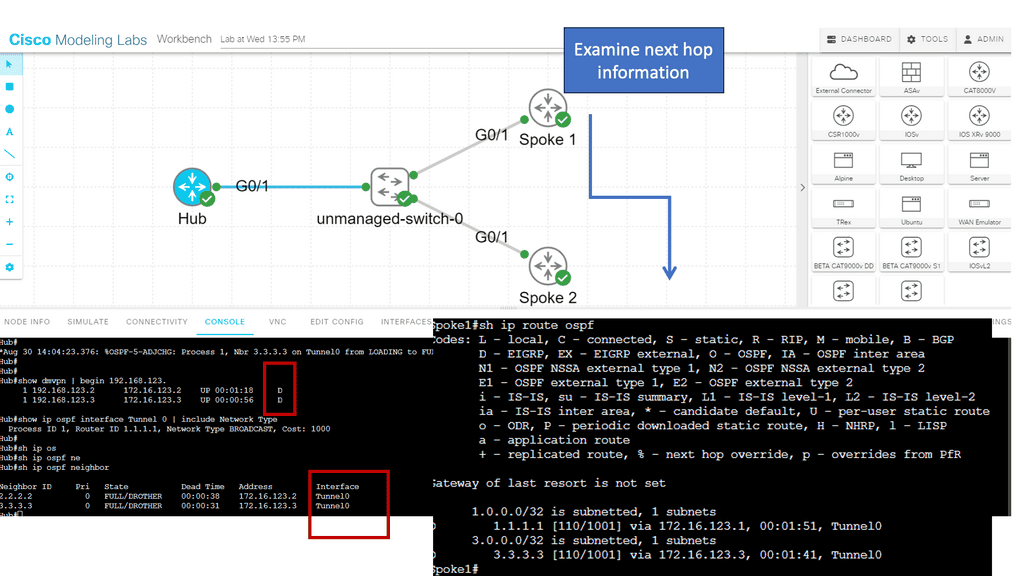

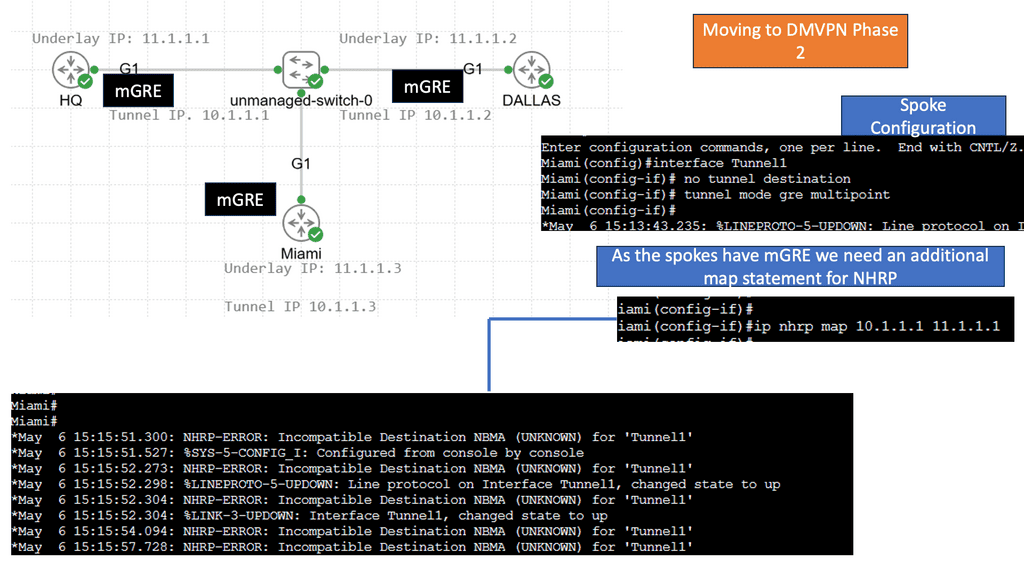

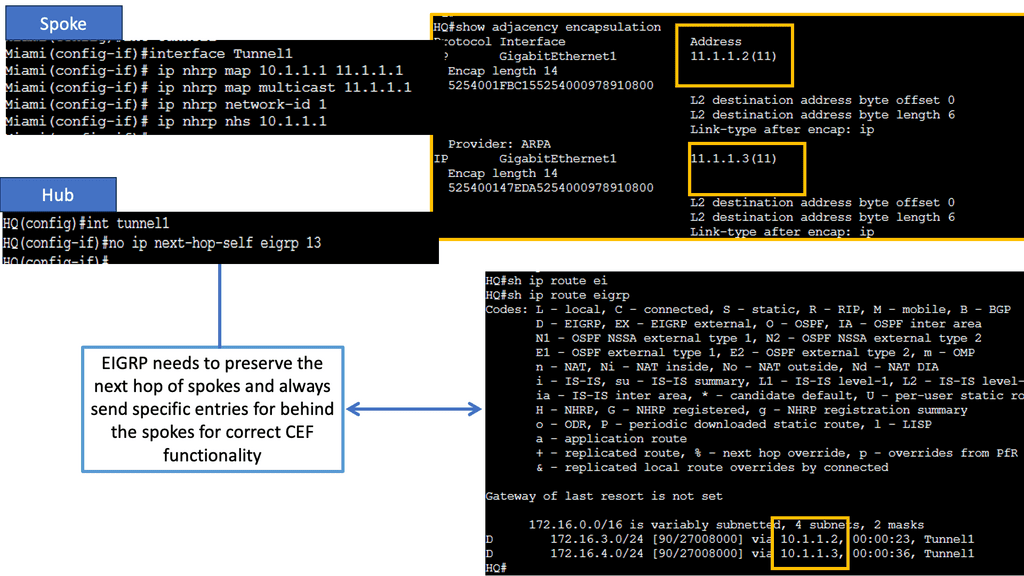

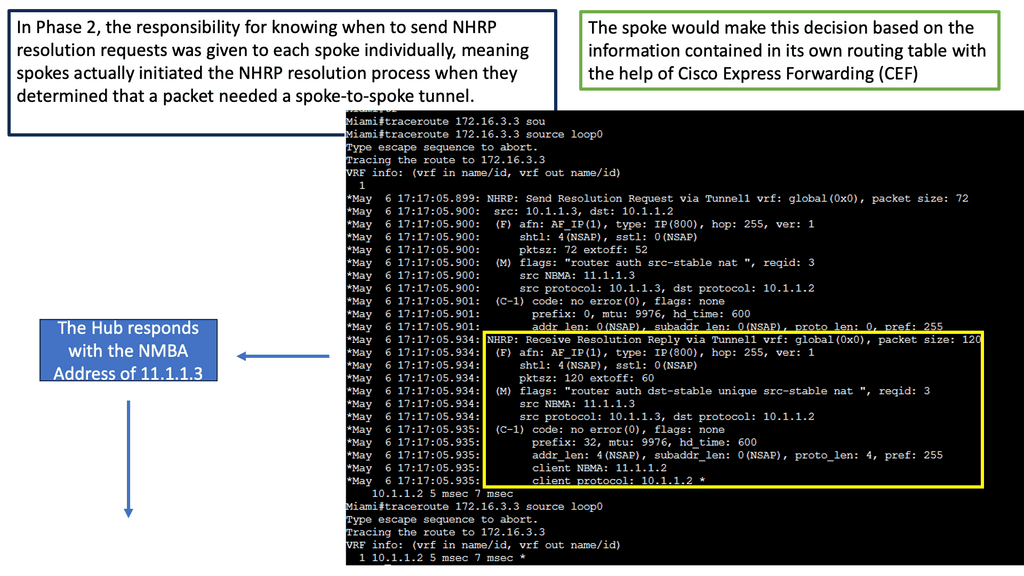

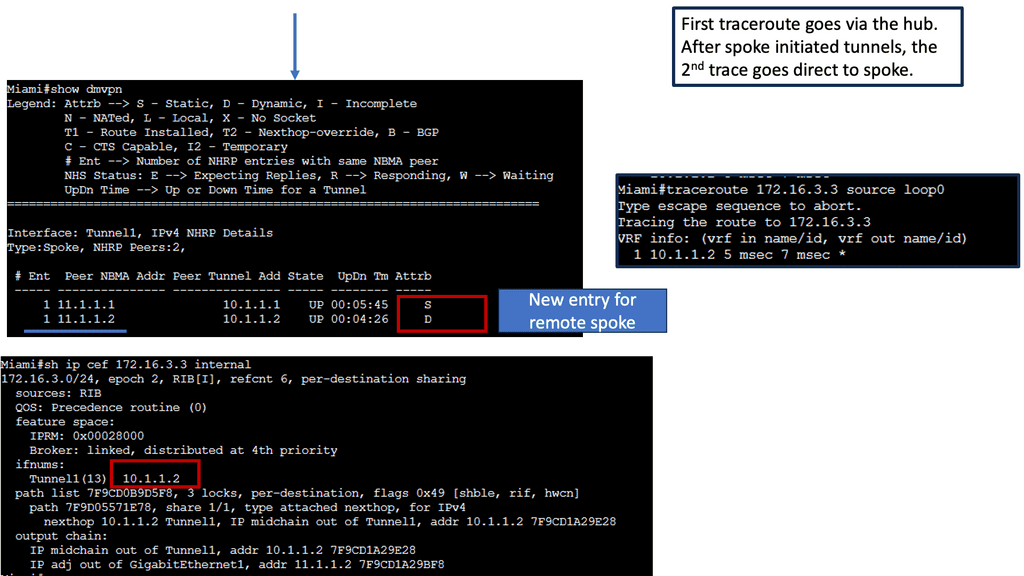

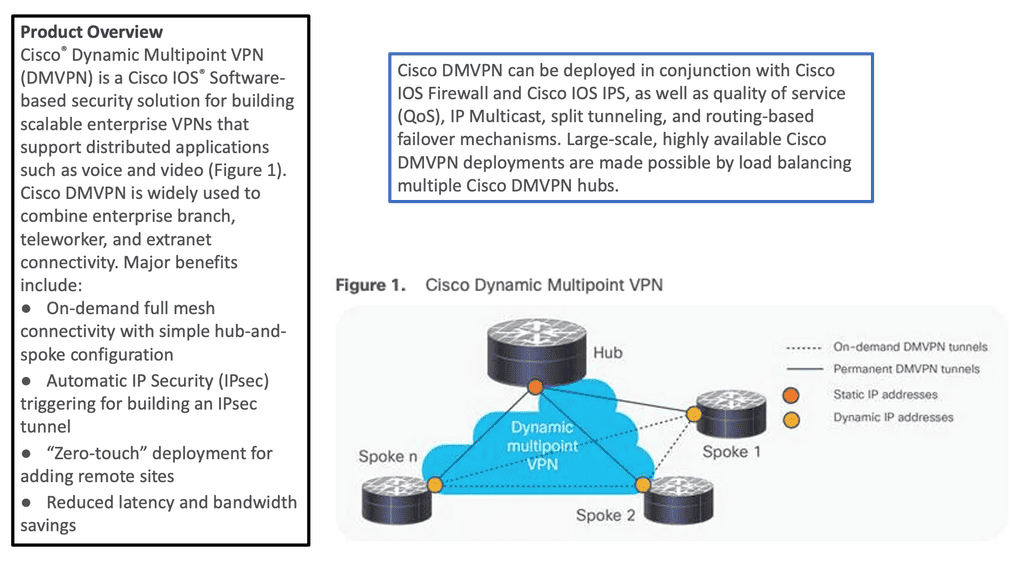

- DMVPN Phase 3

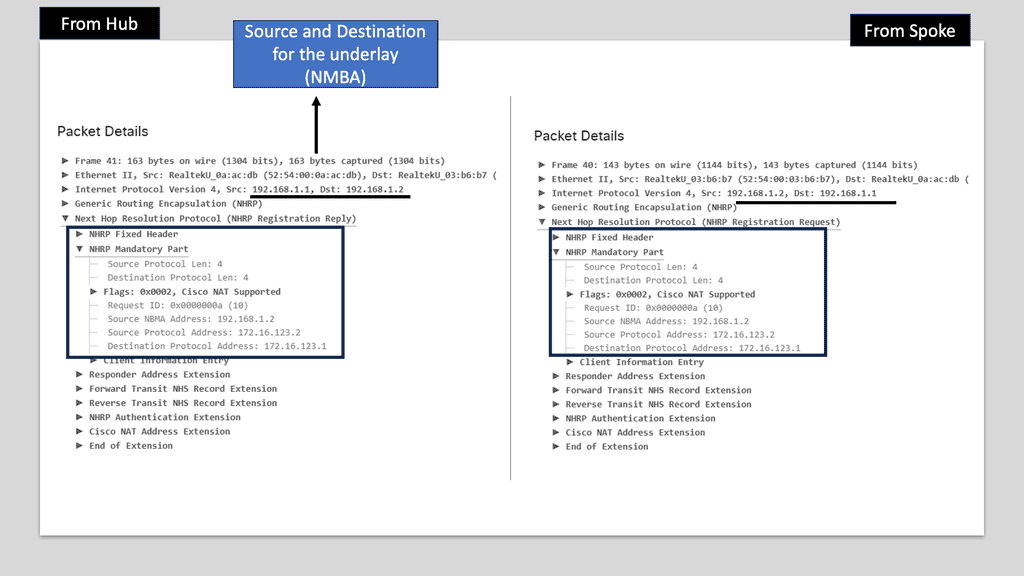

DMVPN Phase 3 is the latest iteration of the DMVPN technology, designed to address the limitations of its predecessors. It introduces significant improvements in scalability and routing protocol support. By utilizing multipoint GRE tunnels, typically protected with IPsec, DMVPN Phase 3 enables dynamic and secure communication between multiple sites.

One of DMVPN Phase 3’s key advantages is its scalability. The hub uses NHRP (Next Hop Resolution Protocol) redirect messages to tell a spoke that a more direct path exists, so spokes can build spoke-to-spoke tunnels on demand while the hub advertises only summarized routes. This makes it an ideal solution for large-scale deployments. Additionally, DMVPN Phase 3 supports a wide range of routing protocols, including OSPF, EIGRP, and BGP, providing network administrators with flexibility and ease of integration.

Multipoint GRE (mGRE) is an underlying technology that plays a crucial role in DMVPN’s functionality. It allows a single GRE tunnel interface to terminate tunnels to multiple peers, maximizing network resource utilization. By encapsulating packets in GRE headers, mGRE carries traffic between remote sites, and IPsec tunnel protection secures that communication path.

Redundancy can be achieved by configuring spokes to terminate on multiple headends at one or more hub locations. Cryptographic attributes are typically mapped to the tunnel initiated by the remote peer through IPsec tunnel protection.
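The role NHRP plays here can be shown with a toy model. This is a deliberate simplification and not the real protocol exchange: the hub answers resolution requests directly, there are no timers or redirect messages, and the class names and documentation-range addresses are assumptions for illustration.

```python
class Hub:
    """Toy next-hop server: maps each spoke's tunnel IP to its public (NBMA) IP."""

    def __init__(self, nbma_ip):
        self.nbma_ip = nbma_ip
        self.registrations = {}

    def register(self, tunnel_ip, nbma_ip):
        self.registrations[tunnel_ip] = nbma_ip

    def resolve(self, tunnel_ip):
        return self.registrations.get(tunnel_ip)


class Spoke:
    def __init__(self, tunnel_ip, nbma_ip, hub):
        self.tunnel_ip = tunnel_ip
        self.hub = hub
        self.shortcuts = {}  # learned mappings for direct spoke-to-spoke tunnels
        hub.register(tunnel_ip, nbma_ip)

    def next_hop(self, dest_tunnel_ip):
        """First packet goes via the hub; once resolved, later packets go direct."""
        if dest_tunnel_ip in self.shortcuts:
            return ("direct", self.shortcuts[dest_tunnel_ip])
        resolved = self.hub.resolve(dest_tunnel_ip)
        if resolved is not None:
            self.shortcuts[dest_tunnel_ip] = resolved
        return ("via-hub", self.hub.nbma_ip)


hub = Hub("192.0.2.1")
spoke_a = Spoke("10.0.0.2", "198.51.100.10", hub)
spoke_b = Spoke("10.0.0.3", "203.0.113.20", hub)

print(spoke_a.next_hop("10.0.0.3"))  # ('via-hub', '192.0.2.1')
print(spoke_a.next_hop("10.0.0.3"))  # ('direct', '203.0.113.20')
```

The point of the model is the second call: after one lookup, the spoke holds the peer's public address and no longer needs the hub in the data path.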

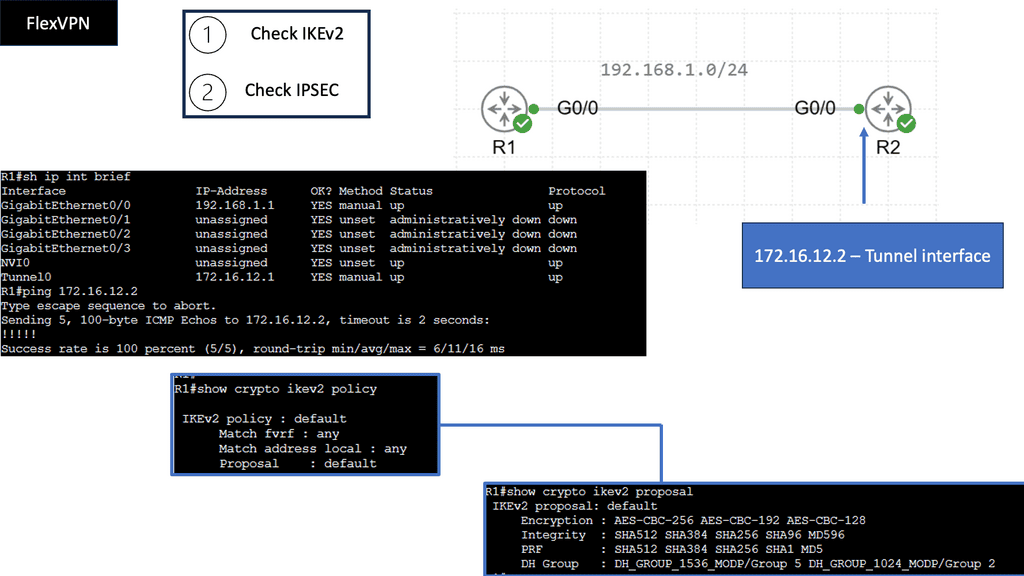

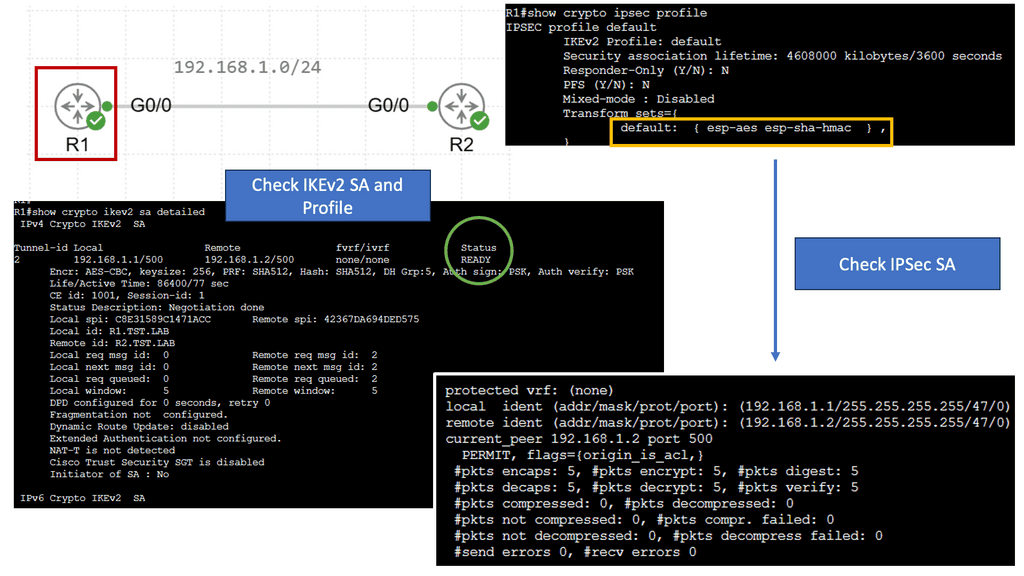

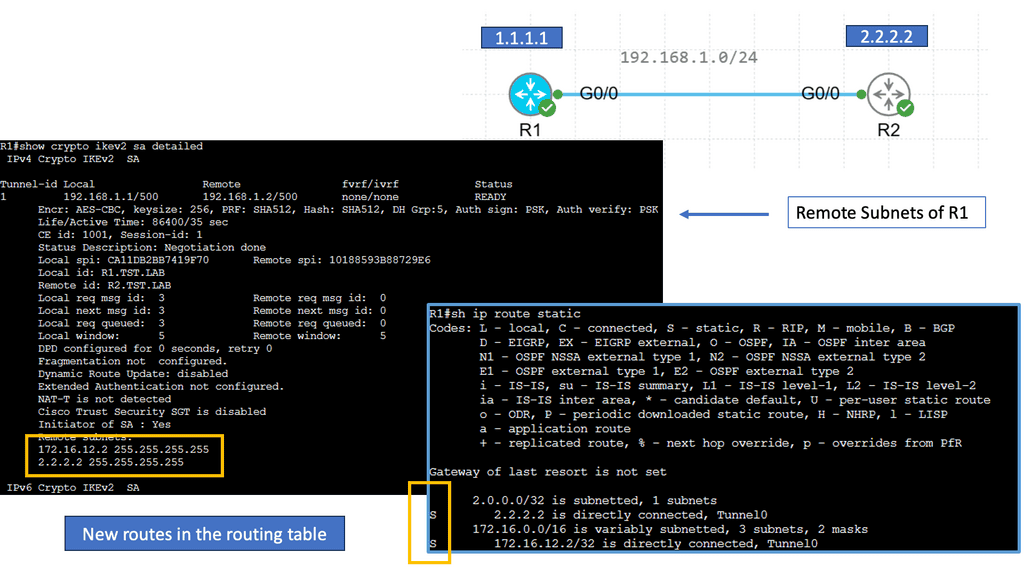

FlexVPN Site-to-Site Smart Defaults

FlexVPN Site-to-Site Smart Defaults is a feature designed to simplify and streamline the configuration process of site-to-site VPN connections. It provides a set of predefined default values for various parameters, eliminating the need for complex manual configurations. Network administrators can save time and effort by leveraging these smart defaults while ensuring robust security.

One key advantage of FlexVPN Site-to-Site Smart Defaults is its ease of use. Network administrators can quickly deploy secure site-to-site VPN connections without extensive knowledge of complex VPN configurations. The predefined defaults ensure that essential security features are automatically enabled, minimizing the risk of misconfigurations and vulnerabilities.

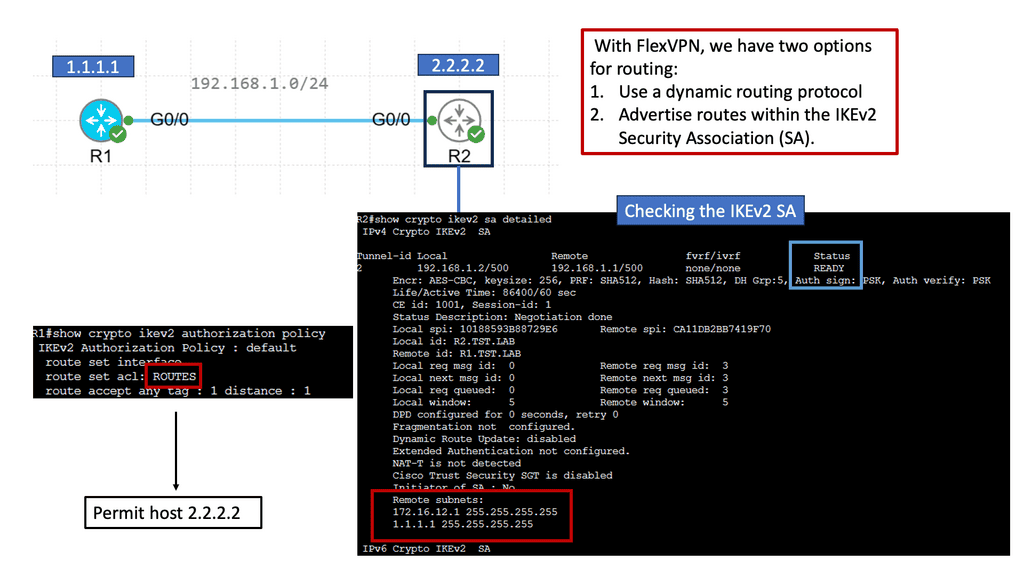

FlexVPN IKEv2 Routing

FlexVPN IKEv2 routing is a route-exchange capability that combines the flexibility of FlexVPN with the security of IKEv2. Peers can advertise routes to each other as part of the IKEv2 negotiation, which allows for dynamic routing between different sites and simplifies the management of complex network infrastructures. By combining cryptographic security with advanced routing techniques, FlexVPN IKEv2 routing ensures secure and efficient communication across networks.

FlexVPN IKEv2 routing offers numerous benefits, making it a preferred choice for network administrators. Firstly, it provides enhanced scalability, allowing networks to grow and adapt to changing requirements quickly. Additionally, the protocol ensures end-to-end security by encapsulating data within secure tunnels, protecting it from unauthorized access. Moreover, FlexVPN IKEv2 routing supports multipoint connectivity, enabling seamless communication between multiple sites.

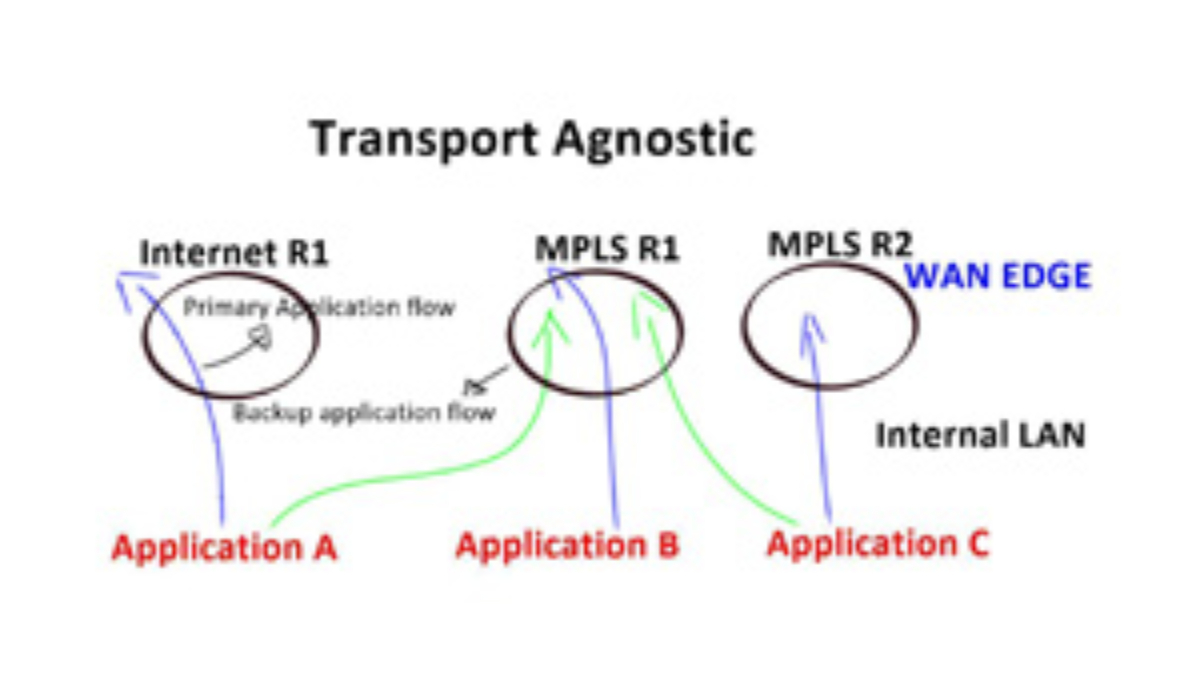

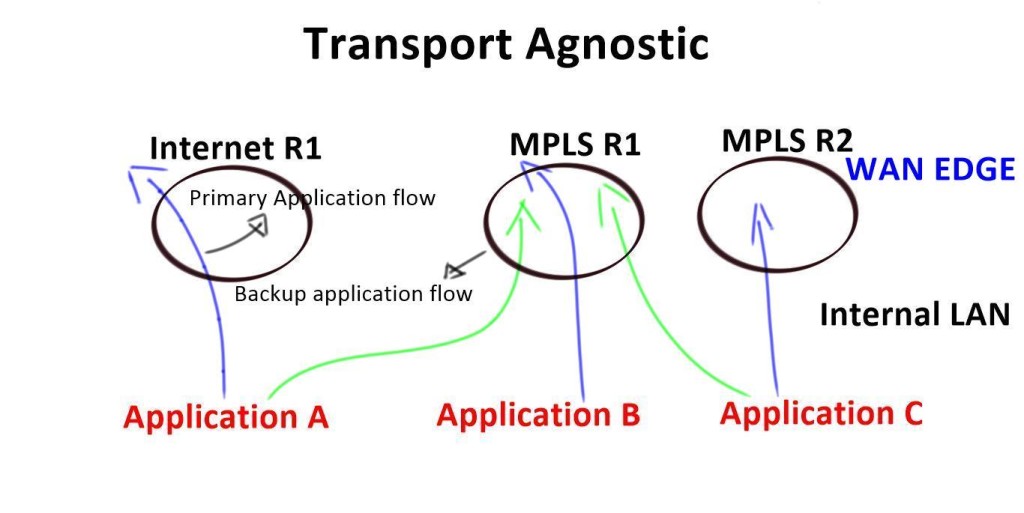

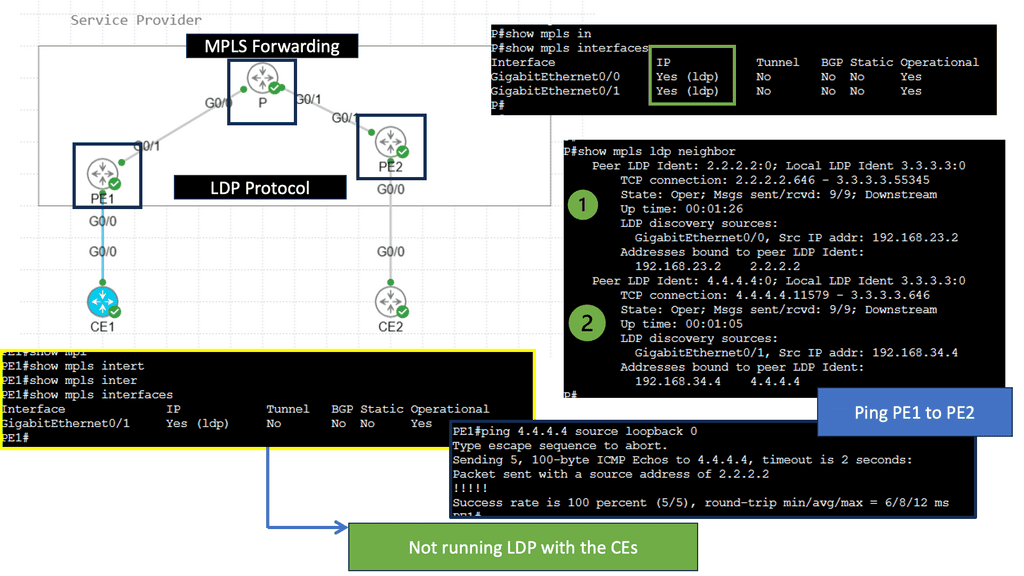

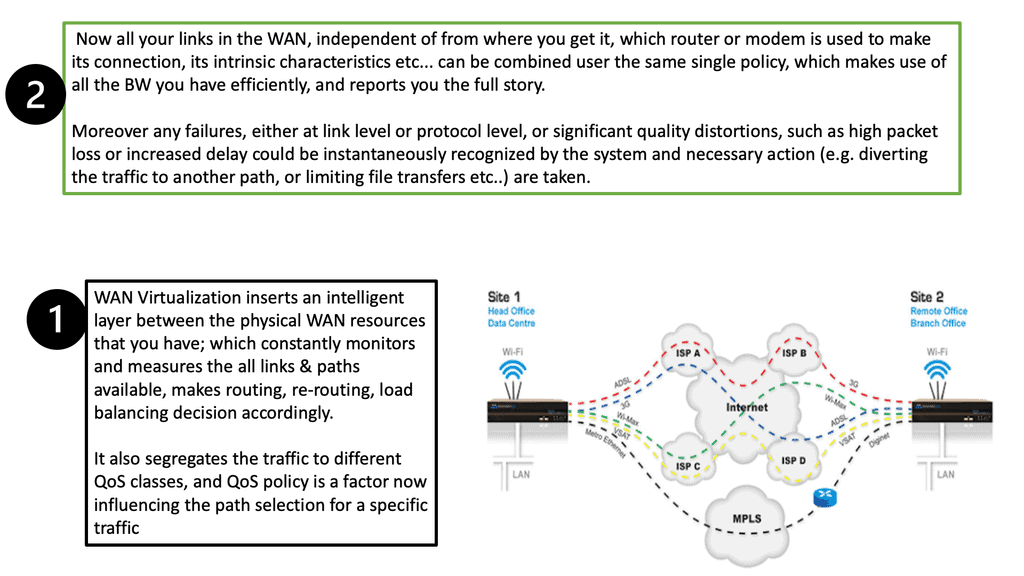

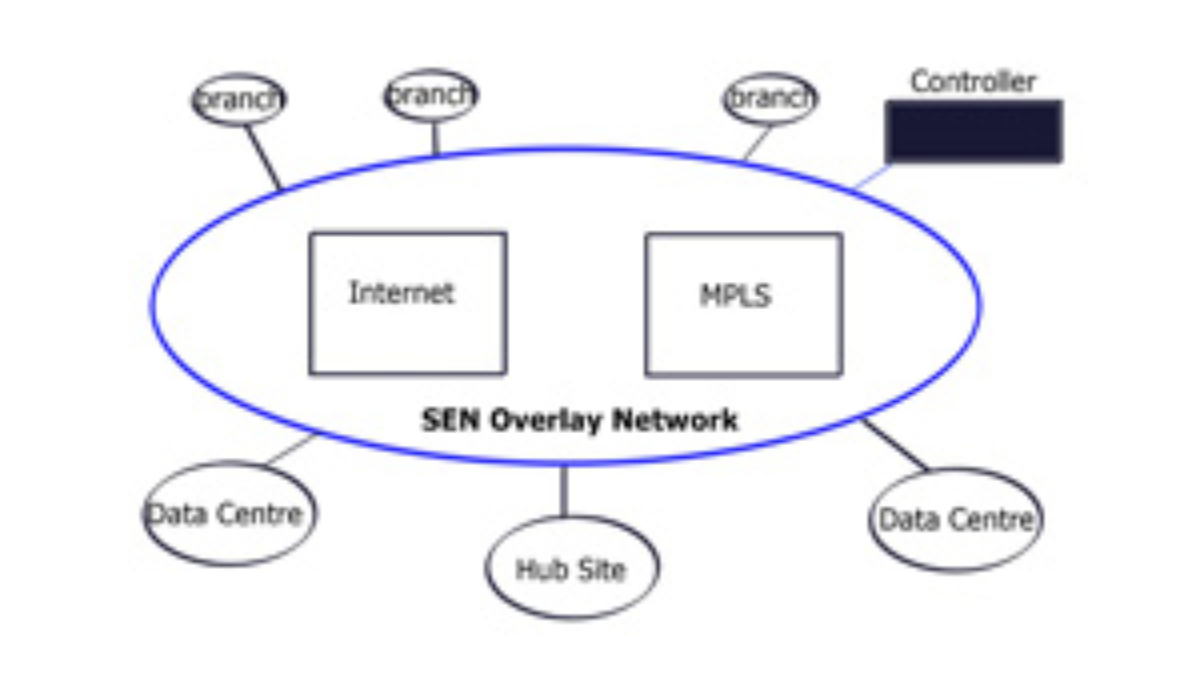

**Transport Fabric Technology**

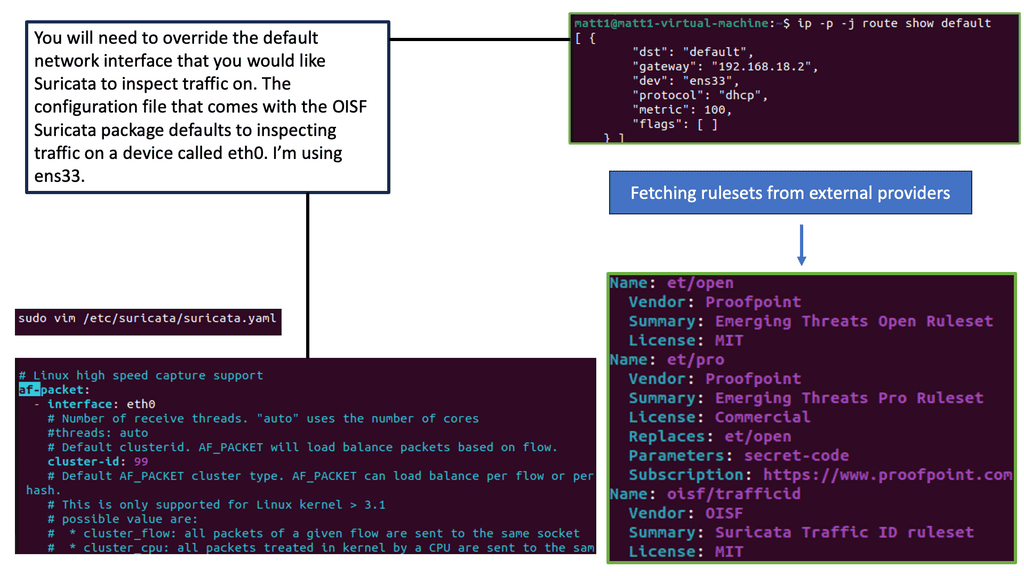

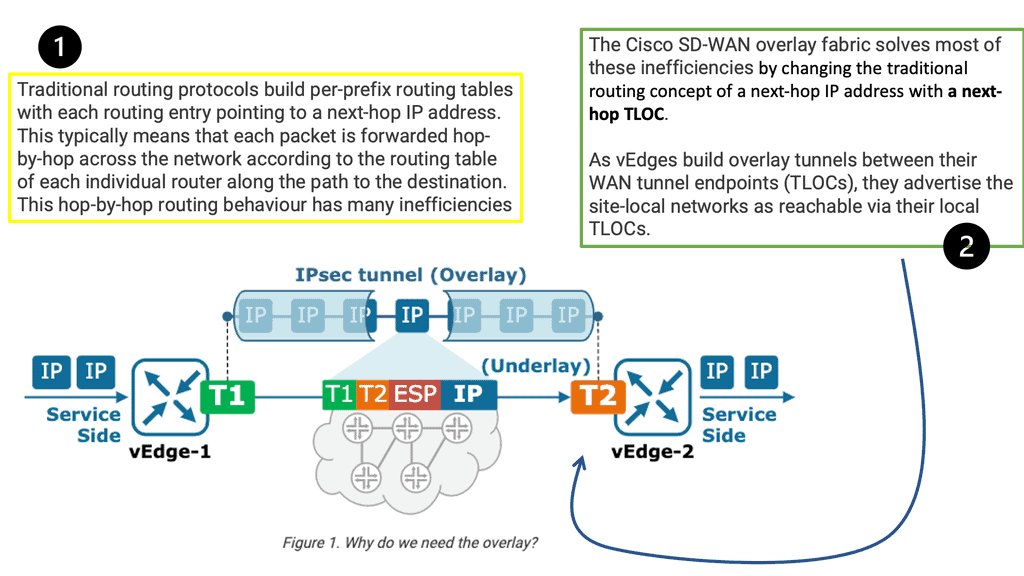

SD-WAN leverages transport-independent fabric technology to connect remote locations. This is achieved by using overlay technology. The SD-WAN overlay works by tunneling traffic over any transport between destinations within the WAN environment.

This gives true flexibility to route applications across any portion of the network, regardless of the circuit or transport type. This is the definition of transport independence. Having a fabric SD-WAN overlay network means that every remote site, regardless of physical or logical separation, is always a single hop away from another. DMVPN is based on a similar transport-agnostic design.
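Keeping every site one hop from every other has a cost in tunnel count if the tunnels are all permanent. A quick calculation, using illustrative site counts, shows how a full mesh grows compared with hub-and-spoke, and why fabrics tend to build tunnels dynamically or on demand.

```python
def hub_and_spoke_tunnels(sites: int) -> int:
    """One tunnel from every spoke to a single hub."""
    return max(sites - 1, 0)


def full_mesh_tunnels(sites: int) -> int:
    """One tunnel between every pair of sites: n * (n - 1) / 2."""
    return sites * (sites - 1) // 2


for sites in (5, 10, 50):
    print(sites, hub_and_spoke_tunnels(sites), full_mesh_tunnels(sites))
```

For 50 sites this gives 49 tunnels with a single hub versus 1,225 for a static full mesh.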

SD-WAN vs Traditional WAN

SD-WAN overlays offer several advantages over traditional WANs, including improved scalability, reduced complexity, and better control over traffic flows. They also provide better security, as each site is protected by its dedicated security protocols. Additionally, SD-WAN overlays can improve application performance and reliability and reduce latency.

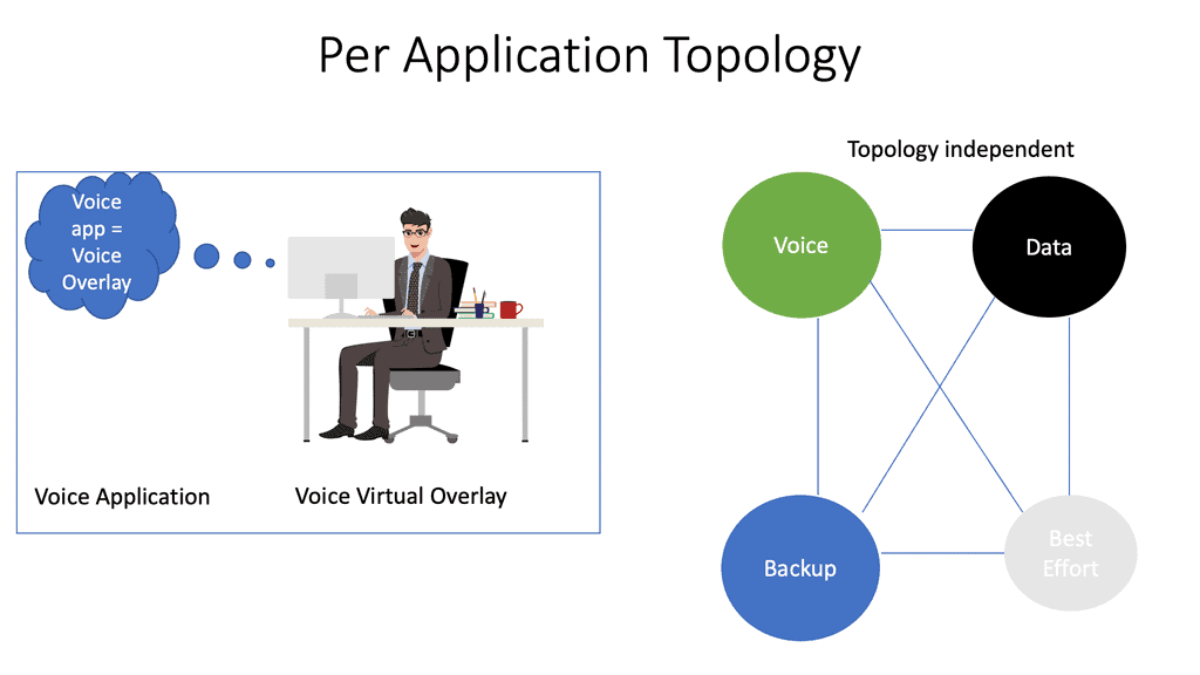

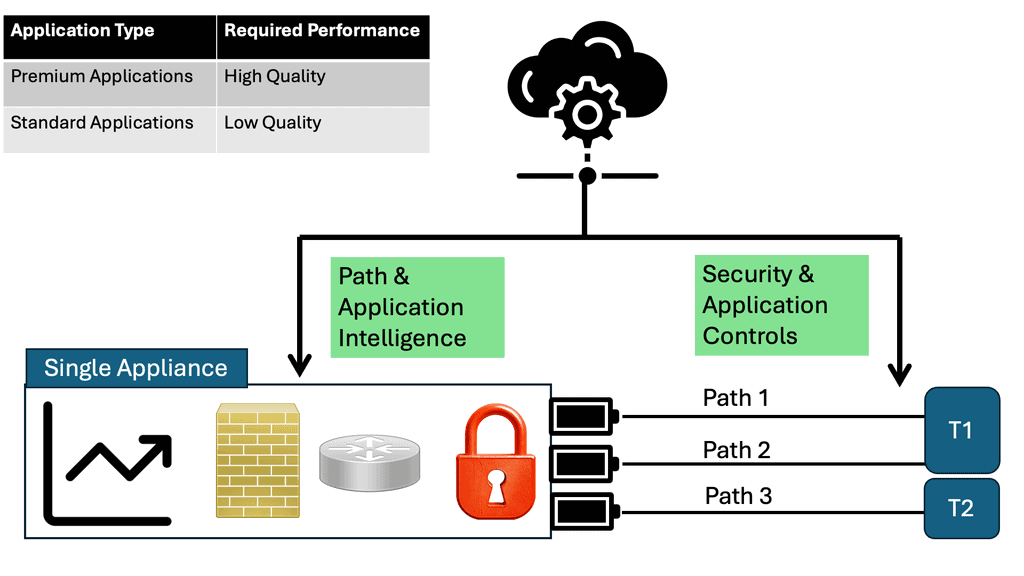

Key Point: SD-WAN abstracts the underlay

With SD-WAN, the virtual WAN overlays are abstracted from the physical device’s underlay. Therefore, the virtual WAN overlays can take on topologies independent of each other without being pinned to the configuration of the underlay network. SD-WAN changes how you map application requirements to the network, allowing for the creation of independent topologies per application.

For example, mission-critical applications may use expensive leased lines, while lower-priority applications can use inexpensive best-effort Internet links. This can all change on the fly if specific performance metrics are unmet.

Previously, the application had to match and “fit” into the network with the legacy WAN, but with an SD-WAN, the application now controls the network topology. Multiple independent topologies per application are a crucial driver for SD-WAN.

Example Technology: PTP GRE

Point-to-point GRE (Generic Routing Encapsulation) is a protocol that encapsulates and transports various network layer protocols over an IP network. By providing a virtual point-to-point link, it enables efficient communication between remote networks, and it can be paired with IPsec when the traffic must be encrypted. Point-to-point GRE offers a flexible and scalable solution for organizations seeking to establish connections over public or private networks.

SD-WAN combines transports, SDWAN overlay, and underlay


Look at it this way. With an SD-WAN topology, there are different levels of networking: an underlay network, which is the physical infrastructure, and an SD-WAN overlay network. The physical infrastructure consists of the routers, switches, and WAN transports; the overlay network consists of the virtual WAN overlays.

The SDWAN overlay presents a different network to the application. For example, the voice overlay will see only the voice overlay. The logical virtual pipe the overlay creates and the application sees differs from the underlay.

An SD-WAN overlay network is a virtual or logical network created on top of an existing physical network. The early Internet, which was built on top of the circuit-switched telephone network, is a classic example of an overlay network. An overlay network is any virtual layer on top of physical network infrastructure.

Consider an SDWAN overlay as a flexible tag.

This may be as simple as a virtual local area network (VLAN) but typically refers to more complex virtual layers from an SDN or an SD-WAN. Think of an SD-WAN overlay as a tag so that building the overlays is not expensive or time-consuming. In addition, you don’t need to buy physical equipment for each overlay as the overlay is virtualized and in the software.

Similar to software-defined networking (SDN), the critical part is that SD-WAN works by abstraction. All the complexities are abstracted into application overlays. For example, application type A can use this SDWAN overlay, and application type B can use that SDWAN overlay.

IP and port number, orchestrations, and end-to-end

Recent application requirements drive a new type of WAN that more accurately supports today’s environment with an additional layer of policy management. The world has moved away from relying on IP addresses and port numbers alone to identify applications and make the correct forwarding decision.

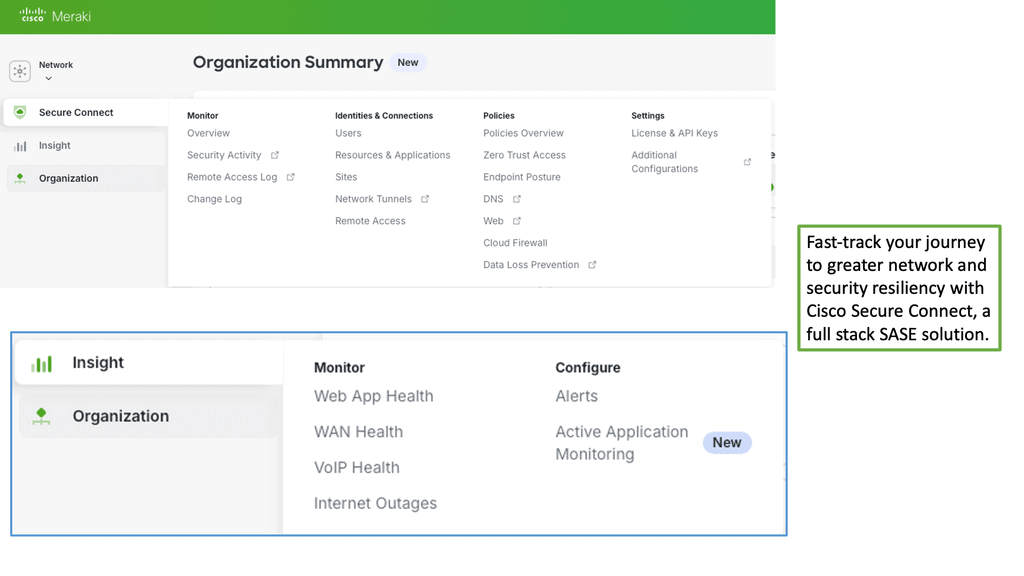

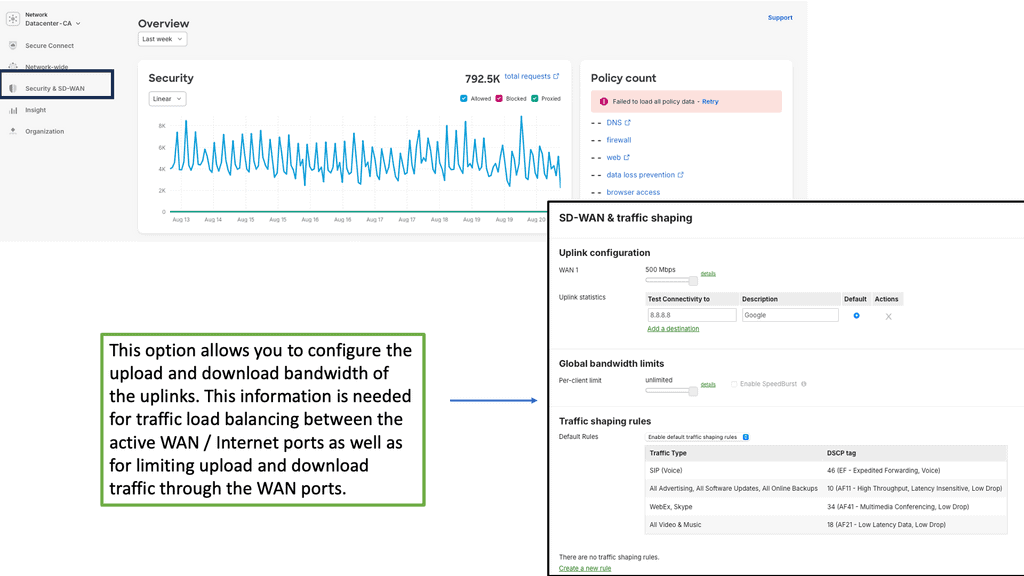

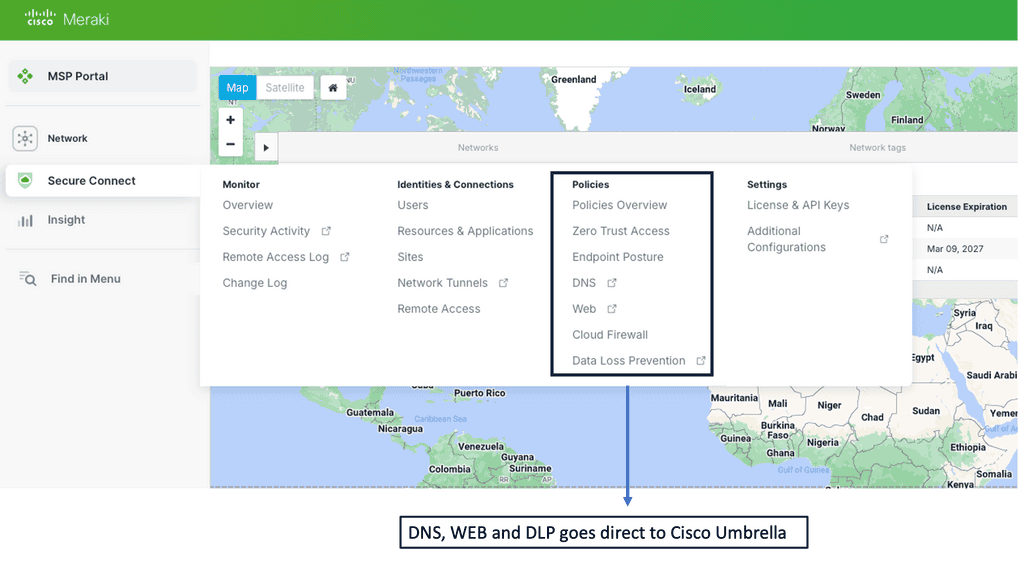

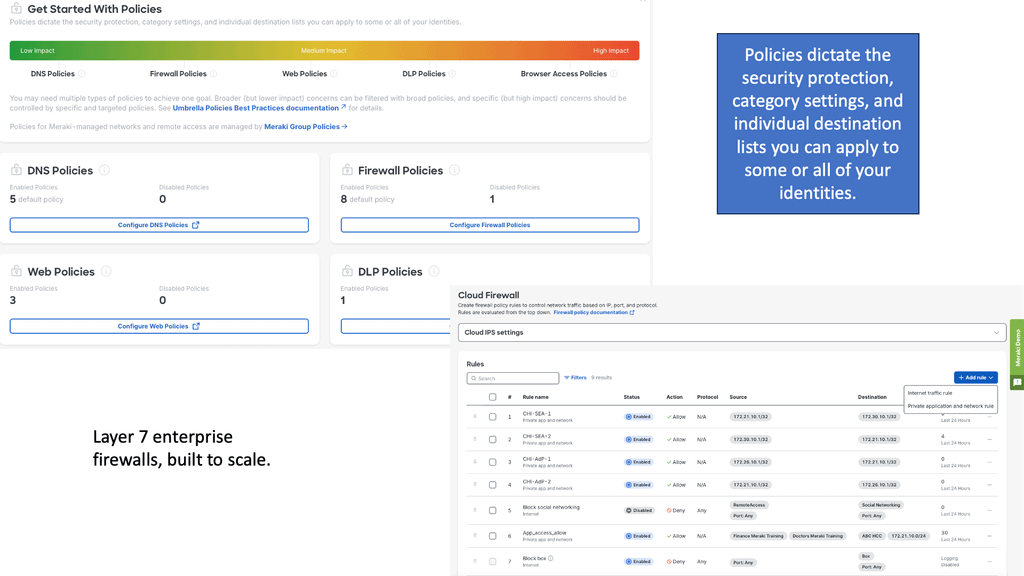

Example Product: Cisco Meraki

**Section 1: Simplified Network Management**

One of the standout features of the Cisco Meraki platform is its user-friendly interface. Gone are the days of complex configurations and cumbersome setups. With Meraki, IT administrators can manage their entire network from a single, intuitive dashboard. This centralized management capability allows for real-time monitoring, troubleshooting, and updates, all from the cloud. The simplicity of the platform means that even those with limited technical expertise can effectively manage and optimize their network.

**Section 2: Robust Security Features**

In today’s digital landscape, security is paramount. Cisco Meraki understands this and has built comprehensive security features into its platform. From advanced malware protection to intrusion prevention and content filtering, Meraki offers a multi-layered approach to cybersecurity. The platform also includes built-in security analytics, providing IT teams with valuable insights to proactively address potential threats. This level of security ensures that your network remains protected against both internal and external vulnerabilities.

**Section 3: Scalability and Flexibility**

Another significant advantage of the Cisco Meraki platform is its scalability. As your business grows, so too can your network. Meraki’s cloud-based nature allows for seamless integration of new devices and locations without the need for extensive hardware upgrades. This flexibility makes it an ideal solution for businesses of all sizes, from small startups to large multinational corporations. The platform’s ability to adapt to changing needs ensures that it can grow alongside your business.

**Section 4: Comprehensive Support and Training**

Cisco Meraki doesn’t just provide a platform; it offers a complete ecosystem of support and training. From comprehensive documentation and online tutorials to live webinars and a dedicated support team, Meraki ensures that you have all the resources you need to make the most of its platform. This commitment to customer success means that you’re never alone in your network management journey.

Challenges to Existing WAN

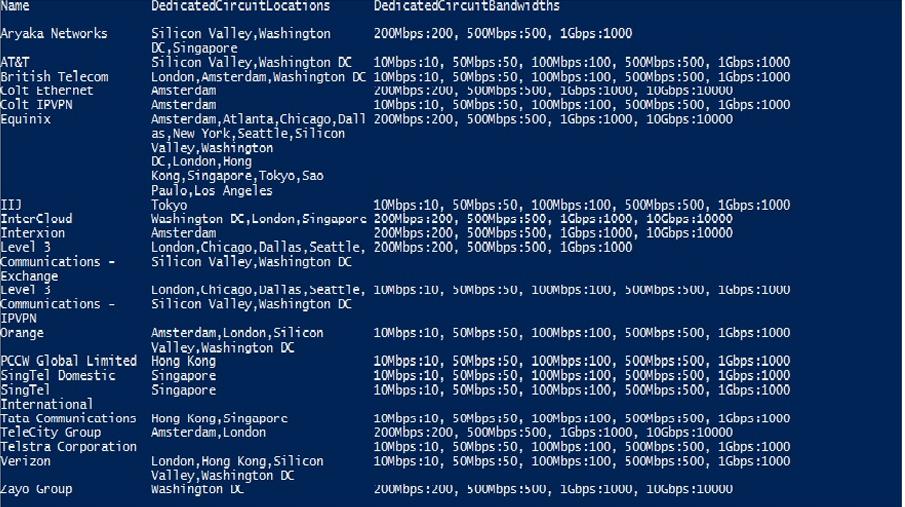

Traditional WAN architectures consist of private MPLS links complemented with Internet links as a backup. Standard templates in most Service Provider environments are usually broken down into Bronze, Silver, and Gold SLAs.

However, these types of SLA do not fit all geographies and often should be fine-tuned per location. Capacity, reliability, analytics, and security are all central parts of the WAN that should be available on demand. Traditional infrastructure is very static, and bandwidth upgrades and service changes require considerable processing time, so the WAN lacks agility when bringing up new sites.

It’s not agile enough, and nothing can be performed on the fly to meet the growing business needs. In addition, the cost per bit for the private connection is high, which is problematic for bandwidth-intensive applications, especially when the upgrades are too costly and can’t be afforded.

A distributed world of dissolving perimeters

Perimeters are dissolving, and the world is becoming distributed. Applications require a WAN to support distributed environments along with flexible network points. Centralized-only designs result in suboptimal traffic engineering and increased latency. Increased latency disrupts the application performance, and only a particular type of content can be put into a Content Delivery Network (CDN). CDN cannot be used for everything.

Traditional WANs are operationally complex, and different people are likely to perform different network and security functions. For example, you may have a DMVPN, Security, and Networking specialist. Some wear all hats, but they are few and far between. Different hats have different ideas, and agreeing on a minor network change could take ages.

The World of SD-WAN Static Network-Based

SD-WAN replaces traditional WAN routers and is agnostic to the underlying transport technology. You can use various link types, such as MPLS, LTE, and broadband, all combined. Based on policies generated by the business, SD-WAN enables load sharing across different WAN connections, which supports today’s application environment more efficiently.

It pulls policy and intelligence out of the network and places them into an end-to-end solution orchestrated by a single pane of glass. SD-WAN is not just about tunnels. It consists of components that work together to simplify network operations while meeting all bandwidth and resilience requirements.

Centralized network points are no longer adequate; we need network points positioned where they make the most sense for the application and user. Backhauling traffic to a central data center is illogical, and connecting remote sites to a SaaS or IaaS model over the public Internet is far more efficient. The majority of enterprises prefer to connect remote sites directly to cloud services. So why not let them do this in the best possible way?

A new style of WAN and SD-WAN

We require a new WAN style and a shift from a network-based approach to an application-based approach. The new WAN no longer looks solely at the network to forward packets. Instead, it looks at the business application and decides how to optimize it with the correct forwarding behavior. This new style of forwarding is problematic with traditional WAN architecture.

Making business logic decisions with IP and port number information is challenging. Standard routing works packet by packet and can only see part of the picture. Routers have routing tables and perform forwarding but essentially operate on their own little island, losing out on the holistic view required for accurate end-to-end decision-making. An additional layer of information is needed.

A controller-based approach offers the necessary holistic view. We can now make decisions based on global information, not solely on a path-by-path basis. Getting all the routing information and compiling it into a dashboard to make a decision is much more efficient than making local decisions that only see parts of the network.
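The difference between local and global decisions can be shown on a tiny topology. In this sketch the node names and link costs are invented: a hop-by-hop greedy choice picks the cheapest next link, while a controller with the full map runs a shortest-path search over the whole graph.

```python
import heapq

# Hypothetical topology: node -> {neighbor: link cost}
LINKS = {
    "branch": {"isp-a": 1, "isp-b": 4},
    "isp-a": {"dc": 10},
    "isp-b": {"dc": 1},
    "dc": {},
}


def greedy_path(links, src, dst):
    """Local view: at each node, take the cheapest outgoing link."""
    path, cost, node = [src], 0, src
    while node != dst:
        options = {n: c for n, c in links[node].items() if n not in path}
        if not options:
            raise ValueError("dead end")
        nxt = min(options, key=options.get)
        cost += options[nxt]
        path.append(nxt)
        node = nxt
    return path, cost


def global_path(links, src, dst):
    """Global view: Dijkstra's algorithm over the full topology."""
    queue = [(0, [src])]
    settled = set()
    while queue:
        cost, path = heapq.heappop(queue)
        node = path[-1]
        if node == dst:
            return path, cost
        if node in settled:
            continue
        settled.add(node)
        for nxt, link_cost in links[node].items():
            if nxt not in settled:
                heapq.heappush(queue, (cost + link_cost, path + [nxt]))
    raise ValueError("no path")


print(greedy_path(LINKS, "branch", "dc"))  # (['branch', 'isp-a', 'dc'], 11)
print(global_path(LINKS, "branch", "dc"))  # (['branch', 'isp-b', 'dc'], 5)
```

The greedy choice looks better at the first hop and ends up more than twice as expensive end to end, which is the kind of outcome a global view avoids.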

From a customer’s viewpoint, what would the perfect WAN look like if you could roll back the clock and start again?


Introducing the SD-WAN Overlay

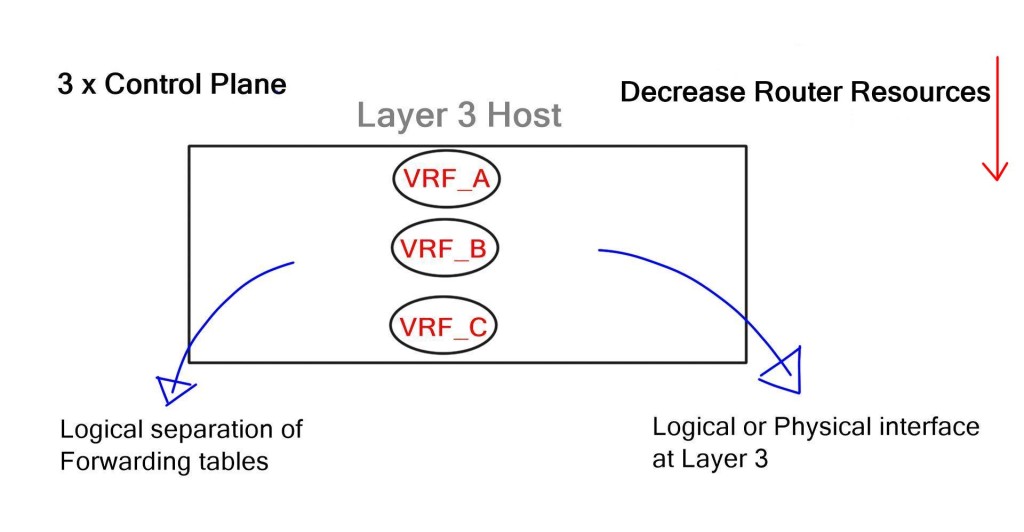

SD-WAN decouples (separates) the WAN infrastructure, whether physical or virtual, from its control plane mechanism and allows applications or application groups to be placed into virtual WAN overlays. The separation will enable us to bring many enhancements and improvements to a WAN that has had little innovation in the past compared to the rest of the infrastructure, such as server and storage modules.

With server virtualization, several virtual machines create application isolation on a physical server. Applications placed in VMs operate in isolation, yet the VMs are installed on the same physical hosts.

Consider SD-WAN to operate with similar principles. Each application or group can operate independently when traversing the WAN to endpoints in the cloud or other remote sites. These applications are placed into a virtual SDWAN overlay.

Overlay Networking

Overlay networking is an approach to computer networking that involves building a layer of virtual networks on top of an existing physical network. This approach improves the underlying infrastructure’s scalability, performance, and security. It also allows for the creation of virtual networks that span multiple physical networks, allowing for greater flexibility in traffic routes.

**Virtualization**

At the core of overlay networking is the concept of virtualization. This involves separating the physical infrastructure from the virtual networks, allowing greater control over allocating resources. This separation also allows the creation of virtual network segments that span multiple physical networks. This provides an efficient way to route traffic and the ability to provide additional security and privacy measures.

**Underlay network**

A network underlay is a physical infrastructure that provides the foundation for a network overlay, a logical abstraction of the underlying physical network. The network underlay provides the physical transport of data between nodes, while the overlay provides logical connectivity.

The network underlay can comprise various technologies, such as Ethernet, Wi-Fi, cellular, satellite, and fiber optics. It is the foundation of a network overlay and essential for its proper functioning. It provides data transport and physical connections between nodes. It also provides the physical elements that make up the infrastructure, such as routers, switches, and firewalls.
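The relationship between the two layers can be sketched with a small graph. The node names below are hypothetical; the overlay sees one logical link between two edge devices, while the underlay carries that traffic across several physical hops found here with a breadth-first search.

```python
from collections import deque

# Hypothetical underlay: physical adjacency between devices.
UNDERLAY = {
    "branch-edge": ["isp-1"],
    "isp-1": ["branch-edge", "core"],
    "core": ["isp-1", "isp-2"],
    "isp-2": ["core", "dc-edge"],
    "dc-edge": ["isp-2"],
}

# The overlay: one logical tunnel between the two edge devices.
OVERLAY_TUNNELS = [("branch-edge", "dc-edge")]


def underlay_path(graph, src, dst):
    """Breadth-first search for the shortest physical path between two nodes."""
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for neighbor in graph[path[-1]]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


for a, b in OVERLAY_TUNNELS:
    path = underlay_path(UNDERLAY, a, b)
    print(f"overlay: {a} -> {b} (1 logical hop)")
    print(f"underlay: {' -> '.join(path)} ({len(path) - 1} physical hops)")
```

Applications using the tunnel only ever see the single logical hop; the four physical hops underneath can change without the overlay topology changing.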

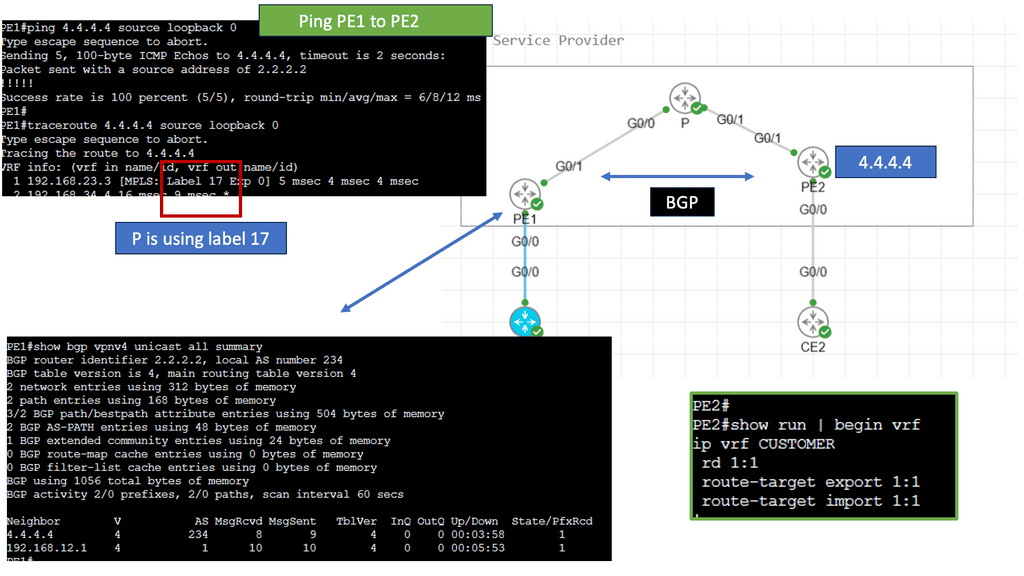

Example: DMVPN over IPSec

Understanding DMVPN

DMVPN is a dynamic VPN technology that simplifies establishing secure connections between multiple sites. Unlike traditional VPNs, which require point-to-point tunnels, DMVPN uses a hub-and-spoke architecture, allowing any-to-any connectivity. This flexibility enables organizations to quickly scale their networks and accommodate dynamic changes in their infrastructure.

On the other hand, IPsec provides a robust framework for securing IP communications. It offers encryption, authentication, and integrity mechanisms, ensuring that data transmitted over the network remains confidential and protected against unauthorized access. IPsec is a widely adopted standard that is compatible with various network devices and software, making it an ideal choice for securing DMVPN connections.

The combination of DMVPN and IPsec brings numerous benefits to organizations. Firstly, DMVPN’s dynamic nature allows for easy scalability and improved network resiliency. New sites can be added seamlessly without the need for manual configuration changes. Additionally, DMVPN over IPsec provides strong encryption, ensuring the confidentiality of sensitive data. Moreover, DMVPN’s any-to-any connectivity optimizes network traffic flow, enhancing performance and reducing latency.

Key Challenges: Driving Overlay Networking & SD-WAN

**Challenge: We need more bandwidth**

Modern businesses demand more bandwidth than ever to connect their data, applications, and services. As a result, we have many things to consider with the WAN, such as regulations, security, visibility, branch, data center sites, remote workers, internet access, cloud, and traffic prioritization. These factors are all driving the need for SD-WAN.

The concepts and design principles of creating a wide area network (WAN) to provide resilient and optimal transit between endpoints have continuously evolved. However, the driver behind building a better WAN is to support applications that demand performance and resiliency.

**Challenge: Suboptimal traffic flow**

The optimal route is the fastest or most efficient and is therefore preferred for transferring data. Sub-optimal routes are slower and hence not selected. Centralized-only designs result in suboptimal traffic flow and increased latency, which degrades application performance.

A key point to note is that traditional networks focus on centralized points in the network that all applications, network, and security services must adhere to. These network points are fixed and cannot be changed.

**Challenge: Network point intelligence**

However, the network should evolve to have network points positioned where it makes the most sense for the application and user, not based on a previously validated design for a different application era. For example, many branch sites do not have local Internet breakouts.

So, for this reason, we backhauled internet-bound traffic to secure, centralized internet portals at the H.Q. site. As a result, we sacrificed the performance of Internet and cloud applications. Designs that place the H.Q. site at the center of connectivity requirements inhibit the dynamic access requirements for digital business.

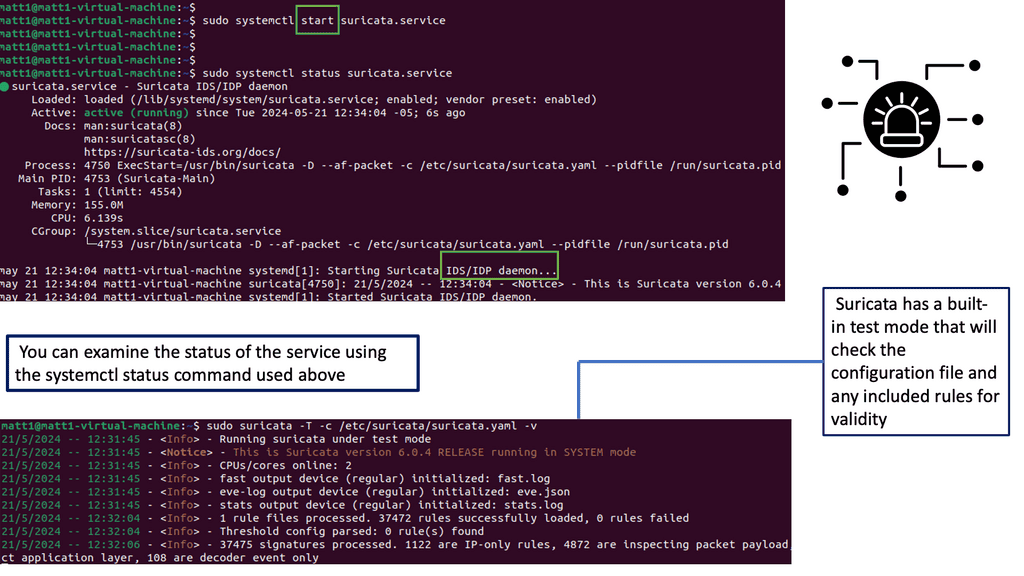

**Challenge: Hub and spoke drawbacks**

Simple hub-and-spoke networks are sub-optimal because traffic always has to go to the hub at the center and then out to the machine you need, rather than going directly to whichever node you need. As a result, the hub becomes a bottleneck in the network as all data must go through it. With a more scattered network using multiple hubs and switches, a less congested and more optimal route could be found between machines.

The Fabric:

The word fabric comes from the fact that there are many paths to move from one server to another to ease balance and traffic distribution. SDN aims to centralize the order that enables the distribution of the flows over all the fabric paths. Then, we have an SDN controller device. The SDN controller can also control several fabrics simultaneously, managing intra and inter-datacenter flows.

SD-WAN is used to control and manage a company’s multiple WANs. There are different types of WAN transport: Internet, MPLS, LTE, DSL, fiber, wired network, circuit link, etc. SD-WAN uses SDN technology to control the entire environment. As in SDN, the data plane and control plane are separated. A centralized controller is added to manage flows, routing or switching policies, packet priority, network policies, etc. SD-WAN technology is based on an overlay, meaning a virtual network built on top of the underlying networks.

Centralized logic:

In a traditional network, the transport functions and the control layer both reside on each device. This is why any configuration or change must be done box by box. Configuration was carried out manually or, at most, with an Ansible script. SD-WAN brings Software-Defined Networking (SDN) concepts to the enterprise branch WAN.

Software-defined networking (SDN) is an architecture, whereas SD-WAN is a technology that can be purchased and is built on SDN’s foundational concepts. SD-WAN’s centralized logic stems from SDN. SDN separates the control plane from the data plane and uses a central controller to make intelligent decisions, similar to the design that most SD-WAN vendors use.

A holistic view:

The controller and the SD-WAN overlay have a holistic view. The controller supports central policy management, enabling network-wide policy definitions and traffic visibility. The SD-WAN edge devices implement the data plane. The data plane is where simple forwarding occurs, and the control plane, which is separate from the data plane, sets up all the controls the data plane needs to forward.

Like SDN, the SD-WAN overlay abstracts network hardware into a control plane with multiple data planes to make up one large WAN fabric. As the control layer is abstracted and decoupled from the physical hardware and runs in software, services can be virtualized and delivered from a central location to any point on the network.

SD-WAN Overlay Features

SD-WAN Overlay Feature 1: Combining the transports:

At its core, SD-WAN shapes and steers application traffic across multiple WAN transports. Building on the concept of link bonding, which combines multiple transports and transport types, the SD-WAN overlay improves on the idea by moving the functionality up the stack. First, SD-WAN aggregates last-mile services and presents them to the application as a single pipe. SD-WAN is also transport agnostic: because it works by abstraction, it does not care which transport links you have. Maybe you have MPLS, private Internet, or LTE. It can combine all of these or use them separately.
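As a rough illustration of the "single pipe" idea, the sketch below sums the capacity of several transports and spreads a traffic demand across them in proportion to capacity. The link names and bandwidth figures are assumptions, and real products use more sophisticated per-packet or per-flow schedulers.

```python
# Hypothetical transports and their usable bandwidth in Mbps (illustrative values).
links = {"mpls": 20, "broadband": 100, "lte": 30}


def aggregate_bandwidth(links):
    """The 'one big pipe' the application sees: the sum of all transports."""
    return sum(links.values())


def split_traffic(demand_mbps, links):
    """Spread a demand across links in proportion to each link's capacity."""
    total = aggregate_bandwidth(links)
    return {name: demand_mbps * capacity / total for name, capacity in links.items()}


print(aggregate_bandwidth(links))
print(split_traffic(75, links))
```

Proportional splitting is only one possible policy; the same structure could weight links by cost or measured quality instead.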

SD-WAN Overlay Feature 2: location:

From a central location, SD-WAN pulls all of these WAN resources together, creating one large WAN fabric that allows administrators to slice up the WAN to match the application requirements that sit on top. Different applications traverse the WAN, so we need the WAN to react differently. For example, if you’re running a call center, you want low delay and high availability for voice traffic. You may wish to send this traffic over a path with an excellent service-level agreement.

SD-WAN Overlay Feature 3: steering:

Traffic steering may also be required: for example, moving voice traffic to another path if the first path is experiencing high latency. If it’s not possible to steer traffic automatically to a better-performing link, SD-WAN can run a series of path remediation techniques to try to improve performance. File transfer differs from real-time voice: you can tolerate more delay but need more bandwidth. Here, you may want to use a combination of WAN transports (such as customer broadband and LTE) to achieve higher aggregate bandwidth.

This also allows you to automatically steer traffic over different WAN transports when there is a degradation on one link. With the SD-WAN overlay, we must start thinking about paths, not links.
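The difference between steering voice and steering bulk transfers can be sketched as follows. The `Path` fields, thresholds, and measurements are all assumptions chosen for illustration; a real SD-WAN edge would take them from live probes and configured policy.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str
    latency_ms: float
    loss_pct: float
    bandwidth_mbps: float


def pick_voice_path(paths, max_latency_ms=150.0, max_loss_pct=1.0):
    """Return the lowest-latency path that meets the (assumed) voice thresholds.

    Returns None when no path qualifies, which is where path remediation
    would have to take over.
    """
    ok = [p for p in paths if p.latency_ms <= max_latency_ms and p.loss_pct <= max_loss_pct]
    return min(ok, key=lambda p: p.latency_ms) if ok else None


def pick_bulk_paths(paths, max_loss_pct=5.0):
    """Bulk transfers tolerate delay, so use every path with acceptable loss."""
    return [p for p in paths if p.loss_pct <= max_loss_pct]


paths = [
    Path("mpls", 180.0, 0.1, 10),        # currently suffering high latency
    Path("broadband", 35.0, 0.5, 100),
    Path("lte", 60.0, 0.8, 30),
]

voice = pick_voice_path(paths)
bulk = pick_bulk_paths(paths)
print("voice uses:", voice.name)
print("bulk aggregate Mbps:", sum(p.bandwidth_mbps for p in bulk))
```

Voice is moved off the degraded path, while the file transfer simply uses every usable path to gain aggregate bandwidth.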

SD-WAN Overlay Feature 4: decisions:

At its core, SD-WAN enables real-time application traffic steering over any link, such as broadband, LTE, and MPLS, assigning pre-defined policies based on business intent. Steering policies support many application types, making intelligent decisions about how WAN links are utilized and which paths are taken.

The concepts of underlay and overlay are not new, and SD-WAN borrows these designs. First, the underlay is the physical or virtual infrastructure. Then, we have the overlay, where all the intelligence can be set. The SD-WAN overlay represents the virtual WANs that hold your different applications.

A virtual WAN overlay enables us to steer traffic and combine all bandwidths. Similar to how applications are mapped to VMs in the server world, with SD-WAN, each application is mapped to its own virtual SD-WAN overlay. Each virtual SD-WAN overlay can have its own SD-WAN security policies, topologies, and performance requirements.

SD-WAN Overlay Feature 5: Application-Aware Routing Capabilities

Not only do we need application visibility to forward efficiently over either transport, but we also need the ability to examine deep inside the application and look at the sub-applications. For example, we can determine whether traffic is Facebook chat or regular Facebook. This removes the application’s mystery and allows you to balance loads based on sub-applications. It’s like using a scalpel to configure the network instead of a sledgehammer.

SD-WAN Overlay Feature 6: Ease Of Integration With Existing Infrastructure

The risk of introducing new technologies may come with a disruptive implementation strategy. Loss of service damages more than the engineer’s reputation. It hits all areas of the business. The ability to seamlessly insert new sites into existing designs is a vital criterion. With any network change, a critical evaluation is to know how to balance risk with innovation while still meeting objectives.

How aligned is marketing content to what’s happening in reality? It’s easy for marketing materials to claim that a solution can be implemented at Layer 2 or 3! Actually doing it is an entirely different ball game. SD-WAN requires a certain amount of due diligence. One way to cut through the noise is to examine who has real-life deployments with a proven proof of concept (POC) and validated designs. A proven POC will help guide your transition step by step.

SD-WAN Overlay Feature 7: Region-Specific Routing Topologies

Every company has different requirements for hub-and-spoke, full-mesh, and Internet PoP topologies. For example, voice should follow a full-mesh design, while data requires a hub-and-spoke design connecting to a central data center. Nearest service availability is the key to improved performance, as there is little we can do about the latency gods except move services closer together.

SD-WAN Overlay Feature 8: Device Management & Policy Administration

The manual box-by-box approach to policy enforcement is not the way forward. It’s similar to stepping back into the Stone Age to request a catered flight. The ability to tie everything to a template and automate enables rapid branch deployments, security updates, and configuration changes. The optimal solutions have everything in one place and can dynamically push out upgrades.
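Template-driven provisioning can be sketched with nothing more than Python’s standard `string.Template`. The configuration keywords below are invented placeholders, not any vendor’s CLI, and the branch inventory is hypothetical.

```python
from string import Template

# Invented, vendor-neutral config format used purely for illustration.
BRANCH_TEMPLATE = Template(
    "hostname $hostname\n"
    "site-id $site_id\n"
    "wan-link primary $primary\n"
    "wan-link backup $backup\n"
)

# Hypothetical branch inventory.
branches = [
    {"hostname": "branch-001", "site_id": 1, "primary": "mpls", "backup": "lte"},
    {"hostname": "branch-002", "site_id": 2, "primary": "broadband", "backup": "lte"},
]


def render_configs(template, sites):
    """Render one config per site from a single shared template."""
    return {site["hostname"]: template.substitute(site) for site in sites}


configs = render_configs(BRANCH_TEMPLATE, branches)
print(configs["branch-002"])
```

Adding a new branch then means adding one inventory entry rather than hand-editing another box.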

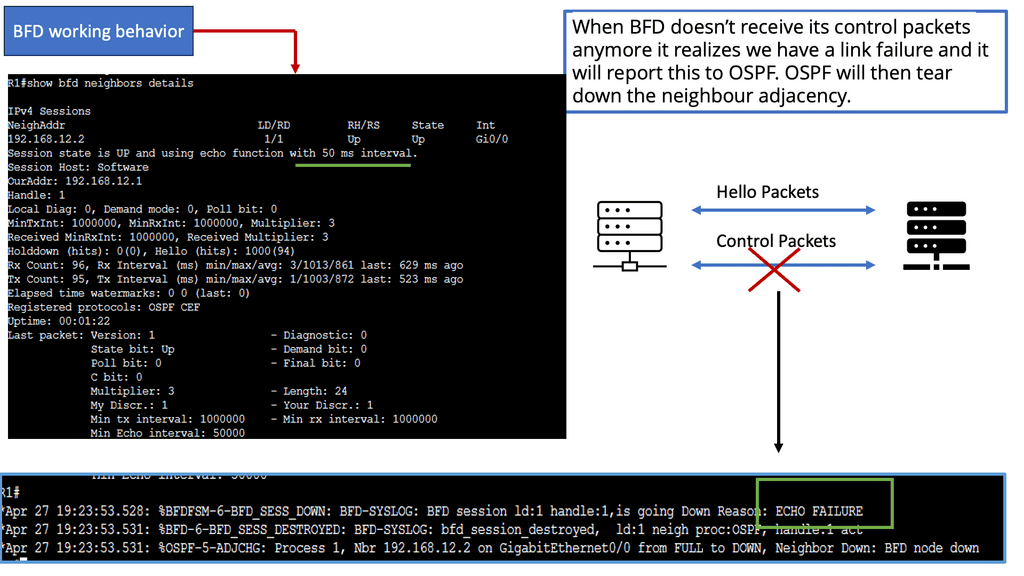

SD-WAN Overlay Feature 9: Highly Available With Automatic Failovers

You cannot apply a singular viewpoint to high availability. An end-to-end solution should address high availability requirements at the device, link, and site levels. WANs can fail over quickly, but this requires additional telemetry information to detect failures and brownout events.
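The distinction between a hard failure and a brownout can be sketched as a small classifier over probe telemetry. The thresholds and the shape of the input (a list of probe round-trip times plus a loss percentage) are assumptions for illustration.

```python
def classify_link(samples_ms, loss_pct, latency_slo_ms=100.0, loss_slo_pct=2.0):
    """Classify a link as 'up', 'brownout', or 'down' from probe telemetry.

    samples_ms: round-trip times of probes that were answered (may be empty).
    loss_pct:   percentage of probes that went unanswered.
    The SLO thresholds are illustrative defaults, not recommendations.
    """
    if not samples_ms or loss_pct >= 100.0:
        return "down"  # blackout: nothing is getting through
    average = sum(samples_ms) / len(samples_ms)
    if average > latency_slo_ms or loss_pct > loss_slo_pct:
        return "brownout"  # link is alive but outside its SLO
    return "up"


print(classify_link([20, 25, 22], 0.0))
print(classify_link([250, 300], 10.0))
print(classify_link([], 100.0))
```

A simple link-state check would report the second link as healthy, which is why brownout detection needs the extra telemetry.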

SD-WAN Overlay Feature 10: Encryption On All Transports

Irrespective of link type, whether MPLS, LTE, or the Internet, we need the capacity to encrypt on all those paths without the excess baggage of IPsec. Encryption should happen automatically, and the complexity of IPsec should be abstracted.

**Application-Orientated WAN**

Push to the cloud:

When geographically dispersed users connect back to central locations, their consumption triggers additional latency, degrading the application’s performance. No one can get away from latency unless we find ways to change the speed of light. One way is to shorten the link by moving to cloud-based applications.
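A back-of-the-envelope calculation shows why distance matters. The sketch assumes light travels through fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and ignores queuing, serialization, and processing delays, so the result is a lower bound. The distances are made up.

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approximation: about two-thirds of c


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time from propagation delay alone."""
    one_way_seconds = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_seconds * 1000


# Hypothetical distances: a distant central data center vs. a nearby cloud region.
print(round(min_rtt_ms(6000), 1), "ms to the central data center")
print(round(min_rtt_ms(300), 1), "ms to the nearby cloud region")
```

No amount of bandwidth removes this delay; only shortening the path does.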

The push to the cloud is inevitable. Most businesses are now moving away from on-premise in-house hosting to cloud-based management. The benefits of moving to the cloud are manifold, and operations become easier for many reasons.

The ready-made global footprint enables the usage of SaaS-based platforms that negate the drawbacks of dispersed users tromboning to a central data center. This software is pivotal to millions of businesses worldwide, which explains why companies such as Capsifi are so popular.

Logically positioned cloud platforms are closer to the mobile user. It’s increasingly more efficient, from both the technical and business standpoints, to host these applications in the cloud, which makes them available over the public Internet.

Bandwidth intensive applications:

Richer applications, multimedia traffic, and growth in the cloud application consumption model drive the need for additional bandwidth. Unfortunately, we can only fit so much into a single link. The congestion leads to packet drops, ultimately degrading application performance and user experience. In addition, most applications ride on TCP, yet TCP was not designed with performance in mind.

Organic growth:

Organic business growth is a significant driver of additional bandwidth requirements. The challenge is that existing network infrastructures are static and unable to respond adequately to this growth in a reasonable period. The last mile of MPLS locks you in and kills agility. Circuit lead times impede the organization’s productivity and create an overall lag.

Costs:

A WAN virtualization solution should be simple. To serve the new era of applications, we need to increase the link capacity by buying more bandwidth. However, nothing is as easy as it may seem. The WAN is one of the network’s most expensive parts, and over-provisioning links to reduce congestion is too costly.

Furthermore, bandwidth comes at a cost, and the existing TDM-based MPLS architectures cannot accommodate application demands.

Traditional MPLS is feature-rich and offers many benefits. No one doubts this fact. MPLS will never die. However, it comes at a high cost for relatively low bandwidth. Unfortunately, MPLS’s price and capabilities are not a perfect couple.

Hybrid connectivity:

Since there is not one stamp for the entire world, similar applications will have different forwarding preferences. Therefore, application flows are dynamic and change depending on user consumption. Furthermore, the MPLS, LTE, and Internet links often complement each other since they support different application types.

For example, Storage and Big data replication traffic are forwarded through the MPLS links, while cheaper Internet connectivity is used for standard Web-based applications.

Limitations of protocols:

When left to its defaults, IPsec is challenged by hybrid connectivity. IPsec architecture is point-to-point, not site-to-site. As a result, it doesn’t natively support redundant uplinks. Complex configurations are required when sites have multiple uplinks to multiple providers.

By default, IPsec is not abstracted; one session cannot be used over multiple uplinks, causing additional issues with transport failover and path selection. It’s a Swiss Army knife of features, and much of IPsec’s complexity should be abstracted. Secure tunnels should be set up and torn down immediately, and new sites should be incorporated into a secure overlay without much delay or manual intervention.

Internet of Things (IoT):

Security and bandwidth consumption are key issues when introducing IP-enabled objects and IoT access technologies. IoT is all about Data and will bring a shed load of additional overheads for networks to consume. As millions of IoT devices come online, how efficiently do we segment traffic without complicating the network design further? Complex networks are hard to troubleshoot, and simplicity is the mother of all architectural success. Furthermore, much IoT processing requires communication to remote IoT platforms. How do we account for the increased signaling traffic over the WAN? The introduction of billions of IoT devices leaves many unanswered questions.

Branch NFV:

There has been strong interest in infrastructure consolidation by deploying Network Function Virtualization (NFV) at the branch. Enabling on-demand service and chaining application flows are key drivers for NFV. However, traditional service chaining is static since it is bound to a particular network topology. Moreover, it is typically built through manual configuration and is prone to human error.

SD-WAN overlay path monitoring:

SD-WAN monitors the paths and the application performance on each link (Internet, MPLS, LTE) and then chooses the best path based on real-time conditions and the business policy. In summary, the underlay network is the physical or virtual infrastructure on which the overlay network is built. An SD-WAN overlay network is a virtual network built on top of this underlying network infrastructure (the underlay).

Controller-based policy:

An additional layer of information is needed to make more intelligent decisions about how and where to forward application traffic. SD-WAN offers a controller-based policy approach that incorporates a holistic view.

A central controller can now make decisions based on global information, not solely on a path-by-path basis with traditional routing protocols. Getting all the routing information and compiling it into the controller to make a decision is much more efficient than making local decisions that only see a limited part of the network.
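The benefit of a global view can be sketched with a shortest-path computation over the whole topology, which is only possible when one place holds all the measurements. The topology, node names, and latencies below are hypothetical, and Dijkstra’s algorithm is used here as a stand-in for whatever path computation a given controller actually performs.

```python
import heapq


def best_path(graph, src, dst):
    """Dijkstra over the full topology; edge weights are measured latencies in ms."""
    queue = [(0, src, [src])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == dst:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return None  # destination unreachable


# Hypothetical topology as the controller might see it.
topology = {
    "branch": {"hq": 30, "pop": 10},
    "pop": {"cloud": 15, "hq": 25},
    "hq": {"cloud": 40},
    "cloud": {},
}

print(best_path(topology, "branch", "cloud"))
```

A branch router that only knew its own links could not tell that the path through the PoP beats the path through the H.Q. end to end.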

The SD-WAN Controller provides physical or virtual device management for all SD-WAN Edges associated with the controller. This includes, but is not limited to, configuration and activation, IP address management, and pushing down policies onto SD-WAN Edges located at the branch sites.

SD-WAN Overlay Case Study:

Personal Note: I recently consulted for a private enterprise. Like many enterprises, they have many applications, both legacy and new. No one knew which applications were running over the WAN; visibility was low. For the network design, the H.Q. has MPLS and Direct Internet access. Nothing is new here; this design has been in place for the last decade. All traffic is backhauled to the HQ/MPLS headend for security screening. The security stack, including firewalls, IDS/IPS, and anti-malware, sits in the H.Q. The remote sites have high latency and limited connectivity options.

More importantly, they are transitioning their ERP system to the cloud. As apps move to the cloud, they want to avoid fixed WAN, a big driver for a flexible SD-WAN solution. They also have remote branches, which are hindered by high latency and poorly managed IT infrastructure. But they don’t want an I.T. representative at each site location. They have heard that SD-WAN has a centralized logic and can view the entire network from one central location. These remote sites must receive large files from the H.Q.; the branch sites’ transport links are only single-customer broadband links.

Some remote sites have LTE, and the bills are growing. The company wants to reduce costs with dedicated Internet access or customer/business broadband. They have heard that you can combine different transports with SD-WAN and apply several path remediation techniques on degraded transports for better performance. So, they decided to roll out SD-WAN. From this new architecture, they gained several benefits.

SD-WAN Visibility

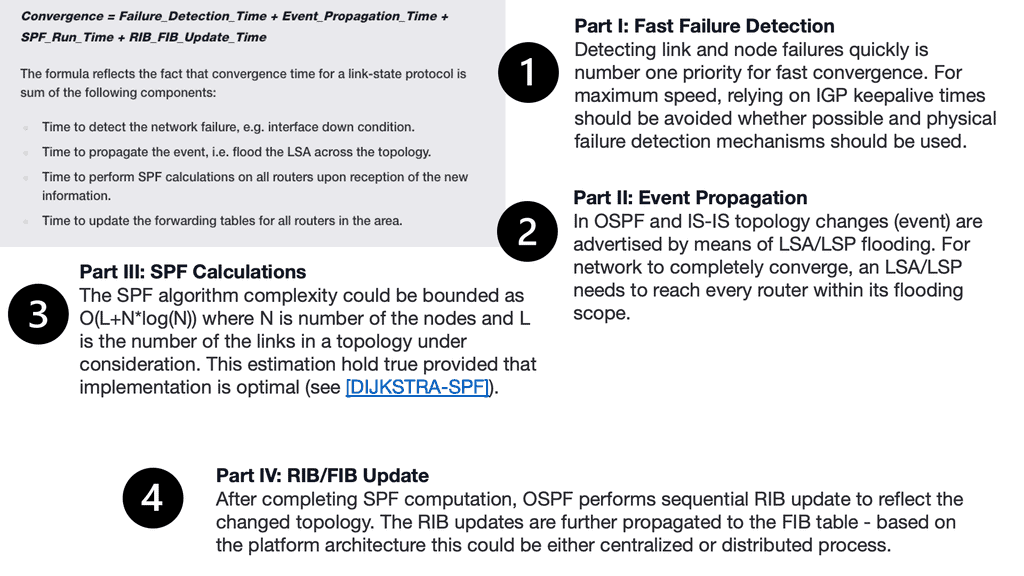

When your business-critical applications operate over different provider networks, troubleshooting and finding the root cause of problems becomes more challenging. So, visibility is critical to business. SD-WAN allows you to see network performance data in real-time and is essential for determining where packet loss, latency, and jitter are occurring so you can resolve the problem quickly.

You also need to be able to see who or what is consuming bandwidth so you can spot intermittent problems. For all these reasons, SD-WAN visibility needs to go beyond network performance metrics and provide greater insight into the delivery chains that run from applications to users.

Understand your baselines:

Visibility is needed to complete the network baseline before the SD-WAN is deployed. This enables the organization to understand existing capabilities, what is normal, which applications are running, the number of sites connected, which service providers are used, and whether they’re meeting their SLAs. Visibility is critical to obtaining a complete picture, so teams understand how to optimize the business infrastructure. SD-WAN gives you an intelligent edge, so you can see all the traffic and act on it immediately.

First, look at the visibility of the various flows, the links used, and any issues on those links. Then, if necessary, you can tweak the bonding policy to optimize the traffic flow. Before the rollout of SD-WAN, there was no visibility into the types of traffic or how much bandwidth each app used. They had limited knowledge of WAN performance.

SD-WAN offers higher visibility:

With SD-WAN, they have the visibility to control and classify traffic on Layer 7 values, such as which URL you are using and which domain you are trying to reach, along with the standard port and protocol. Not all applications are equal; some run better on different links. If an application is not performing correctly, you can route it to a different circuit. With the SD-WAN orchestrator, you have complete visibility across all locations, all links, and all traffic across all circuits.
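Classification on Layer 7 values with a port and protocol fallback can be sketched as below. The hostnames, rule tables, and class names are hypothetical; real products rely on much richer signatures and deep packet inspection.

```python
from urllib.parse import urlparse

# Hypothetical rules: hostname suffix -> application class.
L7_RULES = {
    "chat.example-social.com": "social-chat",
    "example-social.com": "social-web",
    "erp.example.com": "erp",
}

# Hypothetical fallback rules: (port, protocol) -> application class.
PORT_RULES = {(5060, "udp"): "voice-signaling", (443, "tcp"): "generic-https"}


def classify(url=None, port=None, protocol=None):
    """Prefer a Layer 7 (hostname) match, then fall back to port and protocol."""
    if url:
        host = urlparse(url).hostname or ""
        # Check the most specific (longest) suffix first.
        for suffix in sorted(L7_RULES, key=len, reverse=True):
            if host == suffix or host.endswith("." + suffix):
                return L7_RULES[suffix]
    return PORT_RULES.get((port, protocol), "unknown")


print(classify("https://chat.example-social.com/inbox", 443, "tcp"))
print(classify("https://www.example-social.com/", 443, "tcp"))
print(classify(None, 5060, "udp"))
```

Both social flows share port 443, so only the Layer 7 lookup can tell the chat sub-application apart from the main site.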

SD-WAN High Availability:

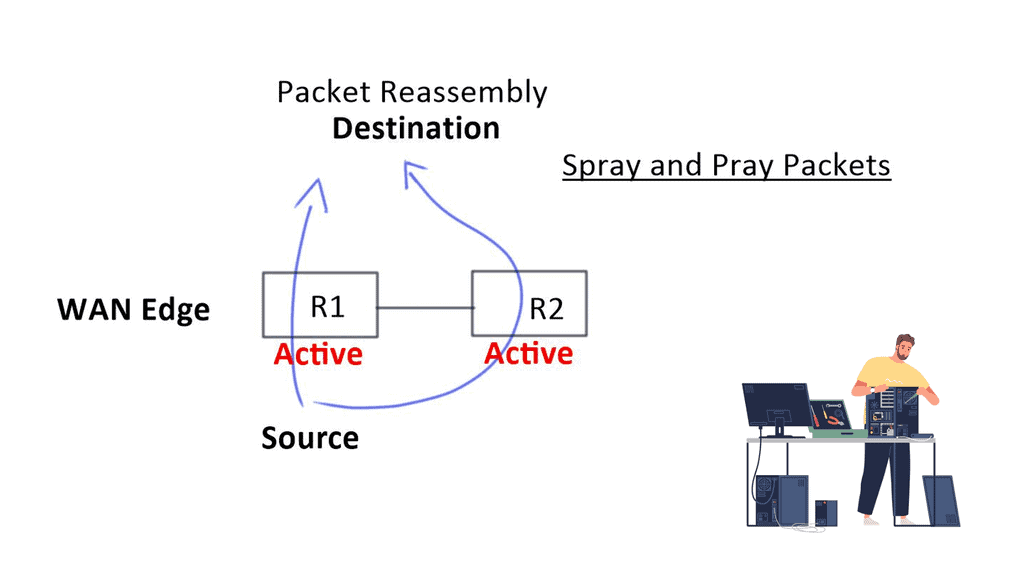

Any high-availability solution aims to ensure that all network services are resilient to failure. It aims to provide continuous access to network resources by addressing the potential causes of downtime through functionality, design, and best practices. The previous high-availability design was active/passive with manual failover. It was hard to maintain, and there was a lot of unused bandwidth. Now, they use resources more efficiently and are no longer tied to the bandwidth of the first circuit.

There is now a more granular application failover mechanism. You can also select which apps are prioritized if a link fails or when a certain congestion ratio is hit. For example, you have LTE as a backup, which can be expensive. So applications marked high priority are steered over the backup link, but guest WIFI traffic isn’t.
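Priority-aware failover can be sketched in a few lines. The application names, priority labels, and link names are assumptions for illustration only.

```python
from typing import Optional

# Hypothetical application priorities.
APP_PRIORITY = {"voice": "high", "erp": "high", "email": "medium", "guest_wifi": "low"}


def allowed_on_backup(app, allowed=("high",)):
    """Only applications in the allowed priority classes may use the costly backup."""
    return APP_PRIORITY.get(app, "low") in allowed


def select_link(app, primary_up) -> Optional[str]:
    """Return the link an application should use, or None if it must wait."""
    if primary_up:
        return "broadband"
    return "lte" if allowed_on_backup(app) else None


for app in ("voice", "guest_wifi"):
    print(app, "->", select_link(app, primary_up=False))
```

When the primary link fails, voice moves to LTE while guest WIFI traffic is held back to keep the backup bill under control.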

Flexible topology:

Before, they had a hub-and-spoke MPLS design for all applications. They wanted a full-mesh architecture for some applications while keeping the existing hub-and-spoke design for others. However, the service provider couldn’t accommodate the level of granularity that they wanted.

With SD-WAN, they can choose topologies that are better suited to the application type. As a result, the network design is now more flexible and matches the application, rather than the application having to match a network design it doesn’t want.

Types of SD-WAN

The market for branch office wide-area network functionality is shifting from dedicated routing, security, and WAN optimization appliances to feature-rich SD-WAN. As a result, WAN edge infrastructure now incorporates a widening set of network functions, including secure routers, firewalls, SD-WAN, WAN path control, and WAN optimization, along with traditional routing functionality. Therefore, consider the following approach to deploying SD-WAN.

1. Application-based approach

With SD-WAN, we are shifting from a network-based approach to an application-based approach. The new WAN no longer looks solely at the network to forward packets. Instead, it looks at the business requirements and decides how to optimize the application with the correct forwarding behavior. This new way of forwarding would be problematic when using traditional WAN architectures.

Making business logic decisions with I.P. and port number information is challenging. Standard routing is the most common way to forward application traffic today, but it only assesses part of the picture when making its forwarding decision.

These devices have routing tables to perform forwarding. Still, with this model, they operate and decide on their little island, losing the holistic view required for accurate end-to-end decision-making.

2. SD-WAN: Holistic decision

The WAN must start making decisions holistically. It should not be viewed as a single module in the network design. Instead, it must incorporate several elements it has not previously integrated to capture the correct per-application forwarding behavior. The ideal WAN should be automatable to form a comprehensive end-to-end solution centrally orchestrated from a single pane of glass.

Managed and orchestrated centrally, this new WAN fabric is transport agnostic. It offers application-aware routing, regional-specific routing topologies, encryption on all transports regardless of link type, and high availability with automatic failover. All of these will be discussed shortly and are the essence of SD-WAN.

3. SD-WAN and central logic

Besides the virtual SD-WAN overlay, another key SD-WAN concept is centralized logic. A standard router computes its local routing table with an algorithm and uses it to forward a packet to a given destination.

It receives routes from its peers or neighbors but computes paths locally and makes local routing decisions. The critical point to note is that everything is calculated locally. SD-WAN functions on a different paradigm.

Rather than using distributed logic, it utilizes centralized logic. This allows you to view the entire network holistically while a distributed forwarding plane makes real-time decisions based on better metrics than before.

This paradigm enables SD-WAN to see how the flows behave along the path. It takes the fragmented control approach and centralizes it while still benefiting from a distributed system.

The SD-WAN controller, which acts as the brain, can set different applications to run over different paths based on business requirements and performance SLAs, not on a fixed topology. So, for example, if one path does not have acceptable packet loss and latency is high, we can move to another path dynamically.

4. Independent topologies

SD-WAN has different levels of networking and brings the concepts of SDN into the Wide Area Network. As with SDN, SD-WAN has an underlay and an overlay network. The WAN infrastructure, either physical or virtual, is the underlay, and the SD-WAN overlay runs in software on top of the underlay, where the applications are mapped.

This decoupling or separation of functions allows different application or group overlays. Previously, the application had to work with a fixed and pre-built network infrastructure. With SD-WAN, the application can choose the type of topology it wants, such as a full mesh or hub and spoke. The topologies with SD-WAN are much more flexible.

5. The SD-WAN overlay

SD-WAN optimizes traffic over multiple available connections. It dynamically steers traffic to the best available link. If the available links show any transmission issues, it will immediately move traffic to a better path or, if you only have a single link, apply remediation to that link. SD-WAN delivers application flows from a source to a destination based on the configured policy and best available network path. A core concept of SD-WAN is the overlay.

SD-WAN solutions provide the software abstraction to create the SD-WAN overlay and decouple network software services from the underlying physical infrastructure. Multiple virtual overlays may be defined to abstract the underlying physical transport services, each supporting a different quality of service, preferred transport, and high availability characteristics.

6. Application mapping

Application mapping also allows you to steer traffic over different WAN transports. This steering is automatic and can be implemented when specific performance metrics are unmet. For example, if Internet transport has a 15% packet loss, the policy can be set to steer all or some of the application traffic over to better-performing MPLS transport.

Applications are mapped to different overlays based on business intent, not infrastructure details like IP addresses. When you think about overlays, it’s common to have, on average, four overlays. For example, you may have platinum, gold, and bronze SD-WAN overlays, and then you can map the applications to these overlays.

The applications will have different networking requirements, and overlays allow you to slice and dice your network if you have multiple application types.
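Mapping applications to overlays by business intent can be sketched as two lookup tables. The overlay attributes, the application names, and the default of falling back to bronze are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Overlay:
    name: str
    topology: str
    preferred_transport: str
    max_latency_ms: int


# Hypothetical overlay definitions.
OVERLAYS = {
    "platinum": Overlay("platinum", "full-mesh", "mpls", 150),
    "gold": Overlay("gold", "hub-and-spoke", "mpls", 300),
    "bronze": Overlay("bronze", "hub-and-spoke", "internet", 1000),
}

# Business intent: which application belongs to which overlay.
APP_TO_OVERLAY = {"voice": "platinum", "erp": "gold", "guest_wifi": "bronze"}


def overlay_for(app):
    """Unmapped applications fall back to the bronze overlay (an assumption)."""
    return OVERLAYS[APP_TO_OVERLAY.get(app, "bronze")]


print(overlay_for("voice"))
print(overlay_for("file_sync").name)
```

Note that nothing in the mapping refers to IP addresses or interfaces; the infrastructure details live inside the overlay definitions.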

SD-WAN & WAN metrics

SD-WAN captures metrics that go far beyond the standard WAN measurements. For example, the traditional method measures packet loss, latency, and jitter metrics to determine path quality. These measurements are insufficient for routing protocols that only make the packet flow decision at layer 3 of the OSI model.

As we know, layer 3 of the OSI model lacks intelligence and misses the overall user experience. We must start looking at application transactions rather than relying on bits, bytes, jitter, and latency.

SD-WAN incorporates better metrics beyond those a standard WAN edge router considers. These metrics may include application response time, network transfer, and service response time. Some SD-WAN solutions monitor each flow’s RTT, sliding windows, and ACK delays, not just IP or TCP. This creates a more accurate view of the application’s performance.
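One way to picture these application-level metrics is to break a single transaction into its parts. The timestamps and field names below are hypothetical, and the breakdown is a simplification that assumes the server acknowledges the request immediately.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    """Timestamps in milliseconds, all observed at the client side."""
    request_sent: int    # client sends the request
    request_acked: int   # server's ACK arrives back at the client
    first_byte: int      # first byte of the response arrives
    last_byte: int       # last byte of the response arrives


def transaction_metrics(t):
    """Split one transaction into network, server, and transfer components."""
    return {
        "network_rtt_ms": t.request_acked - t.request_sent,
        "server_response_ms": t.first_byte - t.request_acked,
        "transfer_ms": t.last_byte - t.first_byte,
        "app_response_ms": t.last_byte - t.request_sent,
    }


print(transaction_metrics(Transaction(0, 40, 240, 400)))
```

In this made-up transaction the network accounts for only a small part of what the user experiences, which packet loss, latency, and jitter alone would not reveal.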

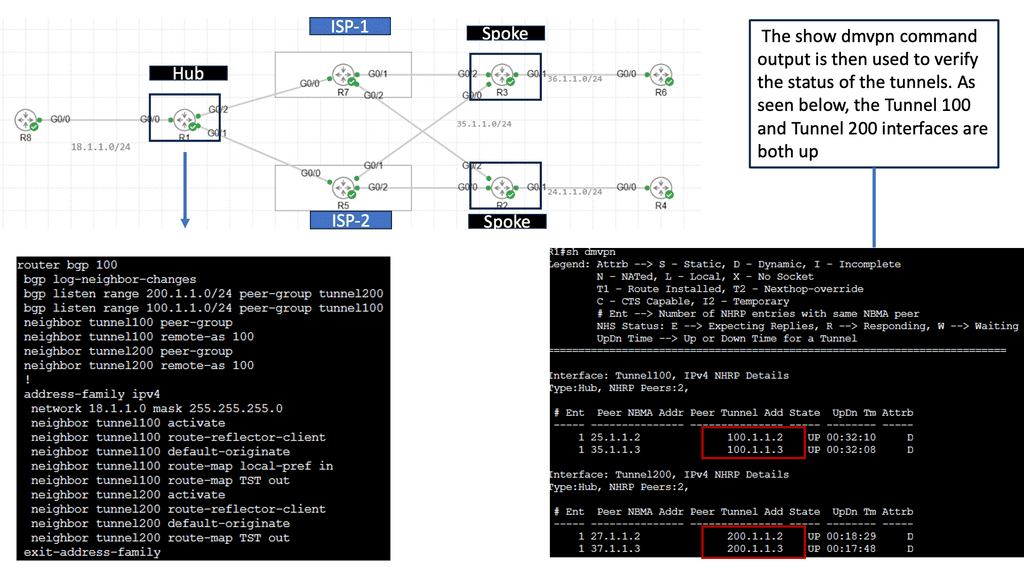

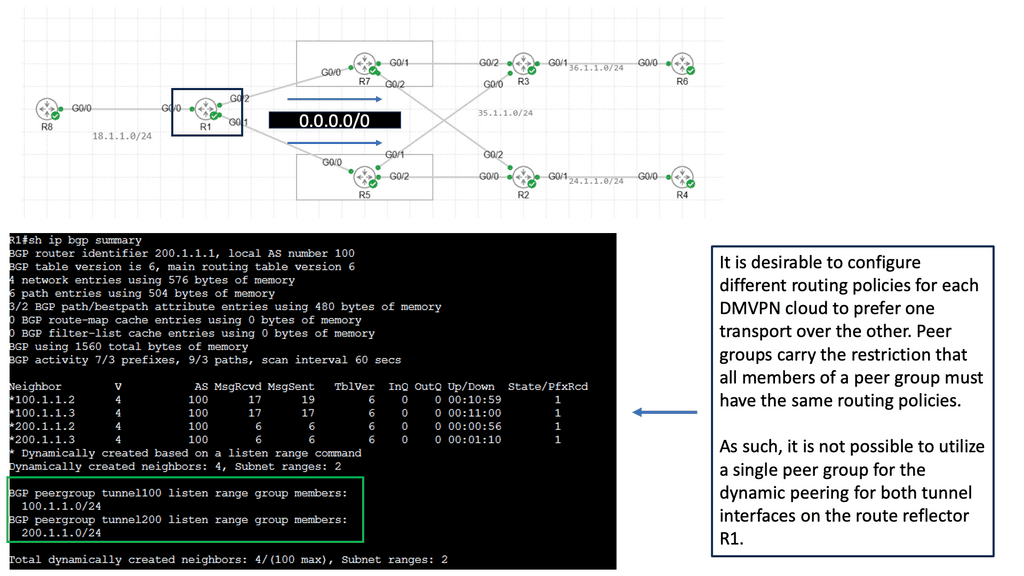

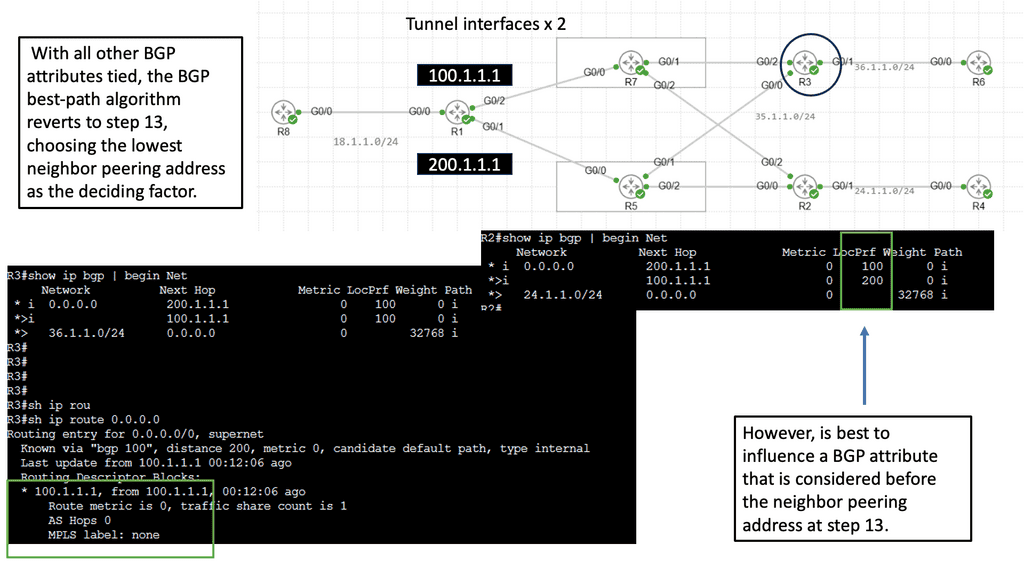

Overlay Use Case: DMVPN Dual Cloud

Exploring Single Hub Dual Cloud Configuration

The Single Hub Dual Cloud configuration is a DMVPN setup in which a single hub site terminates two DMVPN clouds (two separate tunnel overlays) simultaneously, typically with each cloud running over a different service provider. This configuration offers several advantages, such as increased redundancy, improved performance, and enhanced security.

By running each cloud over a different service provider, the Single Hub Dual Cloud configuration maintains connectivity if one provider experiences an outage. This redundancy enhances network availability and minimizes the risk of downtime, providing a robust and reliable network infrastructure.

With the Single Hub Dual Cloud configuration, network traffic can be load-balanced across the two clouds. This load balancing distributes the workload evenly, optimizing network performance and preventing bottlenecks. It allows for efficient utilization of network resources, resulting in enhanced user experience and improved application performance.
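Load balancing across the two clouds with failover can be sketched as per-flow hashing. This is a conceptual illustration only: in a real DMVPN deployment the distribution is normally handled by the routing protocol, and the cloud names here are hypothetical.

```python
import hashlib

CLOUDS = ["cloud_a", "cloud_b"]  # hypothetical names for the two clouds


def pick_cloud(src_ip, dst_ip, src_port, dst_port, up=None):
    """Hash the flow so that all packets of one flow stay on the same cloud.

    up: optional dict of cloud name -> bool; clouds marked down are skipped.
    Returns None when no cloud is available.
    """
    candidates = [c for c in CLOUDS if up is None or up.get(c, False)]
    if not candidates:
        return None
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return candidates[digest[0] % len(candidates)]


flow = ("10.1.1.10", "10.2.2.20", 50000, 443)
print(pick_cloud(*flow))
print(pick_cloud(*flow, up={"cloud_a": False, "cloud_b": True}))
```

Hashing per flow rather than per packet avoids reordering within a flow, and the same function provides failover by simply shrinking the candidate list.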

Summary: SD WAN Overlay

In today’s digital landscape, businesses increasingly rely on cloud-based applications, remote workforces, and data-driven operations. As a result, the demand for a more flexible, scalable, and secure network infrastructure has never been greater. This is where SD-WAN overlay comes into play, revolutionizing how organizations connect and operate.

SD-WAN overlay is a network architecture that allows organizations to abstract and virtualize their wide area networks, decoupling them from the underlying physical infrastructure. It utilizes software-defined networking (SDN) principles to create an overlay network that runs on top of the existing WAN infrastructure, enabling centralized management, control, and optimization of network traffic.

Key benefits of SD-WAN overlay

1. Enhanced Performance and Reliability:

SD-WAN overlay leverages multiple network paths to distribute traffic intelligently, ensuring optimal performance and reliability. By dynamically routing traffic based on real-time conditions, businesses can overcome network congestion, reduce latency, and maximize application performance. This capability is particularly crucial for organizations with distributed branch offices or remote workers, as it enables seamless connectivity and productivity.

2. Cost Efficiency and Scalability:

Traditional WAN architectures can be expensive to implement and maintain, especially when organizations need to expand their network footprint. SD-WAN overlay offers a cost-effective alternative by utilizing existing infrastructure and incorporating affordable broadband connections. With centralized management and simplified configuration, scaling the network becomes a breeze, allowing businesses to adapt quickly to changing demands without breaking the bank.

3. Improved Security and Compliance:

In an era of increasing cybersecurity threats, protecting sensitive data and ensuring regulatory compliance are paramount. SD-WAN overlay incorporates advanced security features to safeguard network traffic, including encryption, authentication, and threat detection. Businesses can effectively mitigate risks, maintain data integrity, and comply with industry regulations by segmenting network traffic and applying granular security policies.

4. Streamlined Network Management:

Managing a complex network infrastructure can be a daunting task. SD-WAN overlay simplifies network management with centralized control and visibility, enabling administrators to monitor and manage the entire network from a single pane of glass. This level of control allows for faster troubleshooting, policy enforcement, and network optimization, resulting in improved operational efficiency and reduced downtime.

5. Agility and Flexibility:

In today’s fast-paced business environment, agility is critical to staying competitive. SD-WAN overlay empowers organizations to adapt rapidly to changing business needs by providing the flexibility to integrate new technologies and services seamlessly. Whether adding new branch locations, integrating cloud applications, or adopting emerging technologies like IoT, SD-WAN overlay offers businesses the agility to stay ahead of the curve.

Implementation of SD-WAN Overlay:

Implementing SD-WAN overlay requires careful planning and consideration. The following steps outline a typical implementation process:

1. Assess Network Requirements: Evaluate existing network infrastructure, bandwidth requirements, and application performance needs to determine the most suitable SD-WAN overlay solution.

2. Design and Architecture: Create a network design incorporating SD-WAN overlay while considering factors such as branch office connectivity, data center integration, and security requirements.

3. Vendor Selection: Choose a reliable and reputable SD-WAN overlay vendor based on their technology, features, support, and scalability.

4. Deployment and Configuration: Install the required hardware or virtual appliances and configure the SD-WAN overlay solution according to the network design. This includes setting up policies, traffic routing, and security parameters.

5. Testing and Optimization: Thoroughly test the SD-WAN overlay solution, ensuring its compatibility with existing applications and network infrastructure. Optimize the solution based on performance metrics and user feedback.

Conclusion: SD-WAN overlay is a game-changer for businesses seeking to optimize their network infrastructure. By enhancing performance, reducing costs, improving security, streamlining management, and enabling agility, SD-WAN overlay unlocks the true potential of connectivity. Adopting this technology allows organizations to embrace digital transformation, drive innovation, and gain a competitive edge in the digital era. In an ever-evolving business landscape, SD-WAN overlay is the key to unlocking new growth opportunities and future-proofing your network infrastructure.