Removing State From Network Functions

In recent years, the networking industry has witnessed a significant shift towards stateless network functions. This revolutionary approach has transformed the way networks are designed, managed, and operated. In this blog post, we will explore the concept of removing state from network functions and delve into the benefits it brings to the table.

State in network functions refers to the information that needs to be stored and maintained for each connection or flow passing through the network. Traditionally, network functions such as firewalls, load balancers, and intrusion detection systems heavily relied on maintaining state. This stateful approach introduced complexities and limitations in terms of scalability, performance, and fault tolerance.

Stateless network functions, on the other hand, operate without the need for maintaining connection-specific information. Instead, they process packets or flows independently, solely based on the information present in each packet. This paradigm shift eliminates the burden of state management, enabling networks to scale more efficiently, achieve higher performance, and exhibit enhanced resiliency.
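To make the idea concrete, here is a minimal Python sketch of a stateless packet filter. The `Packet` fields, the `RULES` list, and the `filter_packet` function are hypothetical names chosen for illustration and do not come from any product. The point is only that the decision depends on the packet's own header fields and on nothing remembered from earlier packets.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Packet:
    """Only the header fields this sketch needs (an assumption)."""
    src: str
    dst: str
    dst_port: int
    protocol: str


# Hypothetical static rules: (source network, destination port, protocol, action).
# The first matching rule wins.
RULES = [
    ("10.0.0.0/8", 22, "tcp", "deny"),
    ("0.0.0.0/0", 443, "tcp", "allow"),
]


def filter_packet(packet: Packet, rules=RULES, default: str = "deny") -> str:
    """Decide on a packet using only its own headers and the static rules."""
    for network, port, protocol, action in rules:
        if (
            ip_address(packet.src) in ip_network(network)
            and packet.dst_port == port
            and packet.protocol == protocol
        ):
            return action
    return default
```

Because `filter_packet` keeps nothing between calls, any number of copies can run in parallel and return the same answer for the same packet.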

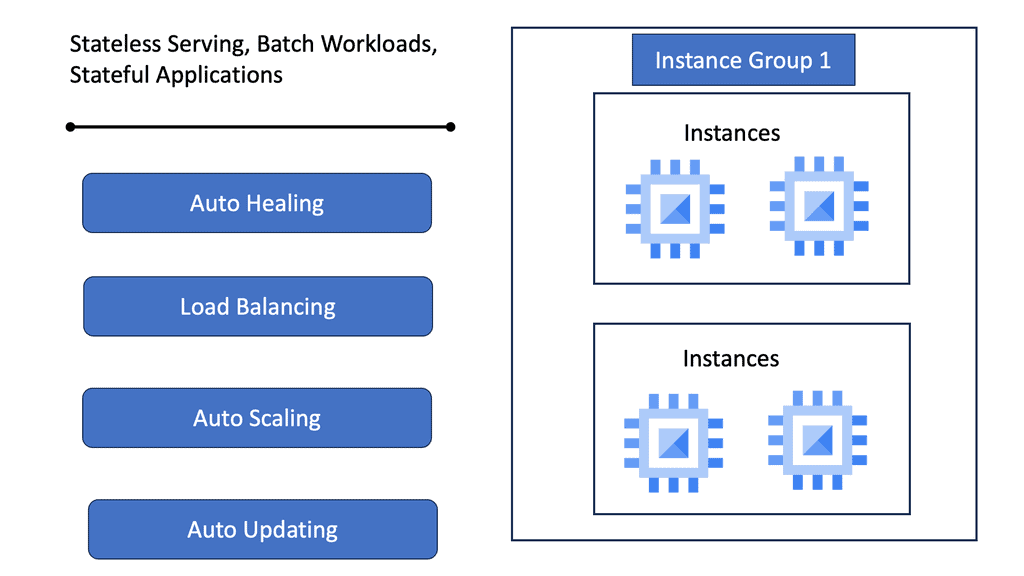

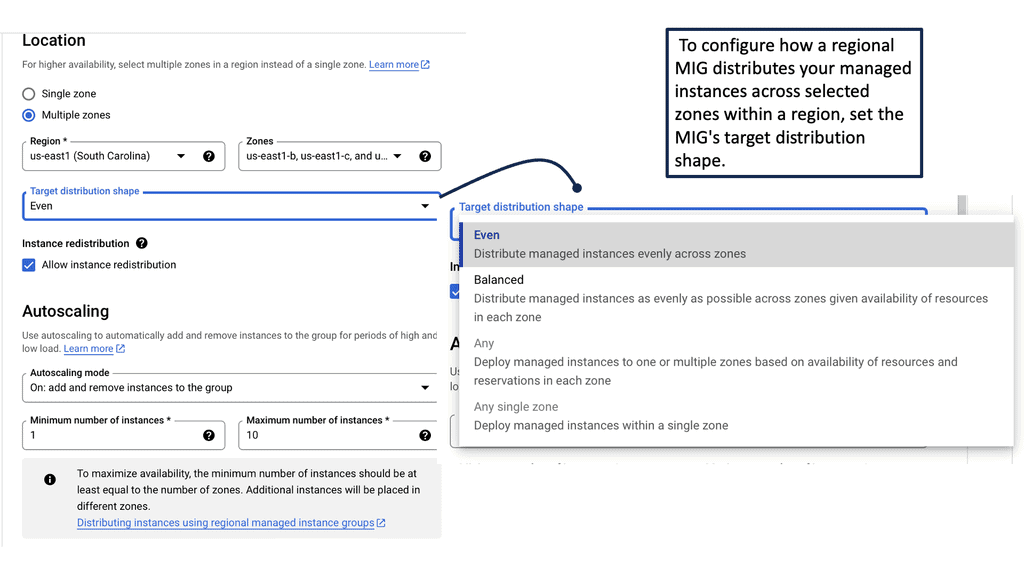

Enhanced Scalability: By removing state from network functions, networks become inherently more scalable. Stateless functions allow for easier distribution and parallel processing, empowering networks to handle increasing traffic demands without being limited by state management overhead.

Improved Performance: Stateless network functions offer improved performance compared to their stateful counterparts. Without the need to constantly maintain state information, these functions can process packets or flows more quickly, resulting in reduced latency and improved overall network performance.

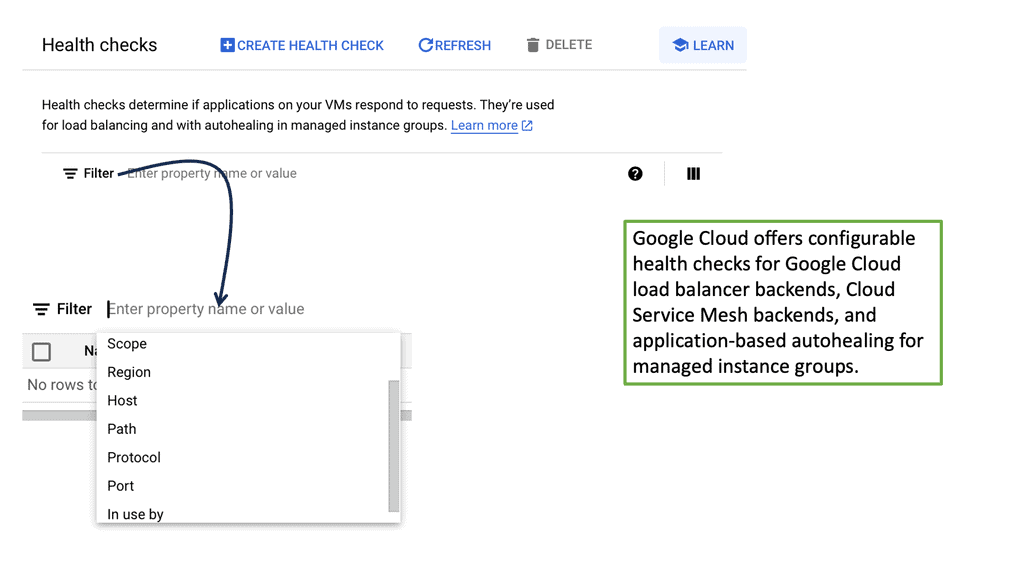

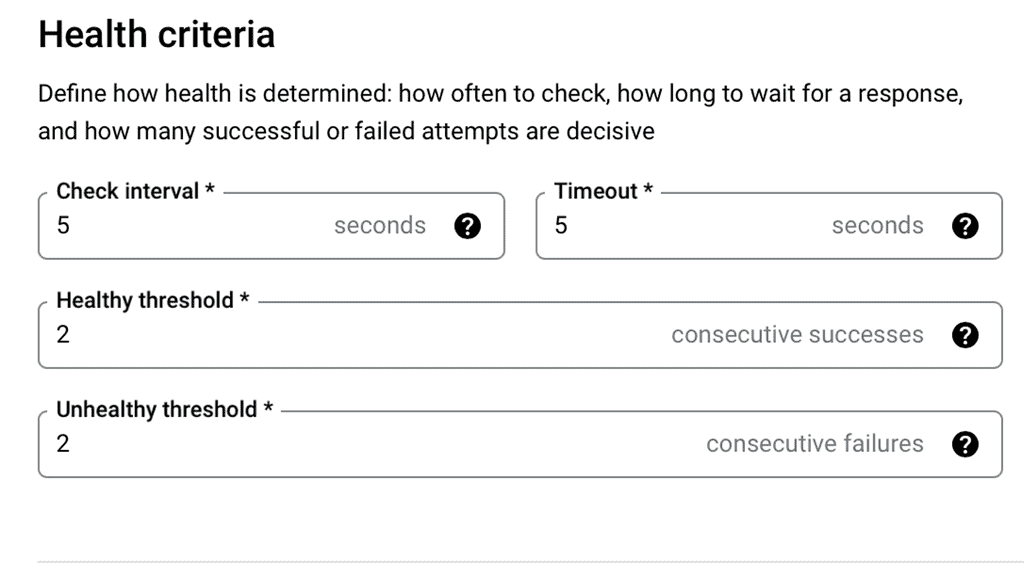

Enhanced Fault Tolerance: Stateless network functions facilitate fault tolerance by enabling easy redundancy and failover mechanisms. Since there is no state to be replicated or synchronized, redundant instances can seamlessly take over in case of failures, ensuring uninterrupted network services.

The removal of state from network functions has revolutionized the networking landscape. Stateless network functions bring enhanced scalability, improved performance, and enhanced fault tolerance to networks, enabling them to meet the ever-increasing demands of modern applications and services. Embracing this paradigm shift paves the way for more agile, efficient, and resilient networks that can keep up with the rapid pace of digital transformation.

Matt Conran

Highlights: Removing State From Network Functions

**Understanding State in Network Functions**

To grasp the significance of stateless network functions, it’s essential to first understand what “state” means in this context. State refers to the stored information that a network function requires to operate effectively. This includes data about past interactions, user sessions, and configuration settings. While stateful functions can offer certain advantages, such as maintaining session continuity, they also introduce complexity and potential bottlenecks.

**The Benefits of Stateless Network Functions**

1. **Scalability**: Stateless network functions can easily scale horizontally. Without the need to store and manage state information, these functions can be replicated across multiple instances, distributing the load and improving performance.

2. **Resilience**: Stateless functions are inherently more resilient to failures. In a stateless architecture, if one instance fails, another can seamlessly take over without the risk of data loss or service interruption.

3. **Simplicity**: By removing the need to manage state, developers can focus on building simpler, more maintainable code. This reduction in complexity often leads to faster development cycles and easier debugging processes.

**Implementing Statelessness in Network Functions**

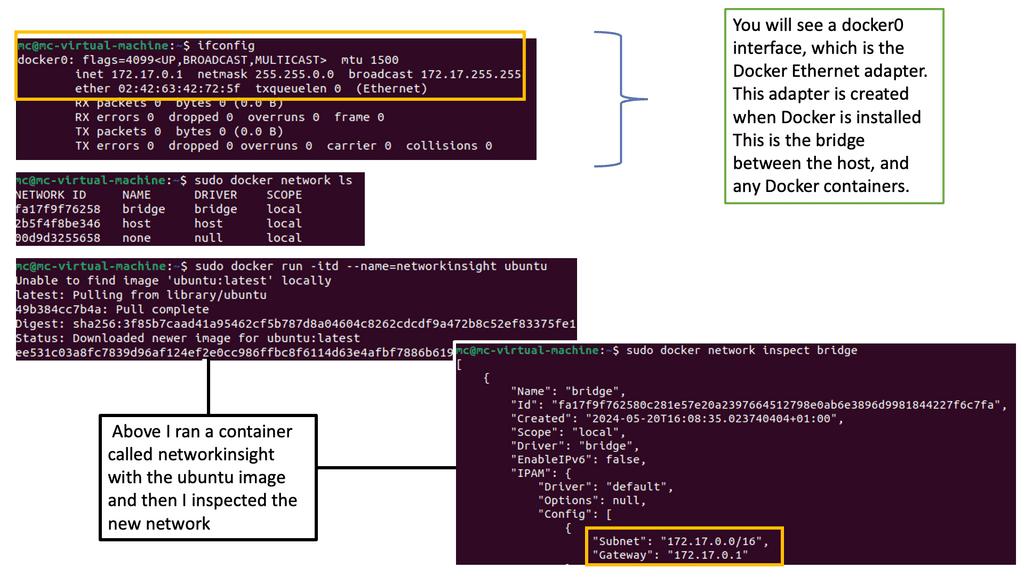

Transitioning to stateless network functions involves rethinking how data is handled. One approach is to offload state management to external storage systems or databases. By doing so, network functions can remain lightweight and focused solely on processing data. Additionally, modern technologies such as microservices and containerization can support the implementation of stateless architectures, allowing for more efficient resource utilization.

**Real-World Applications and Case Studies**

Many leading tech companies have successfully adopted stateless network functions to enhance their operations. For instance, cloud service providers have embraced stateless architectures to offer scalable and reliable services to their customers. These real-world applications demonstrate the practicality and effectiveness of removing state from network functions, providing valuable insights for organizations considering a similar transition.

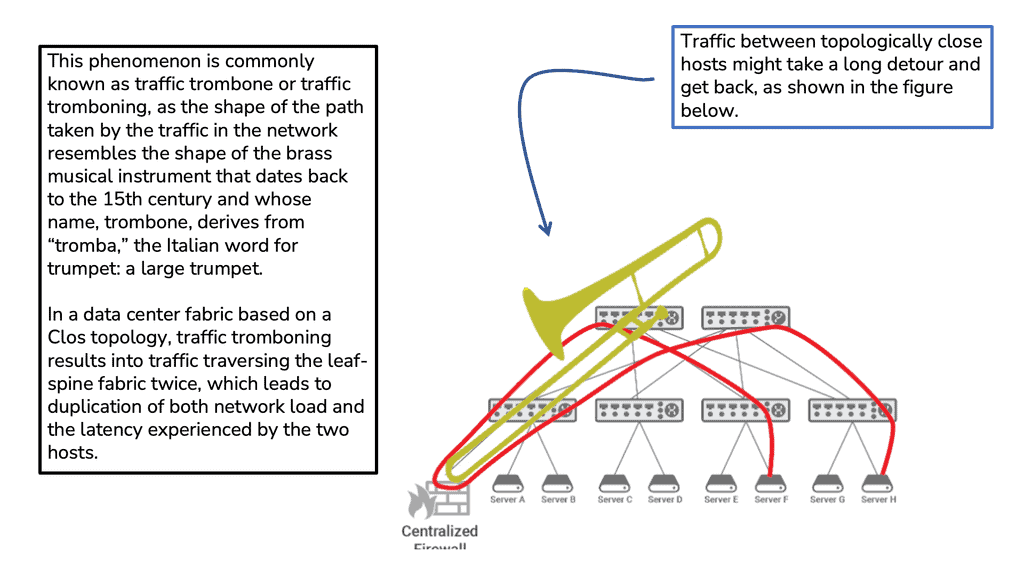

Understanding Stateful Network Functions

Stateful network functions have been the backbone of traditional networking architectures. These functions, such as firewalls, load balancers, and NAT (Network Address Translation), maintain complex state information about the connections passing through them. While they have served us well, stateful network functions have inherent limitations. They introduce latency, create single points of failure, and hinder scalability, especially in modern distributed systems.

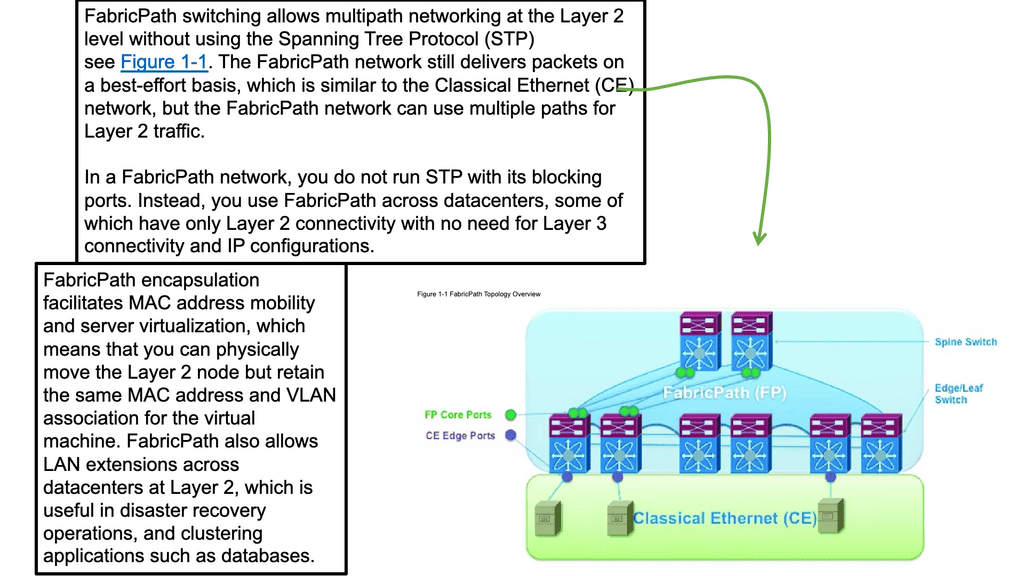

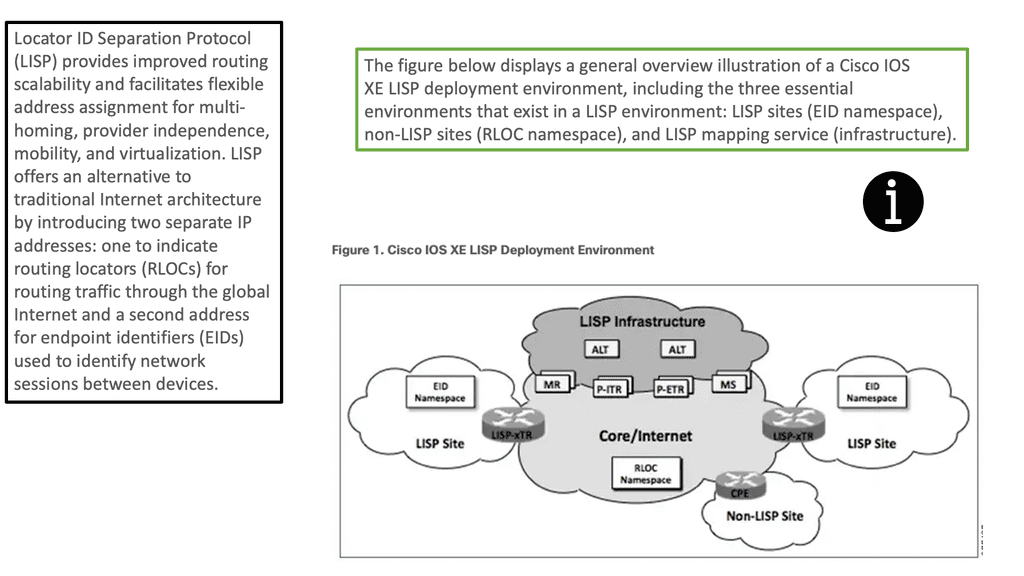

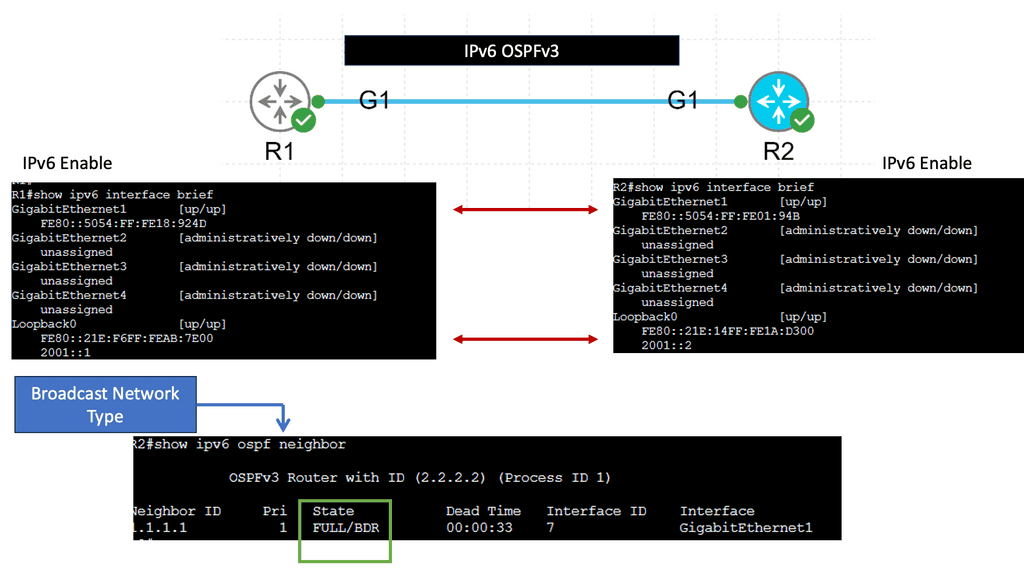

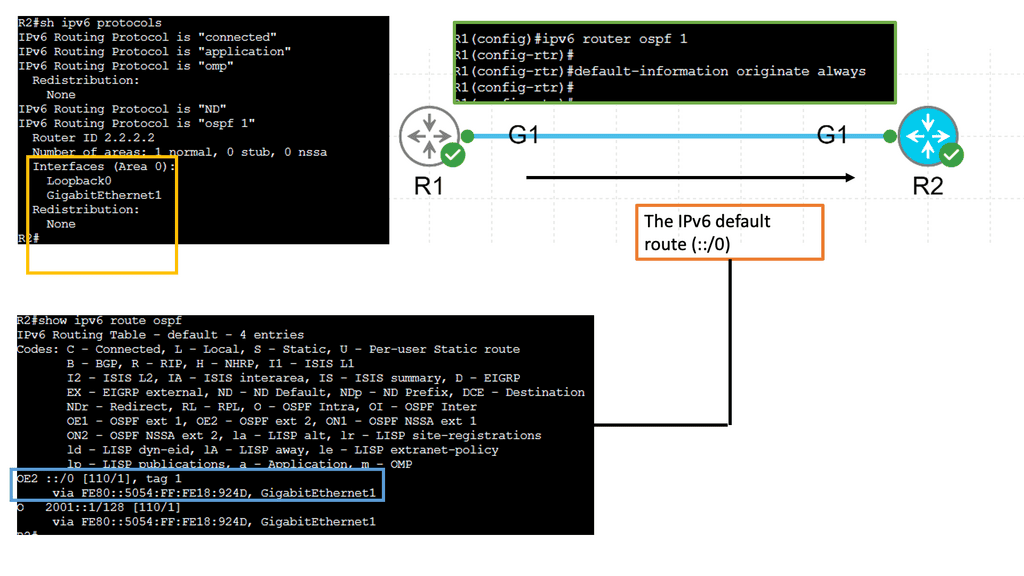

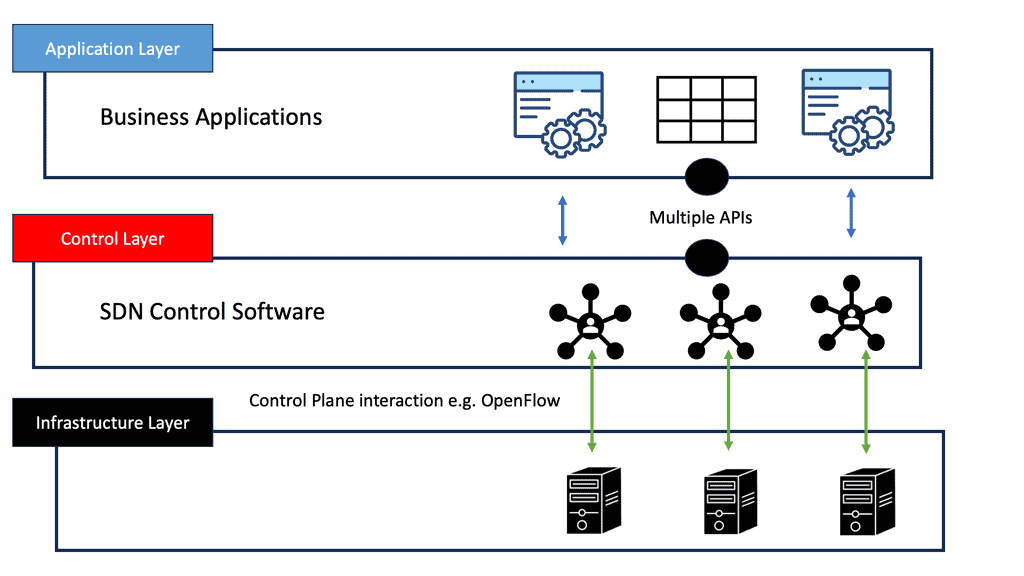

Enter stateless network functions, a paradigm shift that aims to address the shortcomings of their stateful counterparts. Stateless network functions operate without maintaining connection-specific states, treating each packet independently. By decoupling the state from the functions, networks become more agile, scalable, and fault-tolerant. This approach aligns perfectly with the demands of cloud-native architectures, microservices, and modern software-defined networking (SDN) frameworks.

Considerations: Stateless Network Functions

Enhanced Scalability: One key benefit of removing state from network functions is improved scalability. Without per-connection state to store and manage on each instance, a network system can handle significantly more concurrent connections and scale out to meet growing demand without compromising performance or stability.

Improved Flexibility and Interoperability: When the state is removed from network functions, it allows for greater flexibility and interoperability among different systems and platforms. Stateless network functions can be easily deployed across various environments, making integrating new technologies and adapting to evolving requirements easier. This promotes innovation and paves the way for developing advanced network solutions.

Enhanced Security: Stateless network functions can also improve security. With no connection state held on the function itself, there is less per-connection data on each instance for an attacker to target. Stateless systems fit a zero-trust environment, where each transaction is evaluated and authenticated independently. This approach limits the potential impact of a security breach and strengthens overall network resilience.

Simplified Management and Maintenance: Removing the state from network functions significantly reduces the complexity of managing and maintaining these systems. Stateless architectures require less administrative overhead, as there is no need to track and manage state information across different network nodes. This simplification leads to cost savings and allows network administrators to focus on other critical tasks.

The Role of Non-Proprietary Hardware

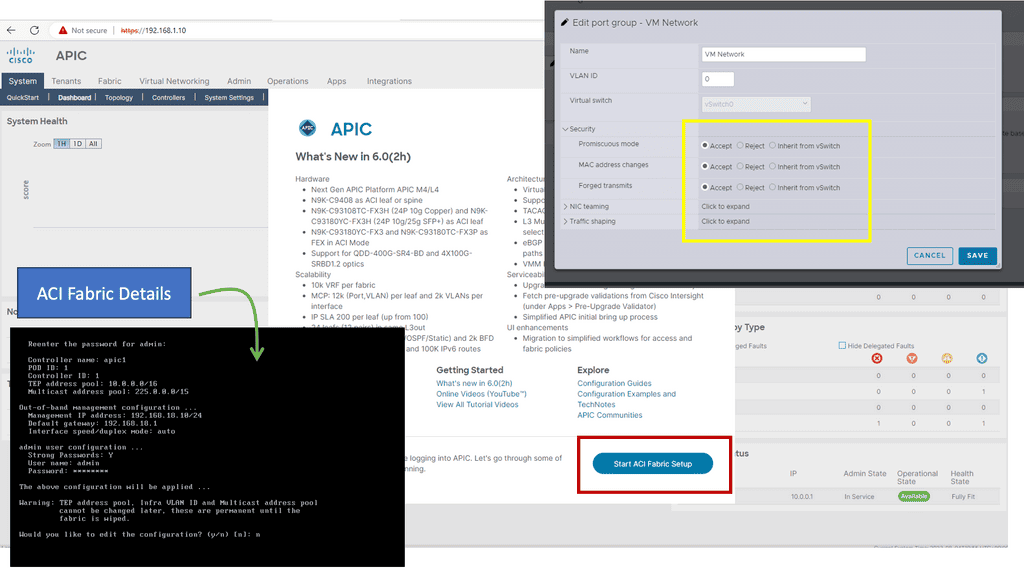

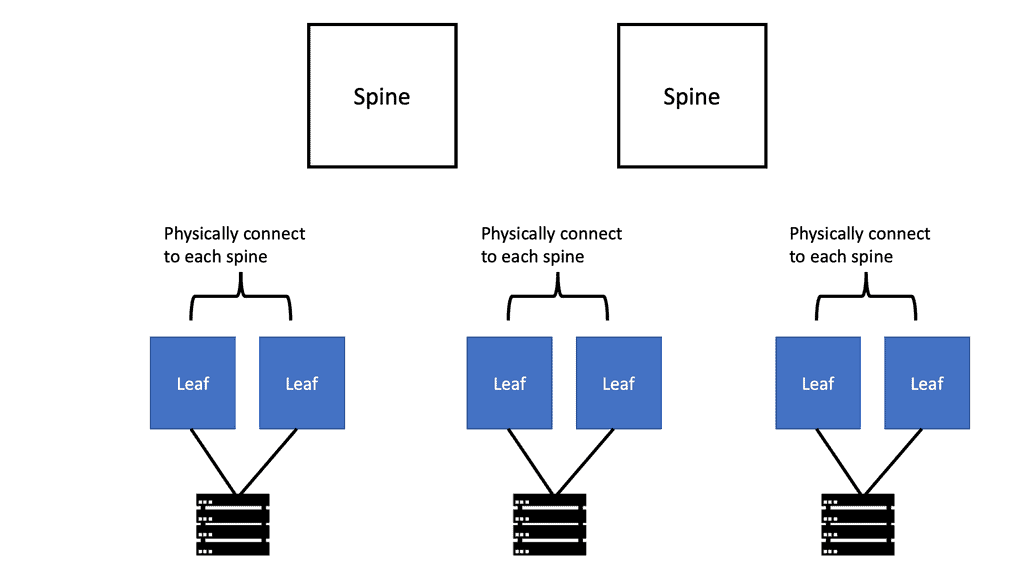

We have seen a significant technological evolution, where network functions can run in software on non-proprietary commodity hardware, whether in a grey box or white box deployment model. However, taking network functions from a physical appliance and putting them into a virtual appliance is only half the battle.

The move to software gives network security components on-demand elasticity, scale, and quick recovery from failures. However, one major factor still hinders us: the state that each network function needs to process.

The Tight Coupling of State

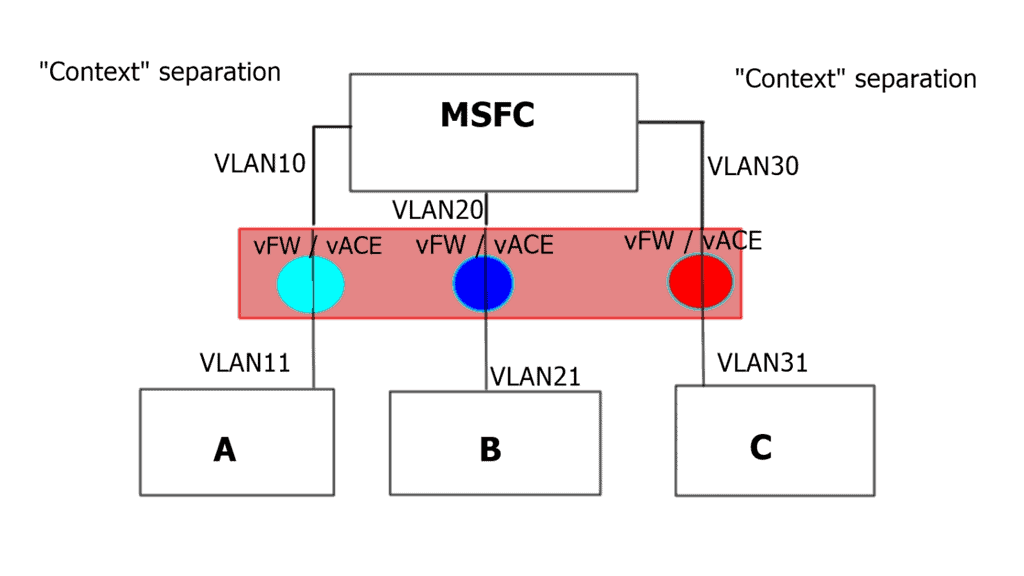

We still face challenges created by the tight coupling of state and processing in each network function, be it a virtual firewall, a load balancer, an intrusion prevention system (IPS), or a distributed firewall placed closer to the workloads for dynamic workload scaling use cases. Because the state is tightly coupled with the network function, it limits the function’s agility, scalability, and failure recovery.

Compounding this, network complexity has increased. The rise of the public cloud and the emergence of hybrid and multi-cloud have made data center connectivity more complicated and more critical than ever.

Virtualization

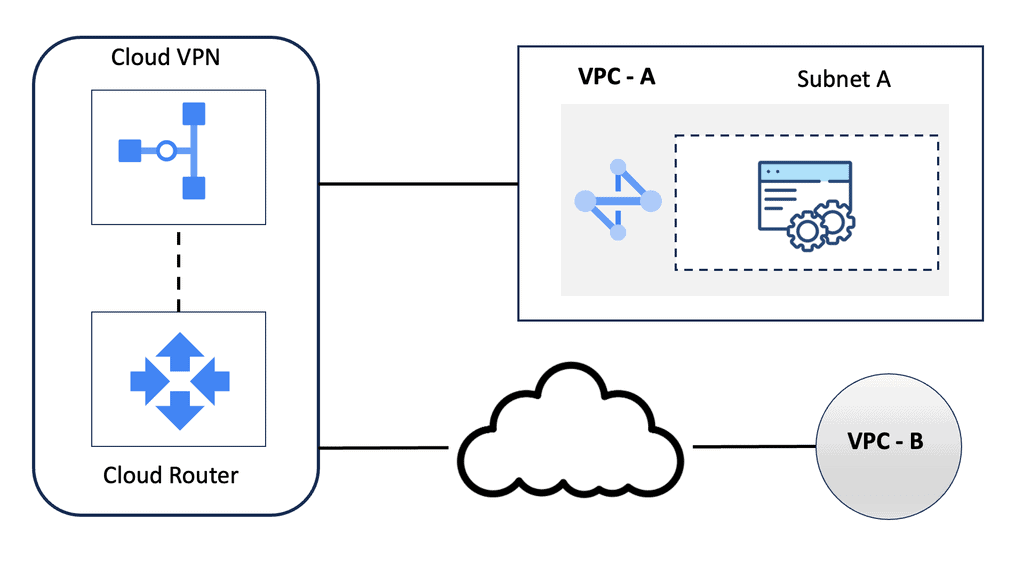

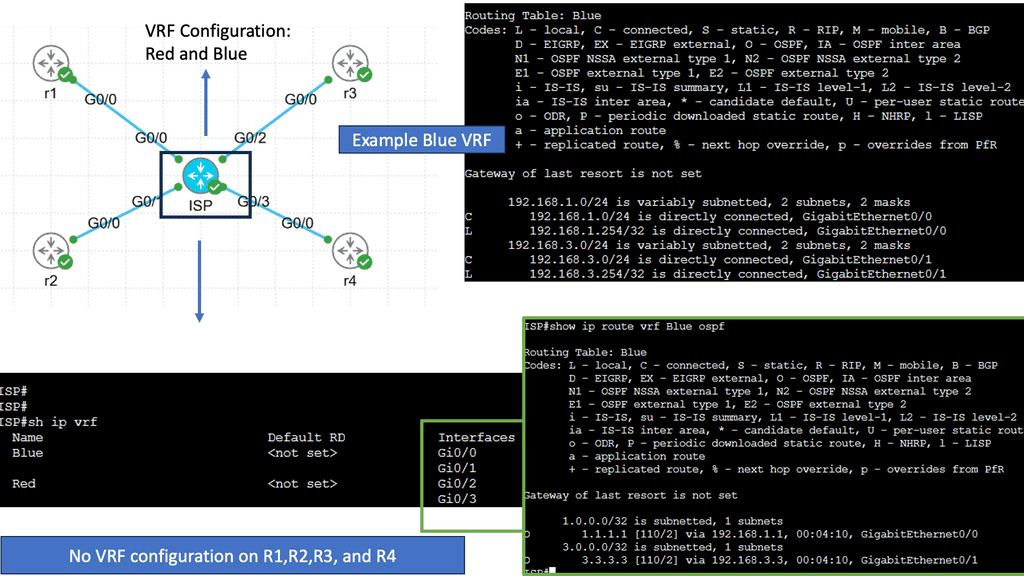

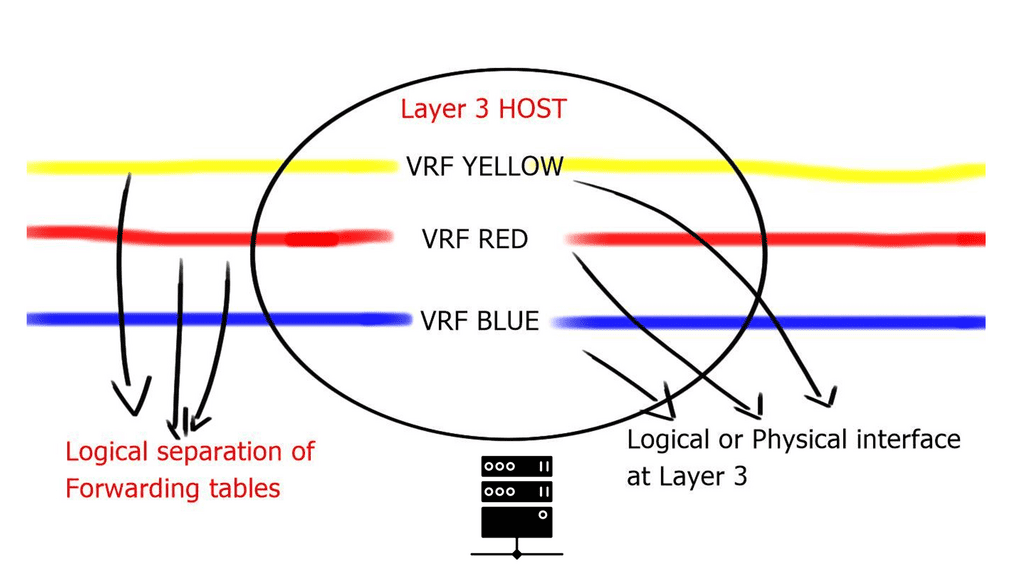

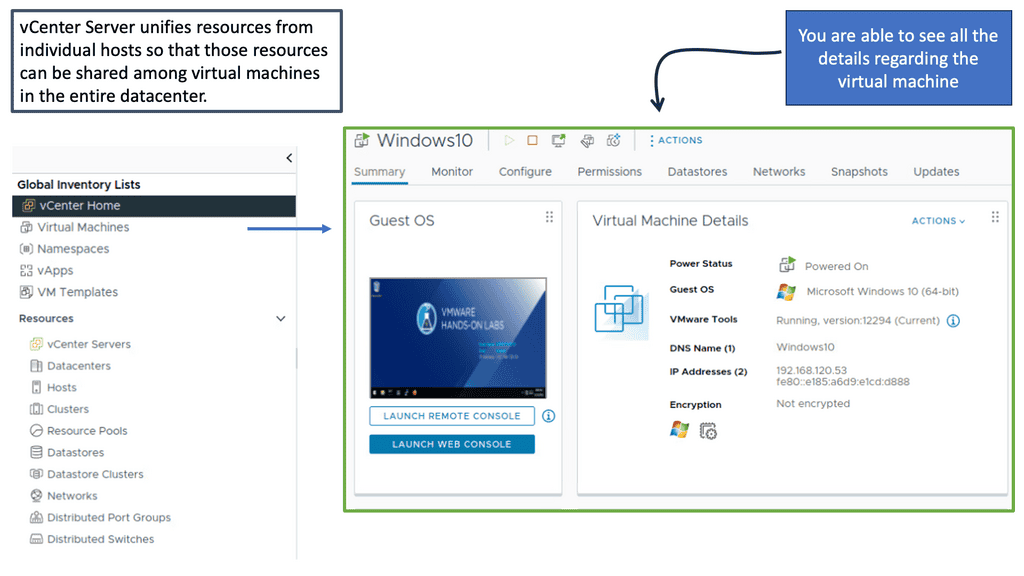

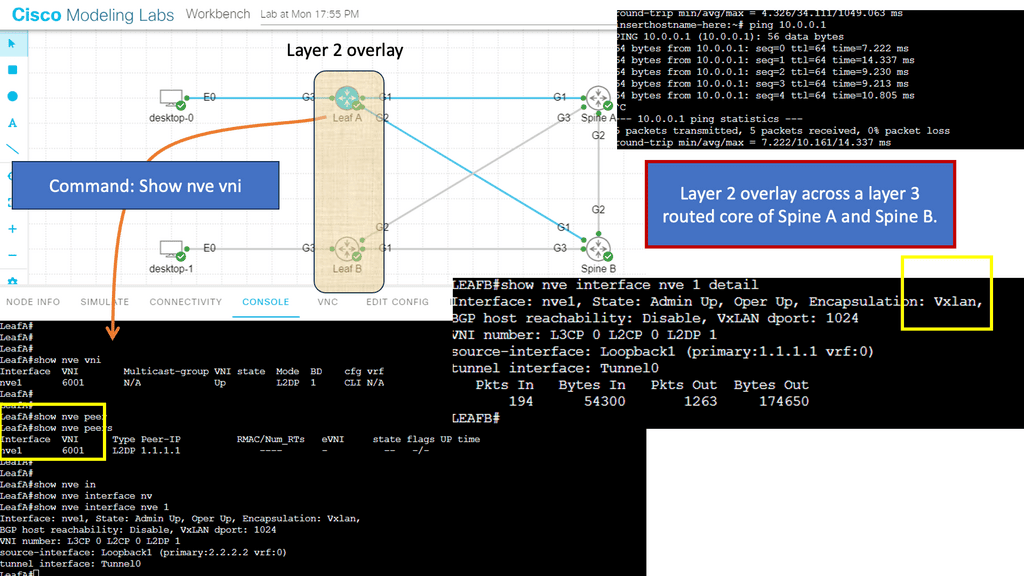

Virtualization (which generally indicates server virtualization when used as a standalone phrase) refers to the abstraction of the application and operating system from the hardware. Similarly, network virtualization is the abstraction of the network endpoints from the physical arrangement of the network. In other words, network virtualization permits you to group or arrange endpoints on a network independent from their physical location.

Network Virtualization refers to forming logical groupings of endpoints on a network. In this case, the endpoints are abstracted from their physical locations so that VMs (and other assets) can look, behave, and be managed as if they are all on the same physical segment of the network.

Importance of Network Functions:

Network functions are the backbone of modern communication systems, making them essential for businesses, organizations, and individuals. They provide the necessary infrastructure to connect devices, transmit data, and facilitate the exchange of information reliably and securely. Without network functions, our digital interactions, such as accessing websites, making online payments, or conducting video conferences, would be nearly impossible.

Types of Network Functions:

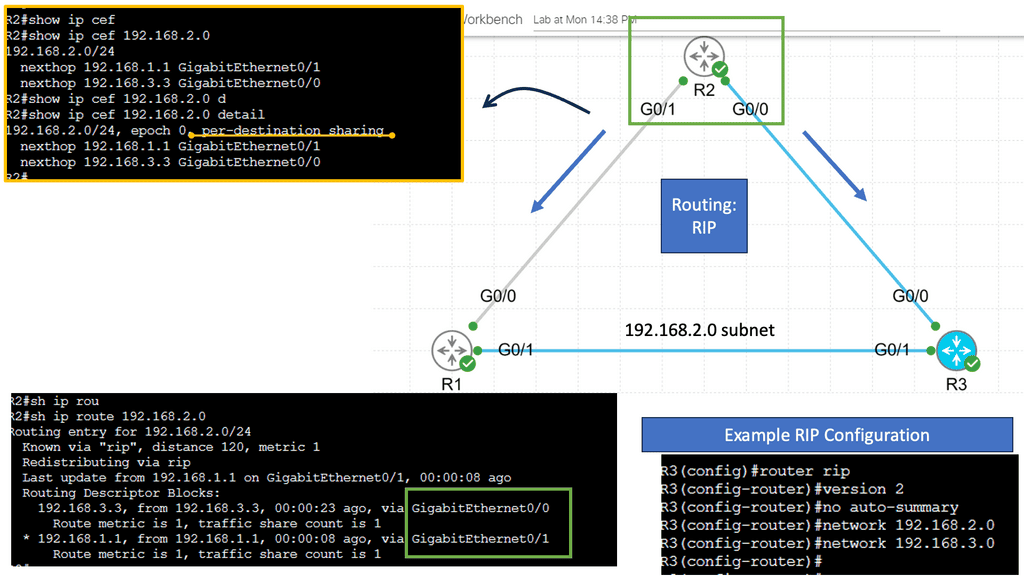

1. Routing: Routing functions enable forwarding data packets between different networks, ensuring that information reaches its intended destination. This process involves selecting the most efficient path for data transmission based on network congestion, bandwidth availability, and network topology.

2. Switching: Switching functions allow data packets to be forwarded within a local network, connecting devices within the same network segment. Switches efficiently direct packets to their intended destination, minimizing latency and optimizing network performance.

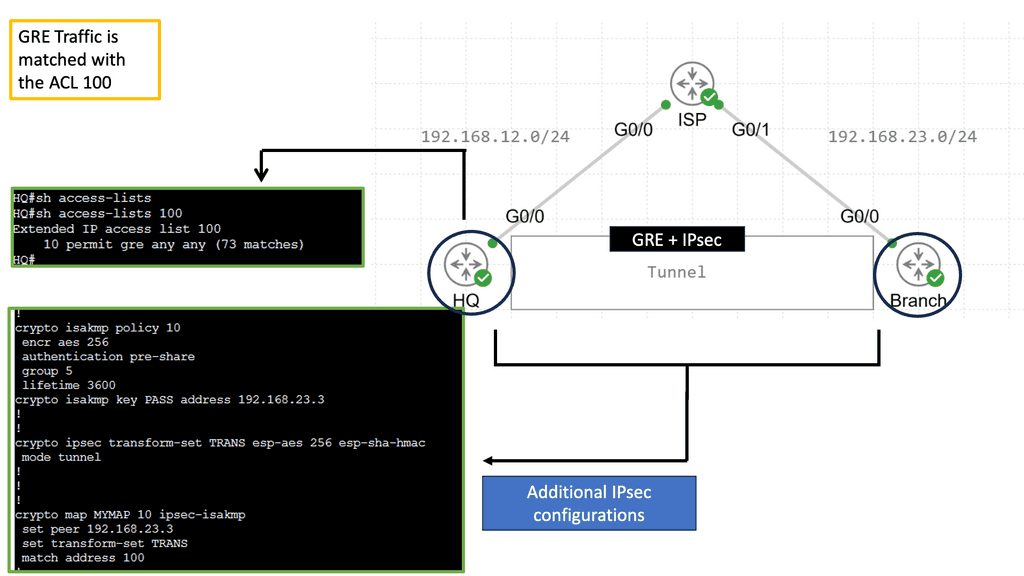

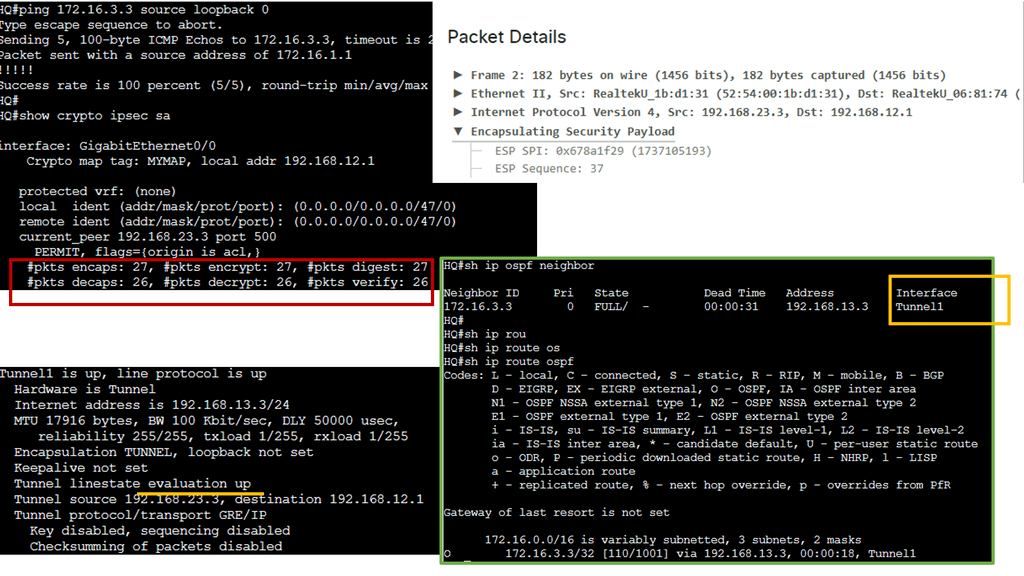

3. Firewalls: Firewalls act as barriers between internal and external networks, protecting against unauthorized access and potential security threats. They monitor incoming and outgoing traffic, filtering and blocking suspicious or malicious data packets.

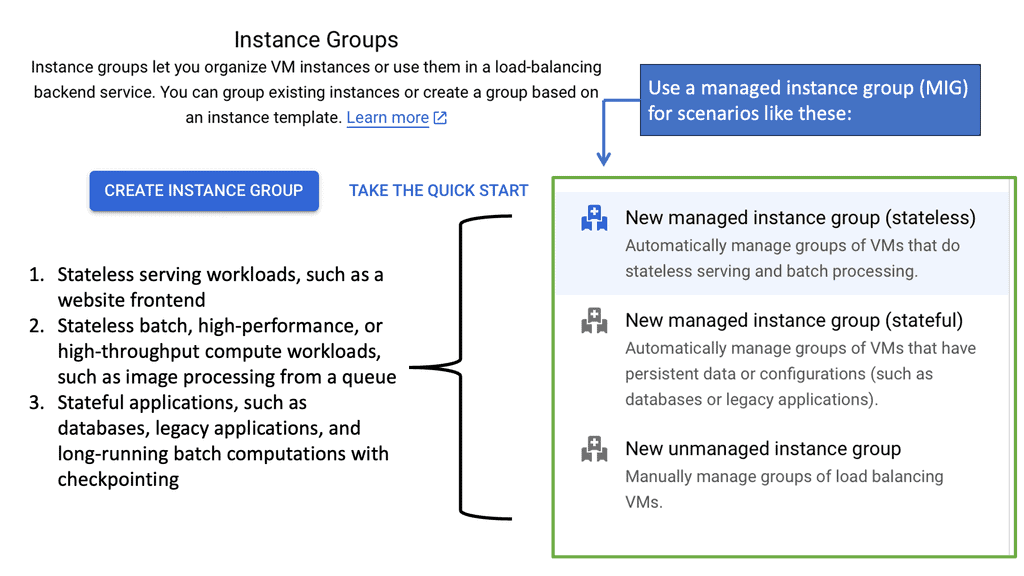

4. Load Balancing: Load balancing distributes network traffic across multiple servers to prevent overloading and ensure optimal resource utilization. Load balancing enhances network performance, scalability, and reliability by evenly distributing workloads.

5. Network Address Translation (NAT): NAT allows multiple devices within a private network to share a single public IP address. It translates private IP addresses into public ones, enabling communication with external networks while maintaining the security and privacy of internal devices.

6. Intrusion Detection Systems (IDS): An IDS monitors network traffic for signs of intrusion or malicious activity. It analyzes data packets, identifies potential threats, and generates alerts or takes preventive action to safeguard the network from unauthorized access or attacks.

**What is State**

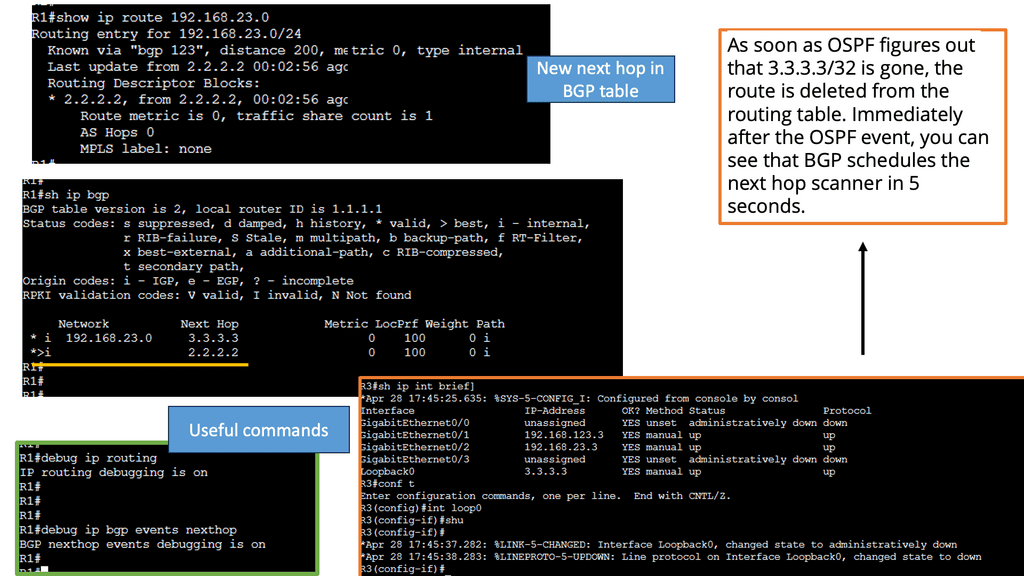

Before we delve into potential solutions to this problem, mainly the introduction of stateless network functions, let us first describe the different types of state. There are two: dynamic and static. The dynamic state is continuously updated by the network function as it processes traffic, and could be anything from a firewall’s connection information to a load balancer’s server mappings.

The static state, by contrast, includes things like pre-configured firewall rules or the IPS signature database. The dynamic state must persist across instance failures and be available to the network functions when scaling in or out. The static state is easier to handle: it is readily available and can be replicated to a network function instance at boot time.
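The distinction between the two kinds of state can be sketched as a pair of data structures. This is an illustrative sketch only; `StaticState`, `DynamicState`, and `boot_instance` are hypothetical names, and the rule and signature strings are placeholders.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StaticState:
    """Configured ahead of time and identical on every instance."""
    firewall_rules: tuple
    ips_signatures: tuple


@dataclass
class DynamicState:
    """Created and updated by live traffic, for example a connection table."""
    connections: dict = field(default_factory=dict)

    def record(self, flow, status):
        self.connections[flow] = status


def boot_instance(static: StaticState):
    """A new instance gets the shared static state but an empty dynamic state."""
    return static, DynamicState()


static = StaticState(firewall_rules=("allow tcp 443",), ips_signatures=("sig-1",))
_, first_dynamic = boot_instance(static)
first_dynamic.record(("10.0.0.5", 40000, "198.51.100.7", 443, "tcp"), "ESTABLISHED")

# A second instance boots with the same static state and no connection entries.
second_static, second_dynamic = boot_instance(static)
```

The second instance can be handed the rules at boot, but it knows nothing about the connection the first instance recorded. That missing piece is the dynamic state that has to live somewhere else if instances are to be interchangeable.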

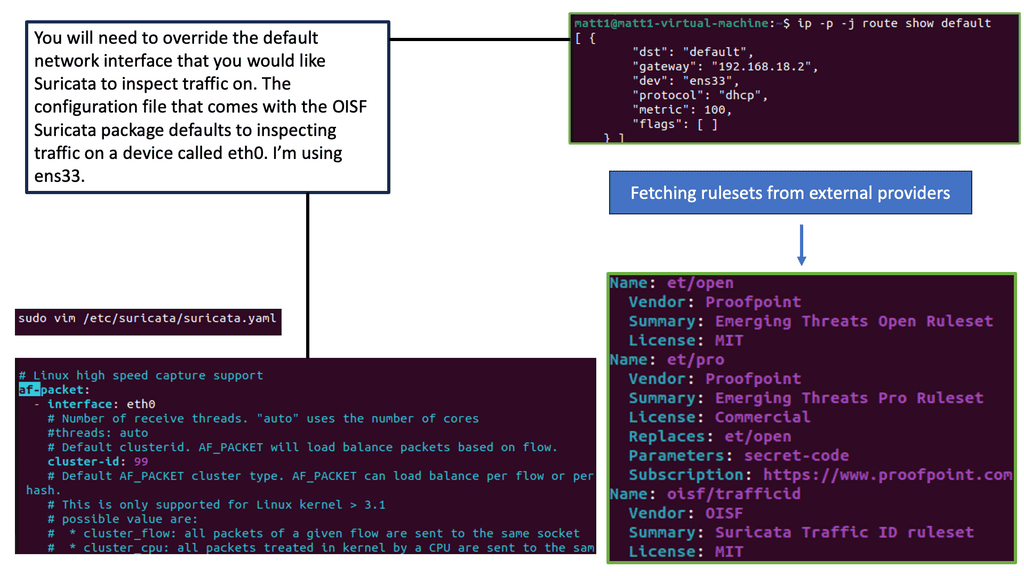

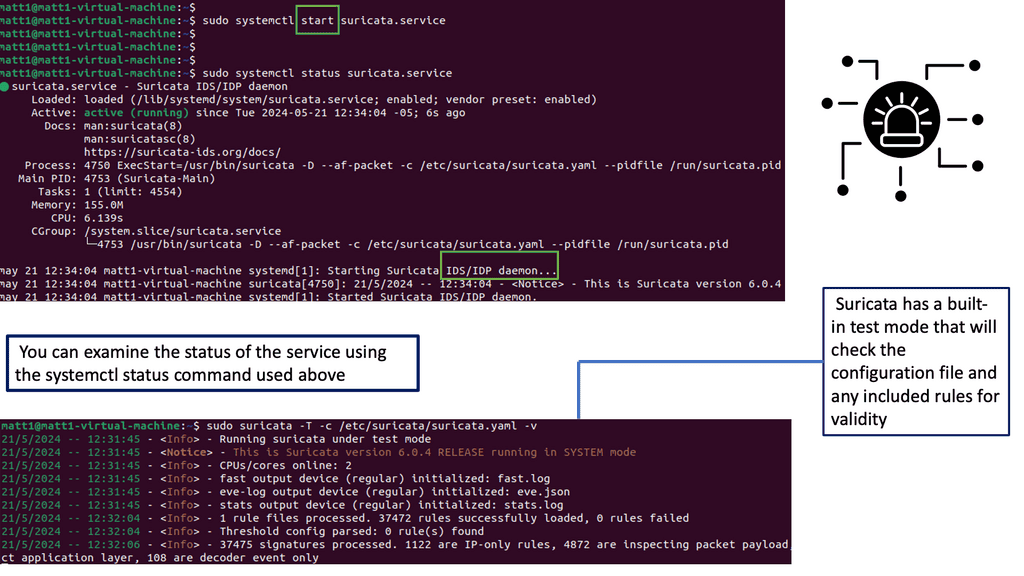

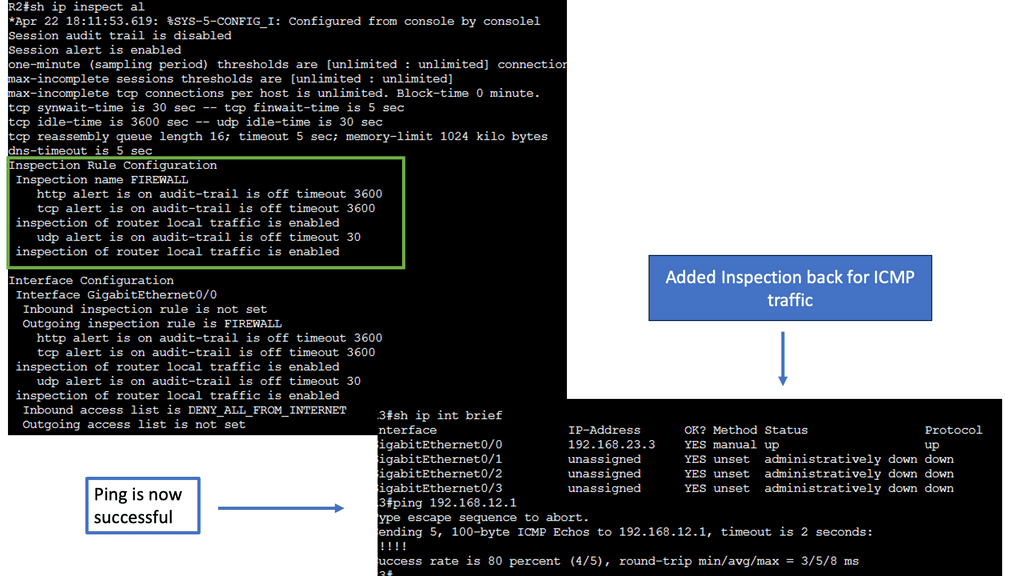

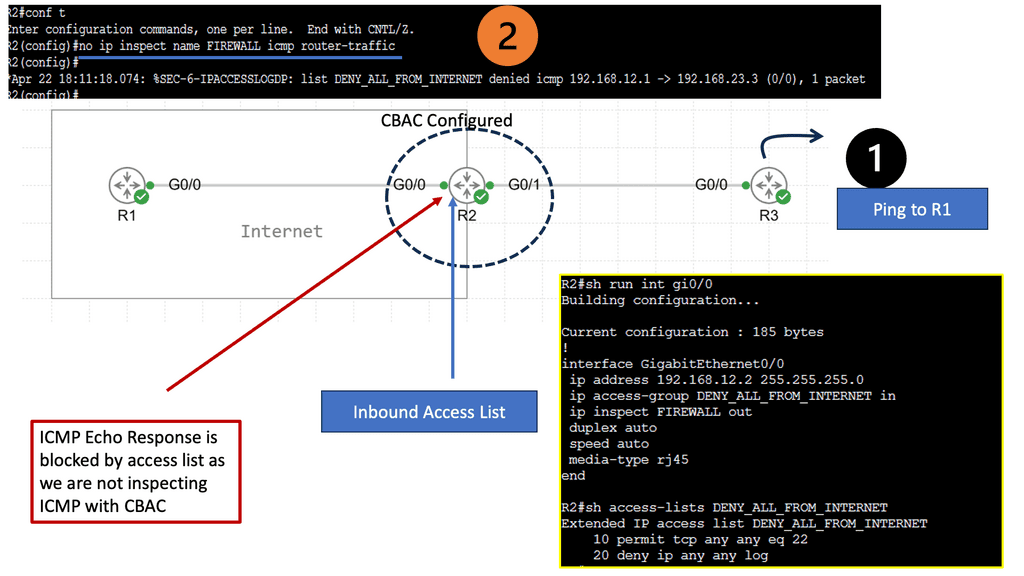

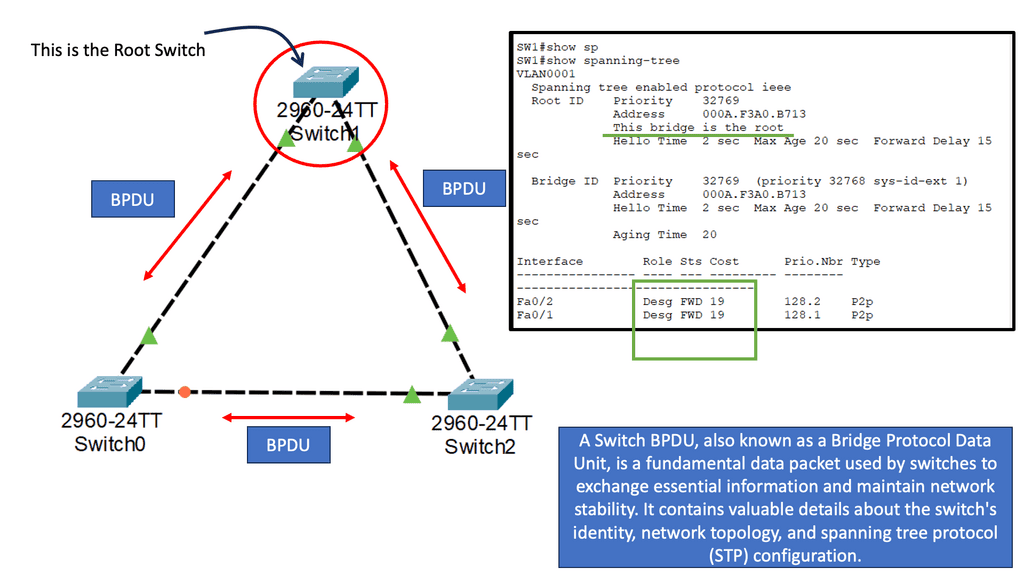

Example Stateful Technology: Cisco CBAC Stateful Firewall

**How CBAC Works: Stateful Inspection Explained**

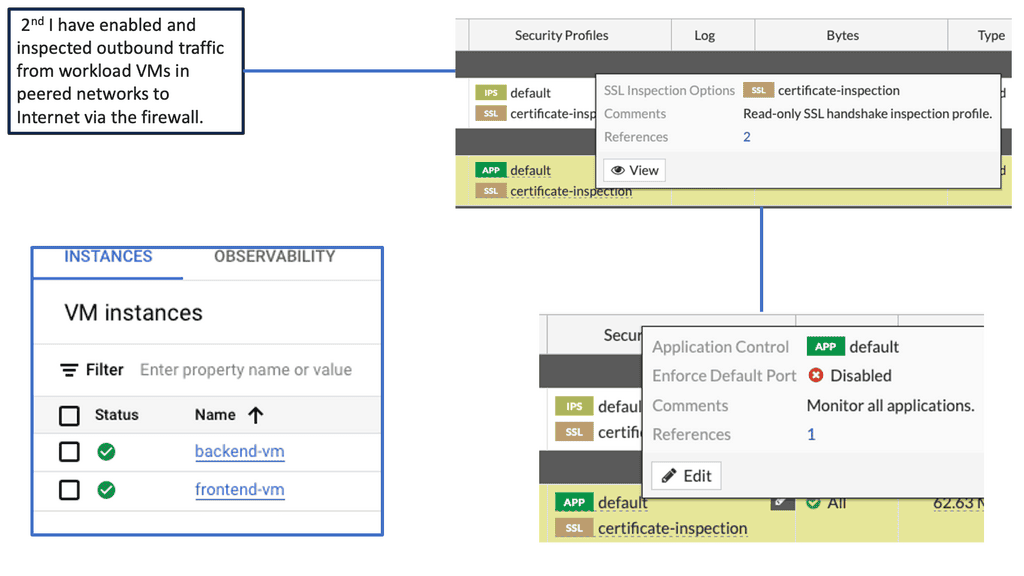

At its core, CBAC (Context-Based Access Control) functions as a stateful firewall, which means it monitors the state of active connections and makes decisions based on the context of the traffic. Unlike stateless firewalls that merely assess packet headers, CBAC inspects the traffic stream and remembers the state of each connection. This enables it to block unauthorized access while allowing legitimate traffic to flow smoothly. By maintaining a state table, CBAC can dynamically filter packets based on the context of the communication session, providing a more nuanced and effective security measure.
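The state-table idea can be sketched in a few lines of Python. This is a simplified illustration of stateful inspection in general, not Cisco's CBAC implementation, which also inspects application protocols and ages out entries. The class and method names are hypothetical.

```python
class StatefulFirewall:
    """Toy stateful firewall: return traffic is allowed only if it matches
    a session that an inside host opened earlier."""

    def __init__(self):
        # Each entry is (inside_ip, inside_port, outside_ip, outside_port).
        self.state_table = set()

    def outbound(self, src, sport, dst, dport):
        # Remember the session so that the reply can be matched later.
        self.state_table.add((src, sport, dst, dport))
        return "allow"

    def inbound(self, src, sport, dst, dport):
        # A reply carries the same addresses and ports with the roles reversed.
        if (dst, dport, src, sport) in self.state_table:
            return "allow"
        return "deny"


fw = StatefulFirewall()
fw.outbound("10.0.0.5", 40000, "198.51.100.7", 443)
reply = fw.inbound("198.51.100.7", 443, "10.0.0.5", 40000)        # matches the entry
unsolicited = fw.inbound("198.51.100.9", 443, "10.0.0.5", 40000)  # no entry
```

Here `reply` is `"allow"` and `unsolicited` is `"deny"`. The decision depends on `state_table`, which lives inside this one firewall object; that local table is the state the rest of this post is concerned with.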

**Stateless Network Functions**

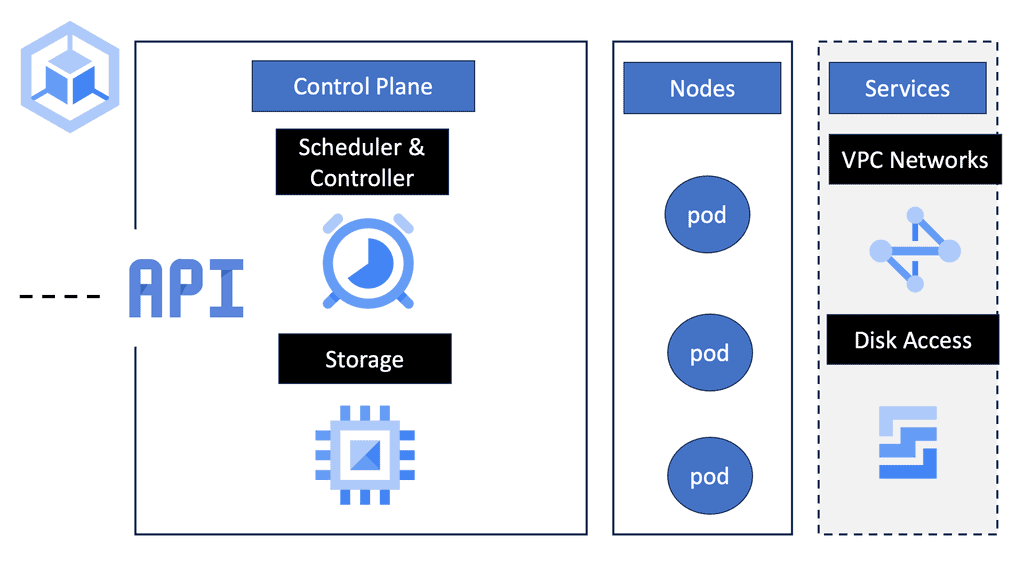

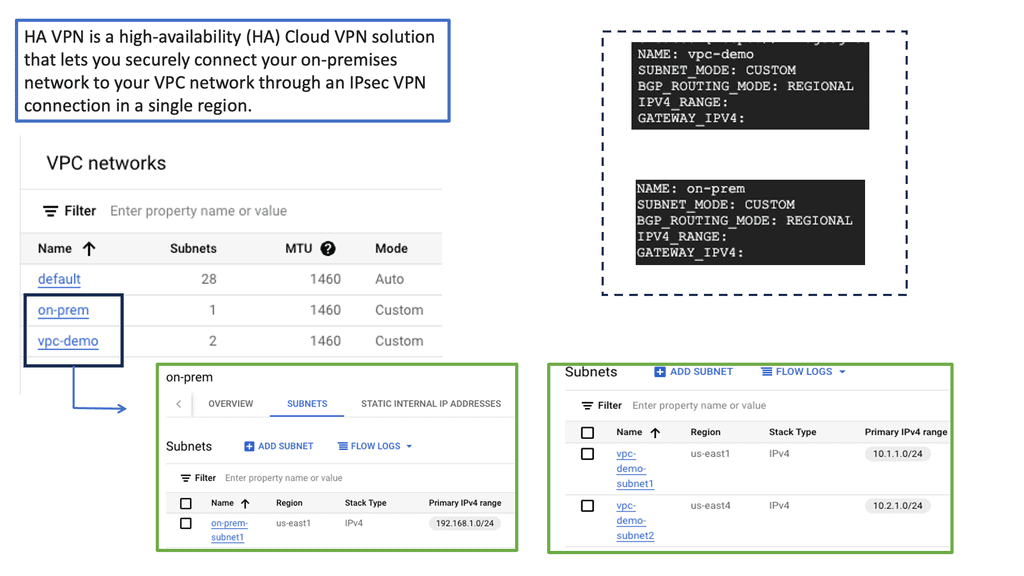

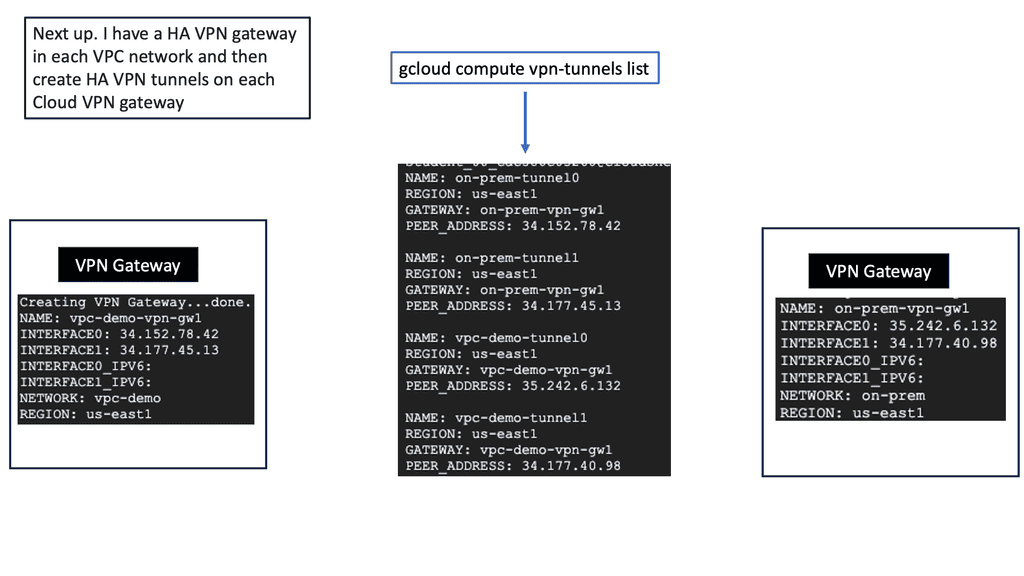

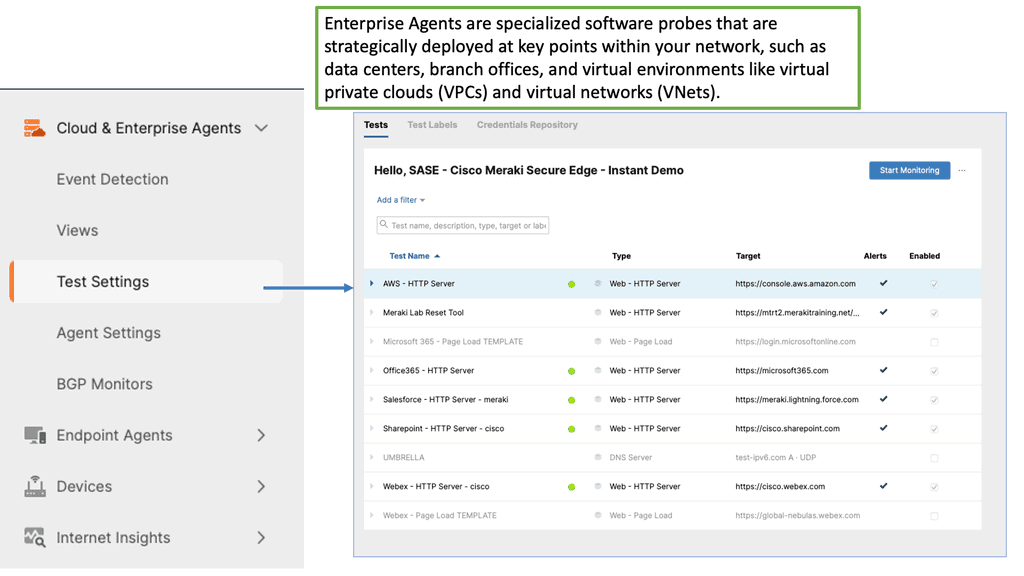

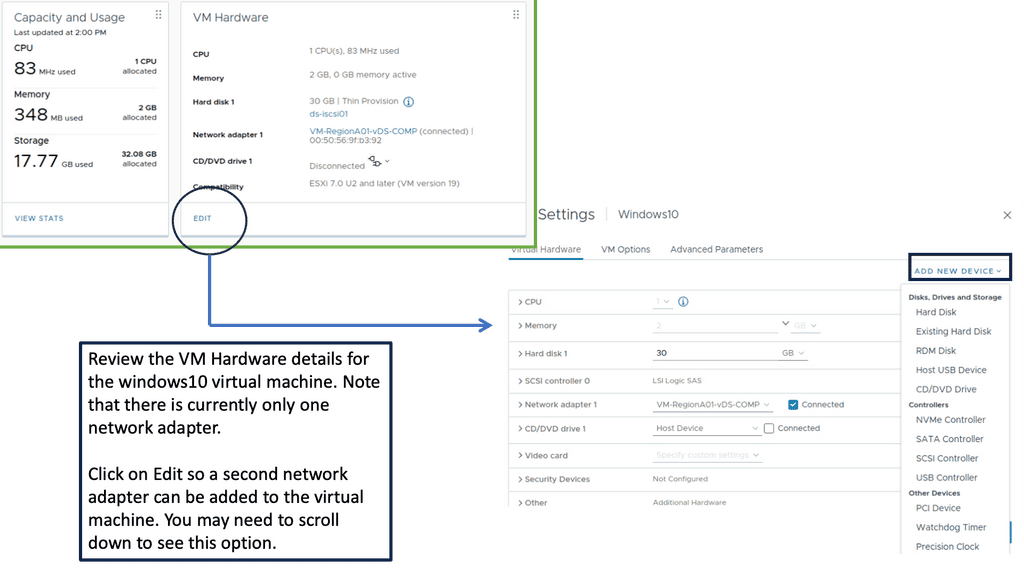

Stateless Network Functions are a new and disruptive technology that decouples the design of network functions into a stateless processing component and a data store layer. An orchestration layer is also needed to monitor the network function instances for load and failure and adjust the number of instances accordingly.
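As a rough sketch of what the orchestration layer's decision could look like, the function below sizes the pool from the load reported by healthy instances. The sizing rule, the 70% target utilization, and the name `desired_instances` are assumptions made for illustration; the post does not prescribe a particular policy.

```python
import math


def desired_instances(loads, capacity, target_utilization=0.7, minimum=1):
    """Return how many instances should be running.

    loads: per-instance load (for example packets per second);
           None marks an instance that has failed.
    capacity: load one instance can handle at 100% utilization.
    """
    healthy = [load for load in loads if load is not None]
    total = sum(healthy)
    needed = math.ceil(total / (capacity * target_utilization))
    return max(minimum, needed)
```

For example, `desired_instances([900, 950, 920], capacity=1000)` returns `4`, so the orchestrator would add one instance. Because the instances hold no state, the new one can take traffic as soon as it starts.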

Decoupling the state from a network function enables a more elastic and resilient infrastructure. So how does this work? From a 20,000-foot view, the network functions become stateless. The statefulness of the application, such as a stateful firewall, is maintained by storing the state in a separate data store. The data store provides the resilience of the state. No state is stored on the individual network functions themselves.
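The following Python sketch shows the shape of this design for a NAT function. `DataStore` is an in-memory stand-in for a remote key-value store, and all class, method, and key names are hypothetical. A real distributed store would also need atomic operations for port allocation, which this sketch glosses over.

```python
class DataStore:
    """In-memory stand-in for a remote, low-latency key-value store."""

    def __init__(self):
        self._data = {}

    def read(self, key):
        return self._data.get(key)

    def write(self, key, value):
        self._data[key] = value

    def write_if_absent(self, key, value):
        # Returns the stored value, whether it was just written or already there.
        return self._data.setdefault(key, value)


class StatelessNat:
    """NAT instance that keeps no mappings locally; everything is in the store."""

    def __init__(self, store, public_ip):
        self.store = store
        self.public_ip = public_ip

    def _allocate_port(self):
        # The port counter lives in the store too (not atomic in this sketch).
        next_port = self.store.read("next_port") or 20000
        self.store.write("next_port", next_port + 1)
        return next_port

    def translate_outbound(self, src_ip, src_port):
        key = ("nat", src_ip, src_port)
        mapping = self.store.read(key)
        if mapping is None:
            candidate = (self.public_ip, self._allocate_port())
            mapping = self.store.write_if_absent(key, candidate)
            self.store.write(("nat-rev", *mapping), (src_ip, src_port))
        return mapping

    def translate_inbound(self, public_ip, public_port):
        return self.store.read(("nat-rev", public_ip, public_port))


store = DataStore()
instance_a = StatelessNat(store, "203.0.113.1")
instance_b = StatelessNat(store, "203.0.113.1")
mapping = instance_a.translate_outbound("10.0.0.5", 40000)
```

After `instance_a` creates the mapping, `instance_b.translate_inbound(*mapping)` returns `("10.0.0.5", 40000)` even though `instance_b` never saw the outbound packet. Either instance can be discarded without losing the mapping.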

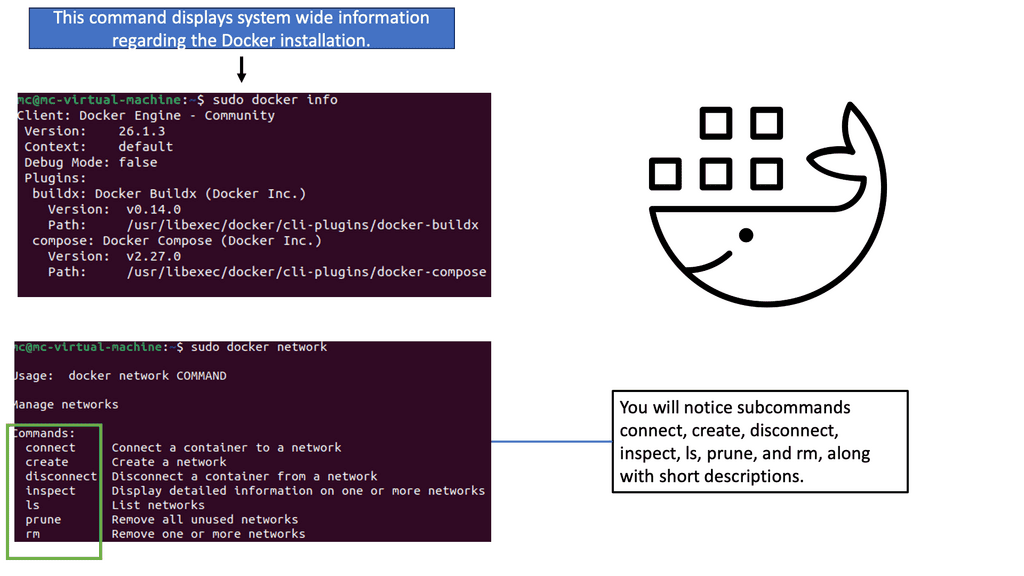

Datastore Example:

The data store can be, for example, RAMCloud. RAMCloud is a distributed key-value storage system that provides high-speed storage for large-scale applications. It is designed for many servers needing low-latency access to a durable data store. Because RAMCloud keeps all data in DRAM, the network functions can read RAMCloud objects remotely over the network in as little as 5μs.

**Advantages of stateless network functions**

Stateless network functions may not suit every case, but the approach works for common network functions that can be redesigned to be stateless, such as the stateful firewall, intrusion prevention system, network address translator, and load balancer. Removing the state and placing it in a data store brings many advantages to network management.

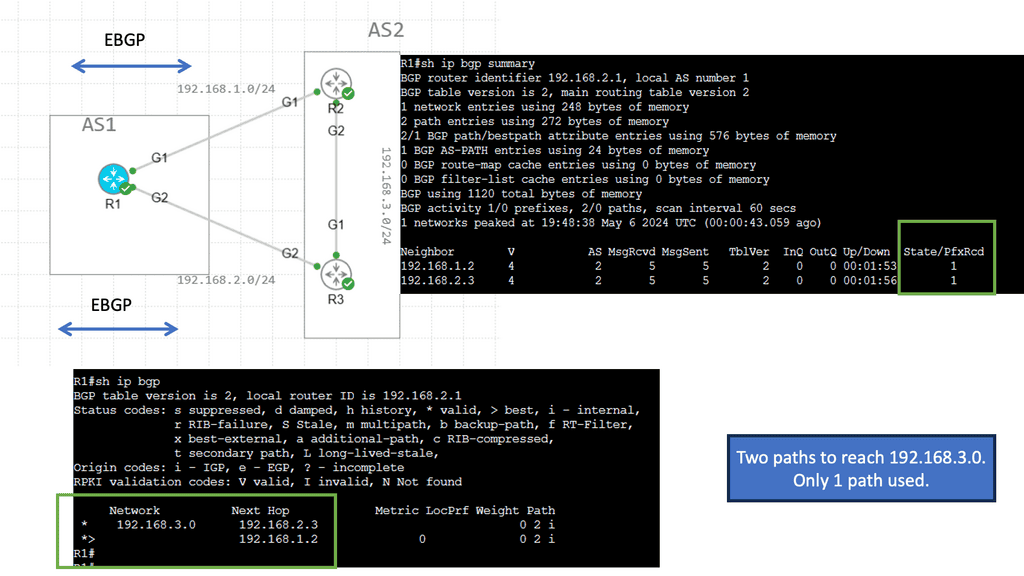

First, elasticity: as the state is accessed via a data store, a new instance can be launched and traffic directed to it immediately. Secondly, resilience: a new instance can be spawned instantaneously upon failure. Finally, as any instance can handle an individual packet, packets traversing different paths no longer suffer from asymmetric and multi-path routing issues.

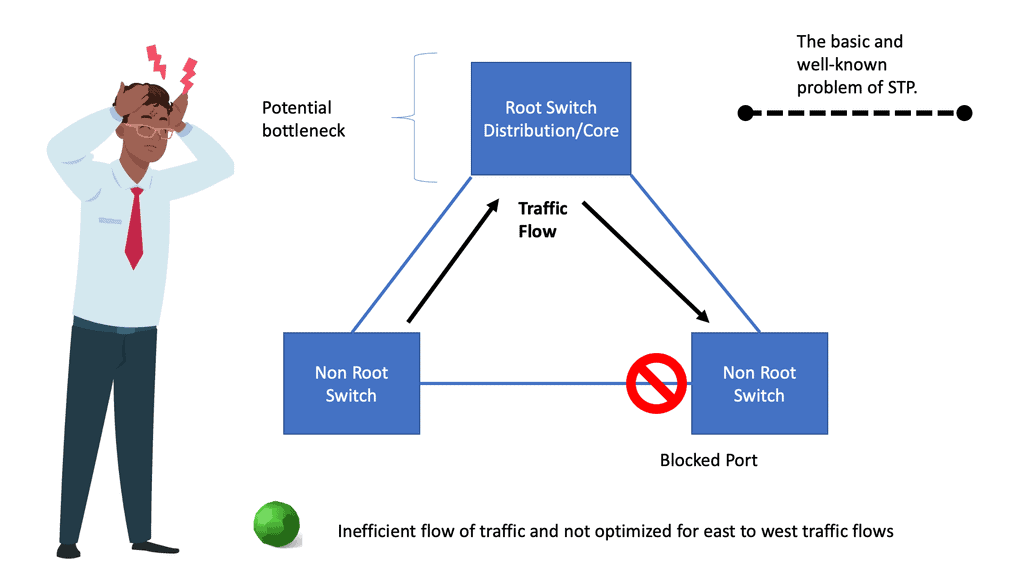

Problems with having state: Failure

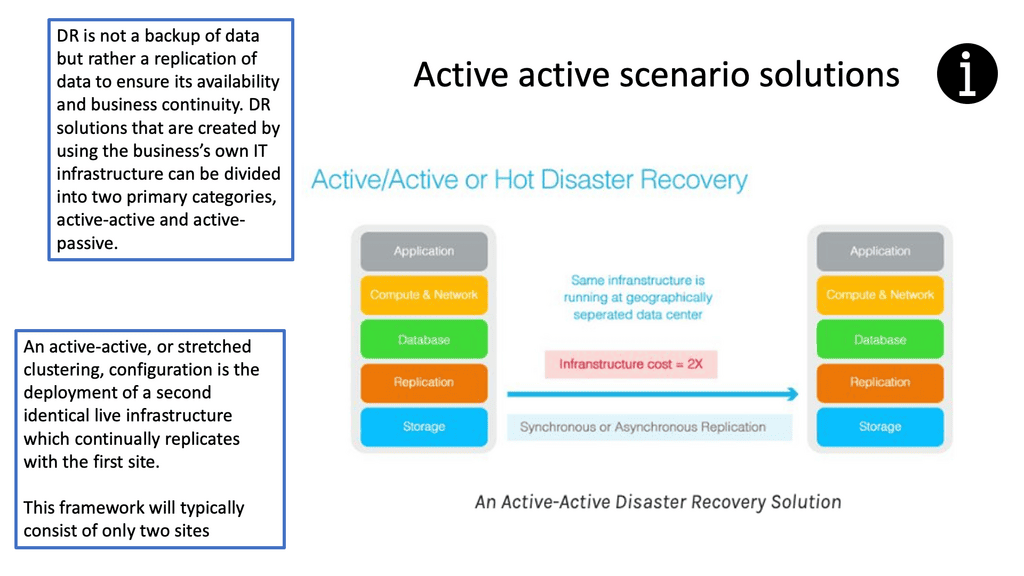

The majority of network designs have redundancy built in. It sounds easy: when one data center fails, let the secondary take over. When the data center interconnect (DCI) is configured correctly, everything should work upon failover, correct?

Let’s not forget about one little thing called state when the design has a firewall in each data center. The network address translation (NAT) function in the primary data center stores the mappings for two flows; let’s call them F1 and F2. Upon failure, the second firewall in the other data center takes over, and traffic is directed to the new firewall. However, the second firewall has no entries for flows F1 and F2.

This results in a failed lookup; existing connections time out, causing application failure. Asymmetric routing causes the same problem. If one firewall holds the established state for a client-to-server connection (created by the SYN packet) and the return SYN-ACK passes through a different firewall, the packet fails the lookup and is dropped.
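The failover problem can be reproduced with a toy model in Python. The `NatFirewall` class and the translated addresses are hypothetical; the only point is that each firewall keeps its mapping table in its own memory.

```python
class NatFirewall:
    """Toy stateful NAT firewall with a mapping table held in local memory."""

    def __init__(self, name):
        self.name = name
        self.mappings = {}

    def open_flow(self, flow, translated):
        self.mappings[flow] = translated

    def forward(self, flow):
        if flow in self.mappings:
            return "forwarded"
        return "dropped (failed lookup)"


primary = NatFirewall("dc1")
secondary = NatFirewall("dc2")

# Flows F1 and F2 are established through the primary data center.
primary.open_flow("F1", ("203.0.113.1", 20000))
primary.open_flow("F2", ("203.0.113.1", 20001))

before_failover = primary.forward("F1")
# The primary fails and traffic is redirected to the secondary firewall.
after_failover = secondary.forward("F1")
```

`before_failover` is `"forwarded"`, while `after_failover` is `"dropped (failed lookup)"` because the secondary never learned the mapping. The same lookup failure occurs when a return packet takes an asymmetric path through the other firewall.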

Some have tried to design distributed active-active firewalls to solve layer three issues and asymmetrical traffic flow over the stateful firewalls. The solution looks perfect. Configure both wide area network (WAN) routers to advertise the same IP prefix to the outside world.

This attracts inbound traffic and passes it through the nearest firewall. Nice and easy. The active-active firewalls would exchange flow information, solving the asymmetrical flow problems. In practice, however, distributing active-active firewall state across data centers looks better in PowerPoint than it works in real life.

Problems with having state: Scaling

The tight coupling of state can also cause problems when scaling network functions. Scaling out a NAT function has the same effect as a NAT box failure: packets from a flow that was established on one instance, when directed to a new instance, result in a failed lookup.
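To see why scale-out disturbs existing flows, consider a sketch in which traffic is spread across instances by hashing a flow identifier modulo the instance count. That distribution scheme is an assumption made for this example, as are the function names; other schemes move fewer flows but still move some.

```python
import hashlib


def pick_instance(flow_id: str, instance_count: int) -> int:
    """Map a flow to an instance index with a stable hash (hypothetical scheme)."""
    digest = hashlib.sha256(flow_id.encode()).digest()
    return int.from_bytes(digest[:8], "big") % instance_count


def moved_flows(flow_ids, before: int, after: int):
    """Flows that land on a different instance after the pool is resized."""
    return [
        flow for flow in flow_ids
        if pick_instance(flow, before) != pick_instance(flow, after)
    ]


flows = [f"flow-{i}" for i in range(1000)]
moved = moved_flows(flows, before=2, after=3)
print(f"{len(moved)} of {len(flows)} flows change instance")
```

With local state, every flow in `moved` arrives at an instance that has no entry for it and fails the lookup. With the state in a shared data store, the same reshuffling is harmless because any instance can fetch the entry.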

Network functions form the foundation of modern communication systems, enabling us to connect, share, and collaborate in a digitized world. Network functions ensure smooth and secure data flow across networks by performing vital tasks such as routing, switching, firewalls, load balancing, NAT, and IDS. Understanding the significance of these functions is crucial for businesses and individuals to harness the full potential of the interconnected world we live in today.

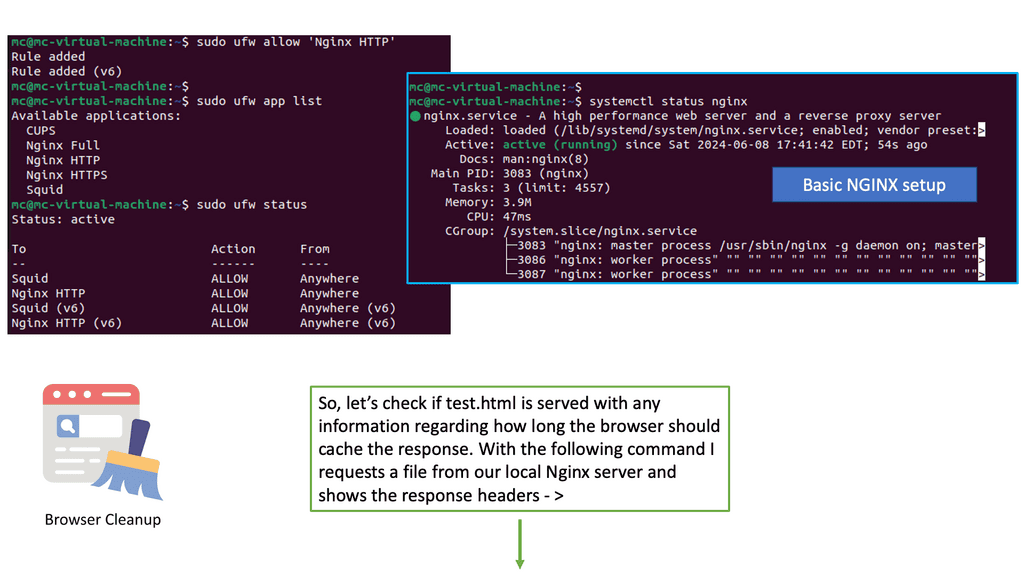

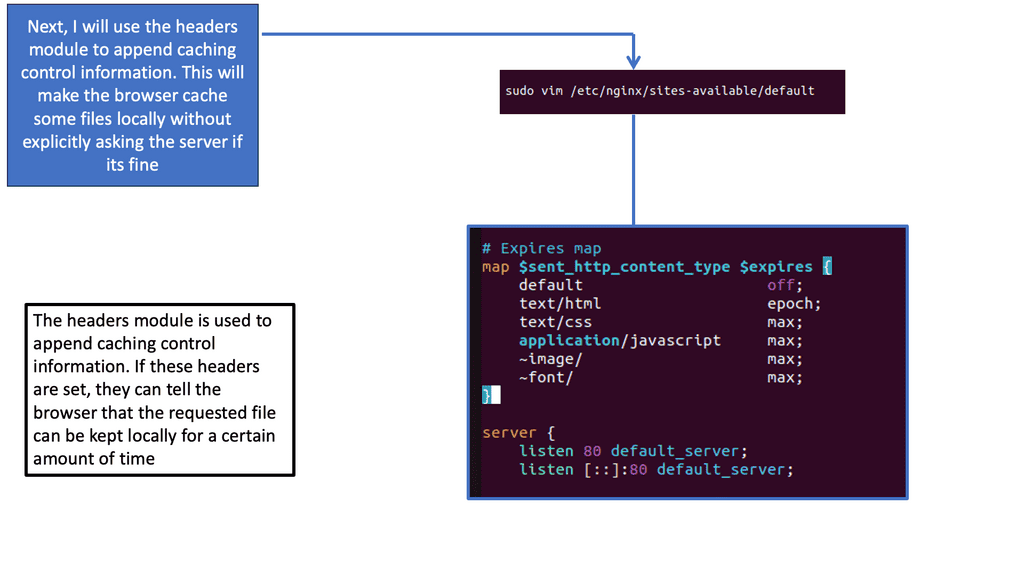

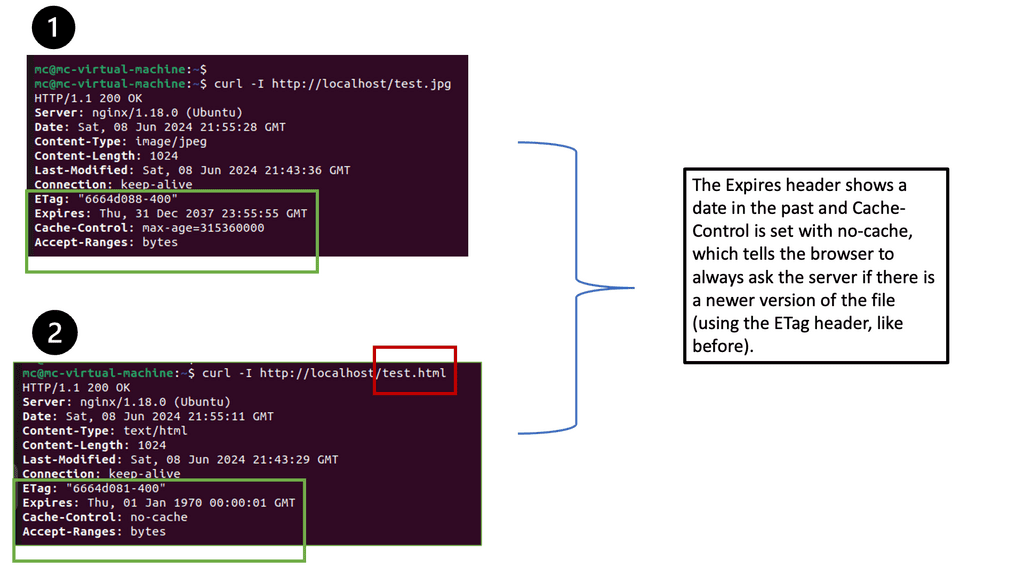

Example Technology: Browser Caching

Understanding Browser Caching

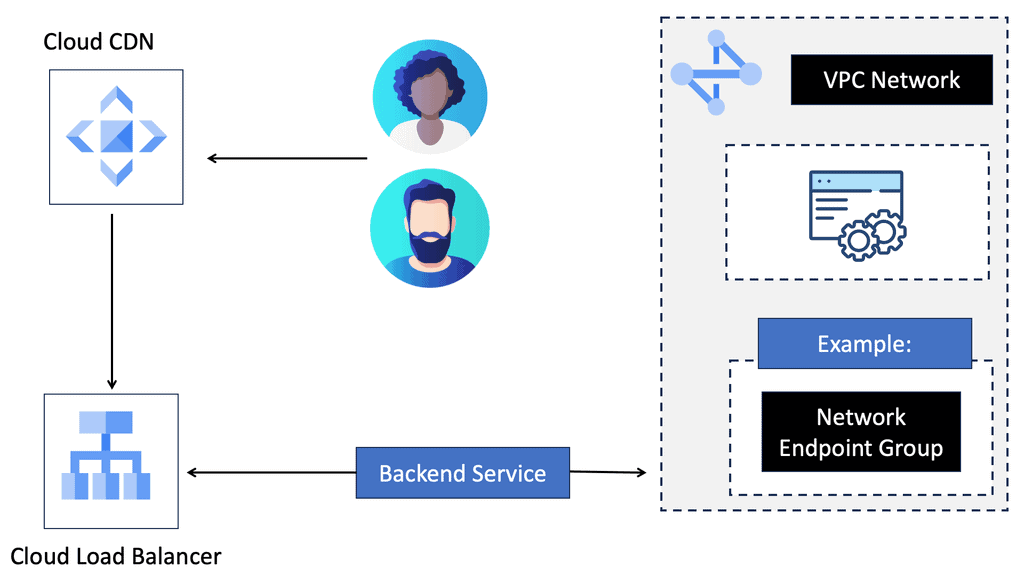

Browser caching is a mechanism that allows web browsers to store static resources, such as images, CSS files, and JavaScript, locally on a user’s device. When a user revisits a website, the browser can retrieve these cached resources instead of downloading them again from the server. This results in faster page load times and reduced server load.

Nginx, a popular open-source web server, includes a headers module (ngx_http_headers_module) that enables fine-grained control over HTTP response headers. With this module, you can configure browser caching to instruct clients on how long to cache specific resources. By using the ‘expires’ and ‘add_header’ directives, which set the ‘Expires’ and ‘Cache-Control’ response headers, you can set expiration times for different file types.

Summary: Removing State From Network Functions

In networking, the concept of state plays a crucial role in determining the behavior and functionality of network functions. However, a paradigm shift is underway as experts explore the potential of removing the state from network functions. In this blog post, we delved into the significance of this approach and how it is revolutionizing the networking landscape.

Understanding State in Network Functions

In the context of networking, state refers to the stored information that network devices maintain about ongoing communications. It includes connection status, session data, and routing information. Stateful network functions have traditionally been widely used, allowing for complex operations and enhanced control. However, they also come with certain limitations.

The Limitations of Stateful Network Functions

While stateful network functions have played a crucial role in shaping modern networks, they also introduce challenges. One notable limitation is the increased complexity and overhead introduced by state management. The need to store and update state information for each communication session can lead to scalability and performance issues, especially in large-scale networks. Additionally, stateful functions are more susceptible to failures and require synchronization mechanisms, making them less resilient.

The Emergence of Stateless Network Functions

The concept of stateless network functions provides a promising alternative to overcome the limitations of their stateful counterparts. In stateless functions, the processing of network packets is decoupled from maintaining any session-specific information. This approach simplifies the design and implementation of network functions, offering benefits such as improved scalability, reduced resource consumption, and enhanced fault tolerance.

Benefits and Use Cases

Removing state from network functions brings a multitude of benefits. Stateless functions allow easier load balancing and horizontal scaling, as they don’t rely on session affinity. They enable better resource utilization, as there is no need to maintain per-session state information. Stateless functions also enhance network resilience, as they are not dependent on maintaining a synchronized state across multiple instances.

Stateless network functions have diverse and expanding use cases. They are well-suited for cloud-native applications, microservices architectures, and distributed systems. Organizations can build more flexible and scalable networks by leveraging stateless functions, supporting dynamic workloads and rapidly evolving infrastructure requirements.

Conclusion:

Removing the state from network functions marks a significant shift in the networking landscape. Stateless functions offer improved scalability, reduced complexity, and enhanced fault tolerance. As the demand for agility and scalability grows, embracing stateless network functions becomes paramount. By harnessing this approach, organizations can build resilient, efficient, and future-ready networks.