Event Stream Processing

Event Stream Processing (ESP) has emerged as a groundbreaking technology, transforming the landscape of real-time data analytics. In this blog post, we will delve into the world of ESP, exploring its capabilities, benefits, and potential applications. Join us on this exciting journey as we uncover the untapped potential of event stream processing.

ESP is a cutting-edge technology that allows for the continuous analysis of high-velocity data streams in real-time. Unlike traditional batch processing, ESP enables organizations to harness the power of data as it flows, making instant insights and actions possible. By processing data in motion, ESP empowers businesses to react swiftly to critical events, leading to enhanced decision-making and improved operational efficiency.

Real-Time Data Processing: ESP enables organizations to process and analyze data as it arrives, providing real-time insights and enabling immediate actions. This capability is invaluable in domains such as fraud detection, IoT analytics, and financial market monitoring.

Scalability and Performance: ESP systems are designed to handle massive volumes of data with low latency. The ability to scale horizontally allows businesses to process data from diverse sources and handle peak loads efficiently.

Complex Event Processing: ESP platforms provide powerful tools for detecting patterns, correlations, and complex events across multiple data streams. This enables businesses to uncover hidden insights, identify anomalies, and trigger automated actions based on predefined rules.
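
As a rough illustration of rule-based pattern detection across streams, the sketch below flags a failed login that is followed by a large withdrawal on the same account within a time window. The event shape, stream names, and thresholds are assumptions made for this example and are not taken from any particular ESP product.

```python
from collections import namedtuple

# Hypothetical event shape: source stream, account key,
# event timestamp in seconds, and a numeric value.
Event = namedtuple("Event", ["stream", "account", "ts", "value"])


def detect_sequence(events, window_s=60, min_amount=1000):
    """Yield (account, login_ts, withdrawal_ts) when a failed login is
    followed by a large withdrawal on the same account within window_s."""
    last_failed_login = {}  # account -> timestamp of most recent failed login
    for ev in events:  # events are assumed to arrive in timestamp order
        if ev.stream == "login_failed":
            last_failed_login[ev.account] = ev.ts
        elif ev.stream == "withdrawal" and ev.value >= min_amount:
            login_ts = last_failed_login.get(ev.account)
            if login_ts is not None and ev.ts - login_ts <= window_s:
                yield (ev.account, login_ts, ev.ts)
```

A production CEP engine would typically express such a rule declaratively and handle out-of-order events; this sketch only shows the core idea of correlating two streams by key and time.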

Financial Services: ESP is revolutionizing the financial industry by enabling real-time fraud detection, algorithmic trading, risk management, and personalized customer experiences.

Internet of Things (IoT): ESP plays a crucial role in IoT analytics by processing massive streams of sensor data in real-time, allowing for predictive maintenance, anomaly detection, and smart city applications.

Supply Chain Optimization: ESP can help organizations optimize their supply chain operations by monitoring and analyzing real-time data from various sources, including inventory levels, logistics, and demand forecasting.

Event stream processing has opened up new frontiers in real-time data analytics. Its ability to process data in motion, coupled with features like scalability, complex event processing, and real-time insights, makes it a game-changer for businesses across industries. By embracing event stream processing, organizations can unlock the true value of their data, gain a competitive edge, and drive innovation in the digital age.

Matt Conran

Highlights: Event Stream Processing

Real-time Data Streams

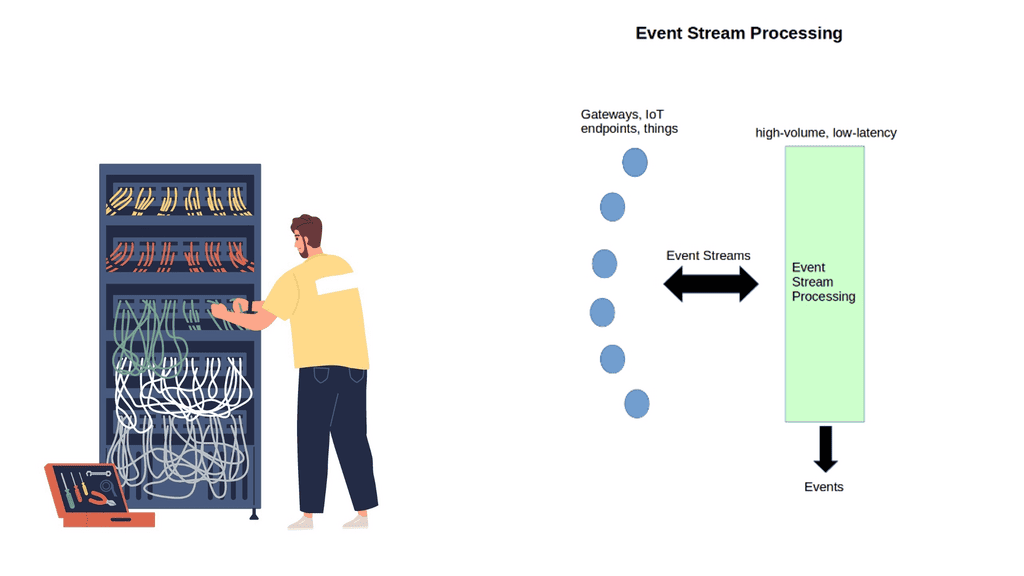

Event Stream Processing, also known as ESP, is a computing paradigm that allows for analyzing and processing real-time data streams. Unlike traditional batch processing, where data is processed in chunks or batches, event stream processing deals with data as it arrives, enabling organizations to respond to events in real time. ESP empowers businesses to make timely decisions, detect patterns, and identify anomalies by continuously analyzing and acting upon incoming data.

Event Stream Processing is a method of analyzing and deriving insights from continuous streams of data in real-time. Unlike traditional batch processing, ESP enables organizations to process and respond to data as it arrives, enabling instant decision-making and proactive actions. By leveraging complex event processing algorithms, ESP empowers businesses to unlock actionable insights from high-velocity, high-volume data streams.

ESP Key Points:

- Real-time Insights: One critical advantage of event stream processing is gaining real-time insights. By processing data as it flows in, organizations can detect and respond to essential events immediately, enabling them to seize opportunities and mitigate risks promptly.

- Scalability and Flexibility: Event stream processing systems are designed to handle massive amounts of real-time data. These systems can scale horizontally, allowing businesses to process and analyze data from multiple sources concurrently. Additionally, event stream processing offers flexibility regarding data sources, supporting various input streams such as IoT devices, social media feeds, and transactional data.

- Fraud Detection: Event stream processing plays a crucial role in fraud detection by enabling organizations to monitor and analyze real-time transactions. By processing transactional data as it occurs, businesses can detect fraudulent activities and take immediate action to prevent financial losses.

- Predictive Maintenance: With event stream processing, organizations can monitor and analyze sensor data from machinery and equipment in real time. By detecting patterns and anomalies, businesses can identify potential faults or failures before they occur, allowing for proactive maintenance and minimizing downtime.

- Supply Chain Optimization: Event stream processing helps optimize supply chain operations by providing real-time visibility into inventory levels, demand patterns, and logistics data. By continuously analyzing these data streams, organizations can make data-driven decisions to improve efficiency, reduce costs, and enhance customer satisfaction.
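
To make the fraud detection point above more concrete, here is a minimal sketch of a sliding-window "velocity" rule that flags a card making too many transactions in a short period. The class name, the limit of three transactions, and the 60-second window are illustrative assumptions, not values from a real fraud system.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flag a card that makes more than max_tx transactions within window_s seconds."""

    def __init__(self, max_tx=3, window_s=60):
        self.max_tx = max_tx
        self.window_s = window_s
        self.recent = defaultdict(deque)  # card_id -> timestamps inside the window

    def observe(self, card_id, ts):
        """Record one transaction; return True if the card now looks suspicious."""
        q = self.recent[card_id]
        q.append(ts)
        # Evict timestamps that have fallen out of the sliding window.
        while q and ts - q[0] > self.window_s:
            q.popleft()
        return len(q) > self.max_tx
```

Each transaction is evaluated as it arrives, so a suspicious burst can be acted on immediately rather than in a later batch report.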

Example: Apache Flink and Stateful Stream Processing

a) Apache Flink provides an intuitive and expressive API for implementing stateful stream processing applications. These applications can be run fault-tolerantly on a large scale. The Apache Software Foundation incubated Flink in April 2014, and it became a top-level project in January 2015. Since its inception, Flink’s community has been very active.

b) Thanks to the contributions of more than five hundred people, Flink has evolved into one of the most sophisticated open-source stream processing engines. Flink powers large-scale, business-critical applications across various industries and regions.

c) In addition to offering superior solutions for many established use cases, such as data analytics, ETL, and transactional applications, stream processing technology facilitates new applications, software architectures, and business opportunities for companies of all sizes.

d) Data and data processing have been ubiquitous in businesses for decades. Companies are designing and building infrastructures to manage data, which has increased over the years. Transactional and analytical data processing are standard in most businesses.

**Massive Amounts of Data**

It’s a common theme that the Internet of Things is all about data. IoT represents a massive increase in data rates from multiple sources that need to be processed and analyzed from various Internet of Things access technologies.

In addition, heterogeneous sensors send and receive a continuous stream of information, which requires real-time processing and intelligent data visualization with event stream processing (ESP) and IoT stream processing.

This data flow and volume shift may easily represent thousands to millions of events per second. It is the most significant kind of “big data” and will exhibit considerably more data than we have seen on the Internet of humans. Processing large amounts of data from multiple sources in real time is crucial for most IoT solutions, making reliability in distributed systems a pivotal factor to consider in the design process.

**Data Transmission**

Data transmitted between things instructs how to act and react to certain conditions and thresholds. Analysis of this data turns data streams into meaningful events, offering unique situational awareness and insight into the thing transmitting the data. This analysis allows engineers and data science specialists to track formerly immeasurable processes.


Event Stream Processing

Stream processing technology is increasingly prevalent because it provides superior solutions for many established use cases, such as data analytics, ETL, and transactional applications. It also enables novel applications, software architectures, and business opportunities. With traditional data infrastructures, data and data processing have been omnipresent in businesses for many decades.

Over the years, data collection and usage have grown consistently, and companies have designed and built infrastructures to manage that data. However, the traditional architecture that most businesses implement distinguishes two types of data processing: transactional processing and analytical processing.

Analytics and Data Handling are Changing.

– All of this new device information enables valuable insights into what is happening on our planet and makes accurate, quick decisions possible. However, analytics and data handling are challenging. Everything is now distributed to the edge, and new ways of handling data are emerging.

– To meet this challenge, IoT uses emerging technologies such as stream data processing with in-stream analytics, predictive analytics, and machine learning techniques. In addition, IoT devices generate vast amounts of data, putting pressure on the internet infrastructure. This is where cloud computing becomes useful: it assists in storing, processing, and transferring data in the cloud instead of on connected devices.

– Organizations can utilize various technologies and tools to implement event stream processing (ESP). Some popular ESP frameworks include Apache Kafka, Apache Flink, and Apache Storm. These frameworks provide the infrastructure and processing capabilities to handle high-speed data streams and perform real-time analytics.

IoT Stream Processing: Distributed to the Edge

1: IoT represents a distributed architecture. Analytics are distributed from the IoT platform, either cloud or on-premise, to network edges, making analytics more complicated. A lot of the filtering and analysis is carried out on the gateways and the actual things themselves. These types of edge devices process sensor event data locally.

2: Some can execute immediate local responses without contacting the gateway or remote IoT platform. A device with sufficient memory and processing power can run a lightweight version of an Event Stream Processing (ESP) platform.

3: For example, a Raspberry Pi can run complex-event processing (CEP). Gateways ingest event streams from sensors and usually carry out more sophisticated stream processing than the things themselves. Some can send an immediate response via a control signal to actuators, causing a state change.
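
The local-response idea above can be sketched as a small control loop that runs on the device or gateway and emits a control signal only when the state needs to change. The command names and temperature thresholds are hypothetical, chosen purely for illustration.

```python
def edge_controller(readings, high=80.0, low=70.0):
    """Yield (reading, command) pairs; command is "FAN_ON", "FAN_OFF", or None.

    Hysteresis (separate high and low thresholds) avoids toggling the
    actuator rapidly when readings hover around a single limit.
    """
    fan_on = False
    for value in readings:
        command = None
        if not fan_on and value >= high:
            fan_on, command = True, "FAN_ON"
        elif fan_on and value <= low:
            fan_on, command = False, "FAN_OFF"
        yield value, command
```

Because the decision is made locally, the actuator can react without a round trip to the gateway or a remote IoT platform; only the resulting state changes need to be forwarded upstream.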

Technicality is only one part of the puzzle; data ownership and governance are the other.

Time Series Data – Data in Motion

In specific IoT solutions, such as traffic light monitoring in smart cities, reactions must be immediate. This requires a different type of big data solution that processes data while it’s in motion. In some IoT solutions, there is too much data to store, so the analysis of data streams must be done on the fly while the data is being transferred.

It’s not just about capturing and storing as much data as possible anymore. The essence of IoT is the ability to use the data while it is still in motion. Applying analytical models to data streams before they are forwarded enables accurate pattern and anomaly detection while they are occurring. This analysis offers immediate insight into events, enabling quicker reaction times and business decisions.

Traditional analytical models are applied to stored data, offering analytics for historical events only. IoT requires the examination of patterns before data is stored, not after. The traditional store-and-process model cannot meet the real-time analysis requirements of IoT data streams.

In response to new data handling requirements, new analytical architectures are emerging. The volume and handling of IoT traffic require new types of platform known as Event Stream Processing (ESP) and Distributed Computing Platforms (DCSP).

Event Stream Processing (ESP)

ESP is an in-memory, real-time processing technique that enables the analysis of continuously flowing events in data streams. Events in motion are known as “event streams.” Analyzing them reveals what is happening now, and the results can be combined with historical data to predict future events. Predictive models are embedded into the data streams for this purpose.

This type of processing represents a shift in data processing. Data is no longer stored and then processed; it is analyzed while still being transferred, and models are applied to it in flight.

ESP & Predictive Analytics Models

ESP applies sophisticated predictive analytics models to data streams and then takes action based on those scores or business rules. It is becoming popular in IoT solutions for predictive asset maintenance and real-time fault detection.

For example, you can create models that signal a future unplanned condition. This can then be applied to ESP, quickly detecting upcoming failures and interruptions. ESP is also commonly used in network optimization of the power grid and traffic control systems.
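
As a simplified stand-in for a predictive model applied to a stream, the sketch below fits a straight line to the most recent readings and estimates how long it will take for a rising value to reach a failure limit. The class name, window size, and the assumption of an upper limit with a linear trend are all illustrative; a real deployment would likely use a trained model.

```python
from collections import deque


class TrendPredictor:
    """Fit a least-squares line to the last `size` (t, value) points and
    estimate when the value will reach `limit`."""

    def __init__(self, limit, size=5):
        self.limit = limit
        self.points = deque(maxlen=size)

    def update(self, t, value):
        """Add a reading; return estimated seconds until `limit`, or None."""
        self.points.append((t, value))
        n = len(self.points)
        if n < 2:
            return None  # not enough data to fit a line
        mean_t = sum(p[0] for p in self.points) / n
        mean_v = sum(p[1] for p in self.points) / n
        var_t = sum((p[0] - mean_t) ** 2 for p in self.points)
        if var_t == 0:
            return None
        slope = sum((p[0] - mean_t) * (p[1] - mean_v) for p in self.points) / var_t
        if slope <= 0:
            return None  # not trending toward the limit
        intercept = mean_v - slope * mean_t
        t_cross = (self.limit - intercept) / slope
        return max(0.0, t_cross - t)
```

A business rule can then act on the score, for example by raising a maintenance ticket when the estimated time to the limit drops below a chosen threshold.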

ESP – All Data in RAM

ESP is in-memory, meaning the data being processed is held in RAM rather than on hard drives or similar storage, which results in fast processing and analytics at scale. In-memory processing can analyze terabytes of data in just a few seconds and can ingest events from millions of sources in milliseconds. All the processing happens at the system’s edge before data is passed to storage.

How you define real-time depends on the context. Your time horizon will dictate whether you need the full power of ESP. ESP suits events that occur close together in time and at high frequency. However, if your time horizon is relatively long and events are not close together, your requirements might be fulfilled with batch processing.

**Batch vs Real-Time Processing**

With batch processing, data is gathered over time and sent together as a batch. It is commonly used when fast response times are not critical. Batch jobs can be stored for an extended period and then executed; for example, an end-of-day report is suited to batch processing as it does not need to be done in real time.

Batch systems can scale, but their batch orientation limits real-time decision-making and does not meet IoT stream requirements. Real-time processing involves a continual input, process, and output of data, with data processed in a relatively short period. When your solution requires immediate action, real-time processing is the better fit. Examples include Hadoop for batch processing and Apache Spark, which focuses on real-time computation.
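
The difference between the two styles can be seen in a small sketch that computes the same average both ways. The function and class names are made up for this example and do not correspond to Hadoop or Spark APIs.

```python
def batch_mean(values):
    """Batch style: wait until every value has been collected, then compute once."""
    values = list(values)
    return sum(values) / len(values)


class RunningMean:
    """Streaming style: update the result as each value arrives, storing no history."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        self.count += 1
        # Incremental update: shift the mean by the new value's share of the difference.
        self.mean += (value - self.mean) / self.count
        return self.mean
```

Both approaches arrive at the same final answer, but the streaming version has an up-to-date result after every event and never needs the full dataset in one place.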

**Hadoop vs. Apache Spark**

Hadoop is a distributed data infrastructure that distributes data collections across nodes in a cluster. It includes a storage component called Hadoop Distributed File System (HDFS) and a processing component called MapReduce. However, with the new requirements for IoT, MapReduce is not the answer for everything.

MapReduce is fine if your data operation requirements are static and you can wait for batch processing. But if your solution requires analytics from sensor streaming data, then you are better off using Apache Spark. Spark was created in response to MapReduce’s limitations.

Apache Spark does not have its own file system and may be integrated with HDFS or a cloud-based data platform such as Amazon S3 or OpenStack Swift. It is much faster than MapReduce because it operates in memory and in near real time. In addition, it has machine learning libraries to gain insights from the data and identify patterns. Machine learning can be as simple as a Python script for event and anomaly detection.
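
A simple anomaly detection script of the kind mentioned above might look like the following sketch. It uses only the Python standard library rather than Spark's machine learning libraries, and the window size and threshold are arbitrary assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings more than `threshold` standard
    deviations from the mean of the previous `window` readings."""
    history = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
        history.append(value)
```

Note that a flagged value still enters the history, so it temporarily widens the band for the readings that follow; whether to exclude outliers from the baseline is a design choice that depends on the data.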

Closing Points on Event Stream Processing

Event Stream Processing is a computing paradigm focused on the real-time analysis of data streams. Unlike traditional batch processing, which involves collecting data and analyzing it periodically, ESP allows for immediate insights as data flows in. This capability is crucial for applications that demand instantaneous action, such as fraud detection in financial transactions or monitoring network security.

The architecture of an Event Stream Processing system typically comprises several key components:

1. **Event Sources**: These are the origins of the data streams, which could be sensors, user actions, or any system generating continuous data.

2. **Stream Processor**: The core of ESP, where the actual computation and analysis occur. It processes the data in real-time, applying various transformations and detecting patterns.

3. **Data Sink**: This is where the processed data is delivered, often to databases, dashboards, or triggering subsequent actions.

4. **Messaging System**: A crucial part of ESP, it ensures that data flows seamlessly from the source to the processor and finally to the sink.
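
The four components above can be sketched end to end in a few lines. Here an in-process `queue.Queue` stands in for the messaging system and a plain list stands in for the data sink; the event fields and the temperature threshold are assumptions made for the example.

```python
import queue
import threading

_STOP = object()  # sentinel marking the end of the stream


def source(out_q, readings):
    """Event source: publish each reading to the messaging layer."""
    for r in readings:
        out_q.put(r)
    out_q.put(_STOP)


def processor(in_q, sink, threshold=30.0):
    """Stream processor: inspect each event and forward matches to the sink."""
    while True:
        event = in_q.get()
        if event is _STOP:
            break
        if event["temp_c"] > threshold:
            sink.append({"sensor": event["sensor"], "alert": "over_temp"})


def run_pipeline(readings):
    bus = queue.Queue()  # stands in for a messaging system
    sink = []            # stands in for a database or dashboard
    worker = threading.Thread(target=processor, args=(bus, sink))
    worker.start()
    source(bus, readings)
    worker.join()
    return sink
```

In a real deployment each role would usually be a separate, independently scalable service, but the data flow from source to messaging layer to processor to sink has the same shape.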

The versatility of ESP makes it applicable across numerous industries:

– **Finance**: ESP is used in algorithmic trading, risk management, and fraud detection, providing real-time insights that drive decisions.

– **Healthcare**: In monitoring patient vitals and managing hospital operations, ESP enables timely interventions and improved resource allocation.

– **Telecommunications**: ESP helps in network monitoring, ensuring optimal performance and quick resolution of issues.

– **E-commerce**: Companies use ESP to personalize user experiences by analyzing browsing and purchasing behaviors in real-time.

Implementing ESP can be challenging due to the need for reliable and scalable infrastructure. Key considerations include:

– **Latency**: Minimizing the delay between data generation and analysis is crucial for effectiveness.

– **Scalability**: As data volumes increase, the system must efficiently handle larger streams without performance degradation.

– **Data Quality**: Ensuring data accuracy and consistency is essential for meaningful analysis.

Summary: Event Stream Processing

In today’s fast-paced digital world, the ability to process and analyze data in real time has become crucial for businesses across various industries. Event stream processing is one technology that has emerged as a game-changer in this realm. In this blog post, we will explore the concept of event stream processing, its benefits, and its applications in different domains.

Understanding Event Stream Processing

Event stream processing is a method of analyzing and acting upon data generated in real time. Unlike traditional batch processing, which deals with static datasets, event stream processing focuses on continuous data streams. It involves capturing, filtering, aggregating, and analyzing events as they occur, enabling businesses to gain immediate insights and take proactive actions.
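
The capture, filter, and aggregate steps described above can be sketched as a tumbling-window average over a stream of readings. The `(timestamp, sensor, value)` tuple layout and the 10-second window are assumptions for illustration, and the sketch assumes events arrive in timestamp order.

```python
from collections import defaultdict


def tumbling_window_avg(events, window_s=10):
    """Group (ts, sensor, value) events into fixed, non-overlapping windows
    and yield (window_start, sensor, average) when each window closes."""
    current_start = None
    sums = defaultdict(lambda: [0.0, 0])  # sensor -> [running sum, count]
    for ts, sensor, value in events:
        if value is None:  # filter: drop malformed readings
            continue
        start = ts - (ts % window_s)
        if current_start is not None and start != current_start:
            # The previous window has closed: emit its aggregates.
            for name, (total, count) in sorted(sums.items()):
                yield current_start, name, total / count
            sums.clear()
        current_start = start
        sums[sensor][0] += value
        sums[sensor][1] += 1
    # Flush the final, still-open window at end of stream.
    for name, (total, count) in sorted(sums.items()):
        yield current_start, name, total / count
```

Stream processing frameworks provide windowing as a built-in operation and also deal with late or out-of-order events, which this sketch deliberately ignores.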

Benefits of Event Stream Processing

Real-Time Insights: Event stream processing processes data in real time, allowing businesses to derive insights and make decisions instantly. This empowers them to respond swiftly to changing market conditions, customer demands, and emerging trends.

Enhanced Operational Efficiency: Event stream processing enables businesses to automate complex workflows, detect anomalies, and trigger real-time alerts. This leads to improved operational efficiency, reduced downtime, and optimized resource utilization.

Seamless Integration: Event stream processing platforms integrate with existing IT systems and data sources. This ensures that businesses can leverage their existing infrastructure and tools, making it easier to implement and scale event-driven architectures.

Applications of Event Stream Processing

Financial Services: Event stream processing is widely used in the financial sector for real-time fraud detection, algorithmic trading, and risk management. By analyzing vast amounts of transactional data in real time, financial institutions can identify suspicious activities, make informed trading decisions, and manage risks.

Internet of Things (IoT): With the proliferation of IoT devices, event stream processing has become crucial for managing and extracting value from the massive amounts of data generated by these devices. It enables real-time monitoring, predictive maintenance, and anomaly detection in IoT networks.

Retail and E-commerce: Event stream processing lets retailers personalize customer experiences, optimize inventory management, and detect fraudulent transactions in real time. By analyzing customer behavior data in real time, retailers can deliver targeted promotions, ensure product availability, and prevent fraudulent activities.

Conclusion: Event stream processing is revolutionizing how businesses harness the power of real-time data. By providing instant insights, enhanced operational efficiency, and seamless integration, it empowers organizations to stay agile, make data-driven decisions, and gain a competitive edge in today’s dynamic business landscape.
