Auto Scaling Observability

Observability in the context of autoscaling is a crucial aspect of managing and optimizing the scalability and efficiency of modern applications. This blog post will delve into autoscaling observability and its significance in today’s dynamic and rapidly evolving technological landscape.

Highlights: Auto Scaling Observability

- The Role of the Metric

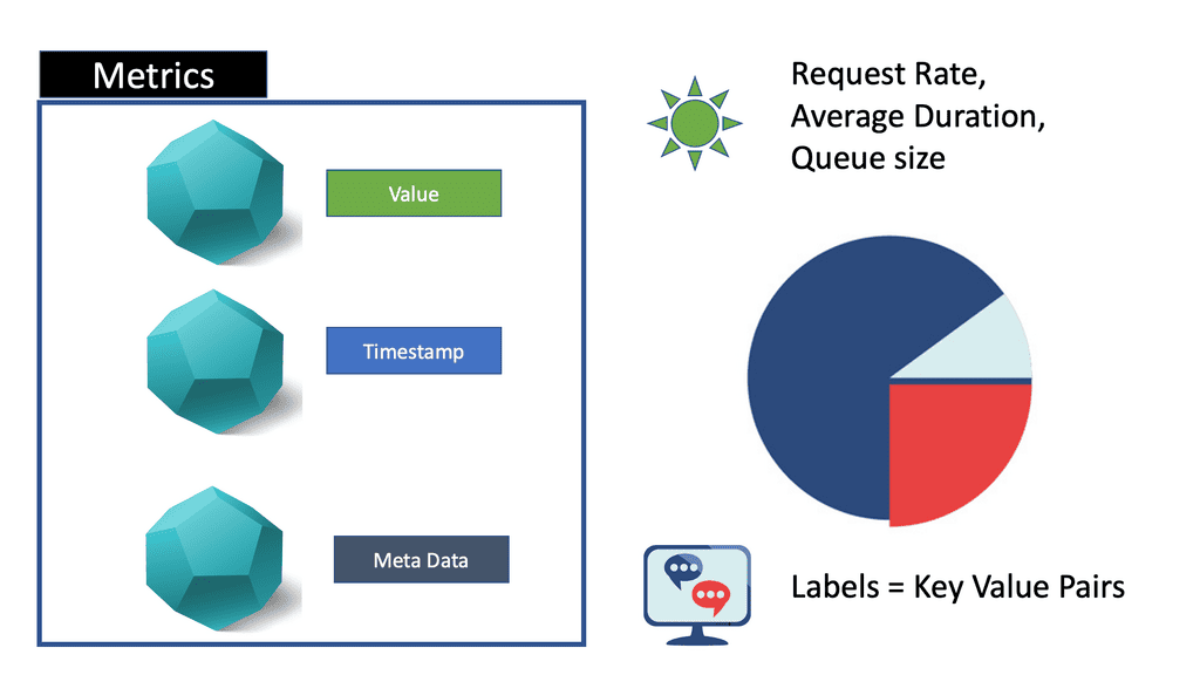

What is a metric: good for the known. To understand auto-scaling observability and auto-scaling metrics, you first need to understand the shortcomings of the metric. A metric is a single number, with tags optionally appended for grouping and searching those numbers. Metrics are disposable and cheap and have a predictable storage footprint.

A metric is a numerical representation of a system state over the recorded time interval and can tell you if a particular resource is over or underutilized at a specific moment. For example, CPU utilization might be at 75% right now.

- Prometheus Pull Approach

Many tools can gather metrics, Prometheus among them, and they collect those metrics using techniques such as the PUSH and PULL approaches. Each method has pros and cons, but Prometheus, with its metric types and PULL approach, is widely used. If you want full observability and controllability, however, remember that you cannot get it from metrics-based monitoring solutions alone. For additional information on monitoring and observability and the difference between them, see the post on observability vs monitoring.
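To make the PUSH and PULL distinction concrete, here is a minimal in-process sketch. The `Registry`, `scrape`, and `PushCollector` names are hypothetical and are not the Prometheus client API; the rendered lines only loosely follow the `name{label="value"} number` shape of the Prometheus text format. It also shows a metric as described above: one number plus optional tags.

```python
from typing import Callable, Dict, List, Tuple

LabelSet = Tuple[Tuple[str, str], ...]


class Registry:
    """Holds the current value of each gauge inside the application process."""

    def __init__(self) -> None:
        self._gauges: Dict[Tuple[str, LabelSet], float] = {}

    def set_gauge(self, name: str, value: float, **labels: str) -> None:
        # A metric is a single number; the labels (tags) are only for grouping.
        key = (name, tuple(sorted(labels.items())))
        self._gauges[key] = value

    def render(self) -> str:
        """Render every gauge as one 'name{label="value"} number' line."""
        lines = []
        for (name, labels), value in sorted(self._gauges.items()):
            label_text = ",".join(f'{k}="{v}"' for k, v in labels)
            suffix = f"{{{label_text}}}" if label_text else ""
            lines.append(f"{name}{suffix} {value}")
        return "\n".join(lines) + "\n"


def scrape(target: Callable[[], str]) -> List[str]:
    """PULL: the monitoring side decides when to read the target's state."""
    return target().splitlines()


class PushCollector:
    """PUSH: the application decides when to send; the collector only receives."""

    def __init__(self) -> None:
        self.received: List[str] = []

    def receive(self, line: str) -> None:
        self.received.append(line)


registry = Registry()
registry.set_gauge("cpu_utilization", 0.75, host="web-1")

scraped = scrape(registry.render)           # pull: collector calls the app
collector = PushCollector()
for line in registry.render().splitlines():
    collector.receive(line)                 # push: app calls the collector
```

In the pull model the collector controls timing and can notice a target that stops answering; in the push model the application controls timing, which can suit short-lived jobs.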


Back to basics with Auto Scaling Observability

Understanding Autoscaling

Before we dive into observability, let’s briefly explore the concept of autoscaling. Autoscaling refers to the ability of an application or infrastructure to adjust its resources based on demand automatically. It enables organizations to handle fluctuating workloads and optimize resource allocation efficiently.

Observability, in the context of autoscaling, refers to gaining insights into an autoscaling system’s performance, health, and efficiency. It involves collecting, analyzing, and visualizing relevant data to understand the behavior and patterns of the application and infrastructure. Organizations can make informed decisions to optimize autoscaling algorithms, resource allocation, and overall system performance through observability.


Critical Components of Autoscaling Observability

To achieve effective autoscaling observability, several critical components come into play. These include:

Metrics and Monitoring: Gathering and monitoring key metrics such as CPU utilization, response times, request rates, and error rates are fundamental for understanding the performance of the application and infrastructure.

Logging and Tracing: Logging captures detailed information about events and transactions within the system, while tracing provides insights into the flow of requests across various components. Both logging and tracing contribute to a comprehensive understanding of system behavior.

Alerting and Thresholds: Setting up appropriate alerts and thresholds based on predefined criteria ensures timely notifications when specific conditions are met.
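As a rough illustration of a threshold-based alert, the sketch below fires only when a metric has stayed above its threshold for a minimum duration, so a single spike does not page anyone. The function name, the sample data, and the 5% / 120-second values are assumptions chosen for the example.

```python
from typing import Optional, Sequence, Tuple


def should_alert(
    samples: Sequence[Tuple[float, float]],
    threshold: float,
    hold_seconds: float,
) -> bool:
    """Return True if the latest run of samples above `threshold` has lasted
    at least `hold_seconds`. `samples` are (timestamp, value) pairs sorted
    by timestamp."""
    breach_start: Optional[float] = None
    for timestamp, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = timestamp   # a new breach begins here
        else:
            breach_start = None            # any healthy sample resets the run
    if breach_start is None:
        return False
    return samples[-1][0] - breach_start >= hold_seconds


# Error rate sampled once a minute (hypothetical data).
sustained = [(0, 0.01), (60, 0.08), (120, 0.09), (180, 0.07)]
one_spike = [(0, 0.01), (60, 0.08), (120, 0.02), (180, 0.07)]

sustained_fires = should_alert(sustained, threshold=0.05, hold_seconds=120)
spike_fires = should_alert(one_spike, threshold=0.05, hold_seconds=120)
```

Note that the rule still encodes a condition someone predicted in advance, which is the limitation of metric-based alerting discussed later in this post.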

Tools and Technologies for Autoscaling Observability

A wide range of tools and technologies are available to facilitate autoscaling observability. Prominent examples include Prometheus, Grafana, Elasticsearch, Kibana, and CloudWatch. These tools provide robust monitoring, visualization, and analysis capabilities, enabling organizations to gain deep insights into their autoscaling systems.

The first component of observability is the channels that convey observations to the observer. There are three channels: logs, traces, and metrics. These channels are common to all areas of observability, including data observability.

- Logs

Logs are the most typical channel and take several forms (e.g., a line of free text or JSON). Logs are intended to encapsulate information about an event.

- Traces

Traces allow you to do what logs don’t: reconnect the dots of a process. Because traces link all the events of the same process, they allow the whole context to be derived from logs efficiently. Each pair of events marks an operation, called a span, and the spans of one trace can be distributed across multiple servers.

- Metrics

Finally, we have metrics. Every system state has some component that can be represented with numbers, and these numbers change as the state changes. Metrics give an observer factual information to reason from and also make it possible to apply mathematical methods to derive insight from even a large number of metrics (e.g., the CPU load, the number of open files, the average number of rows, the minimum date).
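The way a trace reconnects events can be sketched in a few lines. The `Span` fields below (`trace_id`, `span_id`, `parent_id`, and so on) are illustrative assumptions rather than any particular tracing library's schema; the point is only that a shared trace ID plus parent links let you rebuild the whole request, even when its spans ran on different servers.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Span:
    trace_id: str             # shared by every span of one request
    span_id: str
    parent_id: Optional[str]  # None marks the root span
    operation: str
    host: str
    start_ms: int
    end_ms: int


def render_trace(spans: List[Span], trace_id: str) -> List[str]:
    """Return indented lines showing the parent/child structure of one trace."""
    children: Dict[Optional[str], List[Span]] = {}
    for span in spans:
        if span.trace_id == trace_id:
            children.setdefault(span.parent_id, []).append(span)

    lines: List[str] = []

    def walk(parent_id: Optional[str], depth: int) -> None:
        for span in sorted(children.get(parent_id, []), key=lambda s: s.start_ms):
            duration = span.end_ms - span.start_ms
            lines.append(f"{'  ' * depth}{span.operation} on {span.host}: {duration} ms")
            walk(span.span_id, depth + 1)

    walk(None, 0)
    return lines


spans = [
    Span("t1", "a", None, "GET /checkout", "edge-1", 0, 120),
    Span("t1", "b", "a", "charge card", "pay-2", 10, 100),
    Span("t1", "c", "a", "reserve stock", "inv-1", 15, 40),
    Span("t2", "d", None, "GET /health", "edge-1", 5, 6),
]
checkout = render_trace(spans, "t1")
```

Here `checkout` holds the root span followed by its two child spans, each indented and annotated with its host and duration, which makes it easy to see which downstream call dominated the request.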

Auto Scaling Observability

Metrics: Resource Utilization Only

So, metrics help tell us about resource utilization. Within a Kubernetes environment, these metrics are used for auto-healing and auto-scheduling. Monitoring performs several functions with metrics. First, it collects, aggregates, and analyzes metrics to sift through known patterns that indicate troubling trends.

The critical point here is that it sifts through known patterns. Then, based on a known event, metrics trigger alerts that notify you when further investigation is needed. Finally, on top of all of this, dashboards display metric trends in a form adapted for visual consumption.

These monitoring systems work well for identifying previously encountered known failures but don’t help as much for the unknown. Unknown failures are the norm today with distributed systems and complex system interactions.

Metrics are suitable for dashboards, but there won’t be a predefined dashboard for unknowns, because a dashboard can’t track something it does not know about. Using metrics and dashboards like this is a very reactive approach, yet it is widely accepted as the norm. Monitoring is best suited for detecting known problems and previously identified patterns.

Metrics and intermittent problems?

So, within a microservices environment, metrics can tell you whether a microservice is healthy or unhealthy. Still, a metric will have difficulty telling you if a function of that microservice takes a long time to complete or if there is an intermittent problem with an upstream or downstream dependency. So, we need different tools to gather this type of information.

We have an issue with auto-scaling metrics because they only look at individual microservices with a given set of attributes. So, they don’t give you a holistic view of the problem. For example, the application stack now exists in numerous locations and location types; we need a holistic viewpoint.

A metric does not provide this. Metrics track simple system states that might indicate a service is running poorly, or that might serve as a leading indicator or early warning signal. However, while those measures are easy to collect, they don’t turn out to be proper measures for triggering alerts.

Auto-scaling metrics: Issues with dashboards: Useful only for a few metrics

So, these metrics are gathered and stored in time-series databases, and we have several dashboards to display them. When these dashboards were first built, there weren’t many system metrics to worry about, and you could get away with 20 or so dashboards. As a result, it was easy to see the critical data anyone should know about for any given service. Moreover, those systems were simple and did not have many moving parts. This contrasts with modern services, which typically collect so many metrics that fitting them into the same dashboard is impossible.

Auto-scaling metrics: Issues with aggregate metrics

So, we must find ways to fit all the metrics into a few dashboards. Here, the metrics are often pre-aggregated and averaged. However, the issue is that the aggregate values no longer provide meaningful visibility, even when we have filters and drill-downs. As a result, we end up predeclaring the conditions we expect to see in the future.

This is where we fall back on instinct from past experience and rely on gut feeling. Remember the network and software hero? It helps to avoid aggregation and averaging within the metrics store. Percentiles, on the other hand, offer a richer view. Keep in mind, however, that they require raw data.
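A small worked example shows why averages can hide what percentiles reveal, and why percentiles need the raw data. The latency values are made up for illustration, and `percentile` is a hand-written nearest-rank helper, not a library function.

```python
import math
from statistics import mean
from typing import Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value such that at least
    `pct` percent of the data is at or below it."""
    if not values:
        raise ValueError("percentile() needs at least one value")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(values)                      # requires the raw samples
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


# 95 fast requests and 5 very slow ones (hypothetical latencies in ms).
latencies_ms = [20.0] * 95 + [2000.0] * 5

average = mean(latencies_ms)          # one number that matches no real request
p50 = percentile(latencies_ms, 50)    # what a typical request experienced
p99 = percentile(latencies_ms, 99)    # what the slowest requests experienced
```

The average lands between the fast and slow groups and describes neither, while the 50th and 99th percentiles separate the typical case from the tail. Once the store keeps only the pre-aggregated average, the percentiles can no longer be recovered.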

Auto Scaling Observability: Any Question

Auto-scaling observability takes an entirely different approach, striving for exploratory methods to find problems. Essentially, those operating observability systems don’t sit back and wait for an alert or for something to happen. Instead, they are always actively looking and asking arbitrary questions of the observability system.

Observability tools should gather rich telemetry for every possible event, capture the full content of every request, and be able to store and query it. In addition, these new auto-scaling observability tools are specifically designed to query against high-cardinality data. High cardinality allows you to interrogate your event data in any arbitrary way you see fit. You can then ask any question about your system and inspect its corresponding state.
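The idea of asking arbitrary questions of wide events can be sketched as an ad hoc group-by over raw event records. The event fields (`user_id`, `build`, and so on) and the `group_count` helper are hypothetical; real observability backends do this at far larger scale, but the shape of the query is similar.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Iterable, Sequence, Tuple

Event = Dict[str, Any]


def group_count(
    events: Iterable[Event],
    by: Sequence[str],
    where: Callable[[Event], bool] = lambda event: True,
) -> Dict[Tuple[Any, ...], int]:
    """Count events matching `where`, grouped by any combination of fields."""
    counts: Dict[Tuple[Any, ...], int] = defaultdict(int)
    for event in events:
        if where(event):
            key = tuple(event.get(field) for field in by)
            counts[key] += 1
    return dict(counts)


# One wide event per request; fields like user_id are high cardinality.
events = [
    {"user_id": "u-1842", "endpoint": "/checkout", "region": "eu",
     "build": "a1f3", "status": 500, "duration_ms": 912},
    {"user_id": "u-0007", "endpoint": "/checkout", "region": "us",
     "build": "a1f3", "status": 200, "duration_ms": 85},
    {"user_id": "u-1842", "endpoint": "/cart", "region": "eu",
     "build": "9c2e", "status": 200, "duration_ms": 40},
    {"user_id": "u-3311", "endpoint": "/checkout", "region": "eu",
     "build": "a1f3", "status": 500, "duration_ms": 1040},
]

# A question nobody predicted in advance: which build and region fail?
failures = group_count(
    events, by=["build", "region"], where=lambda e: e["status"] >= 500
)
```

Because the raw events are kept, the grouping fields can be chosen after the incident starts, rather than being fixed when a dashboard was designed.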

Key Auto Scaling Observability Considerations

No predictions in advance.

Due to the nature of modern software systems, you want to understand any inner state and services without anticipating or predicting them in advance. For this, we need to gain valuable telemetry and use some new tools and technological capabilities to gather and interrogate this data once it has been collected. Telemetry needs to be constantly gathered in flexible ways to debug issues without predicting how failures may occur.

The conditions affecting infrastructure health change infrequently and are relatively easy to monitor. In addition, we have several well-established practices for predicting them, such as capacity planning, and for remediating automatically, such as auto-scaling in a Kubernetes environment, all of which can be used to tackle these types of known issues.

Because infrastructure problems are relatively predictable and slow to change, the aggregated-metrics approach monitors and alerts on them well. So here, a metric-based system works well. Metrics-based systems and their associated signals help you see when capacity limits or known error conditions of underlying systems are being reached.
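As one concrete instance of metric-driven remediation, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desired replicas = ceil(current replicas × current metric value / target metric value), skipping the change when the ratio is close enough to 1. The function below is a simplified sketch of that rule; the function name, the bounds, and the example numbers are assumptions, and the real controller handles further cases (unready pods, missing metrics, stabilization windows) that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_value: float,
    target_value: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified replica calculation in the style of the Kubernetes HPA."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas            # close enough: avoid flapping
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Average CPU utilization of 75% against a 50% target, with 4 replicas.
scale_out = desired_replicas(4, current_value=75, target_value=50)
# 52% against 50% is within the tolerance band, so nothing changes.
steady = desired_replicas(4, current_value=52, target_value=50)
# 20% against 50% lets the workload shrink.
scale_in = desired_replicas(4, current_value=20, target_value=50)
```

This works precisely because CPU pressure is a known, predictable condition: the rule can be written down before the problem occurs.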

So, metrics-based systems work well for infrastructure problems that don’t change much but fall dramatically short in complex distributed systems. You should opt for an observability and controllability platform for these systems.

Summary: Understanding Autoscaling

Autoscaling is a mechanism that automatically adjusts the number of computing resources allocated to an application based on its demand. By dynamically scaling resources up or down, autoscaling enables organizations to handle fluctuating workloads efficiently. However, to truly harness the power of autoscaling, it is crucial to have robust observability in place.

Section 1: The Role of Observability in Autoscaling

Observability is the ability to gain insights into the internal state of a system based on its external outputs. When it comes to autoscaling, observability plays a pivotal role in understanding the system’s behavior, identifying bottlenecks, and making informed scaling decisions. It provides visibility into key metrics like CPU utilization, memory usage, and network traffic. With observability, you can make data-driven decisions and ensure optimal resource allocation.

Section 2: Monitoring and Metrics

To achieve effective autoscaling observability, comprehensive monitoring is essential. Monitoring tools collect various metrics, such as response times, error rates, and resource utilization, to provide a holistic view of your infrastructure. These metrics can be analyzed to identify patterns, detect anomalies, and trigger autoscaling actions when necessary. You can proactively address performance issues and optimize resource utilization by monitoring and analyzing metrics.

Section 3: Logging and Tracing

In addition to monitoring, logging and tracing are critical components of autoscaling observability. Logging captures detailed information about system events, errors, and activities, enabling you to troubleshoot issues and gain insights into system behavior. Tracing helps you understand the flow of requests across different services. Logging and tracing provide a granular view of your application’s performance, aiding in autoscaling decisions and ensuring smooth operation.

Section 4: Automation and Alerting

To truly master autoscaling observability, automation and alerting mechanisms are vital. By setting up automated processes, you can configure thresholds and triggers that initiate autoscaling actions based on predefined conditions. This allows for proactive scaling, helping keep your system tuned for performance. Additionally, timely alerts can notify you of critical events or anomalies, enabling you to take immediate action and maintain the desired scalability.
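A threshold-and-trigger setup of this kind might look like the sketch below: separate scale-up and scale-down thresholds, plus a cooldown so the system does not react to every sample. The `ThresholdScaler` class, its one-replica step size, and the 80% / 30% / 300-second values are all assumptions for illustration, not any cloud provider's policy.

```python
from dataclasses import dataclass


@dataclass
class ThresholdScaler:
    scale_up_above: float      # utilization that triggers adding a replica
    scale_down_below: float    # utilization that triggers removing one
    cooldown_seconds: float    # minimum time between two scaling actions
    min_replicas: int = 1
    max_replicas: int = 10
    replicas: int = 1
    last_change: float = float("-inf")

    def observe(self, now: float, utilization: float) -> int:
        """Feed one utilization sample; return the replica count afterwards."""
        if now - self.last_change < self.cooldown_seconds:
            return self.replicas               # still cooling down
        target = self.replicas
        if utilization > self.scale_up_above:
            target = min(self.max_replicas, self.replicas + 1)
        elif utilization < self.scale_down_below:
            target = max(self.min_replicas, self.replicas - 1)
        if target != self.replicas:
            self.replicas = target
            self.last_change = now
        return self.replicas


scaler = ThresholdScaler(
    scale_up_above=0.8, scale_down_below=0.3, cooldown_seconds=300, replicas=2
)
samples = [(0, 0.9), (60, 0.95), (400, 0.85), (800, 0.2)]
history = [scaler.observe(now, utilization) for now, utilization in samples]
```

The gap between the two thresholds and the cooldown both exist to stop the replica count from oscillating when utilization hovers near a single cutoff.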

Conclusion:

Autoscaling observability is the key to unlocking the true potential of autoscaling. By understanding the behavior of your system through comprehensive monitoring, logging, and tracing, you can make informed decisions and ensure optimal resource allocation. With automation and alerting mechanisms in place, you can proactively respond to changing demands and maintain high efficiency. Embrace autoscaling observability and take your infrastructure management to new heights!
