Container Scheduler

In modern application development and deployment, containerization has gained immense popularity. Containers allow developers to package their applications and dependencies into portable and isolated environments, making them easily deployable across different systems. However, as the number of containers grows, managing and orchestrating them becomes complex. This is where container schedulers come into play.

A container scheduler is a crucial component of container orchestration platforms. Its primary role is to manage the allocation and execution of containers across a cluster of machines or nodes. By efficiently distributing workloads, container schedulers ensure optimal resource utilization, high availability, and scalability.

Kubernetes is the best-known example of such a platform. Its scheduler acts as an intelligent manager, overseeing the deployment and allocation of containers across a cluster of machines. By automating placement decisions, a container scheduler enables efficient resource utilization and workload distribution.

Enhanced Resource Utilization: Container schedulers optimize resource allocation by intelligently distributing containers based on available resources and workload requirements. This leads to better utilization of computing power, minimizing resource wastage.

Scalability and Load Balancing: Container schedulers enable horizontal scaling, allowing applications to seamlessly handle increased traffic and workload. With the ability to automatically scale up or down based on demand, container schedulers ensure optimal performance and prevent system overload.

High Availability: By distributing containers across multiple nodes, container schedulers improve fault tolerance and availability. If one node fails, the scheduler reschedules its containers onto other healthy nodes, minimizing downtime and improving system reliability.

Microservices Architecture: Container schedulers are particularly beneficial in microservices-based applications. They enable efficient deployment, scaling, and management of individual microservices, facilitating agility and flexibility in development.

Cloud-Native Applications: Container schedulers are a fundamental component of cloud-native application development. They provide the necessary framework for deploying and managing containerized applications in dynamic and distributed environments.

DevOps and Continuous Deployment: Container schedulers play a vital role in enabling DevOps practices and continuous deployment. They automate the deployment process, allowing developers to focus on writing code while ensuring smooth and efficient application delivery.

Container schedulers have transformed the way organizations develop, deploy, and manage their applications. By optimizing resource utilization, enabling scalability, and enhancing availability, they help businesses build robust and efficient software systems. As technology continues to evolve, container schedulers will remain a critical tool for running applications efficiently and scaling them in dynamic environments.

Matt Conran

Highlights: Container Scheduler

### What Are Containerized Applications?

Containerized applications are a method of packaging software in a way that allows it to run consistently across different computing environments. Unlike traditional virtual machines, containers share the host system’s OS kernel but run in isolated user spaces. This means they are lightweight, fast, and can be deployed with minimal overhead. By encapsulating an application and its dependencies, containers ensure that software runs reliably no matter where it is deployed, be it on a developer’s laptop or in a cloud environment.

### Advantages of Containerization

The adoption of containerized applications brings numerous benefits. First, they provide flexibility: containers can easily be moved between on-premises servers and various cloud environments, which makes them highly portable. They also improve resource efficiency, allowing multiple containers to run on a single machine without separate OS installations. Additionally, containers support microservices architectures, enabling developers to break applications down into manageable, scalable components. This modular approach not only accelerates development cycles but also simplifies updates and maintenance.

### Challenges in the Containerized Ecosystem

Despite their advantages, containerized applications come with their own set of challenges. Security is a primary concern, as the shared kernel approach can potentially expose the system to vulnerabilities. Proper isolation and management are crucial to maintaining a secure environment. Furthermore, the orchestration of containers at scale requires sophisticated tools and expertise. Technologies like Kubernetes have emerged to address these needs, but they introduce their own complexities that teams must navigate. Additionally, as organizations shift to containerized architectures, they must also consider the cultural and procedural changes necessary to fully leverage this technology.

### Technologies Powering Containerization

Several technologies have become integral to the containerized application ecosystem. Docker is perhaps the most well-known, providing a platform for developers to create, deploy, and run applications in containers. Kubernetes, on the other hand, offers robust orchestration capabilities, managing containerized applications in a cluster. It automates deployment, scaling, and operations, ensuring applications run smoothly in large, dynamic environments. Other tools like Helm and Prometheus play supporting roles, enhancing the management and monitoring of these applications.

Container Orchestration

– Orchestration and mass-deployment tools were the first tools to add functionality to the Docker distribution and the Linux container experience. Tools such as Ansible’s Docker tooling and New Relic’s Centurion still work like traditional deployment tools but use the container as the distribution artifact. Their approach is simple and easy to implement, but many of these tools have since been replaced by more robust and flexible alternatives, such as Kubernetes.

– Fully automatic schedulers, such as Kubernetes or Apache Mesos with the Marathon scheduler, can manage a pool of hosts on your behalf. The ecosystem of free and commercial options continues to grow and includes HashiCorp’s Nomad, Mesosphere’s DC/OS (Datacenter Operating System), and Rancher.

– Docker is more than a standalone solution. Despite its extensive feature set, someone will always need more than it can deliver alone, and various tools improve or augment Docker’s functionality. Some, such as Ansible for simple orchestration and Prometheus for monitoring, use the Docker APIs. Others take advantage of Docker’s plug-in architecture: Docker plug-ins are executable programs that receive and return data according to a specification.

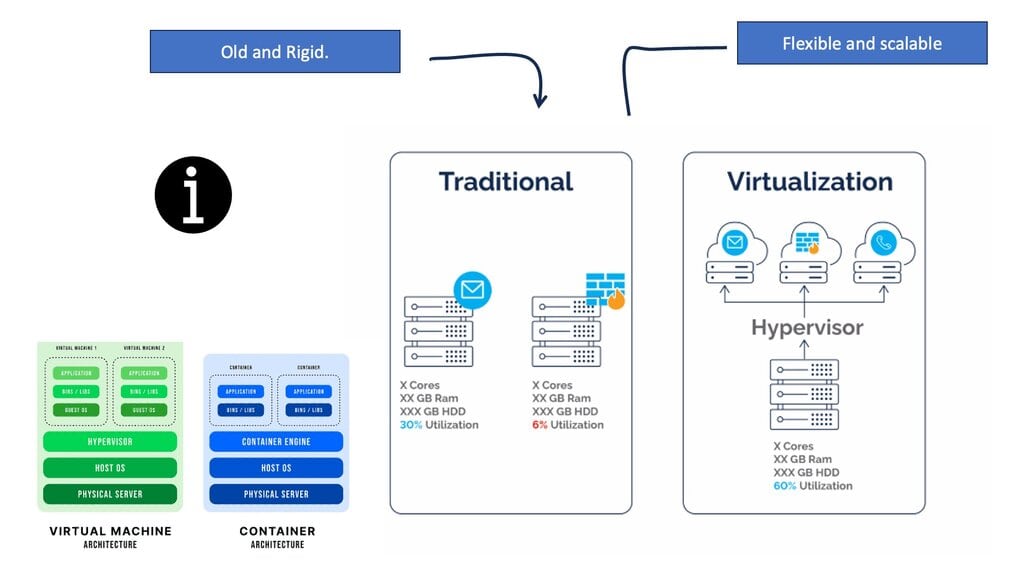

**Virtualization**

Virtualization systems, such as VMware or KVM, allow you to run Linux kernels and operating systems on top of a virtualized layer, commonly called a hypervisor. On top of this hardware virtualization layer, each VM runs its own operating system kernel in a separate memory space, providing strong isolation between workloads. Containers are fundamentally different: all containers on a host share a single kernel, and workload isolation is achieved within that kernel. This approach is known as operating-system-level virtualization.

**Docker and OCI Images**

Containers are now used almost everywhere. Many production systems, including Kubernetes and most “serverless” cloud technologies, rely on Docker and OCI images as the packaging format for a significant and growing amount of software delivered into production environments.

Container Scheduling

In many cases, we want our containers to restart if they exit. Some containers are very short-lived and come and go quickly, but production applications, for example, are expected to keep running once you have started them. In a more complex system, a scheduler may handle this for you.
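On a single Docker host, this is expressed with restart policies (`no`, `on-failure`, `always`, `unless-stopped`). The sketch below is a simplified Python model of how those policies decide whether an exited container comes back; the function name and parameters are hypothetical, and it deliberately ignores Docker daemon restarts, which is where `always` and `unless-stopped` differ.

```python
from typing import Optional


def should_restart(policy: str, exit_code: int, restart_count: int,
                   manually_stopped: bool = False,
                   max_retries: Optional[int] = None) -> bool:
    """Simplified model of Docker restart policies (hypothetical helper).

    Covers a container that exits while the Docker daemon keeps running;
    daemon restarts, where "always" and "unless-stopped" differ, are not
    modeled.
    """
    if policy not in ("no", "on-failure", "always", "unless-stopped"):
        raise ValueError(f"unknown restart policy: {policy!r}")
    if manually_stopped or policy == "no":
        # An explicit stop is honored, and "no" never restarts.
        return False
    if policy in ("always", "unless-stopped"):
        # Restart regardless of the exit code.
        return True
    # "on-failure": only non-zero exits count, within an optional retry budget.
    if exit_code == 0:
        return False
    return max_retries is None or restart_count < max_retries
```

For example, `should_restart("on-failure", 1, 3, max_retries=3)` returns `False` because the retry budget is already spent, while `should_restart("always", 0, 5)` returns `True` even though the container exited cleanly.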

Unlike VM resource allocations, Docker’s cgroup-based CPU share constraints can have unexpected results. Like the `nice` command, they are relative limits, not hard limits. Suppose a container is given half the default CPU share on a system that is not very busy. Because there is little competition for the CPU, the share limit has little effect, and the container can still use most of the idle CPU. When a second, CPU-hungry container is deployed to the same system, the constraint suddenly takes effect on the first container. Keep this in mind when allocating resources and constraining containers.
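The effect is easier to see with numbers. The following sketch is an idealized proportional-share model, not the kernel’s actual CFS scheduler: each busy container receives CPU in proportion to its shares (Docker’s `--cpu-shares` defaults to 1024), a container never receives more than it demands, and CPU left idle by one container is redistributed to the others. The function and container names are hypothetical.

```python
def allocate_cpu(shares: dict[str, int], demand: dict[str, float],
                 capacity: float = 1.0) -> dict[str, float]:
    """Split `capacity` CPUs between containers by relative share.

    Idealized "water-filling" model: containers that want less than their
    weighted slice get exactly what they want, and the leftover is shared
    among the rest in proportion to their shares.
    """
    alloc = {name: 0.0 for name in shares}
    active = {name for name in shares if demand.get(name, 0.0) > 0}
    remaining = capacity
    while active and remaining > 1e-12:
        total = sum(shares[n] for n in active)
        fair = {n: remaining * shares[n] / total for n in active}
        # Containers whose outstanding demand fits inside their fair slice.
        satisfied = {n for n in active if demand[n] - alloc[n] <= fair[n]}
        if not satisfied:
            # Everyone wants more than their slice: split by weight and stop.
            for n in active:
                alloc[n] += fair[n]
            break
        for n in satisfied:
            need = demand[n] - alloc[n]
            alloc[n] += need
            remaining -= need
        active -= satisfied
    return alloc
```

With `shares = {"web": 512, "batch": 1024}`, calling `allocate_cpu(shares, {"web": 1.0, "batch": 0.0})` gives `web` the whole CPU, but once `batch` also demands a full CPU, `web` drops to roughly one third of it.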

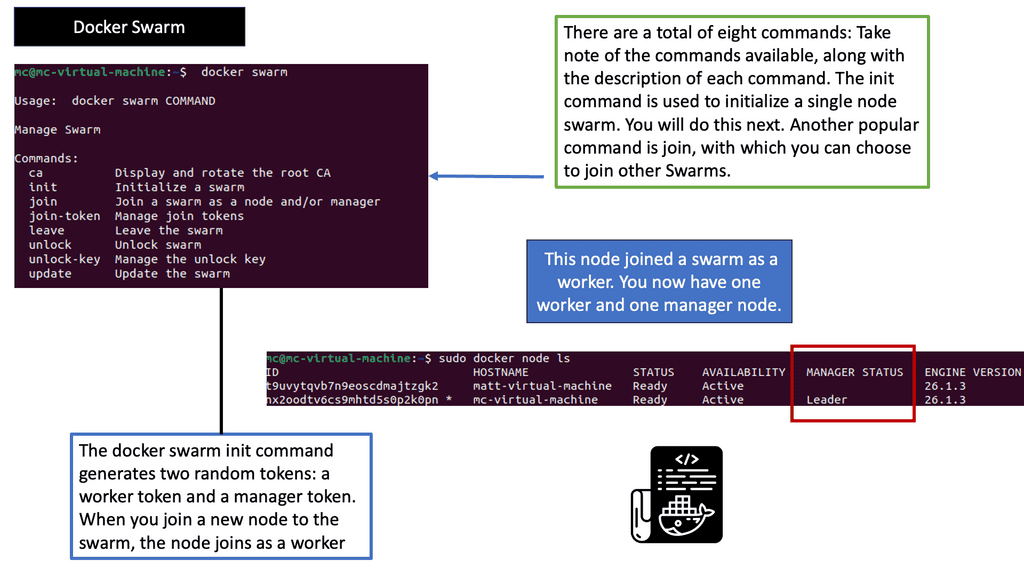

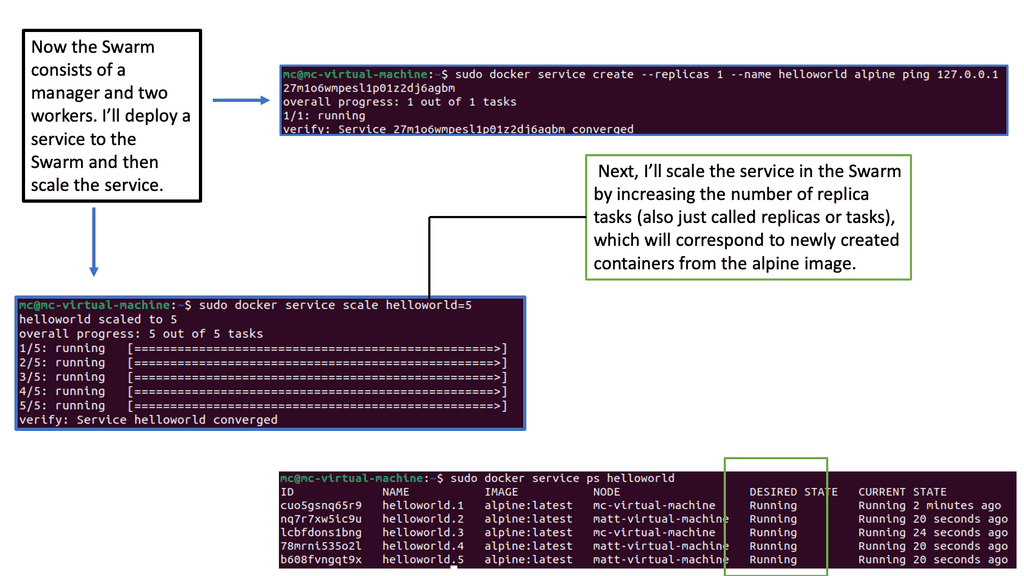

- Scheduling with Docker Swarm

Container scheduling lies at the heart of efficient resource allocation in containerized environments. It involves intelligently assigning containers to available resources based on various factors such as resource availability, load balancing, and fault tolerance. Docker Swarm simplifies this process by providing a built-in orchestration layer that automates container scheduling, making it seamless and hassle-free.

- Scheduling with Apache Mesos

Apache Mesos is an open-source cluster manager designed to abstract and pool computing resources across data centers or cloud environments. As a distributed systems kernel, Mesos enables efficient resource utilization by offering a unified API for managing diverse workloads. With its modular architecture, Mesos ensures flexibility and scalability, making it a preferred choice for large-scale deployments.

- Container Orchestration

Containerization has revolutionized software development by providing a consistent and isolated application environment. However, managing many containers across multiple hosts manually can be daunting. This is where container orchestration comes into play. Docker Swarm simplifies managing and scaling containers, making it easier to deploy applications seamlessly.

Docker Swarm offers a range of powerful features that enhance container orchestration. From declarative service definition to automatic load balancing and service discovery, Docker Swarm provides a robust platform for managing containerized applications. Its ability to distribute containers across a cluster of machines and handle failover seamlessly ensures high availability and fault tolerance.

Getting Started with Docker Swarm

You need to set up a Swarm cluster to start using Docker Swarm’s benefits. This involves initializing a Swarm manager (`docker swarm init`) and joining worker nodes to the cluster (`docker swarm join`). Docker Swarm provides a user-friendly command-line interface and APIs to manage the cluster, making it accessible to developers of all levels of expertise.

One of Docker Swarm’s most significant advantages is how easily it scales applications. Using service replicas, Swarm scales horizontally to handle increased traffic and demand, and its built-in load balancing spreads incoming traffic across a service’s containers, optimizing resource utilization.
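Swarm’s placement of replicas is commonly described as a spread strategy. The following is a simplified sketch loosely modeled on that idea, not Swarm’s actual scheduler: each replica goes to the eligible node currently running the fewest tasks, where “eligible” here simply means it has enough free CPU. `Node`, `place_replicas`, and the `web.N` task names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: float                      # CPU cores still unreserved
    tasks: list[str] = field(default_factory=list)


def place_replicas(service: str, replicas: int, cpu_per_task: float,
                   nodes: list[Node]) -> list[str]:
    """Spread-style placement sketch: fewest tasks wins among nodes that fit."""
    placements = []
    for slot in range(1, replicas + 1):
        # Filter: only nodes with enough free CPU are eligible.
        eligible = [n for n in nodes if n.cpu_free >= cpu_per_task]
        if not eligible:
            raise RuntimeError(f"no node can fit {service}.{slot}")
        # Spread: least-loaded node first; ties broken by name for determinism.
        target = min(eligible, key=lambda n: (len(n.tasks), n.name))
        target.tasks.append(f"{service}.{slot}")
        target.cpu_free -= cpu_per_task
        placements.append(target.name)
    return placements
```

For example, placing five 0.5-CPU `web` replicas on `[Node("node-1", 2.0), Node("node-2", 2.0), Node("node-3", 1.0)]` yields `["node-1", "node-2", "node-3", "node-1", "node-2"]`: every node gets one task before any node gets a second.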

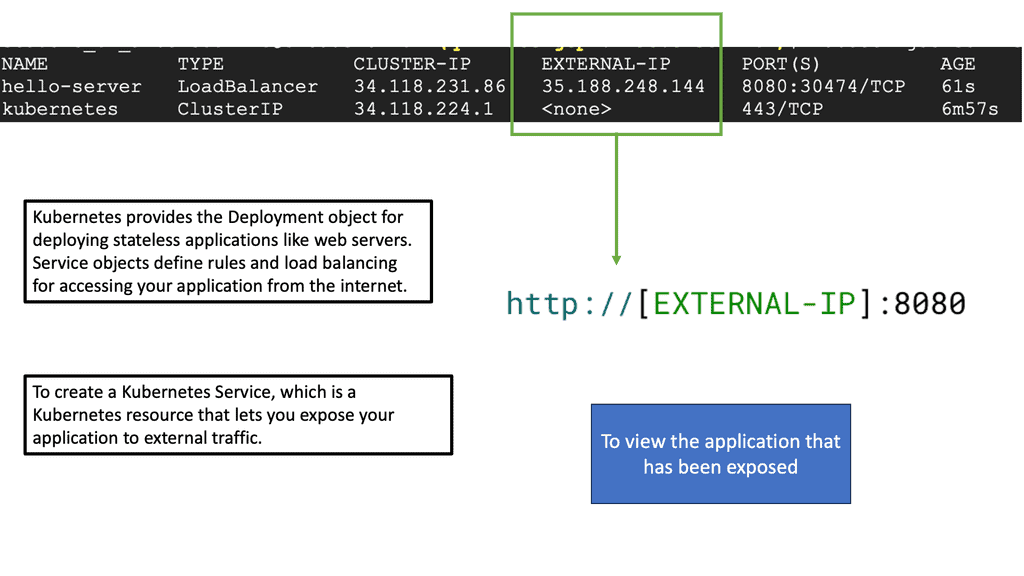

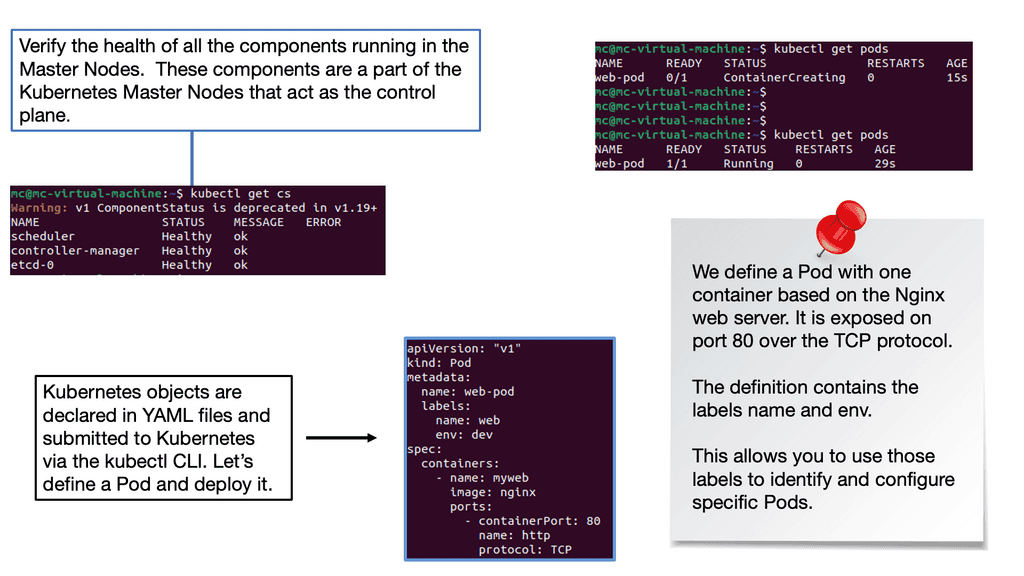

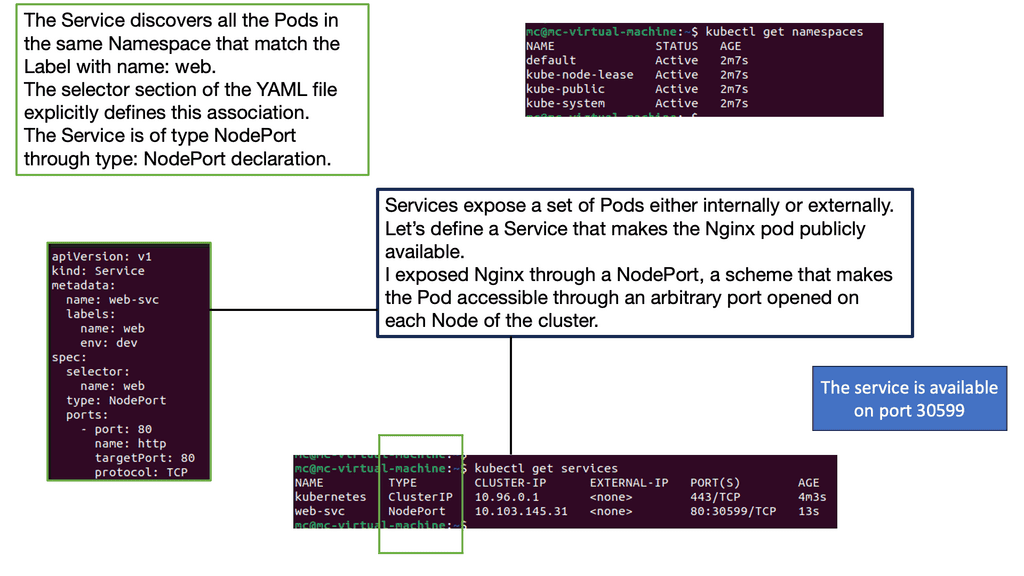

Scheduling with Kubernetes

Kubernetes employs a sophisticated scheduling system to assign containers to appropriate nodes in a cluster. The scheduling process considers various factors such as resource requirements, node capacity, affinity, anti-affinity, and custom constraints. Using intelligent scheduling algorithms, Kubernetes optimizes resource allocation, load balancing, and fault tolerance.
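kube-scheduler chooses a node in two phases: filtering, which drops nodes that cannot run the pod, and scoring, which ranks the remaining nodes. The sketch below mimics that shape with a single resource-fit filter and a “least allocated” style score; the real scheduler runs many plugins with configurable weights, so treat every name and formula here as a hypothetical simplification.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeInfo:
    name: str
    cpu_capacity: float       # allocatable CPU cores
    mem_capacity: float       # allocatable memory in GiB
    cpu_requested: float = 0.0
    mem_requested: float = 0.0


def fits(node: NodeInfo, cpu: float, mem: float) -> bool:
    """Filtering: the pod's requests must fit in what is still unreserved."""
    return (node.cpu_requested + cpu <= node.cpu_capacity
            and node.mem_requested + mem <= node.mem_capacity)


def least_allocated_score(node: NodeInfo, cpu: float, mem: float) -> float:
    """Scoring: 0-100, higher when more capacity would remain free."""
    cpu_left = (node.cpu_capacity - node.cpu_requested - cpu) / node.cpu_capacity
    mem_left = (node.mem_capacity - node.mem_requested - mem) / node.mem_capacity
    return 100 * (cpu_left + mem_left) / 2


def schedule(cpu: float, mem: float, nodes: list[NodeInfo]) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # no node fits: the pod would stay Pending
    best = max(feasible, key=lambda n: least_allocated_score(n, cpu, mem))
    # "Bind" the pod by reserving its requests on the chosen node.
    best.cpu_requested += cpu
    best.mem_requested += mem
    return best.name
```

A pod requesting 1 CPU and 2 GiB skips a node that is already nearly full on CPU and lands on whichever feasible node would keep the largest fraction of its capacity free.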

**Traditional Application**

Applications started with single-server deployments that needed no container scheduler. This deployment model was widely adopted, but it was inefficient: applications mapped to specific hardware do not scale. The landscape changed, and the application stack was divided into several tiers, because decoupling the application into a loosely coupled system is more efficient. Nowadays, the application is divided into different components spread across the network over various systems, dependencies, and physical servers.

**Example: OpenShift Networking**

An example of this is OpenShift networking. OpenShift is based on Kubernetes and borrows many of the Kubernetes constructs.

**The Process of Decoupling**

Application containerization drives the ability to decouple the application. As a result, containerized application deployments, and with them the need for a container scheduler, have increased massively. These changes also raise new security concerns that must be addressed with Docker container security.

The Kubernetes team conducts regular surveys on container usage, and their recent figures show growth in all areas: development, testing, pre-production, and production. Google has reported launching about 2 billion containers per week, and most of Google’s apps and services, such as its search engine, Docs, and Gmail, are packaged as Linux containers.

Container Scheduler

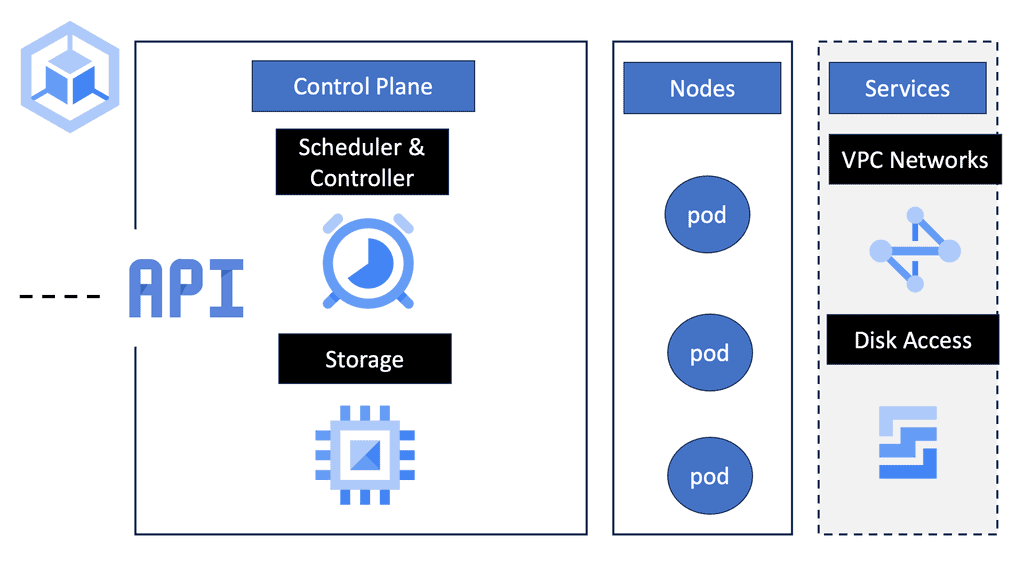

With a container orchestration layer, we are marrying the container scheduler’s decisions on where to place a container with the primitives provided by lower layers. The container scheduler knows where containers “live,” and we can consider it the absolute source of truth concerning a container’s location.

A container scheduler’s primary task, then, is to start containers on the most suitable host and connect them. It must also handle failures by performing automatic failovers, and it must be able to scale containers out when there is too much data for a single instance to process or compute.
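A failover step can be sketched as follows, assuming the scheduler keeps a map from container to host plus the set of hosts that currently pass their health checks. The function name and the “least-loaded healthy host” rule are illustrative assumptions, not the behavior of any particular scheduler.

```python
def fail_over(placement: dict[str, str], healthy: set[str]) -> dict[str, str]:
    """Return a new container->host map with no container on an unhealthy host."""
    if not healthy:
        raise RuntimeError("no healthy hosts left")
    # Count containers already running on each healthy host.
    load = {host: 0 for host in healthy}
    for host in placement.values():
        if host in healthy:
            load[host] += 1
    result = dict(placement)
    for container in sorted(placement):
        if placement[container] not in healthy:
            # Move it to the least-loaded healthy host (ties: alphabetical).
            target = min(sorted(healthy), key=lambda h: load[h])
            result[container] = target
            load[target] += 1
    return result
```

If `host-a` fails while running `web-1` and `db-1`, both containers are redistributed across `host-b` and `host-c` instead of piling onto a single survivor, and the original map is left untouched.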

Popular Container Schedulers:

1. Kubernetes: Kubernetes is an open-source container orchestration platform with a powerful scheduler. It provides extensive features for managing and orchestrating containers, making it widely adopted in the industry.

2. Docker Swarm: Docker Swarm is another popular container scheduler provided by Docker. It simplifies container orchestration by leveraging Docker’s ease of use and integrates well with existing workflows.

3. Apache Mesos: Mesos is a distributed systems kernel that provides a framework for managing and scheduling containers and other workloads. It offers high scalability and fault tolerance, making it suitable for large-scale deployments.

Understanding Container Schedulers

Container schedulers, such as Kubernetes and Docker Swarm, play a vital role in managing containers efficiently. These schedulers leverage a range of algorithms and policies to intelligently allocate resources, schedule tasks, and optimize performance. By abstracting away the complexities of machine management, container schedulers enable developers and operators to focus on application development and deployment, leading to increased productivity and streamlined operations.

Key Scheduling Features

To truly comprehend the value of container schedulers, it is essential to understand their key features and functionality. These schedulers excel in areas such as automatic scaling, load balancing, service discovery, and fault tolerance. By leveraging advanced scheduling techniques, such as bin packing and affinity/anti-affinity rules, container schedulers can effectively utilize available resources, distribute workloads evenly, and ensure high availability of services.
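Bin packing is the opposite instinct to spreading: it fills nodes as tightly as possible so that fewer machines are needed. A classic heuristic is first-fit decreasing, sketched below with CPU requests in millicores and identically sized nodes. This is a textbook illustration, not the algorithm of any specific scheduler, and the container names are hypothetical.

```python
def first_fit_decreasing(requests: dict[str, int],
                         node_capacity: int) -> list[dict[str, int]]:
    """Pack container CPU requests (millicores) onto identical nodes."""
    nodes: list[dict[str, int]] = []
    # Largest requests first tends to leave fewer unusable gaps.
    for name, req in sorted(requests.items(), key=lambda kv: (-kv[1], kv[0])):
        if req > node_capacity:
            raise ValueError(f"{name} needs {req}m, more than any node has")
        for node in nodes:
            if sum(node.values()) + req <= node_capacity:
                node[name] = req  # first node with room wins
                break
        else:
            nodes.append({name: req})  # nothing fits: open a new node
    return nodes
```

Packing `api` (1500m), `worker` (1200m), `cache` (800m), `cron` (500m), and `sidecar` (300m) onto 2000m nodes uses three nodes; since the requests total 4300m, three is also the minimum possible for this input.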

Kubernetes & Docker Swarm

Two of the most widely used container schedulers are Kubernetes and Docker Swarm. Both offer powerful features and a robust ecosystem, but they differ in architecture, scalability, and community support. By examining their strengths and weaknesses, organizations can make informed decisions on selecting the most suitable container scheduler for their specific requirements.

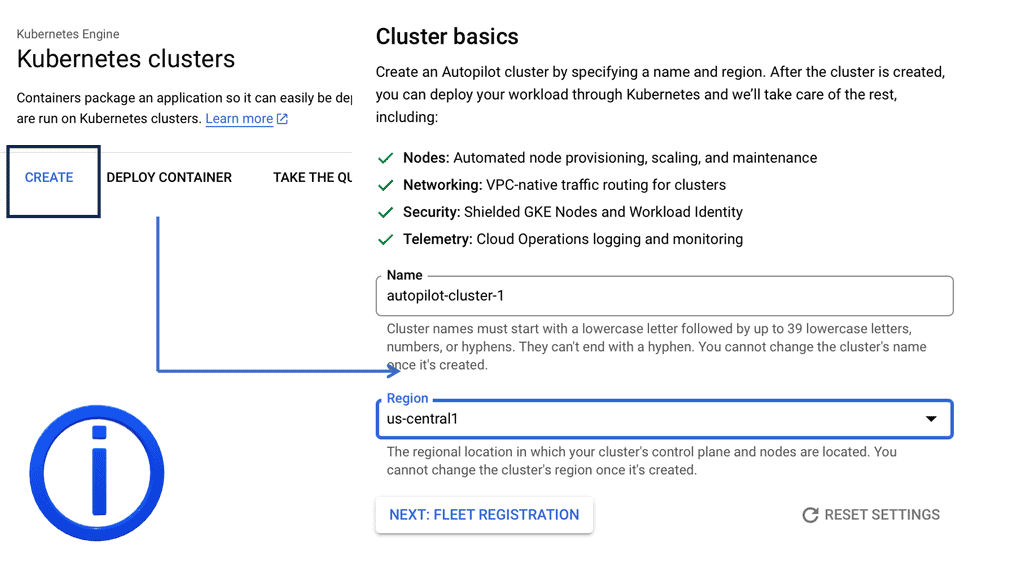

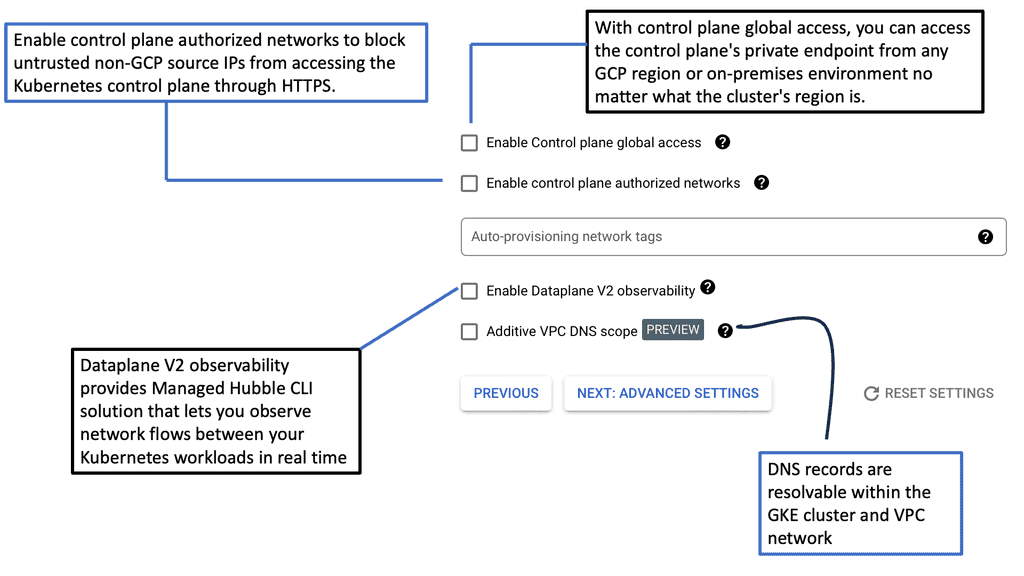

Kubernetes Clusters on Google Cloud

### Understanding Kubernetes Clusters

At the heart of Kubernetes is the concept of clusters. A Kubernetes cluster consists of a set of worker machines, known as nodes, that run containerized applications. Every cluster has at least one node and a control plane that manages the nodes and the workloads within the cluster. Scheduling decisions are made by a control plane component called the Kubernetes scheduler (kube-scheduler), which distributes pods efficiently across the nodes, optimizing resource utilization and maintaining system health.

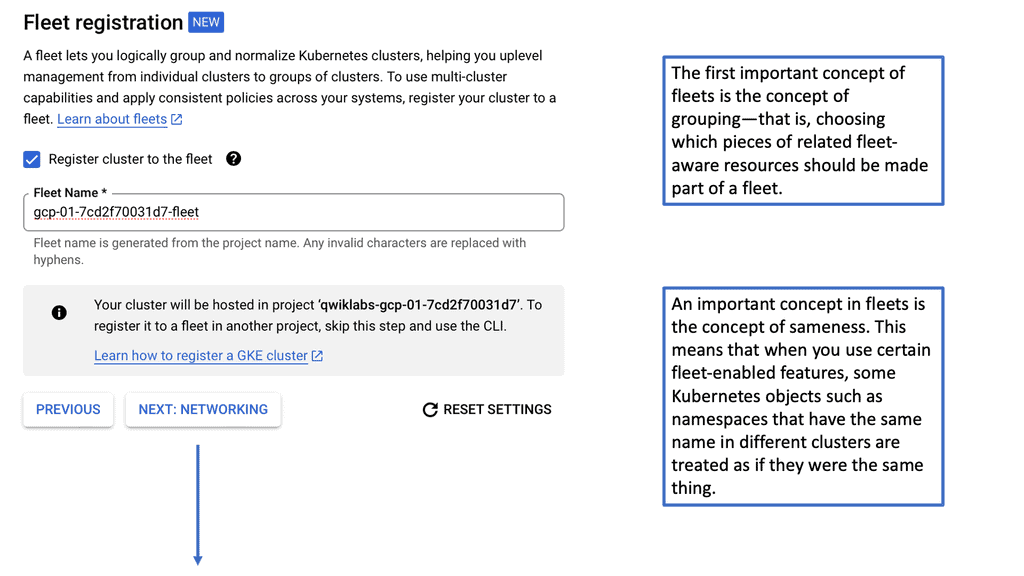

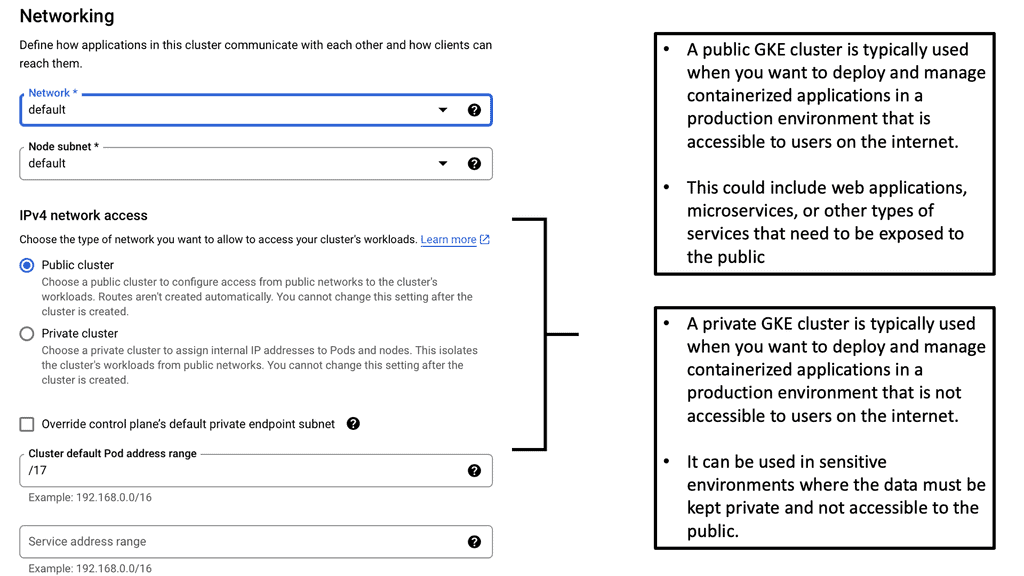

### Google Cloud and Kubernetes: A Perfect Match

Google Cloud offers a powerful integration with Kubernetes through Google Kubernetes Engine (GKE). This managed service allows developers to deploy, manage, and scale their Kubernetes clusters with ease, leveraging Google’s robust infrastructure. GKE simplifies cluster management by automating tasks such as upgrades, repairs, and scaling, allowing developers to focus on building applications rather than infrastructure maintenance. Additionally, GKE provides advanced features like auto-scaling and multi-cluster support, making it an ideal choice for enterprises looking to harness the full potential of Kubernetes.

### The Role of Container Schedulers

A critical component of Kubernetes is its container scheduler, which optimizes the deployment of containers across the available resources. The scheduler considers various factors, such as resource requirements, hardware/software/policy constraints, and affinity/anti-affinity specifications, to decide where to place new pods. This ensures that applications run efficiently and reliably, even as workloads fluctuate. By automating these decisions, Kubernetes frees developers from manual resource allocation, enhancing productivity and reducing the risk of human error.

**Key Features of Container Schedulers**

1. Resource Management: Container schedulers allocate appropriate resources to each container, considering factors such as CPU, memory, and storage requirements. This reduces contention between containers for the same resources, which would otherwise degrade performance.

2. Scheduling Policies: Schedulers implement various scheduling policies to allocate containers based on priorities, constraints, and dependencies. They ensure containers are placed on suitable nodes that meet the required criteria, such as hardware capabilities or network proximity.

3. Scalability and Load Balancing: Container schedulers enable horizontal scalability by automatically scaling up or down the number of containers based on demand. They also distribute the workload evenly across nodes, preventing any single node from becoming overloaded.

4. High Availability: Schedulers monitor the health of containers and nodes, automatically rescheduling failed containers to healthy nodes. This keeps applications available even when nodes fail or containers crash.
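For the automatic scaling described in point 3, the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipping any change when that ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies the formula and clamps the result to the configured minimum and maximum replica counts; it omits the real controller’s stabilization windows and missing-metric handling, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """HPA-style replica calculation (sketch, no stabilization window)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave it alone
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 200m CPU against a 100m target scale to 8, while a reading only 5% above target leaves the replica count unchanged.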

**Benefits of Container Schedulers**

1. Efficient Resource Utilization: Container schedulers optimize resource allocation, allowing organizations to get more from their infrastructure investments and reduce operational costs by minimizing resource wastage.

2. Improved Application Performance: Schedulers ensure containers have the necessary resources to operate at their best, preventing resource contention and bottlenecks.

3. Simplified Management: Container schedulers automate the deployment and management of containers, reducing manual effort and enabling faster application delivery.

4. Flexibility and Portability: With container schedulers, applications can be easily moved and deployed across different environments, whether on-premises, in the cloud, or in hybrid setups. This flexibility allows organizations to adapt to changing business needs.

Containers – Raising the Abstraction Level

Containers raise the abstraction level. Previously, the abstraction was at the VM level; with containers, it moves up one layer. So, instead of virtual hardware, you have an idealized OS stack.

1 -) Containers change the way applications are packaged. They allow application tiers to be packaged and isolated, so all dependencies are confined to individual islands and do not conflict with other stacks. Containers provide a simple way to package all application pieces into an easily deployable unit. The ability to create different units radically simplifies deployment.

2 -) It creates a predictable, isolated stack with ALL userland dependencies. Each application is isolated from others, and its dependencies are sealed in. Dependency conflicts are a notorious problem because they slow down deployment lifecycles; containers combat this and fundamentally change the operational landscape. Docker and rkt (Rocket) were the main Linux application container stacks in production; rkt has since been discontinued.

3 -) Containers don’t magically appear. They need assistance with where to go; this is the role of the container scheduler. The scheduler’s main job is to start the container on the correct host and connect it. In addition, the scheduler needs to monitor the containers and deal with container/host failures.

4 -) The main schedulers are Docker Swarm, Kubernetes (originally developed at Google), and Apache Mesos. Docker Swarm is probably the easiest to start with, and it is not tied to any cloud provider. You hand the cluster scheduler a set of requirements, for example: “I have this amount of resources and want to run five copies of this software, each with this amount of CPU and disk space; now find me a place.”

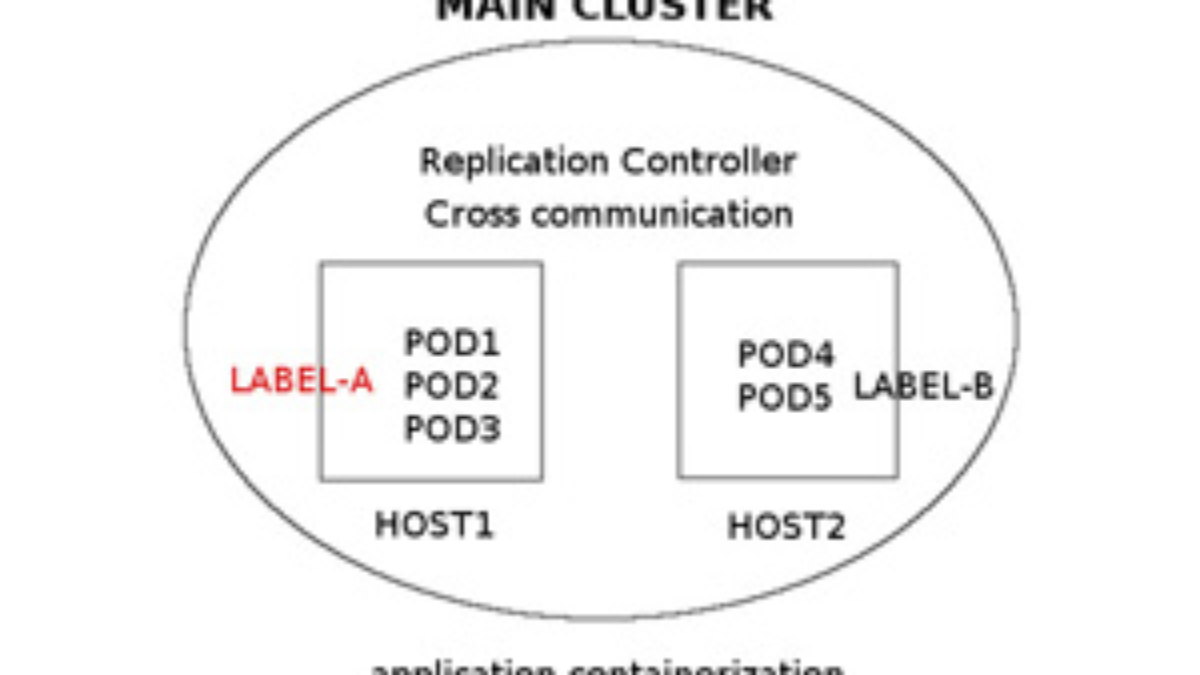

Kubernetes – Container scheduler

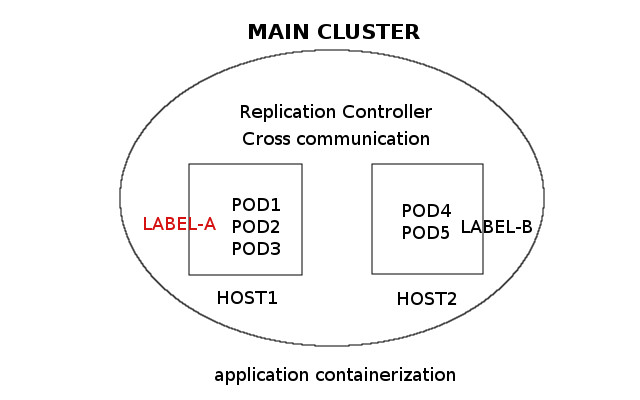

Hands-on with Kubernetes. Kubernetes is an open-source cluster solution for containerized environments. It aims to make deploying microservice-based applications easy by using the concepts of PODS and LABELS to group containers into logical units. All containers run inside a POD.

PODS are the main difference between Kubernetes and other scheduling solutions. Initially, Kubernetes focused on continuously running stateless applications and “cloud native” stateful applications; support for other workload types, such as batch Jobs, came later.

Kubernetes Networking 101

Kubernetes is not only concerned with the deployment phase; it works across the entire operational model: scheduling, updating, maintenance, and scaling. Unlike traditional orchestration tools, it continuously works to make the actual state match the user’s requirements. Kubernetes also monitors the system and heals it if something goes wrong.

The Google team refers to this as a flight control mechanism. It decouples applications from the underlying machines: application containers see the cluster as a sea of compute, an entirely homogeneous pool in which every machine in the fleet looks the same. The application is completely decoupled from low-level computing.

The unit of work has changed

The user no longer needs to care about physical placement. The unit of work has changed and become a service: the administrator only needs to specify what a service requires, such as the amount of CPU, RAM, and disk space. The physical location is abstracted away and handled by the Kubernetes components.

This does not mean that application components are spread randomly. For example, some application components must run on the same host. However, selecting the hosts is no longer the user’s job. Kubernetes provides an abstraction layer over the infrastructure that enables this type of management.

Containers are scheduled onto a homogeneous pool of resources. The VM disappears, and you think in terms of resources such as CPU and RAM; everything else, like location, is abstracted away.

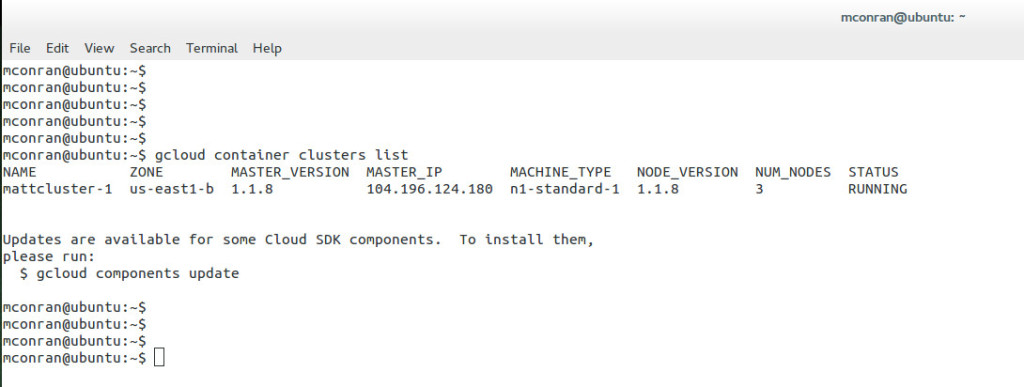

Kubernetes pod and label

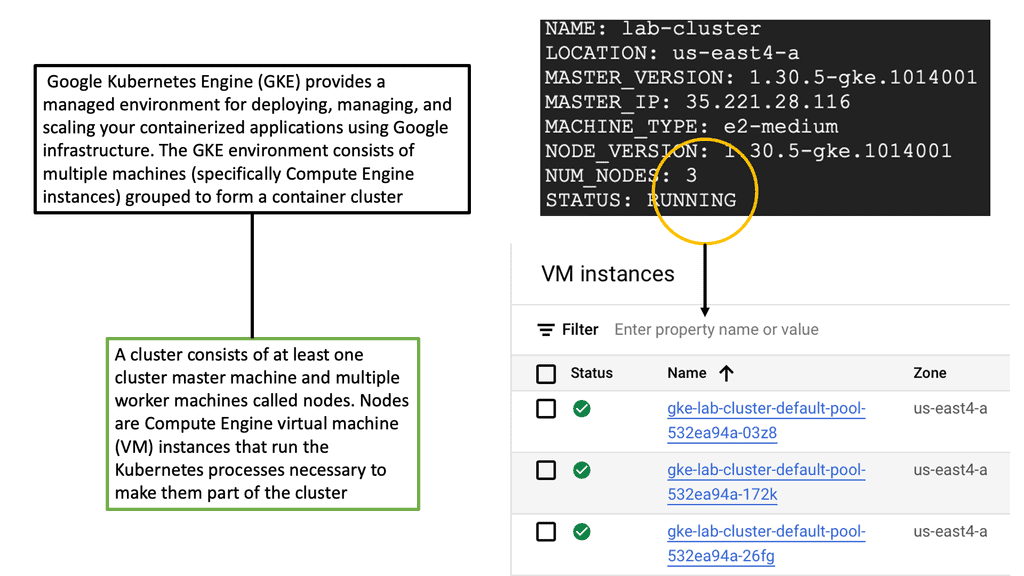

The main building blocks of Kubernetes clusters are PODS and LABELS. The first step is to create a cluster; once that is complete, you can proceed to PODS and other services. The diagram below shows the creation of a Kubernetes cluster consisting of three nodes in the us-east1-b zone.

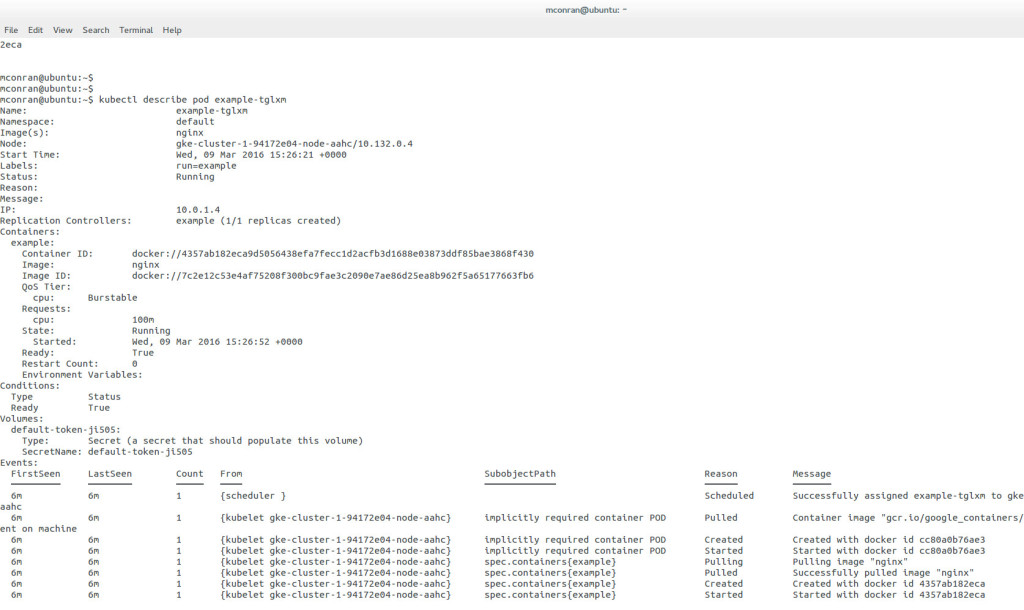

A POD is a group of containers running within a shared context. Containers within a POD share fate and some resources, such as volumes and an IP address, and they are always scheduled onto the same host. When you create a POD, you should also create a Kubernetes replication controller; in current Kubernetes releases, a Deployment managing a ReplicaSet fills this role.

The replication controller monitors POD health and starts new PODS as required. Most PODS should be managed by a replication controller, but one may not be needed if your POD is short-lived and writes only non-persistent data that won’t survive a restart. There are two types of PODS: single-container and multi-container.

The following diagram displays the full details of a POD named example-tglxm. It has the label run=example and is located in the default namespace.

A POD may contain either a single container with a private volume or a group of containers with a shared volume. If a container fails within a POD, the kubelet automatically restarts it. However, if an entire POD or host fails, the replication controller must recreate the POD, typically on another host.

Replication to another host must be configured explicitly; it is not automatic by default. The Kubernetes replication controller continuously adjusts the number of running PODS to match the required count: if there are too many, it kills some; if there are not enough, it starts more.
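This behavior is a reconciliation loop: compare the desired count with what is actually running and act on the difference. Below is a minimal sketch of one pass, with hypothetical POD names. Which surplus PODS get stopped is a simplifying assumption here (the last ones in the list); Kubernetes ranks PODS by its own criteria when scaling down.

```python
def reconcile(desired: int, running: list[str],
              prefix: str = "pod") -> dict[str, list[str]]:
    """One pass of a replica control loop: what to start and what to stop."""
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    surplus = len(running) - desired
    if surplus > 0:
        # Too many: stop the last `surplus` PODS (a simplifying choice).
        return {"start": [], "stop": running[-surplus:]}
    # Too few (or exactly right): pick unused names for the missing PODS.
    existing, to_start, i = set(running), [], 0
    while len(to_start) < -surplus:
        name = f"{prefix}-{i}"
        if name not in existing:
            to_start.append(name)
        i += 1
    return {"start": to_start, "stop": []}
```

Run repeatedly, a loop like this converges on the desired count whether PODS died unexpectedly or were added by hand; when the counts already match, it returns no actions.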

Kubernetes operates with the concept of LABELS: key-value pairs attached to objects, such as a POD. A label is a tag that can be queried. Because labels are independent of where objects run, you can use them to select objects anywhere in the cluster.

For example, if all frontend containers carry a label such as tier=frontend, selecting on that label returns every frontend, wherever it runs in the cluster. Labels are also building blocks for other services, such as port mappings: a POD whose labels match a service’s selector is reachable through that service’s port.
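Equality-based label selection, the kind used by `kubectl get pods -l run=example`, boils down to checking that every key/value pair in the selector is present on the object. A minimal sketch follows; apart from example-tglxm and its run=example label, the POD names and labels are hypothetical, and set-based selectors (`in`, `notin`, `exists`) are not covered.

```python
def matches(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """Equality-based selector: every key=value pair must be on the object."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(pods: dict[str, dict[str, str]], selector: dict[str, str]) -> list[str]:
    """Names of all PODS whose labels satisfy the selector, sorted."""
    return sorted(name for name, labels in pods.items() if matches(labels, selector))


pods = {
    "example-tglxm": {"run": "example"},
    "fe-1": {"tier": "frontend", "env": "prod"},
    "fe-2": {"tier": "frontend", "env": "staging"},
    "db-0": {"tier": "backend", "env": "prod"},
}
```

Here `select(pods, {"tier": "frontend"})` returns both frontends, while adding `"env": "prod"` to the selector narrows the result to `fe-1`.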

Closing Points on Container Scheduler

At its core, a container scheduler is a system that automates the deployment, scaling, and operation of application containers. It intelligently assigns workloads to available computing resources, ensuring optimal performance and availability. Popular container schedulers like Kubernetes, Docker Swarm, and Apache Mesos have become essential tools for organizations aiming to leverage the full potential of containerization.

Container schedulers come packed with features that enhance the management and orchestration of containers:

– **Automated Load Balancing**: They dynamically distribute incoming application traffic to ensure a balanced load across containers, preventing any single resource from becoming a bottleneck.

– **Self-Healing Capabilities**: In the event of a failure, container schedulers can automatically restart or reschedule containers to maintain application availability.

– **Efficient Resource Utilization**: By intelligently allocating resources based on demand, schedulers ensure that your infrastructure is used efficiently, minimizing costs and maximizing performance.
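Two common algorithms behind automated load balancing are round-robin, which hands requests to backends in turn, and least connections, which picks the backend with the fewest active requests. The classes below are minimal illustrative sketches with hypothetical backend names, not the implementation used by any particular scheduler or proxy.

```python
import itertools


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends: list[str]) -> None:
        self._cycle = itertools.cycle(backends)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Pick the backend with the fewest in-flight requests."""

    def __init__(self, backends: list[str]) -> None:
        self.active = {b: 0 for b in backends}

    def pick(self) -> str:
        # min() keeps the first backend on ties, i.e. insertion order.
        backend = min(self.active, key=self.active.__getitem__)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        self.active[backend] -= 1  # call when the request finishes
```

Round-robin ignores how busy each backend is, which is fine for uniform requests; least connections adapts when some requests take much longer than others.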

Selecting the right container scheduler depends on several factors, including your organization’s infrastructure, scale, and specific use cases. Kubernetes is the most widely adopted due to its rich feature set and community support, making it an excellent choice for complex, large-scale deployments. Docker Swarm, on the other hand, offers simplicity and ease of use, making it ideal for smaller projects or teams new to container orchestration.

To maximize the benefits of container schedulers, consider the following best practices:

– **Define Clear Resource Limits**: Set appropriate resource limits for your containers to prevent resource contention and ensure stable performance.

– **Implement Security Measures**: Use network policies and role-based access controls to secure your containerized environments.

– **Monitor and Optimize**: Continuously monitor your containerized applications and adjust resource allocations as needed to optimize performance.
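As an illustration of the first practice, the check below flags containers that lack a CPU or memory limit, or whose request exceeds its limit (Kubernetes rejects a container whose request is larger than its limit). The dict layout loosely mirrors a pod spec’s `resources` block but uses plain numbers (millicores and MiB) instead of Kubernetes quantity strings; `check_resources` is a hypothetical helper, not a real admission check.

```python
def check_resources(containers: dict[str, dict[str, dict[str, int]]]) -> list[str]:
    """Flag missing limits and requests that exceed their limit."""
    problems = []
    for name, res in sorted(containers.items()):
        requests = res.get("requests", {})
        limits = res.get("limits", {})
        for resource in ("cpu", "memory"):
            req, lim = requests.get(resource), limits.get(resource)
            if lim is None:
                problems.append(f"{name}: no {resource} limit")
            elif req is not None and req > lim:
                problems.append(f"{name}: {resource} request {req} exceeds limit {lim}")
    return problems


containers = {
    "api": {"requests": {"cpu": 250, "memory": 256}, "limits": {"cpu": 500, "memory": 512}},
    "worker": {"requests": {"cpu": 1000}, "limits": {"cpu": 500}},
}
```

For this input, `api` passes, while `worker` is flagged twice: its CPU request exceeds its limit, and it has no memory limit at all.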

Summary: Container Scheduler

Container scheduling plays a crucial role in modern software development and deployment. It efficiently manages and allocates resources to containers, ensuring optimal performance and minimizing downtime. In this blog post, we explored the world of container scheduling, its importance, key strategies, and popular tools used in the industry.

Understanding Container Scheduling

Container scheduling involves orchestrating the deployment and management of containers across a cluster of machines or nodes. It ensures that containers run on the most suitable resources while considering resource utilization, scalability, and fault tolerance factors. By intelligently distributing workloads, container scheduling helps achieve high availability and efficient resource allocation.

Key Strategies for Container Scheduling

1. Load Balancing: Load balancing evenly distributes container workloads across available resources, preventing any single node from being overwhelmed. Popular load-balancing algorithms include round-robin and least connections.

2. Resource Constraints: Container schedulers consider resource constraints such as CPU, memory, and disk space when allocating containers. By understanding the resource requirements of each container, schedulers can make informed decisions to avoid resource bottlenecks.

3. Affinity and Anti-Affinity: Schedulers can leverage affinity rules to ensure containers with specific requirements are placed together on the same node. Conversely, anti-affinity rules can separate containers that may interfere with each other.
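Hard affinity and anti-affinity rules act as filters on candidate nodes. The sketch below assumes each node is described by the set of app labels already running on it and applies rules at node level only; Kubernetes additionally supports soft (preferred) rules and other topology domains such as zones. All names are hypothetical.

```python
from typing import Optional


def allowed_nodes(nodes: dict[str, set[str]],
                  affinity_with: Optional[str] = None,
                  anti_affinity_with: Optional[str] = None) -> list[str]:
    """Apply hard, node-level affinity/anti-affinity rules as filters.

    `nodes` maps a node name to the app labels already running on it.
    """
    allowed = []
    for node, apps in sorted(nodes.items()):
        if affinity_with is not None and affinity_with not in apps:
            continue  # affinity: must share a node with this app
        if anti_affinity_with is not None and anti_affinity_with in apps:
            continue  # anti-affinity: must avoid nodes running this app
        allowed.append(node)
    return allowed
```

With `{"node-1": {"web", "cache"}, "node-2": {"web"}, "node-3": set()}`, a new cache replica that must sit next to `web` but away from other cache replicas can only go to `node-2`.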

Popular Container Scheduling Tools

1. Kubernetes: Kubernetes is a leading container orchestration platform with robust scheduling capabilities. It offers advanced features like auto-scaling, rolling updates, and cluster workload distribution.

2. Docker Swarm: Docker Swarm is a native clustering and scheduling tool for Docker containers. It simplifies the management of containerized applications and provides fault tolerance and high availability.

3. Apache Mesos: Mesos is a flexible distributed systems kernel that supports multiple container orchestration frameworks. It provides fine-grained resource allocation and efficient scheduling across large-scale clusters.

Conclusion:

Container scheduling is critical to modern software deployment, enabling efficient resource utilization and improved performance. Organizations can optimize their containerized applications by leveraging strategies like load balancing, resource constraints, and affinity rules. Furthermore, popular tools like Kubernetes, Docker Swarm, and Apache Mesos offer powerful scheduling capabilities to manage container deployments effectively. Embracing container scheduling technologies empowers businesses to scale their applications seamlessly and deliver high-quality services to end-users.

