Neutron Network

In today's interconnected world, the importance of a robust and efficient network infrastructure cannot be emphasized enough. One technology that has been making waves in the networking realm is Neutron Network. In this blog post, we will delve into the intricacies of Neutron Network and explore its potential to bridge the digital divide.

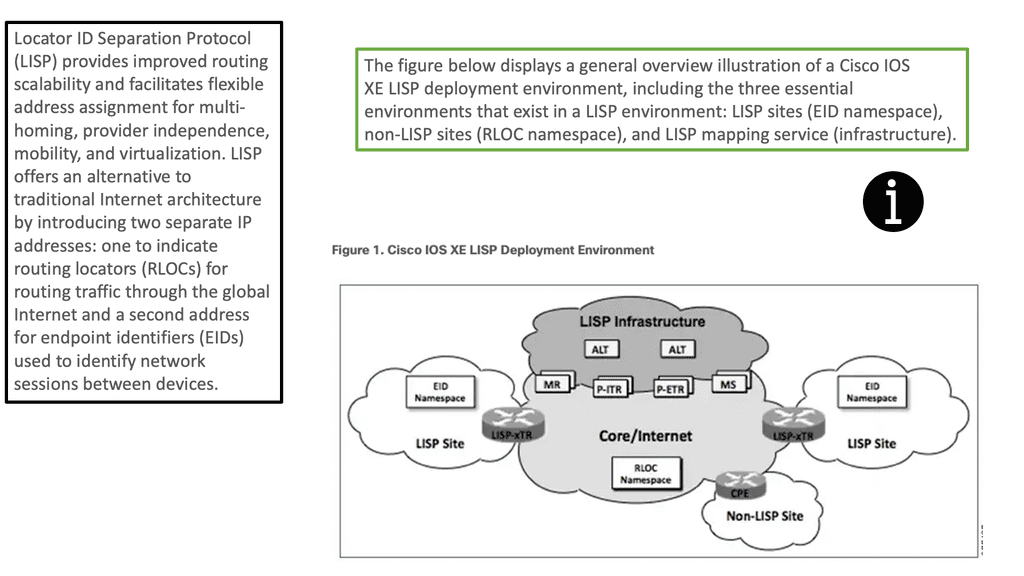

Neutron Network, a component of OpenStack, is a software-defined networking (SDN) project that provides networking capabilities as a service for other OpenStack services. It enables the creation and management of virtual networks, routers, and security groups, offering a flexible and scalable solution for network infrastructure.

Neutron Network offers a wide range of features that make it an ideal choice for modern network deployments. From network segmentation and isolation to load balancing and firewall services, Neutron Network empowers administrators with granular control and enhanced security. Additionally, its integration with other OpenStack components allows for seamless management and orchestration of the entire infrastructure.

The versatility of Neutron Network opens up a plethora of use cases across various industries. In the realm of cloud computing, Neutron Network enables the creation of virtual networks for tenants, ensuring isolation and security. It also finds applications in data centers, enabling efficient traffic routing and load balancing. Moreover, with the rise of edge computing, Neutron Network plays a crucial role in connecting distributed devices and facilitating real-time data transfer.

While Neutron Network offers a plethora of advantages, it is essential to acknowledge and address the challenges it may pose. Some common limitations include complex initial setup, scalability concerns, and potential performance bottlenecks. However, with proper planning, optimization, and ongoing development, these challenges can be mitigated, ensuring a smooth and efficient network infrastructure.

Neutron Network emerges as a powerful tool in bridging the digital divide, empowering organizations to build robust and flexible network infrastructures. With its extensive features, seamless integration, and diverse applications, Neutron Network paves the way for enhanced connectivity, improved security, and efficient data transfer. Embracing this technology can unlock new possibilities and propel businesses into the future of networking.

Matt Conran

Highlights: Neutron Network

Understanding Neutron Network

Neutron Network is an open-source networking project that provides networking services to virtual machines and containers within an OpenStack environment. It serves as the networking component of OpenStack, enabling users to create and manage networks, subnets, routers, and security groups. By abstracting the underlying network infrastructure, Neutron Network offers flexibility, scalability, and simplified network management.

Neutron Network boasts an impressive array of features that empower users to build robust and secure networks. Some of its key features include:

1. Network Abstraction: Neutron Network allows users to define and manage networks using a variety of network types, such as flat, VLAN, VXLAN, or GRE. This flexibility enables seamless integration with existing network infrastructure.

2. Security Groups: With Neutron Network, users can define security groups and associated rules to control traffic flow and enforce security policies. This granular level of security helps protect workloads from unauthorized access and potential threats.

3. Load Balancing: Neutron Network offers built-in load balancing capabilities, allowing users to distribute traffic across multiple instances. This ensures high availability, scalability, and optimal performance for applications and services.

Neutron Network finds application in various scenarios, catering to a wide range of use cases. Some notable use cases include:

1. Multi-Tenant Environments: Neutron Network enables the creation of isolated networks for different tenants within an OpenStack cloud. This segregation ensures secure and independent network environments, making it ideal for service providers and enterprises with multiple clients.

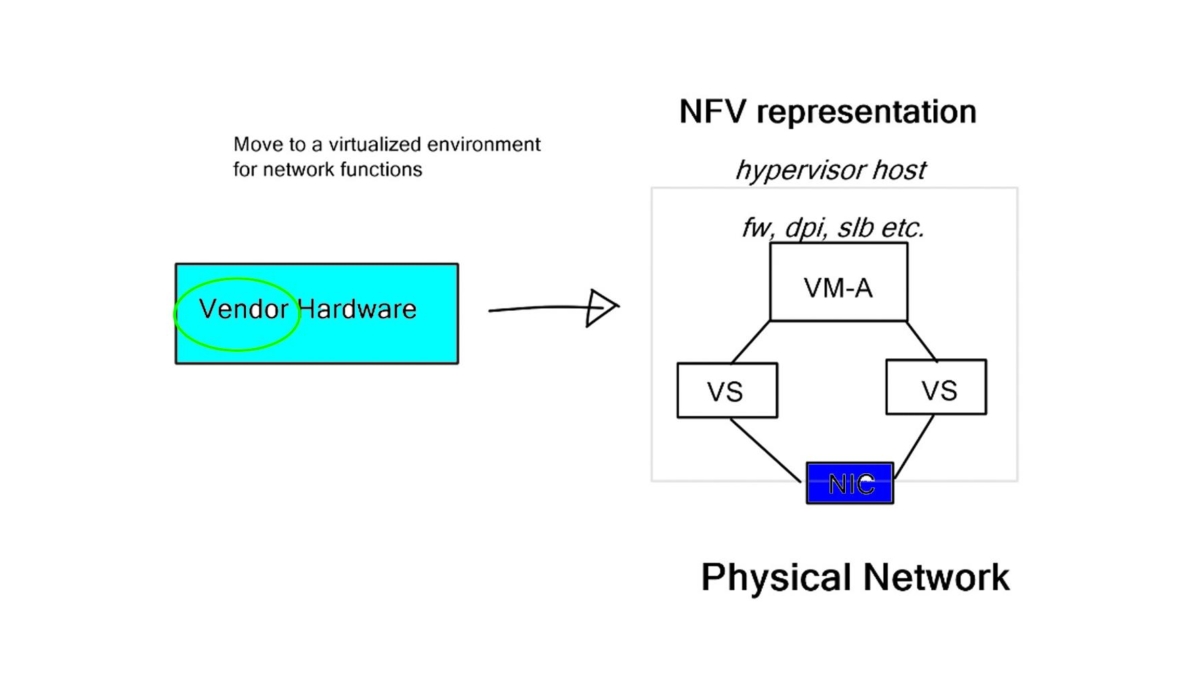

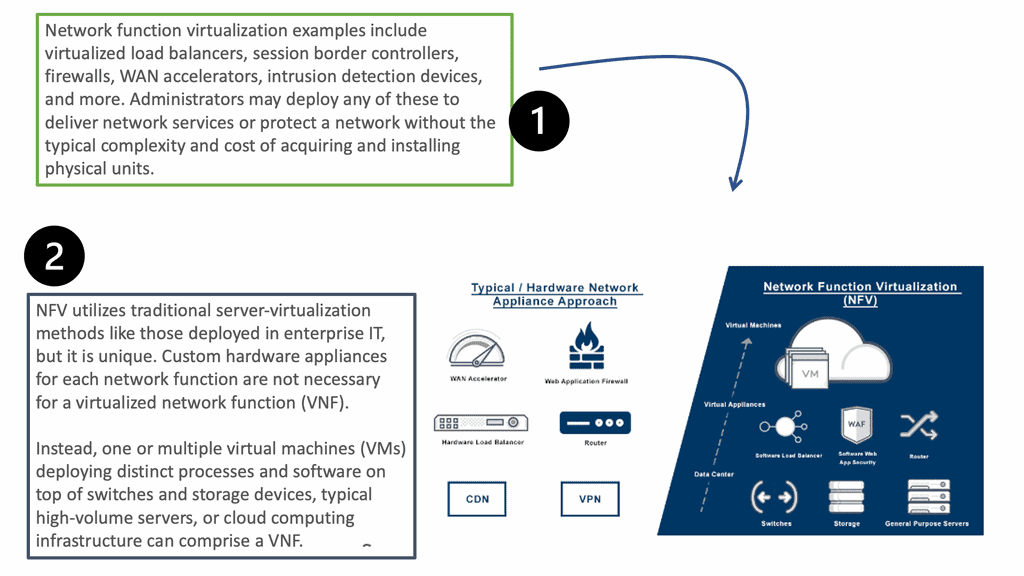

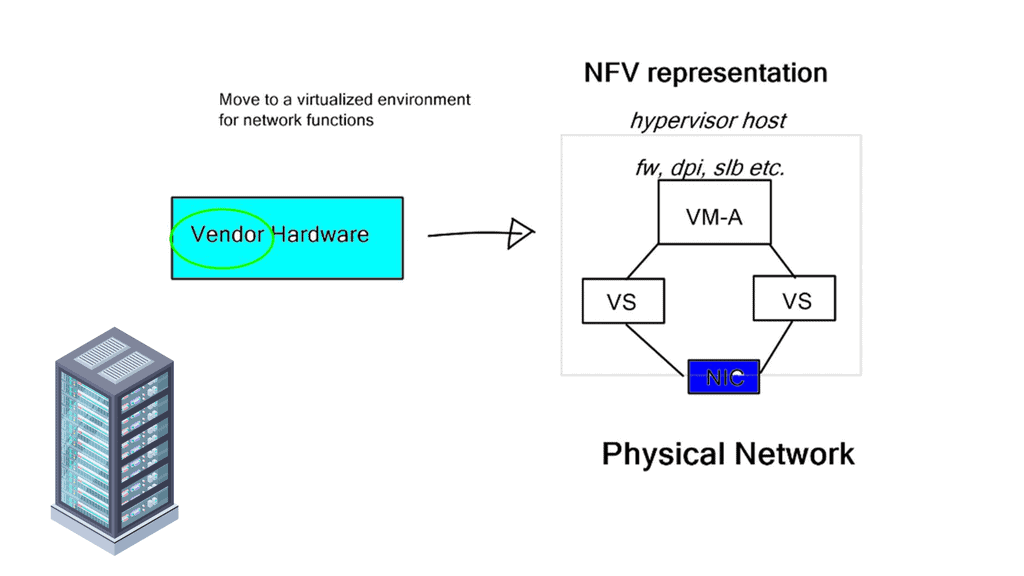

2. NFV (Network Function Virtualization): Neutron Network plays a crucial role in NFV deployments, where network functions are virtualized. It facilitates the creation and management of virtual network functions (VNFs), enabling efficient network service delivery and orchestration.

The need for virtual networking

Today’s data center networks contain more devices than ever. Servers, switches, routers, storage systems, and security appliances are now available as virtual machines and virtual network appliances, so a scalable, automated approach is needed to manage next-generation networks. OpenStack gives users the flexibility and control to provision and manage their infrastructure more easily and quickly.

OpenStack Networking is a pluggable, scalable, API-driven system that manages networks and IP addresses on OpenStack clouds. Like other core OpenStack components, it allows users and administrators to maximize the value and utilization of existing data center resources. Like Nova (compute), Glance (images), Keystone (identity), Cinder (block storage), and Horizon (dashboard), Neutron (networking) is a stand-alone service. OpenStack Networking can run on a single server or be distributed across multiple hosts to provide resiliency and redundancy.

With OpenStack Networking, users submit requests through an application programming interface (API), and the configured plugins carry out the additional processing. Cloud operators can enhance and power the cloud by defining network connectivity with different networking technologies. Neutron requires access to a database to store network configurations persistently.

Understanding Open vSwitch

Open vSwitch, often called OVS, is a multi-layer virtual switch that enables network automation and virtualization. It is designed to work seamlessly with hypervisors, containers, and cloud environments, providing a flexible and scalable networking solution. By integrating with various virtualization technologies, Open vSwitch allows for efficient network traffic management, ensuring optimal performance and reliability.

Features and Benefits of Open vSwitch

Open vSwitch offers an array of features that make it a preferred choice for network administrators and developers. Some key features include support for standard management interfaces, virtual and physical network integration, VLAN and VXLAN support, and flow-based forwarding. Additionally, OVS supports advanced features like Quality of Service (QoS), network slicing, and load balancing, empowering network operators to create dynamic and efficient networks.

OVS Use Cases

Open vSwitch finds applications in a wide range of use cases. One prominent use case is network virtualization, where OVS enables the creation of virtual networks isolated from the physical infrastructure. This allows for better resource utilization, enhanced security, and simplified network management.

OVS is also extensively used in cloud environments, facilitating seamless connectivity and virtual machine migration across data centers. Furthermore, Open vSwitch is leveraged in Software-Defined Networking (SDN) deployments, enabling centralized network control and programmability.

**Application Program Interface**

Neutron’s pluggable application programming interface (API) architecture enables the management of network services, including container networking, in public or private cloud environments. The API allows users to interact with Neutron networking constructs, such as routers and switches, enabling instance reachability. OpenStack networking was initially built into Nova but lacked flexibility for advanced designs. It was adequate for large Layer 2 networks, but most environments require better multi-tenancy with advanced load balancing and firewalling functionality.

**Decoupled Layer 3 Approach**

This limited networking functionality gave rise to Neutron, which offers a decoupled Layer 3 approach. It operates an agent-database model in which the API receives calls and sends them to agents installed locally on the hosts. Without this exchange between the API and the agents, there would be no communication or connectivity between the platforms on your hosts.


Neutron Network

Understanding Neutron’s Architecture

Neutron’s architecture is designed to be highly modular and pluggable, allowing operators to choose from a wide array of network services and plugins. At its core, Neutron consists of several components, including the Neutron server, plugins, and agents. The Neutron server is responsible for managing the high-level network state, while plugins handle the actual configuration of the low-level networking details across different technologies. Agents work as the intermediaries, ensuring that the network state is correctly applied to the physical or virtual network infrastructure.

**Advantages of Using Neutron in OpenStack**

Neutron provides several advantages for cloud administrators and users. Its modular architecture allows for flexibility and customization to meet the specific networking needs of different organizations. Additionally, Neutron supports advanced networking features such as security groups, floating IPs, and VPN services, which enhance the security and functionality of cloud deployments. By utilizing Neutron, organizations can efficiently manage their network resources, ensuring high availability and performance.

**Challenges and Considerations**

While Neutron offers numerous benefits, it also presents some challenges. Configuring and managing Neutron can be complex, especially in large-scale deployments. It’s essential to have a deep understanding of networking concepts and OpenStack’s architecture to avoid potential pitfalls. Additionally, integrating Neutron with existing network infrastructure may require careful planning and coordination.

Key Features and Benefits:

1. Network Virtualization: Neutron Network leverages network virtualization technologies such as VLANs, VXLANs, and GRE tunnels to create isolated virtual networks. This allows tenants to have complete control over their network resources without interference from other tenants.

2. Scalability: Neutron’s distributed architecture can scale horizontally to accommodate many virtual networks and instances. This ensures that cloud environments can handle increased workloads without compromising performance.

3. Network Segmentation: Neutron Network supports network segmentation, allowing tenants to partition their virtual networks based on specific requirements. This enables better network isolation, security, and performance optimization.

4. Flexible Network Topologies: Neutron provides the flexibility to create a variety of network topologies, including flat networks, VLAN-based networks, and overlay networks. This adaptability empowers users to design their networks according to their unique needs.

5. Integration with Security Services: Neutron Network integrates seamlessly with OpenStack’s security services, such as Firewall-as-a-Service (FWaaS) and Virtual Private Network-as-a-Service (VPNaaS). This integration enhances network security by providing additional layers of protection.

6. Load Balancing and VPN Services: Neutron Network offers load balancing services, allowing users to distribute network traffic across multiple instances for improved performance and reliability. Additionally, it supports VPN services to establish secure connections between different networks or remote users.

Neutron Network Architecture:

Under the hood, Neutron Network consists of several components working together to provide a robust networking service. The main elements include:

– Neutron API: Provides a RESTful API for users to interact with Neutron Network and manage their network resources.

– Neutron Core Plugin: The central component responsible for handling network-related requests and managing network plugins.

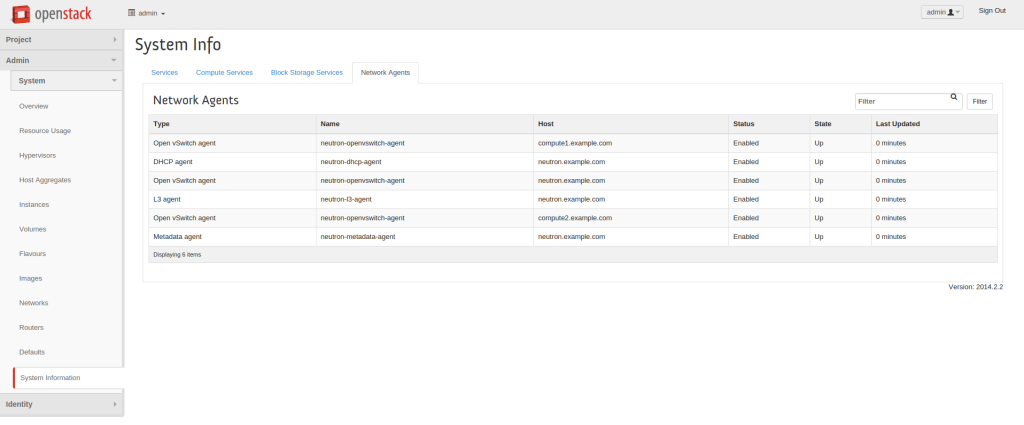

– Neutron Agents: Various agents, such as the DHCP agent, L3 agent, and OVS agent, ensure the smooth operation of the Neutron Network by handling specific tasks like DHCP allocation, routing, and switching.

– Network Plugins: Neutron supports multiple plugins, such as the Open vSwitch (OVS) plugin and the Linux Bridge plugin, which provide different network virtualization capabilities and integrate with various networking technologies.
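As a rough illustration of the API component, the sketch below builds the JSON bodies a client might POST to the Networking API v2.0 to create a network and then a subnet. The paths and field names follow the public API; the helper names (`network_request`, `subnet_request`) and the sample values are hypothetical, and no HTTP call is made.

```python
import json


def network_request(name):
    """Return (path, body) for creating a network."""
    return "/v2.0/networks", {"network": {"name": name, "admin_state_up": True}}


def subnet_request(network_id, cidr, ip_version=4):
    """Return (path, body) for creating a subnet on an existing network."""
    body = {
        "subnet": {
            "network_id": network_id,
            "cidr": cidr,
            "ip_version": ip_version,
        }
    }
    return "/v2.0/subnets", body


if __name__ == "__main__":
    path, body = network_request("demo-net")
    print("POST", path, json.dumps(body))
    # "NETWORK-UUID" is a placeholder; the real ID comes from the first response.
    path, body = subnet_request("NETWORK-UUID", "10.0.0.0/24")
    print("POST", path, json.dumps(body))
```

In a real deployment the request would also carry a Keystone token, and the server would hand the work to the configured plugin and agents.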

OpenStack Network Types

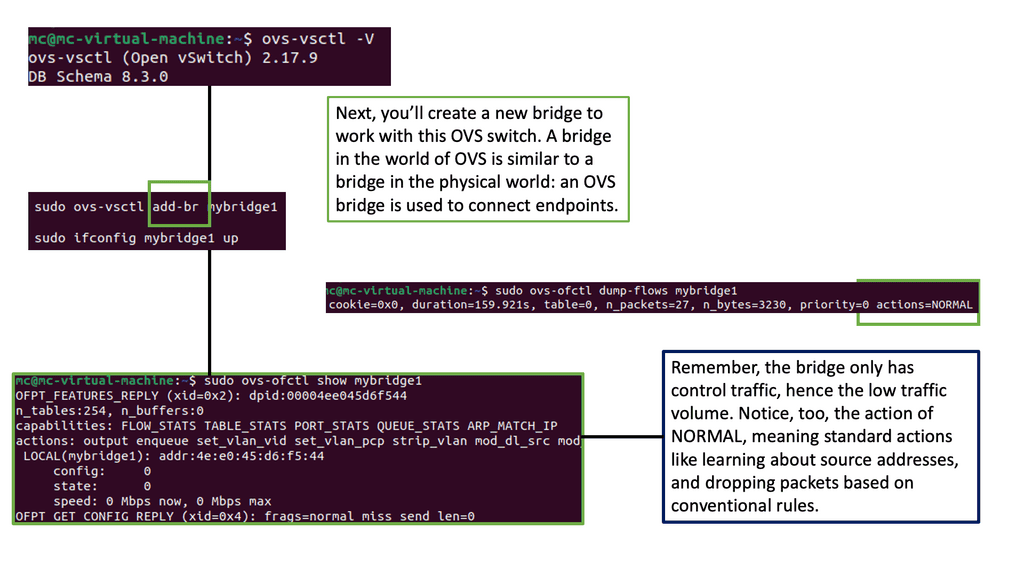

Logical network information is stored in the database. Plugins and agents extract the data and carry out the necessary low-level functions to build the virtual network, enabling instance connectivity. For example, the Open vSwitch agent converts information in the Neutron database into Open vSwitch flows and updates the local flows to match the network design after topology changes. Agents and plugins build the network based on the logical data model. The screenshot below illustrates the agent-to-host installation.

Neutron Networking: Network, Subnets, and Ports

Neutron consists of three elements that form the foundation for OpenStack network types: Networks, Subnets, and Ports. A network is a standard Layer 2 broadcast domain to which subnets and ports are assigned. A subnet is an IPv4 or IPv6 address block (IPAM, IP Address Management) assigned to a network.

A port is a connection point with properties similar to those of a physical port, except that it is virtual. Ports have media access control addresses ( MAC ) and IP addresses. All port information is stored in the Neutron database, which plugins/agents use to stitch and build the virtual infrastructure.
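The relationship between the three entities can be sketched as a small in-memory model. This is only an illustration: the class and method names are hypothetical, it is not Neutron's actual schema, and unlike a real deployment it does not reserve a gateway address in the subnet.

```python
import ipaddress
import uuid
from dataclasses import dataclass, field


@dataclass
class Subnet:
    cidr: str
    allocated: set = field(default_factory=set)

    def allocate_ip(self):
        """Hand out the first free host address in the block."""
        for host in ipaddress.ip_network(self.cidr).hosts():
            if str(host) not in self.allocated:
                self.allocated.add(str(host))
                return str(host)
        raise RuntimeError("subnet exhausted")


@dataclass
class Port:
    network_id: str
    mac_address: str
    ip_address: str


@dataclass
class Network:
    name: str
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    subnets: list = field(default_factory=list)
    ports: list = field(default_factory=list)

    def create_port(self, mac_address):
        """Create a port that takes its IP from the network's first subnet."""
        ip = self.subnets[0].allocate_ip()
        port = Port(self.id, mac_address, ip)
        self.ports.append(port)
        return port


if __name__ == "__main__":
    net = Network("demo-net")
    net.subnets.append(Subnet("10.0.0.0/29"))
    print(net.create_port("fa:16:3e:00:00:01"))
```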

Neutron networking features

Neutron networks enable core networking and the potential for much more once the appropriate extension and plugin are activated. Extensions enhance plugins to provide additional network functionality. Due to its pluggable architecture, Neutron can be extended with third-party open-source or proprietary products, for example, an SDN OpenDaylight controller for advanced centralized functionality.

While Neutron does offer an API for network interaction, it does not provide an API to manage the network. Integrating an SDN controller with Neutron enables a centralized viewpoint and management entity for the entire network infrastructure, not just individual pieces.

Some vendor plugins complement Neutron, while others completely replace it. Advancements have been made to Neutron in an attempt to make it more “production-ready,” but some of these features are still at the experimental stage. There are bugs in all platforms, but generally, early-release features should be kept in nonproduction environments.

Virtual switches, routing, and advanced services

Virtual switches are software switches that connect VM instances at Layer 2. Any communication outside that boundary requires a Layer 3 router, either physical or virtual. Neutron has built-in support for Linux Bridges and Open vSwitch virtual switches. Overlay networking, the foundation for multi-tenancy for cloud computing, is supported in both.

Layer 3 routing permits external connectivity and connectivity between VMs in different subnets. Routing is enabled through IP forwarding rules, IPtables, and network namespaces.

IPtables provide ingress/egress filtering throughout different parts of the network (for example, perimeter edge or local compute ), namespaces provide network stack isolation, and IP forwarding rules provide forwarding. Firewalling and security services are based on Security Groups or FWaaS (FireWall-as-a-Service).

They can be used in conjunction for better defense in depth. Both operate with IPtables but differ in network placement.

Security group IPtable rules are configured locally on the ports of the compute node hosting the instance. Implementation is close to the actual workload, offering finer-grained filtering. Firewall IPtable rules sit at the network’s edge on Neutron routers (namespaces), filtering perimeter traffic.
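Security group semantics are allow-list based: traffic is dropped unless some rule permits it. The sketch below models that decision in plain Python. It is a simplification under stated assumptions; the `Rule` fields and `is_allowed` helper are hypothetical, and a real deployment enforces this with IPtables rules rather than application code.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    direction: str    # "ingress" or "egress"
    protocol: str     # "tcp", "udp", or "icmp"
    port_min: int
    port_max: int
    remote_cidr: str  # peer prefix the rule applies to


def is_allowed(rules, direction, protocol, port, remote_ip):
    """Allow the packet only if some rule matches; otherwise drop it."""
    addr = ipaddress.ip_address(remote_ip)
    for rule in rules:
        if (rule.direction == direction
                and rule.protocol == protocol
                and rule.port_min <= port <= rule.port_max
                and addr in ipaddress.ip_network(rule.remote_cidr)):
            return True
    return False


if __name__ == "__main__":
    ssh_only = [Rule("ingress", "tcp", 22, 22, "192.0.2.0/24")]
    print(is_allowed(ssh_only, "ingress", "tcp", 22, "192.0.2.10"))
    print(is_allowed(ssh_only, "ingress", "tcp", 80, "192.0.2.10"))
```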

Load balancing enables requests to be distributed to multiple instances. Dispersing load to numerous hosts offers advantages similar to those of the traditional world. The plugin is based on open-source HAProxy. Finally, VPNs allow operators to extend the network securely with IPSec-based VPN tunnels.

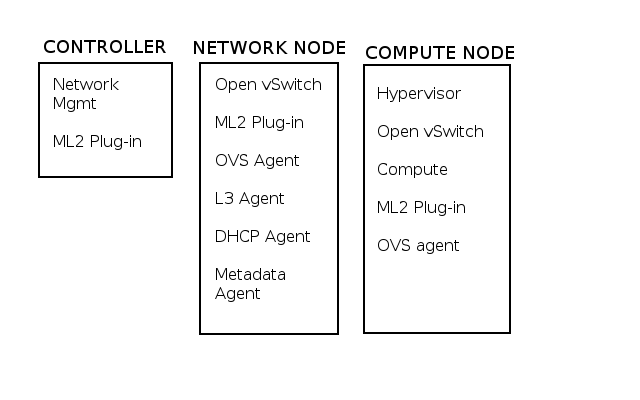

Virtual network preparation

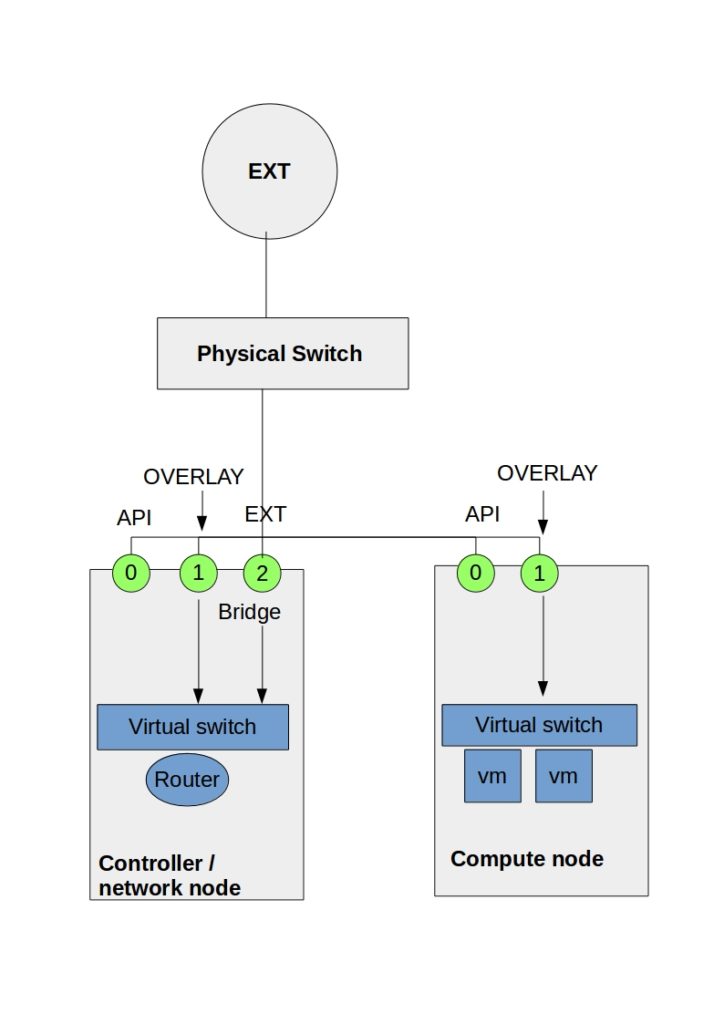

The diagram below displays the initial configuration and physical interface assignments for a standard neutron deployment. The reference model consists of a controller, network, and compute nodes. The compute nodes are restricted to provide compute resources, while the controller/network node may be combined or separated for all other services.

Separating these services from the compute nodes allows compute services to be scaled horizontally. It’s common to see the controller and networking node operating on a single host.

The number and type of interfaces depend on how comfortable you are with combining control and data traffic. Networking can function with just one interface, but splitting the different kinds of network traffic across several separate interfaces is good practice.

OpenStack networking uses four types of traffic: Management, API, External, and Guest. If you are going to separate anything, it’s recommended to physically separate management and API traffic from all other types of traffic. Separating the traffic onto different interfaces splits control from data traffic, which is a tick in the security auditors’ box.

In the preceding diagram, Eth0 is used for the management and API network, Eth1 for overlay traffic, and Eth2 for external and tenant networks (depending on the host). The tenant networks (Eth2) reside on the compute nodes, and the external network resides on the controller node (Eth2).

The controller Eth2 interface uses Neutron routers for external network traffic to instances. In certain Neutron Distributed Virtual Routing ( DVR ) scenarios, the external networks are at the compute nodes.

Plugins and drivers

Neutron networking operates with the concept of plugins and drivers. Neutron’s core plugin can be either ML2 or a vendor plugin. Before ML2, Neutron was limited to a single core plugin at any given time. The ML2 plugin introduces the concept of type and mechanism drivers.

Type drivers represent type-specific network state and support the local, flat, vlan, gre, and vxlan network types. Mechanism drivers take information from the type driver and ensure it is implemented correctly.

There are agent-based, controller-based, and Top-of-Rack models of the mechanism driver. The most popular are the L2 population, Open vSwitch, and Linux bridge. In addition, the mechanism driver arena is a popular space for vendors’ products.
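The split between type and mechanism drivers can be sketched as follows. This is a toy model, not the ML2 driver API: the class names, method names, and VNI range are all hypothetical. It only shows the division of labor, where the type driver owns the segment allocation and each mechanism driver is asked to realize it.

```python
class VxlanTypeDriver:
    """Hypothetical type driver: hands out segmentation IDs from a range."""

    def __init__(self, vni_min, vni_max):
        self._free = list(range(vni_min, vni_max + 1))

    def allocate_segment(self):
        if not self._free:
            raise RuntimeError("no free segmentation IDs")
        return {"network_type": "vxlan", "segmentation_id": self._free.pop(0)}


class RecordingMechanismDriver:
    """Hypothetical mechanism driver: records what it was asked to implement."""

    def __init__(self, name):
        self.name = name
        self.applied = []

    def create_network(self, network_name, segment):
        # A real driver would program a switch or notify an agent here.
        self.applied.append((network_name, segment["segmentation_id"]))


def create_network(name, type_driver, mechanism_drivers):
    """Allocate a segment once, then pass it to every mechanism driver."""
    segment = type_driver.allocate_segment()
    for driver in mechanism_drivers:
        driver.create_network(name, segment)
    return segment


if __name__ == "__main__":
    drivers = [RecordingMechanismDriver("openvswitch"),
               RecordingMechanismDriver("l2population")]
    print(create_network("net-a", VxlanTypeDriver(1000, 1999), drivers))
```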

Linux Namespaces

The majority of environments require some multi-tenancy. Cloud environments would be straightforward if built for only one customer or department. In reality, this is never the case. Multi-tenancy within Neutron is based on Linux namespaces. A namespace offers a completely isolated network stack to do what you want. Namespaces provide a logical copy of the network stack, supporting overlapping IP assignments.

A lot of Neutron networking is made possible with the use of namespaces and the ability to connect them.

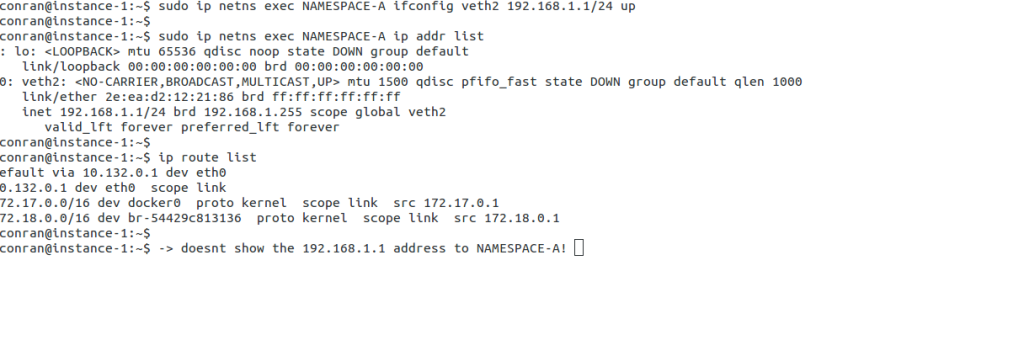

We have a qdhcp namespace, qrouter namespace, qlbaas namespace, and additional namespaces for DVR functionality. Namespaces are present on nodes running the respective agents. Displaying the routing tables for NAMESPACE-A and the global namespace shows different routes in each, illustrating network stack isolation.
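To compare routing tables yourself, the commands can be assembled as in the sketch below. It only builds the argument lists and does not run them, since `ip netns exec` needs root on a node that hosts the namespace. The helper names are hypothetical and the resource ID is a placeholder.

```python
def namespace_name(kind, resource_id):
    """Build a namespace name such as qrouter-<router id> or qdhcp-<network id>."""
    prefixes = {"router": "qrouter-", "dhcp": "qdhcp-"}
    return prefixes[kind] + resource_id


def route_command(namespace=None):
    """Return the argv that lists routes, globally or inside a namespace."""
    base = ["ip", "route", "show"]
    if namespace is None:
        return base
    return ["ip", "netns", "exec", namespace] + base


if __name__ == "__main__":
    ns = namespace_name("router", "ROUTER-UUID")  # placeholder ID
    print(" ".join(route_command()))    # global namespace
    print(" ".join(route_command(ns)))  # inside the router namespace
```

On a suitable host, each list could be passed to `subprocess.run(cmd, check=True)`; the two outputs would normally differ, which is the isolation the text describes.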

OpenStack Network Types: Virtual network infrastructure

Local, Flat, VLAN, VXLAN, and GRE networks

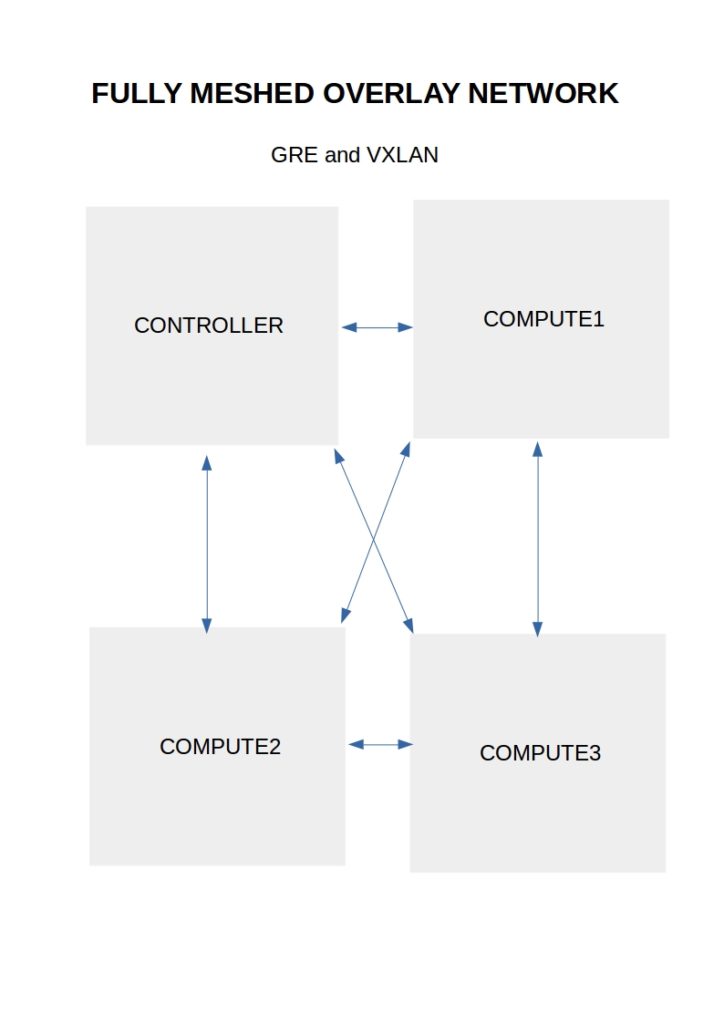

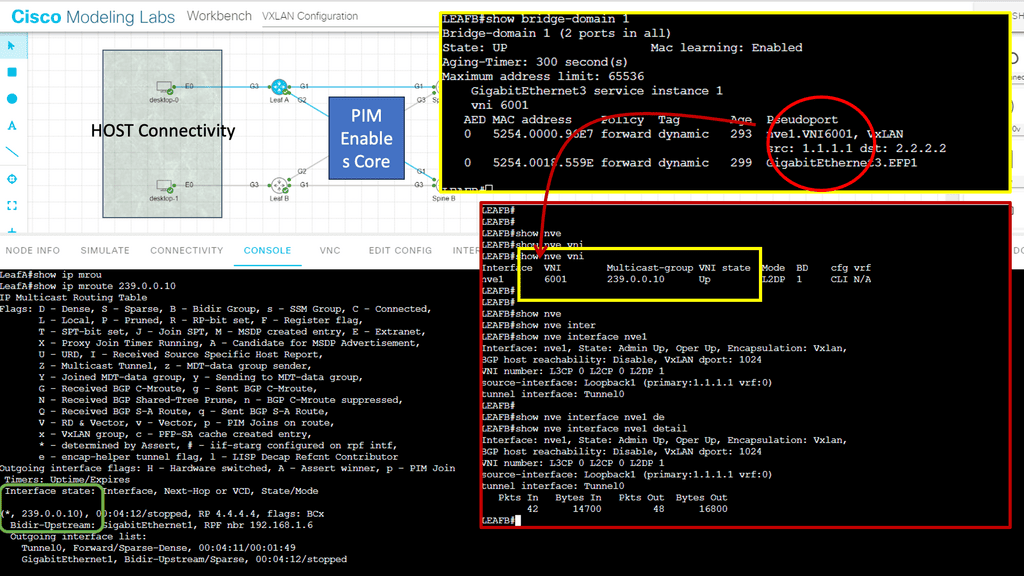

Neutron networking supports Local, Flat, VLAN, VXLAN, and GRE networks. Local networks are isolated networks. Flat networks do not incorporate any VLAN tagging. VLAN networks, on the other hand, use standard 802.1Q tagging (IEEE 802.1Q) to segregate traffic. VXLAN networks encapsulate Layer 2 traffic over IP using VXLAN tunnel endpoints (VTEPs) and a VXLAN network identifier (VNI).

GRE is another type of Layer 2 over Layer 3 overlay. Both GRE and VXLAN accomplish the same goal of emulating Layer 2 over pure IP but use different methods: VXLAN traffic uses UDP, and GRE traffic uses IP protocol 47.
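To make the VXLAN side concrete, here is a minimal sketch that packs and unpacks the 8-byte VXLAN header carrying the 24-bit VNI, following the layout in RFC 7348. The function names are illustrative, and the code builds only the header, not the outer UDP/IP packet.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port for VXLAN
GRE_IP_PROTOCOL = 47   # GRE rides directly on IP, with no UDP header
VNI_VALID_FLAG = 0x08  # "I" flag: the VNI field is valid


def pack_vxlan_header(vni):
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # First word: flags in the top byte, then 24 reserved bits.
    # Second word: VNI in the top 24 bits, then 8 reserved bits.
    return struct.pack("!II", VNI_VALID_FLAG << 24, vni << 8)


def unpack_vni(header):
    """Recover the VNI from an 8-byte VXLAN header."""
    flags_word, vni_word = struct.unpack("!II", header)
    if not (flags_word >> 24) & VNI_VALID_FLAG:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


if __name__ == "__main__":
    header = pack_vxlan_header(5000)
    print(header.hex(), unpack_vni(header))
```

The 24-bit field is what allows far more segments than the 12-bit VLAN ID.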

Layer 2 data is transported from an end host, encapsulated over IP to the egress switch that sends the data to the destination host. With an underlay and overlay approach, you have two layers to debug when something goes wrong.

OpenStack Network Types: Virtual Network Switches

The first step in building a virtual network is to build the virtual switching infrastructure. This acts as the base for any network design, whether virtual or physical. Virtual switching provides connectivity to and from the virtual instances, laying the foundation for advanced networking services. The first piece of the puzzle is the virtual network switches.

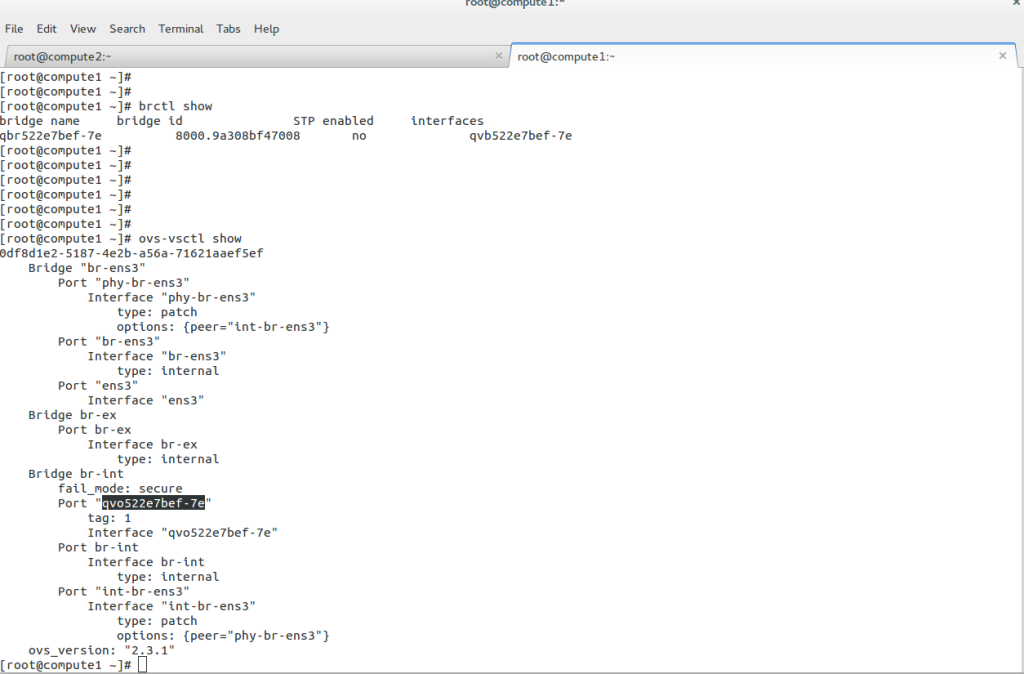

Neutron networking includes built-in support for the Linux Bridge and Open vSwitch. Both are virtual switches but operate with some significant differences. The Linux bridge uses VLANs to tag traffic, while the Open vSwitch uses flow rules to manipulate traffic before forwarding.

Instead of mapping the local VLAN ID to a physical VLAN ID, the local ID is added or stripped from the Ethernet header by flow rules.

The “brctl show” command displays the Linux bridge. The bridge ID is automatically generated based on the NIC, and the bridge name is based on the UUID of the corresponding Neutron network. The “ovs-vsctl show” command displays the Open vSwitch configuration. It has a slightly more complicated setup, with the br-int (integration bridge) acting as the central point of connections.

Neutron uses the bridge, 8021q, and vxlan kernel modules to connect instances with the Linux bridge. Bridge and Open vSwitch kernel modules are used for the Open vSwitch. The Open vSwitch also uses some userspace utilities to manage the OVS database. Most networking elements are connected with virtual cables, known as veth cables. “What goes in one end must come out the other” best describes a virtual cable.

Veth cables connect many elements, including namespace to namespace, Open vSwitch to Linux bridge, and Linux bridge to Linux bridge. The Open vSwitch also uses special patch ports to connect Open vSwitch bridges to each other. The Linux bridge doesn’t use patch ports.

The Linux Bridge and Open vSwitch can complement each other. For example, when Neutron Security Groups are enabled, instances connect to a Linux bridge, which in turn connects to the Open vSwitch integration bridge with a veth cable. This workaround exists because IPtable rules (needed by security groups) cannot be placed on tap interfaces connected to Open vSwitch bridge ports.

Neutron network and network address translation (NAT)

Neutron employs Network Address Translation (NAT) to provide inbound and outbound translations. The concept of NAT stays the same in the virtual world: it modifies either the source or the destination address of an IP packet. Neutron employs two types of translation: one-to-one and many-to-one.

One-to-one translations utilize floating IP addresses, while many-to-one is a Port Address Translation (PAT) style design where floating IPs are not used.

Floating IP addresses are externally routable IP addresses that map an external IP address directly to an instance. The term floating comes from the fact that they can be moved on the fly between instances. They are associated with a Neutron port that is logically mapped to an instance. Ports can have multiple IP addresses assigned.

- SNAT refers to source NAT, which changes the source IP address to appear as the externally connected interface.

- Floating IPs use destination NAT (DNAT), which changes the source or destination IP address depending on traffic direction.

The external network connected to the virtual router is the network from which floating IPs are derived. The default behavior is to source NAT traffic from instances that lack a floating IP. Instances that use source NAT cannot accept traffic initiated externally. If you want traffic initiated externally to reach an instance, you must use a one-to-one mapping with a floating IP.
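The two translation styles can be sketched as a pair of lookups. This is a simplified model under stated assumptions: the function names and addresses are illustrative, and connection tracking for return traffic on SNAT flows is deliberately ignored.

```python
def translate_outbound(src_ip, floating_ips, router_gateway_ip):
    """Pick the source address an instance's traffic leaves the router with."""
    # One-to-one: an instance with a floating IP is rewritten to that address.
    if src_ip in floating_ips:
        return floating_ips[src_ip]
    # Many-to-one: everything else shares the router's external address.
    return router_gateway_ip


def translate_inbound(dst_ip, floating_ips):
    """Map an external destination back to a fixed IP, or None if unreachable."""
    reverse = {fip: fixed for fixed, fip in floating_ips.items()}
    # New connections to the shared SNAT address match nothing and are dropped.
    return reverse.get(dst_ip)


if __name__ == "__main__":
    fips = {"10.0.0.5": "203.0.113.20"}  # fixed IP -> floating IP
    print(translate_outbound("10.0.0.5", fips, "203.0.113.2"))
    print(translate_outbound("10.0.0.6", fips, "203.0.113.2"))
    print(translate_inbound("203.0.113.20", fips))
```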

Neutron High Availability

Standalone router

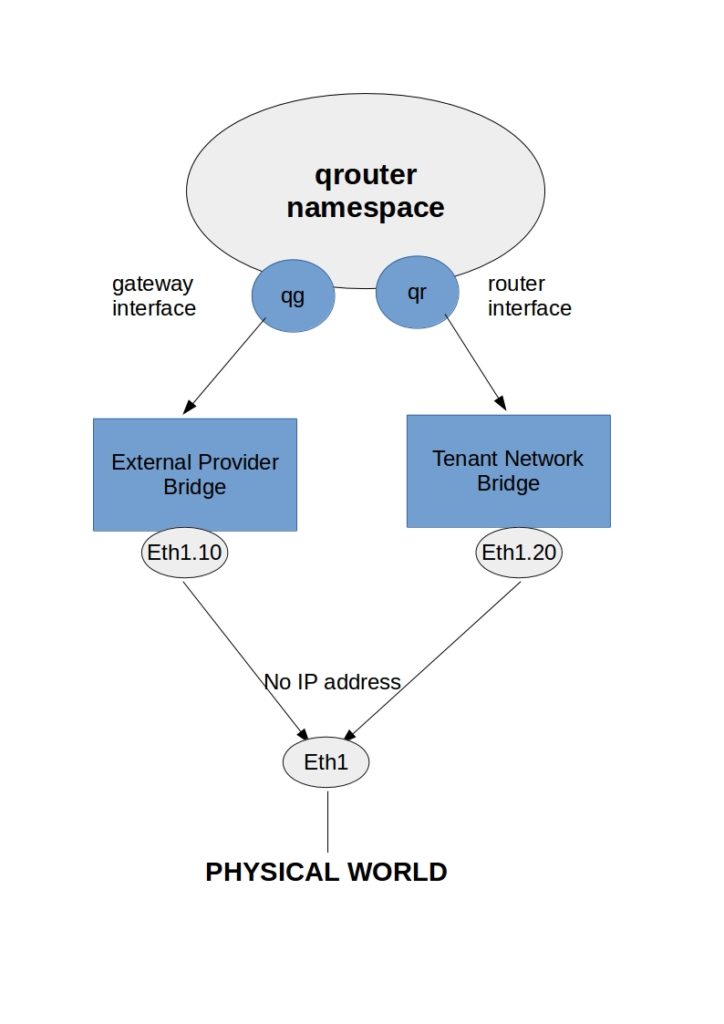

The simplest type of router to create in Neutron is a standalone router. As the name suggests, it lacks high availability. Routers created with Neutron exist as namespaces that reside on the nodes running the L3 agent. It is the role of the Layer 3 agent to create the network namespace representing the routing function.

A virtual router is essentially a network namespace called the qrouter namespace. The qrouter namespace uses routing tables to forward traffic and IPtable rules to dictate how traffic is translated.

A virtual router can connect to two different types of networks: a single external provider network and one or more tenant networks. The interface to an external provider network bridge is a “qg” interface, and the interface to a tenant network bridge is a “qr” interface. The tenant network traffic is routed from the “qr” interface to the “qg” interface for onward external forwarding.

Virtual router redundancy protocol

VRRP is pretty simple and offers a highly available, redundant default gateway or next hop for a route. The namespaces (routers) are spread across multiple hosts running the Layer 3 agent. Multiple router namespaces are created and distributed among the Layer 3 agents. VRRP operates with a Linux keepalived instance. Each router namespace runs a keepalived service to detect the other’s availability.

The keepalived service is a Linux tool that uses VRRP internally. It is the role of the L3 agent to start the keepalived instance in every namespace. A dedicated HA network allows the routers to talk to each other. There are split-brain and MAC flapping issues; as far as I understand, it’s still an experimental feature.

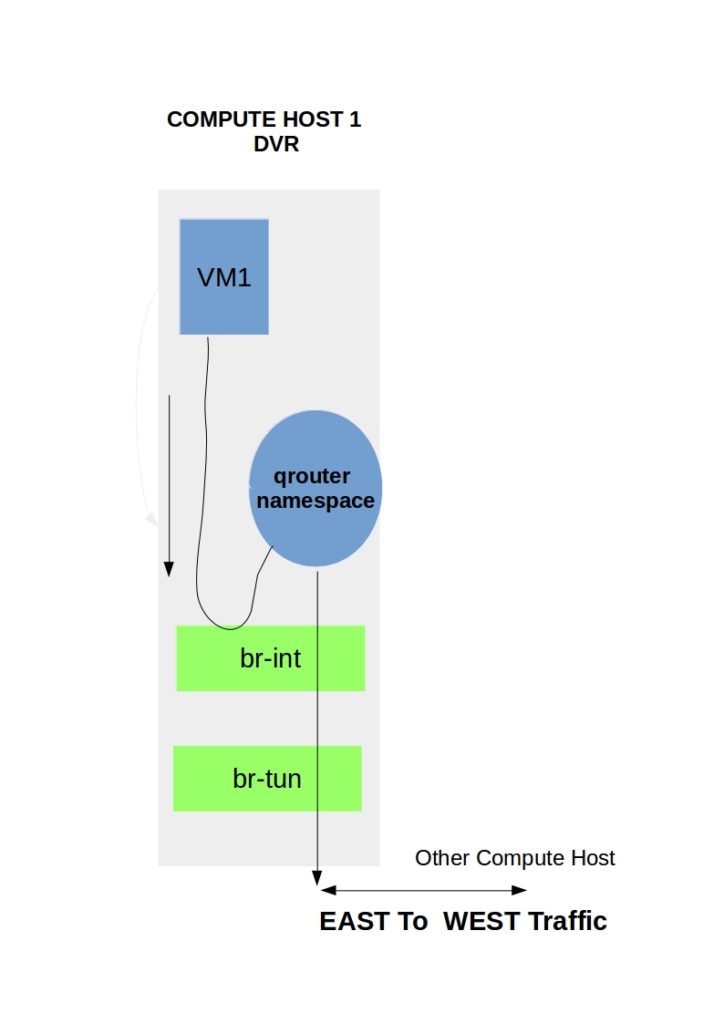

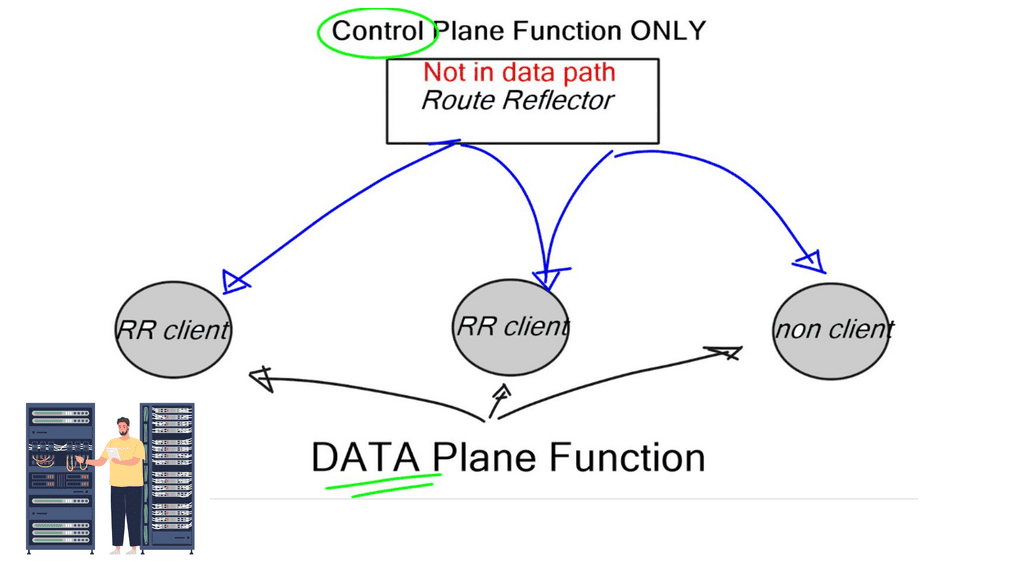

Distributed virtual routing

DVR eliminates the bottleneck caused by the Layer 3 agent and distributes most of the routing function across multiple compute nodes. This helps isolate failure domains and increases the high availability of the virtual network infrastructure. With DVR, the routing function is not centralized anymore but decentralized to the compute nodes. The compute nodes themselves become one big router.

DVR routers are spawned on the compute nodes, and all the routing gets done closer to the workload. Distributing routing to the compute nodes is much better than having a central element perform the routing function.

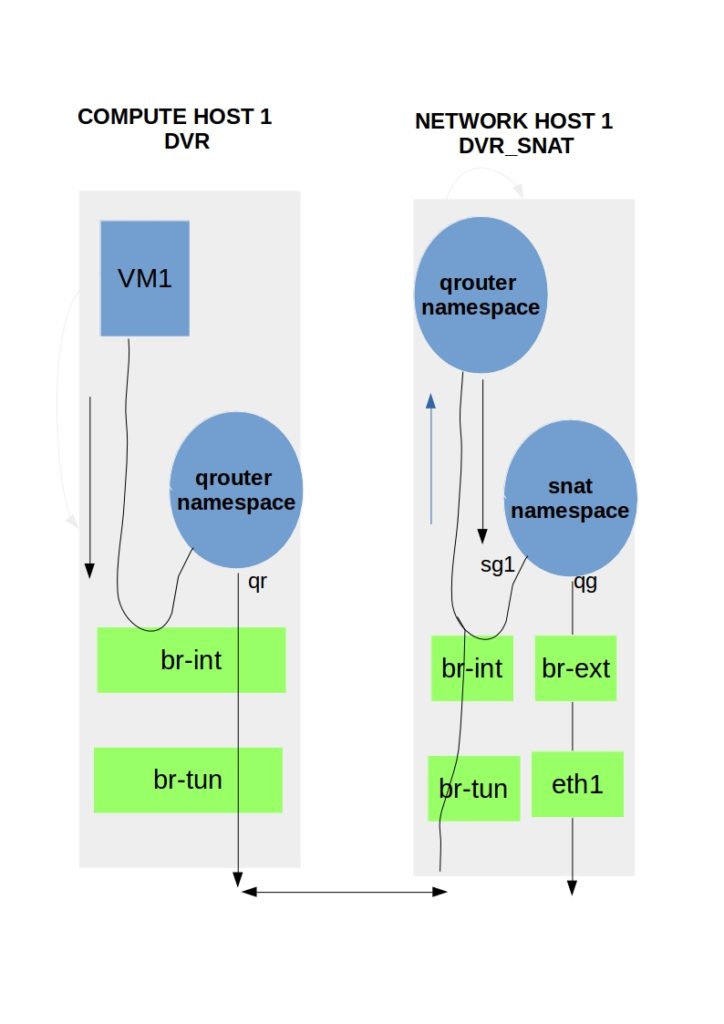

There are two agent modes: dvr and dvr_snat. Mode dvr_snat handles north-to-south SNAT traffic. Mode dvr handles north-to-south DNAT traffic (floating IP) and all east-to-west traffic.

Key Points:

- East-West traffic ( server to server ) previously went through the centralized network node. DVR pushes this down to the compute nodes hosting the VMs.

- North-South traffic with floating IPs ( DNAT ) is routed directly by the compute nodes hosting the VMs.

- North-South traffic without floating IP ( SNAT ) is routed through a central network node. Distributing the SNAT functions to the local compute nodes can be complicated.

- Compute nodes that host instances with floating IPs ( DNAT ) require direct external connectivity.
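The key points above boil down to a small decision table, sketched here as a function. The function name and return strings are illustrative labels only, not Neutron identifiers.

```python
def routing_location(direction, has_floating_ip=False):
    """Say which node routes a flow when DVR is enabled."""
    if direction == "east-west":
        # Server-to-server traffic stays on the compute nodes.
        return "compute node (dvr)"
    if direction == "north-south":
        if has_floating_ip:
            # Floating IP traffic (DNAT) is routed where the VM lives.
            return "compute node (dvr)"
        # Traffic without a floating IP still uses centralized SNAT.
        return "central node (dvr_snat)"
    raise ValueError(f"unknown direction: {direction}")


if __name__ == "__main__":
    print(routing_location("east-west"))
    print(routing_location("north-south", has_floating_ip=True))
    print(routing_location("north-south", has_floating_ip=False))
```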

East-west traffic between instances

East-to-west traffic (traditional server-to-server) refers to local communication, such as traffic between a frontend and the backend application tier. DVR enables each compute node to host a copy of the same router. The router namespace created on each compute node has the same interface, MAC, and IP settings.

The qr interfaces within the namespaces on each compute node share the same IP and MAC address. But how is this possible? One would assume that distributing ports this way would cause IP clashes and MAC flapping. Neutron cleverly uses routing tables and Open vSwitch flow rules to make this normally forbidden sharing work.

Neutron allocates each compute node a unique MAC address, which is used whenever traffic leaves the node.

Once traffic leaves the virtual router, Open vSwitch rules rewrite the source MAC address with the unique MAC address allocated to the source node. All the manipulation is done before and after traffic leaves or enters, so the VM is unaware of any rewriting and operates as usual.
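The rewriting step can be pictured with the toy model below. It is only a sketch of the idea described above: frames are plain dictionaries, the function names and MAC addresses are made up for illustration, and the real work is done by Open vSwitch flow rules rather than Python.

```python
def rewrite_on_egress(frame, router_mac, host_mac):
    """Swap the shared router MAC for this host's unique MAC as a frame leaves."""
    if frame["src_mac"] == router_mac:
        return {**frame, "src_mac": host_mac}
    return frame


def rewrite_on_ingress(frame, router_mac, known_host_macs):
    """Restore the shared router MAC before the frame reaches the instance."""
    if frame["src_mac"] in known_host_macs:
        return {**frame, "src_mac": router_mac}
    return frame


if __name__ == "__main__":
    router_mac = "fa:16:3e:aa:bb:cc"  # same on every compute node
    host_a_mac = "fa:16:3f:00:00:01"  # unique to the source node
    frame = {"src_mac": router_mac, "dst_mac": "fa:16:3e:11:22:33"}
    on_wire = rewrite_on_egress(frame, router_mac, host_a_mac)
    delivered = rewrite_on_ingress(on_wire, router_mac, {host_a_mac})
    print(on_wire["src_mac"], delivered["src_mac"])
```

Because the physical network only ever sees the per-host MAC, the shared router MAC never appears on more than one switch port.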

Centralized SNAT

Source NAT (SNAT) is used when instances do not have a floating IP address. Neutron decided not to distribute SNAT to the compute nodes and kept it central, similar to the legacy model. Why did they decide to do this when DVR distributes floating IP for north-south traffic?

Decentralizing SNAT would require an address from the external network on every node providing the SNAT service. This would consume a lot of addresses on your external network.

The Layer 3 agent configured in dvr_snat mode provides the centralized SNAT function. Two namespaces are created for the same router: a regular qrouter namespace and an SNAT namespace. The SNAT and qrouter namespaces are created on the centralized nodes, either the controller or the network node.

The qrouter namespaces on the controller and compute nodes are identical. However, even though the router is attached to an external network, there are no qg interfaces. The qg interfaces are now inside the SNAT namespace. There is also now a new interface called the sg. This is used as an extra hop.

Packet Flow

- A VM without a floating IP sends a packet to an external destination.

- Traffic arrives at the regular qrouter namespace on the compute node hosting the VM and gets redirected to the SNAT namespace on the central node.

- Redirecting traffic from the qrouter namespace to the SNAT namespace is carried out by clever tricks with source routing and multiple routing tables.
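The combination of source routing and multiple routing tables can be sketched like this: a rule selects a table based on the packet's source, and that table then supplies the next hop. This is a simplified model with hypothetical table names and addresses. It stops at the first matching rule, whereas Linux policy routing walks rules by priority and falls through when a table has no matching route.

```python
import ipaddress


def lookup(src_ip, dst_ip, rules, tables):
    """Pick a routing table by source address, then longest-prefix match in it."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    table_name = "main"
    for src_prefix, name in rules:  # first matching rule wins
        if src in ipaddress.ip_network(src_prefix):
            table_name = name
            break
    best = None
    for prefix, next_hop in tables[table_name]:
        net = ipaddress.ip_network(prefix)
        if dst in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return best[1] if best else None


if __name__ == "__main__":
    rules = [("10.0.1.0/24", "snat-redirect")]
    tables = {
        "main": [("10.0.1.0/24", "local subnet")],
        "snat-redirect": [("0.0.0.0/0", "SNAT namespace on central node")],
    }
    print(lookup("10.0.1.5", "203.0.113.10", rules, tables))
```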

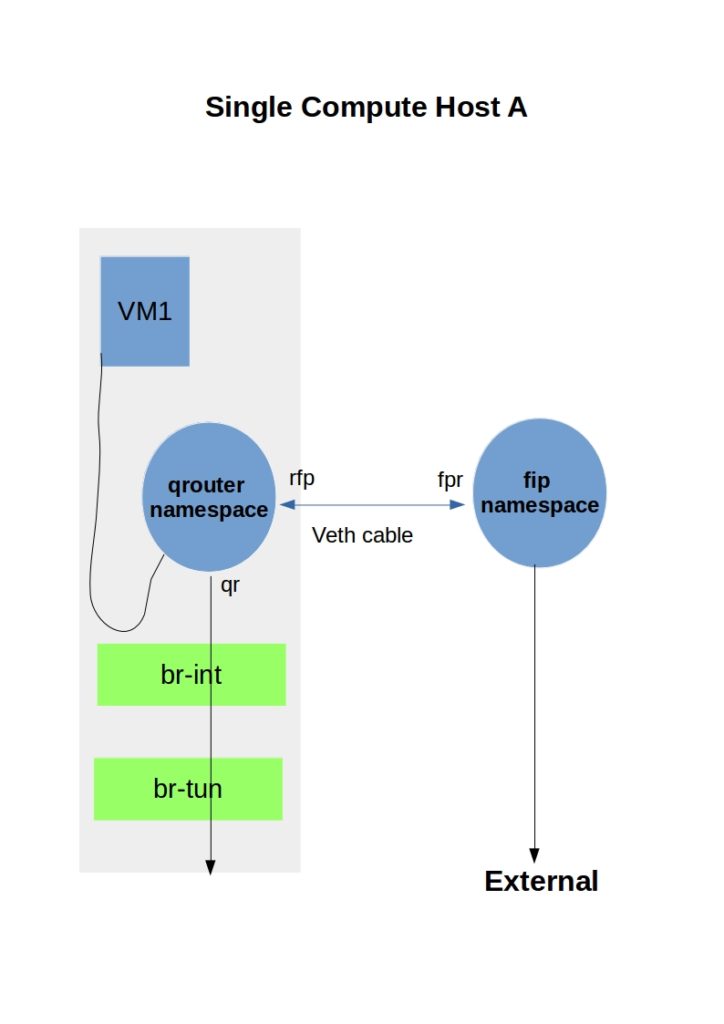

North-to-south traffic with Neutron floating IP

In the legacy world, floating IPs are configured as /32 prefixes on the router’s external device. The one-to-one mapping between the VM IP address and the floating IP address is used so external devices can initiate traffic to the internal instance.

North-to-south traffic with floating IP is now handled with another namespace called the fip namespace. The new fip namespace is created by the Layer 3 agent and represents the external network to which the fip belongs.

Every router on the compute node is hooked into the new fip namespace with a veth pair. Veth pairs are commonly used to connect namespaces. One end of the pair is in the router namespace (rfp), and the other end belongs to the fip namespace (fpr).

Whenever a Layer 3 agent creates a new floating IP, a new rule specific to that IP is added. Neutron adds the fixed IP of the VM to the rules table along with an additional new routing table.

Packet Flow

- When a VM with a floating IP sends traffic to an external destination, it arrives at the qrouter namespace.

- The IP rules are consulted, showing a default route for that source to the next hop. IPtables rules kick in, and the source IP is translated to the floating IP.

- Traffic is forwarded out the rfp interface and arrives at the fpr interface at the fip namespace.

- The fip namespace uses a default route to forward traffic out the ‘fg’ device to its external destination.

Traffic in the reverse direction requires proxy ARP, so the fip namespace answers ARP requests for the floating IP, which is configured in the router’s qrouter namespace (not the fip namespace). In other words, proxy ARP lets one host (the fip namespace) answer ARP requests intended for another host (the qrouter namespace).
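The reverse direction can be sketched the same way. The example below is an illustration under stated assumptions: the MAC address, the mapping, and the function names are hypothetical, and the destination translation back to the fixed IP is assumed to happen in the qrouter namespace as the mirror image of the outbound source translation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


FG_MAC = "fa:16:3e:00:00:01"  # hypothetical MAC of the fg device

# Hypothetical mapping: floating IP -> fixed IP of the VM on this node.
FLOATING_TO_FIXED = {"203.0.113.20": "10.0.0.10"}


def proxy_arp_reply(target_ip):
    """MAC the fip namespace answers with, or None if it stays silent."""
    return FG_MAC if target_ip in FLOATING_TO_FIXED else None


def ingress(packet):
    """Return (translated packet, hops), or None if nobody claims the IP."""
    if proxy_arp_reply(packet.dst) is None:
        return None  # no ARP reply, so the frame never reaches this node
    hops = ["fg", "fip", "fpr", "rfp", "qrouter"]
    # Assumed destination NAT: floating IP becomes the VM's fixed IP.
    return replace(packet, dst=FLOATING_TO_FIXED[packet.dst]), hops


print(proxy_arp_reply("203.0.113.20"))
print(ingress(Packet(src="198.51.100.7", dst="203.0.113.20")))
```

The point of the sketch is that the ARP reply comes from the fip namespace even though the floating IP itself is not configured there.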

Closing Points on Neutron Networking

Neutron is built on a modular architecture that allows for easy integration and customization. At its core, Neutron consists of several components, including the Neutron server, plugins, agents, and a database. The Neutron server handles API requests and manages network states, while plugins and agents manage network configurations on physical devices. This modular design ensures that Neutron can be extended to support new networking technologies and adapt to evolving industry standards.

Neutron offers a wide array of features that empower users to build complex network topologies. Some of the key features include:

– **Network Segmentation**: Neutron supports VLAN, VXLAN, and GRE tunneling technologies, enabling efficient network segmentation and isolation.

– **Load Balancing**: With Neutron, users can deploy load balancers as a service to distribute incoming network traffic across multiple servers, enhancing application availability and reliability.

– **Security Groups**: Neutron’s security groups allow users to define network access control policies, providing an additional layer of security for cloud applications.

– **Floating IPs**: These map an externally reachable address one-to-one to an instance's internal IP, allowing instances to be accessed from external networks, which is crucial for public-facing applications.
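To make the security-group item above concrete, here is a minimal sketch of how allow-only ingress rules can be evaluated. It is not Neutron's implementation: the `IngressRule` type, the `is_allowed` helper, and the sample rules are hypothetical, and the model assumes that ingress traffic matching no rule is dropped.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str     # e.g. "tcp" or "udp"
    port_min: int     # first port in the allowed range
    port_max: int     # last port in the allowed range
    remote_cidr: str  # source prefix the rule admits


# Hypothetical group: HTTPS from anywhere, SSH only from one admin subnet.
WEB_GROUP = [
    IngressRule("tcp", 443, 443, "0.0.0.0/0"),
    IngressRule("tcp", 22, 22, "192.0.2.0/24"),
]


def is_allowed(rules, protocol, port, source_ip):
    """Allow the packet only if at least one rule matches it."""
    source = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.port_min <= port <= rule.port_max
        and source in ipaddress.ip_network(rule.remote_cidr)
        for rule in rules
    )


print(is_allowed(WEB_GROUP, "tcp", 443, "198.51.100.7"))  # True
print(is_allowed(WEB_GROUP, "tcp", 22, "198.51.100.7"))   # False
print(is_allowed(WEB_GROUP, "tcp", 22, "192.0.2.15"))     # True
```

Because the rules only ever allow traffic, tightening access means removing or narrowing a rule rather than adding a deny entry.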

Neutron is tightly integrated with other OpenStack services, making it an indispensable part of the OpenStack ecosystem. It works in tandem with Nova, the compute service, to ensure that network resources are allocated efficiently to virtual instances. It also operates alongside Cinder, the block storage service, which provides persistent storage. This integration ensures a cohesive cloud environment where networking, compute, and storage components work together.

Summary: Neutron Network

Neutron Network, a fundamental component of OpenStack, is pivotal in connecting virtual machines and providing networking services within a cloud infrastructure. In this blog post, we delved into the intricacies of the Neutron Network and explored its key features and benefits.

Understanding Neutron Network Architecture

Neutron Network operates with a modular architecture comprising various components such as agents, plugins, and drivers. These components work together to enable network virtualization, creating virtual networks, subnets, and routers. By understanding the architecture, users can leverage the full potential of the Neutron Network.

Network Virtualization with Neutron

One of the standout features of Neutron Network is its ability to provide network virtualization. By abstracting the underlying physical network infrastructure, Neutron empowers users to create isolated virtual networks tailored to their specific requirements. This flexibility allows for enhanced security, scalability, and agility within cloud environments.

Neutron Network Extensions

Neutron Network offers many extensions that cater to diverse networking needs. From load balancers and firewalls to virtual private networks (VPNs) and quality of service (QoS) policies, these extensions provide additional functionality and customization options. We explored some popular extensions and their use cases.

Neutron Network in Action: Use Cases

To truly comprehend the value of Neutron Network, it’s essential to explore real-world use cases where its capabilities shine. We looked at scenarios such as multi-tenant environments, hybrid cloud deployments, and network function virtualization (NFV). By examining these use cases, readers can envision the practical applications of Neutron Network.

Conclusion

Neutron Network is a vital networking component within OpenStack, enabling seamless connectivity and virtualization. With its modular architecture, extensive feature set, and wide range of use cases, Neutron Network empowers users to build robust and scalable cloud infrastructures. As cloud technologies evolve, Neutron Network ensures efficient and reliable networking within cloud environments.