GTM Load Balancer

In today's fast-paced digital world, websites and applications face the constant challenge of handling high traffic loads while maintaining optimal performance. This is where Global Traffic Manager (GTM) load balancer comes into play. In this blog post, we will explore the key benefits and functionalities of GTM load balancer, and how it can significantly enhance the performance and reliability of your online presence.

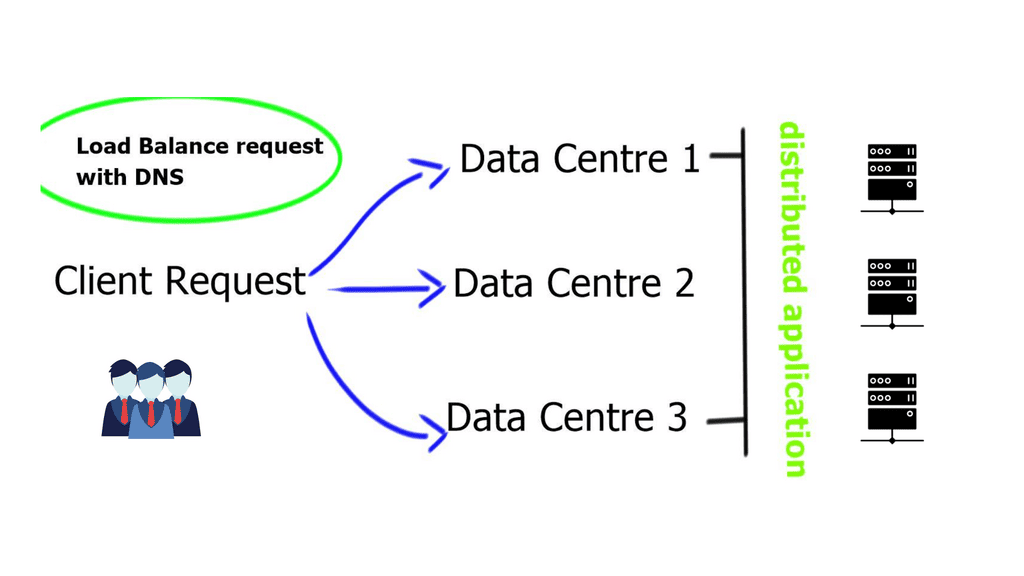

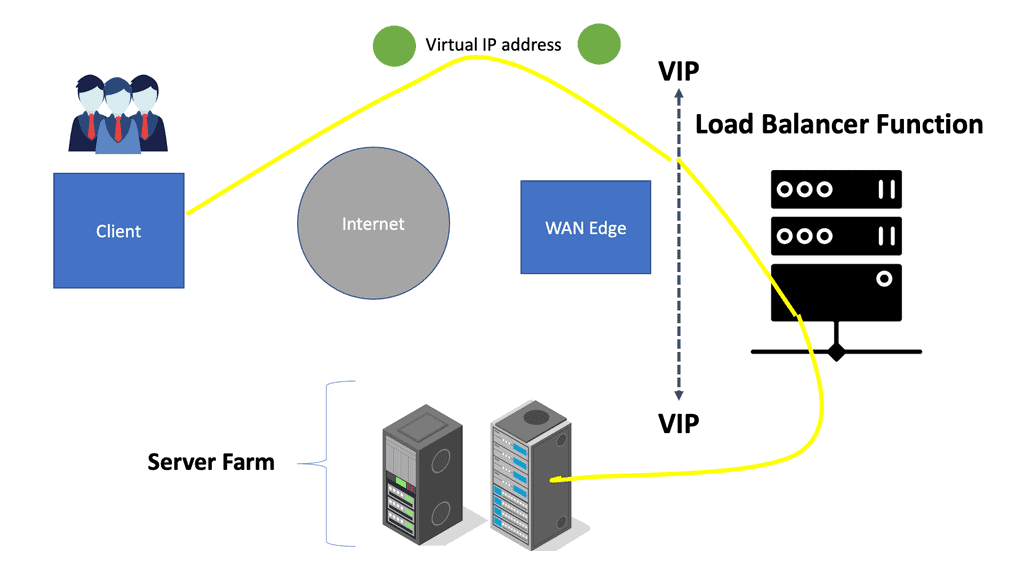

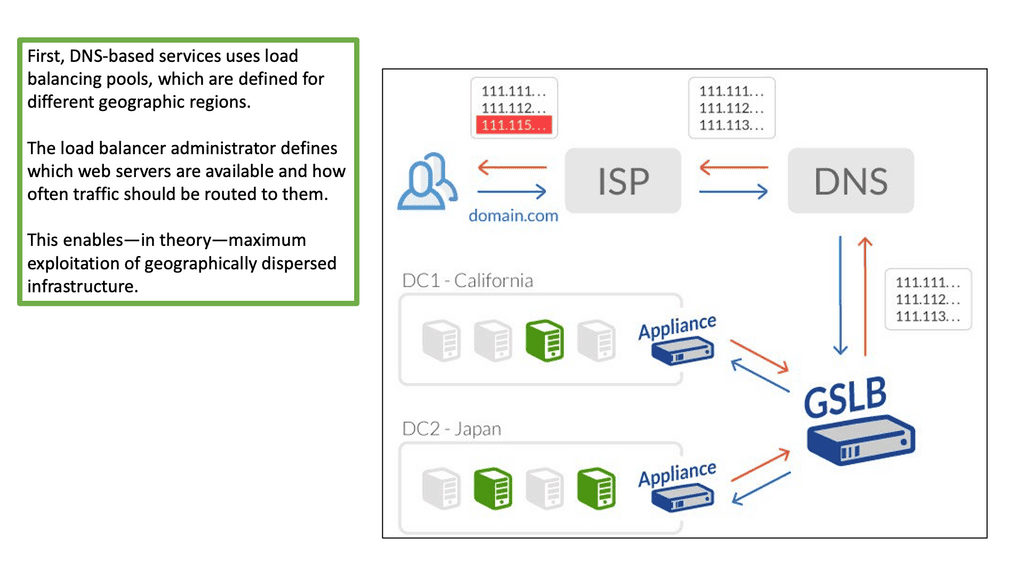

GTM Load Balancer, or Global Traffic Manager, is a sophisticated, global server load balancing solution designed to distribute incoming network traffic across multiple servers or data centers. It operates at the DNS level, intelligently directing users to the most appropriate server based on factors such as geographic location, server health, and network conditions. By effectively distributing traffic, GTM load balancer ensures that no single server becomes overwhelmed, leading to improved response times, reduced latency, and enhanced user experience.

GTM load balancer offers a range of powerful features that enable efficient load balancing and traffic management. These include:

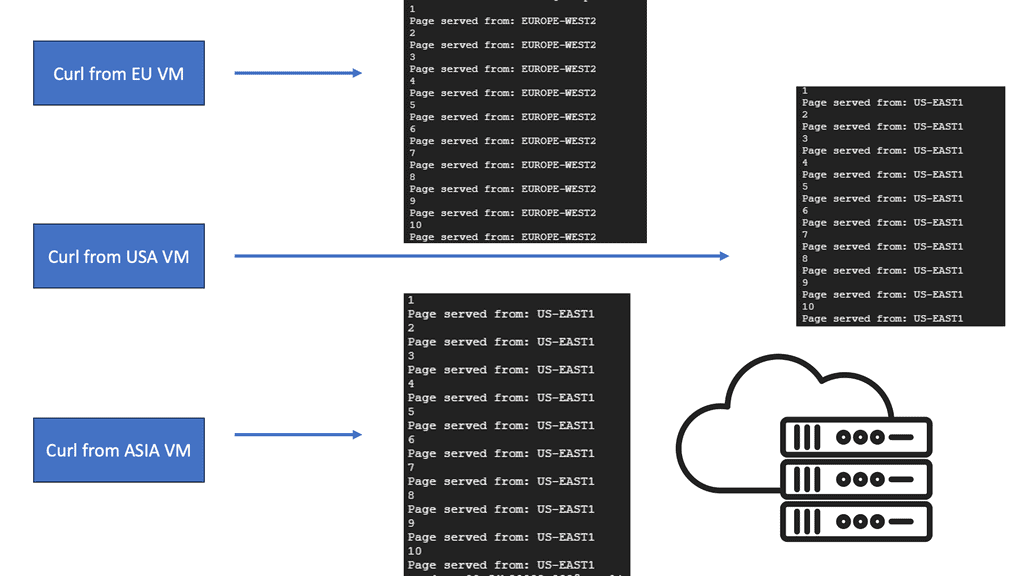

1. Geographic Load Balancing: By leveraging geolocation data, GTM load balancer directs users to the nearest or most optimal server based on their physical location, reducing latency and optimizing network performance.

2. Health Monitoring and Failover: GTM continuously monitors the health of servers and automatically redirects traffic away from servers experiencing issues or downtime. This ensures high availability and minimizes service disruptions.

3. Intelligent DNS Resolutions: GTM load balancer dynamically resolves DNS queries based on real-time performance and network conditions, ensuring that users are directed to the best available server at any given moment.

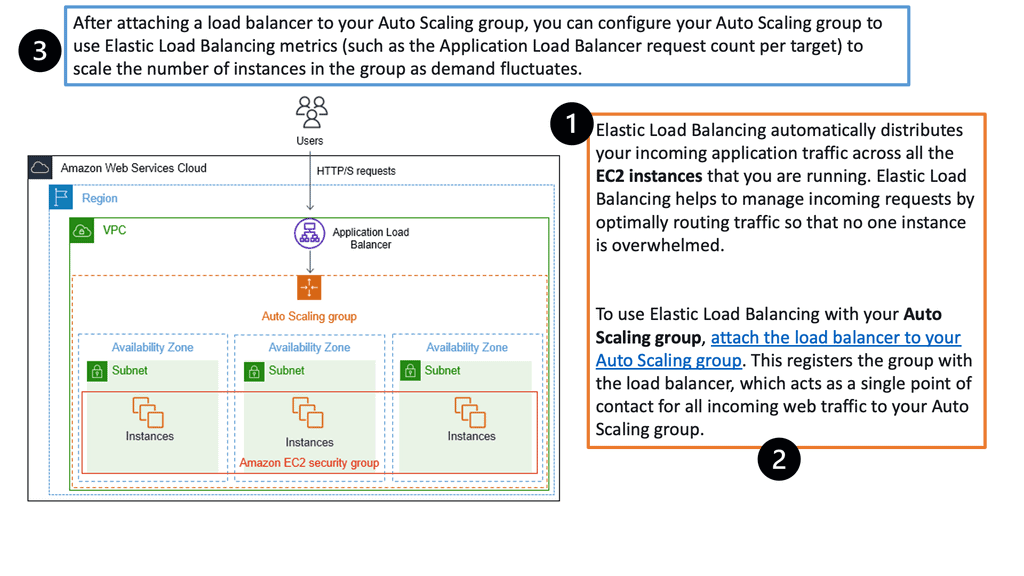

Scalability and Flexibility: One of the key advantages of GTM load balancer is its ability to scale and adapt to changing traffic patterns and business needs. Whether you are experiencing sudden spikes in traffic or expanding your global reach, GTM load balancer can seamlessly distribute the load across multiple servers or data centers. This scalability ensures that your website or application remains responsive and performs optimally, even during peak usage periods.

Integration with Existing Infrastructure: GTM load balancer is designed to integrate seamlessly with your existing infrastructure and networking environment. It can be easily deployed alongside other load balancing solutions, firewall systems, or content delivery networks (CDNs). This flexibility allows businesses to leverage their existing investments while harnessing the power and benefits of GTM load balancer.

In conclusion, GTM load balancer offers a robust and intelligent solution for achieving optimal performance and scalability in today's digital landscape. By effectively distributing traffic, monitoring server health, and adapting to changing conditions, GTM load balancer ensures that your website or application can handle high traffic loads without compromising on performance or user experience. Implementing GTM load balancer can be a game-changer for businesses seeking to enhance their online presence and stay ahead of the competition.

Matt Conran

Highlights: GTM Load Balancer

The Role of Load Balancing

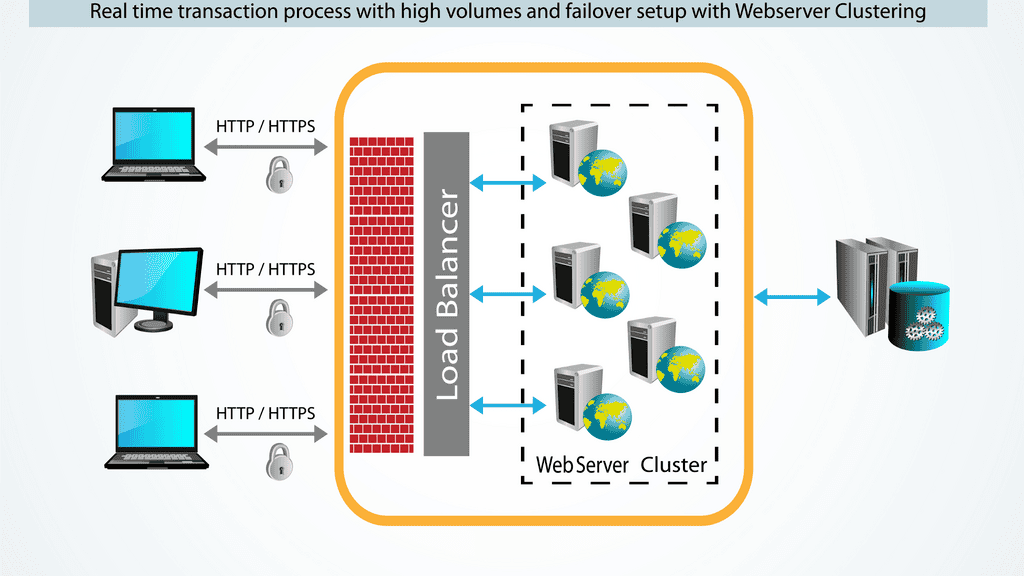

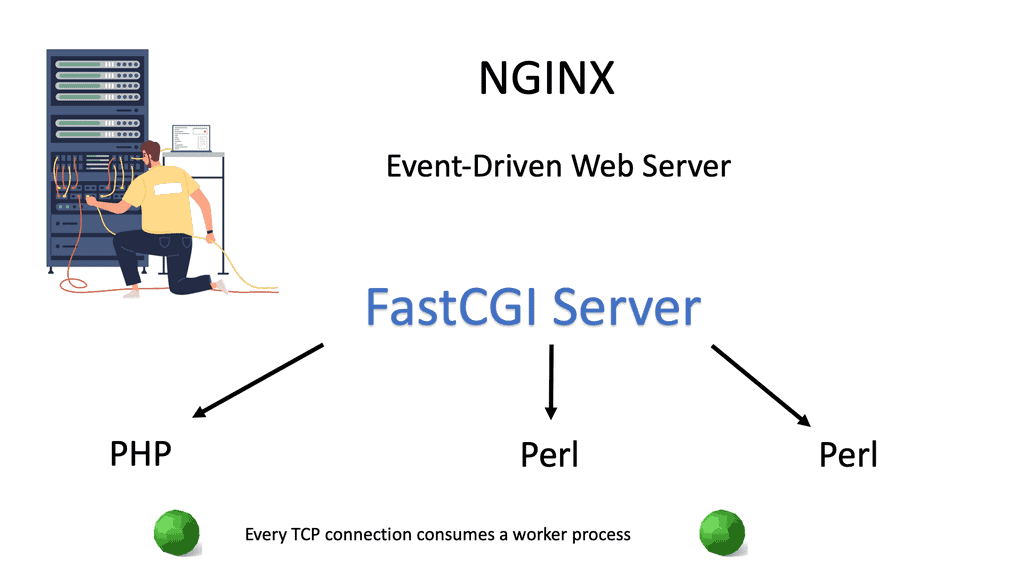

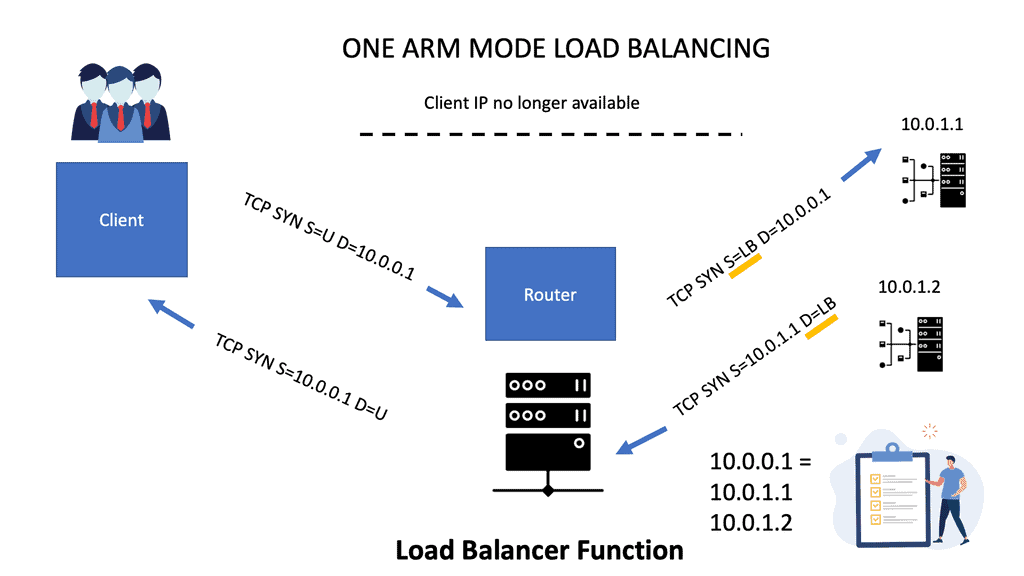

Load balancing involves spreading an application’s processing load over several different systems to improve overall performance in processing incoming requests. It splits the load that arrives into one server among several other devices, which can decrease the amount of processing done by the primary receiving server.

While splitting up different applications used to process a request among separate servers is usually the first step, there are several additional ways to increase your ability to split up and process loads—all for greater efficiency and performance. DNS load balancing failover, which we will discuss next, is the most straightforward way to load balance.

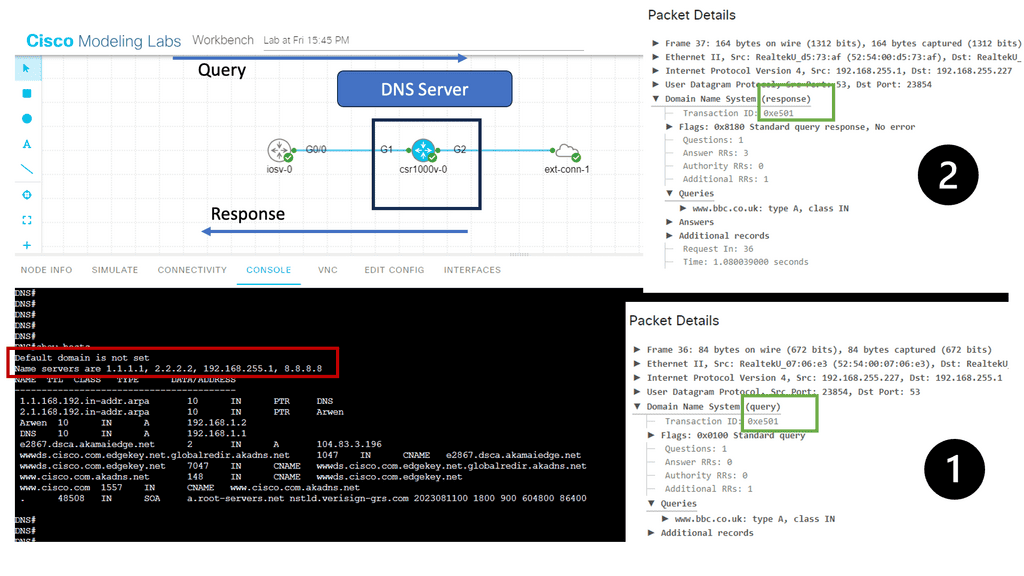

DNS Load Balancing

DNS load balancing is the simplest form of load balancing. However, it is also one of the most powerful tools available. Directing incoming traffic to a set of servers quickly solves many performance problems. In spite of its ease and quickness, DNS load balancing cannot handle all situations.

No single DNS server, or even a cluster of servers answering queries together, can handle every DNS query on the planet. The solution lies in caching. Your resolver keeps a list of previously resolved names in a local cache and answers from that cache whenever it can. As a result, it avoids walking the DNS tree again for a name it has already looked up. Furthermore, it reduces the number of queries sent to the primary nodes.

The Role of a GTM Load Balancer

A GTM Load Balancer is a solution that efficiently distributes traffic across multiple web applications and services. In addition, it distributes traffic across various nodes, allowing for high availability and scalability. As a result, these load balancers enable organizations to improve website performance, reduce costs associated with hardware, and allow seamless scaling as application demand increases. It acts as a virtual traffic cop, ensuring incoming requests are routed to the most appropriate server or data center based on predefined rules and algorithms.

The Role of an LTM Load Balancer

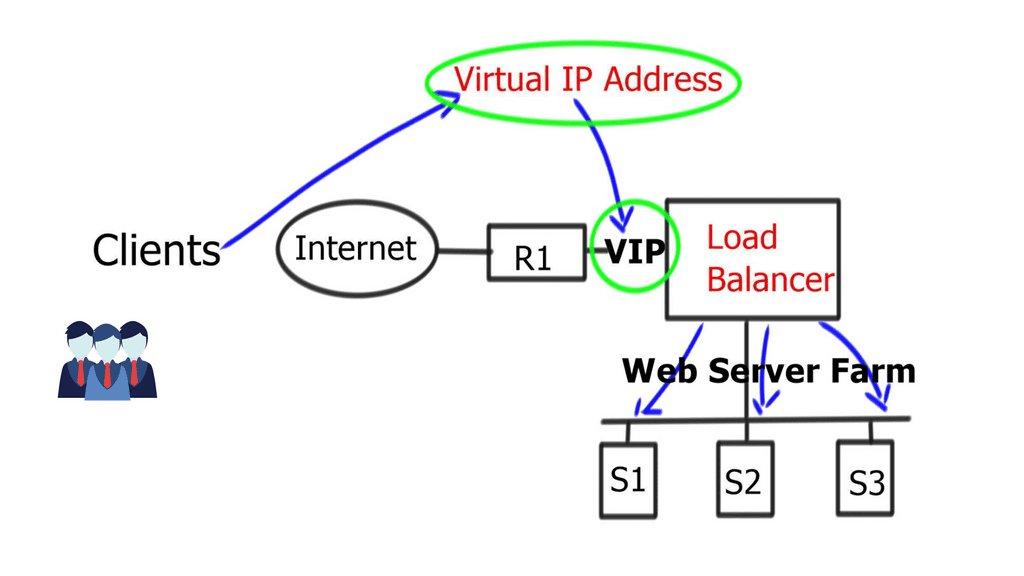

The LTM Load Balancer, short for Local Traffic Manager Load Balancer, is a software-based solution that distributes incoming requests across multiple servers. This ensures efficient resource utilization and prevents any single server from being overwhelmed. By intelligently distributing traffic, the LTM Load Balancer ensures high availability, scalability, and improved performance for applications and services.

Continuously Monitors

GTM Load Balancers continuously monitor server health, network conditions, and application performance. They use this information to distribute incoming traffic intelligently, ensuring that each server or data center operates optimally. By spreading the load across multiple servers, GTM Load Balancers prevent any single server from becoming overwhelmed, thus minimizing the risk of downtime or performance degradation.

Traffic Patterns

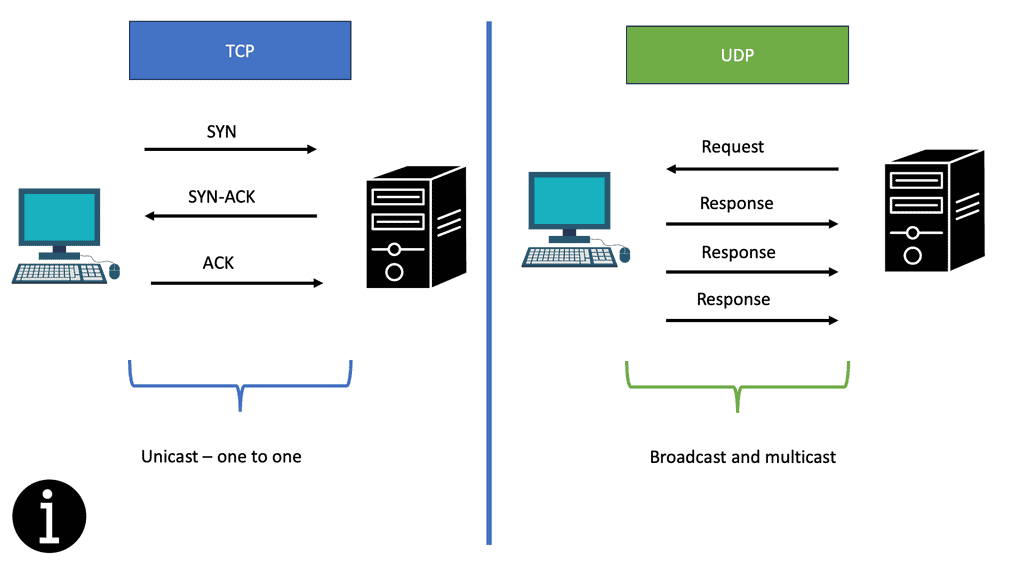

GTM Load Balancers support a variety of load-balancing methods, such as round robin, least connections, and weighted least connections. They can also be configured to use dynamic server selection, allowing for high flexibility and scalability. GTM Load Balancers work with HTTP, HTTPS, TCP, and UDP protocols, which makes them well suited to a wide range of applications and services.

GTM Load Balancers can be deployed in public, private, and hybrid cloud environments, making them a flexible and cost-effective solution for businesses of all sizes. GTM Load Balancers have advanced features such as automatic failover, health checks, and SSL acceleration.

Related: Before you proceed, you may find the following posts helpful:

- DNS Security Solutions

- OpenShift SDN

- ASA Failover

- Load Balancing and Scalability

- Data Center Failover

- Application Delivery Architecture

- Port 179

- Full Proxy

- Load Balancing

Back to Basics: GTM load balancer

What is a Load Balancer?

A load balancer is a specialized device or software that distributes incoming network traffic across multiple servers or resources. Its primary objective is evenly distributing the workload, optimizing resource utilization, and minimizing response time. By intelligently routing traffic, load balancers prevent any single server from being overwhelmed, ensuring high availability and fault tolerance.

Load Balancer Functions and Features

Load balancers offer many functions and features that enhance network performance and scalability. Some essential functions include:

1. Traffic Distribution: Load balancers efficiently distribute incoming network traffic across multiple servers, ensuring no single server is overwhelmed.

2. Health Monitoring: Load balancers continuously monitor the health and availability of servers, automatically detecting and avoiding faulty or unresponsive ones.

3. Session Persistence: Load balancers can maintain session persistence, ensuring that requests from the same client are consistently routed to the same server, which is essential for specific applications.

4. SSL Offloading: Load balancers can offload the SSL/TLS encryption and decryption process, relieving the backend servers from this computationally intensive task.

5. Scalability: Load balancers allow for easy resource scaling by adding or removing servers dynamically, ensuring optimal performance as demand fluctuates.
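Session persistence (item 3 above) can be approximated by hashing the client address so that the same client always lands on the same server. The following is a minimal sketch under that assumption; the function name and the server names are hypothetical, and real load balancers often use cookies or connection tables instead.

```python
import hashlib
from typing import List


def persistent_server(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to one server so repeat requests stay on that server."""
    if not servers:
        raise ValueError("no servers configured")
    # A stable hash (unlike Python's built-in hash()) gives the same result
    # across processes and restarts.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


# Hypothetical backend names used only for illustration.
backends = ["web-1", "web-2", "web-3"]
print(persistent_server("203.0.113.7", backends))
```

Note that with this simple modulo scheme, adding or removing a server remaps many clients, which is one reason production systems tend to use more elaborate persistence methods.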

Types of Load Balancers

Load balancers come in different types, each catering to specific network architectures and requirements. The most common types include:

1. Hardware Load Balancers: These devices are designed for load balancing. They offer high performance and scalability and often have advanced features.

2. Software Load Balancers: These are software-based load balancers that run on standard server hardware or virtual machines. They provide flexibility and cost-effectiveness while still delivering robust load-balancing capabilities.

3. Cloud Load Balancers: Cloud service providers offer load-balancing solutions as part of their infrastructure services. These load balancers are highly scalable, automatically adapting to changing traffic patterns, and can be easily integrated into cloud environments.

GTM and LTM Load Balancing Options

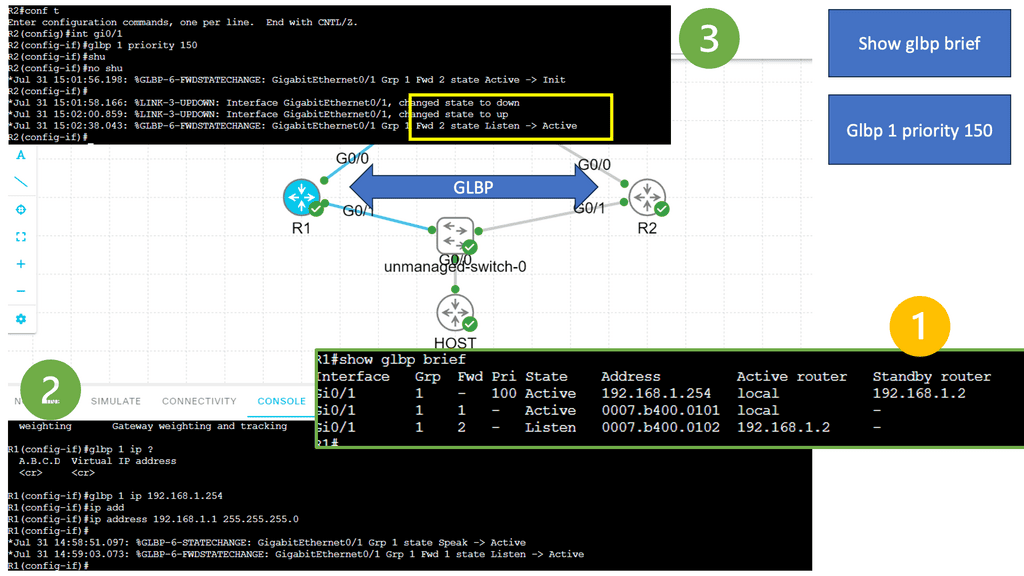

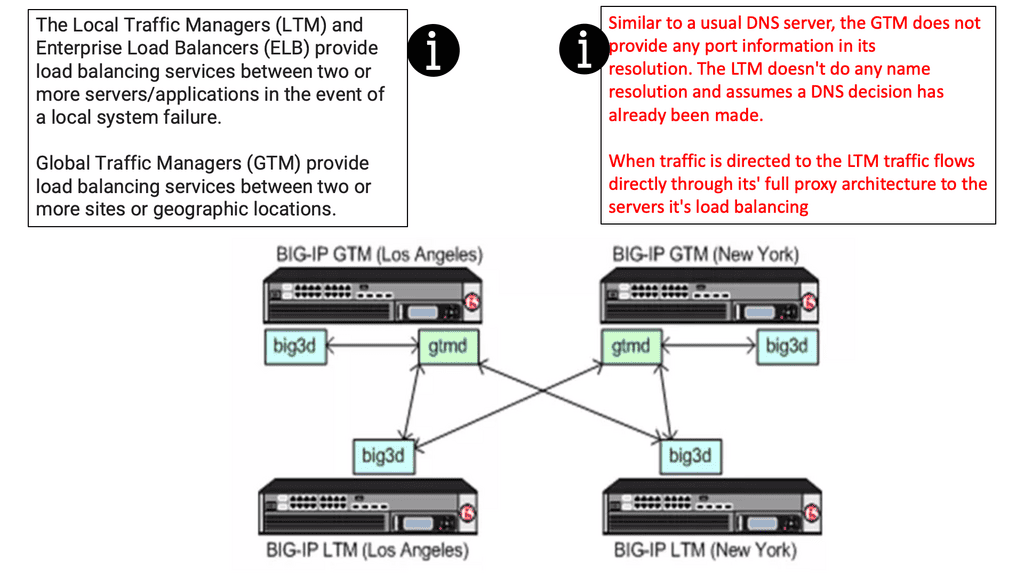

The Local Traffic Managers (LTM) and Enterprise Load Balancers (ELB) provide load-balancing services between two or more servers/applications in case of a local system failure. Global Traffic Managers (GTM) provide load-balancing services between two or more sites or geographic locations.

Local Traffic Managers, or Load Balancers, are devices or software applications that distribute incoming network traffic across multiple servers, applications, or network resources. They act as intermediaries between users and the servers or resources they are trying to access. By intelligently distributing traffic, LTMs help prevent server overload, minimize downtime, and improve system performance.

GTM and LTM Components

Before diving into the communication between GTM and LTM, let’s understand what each component does.

GTM, or Global Traffic Manager, is a robust DNS-based load-balancing solution that distributes incoming network traffic across multiple servers in different geographical regions. Its primary objective is to ensure high availability, scalability, and optimal performance by directing users to the most suitable server based on various factors such as geographic location, server health, and network conditions.

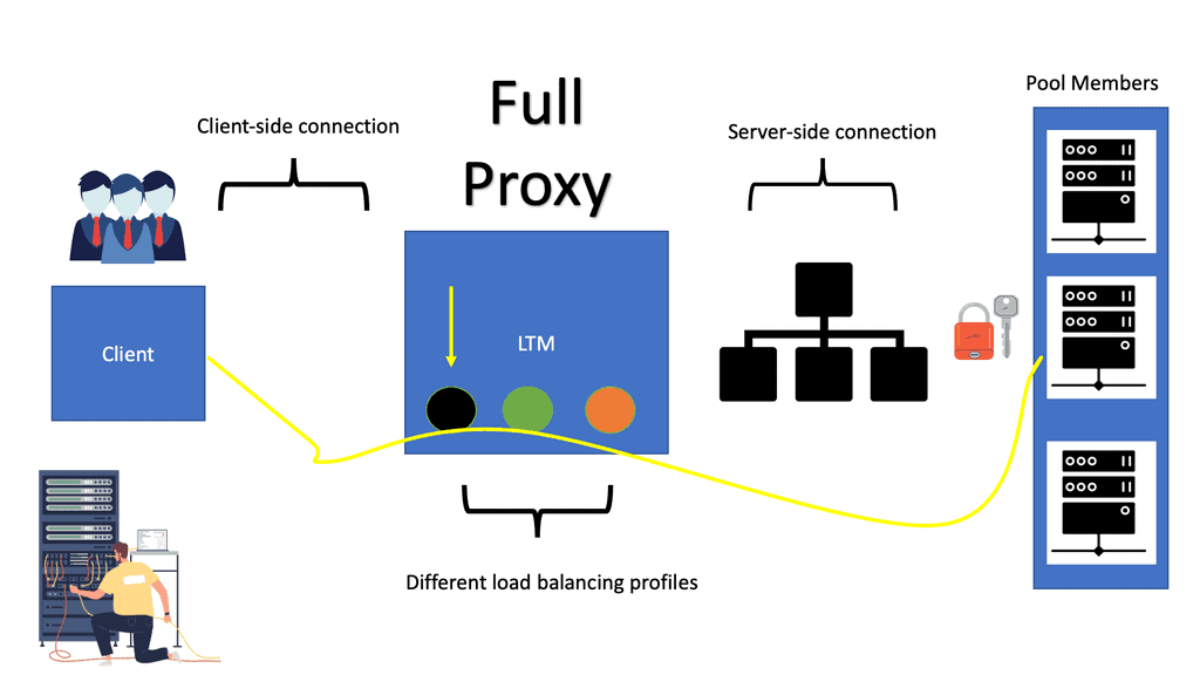

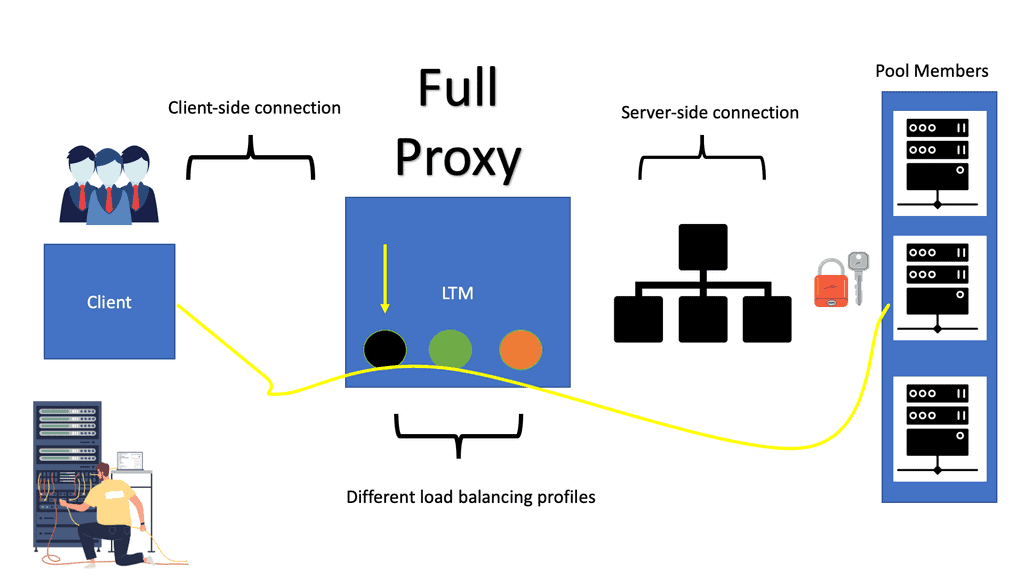

On the other hand, LTM, or Local Traffic Manager, is responsible for managing network traffic at the application layer. It works within a local data center or a specific geographic region, balancing the load across servers, optimizing performance, and ensuring secure connections.

The most significant difference between the GTM and the LTM is that traffic doesn’t flow through the GTM to your servers. The GTM only answers DNS queries; clients then connect directly to the address it returns.

- GTM (Global Traffic Manager)

The GTM load balancer balances traffic between application servers across data centers. Using F5’s iQuery protocol for communication with other F5 BIG-IP devices, GTM acts as an “Intelligent DNS” server, handling DNS resolutions based on intelligent monitors. The service determines where to resolve traffic requests among multiple data center infrastructures.

- LTM (Local Traffic Manager)

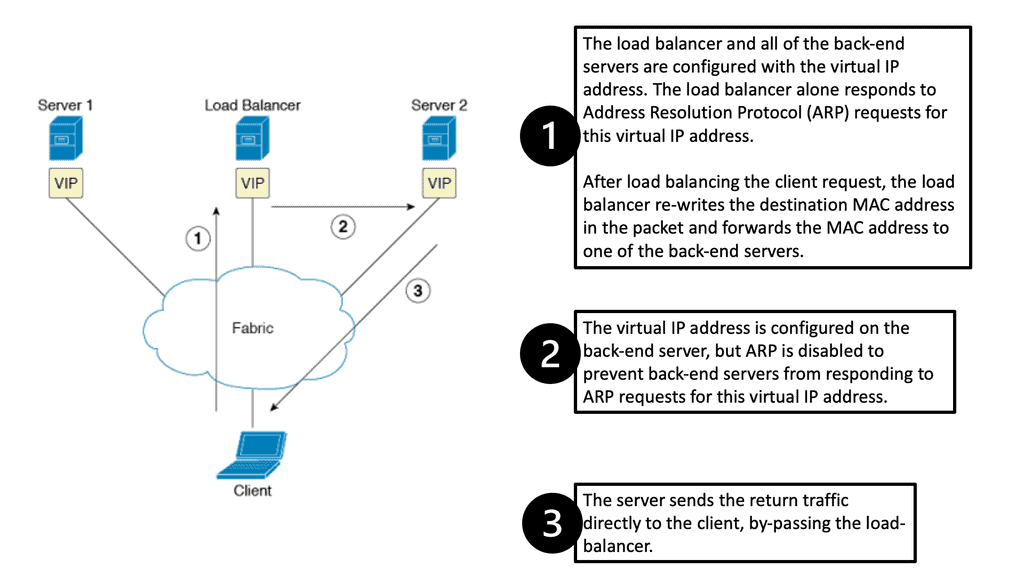

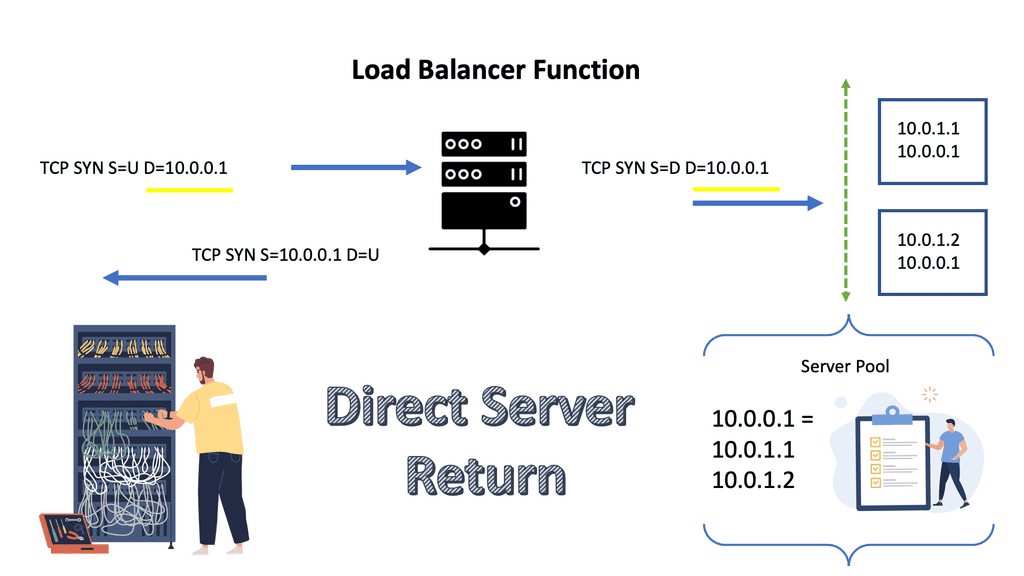

The LTM acts as a full reverse proxy, handling client connections. In addition to load balancing, it performs caching, compression, persistence, and other functions. The F5 LTM uses virtual servers (VSs) and virtual IPs (VIPs) to configure a load-balancing setup for a service.

LTMs offer two ways to handle load-balanced traffic: nPath configuration and Secure Network Address Translation (SNAT).

Communication between GTM and LTM:

BIG-IP Global Traffic Manager (GTM) uses the iQuery protocol to communicate with the local big3d agent and other BIG-IP big3d agents. GTM monitors BIG-IP systems’ availability, the network paths between them, and the local DNS servers attempting to connect to them.

The communication between GTM and LTM occurs in three key stages:

1. Configuration Synchronization:

GTM and LTM communicate to synchronize their configuration settings. This includes exchanging information about the availability of different LTM instances, their capacities, and other relevant parameters. By keeping the configuration settings current, GTM can efficiently make informed decisions on distributing traffic.

2. Health Checks and Monitoring:

GTM continuously monitors the health and availability of the LTM instances by regularly sending health check requests. These health checks ensure that only healthy LTM instances are included in the load-balancing decisions. If an LTM instance becomes unresponsive or experiences issues, GTM automatically removes it from the distribution pool, optimizing the traffic flow.

3. Dynamic Traffic Distribution:

GTM distributes incoming traffic to the most suitable LTM instances based on the configuration settings and real-time health monitoring. This ensures load balancing across multiple servers, prevents overloading, and improves the overall user experience. Additionally, GTM can reroute traffic to alternative LTM instances in case of failures or high traffic volumes, enhancing resilience and minimizing downtime.
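The three stages above can be sketched as a small model. This is an illustrative sketch only, not how BIG-IP implements them: the `LtmInstance` and `GlobalView` names, the addresses, and the simple rotation are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LtmInstance:
    name: str
    vip: str
    healthy: bool = True


class GlobalView:
    """Hypothetical stand-in for the GTM's view of its LTM instances."""

    def __init__(self, instances: List[LtmInstance]) -> None:
        # Stage 1: the known configuration (which LTMs exist, which VIPs they own).
        self._instances: Dict[str, LtmInstance] = {i.name: i for i in instances}
        self._next = 0

    def record_health(self, name: str, healthy: bool) -> None:
        # Stage 2: a health report marks an instance up or down.
        self._instances[name].healthy = healthy

    def pick(self) -> str:
        # Stage 3: only healthy instances take part in distribution.
        candidates = [i for i in self._instances.values() if i.healthy]
        if not candidates:
            raise RuntimeError("no healthy LTM instances")
        choice = candidates[self._next % len(candidates)]
        self._next += 1
        return choice.vip


view = GlobalView([
    LtmInstance("ltm-dc1", "192.0.2.10"),
    LtmInstance("ltm-dc2", "198.51.100.10"),
])
view.record_health("ltm-dc1", False)
print(view.pick())  # only ltm-dc2 remains eligible
```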

- A key point: TCP Port 4353

LTMs and GTMs can work together or separately. Most organizations that own both modules use them together, and that’s where the real power lies.

They use a proprietary protocol called iQuery to accomplish this.

Through TCP port 4353, iQuery reports VIP availability/performance to GTMs. A GTM can then dynamically resolve VIPs that reside on an LTM. With LTMs as servers in GTM configuration, there is no need to monitor VIPs directly with application monitors since the LTM is doing that, and iQuery reports it back to the GTM.
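When iQuery communication fails, a common first step is to confirm that TCP port 4353 is reachable between the devices. The sketch below only tests whether a TCP connection can be opened; it does not speak the iQuery protocol, and the function name and default timeout are assumptions for illustration.

```python
import socket

IQUERY_PORT = 4353  # TCP port used by iQuery, as described above


def tcp_port_open(host: str, port: int = IQUERY_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `tcp_port_open("192.0.2.10")` would report whether a device at that (placeholder) address accepts connections on port 4353 from where the script runs.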

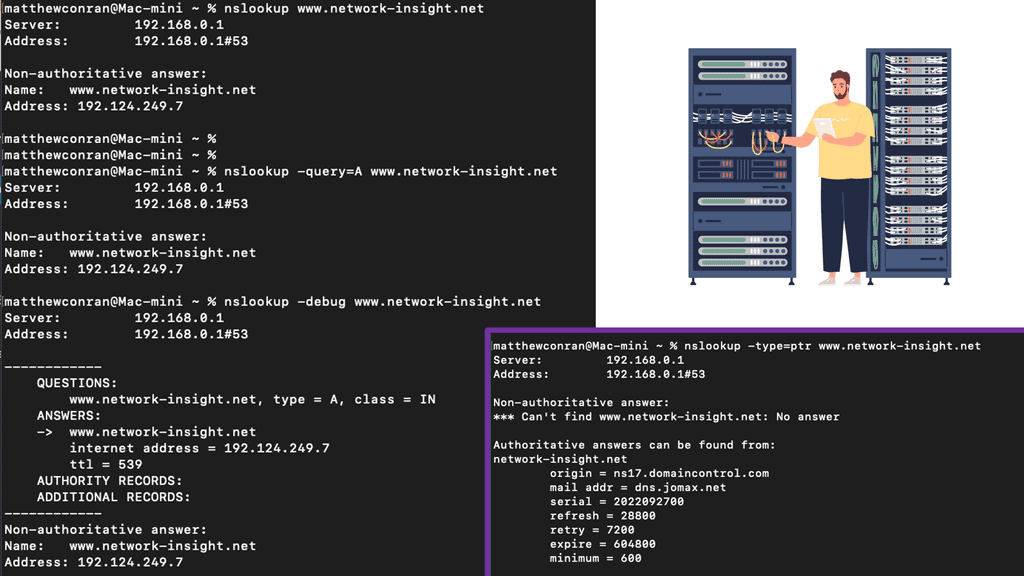

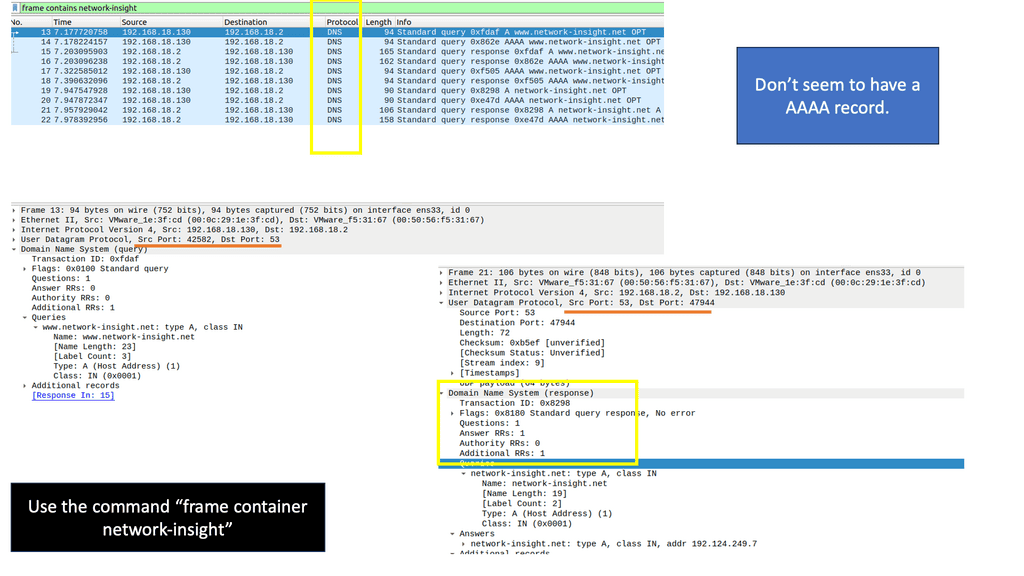

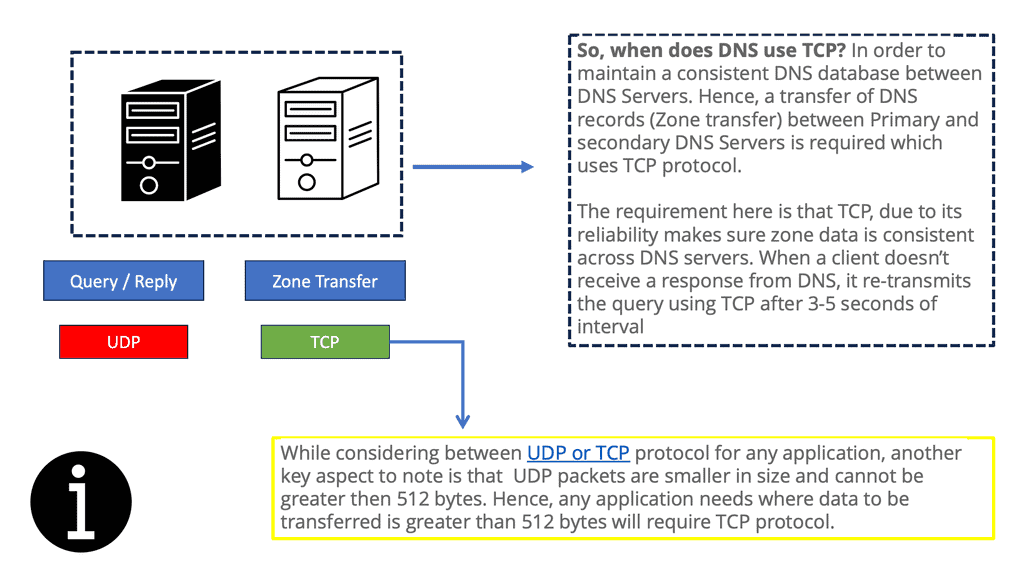

The Role of DNS With Load Balancing

The GTM load balancer offers intelligent Domain Name System (DNS) resolution capability to resolve queries from different sources to different data center locations. It load balances DNS queries to existing recursive DNS servers and either caches the response or processes the resolution itself. For security, it can enable DNS security designs and act as the authoritative or secondary authoritative DNS server. It implements several security services, including DNSSEC, and can help protect against DNS-based DDoS attacks.

DNS relies on UDP for transport, so you are also subject to UDP control plane attacks and performance issues. DNS load balancing failover can improve performance for load balancing traffic to your data centers. DNS is much more graceful than Anycast and is a lightweight protocol.

DNS load balancing provides several significant advantages.

Adding a duplicate system may be a simple way to increase your capacity when you need to process more traffic. Likewise, if you route multiple low-bandwidth Internet addresses to one server, the server will have a greater amount of total bandwidth.

DNS load balancing is easy to configure. Adding the additional addresses to your DNS database is as easy as 1-2-3! It doesn’t get any easier than this!

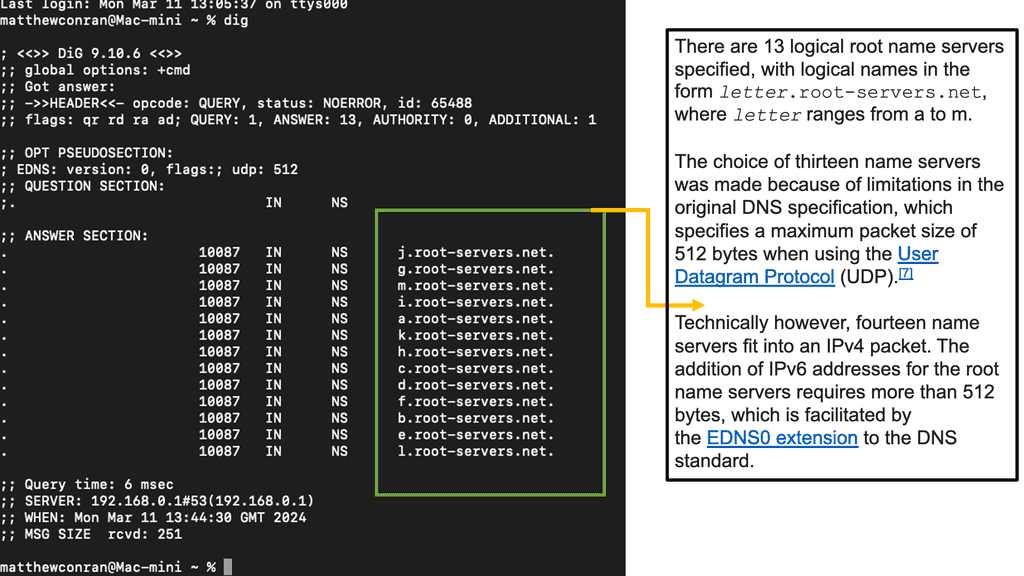

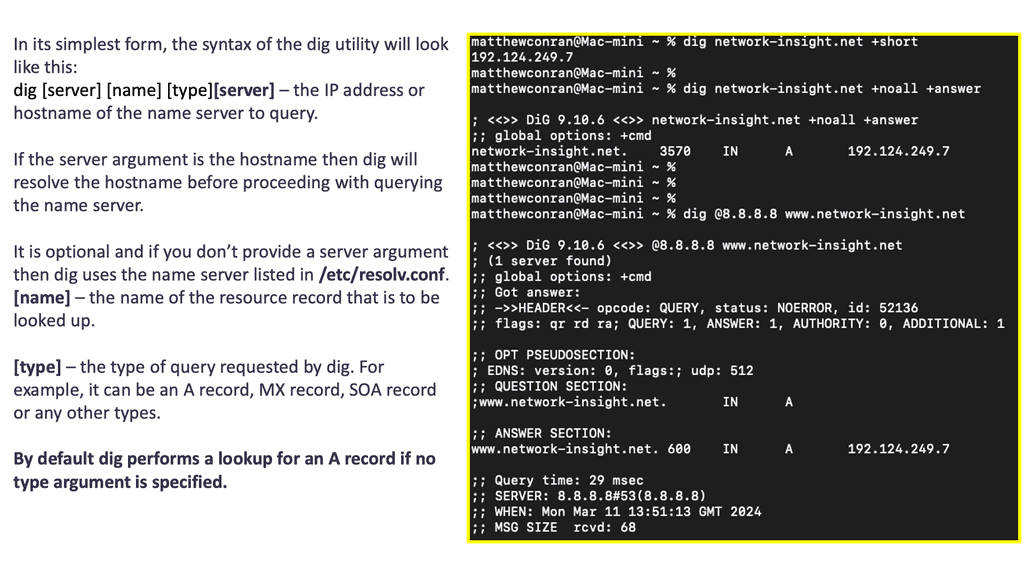

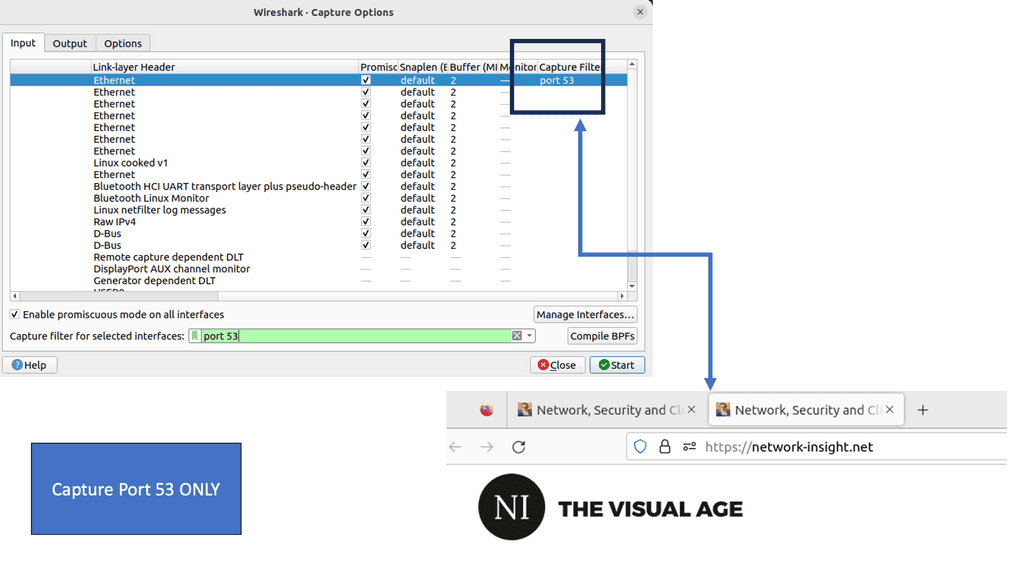

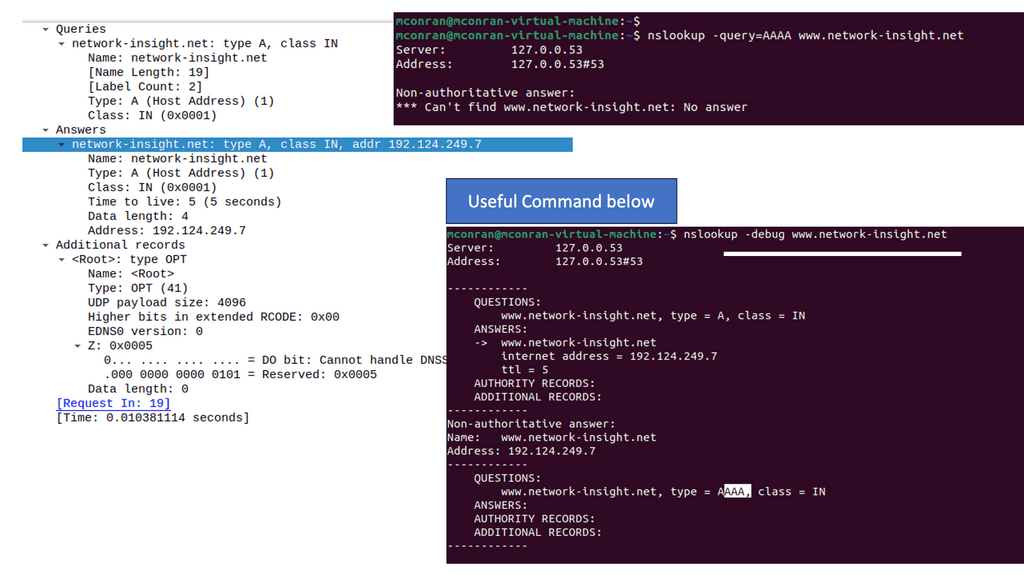

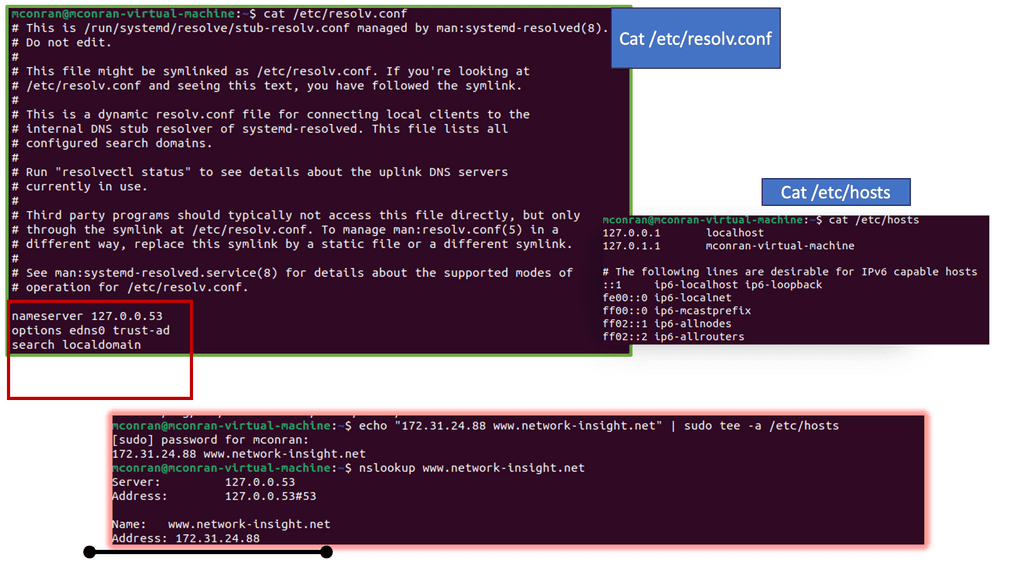

Simple to debug: You can work with DNS using tools such as dig, ping, and nslookup. In addition, BIND includes tools for validating your configuration, and all testing can be conducted via the local loopback adapter.

Since you have a web-based system with a domain name, you already need a DNS server. Your existing platform can be quickly extended with DNS-based load balancing!

Issues with DNS Load Balancing

In addition to its advantages, DNS load balancing also has some limitations.

Dynamic applications suffer from sticky behavior, but static sites rarely experience it. HTTP (and, therefore, the Web) is a stateless protocol. Chronic amnesia prevents it from remembering one request from another. To overcome this, a unique identifier accompanies each request. Identifiers are stored in cookies, but there are other sneaky ways to do this.

Through this unique identifier, your web browser can collect information about your current interaction with the website. Since this data isn’t shared between servers, if a new DNS request is made to determine the IP, there is no guarantee you will return to the server with all of the previously established information.

Suppose one in two requests is high-intensity and one in two is easy. In the worst-case scenario, all high-intensity requests would go to only one server while all low-intensity requests would go to the other. This is not a very balanced situation, and you should avoid it at all costs lest you ruin the website for half of the visitors.
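The worst case is easy to reproduce with a small simulation. The sketch below assumes a made-up workload in which heavy requests (cost 10) strictly alternate with light ones (cost 1) and two servers are served in strict rotation; the cost units and server names are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


def distribute_round_robin(costs: List[int], servers: List[str]) -> Dict[str, int]:
    """Assign requests to servers in strict rotation and total the cost per server."""
    totals = {server: 0 for server in servers}
    for cost, server in zip(costs, cycle(servers)):
        totals[server] += cost
    return totals


# Hypothetical workload: 50 heavy requests alternating with 50 light ones.
workload = [10, 1] * 50
print(distribute_round_robin(workload, ["server-a", "server-b"]))
# {'server-a': 500, 'server-b': 50}
```

Both servers receive the same number of requests, yet one carries ten times the work, which is exactly the imbalance described above.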

No fault tolerance. DNS load balancers cannot detect when one web server goes down, so they still send traffic to the space left by the downed server. As a result, with two servers, half of all requests would fail.

Benefits of GTM Load Balancer:

1. Enhanced Website Performance: By efficiently distributing traffic, GTM Load Balancer helps balance the server load, preventing any single server from being overwhelmed. This leads to improved website performance, faster response times, and reduced latency, resulting in a seamless user experience.

2. Increased Scalability: As online businesses grow, the demand for server resources increases. GTM Load Balancer allows enterprises to scale their infrastructure by adding more servers or data centers. This ensures that the website can handle increasing traffic without compromising performance.

3. Improved Availability and Redundancy: GTM Load Balancer offers high availability by continuously monitoring server health and automatically redirecting traffic away from any server experiencing issues. It can detect server failures and quickly reroute traffic to healthy servers, minimizing downtime and ensuring uninterrupted service.

4. Geolocation-based Routing: Businesses often cater to a diverse audience across different regions in a globalized world. GTM Load Balancer can intelligently route traffic based on the user’s geolocation, directing them to the nearest server or data center. This reduces latency and improves the overall user experience.

5. Traffic Steering: GTM Load Balancer allows businesses to prioritize traffic based on specific criteria. For example, it can direct high-priority traffic to servers with more resources or specific geographic locations. This ensures that critical requests are processed efficiently, meeting the needs of different user segments.

Key Features of GTM Load Balancer:

1. Geographic Load Balancing: GTM Load Balancer uses geolocation-based routing to direct users to the nearest server location. This reduces latency and ensures that users are connected to the server with the lowest network hops, resulting in faster response times.

2. Health Monitoring: The load balancer continuously monitors the health and availability of servers. If a server becomes unresponsive or experiences a high load, GTM Load Balancer automatically redirects traffic to healthy servers, minimizing service disruptions and maintaining high availability.

3. Flexible Load Balancing Algorithms: GTM Load Balancer offers a range of load balancing algorithms, including round-robin, weighted round-robin, and least connections. These algorithms enable businesses to customize the traffic distribution strategy based on their specific needs, ensuring optimal performance for different types of web applications.
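As an illustration of one of the algorithms listed above, the following minimal sketch picks the server with the fewest active connections. The connection counts and server names are assumed inputs for the example; how a real load balancer tracks them is outside its scope.

```python
from typing import Dict


def pick_least_connections(active_connections: Dict[str, int]) -> str:
    """Return the server with the fewest active connections (ties broken by name)."""
    if not active_connections:
        raise ValueError("no servers configured")
    return min(active_connections, key=lambda name: (active_connections[name], name))


# Hypothetical snapshot of current connection counts.
print(pick_least_connections({"web-1": 12, "web-2": 4, "web-3": 4}))  # web-2
```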

Back to Basic: DNS Load Balancing Failover

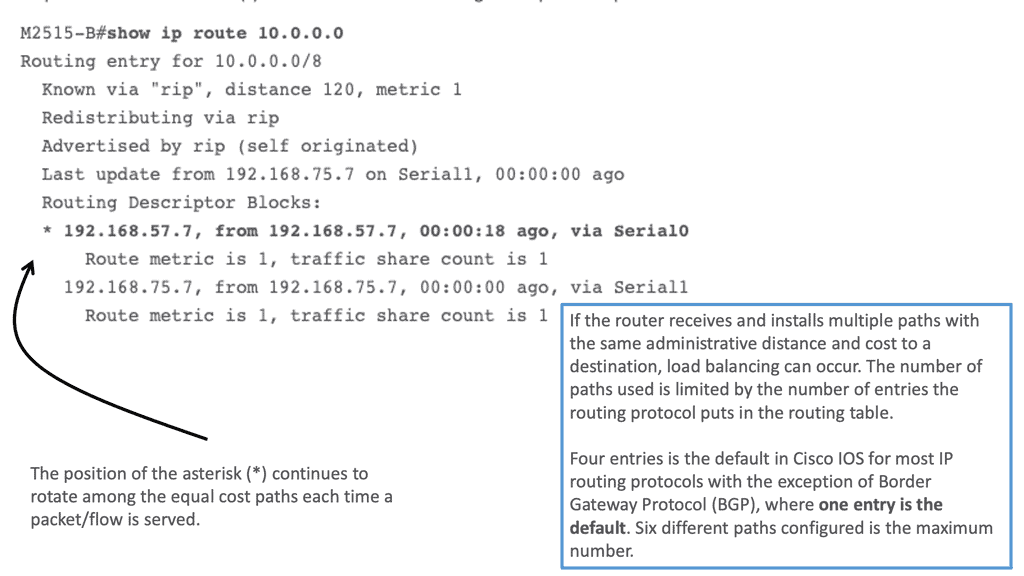

DNS load balancing is the simplest form of load balancing, and how it works is fairly straightforward. It uses a direct method called round robin to distribute connections over the group of servers it knows for a specific domain. It does this sequentially, going to the first, second, third, and so on. To add DNS load balancing failover to your server, you must add multiple A records for a domain.
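The rotation can be sketched in a few lines. This is a simplified model of how a name server might cycle through several A records for one name, not the behavior of any particular DNS implementation; the class name and addresses are placeholders.

```python
from collections import deque
from typing import Deque, List


class RoundRobinRecordSet:
    """Holds the A records for one name and rotates them on every answer."""

    def __init__(self, addresses: List[str]) -> None:
        if not addresses:
            raise ValueError("at least one A record is required")
        self._addresses: Deque[str] = deque(addresses)

    def answer(self) -> List[str]:
        # Return all records, then rotate so the next answer leads with the next address.
        current = list(self._addresses)
        self._addresses.rotate(-1)
        return current


records = RoundRobinRecordSet(["192.0.2.1", "192.0.2.2", "192.0.2.3"])
for _ in range(4):
    print(records.answer()[0])
# 192.0.2.1, 192.0.2.2, 192.0.2.3, then 192.0.2.1 again
```

Clients typically connect to the first address in the answer, so rotating the order spreads new connections across the servers.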

GTM load balancer and LTM

DNS load balancing failover

The GTM load balancer and the Local Traffic Manager (LTM) provide load-balancing services towards physically dispersed endpoints. Endpoints are in separate locations but logically grouped in the eyes of the GTM. For data center failover events, DNS is much more graceful than Anycast. With GTM DNS failover, end nodes are restarted (cold move) into secondary data centers with a different IP address.

As long as the DNS FQDN remains the same, new client connections are directed to the restarted hosts in the new data center. The failover is performed with a DNS change, making it a viable option for disaster recovery, disaster avoidance, and data center migration.

On the other hand, stretch clusters and active-active data centers pose a separate set of challenges. In this case, other mechanisms, such as FHRP localization and LISP, are combined with the GTM to influence ingress and egress traffic flows.

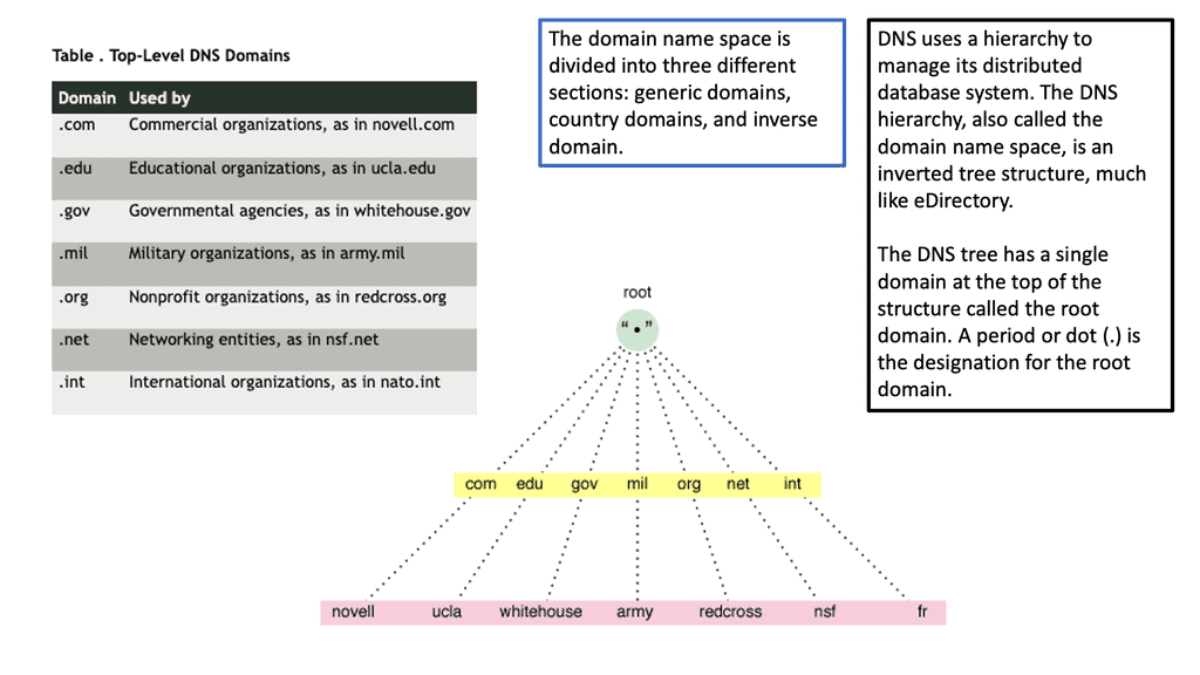

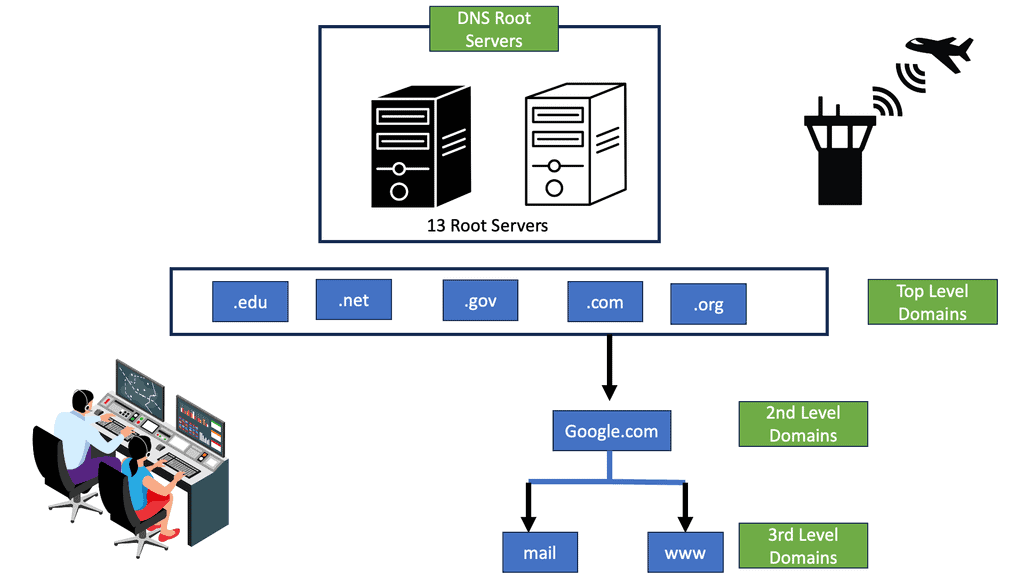

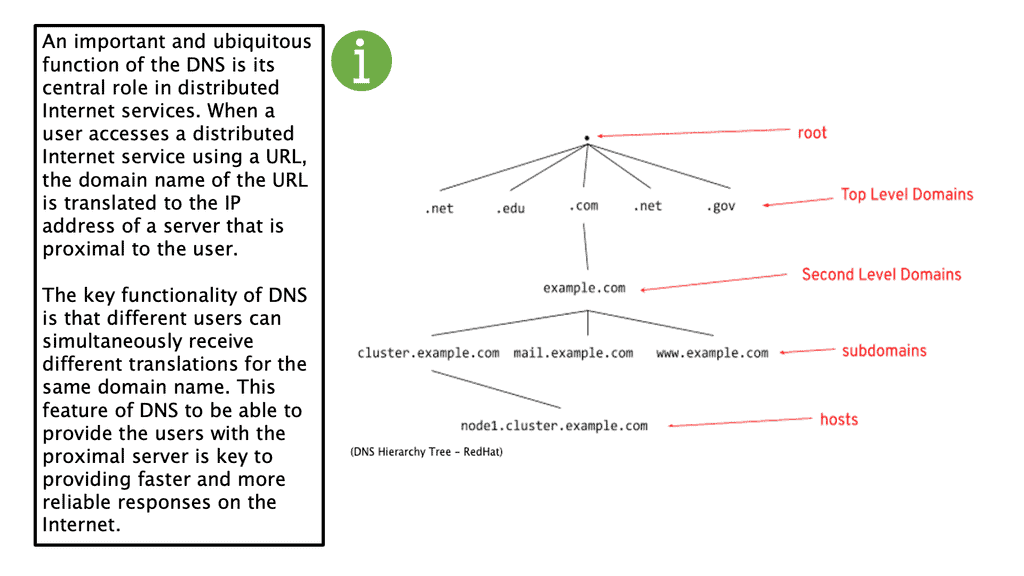

DNS Namespace Basics

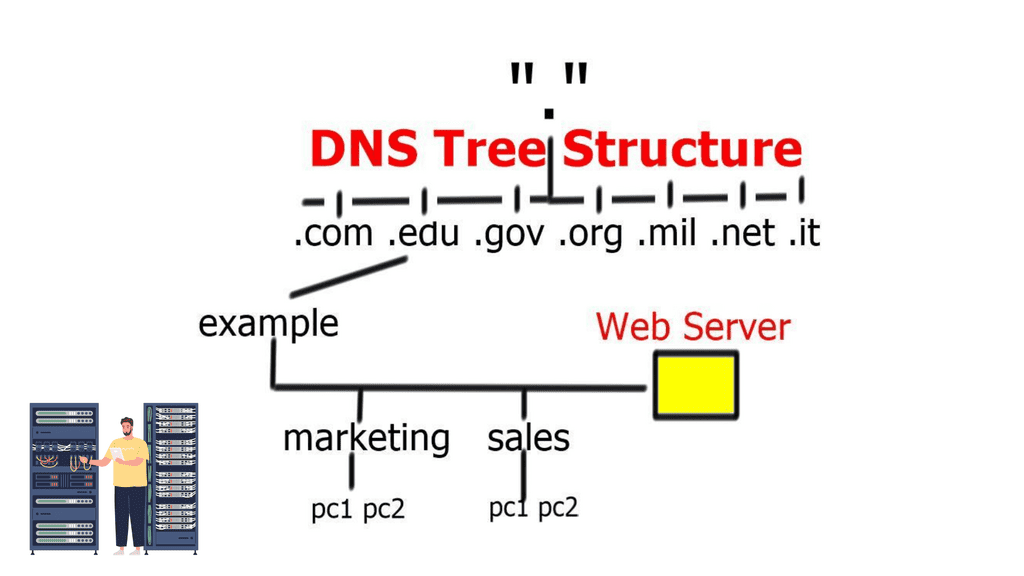

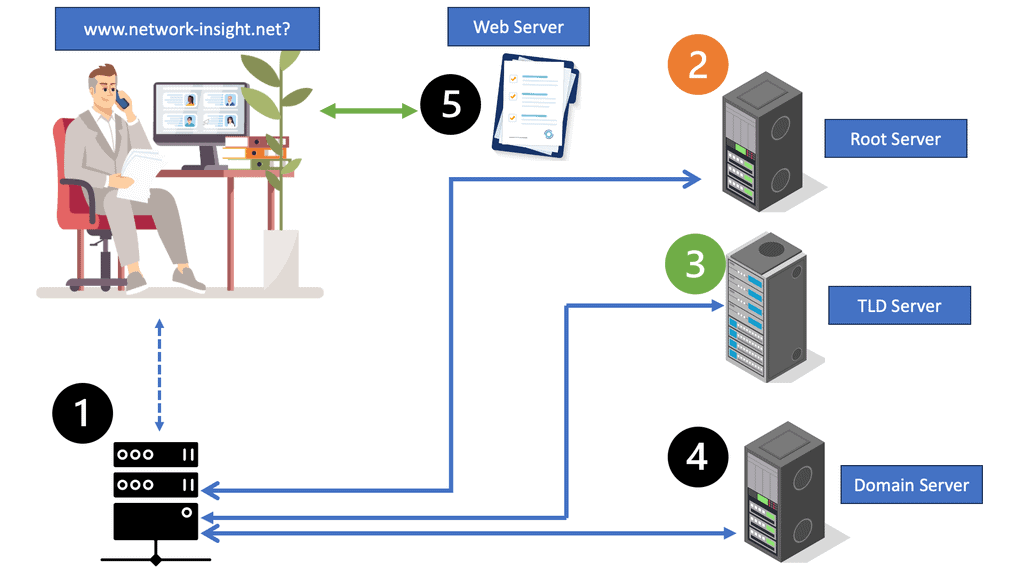

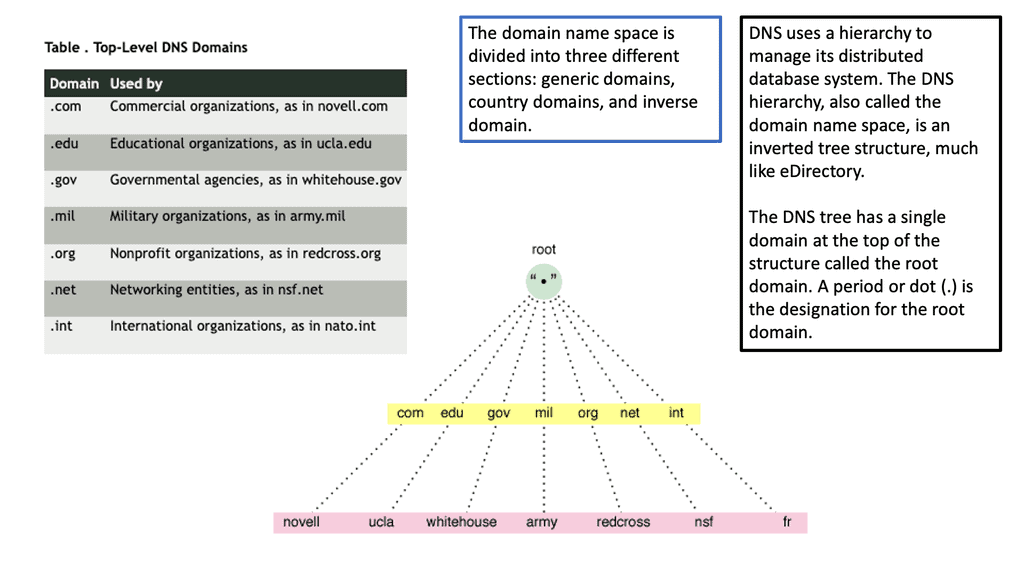

Packets traverse the Internet using numeric IP addresses, not names, to identify communication devices. DNS was developed to map user-friendly, memorable names to those numeric IP addresses. Employing memorable names instead of numerical IP addresses dates back to the early 1980s in ARPANET. A local file called HOSTS.TXT mapped IP addresses to names on all the ARPANET computers. The resolution was local, and any changes had to be implemented on all computers.

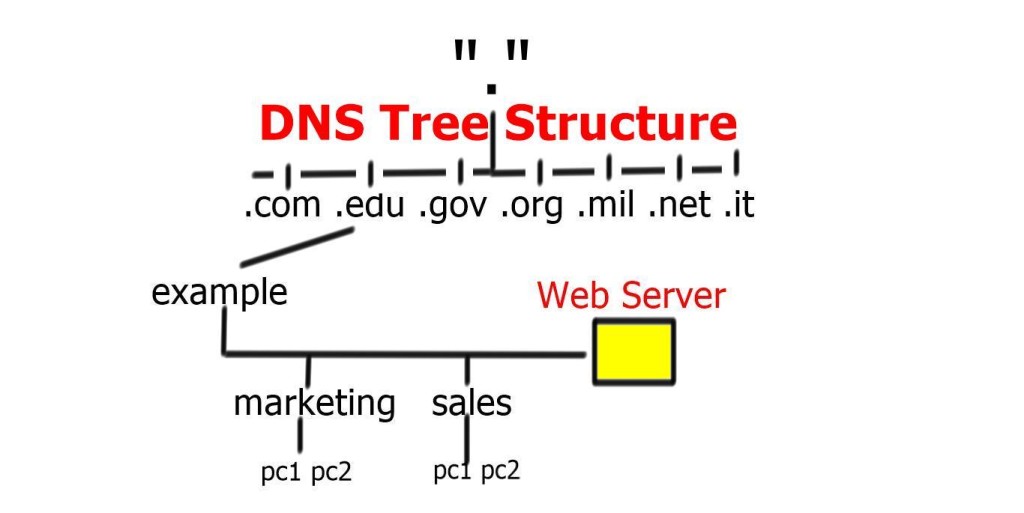

Example: DNS Structure

This was sufficient for small networks, but with the rapid growth of networking, a hierarchical distributed model known as a DNS namespace was introduced. The database is distributed worldwide across DNS nameservers that together form the DNS structure. It resembles an inverted tree, with branches representing domains, zones, and subzones.

At the very top of the tree is the “root” domain. Further down, we have Top-Level Domains (TLDs), such as .com or .net, and Second-Level Domains (SLDs), such as network-insight.net.

The IANA delegates management of the TLDs to other organizations, such as Verisign for .COM and .NET. Authoritative DNS nameservers exist for each zone. They hold information about the domain tree structure. Essentially, the name server stores the DNS records for that domain.

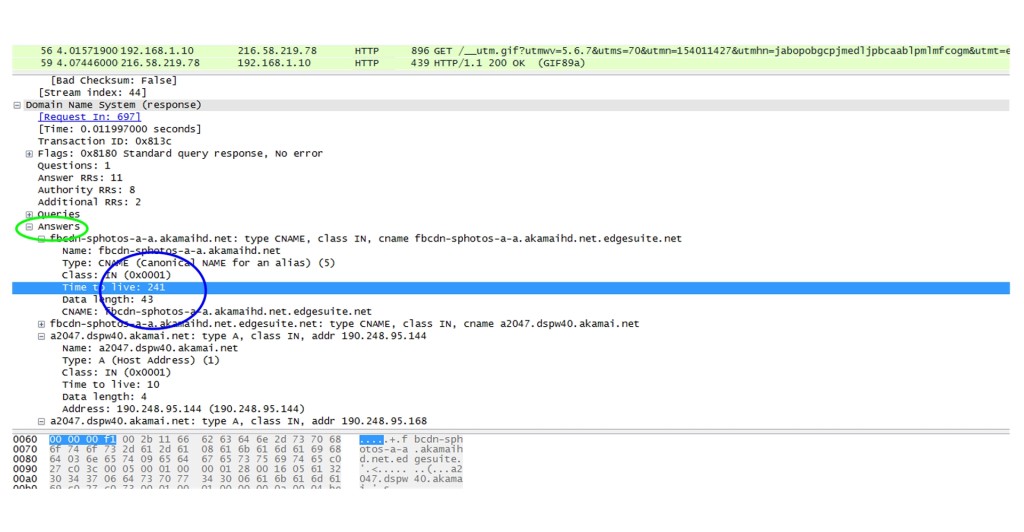

You interact with the DNS infrastructure through a process known as resolution. First, end stations send a DNS request to their local DNS (LDNS). If the LDNS supports caching and has a cached response for the query, it will respond to the client’s request.

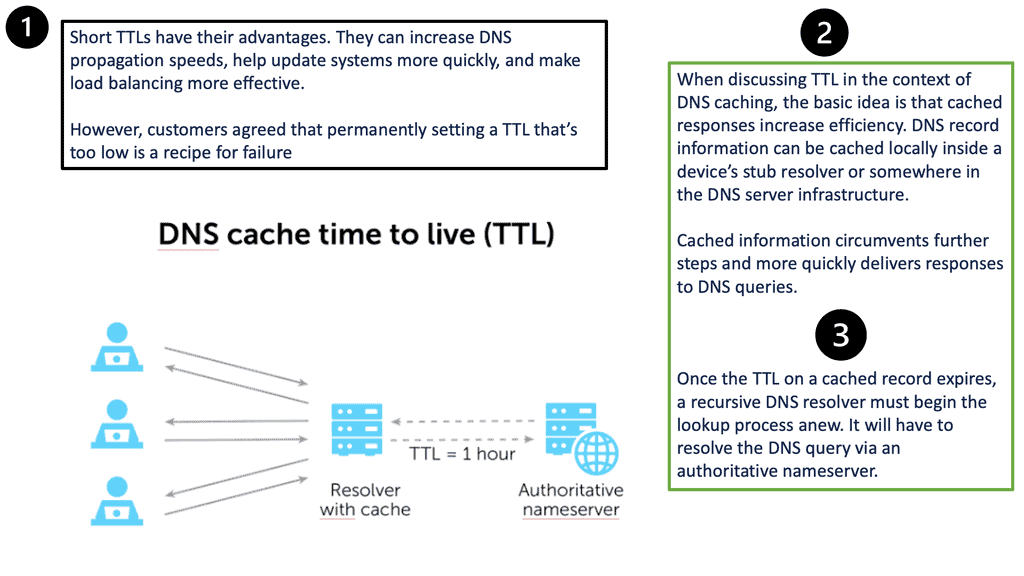

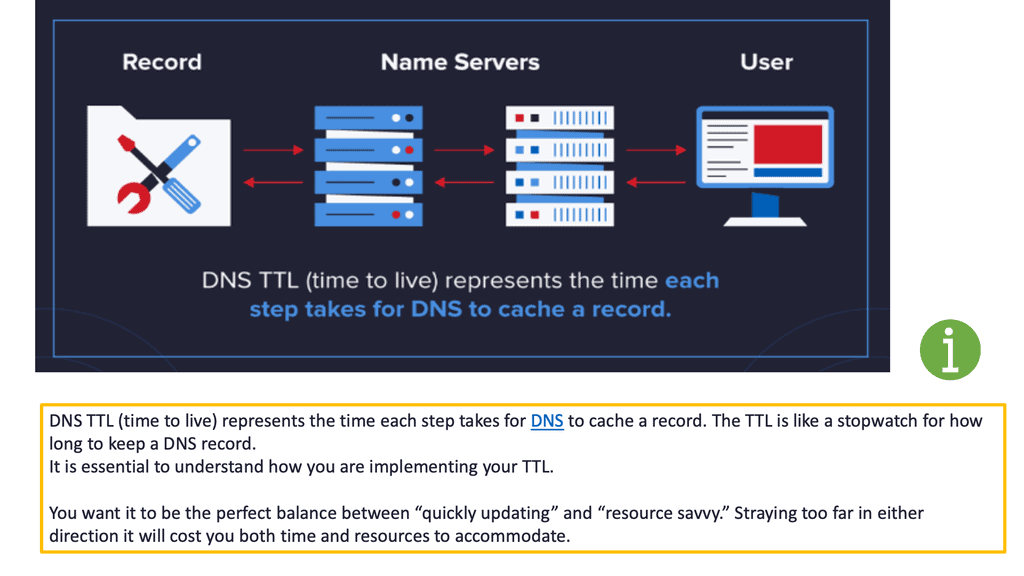

DNS caching stores DNS queries for some time, which is specified in the DNS TTL. Caching improves DNS efficiency by reducing DNS traffic on the Internet. If the LDNS doesn’t have a cached response, it will trigger what is known as the recursive resolution process.
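A TTL-bound cache of this kind can be sketched as follows. This is a toy model, not an actual resolver: the `TtlCache` class and its injectable clock are assumptions made so the expiry behavior is easy to see and test.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlCache:
    """Toy resolver cache: answers expire once their TTL has elapsed."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}

    def put(self, name: str, address: str, ttl_seconds: float) -> None:
        # Store the answer together with its absolute expiry time.
        self._entries[name] = (address, self._clock() + ttl_seconds)

    def get(self, name: str) -> Optional[str]:
        entry = self._entries.get(name)
        if entry is None:
            return None  # never cached: the resolver must go and ask
        address, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name]  # TTL elapsed: treat as a cache miss
            return None
        return address
```

Until the TTL runs out, `get` keeps returning the cached address even if the authoritative record has changed in the meantime, which is why TTL values matter so much for failover.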

Next, the LDNS queries the authoritative DNS servers for the “root” zone. These name servers will not have the mapping in their database but will refer the request to the appropriate TLD. The process continues, and the LDNS queries the authoritative DNS in the appropriate .COM, .NET, or .ORG zone. The method has many steps and is called “walking a tree.” However, it is based on a quick transport protocol (UDP) and takes only a few milliseconds.
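Walking the tree can be modeled with a toy namespace. In the sketch below, the server names, the delegation tables, and the address are all hypothetical; a real resolver exchanges DNS messages with name servers rather than reading dictionaries.

```python
from typing import Dict, List, Tuple

# Hypothetical delegation data: a server either refers a zone to another
# name server or holds the final A record itself.
REFERRALS: Dict[str, Dict[str, str]] = {
    "root-ns": {"net": "net-tld-ns"},
    "net-tld-ns": {"example.net": "example-auth-ns"},
}
RECORDS: Dict[str, Dict[str, str]] = {
    "example-auth-ns": {"www.example.net": "192.0.2.10"},
}


def walk_tree(fqdn: str) -> Tuple[str, List[str]]:
    """Follow referrals from the root until a server holds the record."""
    server = "root-ns"
    visited = [server]
    while True:
        answer = RECORDS.get(server, {}).get(fqdn)
        if answer is not None:
            return answer, visited
        # Find the zones this server delegates that contain the name.
        zones = [z for z in REFERRALS.get(server, {})
                 if fqdn == z or fqdn.endswith("." + z)]
        if not zones:
            raise LookupError(f"{fqdn} not found")
        # Follow the most specific (longest) matching delegation.
        server = REFERRALS[server][max(zones, key=len)]
        visited.append(server)


print(walk_tree("www.example.net"))
# ('192.0.2.10', ['root-ns', 'net-tld-ns', 'example-auth-ns'])
```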

DNS Load Balancing Failover Key Components

DNS TTL

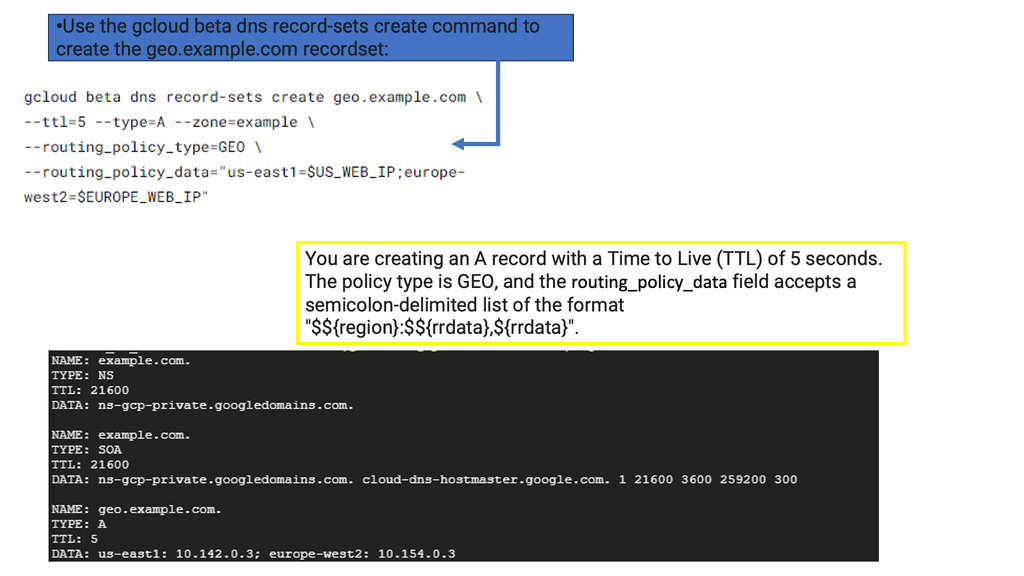

Once the LDNS gets a positive result, it caches the response for some time, referenced by the DNS TTL. The DNS TTL setting is specified in the DNS response by the authoritative nameserver for that domain. Previously, a common TTL value for DNS was 86400 seconds (24 hours).

This meant that if there were a change of record on the DNS authoritative server, the DNS servers around the globe would not register that change for the TTL value of 86400 seconds.

This was later changed to 5 minutes for more accurate DNS results. Unfortunately, TTL in some end hosts’ browsers is 30 minutes, so if there is a failover data center event and traffic needs to move from DC1 to DC2, some ingress traffic will take time to switch to the other DC, causing long tails.

DNS pinning and DNS cache poisoning

Web browsers implement a security mechanism known as DNS pinning, where they refuse to honor a low TTL because low TTL settings raise security concerns such as cache poisoning. Every time you read from the DNS namespace, there is potential for DNS cache poisoning and DNS reflection attacks.

Because of this, all browser companies ignored low TTL and implemented their own aging mechanism, which is about 10 minutes.

In addition, there are embedded applications that carry out a DNS lookup only once when you start the application, for example, a Facebook client on your phone. During data center failover events, this may cause a very long tail, and some sessions may time out.

GTM Load Balancer and GTM Listeners

The first step is to configure GTM Listeners. A listener is a DNS object that processes DNS queries. It is configured with an IP address and listens to traffic destined to that address on port 53, the standard DNS port. It can respond to DNS queries with accelerated DNS resolution or GTM intelligent DNS resolution.

GTM intelligent resolution is also known as Global Server Load Balancing (GSLB) and is just one of the ways you can get GTM to resolve DNS queries. It monitors a lot of conditions to determine the best response.

The GTM monitors LTM and other GTMs with a proprietary protocol called iQuery. iQuery is configured with the bigip_add utility, a script that exchanges SSL certificates with remote BIG-IP systems. Both systems must be configured to allow port 22 on their respective self-IPs.

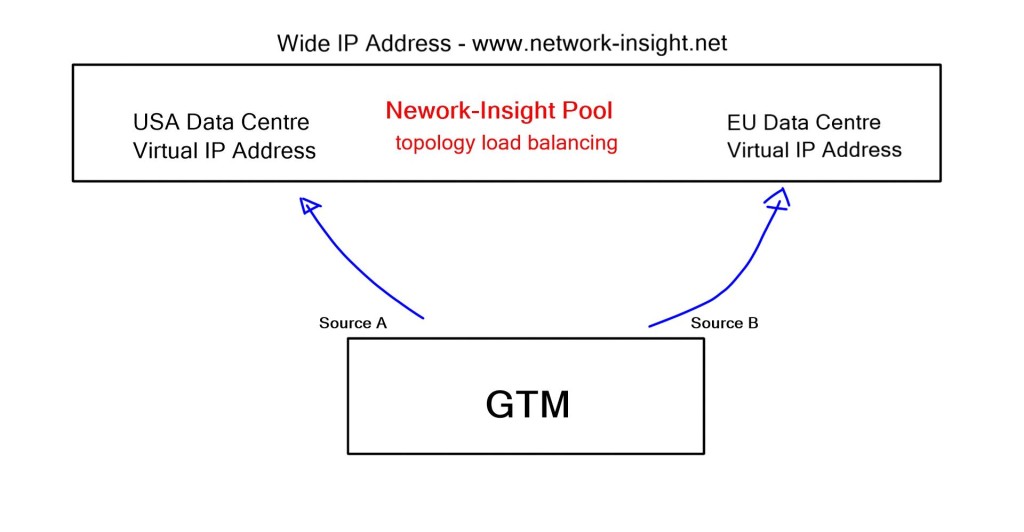

The GTM allows you to group virtual servers, one from each data center, into a pool. These pools are then grouped into a larger object known as a Wide IP, which maps the FQDN to a set of virtual servers. The Wide IP may contain wildcards.
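The relationship between virtual servers, pools, and Wide IPs can be pictured as nested data structures. The sketch below is an assumption-laden model, not the BIG-IP object schema: the class and field names are hypothetical, and shell-style matching via `fnmatchcase` stands in for whatever wildcard rules the product actually applies.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import List, Optional


@dataclass
class VirtualServer:
    name: str
    address: str
    data_center: str


@dataclass
class Pool:
    name: str
    members: List[VirtualServer] = field(default_factory=list)


@dataclass
class WideIp:
    pattern: str  # an FQDN, optionally containing * or ? wildcards
    pools: List[Pool] = field(default_factory=list)

    def matches(self, query_name: str) -> bool:
        # DNS names are case-insensitive; a trailing root dot is ignored.
        return fnmatchcase(query_name.lower().rstrip("."), self.pattern.lower())


def find_wide_ip(wide_ips: List[WideIp], query_name: str) -> Optional[WideIp]:
    """Return the first Wide IP whose pattern matches the queried name."""
    for wide_ip in wide_ips:
        if wide_ip.matches(query_name):
            return wide_ip
    return None


pool = Pool("app-pool", [
    VirtualServer("vs-dc1", "192.0.2.10", "dc1"),
    VirtualServer("vs-dc2", "198.51.100.10", "dc2"),
])
wide_ips = [WideIp("*.app.example.com", [pool])]
match = find_wide_ip(wide_ips, "www.app.example.com.")
print(match.pattern if match else None)  # *.app.example.com
```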

Load Balancing Methods

When the GTM receives a DNS query that matches the Wide IP, it selects the virtual server and sends back the response. Several load balancing methods (static and dynamic) are used to select the pool; the default is round-robin. Static load balancing includes round-robin, ratio, global availability, static persist, drop packet, topology, fallback IP, and return to DNS.

Dynamic load balancing includes round trip time, completion rate, hops, least connections, packet rate, QoS, and kilobytes per second. Both methods involve predefined configurations, but dynamic considers real-time events.
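The difference between the two families can be shown with one method from each. The sketch below is illustrative only: the function names, ratios, and round-trip times are assumptions, and the product's actual selection logic is more involved.

```python
from typing import Dict


def pick_by_ratio(ratios: Dict[str, int], query_index: int) -> str:
    """Static method: a fixed, configured ratio decides how often each member is chosen."""
    # Expand {"dc1": 3, "dc2": 1} into ["dc1", "dc1", "dc1", "dc2"] and rotate through it.
    schedule = [name for name, weight in ratios.items() for _ in range(weight)]
    if not schedule:
        raise ValueError("no members with a positive ratio")
    return schedule[query_index % len(schedule)]


def pick_by_round_trip_time(rtt_ms: Dict[str, float]) -> str:
    """Dynamic method: choose the member with the lowest currently measured RTT."""
    if not rtt_ms:
        raise ValueError("no measurements available")
    return min(rtt_ms, key=lambda name: rtt_ms[name])


# Static: the outcome depends only on configuration and the query counter.
print([pick_by_ratio({"dc1": 3, "dc2": 1}, i) for i in range(4)])
# ['dc1', 'dc1', 'dc1', 'dc2']

# Dynamic: the outcome changes as measurements change.
print(pick_by_round_trip_time({"dc1": 40.0, "dc2": 12.5}))  # dc2
```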

For example, topology load balancing allows you to select a DNS query response based on geolocation information. Queries are resolved based on the resource’s physical proximity, such as LDNS country, continent, or user-defined fields. It uses an IP geolocation database to help make the decisions. It helps service users with correct weather and news based on location. All this configuration is carried out with Topology Records (TR).
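A topology decision of this kind can be sketched as a lookup over records. The sketch assumes the LDNS location has already been derived from a geolocation database; the `TopologyRecord` fields, the scores, and the pool names are hypothetical and do not mirror the actual F5 record format.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class TopologyRecord:
    ldns_field: str   # which LDNS attribute to test, e.g. "country" or "continent"
    ldns_value: str   # the value that attribute must have
    pool: str         # pool to answer from when the record matches
    score: int        # higher score wins when several records match


def select_pool(records: List[TopologyRecord], ldns_location: Dict[str, str]) -> Optional[str]:
    """Return the pool of the highest-scoring record that matches the LDNS location."""
    matching = [r for r in records if ldns_location.get(r.ldns_field) == r.ldns_value]
    if not matching:
        return None  # no record applies; a fallback method would take over
    return max(matching, key=lambda r: r.score).pool


records = [
    TopologyRecord("country", "IE", "pool-dublin", 100),
    TopologyRecord("continent", "EU", "pool-frankfurt", 50),
    TopologyRecord("continent", "NA", "pool-ashburn", 50),
]
print(select_pool(records, {"country": "IE", "continent": "EU"}))  # pool-dublin
print(select_pool(records, {"country": "DE", "continent": "EU"}))  # pool-frankfurt
```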

Anycast and GTM DNS for DC failover

Anycast means you advertise the same address from multiple locations. It is a viable option when data centers are geographically far apart. Anycast solves the DNS problem, but we also have a routing plane to consider. Getting people to another DC with Anycast can take time and effort.

It’s hard to get someone to go to data center A when the routing table says go to data center B. The best approach is to change the actual routing. As a failover mechanism, Anycast is not as graceful as DNS migration with F5 GTM.

Generally, if session disruption is a viable option, go for Anycast. Web applications would be OK with some session disruption. HTTP is stateless, and it will just resend. However, other types of applications might not be so tolerant. If session disruption is not an option and graceful shutdown is needed, you must use DNS-based load balancing. Remember that you will always have long tails due to DNS pinning in browsers, and eventually, some sessions will be disrupted.

Scale-Out Applications

The best approach is to build a scale-out application architecture. Begin with parallel application stacks in both data centers and implement global load balancing based on DNS. Start migrating users to the other data center, and once you have moved all the users, you can shut down the instance in the first data center. It is much cleaner and safer to do COLD migrations. Live migrations and HOT moves (keep sessions intact) are challenging over Layer 2 links.

You need a different IP address. You don’t want to have stretched VLANs across data centers. It’s much easier to make a COLD move, change the IP, and then use DNS. The load balancer config can be synchronized to vCenter, so the load balancer definitions are updated based on vCenter VM groups.

Another reason for failures in data centers during scale-outs could be the lack of airtight sealing, otherwise known as hermetic sealing. Not having an efficient seal brings semiconductors in contact with water vapor and other harmful gases in the atmosphere. As a result, ignitors, sensors, circuits, transistors, microchips, and much more don’t get the protection they require to function correctly.

Data and Database Challenges.

The main challenge with active-active data centers and failover events is with your actual DATA and Databases. If data center A fails, how accurate will your data be? You cannot afford to lose any data if you are running a transaction database.

Resilience is achieved by storage or database-level replication that employs log shipping or distribution between two data centers with a two-phase commit. Log shipping has a non-zero RPO, as transactions committed shortly before the failure may not have been shipped yet. A two-phase commit synchronizes multiple copies of the database but can slow down due to latency.

GTM Load Balancer is a robust solution for optimizing website performance and ensuring high availability. With its advanced features and intelligent traffic routing capabilities, businesses can enhance their online presence, improve user experience, and handle growing traffic demands. By leveraging the power of GTM Load Balancer, online companies can stay competitive in today’s fast-paced digital landscape.

Efficient communication between GTM and LTM is essential for businesses to optimize network traffic management. By collaborating seamlessly, GTM and LTM provide enhanced performance, scalability, and high availability, ensuring a seamless experience for end-users. Leveraging this powerful duo, businesses can deliver their services reliably and efficiently, meeting the demands of today’s digital landscape.

Summary: GTM Load Balancer

GTM Load Balancer is a sophisticated traffic management solution that distributes incoming user requests across multiple servers or data centers. Its primary purpose is to optimize resource utilization and enhance the user experience by intelligently directing traffic to the most suitable backend server based on predefined criteria.

Key Features and Functionality

GTM Load Balancer offers a wide range of features that make it a powerful tool for traffic management. Some of its notable functionalities include:

1. Health Monitoring: GTM Load Balancer continuously monitors the health and availability of backend servers, ensuring that only healthy servers receive traffic.

2. Load Distribution Algorithms: It employs various load distribution algorithms, such as Round Robin, Least Connections, and IP Hashing, to intelligently distribute traffic based on different factors like server capacity, response time, or geographical location.

3. Geographical Load Balancing: With geolocation-based load balancing, GTM can direct users to the nearest server based on location, reducing latency and improving performance.

4. Failover and Redundancy: In case of server failure, GTM Load Balancer automatically redirects traffic to other healthy servers, ensuring high availability and minimizing downtime.

Implementation Best Practices

Implementing a GTM Load Balancer requires careful planning and configuration. Here are some best practices to consider:

1. Define Traffic Distribution Criteria: Clearly define the criteria to distribute traffic, such as server capacity, geographical location, or any specific business requirements.

2. Set Up Health Monitors: Configure health monitors to regularly check the status and availability of backend servers. This helps in avoiding directing traffic to unhealthy or overloaded servers.

3. Fine-tune Load Balancing Algorithms: Based on your specific requirements, fine-tune the load balancing algorithms to achieve optimal traffic distribution and server utilization.

4. Regularly Monitor and Evaluate: Continuously monitor the performance and effectiveness of the GTM Load Balancer, making necessary adjustments as your traffic patterns and server infrastructure evolve.
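For the health monitors in item 2, one common design is to change a server's state only after several consecutive probe results, so a single dropped probe does not cause flapping. The following is a minimal sketch of that idea; the `fall` and `rise` thresholds and the class name are assumptions, not settings taken from any specific product.

```python
class HealthMonitor:
    """Marks a server down after `fall` failed probes in a row, up after `rise` successes."""

    def __init__(self, fall: int = 3, rise: int = 2) -> None:
        self.fall = fall
        self.rise = rise
        self.healthy = True
        self._streak = 0  # consecutive probes that disagree with the current state

    def observe(self, probe_ok: bool) -> bool:
        """Record one probe result and return the resulting health state."""
        if probe_ok == self.healthy:
            self._streak = 0  # the probe agrees with the current state
        else:
            self._streak += 1
            threshold = self.rise if probe_ok else self.fall
            if self._streak >= threshold:
                self.healthy = probe_ok
                self._streak = 0
        return self.healthy


monitor = HealthMonitor()
print([monitor.observe(ok) for ok in (False, False, False, True, True)])
# [True, True, False, False, True]
```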

Conclusion: In a world where online presence is critical for businesses, ensuring seamless traffic distribution and optimal performance is a top priority. GTM Load Balancer is a powerful solution that offers advanced functionalities, intelligent load distribution, and enhanced availability. By effectively implementing GTM Load Balancer and following best practices, businesses can achieve a robust and scalable infrastructure that delivers an exceptional user experience, ultimately driving success in today’s digital landscape.