Open vSwitch: What is OVS Bridge?

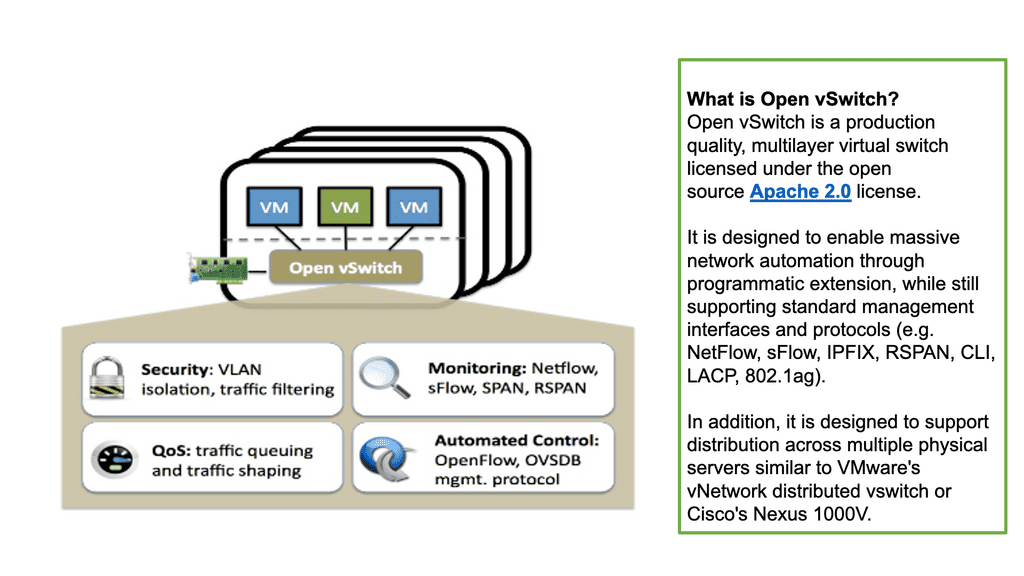

Open vSwitch (OVS) is an open-source multilayer virtual switch that provides a flexible and robust solution for network virtualization and software-defined networking (SDN) environments. Its versatility and extensive feature set make it a valuable tool for network administrators and developers. In this blog post, we will explore Open vSwitch, its key features, benefits, and use cases.

Open vSwitch is a software switch designed for virtualized environments, enabling efficient network virtualization and SDN. It operates at layer 2 (data link layer) and layer 3 (network layer), offering advanced networking capabilities that enhance performance, security, and scalability.

Highlights: Open vSwitch

- Barriers to Network Innovation

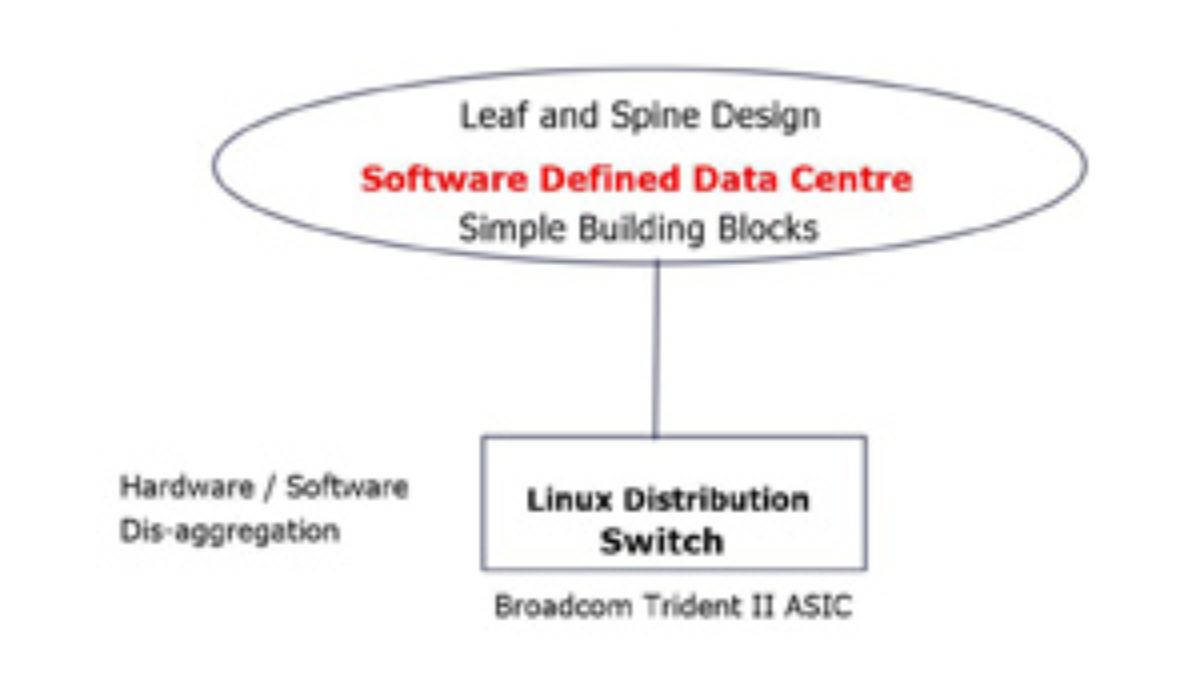

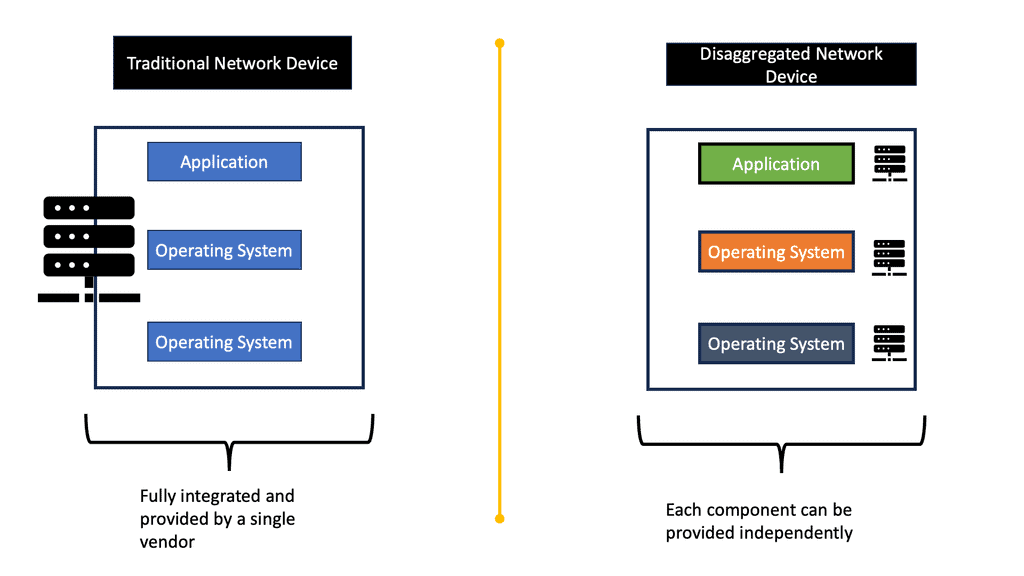

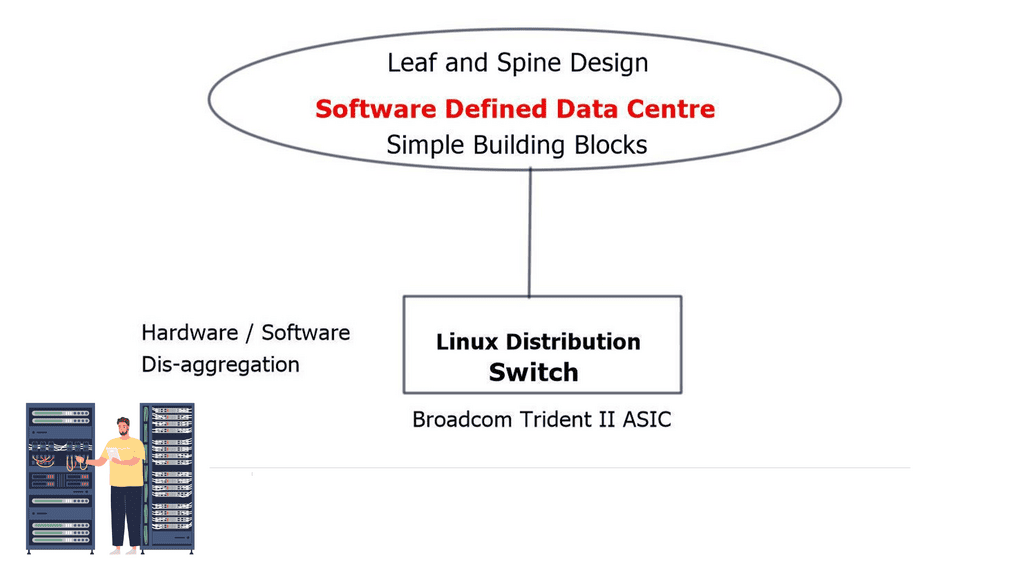

There are many barriers to network innovation, which make it difficult for outsiders to drive features and innovate. Until recently, technologies were largely proprietary and controlled by a few vendors. The lack of available tools limited network virtualization and network resource abstraction. Many new initiatives are now challenging this space, and the Open vSwitch project with the OVS bridge, a Linux Foundation project, is one of them. The closely related OpenFlow standard is managed by the Open Networking Foundation (ONF), a non-profit organization that promotes the adoption of software-defined networking through open standards and open networking.

- The Role of OVS Switch

Since its release, the OVS switch has gained popularity and is now the de-facto open standard cloud networking switch. It changes the network landscape and moves the network edge to the hypervisor. The hypervisor is the new edge of the network. It resolves the problem of network separation; cloud users can now be assigned VMs with flexible configurations. It brings new challenges to networking and security, some of which the OVS network can alleviate in conjunction with OVS rules.

Before you proceed, you may find the following posts of interest:

- Container Networking

- OpenStack Neutron

- OpenStack Neutron Security Groups

- Neutron Networks

- Neutron Network

Back to Basics With Open vSwitch

The virtual switch

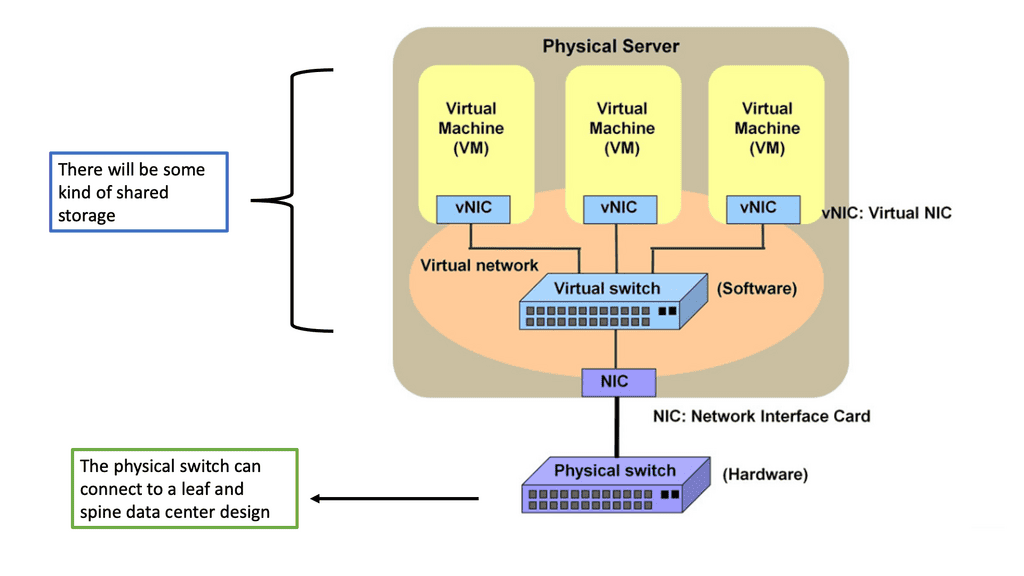

A virtual switch is a software-defined networking (SDN) device that enables the connection of multiple virtual machines within a single physical host. It is a Layer 2 device that operates within the virtualized environment and provides the same functionalities as a physical switch.

Virtual switches can be used to improve the performance and scalability of the network and are often used in cloud computing and virtualized environments. Virtual switches provide several advantages over their physical counterparts, including flexibility, scalability, and cost savings. In addition, as virtual switches are software-defined, they can be easily configured and managed by administrators.

Virtual switches are software-based switches that reside in the hypervisor kernel and provide local network connectivity between virtual machines (and now containers). They deliver functions like MAC learning and features like link aggregation, SPAN, and sFlow, just as their physical counterparts have been doing for years. While these virtual switches are often found in more comprehensive SDN and network virtualization solutions, at heart they are switches that happen to run in software.
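The MAC learning mentioned above can be illustrated with a small Python sketch. This is a toy model for intuition only, not how OVS is implemented; the class name, port numbers, and MAC addresses are all made up for the example.

```python
from typing import Dict, List


class LearningSwitch:
    """Toy Layer 2 switch: learns source MACs, floods unknown destinations."""

    def __init__(self, ports: List[int]) -> None:
        self.ports = list(ports)
        # MAC address -> port on which that address was last seen
        self.mac_table: Dict[str, int] = {}

    def receive(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        """Return the list of ports the frame is sent out of."""
        # Learn (or refresh) where the sender lives.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination sits behind the ingress port: nothing to forward.
            return []
        return [out_port]


switch = LearningSwitch(ports=[1, 2, 3])
first = switch.receive(1, "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02")
reply = switch.receive(2, "aa:aa:aa:aa:aa:02", "aa:aa:aa:aa:aa:01")
print(first)  # the first frame is flooded because the destination is unknown
print(reply)  # the reply is sent only to the port learned for the first sender
```

The first frame is flooded because nothing has been learned yet; the reply goes out of a single port because the switch has already seen the original sender.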

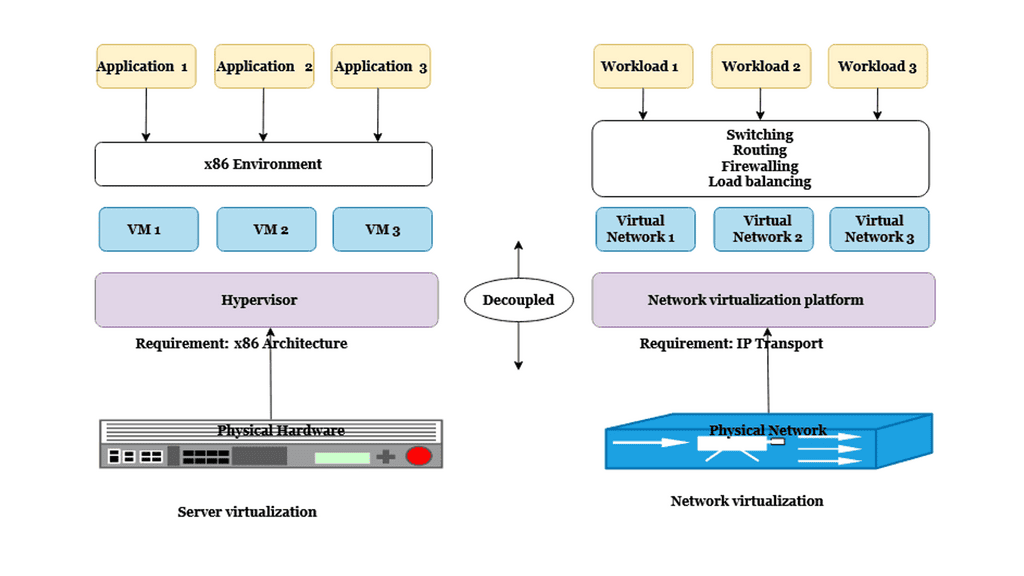

Network virtualization

Network virtualization can enable organizations to improve their network performance by allowing them to create multiple isolated networks. This can be particularly helpful when an organization’s network is experiencing congestion due to multiple applications, users, or customers. By segmenting the network into multiple isolated networks, each network can be optimized for the specific needs of its users.

In summary, network virtualization is a powerful tool that enables organizations to better control and manage their network resources while still providing the flexibility and performance needed to meet the demands of their users. By allowing organizations to create multiple isolated networks, it can help improve their networks’ security, privacy, scalability, and performance.

Highlighting the OVS bridge

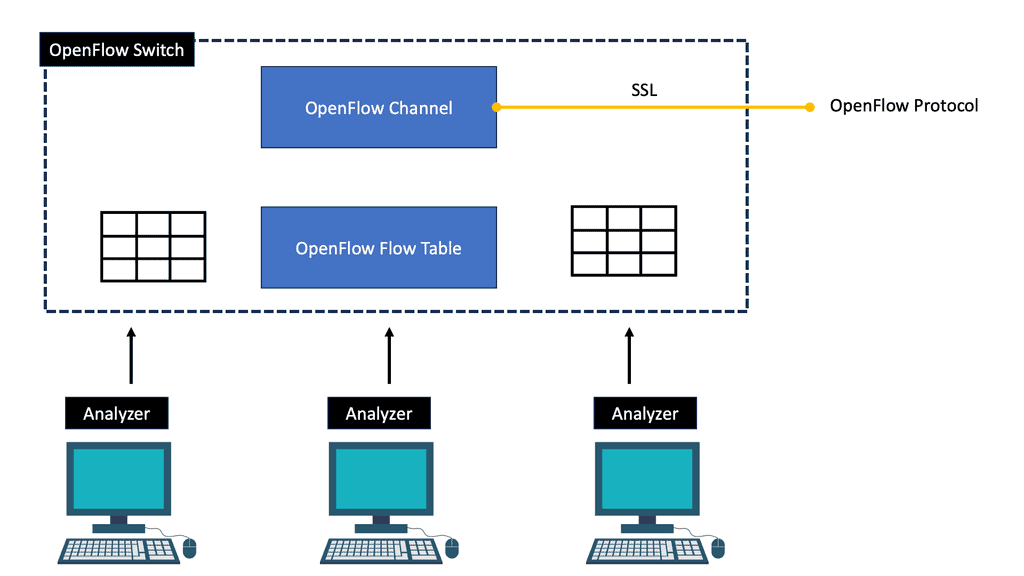

Open vSwitch is an open-source software switch designed for virtualized environments. It provides a multilayer virtual switch that enables network connectivity and communication between virtual machines running within a single host or across multiple hosts. In addition, Open vSwitch supports the OpenFlow protocol, allowing it to be integrated with other OpenFlow-compatible software components.

The software switch can also manage various virtual networking functions, including VLANs, routing, and port mirroring. Open vSwitch is highly configurable and can be used to construct complex virtual networks. It supports multiple VLANs, network isolation, and dynamic port configurations. As a result, Open vSwitch is a critical component of many virtualized environments, providing a powerful tool for managing the network environment.

- A simple flow-based switch

Open vSwitch originates from academic labs, in a project known as Ethane (SIGCOMM 2007). Ethane created a simple flow-based switch with a central controller. The central controller has end-to-end visibility, allowing policies to be applied in one place while affecting many data plane devices. In addition, central controllers make orchestrating the network much more accessible. Ethane was followed by the OpenFlow protocol (SIGCOMM CCR, 2008) and the first Open vSwitch (OVS) release in early 2009.

Key Features of Open vSwitch:

Virtual Switching: Open vSwitch allows the creation of virtual switches, enabling network administrators to define and manage multiple isolated networks on a single physical machine. This feature is particularly useful in cloud computing environments, where virtual machines (VMs) require network connectivity.

Flow Control: Open vSwitch supports flow-based packet processing, allowing administrators to define rules to handle network traffic efficiently. This feature enables fine-grained control over network traffic, implementing Quality of Service (QoS) policies, and enhancing network performance.

Network Virtualization: Open vSwitch enables network virtualization by supporting network overlays such as VXLAN, GRE, and Geneve. This allows the creation of virtual networks that span physical infrastructure, simplifying network management and enabling seamless migration of virtual machines across different hosts.

SDN Integration: Open vSwitch integrates with SDN controllers, such as OpenDaylight, using the OpenFlow protocol, enabling centralized network management and programmability. This integration empowers administrators to automate network provisioning, optimize traffic routing, and implement dynamic policies.
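To make the features above a little more concrete, here is a minimal Python sketch that only builds (and prints) the ovs-vsctl command lines for creating a bridge, adding a VXLAN tunnel port, and pointing the bridge at a controller. The bridge name `br0`, port name `vxlan0`, the 192.0.2.x addresses, the VNI, and the controller port are illustrative assumptions, not values from this post. Nothing is executed; on a host with OVS installed you could pass each list to `subprocess.run` as root.

```python
from typing import List


def add_bridge(bridge: str) -> List[str]:
    """Command that creates a virtual switch (bridge)."""
    return ["ovs-vsctl", "add-br", bridge]


def add_vxlan_port(bridge: str, port: str, remote_ip: str, vni: int) -> List[str]:
    """Command that adds a VXLAN tunnel port towards a remote host."""
    return [
        "ovs-vsctl", "add-port", bridge, port, "--",
        "set", "interface", port, "type=vxlan",
        f"options:remote_ip={remote_ip}", f"options:key={vni}",
    ]


def set_controller(bridge: str, target: str) -> List[str]:
    """Command that attaches the bridge to an OpenFlow controller."""
    return ["ovs-vsctl", "set-controller", bridge, target]


# All names and addresses below are hypothetical examples.
commands = [
    add_bridge("br0"),
    add_vxlan_port("br0", "vxlan0", "192.0.2.10", 100),
    set_controller("br0", "tcp:192.0.2.1:6653"),
]
for argv in commands:
    print(" ".join(argv))
```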

Benefits of Open vSwitch:

Flexibility: Open vSwitch offers a wide range of features and APIs, providing flexibility to adapt to various network requirements. Its modular architecture allows administrators to customize and extend functionalities per their needs, making it highly versatile.

Scalability: Open vSwitch scales as network demands grow, efficiently handling large numbers of virtual machines and network flows. Its distributed nature enables load balancing and fault tolerance, supporting high availability and performance.

Cost-Effectiveness: Being an open-source solution, Open vSwitch eliminates the need for expensive proprietary hardware. This reduces costs and enables organizations to leverage the benefits of software-defined networking without a significant investment.

Use Cases:

Cloud Computing: Open vSwitch plays a crucial role in cloud computing environments, enabling network virtualization, multi-tenant isolation, and seamless VM migration. It facilitates the creation and management of virtual networks, enhancing the agility and efficiency of cloud infrastructure.

SDN Deployments: Open vSwitch integrates seamlessly with SDN controllers, making it an ideal choice for SDN deployments. It allows for centralized network management, dynamic policy enforcement, and programmability, enabling organizations to achieve greater control and flexibility over their networks.

Network Testing and Development: Open vSwitch provides a powerful tool for testing and development. Its extensive feature set and programmability allow developers to simulate complex network topologies, test network applications, and evaluate network performance under different conditions.

Open vSwitch (OVS)

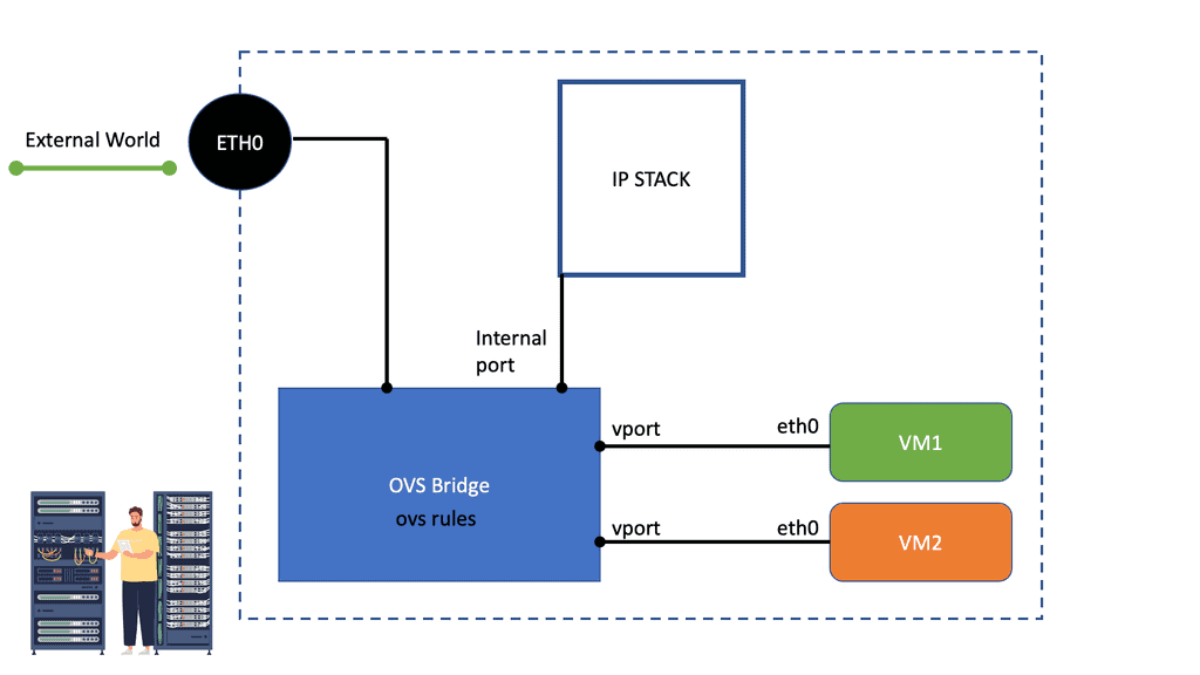

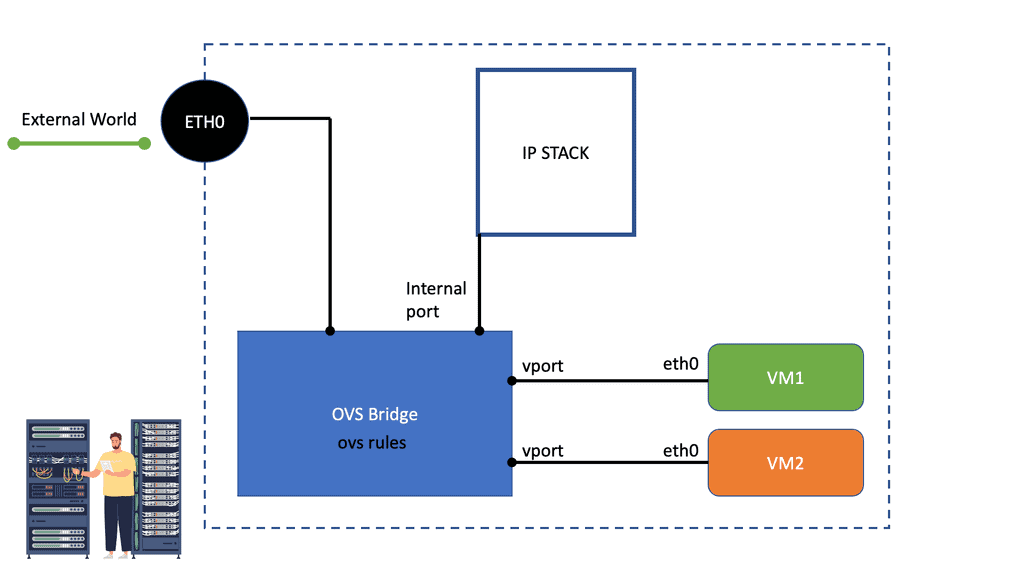

The OVS bridge is a multilayer virtual switch implemented in software. It uses virtual network bridges and flow rules to forward packets between hosts. It behaves like a physical switch, only virtualized. Namespaces and instance tap interfaces connect to what are known as OVS bridge ports.

Like a traditional switch, OVS maintains information about connected devices, such as MAC addresses. In addition, it goes beyond the monolithic Linux Bridge plugin by including overlay networking (GRE and VXLAN), which provides multi-tenancy in cloud environments.

Programming the Open vSwitch and OVS rules

The OVS switch can also be integrated with hardware and serve as the control plane for switching silicon. Programming flow rules works differently in the OVS switch than in the standard Linux Bridge. The OVS plugin does not use VLAN interfaces on the host to tag traffic. Instead, it programs OVS flow rules on the virtual switches that dictate how traffic should be manipulated before being forwarded to the exit interface. The OVS rules essentially determine how inbound and outbound traffic should be treated.
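As a rough illustration of how flow rules decide what happens to traffic, the Python sketch below models a tiny flow table with priorities and wildcard matching. It is a simplified mental model, not the OVS classifier; the `FlowRule` type, the field names, and the sample addresses are assumptions chosen for the example, and the action strings are plain labels here.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class FlowRule:
    priority: int
    match: Dict[str, object]  # field -> required value; absent fields are wildcards
    actions: List[str]


def lookup(table: List[FlowRule], packet: Dict[str, object]) -> Optional[FlowRule]:
    """Return the highest-priority rule whose match fields all equal the packet's."""
    candidates = [
        rule for rule in table
        if all(packet.get(field) == value for field, value in rule.match.items())
    ]
    return max(candidates, key=lambda rule: rule.priority, default=None)


# Hypothetical rules: a catch-all, a forwarding rule, and a higher-priority drop.
table = [
    FlowRule(priority=0, match={}, actions=["NORMAL"]),
    FlowRule(priority=100, match={"in_port": 1, "dl_type": 0x0800}, actions=["output:2"]),
    FlowRule(priority=200, match={"in_port": 1, "nw_src": "10.0.0.66"}, actions=["drop"]),
]

allowed = lookup(table, {"in_port": 1, "dl_type": 0x0800, "nw_src": "10.0.0.5"})
blocked = lookup(table, {"in_port": 1, "dl_type": 0x0800, "nw_src": "10.0.0.66"})
other = lookup(table, {"in_port": 3, "dl_type": 0x0806})
print(allowed.actions, blocked.actions, other.actions)
```

The point of the sketch is the ordering: a more specific, higher-priority rule wins over a broader one, and a low-priority catch-all handles everything else.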

OVS has two fail modes: a) standalone and b) secure. Standalone is the default mode, in which OVS acts as a learning switch. Secure mode relies on the controller to insert flow rules, so it depends on the controller being available.
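The two fail modes can be summarized in a short Python sketch. The helper names are hypothetical, and the bridge name `br0` is an assumption; the function only builds the `ovs-vsctl set-fail-mode` command line rather than running it.

```python
from typing import List

FAIL_MODES = ("standalone", "secure")


def set_fail_mode(bridge: str, mode: str) -> List[str]:
    """Build the ovs-vsctl command that sets a bridge's fail mode."""
    if mode not in FAIL_MODES:
        raise ValueError(f"unknown fail mode: {mode!r}")
    return ["ovs-vsctl", "set-fail-mode", bridge, mode]


def installs_own_flows_without_controller(mode: str) -> bool:
    """Standalone falls back to learning-switch behaviour; secure waits for the controller."""
    if mode not in FAIL_MODES:
        raise ValueError(f"unknown fail mode: {mode!r}")
    return mode == "standalone"


# "br0" is a hypothetical bridge name.
print(" ".join(set_fail_mode("br0", "secure")))
for mode in FAIL_MODES:
    print(mode, installs_own_flows_without_controller(mode))
```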

Open vSwitch Flow Forwarding.

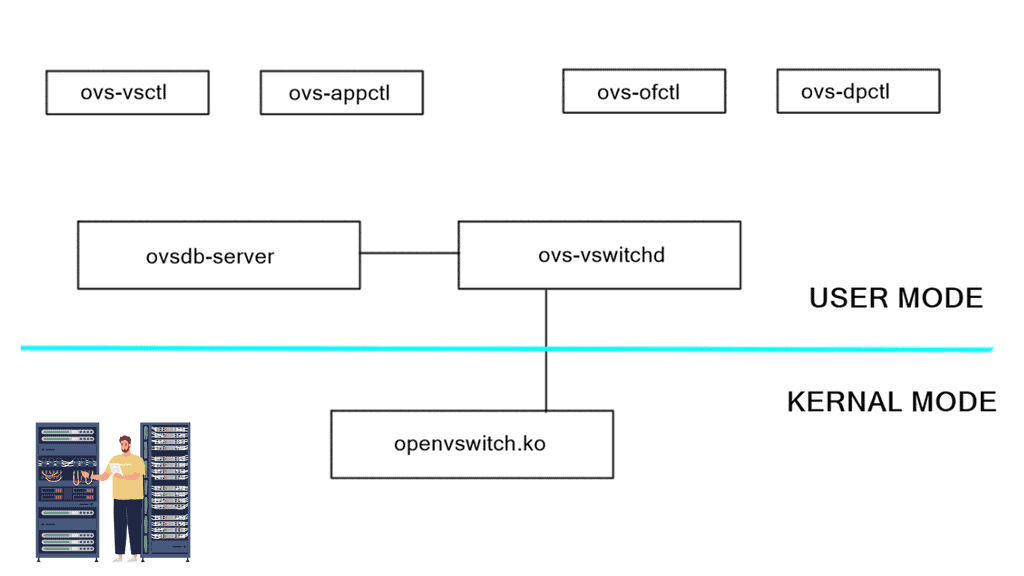

Kernel mode, known as the “fast path,” is where the switching happens. If you relate this to the hardware components of a physical device, kernel mode maps to the ASIC. User mode is known as the “slow path.” If a new flow arrives that the kernel doesn’t know about, user mode is instructed to engage. Once the flow is active, user mode should not be invoked again for it, so you may take a performance hit only on the first packet.

The first packet in a flow goes to the userspace ovs-vswitchd, and subsequent packets hit cached entries in the kernel. When the kernel module receives a packet, the cache is inspected to determine if there is a flow entry. The associated action is carried out on the packet if a corresponding flow entry is found in the cache.

This could be forwarding the packet or modifying its headers. If no cache entry is found, the packet is passed to the userspace ovs-vswitchd process for processing. Subsequent packets are processed in the kernel without userspace interaction. OVS processing is now faster than the original Linux Bridge. It also has good support for megaflows and multithreading.
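The fast path and slow path interaction described above can be sketched in Python as a cache in front of a slower decision function. This is a conceptual model only; the `Datapath` class, the simplified flow key, and the `slow_path` callback are invented for illustration and ignore details such as cache eviction and megaflow wildcarding.

```python
from typing import Callable, Dict, Tuple

FlowKey = Tuple[str, str]  # simplified flow key: (source, destination)


class Datapath:
    """Toy model of the kernel flow cache with upcalls to userspace."""

    def __init__(self, slow_path: Callable[[FlowKey], str]) -> None:
        self.cache: Dict[FlowKey, str] = {}  # stands in for the kernel flow cache
        self.slow_path = slow_path           # stands in for ovs-vswitchd
        self.upcalls = 0

    def process(self, key: FlowKey) -> str:
        action = self.cache.get(key)
        if action is None:
            # Cache miss: hand the packet to userspace and remember its answer.
            self.upcalls += 1
            action = self.slow_path(key)
            self.cache[key] = action
        return action


def decide(key: FlowKey) -> str:
    # Hypothetical policy: forward everything out of port 2.
    return "output:2"


dp = Datapath(decide)
results = [dp.process(("10.0.0.1", "10.0.0.2")) for _ in range(3)]
print(results, dp.upcalls)
```

Three packets of the same flow trigger a single upcall; the remaining two are served from the cache.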

OVS component architecture

There are several CLI tools to interface with the various components:

| CLI tool | OVS component |
| --- | --- |
| ovs-vsctl | Manages the state in the ovsdb-server |
| ovs-appctl | Sends commands to the ovs-vswitchd |
| ovs-dpctl | Configures the kernel module |
| ovs-ofctl | Works with the OpenFlow protocol |
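As a quick orientation, the Python sketch below pairs each CLI tool with one read-only command that is commonly used for inspection. The bridge name `br0` is an assumption, and the commands are only printed, not run.

```python
from typing import Dict, List

# One inspection command per tool; "br0" is a hypothetical bridge name.
INSPECTION_COMMANDS: Dict[str, List[str]] = {
    "ovs-vsctl": ["ovs-vsctl", "show"],               # configuration stored in ovsdb-server
    "ovs-appctl": ["ovs-appctl", "fdb/show", "br0"],  # MAC table held by ovs-vswitchd
    "ovs-dpctl": ["ovs-dpctl", "show"],               # kernel datapath summary
    "ovs-ofctl": ["ovs-ofctl", "dump-flows", "br0"],  # OpenFlow rules on the bridge
}

for tool, argv in INSPECTION_COMMANDS.items():
    print(f"{tool}: {' '.join(argv)}")
```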

You may have an off-host component, such as the controller. It communicates and acts as a manager of a set of OVS components in a cluster. The controller has a global view and manages all the components. An example controller is OpenDaylight. OpenDaylight promotes the adoption of SDN and serves as a platform for Network Function Virtualization (NFV).

NFV virtualizes network services instead of using physical, function-specific hardware. A northbound interface exposes the controller to network applications, and southbound interfaces connect it to the OVS components.

Ryu is another example. It provides a framework for building SDN controllers, is written in Python, and supports OpenFlow, NETCONF, and OF-Config.

There are many interfaces used to communicate across and between components. The database has a management protocol known as OVSDB, RFC 7047. OVS has a local database server on every physical host. It maintains the configuration of the virtual switches. Netlink communicates between user and kernel modes and between different userspace processes. It is used between ovs-vswitchd and openvswitch.ko and is designed to transfer miscellaneous networking information.
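The OVSDB management protocol is JSON-RPC based, so a request is just a small JSON object. The Python sketch below builds two requests defined in RFC 7047, `list_dbs` and `get_schema`; the helper name `ovsdb_request` and the request ids are assumptions for the example, and actually sending the messages to an ovsdb-server is left out.

```python
import json
from typing import Any, List


def ovsdb_request(method: str, params: List[Any], request_id: int) -> str:
    """Serialize a JSON-RPC request in the shape used by OVSDB (RFC 7047)."""
    return json.dumps({"method": method, "params": params, "id": request_id})


# Ask which databases the server hosts, then request one database's schema.
list_dbs = ovsdb_request("list_dbs", [], 0)
get_schema = ovsdb_request("get_schema", ["Open_vSwitch"], 1)

print(list_dbs)
print(get_schema)
```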

OpenFlow and the OVS bridge

OpenFlow can also be used to talk to and program the OVS. The ovsdb-server interfaces with an external controller (if used) and with ovs-vswitchd. Its purpose is to store configuration information for the switches, and its state is persistent.

The central CLI tool for the ovsdb-server is ovs-vsctl. The ovs-vswitchd daemon interfaces with an external controller, with the kernel via Netlink, and with the ovsdb-server. Its purpose is to manage multiple bridges, and it is involved in the data path. It’s a core system component of OVS. Two CLI tools, ovs-ofctl and ovs-appctl, are used to interface with it.

Linux containers and networking

OVS can be used with Linux and Docker containers. Containers provide a layer of isolation that reduces the amount of coordination needed between people. They make it easy to build out example scenarios. Starting a container takes milliseconds compared to the minutes of a virtual machine.

Deploying container images is much faster if less data needs to travel across the fabric. Elastic applications with frequent state changes and dynamic resource allocation can be built more efficiently with containers.

Linux and Docker containers represent a fundamental shift in how we consume and manage applications. Libvirt, a virtualization management toolkit for Linux, is one tool that can be used to work with containers. Linux containers rely on process isolation in Linux, so instead of running a full-blown VM, you can run a container that shares the host kernel but is otherwise isolated.

Each container has its own view of networking and processes. Containers isolate instances without the overhead of a VM. They are a lightweight way of running workloads on a host and build on mechanisms in the kernel.
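One common way to give an isolated network namespace a connection to an OVS bridge is a veth pair: one end stays on the host and becomes a bridge port, the other end moves into the namespace. The Python sketch below only assembles the command lines for that; the namespace, interface, and bridge names are hypothetical, and running the commands would require root on a host with iproute2 and OVS installed.

```python
from typing import List


def connect_namespace(bridge: str, namespace: str, host_if: str, ns_if: str) -> List[List[str]]:
    """Commands that wire a network namespace to an OVS bridge via a veth pair."""
    return [
        ["ip", "netns", "add", namespace],
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", ns_if],
        ["ip", "link", "set", ns_if, "netns", namespace],
        ["ovs-vsctl", "add-port", bridge, host_if],
        ["ip", "link", "set", host_if, "up"],
        ["ip", "netns", "exec", namespace, "ip", "link", "set", ns_if, "up"],
    ]


# All names below are illustrative assumptions.
steps = connect_namespace("br0", "ns1", "veth-host", "veth-ns")
for argv in steps:
    print(" ".join(argv))
```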

Source versus package install

There are two paths for installation: a) source code and b) package installation based on your Linux distribution. The source code install is primarily for developers and is helpful if you are trying to build an extension or are focusing on hardware component integration. Before cloning the repo, install any build dependencies, such as git, autoconf, and libtool.

Then you pull the source from GitHub with the “clone” command: `git clone https://github.com/openvswitch/ovs`. Running from source is a lot more difficult than installing through your distribution’s packages. All the dependencies are handled for you when you install from packages.
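For the source path, the usual sequence after cloning is to bootstrap, configure, build, and install. The Python sketch below lists those steps as data and prints them; it assumes the checkout lands in a directory named `ovs` and that default configure options are acceptable, and it does not run anything. Consult the installation guide in the repository for the options that suit your system.

```python
from typing import List, Tuple

# (working directory, command) pairs; "ovs" is the directory created by git clone.
BUILD_STEPS: List[Tuple[str, List[str]]] = [
    (".", ["git", "clone", "https://github.com/openvswitch/ovs"]),
    ("ovs", ["./boot.sh"]),        # generates the configure script
    ("ovs", ["./configure"]),      # default options assumed here
    ("ovs", ["make"]),
    ("ovs", ["make", "install"]),  # typically needs root privileges
]

for cwd, argv in BUILD_STEPS:
    print(f"(in {cwd}) {' '.join(argv)}")
```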

Conclusion:

Open vSwitch is a feature-rich and highly flexible virtual switch that empowers network administrators and developers to build efficient and scalable networks. Its support for network virtualization, flow control, and SDN integration makes it a valuable tool in cloud computing environments, SDN deployments, and network testing and development. By leveraging Open vSwitch, organizations can unlock the full potential of network virtualization and software-defined networking, enhancing their network capabilities and driving innovation in the digital era.