Implementing Network Security

In today's interconnected world, where technology reigns supreme, the need for robust network security measures has become paramount. This blog post aims to provide a detailed and engaging guide to implementing network security. By following these steps and best practices, individuals and organizations can fortify their digital infrastructure against potential threats and protect sensitive information.

Network security is the practice of protecting networks and their infrastructure from unauthorized access, misuse, or disruption. It encompasses various technologies, policies, and practices aimed at ensuring the confidentiality, integrity, and availability of data. By employing robust network security measures, organizations can safeguard their digital assets against cyber threats.

In practice, network security combines hardware and software components with proactive policies and procedures aimed at mitigating risks such as unauthorized access, data breaches, and other malicious activity. By understanding its fundamental principles, organizations can lay the foundation for a robust and resilient security infrastructure.

Before implementing network security measures, it is crucial to conduct a comprehensive assessment of potential risks and vulnerabilities. This involves identifying potential entry points, evaluating existing security measures, and analyzing the potential impact of security breaches. By conducting a thorough risk assessment, organizations can develop an effective security strategy tailored to their specific needs.

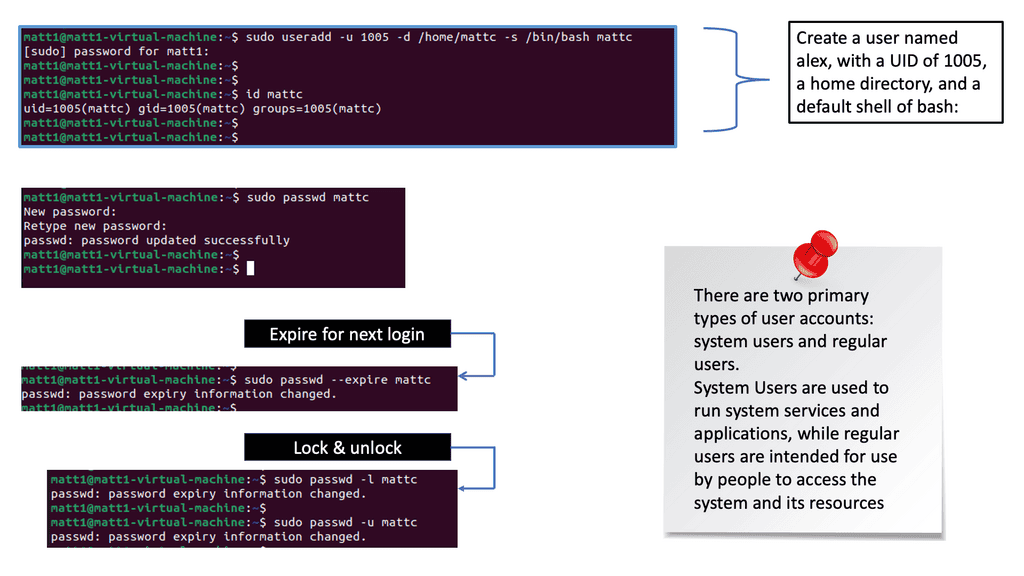

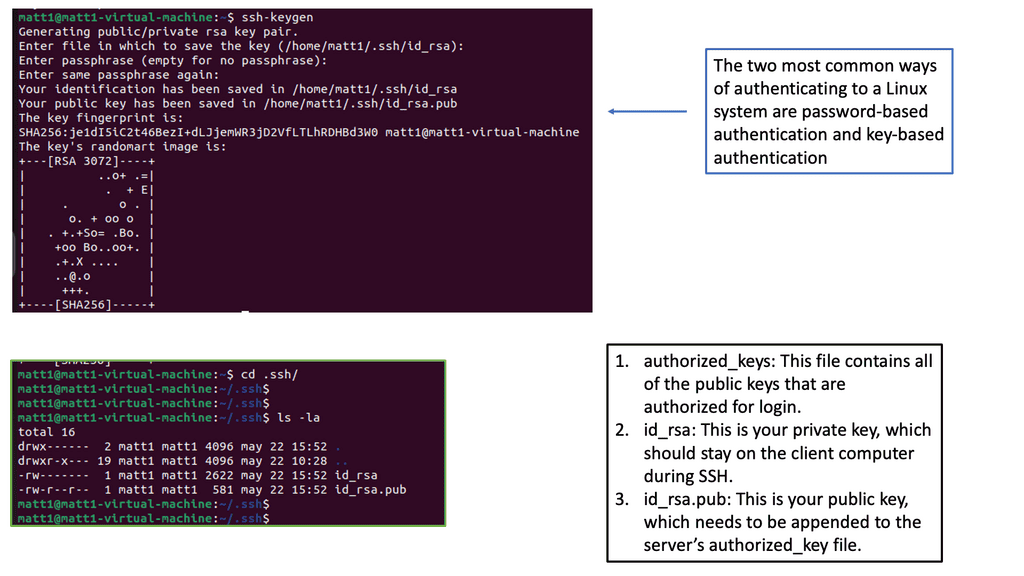

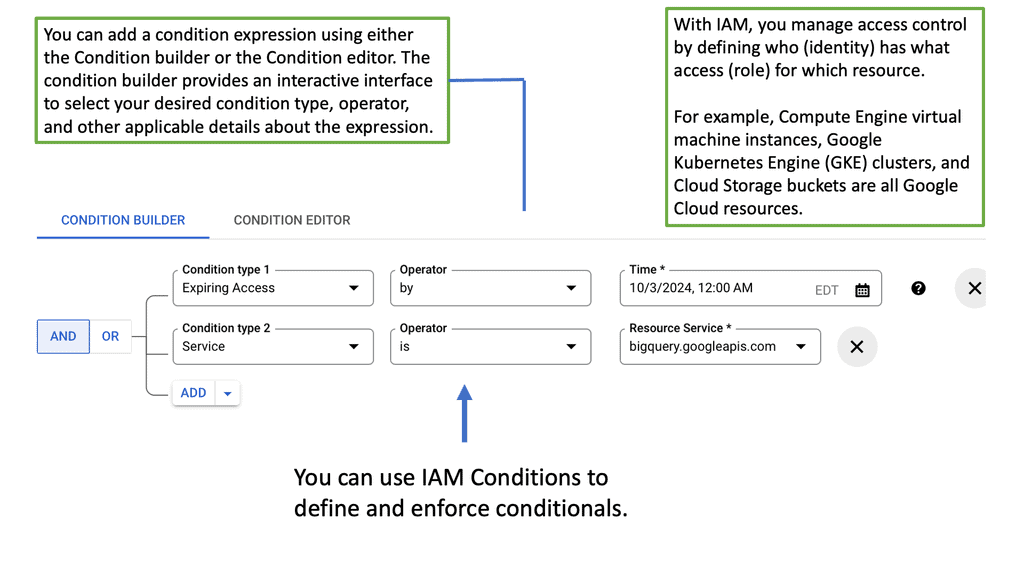

Implementing Strong Access Controls: One of the fundamental aspects of network security is controlling access to sensitive information and resources. This includes implementing strong authentication mechanisms, such as multi-factor authentication, and enforcing strict access control policies. By ensuring that only authorized individuals have access to critical systems and data, organizations can significantly reduce the risk of unauthorized breaches.
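Multi-factor authentication often pairs a password with a time-based one-time password (TOTP) generated by an authenticator app. The sketch below implements the HOTP and TOTP algorithms from RFC 4226 and RFC 6238 using only Python's standard library. The function names, the 30-second time step, and the one-step clock-drift window are illustrative assumptions; a production system should use a vetted library and store the shared secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble picks 4 bytes
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30,
         digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1, digits: int = 6) -> bool:
    """Accept codes from the current step and +/- `window` steps (assumed drift policy)."""
    now = time.time() if at is None else at
    current = int(now // step)
    for counter in range(current - window, current + window + 1):
        if counter < 0:
            continue
        # Constant-time comparison avoids leaking how many digits matched
        if hmac.compare_digest(hotp(secret, counter, digits), submitted):
            return True
    return False
```

With the RFC 6238 SHA-1 test secret `b"12345678901234567890"`, `totp(secret, at=59, digits=8)` reproduces the published test value `94287082`.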

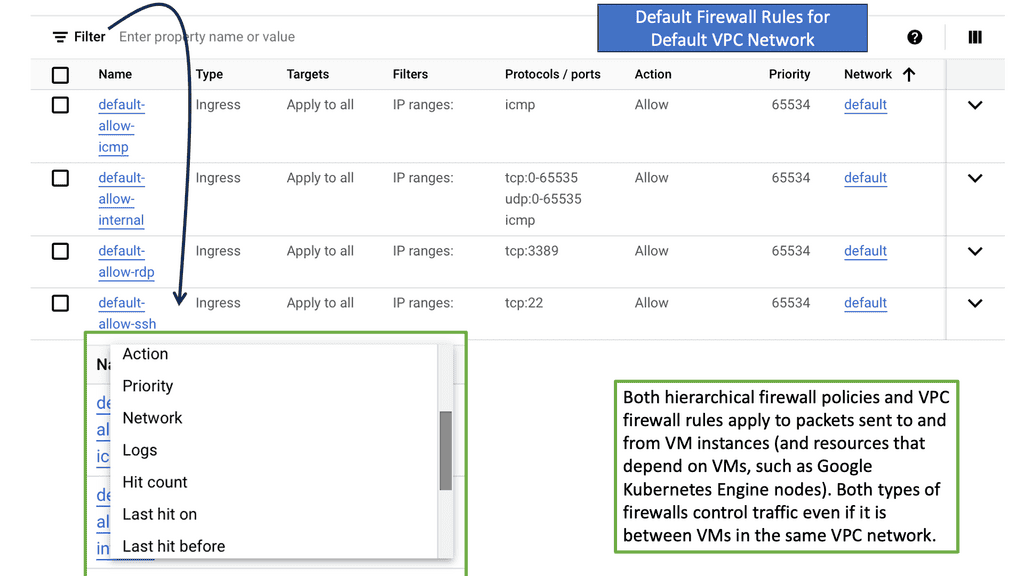

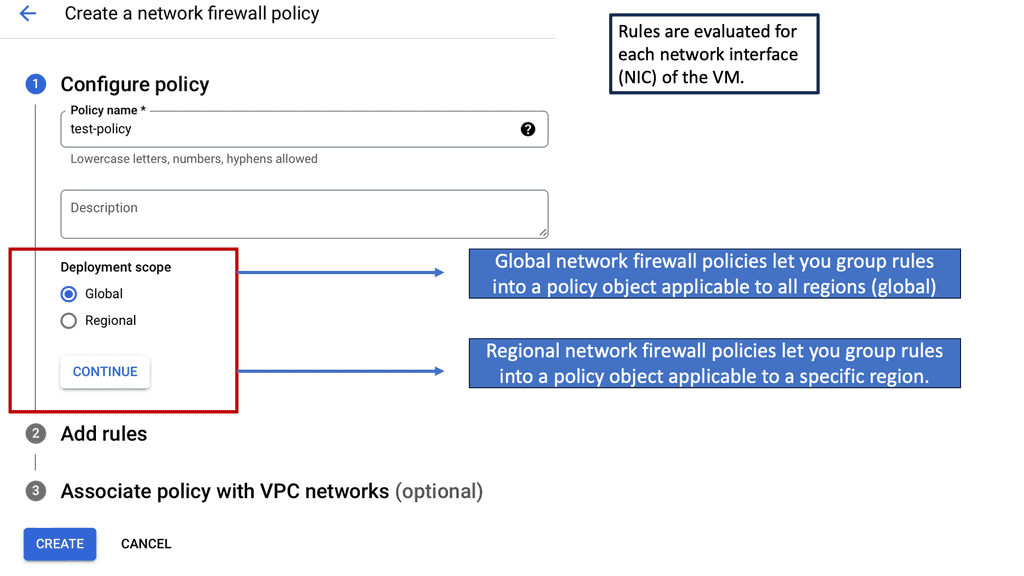

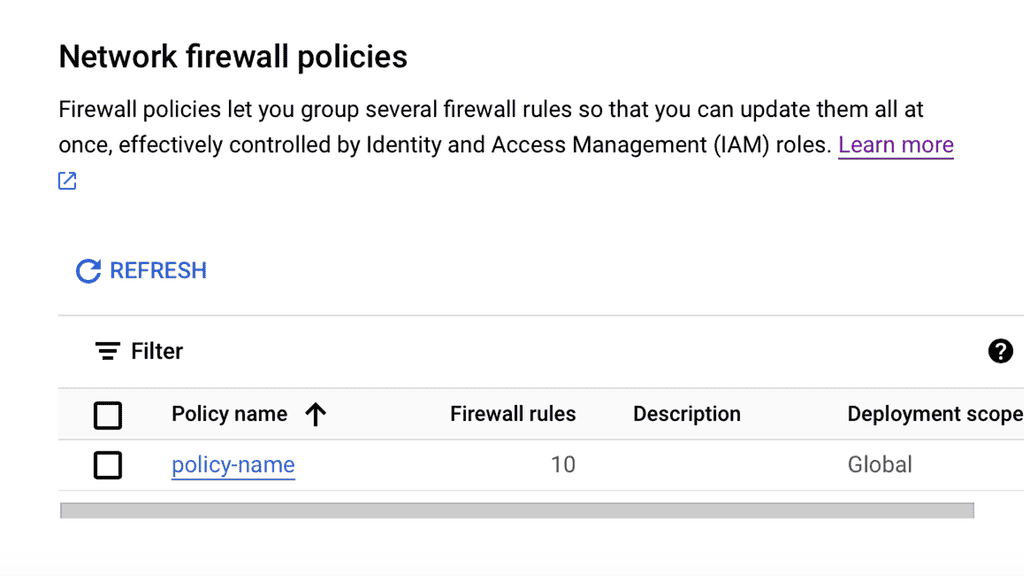

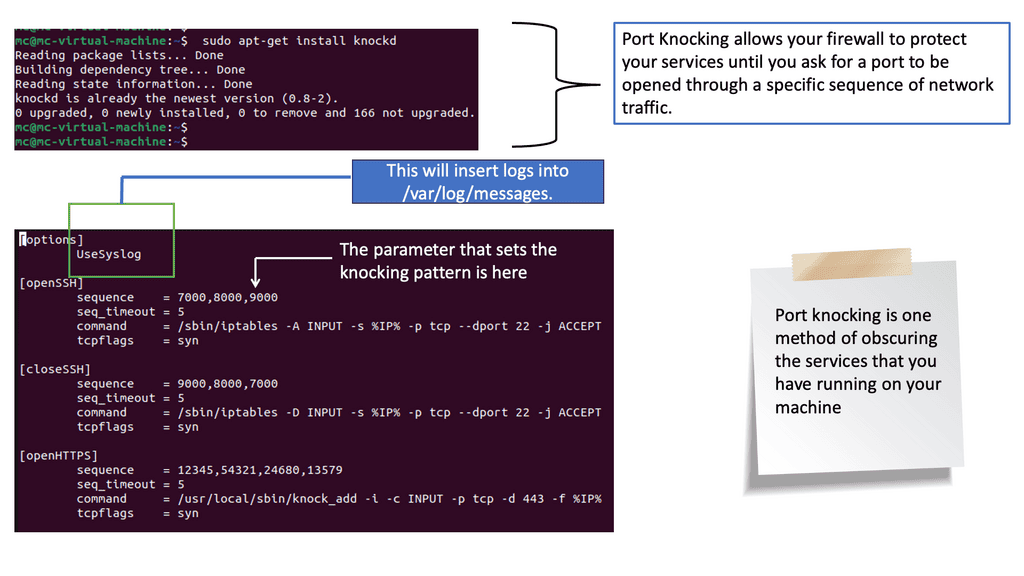

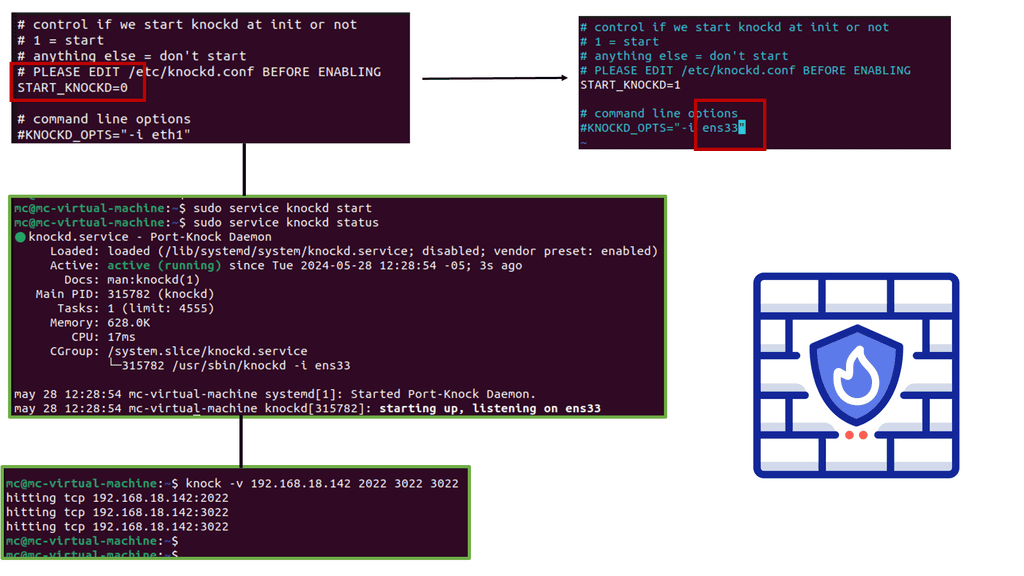

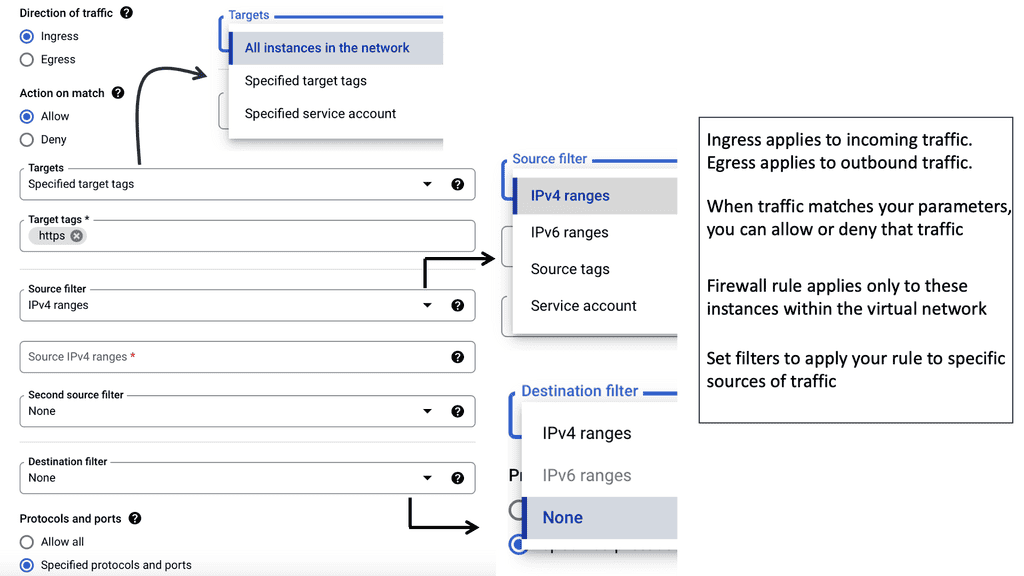

Deploying Firewalls and Intrusion Detection Systems: Firewalls and intrusion detection systems (IDS) are essential components of network security. Firewalls act as a barrier between internal and external networks, monitoring and filtering incoming and outgoing traffic. IDS, on the other hand, analyze network traffic for suspicious activities or patterns that may indicate a potential breach. By deploying these technologies, organizations can detect and prevent unauthorized access attempts.

Regular Updates and Patches: Network security is an ongoing process that requires constant attention and maintenance. Regular updates and patches play a crucial role in addressing vulnerabilities and fixing known security flaws. It is essential to keep all network devices, software, and firmware up to date to ensure optimal protection against emerging threats.

Matt Conran

Highlights: Implementing Network Security

Understanding Network Security

Network security refers to the practices and measures used to prevent unauthorized access, misuse, modification, or denial of computer networks and their resources. It involves implementing various protocols, technologies, and best practices to ensure data confidentiality, integrity, and availability. By understanding network security fundamentals, individuals and organizations can make informed decisions to protect their networks.

Key Points:

A) Computer technology is changing: Computer networking technology is evolving and improving faster than ever before. Most organizations and individuals now have access to wireless connectivity. However, malicious hackers increasingly use any means available to steal identities, intellectual property, and money.

B) Internal and External Threats: Many organizations spend little time, money, or effort protecting their assets during the initial network installation. Both internal and external threats can cause a catastrophic system failure or compromise. Depending on the severity of the security breach, a company may even be forced to close its doors. Business and individual productivity would be severely hindered without network security.

C) The Role of Trust: Trust must be established for a network to be secure. An organization’s employees assume all computers and network devices are trustworthy. However, it is essential to note that not all trusts are created equal. Different layers of trust can (and should) be used.

D) Privileges and permissions: Privileges and permissions are granted to those with a higher trust level. Privileges define what an individual is allowed to do on a network or system (for example, administrative rights), while permissions define which specific assets the individual may access and how (for example, read-only access to a file share). Violations of trust are dealt with by removing the violator's access to the secure environment. For example, an organization may terminate an untrustworthy employee or replace a defective operating system.

**Networking is Complex**

Our challenge is that the network is complex and constantly changing. We have seen this with WAN monitoring and the issues that can arise from routing convergence. The change may not come from a hardware refresh, but the network constantly changes from a software perspective and needs to remain dynamic. If you don't have complete visibility while the network changes, you will end up with security blind spots.

**Security Tools**

Security tools are already in place, but they need to be better integrated. Here, we can look to the network to provide that additional integration point. A network packet broker can sit in the middle and feed each security tool with data that has already been filtered and optimized for that particular device, reducing false positives.

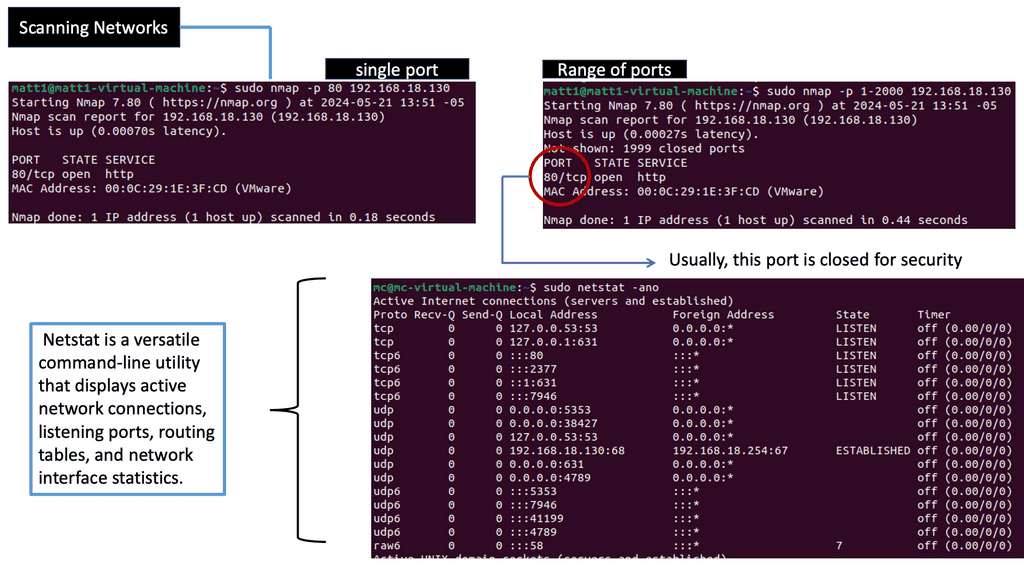

**Port Scanning**

When interacting with target systems for the first time, it is standard practice to perform a port scan. A port scan identifies open ports on the target network. Port scans aren't conducted for their own sake: they reveal which applications and services are listening on those ports. The objective is always to identify security issues on your target network so your client or employer can improve their security posture, and to identify vulnerabilities, we first need to identify the applications.
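The simplest form of port scan is a TCP connect scan: it attempts a full TCP handshake on each port and records which ports accept the connection. The sketch below uses Python's standard `socket` module; the function name and the default timeout are illustrative choices, and you should only scan systems you are authorized to test.

```python
import socket
from typing import Iterable, List


def tcp_connect_scan(host: str, ports: Iterable[int], timeout: float = 0.5) -> List[int]:
    """Return the ports on `host` that complete a TCP handshake."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            try:
                # connect_ex returns 0 on success instead of raising on refusal
                if sock.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # timeouts or resolution errors: treat the port as not open
    return open_ports
```

For example, `tcp_connect_scan("127.0.0.1", range(1, 1025))` lists the well-known ports open on the local machine. Dedicated scanners such as Nmap add faster techniques and service fingerprinting on top of this basic idea.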

**Follow a framework**

A business needs to follow a methodology that provides additional guidance, and adopting a framework can help. Using NIST's Cybersecurity Framework, companies can identify the areas in which to implement security controls. The framework is built around five core functions: Identify, Protect, Detect, Respond, and Recover. (Version 2.0, released in 2024, adds a sixth function, Govern.)

Improving Network Security

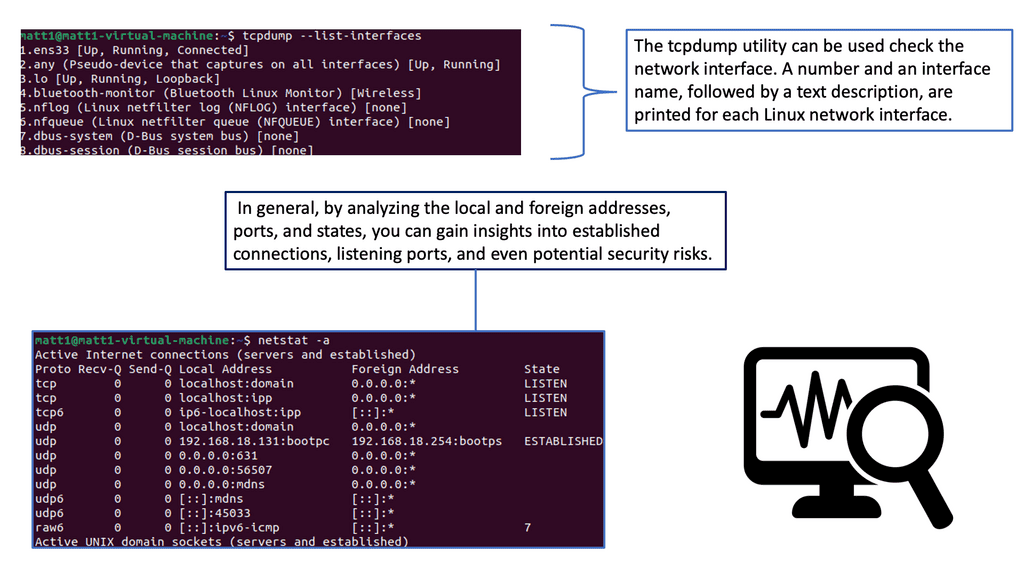

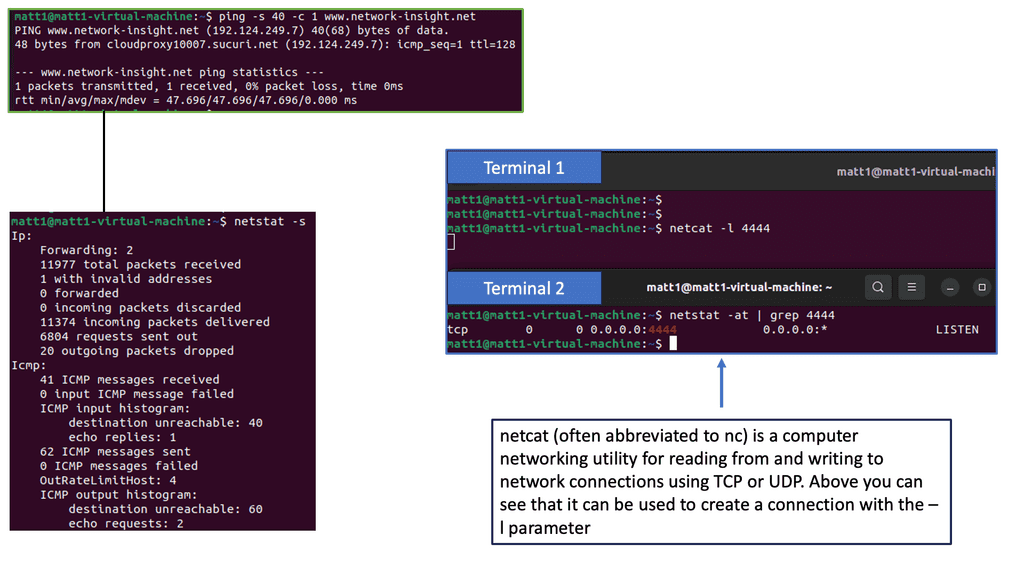

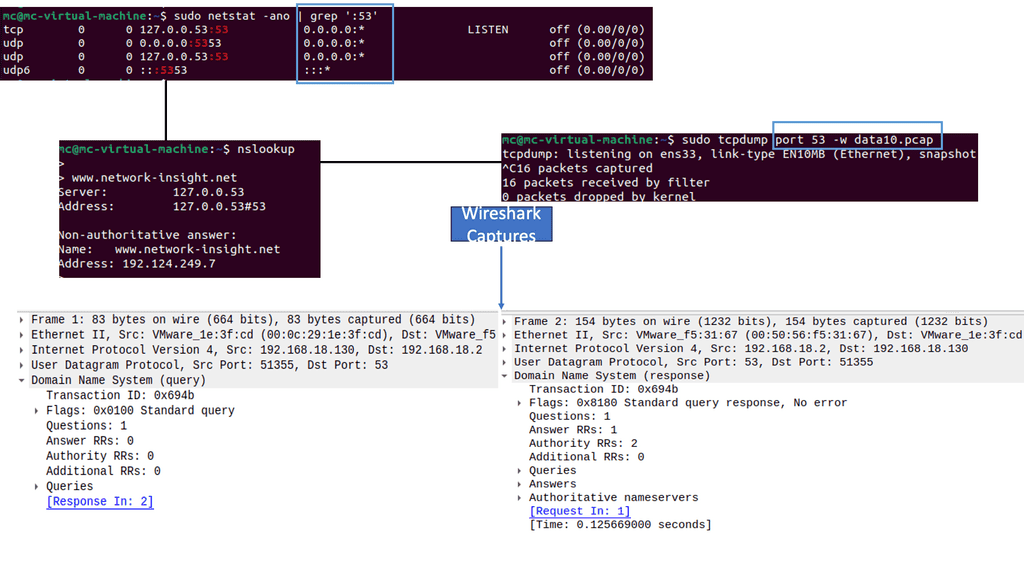

Network Monitoring & Scanning

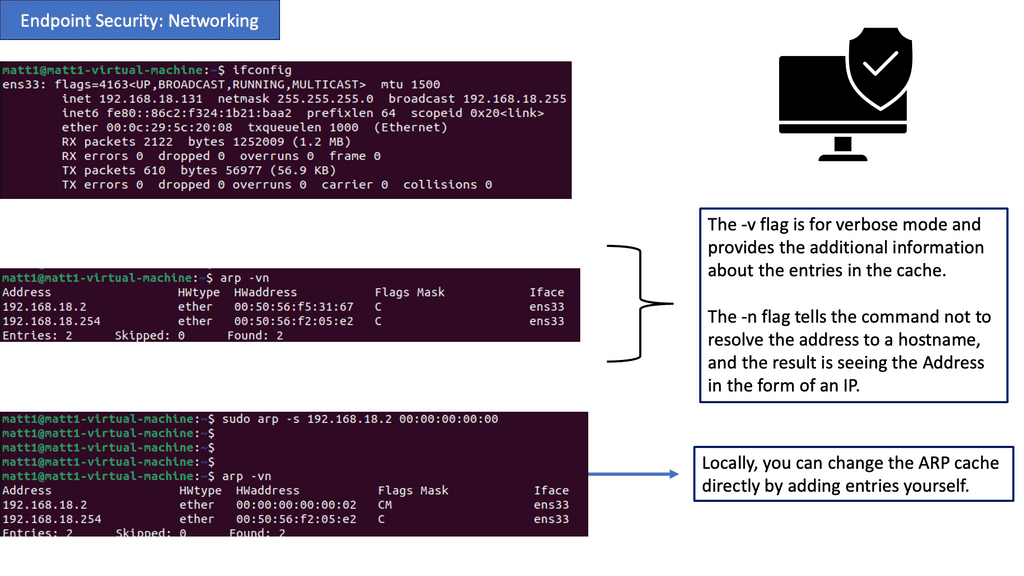

Network monitoring involves continuously surveilling and analyzing network activities, including traffic, devices, and applications. It provides real-time visibility into network performance metrics, such as bandwidth utilization, latency, and packet loss. By monitoring these key indicators, IT teams can identify potential bottlenecks, troubleshoot issues promptly, and optimize network resources.
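The metrics named above are straightforward to derive from raw measurements. The sketch below assumes a hypothetical list of probe round-trip times in milliseconds, with `None` marking a probe that received no reply, and computes packet loss, average latency, and jitter. Jitter is taken here as the mean absolute difference between consecutive round-trip times, a simplification rather than the smoothed estimator defined in RFC 3550. A second helper converts two interface byte-counter readings into link utilization.

```python
from statistics import mean
from typing import List, Optional


def summarize_probes(rtts_ms: List[Optional[float]]) -> dict:
    """Summarize probe results; None means the probe was lost."""
    if not rtts_ms:
        raise ValueError("no probes to summarize")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    avg = mean(received) if received else None
    # Simplified jitter: mean absolute change between consecutive successful RTTs
    diffs = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return {"loss_pct": loss_pct, "avg_ms": avg, "jitter_ms": jitter}


def utilization_pct(bytes_delta: int, interval_s: float, link_bps: float) -> float:
    """Link utilization from the byte-counter difference over one polling interval."""
    return 100.0 * (bytes_delta * 8) / (interval_s * link_bps)
```

For example, probes of `[10.0, 12.0, None, 14.0]` give 25% loss, a 12 ms average, and 2 ms of jitter under this definition.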

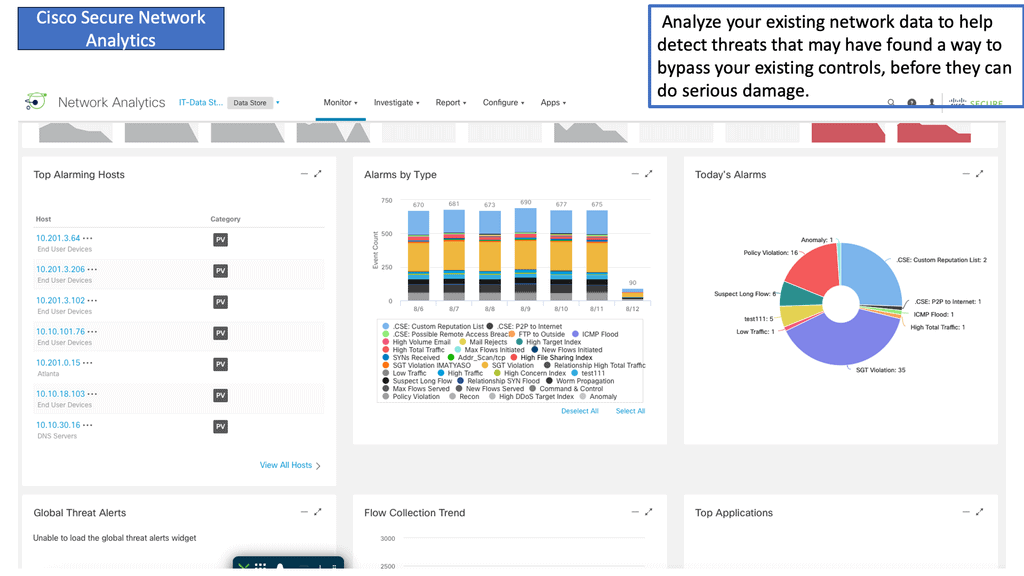

Identifying and mitigating security threats

Network monitoring plays a crucial role in identifying and mitigating security threats. With cyberattacks becoming increasingly sophisticated, organizations must be vigilant in detecting suspicious activities. Network administrators can quickly identify potential security breaches, malicious software, or unauthorized access attempts by monitoring network traffic and utilizing intrusion detection systems. This proactive approach helps strengthen network security and prevent potential data breaches.

Understanding Network Scanning

Network scanning is the proactive process of discovering and assessing network devices, systems, and vulnerabilities. It systematically examines the network to identify potential security weaknesses, misconfigurations, or unauthorized access points. By comprehensively scanning the network, organizations can identify and mitigate potential risks before malicious actors exploit them.

Network Scanning Methods

Several methods are employed in network scanning, each serving a specific purpose. Port scanning, for instance, focuses on identifying open ports and services running on targeted systems.

On the other hand, vulnerability scanning aims to detect known vulnerabilities within network devices and applications. Additionally, network mapping provides a topological overview of the network, enabling administrators to identify potential entry points for intruders.

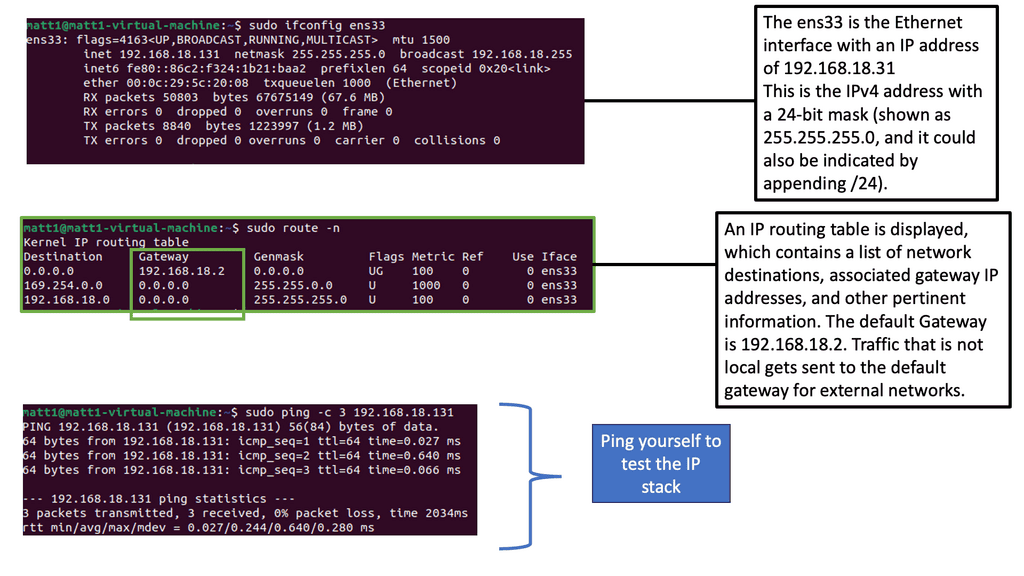

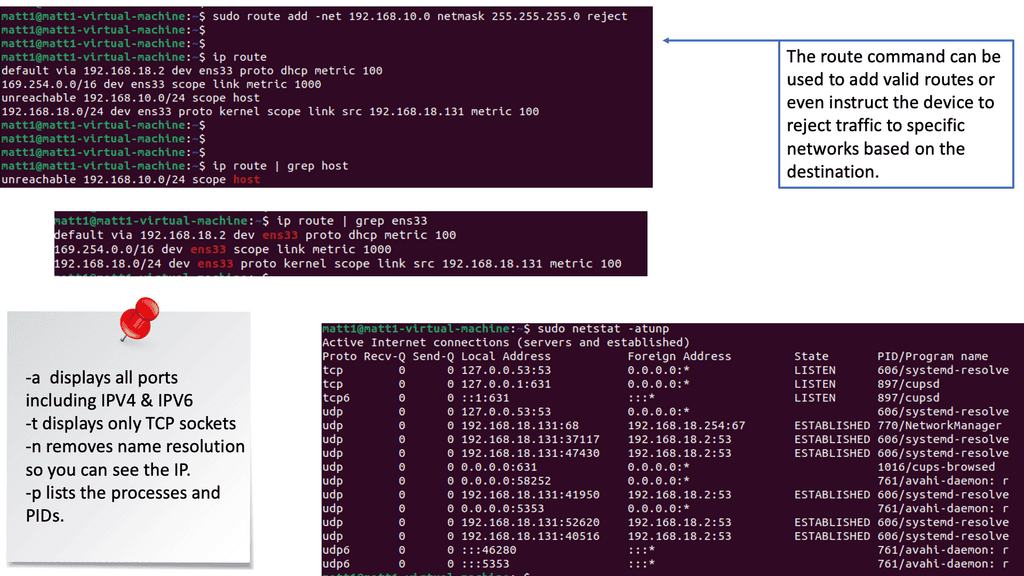

Identifying Networks

To troubleshoot the network effectively, you can use a range of tools. Some are built into the operating system, while others must be downloaded and run. Depending on your experience, you may choose a top-down approach (starting at the application layer) or a bottom-up approach (starting at the physical layer).

**Common Network Security Components**

Firewalling: Firewalls are a crucial barrier between an internal network and the external world. They monitor and control incoming and outgoing network traffic based on predetermined security rules. By analyzing packet data, firewalls can identify and block potential threats, such as malicious software or unauthorized access attempts. Implementing a robust firewall solution is essential to fortify network security.
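Most firewalls evaluate their rules in order, apply the first rule that matches, and fall back to a default policy when nothing matches. The sketch below models that behaviour with Python's `ipaddress` module. The `Rule` structure, the example rules, and the default policy are hypothetical; this is a conceptual model that ignores connection state, not how any particular firewall is implemented.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Rule:
    action: str                      # "allow" or "deny"
    direction: str                   # "in" or "out"
    src: str = "0.0.0.0/0"           # source network in CIDR notation
    dst_port: Optional[int] = None   # None matches any destination port
    proto: str = "any"               # "tcp", "udp", or "any"

    def matches(self, direction, src_ip, dst_port, proto) -> bool:
        return (self.direction == direction
                and ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.src)
                and self.dst_port in (None, dst_port)
                and self.proto in ("any", proto))


def evaluate_firewall(rules: List[Rule], default: Dict[str, str],
                      direction, src_ip, dst_port, proto) -> str:
    """Return the action of the first matching rule, else the default policy."""
    for rule in rules:
        if rule.matches(direction, src_ip, dst_port, proto):
            return rule.action
    return default[direction]


# Hypothetical policy: block one network, then allow SSH and HTTPS inbound
EXAMPLE_RULES = [
    Rule("deny", "in", src="203.0.113.0/24"),
    Rule("allow", "in", dst_port=22, proto="tcp"),
    Rule("allow", "in", dst_port=443, proto="tcp"),
]
DEFAULT_POLICY = {"in": "deny", "out": "allow"}
```

Because the deny rule comes first, HTTPS from `203.0.113.9` is blocked even though a later rule allows port 443; moving it below the allow rules would change the outcome.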

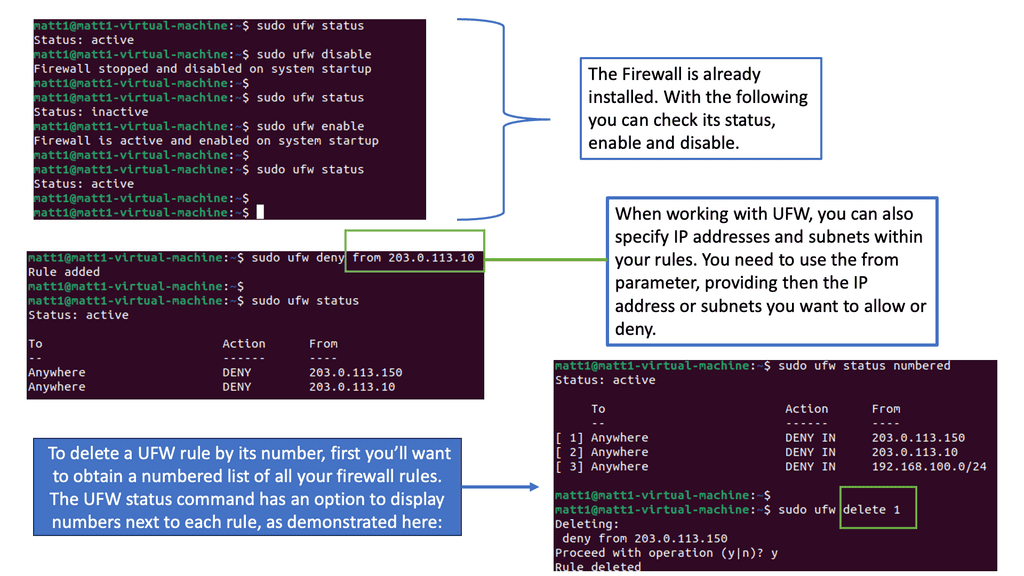

UFW (Uncomplicated Firewall), built on top of iptables, is a user-friendly frontend that simplifies the management of firewall rules. It provides an efficient way to control incoming and outgoing traffic, enhancing the security of your network. By understanding the key concepts and principles behind UFW, you can harness its capabilities to safeguard your data.

Implementing a UFW firewall brings several benefits to your network. First, it acts as a barrier against unauthorized access to your system: it filters network traffic based on predefined rules, allowing only the necessary connections. Second, UFW enables you to define specific rules for different applications, giving you granular control over network access. Additionally, its default-deny policy and connection rate limiting can reduce exposure to port scanning and brute-force attempts, although a host firewall alone cannot absorb a large-scale DDoS attack.
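UFW's `limit` rule (for example, `ufw limit ssh`) denies connections from an address that attempts too many new connections in a short period; the UFW documentation describes the threshold as six or more connections within 30 seconds. The sketch below models that idea as a per-source sliding window in Python. It illustrates the concept only and is not UFW's implementation; the class name is hypothetical, and treating denied attempts as still counting toward the limit is an assumption of this sketch.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class ConnectionRateLimiter:
    """Per-source sliding-window connection limit, modelled loosely on `ufw limit`."""

    def __init__(self, max_hits: int = 6, window_s: float = 30.0):
        self.max_hits = max_hits    # the max_hits-th attempt inside the window is denied
        self.window_s = window_s
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, src_ip: str, now: float) -> bool:
        hits = self._hits[src_ip]
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()          # forget attempts older than the window
        hits.append(now)            # assumption: denied attempts also count
        return len(hits) < self.max_hits
```

With the defaults, five connection attempts from one address within 30 seconds are allowed and the sixth is refused; once the window has passed, the address can connect again.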

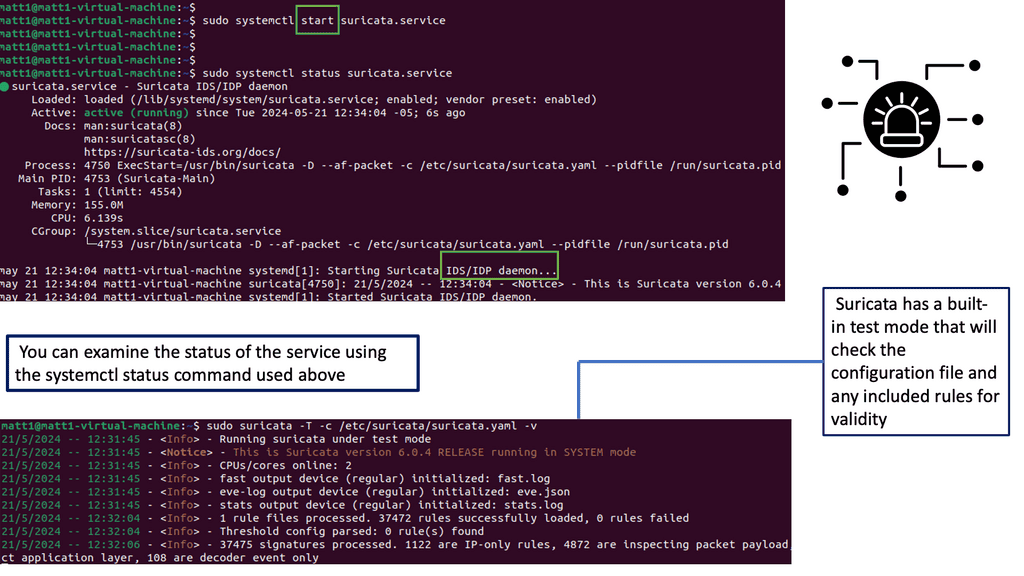

Intrusion Detection Systems (IDS): Intrusion Detection Systems (IDS) play a proactive role in network security. They continuously monitor network traffic, analyzing it for suspicious activities and potential security breaches. IDS can detect patterns and signatures of known attacks and identify anomalies that may indicate new or sophisticated threats. By alerting network administrators in real time, IDS helps mitigate risks and enable swift response to potential security incidents.
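As a minimal example of anomaly-style detection, the sketch below flags a source that contacts many distinct destination ports on one host within a time window, a common heuristic for spotting port scans. The connection-record format, the 60-second window, and the 20-port threshold are assumptions for illustration; real IDS engines combine many signatures and heuristics.

```python
from collections import defaultdict
from typing import Iterable, Set, Tuple

# Hypothetical connection record: (timestamp_seconds, src_ip, dst_ip, dst_port)
Event = Tuple[float, str, str, int]


def detect_port_scans(events: Iterable[Event], window_s: float = 60.0,
                      port_threshold: int = 20) -> Set[str]:
    """Flag sources that hit >= port_threshold distinct ports on one host within window_s."""
    flagged = set()
    history = defaultdict(list)  # (src, dst) -> [(timestamp, port), ...]
    for ts, src, dst, port in sorted(events):
        seen = history[(src, dst)]
        seen.append((ts, port))
        # Keep only observations that are still inside the sliding window
        seen[:] = [(t, p) for t, p in seen if ts - t < window_s]
        if len({p for _, p in seen}) >= port_threshold:
            flagged.add(src)
    return flagged
```

A host repeatedly connecting to port 443 never trips this rule, while a source sweeping 25 ports in a few seconds does; tuning the threshold trades missed slow scans against false positives.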

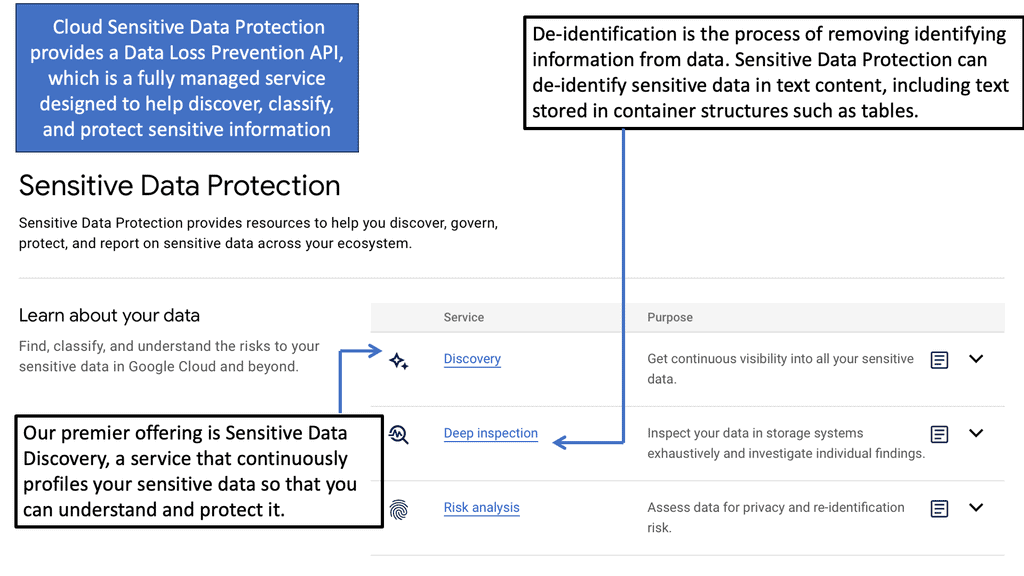

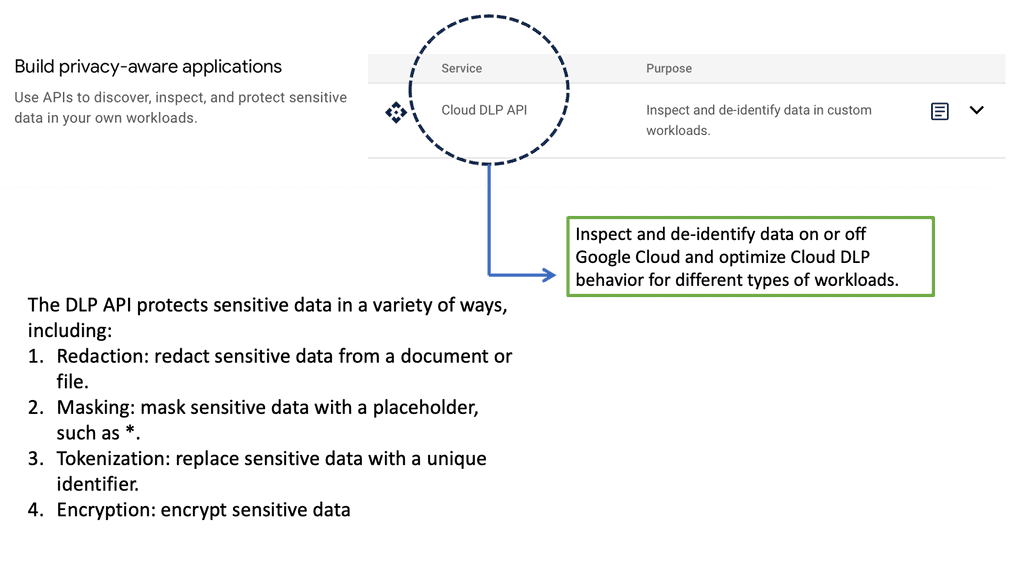

Example: Sensitive Data Protection

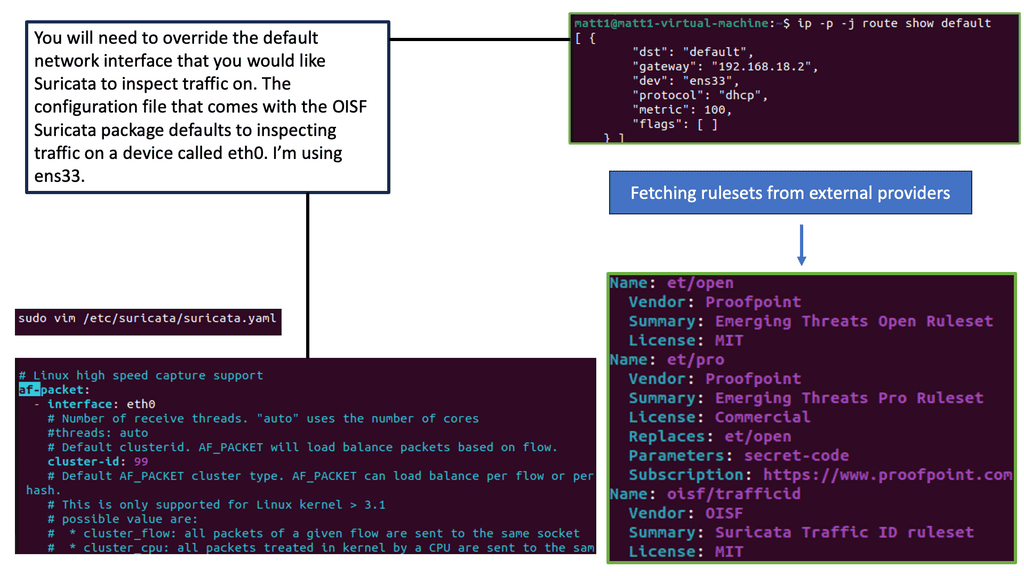

Example Technology: Suricata – Traffic Inspection

Virtual Private Networks (VPNs): In an era of prevalent remote work and virtual collaboration, Virtual Private Networks (VPNs) have emerged as a vital component of network security. VPNs establish secure and encrypted connections between remote users and corporate networks, ensuring the confidentiality and integrity of data transmitted over public networks. By creating a secure “tunnel,” VPNs protect sensitive information from eavesdropping and unauthorized interception, offering a safe digital environment.

Authentication Mechanisms: Authentication mechanisms are the bedrock of network security, verifying the identities of users and devices seeking access to a network. From traditional password-based authentication to multi-factor authentication and biometric systems, these mechanisms ensure that only authorized individuals or devices gain entry. Robust authentication protocols significantly reduce the risk of unauthorized access and protect against identity theft or data breaches.
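For password-based authentication, a server should store a salted, deliberately slow hash rather than the password itself. The sketch below uses PBKDF2-HMAC-SHA256 from Python's `hashlib`. The iteration count and 16-byte salt are illustrative assumptions, so check current guidance (for example, OWASP's password storage recommendations) before choosing values.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 600_000  # assumed work factor; tune for your hardware and policy


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key) to store instead of the password."""
    salt = salt if salt is not None else os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, stored_key)
```

The random per-user salt ensures that two users with the same password get different stored hashes, and the high iteration count slows down offline guessing if the database leaks.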

Encryption: Encryption plays a crucial role in maintaining the confidentiality of sensitive data. By converting plaintext into an unreadable format using complex algorithms, encryption ensures that the information remains indecipherable to unauthorized parties even if intercepted. Whether it’s encrypting data at rest or in transit, robust encryption techniques are vital to protecting the privacy and integrity of sensitive information.

IPv4 and IPv6 Network Security

IPv4 Network Security:

IPv4, the fourth version of the Internet Protocol, has been the backbone of the Internet for several decades. However, its limited address space and security vulnerabilities have prompted the need for a transition to IPv6. IPv4 faces various security challenges, such as IP spoofing, distributed denial-of-service (DDoS) attacks, and address exhaustion.

IPv4 – Lack of built-in encryption:

Issues like insufficient address space and lack of built-in encryption mechanisms make IPv4 networks more susceptible to security breaches. To enhance IPv4 network security, organizations should implement measures like network segmentation, firewall configurations, intrusion detection systems (IDS), and regular security audits. Keeping systems patched and using encrypted protocols such as HTTPS can mitigate potential risks.

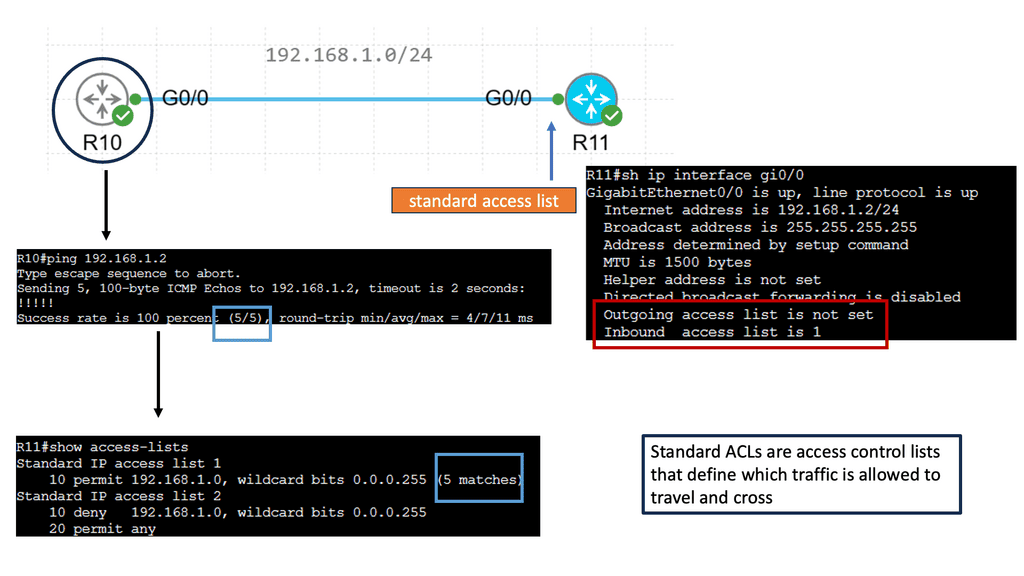

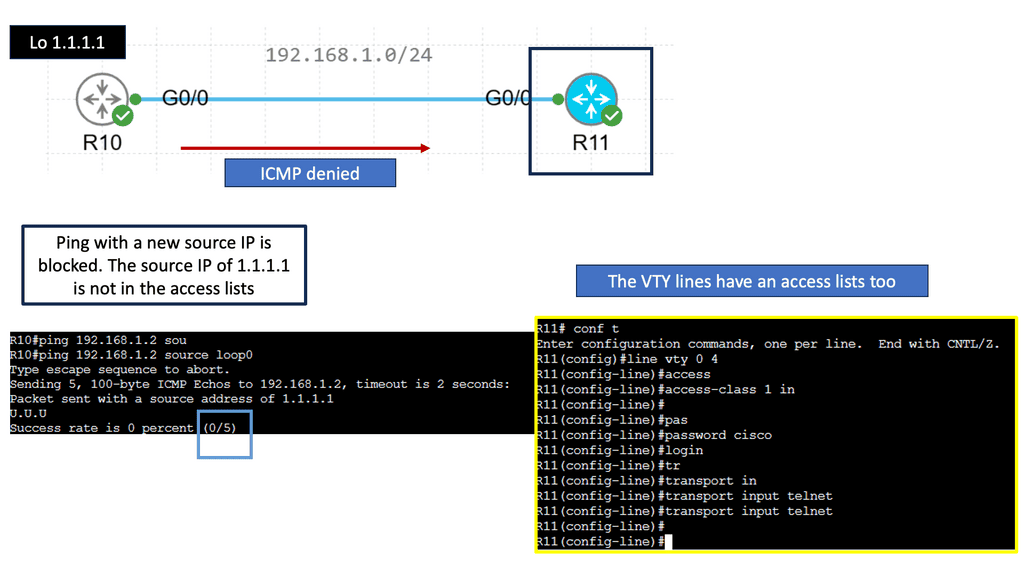

Example: IPv4 Standard Access Lists

Standard access lists are a type of access control mechanism used in Cisco routers. They evaluate packets’ source IP addresses to determine whether they should be allowed or denied access to a network. Unlike extended access lists, standard access lists only consider the source IP address, making them more straightforward and efficient for basic filtering needs.

**Create a Standard ACL**

To create a standard access list, define the access list number and specify the permit or deny statements. The access list number can range from 1 to 99 or 1300 to 1999. Each entry in the access list consists of a permit or deny keyword followed by a source IP address and, optionally, a wildcard mask. Every access list ends with an implicit deny, so traffic that matches no entry is dropped. By carefully crafting the access list statements, you can control which traffic is allowed or denied access to your network.

**Apply to an Interface**

Once you have created your standard access list, apply it to an interface on your router. This can be done using the “ip access-group” command in interface configuration mode, followed by the access list number and the direction (“in” or “out”). By applying the access list to an interface, you ensure that the defined filtering rules are enforced on the traffic passing through that interface.

**ACL Best Practices**

To get the most out of standard access lists, follow some best practices. First, always place the most specific entries at the top: entries are evaluated in order, and processing stops at the first match. Second, regularly review and update your access lists to reflect any changes in your network environment. Lastly, consider using named access lists instead of numbered ones for better readability and ease of management.
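To make the evaluation order and the implicit deny concrete, the sketch below models how a router processes a standard ACL. Entries are checked top to bottom, the wildcard mask marks the bits to ignore (a 1 bit means "don't care"), the first match wins, and unmatched packets are denied. This is a Python model for illustration, not Cisco IOS code, and the example entries use private and documentation addresses.

```python
import ipaddress
from typing import List, Tuple

# (action, source address, wildcard mask), in configured order
AclEntry = Tuple[str, str, str]


def wildcard_match(packet_src: str, address: str, wildcard: str) -> bool:
    """Compare only the bits where the wildcard mask has a 0."""
    care = ~int(ipaddress.IPv4Address(wildcard)) & 0xFFFFFFFF
    return (int(ipaddress.IPv4Address(packet_src)) & care) == (
        int(ipaddress.IPv4Address(address)) & care)


def evaluate_standard_acl(entries: List[AclEntry], packet_src: str) -> str:
    for action, address, wildcard in entries:
        if wildcard_match(packet_src, address, wildcard):
            return action          # first match wins; later entries are skipped
    return "deny"                  # implicit deny at the end of every ACL


# Hypothetical ACL: block one host, then permit the rest of its /24
EXAMPLE_ACL = [
    ("deny", "192.168.10.50", "0.0.0.0"),     # single host
    ("permit", "192.168.10.0", "0.0.0.255"),  # remaining hosts in 192.168.10.0/24
]
```

Here `192.168.10.50` is denied, other hosts in `192.168.10.0/24` are permitted, and everything else hits the implicit deny. Swapping the two entries would permit `192.168.10.50`, because the broader entry would match first.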

IPv6 Network Security

IPv6, the latest version of the Internet Protocol, offers significant improvements over its predecessor, including a vastly expanded address space and a design that accommodates IPsec natively.

IPv6 was designed with support for IPsec (Internet Protocol Security), which provides integrity, confidentiality, and authentication for data packets. Early IPv6 specifications required every implementation to support IPsec; RFC 6434 later relaxed this to a recommendation, and in either case IPsec protects traffic only once it has been configured. Where it is deployed, IPsec makes end-to-end encryption and secure communication more accessible, enhancing overall network security.

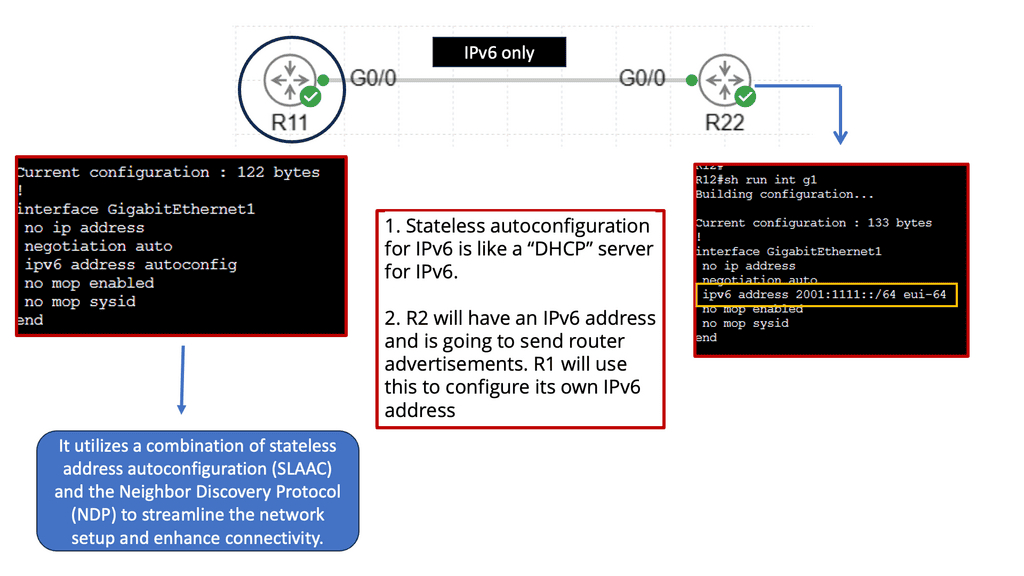

IPv6 stateless address autoconfiguration (SLAAC) simplifies IP address assignment and reduces the risk of misconfiguration. Temporary (privacy) addresses, which hosts generate randomly and change periodically, also improve security by making it harder for attackers to track devices.

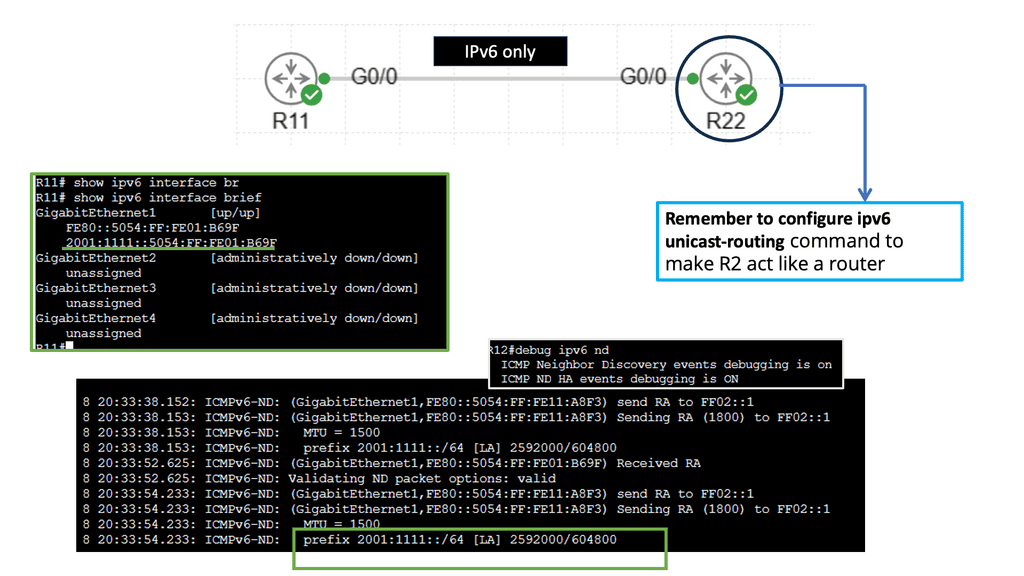

Understanding Router Advertisement (RA)

Router Advertisement (RA) is a critical mechanism in IPv6 networks that allows routers to inform neighboring devices about their presence and various network parameters. RAs carry information such as the on-link prefixes, the link MTU, and the router lifetime. Most importantly, a nonzero router lifetime tells hosts that they can use the sending router, identified by its link-local source address, as a default gateway.

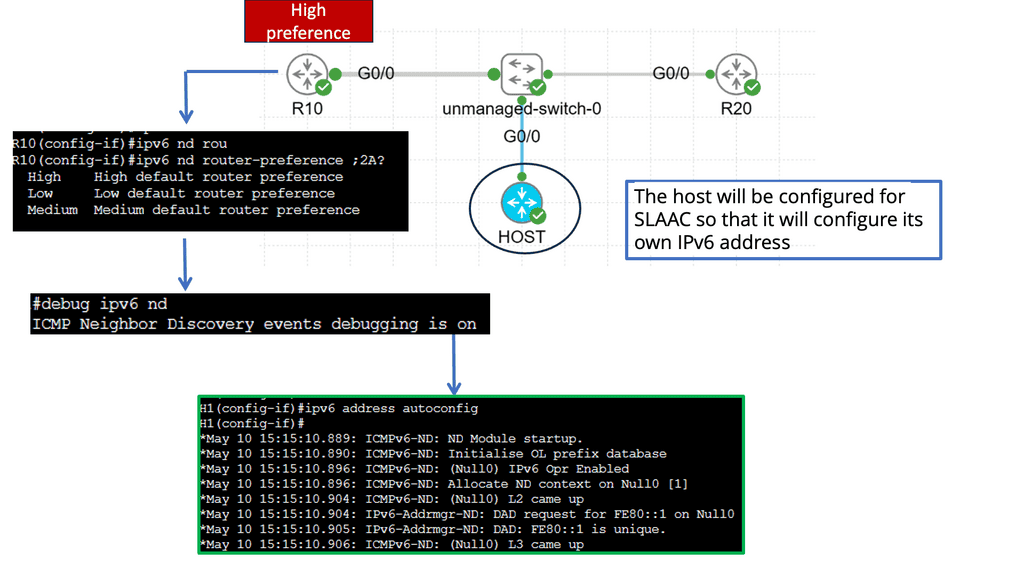

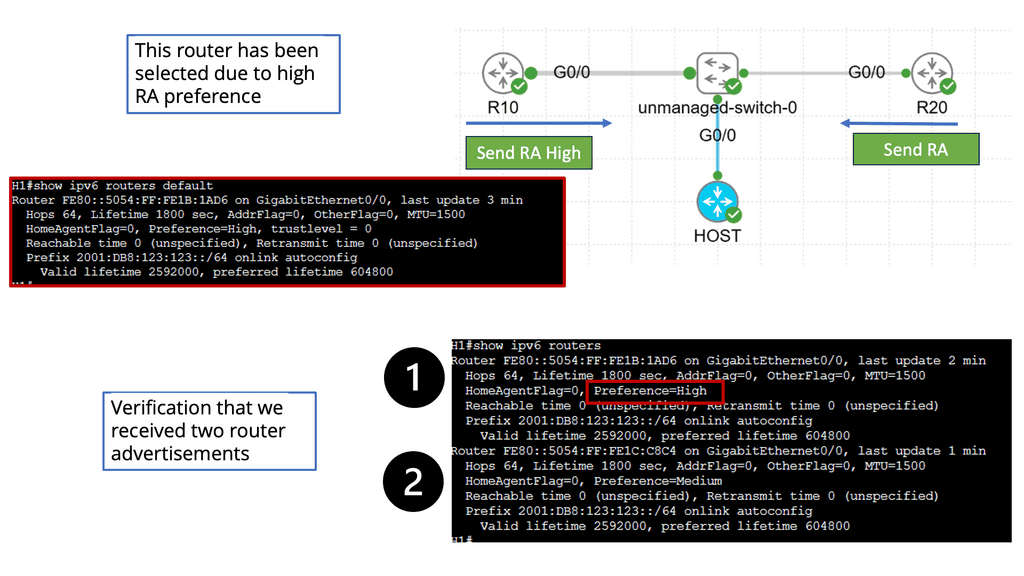

Router Advertisement Preference

Router Advertisement Preference determines which router devices in an IPv6 network select as their default gateway when several routers advertise on the same link. By setting a preference of high, medium (the default), or low in their RAs, network administrators can influence router prioritization, ultimately shaping the network’s behavior and performance.

Configuring RA Preference

Configuring Router Advertisement Preference involves assigning a preference level to each router that sends RAs on the link. This is configured per interface on the router itself (on Cisco IOS, for example, with the “ipv6 nd router-preference” command) rather than through routing protocols such as OSPFv3 or RIPng. Network administrators can choose the preference based on factors like router capacity, reliability, or location.
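RFC 4191 encodes the preference as a two-bit field in the RA with three usable values: high (01), medium (00, the default), and low (11); a receiver treats the reserved value (10) as medium. The sketch below models how a host might pick a default router from the RAs it has received: it ignores RAs with a router lifetime of zero and prefers the highest preference. The data structure is hypothetical, and real hosts also track router reachability (RFC 4861) when choosing.

```python
from dataclasses import dataclass
from typing import List, Optional

# RFC 4191 Prf field (2 bits) -> rank; reserved 0b10 is treated as medium
PRF_RANK = {0b01: 2, 0b00: 1, 0b11: 0, 0b10: 1}


@dataclass
class RouterAdvert:
    router: str       # link-local source address of the RA
    lifetime_s: int   # Router Lifetime; 0 means "do not use as default router"
    prf: int          # 2-bit preference value carried in the RA


def select_default_router(adverts: List[RouterAdvert]) -> Optional[str]:
    """Pick the highest-preference router among those offering to be a default."""
    candidates = [ra for ra in adverts if ra.lifetime_s > 0]
    if not candidates:
        return None
    # max() keeps the first router seen when preferences tie
    best = max(candidates, key=lambda ra: PRF_RANK[ra.prf & 0b11])
    return best.router
```

A router advertising high preference wins over medium and low ones, which is how administrators steer hosts toward a primary gateway while keeping a backup on the same link.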

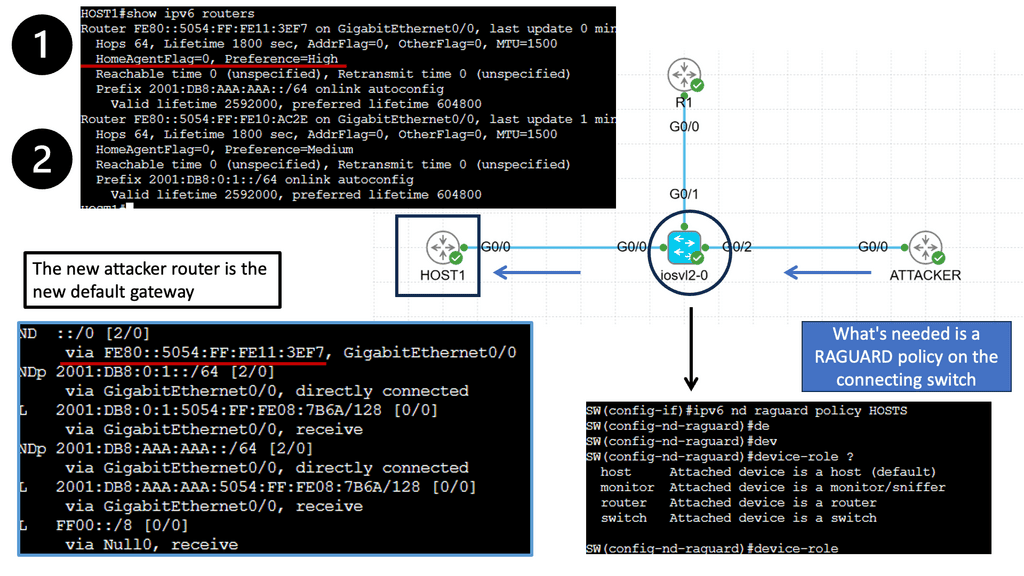

IPv6 Router Advertisement (RA) Guard

IPv6 Router Advertisement (RA) is a vital component of IPv6 networks, allowing routers to inform neighboring devices about network configurations. However, RA messages can be manipulated or forged, posing potential security risks. This is where the IPv6 RA Guard comes into play.

RA Guard operates at Layer 2

IPv6 RA Guard is a security feature that safeguards network devices against unauthorized or malicious RAs. It operates at layer 2 of the network, specifically at the access layer, to protect against potential threats introduced through unauthorized routers or rogue devices.

Inspecting & Filtering

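IPv6 RA Guard functions by inspecting and filtering incoming RA messages, verifying their legitimacy, and forwarding only authorized RAs to the intended devices. Depending on the implementation, it can base this decision on the role configured for the port the RA arrives on (host-facing or router-facing), the RA’s source address, and the advertised prefixes, or it can validate RAs cryptographically with Secure Neighbor Discovery (SEND). It is often deployed alongside related first-hop security features such as Neighbor Discovery (ND) Inspection.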
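The decision a stateless RA Guard makes (RFC 6105) can be sketched as a simple policy check: RAs arriving on ports configured with a host role are dropped, and RAs on router-facing ports can additionally be checked against allowed source addresses and prefixes. The port names, roles, and allow-lists below are hypothetical, and the model ignores details real implementations must handle, such as RAs hidden behind IPv6 extension headers.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class PortPolicy:
    role: str                                                  # "host" or "router"
    allowed_sources: Set[str] = field(default_factory=set)     # empty = any source
    allowed_prefixes: List[str] = field(default_factory=list)  # empty = any prefix


def ra_guard_permits(policies: Dict[str, PortPolicy], port: str,
                     src: str, prefixes: List[str]) -> bool:
    """Return True if an RA received on `port` should be forwarded."""
    policy = policies.get(port)
    if policy is None or policy.role != "router":
        return False  # never accept RAs on host-facing or unknown ports
    if policy.allowed_sources:
        allowed_src = {ipaddress.ip_address(a) for a in policy.allowed_sources}
        if ipaddress.ip_address(src) not in allowed_src:
            return False
    if policy.allowed_prefixes:
        allowed = [ipaddress.ip_network(p) for p in policy.allowed_prefixes]
        for prefix in prefixes:
            net = ipaddress.ip_network(prefix)
            if not any(net.subnet_of(a) for a in allowed):
                return False  # advertised prefix is outside the approved range
    return True


# Hypothetical switch: one user port, one uplink to the legitimate router
EXAMPLE_POLICIES = {
    "Gi1/0/1": PortPolicy("host"),
    "Gi1/0/48": PortPolicy("router", {"fe80::1"}, ["2001:db8:10::/48"]),
}
```

A rogue device plugged into `Gi1/0/1` cannot announce itself as a gateway, and even on the uplink an RA from an unexpected source or advertising an unapproved prefix is dropped.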

IPv6 Neighbor Discovery

Understanding IPv6 Neighbor Discovery Protocol

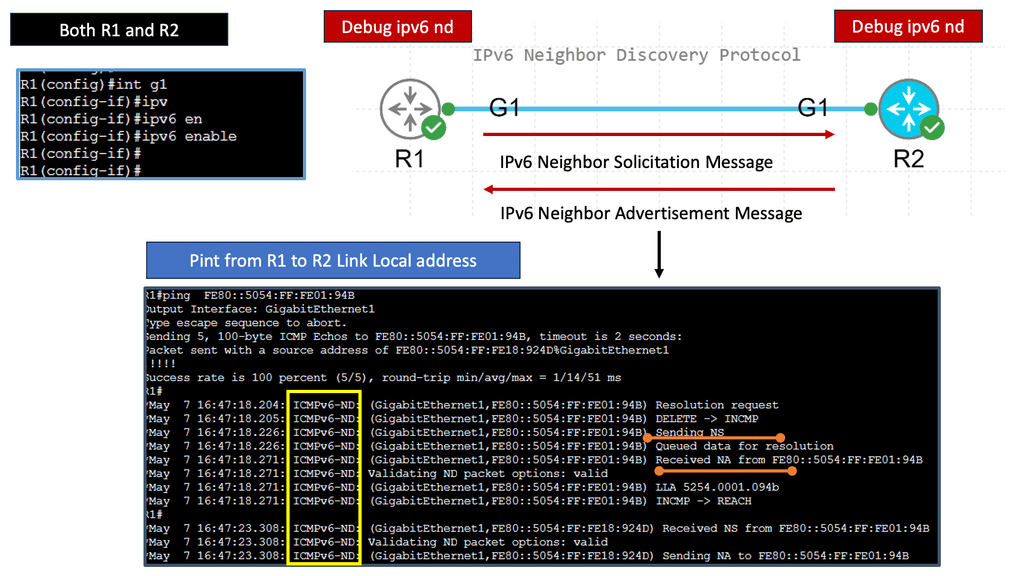

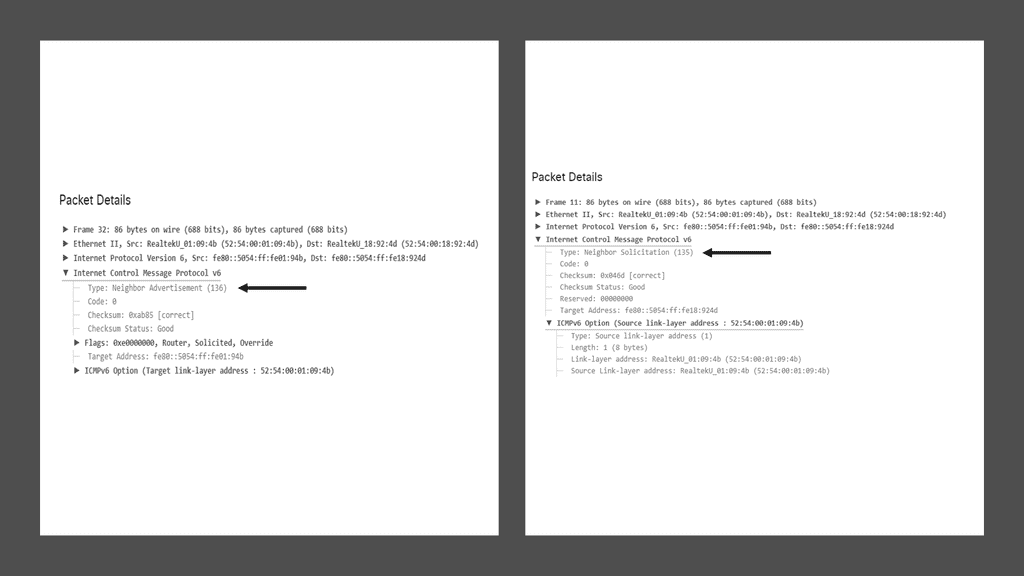

The Neighbor Discovery Protocol (NDP) is a fundamental part of the IPv6 protocol suite. It replaces the Address Resolution Protocol (ARP) used in IPv4 networks. NDP plays a crucial role in various aspects of IPv6 networking, including address autoconfiguration, neighbor discovery, duplicate address detection, and router discovery. Network administrators can optimize their IPv6 deployments by understanding how NDP functions and ensuring smooth communication between devices.

**Address Auto-configuration**

One of NDP’s key features is its ability to facilitate address autoconfiguration. With IPv6, devices can generate unique addresses from a prefix advertised by a router and an interface identifier, eliminating the need for manual configuration or reliance on DHCP servers. NDP’s Address Autoconfiguration process enables devices to obtain their global and link-local IPv6 addresses, simplifying network management and reducing administrative overhead.
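One way a host can form its interface identifier is the modified EUI-64 method (RFC 4291): split the 48-bit MAC address in half, insert FFFE in the middle, and flip the universal/local bit. The sketch below applies it to a /64 prefix; the function names, MAC address, and prefix are examples. Because the result embeds the MAC address, it lets a device be tracked across networks, which is why many operating systems now prefer randomized or temporary interface identifiers instead.

```python
import ipaddress


def eui64_interface_id(mac: str) -> int:
    """Modified EUI-64 interface identifier from a MAC like '00:1a:2b:3c:4d:5e'."""
    octets = bytearray(int(part, 16) for part in mac.replace("-", ":").split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address")
    octets[0] ^= 0x02                                            # flip the universal/local bit
    eui64 = bytes(octets[:3]) + b"\xff\xfe" + bytes(octets[3:])  # insert FFFE in the middle
    return int.from_bytes(eui64, "big")


def slaac_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the modified EUI-64 interface identifier."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("SLAAC expects a /64 prefix")
    return net.network_address + eui64_interface_id(mac)
```

For example, `slaac_address("fe80::/64", "00:1a:2b:3c:4d:5e")` yields `fe80::21a:2bff:fe3c:4d5e`; the MAC address is plainly visible in the low 64 bits.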

**Neighbor Discovery**

Neighbor Discovery is another vital aspect of NDP. It allows devices to discover and maintain information about neighboring nodes on the same network segment. Through Neighbor Solicitation and Neighbor Advertisement messages, devices can determine the link-layer addresses of neighboring devices, verify their reachability, and update their neighbor cache accordingly. This dynamic process ensures efficient routing and enhances network resilience.

**Duplicate Address Detection (DAD)**

IPv6 NDP incorporates Duplicate Address Detection (DAD) to prevent address conflicts. When a device joins a network or configures a new address, it performs DAD before using the chosen (tentative) address. Because IPv6 has no broadcast, the device sends Neighbor Solicitation messages for the tentative address to the corresponding solicited-node multicast address; if another device replies with a Neighbor Advertisement, the address is already in use. DAD is an essential mechanism that protects the integrity of IPv6 addressing and minimizes the likelihood of address conflicts.

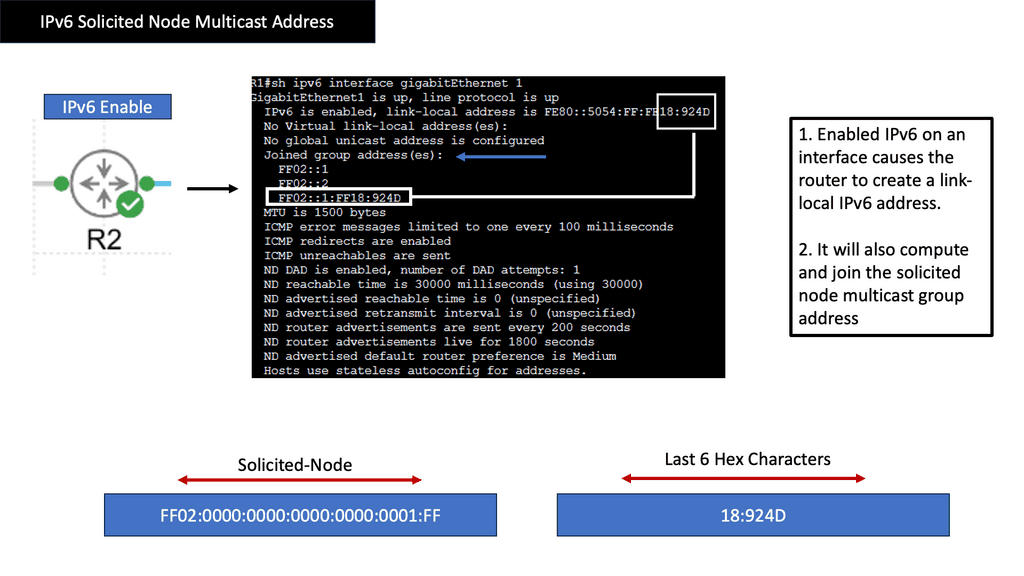

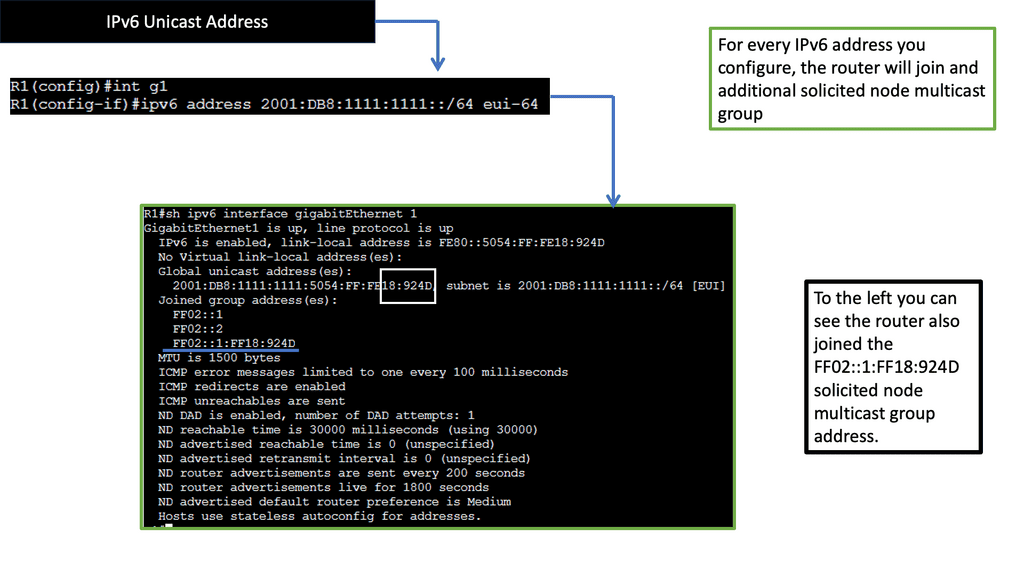

IPv6 & Multicast Communication

Multicast communication plays a vital role in IPv6 networks, enabling efficient data transmission to multiple recipients simultaneously. Unlike unicast communication, where data is sent to a specific destination address, multicast uses a group address to reach a set of interested receivers. This approach minimizes network traffic and optimizes resource utilization.

–The Role of Solicited Node Multicast Address–

The IPv6 Solicited Node Multicast Address is a specialized multicast address used for neighbor discovery and address resolution. When a node needs to resolve a neighbor’s link-layer address, it sends a Neighbor Solicitation message to the solicited-node multicast address derived from the target’s IPv6 address, rather than to every node on the link. The owner of the target address responds with a Neighbor Advertisement, and the two nodes can then communicate. The same addresses are used during Duplicate Address Detection.

An IPv6 Solicited Node Multicast Address is formed by taking the prefix FF02:0:0:0:0:1:FF00:0/104 and appending the last 24 bits of the unicast address being resolved. Because only nodes whose unicast addresses end in those same 24 bits listen on the resulting group, the solicitation reaches a small set of nodes, usually just the intended recipient.
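The derivation is a single bitwise operation: keep the low 24 bits of the unicast address and OR them into `ff02::1:ff00:0`. The sketch below does this with Python's `ipaddress` module; the function name is illustrative and the example addresses are documentation or link-local examples.

```python
import ipaddress

SOLICITED_NODE_PREFIX = int(ipaddress.IPv6Address("ff02::1:ff00:0"))


def solicited_node_address(unicast: str) -> ipaddress.IPv6Address:
    """Return ff02::1:ffXX:XXXX, where XX:XXXX are the last 24 bits of `unicast`."""
    low24 = int(ipaddress.IPv6Address(unicast)) & 0xFFFFFF
    return ipaddress.IPv6Address(SOLICITED_NODE_PREFIX | low24)
```

For example, `2001:db8::1:2:3` maps to `ff02::1:ff02:3`. A host's link-local and global addresses built from the same interface identifier share their last 24 bits, so they map to the same group.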

–Benefits: IPv6 Solicited Node Multicast Address–

Using IPv6 Solicited Node Multicast Address brings several benefits to IPv6 networks. Firstly, it significantly reduces the volume of network traffic by limiting the scope of Neighbor Solicitation messages to interested nodes. This helps conserve network resources and improves overall network performance. Additionally, the rapid and efficient neighbor discovery enabled by solicited-node multicast addresses enhances the responsiveness and reliability of communication in IPv6 networks.

IPv6 Network Address Translation

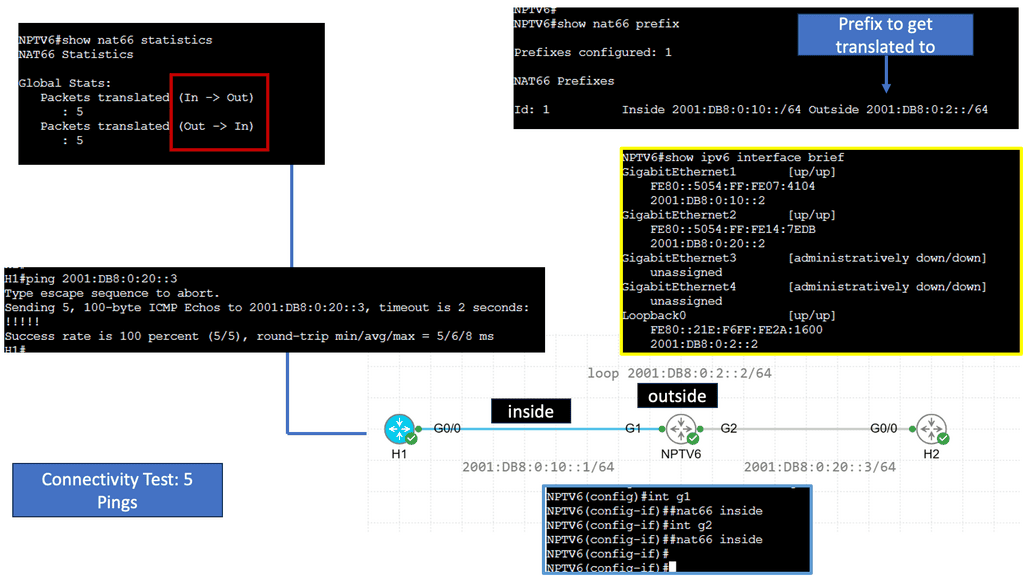

Understanding NPTv6

NPTv6 (IPv6-to-IPv6 Network Prefix Translation, defined in RFC 6296) statelessly translates one IPv6 prefix into another IPv6 prefix at the network edge. Unlike NAT64, it does not translate between IPv6 and IPv4. A typical use is mapping an internal prefix, such as a Unique Local Address (ULA) prefix, one-to-one onto a provider-assigned global prefix.

Benefits: NPTv6

NPTv6 offers several notable features that make it a compelling choice for network architects and administrators. Firstly, because the translation is stateless and algorithmic, the translator keeps no per-connection state, and every internal address maps to exactly one external address.

Additionally, the mapping is checksum-neutral, so the translator does not need to rewrite transport-layer checksums, and it does not translate port numbers, avoiding many of the application problems associated with IPv4 NAT with port translation.

**Provider Independence and Multihoming**

The adoption of NPTv6 brings benefits for network infrastructure. Firstly, internal addressing stays stable when an organization changes providers, because only the external prefix in the translation needs to change, avoiding network-wide renumbering.

NPTv6 can also support multihoming, with the same internal prefix translated to a different provider prefix on each upstream link. Note that NPTv6 is not a security mechanism in itself: because it is stateless, it does not block unsolicited inbound traffic the way a stateful NAT incidentally does, so a firewall is still required.

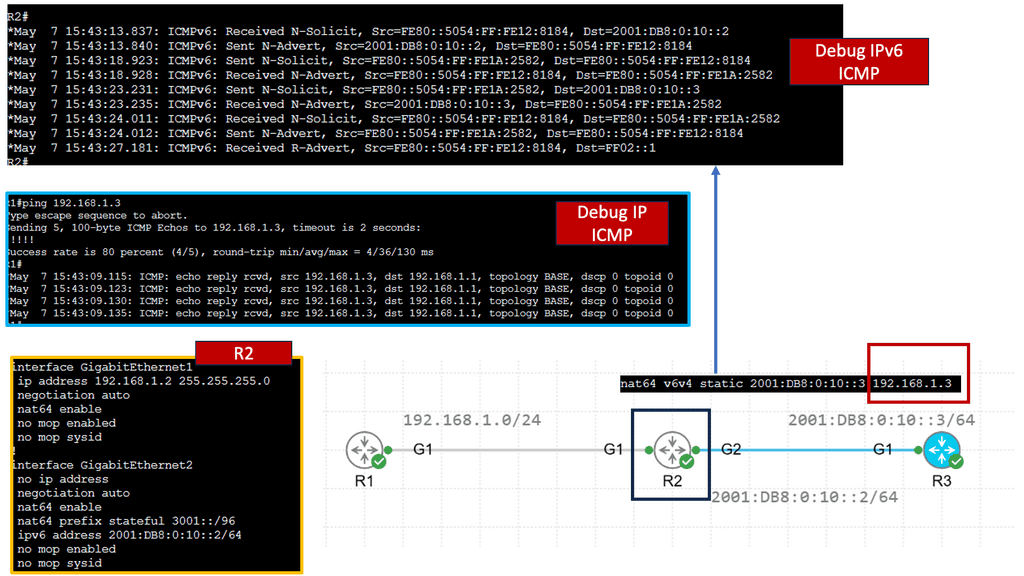

Example Technology: NAT64

Understanding NAT64

NAT64 is a translator between IPv6 and IPv4, allowing devices using different protocols to communicate effectively. With the depletion of IPv4 addresses, the transition to IPv6 becomes crucial, and NAT64 plays a vital role in enabling this transition. By facilitating communication between IPv6-only and IPv4-only devices, NAT64 ensures smooth connectivity in a mixed network environment.

Mapping IPv6 to IPv4 addresses

NAT64 operates by mapping IPv6 to IPv4 addresses, allowing seamless communication between the two protocols. It employs various techniques, such as stateful and stateless translation, to ensure efficient packet routing between IPv6 and IPv4 networks. NAT64 enables devices to communicate across different network types by dynamically translating addresses and managing traffic flow.
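With DNS64, a NAT64 deployment typically represents IPv4 destinations as IPv6 addresses by embedding the 32-bit IPv4 address in a /96 prefix, such as the well-known prefix `64:ff9b::/96` defined in RFC 6052. The sketch below shows that address mapping only; the function names are illustrative, only /96 prefixes are handled, and the stateful session tracking a real NAT64 gateway performs (RFC 6146) is out of scope.

```python
import ipaddress

WKP = ipaddress.IPv6Network("64:ff9b::/96")  # RFC 6052 well-known prefix


def synthesize(ipv4: str, prefix: ipaddress.IPv6Network = WKP) -> ipaddress.IPv6Address:
    """Embed an IPv4 address in the low 32 bits of a /96 NAT64 prefix."""
    if prefix.prefixlen != 96:
        raise ValueError("this sketch only handles /96 prefixes")
    return ipaddress.IPv6Address(
        int(prefix.network_address) | int(ipaddress.IPv4Address(ipv4)))


def extract(ipv6: str, prefix: ipaddress.IPv6Network = WKP) -> ipaddress.IPv4Address:
    """Recover the IPv4 destination from a synthesized IPv6 address."""
    addr = ipaddress.IPv6Address(ipv6)
    if addr not in prefix:
        raise ValueError("address is not inside the NAT64 prefix")
    return ipaddress.IPv4Address(int(addr) & 0xFFFFFFFF)
```

An IPv6-only client that resolves an IPv4-only server at `192.0.2.33` through DNS64 receives `64:ff9b::c000:221`; the NAT64 gateway extracts `192.0.2.33` from that address when it forwards the traffic.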

NAT64 offers several advantages, including preserving IPv4 investments, simplified network management, and enhanced connectivity. It eliminates the need for costly dual-stack deployment and facilitates the coexistence of IPv4 and IPv6 networks. However, NAT64 also poses challenges, such as potential performance limitations, compatibility issues, and the need for careful configuration to ensure optimal results.

NAT64 Use Cases:

NAT64 finds applications in various scenarios, including service providers transitioning to IPv6, organizations with mixed networks, and mobile networks facing IPv4 address scarcity. It enables these entities to maintain connectivity and seamlessly bridge network protocol gaps. NAT64’s versatility and compatibility make it a valuable tool in today’s evolving network landscape.

IPv4 to IPv6 Transition

Security Considerations

Dual Stack Deployment: While transitioning from IPv4 to IPv6, organizations often deploy dual-stack networks, supporting both protocols simultaneously. However, this introduces additional security considerations, as vulnerabilities in either protocol can impact the overall network security.

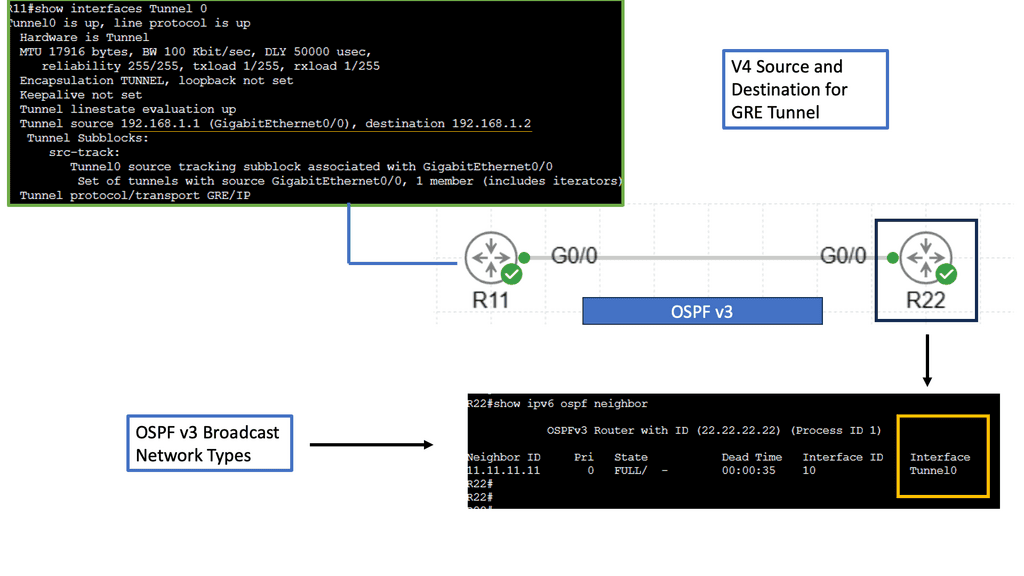

Transition Mechanism Security: Various transition mechanisms, such as tunneling and translation, facilitate communication between IPv4 and IPv6 networks. Ensuring the security of these mechanisms is crucial, as they can introduce potential vulnerabilities and become targets for attackers.

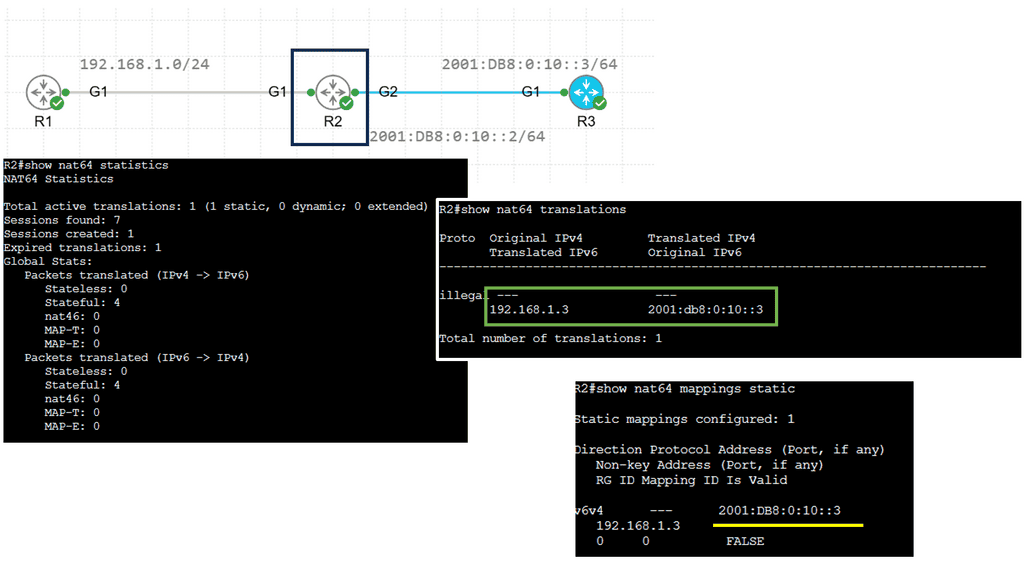

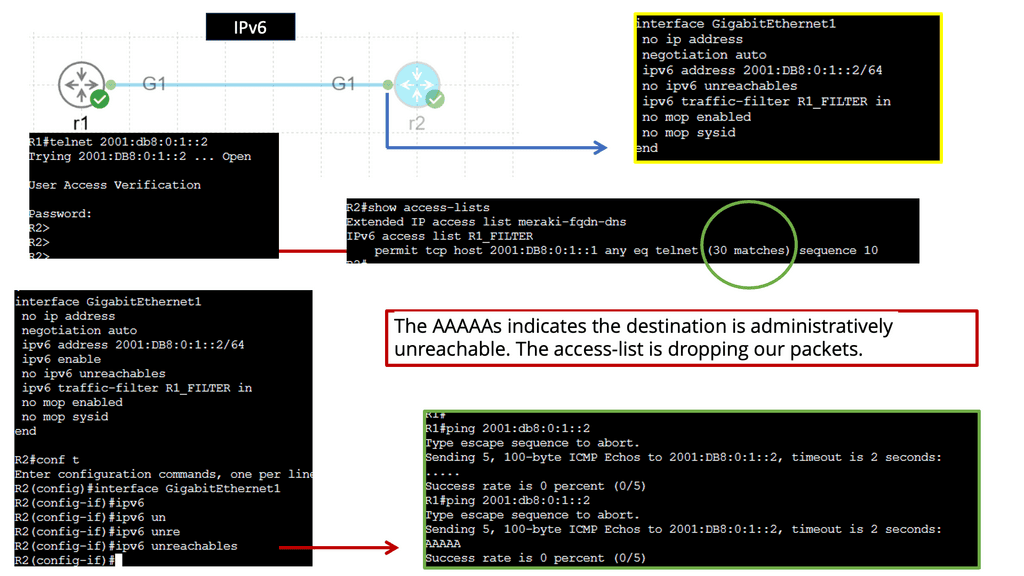

Example: IPv6 Access Lists

IPv6, the next-generation Internet Protocol, brings new features and enhancements. One critical aspect of securing it is the IPv6 access list, which allows network administrators to filter and control traffic based on various criteria. Unlike IPv4 standard access lists, IPv6 access lists on Cisco IOS are always named and can match on source and destination addresses, protocols, and ports, which makes them a more flexible network security tool.

One of the primary purposes of IPv6 access lists is to filter traffic based on specific conditions. Supported criteria include source and destination IPv6 prefixes, protocol, and port numbers. IPv6 prefix lists complement access lists by filtering routing information.
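On Cisco IOS, every IPv6 ACL ends with implicit entries that permit Neighbor Solicitation and Neighbor Advertisement messages before the final implicit deny, so a restrictive ACL does not break neighbor discovery. The sketch below models an IPv6 ACL with that behaviour. The `Ace` structure is hypothetical and simplified (no port ranges, no extension-header handling), and it is a Python model, not router code.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional

ICMPV6_NS, ICMPV6_NA = 135, 136  # Neighbor Solicitation / Advertisement types


@dataclass
class Ace:
    action: str                 # "permit" or "deny"
    proto: str                  # "ipv6" (any protocol), "tcp", "udp", or "icmp"
    src: str = "::/0"
    dst: str = "::/0"
    port: Optional[int] = None  # destination port (tcp/udp) or ICMPv6 type (icmp)

    def matches(self, proto, src, dst, port) -> bool:
        return (self.proto in ("ipv6", proto)
                and ipaddress.ip_address(src) in ipaddress.ip_network(self.src)
                and ipaddress.ip_address(dst) in ipaddress.ip_network(self.dst)
                and self.port in (None, port))


# Implicit entries appended to every IPv6 ACL (modelled on Cisco IOS behaviour)
IMPLICIT = [Ace("permit", "icmp", port=ICMPV6_NS),
            Ace("permit", "icmp", port=ICMPV6_NA),
            Ace("deny", "ipv6")]


def evaluate_ipv6_acl(acl: List[Ace], proto, src, dst, port=None) -> str:
    for ace in acl + IMPLICIT:
        if ace.matches(proto, src, dst, port):
            return ace.action   # first match wins
    return "deny"               # not reached: the implicit deny matches everything
```

One consequence worth knowing: if you add an explicit "deny everything" entry at the end of your own ACL, it matches before the implicit ND permits, and neighbor discovery through that interface stops working unless you permit it explicitly.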

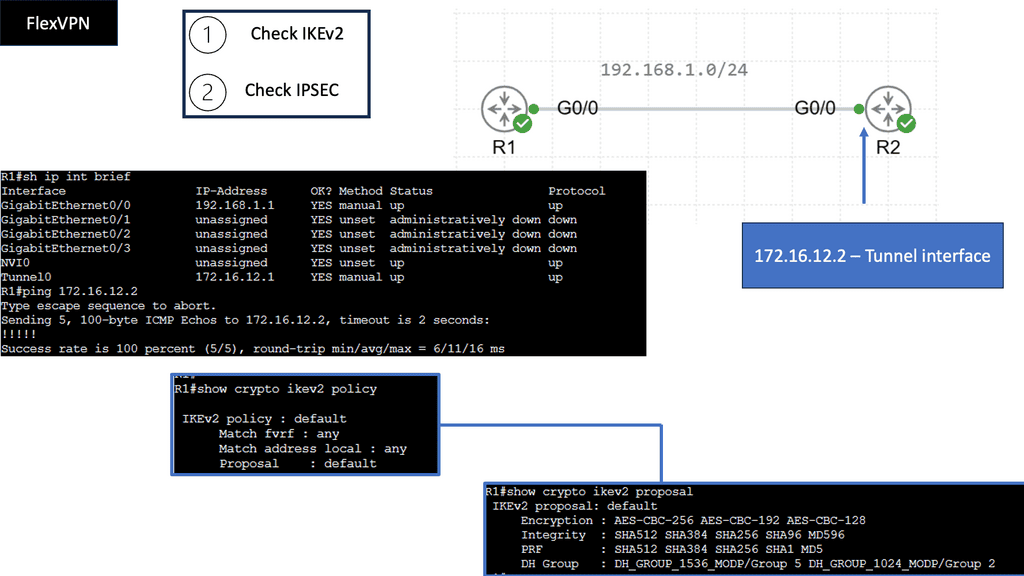

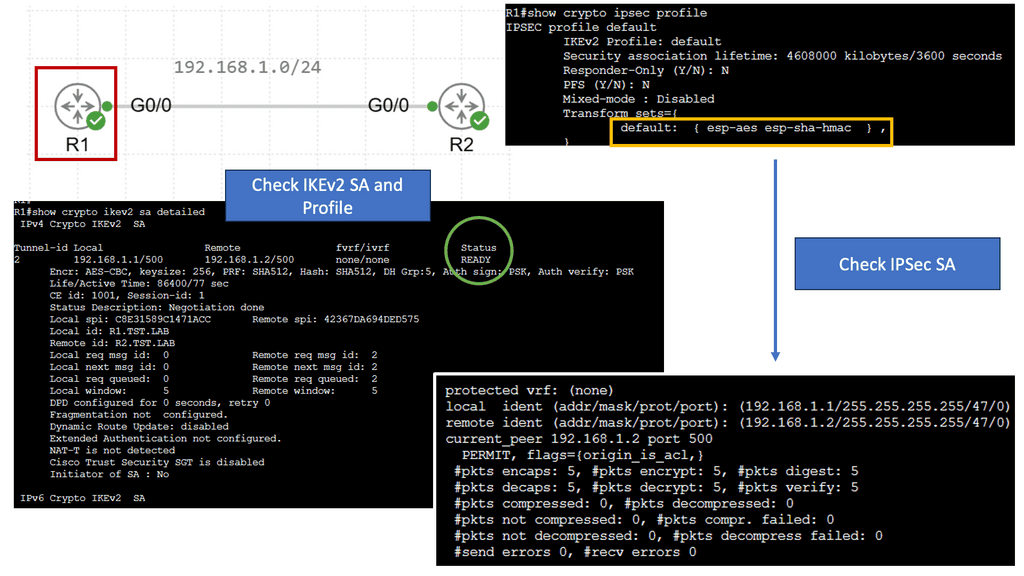

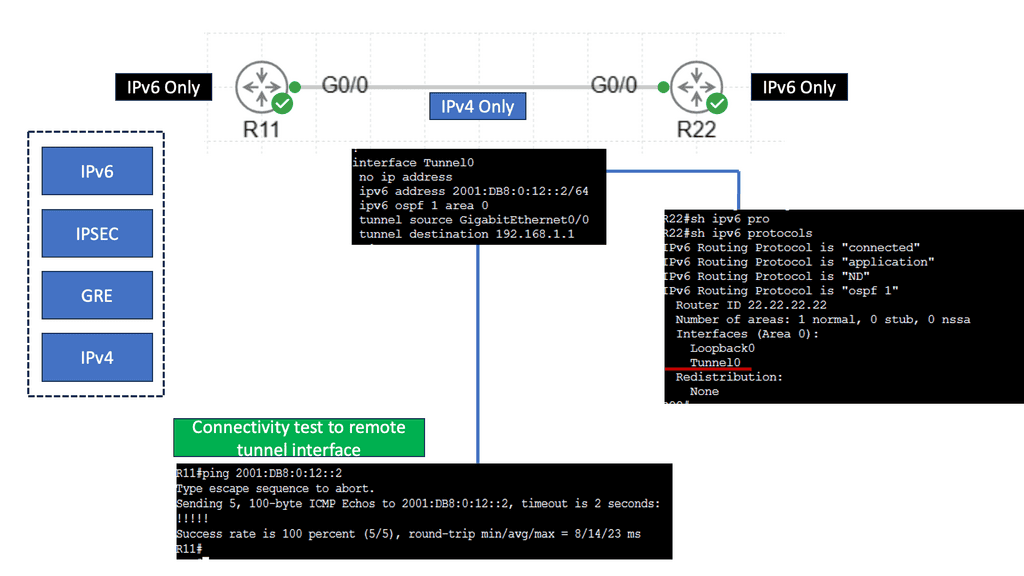

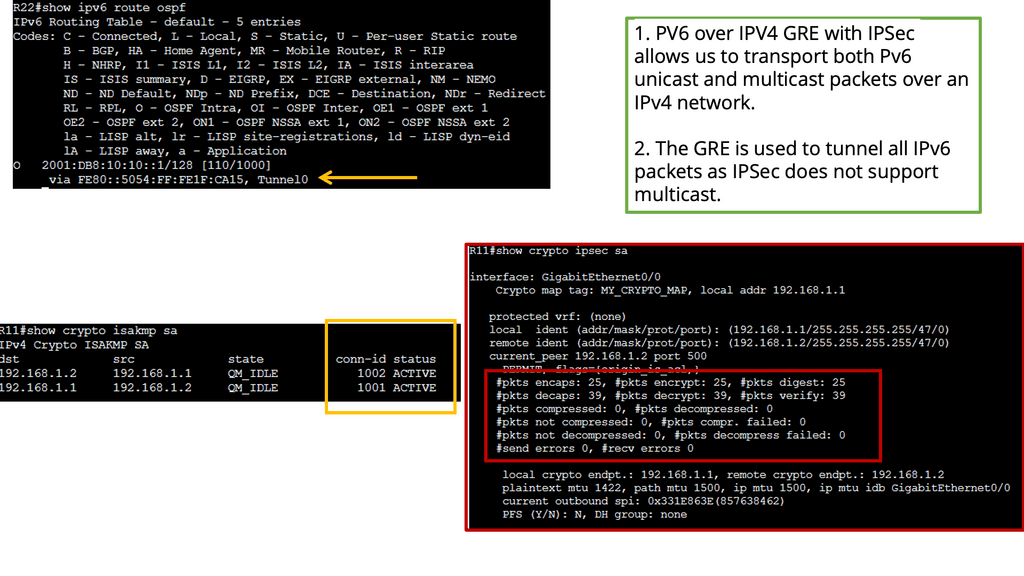

Securing Tunnels: IPSec in IPv6 over IPv4 GRE

IPv6 over IPv4 GRE (Generic Routing Encapsulation) is a tunneling protocol that allows the transmission of IPv6 packets over an existing IPv4 network infrastructure. It encapsulates IPv6 packets within IPv4 packets, enabling seamless communication between networks that have not yet fully adopted IPv6.
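The encapsulation itself is compact: a basic GRE header (RFC 2784) is four bytes, with all flag bits and the version set to zero and a protocol type of 0x86DD marking the payload as IPv6. The sketch below builds that header and computes the resulting inner MTU. It assumes no optional GRE fields (checksum, key, sequence number), no IPv4 options, and no IPsec, each of which would add overhead and shrink the usable MTU further; the function names are illustrative.

```python
import struct

ETHERTYPE_IPV6 = 0x86DD
IPV4_HEADER_LEN = 20   # outer IPv4 header without options
GRE_HEADER_LEN = 4     # basic GRE header without optional fields


def gre_encapsulate(ipv6_packet: bytes) -> bytes:
    """Prefix an IPv6 packet with a basic GRE header (flags/version 0, type IPv6).

    The outer IPv4 header (protocol number 47 for GRE) is added by the sender's IP stack.
    """
    header = struct.pack("!HH", 0x0000, ETHERTYPE_IPV6)
    return header + ipv6_packet


def inner_mtu(outer_mtu: int = 1500) -> int:
    """Largest IPv6 packet that fits in the tunnel without fragmentation."""
    return outer_mtu - IPV4_HEADER_LEN - GRE_HEADER_LEN
```

On a standard 1500-byte Ethernet path this leaves 1476 bytes for the IPv6 packet, which is why 1476 is a common GRE tunnel MTU; protecting the tunnel with IPSec lowers it further.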

IPsec: IPSec (Internet Protocol Security) ensures the confidentiality, integrity, and authenticity of the data transmitted over the IPv6-over-IPv4 GRE tunnel. IPSec safeguards the tunnel against malicious activities and unauthorized access by providing robust encryption and authentication mechanisms.

1. Enhanced Security: With IPSec’s encryption and authentication capabilities, IPv6 over IPv4 GRE with IPSec offers a high level of security for data transmission. This is particularly important in scenarios where sensitive information is being exchanged.

2. Seamless Transition: IPv6 over IPv4 GRE allows organizations to adopt IPv6 gradually without disrupting their existing IPv4 infrastructure. This smooth transition path ensures minimal downtime and compatibility issues.

3. Expanded Address Space: IPv6 provides a significantly larger address space than IPv4, addressing the growing demand for unique IP addresses. By leveraging IPv6 over IPv4 GRE, organizations can tap into this expanded address pool while still utilizing their existing IPv4 infrastructure.

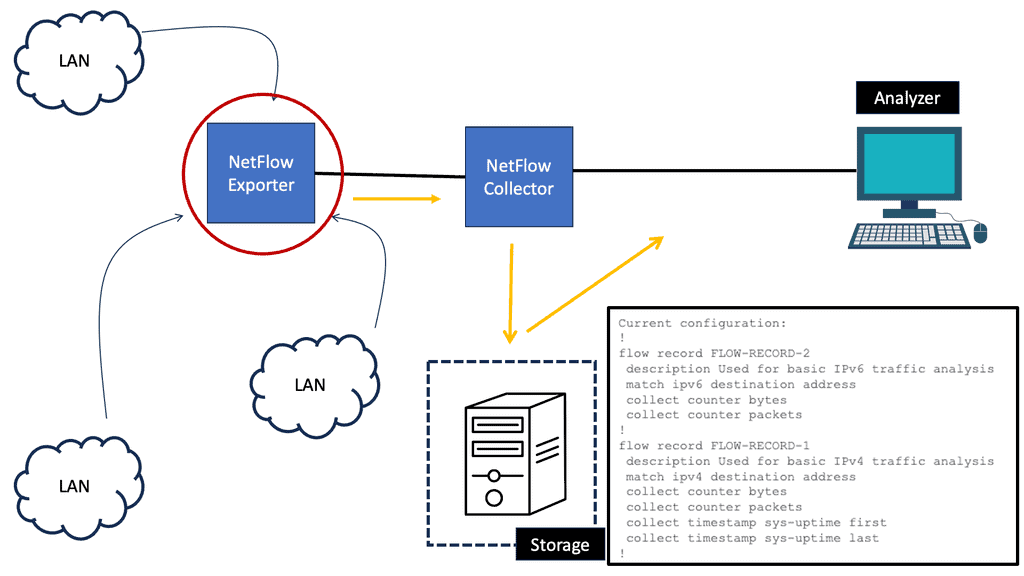

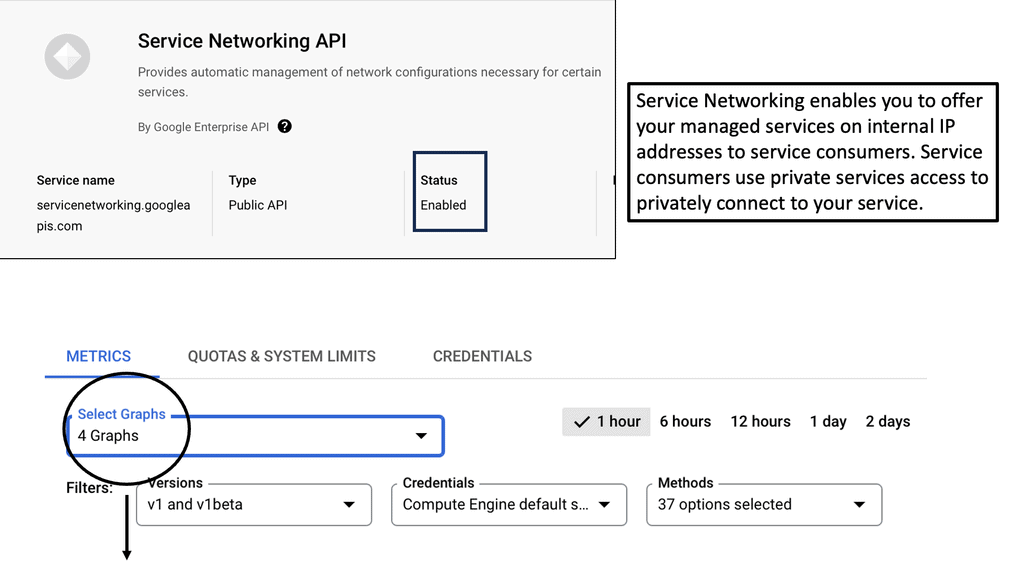

Improving Network Security

Appropriate network visibility is critical to understanding network performance and implementing network security components. Much of the telemetry used for network performance, such as NetFlow, is also valuable for security. The landscape is challenging: workloads move to the cloud without monitoring or any security plan. We need a way to gain visibility over these cloud and on-premises applications without rebuilding the entire monitoring and security stack.

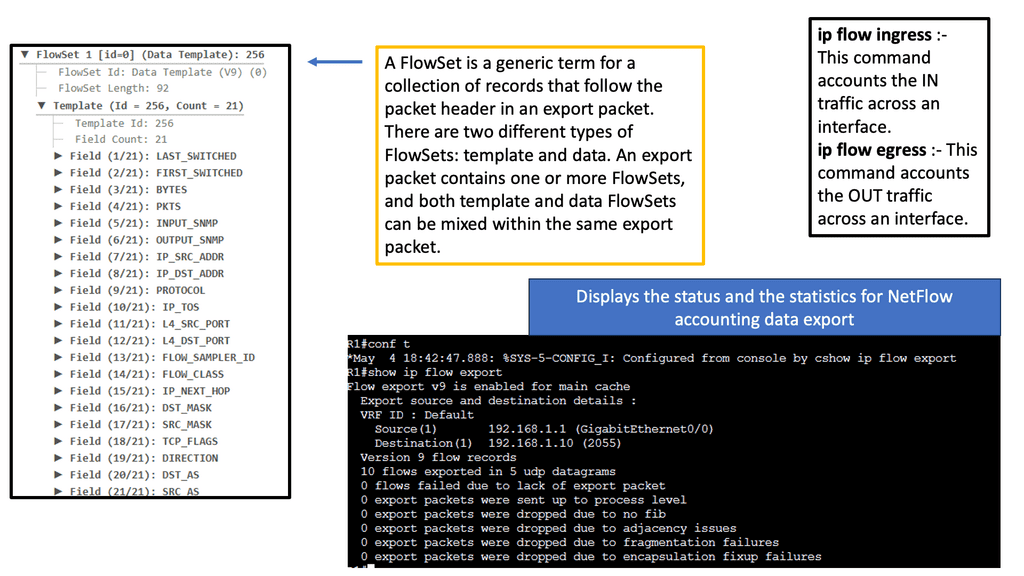

Understanding NetFlow

NetFlow is a network protocol developed by Cisco Systems that provides valuable insights into network traffic. By collecting and analyzing flow data, NetFlow enables organizations to understand their network’s behavior, identify anomalies, and detect potential security threats.

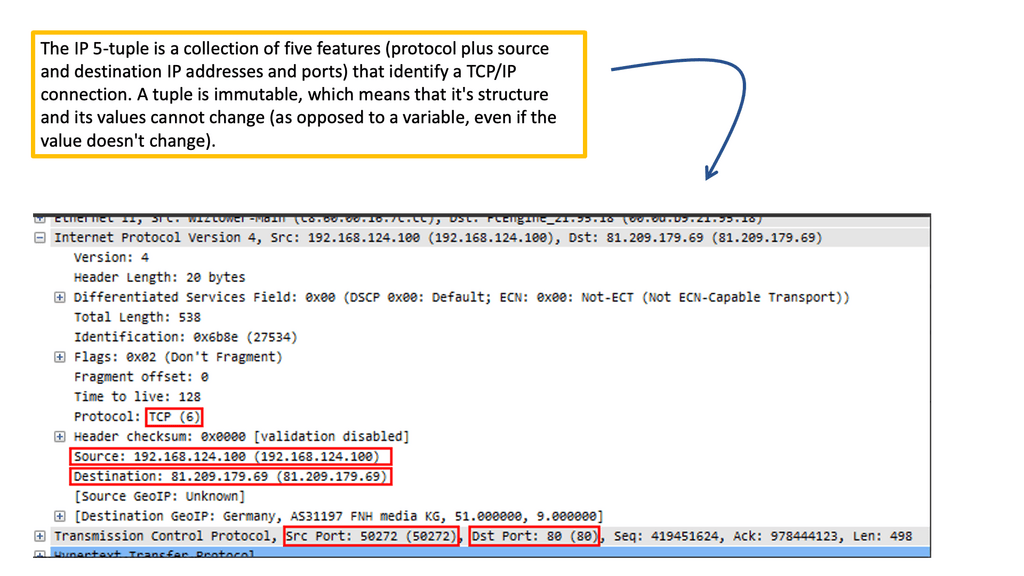

A) Identifying Suspicious Traffic Patterns: NetFlow allows security teams to monitor traffic patterns and identify deviations from the norm. NetFlow can highlight suspicious activities that may indicate a security breach or an ongoing cyberattack by analyzing data such as source and destination IPs, ports, and protocols.

B) Real-time Threat Detection: NetFlow empowers security teams to detect threats as they unfold by capturing and analyzing data in real time. By leveraging NetFlow-enabled security solutions, organizations can receive immediate alerts and proactively mitigate potential risks.

C) Forensic Analysis and Incident Response: NetFlow data is valuable for forensic analysis and incident response. NetFlow records can reconstruct network activity, identify the root cause, and enhance incident response efforts in a security incident.

D) Configuring NetFlow on Network Devices: To harness NetFlow’s power, network devices must be configured to export flow data. This involves enabling NetFlow on routers or switches, pointing the export at a NetFlow collector, and defining the desired flow parameters.

E) Choosing the Right NetFlow Analyzer: Organizations must invest in a robust NetFlow analyzer tool to effectively analyze and interpret NetFlow data. The ideal analyzer should offer comprehensive visualization, reporting capabilities, and advanced security features to maximize its potential.
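At its core, a flow exporter groups packets that share a key and keeps packet and byte counters per flow. The sketch below uses the classic 5-tuple (source and destination address, source and destination port, protocol) as the key, builds a flow table from hypothetical packet records, and lists the top talkers. It omits fields real NetFlow keys include, such as the ingress interface and type of service, as well as flow timeouts and the export format.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Packet(NamedTuple):
    src: str
    dst: str
    sport: int
    dport: int
    proto: str
    length: int   # bytes


FlowKey = Tuple[str, str, int, int, str]


def build_flows(packets: Iterable[Packet]) -> Dict[FlowKey, Dict[str, int]]:
    """Aggregate packets into per-flow packet and byte counters."""
    flows: Dict[FlowKey, Dict[str, int]] = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for p in packets:
        key = (p.src, p.dst, p.sport, p.dport, p.proto)
        flows[key]["packets"] += 1
        flows[key]["bytes"] += p.length
    return dict(flows)


def top_talkers(flows: Dict[FlowKey, Dict[str, int]], n: int = 3) -> List[Tuple[str, int]]:
    """Sum bytes per source address and return the n largest."""
    per_src: Counter = Counter()
    for (src, *_), counters in flows.items():
        per_src[src] += counters["bytes"]
    return per_src.most_common(n)
```

An analyzer built on records like these can flag a host whose byte count suddenly jumps, or a flow to an unusual destination port, which is the kind of deviation described in point A.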

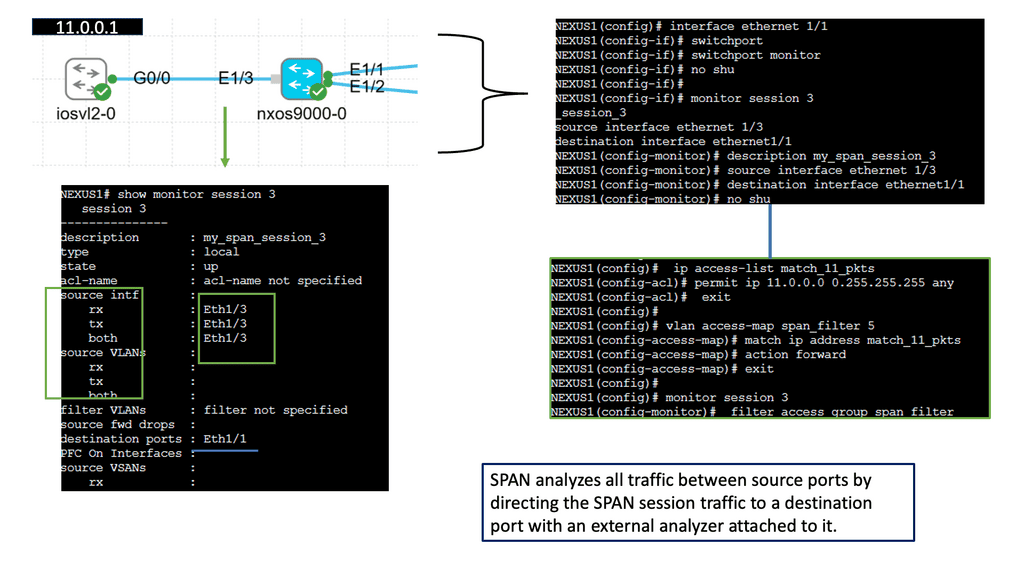

Understanding SPAN

SPAN (Switched Port Analyzer) copies traffic from one or more source ports or VLANs to a destination port, where a packet analyzer or security tool can inspect it. Understanding these fundamental concepts is the foundation of practical network analysis.

Knowing how to configure SPAN on Cisco NX-OS is crucial for harnessing its power. A SPAN session defines the source ports or VLANs, the direction of traffic to capture (receive, transmit, or both), and the destination port. SPAN also offers advanced configuration options that allow you to tailor sessions to specific monitoring requirements.

Once SPAN is configured, the next step is analyzing the captured data effectively. A range of tools can process SPAN traffic, from packet analyzers to flow analysis tools, each offering a different approach to gaining insight from the captured network data.

Understanding sFlow

sFlow is a technology that enables network administrators to gain real-time visibility into their network traffic. It provides a scalable and efficient solution for monitoring and analyzing network flows. Rather than inspecting every packet, an sFlow agent samples packets at a configured rate (for example, 1 in N) and periodically exports interface counters, so administrators can analyze packet-level data without introducing significant overhead.
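Because sFlow samples one packet in N rather than inspecting every packet, totals must be scaled back up by the sampling rate. The sketch below shows that estimate for hypothetical sample records; the record format and the 1-in-1000 rate are assumptions, and the estimate becomes less precise as the number of samples for a given source shrinks.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical sample record: (source address, sampled frame length in bytes)
Sample = Tuple[str, int]


def estimate_traffic(samples: Iterable[Sample],
                     sampling_rate: int = 1000) -> Dict[str, Dict[str, int]]:
    """Scale sampled packet and byte counts by the 1-in-N sampling rate."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: {"packets": 0, "bytes": 0})
    for src, length in samples:
        totals[src]["packets"] += sampling_rate        # each sample stands for ~N packets
        totals[src]["bytes"] += length * sampling_rate
    return dict(totals)
```

Two samples from one host at 1-in-1000 therefore suggest roughly 2,000 packets; sources that appear in only a handful of samples should be treated as rough estimates.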

Cisco NX-OS, the operating system used in Cisco Nexus switches, offers robust support for sFlow. It allows network administrators to configure sFlow on their switches, enabling them to collect and analyze flow data from the network. Integrating sFlow with Cisco NX-OS provides enhanced visibility and control over the network infrastructure.

Data Center Network Security:

What are MAC ACLs?

MAC ACLs, or Media Access Control Access Control Lists, are essential to network security. Unlike traditional IP-based ACLs, MAC ACLs operate at the data link layer, allowing for granular control over traffic within a local network. By filtering traffic based on MAC addresses, MAC ACLs provide an additional layer of defense against unauthorized access and ensure secure communication within the network.

MAC ACL Implementation

Implementing MAC ACLs offers several critical benefits for network security. Firstly, MAC ACLs enable administrators to control access to specific network resources based on MAC addresses, preventing unauthorized devices from connecting to the network.

Additionally, MAC ACLs can segment network traffic, creating isolated zones for enhanced security and improved network performance. By reducing unnecessary traffic, MAC ACLs also contribute to optimizing network bandwidth.
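The matching logic behind a MAC ACL can be sketched in a few lines of Python. This is a model, not a device configuration: it assumes wildcard-mask semantics (bits set to 1 are “don’t care”), the convention shown in many Cisco MAC ACL examples, plus first-match evaluation and an implicit deny at the end of the list. Confirm the mask convention for your platform; the helper names are hypothetical.

```python
from dataclasses import dataclass

MASK48 = (1 << 48) - 1  # MAC addresses are 48 bits wide


def mac_to_int(mac: str) -> int:
    """Accept 'aa:bb:cc:dd:ee:ff', 'aa-bb-cc-dd-ee-ff', or 'aabb.ccdd.eeff'."""
    digits = mac.replace(":", "").replace("-", "").replace(".", "")
    if len(digits) != 12:
        raise ValueError(f"not a MAC address: {mac!r}")
    return int(digits, 16)


@dataclass(frozen=True)
class MacAce:
    """One MAC ACL entry. Wildcard bits set to 1 are ignored when comparing."""
    action: str  # "permit" or "deny"
    src: int
    src_wildcard: int
    dst: int
    dst_wildcard: int

    def matches(self, src: int, dst: int) -> bool:
        src_care = ~self.src_wildcard & MASK48
        dst_care = ~self.dst_wildcard & MASK48
        return (src & src_care) == (self.src & src_care) and (
            dst & dst_care
        ) == (self.dst & dst_care)


ANY = MASK48  # an all-ones wildcard matches every address


def evaluate(acl: list[MacAce], src_mac: str, dst_mac: str) -> str:
    src, dst = mac_to_int(src_mac), mac_to_int(dst_mac)
    for ace in acl:  # entries are checked in order; the first match wins
        if ace.matches(src, dst):
            return ace.action
    return "deny"  # nothing matched: implicit deny at the end of the list
```

An entry such as `MacAce("permit", mac_to_int("0011.2200.0000"), 0x000000FFFFFF, 0, ANY)` admits any device whose address starts with the vendor prefix `00:11:22`, which is how MAC ACLs carve out zones of trusted hardware. Keep in mind that MAC addresses can be spoofed, so MAC ACLs work best as one layer among several.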

Understanding VLAN ACLs

VLAN ACLs provide a granular level of control over traffic within VLANs. By applying access control rules, network administrators can regulate which packets are allowed or denied based on various criteria, such as source/destination IP addresses, protocols, and port numbers.

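Proper configuration is key to using VLAN ACLs effectively. On Cisco NX-OS, the general process is to define an IP or MAC ACL, reference it from a VLAN access map with a match clause and an action (such as forward or drop), and apply the access map to one or more VLANs with a VLAN filter.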
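The evaluation order of a VLAN access map can be modeled in Python as shown below. This is a sketch under stated assumptions: entries are checked in sequence-number order, the first entry whose referenced ACL matches decides the action, and traffic matching no entry is dropped, which is the behavior commonly described for Cisco VACLs. The `Packet`, `AclRule`, and `AccessMapEntry` types are hypothetical simplifications.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    protocol: str  # "tcp", "udp", "icmp"
    dst_port: Optional[int] = None


@dataclass(frozen=True)
class AclRule:
    """A permit entry in the IP ACL that an access-map entry references."""
    protocol: str  # "ip" matches any protocol
    dst_net: str
    dst_port: Optional[int] = None  # None matches any port

    def matches(self, pkt: Packet) -> bool:
        if self.protocol != "ip" and pkt.protocol != self.protocol:
            return False
        if ipaddress.ip_address(pkt.dst_ip) not in ipaddress.ip_network(self.dst_net):
            return False
        return self.dst_port is None or pkt.dst_port == self.dst_port


@dataclass(frozen=True)
class AccessMapEntry:
    sequence: int
    acl: tuple[AclRule, ...]  # the ACL named in the entry's match clause
    action: str               # "forward" or "drop"


def apply_vlan_filter(entries: list[AccessMapEntry], pkt: Packet) -> str:
    # Lowest sequence number first; the first entry whose ACL matches decides.
    for entry in sorted(entries, key=lambda e: e.sequence):
        if any(rule.matches(pkt) for rule in entry.acl):
            return entry.action
    # Assumption: unmatched traffic is dropped; confirm for your platform.
    return "drop"
```

With a sequence-10 entry that drops Telnet (TCP 23) to `10.20.0.0/16` and a sequence-20 entry that forwards all other IP traffic to that subnet, Telnet is dropped, HTTPS is forwarded, and traffic to any other destination falls through to the final drop. Ordering the specific deny before the broad forward is what makes the filter work.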

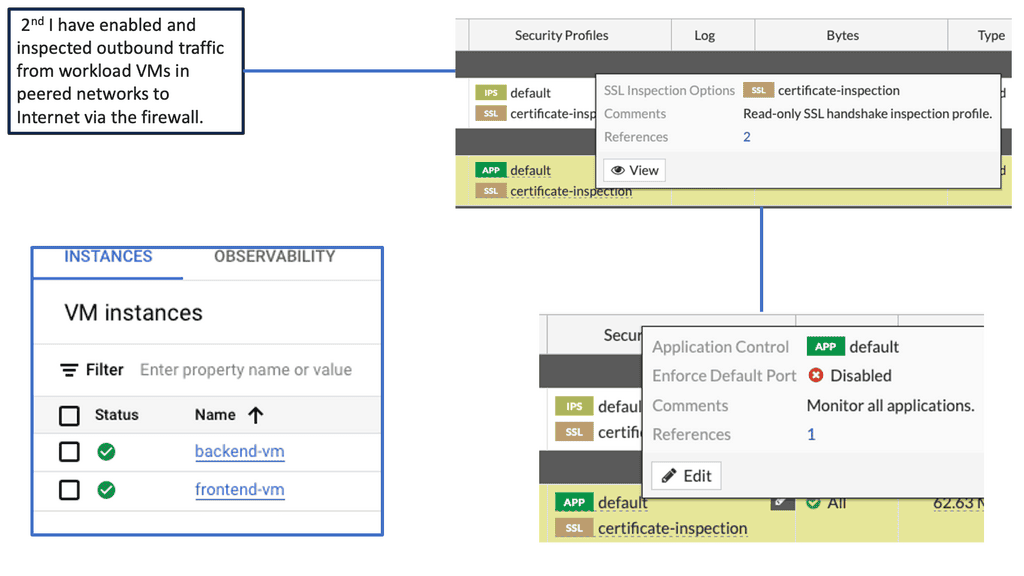

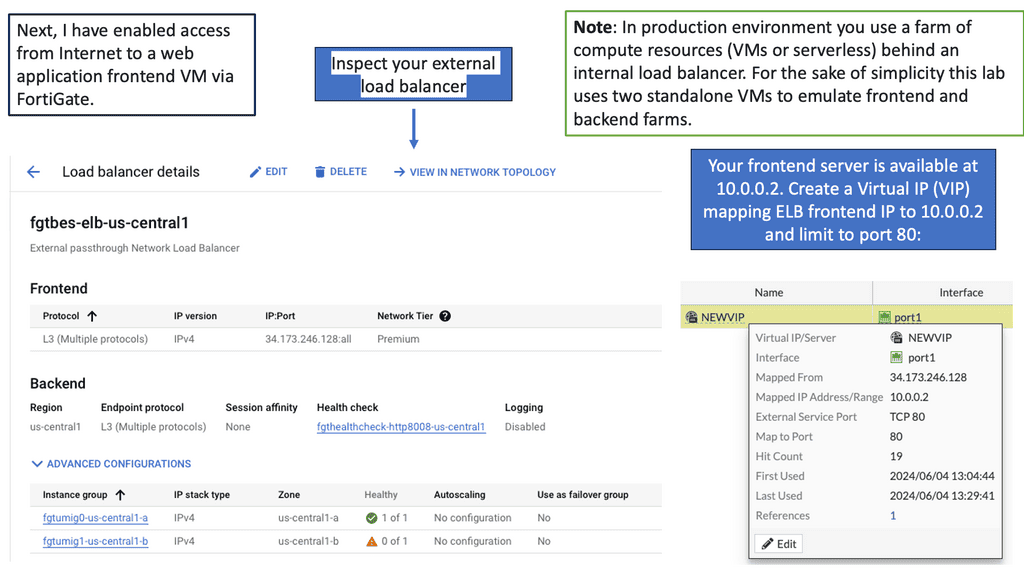

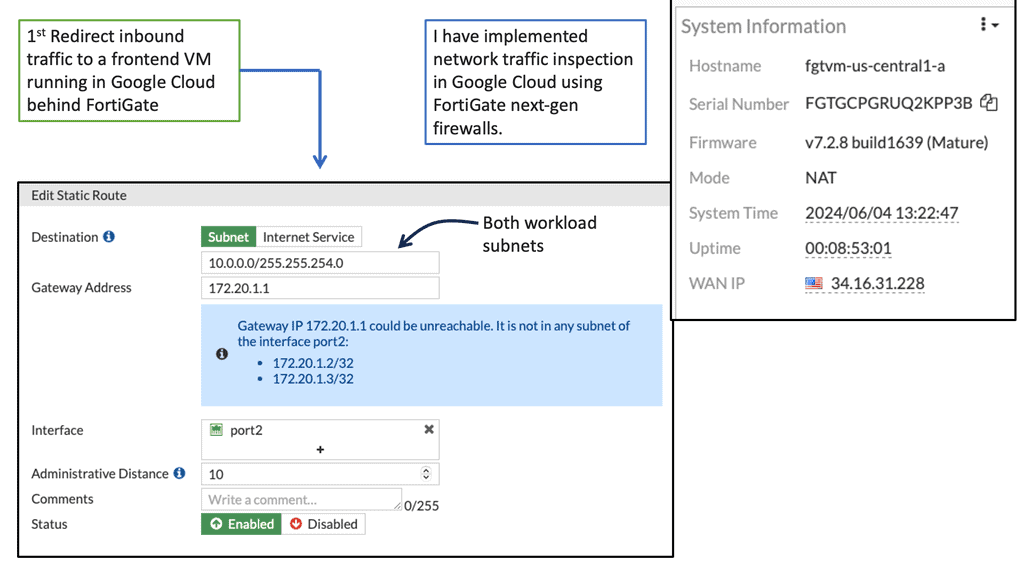

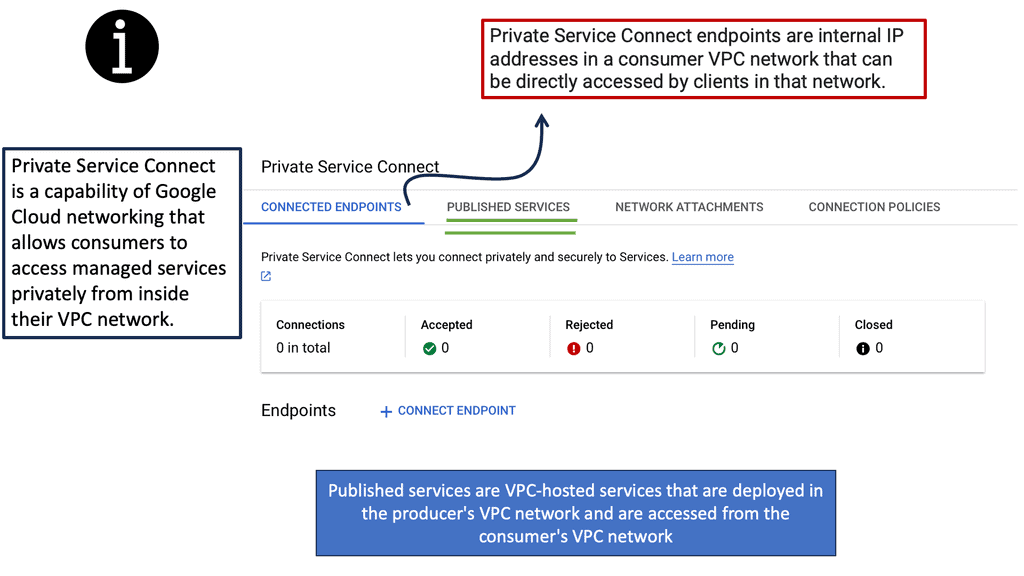

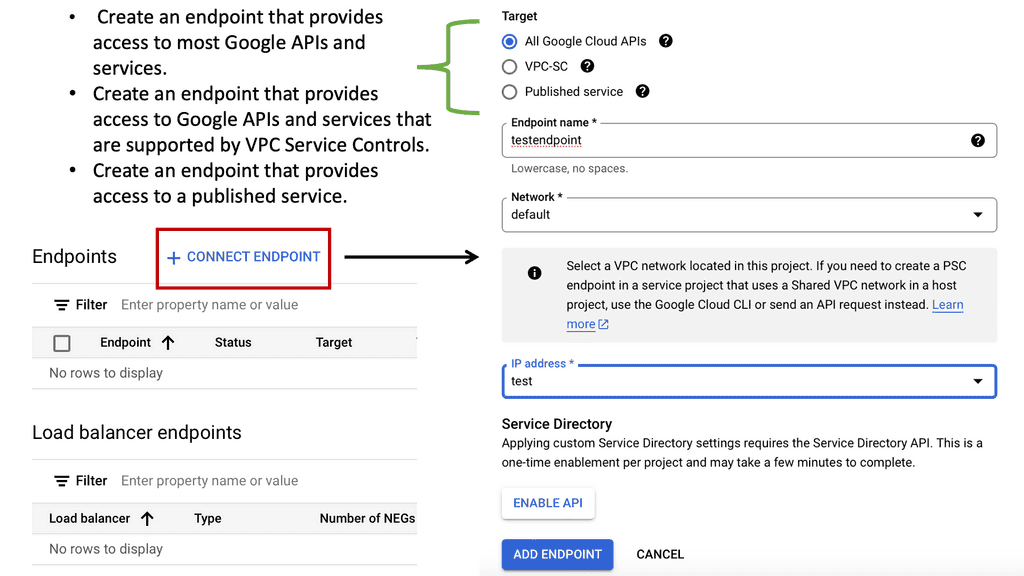

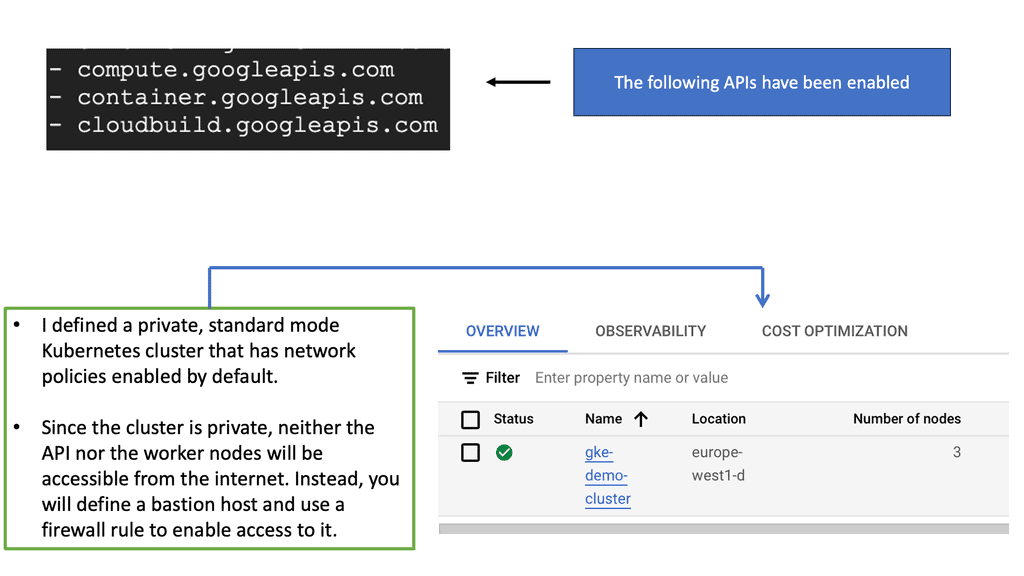

Google Cloud Network Security: FortiGate

Understanding FortiGate

FortiGate is a comprehensive network security platform developed by Fortinet. It offers a wide range of security services, including firewall, VPN, intrusion prevention, and more. With its advanced threat intelligence capabilities, FortiGate provides robust protection against various cyber threats.

FortiGate seamlessly integrates with Google Compute Engine, allowing you to extend your security measures to the cloud. By deploying FortiGate instances within your Google Compute Engine environment, you can create a secure perimeter around your resources and control traffic flow to and from your virtual machines.

Threat Detection & Prevention

One of the key advantages of using FortiGate with Google Compute resources is its advanced threat detection and prevention capabilities. FortiGate leverages machine learning and artificial intelligence to identify and mitigate potential threats in real-time. It continuously monitors network traffic, detects anomalies, and applies proactive measures to prevent attacks.

Centralized Management & Monitoring

FortiGate offers a centralized management and monitoring platform that simplifies the administration of security policies across your Google Compute resources. Through a single interface, you can configure and enforce security rules, monitor traffic patterns, and analyze security events. This centralized approach enhances visibility and control, enabling efficient management of your security infrastructure.


The Role of Network Security

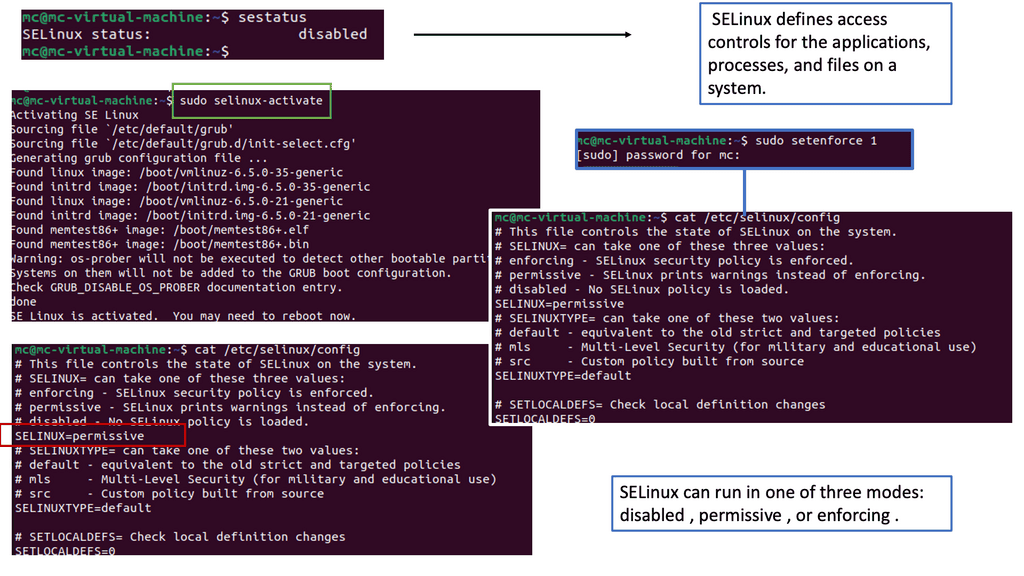

For network security to be sufficient, it is essential to understand its central concepts and the technologies and processes around them that make it robust and resilient to cyber-attacks. This becomes harder when the boundaries between network segments are not clearly demarcated, because that lack of demarcation blurs visibility.

Moreover, network security touches on many kinds of security controls that we need to consider, such as security gateways, SSL inspection, threat prevention engines, policy enforcement, cloud security solutions, threat detection and insights, and attack analysis with respect to attack frameworks, to name a few.

One of the fundamental components of network security is the implementation of firewalls and intrusion detection systems (IDS). Firewalls act as a barrier between your internal network and external threats, filtering out malicious traffic. An IDS, on the other hand, monitors network activity and alerts administrators to suspicious behavior, enabling rapid response to potential breaches.

A. Enforcing Strong Authentication and Access Controls

Controlling user access is vital to preventing unauthorized entry and data breaches, which can have severe consequences. Implement strict access controls, including strong password policies, multi-factor authentication such as two-factor authentication (2FA) or biometric verification, and role-based access control (RBAC). Limit user privileges and regularly review permissions to ensure they align with the principle of least privilege (PoLP).
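RBAC and a least-privilege review reduce to set operations, as the Python sketch below shows. The role names and permissions are hypothetical; in practice they would come from your identity provider or directory, and “permissions used” would come from access logs over the review period.

```python
# Hypothetical role definitions: role -> permissions it grants.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "helpdesk": {"reset_password", "view_tickets"},
    "netops": {"view_configs", "push_configs"},
    "auditor": {"view_configs", "view_logs"},
}


def effective_permissions(user_roles: set[str]) -> set[str]:
    """A user holds the union of the permissions of all assigned roles."""
    perms: set[str] = set()
    for role in user_roles:
        perms |= ROLE_PERMISSIONS.get(role, set())
    return perms


def is_allowed(user_roles: set[str], permission: str) -> bool:
    """RBAC check: access is granted only through an assigned role."""
    return permission in effective_permissions(user_roles)


def excess_privileges(user_roles: set[str], permissions_used: set[str]) -> set[str]:
    """Granted but unused permissions: candidates for removal under PoLP."""
    return effective_permissions(user_roles) - permissions_used
```

A periodic review then becomes a loop over users: anything returned by `excess_privileges` is a prompt to remove a role or split it into narrower ones.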

B. Regular Software Updates and Patch Management

Cybercriminals often exploit vulnerabilities in outdated software. Regularly updating and patching your network’s software, including operating systems, applications, and security tools, is crucial to preventing breaches. Where possible, automate the update process so that your network stays protected against emerging threats.
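A first step toward automated patch management is detecting what is out of date. The Python sketch below compares an inventory against minimum patched versions. The component names and version numbers are hypothetical illustrations, not real advisories, and the parser assumes purely numeric, dot-separated versions.

```python
def parse_version(version: str) -> tuple[int, ...]:
    """'15.2.11' -> (15, 2, 11). Assumes numeric, dot-separated components."""
    return tuple(int(part) for part in version.split("."))


def outdated(installed: dict[str, str], minimum: dict[str, str]) -> list[str]:
    """Return components whose installed version is below the minimum patched version."""
    stale = []
    for name, min_version in minimum.items():
        have = installed.get(name)
        # Components that are not installed cannot be vulnerable; skip them.
        if have is not None and parse_version(have) < parse_version(min_version):
            stale.append(name)
    return sorted(stale)
```

Comparing integer tuples rather than strings matters: as strings, `"15.2.7"` sorts after `"15.2.11"`, which would hide a missing patch.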

C. Data Encryption and Secure Communication

Data encryption is critical to network security, particularly when transmitting sensitive information. Use industry-standard encryption algorithms to protect data at rest and in transit, and use secure protocols such as HTTPS for web communication and VPNs for remote access.

Protecting sensitive data in transit is essential to maintaining network security. Encryption protocols such as Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL), safeguard data as it travels across networks. Virtual Private Networks (VPNs) additionally secure communication between remote locations by encrypting the traffic between them.
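On the client side, enforcing TLS for data in transit often comes down to a few settings. The Python sketch below uses the standard-library `ssl` module to build a context that verifies server certificates and host names and refuses protocol versions older than TLS 1.2. The TLS 1.2 floor is a common baseline chosen here for illustration, not a requirement stated in this post.

```python
import ssl


def make_client_context(min_version: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2) -> ssl.SSLContext:
    """Client TLS context: certificate and hostname verification, plus a protocol floor."""
    # create_default_context() enables CERT_REQUIRED and check_hostname and
    # loads the system's trusted CA certificates.
    ctx = ssl.create_default_context()
    # Refuse handshakes below the chosen version (SSLv3, TLS 1.0/1.1 by default).
    ctx.minimum_version = min_version
    return ctx
```

To use it, wrap a connected socket with `ctx.wrap_socket(sock, server_hostname="example.com")`; a server that only offers older protocol versions will fail the handshake instead of silently downgrading.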

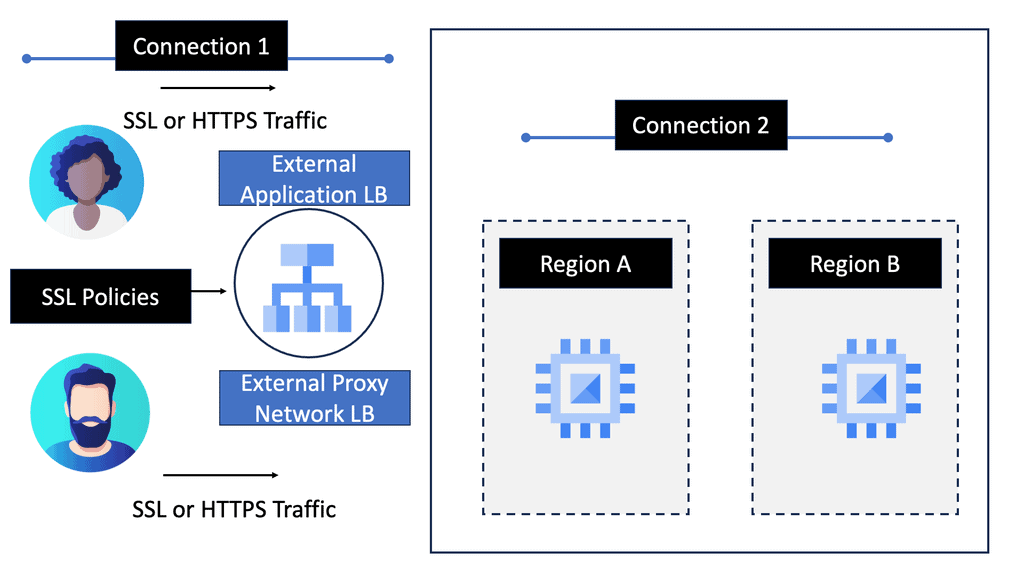

Example: SSL Policies

### What are SSL Policies?

SSL policies are a set of rules that define the behavior of SSL/TLS (Transport Layer Security) connections. These policies determine how data should be encrypted between servers and clients to prevent unauthorized access and ensure data integrity. Implementing robust SSL policies is essential for protecting sensitive information, such as personal data and financial transactions, from cyber threats.

### Benefits of Using SSL Policies on Google Cloud

Google Cloud offers extensive features for managing SSL policies, providing organizations with flexibility and control over their security configurations. Here are some key benefits:

– **Enhanced Security**: By enforcing strong encryption protocols, Google Cloud’s SSL policies help protect your data against man-in-the-middle attacks and other security threats.

– **Customizable Configurations**: Google Cloud allows for the customization of SSL policies to meet specific security requirements. This flexibility enables organizations to tailor their security posture according to their unique needs.

– **Seamless Integration**: Google Cloud’s SSL policies integrate seamlessly with other Google Cloud services, making it easier for businesses to maintain comprehensive security across their infrastructure.

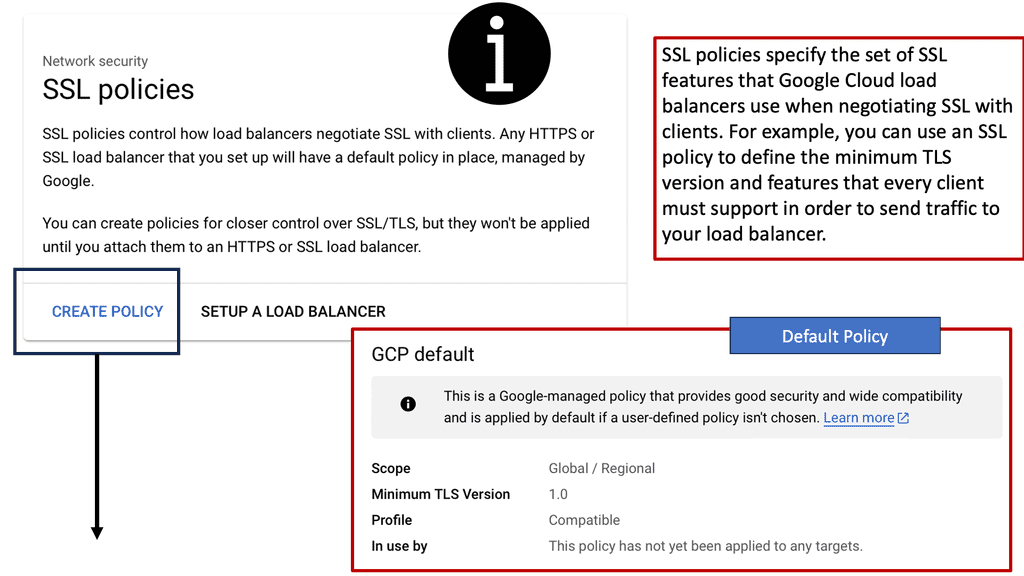

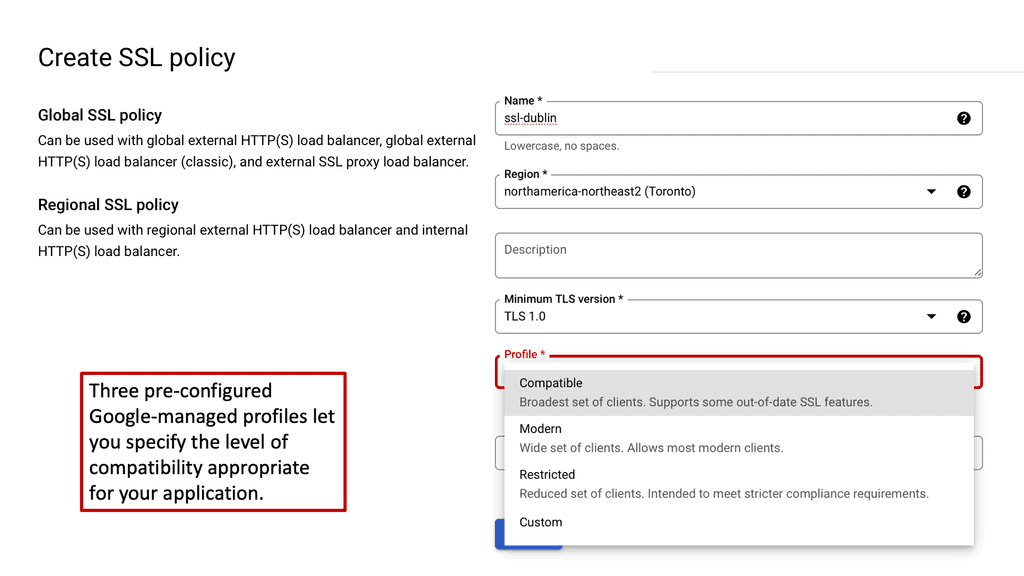

### Implementing SSL Policies on Google Cloud

Setting up SSL policies on Google Cloud is straightforward. Here’s a step-by-step guide to get you started:

1. **Access the Google Cloud Console**: Begin by logging into your Google Cloud account and navigating to the Google Cloud Console.

2. **Create an SSL Policy**: Under the “Network Services” section, select “Load Balancing” and then “SSL Policies.” Click on “Create Policy” to initiate the process.

3. **Configure Policy Settings**: Define the parameters of your SSL policy: the minimum TLS version, and either a pre-configured profile (COMPATIBLE, MODERN, or RESTRICTED) or a CUSTOM list of enabled features, which together determine the cipher suites offered to clients.

4. **Apply the Policy**: Once the SSL policy is created, apply it to your load balancers or other relevant services to enforce your desired security standards.
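Keeping policies up to date is easier if you audit them automatically. The Python sketch below checks policy descriptions against a baseline. It assumes each policy is a dictionary whose `name`, `profile`, and `minTlsVersion` keys mirror the Compute Engine SSL policy resource (verify against the API reference); how you fetch those dictionaries, for example by exporting them from the console or API, is left out.

```python
TLS_ORDER = {"TLS_1_0": 0, "TLS_1_1": 1, "TLS_1_2": 2}


def audit_ssl_policies(policies: list[dict], required_min: str = "TLS_1_2") -> list[str]:
    """Return human-readable findings for policies weaker than the baseline."""
    findings = []
    for policy in policies:
        name = policy.get("name", "<unnamed>")
        # Assumption: a missing minTlsVersion is treated as the most permissive value.
        min_tls = policy.get("minTlsVersion", "TLS_1_0")
        if min_tls not in TLS_ORDER:
            findings.append(f"{name}: unrecognized minTlsVersion {min_tls!r}, review manually")
        elif TLS_ORDER[min_tls] < TLS_ORDER[required_min]:
            findings.append(f"{name}: minTlsVersion {min_tls} is below {required_min}")
        if policy.get("profile") == "COMPATIBLE":
            findings.append(f"{name}: COMPATIBLE profile enables the widest range of cipher suites")
    return findings
```

Running a check like this on a schedule turns “Regularly Update Policies” from a reminder into an alert.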

### Best Practices for Managing SSL Policies

To maximize the effectiveness of your SSL policies on Google Cloud, consider the following best practices:

– **Regularly Update Policies**: Stay informed about the latest security threats and update your SSL policies accordingly to ensure optimal protection.

– **Monitor SSL Traffic**: Use Google Cloud’s monitoring tools to keep an eye on SSL traffic patterns. This can help identify potential security vulnerabilities and prevent breaches.

– **Educate Your Team**: Ensure that your IT staff is well-versed in managing SSL policies and understands the importance of maintaining robust security protocols.

D. Assessing Vulnerabilities

Conducting a comprehensive network infrastructure assessment before implementing network security is crucial. This assessment will identify potential vulnerabilities, weak points, and areas that require immediate attention, and it will serve as the foundation for a tailored security plan.

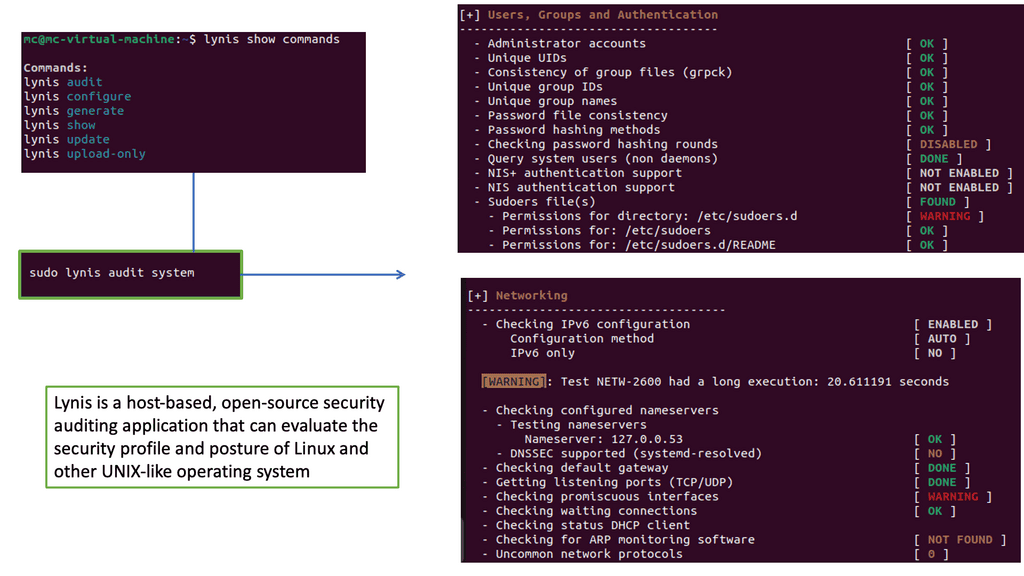

Example: What is Lynis?

Lynis is an open-source security auditing tool designed to assess the security defenses of Linux and Unix-based systems. It performs a comprehensive scan, evaluating various security aspects such as system hardening, vulnerability scanning, and compliance testing. Lynis provides valuable insights into potential risks and weaknesses by analyzing the system’s configurations and settings.
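After an audit run (`sudo lynis audit system`), Lynis writes a machine-readable report in addition to its console output. The Python sketch below summarizes such a report. It assumes the report’s `key=value` line format, where keys ending in `[]` repeat (for example `warning[]` and `suggestion[]`) and `hardening_index` holds the overall score; the report location (often `/var/log/lynis-report.dat`) and exact keys can vary by version, so treat them as assumptions.

```python
def parse_lynis_report(text: str) -> dict:
    """Parse key=value lines; keys ending in '[]' accumulate into lists."""
    report: dict = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip comments, blanks, and malformed lines
        key, value = line.split("=", 1)
        if key.endswith("[]"):
            report.setdefault(key[:-2], []).append(value)
        else:
            report[key] = value
    return report


def summarize(report: dict) -> tuple[int, int, int]:
    """Return (hardening index, number of warnings, number of suggestions)."""
    return (
        int(report.get("hardening_index", 0)),
        len(report.get("warning", [])),
        len(report.get("suggestion", [])),
    )
```

Tracking these three numbers across runs shows whether hardening work is actually reducing findings over time.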

E. Building a Strong Firewall

One of the fundamental elements of network security is a robust firewall. A firewall acts as a barrier between your internal network and the external world, filtering incoming and outgoing traffic based on predefined rules. Ensure you invest in a reliable firewall solution with advanced features such as intrusion detection and prevention systems.

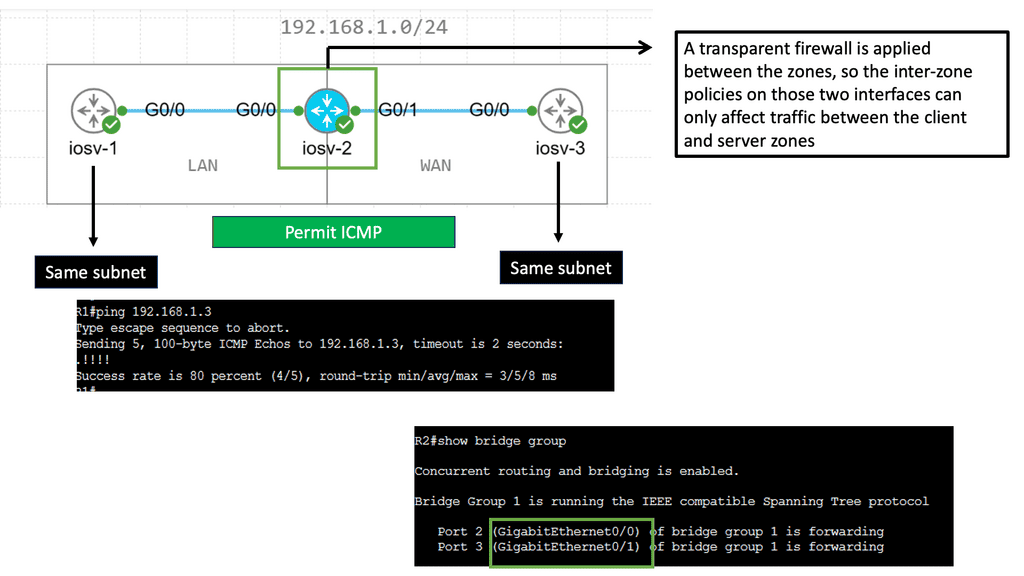

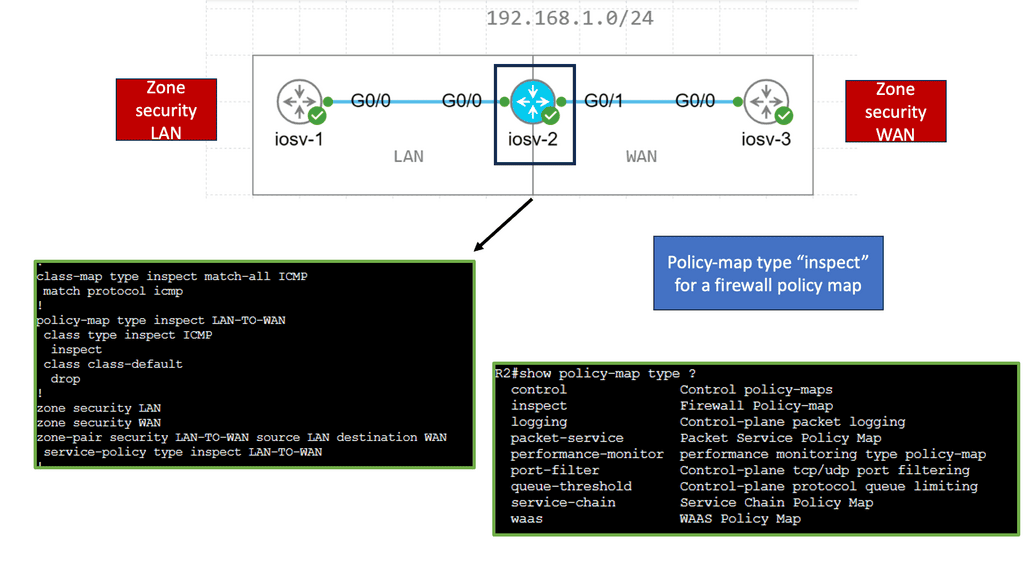

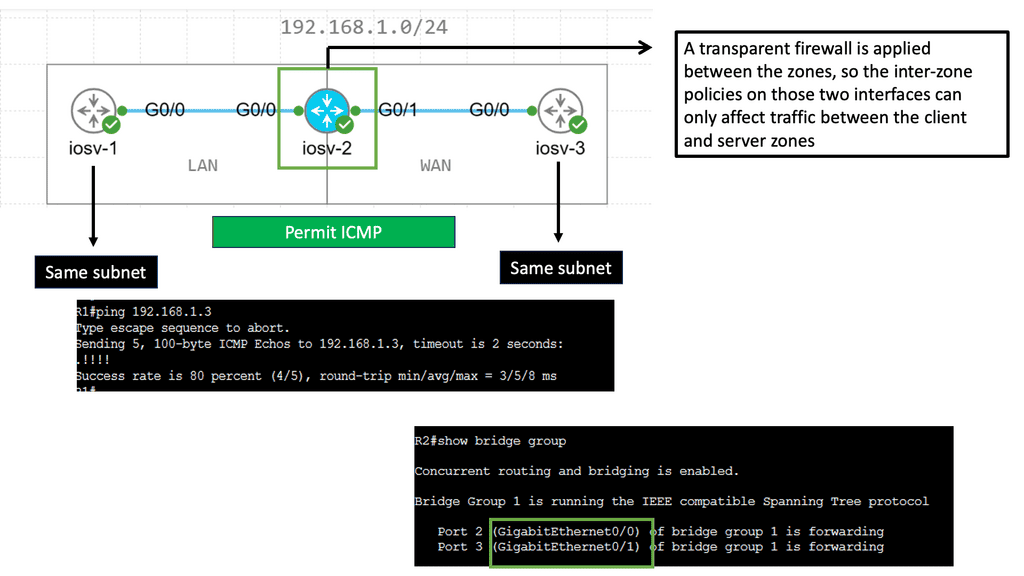

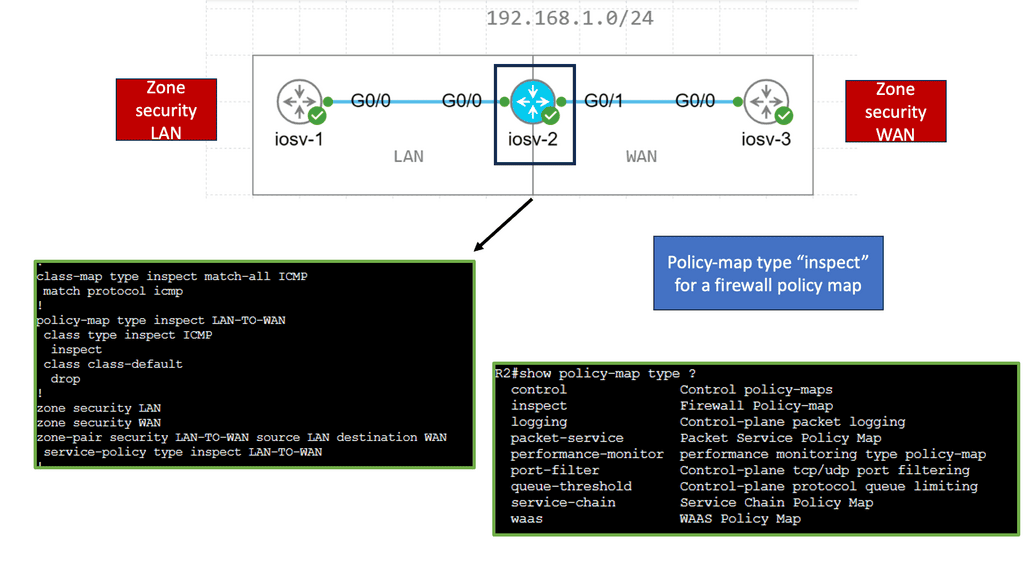

Example: Zone-Based Firewall (Transparent Mode)

Zone-based firewalls provide a robust and flexible approach to network security by dividing the network into security zones. Each zone is associated with specific security policies, allowing administrators to control traffic flow between zones based on predetermined rules. This segmentation adds an extra layer of protection and enables efficient traffic management within the network.

Transparent mode is an operating mode of zone-based firewalls that enhances network security while integrating seamlessly with the existing network infrastructure. Unlike traditional routed firewalls, which require explicit IP addressing and routing changes, a zone-based firewall in transparent mode bridges traffic at Layer 2 between its interfaces and inspects it without becoming a routed hop, so the network topology does not change. This makes it an ideal choice for organizations looking to enhance security without disrupting their existing network architecture.

Key Advantages:

One key advantage of zone-based firewalls in transparent mode is the simplified deployment process. Since they operate transparently, there is no need for complex network reconfiguration or IP address changes. This saves time and minimizes the risk of potential misconfigurations or network disruptions.

Another significant benefit is the increased visibility and control over network traffic. Zone-based firewalls in transparent mode allow organizations to monitor and analyze traffic at a granular level, effectively detecting and mitigating potential threats. Additionally, these firewalls provide a centralized management interface, simplifying the administration and configuration process.
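The zone model described above can be captured in a small decision function. The Python sketch below models the default behavior commonly documented for Cisco IOS zone-based policy firewalls: traffic within a zone passes, traffic between zones is dropped unless a zone-pair policy allows it, and traffic between a zoned and an unzoned interface is dropped. Interface names, zones, and services are hypothetical, and the special “self” zone is left out for brevity.

```python
from typing import Optional

# Hypothetical interface-to-zone assignment (None = interface not in any zone).
INTERFACE_ZONE: dict[str, Optional[str]] = {
    "gi0/0": "inside",
    "gi0/4": "inside",
    "gi0/1": "outside",
    "gi0/2": "dmz",
    "gi0/3": None,
}

# Zone-pair policies: (source zone, destination zone) -> services to inspect.
ZONE_PAIR_POLICY: dict[tuple[str, str], set[str]] = {
    ("inside", "outside"): {"http", "https", "dns"},
    ("outside", "dmz"): {"https"},
}


def zbf_decision(in_if: str, out_if: str, service: str) -> str:
    src, dst = INTERFACE_ZONE.get(in_if), INTERFACE_ZONE.get(out_if)
    if src is None and dst is None:
        return "pass"  # neither interface is zoned, so the firewall policy does not apply
    if src is None or dst is None:
        return "drop"  # zoned <-> unzoned traffic is dropped
    if src == dst:
        return "pass"  # intra-zone traffic is allowed by default
    allowed = ZONE_PAIR_POLICY.get((src, dst), set())
    # "inspect" = permitted and tracked statefully, so return traffic is allowed back.
    return "inspect" if service in allowed else "drop"
```

Note the asymmetry: inside users can reach HTTPS on the outside, but an outside host cannot open HTTPS toward the inside, because no `("outside", "inside")` zone pair exists.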

Example: Context-Based Access Control

The CBAC firewall, or Context-Based Access Control, is a stateful inspection firewall that goes beyond traditional packet filtering. Unlike simple packet filtering firewalls, CBAC examines individual packets and their context. This contextual analysis gives CBAC a more comprehensive understanding of network traffic, making it highly effective in identifying and mitigating potential threats.

CBAC firewall offers a range of features and benefits that make it a powerful tool for network security. Firstly, it provides application-level gateway services, allowing it to inspect traffic at the application layer. This capability enables CBAC to detect and block specific types of malicious traffic, such as Denial of Service attacks or unauthorized access attempts.

Additionally, the CBAC firewall supports dynamic protocol inspection, which means it can dynamically monitor and control traffic for various protocols. This flexibility allows for efficient and effective network management while ensuring that only legitimate traffic is permitted.
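The core idea of stateful inspection, letting return traffic back in only for sessions that were opened from the inside, can be modeled with a session table. The Python sketch below is a toy model, not CBAC’s implementation: it assumes the outbound ACL permits the traffic and that only inspected protocols get a temporary opening for replies. The class and method names are hypothetical.

```python
from typing import NamedTuple


class Conn(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str


class StatefulInspector:
    """Toy model of CBAC-style inspection on an inside/outside boundary."""

    def __init__(self, inspected_protocols: set[str]) -> None:
        self.inspected = set(inspected_protocols)
        self.sessions: set[Conn] = set()

    def outbound(self, conn: Conn) -> bool:
        # Assumption: the outbound ACL permits this traffic. State is recorded
        # only for protocols that inspection is configured for.
        if conn.proto in self.inspected:
            self.sessions.add(conn)
        return True

    def inbound(self, conn: Conn) -> bool:
        # A reply has source and destination swapped relative to the request;
        # only replies to recorded sessions get through the temporary opening.
        request = Conn(conn.dst_ip, conn.dst_port, conn.src_ip, conn.src_port, conn.proto)
        return request in self.sessions

    def close(self, conn: Conn) -> None:
        # Session teardown (for example TCP FIN/RST or an idle timeout) removes the opening.
        self.sessions.discard(conn)
```

This is what separates stateful inspection from a static packet filter: the inbound decision depends on what the inside host did first, not only on the packet’s own header fields.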

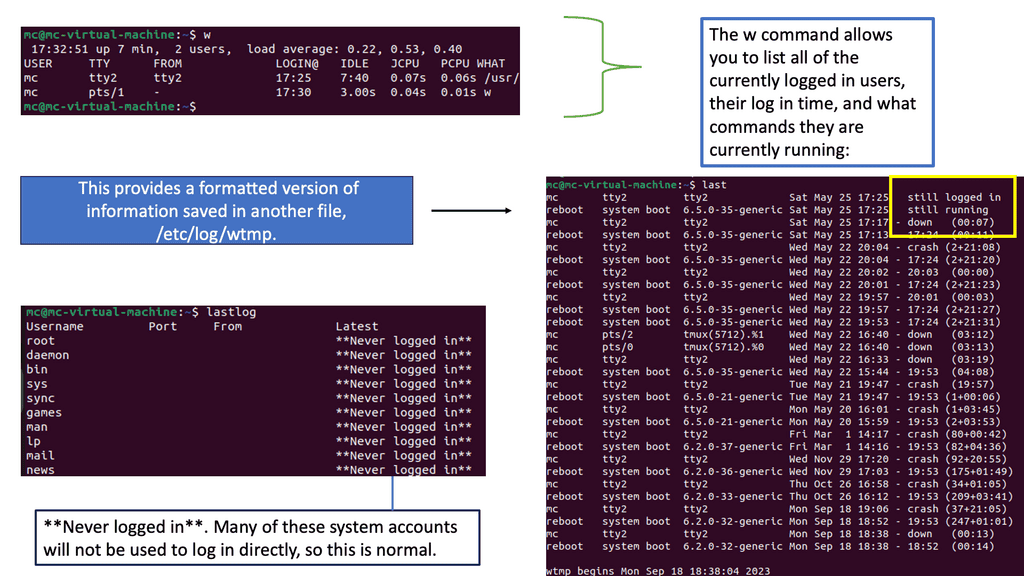

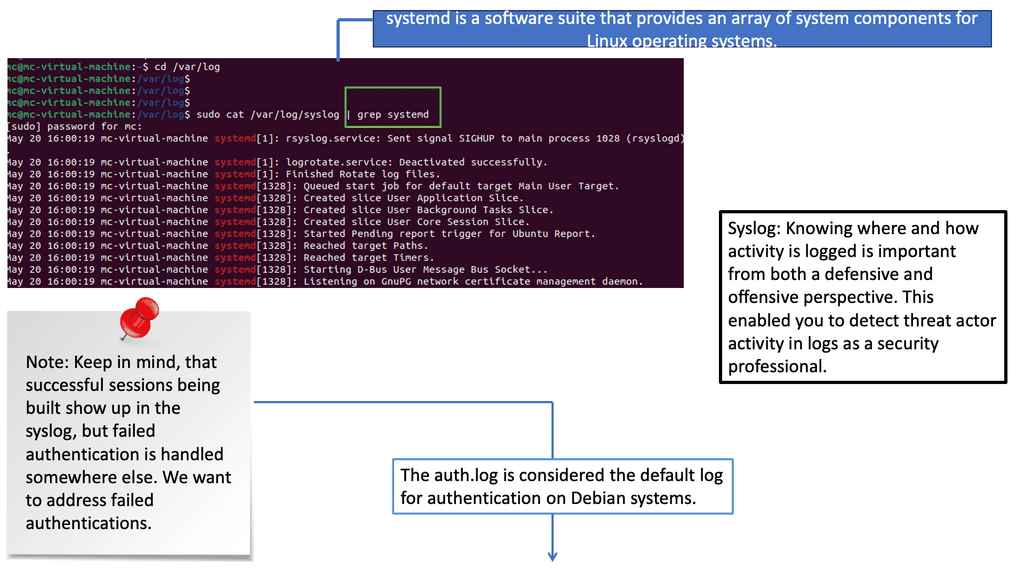

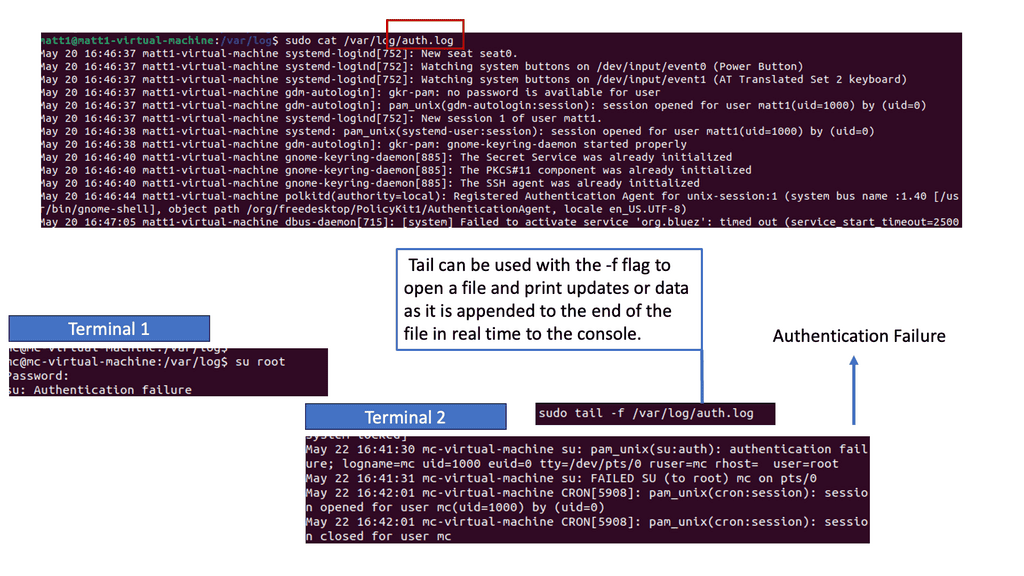

F. Monitoring and Intrusion Detection

Network security is an ongoing process that requires constant vigilance. Implement a robust monitoring and intrusion detection system (IDS) to detect and respond promptly to potential security incidents. Monitor network traffic, analyze logs, and employ intrusion prevention systems (IPS) to protect against attacks proactively.
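Log analysis is one of the simplest detection techniques to automate. The Python sketch below flags likely SSH brute-force sources by counting failed logins per source IP. It assumes OpenSSH-style log lines (“Failed password for [invalid user] NAME from IP port N”); the threshold is a hypothetical tuning value, and a production detector would also apply a sliding time window.

```python
import re
from collections import Counter

# Matches OpenSSH-style failures, with or without the "invalid user" prefix.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def brute_force_sources(log_lines: list[str], threshold: int = 5) -> dict[str, int]:
    """Return source IPs with at least `threshold` failed logins."""
    counts: Counter[str] = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}
```

A detection like this becomes prevention when its output feeds a blocking action, for example an IPS rule or a temporary firewall block for the offending address.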

**Knowledge Check: Malware**

A. – Virus: Antivirus software is used to protect against and remove malicious software, so it is no surprise that “virus” is one of the most commonly used words for malware. Malware is not always a virus, but all computer viruses are malware. For a virus to infect a system, the user must activate it, for example by running an infected program.

After infecting the system, the virus may inject code into other programs so that it regains control whenever those programs run. Even if the original executable and process are removed, the system remains infected as long as infected programs run, so the virus must be removed entirely.

B. – Worm: A worm is malware that spreads on its own, exploiting a vulnerability to move from system to system without user action. Notorious worms such as Code Red and Nimda caused severe damage worldwide. It is a misconception, though, that every worm is purely destructive: Welchia/Nachi removed another worm, Blaster, and patched the systems it infected so they were no longer vulnerable to Blaster. Removing a worm such as Blaster is not enough; if the vulnerability it exploits is not fixed, the system will be reinfected from another source.

C. – Trojan: Like viruses, Trojans are another type of malware. Their distinctive feature is that they appear to be something they are not. The name comes from the Trojan horse: during the Trojan War, the Greeks gave the Trojans a wooden horse as a “gift”. Rather than being a simple statue, it concealed Greek soldiers, who crept out of the horse at night and attacked Troy from within.

D. – Botnet: Viruses, worms, and Trojan horses can deliver bot clients as part of their payload. A botnet is a collection of endpoints infected with this particular type of malware. Each client connects, through a small piece of software, to command-and-control (C&C) infrastructure and receives commands from it. A botnet’s primary purpose is usually to generate income for its owner, but it can be used for various purposes, with the clients doing the work.
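Because bot clients check in with C&C infrastructure, one common network-side heuristic is to look for beaconing: connections from a host to the same destination at nearly constant intervals. The Python sketch below measures how regular those intervals are using the coefficient of variation (standard deviation divided by the mean). The thresholds are hypothetical tuning values, and many bots add random jitter precisely to evade this kind of check.

```python
import statistics


def looks_like_beacon(
    timestamps: list[float], min_events: int = 5, max_jitter_ratio: float = 0.1
) -> bool:
    """Flag a host-to-destination series whose connection intervals are nearly constant."""
    if len(timestamps) < min_events:
        return False  # too few connections to judge regularity
    ts = sorted(timestamps)
    intervals = [b - a for a, b in zip(ts, ts[1:])]
    mean = statistics.mean(intervals)
    if mean <= 0:
        return False
    # Coefficient of variation: low values mean machine-like regularity.
    return statistics.pstdev(intervals) / mean <= max_jitter_ratio
```

A series like 0, 60, 120, 181, 240, 300 seconds looks like a check-in loop, while irregular human browsing does not; in practice this is combined with other signals such as destination reputation.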

Hacking Stages

There are different stages of an attack chain, and with the correct network visibility, you can break the attack at each stage. First comes initial reconnaissance and access discovery, in which a bad actor tries to understand the lay of the land and determine the next moves. Once they understand the environment, they try to exploit it.

Stage 1: Deter

The overall goal is to deter threats and unauthorized access, detect suspicious behavior and access, and automatically respond and alert. For the first stage, deterrence, network security provides anti-malware devices, perimeter security devices, identity and access management, firewalls, and load balancers.

Stage 2: Detect

The next dimension of security is detection. Here, we can use IDS, log insights, and security feeds, combined with analytics and flow data. Signature-based detection can also assist here.

Stage 3: Respond

Then, we need to focus on how to respond, using anomaly detection and response solutions. All of this must be integrated with enforcement points such as the firewall, so that suspicious access can be blocked and deterred.

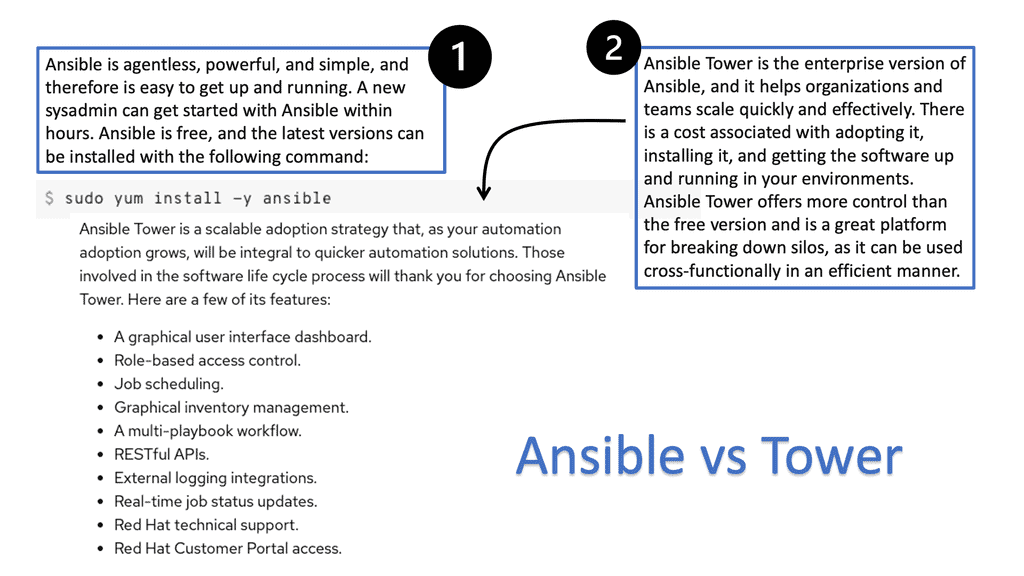

Red Hat Ansible Tower

Ansible aims to be a common automation language across an organization, and Ansible Tower can act as the common language between security tools. This reduces repetitive work and lets you respond to security events in a standardized way. If you want a unified approach, automation with a platform such as Ansible Tower can help, provided you integrate it with your security technologies.

Example: Automating firewall rules.

We can add an allowlist entry to the firewall configuration to allow traffic from one machine to another. A playbook can first take the source and destination IPs as variables, then define source and destination objects, and finally define the access rule between those objects. All of this can be automated.
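Ansible playbooks are written in YAML; to keep every example in this post in one language, the sketch below models the same allowlist logic in Python instead. `FirewallConfig` is a hypothetical in-memory stand-in for a firewall’s object and rule tables. The key property it illustrates is idempotency, which Ansible modules aim for: running the task twice changes nothing the second time, and the function reports whether anything changed, much like Ansible’s `changed` status.

```python
from dataclasses import dataclass, field


@dataclass
class FirewallConfig:
    """Hypothetical stand-in for a firewall's object and rule tables."""
    objects: dict[str, str] = field(default_factory=dict)  # object name -> IP
    rules: list[tuple[str, str, str]] = field(default_factory=list)  # (src obj, dst obj, action)


def ensure_allow(cfg: FirewallConfig, src_ip: str, dst_ip: str) -> bool:
    """Create host objects and an allow rule only if missing; return True if anything changed."""
    changed = False
    src_obj, dst_obj = f"host_{src_ip}", f"host_{dst_ip}"
    # Step 1: define source and destination objects from the input variables.
    for name, ip in ((src_obj, src_ip), (dst_obj, dst_ip)):
        if cfg.objects.get(name) != ip:
            cfg.objects[name] = ip
            changed = True
    # Step 2: define the access rule between those objects.
    rule = (src_obj, dst_obj, "allow")
    if rule not in cfg.rules:
        cfg.rules.append(rule)
        changed = True
    return changed
```

Idempotency is what makes automated responses safe to repeat: an incident workflow can call the same task many times without piling up duplicate rules.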

No single device can stop an attack. We need multiple approaches that can break the attack at any point in the attack chain. Whether bad actors are running TCP scans, ARP scans, or malware scans, you want to identify them before they become a threat. Always assume a breach, use every available feature, and treat every application as critical and worth protecting.

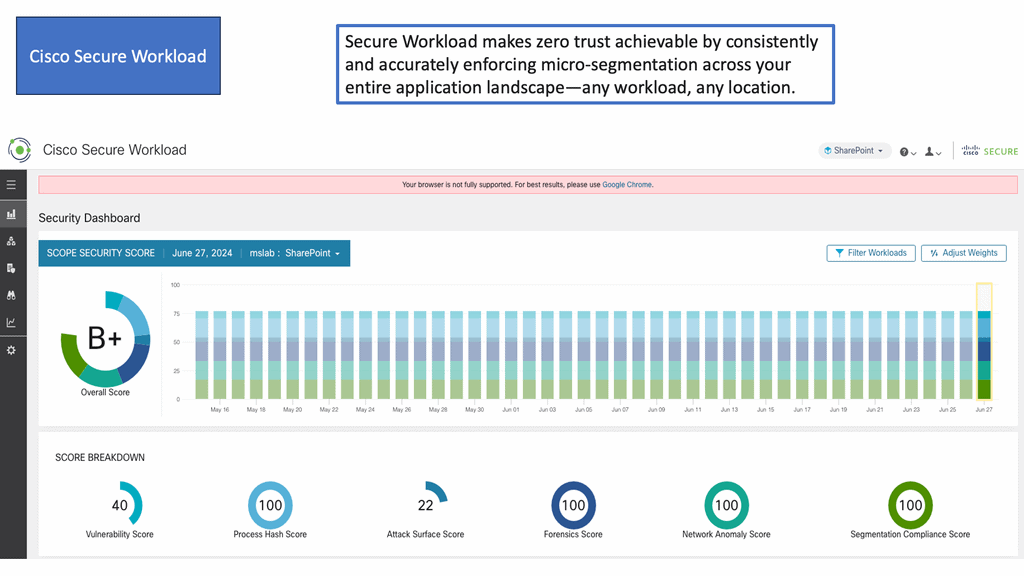

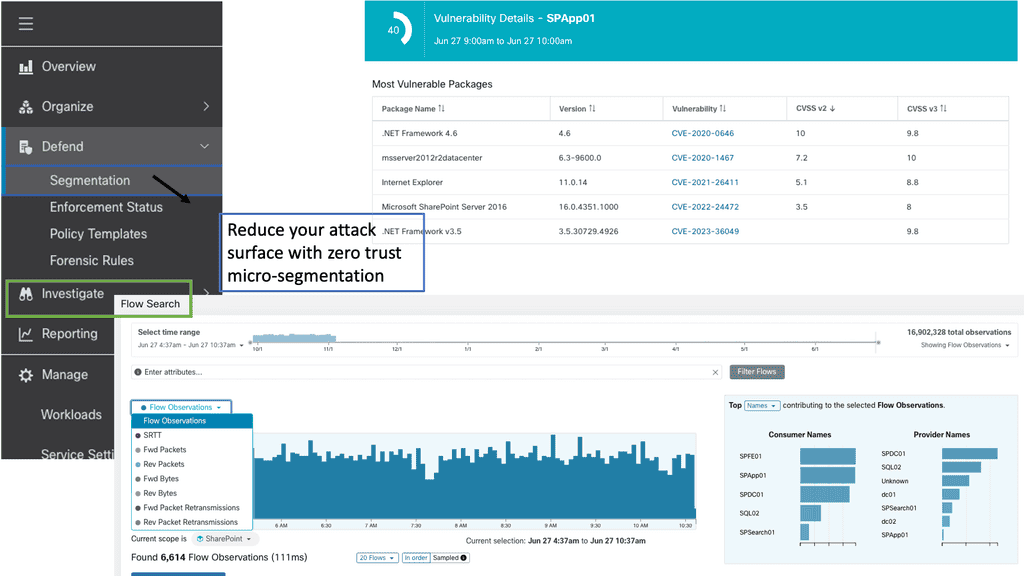

We must improve monitoring, investigation, and detection capabilities across various technologies. A zero-trust architecture can help improve monitoring and detection, while network visibility, logging, and Encrypted Traffic Analytics (ETA) improve investigation capabilities.

Knowledge Check: Ping Sweeps

– Rather than blindly attacking every address in a range, an attacker first identifies which systems are live, that is, which ones respond to the network messages sent to them. A ping sweep is one way to determine which systems are alive before attempting to attack or probe them.

– A ping sweep sends a ping to every address in the target range. A ping is an ICMP echo request, and a live host normally answers with an echo reply. A sweep may go unnoticed if it does not bombard targets with unusually large or frequent messages. However, firewall rules often block ICMP messages from outside the network, so ping sweeps may not succeed.
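From the defender’s side, a sweep leaves a recognizable pattern: one source sending echo requests to many distinct destinations. The Python sketch below flags that pattern from a list of ICMP events. The `(src_ip, dst_ip, icmp_type)` event shape and the threshold are hypothetical; in practice the events would come from flow records or a firewall log.

```python
from collections import defaultdict

ICMP_ECHO_REQUEST = 8  # ICMP type number for an echo request ("ping")


def ping_sweep_sources(
    events: list[tuple[str, str, int]], min_targets: int = 20
) -> dict[str, int]:
    """Return sources that sent echo requests to at least `min_targets` distinct hosts."""
    targets: dict[str, set[str]] = defaultdict(set)
    for src, dst, icmp_type in events:
        if icmp_type == ICMP_ECHO_REQUEST:
            targets[src].add(dst)
    return {src: len(dsts) for src, dsts in targets.items() if len(dsts) >= min_targets}
```

Counting distinct targets rather than total packets is the point: a monitoring server pinging one gateway thousands of times is normal, whereas one source touching 30 different addresses in a subnet looks like reconnaissance.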

**Network-derived intelligence**

When implementing network security, consider how much value the network and its data add. Visibility can still be gained with an agent-based approach, where an agent collects data from the host and sends it to, for example, a data lake on which you build dashboards and queries. However, an agent-based approach has blind spots: it misses the holistic network view and cannot be used with unmanaged devices such as far-edge IoT.

Information gathered from the host misses data that can only be derived from the network. Network-derived traffic analysis is especially useful for investigating unmanaged hosts such as IoT devices, and indeed any host and the data it actually sends.

This is not something that can be derived from a log file. The problem with log data is that once a bad actor gets inside the network, one of the first things they do to cover their tracks is tamper with the logs through log spoofing and log injection.

**Agent-based and network-derived intelligence**

The two approaches can be combined: agent data can be supplemented by the deep packet inspection that underpins network-derived intelligence. Network-derived intelligence lets you extract many metadata attributes, such as what kind of traffic it is, what its characteristics are, and, for a video stream, what the frame rate is.

The advantage is that this covers both north-south and east-west traffic, as well as unmanaged devices. By combining an agent-based approach with network-derived intelligence, we extend visibility across the entire infrastructure.

**Detecting rogue activity: Layers of security**

Now, we can detect new vulnerabilities, such as outdated SSL/TLS ciphers; shadow IT activity, such as torrenting and crypto mining; and suspicious activity, such as port spoofing. Rogue activity such as crypto mining is a big concern: it disrupts many workflows, and many breaches and attacks end with crypto-mining software being installed.

Mining is an easy way for a bad actor to make money. Mining software does not generate log files and involves no command-and-control communication, and its traffic may not look very different from normal traffic. Detecting it therefore relies not on an agent but on examining network traffic for anomalies.
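A simple form of network anomaly detection compares each host against its own history. The Python sketch below flags hosts whose current value, for example outbound bytes per hour, sits more than a chosen number of standard deviations (a z-score) above that host’s baseline mean. The metric, the threshold of 3, and the sample data are assumptions for illustration; real systems use richer features and models, but the baseline-then-deviation idea is the same.

```python
import statistics


def anomalous_hosts(
    baseline: dict[str, list[float]],
    current: dict[str, float],
    z_threshold: float = 3.0,
) -> list[str]:
    """Flag hosts whose current value is more than z_threshold standard
    deviations above that host's own historical mean."""
    flagged = []
    for host, history in baseline.items():
        if host not in current or len(history) < 2:
            continue  # not enough history to judge
        mean = statistics.mean(history)
        # Guard against a perfectly flat history (standard deviation of 0).
        sd = statistics.stdev(history) or 1e-9
        if (current[host] - mean) / sd > z_threshold:
            flagged.append(host)
    return sorted(flagged)
```

A host that normally sends about 100 units per hour and suddenly sustains 500 stands out immediately, even though no log entry or C&C connection revealed it.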

**Observability & The SIEM**

Network data makes observability and the SIEM more targeted, yielding better information. The network gives us new capabilities to detect and investigate threats, adding a further layer of defense in depth and making you more aware of the cloud threats currently in play. NetFlow can be used for network monitoring, detection, and response: you can detect threats and integrate the results with other tools, so that you see a network intrusion as it begins, based on what the network itself observes.

You can’t protect what you can’t see.

The first step in the policy optimization process is understanding how the network connects, what is connecting, and what should be connecting. You can’t protect what you can’t see, so everything disparately managed within a hybrid network must be fully understood and consolidated. Second, once you know how things connect, how do you ensure they don’t reconnect through a broader definition of connectivity?

You must support different user groups, security groups, and IP addresses; you can no longer rely on IP addresses alone to implement security controls. We need visibility at the traffic-flow, process, and contextual-data levels. Without this granular application visibility, mapping normal traffic flows and spotting irregular communication patterns is challenging.

Complete network visibility

We also need to be able to identify threats easily, which requires a multi-dimensional security model and good visibility. Network visibility is integral to security, compliance, troubleshooting, and capacity planning. Unfortunately, custom monitoring solutions cannot cope with the explosive growth of networks.

Vendor solutions exist as well, such as Cisco’s Nexus Dashboard Data Broker (NDDB), a software-defined, programmable packet brokering solution that can aggregate, filter, and replicate network traffic collected using SPAN or optical TAPs for network monitoring and visibility.

What prevents visibility?

Many things can prevent visibility. First, there are too many devices, with complexity and variance between vendors in how they are managed; even CLI commands from the same vendor vary. Too many changes also make it impossible to meet service level agreements (SLAs), as connectivity keeps being layered on without a full understanding of how the network connects.

This results in complex firewall policies: you may have access without knowing whether you should, and policies grow large and complex without context. More often than not, the network as a whole lacks visibility. For example, AWS teams understand the Amazon cloud but have no visibility on-premises. Responsibilities are also distributed across multiple groups, which results in fragmented processes and workflows.

Security Principles: Data-flow Mapping

Network security starts with the data. Data-flow mapping lets you map and understand how data flows within an organization: across your hybrid network and between all the different resources and people, such as internal employees, external partners, and customers. Knowing the who, what, when, where, why, and how of your data creates a strong security posture and lets you understand access to sensitive data.

Data-flow mapping will help you create a baseline. Once you have one, you can start chaos engineering projects to understand your environment and its limits, for example a Kubernetes chaos engineering project that breaks systems in a controlled manner.
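Once flows are mapped, a baseline makes changes visible. The Python sketch below compares currently observed flows against a recorded baseline to find new flows (possible policy violations), unused flows (possible excess access), and flows that reach destinations holding sensitive data. The workload labels and ports are hypothetical; real inputs would come from flow telemetry enriched with application context.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str  # workload or group label, e.g. "web-tier" (hypothetical)
    dst: str
    port: int


def new_flows(baseline: set[Flow], observed: list[Flow]) -> list[Flow]:
    """Flows seen now that never appeared while the baseline was recorded."""
    return sorted(set(observed) - baseline)


def unused_flows(baseline: set[Flow], observed: list[Flow]) -> list[Flow]:
    """Baseline flows no longer seen: candidates for tightening access."""
    return sorted(baseline - set(observed))


def sensitive_flows(observed: list[Flow], sensitive: set[str]) -> list[Flow]:
    """Flows that reach a destination labelled as holding sensitive data."""
    return sorted({f for f in observed if f.dst in sensitive})
```

For example, if `web-tier` suddenly talks directly to `customer-db`, bypassing the application tier, `new_flows` surfaces it at once, and `sensitive_flows` shows it touches sensitive data.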

What prevents mapping sensitive data flows

What prevents mapping sensitive data flow? Firstly, there is an inability to understand how the hybrid network connects. Do you know where sensitive data is, how to find it, and how to ensure it has the minimum necessary access?

With many teams managing different parts of the environment and applications deployed at a rapid pace, documentation and filing systems are often missing, and application connectivity requirements go unrecorded. People focus on connectivity rather than documentation, and more often than not the result is an overconnected network environment.

We often connect first and think about security later. We also often cannot tell whether application connectivity violates security policy or whether applications lack the resources they require. Finally, there is a lack of visibility into the cloud and the applications and resources deployed there. What is in the cloud, and how is it connected to on-premises systems and to external Internet access?

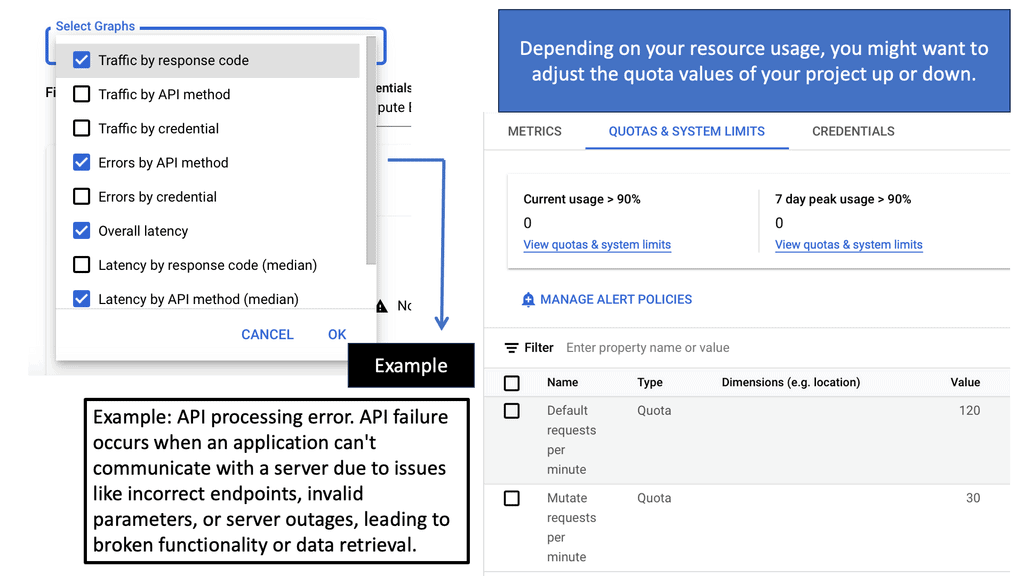

Network Security and Telemetry

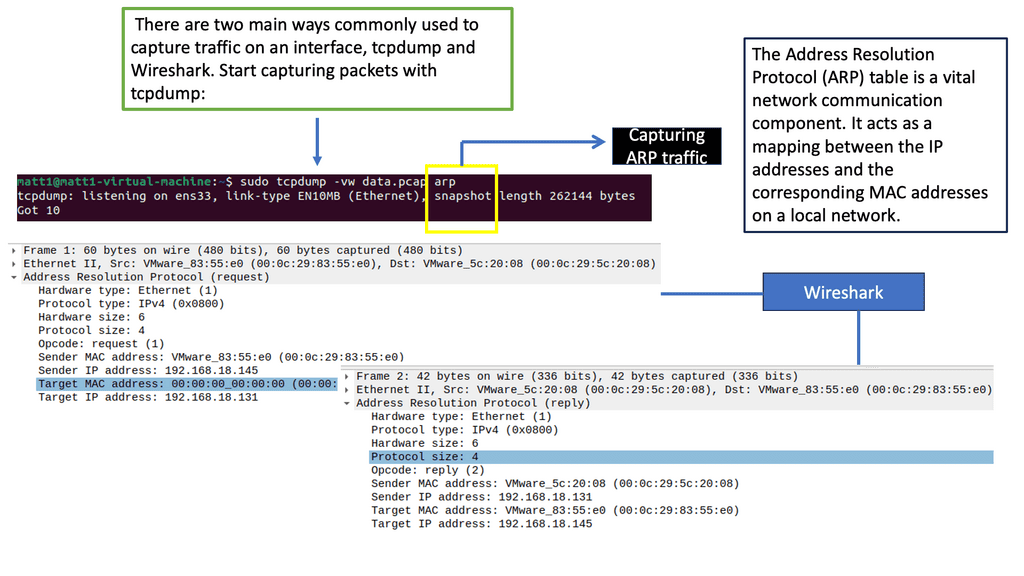

Implementing network security involves using different types of telemetry for monitoring and analysis, including various kinds of packet analysis and telemetry data. Packet analysis is critical and involves tools and technologies such as packet brokers. In addition, SPAN ports and network TAPs need to be placed strategically in the network infrastructure.

Example Telemetry Technologies

Telemetry such as flow, SNMP, and API data is also examined. Flow telemetry covers technologies such as NetFlow and IPFIX, and API telemetry is another source. Logs, too, provide a wealth of information. So there are different types of telemetry and different ways of collecting and analyzing them, and they can be used from both the network and the security perspective.
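As a worked example of SNMP telemetry, interface utilization is derived from two readings of an octet counter: utilization % = (Δoctets × 8) / (interval in seconds × interface speed in bit/s) × 100. The Python sketch below implements that formula and handles a single counter wrap. It assumes readings of an IF-MIB octet counter such as the 64-bit `ifHCInOctets` (or the 32-bit `ifInOctets`, which wraps far sooner on fast links); the polling itself is left out.

```python
def counter_delta(prev: int, curr: int, bits: int = 64) -> int:
    """Difference between two SNMP counter readings, allowing for one wrap."""
    if curr >= prev:
        return curr - prev
    return curr + (1 << bits) - prev  # the counter rolled over past 2**bits - 1


def utilization_pct(
    prev_octets: int, curr_octets: int, interval_s: float, if_speed_bps: int, bits: int = 64
) -> float:
    """Percent utilization = (delta_octets * 8) / (interval_s * if_speed_bps) * 100."""
    delta_bits = counter_delta(prev_octets, curr_octets, bits) * 8
    return delta_bits / (interval_s * if_speed_bps) * 100
```

For example, 187,500,000 octets over a 300-second poll on a 10 Mbit/s link is 1.5 Gbit out of a possible 3 Gbit, or 50% utilization.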

Threat Detection & Response

From the security perspective, this telemetry serves threat detection and response; from the network perspective, it serves network and application performance monitoring. Much of this telemetry can be used for security, even though these technologies were initially viewed as performance monitoring tools.

However, security and networking have converged to meet cybersecurity use cases. In summary, flow, SNMP, and API telemetry serve network and application performance, while encrypted traffic analysis and machine learning serve threat and risk identification for security teams.

The issues with packet analysis: Encryption.

The issue with packet analysis is that much of today’s traffic is encrypted, especially with TLS 1.3 and at the WAN edge. How do you decrypt all of it, and how do you store it? Decrypting traffic can itself create an exploit and a potential attack surface, and you don’t want to decrypt everything anyway.

Do not fully decrypt the packets.

One possible solution is not to fully decrypt the packets. The packet headers, such as the Layer 2 and TCP headers, remain visible, and from them you can begin to distinguish expected traffic from malicious traffic. You can look at packet lengths, the order and timing of packet arrivals, and which DNS server is used.

You can also look at round-trip times and connection times. Many insights and features can be extracted from encrypted traffic without fully decrypting it, and these features can be combined and fed to different machine learning models to distinguish good traffic from bad.
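The kind of feature extraction described above can be sketched in Python using only metadata that stays visible under encryption: packet sizes, arrival times, and direction. The input format and the particular feature set are hypothetical choices for illustration; production encrypted traffic analysis uses richer features (for example TLS handshake metadata) and trained models downstream.

```python
import statistics


def flow_features(packets: list[tuple[float, int, int]]) -> dict:
    """packets: (timestamp_s, size_bytes, direction), where direction is +1 for
    client-to-server and -1 for server-to-client. No payload is ever read."""
    if not packets:
        raise ValueError("need at least one packet")
    pkts = sorted(packets)  # order by arrival time
    times = [t for t, _, _ in pkts]
    sizes = [s for _, s, _ in pkts]
    gaps = [b - a for a, b in zip(times, times[1:])]
    return {
        "packet_count": len(pkts),
        "mean_size": statistics.mean(sizes),
        "max_size": max(sizes),
        "mean_gap_s": statistics.mean(gaps) if gaps else 0.0,
        "bytes_up": sum(s for _, s, d in pkts if d > 0),
        "bytes_down": sum(s for _, s, d in pkts if d < 0),
        "first_sizes": sizes[:5],  # sequence of initial packet lengths
    }
```

Each flow becomes a fixed set of numbers, which is the form a classifier needs; the sequence of initial packet lengths is especially telling because handshakes and first requests differ between applications.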

You don’t need to decrypt everything. Without looking at the actual payload, the right tools can tell from the pattern of the packets that one connection goes to a malicious website and another to a legitimate one.

Stage 1: Know your infrastructure with good visibility

The first step is getting to know all the traffic in your infrastructure, across on-premises, cloud, and multi-cloud environments. That requires high visibility across all of them.

Stage 2: Implement security tools

In all environments, we have infrastructure that our applications and services ride upon. Several tools protect this infrastructure, placed in different parts of the network: firewalls, DLP, email gateways, and SIEM, along with other tools that carry out various security functions. These tools will not disappear or be replaced anytime soon, but they must be better integrated.

Stage 3: Network packet broker

You can introduce a network packet broker: a device that collects traffic from across the network and forwards it to the security tools you already have in place. This helps ensure there are no blind spots in the network. The packet broker should be able to deliver traffic from any workload to any tool.
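At its core, a packet broker filters traffic and replicates each packet to every tool that needs it. The Python sketch below models that with match rules that map to tool lists, delivering each packet to a given tool at most once even when several rules match. The dictionary packet format, rule names, and tool names are hypothetical; real brokers do this in hardware or optimized software at line rate.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BrokerRule:
    name: str
    match: Callable[[dict], bool]  # filter: does this packet belong to the rule?
    tools: list[str]               # where matching packets are replicated


def broker(packets: list[dict], rules: list[BrokerRule]) -> dict[str, list[dict]]:
    """Return tool -> packets delivered to it; each tool gets a packet at most once."""
    out: dict[str, list[dict]] = {}
    for pkt in packets:
        delivered: set[str] = set()
        for rule in rules:
            if rule.match(pkt):
                for tool in rule.tools:
                    if tool not in delivered:
                        out.setdefault(tool, []).append(pkt)
                        delivered.add(tool)
    return out
```

Filtering before replication is what protects the tools: the APM sees only web traffic, the IDS sees all TCP, and traffic no rule asks for never leaves the broker.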

Stage 4: Cloud packet broker

In the cloud, you will have a variety of workloads and tools, such as SIEM, IPS, and APM, and these tools need access to your data. A packet broker can be used in the cloud too. In a cloud environment, you need to understand native cloud traffic-mirroring features, such as VPC traffic mirroring; the mirrored traffic can be brokered, with transformations applied before it is forwarded to the tools. These transformation functions can include de-duplication, packet slicing, and TLS analysis.

This gives you complete visibility into data across VPCs at scale, eliminating blind spots and improving security posture by sending the appropriate network traffic, whether packets or metadata, to the tool stack in the cloud.
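Two of the transformation functions mentioned above, de-duplication and packet slicing, are simple to sketch in Python. De-duplication matters because mirroring the same traffic at several points produces identical copies; slicing keeps the headers tools need while discarding payload. This is a simplification under stated assumptions: duplicates are detected by hashing the entire frame (real brokers may ignore fields such as TTL that change in transit), and the window counts packets rather than time so the behavior is deterministic.

```python
import hashlib
from collections import OrderedDict


class Deduplicator:
    """Drop a frame if an identical copy was seen among the last `window` unique frames."""

    def __init__(self, window: int = 1024) -> None:
        self.window = window
        self.recent: OrderedDict[bytes, None] = OrderedDict()

    def is_duplicate(self, frame: bytes) -> bool:
        digest = hashlib.sha256(frame).digest()
        if digest in self.recent:
            return True
        self.recent[digest] = None
        if len(self.recent) > self.window:
            self.recent.popitem(last=False)  # evict the oldest digest
        return False


def slice_packet(frame: bytes, keep: int = 128) -> bytes:
    """Packet slicing: keep only the first `keep` bytes (headers), dropping payload."""
    return frame[:keep]
```

Both functions cut the volume sent to cloud tools, which reduces both tool licensing load and egress cost, while preserving the header information most security analytics rely on.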

Summary: Implementing Network Security

In today’s interconnected world, where digital communication and data exchange are the norm, ensuring your network’s security is paramount. Implementing robust network security measures not only protects sensitive information but also safeguards against potential threats and unauthorized access. This blog post provided you with a comprehensive guide on implementing network security, covering key areas and best practices.

Assessing Vulnerabilities

Before diving into security solutions, it’s crucial to assess the vulnerabilities present in your network infrastructure. Conducting a thorough audit helps identify weaknesses such as outdated software, unsecured access points, or inadequate user permissions.

Firewall Protection

One of the fundamental pillars of network security is a strong firewall. A firewall is a barrier between your internal network and external threats, monitoring and filtering incoming and outgoing traffic. It serves as the first line of defense, preventing unauthorized access and blocking malicious activities.

Intrusion Detection Systems

Intrusion Detection Systems (IDS) play a vital role in network security by actively monitoring network traffic, identifying suspicious patterns, and alerting administrators to potential threats. IDS can be network- or host-based, providing real-time insights into ongoing attacks or vulnerabilities.

Securing Wireless Networks

Wireless networks are susceptible to various security risks because their signals extend beyond physical boundaries and can be received by anyone in range. Implementing strong encryption protocols such as WPA2 or WPA3, regularly updating firmware, and using unique, complex passwords are essential to securing your wireless network. Additionally, segregating guest networks from internal networks helps prevent unauthorized access.

User Authentication and Access Controls

Controlling user access is crucial to maintaining network security. Implementing robust user authentication mechanisms such as two-factor authentication (2FA) or biometric authentication adds an extra layer of protection. Regularly reviewing user permissions, revoking access for former employees, and employing the principle of least privilege ensures that only authorized individuals can access sensitive information.

Conclusion:

Implementing network security measures is an ongoing process that requires a proactive approach. Assessing vulnerabilities, deploying firewalls and intrusion detection systems, securing wireless networks, and implementing robust user authentication controls are crucial steps toward safeguarding your network. By prioritizing network security and staying informed about emerging threats, you can ensure the integrity and confidentiality of your data.