Software-Defined Perimeter

In the evolving landscape of cybersecurity, organizations are constantly seeking innovative solutions to protect their sensitive data and networks from potential threats. One such solution that has gained significant attention is the Software Defined Perimeter (SDP). In this blog post, we will delve into the concept of SDP, its benefits, and how it is reshaping the future of network security.

The concept of SDP revolves around the principle of zero trust architecture. Unlike traditional network security models that rely on perimeter-based defenses, SDP adopts a more dynamic approach by providing secure access to users and devices based on their identity and context. By creating individualized and isolated connections, SDP reduces the attack surface and minimizes the risk of unauthorized access.

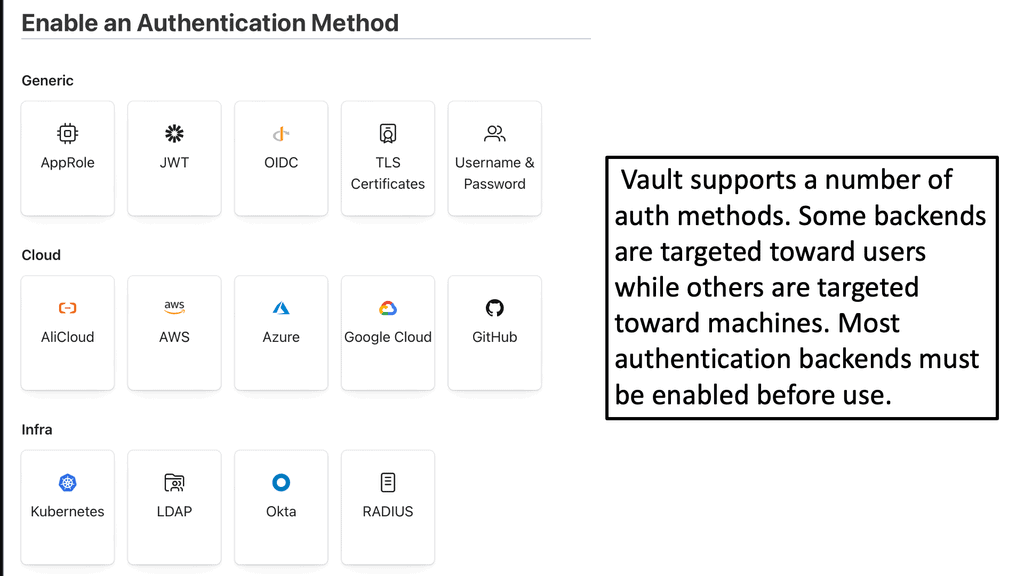

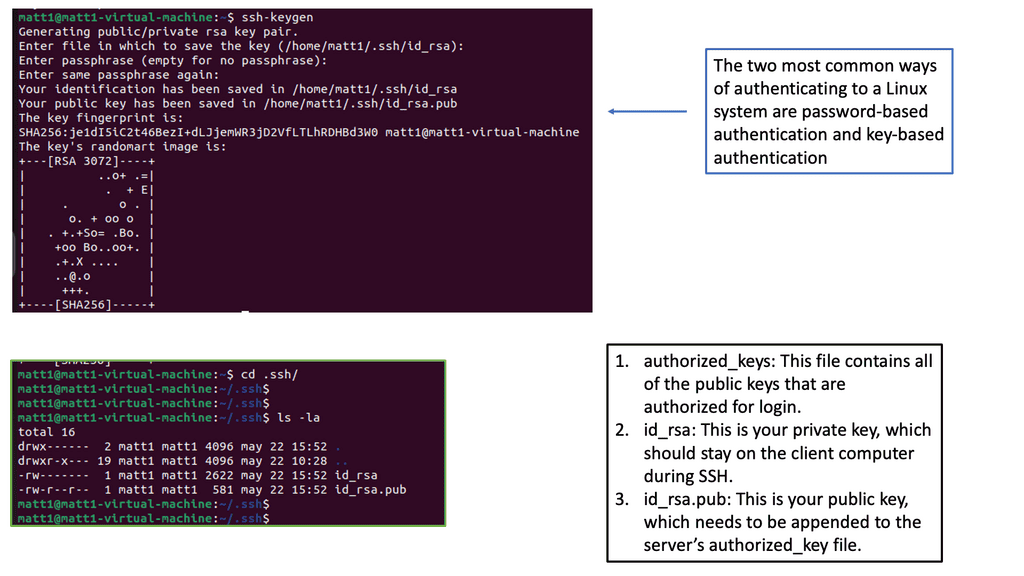

Key components of SDP:

1. Identity-Based Authentication: SDP leverages strong authentication mechanisms such as multi-factor authentication (MFA) and certificate-based authentication to verify the identity of users and devices.

2. Dynamic Access Control: SDP employs contextual information such as user location, device health, and behavior analysis to dynamically enforce access policies. This ensures that only authorized entities can access specific resources.

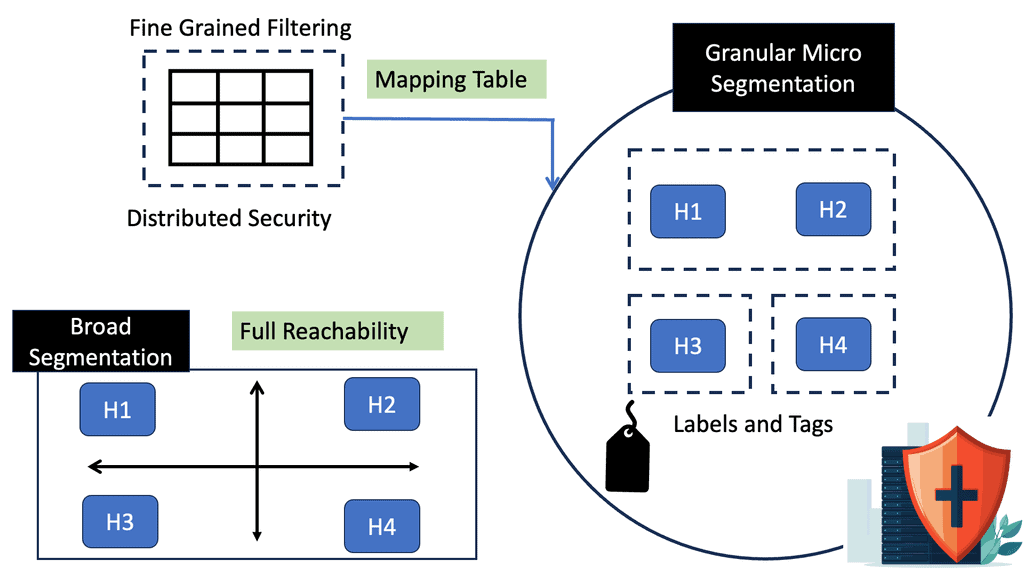

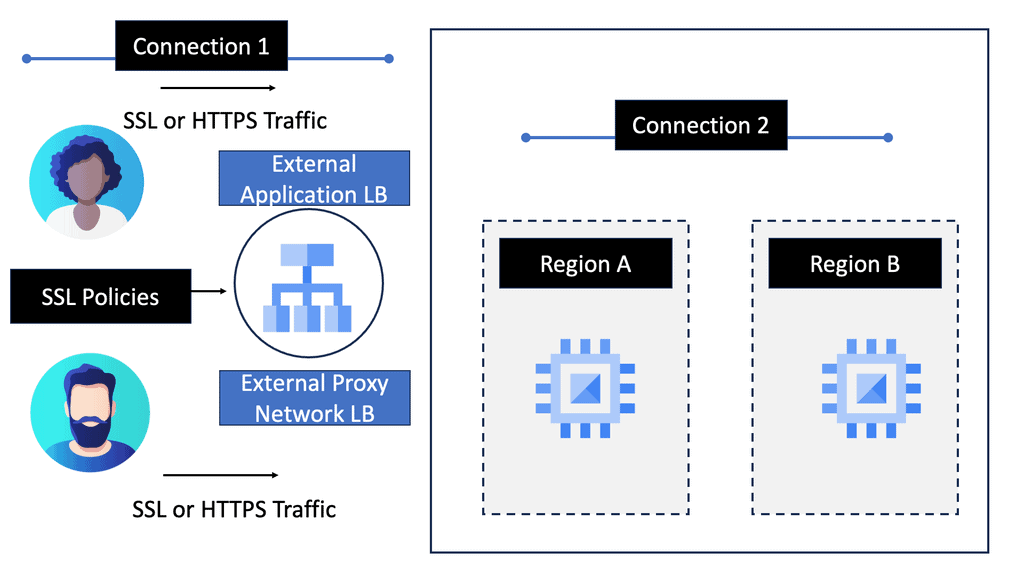

3. Micro-Segmentation: SDP enables micro-segmentation, dividing the network into smaller, isolated segments. This ensures that even if one segment is compromised, the attacker's lateral movement is restricted.

Benefits of SDP:

1. Enhanced Security: SDP significantly reduces the risk of unauthorized access and lateral movement, making it challenging for attackers to exploit vulnerabilities.

2. Improved User Experience: SDP enables seamless and secure access to resources, regardless of user location or device type. This enhances productivity and simplifies the user experience.

3. Scalability and Flexibility: SDP can easily adapt to changing business requirements and scale to accommodate growing networks. It offers greater agility compared to traditional security models.

As organizations face increasingly sophisticated cyber threats, the need for advanced network security solutions becomes paramount. Software Defined Perimeter (SDP) presents a paradigm shift in the way we approach network security, moving away from traditional perimeter-based defenses towards a dynamic and identity-centric model. By embracing SDP, organizations can fortify their network security posture, mitigate risks, and ensure secure access to critical resources.

Matt Conran

Highlights: Software-Defined Perimeter

Understanding Software-Defined Perimeter

1. The software-defined perimeter, also known as Zero-Trust Network Access (ZTNA), is a security framework that adopts a dynamic, identity-centric approach to protecting critical resources. Unlike traditional perimeter-based security measures, SDP focuses on authenticating and authorizing users and devices before granting access to specific resources. By providing granular control and visibility, SDP ensures that only trusted entities can establish a secure connection, significantly reducing the attack surface.

2. At its core, a Software-Defined Perimeter leverages a zero-trust security model, meaning that trust is never assumed simply based on network location. Instead, SDP dynamically creates secure, encrypted connections to applications or data only after users and devices are authenticated. This approach significantly reduces the attack surface by ensuring that unauthorized entities cannot even see the network resources, let alone access them.

3. Implementing an SDP can transform the way organizations approach security. One major advantage is the enhanced security posture, as SDPs effectively cloak network resources from potential attackers. Moreover, SDPs are highly scalable, allowing organizations to quickly adapt to changing demands without compromising security. This flexibility is particularly beneficial for businesses with remote workforces, as it facilitates secure access to resources from any location.

Key SDP Components:

To implement an effective SDP, several key components work in tandem to create a robust security architecture. These components include:

1. Identity-Based Authentication: SDP leverages strong identity verification techniques such as multi-factor authentication (MFA) and certificate-based authentication to ensure that only authorized users gain access.

2. Dynamic Provisioning: SDP enables dynamic policy-based provisioning, allowing organizations to adapt access controls based on real-time context and user attributes.

3. Micro-Segmentation: With SDP, organizations can establish micro-segments within their network, isolating critical resources from potential threats and limiting lateral movement.
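The three components above can be pictured as a single default-deny decision. The sketch below is illustrative only: the `AccessRequest` fields, the `POLICIES` table, and the resource names are hypothetical and do not come from any particular SDP product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    mfa_passed: bool      # result of identity-based authentication
    device_healthy: bool  # real-time context, e.g. patched and encrypted
    country: str          # coarse location context
    resource: str         # the micro-segment being requested


# Hypothetical policy table: resource -> (allowed users, allowed countries)
POLICIES = {
    "payroll-db": ({"alice"}, {"IE", "GB"}),
    "wiki": ({"alice", "bob"}, {"IE", "GB", "US"}),
}


def is_allowed(req: AccessRequest) -> bool:
    """Default deny: every check must pass before access is provisioned."""
    policy = POLICIES.get(req.resource)
    if policy is None:
        return False  # unknown resources are never reachable
    users, countries = policy
    return (
        req.mfa_passed
        and req.device_healthy
        and req.user in users
        and req.country in countries
    )
```

Because each resource carries its own policy entry, a user who is allowed onto the wiki gains nothing toward the payroll database, which is the point of micro-segmentation.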

Example Micro-segmentation Technology:

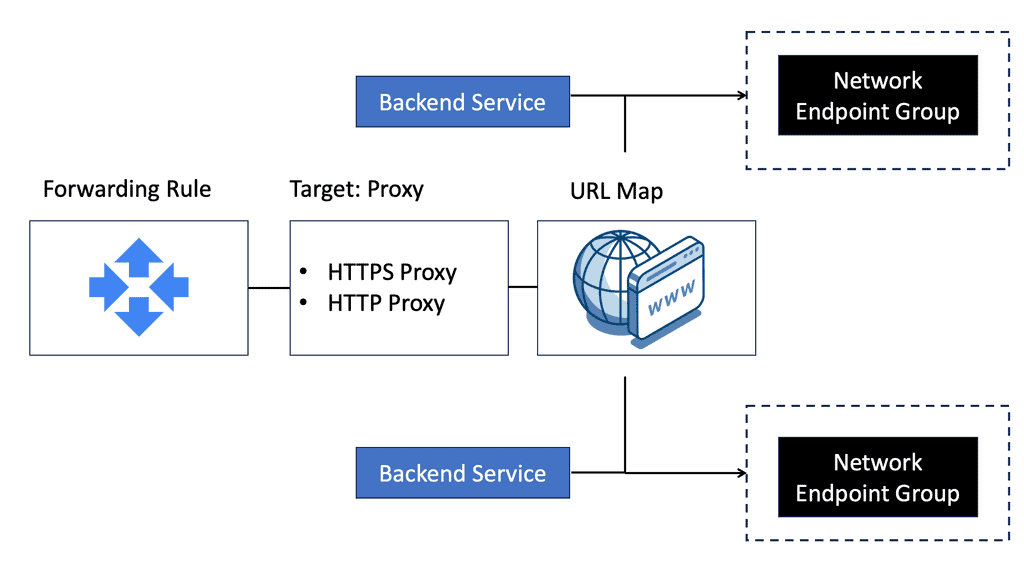

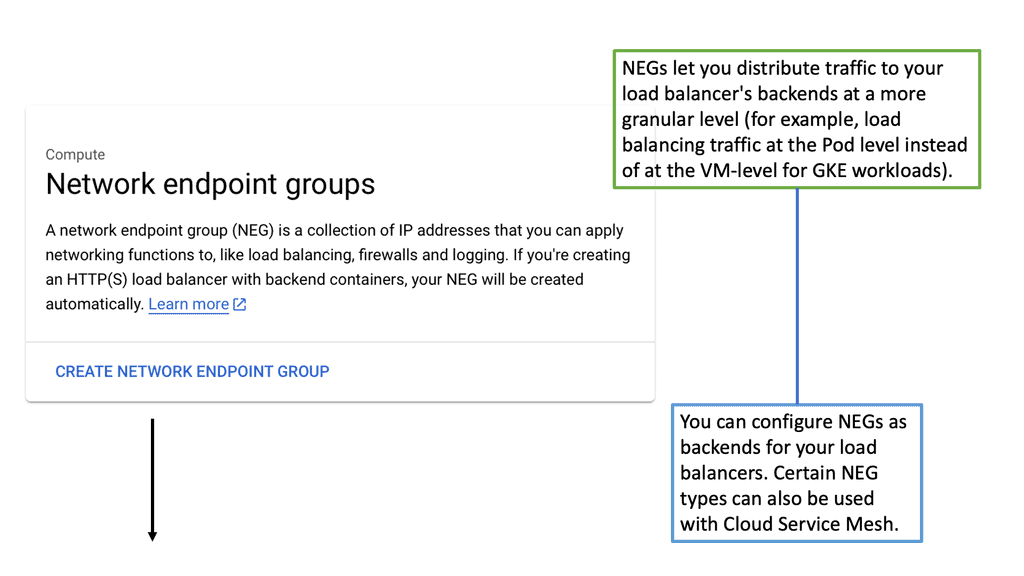

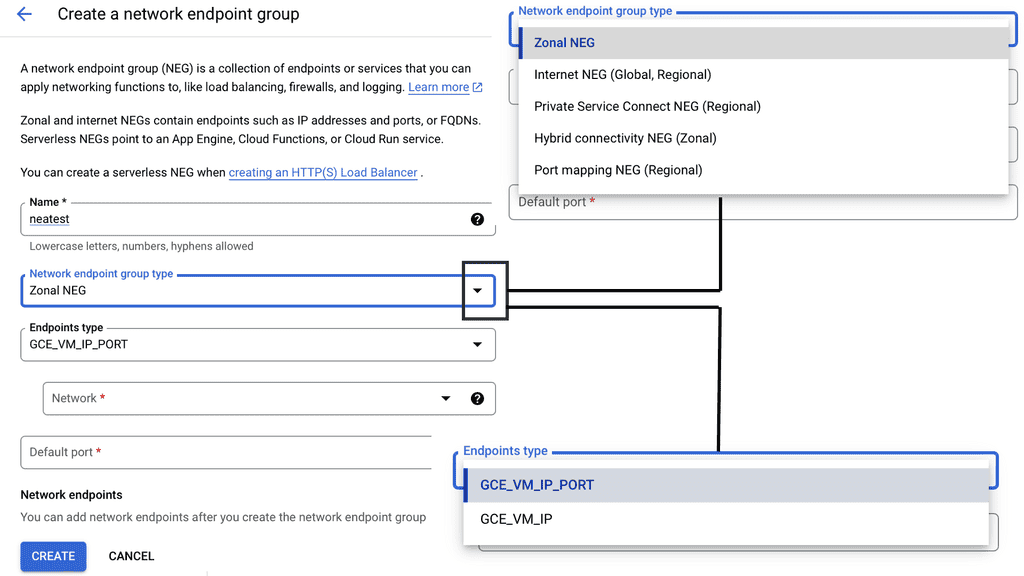

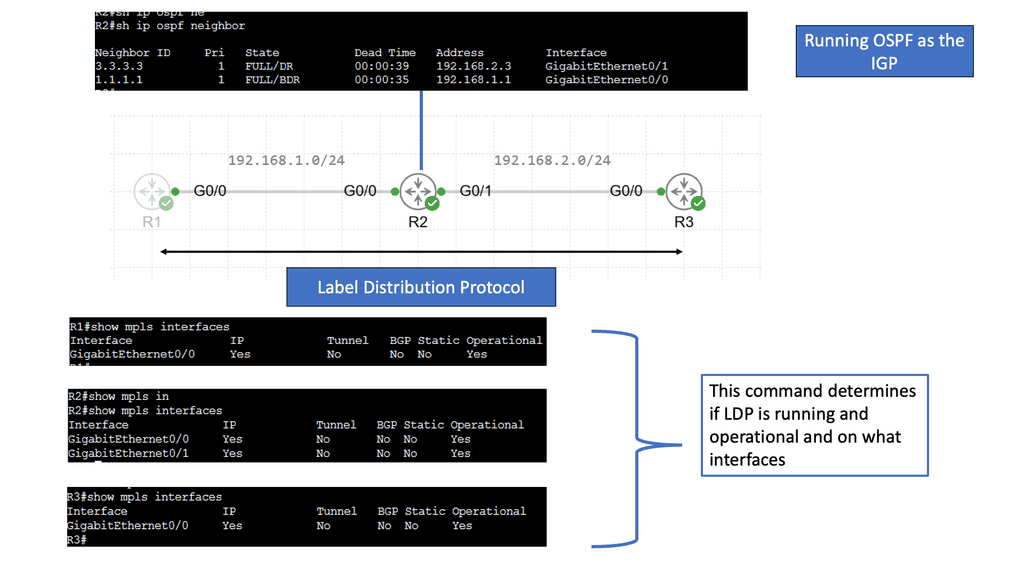

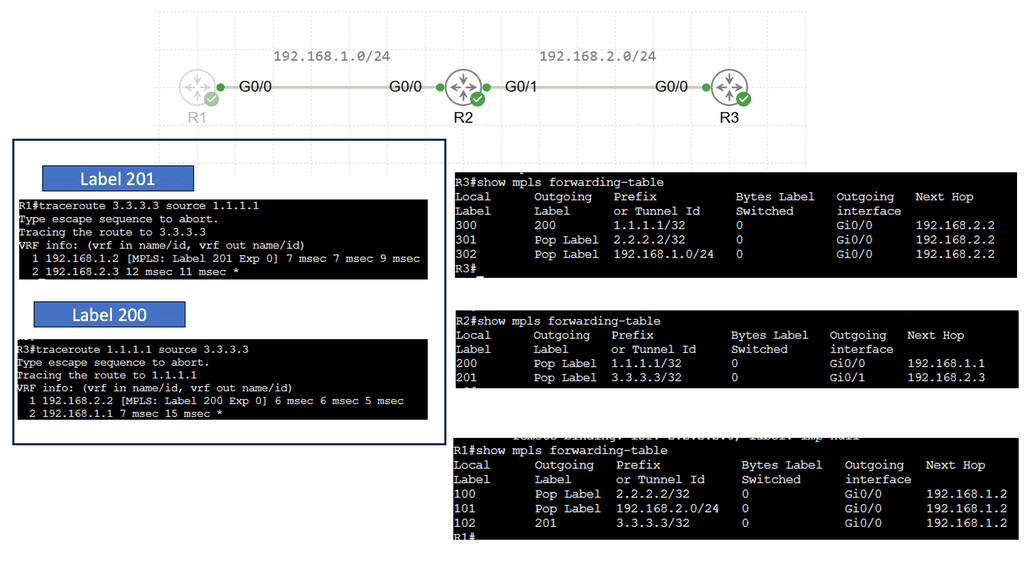

Network Endpoint Groups (NEGs)

Network Endpoint Groups, or NEGs, are collections of IP address-port pairs that enable you to define how traffic is distributed across your applications. This flexibility makes NEGs a versatile tool, particularly in scenarios involving microsegmentation. Microsegmentation involves dividing a network into smaller, isolated segments to improve security and traffic management. NEGs support both zonal and serverless applications, allowing you to efficiently manage your infrastructure’s traffic flow.

The Role of NEGs in Microsegmentation

One of the standout features of NEGs is their ability to support microsegmentation within Google Cloud. By using NEGs, you can create precise policies that govern the flow of data between different segments of your network. This granular control is vital for security, as it allows you to isolate sensitive data and applications, minimizing the risk of unauthorized access. With NEGs, you can ensure that each microservice in your architecture communicates only with the necessary components, further enhancing your network’s security posture.
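To make the idea concrete, here is a small model of endpoint groups as sets of IP address and port pairs, with an allow-list of flows between groups. It is a plain Python sketch and not the Google Cloud API: the group names, addresses, and the `flow_permitted` helper are assumptions made for illustration.

```python
from typing import NamedTuple, Optional


class Endpoint(NamedTuple):
    ip: str
    port: int


# Hypothetical endpoint groups: each group is a set of IP:port pairs.
GROUPS = {
    "frontend": {Endpoint("10.0.1.10", 8080), Endpoint("10.0.1.11", 8080)},
    "orders": {Endpoint("10.0.2.10", 9000)},
    "billing": {Endpoint("10.0.3.10", 9443)},
}

# Allowed flows between segments: (source group, destination group).
ALLOWED_FLOWS = {("frontend", "orders"), ("orders", "billing")}


def group_of(endpoint: Endpoint) -> Optional[str]:
    """Return the name of the group containing the endpoint, if any."""
    for name, members in GROUPS.items():
        if endpoint in members:
            return name
    return None


def flow_permitted(src: Endpoint, dst: Endpoint) -> bool:
    """Permit traffic only between groups that are explicitly paired."""
    src_group, dst_group = group_of(src), group_of(dst)
    if src_group is None or dst_group is None:
        return False
    return (src_group, dst_group) in ALLOWED_FLOWS
```

In this model the frontend can reach the orders service but not billing directly, so a compromised frontend endpoint has no path to the billing segment.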

**A Disruptive Technology**

Over the last few years, there has been tremendous growth in the adoption of software-defined perimeter solutions and zero-trust network design. This has resulted in SDP VPN becoming a disruptive technology, especially when replacing or working with the existing virtual private network. Why? Because the steps that the software-defined perimeter proposes are needed.

Challenge With Today’s Security

Today’s network security architectures, tools, and platforms are lacking in many ways when trying to combat current security threats. From a bird’s-eye view, the zero-trust software-defined perimeter (SDP) stages are relatively simple. SDP requires that endpoints, both internal and external to an organization, authenticate and then be authorized before being granted network access. Once these steps occur, two-way encrypted connections between the requesting entity and the intended protected resource are created.

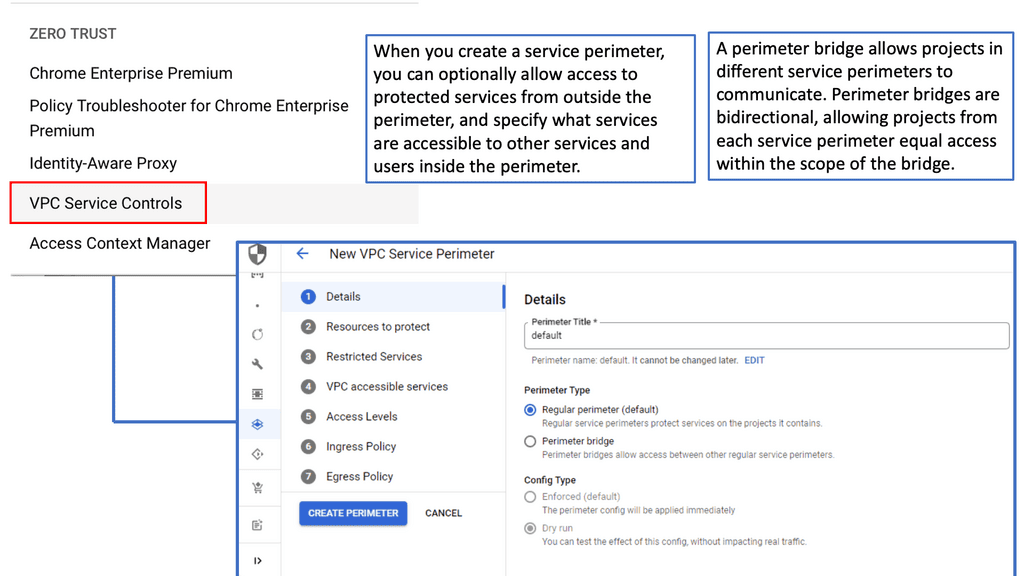

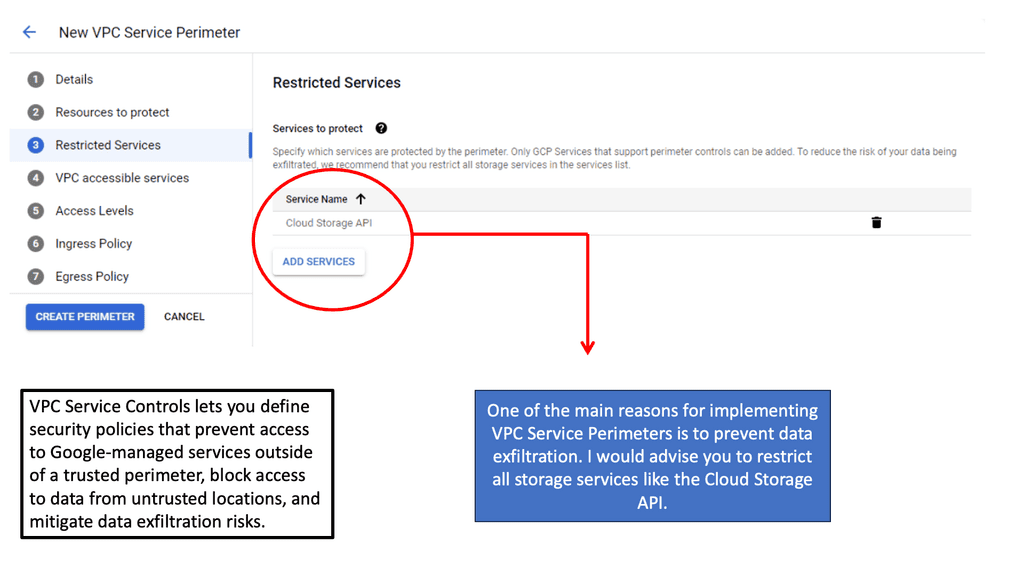

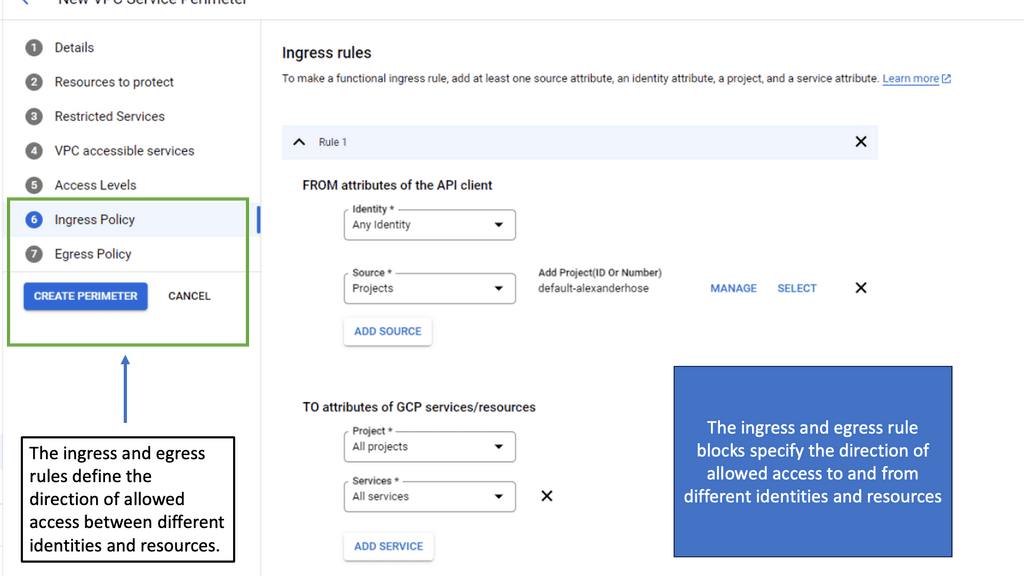

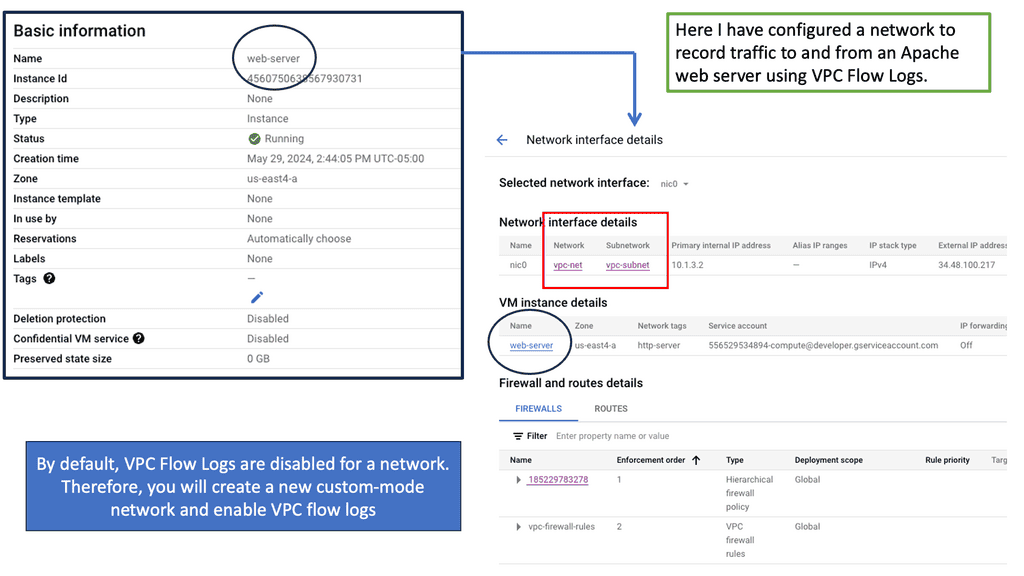

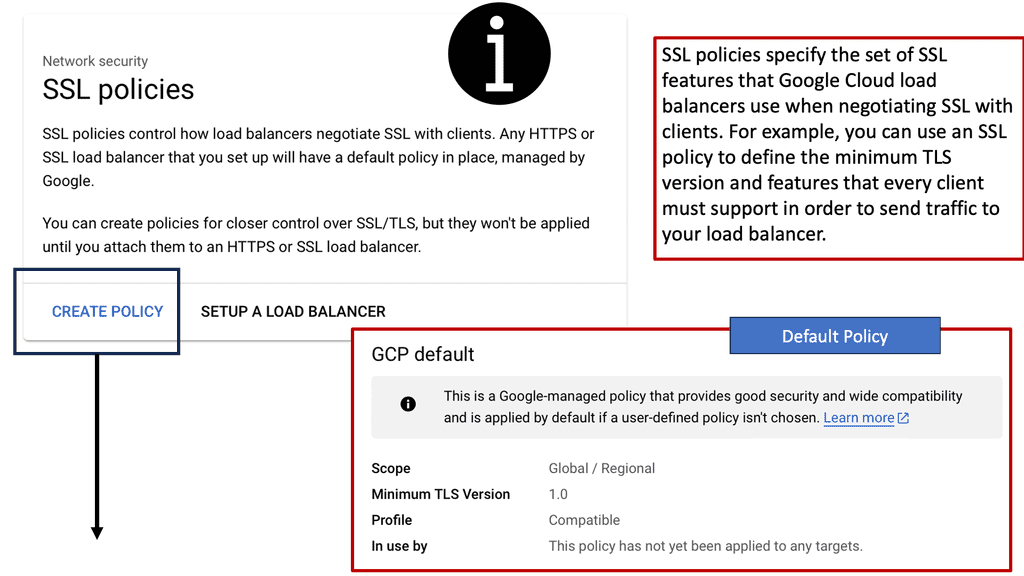

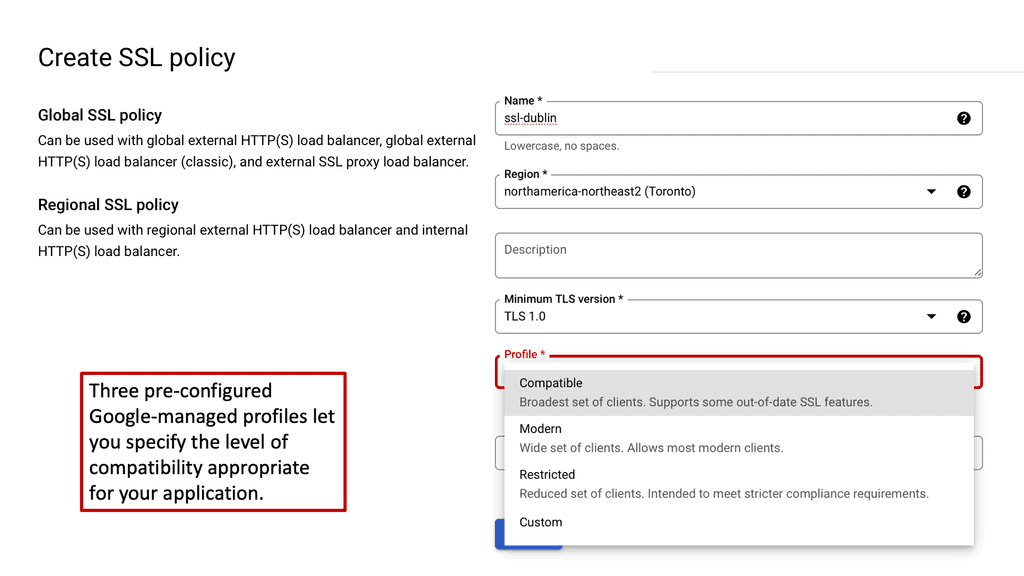

Example SDP Technology: VPC Service Controls

**What Are VPC Service Controls?**

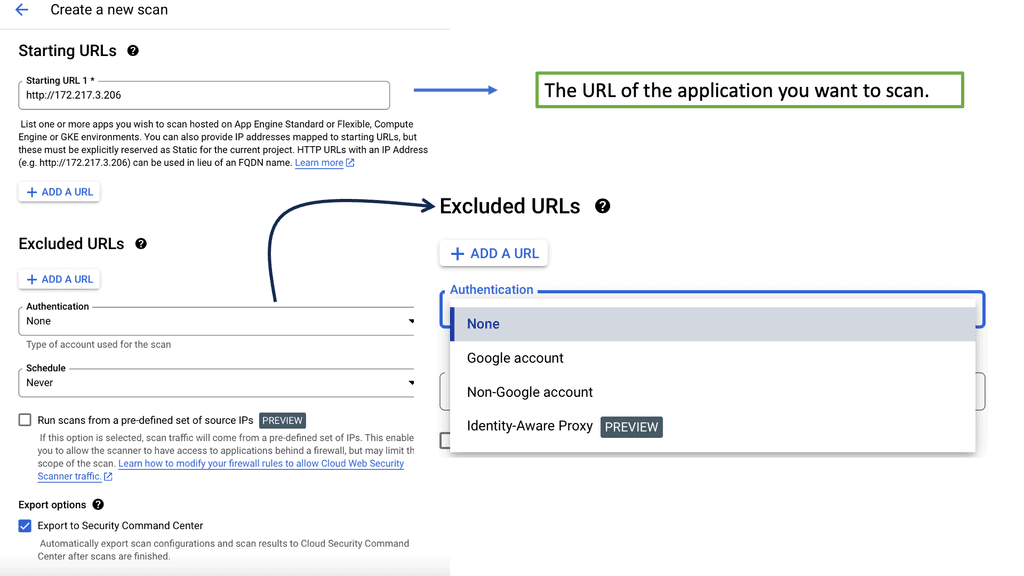

VPC Service Controls are a security feature in Google Cloud that help define a secure perimeter around Google Cloud resources. By creating service perimeters, organizations can restrict data exfiltration and mitigate risks associated with unauthorized access to sensitive resources. This feature is particularly useful for businesses that need to comply with strict regulatory requirements, as it provides a framework for managing and protecting data more effectively.

**Key Features and Benefits**

One of the standout features of VPC Service Controls is the ability to set up service perimeters, which act as virtual borders around cloud services. These perimeters help prevent data from being accessed by unauthorized users, both inside and outside the organization. Additionally, VPC Service Controls offer context-aware access, allowing organizations to define access policies based on factors such as user location, device security status, and time of access. This granular control ensures that only authorized users can interact with sensitive data.

**Implementing VPC Service Controls in Your Organization**

To effectively implement VPC Service Controls, organizations should begin by identifying the resources that require protection. This involves assessing which data and services are most critical to the business and determining the appropriate level of security needed. Once these resources are identified, service perimeters can be configured using the Google Cloud Console. It’s important to regularly review and adjust these configurations to adapt to changing security requirements and business needs.

**Best Practices for Maximizing Security**

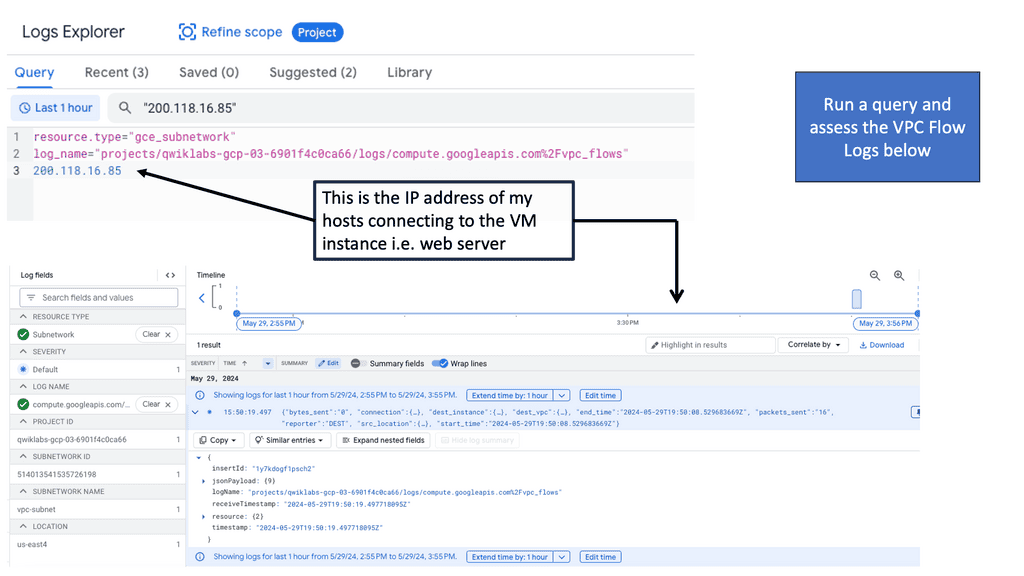

To maximize the security benefits of VPC Service Controls, organizations should follow several best practices. First, regularly audit and monitor access logs to detect any unauthorized attempts to access protected resources. Second, integrate VPC Service Controls with other Google Cloud security features, such as Identity and Access Management (IAM) and Cloud Audit Logs, to create a comprehensive security strategy. Finally, ensure that all employees are trained on security protocols and understand the importance of maintaining data integrity.

Benefits of Software-Defined Perimeter:

1. Enhanced Security: SDP employs a zero-trust approach, ensuring that only authorized users and devices can access the network. This lowers the risk of unauthorized access and reduces the attack surface.

2. Scalability: SDP allows organizations to scale their networks without compromising security. It seamlessly accommodates new users, devices, and applications, making it ideal for expanding businesses.

3. Simplified Management: With SDP, managing access controls becomes more straightforward. IT administrators can easily assign and revoke permissions, reducing the administrative burden.

4. Improved Performance: By eliminating the need for backhauling traffic through a central gateway, SDP reduces latency and improves network performance, enhancing the overall user experience.

Implementing Software-Defined Perimeter:

**Deploying SDP in Your Organization**

– Implementing SDP requires a strategic approach to ensure a seamless transition. Begin by identifying the critical assets that need protection and mapping out access requirements for different user groups.

– Next, choose an SDP solution that aligns with your organization’s needs and integrate it with existing infrastructure. It’s crucial to provide training for your IT team to effectively manage and maintain the system.

– Additionally, regularly monitor and update the SDP framework to adapt to evolving security threats and organizational changes.

Implementing SDP requires a systematic approach and careful consideration of various factors. Here are the critical steps involved in deploying SDP:

1. Identify Critical Assets: Determine the applications and resources that require enhanced security measures. This could include sensitive data, intellectual property, or customer information.

2. Define Access Policies: Establish granular access policies based on user roles, device types, and locations. This ensures that only authorized individuals can access specific resources.

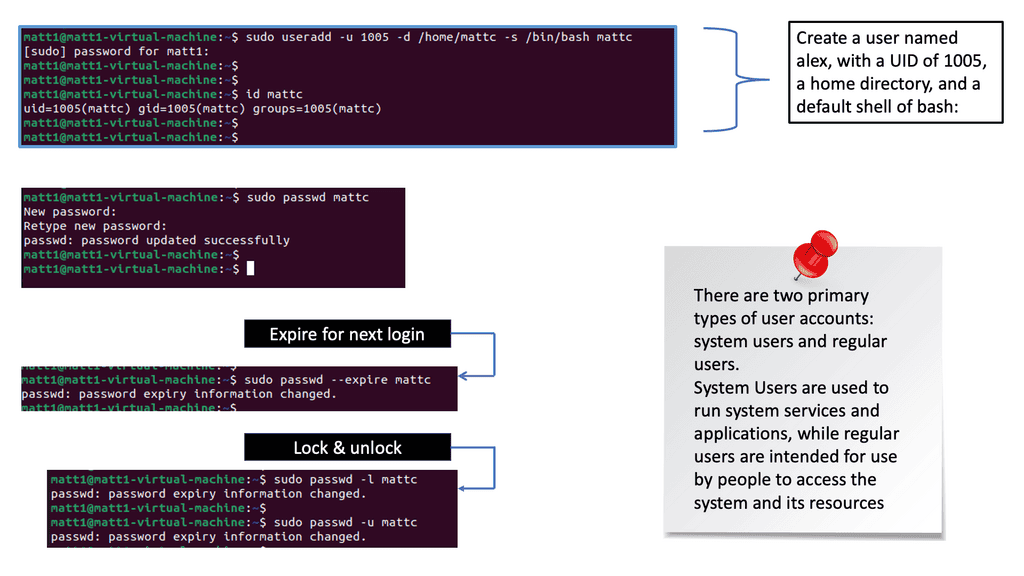

3. Implement Authentication Mechanisms: To verify user identities, incorporate strong authentication measures such as multi-factor authentication (MFA) or biometric authentication.

4. Implement Encryption: Encrypt all data in transit to prevent eavesdropping or unauthorized interception.

5. Continuous Monitoring: Regularly monitor network activity and analyze logs to identify suspicious behavior or anomalies.

Software-Defined Perimeter

A software-defined perimeter constructs a virtual boundary around company assets. This distinguishes it from access-based controls, which restrict user privileges but still allow broad network access. The fundamental pillars on which a software-defined perimeter is built include:

– Zero Trust: It leverages micro-segmentation to apply the principle of least privilege to the network, ultimately reducing the attack surface.

– Identity-centric: It’s designed around the user identity and additional contextual parameters, not the IP address.

The Software-Defined Perimeter Proposition

Security policy flexibility is offered with fine-grained access control that dynamically creates and removes inbound and outbound access rules. A software-defined perimeter therefore minimizes the attack surface available to bad actors. A small attack surface results in a small blast radius, so less damage can occur.
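As a rough illustration of rules that are created and removed on demand, the sketch below keeps a table of short-lived grants. The class name `DynamicRuleTable`, the time-to-live parameter, and the identity and service labels are hypothetical; a real SDP gateway would program firewall or proxy rules instead of a dictionary.

```python
import time


class DynamicRuleTable:
    """Access rules exist only while a grant is live; everything else is denied."""

    def __init__(self):
        self._rules = {}  # (identity, service) -> expiry timestamp

    def grant(self, identity, service, ttl_seconds, now=None):
        now = time.monotonic() if now is None else now
        self._rules[(identity, service)] = now + ttl_seconds

    def revoke(self, identity, service):
        self._rules.pop((identity, service), None)

    def permits(self, identity, service, now=None):
        now = time.monotonic() if now is None else now
        expiry = self._rules.get((identity, service))
        if expiry is None:
            return False  # no rule was ever created: default deny
        if now >= expiry:
            del self._rules[(identity, service)]  # expired rules are removed
            return False
        return True
```

The table is empty by default, so the exposed surface at any moment is limited to the grants that are currently live.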

A VLAN has a relatively large attack surface, mainly because the VLAN contains different services. SDP eliminates the broad network access that VLANs exhibit. SDP has a separate data and control plane.

A control plane sets up the controls necessary for data to pass from one endpoint to another. Separating the control from the data plane renders protected assets “black,” thereby blocking network-based attacks. You cannot attack what you cannot see.

Example: VLAN-based Segmentation

**Challenges and Considerations**

While VLAN-based segmentation offers many advantages, it also presents challenges that need addressing:

1. **Complexity in Management**: With increased segmentation, the complexity of managing and troubleshooting the network can rise. Proper training and tools are essential.

2. **Compatibility Issues**: Ensure that all network devices support VLANs and are configured correctly to avoid communication breakdowns.

3. **Security Oversight**: While VLANs enhance security, they are not foolproof. Regular audits and updates are necessary to maintain a robust security posture.

The IP Address Is Not a Valid Hook

IP addresses have lost their meaning in today’s hybrid environment. SDP provides a connection-based security architecture instead of an IP-based one. This allows for many things. For one, security policies follow the user regardless of location. Let’s say you are doing forensics on an event that happened 12 months ago for a specific IP.

However, that IP address now belongs to a component in a test DevOps environment. Do you care? Tying anything to an IP address makes little sense, as it is not the right hook on which to hang security policy enforcement.

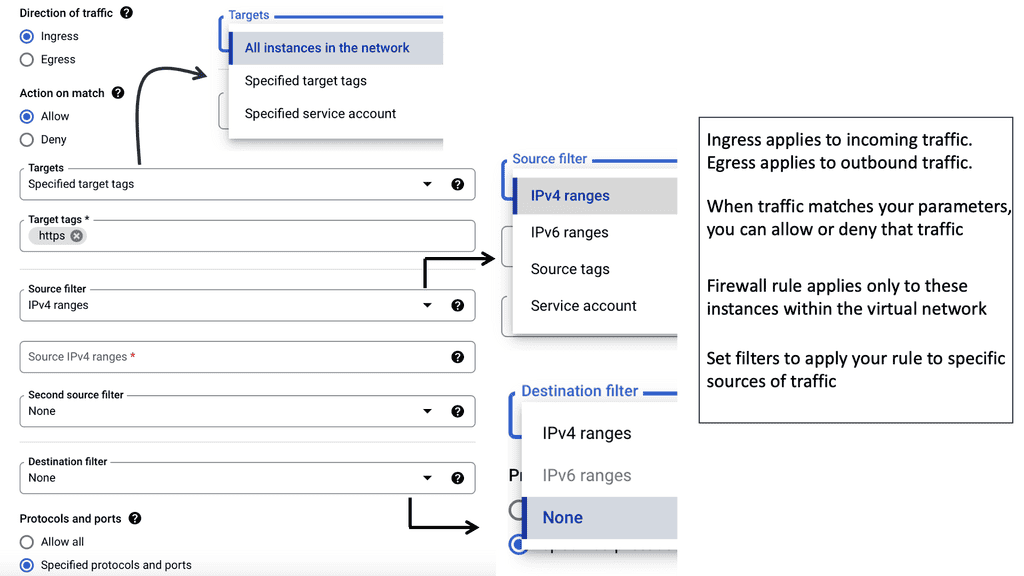

Example – Firewalling based on Tags & Labels

Software-Defined Perimeter: Identity-driven access

Identity-driven network access control is more precise in measuring the actual security posture of the endpoint. Access policies tied to IP addresses cannot offer identity-focused security. SDP enables the control of all connections based on pre-vetting who can connect and to what services.

If you do not meet this level of trust, you can’t, for example, access the database server, but you can access public-facing documents. Users are granted access only to authorized assets, preventing lateral movements that will probably go unnoticed when traditional security mechanisms are in place.
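The database-versus-documents example can be sketched as a trust level computed from identity and device posture, with the source IP address playing no part. The trust tiers, asset names, and function names below are assumptions chosen for illustration.

```python
from enum import IntEnum


class Trust(IntEnum):
    NONE = 0
    BASIC = 1  # identity verified
    HIGH = 2   # identity verified on a managed, healthy device


# Hypothetical minimum trust level required per asset.
REQUIRED_TRUST = {
    "public-docs": Trust.BASIC,
    "database-server": Trust.HIGH,
}


def trust_level(identity_verified: bool, device_managed: bool) -> Trust:
    if not identity_verified:
        return Trust.NONE
    return Trust.HIGH if device_managed else Trust.BASIC


def authorized_assets(identity_verified: bool, device_managed: bool) -> list:
    """Return only the assets this identity and device may connect to."""
    level = trust_level(identity_verified, device_managed)
    return sorted(
        asset for asset, needed in REQUIRED_TRUST.items() if level >= needed
    )
```

A verified user on an unmanaged device sees only the public documents; the database server is simply absent from the list of assets offered to that session.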

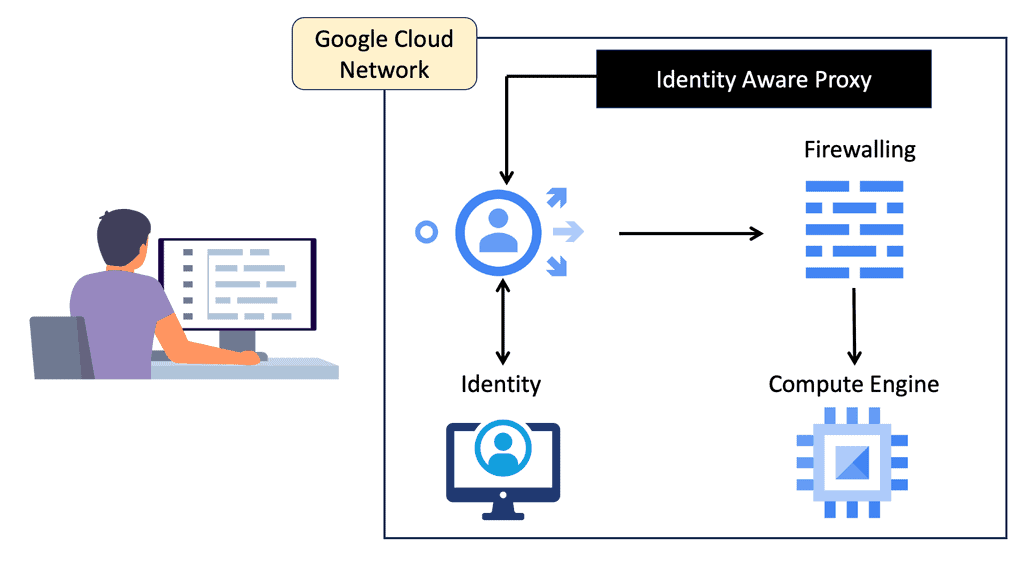

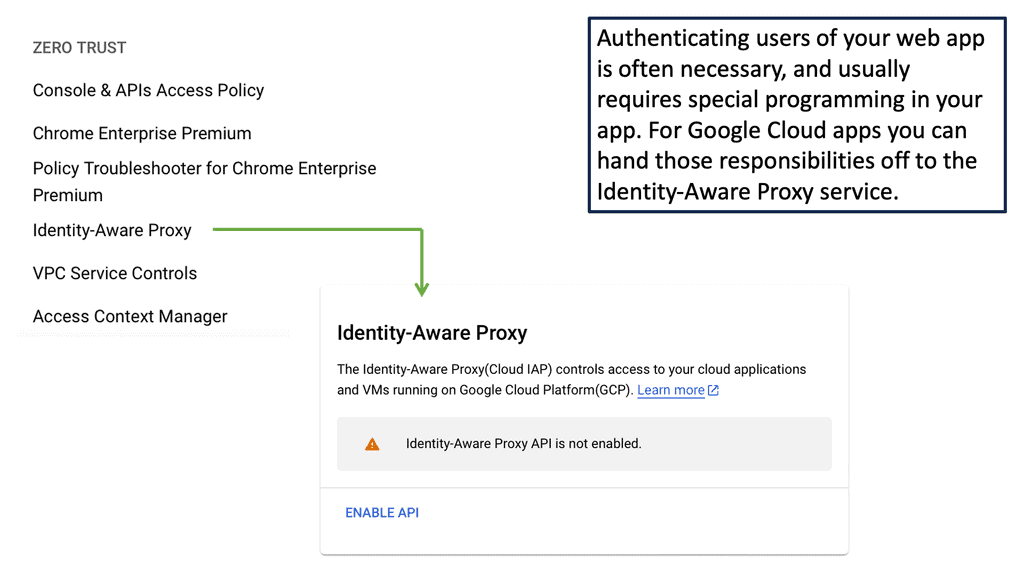

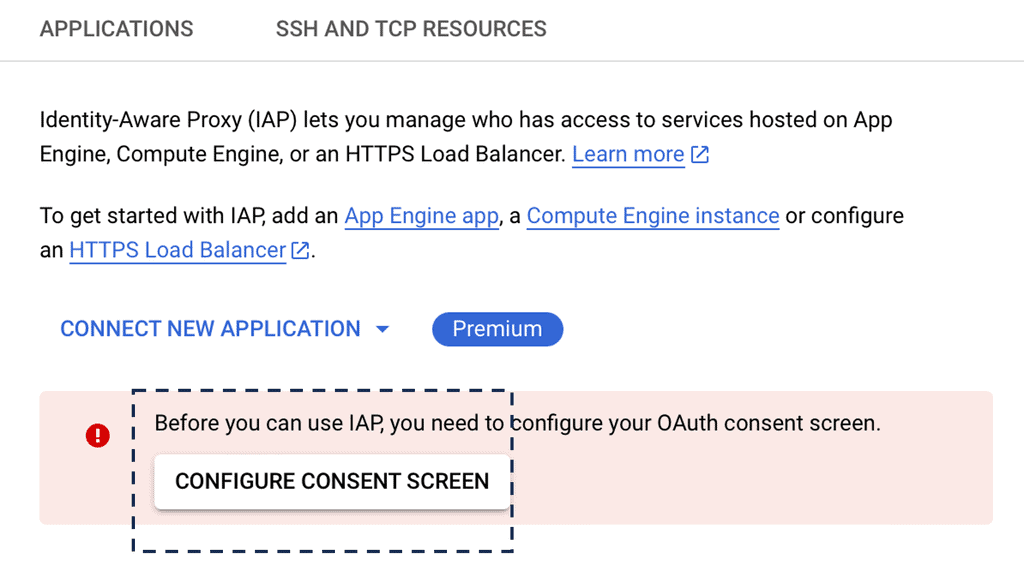

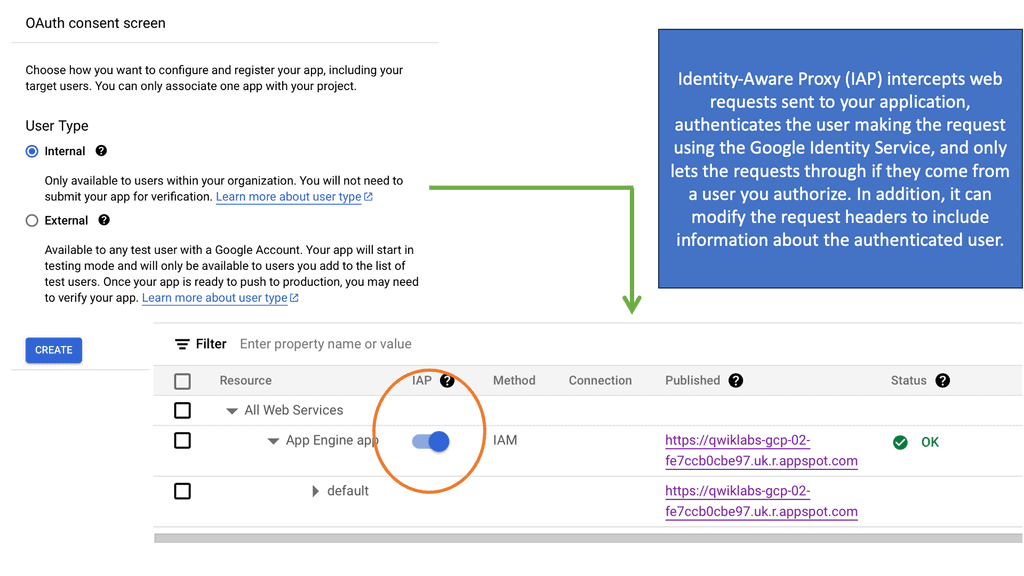

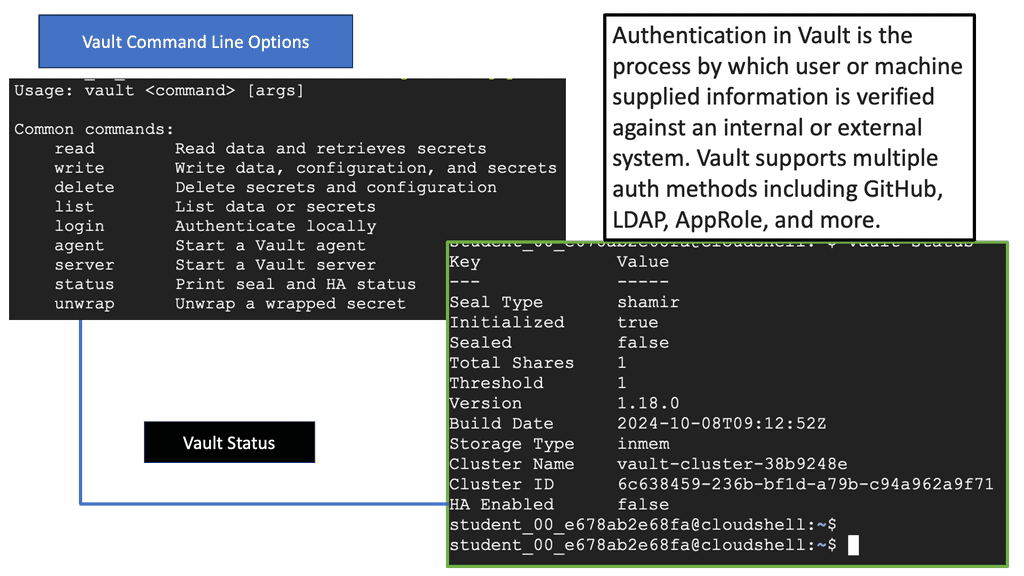

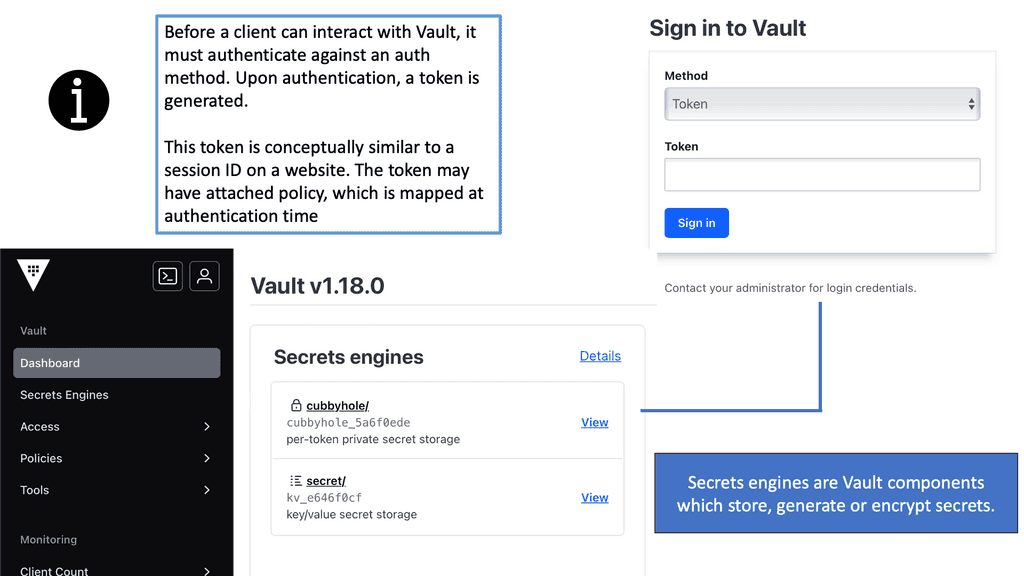

Example Technology: IAP in Google Cloud

### How IAP Works

IAP functions by intercepting user requests before they reach the application. It verifies the user’s identity and context, allowing access only if the user’s credentials match the predefined security policies. This process involves authentication through Google Identity Platform, which leverages OAuth 2.0, OpenID Connect, and other standards to confirm user identity efficiently. Once authenticated, IAP evaluates the context, such as the user’s location or device, to further refine access permissions.

### Benefits of Using IAP on Google Cloud

Implementing IAP on Google Cloud offers several compelling benefits. First, it enhances security by centralizing access control, reducing the risk of unauthorized entry. Additionally, IAP simplifies the user experience by eliminating the need for multiple login credentials across different applications. It also supports granular access control, allowing organizations to tailor permissions based on user roles and contexts, thereby improving operational efficiency.

### Setting Up IAP on Google Cloud

Setting up IAP on Google Cloud is a straightforward process. Administrators begin by enabling IAP in the Google Cloud Console. Once activated, they can configure access policies, determining who can access which resources and under what conditions. The system’s flexibility allows administrators to integrate IAP with various identity providers, ensuring compatibility with existing authentication frameworks. Comprehensive documentation and support from Google Cloud further streamline the setup process.

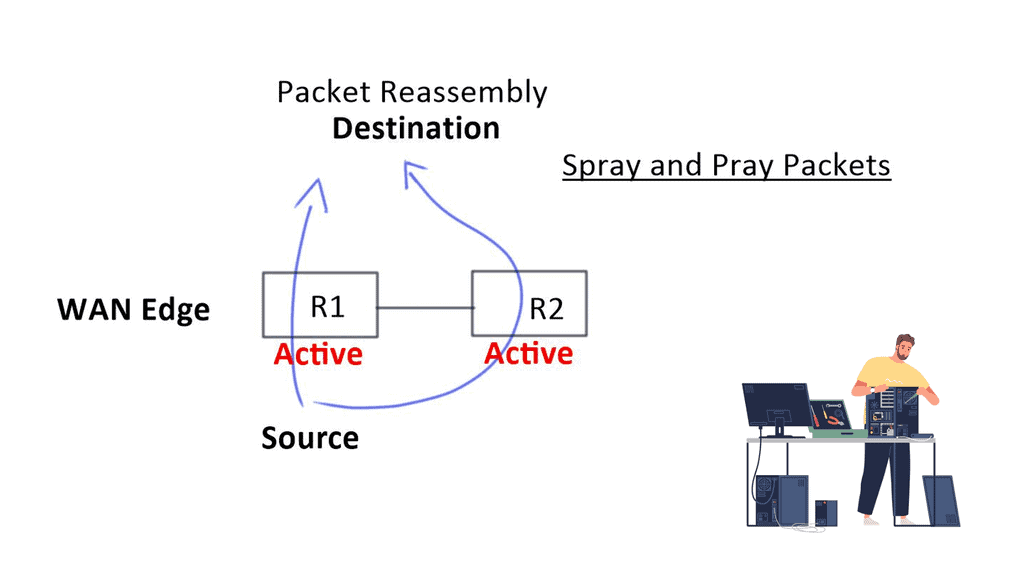

Information & Infrastructure Hiding

SDP does a great job of hiding information and infrastructure. The SDP architectural components (the SDP controller and gateways) are “dark,” providing resilience against high- and low-volume DDoS attacks. A low-bandwidth DDoS attack may often bypass traditional DDoS security controls. However, the SDP components do not respond to connections until the requesting clients are authenticated and authorized, allowing only good packets through.

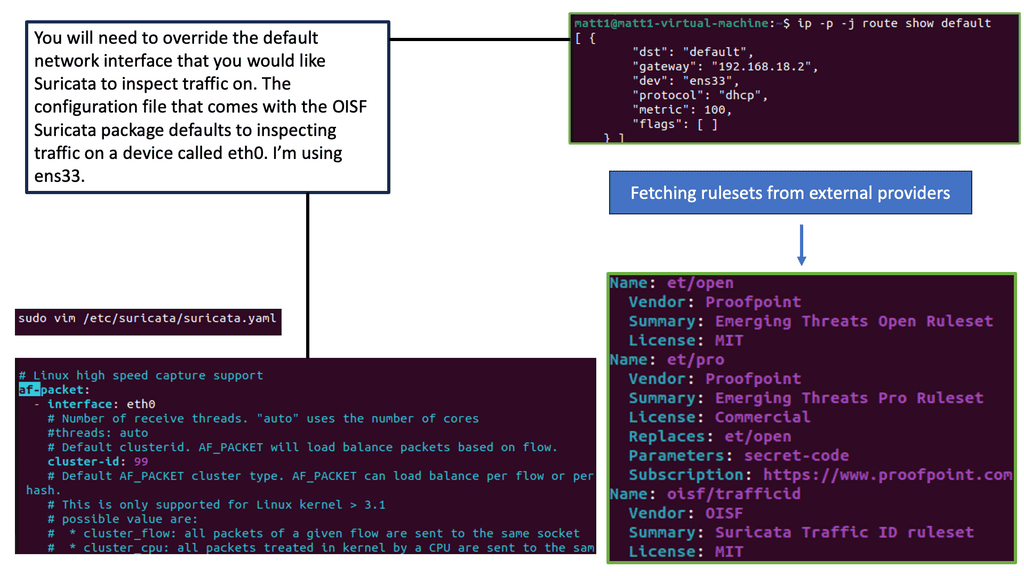

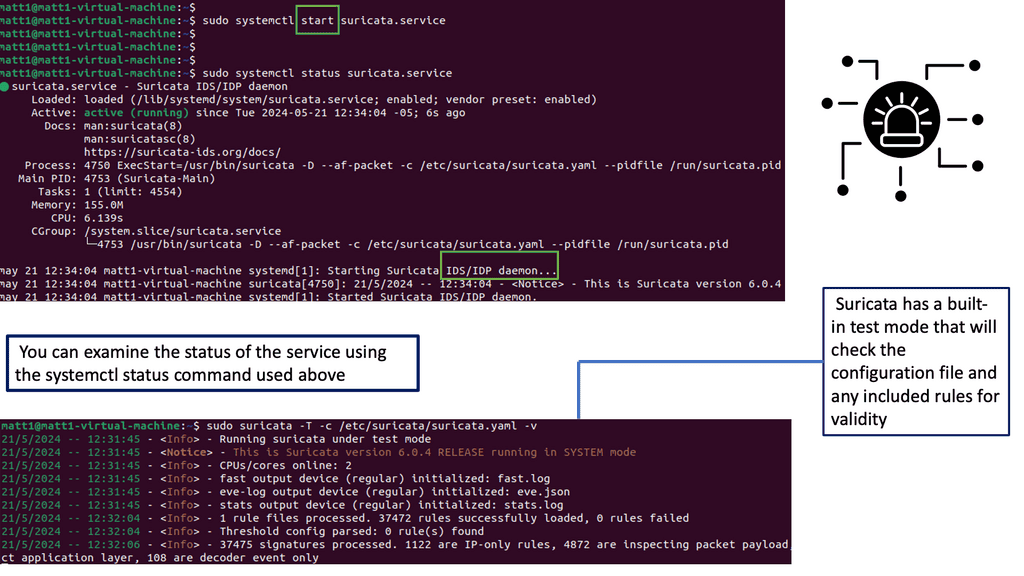

A suitable security protocol for this is single packet authorization (SPA). Single Packet Authorization, or Authentication, gives the SDP components a default “deny-all” security posture.

The “default deny” can be achieved because if an accepting host receives any packet other than a valid SPA packet, it assumes it is malicious. The packet is dropped, and no notification is sent back to the requesting host. This stops reconnaissance at the door by silently detecting and dropping bad packets.
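A minimal sketch of this default-deny behavior follows. The packet layout (a random nonce, a timestamp, a client identifier, and an HMAC-SHA256 tag) is a hypothetical format invented for this example and is not the wire format of any real SPA implementation. The shared key, the 30-second freshness window, and the class and function names are likewise assumptions.

```python
import hashlib
import hmac
import os
import struct
import time

MAX_SKEW_SECONDS = 30  # assumed freshness window


def build_spa_packet(key, client_id, now=None):
    """Build nonce (16 bytes) + timestamp (8 bytes) + client id + HMAC tag."""
    timestamp = int(time.time() if now is None else now)
    body = os.urandom(16) + struct.pack("!Q", timestamp) + client_id
    return body + hmac.new(key, body, hashlib.sha256).digest()


class SpaListener:
    def __init__(self, key):
        self._key = key
        self._seen_nonces = set()  # unbounded here; a real service would expire these

    def accept(self, packet, now=None):
        """Return the client id for a valid packet, else None (silent drop)."""
        if len(packet) < 16 + 8 + 32:
            return None
        body, tag = packet[:-32], packet[-32:]
        expected = hmac.new(self._key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None  # not signed with the shared key
        nonce = body[:16]
        (timestamp,) = struct.unpack("!Q", body[16:24])
        current = time.time() if now is None else now
        if abs(current - timestamp) > MAX_SKEW_SECONDS:
            return None  # stale packet
        if nonce in self._seen_nonces:
            return None  # replayed packet
        self._seen_nonces.add(nonce)
        return body[24:]
```

Every failure path returns `None` without raising or replying, which mirrors the idea that the accepting host never reveals itself to a sender that has not presented a valid packet.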

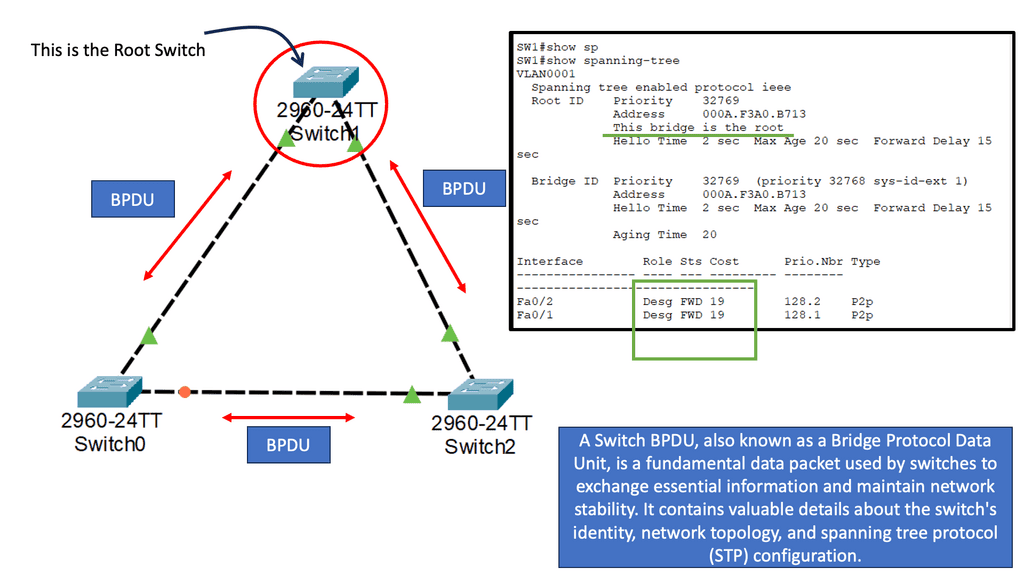

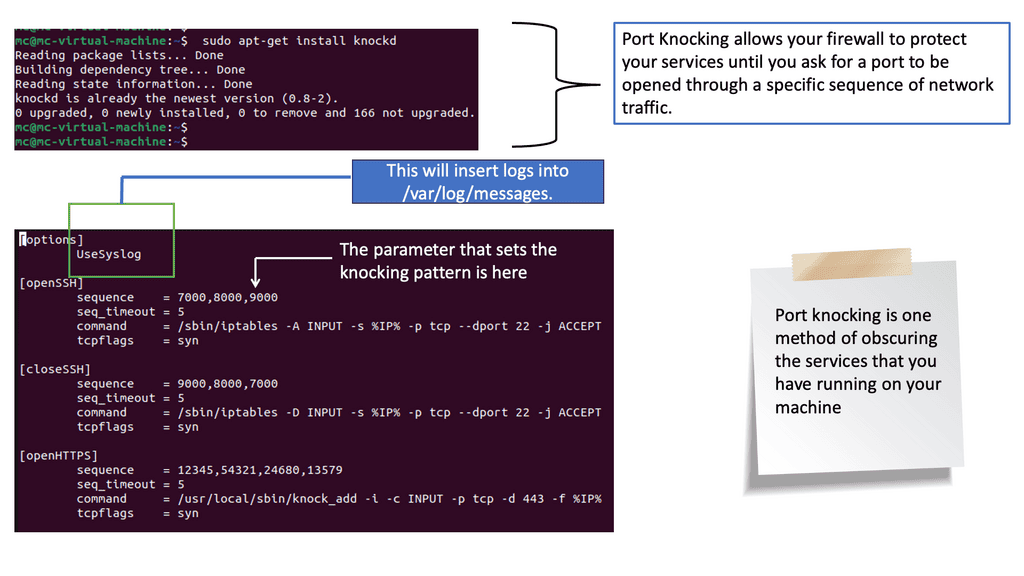

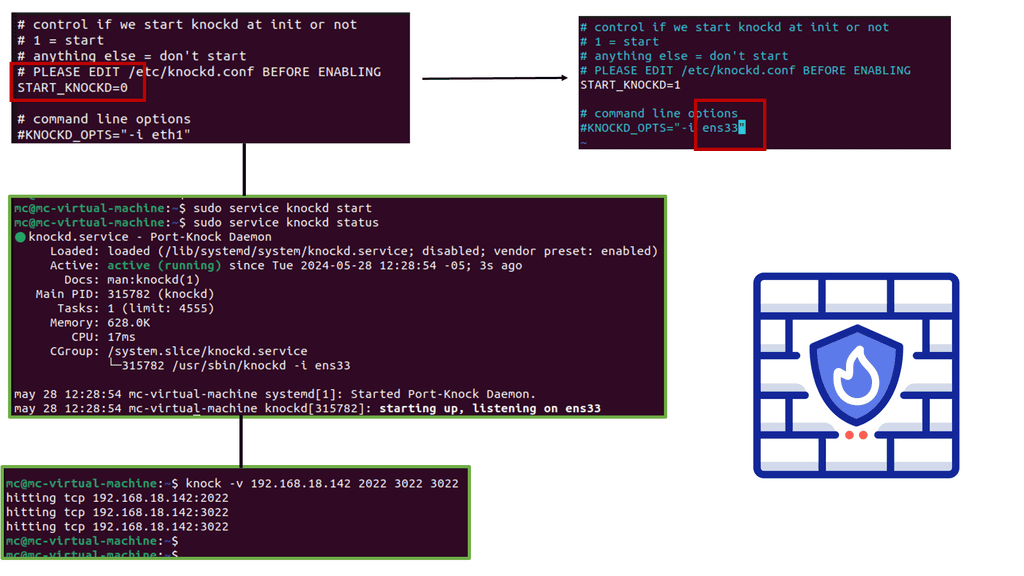

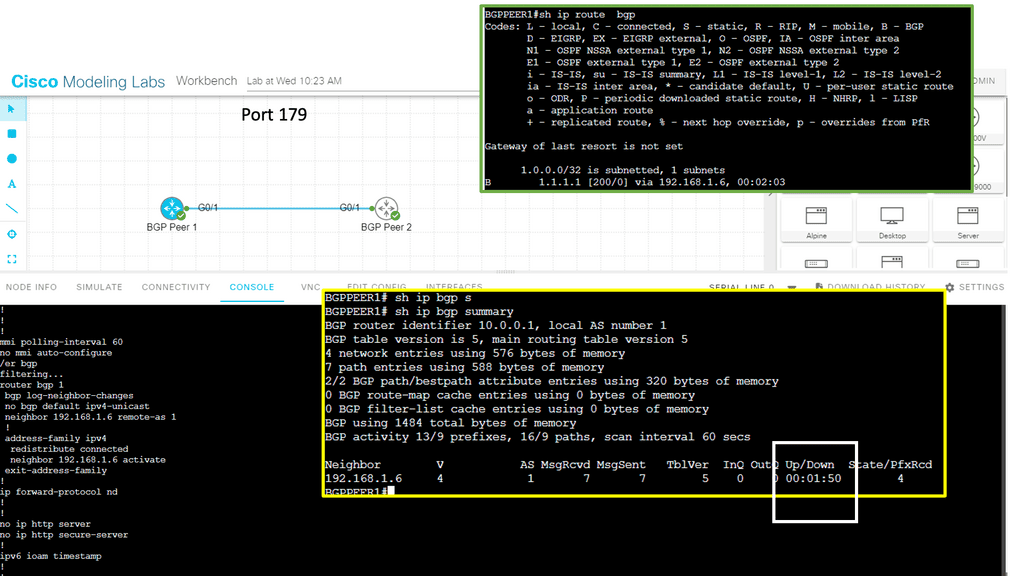

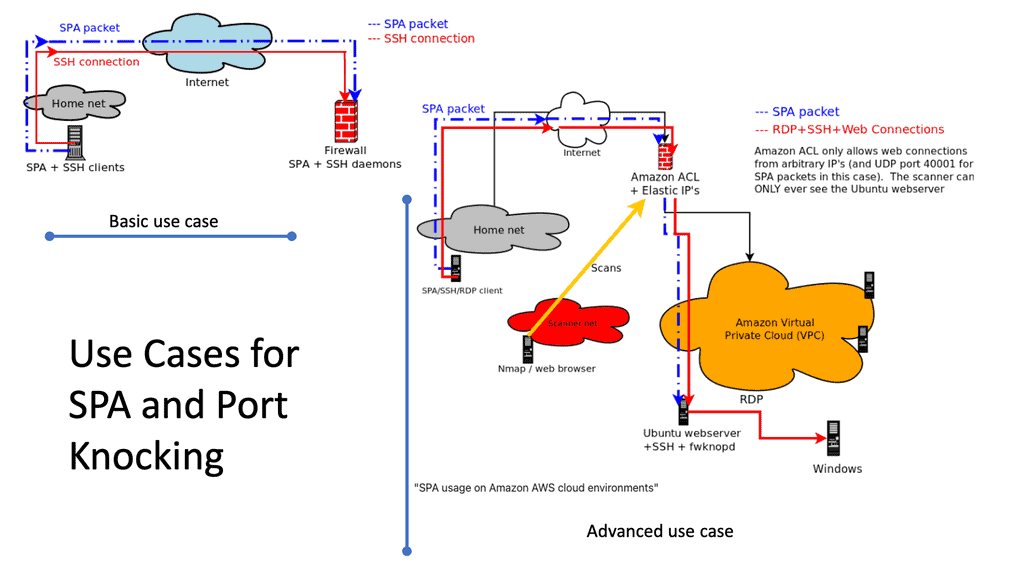

What is Port Knocking?

– Port knocking is a security technique that involves sequentially probing a predefined sequence of closed ports on a network to establish a connection with a desired service. It acts as a virtual secret handshake, allowing users to access specific services or ports that would otherwise remain hidden or blocked from unauthorized access.

– Port knocking typically involves sending connection attempts to a series of ports in a specific order, which serves as a secret code. Once a listening daemon or firewall detects the correct sequence, it dynamically opens the desired port and allows the connection. This stealthy approach helps to prevent unauthorized access and adds an extra layer of security to network services.
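The knock sequence can be modeled as a small per-source state machine. This is a sketch under stated assumptions: the class name `KnockTracker`, the example ports, and the ten-second window are hypothetical, and a real daemon would observe packets on the wire instead of receiving method calls.

```python
import time


class KnockTracker:
    """Track each source's progress through a secret sequence of ports."""

    def __init__(self, sequence, window_seconds=10.0):
        self._sequence = tuple(sequence)
        self._window = window_seconds
        self._progress = {}  # source ip -> (next expected index, time of first knock)

    def knock(self, source_ip, port, now=None):
        """Record one knock; return True when the full sequence has just completed."""
        now = time.monotonic() if now is None else now
        index, started = self._progress.get(source_ip, (0, now))
        if now - started > self._window:
            index, started = 0, now  # too slow: start over
        if port != self._sequence[index]:
            if port == self._sequence[0]:
                self._progress[source_ip] = (1, now)  # treat as a fresh first knock
            else:
                self._progress.pop(source_ip, None)   # wrong port resets progress
            return False
        index += 1
        if index == len(self._sequence):
            self._progress.pop(source_ip, None)
            return True  # caller would now open the protected port for source_ip
        self._progress[source_ip] = (index, started)
        return False
```

A client that knocks on 7000, 8000, and 9000 in order and within the window gets `True` on the third knock; any other order or a slow sequence never does.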

Sniffing a SPA packet

However, SPA can be subject to Man-In-The-Middle (MITM) attacks. If a bad actor can sniff an SPA packet, they can establish the TCP connection to the controller or AH. There is another level of defense, though: the bad actor cannot complete the mutual TLS (mTLS) connection without the client’s certificate.

SDP brings in the concept of mutually encrypted connections, also known as two-way encryption or mutual TLS (mTLS). The usual configuration for TLS is that only the client authenticates the server, whereas mTLS ensures that both parties are authenticated. Only validated devices and users can become authorized members of the SDP architecture.
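In Python’s standard `ssl` module, the difference between one-way and mutual TLS on the server side comes down to whether a client certificate is required. The helper below is a sketch: the function name and the certificate file parameters are hypothetical, and the files are only loaded if paths are supplied.

```python
import ssl


def make_mtls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Build a server-side TLS context that demands a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # A plain TLS server leaves verify_mode at CERT_NONE.
    # Requiring a client certificate is what makes the handshake mutual.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity, presented to the client.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # The CA that must have issued any acceptable client certificate.
        context.load_verify_locations(cafile=client_ca_file)
    return context
```

With this context, a peer that replays a sniffed SPA packet but holds no certificate issued by the trusted CA fails the handshake before any application data is exchanged.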

We should also remember that SPA is not a security feature that protects everything. It has its benefits but does not replace existing security technologies; SPA should work alongside them. The main reason for its introduction to the SDP world is to overcome a problem with TCP: TCP connects and then authenticates. With SPA, you authenticate first and only then connect.

**The World of TCP & SDP**

When clients want to access an application with TCP, they must first set up a connection. There needs to be direct connectivity between the client and the application. So, this requires the application to be reachable and is carried out with IP addresses on each end. Then, once the connect stage is done, there is an authentication phase.

Once the authentication stage is completed, we can pass data. In other words, the stages are connect, authenticate, and then pass data. SDP reverses this.

The center of the software-defined perimeter is trust.

In Software-Defined Perimeter, we must establish trust between the client and the application before the client can set up the connection. The trust is bi-directional between the client and the SDP service and the application to the SDP service. Once trust has been established, we move into the next stage, authentication.

Once this has been established, we can connect the user to the application. This flips the entire security model and makes it more robust. The user has no idea of where the applications are located. The protected assets are hidden behind the SDP service, which in most cases is the SDP gateway, or some call this a connector.
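The reversed ordering can be expressed as a session that refuses to connect until trust and authentication have both happened. The stage names and the `SdpSession` class are hypothetical and exist only to illustrate the sequence described here.

```python
from enum import Enum, auto


class Stage(Enum):
    NEW = auto()
    TRUSTED = auto()
    AUTHENTICATED = auto()
    CONNECTED = auto()


class SdpSession:
    """Enforce the order: establish trust, authenticate, and only then connect."""

    def __init__(self):
        self.stage = Stage.NEW

    def _advance(self, expected, nxt):
        if self.stage is not expected:
            raise PermissionError(
                f"cannot move to {nxt.name} from {self.stage.name}"
            )
        self.stage = nxt

    def establish_trust(self):
        self._advance(Stage.NEW, Stage.TRUSTED)

    def authenticate(self):
        self._advance(Stage.TRUSTED, Stage.AUTHENTICATED)

    def connect(self):
        self._advance(Stage.AUTHENTICATED, Stage.CONNECTED)
```

Calling `connect()` on a new session raises `PermissionError`, whereas in the plain TCP model the connection would already exist before any authentication took place.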

Cloud Security Alliance (CSA) SDP

With the Cloud Security Alliance SDP architecture, we have several components:

Firstly, there are the initiating hosts (IH), which are the clients, and the accepting hosts (AH), which provide the services. The IH devices can be any endpoint device that can run the SDP software, including user-facing laptops and smartphones. Many SDP vendors also offer remote browser isolation-based solutions that need no SDP client software. The IH, as you might expect, initiates the connections.

With an SDP browser-based solution, the user accesses the applications using a web browser and only works with applications that can speak across a browser. So, it doesn’t give you the full range of TCP and UDP ports, but you can do many things that speak natively across HTML5.

Unlike an endpoint with the client installed, most browser-based solutions do not run additional security posture checks on the end-user device.

Software-Defined Perimeter: Browser-based solution

The AHs accept connections from the IHs and provide a set of services protected securely by the SDP service. They are under the administrative control of the enterprise domain. They do not acknowledge communication from any other host and will not respond to non-provisioned requests. This architecture enables the control plane to remain separate from the data plane, achieving a scalable security system.

The IH and AH devices connect to an SDP controller that secures access to isolated assets by ensuring that the users and their devices are authenticated and authorized before granting network access. After authenticating an IH, the SDP controller determines the list of AHs with which the IH is authorized to communicate. The AHs are then sent the list of IHs from which they should accept connections.
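The controller’s two jobs, telling an IH which AHs it may reach and telling each AH which IHs to accept, can be sketched as follows. This is not the CSA specification’s message format: the `SdpController` class, the shared-secret check standing in for real authentication, and the host identifiers are all assumptions.

```python
import hmac


class SdpController:
    def __init__(self, credentials, authorizations):
        self._credentials = credentials        # IH id -> secret (stand-in for real auth)
        self._authorizations = authorizations  # IH id -> set of AH ids
        self.ah_allow_lists = {}               # AH id -> set of IH ids to accept

    def authenticate_ih(self, ih_id, secret):
        """Return the AHs this IH may contact and update each AH's allow-list."""
        expected = self._credentials.get(ih_id)
        if expected is None or not hmac.compare_digest(expected, secret):
            return []  # unauthenticated hosts learn nothing about the AHs
        allowed_ahs = sorted(self._authorizations.get(ih_id, set()))
        for ah_id in allowed_ahs:
            # In a real deployment this would be a message pushed to the AH.
            self.ah_allow_lists.setdefault(ah_id, set()).add(ih_id)
        return allowed_ahs
```

Until an IH authenticates, no AH has that IH on its allow-list, so the AHs continue to ignore it.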

Aside from the hosts and the controller, we have the SDP gateway component, which provides authorized users and devices access to protected processes and services. The protected assets are located behind the gateway and can be architecturally positioned in multiple locations, such as the cloud or on-premise. The gateways can exist in various locations simultaneously.

**Highlighting Dynamic Tunnelling**

A user with multiple tunnels to multiple gateways is expected in the real world. It’s not a static path or a one-to-one relationship but a user-to-application relationship. The applications can exist everywhere, and the tunnel is dynamic and ephemeral.

For a client to connect to the gateway, latency or SYN, SYN/ACK round-trip time (RTT) testing should be performed to determine the Internet links’ performance. This ensures that the application access path always uses the best gateway, improving application performance.
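One simple way to approximate this test from a client is to time a TCP connect to each candidate gateway and pick the fastest reachable one. The sketch below makes that assumption; the function names and gateway labels are hypothetical, and a production client would likely repeat and average the measurements.

```python
import socket
import time


def tcp_connect_rtt(host, port=443, timeout=2.0):
    """Approximate the SYN, SYN/ACK round trip by timing a TCP connect."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return time.perf_counter() - start
    except OSError:
        return None  # unreachable or timed out


def pick_gateway(rtts):
    """Choose the gateway with the lowest measured RTT, ignoring unreachable ones."""
    reachable = {gw: rtt for gw, rtt in rtts.items() if rtt is not None}
    if not reachable:
        return None
    return min(reachable, key=reachable.get)
```

A client would call `tcp_connect_rtt` once per gateway, collect the results in a dictionary, and pass that dictionary to `pick_gateway` before building its tunnel.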

Remember that the gateway only connects outbound on TCP port 443 (mTLS), and as it acts on behalf of the internal applications, it needs access to the internal apps. As a result, depending on where you position the gateway, whether internal to the LAN, in a virtual private cloud (VPC), or in the DMZ protected by local firewalls, ports may need to be opened on the existing firewall.

**Future of Software-Defined Perimeter**

As the digital landscape evolves, secure network access becomes even more crucial. The future of SDP looks promising, with advancements in technologies like Artificial Intelligence and Machine Learning enabling more intelligent threat detection and mitigation.

In an era where data breaches are a constant threat, organizations must stay ahead of cybercriminals by adopting advanced security measures. Software Defined Perimeter offers a robust, scalable, and dynamic security framework that ensures secure access to critical resources.

By embracing SDP, organizations can significantly reduce their attack surface, enhance network performance, and protect sensitive data from unauthorized access. The time to leverage the power of Software Defined Perimeter is now.

Closing Points on SDP

At its core, a Software Defined Perimeter is a security framework designed to protect networked applications by concealing them from external users. Unlike traditional security measures that rely on a perimeter-based approach, SDP focuses on identity-based access controls. This means that users must be authenticated and authorized before they can even see the resources they’re trying to access. By effectively creating a “black cloud,” SDP ensures that only legitimate users can interact with the network, significantly reducing the risk of unauthorized access.

The operation of an SDP is based on a simple yet powerful principle: “Verify first, connect later.” It employs a multi-step process that involves:

1. **User Authentication**: Before any connection is established, SDP verifies the identity of the user or device attempting to connect.

2. **Access Validation**: Once authenticated, the system checks the user’s permissions and determines whether access should be granted.

3. **Dynamic Environment**: SDP dynamically provisions network connections, ensuring that only the necessary resources are exposed to the user.

This approach not only minimizes the attack surface but also adapts to the changing needs of the network, providing a flexible and scalable security solution.

The implementation of a Software Defined Perimeter offers numerous benefits:

– **Enhanced Security**: By hiding network resources and requiring stringent authentication, SDP provides a robust defense against cyber threats.

– **Reduced Attack Surface**: SDP ensures that only authorized individuals have access to specific resources, significantly reducing potential vulnerabilities.

– **Scalability and Flexibility**: As organizations grow, SDP can easily scale to meet their expanding security needs without requiring substantial changes to the existing infrastructure.

– **Improved User Experience**: With its streamlined access process, SDP can improve the overall user experience by reducing the friction often associated with security measures.

Summary: Software-Defined Perimeter

In today’s interconnected world, secure and flexible network solutions are paramount. Traditional perimeter-based security models can no longer protect sensitive data from sophisticated cyber threats. This is where the Software Defined Perimeter (SDP) comes into play, revolutionizing how we approach network security.

Understanding the Software-Defined Perimeter

The concept of the Software Defined Perimeter might seem complex at first, but at heart it is a security framework that focuses on dynamically creating secure network connections as needed. Unlike traditional network architectures, where a fixed perimeter is established, SDP allows for granular access controls and encryption at the application level, ensuring that only authorized users can access specific resources.

Key Benefits of Implementing an SDP Solution

Implementing a Software-Defined Perimeter offers numerous advantages for organizations seeking robust and adaptive security measures. First, it provides a proactive defense against unauthorized access, as resources are effectively hidden from view until authorized users are authenticated. Additionally, SDP solutions enable organizations to enforce fine-grained access controls, reducing the risk of internal breaches and data exfiltration. Moreover, SDP simplifies the management of access policies, allowing for centralized control and greater visibility into network traffic.

Overcoming Network Limitations with SDP

Traditional network architectures often struggle to accommodate the demands of modern business operations, especially in scenarios involving remote work, cloud-based applications, and third-party partnerships. SDP addresses these challenges by providing secure access to resources regardless of their location or the user’s device. This flexibility ensures employees can work efficiently from anywhere while safeguarding sensitive data from potential threats.

Implementing an SDP Solution: Best Practices

When implementing an SDP solution, certain best practices should be followed to ensure a successful deployment. Firstly, organizations should thoroughly assess their existing network infrastructure and identify the critical assets that require protection. Next, selecting a reliable SDP solution provider that aligns with the organization’s specific needs and industry requirements is essential. Lastly, a phased approach to implementation can help mitigate risks and ensure a smooth transition for both users and IT teams.

Conclusion:

The Software Defined Perimeter represents a paradigm shift in network security, offering organizations a dynamic and scalable solution to protect their valuable assets. By adopting an SDP approach, businesses can achieve a robust security posture, enable seamless remote access, and adapt to the evolving threat landscape. Embracing the power of the Software Defined Perimeter is a proactive step toward safeguarding sensitive data and ensuring a resilient network infrastructure.