Data Center Performance

In today's digital era, where data is the lifeblood of businesses, data center performance plays a crucial role in ensuring the seamless functioning of various operations. A data center serves as the backbone of an organization, housing critical infrastructure and storing vast amounts of data. In this blog post, we will explore the significance of data center performance and its impact on businesses.

Data centers are nerve centers that house servers, networking equipment, and storage systems. They provide the necessary infrastructure to store, process, and distribute data efficiently. These facilities ensure high availability, reliability, and data security, essential for businesses to operate smoothly in today's digital landscape.

Before diving into performance optimization, it is crucial to conduct a comprehensive assessment of the existing data center infrastructure. This includes evaluating hardware capabilities, network architecture, cooling systems, and power distribution. By identifying any bottlenecks or areas of improvement, organizations can lay the foundation for enhanced performance.

One of the major factors that can significantly impact data center performance is inadequate cooling. Overheating can lead to hardware malfunctions and reduced operational efficiency. By implementing efficient cooling solutions such as precision air conditioning, hot and cold aisle containment, and liquid cooling technologies, organizations can maintain optimal temperatures and maximize performance.

Virtualization and automation technologies have revolutionized data center operations. By consolidating multiple physical servers into virtual machines, organizations can optimize resource utilization and improve overall performance. Automation further streamlines processes, allowing for faster provisioning, efficient workload management, and proactive monitoring of performance metrics.

Data center performance heavily relies on the speed and reliability of the network infrastructure. Network optimization techniques, such as load balancing, traffic shaping, and Quality of Service (QoS) implementations, ensure efficient data transmission and minimize latency. Additionally, effective bandwidth management helps prioritize critical applications and prevent congestion, leading to improved performance.

Unforeseen events can disrupt data center operations, resulting in downtime and performance degradation. By implementing redundancy measures such as backup power supplies, redundant network connections, and data replication, organizations can ensure continuous availability and mitigate the impact of potential disasters on performance.

Conclusion: In a digital landscape driven by data, optimizing data center performance is paramount. By assessing the current infrastructure, implementing efficient cooling solutions, harnessing virtualization and automation, optimizing networks, and ensuring redundancy, organizations can unleash the power within their data centers. Embracing these strategies will not only enhance performance but also pave the way for scalability, reliability, and a seamless user experience.

Matt Conran

Highlights: Data Center Performance

Understanding Data Center Speed

Data center speed refers to the rate at which data can be processed, transferred, and accessed within a data center infrastructure. It encompasses various aspects, including network speed, processing power, storage capabilities, and overall system performance. As technology advances, the demand for faster data center speeds grows exponentially.

In today’s digital landscape, real-time applications such as video streaming, online gaming, and financial transactions require lightning-fast data center speeds. Processing and delivering data in real-time is essential for providing users with seamless experiences and reducing latency issues. Data centers with high-speed capabilities ensure smooth streaming, responsive gameplay, and swift financial transactions.

High-Speed Networking

High-speed networking forms the backbone of data centers, enabling efficient communication between servers, storage systems, and end-users. Technologies like Ethernet, fiber optics, and high-speed interconnects facilitate rapid data transfer rates, minimizing bottlenecks and optimizing overall performance. By investing in advanced networking infrastructure, data centers can achieve remarkable speeds and meet the demands of today’s data-intensive applications.

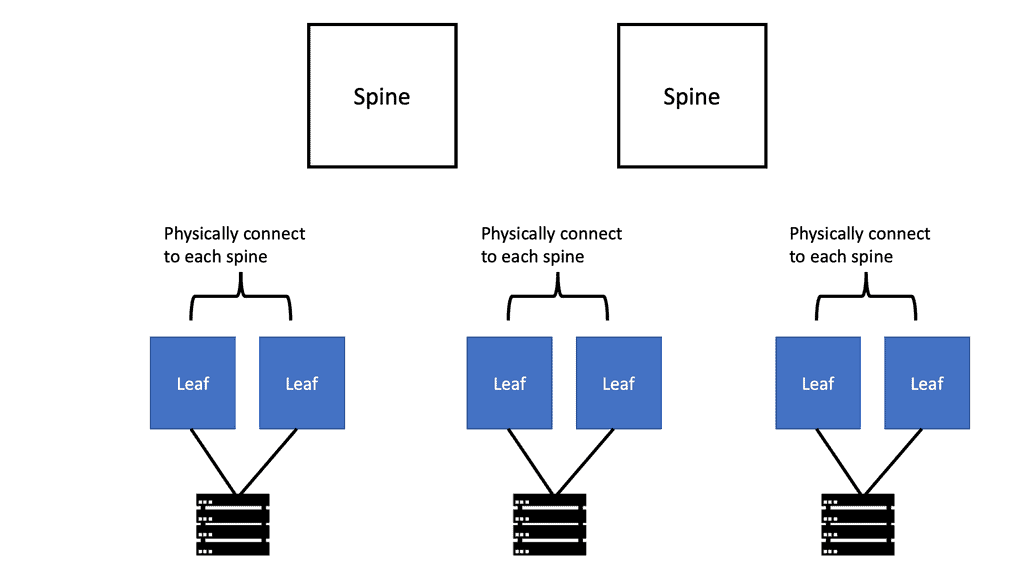

Leaf and spine performance

Leaf and spine architecture is a network design approach that provides high bandwidth, low latency, and seamless scalability. The leaf switches act as access switches, connecting end devices, while the spine switches form a non-blocking fabric for efficient data forwarding. This architectural design ensures consistent performance and minimizes network congestion.

Understanding TCP Performance Parameters

TCP performance parameters are settings that govern the behavior and efficiency of TCP connections. These parameters control various aspects of the TCP protocol, including congestion control, window size, retransmission behavior, and more. Network administrators and engineers can tailor TCP behavior to specific network conditions and requirements by tweaking these parameters.

1. Window Size: The TCP window size determines how much data can be sent before receiving an acknowledgment. Optimizing the window size can help maximize throughput and minimize latency.

2. Congestion Control Algorithms: TCP employs various congestion control algorithms, such as Reno, NewReno, and CUBIC. Each algorithm handles congestion differently, and selecting the appropriate one for specific network scenarios is vital.

3. Maximum Segment Size (MSS): MSS refers to the maximum amount of data sent in a single TCP segment. Adjusting the MSS can optimize efficiency and reduce the overhead associated with packet fragmentation.

Now that we understand the significance of TCP performance parameters, let’s explore how to tune them for optimal performance. Factors such as network bandwidth, latency, and the specific requirements of the applications running on the network must be considered.

1. Analyzing Network Conditions: Conduct thorough network analysis to determine the ideal values for TCP performance parameters. This analysis examines round-trip time (RTT), packet loss, and available bandwidth.

2. Testing and Iteration: Implement changes to TCP performance parameters gradually and conduct thorough testing to assess the impact on network performance. Fine-tuning may require multiple iterations to achieve the desired results.
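As a rough illustration of how RTT and bandwidth feed into window sizing, the sketch below computes the bandwidth-delay product (the amount of data that must be in flight to keep a path full) and the throughput ceiling imposed by a given window. The function names and the example figures (10 Gbps, 1 ms RTT) are assumptions chosen for illustration, not values from any particular network.

```python
def bandwidth_delay_product(bandwidth_bps: int, rtt_us: int) -> int:
    """Bytes that must be in flight to fill the path (integer arithmetic)."""
    # bits/s * microseconds / 1_000_000 = bits; divide by 8 for bytes
    return bandwidth_bps * rtt_us // 8_000_000


def max_throughput_bps(window_bytes: int, rtt_us: int) -> int:
    """Throughput ceiling when at most one window can be sent per RTT."""
    return window_bytes * 8 * 1_000_000 // rtt_us


# Hypothetical path: 10 Gbps link with a 1 ms round-trip time
bdp = bandwidth_delay_product(10_000_000_000, 1000)
print(bdp)  # 1250000 bytes, roughly 1.25 MB in flight

# A 65,535-byte window (no window scaling) on the same path
print(max_throughput_bps(65535, 1000))  # 524280000 bps, about 524 Mbps
```

On this assumed path, an unscaled 64 KB window would cap a single connection at roughly 5% of the link rate, which is why window size is usually the first parameter to examine.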

Various tools and utilities are available to simplify the process of monitoring and optimizing TCP performance parameters. Network administrators can leverage tools like Wireshark, tcpdump, and Netalyzer to analyze network traffic, identify bottlenecks, and make informed decisions regarding parameter adjustments.

What is TCP MSS?

TCP MSS refers to the maximum amount of data encapsulated within a single TCP segment. It represents the largest payload size that can be sent over a TCP connection without fragmentation. Each host advertises its MSS in the SYN segment during the TCP handshake, based on its own capabilities, and each side then sends segments no larger than the value advertised by its peer.

Several factors influence the determination of TCP MSS. One crucial factor is the network path’s Maximum Transmission Unit (MTU) between the communicating hosts. The MTU represents the maximum packet size that can be transmitted without fragmentation across the underlying network infrastructure. TCP MSS is generally set to the MTU minus the IP and TCP headers’ overhead. It ensures the data fits within a single packet and avoids unnecessary fragmentation.
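The relationship between MTU and MSS can be sketched in a few lines. This minimal example assumes IP and TCP headers without options (20 bytes for IPv4, 40 bytes for IPv6, 20 bytes for TCP); the function name is hypothetical.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
IPV6_HEADER = 40  # bytes, fixed IPv6 header
TCP_HEADER = 20   # bytes, assuming no TCP options


def mss_for_mtu(mtu: int, ipv6: bool = False) -> int:
    """Largest TCP payload that fits in one packet of the given MTU."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - TCP_HEADER


print(mss_for_mtu(1500))             # 1460 (standard Ethernet, IPv4)
print(mss_for_mtu(1500, ipv6=True))  # 1440 (standard Ethernet, IPv6)
print(mss_for_mtu(9000))             # 8960 (jumbo frames, IPv4)
```

TCP options such as timestamps reduce the usable payload further, so the figures above are upper bounds.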

Understanding the implications of TCP MSS is essential for optimizing network performance. When the TCP MSS value is higher, it allows for larger data payloads in each segment, which can improve overall throughput. However, larger MSS values also increase the risk of packet fragmentation, especially if the network path has a smaller MTU. Fragmented packets can lead to performance degradation, increased latency, and potential retransmissions.

To mitigate the issues arising from fragmentation, TCP utilizes a mechanism called Path MTU Discovery (PMTUD). PMTUD allows TCP to dynamically discover the smallest MTU along the network path and adjust the TCP MSS value accordingly. By determining the optimal MSS value, PMTUD ensures efficient data transmission without relying on packet fragmentation.
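The discovery loop can be modeled with a short simulation. This is a simplified sketch, not an implementation of the protocol: it assumes every hop that cannot forward a packet reports its own MTU back to the sender (the role played by ICMP "fragmentation needed" messages), and the hop MTU values are invented for the example.

```python
def discover_path_mtu(local_mtu: int, hop_mtus: list[int]) -> int:
    """Shrink the packet size until it passes every hop on the path."""
    packet_size = local_mtu
    while True:
        for hop_mtu in hop_mtus:
            if packet_size > hop_mtu:
                # The hop rejects the packet and reports its MTU;
                # the sender lowers its estimate and retries.
                packet_size = hop_mtu
                break
        else:
            # No hop rejected the packet: this size fits the whole path.
            return packet_size


# Hypothetical path with a 1400-byte tunnel and a 1280-byte link
path_mtu = discover_path_mtu(1500, [1500, 1400, 1500, 1280])
print(path_mtu)            # 1280
print(path_mtu - 20 - 20)  # 1240, the IPv4 MSS for that path
```

In practice the process depends on those ICMP messages reaching the sender, so filtering them along the path can stall connections.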

The Basics of Spanning Tree Protocol (STP)

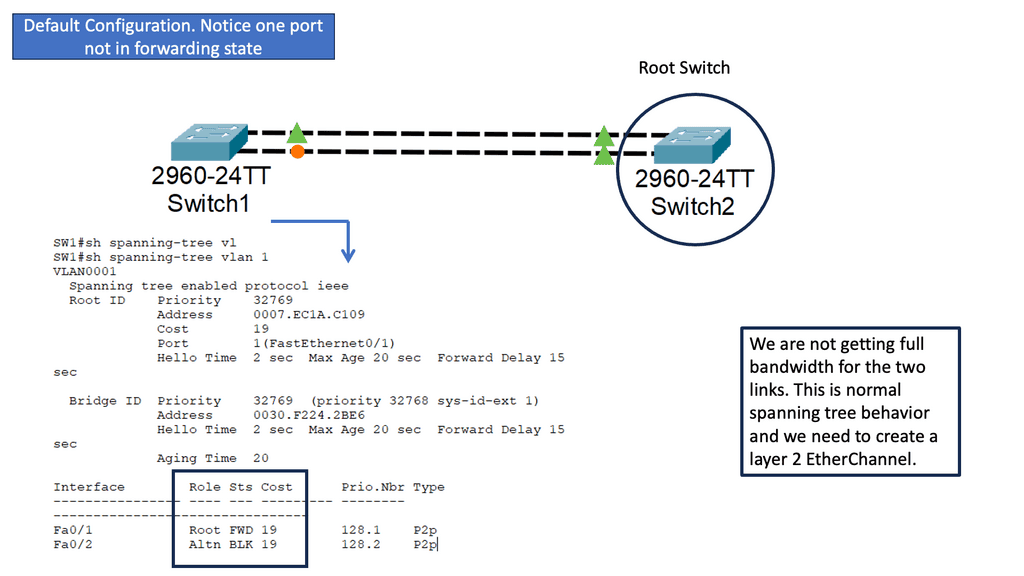

STP is a layer 2 network protocol that prevents loops in Ethernet networks. It creates a loop-free logical topology, ensuring a single active path between any network devices. We will discuss the critical components of STP, such as the root bridge, designated ports, and blocking ports. Understanding these elements is fundamental to comprehending the overall functionality of STP.
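Root bridge election is a good example of how STP makes decisions: the bridge with the numerically lowest bridge ID (priority first, then MAC address) wins. The sketch below models only that comparison; the switch names, priorities, and MAC addresses are made up for illustration.

```python
from typing import NamedTuple


class Bridge(NamedTuple):
    name: str
    priority: int
    mac: str  # e.g. "00:00:00:00:00:0a"


def bridge_id(bridge: Bridge) -> tuple[int, int]:
    """Bridge ID as a comparable (priority, MAC-as-integer) pair."""
    return (bridge.priority, int(bridge.mac.replace(":", ""), 16))


def elect_root(bridges: list[Bridge]) -> Bridge:
    """The lowest bridge ID becomes the root bridge."""
    return min(bridges, key=bridge_id)


switches = [
    Bridge("sw1", 32768, "00:00:00:00:00:0c"),
    Bridge("sw2", 4096, "00:00:00:00:00:0f"),
    Bridge("sw3", 4096, "00:00:00:00:00:0a"),
]
print(elect_root(switches).name)  # sw3: priority ties with sw2, lower MAC wins
```

This is why administrators usually set an explicit low priority on the intended root rather than leaving the outcome to MAC addresses.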

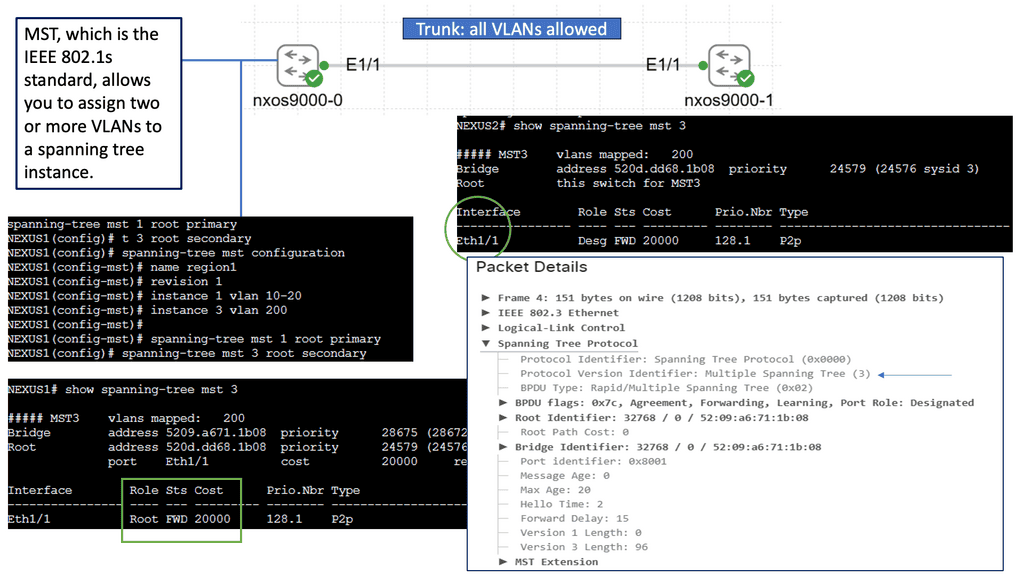

While STP provides loop prevention and network redundancy, it has certain limitations. For instance, in large networks, STP can be inefficient due to the use of a single spanning tree for all VLANs. Multiple Spanning Tree (MST) addresses this drawback by dividing the network into multiple spanning tree instances, each with its own set of VLANs. We will explore the motivations behind MST and how it overcomes the limitations of STP.

Deploying MST in a network requires careful planning and configuration. We will discuss the steps for implementing MST, including creating MST regions, assigning VLANs to instances, and configuring the root bridges. Additionally, we will provide practical examples and best practices to ensure a successful MST deployment.

Understanding Nexus 9000 Series VRRP

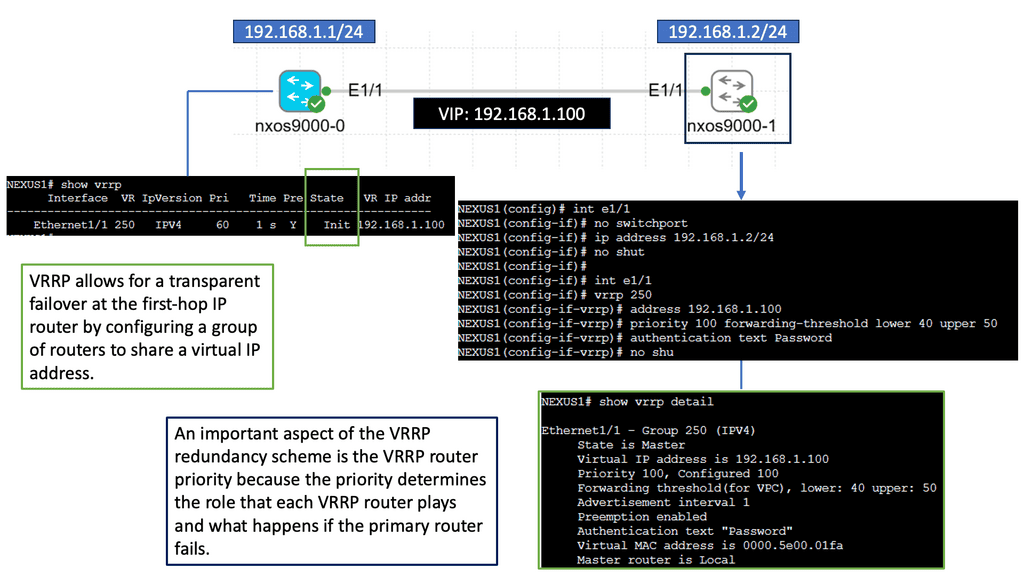

At its core, VRRP (Virtual Router Redundancy Protocol) on the Nexus 9000 Series is a first-hop redundancy protocol rather than a dynamic routing protocol. It allows multiple physical routers to present a single virtual router that hosts use as their default gateway. This virtual router offers redundancy and high availability by seamlessly enabling failover between the physical routers. By utilizing VRRP, network administrators can ensure that their networks remain operational despite hardware or software failures.

Nexus 9000 Series VRRP can also share load across multiple routers. By configuring several VRRP groups, each with a different master, and pointing different hosts at different virtual IP addresses, traffic is spread across the physical routers instead of leaving the backups idle. Additionally, because hosts only ever see the virtual IP address, failover is transparent and requires no changes to host configuration. This flexibility makes Nexus 9000 Series VRRP a good fit for businesses with stringent uptime requirements.
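To make the failover behavior concrete, here is a minimal sketch of VRRP master election: the router with the highest priority becomes master, and a tie is broken by the higher primary IP address. The router names, priorities, and addresses are hypothetical, and timers, preemption, and advertisements are deliberately left out.

```python
import ipaddress
from typing import NamedTuple


class VrrpRouter(NamedTuple):
    name: str
    priority: int  # higher is preferred
    ip: str        # primary interface address


def elect_master(routers: list[VrrpRouter]) -> VrrpRouter:
    """Highest priority wins; ties go to the higher primary IP address."""
    return max(
        routers,
        key=lambda r: (r.priority, int(ipaddress.ip_address(r.ip))),
    )


group = [
    VrrpRouter("n9k-a", 120, "10.0.0.2"),
    VrrpRouter("n9k-b", 100, "10.0.0.3"),
    VrrpRouter("n9k-c", 100, "10.0.0.4"),
]
print(elect_master(group).name)  # n9k-a (highest priority)

# Simulate a failure of the master: the survivors re-elect
survivors = [r for r in group if r.name != "n9k-a"]
print(elect_master(survivors).name)  # n9k-c (priority tie, higher IP)
```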

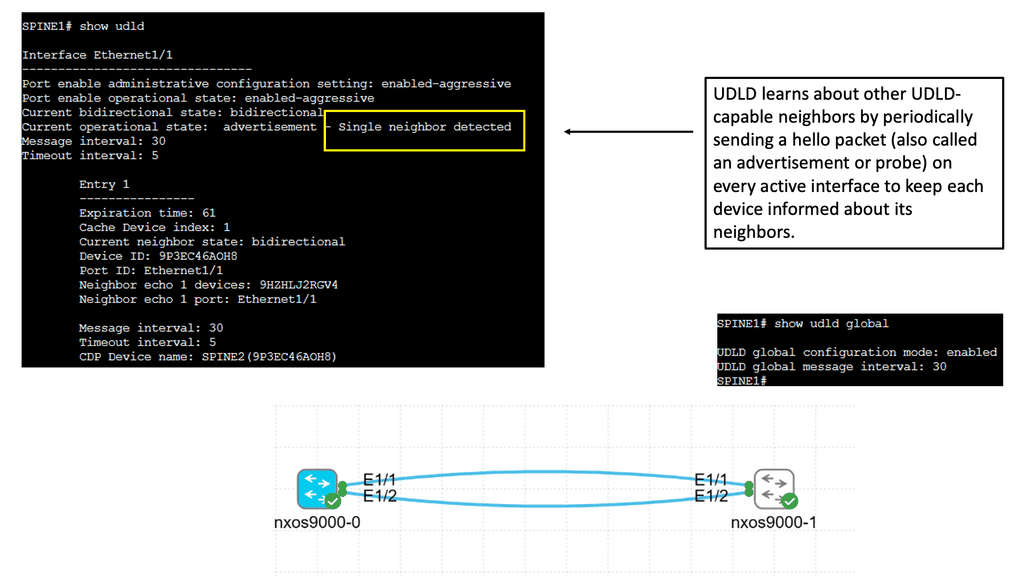

Understanding UDLD

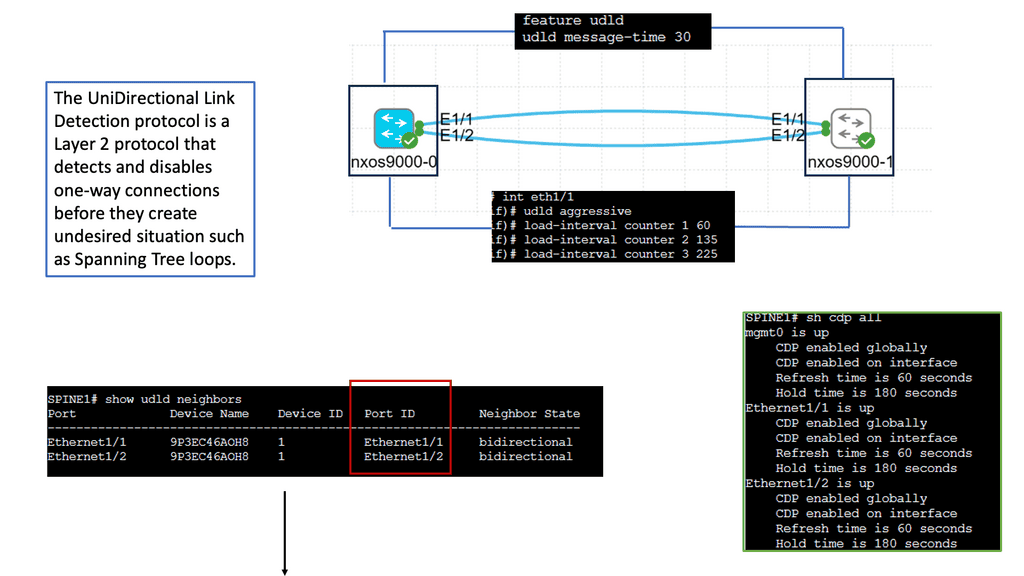

UDLD is a Layer 2 protocol that detects and mitigates unidirectional links, which can cause network loops and data loss. It operates by exchanging periodic messages between neighboring switches to verify that the link is bidirectional. If a unidirectional link is detected, UDLD immediately disables the affected port, preventing potential network disruptions.

Implementing UDLD brings several advantages to the network environment. Firstly, it enhances network reliability by proactively identifying and addressing unidirectional link issues. This helps to avoid potential network loops, packet loss, and other connectivity problems. Additionally, UDLD improves network troubleshooting capabilities by providing detailed information about the affected ports, facilitating quick resolution of link-related issues.

Configuring UDLD on Cisco Nexus 9000 switches is straightforward. It involves enabling UDLD globally on the device and enabling UDLD on specific interfaces. Additionally, administrators can fine-tune UDLD behavior by adjusting parameters such as message timers and retries. Proper deployment of UDLD in critical network segments adds an extra layer of protection against unidirectional link failures.

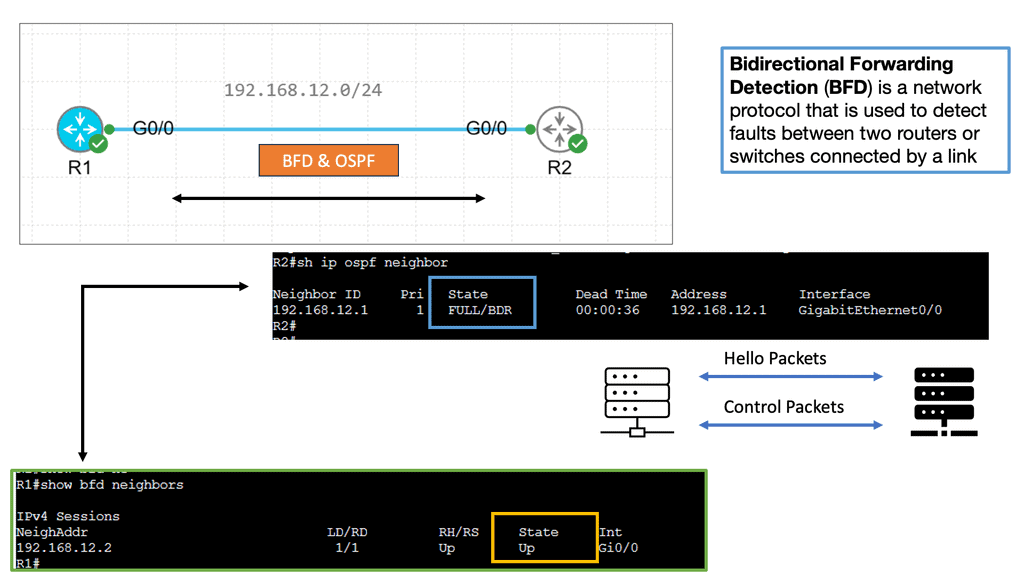

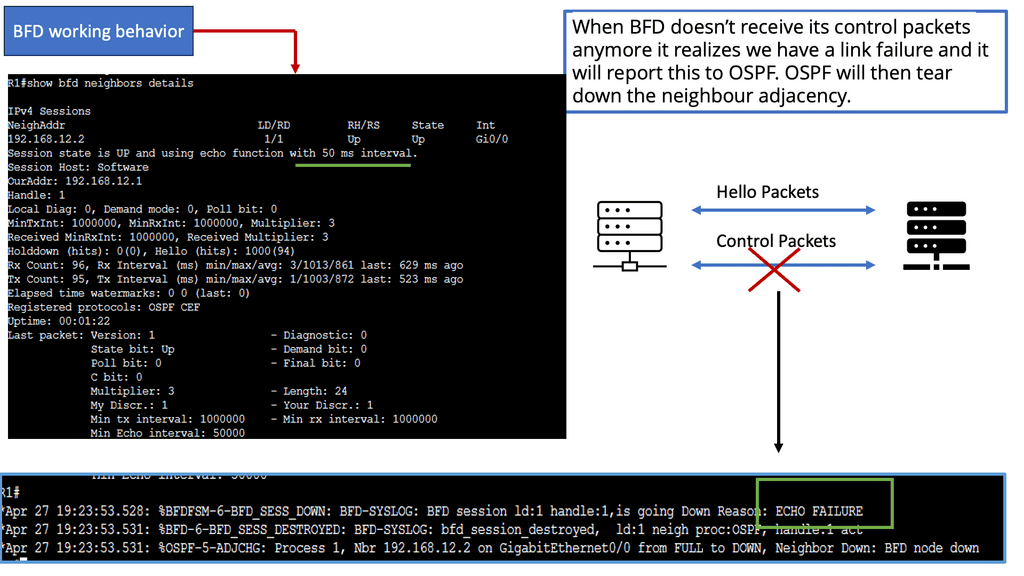

Example Technology: BFD for data center performance

BFD, an abbreviation for Bidirectional Forwarding Detection, is a protocol for detecting faults in the forwarding path between two systems. It offers rapid detection and notification of link failures, improving network reliability. Data centers use BFD to enhance performance and ensure seamless connectivity.

The advantages of running BFD in the data center are manifold. Let’s highlight a few key benefits:

a. Enhanced Network Reliability: BFD offers enhanced fault detection capabilities, leading to improved network reliability. Identifying link failures quickly allows fast remediation, minimizing downtime and ensuring uninterrupted connectivity.

b. Reduced Response Time: BFD significantly reduces response time by swiftly detecting network faults. This matters for applications where every second counts, such as financial transactions, real-time communication, or online gaming.

c. Proactive Network Monitoring: BFD enables proactive monitoring, giving administrators real-time insights into network performance. This allows for early detection of potential issues, enabling prompt troubleshooting and preventive measures.
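How quickly BFD detects a failure follows from two negotiated values. In asynchronous mode, as described in RFC 5880, the local detection time is the detect multiplier received from the remote system multiplied by the agreed transmit interval, which is the greater of the local required minimum receive interval and the remote desired minimum transmit interval. The sketch below encodes that calculation; the timer values are example inputs, not recommendations.

```python
def bfd_detection_time_ms(
    remote_detect_mult: int,
    local_required_min_rx_ms: int,
    remote_desired_min_tx_ms: int,
) -> int:
    """Time without received packets before the session is declared down."""
    agreed_interval = max(local_required_min_rx_ms, remote_desired_min_tx_ms)
    return remote_detect_mult * agreed_interval


# Both sides configured for 50 ms intervals with a multiplier of 3
print(bfd_detection_time_ms(3, 50, 50))   # 150 ms

# The slower side dictates the interval when the timers differ
print(bfd_detection_time_ms(3, 50, 300))  # 900 ms
```

Sub-second detection is what lets routing protocols and redundancy mechanisms react well before their own hello timers would expire.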

Factors Influencing Leaf and Spine Performance

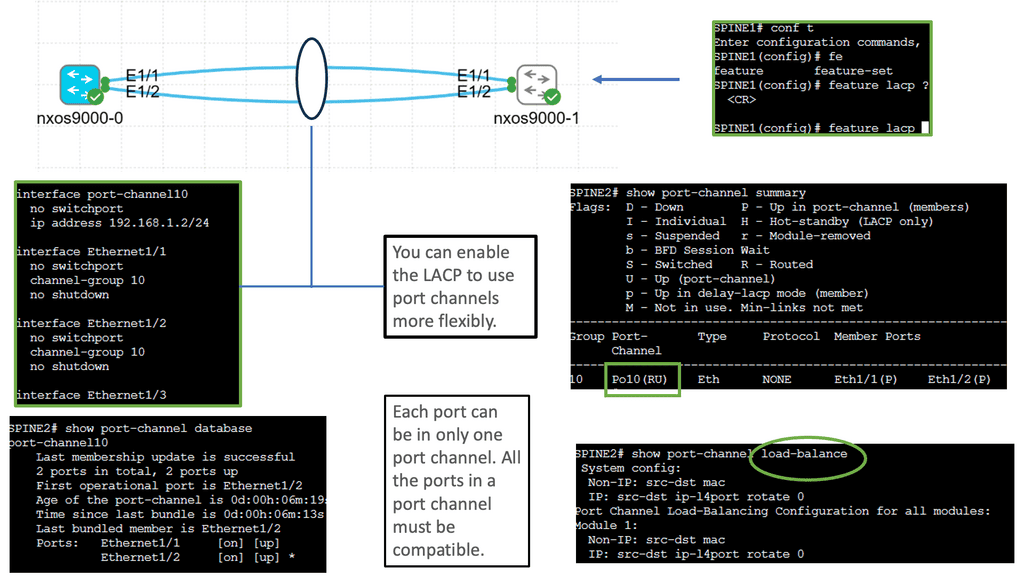

a) Bandwidth Management: Properly allocating and managing bandwidth among leaf and spine switches is vital to avoid bottlenecks. Link aggregation techniques, such as LACP (Link Aggregation Control Protocol), help with load balancing and redundancy.

b) Network Topology: The leaf and spine network topology design dramatically impacts performance. Ensuring equal interconnectivity between leaf and spine switches and maintaining appropriate spine switch redundancy enhances fault tolerance and overall performance.

c) Quality of Service (QoS): Implementing QoS mechanisms allows prioritization of critical traffic, ensuring smoother data flow and preventing congestion. Assigning appropriate QoS policies to different traffic types guarantees optimal leaf and spine performance.
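One simple way to reason about bandwidth allocation on a leaf switch is its oversubscription ratio: total server-facing bandwidth divided by total spine-facing bandwidth. The following sketch computes it as an exact fraction; the port counts and speeds are assumed examples rather than a reference design.

```python
from fractions import Fraction


def oversubscription_ratio(
    downlinks: int, downlink_gbps: int, uplinks: int, uplink_gbps: int
) -> Fraction:
    """Server-facing bandwidth divided by spine-facing bandwidth."""
    return Fraction(downlinks * downlink_gbps, uplinks * uplink_gbps)


# Hypothetical leaf: 48 x 10G server ports, 4 x 40G uplinks
print(oversubscription_ratio(48, 10, 4, 40))   # 3, i.e. 3:1 oversubscribed

# Same leaf with 6 x 100G uplinks
print(oversubscription_ratio(48, 10, 6, 100))  # 4/5, below 1:1
```

A ratio at or below 1 means the leaf can forward all server traffic to the spine at line rate; higher ratios rely on buffering and QoS during peaks.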

Performance Optimization Techniques

a) Traffic Engineering: Effective traffic engineering techniques, like ECMP (Equal-Cost Multipath), evenly distribute traffic across multiple paths, maximizing link utilization and minimizing latency. Dynamic routing protocols, such as OSPF (Open Shortest Path First) or BGP (Border Gateway Protocol), can be utilized for efficient traffic flow.

b) Buffer Management: Proper buffer allocation and management at leaf and spine switches prevent packet drops and ensure smooth data transmission. Tuning buffer sizes based on traffic patterns and requirements significantly improves leaf and spine performance.

c) Monitoring and Analysis: Regular monitoring and analysis of leaf and spine network performance help identify potential bottlenecks and latency issues. Utilizing network monitoring tools and implementing proactive measures based on real-time insights can enhance overall performance.
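ECMP, mentioned under traffic engineering above, typically picks a path by hashing fields of each flow so that packets of the same flow always follow the same path. The sketch below illustrates the idea with a software hash over a 5-tuple; real switches use their own hardware hash functions, and the spine names and flow values here are invented.

```python
import hashlib


def pick_path(flow: tuple, paths: list[str]) -> str:
    """Map a flow's 5-tuple to one of the equal-cost paths."""
    key = "|".join(map(str, flow)).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(paths)
    return paths[index]


spines = ["spine1", "spine2", "spine3", "spine4"]

# (source IP, destination IP, protocol, source port, destination port)
flow_a = ("10.1.1.10", "10.2.2.20", "tcp", 49152, 443)
flow_b = ("10.1.1.10", "10.2.2.20", "tcp", 49153, 443)

# The same flow always maps to the same spine, which avoids reordering
print(pick_path(flow_a, spines) == pick_path(flow_a, spines))  # True

# A different flow may or may not land on a different spine
print(pick_path(flow_b, spines) in spines)  # True
```

Because the distribution is per flow rather than per packet, a few very large flows can still load one path more heavily than the others.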

Planning for Future Growth

One of the primary objectives of scaling a data center is to ensure it can handle future growth and increased workloads. This requires careful planning and forecasting. Organizations must analyze their projected data storage and processing needs, considering anticipated business growth, emerging technologies, and industry trends. By accurately predicting future demands, businesses can design a scalable data center that can adapt to changing requirements.

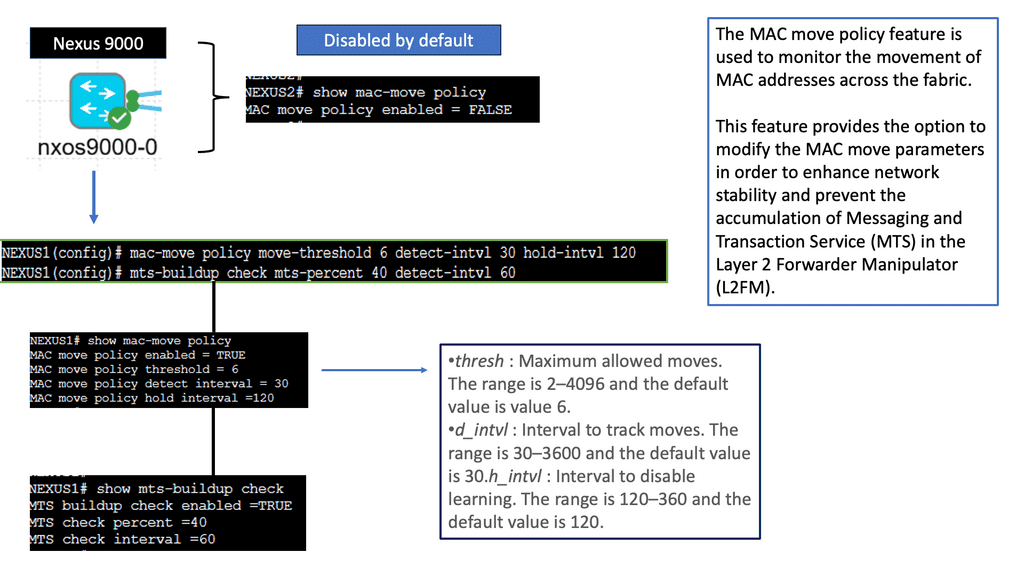

Understanding the MAC Move Policy

The MAC move policy, also known as the Move Limit feature, is designed to prevent MAC address flapping and enhance network stability. It allows network administrators to define how many times a MAC address can move within a specified period before triggering an action. By comprehending the MAC move policy’s purpose and functionality, administrators can better manage their network’s stability and performance.
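The counting logic behind such a policy can be sketched as a sliding window per MAC address. This is an illustrative model only: the class name, the limit of two moves, and the ten-second window are assumptions, not NX-OS defaults or its actual implementation.

```python
from collections import defaultdict, deque


class MacMoveMonitor:
    """Flags a MAC address that moves between ports too often."""

    def __init__(self, max_moves: int, window_seconds: float):
        self.max_moves = max_moves
        self.window = window_seconds
        self.last_port = {}               # MAC -> port where it was last seen
        self.moves = defaultdict(deque)   # MAC -> timestamps of recent moves

    def learn(self, mac: str, port: str, now: float) -> bool:
        """Record a sighting; return True if the move limit is exceeded."""
        previous = self.last_port.get(mac)
        self.last_port[mac] = port
        if previous is None or previous == port:
            return False  # first sighting or no move
        history = self.moves[mac]
        history.append(now)
        # Forget moves that fall outside the sliding window
        while history and now - history[0] > self.window:
            history.popleft()
        return len(history) > self.max_moves


monitor = MacMoveMonitor(max_moves=2, window_seconds=10)
mac = "00:11:22:33:44:55"
print(monitor.learn(mac, "Eth1/1", 0))  # False: first time seen
print(monitor.learn(mac, "Eth1/2", 1))  # False: 1 move
print(monitor.learn(mac, "Eth1/1", 2))  # False: 2 moves
print(monitor.learn(mac, "Eth1/2", 3))  # True: 3 moves within 10 seconds
```

What happens once the limit is exceeded (logging, shutting the port, and so on) is the configurable action the policy refers to.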

Troubleshooting MAC Move Issues

Network administrators may encounter issues related to MAC address moves despite implementing the MAC move policy. Here are some standard troubleshooting steps to consider:

1. Verifying MAC Move Configuration: It is crucial to double-check the MAC move configuration on Cisco NX-OS devices. Ensure that the policy is properly enabled and that the correct parameters, such as aging time and notification settings, are applied.

2. Analyzing MAC Move Logs: Dive deep into the MAC move logs to identify any patterns or anomalies. Look for recurring MAC move events that may indicate a misconfiguration or unauthorized activity.

3. Reviewing Network Topology Changes: Changes in the network topology can sometimes lead to unexpected MAC moves. Analyze recent network changes, such as new device deployments or link failures, to identify potential causes for MAC move issues.

Modular Design and Flexibility

Modular design has emerged as a game-changer in data center scaling. Organizations can add or remove resources flexibly and cost-effectively by adopting a modular approach. Modular data center components like prefabricated server modules and containerized solutions allow for rapid deployment and easy scalability. This reduces upfront costs and enables businesses to have faster time to market.

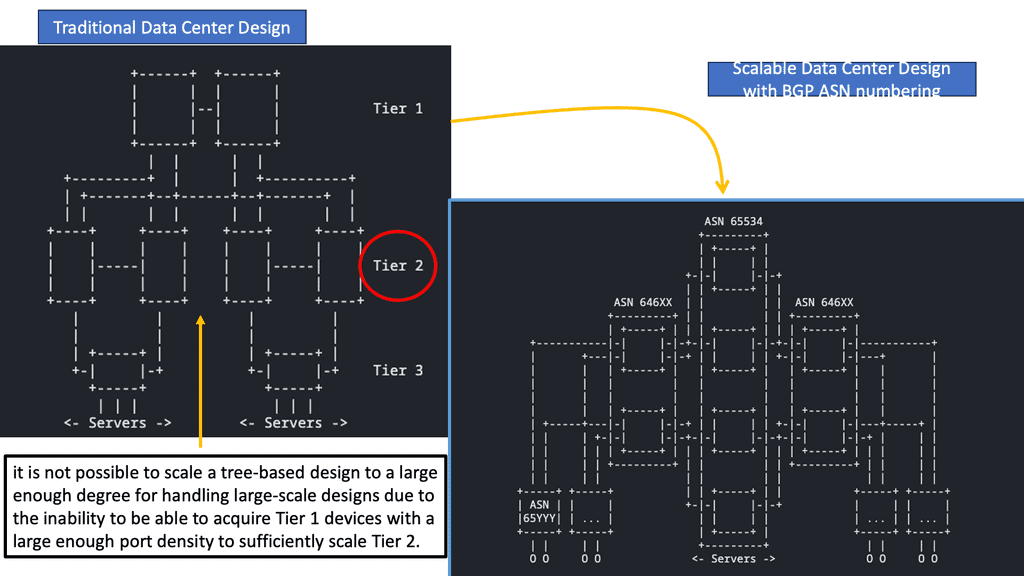

Example Technology: Traditional Design and the Move to VPC

The traditional three-tier architecture has three layers: core routers, aggregation routers (sometimes called distribution routers), and access switches. Layer 2 networks use the Spanning Tree Protocol (STP) to establish loop-free topologies between aggregation routers and access switches. STP has several advantages, including its simplicity and ease of use. Because VLANs are extended within each pod, the IP address and default gateway settings do not need to change when servers move within a pod. However, within a VLAN, STP never allows redundant paths to be used simultaneously.

To overcome the limitations of the Spanning Tree Protocol, Cisco introduced virtual port channel (vPC) technology in 2010. A vPC eliminates blocked ports from spanning trees, provides active-active uplinks between access switches and aggregation routers, and maximizes bandwidth usage.

In 2003, virtualization technology made it possible to pool computing, networking, and storage resources that had previously been segregated in pods at Layer 2 of the three-tier data center design. This revolutionary technology created a need for a larger Layer 2 domain.

With virtualized servers, applications are increasingly distributed, which increases east-west traffic as they access and share resources. Latency must be low and predictable to handle this traffic efficiently. In a three-tier data center, bandwidth becomes a bottleneck when only two parallel uplinks are active; vPC can provide four active parallel uplinks. Three-tier architectures also present the challenge of varying server-to-server latency.

A new data center design based on the Clos network was developed to overcome these limitations. With this architecture, server-to-server communication is high-bandwidth, low-latency, and non-blocking.

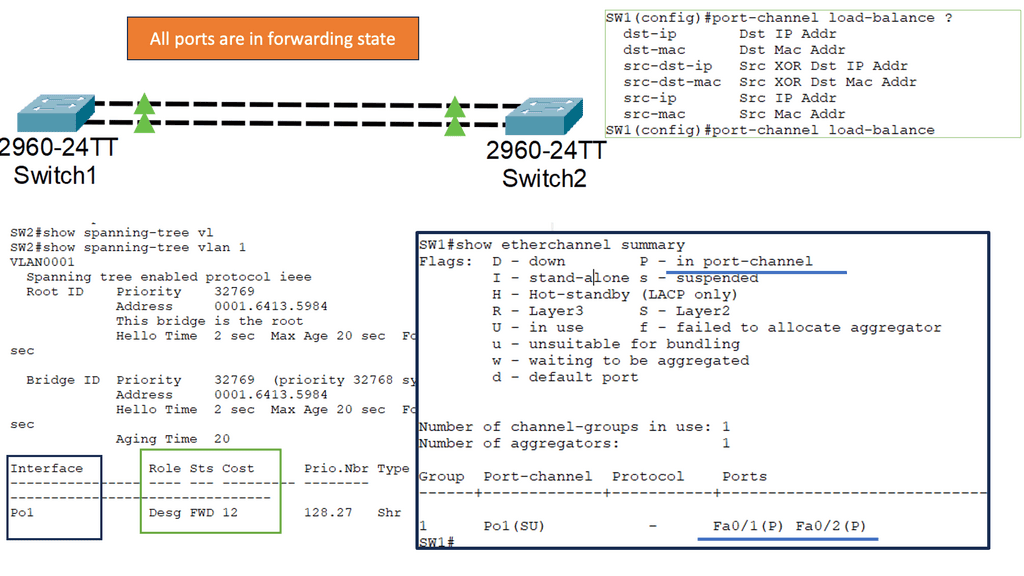

Understanding Layer 2 Etherchannel

Layer 2 Etherchannel, or Link Aggregation, allows multiple physical links between switches to be treated as a single logical link. This bundling of links increases the available bandwidth and provides load balancing across the aggregated links. It also enhances fault tolerance by creating redundancy in the network.

To configure Layer 2 Etherchannel, several steps need to be followed. Firstly, the participating interfaces on the switches need to be identified and grouped as a channel group. Once the channel group is formed, a protocol such as the Port Aggregation Protocol (PAgP) or Link Aggregation Control Protocol (LACP) must be selected to manage the bundle. The protocol ensures the links are synchronized and operate as a unified channel.

Understanding Layer 3 Etherchannel

Layer 3 Etherchannel, or routed Etherchannel, is a technique that aggregates multiple physical links into a single logical link. Unlike Layer 2 Etherchannel, which operates at the data link layer, Layer 3 Etherchannel operates at the network layer. This means it can provide load balancing and redundancy for routed traffic, making it a valuable asset in network design.

To implement Layer 3 Etherchannel, specific requirements must be met. Firstly, the switches involved must support Layer 3 Etherchannel and have compatible configurations. Secondly, the physical links to be bundled should have the same speed and duplex settings. Additionally, the member interfaces must be configured as routed (Layer 3) ports rather than as switchports assigned to a VLAN. Once these prerequisites are fulfilled, the configuration process involves creating a port channel interface, assigning it an IP address, adding the physical interfaces to the port channel, and configuring appropriate routing protocols or static routes.

Understanding Cisco Nexus 9000 Port Channel

Port channeling, also known as link aggregation or EtherChannel, allows us to combine multiple physical links between switches into a single logical link. This logical link provides increased bandwidth, redundancy, and load-balancing capabilities, ensuring efficient utilization of network resources. The Cisco Nexus 9000 port-channel takes this concept to a new level, offering advanced features and functionalities.

Configuring the Cisco Nexus 9000 port channel is a straightforward process. First, we need to identify the physical interfaces that will be part of the port channel. Then, we create the port-channel interface and assign it a number. Next, we associate the physical interfaces with the port channel using the “channel-group” command. We can also define additional parameters such as load balancing algorithm, mode (active or passive), and spanning tree protocol settings.

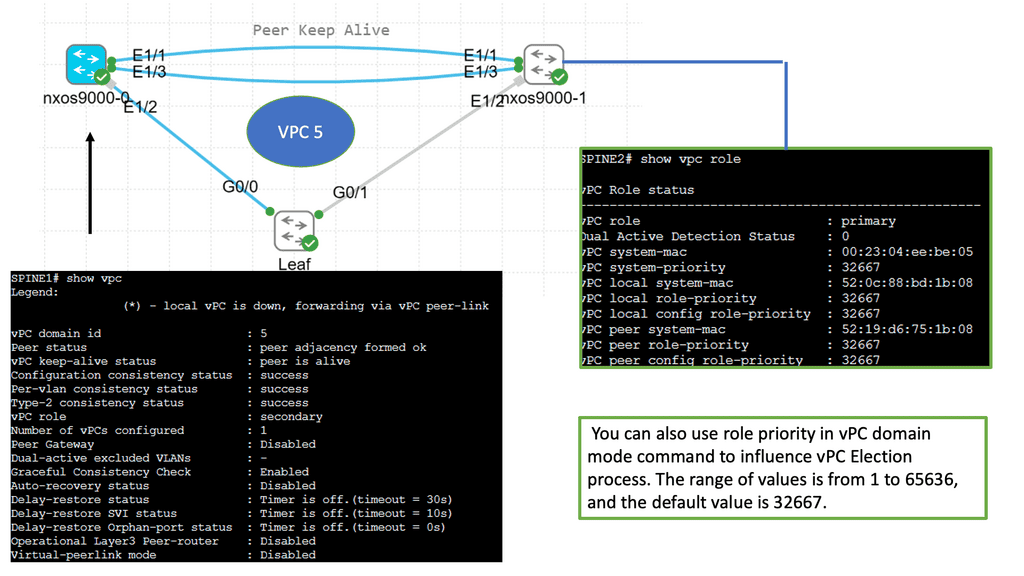

Understanding Virtual Port Channel (VPC)

VPC, in simple terms, allows links that are physically connected to two different Cisco Nexus switches to appear to a downstream device as a single port channel. This removes STP-blocked ports on those links and provides active-active forwarding, although STP normally remains enabled as a safeguard against loops. By combining the bandwidth and redundancy of multiple physical links, VPC ensures high availability and efficient utilization of network resources.

Configuring VPC on Cisco Nexus 9000 Series switches involves a series of steps. Both peer switches must be configured with the same vPC domain ID, a peer-keepalive link, and a peer-link. The peer-link synchronizes state between the two switches and can carry data traffic when needed, while the peer-keepalive link acts as a heartbeat between them. Next, member ports are added to port channels that are assigned to the VPC domain. These port channels then carry the required VLANs, creating a virtual network spanning the switches. Lastly, VPC parameters such as peer gateway, auto-recovery, and graceful convergence can be fine-tuned to suit specific requirements.

Advanced Topics

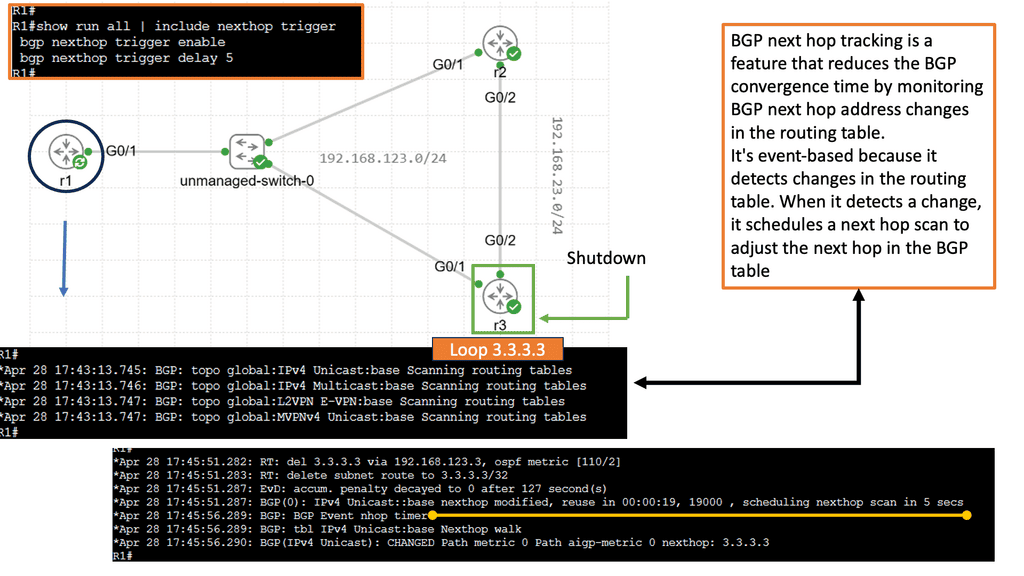

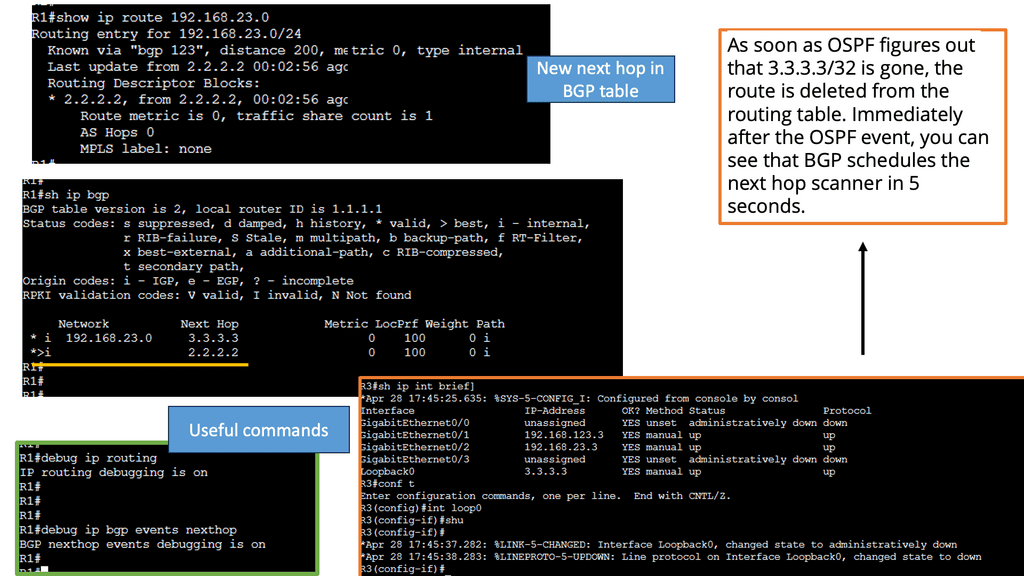

BGP Next Hop Tracking:

BGP next hop refers to the IP address used to reach a specific destination network. It represents the next hop router or gateway that should be used to forward packets towards the intended destination. Unlike traditional routing protocols, BGP considers multiple paths to reach a destination and selects the best path based on path length, AS (Autonomous System) path, and next hop information.

Importance of Next Hop Tracking:

Next-hop tracking within BGP is paramount as it ensures the proper functioning and stability of the network. By accurately tracking the next hop, BGP can quickly adapt to changes in network topology, link failures, or routing policy modifications. This proactive approach enables faster convergence times, reduces packet loss, and optimizes network performance.

Implementing BGP next-hop tracking offers network administrators and service providers numerous benefits. Firstly, it enhances network stability by promptly detecting and recovering from link failures or changes in network topology. Secondly, it optimizes traffic engineering capabilities, allowing for efficient traffic distribution and load balancing. Thirdly, because routes are re-evaluated as soon as their next hop becomes unreachable, it helps keep traffic from being forwarded toward next hops that are no longer valid.

Understanding BGP Route Reflection

BGP route reflection is a mechanism to alleviate the complexity of full-mesh BGP configurations. It allows for the propagation of routing information without requiring every router to establish a direct peering session with every other router in the network. Instead, route reflection introduces a hierarchical structure, dividing routers into different clusters and designating route reflectors to handle the distribution of routing updates.

Implementing BGP route reflection brings several advantages to large-scale networks. Firstly, it reduces the number of peering sessions required, resulting in simplified network management and reduced resource consumption. Moreover, route reflection enhances scalability by eliminating the need for full-mesh configurations, enabling networks to accommodate more routers. Additionally, route reflectors improve convergence time by propagating routing updates more efficiently.
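The reduction in peering sessions is easy to quantify. A full iBGP mesh of n routers needs n(n-1)/2 sessions. The sketch below compares that with a design in which a small set of route reflectors is fully meshed and every other router peers with each reflector; that particular layout, and the router counts, are assumptions for the example.

```python
def full_mesh_sessions(routers: int) -> int:
    """iBGP sessions when every router peers with every other router."""
    return routers * (routers - 1) // 2


def route_reflector_sessions(routers: int, reflectors: int) -> int:
    """Sessions when reflectors are meshed and each client peers with all of them."""
    clients = routers - reflectors
    return full_mesh_sessions(reflectors) + reflectors * clients


print(full_mesh_sessions(20))           # 190
print(route_reflector_sessions(20, 2))  # 37 (1 between reflectors + 2 * 18)
print(full_mesh_sessions(100))          # 4950
print(route_reflector_sessions(100, 2)) # 197
```

The full-mesh count grows quadratically with the number of routers, while the route reflector design grows roughly linearly.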

Understanding sFlow

sFlow is a network monitoring technology that provides real-time visibility into network traffic. It samples packets flowing through network devices, allowing administrators to analyze and optimize network performance. By capturing data at wire speed, sFlow offers granular insights into traffic patterns, application behavior, and potential bottlenecks.
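Because sFlow samples roughly one packet in every N rather than capturing everything, totals are estimated by scaling the samples back up by the sampling rate. The following sketch shows that scaling step only; the sampling rate of 4096 and the frame lengths are illustrative values, and the function name is hypothetical.

```python
def estimate_totals(
    sampled_frame_lengths: list[int], sampling_rate: int
) -> tuple[int, int]:
    """Scale sampled packets up to estimated (packets, bytes) totals."""
    packets = len(sampled_frame_lengths) * sampling_rate
    total_bytes = sum(sampled_frame_lengths) * sampling_rate
    return packets, total_bytes


# Three sampled frames at a 1-in-4096 sampling rate
packets, total_bytes = estimate_totals([1500, 64, 1500], 4096)
print(packets)      # 12288 estimated packets
print(total_bytes)  # 12550144 estimated bytes
```

The estimate becomes more accurate as the number of samples grows, which is why sampling works well on busy, high-speed links.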

Cisco NX-OS, a robust operating system for Cisco network switches, fully supports sFlow. Enabling sFlow on Cisco NX-OS can provide several key benefits. First, it facilitates proactive network monitoring by continuously collecting data on network flows. This real-time visibility enables administrators to swiftly identify and address performance issues, ensuring optimal network uptime.

sFlow on Cisco NX-OS equips network administrators with powerful troubleshooting and analysis capabilities. The technology provides detailed information on packet loss, latency, and congestion, allowing for swift identification and resolution of network anomalies. Additionally, sFlow offers insights into application-level performance, enabling administrators to optimize resource allocation and enhance user experience.

Capacity planning is a critical aspect of network management. By leveraging sFlow on Cisco NX-OS, organizations can accurately assess network utilization and plan for future growth. The detailed traffic statistics provided by sFlow enable administrators to make informed decisions about network upgrades, ensuring sufficient capacity to meet evolving demands.

Overcoming Challenges: Power and Cooling

As data centers strive to achieve faster speeds, they face significant power consumption and cooling challenges. High-speed processing and networking equipment generate substantial heat, necessitating robust cooling mechanisms to maintain optimal performance. Efficient cooling solutions, such as liquid cooling and advanced airflow management, are essential to prevent overheating and ensure data centers can operate reliably at peak speeds.

Liquid Cooling

In the relentless pursuit of higher computing power, data centers turn to liquid cooling as a game-changing solution. By immersing servers in a specially designed coolant, heat dissipation becomes significantly more efficient. This technology allows data centers to push the boundaries of performance and offers a greener alternative by reducing energy consumption.

Artificial Intelligence Optimization

Artificial Intelligence (AI) is making its mark in data center performance. By leveraging machine learning algorithms, data centers can optimize their operations in real-time. AI-driven predictive analysis helps identify potential bottlenecks and enables proactive maintenance, improving efficiency and reducing downtime.

Edge Computing

With the exponential growth of Internet of Things (IoT) devices, data processing at the network’s edge has become necessary. Edge computing brings computation closer to the data source, reducing latency and bandwidth requirements. This innovative approach enhances data center performance and enables faster response times and improved user experiences.

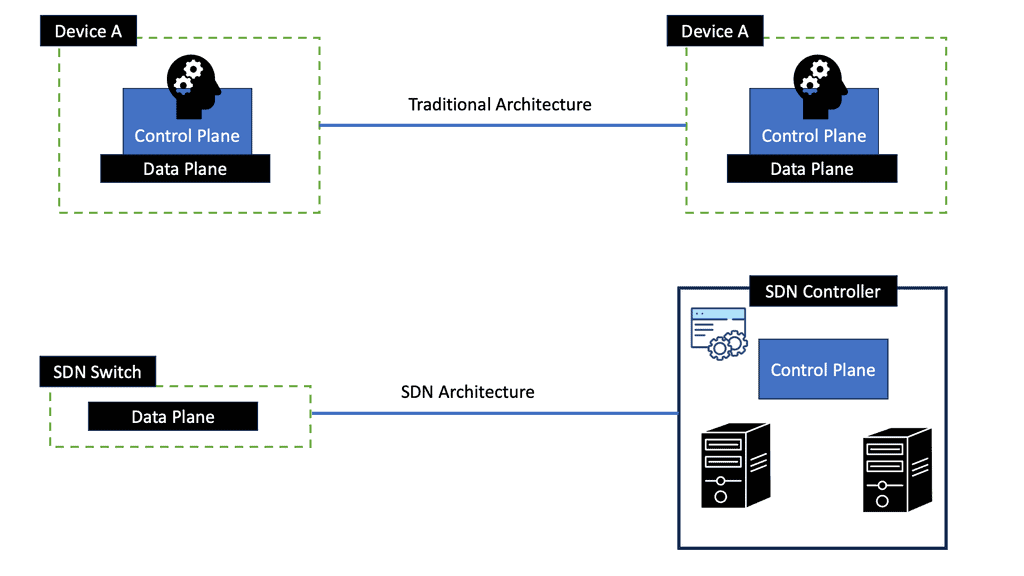

Software-Defined Networking

Software-defined networking (SDN) redefines how data centers manage and control their networks. SDN allows centralized network management and programmability by separating the control plane from the data plane. This flexibility enables data centers to dynamically allocate resources, optimize traffic flows, and adapt to changing demands, enhancing performance and scalability.

Switch Fabric Architecture

Switch fabric architecture is crucial to minimizing packet loss and increasing data center performance. In a multi-gigabit (10GE to 100GE) data center network, it takes only milliseconds of congestion to cause buffer overruns and packet loss. Selecting the correct platforms that match the traffic mix and profiles is an essential phase of data center design. Specific switch fabric architectures are better suited to certain design requirements. Network performance has a direct relationship with switching fabric architecture.
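A back-of-the-envelope calculation shows why milliseconds matter. The sketch below estimates how long a buffer can absorb traffic arriving faster than the egress port can drain it. The 12 MB buffer and the two-into-one 10G scenario are assumed numbers for illustration, not the specification of any platform.

```python
from typing import Optional


def time_to_overflow_us(
    buffer_bytes: int, ingress_gbps: float, egress_gbps: float
) -> Optional[float]:
    """Microseconds until the buffer fills, or None if it never does."""
    excess_gbps = ingress_gbps - egress_gbps
    if excess_gbps <= 0:
        return None  # the egress port keeps up; the buffer does not fill
    # 1 Gbps moves 1000 bits per microsecond
    return buffer_bytes * 8 / (excess_gbps * 1000)


# Two 10G senders bursting into one 10G port with a 12 MB buffer
overflow = time_to_overflow_us(12_000_000, 20, 10)
print(overflow)  # about 9600 microseconds, i.e. under 10 ms

# No congestion: ingress does not exceed egress
print(time_to_overflow_us(12_000_000, 10, 10))  # None
```

At 100GE speeds the same buffer fills proportionally faster, which is why buffer depth has to be matched to the expected traffic profile.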

The data center switch fabric aims to optimize end-to-end fabric latency with the ability to handle traffic peaks. Environments should be designed to send data as fast as possible, providing better application and storage performance. For these performance metrics to be met, several requirements must be set by the business and the architect team.


Before you proceed, you may find the following posts helpful for background information:

- Dropped Packet Test

- Data Center Topologies

- Active Active Data Center Design

- IP Forwarding

- Data Center Fabric


Back to basics with network testing.

Several key factors influence data center performance:

a. Uptime and Reliability: Downtime can have severe consequences for businesses, resulting in financial losses, damaged reputation, and even legal implications. Therefore, data centers strive to achieve high uptime and reliability, minimizing disruptions to operations.

b. Speed and Responsiveness: Data centers must deliver fast and responsive services with increasing data volumes and user expectations. Slow response times can lead to dissatisfied customers and hamper business productivity.

c. Scalability: As businesses grow, their data requirements increase. A well-performing data center should be able to scale seamlessly, accommodating the organization’s expanding needs without compromising on performance.

d. Energy Efficiency: Data centers consume significant amounts of energy. Optimizing energy usage through efficient cooling systems, power management, and renewable energy sources can reduce costs and contribute to a sustainable future.

Impact on Businesses:

Data center performance directly impacts businesses in several ways:

a. Enhanced User Experience: A high-performing data center ensures faster data access, reduced latency, and improved website/application performance. This translates into a better user experience, increased customer satisfaction, and higher conversion rates.

b. Business Continuity: Data centers with robust performance measures, including backup and disaster recovery mechanisms, help businesses maintain continuity despite unexpected events. This ensures that critical operations can continue without significant disruption.

c. Competitive Advantage: In today’s competitive landscape, businesses that leverage the capabilities of a well-performing data center gain a competitive edge. Processing and analyzing data quickly can lead to better decision-making, improved operational efficiency, and innovative product/service offerings.

Proactive Testing

Although the usefulness of proactive testing is well known, most organizations do not vigorously and methodically stress their network components in the ways that their applications will. As a result, testing that is too infrequent returns significantly less value than the time and money spent on it. In addition, many existing corporate testing facilities are underfunded and eventually shut down because of a lack of experience and guidance, limited resources, and poor productivity from previous test efforts. That said, the need for testing remains.

To understand your data center performance, you should carry out planned system testing. System testing is a proven approach for validating the existing network infrastructure and planning its future. In a modern enterprise network, achieving a high level of availability is only possible with some formalized testing.

Different Types of Switching

Cut-through switching

Cut-through switching lets a switch start forwarding a frame before the whole frame has been received. Switches process frames using a “first bit in, first bit out” method.

When a switch receives a frame, it makes a forwarding decision based on the destination address, known as destination-based forwarding. On Ethernet networks, the destination address is the first field following the start-of-frame delimiter. Because the destination address sits at the start of the frame, the switch can determine the egress port as soon as that field arrives, i.e., there is no need to wait for the entire frame to be received before forwarding begins.

Buffer pressure at the leaf switch uplink and the corresponding spine port is about the same, resulting in the same buffer size between these two network points. However, increasing buffer size at the leaf layer is more critical, as more cases of speed mismatch occur there with incast (many-to-one) traffic and oversubscription. Speed mismatch, incast traffic, and oversubscription are the leading causes of buffer utilization.

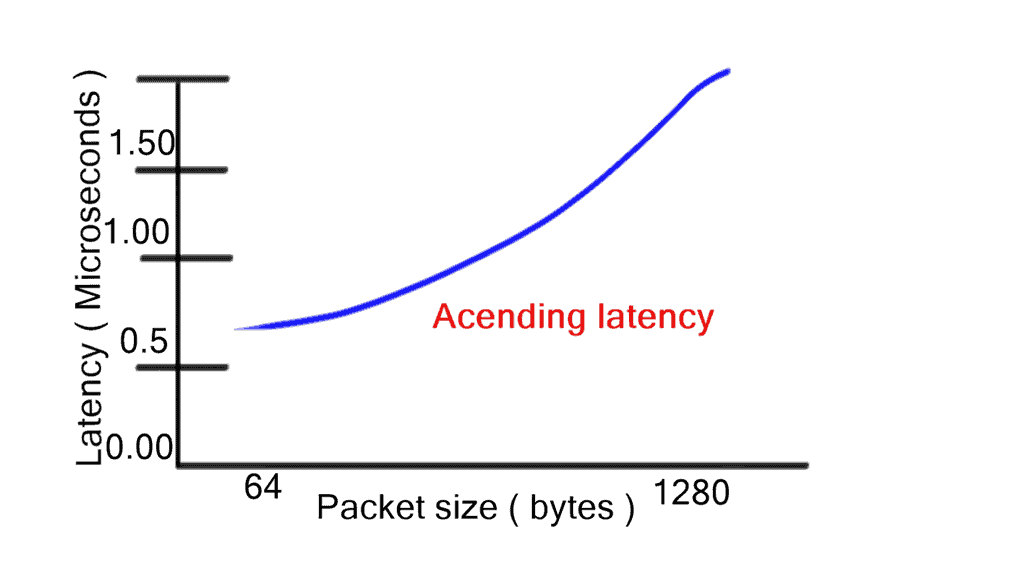
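The oversubscription and incast pressure described above can be estimated with simple arithmetic. The sketch below is a rough model under stated assumptions: the function names, the example port counts, and the burst size are hypothetical, and the incast estimate assumes every sender runs at the same line rate as the egress port and starts sending at the same moment.

```python
def oversubscription_ratio(downlink_ports: int, downlink_gbps: float,
                           uplink_ports: int, uplink_gbps: float) -> float:
    """Ratio of host-facing bandwidth to fabric-facing bandwidth on a leaf."""
    upstream = uplink_ports * uplink_gbps
    if upstream <= 0:
        raise ValueError("uplink capacity must be positive")
    return (downlink_ports * downlink_gbps) / upstream


def incast_peak_queue_bytes(senders: int, burst_bytes: int) -> int:
    """Peak egress queue depth for a synchronized many-to-one burst.

    While each sender delivers burst_bytes, the egress port drains only
    one burst's worth, so the rest must sit in the buffer.
    """
    if senders < 1:
        raise ValueError("at least one sender is required")
    return (senders - 1) * burst_bytes


# Hypothetical leaf: 48 x 10G host ports, 4 x 40G uplinks.
print(oversubscription_ratio(48, 10, 4, 40))   # 3.0, i.e. 3:1
# Hypothetical incast: 8 servers each answer with a 64 KB burst.
print(incast_peak_queue_bytes(8, 64_000))      # 448000
```

Real traffic is rarely this synchronized, so treat the incast figure as an upper-bound style estimate rather than a sizing rule.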

Store-and-forwarding

Store-and-forward switching works in contrast to cut-through switching: the entire frame is stored before the forwarding decision is made, so latency increases with packet size. One of the main benefits of cut-through is consistent latency across packet sizes, which is good for network performance. However, there are reasons to inspect the entire frame using the store-and-forward method. Because the switch receives the whole frame first, it can a) detect collision fragments and b) verify the frame check sequence so that no frames with errors are propagated.
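The difference in latency behavior can be illustrated by comparing how long each mode must wait before it can start forwarding. This is a minimal sketch that models only the receive (serialization) time on the ingress link; the function names are hypothetical, and the assumption that a cut-through switch decides after the 14-byte Ethernet header is a simplification, since real platforms may read further into the frame.

```python
def serialization_delay_us(num_bytes: int, link_gbps: float) -> float:
    """Time to receive num_bytes on a link, in microseconds."""
    return num_bytes * 8 / (link_gbps * 1_000)


def store_and_forward_wait_us(frame_bytes: int, link_gbps: float) -> float:
    """Store-and-forward waits for the entire frame before forwarding."""
    return serialization_delay_us(frame_bytes, link_gbps)


def cut_through_wait_us(link_gbps: float, lookup_bytes: int = 14) -> float:
    """Cut-through waits only for the bytes needed for the lookup.

    lookup_bytes=14 (the Ethernet header) is an assumption for illustration.
    """
    return serialization_delay_us(lookup_bytes, link_gbps)


for frame_bytes in (64, 512, 1500, 9000):
    sf = store_and_forward_wait_us(frame_bytes, 10)
    ct = cut_through_wait_us(10)
    print(f"{frame_bytes:>5} B  store-and-forward {sf:.4f} us  cut-through {ct:.4f} us")
```

At 10 Gbps, a 1500-byte frame takes 1.2 microseconds to receive in full, while the cut-through wait stays constant whatever the frame size.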

Cut-through switching is a significant data center performance improvement for switching architectures. Regardless of packet size, cut-through reduces the latency of the lookup-and-forwarding decision. Low and predictable latency results in an optimized fabric and smaller buffer requirements. Selecting the correct platform with adequate interface buffer space is integral to data center design. For example, leaf and spine switches have different buffer size requirements. In addition, buffer utilization varies at other points in the network.

Switch Fabric Architecture

The main switch architectures used are the crossbar and the SoC. Either architecture, single-stage or multistage, can be combined with either cut-through or store-and-forward switching.

Crossbar Switch Fabric Architecture

In a crossbar design, every input is uniquely connected to every output through a “crosspoint.” With a crosspoint design, the crossbar fabric is strictly non-blocking and provides lossless transport. In addition, it has a feature known as overspeed, which is used to achieve 100% throughput (line rate) capacity for each port.

Overspeed clocks the switch fabric several times faster than the physical port interface connected to the fabric. Crossbar and cut-through switching enable line-rate performance with low latency regardless of packet size.

The Cisco Nexus 6000 and 5000 series are cut-through switches with a crossbar fabric. The Nexus 7000 uses a store-and-forward crossbar-switching mechanism with large output-queuing memory, or egress buffer.

Because store-and-forward crossbar designs offer large memory, they provide large table sizes for MAC learning. Because of these large tables, port density is lower than in other switch categories. The Nexus 7000 series with an M-series line card exemplifies this architecture.

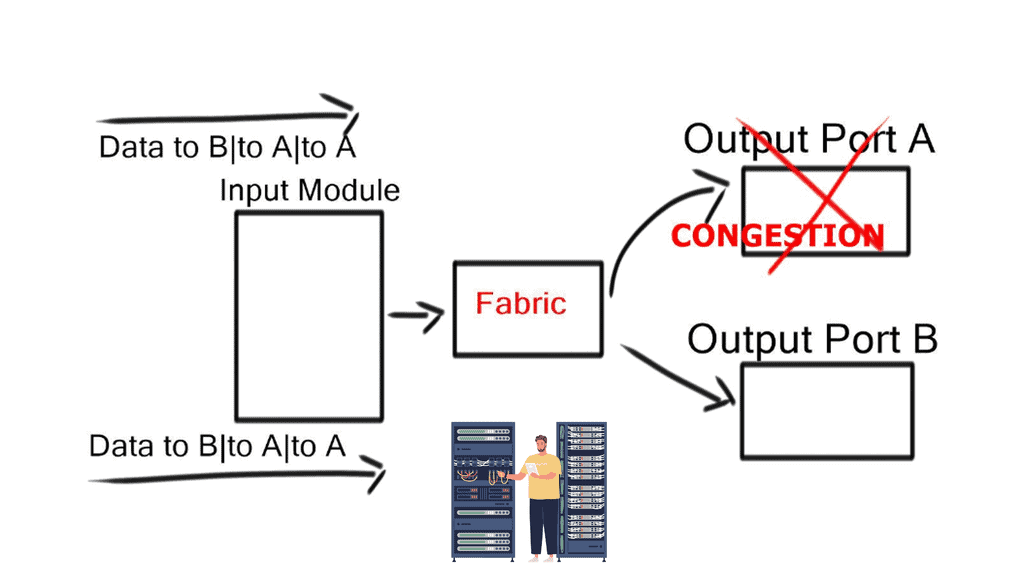

Head-of-line blocking (HOLB)

When frames for different output ports arrive on the same ingress port, a frame destined for a free output port can be blocked by a frame in front of it that is destined for a congested output port. For example, a large FTP transfer may share the same path across the internal switching fabric with a request-response protocol such as HTTP, which handles short transactions.

This causes the frame destined for the free port to wait in a queue until the frame in front of it can be processed. This idle time degrades performance and can create out-of-order frames.

Virtual output queues (VoQ)

Instead of having a single per-class queue on an output port, the hardware implements a per-class virtual output queue (VoQ) on input ports. Received packets stay in the virtual output queue on the input line card until the output port is ready to accept another packet. With VoQ, data centers no longer experience HOLB. VoQ is effective at absorbing traffic loads at congestion points in the network.

VoQ moves congestion to ingress queuing, before traffic reaches the switch fabric. Packets are held in the ingress port buffer until the egress queue frees up.

VoQ is not the same as ingress queuing. Ingress queuing occurs when the total ingress bandwidth exceeds backplane capacity and actual congestion occurs, which ingress-queuing policies would govern. VoQ generates a virtual congestion scenario at a node before the switching fabric. VoQs are governed by egress queuing policies, not ingress policies.
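The contrast between head-of-line blocking and virtual output queues can be shown with a toy, time-slotted model of a single input port. This is only a sketch: the function names, the one-packet-per-slot rule, and the `busy` map of congested slots are assumptions made for illustration, and real switches use hardware arbiters rather than this simple loop.

```python
from collections import deque


def fifo_input(packets, busy, slots):
    """Single FIFO at the input: only the head packet may be forwarded."""
    queue = deque(packets)
    sent = []
    for slot in range(slots):
        # If the head's output is congested, everything behind it waits too.
        if queue and slot not in busy.get(queue[0], set()):
            sent.append((slot, queue.popleft()))
    return sent


def voq_input(packets, busy, slots):
    """One virtual output queue per destination at the same input port."""
    queues = {}
    for dest in packets:
        queues.setdefault(dest, deque()).append(dest)
    sent = []
    for slot in range(slots):
        # Forward one packet per slot from any queue whose output is free.
        for dest, queue in queues.items():
            if queue and slot not in busy.get(dest, set()):
                sent.append((slot, queue.popleft()))
                break
    return sent


# Output "A" is congested during slots 0-2; output "B" is always free.
arrivals = ["A", "B", "B", "B"]
congestion = {"A": {0, 1, 2}}
print(fifo_input(arrivals, congestion, 4))  # [(3, 'A')]
print(voq_input(arrivals, congestion, 4))   # [(0, 'B'), (1, 'B'), (2, 'B'), (3, 'A')]
```

With a single FIFO, the three packets for the free port B are stuck behind the packet for A. With per-destination queues, they leave immediately and only the packet for A waits.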

Centralized shared memory (SoC)

SoC is another type of data center switch architecture. Switches with lower bandwidth and port density usually have SoC architectures. SoC differs from the crossbar in that all inputs and outputs share all memory. This inherently reduces the probability of frame drops, because unused buffers are given to ports under pressure from increasing loads.
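The benefit of a shared buffer can be sketched by comparing it with a fixed per-port split of the same memory. Everything below is a hypothetical model: the class, the cell counts, and the port names are invented for the example, and real switch chips typically add per-port thresholds so that one port cannot take the whole pool.

```python
class SharedBuffer:
    """A single pool of buffer cells shared by all ports."""

    def __init__(self, total_cells: int):
        self.total_cells = total_cells
        self.used = {}  # port name -> cells currently held

    def free_cells(self) -> int:
        return self.total_cells - sum(self.used.values())

    def enqueue(self, port: str, cells: int) -> bool:
        """Accept the burst if the shared pool has room, else drop it."""
        if cells > self.free_cells():
            return False
        self.used[port] = self.used.get(port, 0) + cells
        return True

    def dequeue(self, port: str, cells: int) -> None:
        """Return cells to the pool once frames have been transmitted."""
        self.used[port] = max(0, self.used.get(port, 0) - cells)


def static_partition_accepts(total_cells: int, ports: int, burst_cells: int) -> bool:
    """With a fixed split, a port can use only its own equal share."""
    return burst_cells <= total_cells // ports


# Hypothetical switch: 1000 cells, 4 ports, a 600-cell burst on one port.
shared = SharedBuffer(1000)
print(shared.enqueue("eth1", 600))              # True: idle ports' cells are borrowed
print(static_partition_accepts(1000, 4, 600))   # False: the fixed share is 250 cells
```

The same burst that a static split would drop fits in the shared pool because the idle ports are not using their share.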

Data center performance is a critical aspect of modern businesses. It impacts the overall user experience, business continuity, and competitive advantage. By investing in high-performing data centers, organizations can ensure seamless operations, improve productivity, and stay ahead in a digital-first world. As technology continues to evolve, data center performance will remain a key factor in shaping the success of businesses across various industries.

Summary: Data Center Performance

In today’s digital age, data centers play a pivotal role in storing, processing, and managing massive amounts of information. Optimizing data center performance becomes paramount as businesses continue to rely on data-driven operations. In this blog post, we explored key strategies and considerations to unlock the full potential of data centers.

Understanding Data Center Performance

Data center performance refers to the efficiency, reliability, and overall capability of a data center to meet its users’ demands. It encompasses various factors, including processing power, storage capacity, network speed, and energy efficiency. By comprehending the components of data center performance, organizations can identify areas for improvement.

Infrastructure Optimization

A solid infrastructure foundation is crucial to enhancing data center performance. This includes robust servers, high-speed networking equipment, and scalable storage systems. Data centers can handle increasing workloads and deliver seamless user experiences by investing in the latest technologies and ensuring proper maintenance.

Virtualization and Consolidation

Virtualization and consolidation techniques offer significant benefits in terms of data center performance. By virtualizing servers, businesses can run multiple virtual machines on a single physical server, maximizing resource utilization and reducing hardware costs. Consolidation, in turn, involves combining multiple servers or data centers into a centralized infrastructure, which streamlines management and reduces operational expenses.

Efficient Cooling and Power Management

Data centers consume substantial energy, leading to high operational costs and environmental impact. Implementing efficient cooling systems and power management practices is crucial for optimizing data center performance. Advanced cooling technologies, such as liquid cooling or hot aisle/cold aisle containment, can significantly improve energy efficiency and reduce cooling expenses.

Monitoring and Analytics

Continuous monitoring and analytics are essential to maintain and improve data center performance. By leveraging advanced monitoring tools and analytics platforms, businesses can gain insights into resource utilization, identify bottlenecks, and proactively address potential issues. Real-time monitoring enables data center operators to make data-driven decisions and optimize performance.

Conclusion:

In the ever-evolving landscape of data-driven operations, data center performance remains a critical factor for businesses. By understanding the components of data center performance, optimizing infrastructure, embracing virtualization, implementing efficient cooling and power management, and leveraging monitoring and analytics, organizations can unlock the true potential of their data centers. With careful planning and proactive measures, businesses can ensure seamless operations, enhanced user experiences, and a competitive edge in today’s digital world.