Zero Trust SDP

The foundations that support our systems were built with connectivity, not security, as an essential feature. TCP connects before it authenticates. Security policy and user access based on IP addresses lack context and allow architectures with overly permissive access. The likely result is a brittle security posture, which is what drives the need for Zero Trust SDP and SDP VPN.

Our environment has changed considerably, leaving traditional network and security architectures vulnerable to attack. The threat landscape is unpredictable, and external threats arrive from all over the world. However, the threats are not limited to external ones: there are insider threats within a user group and insider threats across user group boundaries.

Therefore, we must find ways to decouple security from the physical network and to decouple application access from the network. To do this, we must change our mindset and invert the security model to a Zero Trust security strategy. Software Defined Perimeter (SDP) is an extension of Zero Trust Networking (ZTN) and a revolutionary development: it provides an updated approach that addresses gaps current security architectures fail to cover.

SDP is often referred to as Zero Trust Access (ZTA). Safe-T packages its access control software as Safe-T Zero+. Safe-T offers a phased deployment model, enabling you to migrate progressively to a zero-trust network architecture while lowering the risk of technology adoption. Safe-T’s Zero+ model is flexible enough to meet today’s diverse hybrid IT requirements, and it satisfies the zero-trust principles used to combat today’s network security challenges.

Network Challenges

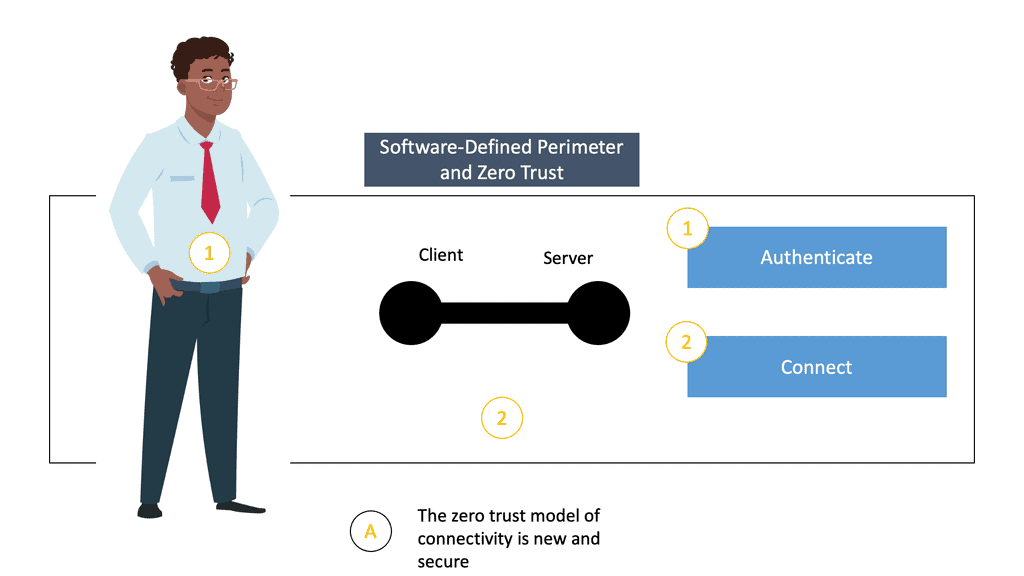

- Connect First and Then Authenticate

TCP has a weak security foundation. When a client wants to communicate with an application, it first sets up a connection, and only after the connection stage is complete can authentication take place. With this model, we have no idea who the client is until it has completed the connection phase, and the requesting client may not be trustworthy.
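To make the connect-then-authenticate ordering concrete, here is a minimal sketch using only Python’s standard `socket` and `threading` modules on localhost. The token check is a hypothetical stand-in for a real authentication protocol; the point is only that the TCP handshake has already completed by the time `accept()` returns, before any credential can even be read.

```python
import socket
import threading


def serve_once(listener: socket.socket, events: list) -> None:
    """Accept one client, then (and only then) check its credential."""
    conn, addr = listener.accept()
    # The TCP handshake is already complete: the server holds a live
    # connection but still knows nothing about who the client is.
    events.append(f"connected from {addr[0]}")
    with conn:
        token = conn.recv(1024)
        # Identity can only be checked now, after the connection exists.
        # b"valid-token" is a hypothetical stand-in for a real credential.
        if token == b"valid-token":
            events.append("authenticated")
            conn.sendall(b"welcome")
        else:
            events.append("rejected")
            conn.sendall(b"denied")


def run_demo(token: bytes) -> tuple:
    """Connect to a local server, send `token`, and return (events, reply)."""
    events: list = []
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen()
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_once, args=(listener, events))
    server.start()
    # Any client, trusted or not, completes the TCP connection first.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(token)
        reply = client.recv(1024)
    server.join()
    listener.close()
    return events, reply


if __name__ == "__main__":
    print(run_demo(b"bogus-token"))
```

Even the rejected client reached the point of holding an open connection to the service, which is exactly the exposure SDP aims to remove.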

- The Network Perimeter

We began with static domains, in which a fixed perimeter separates internal and external segments. Public IP addresses are assigned to external hosts and private addresses to internal ones. A host with a private IP address is considered more trustworthy than one with a public IP address, so trusted hosts operate inside the perimeter and untrusted hosts outside it. The significant problem is that an IP address carries no knowledge of the user, so it cannot be used to assign and validate trust.
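The weakness of address-based trust is easy to see in code. The sketch below uses Python’s standard `ipaddress` module to express a perimeter-style rule that trusts any private source address; the requests and their descriptions are hypothetical, and the rule never looks at who is behind the address.

```python
import ipaddress


def perimeter_trust(source_ip: str) -> bool:
    """Perimeter-style rule: trust any host with a private source address.

    The function has no way to know *who* is behind the address.
    """
    return ipaddress.ip_address(source_ip).is_private


# Hypothetical requests; the "who" field is never consulted by the rule.
requests = [
    ("10.0.0.5", "alice at her office desk"),
    ("10.0.0.99", "malware on a phished laptop"),
    ("8.8.8.8", "alice working remotely"),  # stands in for any public address
]

for ip, who in requests:
    verdict = "trusted" if perimeter_trust(ip) else "untrusted"
    print(f"{ip:<10} {who:<28} -> {verdict}")
```

The malware on the internal laptop is trusted, while the legitimate remote user is not: the decision follows the address, not the identity.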

Today, IT has become more diverse: it supports hybrid architectures with various user types (humans and applications) and a proliferation of connected devices. Cloud adoption has become the norm, and many remote workers access the corporate network from various devices and places.

The perimeter approach no longer reflects the typical topology of users and servers. It was built for a different era, when everything was inside the organization’s walls. Today, organizations increasingly deploy applications in public clouds spread across many geographic locations, remote from the organization’s trusted firewalls and perimeter network. This stretches the network perimeter considerably.

We now have a fluid network perimeter, with data and users located everywhere, so we operate in a completely new environment. Yet the security policy controlling user access is still built for static, corporate-owned devices within the supposedly trusted LAN.

- Lateral Movements

A significant concern with the perimeter approach is that it assumes the internal network can be trusted. However, an estimated 80% of threats come from internal malware or malicious employees, and these often go undetected.

In addition, with the rise of phishing emails, a single unintentional click can give a bad actor broad access. Once on the LAN, bad actors can move laterally from one segment to another, often navigating undetected between or within segments.

Eventually, the bad actor can steal credentials and use them to capture and exfiltrate valuable assets. Even social media accounts can be used for data exfiltration, since firewalls often do not inspect them as a file-transfer mechanism.
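One way to reason about lateral movement is as reachability in a graph of which hosts may open connections to which. The following sketch runs a breadth-first search from a compromised host over two hypothetical topologies (host names are invented for illustration): a flat "trusted" LAN and a micro-segmented one.

```python
from collections import deque


def reachable(graph: dict, start: str) -> set:
    """Breadth-first search: every host an attacker on `start` can reach."""
    seen = {start}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        for neighbor in graph.get(host, set()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen - {start}


# Hypothetical hosts on one LAN.
hosts = ["laptop", "file-server", "hr-db", "payroll", "build-server"]

# Flat "trusted" LAN: every host can open connections to every other host.
flat = {h: set(hosts) - {h} for h in hosts}

# Micro-segmented: the laptop may reach only the file server,
# and the file server may reach nothing else.
segmented = {"laptop": {"file-server"}, "file-server": set()}

print(sorted(reachable(flat, "laptop")))       # ['build-server', 'file-server', 'hr-db', 'payroll']
print(sorted(reachable(segmented, "laptop")))  # ['file-server']
```

In the flat network one phished laptop exposes every other host; segmentation shrinks the blast radius to what that user actually needs.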

- Issues with the Virtual Private Network (VPN)

With traditional VPN access, the tunnel extends the network between the client’s device and the application’s location. VPN rules are static and do not change dynamically as the level of trust in a given device changes. They carry only network information, which is a significant limitation.

From a security standpoint, therefore, traditional VPN access gives clients broad network-level access, which leaves the network susceptible to undetected lateral movement. Remote users are authenticated and authorized, but once admitted to the LAN they have coarse-grained access. This creates a high level of risk, as undetected malware on a user’s device can spread to the internal network.

Another significant challenge is that VPNs generate administrative complexity and cannot easily handle cloud or multi-network environments. They require users to install VPN client software and to know where the application they are accessing is located, and users may have to switch VPN clients to reach applications in different locations. In a nutshell, traditional VPNs are complex for administrators to manage and for users to operate.

Traditional VPNs also tend to deliver a poor user experience, because user traffic must be backhauled to a regional data center, which adds latency and bandwidth costs.

In recent years, torrenting has become increasingly popular among computer users who wish to download movies, books, and songs. Without having a VPN, you could risk your privacy and security. It is also important to note that you should be very careful when downloading files to your computer as they could cause more harm than good.

Can Zero Trust Access be the Solution?

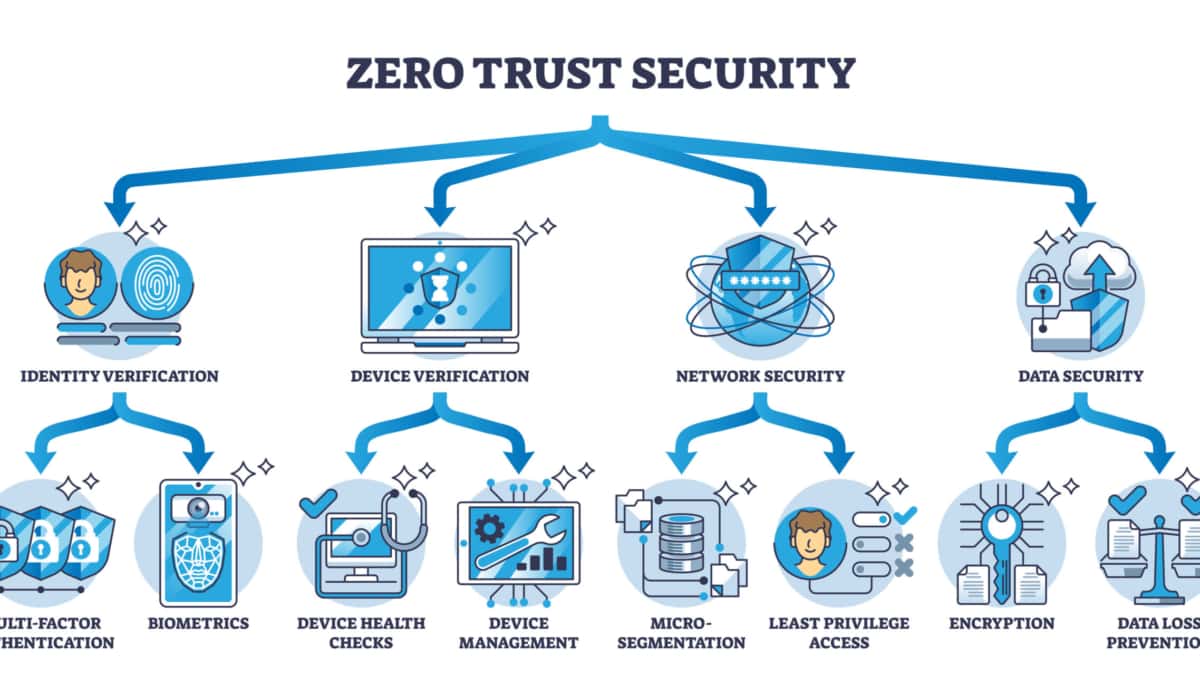

The main principle of Zero Trust Network Design is that nothing should be trusted, regardless of whether the connection originates inside or outside the network perimeter. Today, we have no reason to trust any user, device, or application by default. Some companies try to reduce exposure with group-based controls, such as Office 365 groups, that allow and disallow specific permissions for users and devices. It is well known that you cannot protect what you cannot see, but the converse also holds: you cannot attack what you cannot see. ZTA makes the application and the infrastructure undetectable to unauthorized clients, creating an invisible network.

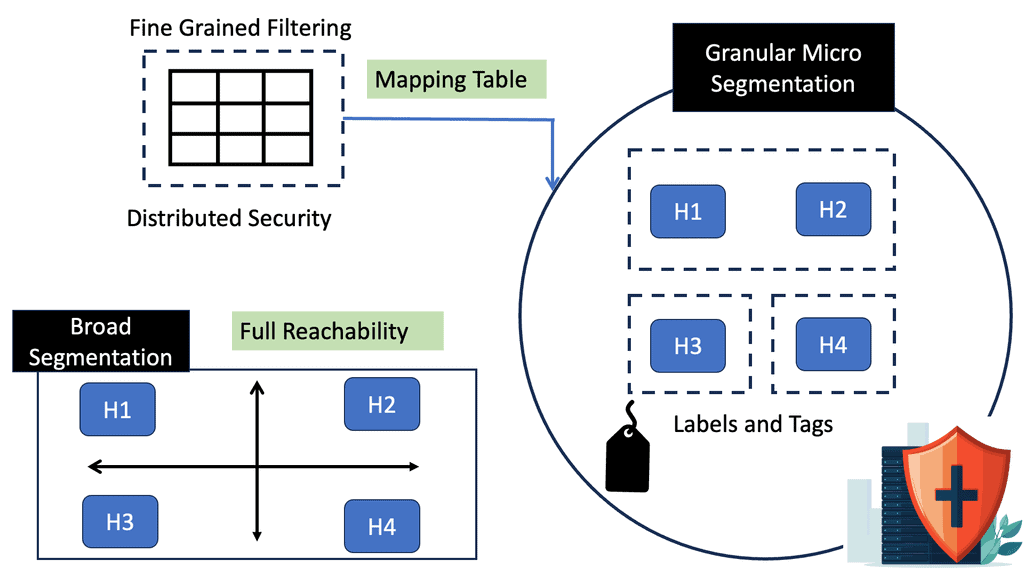

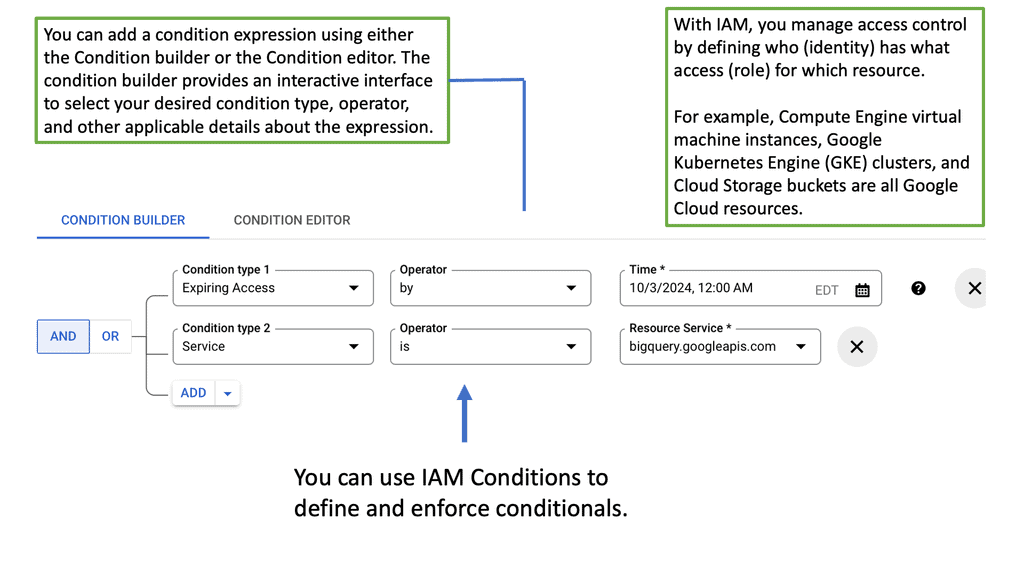

Ideally, application access should be based on contextual parameters, such as who the user is, where they are located, and the security posture of their device, followed by continuous assessment of the session. This moves us from a network-centric to a user-centric, connection-based approach to security. Security enforcement should be based on user context and include policies that matter to the business, rather than policies based on subnets that have no business meaning. Authentication workflows should include context-aware data, such as device ID, geographic location, and the time and day when the user requests access.
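A context-aware access decision can be sketched as a function of the request’s context rather than its subnet. Everything below is a hypothetical illustration: the field names, the known-device list, the allowed countries, and the working hours are assumptions, and the posture and geolocation signals are presumed to come from elsewhere.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class AccessRequest:
    user: str
    device_id: str
    device_compliant: bool  # assumed posture signal, e.g. disk encrypted and OS patched
    country: str            # ISO country code from an assumed geolocation lookup
    weekday: int            # 0 = Monday ... 6 = Sunday
    local_time: time


# Hypothetical policy data; every name and value here is illustrative.
KNOWN_DEVICES = {"alice": {"laptop-123"}}
ALLOWED_COUNTRIES = {"US", "GB"}
WORK_HOURS = (time(7, 0), time(19, 0))


def decide(req: AccessRequest) -> tuple:
    """Return (allowed, reason) from the request context rather than its subnet."""
    if req.device_id not in KNOWN_DEVICES.get(req.user, set()):
        return False, "unknown device"
    if not req.device_compliant:
        return False, "device posture check failed"
    if req.country not in ALLOWED_COUNTRIES:
        return False, "location not allowed"
    start, end = WORK_HOURS
    if req.weekday >= 5 or not start <= req.local_time <= end:
        return False, "outside permitted days or hours"
    return True, "allowed"


req = AccessRequest("alice", "laptop-123", True, "US", 2, time(10, 30))
print(decide(req))  # (True, 'allowed')
```

Because the decision is a pure function of the context, a gateway could re-run it during a session (for example, when a device’s posture changes) as one simple way to approximate continuous assessment.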

It’s not good enough to provide network access. We must provide granular application access with a dynamic segment of one, in which an application microsegment is created for every incoming request. Micro-segmentation makes it possible to control access by subdividing the larger network into small, secure application micro-perimeters inside the network. This abstraction layer locks down lateral movement. Zero trust access also implements a policy of least privilege, enforcing controls so that users can access only the resources they need to perform their tasks.
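The "segment of one" idea can be sketched as creating, per request, an object that binds exactly one user to exactly one application backend, and only if a least-privilege entitlement allows it. The entitlement table, application names, and internal addresses below are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical least-privilege entitlements: each user gets only the apps their role needs.
ENTITLEMENTS = {
    "alice": {"crm"},
    "bob": {"git", "build-server"},
}

# Hypothetical internal addresses; these are never exposed to clients.
APP_BACKENDS = {
    "crm": "10.0.5.10:443",
    "git": "10.0.7.20:443",
    "build-server": "10.0.7.30:8080",
}


@dataclass(frozen=True)
class Segment:
    """A 'segment of one': one user, one application, one backend."""
    user: str
    app: str
    backend: str


def open_segment(user: str, app: str) -> Optional[Segment]:
    """Create a fresh segment per request, or None if the user is not entitled."""
    if app not in ENTITLEMENTS.get(user, set()):
        return None
    return Segment(user, app, APP_BACKENDS[app])


print(open_segment("alice", "crm"))  # Segment(user='alice', app='crm', backend='10.0.5.10:443')
print(open_segment("alice", "git"))  # None
```

A segment carries a single backend and nothing else, so there is no broader network for the user to move across; reaching another application requires a new, separately authorized request.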

Characteristics of Safe-T

Safe-T has three main pillars to provide a secure application and file access solution:

1) An architecture that implements zero trust access,

2) A proprietary secure channel that enables users to access/share sensitive files remotely and

3) User behavior analytics.

Safe-T’s SDP architecture is designed to substantially implement the essential capabilities delineated by the Cloud Security Alliance (CSA) architecture. Safe-T’s Zero+ is built using these main components:

The Safe-T Access Controller is the centralized control and policy enforcement engine that enforces end-user authentication and access. It acts as the control layer, governing the flow between end-users and backend services.

Second, the Access Gateway acts as a front end for all the backend services published to an untrusted network. Third, the Authentication Gateway presents the end user with a pre-configured authentication workflow, provided by the Access Controller, in a clientless web browser. The authentication workflow is a customizable set of authentication steps, which can include 3rd-party IdPs (Okta, Microsoft, DUO Security, etc.) as well as built-in options such as CAPTCHA, username/password, No-Post, and OTP.
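A customizable authentication workflow can be modeled as an ordered list of steps that must all pass. The sketch below is an assumption-laden illustration, not Zero+’s implementation: it models only a password step and an OTP step, assumes the OTP is a time-based code per RFC 6238 (HMAC-SHA1), and uses a hypothetical in-memory user store. CAPTCHA, No-Post, and third-party IdP steps are not modeled.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, for_time: int, digits: int = 6, step: int = 30) -> str:
    """Time-based one-time password per RFC 6238 (HMAC-SHA1 variant)."""
    counter = for_time // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation from RFC 4226
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def _hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical user store for the sketch.
USERS = {
    "alice": {
        "salt": b"demo-salt",
        "pw_hash": _hash_password("correct horse", b"demo-salt"),
        "otp_secret": b"12345678901234567890",
    }
}


def password_step(ctx: dict) -> bool:
    user = USERS.get(ctx.get("user"))
    if user is None:
        return False
    candidate = _hash_password(ctx.get("password", ""), user["salt"])
    return hmac.compare_digest(candidate, user["pw_hash"])


def otp_step(ctx: dict) -> bool:
    user = USERS.get(ctx.get("user"))
    if user is None:
        return False
    expected = totp(user["otp_secret"], ctx["now"])
    return hmac.compare_digest(ctx.get("otp", ""), expected)


def run_workflow(steps: list, ctx: dict) -> tuple:
    """Run steps in order; stop at the first failure and name the failing step."""
    for step in steps:
        if not step(ctx):
            return False, step.__name__
    return True, "all steps passed"
```

Reordering the list, or appending another callable with the same `(ctx) -> bool` shape, changes the workflow without touching the runner, which is one simple way to make the steps configurable.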

Safe-T Zero+ Capabilities

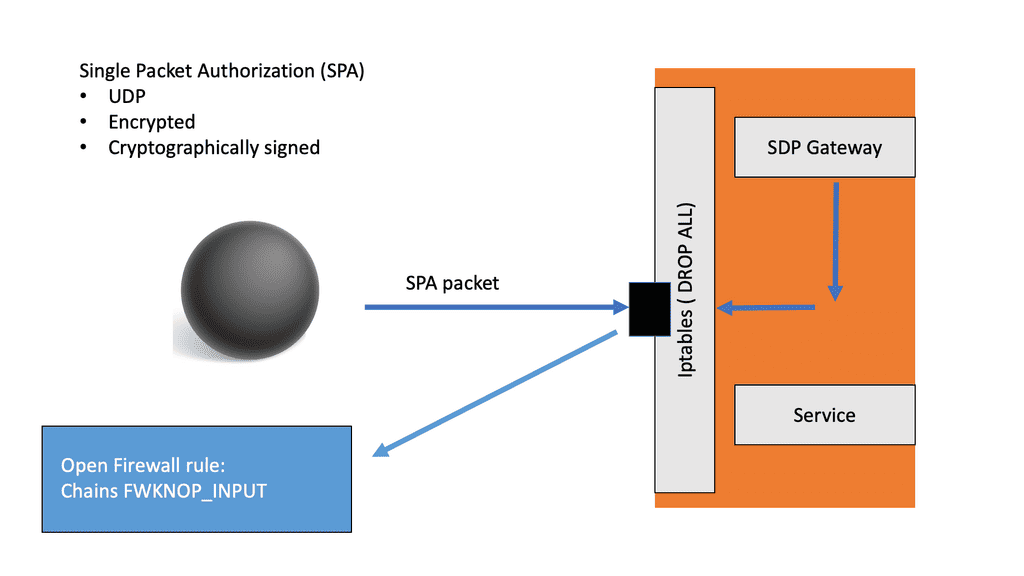

Safe-T Zero+ capabilities are in line with zero trust principles. With Safe-T Zero+, clients requesting access must pass authentication and authorization stages before reaching the resource, and every network resource stays hidden ("blackened") from clients that have not passed these steps. URL rewriting is used to hide the backend services.
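URL rewriting for hiding backends can be sketched as a lookup that maps a public path to an internal URL only for authorized clients. The route table, hostnames, and the rule that unknown and unauthorized paths return the same result are assumptions for illustration, not a description of how Zero+ rewrites URLs.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical mapping from public path prefixes to internal services.
# The internal URLs are never returned to the client.
ROUTES = {
    "/apps/crm/": "http://10.0.5.10:8080/",
    "/apps/wiki/": "http://10.0.6.11/",
}


def rewrite(public_url: str, authorized_apps: set) -> Optional[str]:
    """Map an authorized public URL to its internal URL; otherwise return None.

    Unknown paths and unauthorized apps produce the same result, so a client
    cannot use the responses to discover which backend services exist.
    """
    parts = urlsplit(public_url)
    for prefix, backend in ROUTES.items():
        if parts.path.startswith(prefix):
            app = prefix.strip("/").split("/")[-1]  # "/apps/crm/" -> "crm"
            if app not in authorized_apps:
                return None
            query = f"?{parts.query}" if parts.query else ""
            return backend + parts.path[len(prefix):] + query
    return None


print(rewrite("https://portal.example.com/apps/crm/reports?id=7", {"crm"}))
# http://10.0.5.10:8080/reports?id=7
```

A gateway using this would answer every `None` identically (for example, with the same 404 page), so an unauthenticated probe learns nothing about what sits behind it.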

This reduces the attack surface to an absolute minimum and follows Safe-T’s axiom: if you can’t be seen, you can’t be hacked. In a typical environment, administrators must open ports on the firewall so that users can reach services behind it. This presents a security risk, as a bad actor could access the service directly via the open port and exploit any vulnerabilities.
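A common SDP technique for staying unseen is single packet authorization (SPA), which the Cloud Security Alliance’s SDP specification uses so that a gateway silently drops traffic from clients that have not first proven who they are. The sketch below shows only the verification logic; the packet layout (timestamp, random nonce, client ID, HMAC-SHA256 tag), the 30-second validity window, and the pre-shared per-client key are assumptions, not the CSA wire format. Opening a firewall rule after a valid packet is not modeled.

```python
import hashlib
import hmac
import os
import struct
from typing import Optional

MAX_SKEW = 30  # seconds a packet stays valid (assumed value)
TAG_LEN = 32   # HMAC-SHA256 output size


def make_spa_packet(client_id: bytes, key: bytes, now: int) -> bytes:
    """Client side: timestamp + random nonce + client ID, authenticated with HMAC."""
    body = struct.pack(">Q", now) + os.urandom(16) + client_id
    return body + hmac.new(key, body, hashlib.sha256).digest()


class SpaVerifier:
    """Gateway side: decide whether a single packet may unlock access."""

    def __init__(self, keys: dict) -> None:
        self.keys = keys               # client ID -> shared key (assumed provisioned at enrollment)
        self.seen_nonces: set = set()  # replay protection

    def verify(self, packet: bytes, now: int) -> Optional[bytes]:
        """Return the client ID if the packet is valid, else None (drop silently)."""
        if len(packet) <= 8 + 16 + TAG_LEN:
            return None
        body, tag = packet[:-TAG_LEN], packet[-TAG_LEN:]
        (timestamp,) = struct.unpack(">Q", body[:8])
        nonce, client_id = body[8:24], body[24:]
        key = self.keys.get(client_id)
        if key is None:
            return None
        expected = hmac.new(key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None
        if abs(now - timestamp) > MAX_SKEW or nonce in self.seen_nonces:
            return None
        self.seen_nonces.add(nonce)
        return client_id
```

Because invalid packets get no reply at all, a port scanner sees nothing. In this sketch the nonce set grows without bound; a real gateway would expire entries older than the validity window.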

Another key capability of Safe-T Zero+ is its patented reverse-access technology, which eliminates the need to open incoming ports in the internal firewall. It also eliminates the need to store sensitive data in the demilitarized zone (DMZ). It extends to on-premises, public cloud, and hybrid cloud environments, supporting even the most diverse hybrid IT requirements. Zero+ can be deployed on-premises, as part of Safe-T’s SDP services, or on AWS, Azure, and other cloud infrastructures, thereby protecting both cloud and on-premises resources.
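The post does not describe how reverse access works internally, so the following is only a sketch of the generic "outbound connector" pattern that avoids inbound firewall ports: a component inside the LAN dials out to the gateway, and the gateway pushes client requests down that already-established connection. Everything runs on localhost, the backend is a hypothetical function with no listening port, and one request per connection is handled for brevity; a real connector would keep a persistent, encrypted connection and multiplex requests.

```python
import socket
import threading


def backend_service(request: bytes) -> bytes:
    """Hypothetical internal application. It is a plain function here and
    never listens on any network port."""
    return b"report for " + request


def internal_connector(gateway_addr: tuple) -> None:
    """Runs inside the LAN and dials OUT to the gateway, so the internal
    firewall only needs to allow an outbound connection."""
    with socket.create_connection(gateway_addr) as conn:
        request = conn.recv(1024)
        conn.sendall(backend_service(request))


def gateway_relay(client_request: bytes) -> bytes:
    """Gateway side: wait for the connector's outbound connection, then push
    the client's request down it and relay the answer back."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    connector = threading.Thread(target=internal_connector, args=(listener.getsockname(),))
    connector.start()
    conn, _ = listener.accept()  # this is the connector's outbound connection
    with conn:
        conn.sendall(client_request)
        response = conn.recv(1024)
    connector.join()
    listener.close()
    return response


if __name__ == "__main__":
    print(gateway_relay(b"Q3"))  # b'report for Q3'
```

The only listening socket belongs to the gateway; nothing inside the LAN accepts inbound connections.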

Zero+ also provides user behavior analytics that monitor user actions in protected web applications, allowing the administrator to inspect the details of anomalous behavior. Offering a single source for logging also makes forensic assessment easier.
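User behavior analytics can take many forms; the post does not say which method Zero+ uses. As a deliberately simple stand-in, the sketch below flags users whose activity today is more than three standard deviations above their own historical mean (a one-sided z-score test). The metric (daily file downloads) and all numbers are hypothetical.

```python
from statistics import mean, pstdev


def flag_anomalies(history: dict, today: dict, threshold: float = 3.0) -> list:
    """Flag users whose count today is more than `threshold` standard deviations
    above their own historical mean (a simple one-sided z-score test)."""
    flagged = []
    for user, counts in history.items():
        mu = mean(counts)
        sigma = pstdev(counts) or 1.0  # avoid dividing by zero for perfectly flat history
        z = (today.get(user, 0) - mu) / sigma
        if z > threshold:
            flagged.append(user)
    return sorted(flagged)


# Hypothetical daily file-download counts over five days, then today's counts.
history = {"alice": [40, 42, 38, 41, 39], "bob": [10, 12, 11, 9, 13]}
today = {"alice": 43, "bob": 250}
print(flag_anomalies(history, today))  # ['bob']
```

Comparing each user against their own baseline, rather than a global threshold, is what lets a modest spike from a normally quiet account stand out while a busy user’s routine volume does not.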

Finally, Zero+ provides a unique, native HTTPS-based file access solution for the NTFS file system, replacing the vulnerable SMB protocol. Users can also create a standard mapped network drive in Windows Explorer, which provides a secure, encrypted, access-controlled channel to shared backend resources.

Zero Trust Access: Deployment Strategy

Safe-T customers can select the architecture that meets their on-premises or cloud-based requirements.

There are three options:

i) The customer deploys three VMs: 1) Access Controller, 2) Access Gateway, and 3) Authentication Gateway. The VMs can be deployed on-premises in an organization’s LAN, on Amazon Web Services (AWS) public cloud, or on Microsoft’s Azure public cloud.

ii) The customer deploys the 1) Access Controller VM and 2) Access Gateway VM on-premises in their LAN. The customer deploys the Authentication Gateway VM on a public cloud like AWS or Azure.

iii) The customer deploys the Access Controller VM on-premises in the LAN, and Safe-T deploys and maintains two VMs, 1) Access Gateway and 2) Authentication Gateway, both hosted on Safe-T’s global SDP cloud service.

ZTA Migration Path

Today, organizations recognize the need to move to a zero-trust architecture. However, there is a difference between recognition and deployment, and new technology brings considerable risk. Traditional Network Access Control (NAC) and VPN solutions fall short in many ways, but a rip-and-replace model is a very aggressive approach.

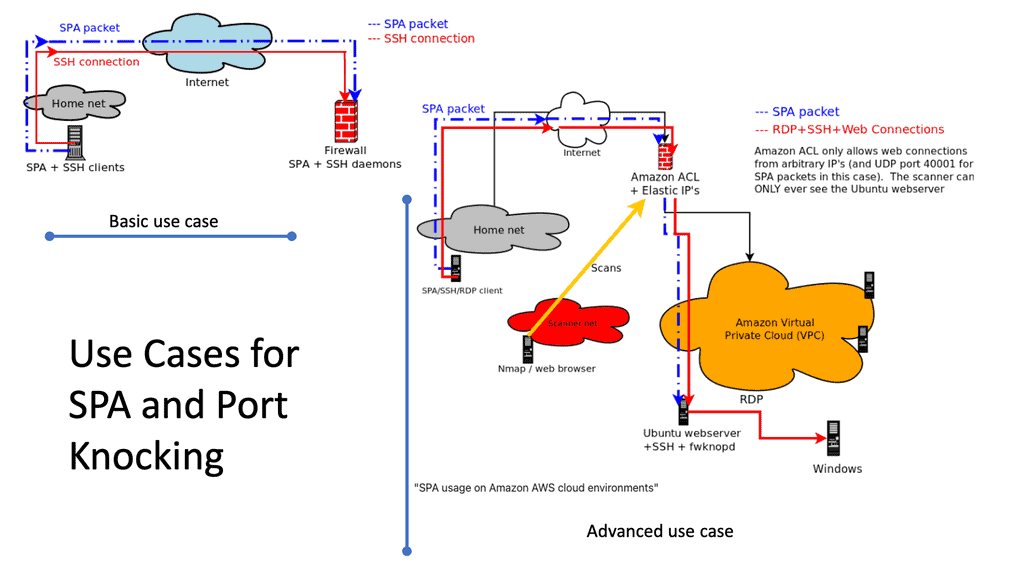

To transition from legacy access to ZTA and single packet authorization, look for a migration path you are comfortable with. For example, you might run a traditional VPN in parallel with your SDP solution, using SDP only for a group of users for a set period. One approach is to start with a server used primarily by experienced users, such as DevOps or QA personnel. This keeps the risk minimal if any problem occurs during your organization’s phased deployment of SDP access.
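A phased rollout like this can be captured as a small routing rule: each user group has a date from which it uses SDP, and everyone else stays on the existing VPN. The group names and dates below are hypothetical, and how a real deployment enforces the choice (client configuration, DNS, or gateway policy) is outside the sketch.

```python
from datetime import date

# Hypothetical rollout plan: the date from which each group uses SDP.
# Experienced groups go first; groups not listed stay on the VPN.
ROLLOUT = {
    "devops": date(2024, 1, 8),
    "qa": date(2024, 1, 8),
    "finance": date(2024, 4, 1),
}


def access_path(user_groups: set, today: date) -> str:
    """Return 'sdp' once any of the user's groups has reached its rollout date, else 'vpn'."""
    for group in user_groups:
        start = ROLLOUT.get(group)
        if start is not None and today >= start:
            return "sdp"
    return "vpn"


print(access_path({"qa"}, date(2024, 2, 1)))       # sdp
print(access_path({"finance"}, date(2024, 2, 1)))  # vpn
```

Keeping the VPN as the default path means a problem in the pilot affects only the listed groups, and rolling a group back is a one-line change to the plan.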

A recent survey by the CSA indicates that SDP awareness and adoption are still at an early stage. However, when you go down the path of ZTA, selecting a vendor whose architecture matches your requirements is key to successful adoption. For example, look for SDP vendors that let you keep your existing VPN deployment while adding SDP/ZTA capabilities on top of it. This can sidestep the risks of switching to an entirely new technology.