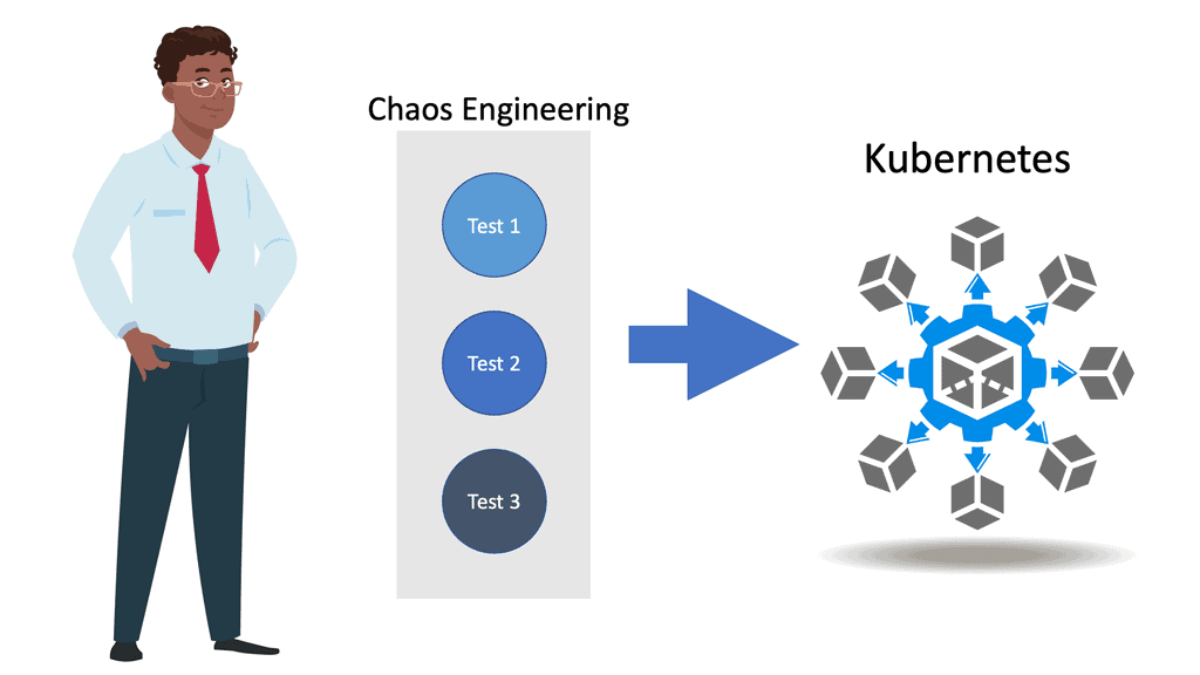

Chaos Engineering Kubernetes

In the world of cloud-native computing, Kubernetes has emerged as the de facto container orchestration platform. With its ability to manage and scale containerized applications, Kubernetes has revolutionized modern software development and deployment. However, as systems become more complex, ensuring their resilience and reliability has become a critical challenge. This is where Chaos Engineering comes into play. In this blog post, we will explore the concept of Chaos Engineering in the context of Kubernetes and its importance in building robust, fault-tolerant applications.

Chaos Engineering is a discipline that deliberately injects failure into a system to uncover weaknesses and vulnerabilities. By simulating real-world scenarios, organizations can proactively identify and address potential issues before they impact end-users. Chaos Engineering embraces the philosophy of “fail fast to learn faster,” helping teams build more resilient systems that can withstand unforeseen circumstances and disruptions with minimal impact.

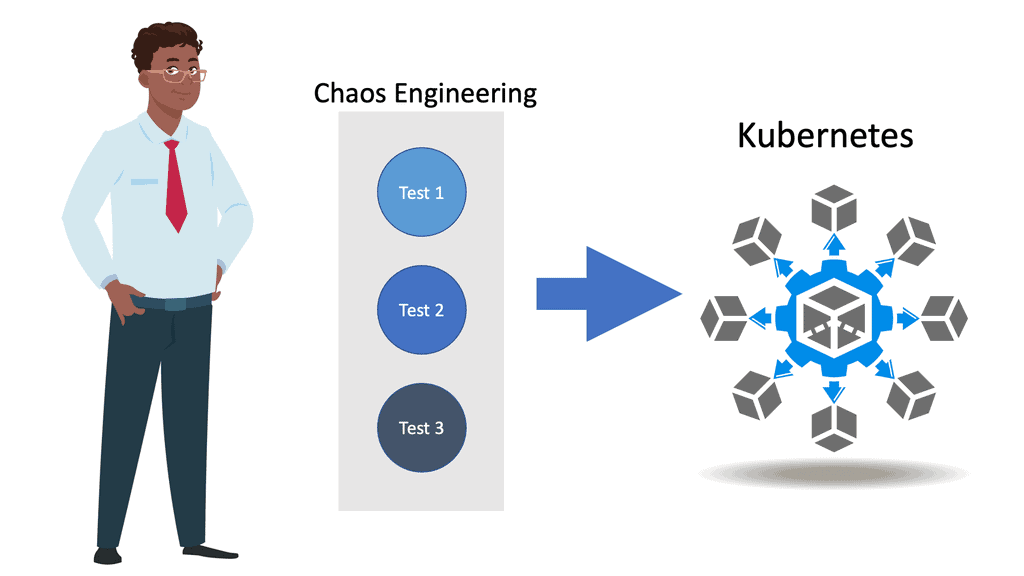

Regarding Chaos Engineering in Kubernetes, the focus is on injecting controlled failures into the ecosystem to assess the system’s behavior under stress. By leveraging Chaos Engineering tools and techniques, organizations can gain valuable insights into the resiliency of their Kubernetes deployments and identify areas for improvement.

Highlights: Chaos Engineering Kubernetes

- The Traditional Application

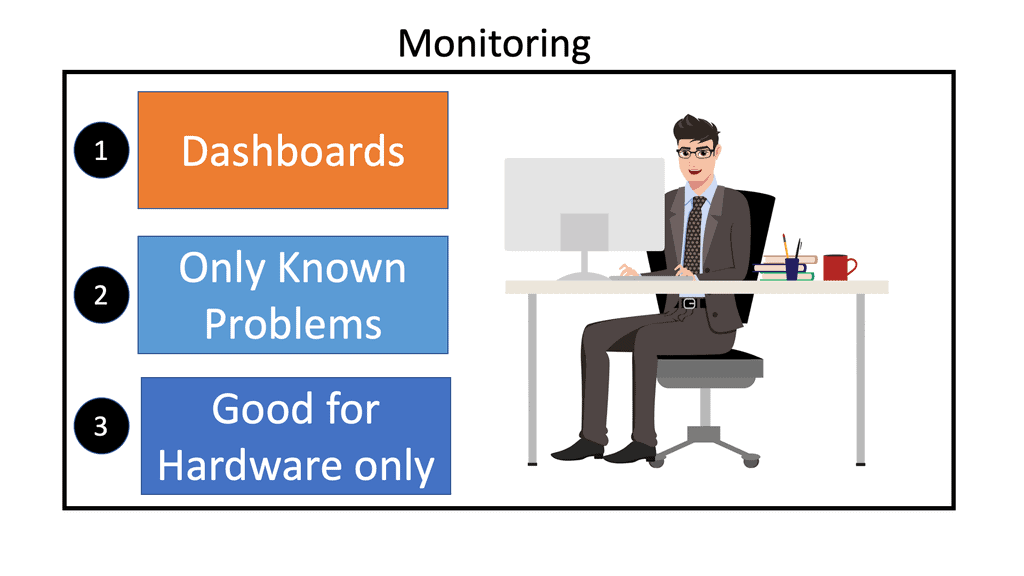

When considering Chaos Engineering on Kubernetes, we must start from the beginning. Not too long ago, applications ran in a single private data center, or possibly two data centers for high availability. These data centers were on-premises, and all components were housed internally. Life was easy, and troubleshooting and monitoring could be done by a single team, if not a single person, with predefined dashboards. Failures were known, the capacity planning strategy rarely changed, and you could carry out a standard dropped-packet test.

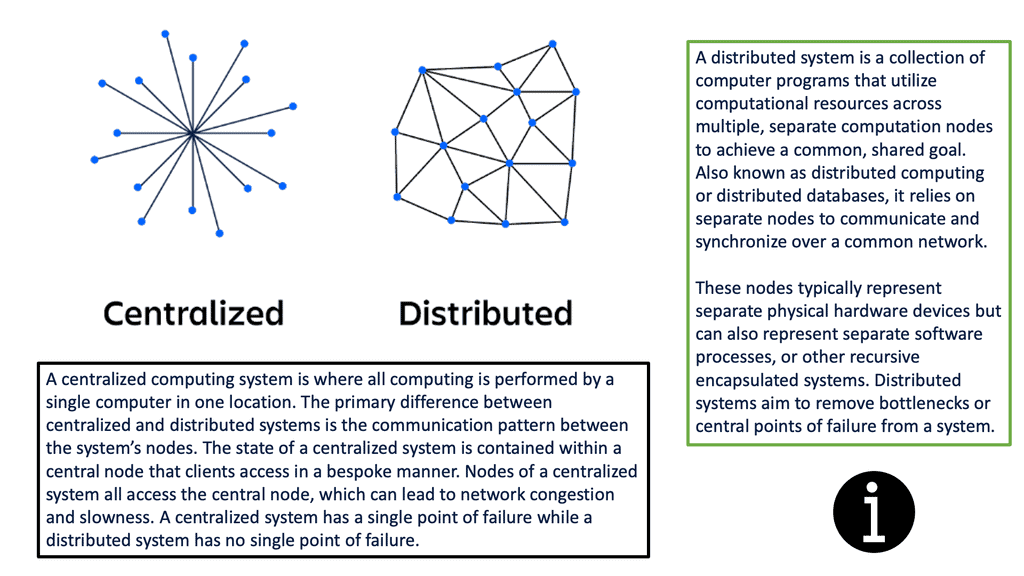

- A Static Infrastructure

The network and infrastructure had fixed perimeters and were fairly static. The stack rarely changed, and certainly not daily. Agility was at an all-time low, but that did not matter for the environments in which the application and infrastructure were housed. Nowadays, however, we are in a completely different environment.

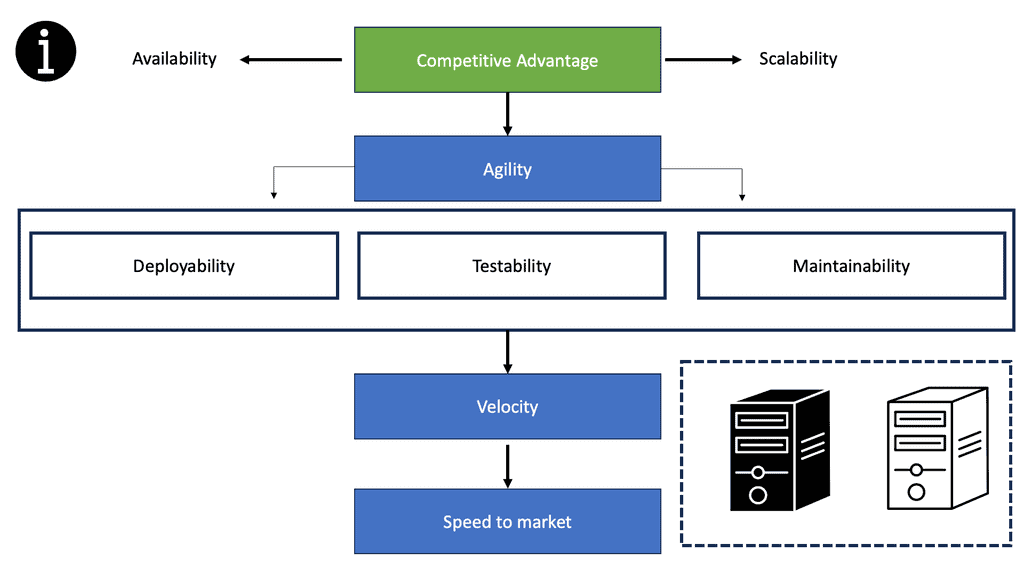

Complexity is at an all-time high, and agility in business is critical. Now, we have distributed applications with components and services located in many different places and types of places, on-premises and in the cloud, with dependencies on both local and remote services. So, in this land of complexity, we must find system reliability. A reliable system is one you can trust to behave as expected, even when some of its components fail.

Before you proceed to the details of Chaos Engineering, you may find the following useful:

- Service Level Objectives (SLOs)

- Kubernetes Networking 101

- Kubernetes Security Best Practice

- Network Traffic Engineering

- Reliability In Distributed System

- Distributed Systems Observability

- A key point: Video on Chaos Engineering Kubernetes

In this video tutorial, we go through the basics of how to start a Chaos Engineering project, along with a discussion of baseline engineering. I will show how knowing exactly how your application and infrastructure perform under stress, and where their breaking points are, helps you prepare for failure.

Back to basics with Chaos Engineering Kubernetes

Today’s standard explanation for Chaos Engineering is “The facilitation of experiments to uncover systemic weaknesses.” The following is true for Chaos Engineering.

- Begin by defining “steady state” as some measurable output of a system that indicates normal behavior.

- Hypothesize that this steady state will persist in both the control and experimental groups.

- Introduce variables that mirror real-world events, such as servers that crash, hard drives that malfunction, and severed network connections.

- Then, as a final step, try to disprove the hypothesis by looking for a difference in steady state between the control and experimental groups.
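The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function name `steady_state_holds`, the relative tolerance, and the sample success rates are all assumptions made for the example.

```python
from statistics import mean


def steady_state_holds(control, experiment, tolerance=0.05):
    """Return True when the experimental group's mean stays within a
    relative `tolerance` of the control group's mean."""
    baseline = mean(control)
    observed = mean(experiment)
    if baseline == 0:
        # Avoid dividing by zero; only an identical zero reading counts.
        return observed == 0
    return abs(observed - baseline) / baseline <= tolerance


# Hypothetical steady-state metric: fraction of requests answered successfully.
control_success = [0.999, 0.998, 0.999, 0.997]      # no fault injected
experiment_success = [0.998, 0.991, 0.985, 0.990]   # fault injected

if steady_state_holds(control_success, experiment_success, tolerance=0.005):
    print("Hypothesis not disproved: steady state persisted")
else:
    print("Hypothesis disproved: investigate the weakness")
```

How tight the tolerance should be depends on how noisy the chosen metric is during normal operation, so it is worth measuring that first.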

Chaos Engineering Scenarios in Kubernetes:

1. Pod Failures: Simulating failures of individual pods within a Kubernetes cluster allows organizations to evaluate how the system responds to such events. By randomly terminating pods, Chaos Engineering can help ensure that the system can handle pod failures gracefully, redistributing workload and maintaining high availability.

2. Network Partitioning: Introducing network partitioning scenarios can help assess the resilience of a Kubernetes cluster. By isolating specific nodes or network segments, Chaos Engineering enables organizations to test how the cluster reacts to network disruptions and evaluate the effectiveness of load balancing and failover mechanisms.

3. Resource Starvation: Chaos Engineering can simulate resource scarcity scenarios by intentionally consuming excessive resources, such as CPU or memory, within a Kubernetes cluster. This allows organizations to identify potential performance bottlenecks and optimize resource allocation strategies.
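As a rough sketch of the pod failure scenario, the Python below picks a random subset of pods to terminate while capping the blast radius, then checks whether enough replicas would remain. It does not talk to a cluster; the pod names, the `max_fraction` cap, and the `min_available` threshold are hypothetical values chosen for illustration.

```python
import random


def pick_victims(pods, max_fraction=0.25, rng=None):
    """Choose pods to terminate at random, never more than
    `max_fraction` of the total (but at least one when pods exist)."""
    rng = rng or random.Random()
    if not pods:
        return []
    limit = max(1, int(len(pods) * max_fraction))
    return rng.sample(pods, limit)


def survives(pods, victims, min_available):
    """True when the pods left running still meet the availability floor."""
    return len(pods) - len(victims) >= min_available


# Hypothetical deployment with eight replicas.
pods = [f"web-{i}" for i in range(8)]
victims = pick_victims(pods, max_fraction=0.25, rng=random.Random(42))
print("terminating:", victims)
print("availability floor met:", survives(pods, victims, min_available=5))
```

Capping the fraction of pods removed keeps the experiment controlled, which is the difference between a chaos experiment and an outage.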

Benefits of Chaos Engineering in Kubernetes:

1. Enhanced Reliability: By subjecting Kubernetes deployments to controlled failures, Chaos Engineering helps organizations identify weak points and vulnerabilities, enabling them to build more resilient systems that can withstand unforeseen events.

2. Improved Incident Response: Chaos Engineering allows organizations to test and refine their incident response processes by simulating real-world failures. This helps teams understand how to quickly detect and mitigate potential issues, reducing downtime and improving the overall incident response capabilities.

3. Cost Optimization: By identifying and addressing performance bottlenecks and inefficient resource allocation, Chaos Engineering can help optimize the utilization of resources within a Kubernetes cluster. This, in turn, leads to cost savings and improved efficiency.

Beyond the Complexity Horizon

Monitoring and troubleshooting are now much more demanding, as everything is interconnected, making it difficult for a single person in one team to understand entirely what is happening. The edge of the network and the application boundary now extend beyond a single location and team. Enterprise systems have gone beyond the complexity horizon, and you can’t understand every bit of every single system.

Even a developer who works closely with the system and truly understands the nuts and bolts of the application and its code cannot know every detail of every system it depends on. So, finding the correct information is essential, but once you find it, you have to give it to those who can fix it. Monitoring is therefore not just about finding out what is wrong; it needs to alert, and these alerts need to be actionable.

Troubleshooting: Chaos engineering kubernetes

Chaos Engineering aims to improve a system’s reliability by ensuring it can withstand turbulent conditions. By exposing weaknesses before they cause outages, it makes Kubernetes deployments more dependable. So, if you are adopting Kubernetes, you should adopt Chaos Engineering as an integral part of your monitoring and troubleshooting strategy.

Firstly, we can pinpoint the application errors and understand, as far as possible, how these errors arose. This could be anything from badly ordered scripts on a web page to, let’s say, a database query with bad SQL calls or even unoptimized code-level issues.

Or there could be something more fundamental going on. It is common to have issues with how an application is packaged into a container: you can pull in the incorrect libraries or even ship a debug version of the container. Or there could be nothing wrong with the packaging at all, and the problem lies in where the container is deployed. There could be something wrong with the infrastructure, either physical or logical, such as an incorrect configuration or a hardware fault somewhere in the application path.

Non-ephemeral and ephemeral services

With the introduction of containers and microservices, observability and monitoring solutions need to manage both non-ephemeral and ephemeral services. We are collecting data for applications that consist of many different components.

So when it comes to container monitoring and performing Chaos Engineering tests on Kubernetes, we need to fully understand the nature of the platform and the application that runs on it. Everything is dynamic by nature. You need monitoring and troubleshooting in place that can handle this dynamic and transient nature. When monitoring a containerized infrastructure, you should consider the following.

Container Lifespan: Containers have a short lifespan; they are provisioned and decommissioned based on demand. This is in contrast to VM or bare-metal workloads, which generally have a longer lifespan. As a generic guideline, containers have an average lifespan of 2.5 days, while traditional and cloud-based VMs have an average lifespan of 23 days. Containers can move, and they do move frequently.

One day, we could have workload A on cluster host A, and the next day, or even later the same day, the same cluster host could be hosting workload B. Therefore, the impact of a failure could differ depending on the time of day.

Containers are Temporary: Containers are dynamically provisioned for specific use cases temporarily. For example, we could have a new container based on a specific image. New network connections will be set up for that container, storage, and any integrations to other services that make the application work. All of this is done dynamically and can be done temporarily.

Different monitoring levels: We have many monitoring levels in a Kubernetes environment, and the components that make up the Kubernetes deployment will affect application performance. We have, for example, nodes, pods, and application containers, and we also monitor at other levels, such as the VM, storage, and microservice levels.

Microservices change fast and often: Microservices consist of constantly evolving apps. New microservices are added, and existing ones are decommissioned quickly. So, what does this mean to usage patterns? This will result in different usage patterns on the infrastructure. If everything is often changing, it can be hard to derive the baseline and build a topology map unless you have something automatic in place.

Metric overload: We now have loads of metrics, including additional metrics for the different containers and infrastructure levels. We must consider metrics for the nodes, cluster components, cluster add-ons, application runtime, and custom application metrics. This is in contrast to a traditional application stack, where we use metrics for components such as the operating system and the application.

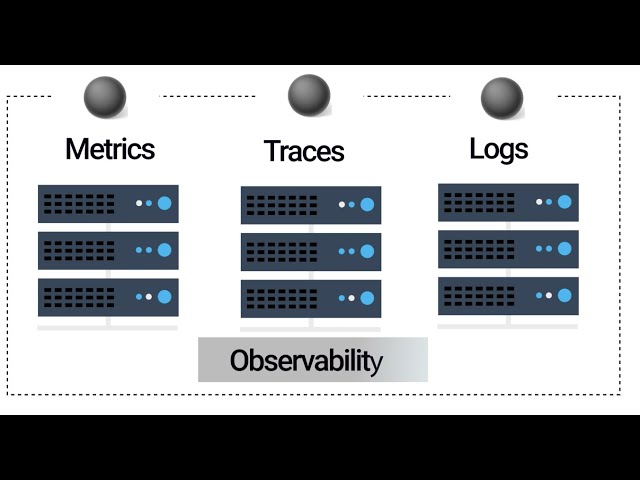

- A key point: Video on Observability vs. Monitoring

We will start by discussing how our approach to monitoring needs to adapt to the current megatrends, such as the rise of microservices. Failures are unknown and unpredictable. Therefore, a pre-defined monitoring dashboard will have difficulty keeping up with the rate of change and unknown failure modes.

For this, we should look to have the practice of observability for software and monitoring for infrastructure.

Metric explosion

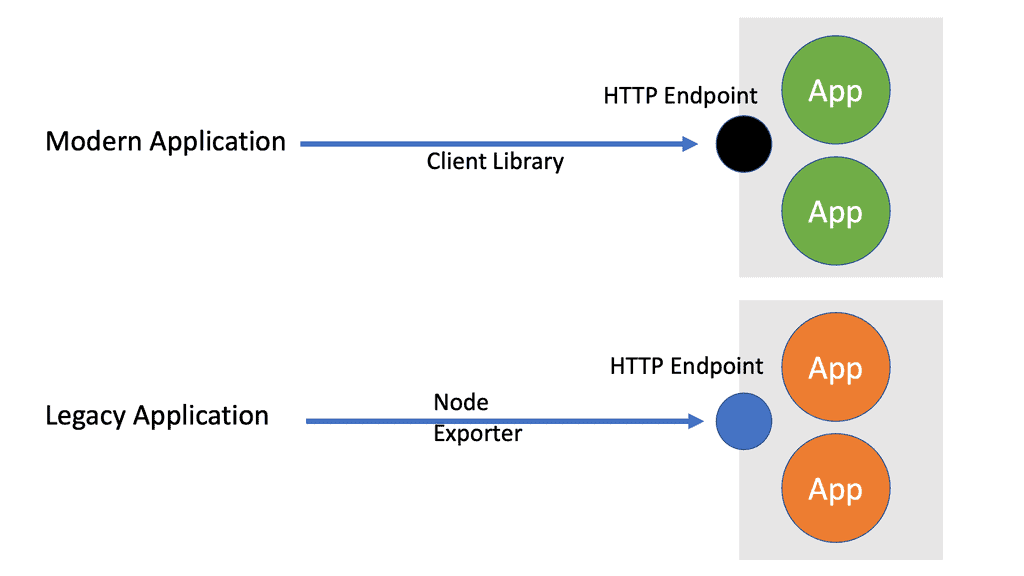

In the traditional world, we didn’t have to be concerned with the additional components such as an orchestrator or the dynamic nature of many containers. With a container cluster, we must consider metrics from the operating system, application, orchestrator, and containers. We refer to this as a metric explosion. So now we have loads of metrics that need to be gathered. There are also different ways to pull or scrape these metrics.

Prometheus is common in the world of Kubernetes and uses a very scalable pull approach, scraping metrics from HTTP endpoints exposed either through Prometheus client libraries or through exporters.
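To make the pull approach more concrete, here is a small sketch of the plain-text format a metrics endpoint typically returns when Prometheus scrapes it. In practice a Prometheus client library generates this for you; the helper `render_metric` and the metric and label names below are hypothetical, and the sketch skips details such as escaping special characters in label values.

```python
def render_metric(name, value, labels=None, help_text="", metric_type="gauge"):
    """Render one sample in the Prometheus text exposition format."""
    lines = []
    if help_text:
        lines.append(f"# HELP {name} {help_text}")
    lines.append(f"# TYPE {name} {metric_type}")
    label_str = ""
    if labels:
        # Sort labels so the output is stable between scrapes.
        pairs = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
        label_str = "{" + pairs + "}"
    lines.append(f"{name}{label_str} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical counter describing restarts of a single pod.
body = render_metric(
    "pod_restarts_total",
    3,
    labels={"pod": "web-0", "namespace": "shop"},
    help_text="Restarts observed for the pod",
    metric_type="counter",
)
print(body, end="")
```

Labels such as the pod and namespace are what allow the same metric to be sliced per container, per node, or per service later on.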

A key point: What happens to visibility

We need complete visibility now more than ever, and not just for single components but at a holistic level. Therefore, we need to monitor a lot more data points than we had to in the past. We need to monitor the application servers, Pods and containers, the clusters running the containers, the network for service/pod/cluster communication, and the host OS.

All of the data from the monitoring needs to be in a central place so that trends can be seen and the results of different queries can be acted on. Correlating local logs would be challenging in a sizeable multi-tier application with Docker containers. We can use log forwarders or log shippers such as Fluentd or Logstash to transform and ship logs to a backend such as Elasticsearch.

A key point: New avenues for monitoring

Containers are the norm for managing workloads and adapting quickly to new markets. Therefore, new avenues have opened up for monitoring these environments. We have, for example, AppDynamics and Elasticsearch (the latter being part of the ELK stack), along with the various log shippers that can help provide a welcome layer of unification. We also have Prometheus to gather metrics. Keep in mind that Prometheus works in the land of metrics only. There are different ways to visualize all this data, such as Grafana and Kibana.

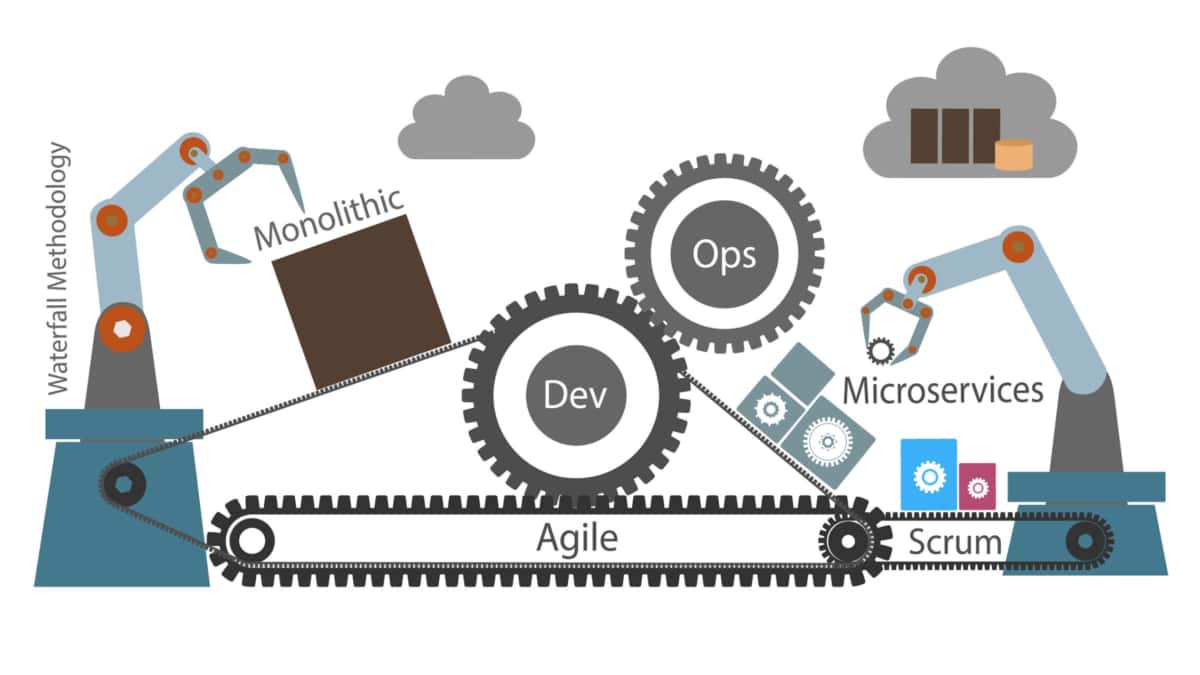

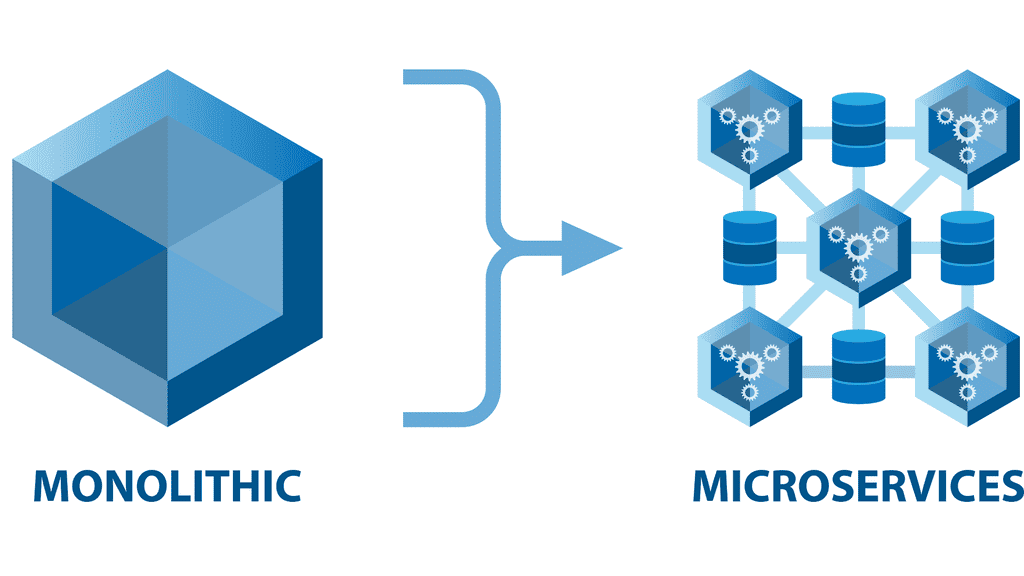

Microservices complexity: Management is complex

So, with the move towards microservices, we get the benefits of scalability and business continuity, but management is very complex. The monolith is much easier to manage and monitor. Also, because microservices are separate components, they don’t need to be written in the same language or with the same toolkits, so you can mix and match different technologies.

This approach offers a lot of flexibility, but the many additional moving parts increase both latency and complexity.

We have, for example, reverse proxies, load balancers, firewalls, and other infrastructure support services. What used to be method calls or interprocess calls within the monolith host now go over the network and are susceptible to deviations in latency.

Debugging microservices

With the monolith, the application simply runs in a single process, and it is relatively easy to debug. Many traditional tools and code instrumentation technologies were built on the assumption of a single process. However, with microservices, we have a completely different approach with a distributed application.

Now, your application has multiple processes running in different places, which makes debugging microservices applications considerably harder.

Much of the tooling we have today was built for traditional monolithic applications. There are new monitoring tools for these new applications, but there is a steep learning curve and a high barrier to entry. New tools and practices, such as distributed tracing and Chaos Engineering on Kubernetes, are not the simplest to pick up on day one.

- Automation and monitoring: Checking and health checks

Automation comes into play with the new environment. With automation, we can run periodic checks not just on the functionality of the underlying components but also on the health of the application itself. All of this can be automated at specific intervals or in reaction to certain events.

With the rise of complex systems and microservices, it is more important than ever to have real-time monitoring of performance and metrics that tell you how the systems behave. For example, what is the usual RTT, and how long do transactions take under normal conditions?
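One way to picture a baseline is as a summary of measurements taken under normal conditions, against which new readings are compared. The sketch below assumes RTT samples in milliseconds and flags a reading that sits more than three standard deviations from the mean; the sample values, the function names, and the three-sigma threshold are illustrative assumptions rather than recommendations.

```python
from statistics import mean, stdev


def build_baseline(samples_ms):
    """Summarize RTT samples gathered under normal conditions.
    Needs at least two samples to compute a standard deviation."""
    return {"mean": mean(samples_ms), "stdev": stdev(samples_ms)}


def is_anomalous(rtt_ms, baseline, sigmas=3.0):
    """Flag a reading that deviates from the baseline mean by more
    than `sigmas` standard deviations."""
    return abs(rtt_ms - baseline["mean"]) > sigmas * baseline["stdev"]


# Hypothetical RTT samples (ms) collected while the system was healthy.
normal_rtts = [20, 22, 21, 19, 23, 20, 21, 22]
baseline = build_baseline(normal_rtts)

for reading in (24, 40):
    status = "anomalous" if is_anomalous(reading, baseline) else "normal"
    print(f"{reading} ms -> {status}")
```

A periodic health check could run a comparison like this on a schedule, and a chaos experiment can reuse the same baseline as its steady state.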

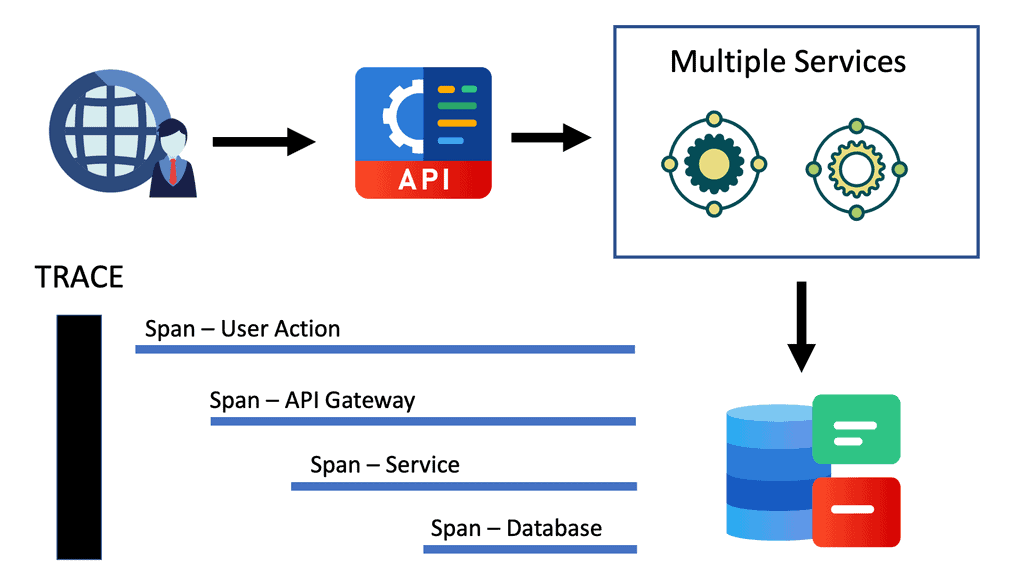

- A key point: Video on Distributed Tracing

We generally have two types of telemetry data: log data and time-series statistics. In a microservices environment, the time-series data is also known as metrics. Metrics allow you to get an aggregate understanding of what’s happening to all instances of a given service.

Logs, on the other hand, provide highly fine-grained detail on a given service but have no built-in way to provide that detail in the context of a request. Due to how distributed systems fail, you can’t use metrics and logs alone to discover and address all of your problems. We need a third piece of the puzzle: distributed tracing.
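The core idea behind request context can be sketched without any tracing library: generate an identifier when a request enters the system, pass it to every service the request touches, and attach it to each event. The service names and functions below are hypothetical, and a real tracer would additionally record spans with timings and parent-child relationships.

```python
import uuid


def new_trace_id():
    """Create an identifier for one end-to-end request."""
    return uuid.uuid4().hex


def record(trace_id, service, message, sink):
    """Store an event tagged with the request's trace ID."""
    sink.append({"trace_id": trace_id, "service": service, "message": message})


def checkout(trace_id, sink):
    # Hypothetical front-end service that calls a downstream service.
    record(trace_id, "checkout", "order received", sink)
    charge_card(trace_id, sink)


def charge_card(trace_id, sink):
    # Hypothetical downstream service; it reuses the caller's trace ID.
    record(trace_id, "payments", "card charged", sink)


def events_for(trace_id, sink):
    """Reassemble everything that happened for one request."""
    return [event for event in sink if event["trace_id"] == trace_id]


sink = []
first, second = new_trace_id(), new_trace_id()
checkout(first, sink)
checkout(second, sink)
print([event["service"] for event in events_for(first, sink)])
```

Because both services tag their events with the same ID, the path of a single request can be followed across process boundaries, which neither metrics nor untagged logs allow.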

The Rise of Chaos Engineering

There is a growing complexity of infrastructure, and let’s face it, a lot can go wrong. It’s imperative to have a global view of all the infrastructure components and a good understanding of the application’s performance and health. In a large-scale container-based application design, there are many moving pieces and parts, and trying to validate the health of each piece manually is hard to do.

With these new environments, especially cloud-native at scale, complexity is at its highest, and many more things can go wrong. For this reason, you must prepare as much as possible so the impact on users is minimal.

So, the dynamic deployment patterns you get with frameworks like Kubernetes allow you to build better applications. But you need to be able to examine the environment and see if it is working as expected. Most importantly, and this is the focus here, to prepare effectively you need to implement a solid strategy for monitoring in production environments.

- Chaos engineering kubernetes

For this, you need to understand practices like Chaos Engineering and Chaos Engineering tools and how they can improve the reliability of the overall system. Chaos Engineering is the ability to perform tests in a controlled way. Essentially, we intentionally break things to learn how to build more resilient systems.

So, we inject various issues and faults in a controlled way to make the overall application more resilient. It comes down to a trade-off and your willingness to accept it. There is a considerable trade-off with distributed computing. You have to monitor efficiently, have performance management in place, and, more importantly, accurately test the distributed system in a controlled manner.

- Service mesh chaos engineering

A service mesh is one option for implementing Chaos Engineering. You can also implement Chaos Engineering with Chaos Mesh, a cloud-native Chaos Engineering platform that orchestrates tests in the Kubernetes environment. The Chaos Mesh project offers a rich selection of experiment types, such as pod lifecycle tests, network tests, Linux kernel tests, I/O tests, and many other stress tests.
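As an illustration of what a pod lifecycle experiment might look like, the sketch below builds a Chaos Mesh `PodChaos` resource as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The experiment name, namespace, and label are hypothetical, and the field layout reflects the `chaos-mesh.org/v1alpha1` API as commonly documented, so check it against the Chaos Mesh documentation for the version you run.

```python
import json


def pod_kill_experiment(name, namespace, target_labels):
    """Build a PodChaos manifest that kills one matching pod.
    Field names are assumed from the chaos-mesh.org/v1alpha1 API."""
    return {
        "apiVersion": "chaos-mesh.org/v1alpha1",
        "kind": "PodChaos",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "action": "pod-kill",   # terminate the selected pod
            "mode": "one",          # pick a single pod from the matches
            "selector": {
                "namespaces": [namespace],
                "labelSelectors": target_labels,
            },
        },
    }


# Hypothetical target: pods labelled app=web in the "shop" namespace.
manifest = pod_kill_experiment("kill-one-web-pod", "shop", {"app": "web"})
print(json.dumps(manifest, indent=2))
```

Restricting the selector to one namespace and one label keeps the experiment scoped, which matters when it first runs against a shared cluster.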

Implementing practices like Chaos Engineering will help you understand and manage unexpected failures and performance degradation. The purpose of Chaos Engineering is to build more robust and resilient systems.

Conclusion:

Chaos Engineering has emerged as a valuable practice for organizations leveraging Kubernetes to build and deploy cloud-native applications. By subjecting Kubernetes deployments to controlled failures, organizations can proactively identify and address potential weaknesses, ensuring the resilience and reliability of their systems. As the complexity of cloud-native architectures continues to grow, Chaos Engineering will play an increasingly vital role in building robust and fault-tolerant applications in the Kubernetes ecosystem.