Zero Trust Network Design

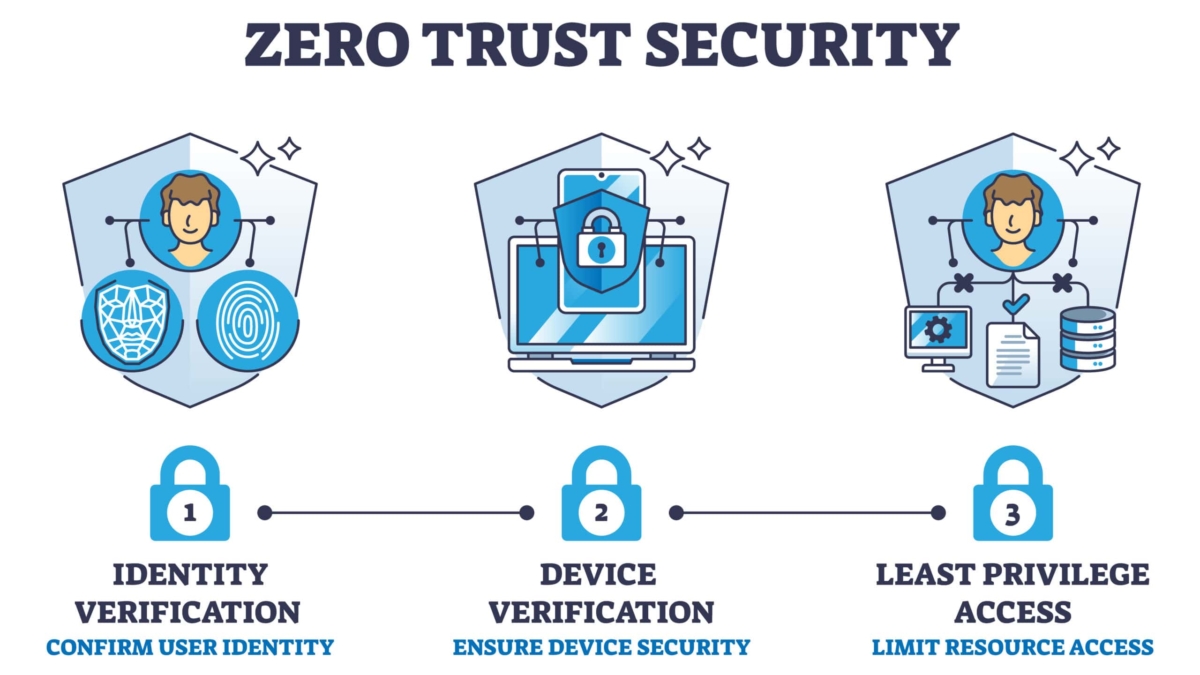

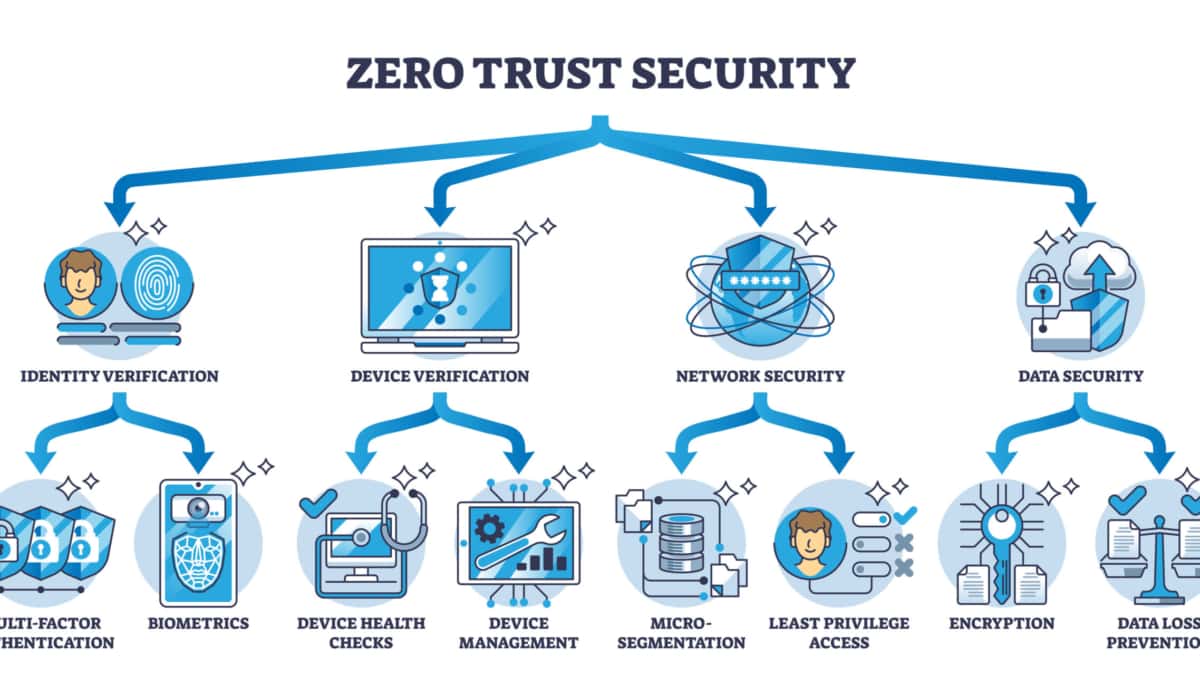

In today's interconnected world, where data breaches and cyber threats have become commonplace, traditional perimeter defenses are no longer enough to protect sensitive information. Zero Trust Network Design is a security approach that prioritizes data protection by assuming that every user and device, inside or outside the network, is a potential threat. In this blog post, we will explore the Zero Trust Network Design concept, its principles, and its benefits in securing the modern digital landscape.

Zero trust network design is a security concept that focuses on reducing the attack surface of an organization’s network. It is based on the assumption that users and systems inside a network are untrusted; therefore, all traffic is treated as untrusted and must be verified before access is granted. This contrasts with traditional networks, which often rely on perimeter-based security to protect against external threats.

Key Points:

-Identity and Access Management (IAM): IAM plays a vital role in Zero Trust by ensuring that only authenticated and authorized users gain access to specific resources. Multi-factor authentication (MFA) and strong password policies are integral to this component.

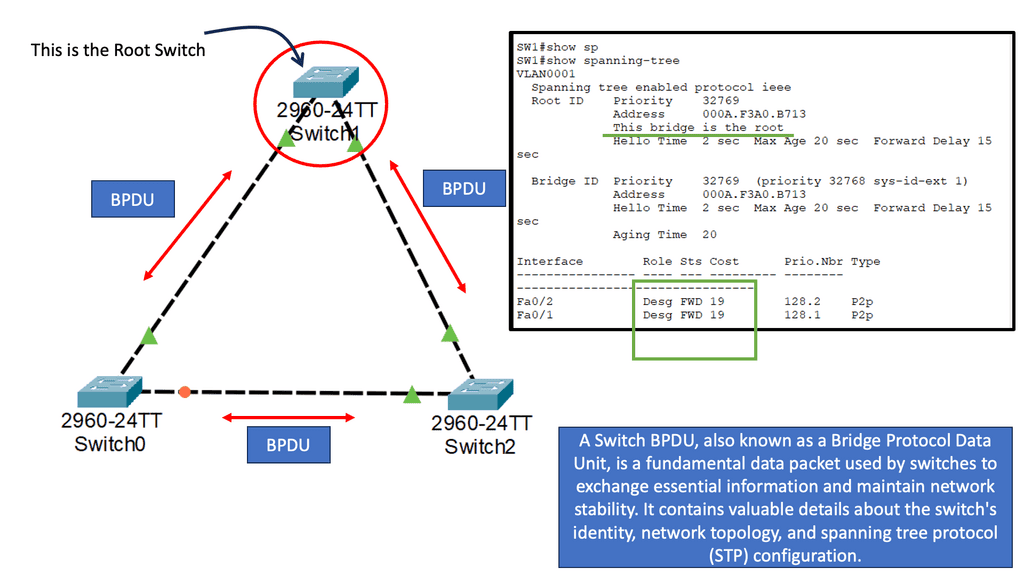

-Network Segmentation: Zero Trust advocates for segmenting the network into smaller, more manageable zones. This helps contain potential breaches and restricts lateral movement within the network.

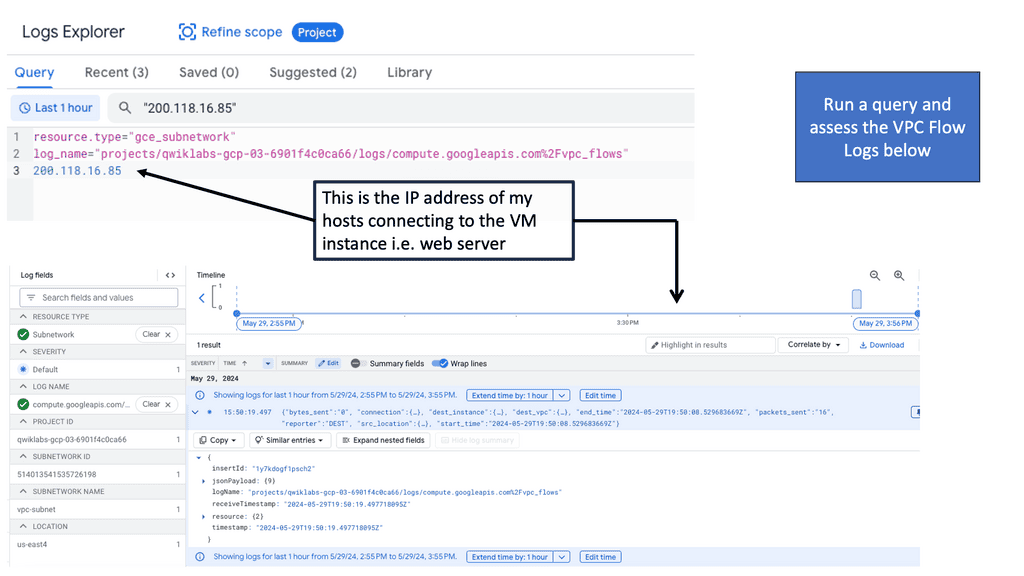

-Continuous Monitoring and Analytics: Real-time monitoring and analysis of network traffic, user behavior, and system logs are essential for detecting any anomalies or potential security breaches.

-Enhanced Security: By adopting a Zero Trust approach, organizations significantly reduce the risk of unauthorized access and lateral movement within their networks, making it harder for cyber attackers to exploit vulnerabilities.

-Improved Compliance: Zero Trust aligns with various regulatory and compliance requirements, providing organizations with a structured framework to ensure data protection and privacy.

-Greater Flexibility: Zero Trust allows organizations to embrace modern workplace practices, such as remote work and BYOD (Bring Your Own Device), without compromising security. Users can securely access resources from anywhere, anytime.

Implementing Zero Trust requires a well-defined strategy and careful planning. Here are some key steps to consider:

1. Assess Current Security Infrastructure: Conduct a thorough assessment of existing security measures, identify vulnerabilities, and evaluate the readiness for Zero Trust implementation.

2. Define Trust Boundaries: Determine the trust boundaries within the network and establish access policies accordingly. Consider factors like user roles, device types, and resource sensitivity.

3. Choose the Right Technologies: Select security solutions and tools that align with your organization's needs and objectives. These may include next-generation firewalls, secure web gateways, and identity management systems.

Matt Conran

Highlights: Zero Trust Network Design

**Understanding Zero Trust**

Zero trust is a security concept that challenges the traditional perimeter-based network security model. It operates on the principle of never trusting any user or device, regardless of their location or network connection. Instead, it continuously verifies and authenticates every user and device attempting to access network resources.

Key Points:

A – Certain principles must be followed to implement a zero-trust network design successfully. One crucial principle is the principle of least privilege, where users and devices are granted only the necessary access to perform their tasks. Another principle is continuously monitoring and assessing all network traffic, ensuring that any anomalies or suspicious activities are detected and responded to promptly.

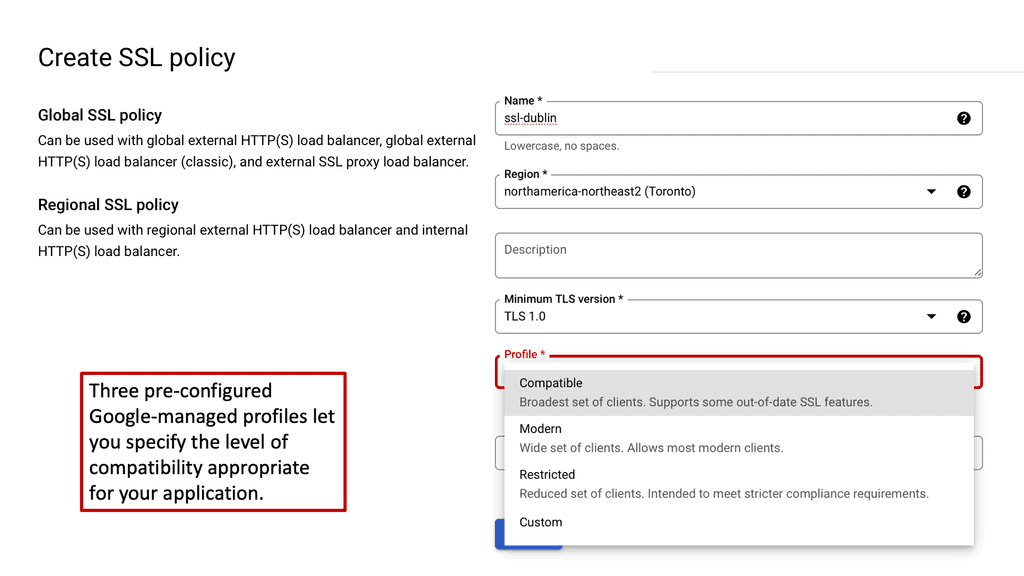

B – Implementing a zero-trust network design requires careful planning and consideration. It involves a combination of technological solutions, such as multi-factor authentication, network segmentation, encryption, and granular access controls. Additionally, organizations must establish comprehensive policies and procedures to govern user access, device management, and incident response.

C – Zero trust network design offers several benefits to organizations. Firstly, it enhances overall security posture by minimizing the attack surface and preventing lateral movement within the network. Secondly, it provides granular control over network resources, ensuring that only authorized users and devices can access sensitive data. Lastly, it simplifies compliance efforts by enforcing strict access controls and maintaining detailed audit logs.

“Never Trust, Always Verify”

D – The core concept of zero-trust network design and segmentation is never to trust, always verify. This means that all traffic, regardless of its origin, must be verified before access is granted. This is achieved through layered security controls, including authentication, authorization, encryption, and monitoring.

E – Authentication verifies users’ and devices’ identities before allowing access to resources. Authorization determines what resources a user or device is allowed to access. Encryption protects data in transit and at rest. Monitoring detects threats and suspicious activity.
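The layered checks described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `POLICY` table, the `Request` fields, and the `Gateway` class are all hypothetical names, and the authentication and device posture results are assumed to come from other systems. Encryption is left out because it belongs to the transport layer.

```python
from dataclasses import dataclass, field

# Hypothetical policy table: each identity maps to the only
# (resource, action) pairs it needs. Anything else is denied.
POLICY = {
    "alice": {("payroll-db", "read")},
    "build-bot": {("artifact-store", "write")},
}

@dataclass
class Request:
    user: str
    token_valid: bool      # outcome of authentication (e.g. MFA), assumed done elsewhere
    device_trusted: bool   # outcome of a device posture check, assumed done elsewhere
    resource: str
    action: str

@dataclass
class Gateway:
    audit_log: list = field(default_factory=list)

    def decide(self, req: Request) -> bool:
        # Authentication: both the user and the device must be verified.
        authenticated = req.token_valid and req.device_trusted
        # Authorization: least privilege, only listed pairs are permitted.
        authorized = (req.resource, req.action) in POLICY.get(req.user, set())
        allowed = authenticated and authorized
        # Monitoring: record every decision, allowed or not.
        self.audit_log.append((req.user, req.resource, req.action, allowed))
        return allowed

gw = Gateway()
print(gw.decide(Request("alice", True, True, "payroll-db", "read")))    # True
print(gw.decide(Request("alice", True, True, "payroll-db", "write")))   # False
print(gw.decide(Request("alice", True, False, "payroll-db", "read")))   # False
```

Note that the request from an untrusted device is refused even though the user and the permission are valid, and that all three decisions end up in the audit log.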

**Zero Trust Network Segmentation**

Zero-trust network design, including segmentation, is becoming increasingly popular as organizations move away from perimeter-based security. By verifying all traffic rather than relying on perimeter-based security, organizations can reduce their attack surface and improve their overall security posture. Segmentation can work at different layers of the OSI Model.

**Scanning Networks: Securing Networks**

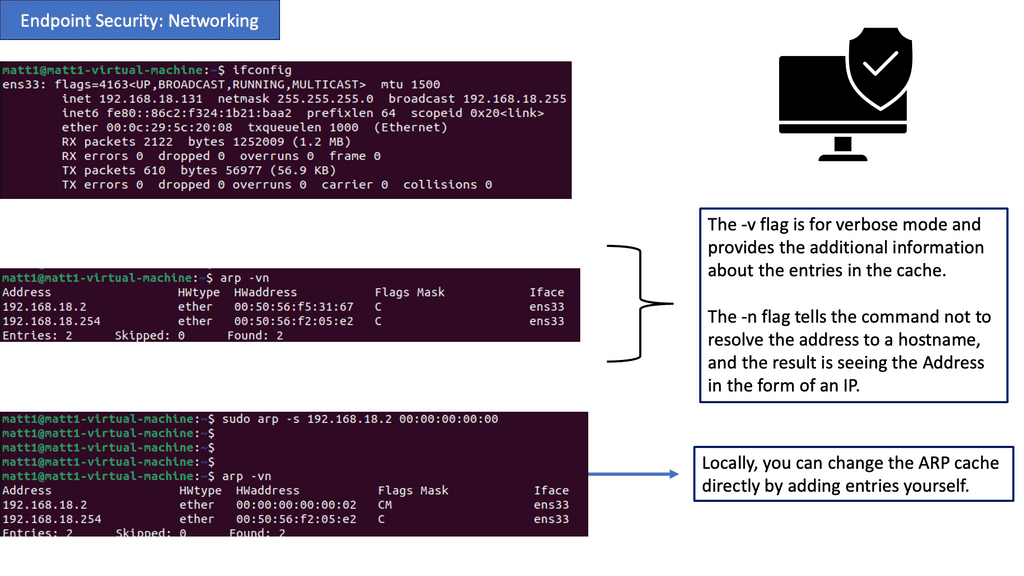

Endpoint security refers to the protection of devices (endpoints) that have access to a network. These devices, which include laptops, smartphones, and servers, are often targeted by cybercriminals seeking unauthorized access, data breaches, or system disruptions. Businesses and individuals can fortify their digital realms against threats by implementing robust endpoint security measures.

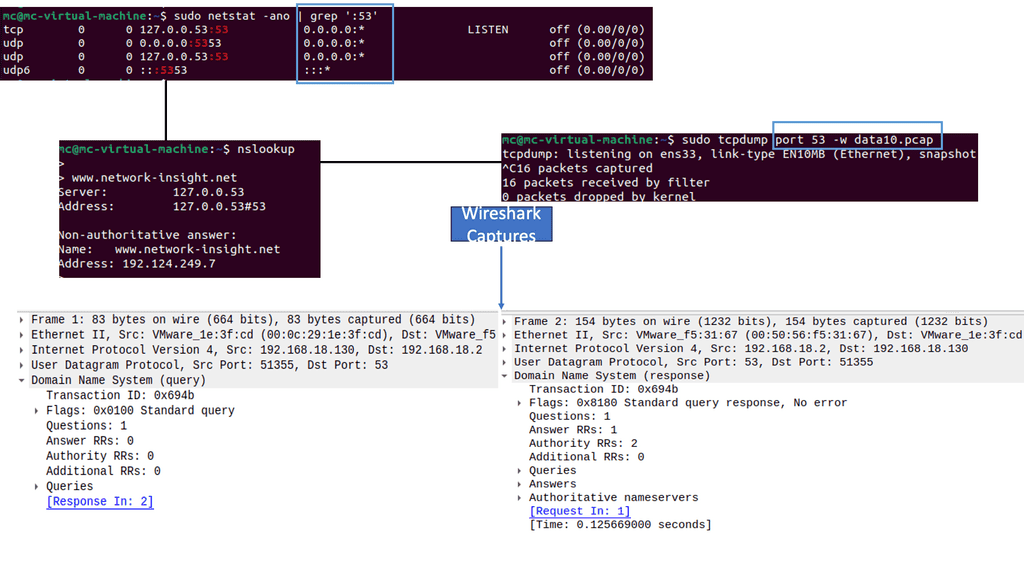

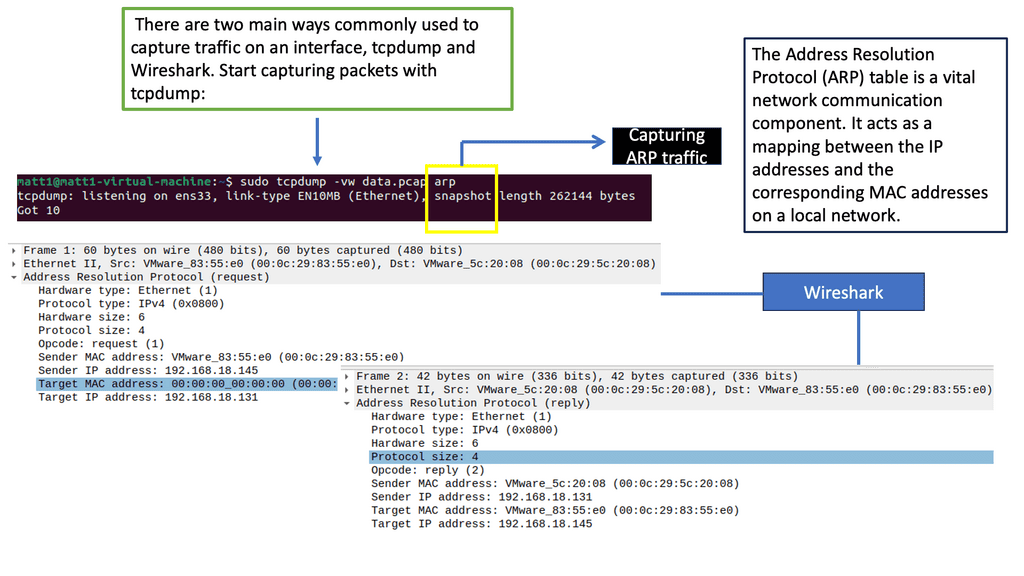

Address Resolution Protocol (ARP):

ARP (Address Resolution Protocol) plays a vital role in establishing communication between devices within a network. It maps an IP address to a physical (MAC) address, allowing data transmission between devices. However, cyber attackers can exploit ARP to launch attacks, such as ARP spoofing, compromising network security. Understanding ARP and implementing countermeasures is crucial for adequate endpoint security.
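One simple countermeasure is to watch the ARP table for a MAC address that claims more than one IP address, which is a common side effect of ARP spoofing. The sketch below assumes the table has already been collected as `(ip, mac)` pairs; the function name and the sample addresses are illustrative only. A match is a signal worth investigating rather than proof, since some devices legitimately answer for several IPs.

```python
from collections import defaultdict

def find_arp_conflicts(entries):
    """Return {mac: sorted list of IPs} for MACs that claim more than one IP."""
    ips_by_mac = defaultdict(set)
    for ip, mac in entries:
        ips_by_mac[mac.lower()].add(ip)   # normalize case before comparing
    return {mac: sorted(ips) for mac, ips in ips_by_mac.items() if len(ips) > 1}

# Illustrative ARP table snapshot (documentation-range IPs, made-up MACs).
arp_table = [
    ("192.0.2.1", "aa:bb:cc:00:00:01"),    # gateway
    ("192.0.2.10", "aa:bb:cc:00:00:10"),
    ("192.0.2.20", "AA:BB:CC:00:00:01"),   # same MAC as the gateway: suspicious
]

print(find_arp_conflicts(arp_table))
# {'aa:bb:cc:00:00:01': ['192.0.2.1', '192.0.2.20']}
```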

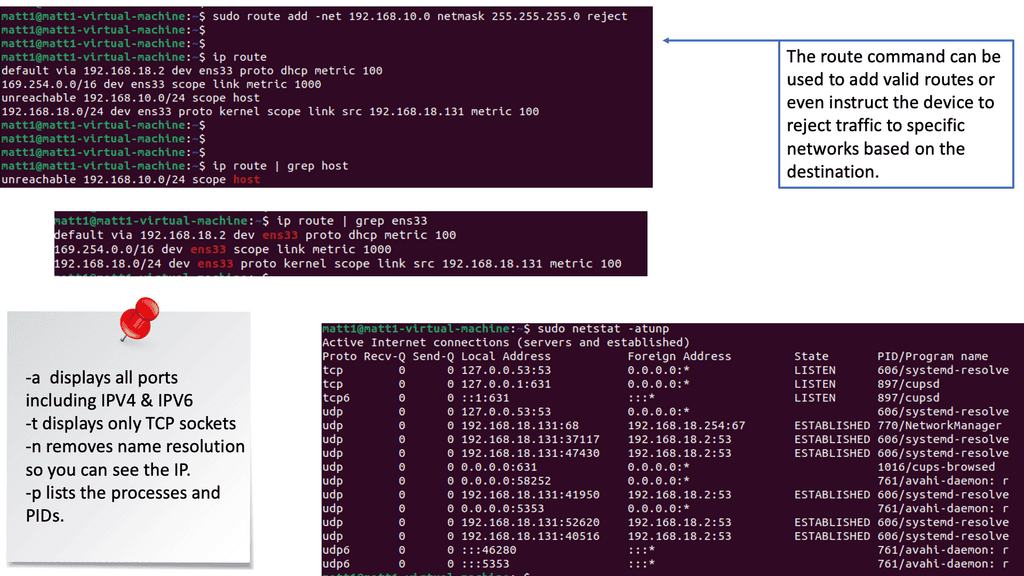

The Role of Routing:

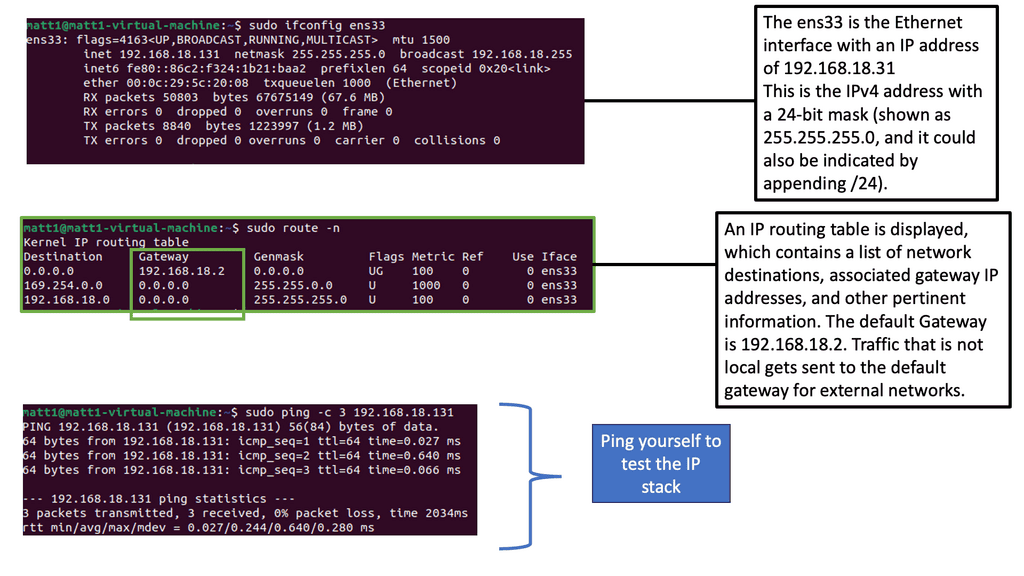

Routing is the process of forwarding network traffic between different networks. Secure routing protocols and practices are essential to prevent unauthorized access and ensure data integrity. By implementing secure routing mechanisms, organizations can establish trusted paths for data transmission, reducing the risk of data breaches and unauthorized network access.

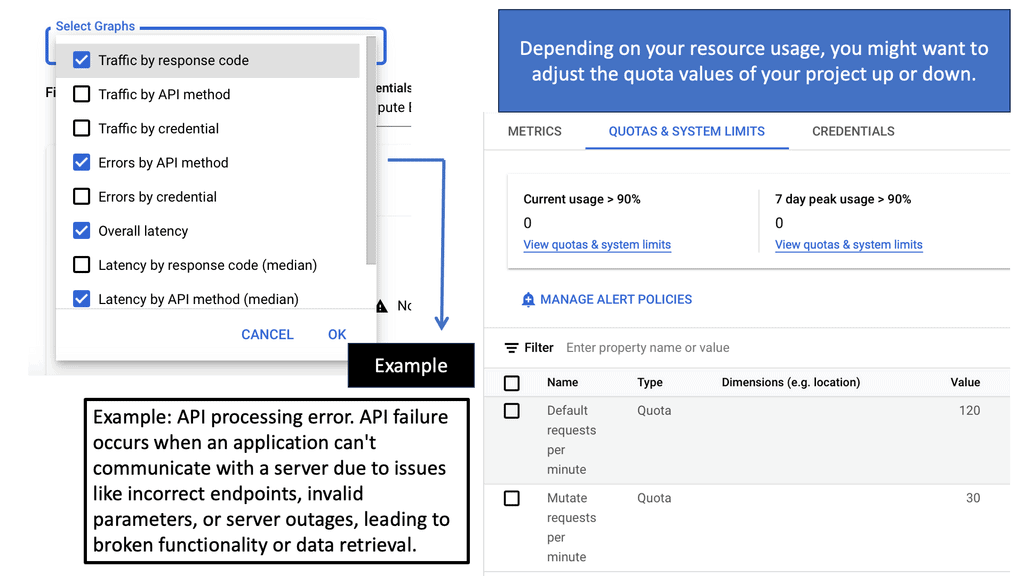

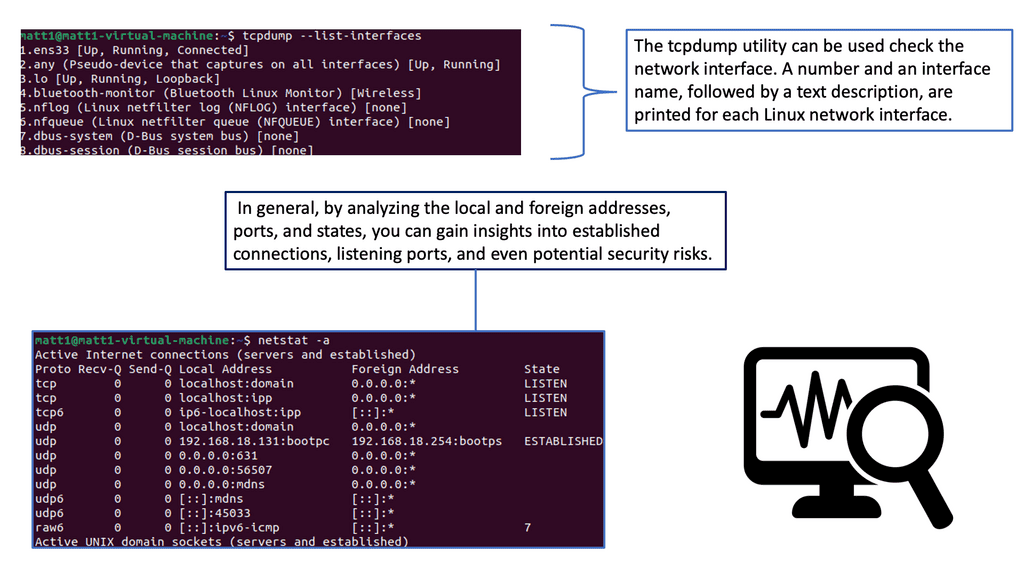

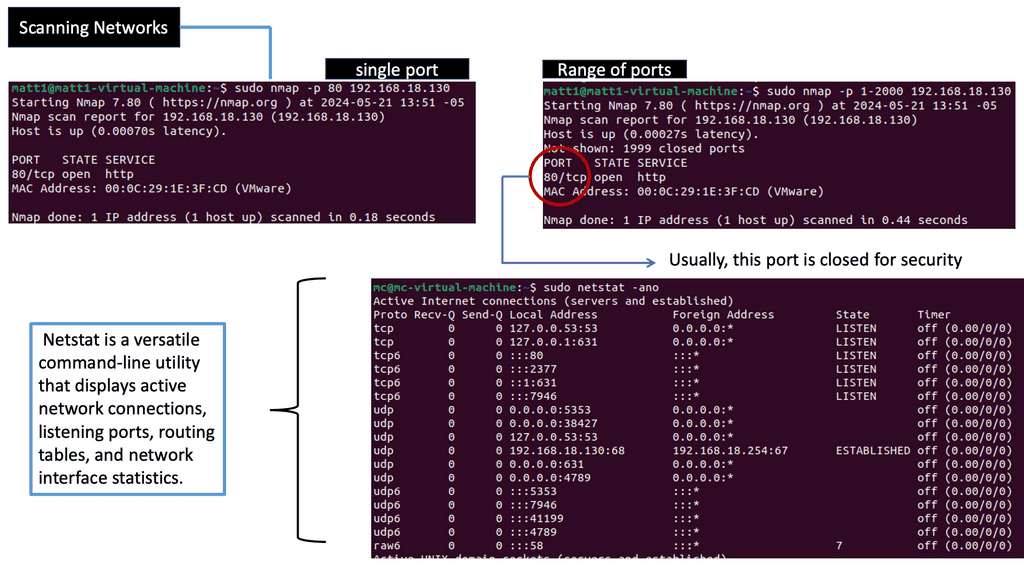

Note: Netstat is a command-line tool that provides valuable insights into network connections, active ports, and listening services. With Netstat, network administrators can identify suspicious connections, potential malware infections, or unauthorized access attempts. Regularly monitoring and analyzing Netstat output helps maintain a secure network environment.
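As a rough sketch of how Netstat output can be checked automatically, the Python below scans text in the column layout that Linux `netstat -tln` typically prints and reports listening ports that are not on an allowlist. The sample text, the `ALLOWED_PORTS` set, and the function name are assumptions made for illustration; real output varies by platform and flags.

```python
# Illustrative sample in the usual Linux column layout.
SAMPLE = """\
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN
tcp        0      0 127.0.0.1:5432          0.0.0.0:*               LISTEN
tcp        0      0 0.0.0.0:4444            0.0.0.0:*               LISTEN
tcp        0      0 192.0.2.10:22           198.51.100.7:51514      ESTABLISHED
"""

ALLOWED_PORTS = {22, 5432}   # hypothetical allowlist for this host

def unexpected_listeners(netstat_text, allowed_ports):
    """Return sorted listening ports that are not in allowed_ports."""
    ports = []
    for line in netstat_text.splitlines():
        fields = line.split()
        # Keep only rows whose last column is LISTEN (skips the header too).
        if len(fields) < 6 or fields[-1] != "LISTEN":
            continue
        # The local address is the fourth column; the port follows the last colon.
        port = int(fields[3].rsplit(":", 1)[1])
        if port not in allowed_ports:
            ports.append(port)
    return sorted(ports)

print(unexpected_listeners(SAMPLE, ALLOWED_PORTS))   # [4444]
```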

Zero Trust Connectivity: NCC

### What is Google’s Network Connectivity Center?

Google’s Network Connectivity Center is a centralized platform that simplifies the management of hybrid and multi-cloud networks. It provides organizations with a unified view of their network, enabling them to connect, secure, and manage their infrastructure with ease. By leveraging Google’s global network, NCC ensures high availability, low latency, and optimized performance.

#### Unified Network Management

NCC offers a single pane of glass for managing all network connections, whether they are on-premises, in the cloud, or across different cloud providers. This unified approach reduces complexity and streamlines operations, making it easier for IT teams to maintain a cohesive network architecture.

#### Advanced Security Measures

Security is a core component of NCC. It integrates seamlessly with Google’s security services, providing advanced threat protection, encryption, and compliance monitoring. This ensures that data remains secure as it traverses the network, adhering to the principles of Zero Trust.

#### Scalability and Flexibility

One of the standout features of NCC is its scalability. Organizations can easily scale their network infrastructure to accommodate growth and changing business needs. Whether expanding to new regions or integrating additional cloud services, NCC offers the flexibility to adapt without compromising performance or security.

Zero Trust Connectivity: Private Service Connect

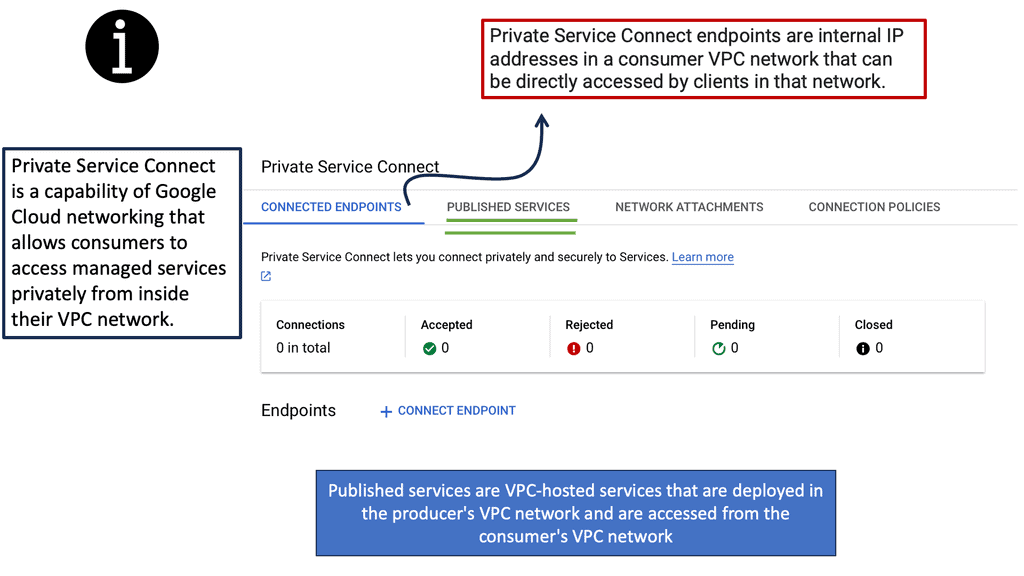

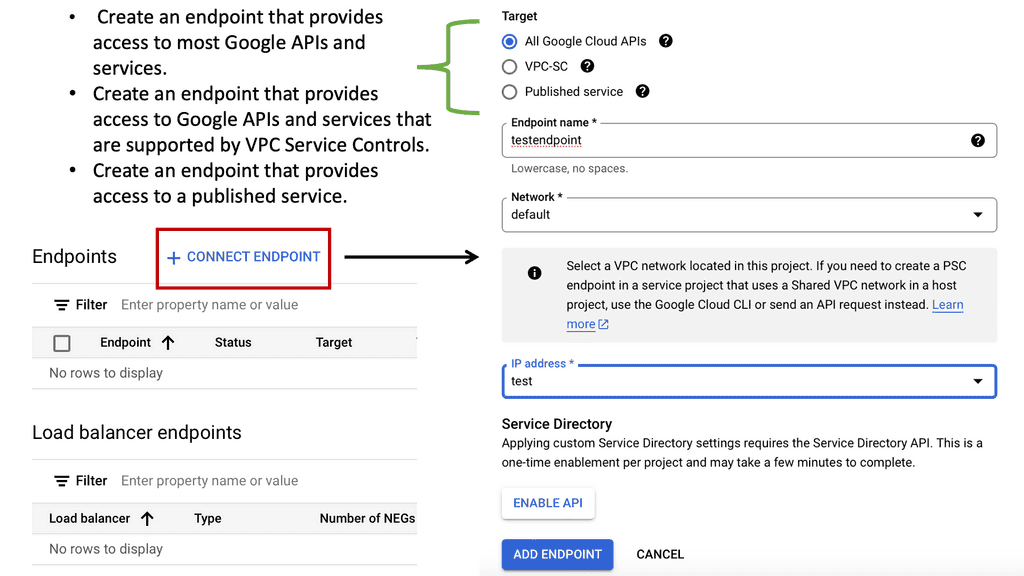

### What is Private Service Connect?

Private Service Connect is a feature offered by Google Cloud that allows users to securely connect services across different VPC networks. It leverages private IPs to ensure that data does not traverse the public internet, reducing the risk of exposure to potential threats. This service is particularly useful for organizations looking to maintain a high level of security while ensuring seamless connectivity between their cloud-based services.

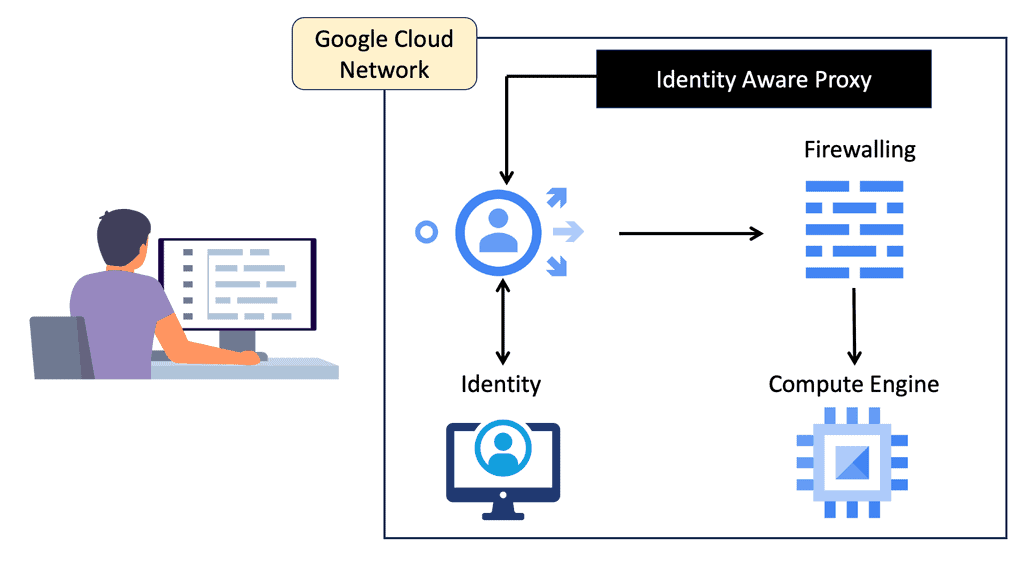

### The Role of Zero Trust in Private Service Connect

Zero trust is a security framework that operates on the principle of “never trust, always verify.” It assumes that threats can come from both inside and outside the network. Private Service Connect embodies this principle by ensuring that services are only accessible to authorized users and devices. By integrating zero trust into its framework, Private Service Connect provides an additional layer of security, ensuring that data and services remain protected.

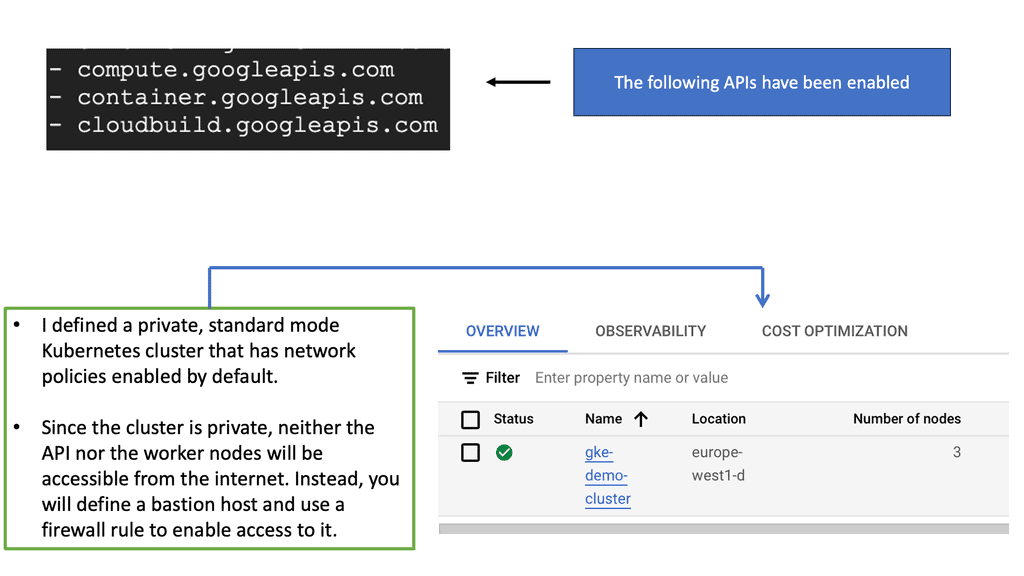

Network Policies: GKE

**Understanding the Basics of Network Policy**

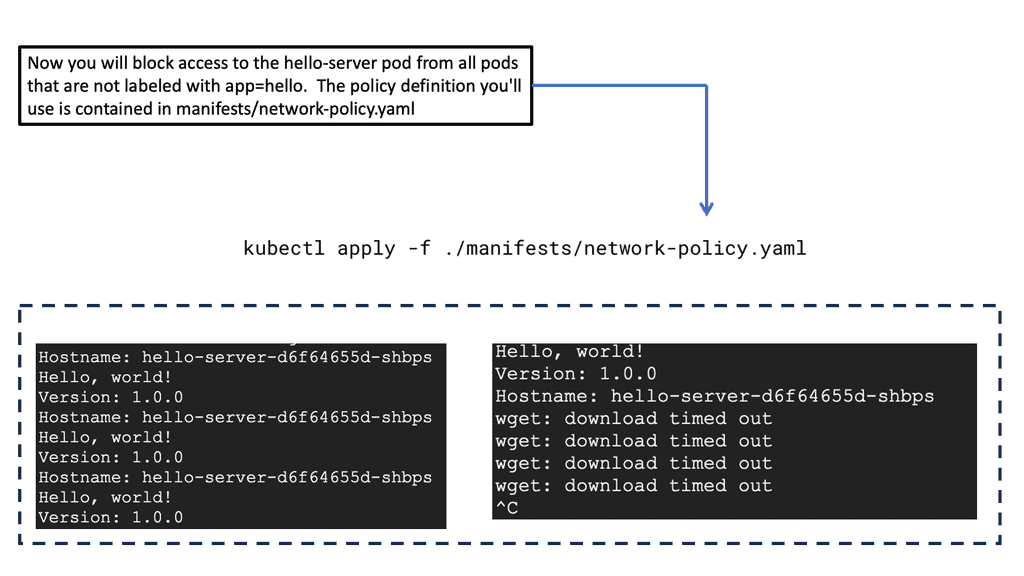

Network policies in GKE are akin to firewall rules that control the traffic flow between pods, effectively determining which pods can communicate with each other. These policies are essential for isolating applications, segmenting traffic, and protecting sensitive data. In essence, network policies provide a framework for defining how groups of pods can interact, allowing for fine-grained control over network communication.

—

**Implementing Zero Trust Network Design with GKE**

Zero trust network design is a security model that operates on the principle of “never trust, always verify.” In the context of GKE, this means that no pod should be able to communicate with another pod without explicit permission. Implementing zero trust in GKE involves carefully crafting network policies to ensure that only the necessary communication paths are open. This approach minimizes the risk of unauthorized access and lateral movement within the cluster, enhancing the overall security posture.

—

**Best Practices for Configuring Network Policies**

When configuring network policies in GKE, there are several best practices to consider. First, start by defining default deny policies to block all traffic by default, then incrementally add specific allow policies as required. It’s also important to regularly review and update these policies to reflect changes in the application architecture. Additionally, leveraging tools like Kubernetes Network Policy API can simplify the management and enforcement of these policies.
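The default-deny-then-allow pattern can be sketched by building the policy manifests as Python dictionaries and printing them as JSON, which `kubectl apply -f` accepts alongside YAML. The namespace, policy names, labels, and port below are hypothetical, and the sketch assumes network policy enforcement is enabled on the cluster. Keep in mind that denying all egress also blocks DNS lookups until a matching allow rule is added.

```python
import json

def default_deny(namespace):
    """Policy that selects every pod and allows no ingress or egress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},                      # empty selector matches all pods
            "policyTypes": ["Ingress", "Egress"],   # no rules listed, so nothing is allowed
        },
    }

def allow_ingress(namespace, name, to_labels, from_labels, port):
    """Policy that lets pods with from_labels reach pods with to_labels on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": to_labels},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": from_labels}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

policies = [
    default_deny("shop"),
    allow_ingress("shop", "frontend-to-api", {"app": "api"}, {"app": "frontend"}, 8080),
]
for policy in policies:
    print(json.dumps(policy, indent=2))
```

Starting from the deny-all policy and adding one narrowly scoped allow policy per communication path keeps each rule easy to review when the application architecture changes.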

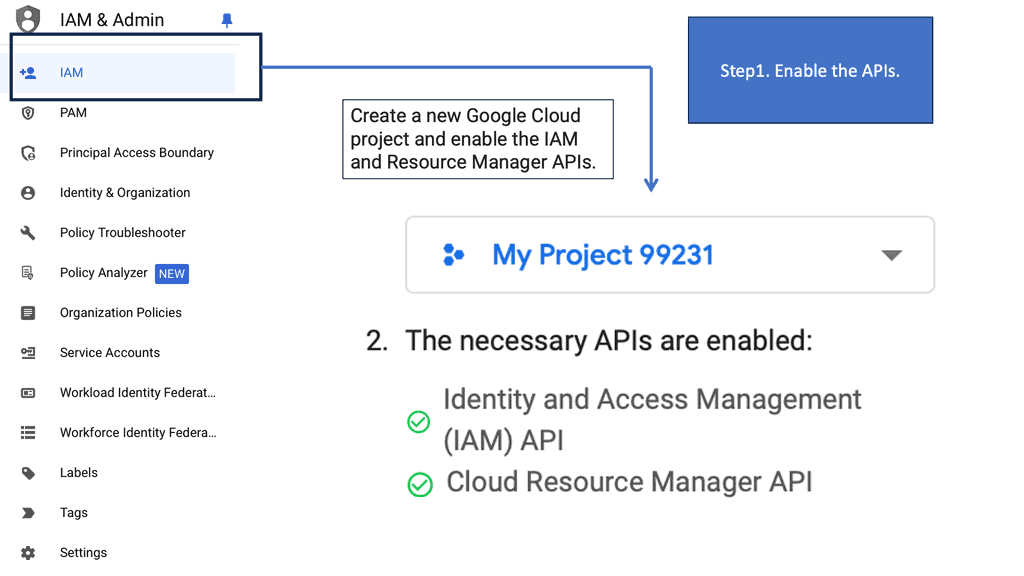

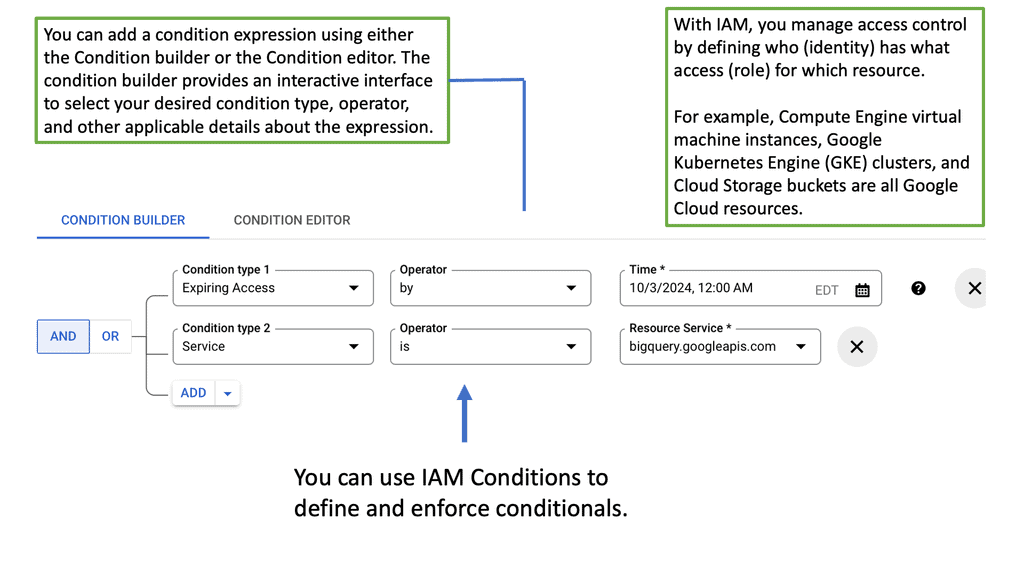

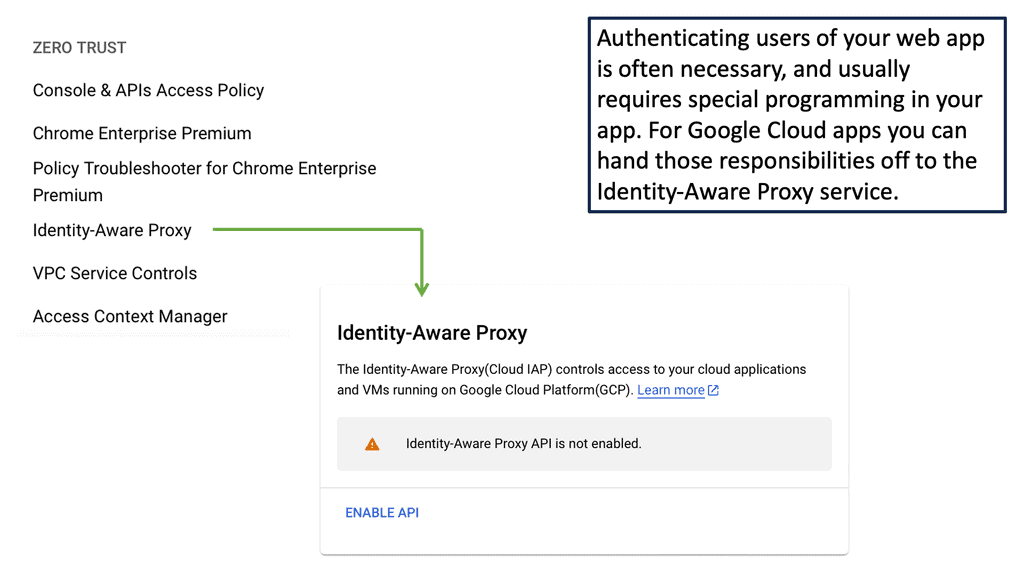

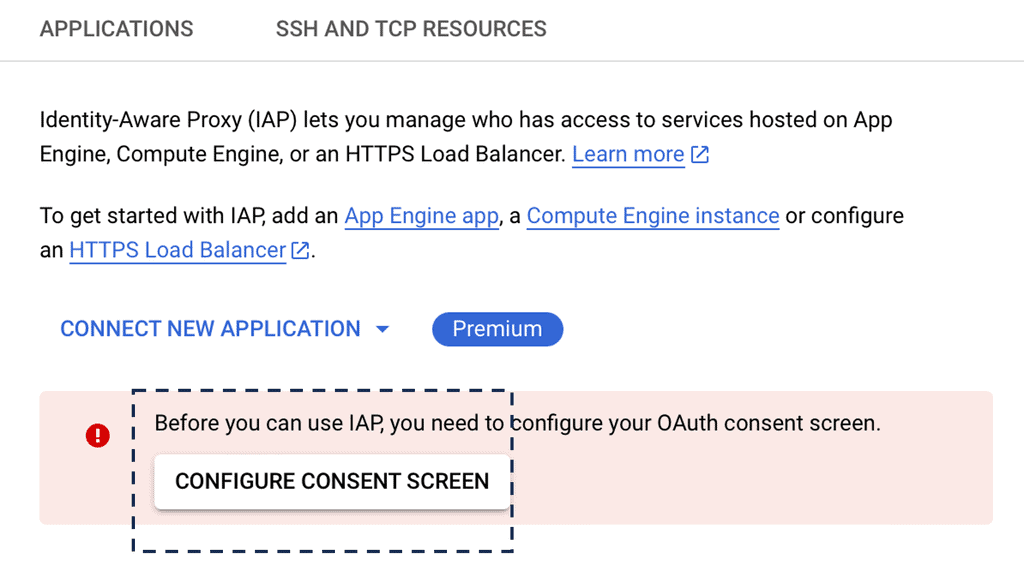

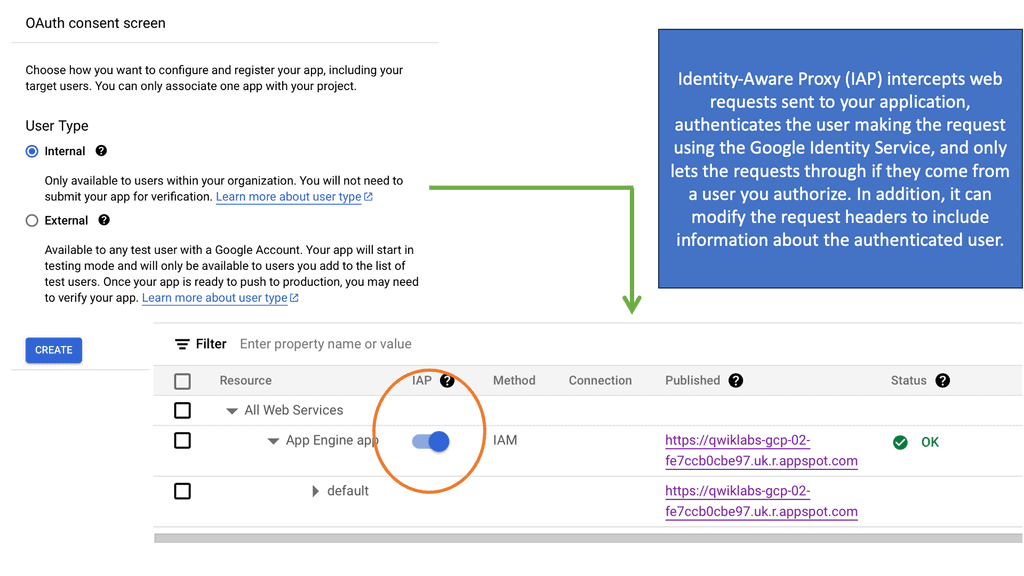

Zero Trust Google Cloud IAM

## Understanding the Basics

At its core, Google Cloud IAM allows you to define roles and permissions that determine what actions users can take with your resources. It’s a comprehensive tool that helps you manage access to Google Cloud services with precision. By assigning roles based on the principle of least privilege, you ensure that users have only the permissions they need to perform their jobs, minimizing potential security risks.

## Zero Trust Network Design

Incorporating a zero trust network design with Google Cloud IAM is an effective way to bolster security. Unlike traditional security models that rely heavily on perimeter defenses, zero trust assumes that threats could be both outside and inside the network. This approach requires strict identity verification for every person and device trying to access resources. By integrating zero trust principles, organizations can enhance their security posture and reduce the risk of unauthorized access.

## Advanced Features for Enhanced Security

Google Cloud IAM offers several advanced features that complement a zero trust strategy. These include conditional access based on attributes such as device security status and location, as well as support for multi-factor authentication. Additionally, IAM’s audit logs provide comprehensive visibility into who accessed what, when, and how, allowing for thorough monitoring and quick incident response.

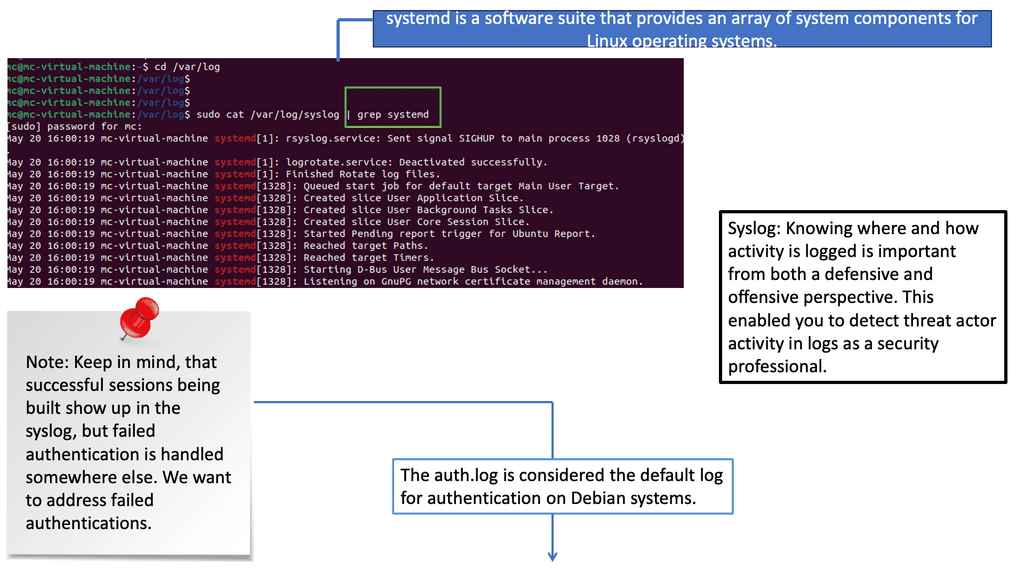

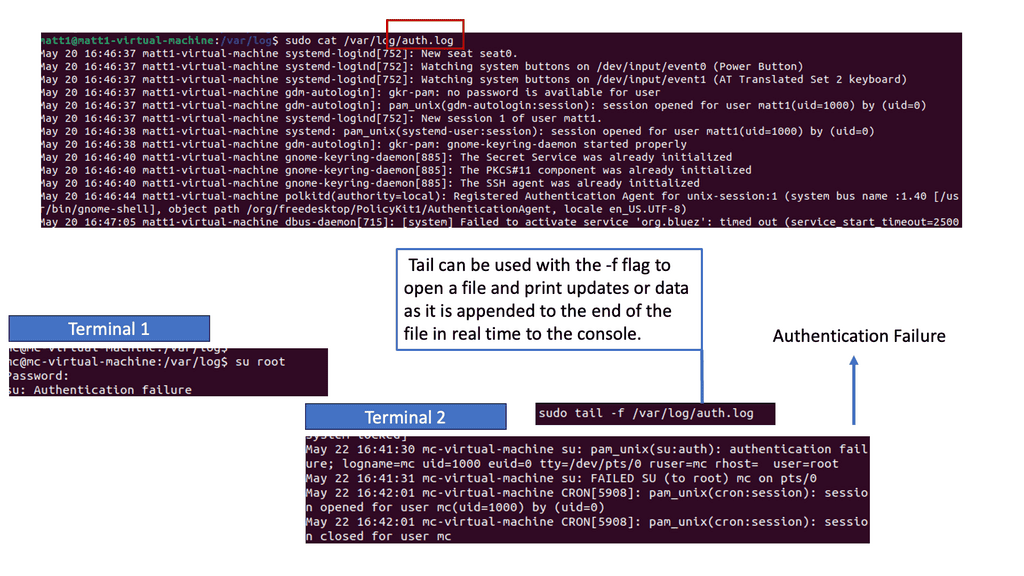

Detecting Authentication Failures in Logs

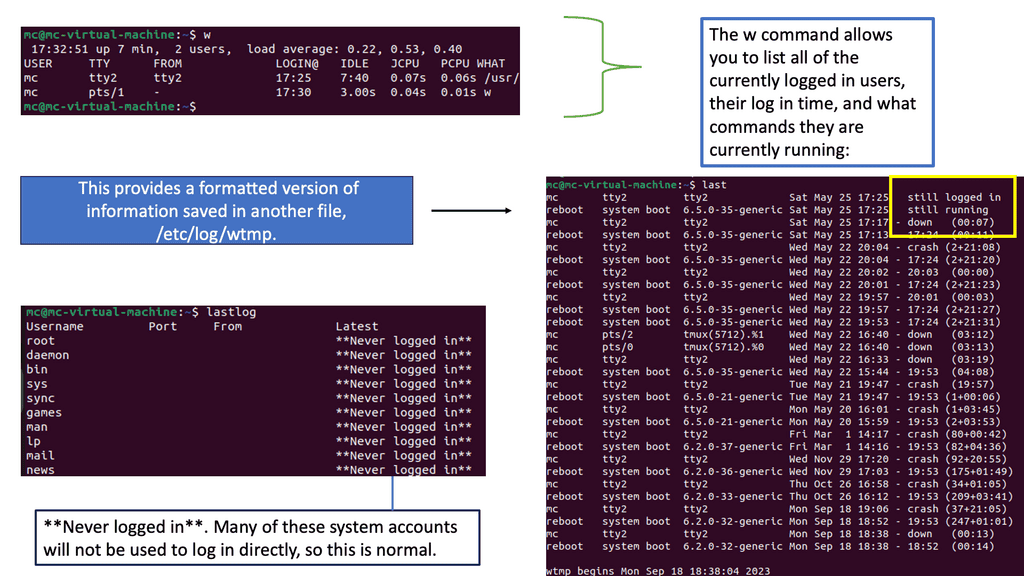

Understanding Log Analysis

Log analysis is the process of examining log data to extract meaningful insights and identify potential security events. Logs act as a digital trail, capturing valuable information about system activities, user actions, and network traffic. By carefully analyzing logs, security teams can detect anomalies, track user behavior, and uncover potential threats lurking in the shadows.

Syslog is a standard protocol for message logging. It allows various devices and applications to send log messages to a central logging server. Syslog provides a standardized format, which makes it easier to aggregate and analyze logs from different sources. Syslog messages contain essential details such as timestamps, log levels, and source IP addresses, which are crucial for detecting security events.

Auth.log, or the authentication log, is a log file that records authentication-related events on many Linux systems (for example, /var/log/auth.log on Debian and Ubuntu). It includes valuable information about user logins, failed login attempts, and other authentication activities. Analyzing auth.log can help identify brute-force attacks, unauthorized access attempts, and potential security breaches targeting user accounts.
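To make the brute-force case concrete, here is a small Python sketch that counts failed SSH password attempts per source IP. The sample lines follow the usual OpenSSH message format but are invented for illustration, and the threshold of three failures is an arbitrary assumption that would need tuning in practice.

```python
import re
from collections import Counter

# Matches OpenSSH failure lines, with or without the "invalid user" prefix.
FAILED = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")

# Invented sample lines in the usual auth.log layout.
SAMPLE_LOG = """\
Jan 10 12:00:01 web1 sshd[1001]: Failed password for invalid user admin from 203.0.113.5 port 40001 ssh2
Jan 10 12:00:03 web1 sshd[1001]: Failed password for root from 203.0.113.5 port 40002 ssh2
Jan 10 12:00:05 web1 sshd[1001]: Failed password for root from 203.0.113.5 port 40003 ssh2
Jan 10 12:01:00 web1 sshd[1002]: Accepted password for alice from 198.51.100.7 port 51000 ssh2
Jan 10 12:02:00 web1 sshd[1003]: Failed password for alice from 198.51.100.7 port 51001 ssh2
"""

def failed_logins_by_ip(log_text):
    """Count failed password attempts per source IP."""
    counts = Counter()
    for line in log_text.splitlines():
        match = FAILED.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts

def suspected_brute_force(log_text, threshold=3):
    """Return source IPs with at least `threshold` failed attempts."""
    counts = failed_logins_by_ip(log_text)
    return sorted(ip for ip, n in counts.items() if n >= threshold)

print(suspected_brute_force(SAMPLE_LOG))   # ['203.0.113.5']
```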

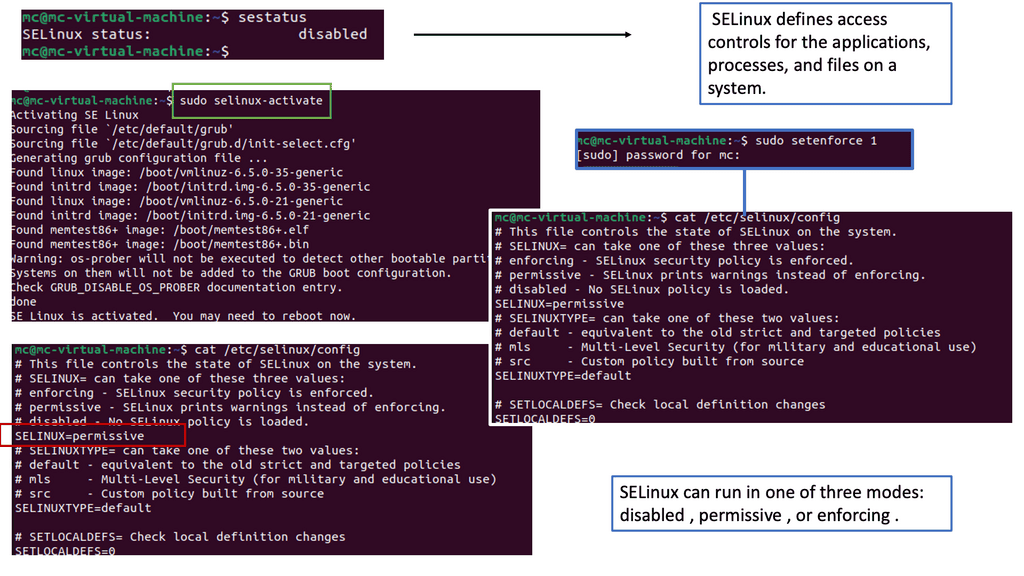

Understanding SELinux

SELinux is a security framework built into the Linux kernel that provides Mandatory Access Control (MAC) policies. Unlike traditional discretionary access control (DAC), which relies on user permissions, SELinux focuses on controlling access based on the security context of processes and resources. This means that even if an attacker gains unauthorized access to a system, SELinux can prevent them from compromising the entire system.

Implementing SELinux

To implement zero trust endpoint security with SELinux, organizations should start by defining security policies that align with their specific needs. These policies should enforce strict access controls, limit privileges, and define fine-grained permissions for processes and resources. By doing so, organizations can ensure that even if an endpoint is compromised, the attacker’s ability to move laterally within the network is significantly restricted.
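The difference between discretionary and mandatory checks can be illustrated with a toy model of type enforcement. This is not how SELinux is configured (real policy is written in its own policy language); the rule set and function names below are hypothetical and only mirror the idea that access needs an explicit allow rule for the process type, the target type, the object class, and the permission.

```python
# Hypothetical allow rules in the spirit of SELinux type enforcement:
# (source type, target type, object class, permission)
ALLOW_RULES = {
    ("httpd_t", "httpd_sys_content_t", "file", "read"),
    ("httpd_t", "httpd_log_t", "file", "append"),
}

def mac_allows(source_type, target_type, obj_class, perm):
    """Default deny: access is granted only if an explicit rule exists."""
    return (source_type, target_type, obj_class, perm) in ALLOW_RULES

def access(dac_allows, source_type, target_type, obj_class, perm):
    """Both the discretionary check and the mandatory check must pass."""
    return dac_allows and mac_allows(source_type, target_type, obj_class, perm)

# A web server process reading its own content is allowed.
print(access(True, "httpd_t", "httpd_sys_content_t", "file", "read"))   # True
# Even with file permissions that would allow it, reading the password
# database is denied because no rule covers that type pair.
print(access(True, "httpd_t", "shadow_t", "file", "read"))              # False
```

The second call shows the point made above: a compromised process stays confined to what its security context permits, regardless of the user permissions it holds.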

Zero Trust Networking with Cloud Service Mesh

## What is a Cloud Service Mesh?

At its core, a Cloud Service Mesh is a configurable infrastructure layer for microservices applications that makes communication between service instances flexible, reliable, and observable. It decouples network and security policies from the application code, allowing developers to focus on their core functionality without worrying about the intricacies of service-to-service communication. Essentially, it acts as a dedicated layer for managing service-to-service communications, offering features like load balancing, service discovery, retries, and circuit breaking.

## The Benefits of Implementing a Cloud Service Mesh

Implementing a Cloud Service Mesh offers numerous benefits that streamline operations and enhance security:

1. **Enhanced Observability**: It provides deep insights into service behavior with monitoring and tracing capabilities, helping to quickly identify and resolve issues.

2. **Improved Security**: By enforcing security policies like mutual TLS and fine-grained access control, it ensures secure service-to-service communication.

3. **Resilience and Reliability**: Features like automatic retries, circuit breaking, and load balancing ensure that services remain resilient and available, even in the face of failures.

4. **Operational Simplicity**: By offloading the complexities of service management to the mesh, developers can focus on business logic, speeding up development cycles.

### Cloud Service Mesh and Zero Trust Networks

The concept of Zero Trust Networks (ZTN) revolves around the principle of “never trust, always verify.” In a ZTN, every request, whether it originates inside or outside the network, must be authenticated and authorized. Cloud Service Meshes align perfectly with ZTN principles by providing robust security features:

– **Mutual TLS**: Ensures that all communication between services is encrypted and authenticated.

– **Fine-Grained Policy Control**: Allows administrators to define and enforce policies at a granular level, ensuring that only authorized services can communicate.

Google has been at the forefront of integrating Cloud Service Mesh technology with Zero Trust principles. The Istio service mesh, which Google helped create, offers robust security features that align with Zero Trust guidelines, making it a preferred choice for organizations looking to enhance their security posture.
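In a service mesh, mutual TLS is normally handled by the mesh's proxies rather than by application code. To show what "mutual" means in practice, the sketch below uses Python's standard `ssl` module to build a server-side context that refuses clients without a valid certificate. The function name and the file path parameters are assumptions; no certificates are loaded unless paths are supplied.

```python
import ssl

def mutual_tls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that requires a client certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail if the client presents
    # no certificate or one that does not chain to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)                  # CAs trusted to sign clients
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # the server's own identity
    return ctx

ctx = mutual_tls_server_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)   # True
```

With plain TLS only the server proves its identity; setting `verify_mode` to `CERT_REQUIRED` on the server side is what turns the handshake into a two-way verification.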

### Google’s Contribution to Cloud Service Mesh

Google has played a significant role in advancing Cloud Service Mesh technology. Istio, the open-source service mesh that Google co-founded with IBM and Lyft, has become a cornerstone in the industry. Istio simplifies service management by providing a uniform way to secure, connect, and monitor microservices. It integrates seamlessly with Kubernetes, making it an ideal choice for cloud-native applications. Google’s emphasis on security, observability, and operational efficiency in Istio reflects its commitment to fostering innovation in cloud technologies.

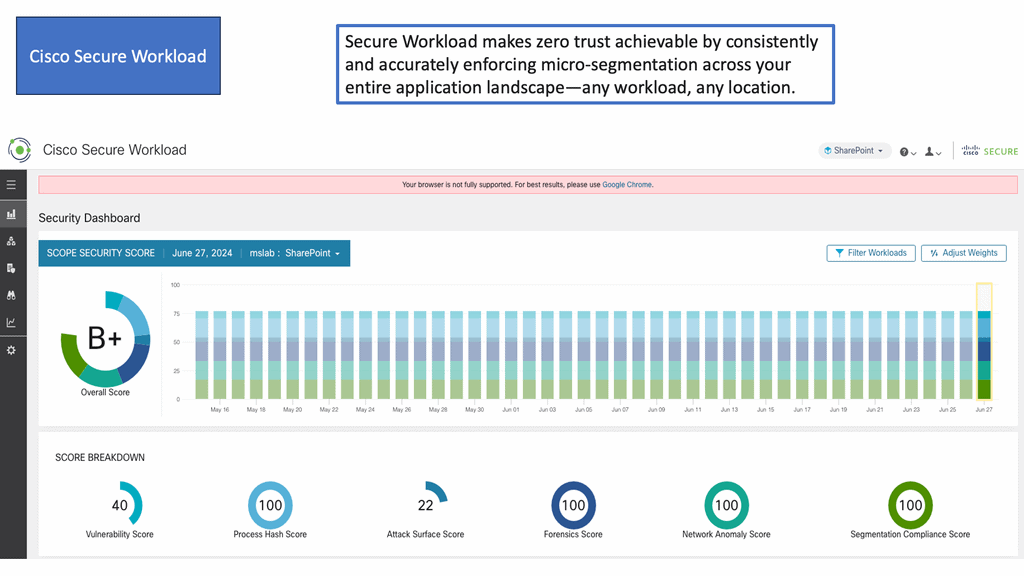

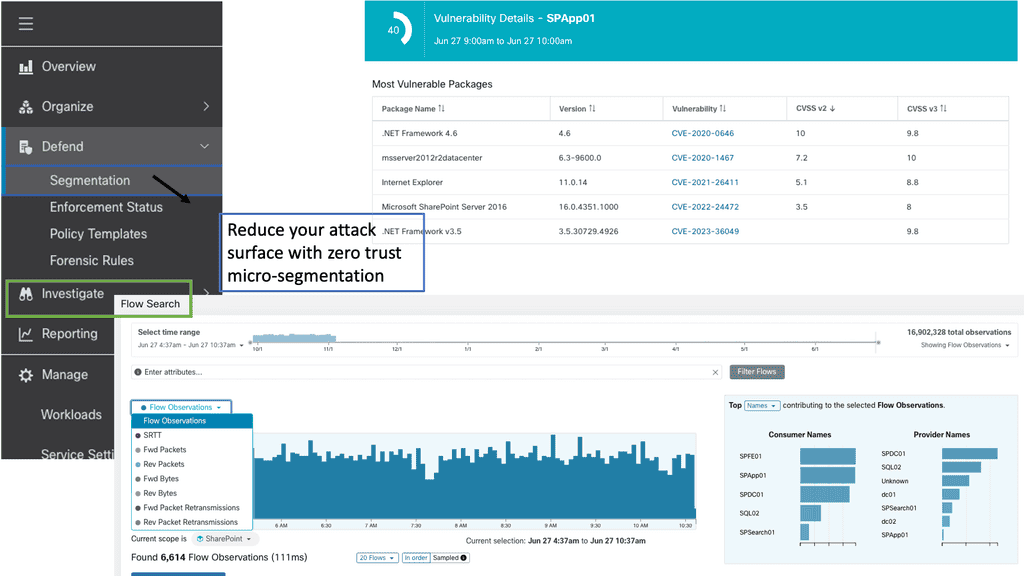

Example Product: Cisco Secure Workload

### What is Cisco Secure Workload?

Cisco Secure Workload is a comprehensive security solution that provides visibility, micro-segmentation, and workload protection for applications across multi-cloud environments. It leverages advanced analytics and machine learning to identify and mitigate threats, ensuring that your workloads remain secure, whether they are on-premises, in the cloud, or in hybrid environments.

#### 1. Enhanced Visibility

One of the standout features of Cisco Secure Workload is its ability to provide unparalleled visibility into your network. It offers real-time insights into application dependencies, communications, and behaviors, allowing you to detect anomalies and potential threats swiftly.

#### 2. Micro-Segmentation

Micro-segmentation is a critical component of modern security strategies. Cisco Secure Workload enables fine-grained segmentation of workloads, reducing the attack surface and preventing lateral movement of threats within your network. This granular approach to segmentation ensures that even if a threat breaches one segment, it cannot easily spread to others.

#### 3. Automated Policy Enforcement

Maintaining consistent security policies across diverse environments can be challenging. Cisco Secure Workload simplifies this process through automated policy enforcement. By defining security policies centrally, you can ensure they are uniformly applied across all workloads, reducing the risk of misconfigurations and human errors.

### How Cisco Secure Workload Works

#### 1. Data Collection

Cisco Secure Workload starts by collecting data from various sources within your network. This includes telemetry data from workloads, network traffic, and existing security tools. This data is then analyzed to create a comprehensive map of your application environment.

#### 2. Behavior Analysis

Using machine learning and advanced analytics, Cisco Secure Workload analyzes the collected data to identify normal and abnormal behaviors. This analysis helps in detecting potential threats and vulnerabilities that traditional security tools might miss.

#### 3. Threat Detection and Response

Once potential threats are identified, Cisco Secure Workload provides actionable insights and automated responses to mitigate these threats. This proactive approach ensures that your workloads remain protected even as new threats emerge.

### Real-World Applications

#### 1. Financial Services

Financial institutions handle sensitive data and are prime targets for cyberattacks. Cisco Secure Workload helps these organizations secure their workloads, ensuring compliance with regulatory requirements and protecting customer data from breaches.

#### 2. Healthcare

In the healthcare sector, patient data security is of utmost importance. Cisco Secure Workload provides healthcare organizations with the tools they need to protect electronic health records (EHRs) and ensure HIPAA compliance.

#### 3. Retail

Retailers face unique challenges with high transaction volumes and diverse IT environments. Cisco Secure Workload helps retailers secure their transactional data, protect customer information, and prevent fraud.

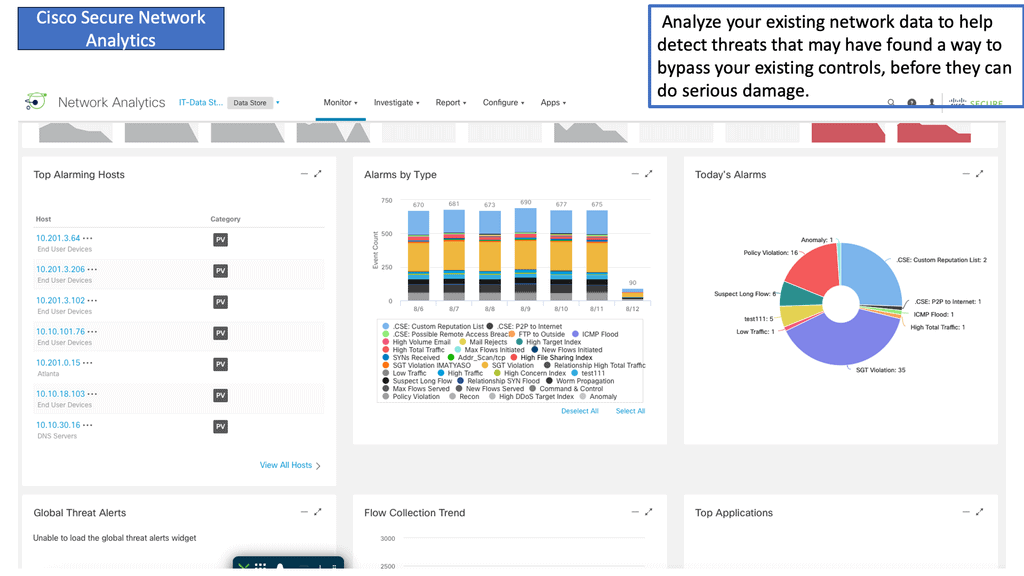

Example Product: Cisco Secure Network Analytics

Cisco Secure Network Analytics offers a plethora of features that make it stand out in the crowded cybersecurity market. Here are some of the core functionalities:

– **Comprehensive Network Visibility**: Cisco SNA provides a complete view of all network traffic, allowing you to see what’s happening across your entire infrastructure. This visibility is crucial for identifying potential threats and understanding normal network behavior.

– **Advanced Threat Detection**: Utilizing machine learning and behavioral analytics, Cisco SNA can detect anomalies that may indicate a security breach. This proactive approach helps in identifying threats before they can cause significant damage.

– **Automated Response and Mitigation**: When a threat is detected, Cisco SNA can automatically respond by triggering predefined actions, such as isolating affected devices or blocking malicious traffic. This automation ensures a swift and efficient response to security incidents.

### Benefits of Implementing Cisco Secure Network Analytics

Implementing Cisco Secure Network Analytics offers numerous benefits to organizations of all sizes. Some of the key advantages include:

– **Reduced Mean Time to Detect (MTTD) and Respond (MTTR)**: With its advanced detection and automated response capabilities, Cisco SNA significantly reduces the time it takes to identify and mitigate threats. This rapid response is crucial for minimizing the impact of security incidents.

– **Enhanced Network Performance**: By providing detailed insights into network traffic, Cisco SNA helps organizations optimize their network performance. This optimization leads to improved efficiency and reduced downtime.

– **Regulatory Compliance**: Many industries are subject to strict regulatory requirements regarding data protection and network security. Cisco SNA helps organizations meet these compliance standards by providing detailed audit trails and reporting capabilities.

### Real-World Applications of Cisco Secure Network Analytics

Cisco Secure Network Analytics is versatile and can be applied across various industries and use cases. Here are a few examples:

– **Financial Services**: Banks and financial institutions can use Cisco SNA to protect sensitive customer information and prevent fraud. The tool’s advanced threat detection capabilities are particularly valuable in identifying and stopping sophisticated cyber-attacks.

– **Healthcare**: In the healthcare sector, protecting patient data is paramount. Cisco SNA helps healthcare providers secure their networks against breaches and ensure compliance with regulations such as HIPAA.

– **Education**: Educational institutions can benefit from Cisco SNA by safeguarding student and faculty data. The tool also helps in maintaining the integrity of online learning platforms and preventing disruptions.


Zero Trust Network Design

**Issue 1 – We Connect First and Then Authenticate**

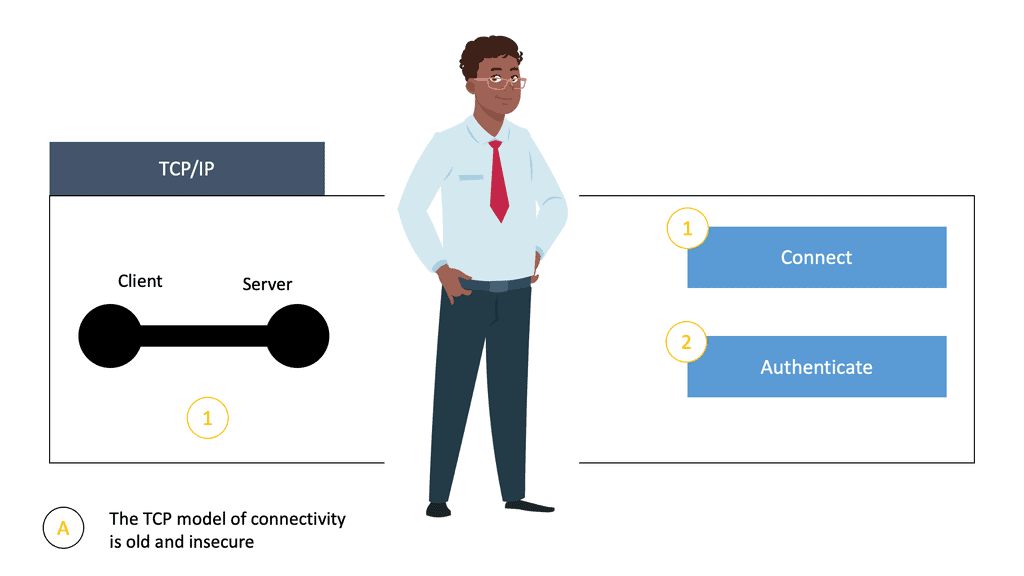

- Connect first, authenticate second.

TCP/IP is a fundamentally open network protocol facilitating easy connectivity and reliable communications between distributed computing nodes. It has served us well in enabling our hyper-connected world but—for various reasons—doesn’t include security as part of its core capabilities.

- TCP has a weak security foundation

Transmission Control Protocol (TCP) has been around for decades and has a weak security foundation. When it was created, security was out of scope. TCP can detect and retransmit lost or corrupted packets, but by default the packets it carries are not encrypted, which poses security risks.

In addition, TCP operates with a Connect First, Authenticate Second model, which is inherently insecure. It leaves the two connecting parties wide open for an attack. When clients want to communicate and access an application, they first set up a connection.

The authentication stage occurs only once the connect stage has been completed. Once the authentication stage has been completed, we can pass the data.

From a security perspective, the most important thing to understand is that this connection occurs purely at a network layer with no identity, authentication, or authorization. The beauty of this model is that it enables anyone with a browser to easily connect to any public web server without requiring any upfront registration or permission. This is a perfect approach for a public web server but a lousy approach for a private application.

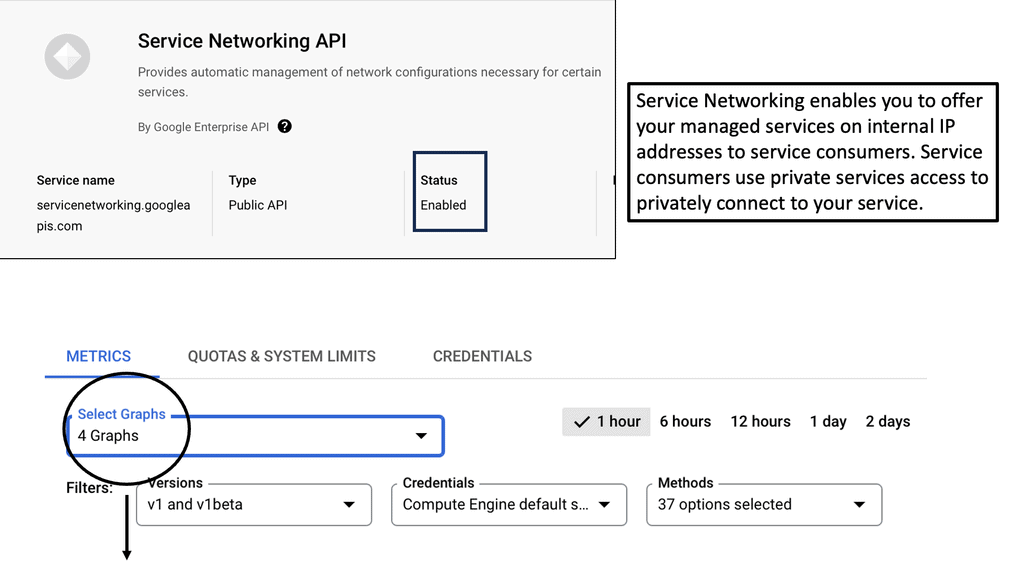

Zero Trust Connectivity: Service Networking APIs

**Understanding Zero Trust: A Paradigm Shift in Security**

In the context of service networking APIs, zero trust ensures that only authorized users and devices can interact with the APIs, reducing the risk of unauthorized access and data breaches. Implementing zero trust can significantly enhance the security posture of an organization, safeguarding sensitive data and maintaining user trust.

**Integrating Google Cloud and Zero Trust for Enhanced API Security**

Combining Google Cloud’s robust platform with zero trust principles creates a powerful synergy for securing service networking APIs. Google Cloud’s identity and access management tools, such as Cloud Identity and Access Management (IAM), work seamlessly within a zero trust framework to enforce strict authentication and authorization policies. By leveraging these tools, organizations can create a secure environment where APIs are protected from potential threats, and data is kept confidential and integral.

**The potential for malicious activity**

With this process of Connect First and Authenticate Second, we are essentially opening up the door of the network and the application without knowing who is on the other side. Unfortunately, with this model, we have no idea who the client is until they have carried out the connect phase, and once they have connected, they are already in the network. Maybe the requesting client is not trustworthy and has bad intentions. If so, once they connect, they can carry out malicious activity and potentially perform data exfiltration.

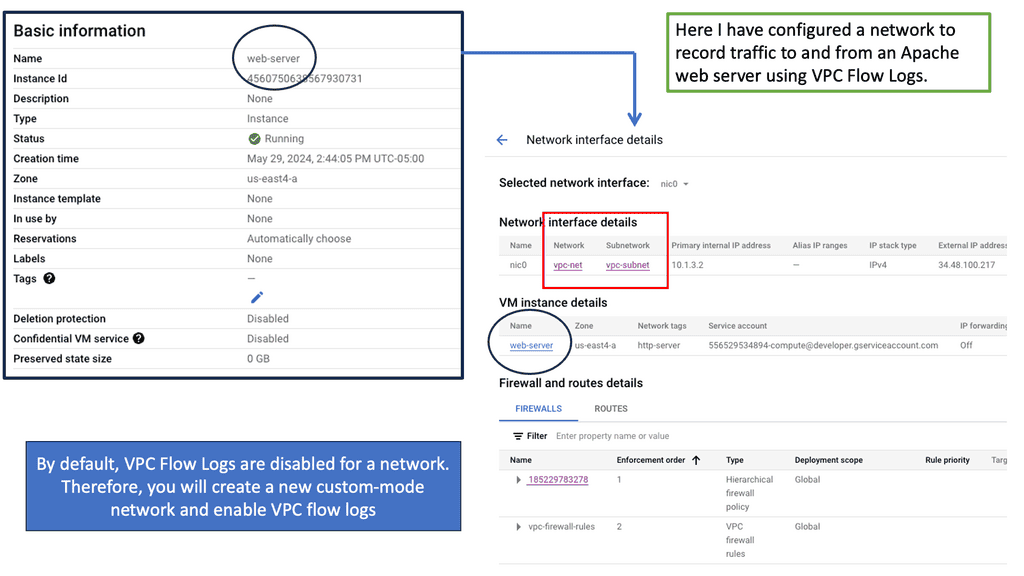

What is Network Monitoring?

Network monitoring is the practice of observing and analyzing network components and traffic to identify anomalies or performance issues. It uses specialized software and tools that provide real-time insights into network health, bandwidth utilization, device status, and more. By actively monitoring the network infrastructure, businesses can proactively detect and resolve issues before they escalate.

Network monitoring plays a pivotal role in safeguarding sensitive data from external threats. By monitoring network traffic for any suspicious activities or unauthorized access attempts, IT teams can quickly detect and respond to potential security breaches. Additionally, monitoring network devices for vulnerabilities and applying necessary patches and updates ensures a robust defense against cyber threats.

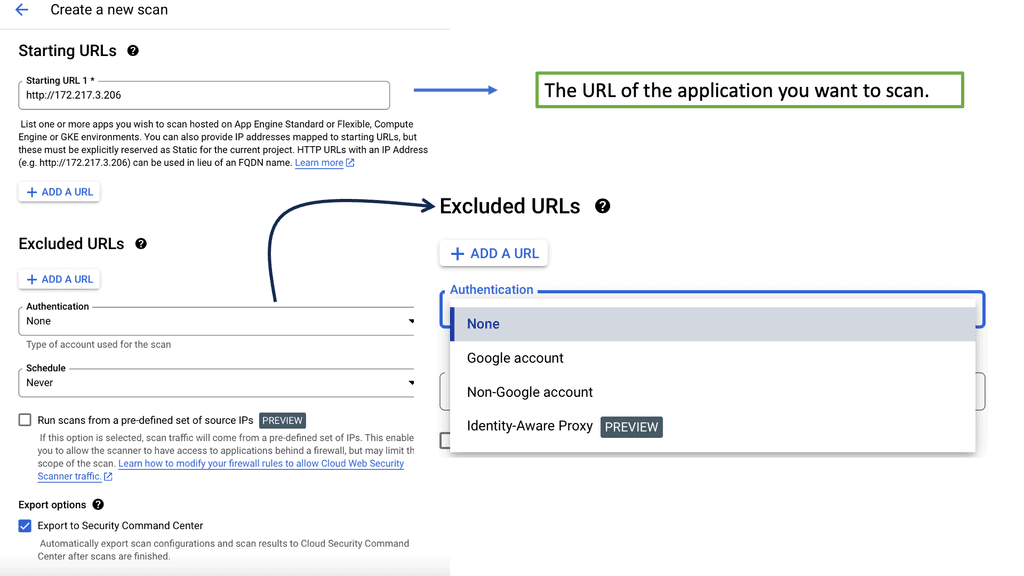

**Understanding Network Scanning**

Network scanning, at its core, involves systematically examining a network to identify its assets, configurations, and potential vulnerabilities. By employing various scanning techniques, security professionals can understand the network’s structure and potential risks.

Different methodologies for conducting network scanning exist, each catering to specific objectives. Passive scanning, for instance, focuses on observing network traffic without actively engaging with devices. On the other hand, active scanning involves sending requests to network devices to gather information about their configurations and potential vulnerabilities.

Numerous powerful tools are available to aid in network scanning endeavors. From widely used tools like Nmap and Wireshark to more specialized ones like Nessus and OpenVAS, the selection of tools depends on the desired scanning approach and the level of detail required. These tools provide many features, including port scanning, vulnerability assessment, and network mapping capabilities.
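Active scanning in its simplest form is a TCP connect scan: try to open a connection to each port and note which attempts succeed. The Python sketch below is far more limited than the tools named above and is only meant to show the mechanism. The function name is an assumption, and the demo targets a throwaway listener on the loopback interface.

```python
import socket

def scan_ports(host, ports, timeout=0.5):
    """Return the ports on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports

# Demo against a temporary listener on the loopback interface.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))      # port 0 lets the OS pick a free port
listener.listen(1)
demo_port = listener.getsockname()[1]
print(scan_ports("127.0.0.1", [demo_port]) == [demo_port])   # True
listener.close()
```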

Additional Information on Network Mapping

Example: Identifying and Mapping Networks

To troubleshoot the network effectively, you can use a range of tools. Some are built into the operating system, while others must be downloaded and run. Depending on your experience, you may choose a top-down or a bottom-up approach.

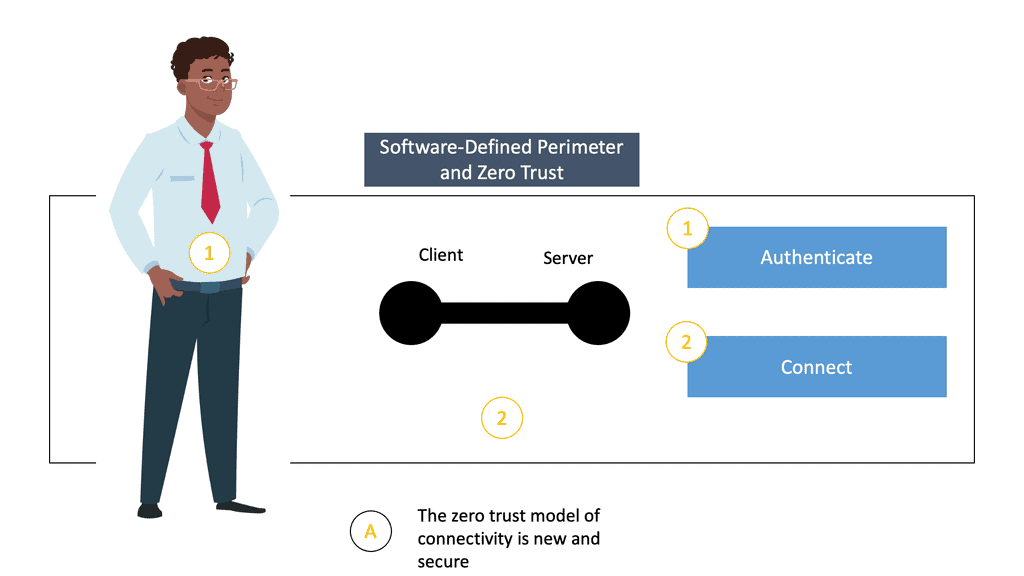

**Developing a Zero Trust Architecture**

A zero-trust architecture requires endpoints to authenticate and be authorized before obtaining network access to protected servers. Then, real-time encrypted connections are created between requesting systems and application infrastructure. With a zero-trust architecture, we must establish trust between the client and the application before the client can set up the connection. Zero Trust is all about trust – never trust, always verify.

Trust is bidirectional: between the client and the Zero Trust architecture (which can take several forms), and between the application and the Zero Trust architecture. It’s not a one-time check; it’s a continuous mode of operation. Once sufficient trust has been established, we move into the next stage, authentication. Once authentication has been completed, we can connect the user to the application. Zero Trust access events flip the entire security model and make it more robust.

- We have gone from connecting first and authenticating second to authenticating first and connecting second.

Example of a zero-trust network access

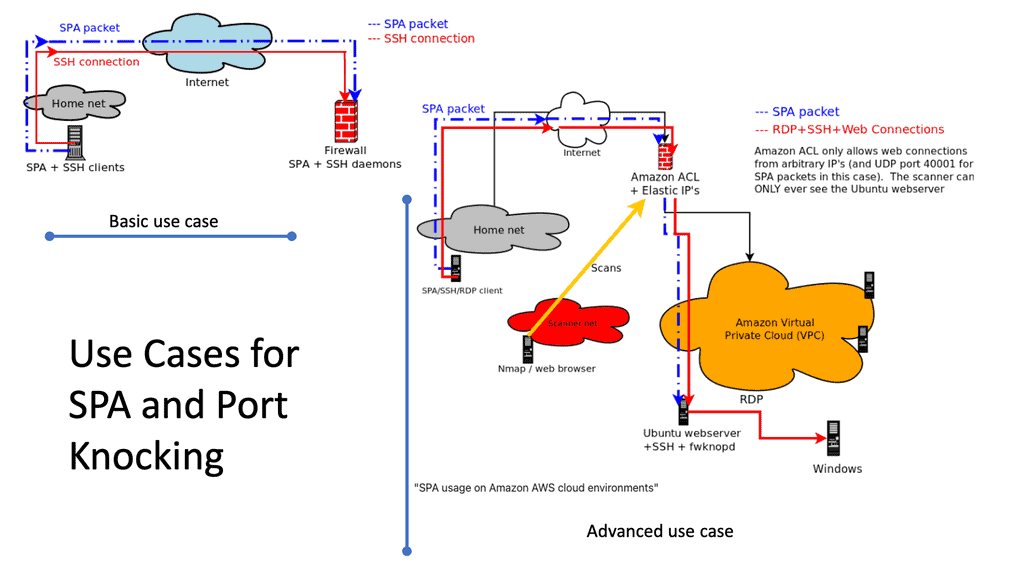

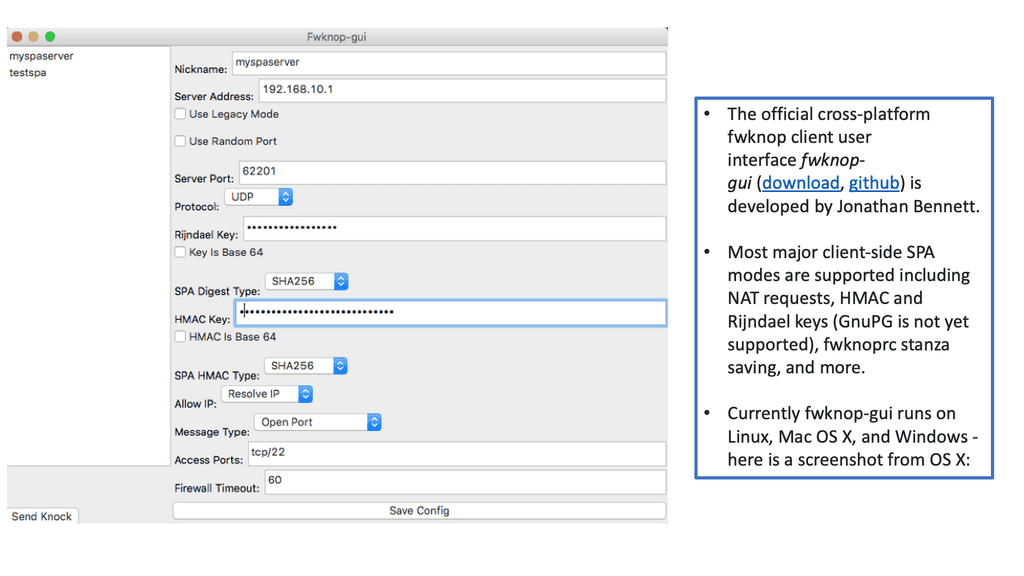

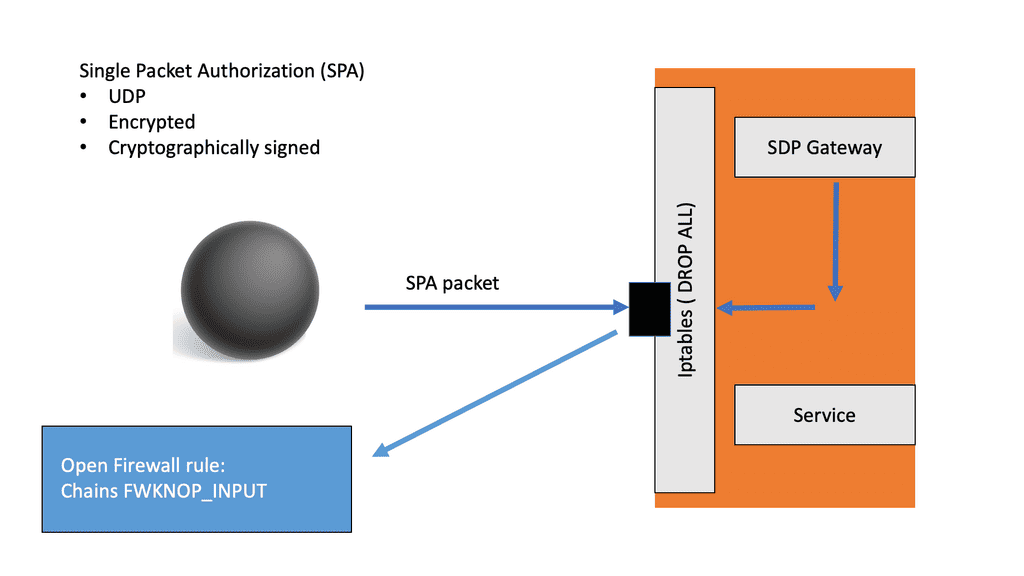

A. Single Packet Authorization (SPA)

The user cannot see or know where the applications are located. A software-defined perimeter (SDP) hides the application and creates a “dark” network by using Single Packet Authorization (SPA) for the authorization.

SPA, also known as Single Packet Authentication, aims to overcome the open and insecure nature of TCP/IP, which follows a "connect then authenticate" model. SPA is a lightweight security protocol that validates a device or user's identity before permitting network access to the SDP. The purpose of SPA is to allow a service to be darkened via a default-deny firewall.

The system uses a One-Time Password (OTP) generated by an algorithm and embeds the current password in the initial network packet sent from the client to the server. The SDP specification mentions using the SPA packet after establishing a TCP connection. In contrast, the open-source implementation from the creators of SPA uses a UDP packet before the TCP connection.
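The idea of embedding a one-time password in a single initial packet can be sketched as follows. This is a minimal illustration, not the packet format of the SDP specification or of any real SPA implementation: the shared secret, the 30-second time step, the payload layout, and the function names are all assumptions made for the example.

```python
import hashlib
import hmac
import struct
from typing import Dict, Optional

# Hypothetical values for illustration; a real deployment provisions a secret per client.
SHARED_SECRET = b"demo-shared-secret"
TIME_STEP = 30  # seconds one OTP stays valid (assumption)


def one_time_password(secret: bytes, counter: int) -> bytes:
    """Derive a one-time value from a shared secret and a moving counter."""
    return hmac.new(secret, struct.pack(">Q", counter), hashlib.sha256).digest()


def build_spa_packet(client_id: str, secret: bytes, now: float) -> bytes:
    """Client side: pack the identity and the current OTP into one datagram payload."""
    ident = client_id.encode("utf-8")
    counter = int(now // TIME_STEP)
    return struct.pack(">B", len(ident)) + ident + one_time_password(secret, counter)


def verify_spa_packet(packet: bytes, secrets: Dict[str, bytes], now: float) -> Optional[str]:
    """Server side: return the client ID if the packet is valid, else None (stay silent)."""
    if not packet:
        return None
    id_len = packet[0]
    try:
        client_id = packet[1:1 + id_len].decode("utf-8")
    except UnicodeDecodeError:
        return None
    otp = packet[1 + id_len:]
    secret = secrets.get(client_id)
    if secret is None:
        return None
    counter = int(now // TIME_STEP)
    # Accept the current and the previous time step to tolerate small clock drift.
    for candidate in (counter, counter - 1):
        if hmac.compare_digest(otp, one_time_password(secret, candidate)):
            return client_id
    return None
```

A server built this way never answers an invalid packet, which is what keeps the service "dark". The sketch omits replay protection and payload encryption, both of which a production design would need.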

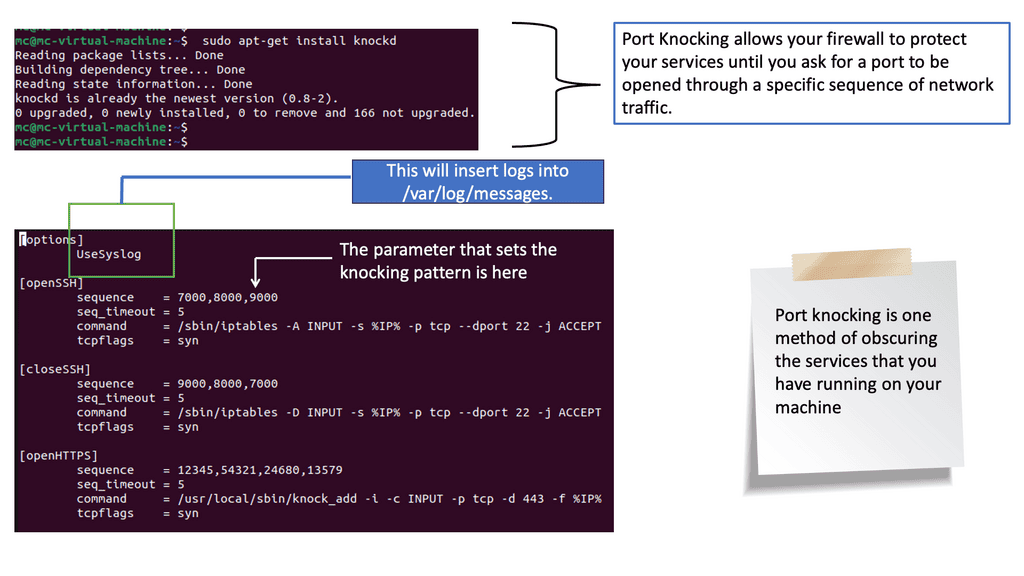

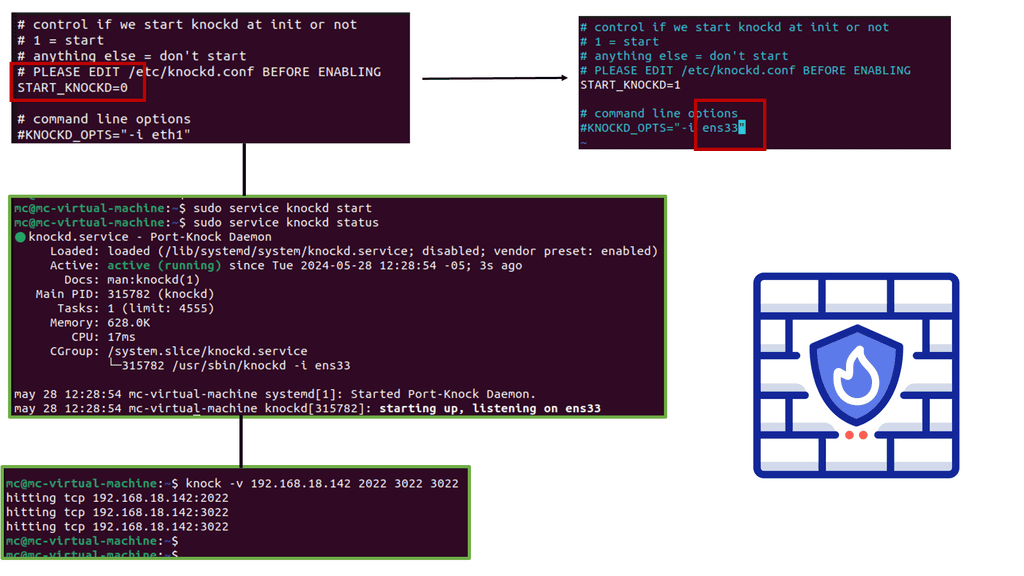

B. Understanding Port Knocking

At its core, port knocking is an access control method that conceals open ports on a server. Instead of leaving ports visibly open and vulnerable to attackers, port knocking requires a sequence of connection attempts to predefined closed ports. Once the correct sequence is detected, the server dynamically opens the desired port and allows access. This covert approach adds an extra layer of protection, making it an intriguing choice for those seeking to fortify their network security.

Implementing port knocking within a zero-trust framework can significantly enhance your network security. By obscuring open ports and allowing access only to authorized users who possess the correct port-knocking sequence, potential attackers face an additional barrier to overcome. This technique effectively reduces the attack surface and minimizes the risk of unauthorized access, making it an invaluable tool for security-conscious individuals and organizations.
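The sequence-detection logic described above can be sketched as a small state machine. This is an illustrative model only: the class name, the example ports, and the reset behavior on a wrong knock are assumptions, and a real port-knocking daemon would read connection attempts from firewall logs or packet capture and then modify firewall rules.

```python
from typing import Dict, Sequence, Set


class KnockTracker:
    """Tracks each source's progress through a secret sequence of closed ports."""

    def __init__(self, sequence: Sequence[int]) -> None:
        if not sequence:
            raise ValueError("knock sequence must not be empty")
        self.sequence = list(sequence)
        self.progress: Dict[str, int] = {}  # source IP -> number of correct knocks so far
        self.allowed: Set[str] = set()      # sources that completed the sequence

    def knock(self, source_ip: str, port: int) -> bool:
        """Record one connection attempt; return True once the source is allowed in."""
        if source_ip in self.allowed:
            return True
        position = self.progress.get(source_ip, 0)
        if port == self.sequence[position]:
            position += 1
        else:
            # A wrong port resets progress, unless it is itself a valid first knock.
            position = 1 if port == self.sequence[0] else 0
        if position == len(self.sequence):
            self.progress.pop(source_ip, None)
            self.allowed.add(source_ip)
            return True
        self.progress[source_ip] = position
        return False
```

For example, with `KnockTracker([7000, 8000, 9000])`, a source that knocks on 7000, 8000, and 9000 in order is added to `allowed`, while a source that knocks on the same ports out of order is not.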

**Issue 2 – Fixed perimeter approach to networking and security**

Traditionally, security boundaries were placed at the edge of the enterprise network in a classic "castle wall and moat" approach. However, as technology evolved, remote workers and workloads became more common. As a result, security boundaries necessarily followed them and expanded beyond the corporate perimeter.

**The traditional world of static domains**

The traditional world of networking started with static domains. Networks were initially designed to create internal segments separated from the external world by a fixed perimeter. The classical network model divided clients and users into trusted and untrusted groups. The internal network was deemed trustworthy, whereas the external was considered hostile.

The perimeter approach to network and security has several zones. We have, for example, the Internet, DMZ, Trusted, and then Privileged. In addition, we have public and private address spaces that separate network access from here. Private addresses were deemed more secure than public ones as they were unreachable online. However, this trust assumption that all private addresses are safe is where our problems started.

**The fixed perimeter**

The digital threat landscape is concerning. External threats hit our applications and networks from all over the world. Threats also come from inside the network: insider threats within a user group, and insider threats that cross user group boundaries. Each type of threat needs to be addressed.

One issue with the fixed perimeter approach is that it assumes trusted internal and hostile external networks. However, we must assume that the internal network is as hostile as the external one.

By some estimates, over 80% of threats come from internal malware or malicious employees. The fixed perimeter approach to networking and security is still the foundation for most network and security professionals, even though a lot has changed since the design's inception.

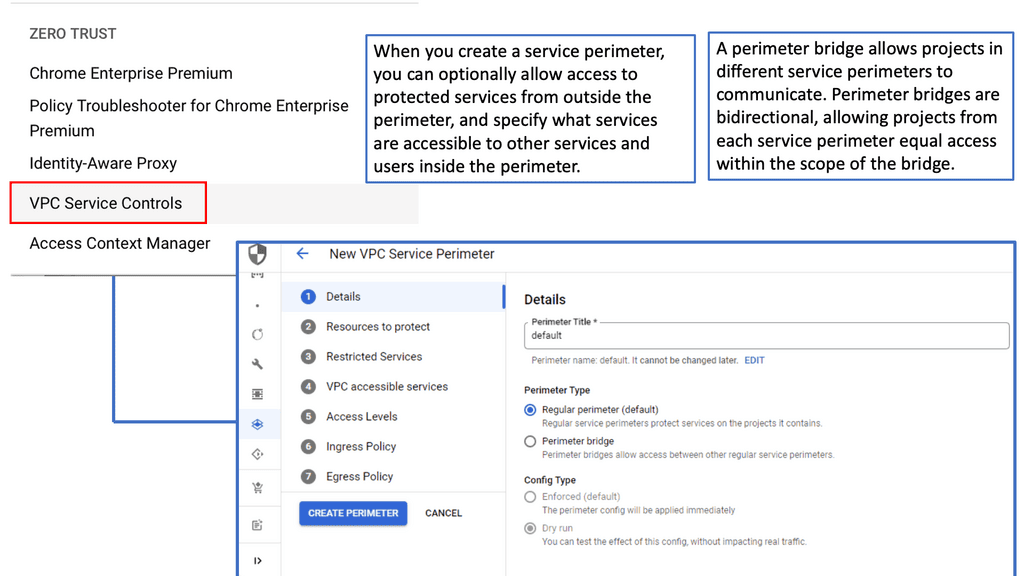

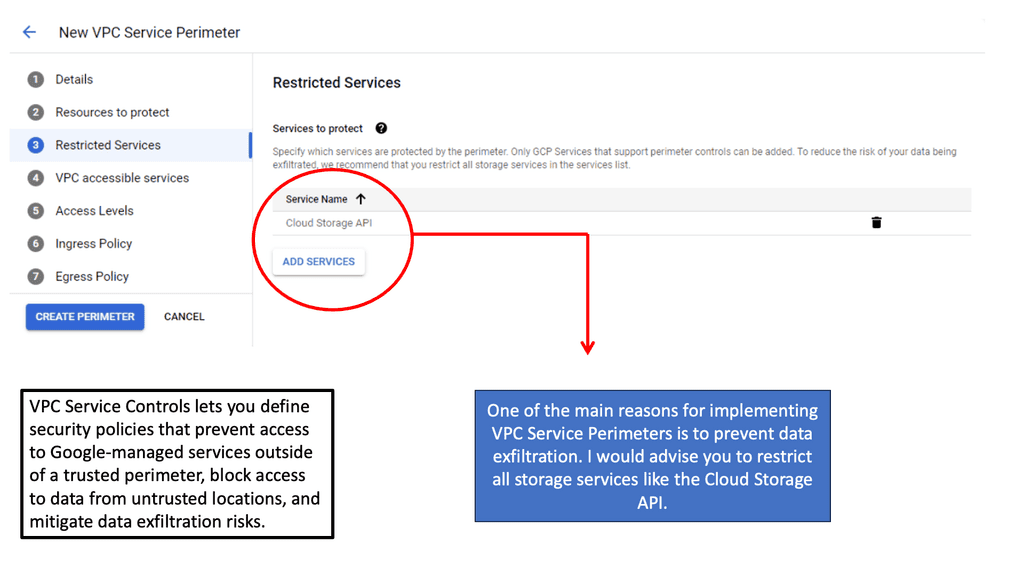

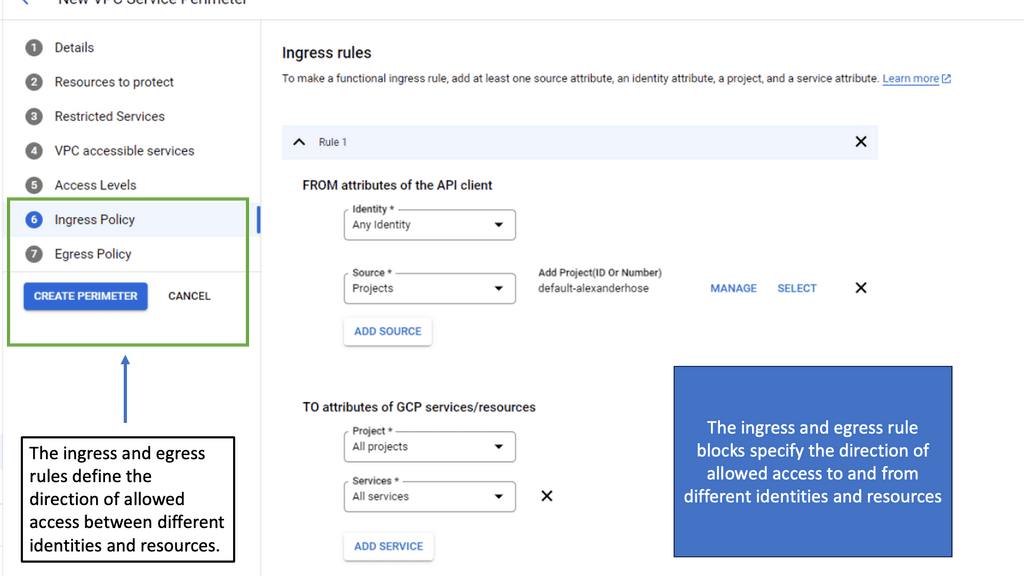

Zero Trust & VPC Service Controls

### Role of VPC Service Controls in Zero Trust Network Design

Zero Trust Network Design is rapidly gaining traction as an essential cybersecurity framework. Unlike traditional security models that assume trust within the network, Zero Trust operates on the principle of ‘never trust, always verify.’ This paradigm shift emphasizes the need for more granular controls and continuous verification of user and device identities. VPC Service Controls align perfectly with this approach by restricting access to critical resources and ensuring that only authenticated and authorized entities can interact with the data. This integration fortifies the network’s defenses, minimizes potential attack vectors, and ensures data integrity.

### Implementing VPC Service Controls in Google Cloud

Implementing VPC Service Controls within Google Cloud is a strategic move for organizations aiming to enhance their security posture. The process involves setting up security perimeters around sensitive resources, such as Cloud Storage buckets, BigQuery datasets, and Cloud Bigtable instances. By defining these perimeters, organizations can enforce policies that restrict access based on specific criteria, such as IP addresses, service accounts, or even user-defined attributes. This granular control not only prevents unauthorized access but also ensures compliance with industry regulations and standards.

We get hacked daily!

The 2022 Thales Data Threat Report found that almost half (45%) of US companies suffered a data breach in the past year. The real figure could be higher, since some breaches go undetected.

We are getting hacked daily, and major networks with skilled staff are crashing. Unfortunately, the perimeter approach to networking has failed to provide adequate security in today’s digital world. It works to an extent by delaying an attack. However, a bad actor will eventually penetrate your guarded walls with enough patience and skill.

If a large gate and walls guard your house, you feel safe and fully protected inside. However large and thick the perimeter protecting your home may be, there is still a chance that someone can climb the walls, reach your front door, and enter your property. But if a bad actor cannot even see your house, they cannot take the next step and try to breach your security.

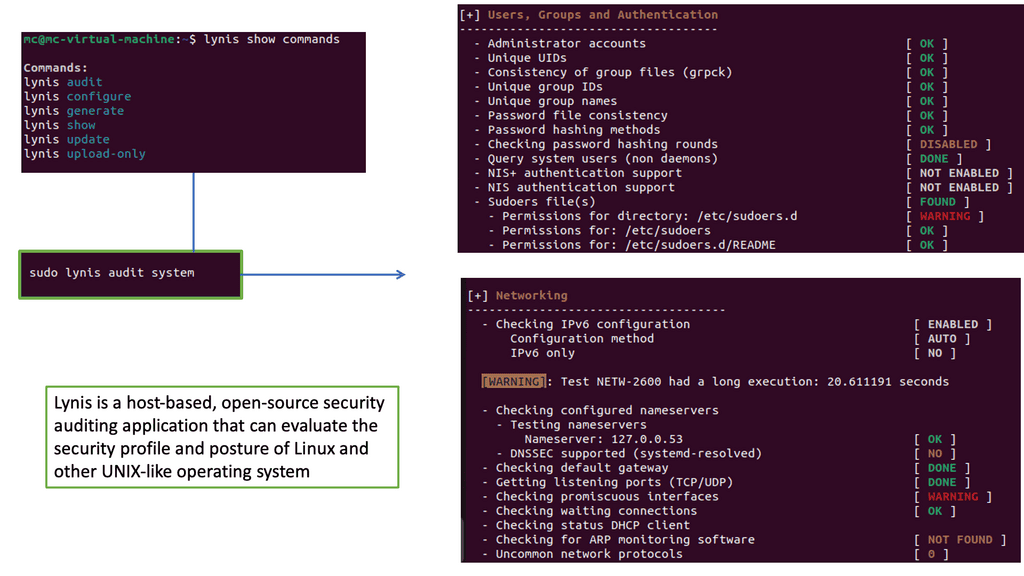

Example: Security Scan Lynis

Lynis is an open-source security auditing tool designed to assess the security of Linux and Unix-based systems. Developed by CISOfy, Lynis performs comprehensive security scans by analyzing system configurations, checking for vulnerabilities, and recommending steps to improve overall security posture.

**Issue 3 – Dissolved perimeter caused by the changing environment**

The environment has changed with the introduction of the cloud, advanced BYOD, machine-to-machine connections, the rise in remote access, and phishing attacks. We have many internal devices and a variety of users, such as on-site contractors, that need to access network resources.

Corporate devices are also moving to the cloud, colocation facilities, and off-site customer and partner locations. In addition, they are becoming more diversified with hybrid architectures.

These changes are causing major security problems with the fixed perimeter approach to networking and security. For example, with the cloud, the internal perimeter is stretched to the cloud, but traditional security mechanisms are still being used, even though the cloud is an entirely new paradigm. Also, a growing number of remote workers work from various devices and places.

Again, traditional security mechanisms are still being used. As our environment evolves, security tools and architectures must evolve. Let’s face it: the network perimeter has dissolved as your remote users, things, services, applications, and data are everywhere. In addition, as the world moves to the cloud, mobile, and IoT, the ability to control and secure everything in the network is no longer available.

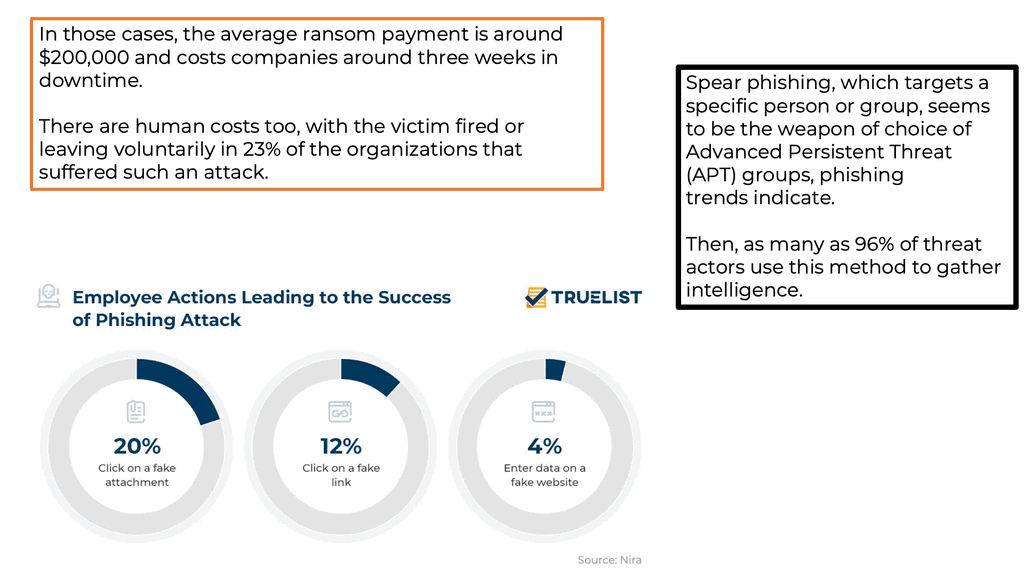

Phishing attacks are on the rise.

We have witnessed an increase in phishing attacks that can result in a bad actor landing on your local area network (LAN). Phishing is a type of social engineering where an attacker sends a fraudulent message designed to trick a person into revealing sensitive information or into deploying malicious software, such as ransomware, on the victim's infrastructure. The term "phishing" was first used in 1994, when a group of teens manually worked to obtain credit card numbers from unsuspecting users on AOL.

Hackers are inventing new ways.

By 1995, they had created a program called AOHell to automate their work. Since then, hackers have continued to invent new ways to gather details from anyone connected to the internet. These actors have created several programs and types of malicious software that are still used today.

Recently, I was a victim of a phishing email. Clicking and downloading the file is very easy if you are not educated about phishing attacks. In my case, the particular file was a .wav file. It looked safe, but it was not.

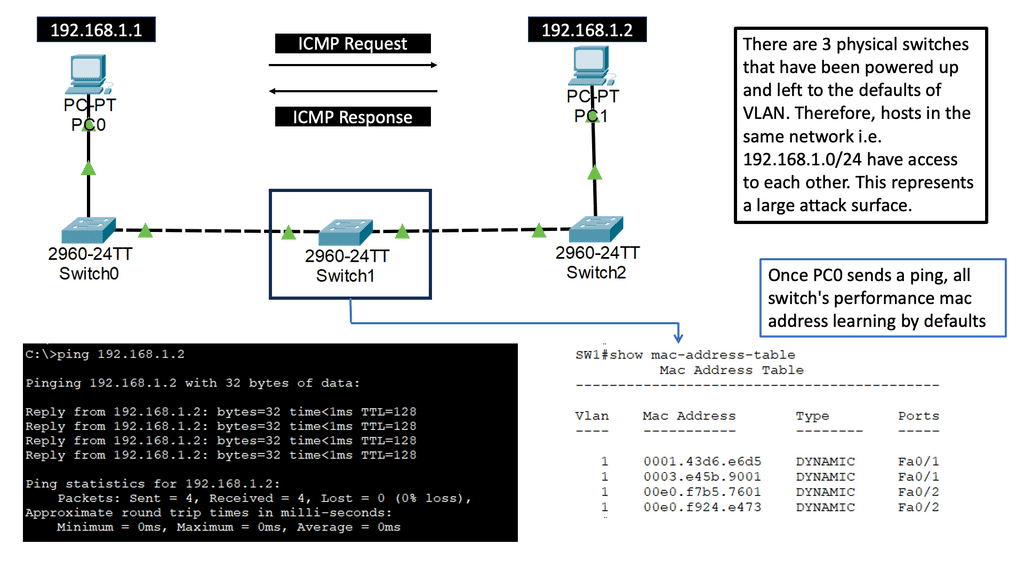

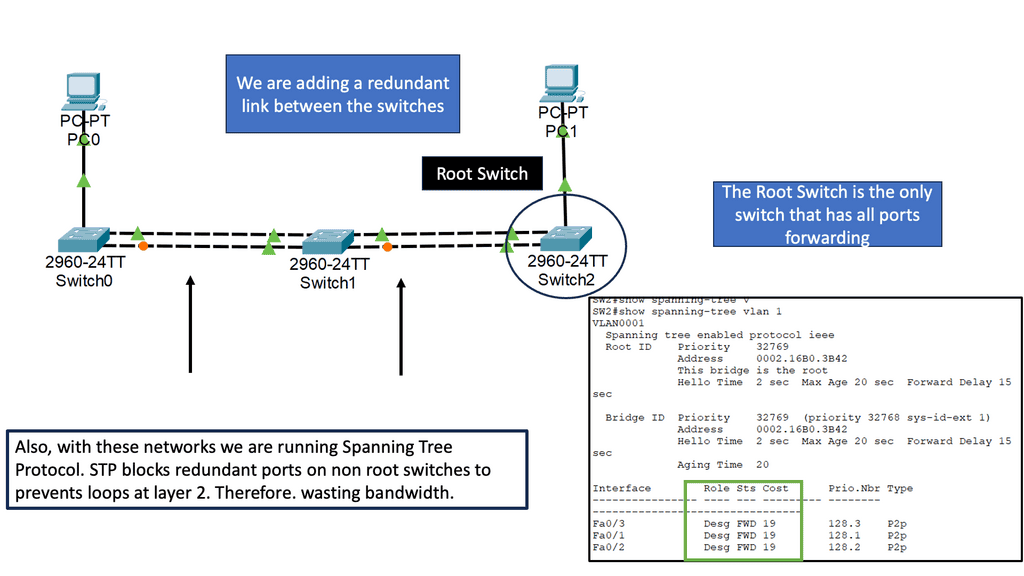

**Issue 4 – Broad-level access**

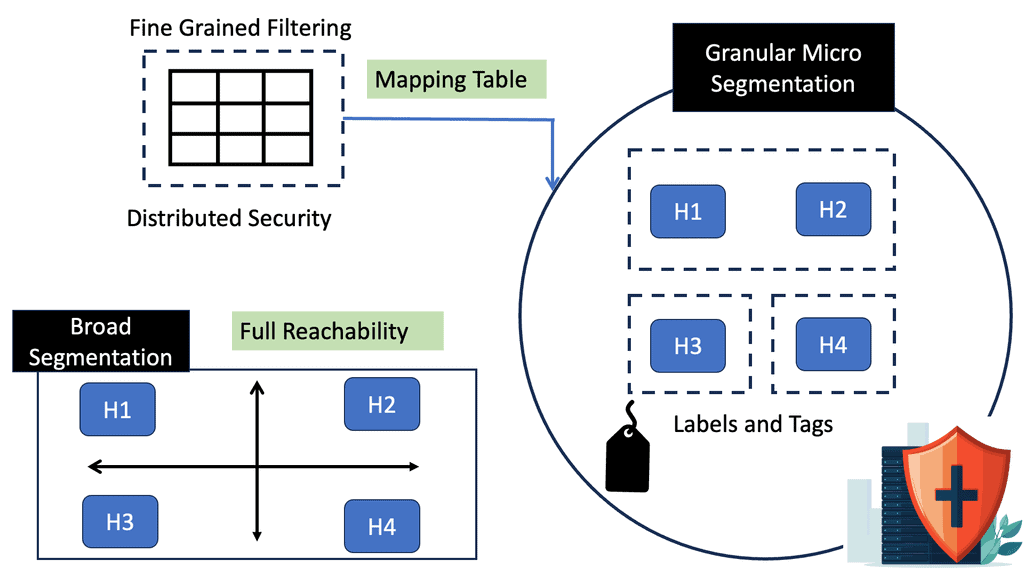

You may have heard of broad-level access and lateral movement. With traditional network and security mechanisms, known as zone-based networking, a bad actor who lands on a particular segment, such as a VLAN, can see everything on that segment. This gives them broad-level access. Moreover, VLAN-to-VLAN communication is not hard to achieve, which enables lateral movement.

The issue of lateral movements

Lateral movement is the technique attackers use to progress through the organizational network after gaining initial access. Adversaries use lateral movement to identify target assets and sensitive data for their attack. Lateral movement is the tenth tactic in the MITRE ATT&CK framework. It is the set of techniques attackers use to move through the network and gain access to credentials without being detected.

No intra-VLAN filtering

This is made possible as, traditionally, a security device does not filter this low down on the network, i.e., inside of the VLAN, known as intra-VLAN filtering. A phishing email can easily lead the bad actor to the LAN with broad-level access and the capability to move laterally throughout the network.

For example, a bad actor can initially access an unpatched central file-sharing server; they move laterally between segments to the web developers’ machines and use a keylogger to get the credentials to access critical information on the all-important database servers.

They can then carry out data exfiltration over DNS or even through a social media account such as Twitter. Firewalls generally do not inspect DNS as a file transfer mechanism, so data exfiltration using DNS will often go unnoticed.

With a zero-trust network segmentation approach, networks are segmented into smaller islands with specific workloads. In addition, each segment has its own ingress and egress controls to minimize the “blast radius” of unauthorized access to data.
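To make the "blast radius" idea concrete, the sketch below compares how far an attacker can move from one compromised host in a flat VLAN versus a segmented network. The host names and permitted flows are hypothetical, and real reachability depends on actual firewall and routing policy; the point is only that lateral movement is a graph-reachability problem over whatever flows are allowed.

```python
from collections import deque
from typing import Dict, Set


def reachable(start: str, allowed_flows: Dict[str, Set[str]]) -> Set[str]:
    """Breadth-first search over permitted flows: hosts an attacker can reach from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        host = queue.popleft()
        for nxt in allowed_flows.get(host, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {start}


# Hypothetical hosts, loosely following the scenario described above.
hosts = ["file-server", "dev-machine", "database", "hr-app"]

# Flat VLAN: every host can talk to every other host.
flat = {h: {o for o in hosts if o != h} for h in hosts}

# Segmented: only explicitly required flows are permitted.
segmented = {
    "dev-machine": {"file-server"},
    "hr-app": {"database"},
}

flat_radius = reachable("file-server", flat)            # every other host
segmented_radius = reachable("file-server", segmented)  # nothing beyond the server itself
```

In the flat case, compromising the file server exposes the developer machines and the database; in the segmented case, the same compromise goes no further.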

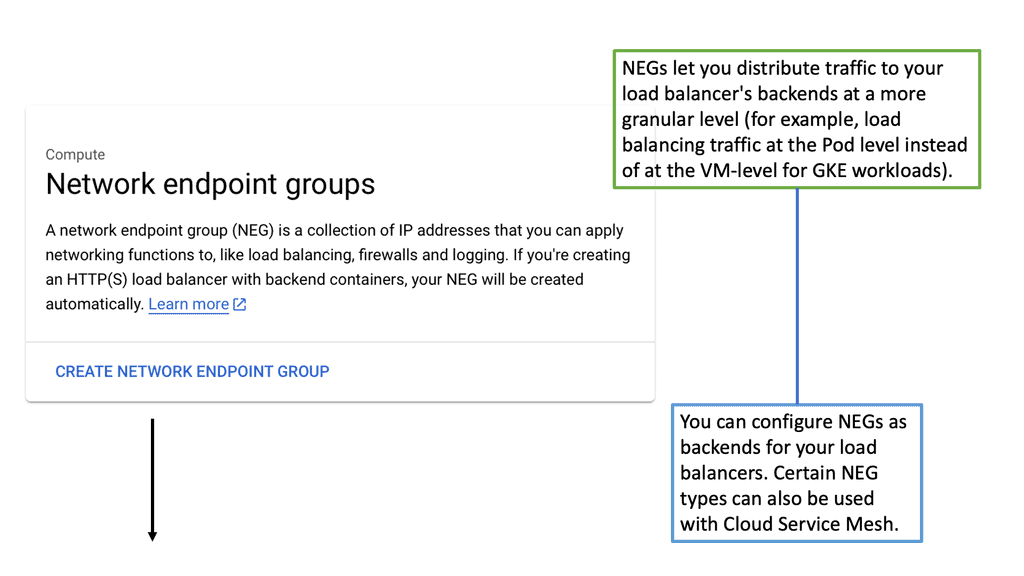

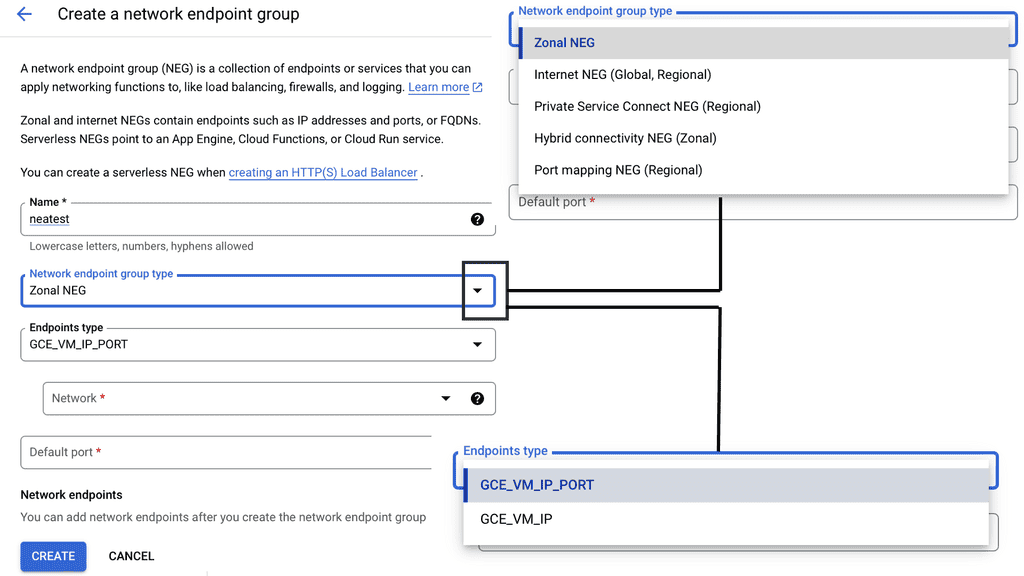

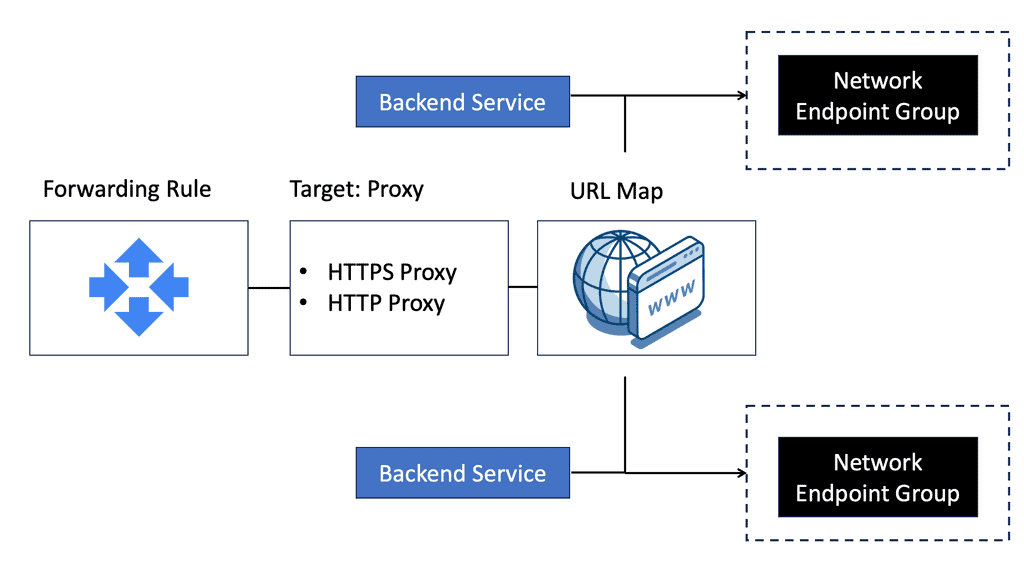

Example: Segmentation with Network Endpoint Groups (NEGs)

**Issue 5 – The challenges with traditional firewalls**

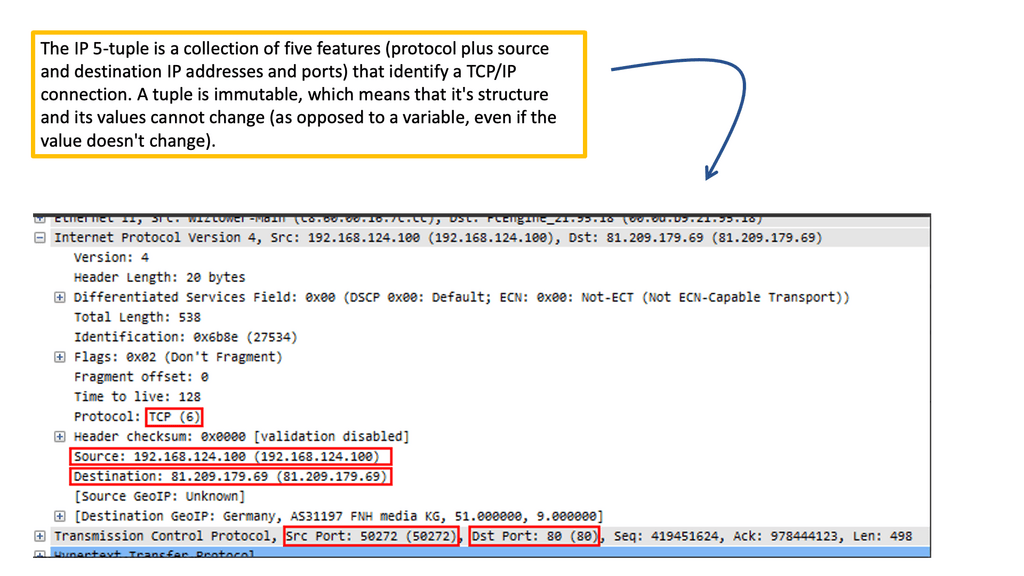

The limited world of 5-tuple

Traditional firewalls typically control access to network resources based on source IP addresses. This creates the fundamental challenge of securing admission. Namely, we need to solve the user access problem, but we only have the tools to control access based on IP addresses.

As a result, you have to group users, some of whom may work in different departments and roles, behind the same IP addresses so that they can access the same service. The firewall rules are also static and don't change dynamically based on the level of trust in a given device. They provide only network information.

Suppose the user moves to a riskier location, such as an Internet cafe, or the device's local firewall or antivirus software is turned off by malware or by accident. A traditional firewall cannot detect this; it lives in the limited world of the 5-tuple. Traditional firewalls can only express static rule sets and cannot communicate or enforce rules based on identity information.
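The limitation can be seen in a small model of a 5-tuple rule. The addresses, ports, and class names below are made up for illustration; the sketch simply shows that a rule matching on network fields gives the same answer for two very different users behind the same subnet.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class FiveTuple:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


@dataclass(frozen=True)
class Rule:
    """A static allow rule: source network, destination address, port, and protocol."""
    src_net: str
    dst_ip: str
    dst_port: int
    protocol: str

    def matches(self, pkt: FiveTuple) -> bool:
        return (
            ip_address(pkt.src_ip) in ip_network(self.src_net)
            and pkt.dst_ip == self.dst_ip
            and pkt.dst_port == self.dst_port
            and pkt.protocol == self.protocol
        )


# Hypothetical rule: the office subnet may reach the payroll server over HTTPS.
rule = Rule("10.1.0.0/24", "10.9.0.10", 443, "tcp")

# Two very different users look identical to the rule.
finance_user = FiveTuple("10.1.0.21", 50112, "10.9.0.10", 443, "tcp")
contractor_laptop = FiveTuple("10.1.0.77", 50340, "10.9.0.10", 443, "tcp")
outside_host = FiveTuple("10.2.0.5", 50001, "10.9.0.10", 443, "tcp")
```

Here `rule.matches(finance_user)` and `rule.matches(contractor_laptop)` are both true: nothing in the 5-tuple says who the user is, what role they hold, or whether the device is healthy.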

**Issue 6 – A Cloud-focused environment**

To understand the cloud, compare it to a public parking space. Using a public cloud is like parking your car in a public lot rather than in your own garage. Multiple tenants share the public parking space, and we don't know what they might do to your car.

Today, we are very cloud-focused, but when moving applications to the cloud, we need to be very security-focused. However, the cloud environment is less mature in providing the traditional security controls we use in our legacy environment.

So, when putting applications in the cloud, you shouldn't leave security at its defaults. Why? Firstly, we operate in a shared model where a neighboring tenant could steal your encryption keys or data. There have been many cloud breaches. In cloud protection, we face firewalls with static rulesets as well as authentication and key management issues.

**Control point change**

One of the biggest problems is that the perimeter moves when you move to a cloud-based application. Servers are no longer under your control. Mobile phones and tablets exacerbate the problem, as they can be located anywhere. So, trying to control the perimeter is very difficult. More importantly, firewalls only have access to and control over network information, when they should have more context.

This perimeter is now defined by ZTNA architecture and the software-defined perimeter. By moving their applications to the cloud, cloud users, not the IT teams within the cloud providers, now manage the firewalls.

So when moving applications to the cloud, even though cloud providers provide security tools, the cloud consumer has to integrate security to have more visibility than they have today.

Before, we had clear network demarcation points set by a central physical firewall creating inside and outside trust zones. Anything outside was considered hostile, and anything on the inside was deemed trusted.

1. Connection-centric model

The Zero Trust model flips this around and considers everything untrusted. To do this, there are no longer pre-defined fixed network demarcation points. Instead, the network perimeter initially set in stone is now fluid and software-based.

Zero Trust is connection-centric, not network-centric. Each user on a specific device connected to the network gets an individualized connection to a particular service hidden by the perimeter.

Instead of having one perimeter that every user uses, SDP creates many small perimeters purposely built for users and applications. These are known as micro perimeters. Clients are cryptographically signed into these micro perimeters.

2. Micro perimeters: Zero trust network segmentation

The micro perimeter is based on user and device context and can dynamically adjust to environmental changes. So, as a user moves to different locations or devices, the Zero Trust architecture can detect this and set the appropriate security controls based on the new context.
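A context-driven micro perimeter can be sketched as a function from the current user and device context to the set of services that user may reach. The roles, locations, service names, and policy rules here are hypothetical and exist only to show the perimeter changing as the context changes.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Context:
    user_role: str
    device_managed: bool
    antivirus_on: bool
    location: str  # e.g. "office", "home", "public-wifi"


def allowed_services(ctx: Context) -> FrozenSet[str]:
    """Return the services inside this user's micro perimeter for the current context."""
    if not ctx.antivirus_on:
        # Unhealthy device: no access until it is remediated.
        return frozenset()
    services = {"email"}
    # Sensitive service: requires the right role, a managed device, and a safer location.
    if ctx.user_role == "finance" and ctx.device_managed and ctx.location != "public-wifi":
        services.add("payroll")
    return frozenset(services)


at_office = Context("finance", device_managed=True, antivirus_on=True, location="office")
at_cafe = Context("finance", device_managed=True, antivirus_on=True, location="public-wifi")
av_disabled = Context("finance", device_managed=True, antivirus_on=False, location="office")
```

The same finance user gets payroll access at the office, loses it on public Wi-Fi, and loses everything if the antivirus is switched off, without any change to IP-based rules.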

The data center is no longer the center of the universe. Instead, the user on specific devices, along with their service requests, is the new center of the universe.

Zero Trust does this by decoupling the user and device from the network. The control plane, where authentication happens first, is separated from the data plane, so the user stays off the network until authentication succeeds.

Then, the data plane, the client-to-application connection, transfers the data. Therefore, the users don’t need to be on the network to gain application access. As a result, they have the least privilege and no broad-level access.
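The "authenticate first, connect second" split between control plane and data plane can be sketched with two functions: a controller that authenticates and issues a grant for one service, and a gateway that opens the connection only when it sees a valid grant. Everything here is a simplified assumption: the key, the in-memory user store with plaintext passwords, the entitlement table, and the grant format are illustrative, and a real system would add grant expiry and use a proper identity provider.

```python
import hashlib
import hmac
from typing import Dict, Optional, Set

GATEWAY_KEY = b"demo-gateway-key"                     # hypothetical key shared by controller and gateway
USERS: Dict[str, str] = {"alice": "correct-horse"}    # hypothetical credential store (toy only)
ENTITLEMENTS: Dict[str, Set[str]] = {"alice": {"payroll"}}


def _sign(user: str, service: str) -> str:
    """Bind a grant to exactly one user and one service."""
    message = f"{user}|{service}".encode("utf-8")
    return hmac.new(GATEWAY_KEY, message, hashlib.sha256).hexdigest()


def controller_authorize(user: str, password: str, service: str) -> Optional[str]:
    """Control plane: authenticate and authorize, then issue a grant for one service."""
    stored = USERS.get(user)
    if stored is None or not hmac.compare_digest(stored.encode(), password.encode()):
        return None
    if service not in ENTITLEMENTS.get(user, set()):
        return None
    return _sign(user, service)


def gateway_connect(user: str, service: str, grant: Optional[str]) -> bool:
    """Data plane: open the client-to-application connection only for a valid grant."""
    if grant is None:
        return False
    return hmac.compare_digest(grant.encode(), _sign(user, service).encode())
```

Because the grant is bound to a single service, a user who authenticates for payroll cannot reuse the same grant to reach another application, which is the least-privilege property described above.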

3. Zero trust network segmentation

Zero-trust network segmentation is gaining traction in cybersecurity because it increases an organization’s network protection. This method of securing networks is based on the concept of “never trust, always verify,” meaning that all traffic must be authenticated and authorized before it can access the network.

This is accomplished by segmenting the network into multiple isolated zones accessible only through specific access points, which are carefully monitored and controlled.

Network segmentation is a critical component of a zero-trust network design. By dividing the network into smaller, isolated units, it is easier to monitor and control access to the network. Additionally, segmentation makes it harder for attackers to move laterally across the network, reducing the chance of a successful attack.

Zero-trust network segmentation is essential to any organization's cybersecurity strategy. By utilizing segmentation, authentication, and monitoring systems, organizations can ensure their networks are secure and their data is protected.

4. The IP address conundrum

Everything today relies on IP addresses for trust, but there is a problem: an IP address carries no knowledge of the user with which to assign and validate the device's trust. There is no way for an IP address to do this. IP addresses provide connectivity but do not involve validating the trust of the endpoint or the user.

Also, IP addresses should not be used as an anchor for network locations, as they are today, because when a user moves from one place to another, the IP address changes.

Security cannot be tied to an IP address.

But what about the security policy assigned to the old IP addresses? What happens when your IPs change? Tying anything to an IP address makes little sense, as it gives us no good hook on which to hang security policy enforcement. There are several facets to policy. For example, the user access policy touches on authorization, the network access policy touches on what to connect to, and the user account policies touch on authentication.
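The problem with anchoring policy to an address can be shown with two tiny lookup tables, one keyed by IP and one keyed by identity. The addresses, user name, and service name are hypothetical.

```python
from typing import Dict, Set

# Policy anchored to an IP address (hypothetical address and service).
ip_policy: Dict[str, Set[str]] = {"10.1.0.21": {"payroll"}}

# The same policy anchored to a user identity instead.
identity_policy: Dict[str, Set[str]] = {"alice": {"payroll"}}


def allowed_by_ip(src_ip: str, service: str) -> bool:
    """The decision depends only on where the packet came from."""
    return service in ip_policy.get(src_ip, set())


def allowed_by_identity(user: str, service: str) -> bool:
    """The decision depends on who is asking, wherever they connect from."""
    return service in identity_policy.get(user, set())


# Alice moves from her desk (10.1.0.21) to a home network (192.168.1.50).
alice_at_desk = allowed_by_ip("10.1.0.21", "payroll")      # True
alice_at_home = allowed_by_ip("192.168.1.50", "payroll")   # False: the policy did not follow her
# Whoever is assigned 10.1.0.21 next inherits her access.
next_lease_holder = allowed_by_ip("10.1.0.21", "payroll")  # True
alice_anywhere = allowed_by_identity("alice", "payroll")   # True regardless of address
```

The IP-keyed table both denies the legitimate user after she moves and grants access to whoever holds her old address, while the identity-keyed table does neither.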

With any of these, IP addresses offer no policy visibility. This is also a significant problem for traditional firewalling, which relies on static configurations; for example, a static configuration may state that this particular source can reach this destination using this port number.

**Security issues related to IP**

- Such a rule carries no meaning on its own. There is no indication of why the rule exists or under what conditions a packet should be allowed to travel from the source to the destination.

- No contextual information is taken into consideration. When creating a robust security posture, we must consider more than ports and IP addresses.

For a robust security posture, you need complete visibility into the network to see who, what, when, and how they connect with the device. Unfortunately, today's firewall is static and only contains information about the network.

On the other hand, Zero Trust enables a dynamic firewall that uses the user and device context to open the firewall for a single secure connection. The firewall remains closed at all other times, creating a "black cloud" stance regardless of whether the connections are made to the cloud or on-premises.
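A dynamic firewall of this kind can be modeled as a default-deny table of short-lived, single-flow openings. The class name, the 30-second lifetime, and the addresses are assumptions for illustration; in practice, the opening would be created by the Zero Trust controller only after the user and device context has been verified.

```python
from typing import Dict, Tuple

Flow = Tuple[str, str, int]  # (source IP, destination IP, destination port)


class DynamicFirewall:
    """Default-deny firewall that opens only short-lived, single-flow pinholes."""

    def __init__(self) -> None:
        self._pinholes: Dict[Flow, float] = {}  # flow -> expiry time

    def open_for(self, src_ip: str, dst_ip: str, dst_port: int,
                 now: float, ttl: float = 30.0) -> None:
        """Called after trust is established: allow exactly one flow for a limited time."""
        self._pinholes[(src_ip, dst_ip, dst_port)] = now + ttl

    def permits(self, src_ip: str, dst_ip: str, dst_port: int, now: float) -> bool:
        """Everything is denied unless a matching, unexpired pinhole exists."""
        flow = (src_ip, dst_ip, dst_port)
        expiry = self._pinholes.get(flow)
        if expiry is None:
            return False
        if now >= expiry:
            del self._pinholes[flow]  # expired pinholes are removed on lookup
            return False
        return True
```

With this model, a verified client can reach its one service for the lifetime of the pinhole, while every other source, destination, and port continues to see a closed firewall.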

The rise of the next-generation firewall?

Next-generation firewalls are more advanced than traditional firewalls. They use the information in layers 5 through 7 (session, presentation, and application layers) to perform additional functions. They can provide advanced features such as intrusion detection and prevention, and virtual private networks.

Today, most enterprise firewalls are “next generation” and typically include IDS/IPS, traffic analysis and malware detection for threat detection, URL filtering, and some degree of application awareness/control.

Like the NAC market segment, vendors in this area began a journey to identity-centric security around the same time Zero Trust ideas began percolating through the industry. Today, many NGFW vendors offer Zero Trust capabilities, but many still operate with the perimeter security model.

Still, IP-based security systems

NGFWs are still IP-based systems offering limited identity and application-centric capabilities. In addition, they are static firewalls. Most do not employ zero-trust segmentation, and they often mandate traditional perimeter-centric network architectures with site-to-site connections and don’t offer flexible network segmentation capabilities. Similar to conventional firewalls, their access policy models are typically coarse-grained, providing users with broader network access than what is strictly necessary.

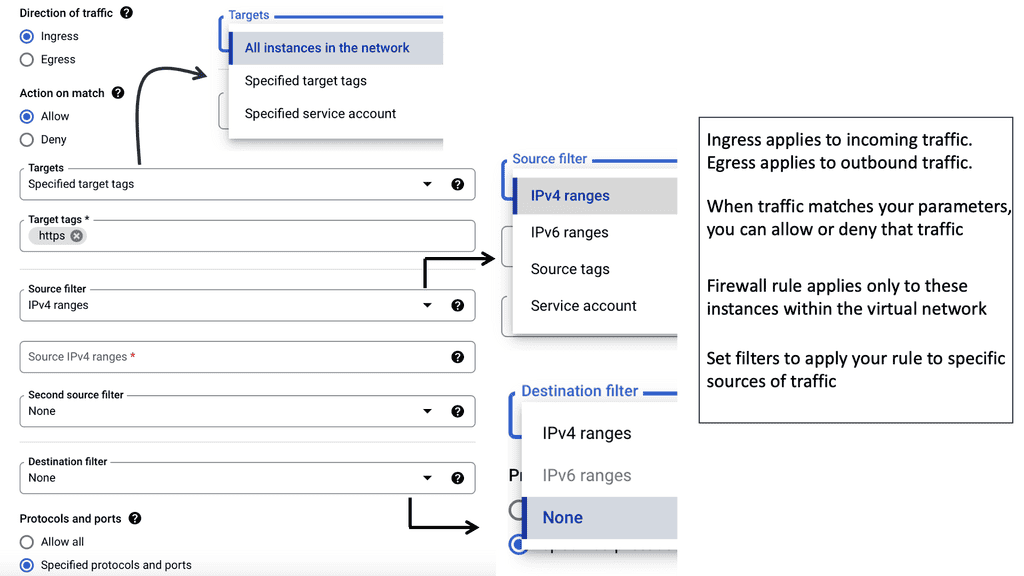

Example: Tags and Controls with firewalling

Summary: Zero Trust Network Design

Traditional network security measures are no longer sufficient in today's digital landscape, where cyber threats are becoming increasingly sophisticated. Zero trust network design is an approach that challenges the traditional perimeter-based security model. In this blog post, we explored the concept of zero-trust network design, its key principles, benefits, and implementation strategies.

Understanding Zero Trust Network Design

Zero-trust network design is a security framework that operates on the principle of “never trust, always verify.” Unlike traditional perimeter-based security, which assumes trust within the network, zero-trust treats every user, device, or application as potentially malicious. This approach is based on the belief that trust should not be automatically granted but continuously verified, regardless of location or network access method.

Key Principles of Zero Trust

Certain key principles must be followed to implement zero trust network design effectively. These principles include:

1. Least Privilege: Users and devices are granted the minimum level of access required to perform their tasks, reducing the risk of unauthorized access or lateral movement within the network.

2. Microsegmentation: The network is divided into smaller segments or zones, allowing granular control over network traffic and limiting the impact of potential breaches or lateral movement.

3. Continuous Authentication: Authentication and authorization are not just one-time events but are verified throughout a user’s session, preventing unauthorized access even after initial login.

Benefits of Zero Trust Network Design

Implementing a zero-trust network design offers several significant benefits for organizations:

1. Enhanced Security: By adopting a zero-trust approach, organizations can significantly reduce the attack surface and mitigate the risk of data breaches or unauthorized access.

2. Improved Compliance: Zero trust network design aligns with many regulatory requirements, helping organizations meet compliance standards more effectively.

3. Greater Flexibility: Zero trust allows organizations to embrace modern workplace trends, such as remote work and cloud-based applications, without compromising security.

Implementing Zero Trust

Implementing a zero-trust network design requires careful planning and a structured approach. Some key steps to consider are:

1. Network Assessment: Conduct a thorough assessment of the existing network infrastructure, identifying potential vulnerabilities or areas that require improvement.

2. Policy Development: Define comprehensive security policies that align with zero trust principles, including access control, authentication mechanisms, and user/device monitoring.

3. Technology Adoption: Implement appropriate technologies and tools that support zero-trust network design, such as network segmentation solutions, multifactor authentication, and continuous monitoring systems.

Conclusion:

Zero trust network design represents a paradigm shift in network security, challenging traditional notions of trust and adopting a more proactive and layered approach. By implementing the fundamental principles of zero trust, organizations can significantly enhance their security posture, reduce the risk of data breaches, and adapt to evolving threat landscapes. By embracing the principles of least privilege, microsegmentation, and continuous authentication, organizations can strengthen their network security and stay one step ahead of cyber threats.