Modularization Virtualization

Modularization virtualization has emerged as a game-changing technology in the field of computing. This innovative approach allows organizations to streamline operations, improve efficiency, and enhance scalability. In this blog post, we will explore the concept of modularization virtualization, understand its benefits, and discover how it is revolutionizing various industries.

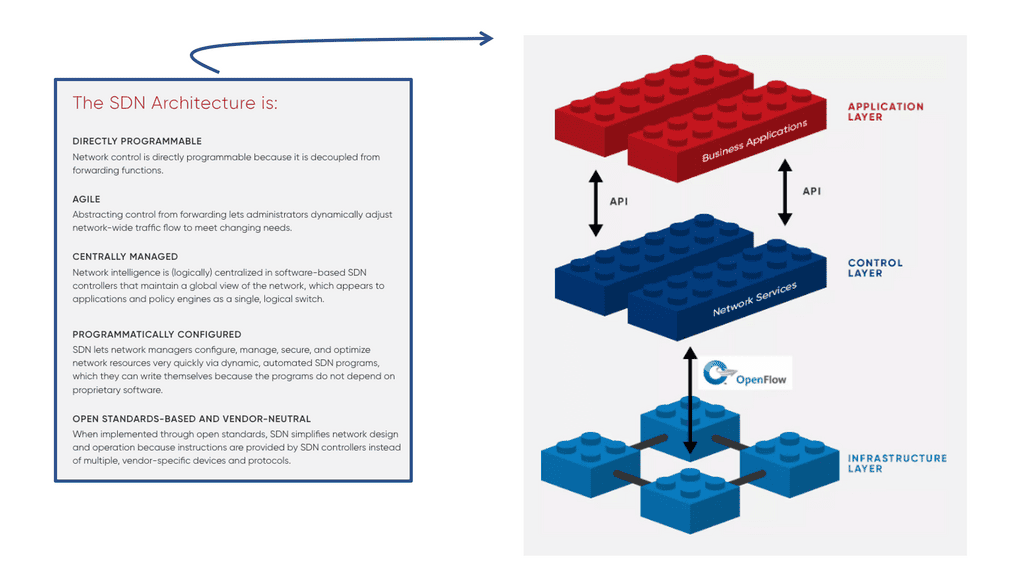

Modularization virtualization refers to breaking down complex systems or applications into smaller, independent modules that can be managed and operated individually. These modules are then virtualized, enabling them to run on virtual machines or containers separate from the underlying hardware infrastructure. This approach offers numerous advantages over traditional monolithic systems.

Modularization virtualization brings together two transformative concepts in technology. Modularization refers to the practice of breaking down complex systems into smaller, independent modules, while virtualization involves creating virtual instances of hardware, software, or networks. When combined, these concepts enable flexible, scalable, and efficient systems.

By modularizing systems, organizations can add or remove modules as needed, which gives greater flexibility and scalability. Virtualization enhances this further by creating virtual instances on demand, so new capacity does not require dedicated physical hardware for each workload.

Modularization virtualization optimizes resource utilization by pooling and sharing resources across different modules and virtual instances. This leads to efficient use of hardware, reduced costs, and improved overall system performance.

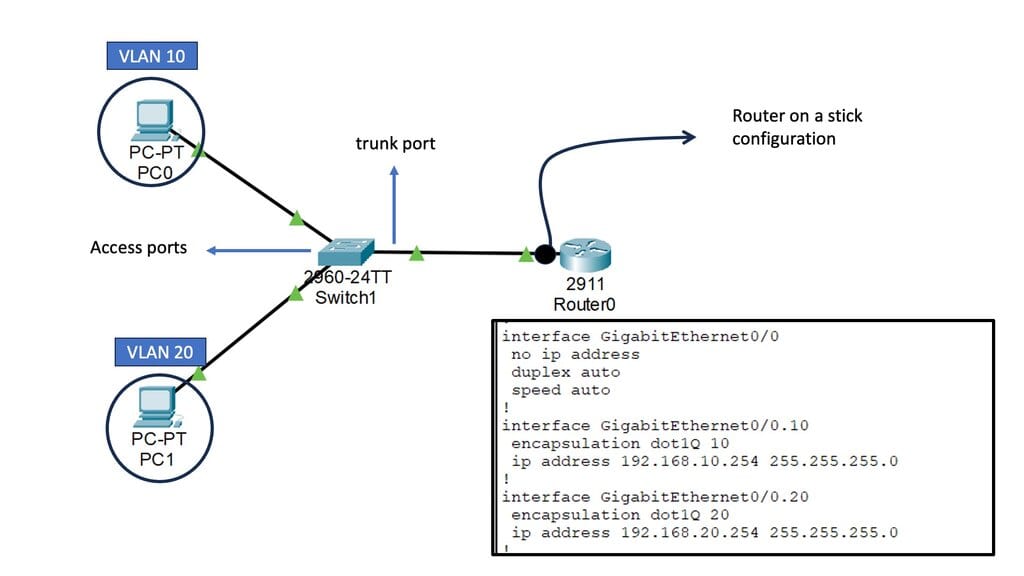

IT Infrastructure: Modularization virtualization has revolutionized IT infrastructure by enabling the creation of virtual servers, storage, and networks. This allows for easy provisioning, management, and scaling of IT resources, leading to increased efficiency and cost savings.

Manufacturing: In the manufacturing industry, modularization virtualization has streamlined production processes by creating modular units that can be easily reconfigured and adapted. This enables agile manufacturing, faster time-to-market, and improved product quality.

Healthcare: The healthcare sector has embraced modularization virtualization to enhance patient care and improve operational efficiency. Virtualized healthcare systems enable seamless data sharing, remote patient monitoring, and resource optimization, leading to better healthcare outcomes.

Matt Conran

Highlights: Modularization Virtualization

Data centers and modularity

There are two ways to approach modularity in data center design. In the first approach, each leaf (pod or rack) is built as a complete unit. Each pod contains the storage, processing, and other services needed to perform a specific task. Pods can be designed to provide Hadoop databases, human resources systems, or even application build environments.

In a modular network, pods can be exchanged relatively independently of each other and other services and pods. Services can be connected (or disconnected) according to their needs. This model is extremely flexible and ideal for enterprises and other users of data centers with rapidly changing needs.

**Pod Modularity**

- The second approach modularizes pods according to the type of resource they provide. Block storage, file storage, virtualized compute, and bare metal compute can each be housed in a separate pod. By upgrading one type of resource in bulk, the network operator can minimize the effect of the upgrade on the operation of specific services in the data center.

- This solution benefits organizations that virtualize most of their services on standard hardware and want to separate hardware and software lifecycle management. Of course, the two options can be mixed. In a data protection pod, backup services might be provided to other pods, which would then be organized based on their services rather than their resources.

- A resource-based modularization plan may be interrupted if an occasional service runs on bare metal servers instead of virtual servers. There are two types of traffic in these situations: traffic that can be moved for optimal traffic levels and traffic that cannot.

**Performing Modularization**

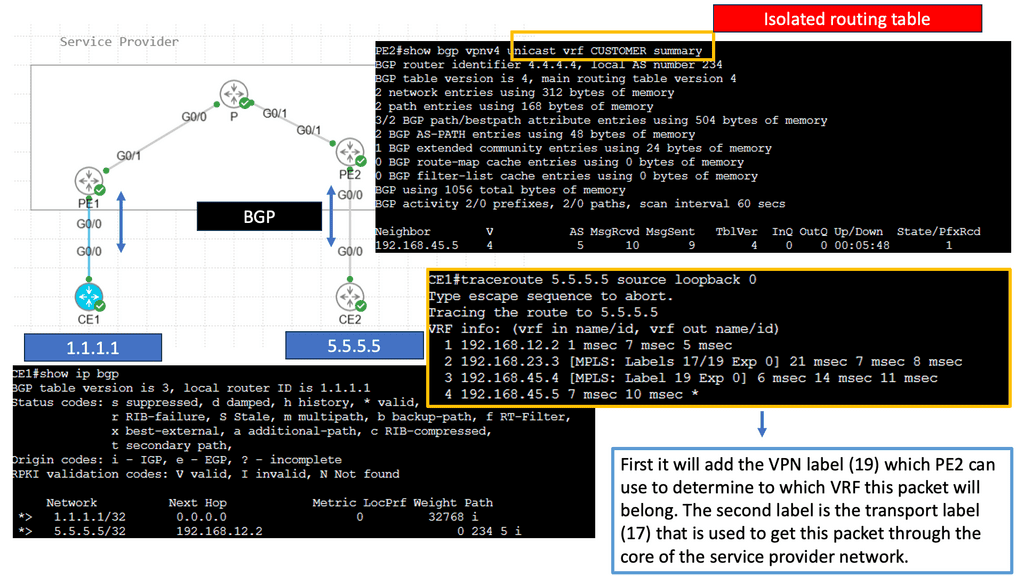

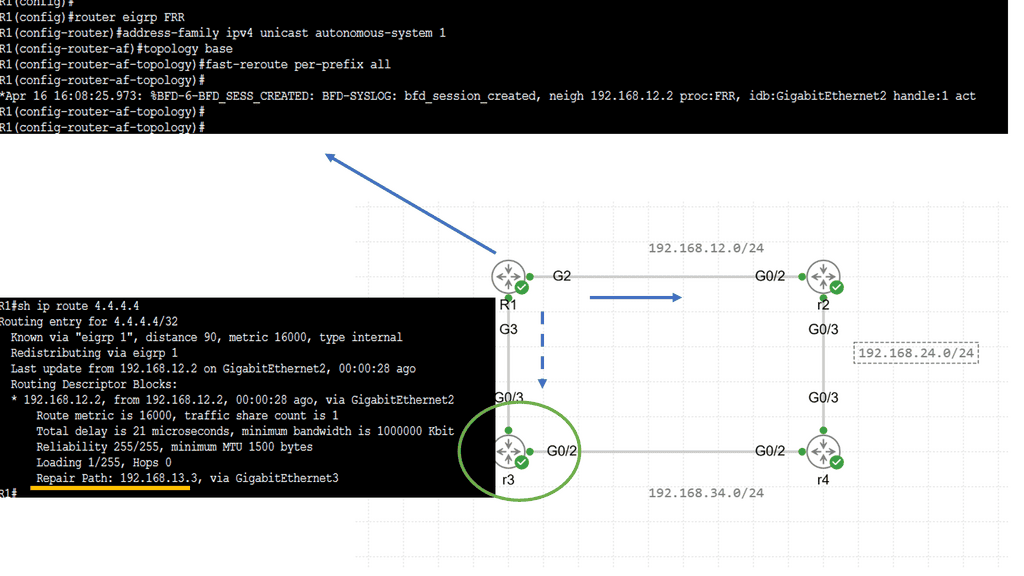

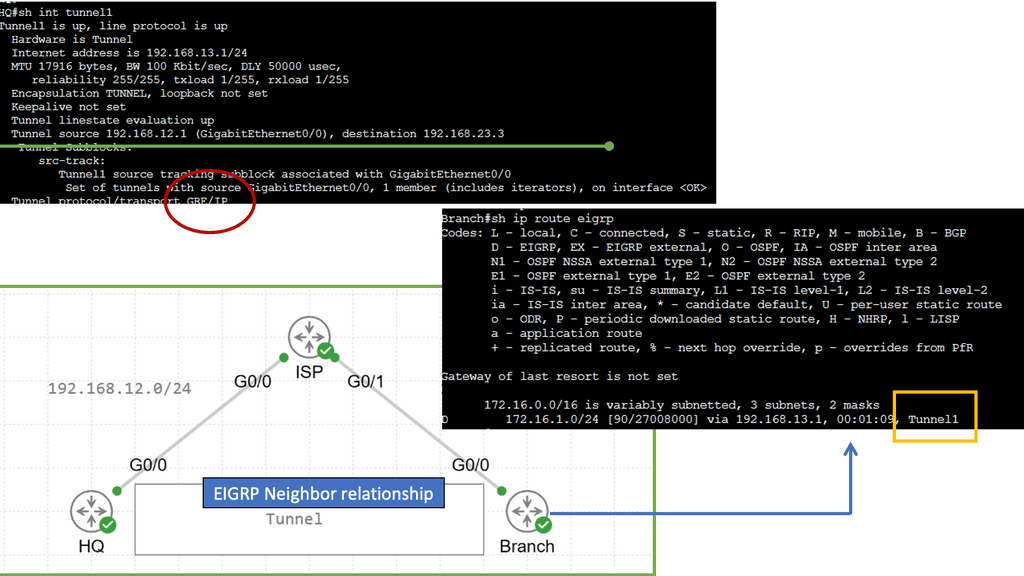

With virtualization modularization, systems are deemed modular when they can be decomposed into several components that may be mixed and matched in various configurations. So, with virtualization modularization, we don’t have one flat network; we have different modules with virtualization as the base technology performing the modularization. Some of these virtualization technologies include MPLS.

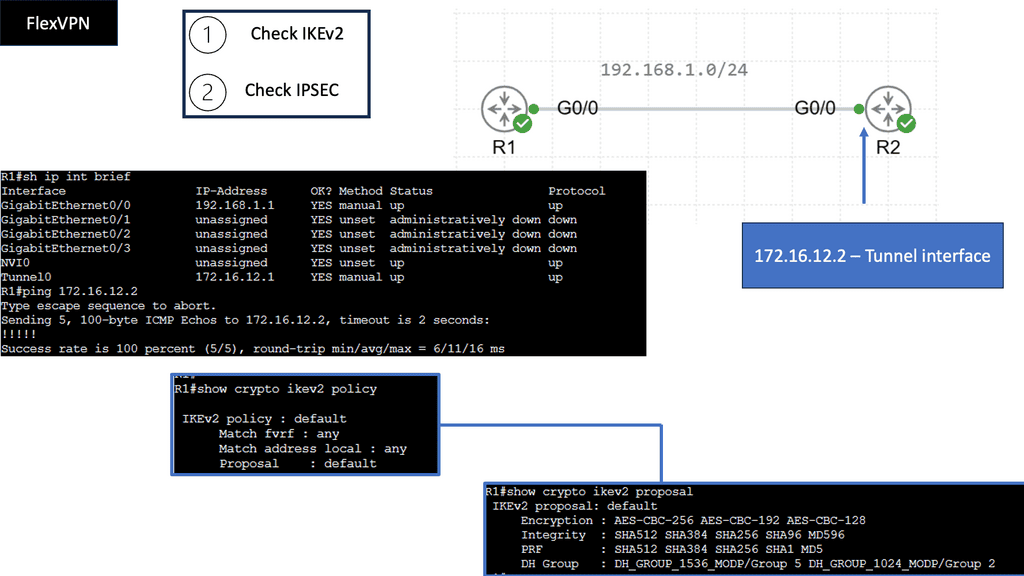

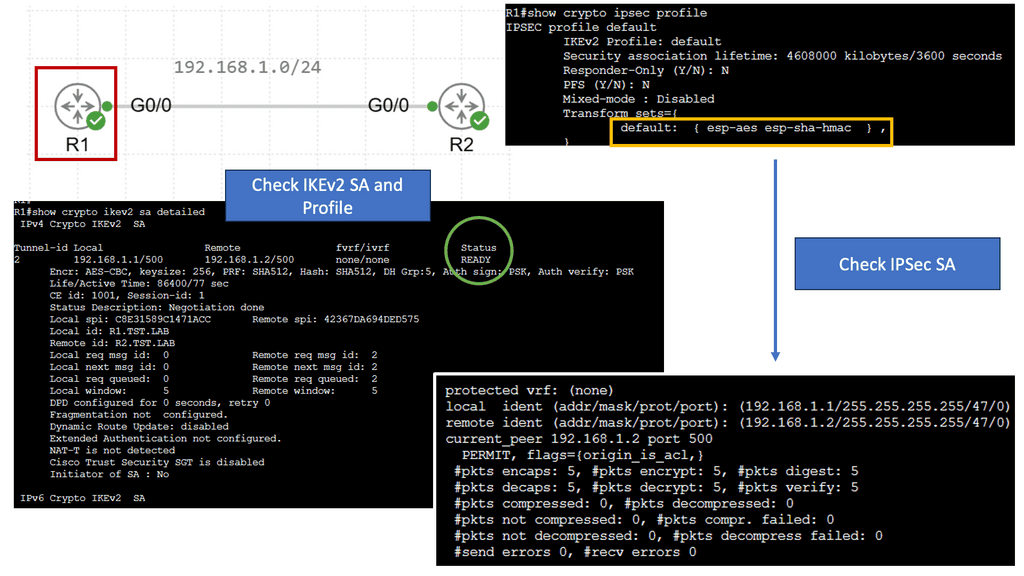

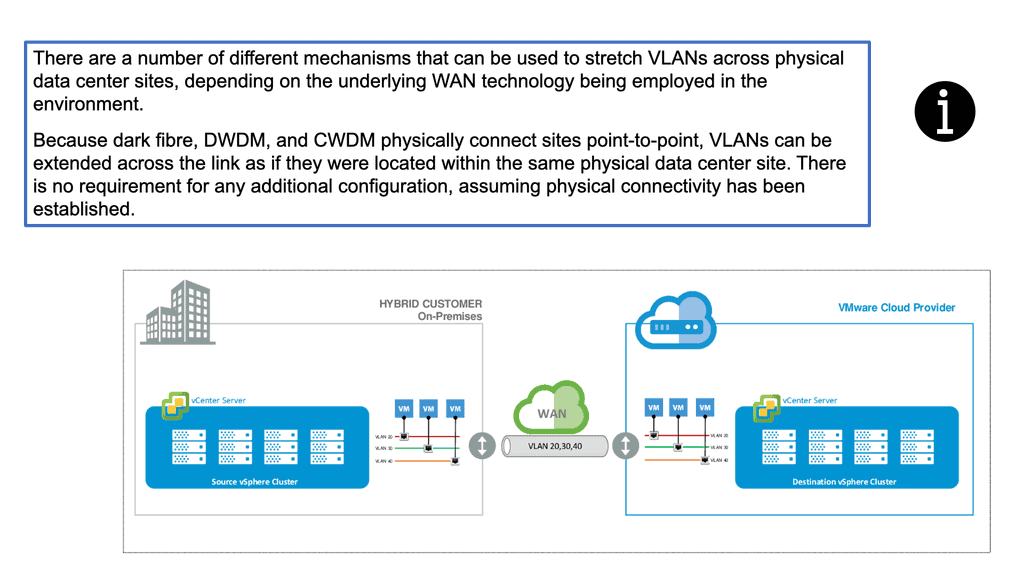

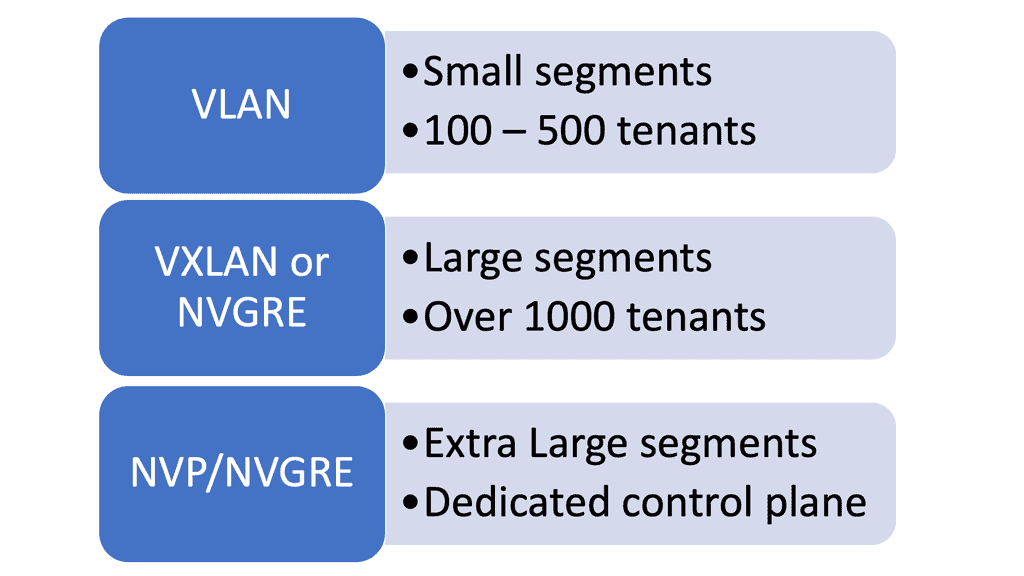

Overlay Networking: Modular Partitions

To move data across the physical network, overlay services and data-plane encapsulations must be defined. The network that provides this transport is called the underlay network (or simply the underlay). The OSI layer at which tunnel encapsulation occurs is crucial to determining the underlay, and the overlay header type largely dictates the transport network type.

With VXLAN, for example, the underlay is a Layer 3 network that transports VXLAN-encapsulated packets between the source and destination tunnel edge devices. The underlay therefore provides reachability between the tunnel edge devices, which are also the overlay edge devices.
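To make the encapsulation step concrete, here is a minimal Python sketch of the 8-byte VXLAN header that a tunnel edge device places in front of the original frame. The function names are hypothetical and chosen for illustration. The outer UDP, IP, and Ethernet headers that the underlay uses for transport are deliberately left out.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned UDP destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value


def build_vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN packet."""
    _flags_word, vni_word = struct.unpack("!II", header[:8])
    return vni_word >> 8


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header; outer UDP/IP/Ethernet headers are not modelled."""
    return build_vxlan_header(vni) + inner_frame


packet = encapsulate(b"original-ethernet-frame", vni=5000)
print(len(packet) - len(b"original-ethernet-frame"))  # 8
print(parse_vni(packet))                              # 5000
```

The 24-bit identifier is what lets many overlay segments share one Layer 3 underlay: the underlay only routes on the outer headers and never inspects the segment identifier.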

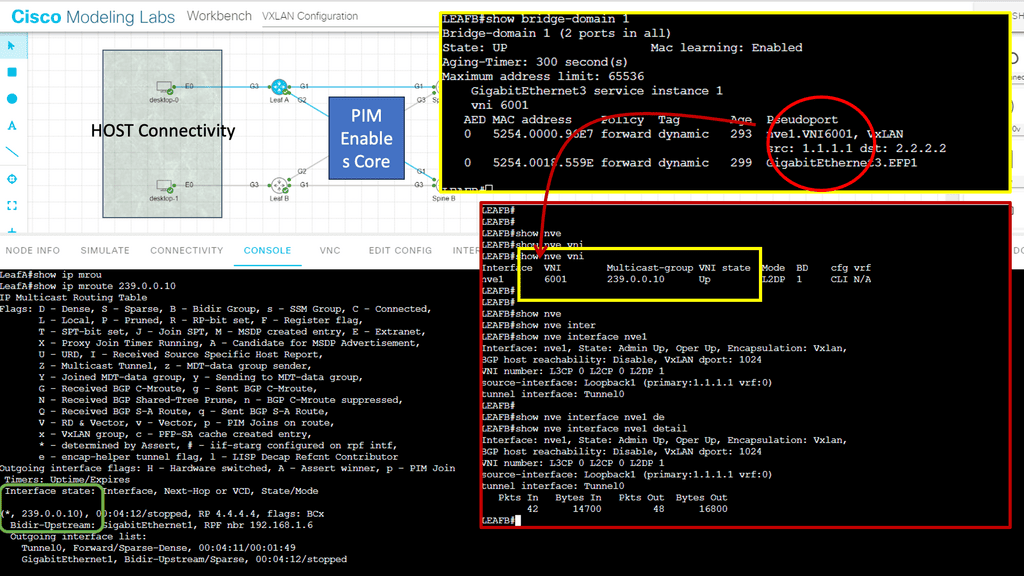

Example VXLAN Overlay Networking – Multicast Mode

**The Role of Multicast in VXLAN**

Multicast mode in VXLAN is a crucial feature that optimizes the way network traffic is handled. In a traditional network, broadcast traffic can lead to inefficiencies and congestion. VXLAN addresses this by using multicast groups to distribute broadcast, unknown unicast, and multicast (BUM) traffic across the network. This method minimizes unnecessary data replication and ensures that only the intended recipients receive specific packets, enhancing overall network efficiency and reducing bandwidth consumption.

**How Multicast Mode Works in VXLAN**

In VXLAN multicast mode, each VXLAN segment is associated with a multicast group. When a device sends a BUM packet, it is encapsulated in a VXLAN header and transmitted to the multicast group. Only devices that have joined this group receive the packet, so data is transmitted only where it is needed. This keeps BUM traffic away from tunnel edge devices that have no interest in the segment and lets the underlay, rather than the source device, replicate the packet.
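The group-per-segment behaviour can be sketched with a toy model. The segment IDs, group addresses, and device names below are assumptions made up for the example, and the class models only group membership, not a real multicast protocol.

```python
from collections import defaultdict

# Hypothetical mapping of VXLAN segments (VNIs) to underlay multicast groups.
VNI_TO_GROUP = {10010: "239.1.1.10", 10020: "239.1.1.20"}


class MulticastUnderlay:
    """Toy underlay that tracks which tunnel edge devices joined which group."""

    def __init__(self):
        self.members = defaultdict(set)  # group address -> device names

    def join(self, vni, device):
        """A device joins the group of every segment it has hosts in."""
        self.members[VNI_TO_GROUP[vni]].add(device)

    def flood_bum(self, vni, source):
        """Return the devices that receive a BUM frame sent into this segment."""
        group = VNI_TO_GROUP[vni]
        return sorted(self.members[group] - {source})


underlay = MulticastUnderlay()
underlay.join(10010, "edge1")
underlay.join(10010, "edge2")
underlay.join(10020, "edge1")
underlay.join(10020, "edge3")

print(underlay.flood_bum(10010, "edge1"))  # ['edge2']
print(underlay.flood_bum(10020, "edge1"))  # ['edge3']
```

In this model `edge3` never sees BUM traffic for segment 10010 because it never joined that segment's group.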

**Reducing state and control plane**

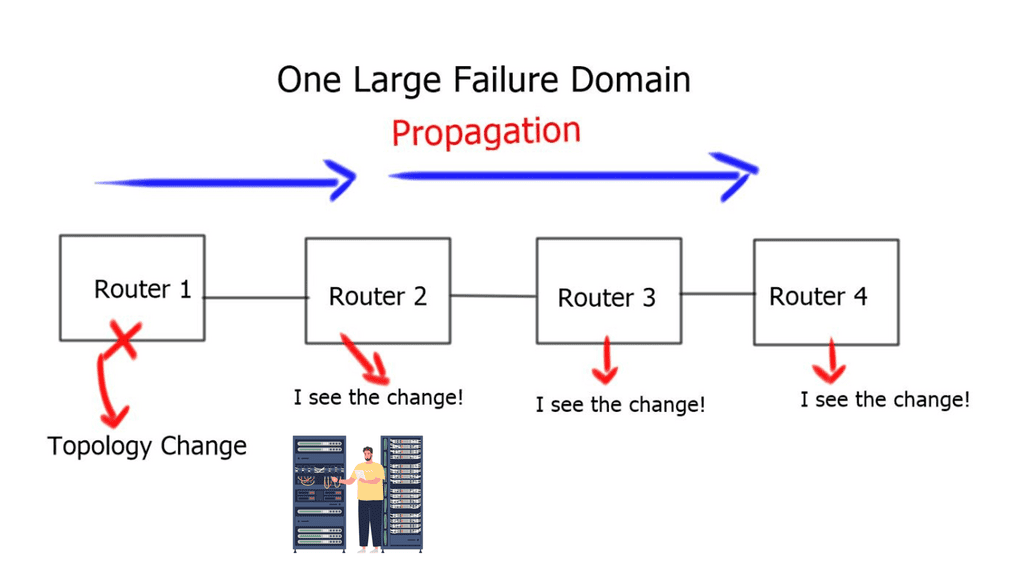

Why don’t we rebuild the Internet into one flat-switched domain, the flat earth model? The problem with one large flat architecture is that there is no way to reduce the state and control plane load on individual devices. To forward packets efficiently, every device would have to know how to reach every other device, and every device would be interrupted each time state changed on any router in the entire domain. Modularization virtualization, also called virtualization modularization, takes the opposite approach.
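A back-of-the-envelope comparison shows how quickly per-device state grows in a flat domain. This sketch rests on simplifying assumptions that are not from the original text: each device originates exactly one destination, and each remote module is represented by exactly one summary route.

```python
from typing import Optional


def flat_routes_per_device(devices: int) -> int:
    """Flat domain: every device holds a route to every other device."""
    return devices - 1


def modular_routes_per_device(devices: int, module_size: int) -> int:
    """Modular domain: full detail inside the module, one summary per remote module."""
    modules = -(-devices // module_size)  # ceiling division
    return (module_size - 1) + (modules - 1)


def devices_interrupted(devices: int, module_size: Optional[int] = None) -> int:
    """How many other devices must react to a single state change."""
    scope = devices if module_size is None else module_size
    return scope - 1


print(flat_routes_per_device(1000))         # 999
print(modular_routes_per_device(1000, 50))  # 68
print(devices_interrupted(1000))            # 999
print(devices_interrupted(1000, 50))        # 49
```

Under these assumptions, splitting 1,000 devices into modules of 50 cuts the routes held per device from 999 to 68, and a single change interrupts 49 other devices instead of 999.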

Modularity: Data Center Design

Modularity in data center design can be approached in two ways.

To begin with, each leaf (or pod, or “rack”) should be constructed as a complete unit. Each pod provides storage, processing, and other services to perform all the tasks associated with one specific service set. One pod may be designed to process and store Hadoop data, another for human resources management, or an application build environment.

This modularity allows the network designer to interchange different types of pods without affecting other pods or services in the network. By connecting (or disconnecting) services as needed, the fabric becomes a “black box”. The model is flexible for enterprises and other data center users whose needs constantly change.

In addition, pods can be modularized according to the type of resources they offer. Bare metal compute, virtualized compute, and block storage may each be housed in separate pods. As a result, the network operator can upgrade one type of resource en masse with minimal impact on the operation of any particular service in the data center. A solution like this is more suited to organizations that can virtualize most of their services onto standard hardware and want to manage the hardware life cycle separately from the software life cycle.

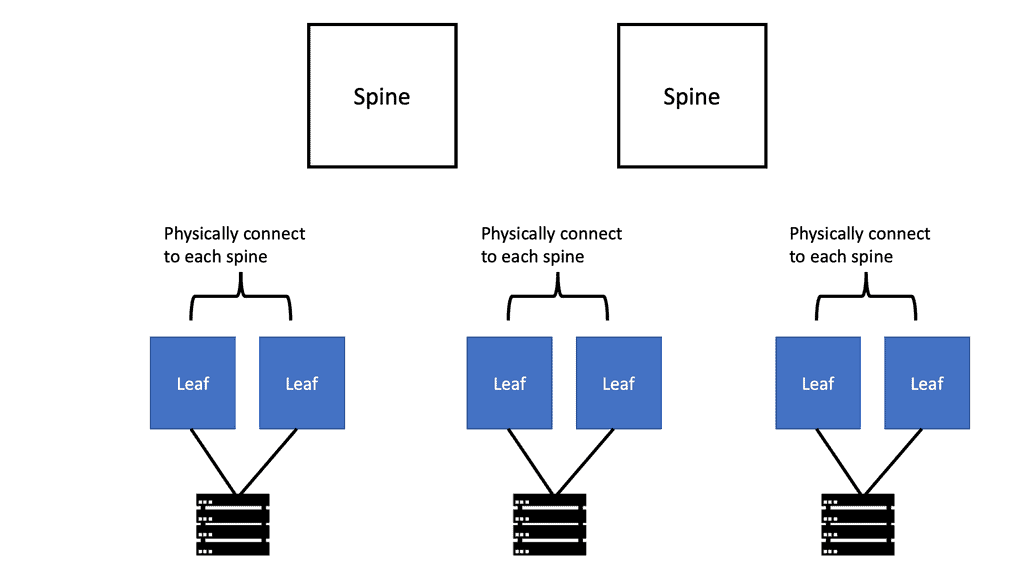

Modular Design – Leaf and Spine Architecture

### Understanding Leaf and Spine Architecture

At its core, the leaf and spine architecture consists of two main components: the spine switches and the leaf switches. Spine switches are the backbone of the network, connecting all leaf switches, which, in turn, connect to the servers and storage devices. Because every leaf connects to every spine, any leaf can reach any other leaf through a single spine switch, which keeps latency low and predictable. The fabric can be built non-blocking when each leaf has as much uplink capacity as server-facing capacity, and it scales more flexibly than traditional hierarchical models because spines or leaves can be added without redesigning the topology.
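A few lines of arithmetic capture the main sizing properties of such a fabric. This is a sketch: the port counts and speeds in the example are hypothetical, and the functions assume every leaf has one link to every spine.

```python
def fabric_links(leaves: int, spines: int) -> int:
    """Every leaf connects to every spine, so links grow as leaves x spines."""
    return leaves * spines


def equal_cost_paths(spines: int) -> int:
    """Between two leaves there is one two-hop path through each spine."""
    return spines


def oversubscription_ratio(server_ports: int, server_port_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Server-facing capacity divided by spine-facing capacity on one leaf."""
    return (server_ports * server_port_gbps) / (uplinks * uplink_gbps)


# Hypothetical leaf: 48 x 10G server ports, 4 x 40G uplinks (one per spine).
print(fabric_links(leaves=8, spines=4))       # 32
print(equal_cost_paths(spines=4))             # 4
print(oversubscription_ratio(48, 10, 4, 40))  # 3.0
```

A ratio of 1.0 or lower means the leaf can forward all server traffic toward the spines at once. Adding a spine raises the number of equal-cost paths and lowers the ratio without touching the existing leaves.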

### The Role of Modular Design

Modular design is a key feature of leaf and spine architecture, offering several advantages over monolithic network designs. By using interchangeable, standardized components, network administrators can easily integrate new technologies and expand the network as needed. This flexibility reduces downtime, simplifies maintenance, and enables organizations to adapt quickly to changing demands. Additionally, modular design allows for cost-effective scaling, as components can be added incrementally without the need for a complete network overhaul.


Network Modularity and Hierarchical Network Design

Hierarchical network design reaches beyond hub-and-spoke topologies at the module level and provides rules, or general design methods, that give the best overall network design.

The first rule is to assign each module a single function. Reducing the number of functions or roles assigned to any particular module will help. It will also streamline the configuration of devices within the module and along its edge.

The second general rule in the hierarchical method is to design the network modules so that every module at a given layer, or distance from the network core, has a roughly parallel function.

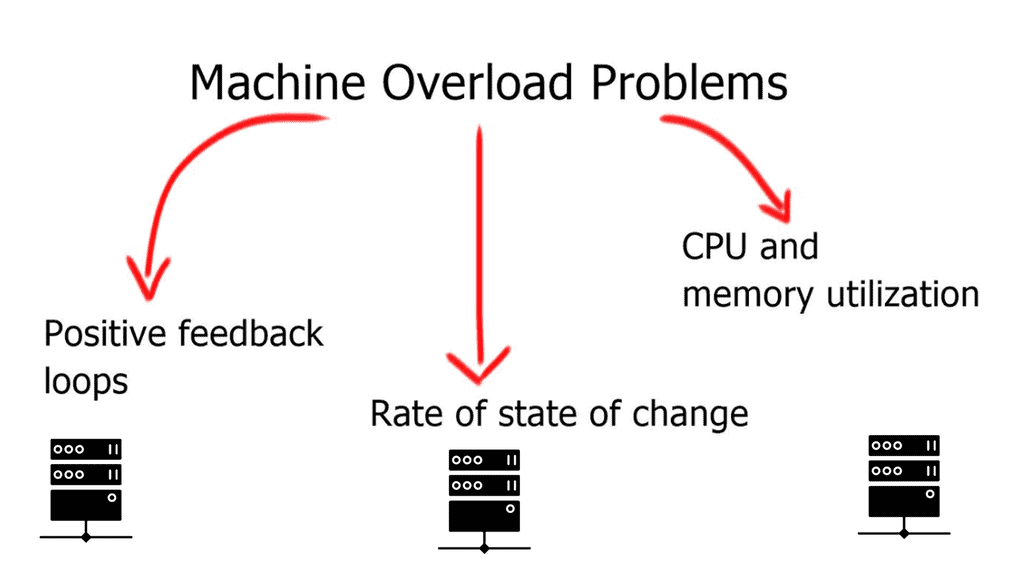

In a single flat domain, the amount of state and the rate at which it changes become impossible to maintain, and the result is information overload at the machine level. Machine overload can be broken down into the three independent problems below. The general idea is that too much information is detrimental to network efficiency. Some methods can reduce these problems, but no matter how much you optimize your design, fewer routes in a small domain are better than many routes in a large domain.

- CPU and memory utilization:

On most Catalyst platforms, routing information is stored in a special high-speed memory called TCAM. Unfortunately, TCAM is not infinite and is generally expensive. Large routing tables require more CPU cycles, physical memory, and TCAM.

- Rate of state change:

Every time the network topology changes, the control plane must adapt to the new topology. The bigger the domain, the more routers will have to recalculate the best path and propagate changes to their neighbors, increasing the rate of state change. Because MAC addresses are not hierarchical, a Layer 2 network has a much higher rate of state change than a Layer 3 network.

- Positive feedback loops:

Positive feedback loops combine the rate of state change with the rate of information flow: when processing control plane updates causes further changes, each change produces more updates.


How can we address these challenges? The answer is network design with modularization and information hiding using virtualization modularization.

Modularization, virtualization, and information hiding

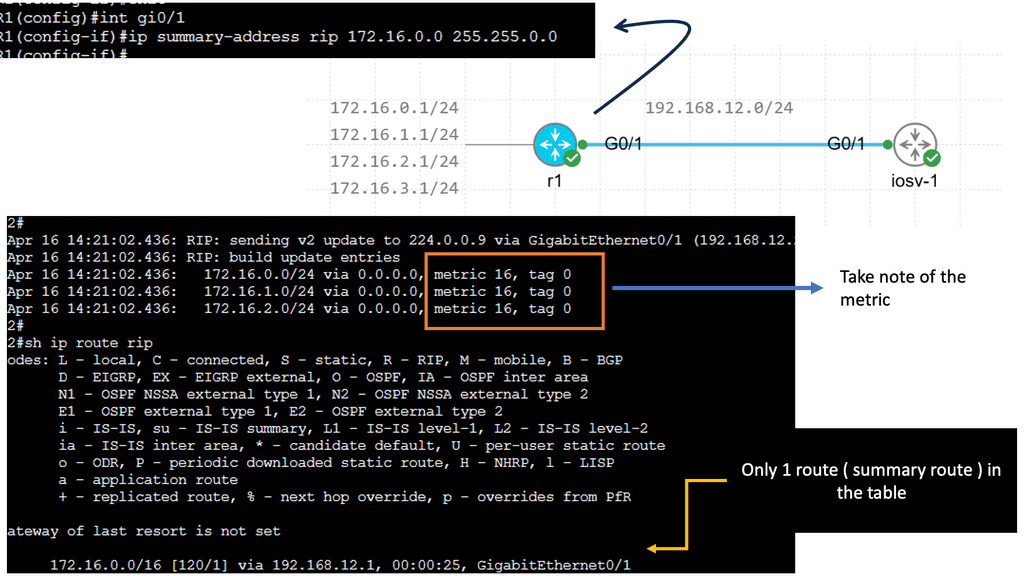

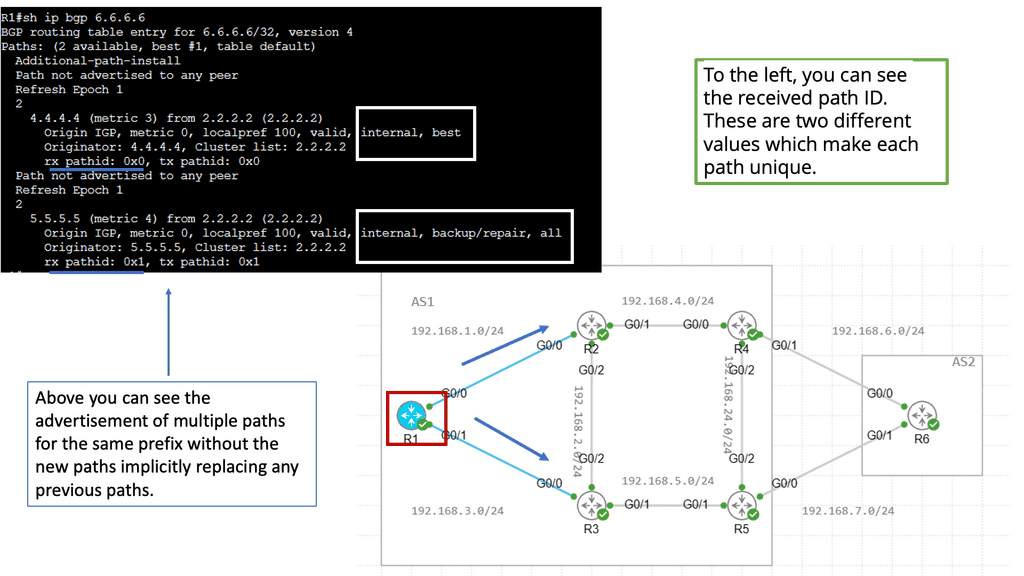

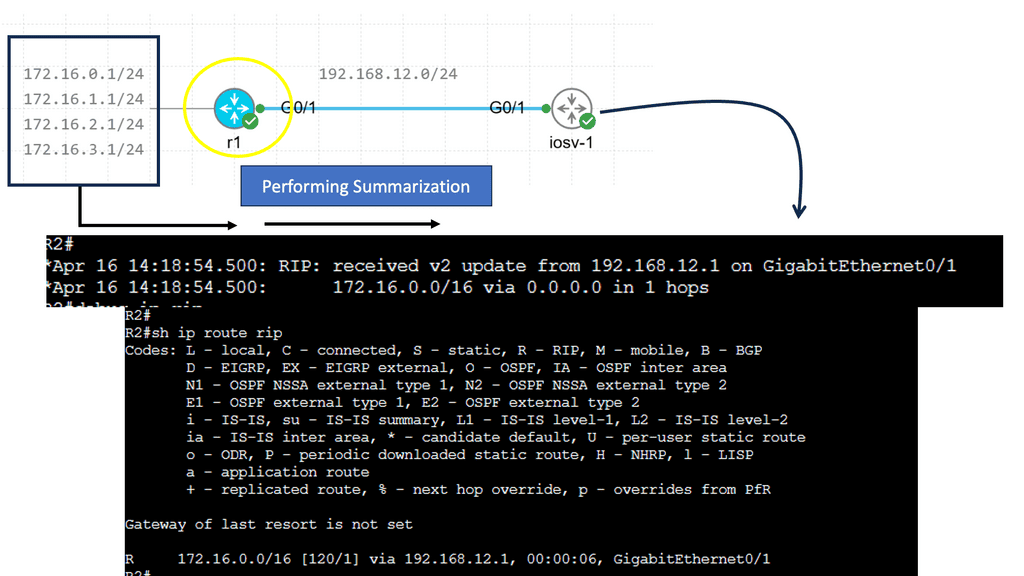

Information hiding reduces routing table sizes and state change rates either by combining multiple destinations into one summary prefix (aggregation) or by separating destinations into sub-topologies (virtualization). Information hiding can also be carried out by configuring route filters at specific network points.

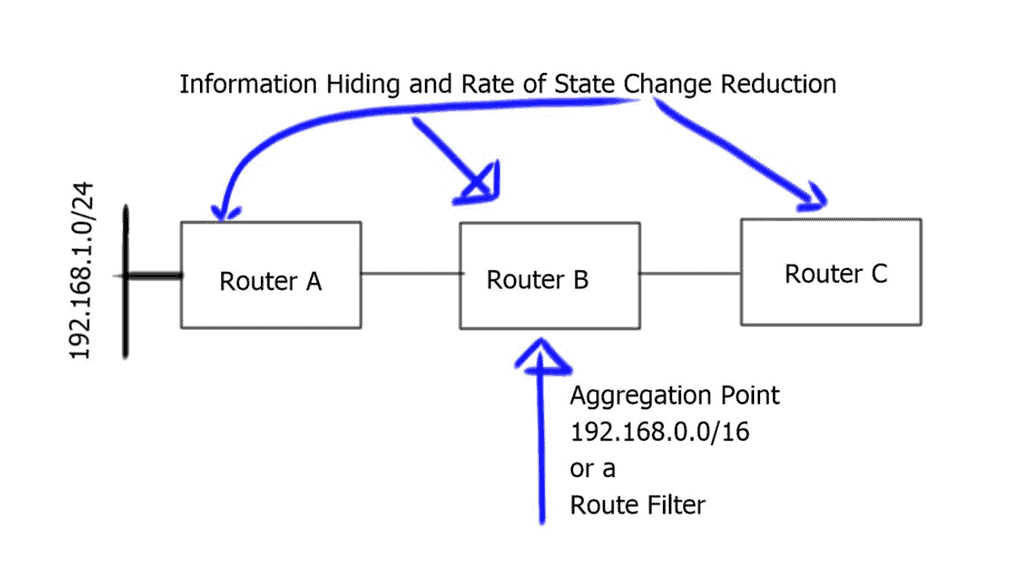

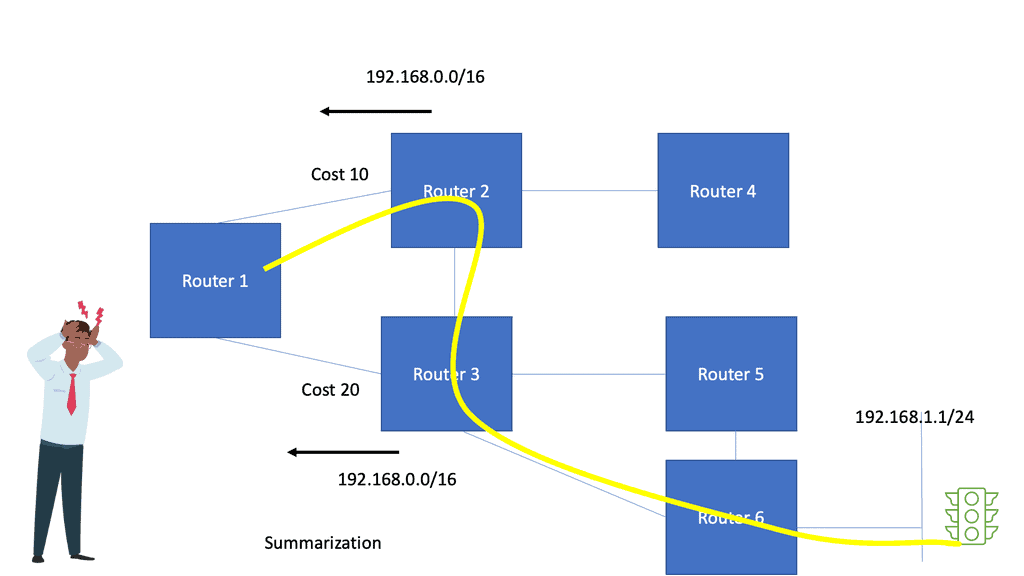

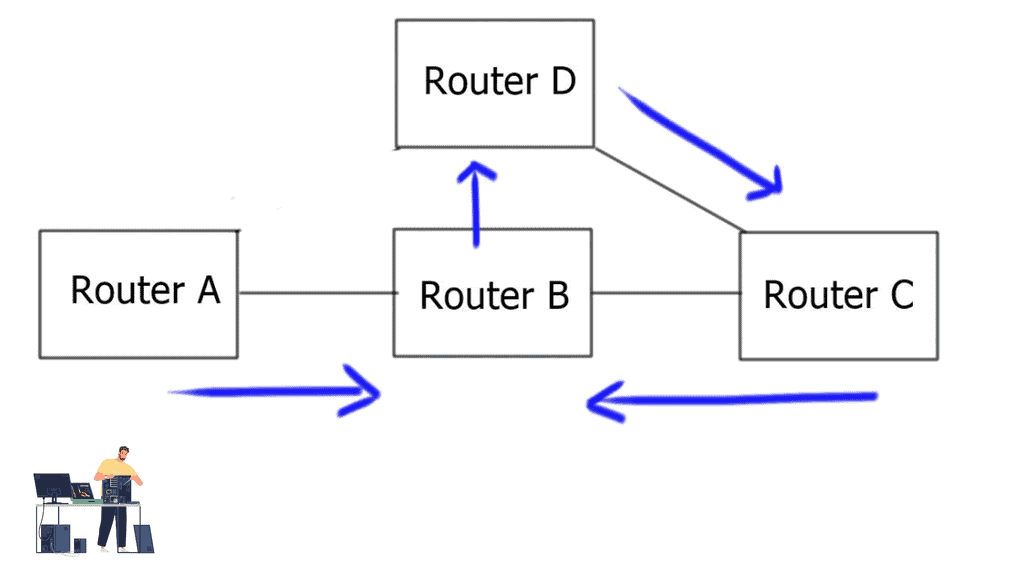

Router B summarizes network 192.168.0.0/16 in the diagram below and sends the aggregate route to Router C. The aggregation process hides more specific routes behind Router A. Router C never receives any specifics or state changes for those specifics, so it doesn’t have to do any recalculations if the reachability of those networks changes. Link flaps and topology changes on Router A will not be known to Router C and vice versa.
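The effect of the summary can be sketched with Python's standard `ipaddress` module. The four /24 networks behind Router A are hypothetical, since the original only names the 192.168.0.0/16 aggregate, and `advertise` is an illustrative helper rather than any router's actual behaviour.

```python
import ipaddress

SUMMARY = ipaddress.ip_network("192.168.0.0/16")

# Hypothetical specific routes that Router B learns from Router A.
specifics = [ipaddress.ip_network(f"192.168.{i}.0/24") for i in range(4)]


def advertise(routes, summary):
    """Return what Router B sends to Router C.

    Any route covered by the summary is hidden behind it. Routes outside
    the summary are passed through unchanged.
    """
    covered = [r for r in routes if r.subnet_of(summary)]
    uncovered = [r for r in routes if not r.subnet_of(summary)]
    return ([summary] if covered else []) + uncovered


before = advertise(specifics, SUMMARY)
after = advertise(specifics[1:], SUMMARY)  # 192.168.0.0/24 goes down behind Router A

print(before)           # [IPv4Network('192.168.0.0/16')]
print(before == after)  # True: Router C sees no change and recalculates nothing
```

As long as at least one covered specific remains, the advertisement to Router C is identical, which is why flaps behind Router A stay invisible to it.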


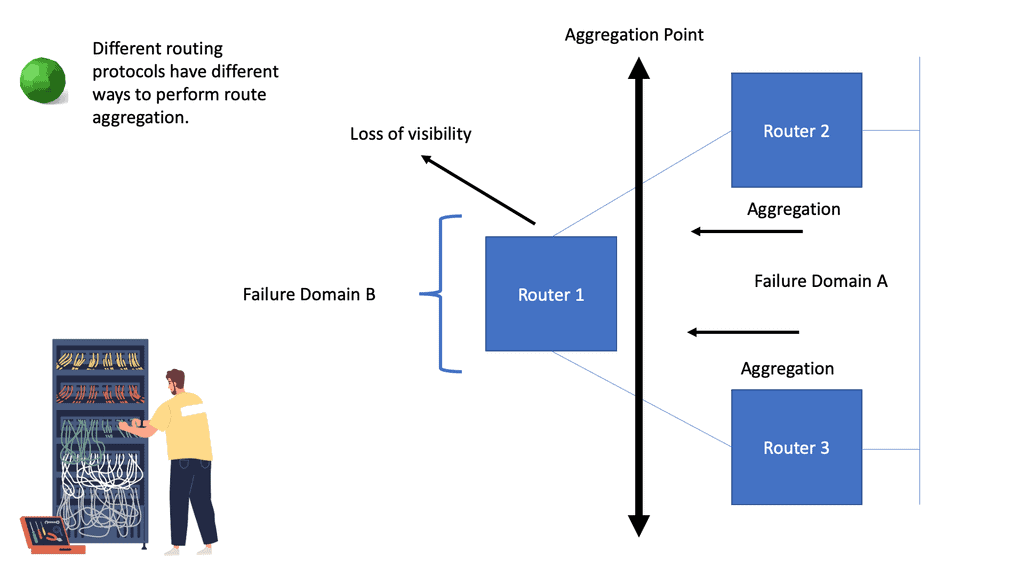

Routers A and B are also in a separate failure domain from Router C, and Router C’s view of the network differs from that of Routers A and B. A failure domain is the set of devices that must recalculate their control plane information in the case of a topology change.

When a link or node fails in one fault domain, it does not affect the other. There is an actual split in the network. You could argue that aggregation does not split the network into “true” fault domains, as you can still have backup paths (specific routes) with different metrics reachable in the other domain.

If we split the network into fault domains, devices within each fault domain only compute paths within their own fault domain. This moves the network closer to the MTTR/MTBF balance point, another reason to separate complexity from complexity.
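The definition of a failure domain maps directly onto a lookup. In this sketch the domain names are hypothetical, and the membership follows the three-router example above.

```python
# Hypothetical domain membership: A and B share a domain, C sits in another.
FAILURE_DOMAINS = {"A": "domain-1", "B": "domain-1", "C": "domain-2"}


def devices_to_recalculate(changed_router, domains):
    """Return the routers that must recompute after a change on one router.

    Only routers in the same failure domain as the change are affected.
    """
    domain = domains[changed_router]
    return sorted(r for r, d in domains.items() if d == domain)


print(devices_to_recalculate("A", FAILURE_DOMAINS))  # ['A', 'B']
print(devices_to_recalculate("C", FAILURE_DOMAINS))  # ['C']
```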

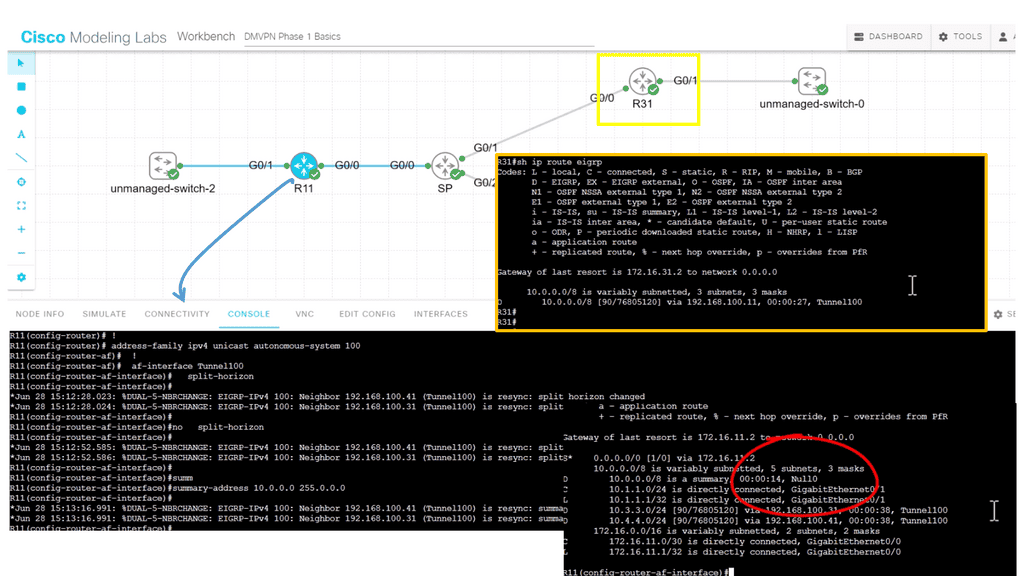

Virtualization Modularization

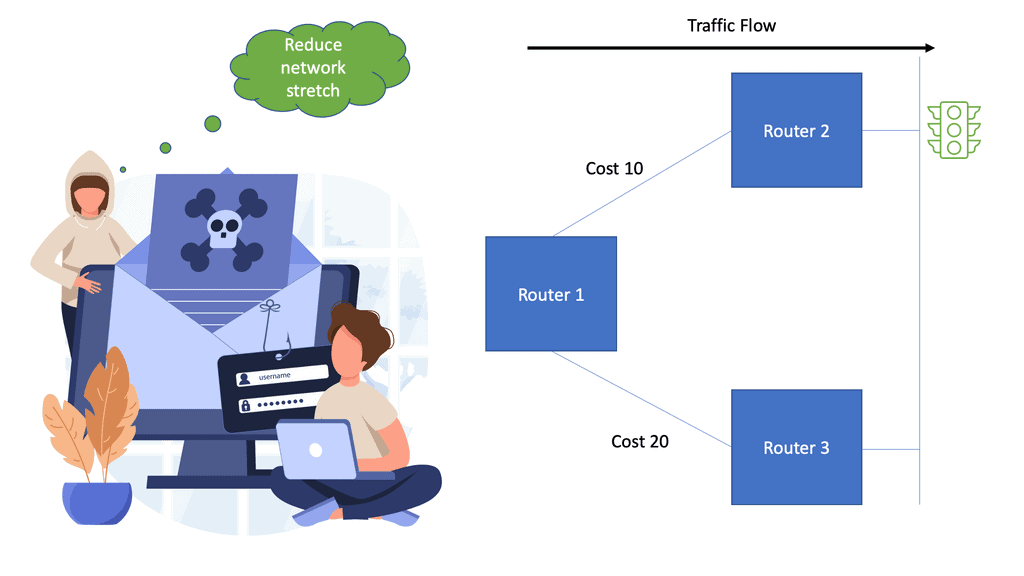

The essence of network design and fault domain isolation is based on the modularization principle. Modularization breaks up the control plane, giving you different information in different network sections. Engineer the network so that it can handle organic growth and change within fixed limits, and when a module gets too big, move on to the next module. Repeatable configurations create a more manageable network, so each topology should be designed and configured using the same tools where possible.

Why Modularize?

The prime reason to introduce modularity and a design with modular building blocks is to reduce the amount of data any particular network device must handle when it describes and calculates paths to a specific destination. The less information the routing process has to handle, the faster the network will converge, especially in conjunction with tight modularization limits.

The essence of modularization can be traced back to why the OSI and TCP/IP models were introduced. So why do we have these models? They allow network engineers to break big problems into little pieces so we can focus on specific elements and not get clouded by the complexity of the entire problem all at once. With modularization, particular areas of the network are assigned specific tasks.

The core focuses solely on fast packet forwarding, while the edge carries out functions such as policing, packet filtering, and QoS classification. Modularization is done by assigning specific tasks to different points in the network.

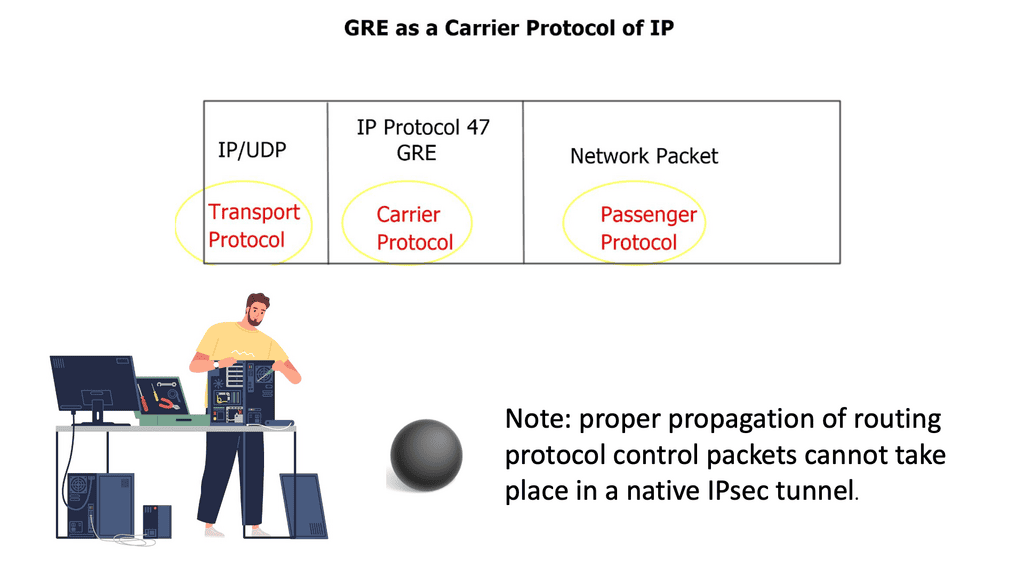

Virtualization techniques to perform modularization

Virtualization techniques such as MPLS and 802.1Q are also ways to perform modularization. The difference is that they are vertical rather than horizontal. Virtualization can be thought of as hiding information in vertical layers within a network. So why don’t we perform modularization on every router and put each router into its own domain? The answer is network stretch.
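Stretch is commonly described as the difference between the shortest path through the network and the path traffic actually takes once information has been hidden. The sketch below assumes a hypothetical five-router ring and assumes that hiding information forces traffic from A to D through router B. Neither the topology nor the helper names come from the original text.

```python
from collections import deque

# Hypothetical ring topology: A - B - C - D - E - A
GRAPH = {
    "A": ["B", "E"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "E"],
    "E": ["A", "D"],
}


def hop_count(graph, src, dst):
    """Breadth-first search: hops on the shortest path from src to dst."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, dist + 1))
    raise ValueError(f"no path from {src} to {dst}")


def stretch(graph, src, dst, forced_via):
    """Extra hops incurred when traffic must pass through forced_via."""
    shortest = hop_count(graph, src, dst)
    actual = hop_count(graph, src, forced_via) + hop_count(graph, forced_via, dst)
    return actual - shortest


print(hop_count(GRAPH, "A", "D"))               # 2  (A - E - D)
print(stretch(GRAPH, "A", "D", forced_via="B"))  # 1  (A - B - C - D is 3 hops)
```

Every boundary that hides information can add stretch like this, so making each router its own domain would trade a small amount of state for consistently suboptimal paths.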

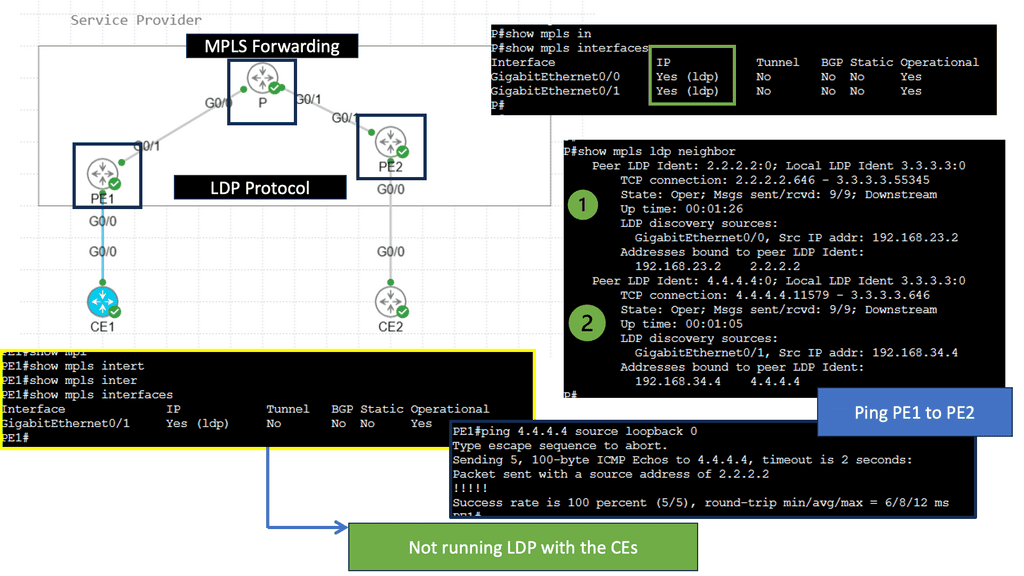

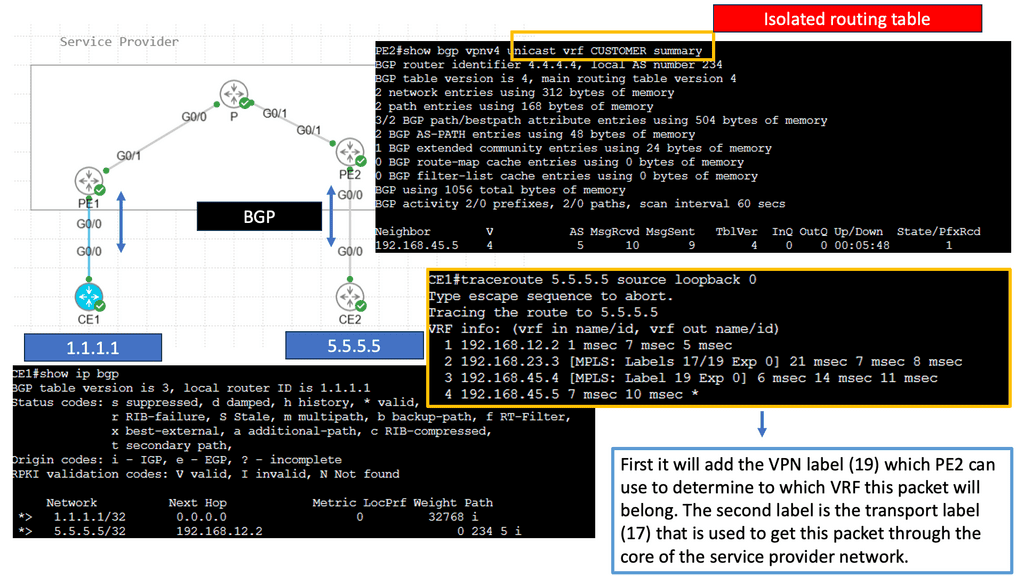

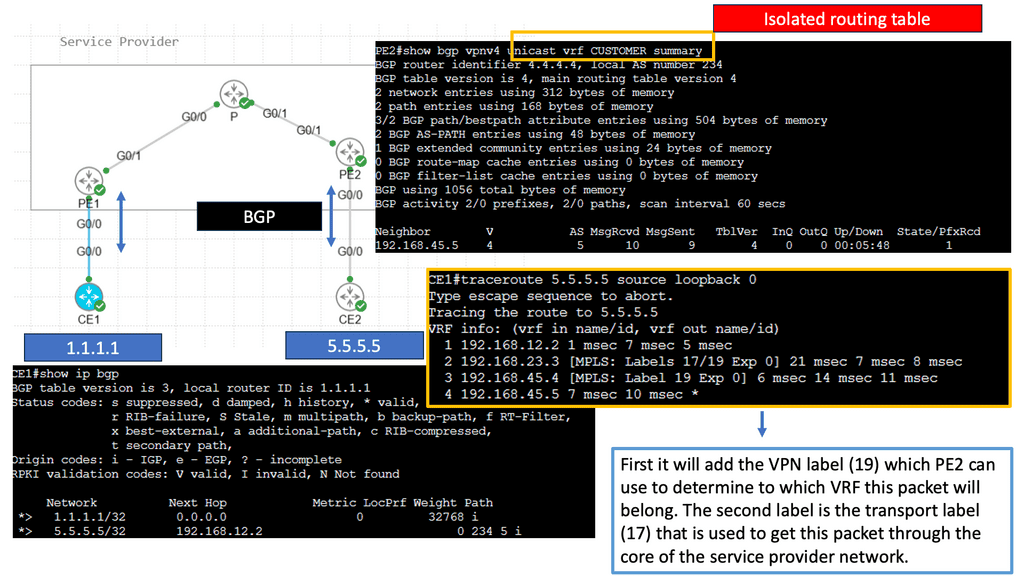

MPLS provides modularization by providing abstraction with labels. MPLS uses predetermined “labels” to forward traffic instead of relying solely on the ultimate source and destination addresses. This is done by attaching a short, fixed-length label to the packet. The label identifies the packet’s forwarding equivalence class (FEC), the group of packets that are forwarded in the same way, and the label header also carries traffic class bits that can signal class of service (CoS).

Example MPLS Technology

Multi-Protocol Label Switching (MPLS) is a sophisticated network mechanism that directs data from one node to the next based on short path labels rather than long network addresses. This technique avoids complex lookups in a routing table and speeds up the overall traffic flow. By encapsulating packets with labels, MPLS can streamline the delivery of various types of traffic, whether it’s IP packets, native ATM, or Ethernet frames. Its versatility and efficiency make it a preferred choice in many enterprise and service provider networks.
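The label lookup can be sketched in a few lines. The 32-bit label stack entry layout (20-bit label, 3-bit traffic class, 1-bit bottom-of-stack flag, 8-bit TTL) follows the standard MPLS encoding. The forwarding table contents and interface names are hypothetical.

```python
import struct


def pack_label_entry(label, tc=0, bottom_of_stack=True, ttl=64):
    """Encode one 32-bit MPLS label stack entry."""
    if not (0 <= label < 2**20 and 0 <= tc < 8 and 0 <= ttl < 256):
        raise ValueError("field out of range")
    word = (label << 12) | (tc << 9) | (int(bottom_of_stack) << 8) | ttl
    return struct.pack("!I", word)


def unpack_label_entry(data):
    """Decode the first label stack entry of a packet."""
    (word,) = struct.unpack("!I", data[:4])
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "bottom_of_stack": bool((word >> 8) & 0x1),
        "ttl": word & 0xFF,
    }


# Hypothetical label forwarding table: incoming label -> (outgoing label, interface)
LFIB = {100: (200, "eth1"), 101: (201, "eth2")}


def swap(data, lfib):
    """Swap the top label using an exact-match lookup and decrement the TTL."""
    entry = unpack_label_entry(data)
    out_label, out_interface = lfib[entry["label"]]
    new_entry = pack_label_entry(
        out_label, entry["tc"], entry["bottom_of_stack"], entry["ttl"] - 1
    )
    return new_entry + data[4:], out_interface


packet = pack_label_entry(100, tc=5, ttl=64) + b"ip-payload"
forwarded, interface = swap(packet, LFIB)
print(unpack_label_entry(forwarded)["label"], interface)  # 200 eth1
```

The transit node in this sketch never parses the payload behind the label. That is the information hiding the section describes: the core forwards on labels while the addressing detail stays at the edge.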

Key Advantages to Modularization

A: – Enhanced Scalability and Flexibility:

One of the primary benefits of modularization virtualization is its ability to enhance scalability and flexibility. Organizations can quickly scale their infrastructure up or down by virtualizing individual modules based on demand. This flexibility allows businesses to adapt rapidly to changing market conditions and optimize resource allocation.

B: – Improved Fault Isolation and Resilience:

Modularization virtualization also improves fault isolation and resilience. Since each module operates independently, a failure or issue in one module does not impact the entire system. This isolation ensures that critical functions remain unaffected, enhancing the overall reliability and uptime of the system.

C: – Simplified Development and Maintenance:

With modularization virtualization, development and maintenance become more manageable and efficient. Each module can be developed and tested independently, enabling faster deployment and reducing the risk of errors. Additionally, updates or changes to a specific module can be implemented without disrupting the entire system, minimizing downtime and reducing maintenance efforts.

Summary: Modularization Virtualization

In today’s fast-paced technological landscape, businesses constantly seek ways to optimize their operations and maximize efficiency. Two concepts that have gained significant attention in recent years are modularization and virtualization. In this blog post, we explored the power of these two strategies and how they can revolutionize various industries.

Understanding Modularization

In simple terms, modularization refers to breaking down complex systems or processes into smaller, self-contained modules. Each module serves a specific function and can be developed, tested, and deployed independently. This approach offers several benefits, such as improved scalability, easier maintenance, and faster development cycles. Additionally, modularization promotes code reusability, allowing businesses to save time and resources by leveraging existing modules in different projects.

Unleashing the Potential of Virtualization

Virtualization, for its part, involves creating virtual versions of physical resources, such as servers, storage devices, or networks. By decoupling software from hardware, virtualization enables businesses to achieve greater flexibility, cost-effectiveness, and resource utilization. Virtualization technology allows for creating virtual machines, virtual networks, and virtual storage, all of which can be easily managed and scaled based on demand. This reduces infrastructure costs, enhances disaster recovery capabilities, and simplifies software deployment.

Transforming Industries with Modularization and Virtualization

The combined power of modularization and virtualization can potentially transform numerous industries. Let’s examine a few examples:

1. IT Infrastructure: Modularization and virtualization can revolutionize how IT infrastructure is managed. By breaking down complex systems into modular components and leveraging virtualization, businesses can achieve greater agility, scalability, and cost-efficiency in managing their IT resources.

2. Manufacturing: Modularization allows for creating modular production units that can be easily reconfigured to adapt to changing demands. Coupled with virtualization, manufacturers can simulate and optimize their production processes, reducing waste and improving overall productivity.

3. Software Development: Modularization and virtualization are crucial in modern software development practices. Modular code allows for easier collaboration among developers and promotes rapid iteration. Virtualization enables developers to create virtual environments for testing, ensuring software compatibility and stability across different platforms.

Conclusion

Modularization and virtualization are not just buzzwords; they are powerful strategies that can bring significant transformations across industries. By embracing modularization, businesses can achieve flexibility and scalability in their operations, while virtualization empowers them to optimize resource utilization and reduce costs. The synergy between these two concepts opens up endless possibilities for innovation and growth.