Application Delivery Networks

In today's fast-paced digital world, businesses rely heavily on applications to deliver services and engage customers. However, as the demand for seamless connectivity and optimal performance increases, so does the need for a robust and efficient infrastructure. This is where Application Delivery Networks (ADNs) come into play. In this blog post, we will explore the importance of ADNs and how they enhance the delivery of applications.

An Application Delivery Network is a suite of technologies designed to optimize applications' performance, security, and availability across the internet. It bridges users and applications, ensuring data flows smoothly, securely, and efficiently.

ADNs, which are closely related to content delivery networks (CDNs), are distributed networks of servers strategically placed across various geographical locations. Their primary purpose is to efficiently deliver web content, applications, and other digital assets to end-users. By serving requests from servers closer to the user, ADNs reduce latency, improve website performance, and speed up content delivery.

Accelerated Website Performance: ADNs employ various optimization techniques such as caching, compression, and content prioritization to enhance website performance. By reducing page load times, businesses can improve user engagement, increase conversions, and boost overall customer satisfaction.

Global Scalability: With an ADN in place, businesses can effortlessly scale their online presence globally. ADNs have servers strategically located worldwide, allowing organizations to effectively serve content to users regardless of their geographic location. This scalability ensures consistent and reliable performance, irrespective of the user's proximity to the server.

Security Protection: ADNs act as a protective shield against cyber threats, including Distributed Denial of Service (DDoS) attacks. These networks are equipped with security measures such as real-time threat monitoring, traffic filtering, and intelligent routing, which help keep the online experience secure and uninterrupted for users.

E-commerce Websites: ADNs play a crucial role in optimizing e-commerce websites by delivering product images, videos, and other content quickly. This leads to improved user experience, increased conversion rates, and ultimately, higher revenue.

Media Streaming Platforms: Streaming platforms heavily rely on ADNs to deliver high-quality video content without buffering or interruptions. By distributing the content across multiple servers, ADNs ensure smooth playback and an enjoyable streaming experience for users worldwide.

In conclusion, implementing an Application Delivery Network is a game-changer for businesses aiming to enhance digital experiences. With accelerated website performance, global scalability, and enhanced security, ADNs pave the way for improved user satisfaction, increased engagement, and ultimately, business success in the digital realm.

Matt Conran

Highlights: Application Delivery Networks

Content Delivery Networks

Using server farms and Web proxies can help build large sites and improve Web performance, but they are insufficient for viral sites serving global content. A different approach is needed for these sites.

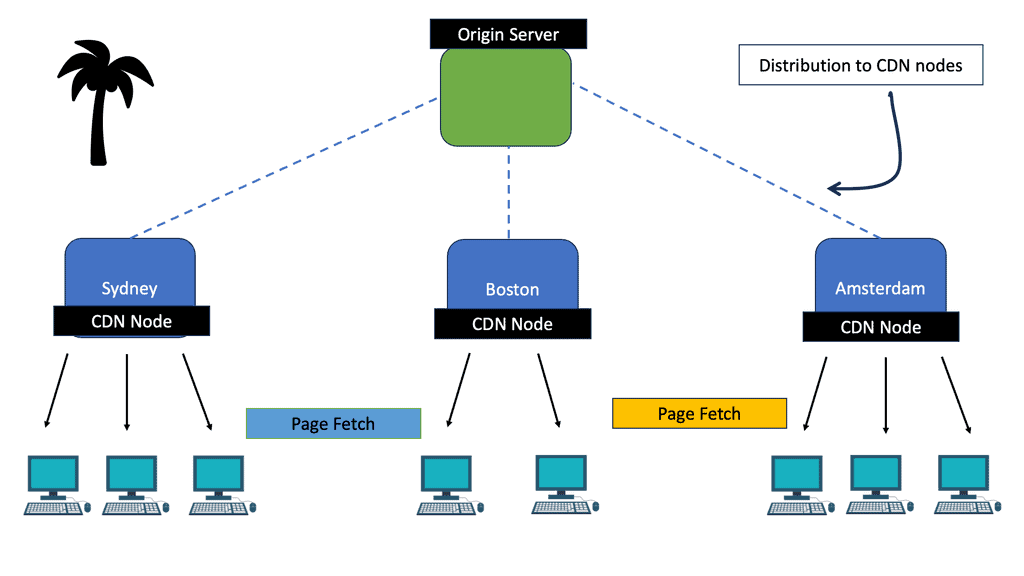

Content Delivery Networks (CDNs) turn the concept of traditional Web caching on its head. Rather than having the client search for a copy of the requested page in a nearby cache, the provider places a copy of the page in a set of nodes at different locations and instructs the client to use a nearby node as the server.

The diagram shows the path data takes when a CDN distributes it. It is a tree. In this example, the origin server distributes a copy of the content to the Sydney, Boston, and Amsterdam nodes, as the dashed lines indicate. Each client is then responsible for fetching pages from the nearest node in the CDN, as the solid lines show. As a result, both Sydney clients fetch the Sydney copy of the page; neither has to fetch it from the origin server in Europe.
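The "nearest node" idea can be sketched in a few lines of Python. This is only an illustration: the round-trip times and client names below are made up, and real CDNs usually steer clients with DNS or anycast rather than an explicit lookup table like this one.

```python
# Hypothetical round-trip times in milliseconds from each client to each CDN node.
# The node names follow the example in the text; the numbers are invented.
RTT_MS = {
    "sydney-client": {"Sydney": 8, "Boston": 210, "Amsterdam": 290},
    "paris-client": {"Sydney": 300, "Boston": 95, "Amsterdam": 12},
}


def nearest_node(client, rtt_table=RTT_MS):
    """Return the CDN node with the lowest measured RTT for this client."""
    rtts = rtt_table[client]
    return min(rtts, key=rtts.get)


print(nearest_node("sydney-client"))
print(nearest_node("paris-client"))
```

In this sketch, "nearest" means lowest round-trip time rather than shortest geographic distance, which is usually the more useful measure.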

There are three advantages to using a tree structure. First, content distribution can be scaled up to as many clients as needed: when the distribution among CDN nodes becomes the bottleneck, more levels can be added to the tree. The tree structure stays efficient regardless of how many clients there are.

The origin server is also not overloaded, because it communicates with the many clients through the CDN tree and does not have to respond to each page request on its own. Second, each client gets better performance by fetching pages from a nearby server than from a distant one. TCP slow-start ramps up more quickly because of the shorter round-trip time, and a shorter network path passes through fewer congested regions of the Internet.
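To see why round-trip time matters so much during slow-start, here is a rough back-of-the-envelope model. It assumes an initial congestion window of 10 segments that doubles every round trip, with no packet loss, no receive-window limit, and no handshake time. Those are simplifying assumptions for illustration, not a full model of TCP.

```python
def slow_start_rounds(total_segments, initial_cwnd=10):
    """Count the round trips needed to send total_segments when the
    congestion window doubles every RTT (idealized slow-start, no loss)."""
    sent, cwnd, rounds = 0, initial_cwnd, 0
    while sent < total_segments:
        sent += cwnd   # send a full window this round trip
        cwnd *= 2      # the window doubles once the ACKs come back
        rounds += 1
    return rounds


def transfer_time_ms(total_segments, rtt_ms, initial_cwnd=10):
    """Approximate transfer time: number of round trips times the RTT."""
    return slow_start_rounds(total_segments, initial_cwnd) * rtt_ms


# A page of 150 segments, fetched from a nearby node and from a distant server.
print(transfer_time_ms(150, rtt_ms=10))
print(transfer_time_ms(150, rtt_ms=200))
```

The number of round trips is the same in both cases, so in this model the transfer time scales directly with the RTT. That is why a nearby node helps even when bandwidth is plentiful.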

Third, the total load placed on the network is kept to a minimum. If the CDN nodes are well placed, traffic for a particular page should pass over each part of the network only once. This matters because someone eventually pays for network bandwidth.

Varying Applications

Compared to 15 years ago, when load balancers first arrived, the number of applications has exploded. Blogs, content sharing, wikis, shared calendars, and social media are all content that load balancers (ADCs) must now serve. A plethora of “chattier” protocols exist with different requirements, and every application places its own demands on the network for the functions it provides.

Each application has different expectations for its service levels. Slow networks and high server load mean you cannot efficiently run applications and web-based services. Data is slow to load, and productivity slips. Application delivery controllers (ADCs), or load balancers, can detect and adapt to changing network conditions for public, private, and hybrid cloud traffic patterns.

Before you proceed, you may find the following posts helpful:

- Stateful Inspection Firewall

- Application Delivery Architecture

- Load Balancer Scaling

- A10 Networks

- Stateless Network Functions

- Network Security Components

- WAN SDN

Back to basics with load balancing

Load balancing is the distribution of network traffic across a group of endpoints. It is also a way to get past the performance limits of individual hardware and software systems. When you are faced with growing user demand and have maxed out performance, you have two options: scale up or scale out. Scaling up, or vertical scaling, has physical computational limits and can be costly. Scaling out, or horizontal scaling, allows you to distribute the computational load across as many systems as necessary to handle the workload. When scaling out, a load balancer helps spread the workload.
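As a minimal sketch of spreading work across a scaled-out pool, here is round-robin selection in Python. Round-robin is only one of several common algorithms, and the class and backend names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order (illustrative only)."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = cycle(list(backends))

    def pick(self):
        """Return the next backend in the rotation."""
        return next(self._backends)


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
assignments = [lb.pick() for _ in range(6)]
print(assignments)
```

Scaling out then amounts to building the balancer with a longer backend list. The selection logic does not change.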

The Benefits of Application Delivery Networks:

1. Enhanced Network Connectivity: ADNs utilize techniques like load balancing, caching, and content optimization to deliver applications faster and improve user experience. By distributing traffic intelligently, ADNs ensure that no single server is overwhelmed, leading to faster response times and reduced latency.

2. Scalability: ADNs enable businesses to scale their applications effortlessly. With the ability to add or remove servers dynamically, ADNs accommodate high traffic demands without compromising performance. This scalability ensures businesses can handle sudden spikes in user activity or seasonal fluctuations without disruption.

3. Security: In an era of increasing cyber threats, ADNs provide robust security features to protect applications and data from unauthorized access, DDoS attacks, and other vulnerabilities. ADNs employ advanced security mechanisms such as SSL encryption, web application firewalls, and intrusion detection systems to safeguard critical assets.

4. Global Load Balancing: As businesses expand across different geographical regions, ADNs offer global load balancing capabilities. By strategically distributing traffic across multiple data centers, ADNs ensure that users are seamlessly connected to the nearest server, reducing latency and optimizing performance.

5. Improved Availability: ADNs employ techniques like health monitoring and failover mechanisms to ensure high availability of applications. In the event of a server failure, ADNs automatically redirect requests to healthy servers, minimizing downtime and improving overall reliability.
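The health monitoring and failover behavior described in the list can be sketched as a pool that skips servers marked unhealthy. In a real ADC an active probe (for example, a periodic HTTP or TCP check) would set the health flag; here it is set by hand, and all names are hypothetical.

```python
class HealthAwarePool:
    """Round-robin over servers, skipping any that are marked unhealthy."""

    def __init__(self, servers):
        self.healthy = {server: True for server in servers}
        self._next = 0

    def mark(self, server, is_healthy):
        """Record the result of a health check for one server."""
        self.healthy[server] = is_healthy

    def pick(self):
        """Return the next healthy server, or raise if none is available."""
        servers = list(self.healthy)
        for _ in range(len(servers)):
            candidate = servers[self._next % len(servers)]
            self._next += 1
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy servers available")


pool = HealthAwarePool(["a", "b", "c"])
pool.mark("b", False)  # simulate a failed health check on server b
picks = [pool.pick() for _ in range(4)]
print(picks)
```

Once server b passes its checks again, calling `pool.mark("b", True)` puts it back into rotation.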

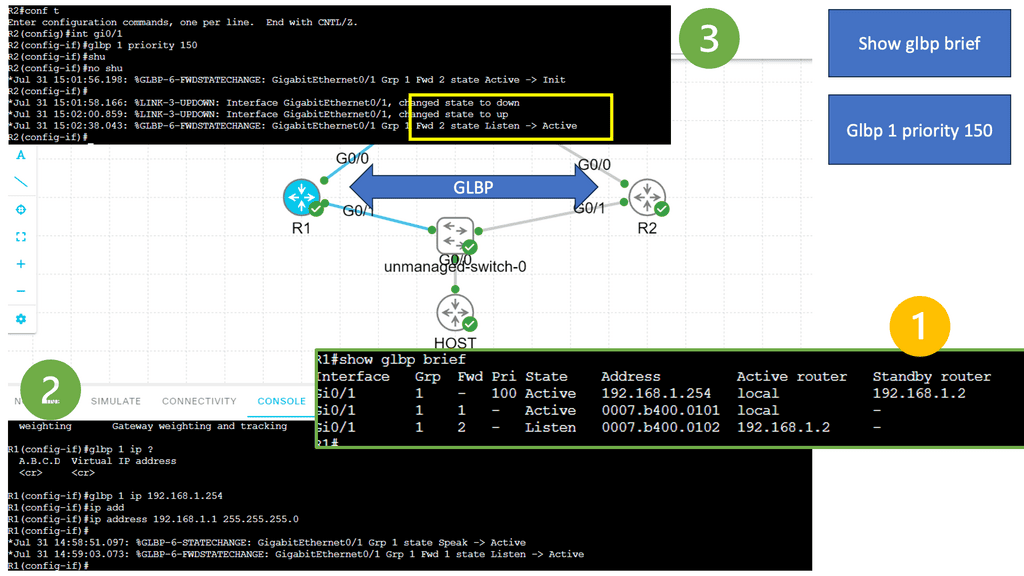

Lab on GLBP

Gateway Load Balancing Protocol (GLBP) is a Cisco proprietary first-hop redundancy protocol designed to provide load balancing and redundancy for IP traffic across multiple routers or gateways. It improves on the commonly used Hot Standby Router Protocol (HSRP) by letting several gateways forward traffic at the same time. It is primarily used in enterprise networks to distribute traffic across multiple paths, making better use of network resources. Notice below that when we change the GLBP priority, the role of the device changes.

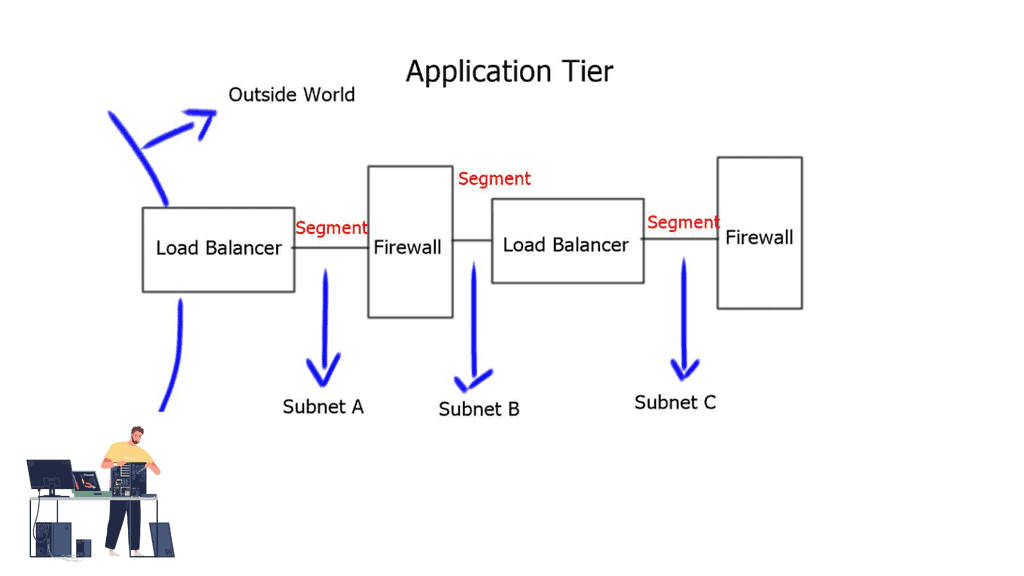
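The role change seen in the lab can be approximated with a small election sketch. It assumes the usual GLBP rule that the router with the highest priority becomes the Active Virtual Gateway (AVG), with the highest IP address breaking ties, and it assumes preemption is enabled so that a priority change takes effect. The router names and addresses are hypothetical.

```python
from ipaddress import ip_address


def elect_avg(routers):
    """Pick the AVG from a dict of name -> (priority, ip).
    Highest priority wins; the highest IP address breaks ties."""
    return max(
        routers,
        key=lambda name: (routers[name][0], ip_address(routers[name][1])),
    )


routers = {"R1": (100, "10.0.0.1"), "R2": (100, "10.0.0.2")}
before = elect_avg(routers)  # equal priority, so the higher IP wins

routers["R1"] = (110, "10.0.0.1")  # raise R1's priority
after = elect_avg(routers)

print(before, after)
```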

Application Delivery Network and Network Control Points

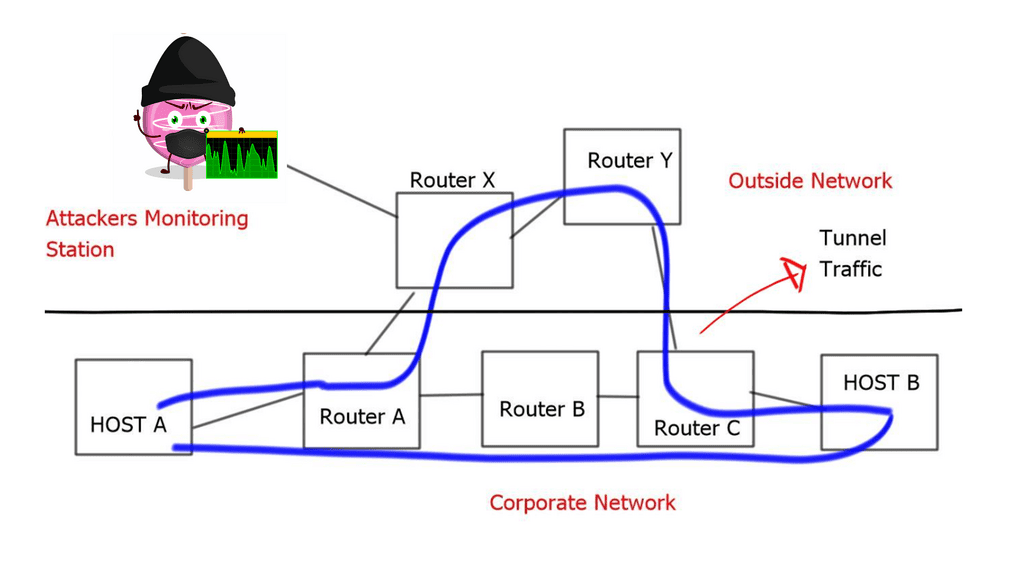

Application delivery controllers act as network control points to protect our networks. We use them to improve application service levels delivered across networks. Challenges for securing the data center range from preventing denial-of-service attacks at the network layer to preventing them at the application layer.

There is also the question of how to connect data centers so that on-premises infrastructure links to remote cloud services and traffic bursts between both locations are supported. When you look at the needs of the data center, the network is the control point, and nothing is more important than this control point. ADCs allow you to insert control points and enforce policies at different points of the network.

Case Study: Citrix Netscaler

The company was founded in 1998, and its first product launched in 2000. That product was a simple Transmission Control Protocol (TCP) proxy: all it did was sit behind a load balancer, proxy TCP connections at Layer 4, and offload them from the backend servers. As the web grew, the main scalability issue became the load placed on backend servers by the increasing number of TCP connections. So, they wrote their own performance-oriented custom TCP stack.

They have a fast PCI architecture with no interrupts. Netscaler wrote its code with the x86 architecture in mind. x86 systems pair fast processors with slower dynamic random-access memory (DRAM), so the processor should work out of its local cache as much as possible, but that does not match how network traffic flows. Netscaler's code processes one packet while admitting another, which gives it strong latency figures.

Application delivery network and TriScale technology

TriScale technology changes how the Application Delivery Controller (ADC) is provisioned and managed. It brings cloud agility to data centers. TriScale allows networks to scale up, out, and in via consolidation.

Scale-out: Clustering

For high availability (HA), Netscaler offers only active/standby and clustering. They oppose active/active because such deployments are not truly active: most are built by serving one application through one load balancer and another application through a second load balancer. This does not give you any more capacity, and you cannot oversubscribe either node, because if one fails, the other has to take over and service the additional load from the failed load balancer.
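The capacity argument is simple arithmetic, sketched below. It assumes two identical nodes and expresses each node's load as a fraction of one node's capacity. The function names and numbers are illustrative.

```python
def survivor_load(load_a, load_b):
    """Load the surviving node carries after its peer fails,
    as a fraction of a single node's capacity."""
    return load_a + load_b


def is_failover_safe(load_a, load_b, capacity=1.0):
    """True if one node alone can absorb the combined load."""
    return survivor_load(load_a, load_b) <= capacity


print(is_failover_safe(0.4, 0.5))  # combined load fits on one node
print(is_failover_safe(0.7, 0.6))  # combined load exceeds one node
```

In other words, an active/active pair can only survive a failure if the two nodes together never carry more than one node's worth of traffic, which is the same usable capacity as active/standby.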

Netscaler skipped this and went straight to clustering. Up to 32 nodes can be clustered, creating a cloud of Netscalers. All nodes are active and share state and configuration, so if one of your ADCs goes down, the others can pick up transparently. Every node knows about every session because that information is shared.
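As a conceptual sketch only, and not a description of how Netscaler implements clustering, the idea of shared session state can be modeled with a single session table that every node reads and writes. The class and node names are hypothetical. The 32-node limit comes from the text above.

```python
MAX_NODES = 32  # cluster size limit mentioned in the text


class Cluster:
    """Toy cluster in which every active node sees the same session table."""

    def __init__(self, node_names):
        if len(node_names) > MAX_NODES:
            raise ValueError("cluster supports at most 32 nodes")
        self.active = set(node_names)
        # One shared dict stands in for state replication between nodes.
        self.sessions = {}

    def fail(self, node):
        """Remove a node from the cluster, simulating a failure."""
        self.active.discard(node)

    def handle(self, node, session_id):
        """Process one request for a session on the given node and
        return how many requests the session has seen so far."""
        if node not in self.active:
            raise RuntimeError(f"{node} is down")
        count = self.sessions.get(session_id, 0) + 1
        self.sessions[session_id] = count
        return count


cluster = Cluster(["n1", "n2", "n3"])
first = cluster.handle("n1", "session-42")
cluster.fail("n1")
second = cluster.handle("n2", "session-42")  # n2 continues the same session
print(first, second)
```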

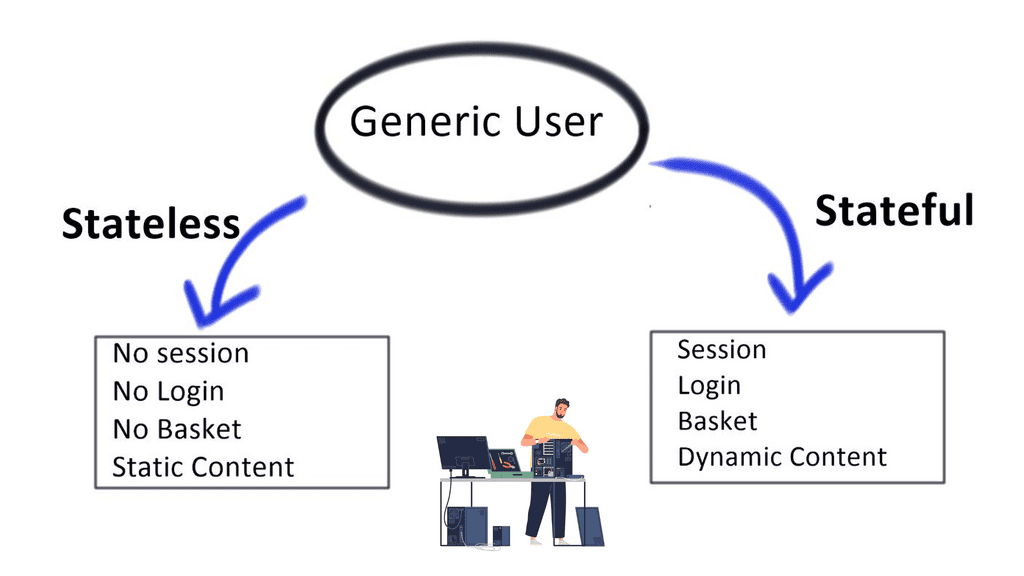

Stateless vs. stateful

Netscaler offers dynamic failover for long-lived protocols, like Structured Query Language (SQL) sessions and other streaming protocols. This differs from when you are load-balancing Hypertext Transfer Protocol (HTTP). HTTP is a generic and stateless application-level protocol. No information is kept across requests; applications must remember the per-user state.

Every HTTP request is a valid standalone request per the protocol. Losing a single HTTP request rarely matters much, because clients simply try again. For that reason, high availability is generally not an issue for web traffic. With HTTP/2, which multiplexes many requests over one long-lived connection, sustaining the connection during failover means that the session never gets torn down and restarted.

HTTP (stateless) lives on top of TCP (stateful). Transmission Control Protocol (TCP) is stateful in the sense that it maintains state in the form of the TCP window size (how much data endpoints can receive) and packet order (confirmation of packet receipt). TCP endpoints must remember the state of the other. Stateless protocols can be built on top of a stateful protocol, and stateful protocols can be built on top of a stateless protocol.

Applications built on top of HTTP aren’t necessarily stateless, because applications implement state over HTTP. For example, a client requests data and must first be authenticated before the data is transferred. This is common for websites that require users to visit a login page before sending a message.
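Here is a minimal sketch of an application layering state on top of stateless requests. The server remembers who has logged in by keeping a token-to-user table, and the client presents that token with each later request. The user table, function names, and plaintext password are hypothetical and for illustration only.

```python
import secrets

USERS = {"alice": "s3cret"}  # hypothetical credentials, plaintext for brevity
SESSIONS = {}                # token -> username; this is the per-user state


def login(username, password):
    """Return a session token on success, or None on failure."""
    if USERS.get(username) != password:
        return None
    token = secrets.token_hex(16)
    SESSIONS[token] = username
    return token


def get_data(token):
    """Handle one standalone request; the token links it to earlier ones."""
    user = SESSIONS.get(token)
    if user is None:
        return (401, "login required")
    return (200, f"data for {user}")


print(get_data(None))             # no token yet, so the request is rejected
token = login("alice", "s3cret")
print(get_data(token))            # same kind of request, now authenticated
```

Each call to `get_data` is still an independent request. The only thing that carries state from one request to the next is the token and the server-side table behind it.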

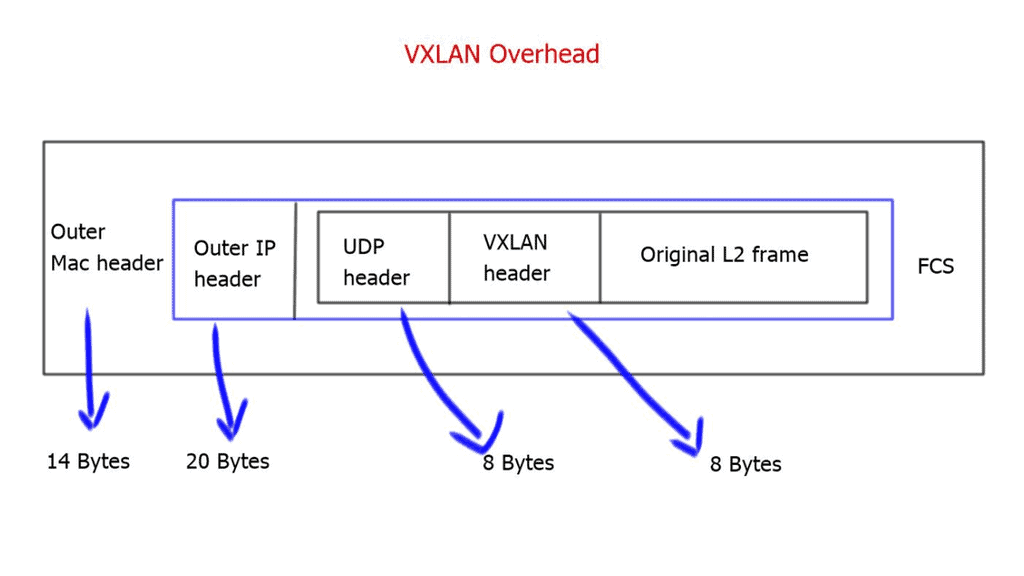

Scale-in: Multi-tenancy

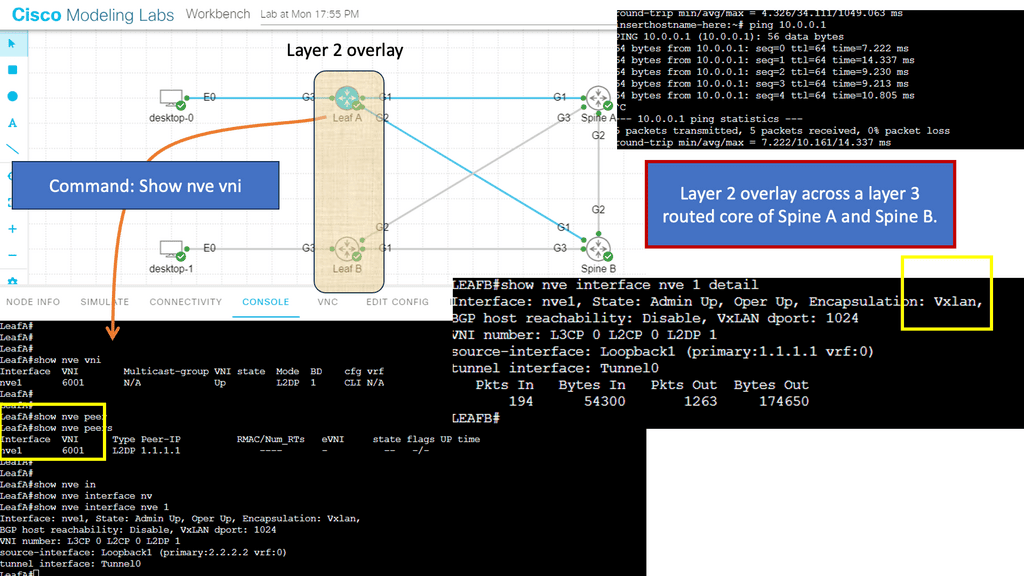

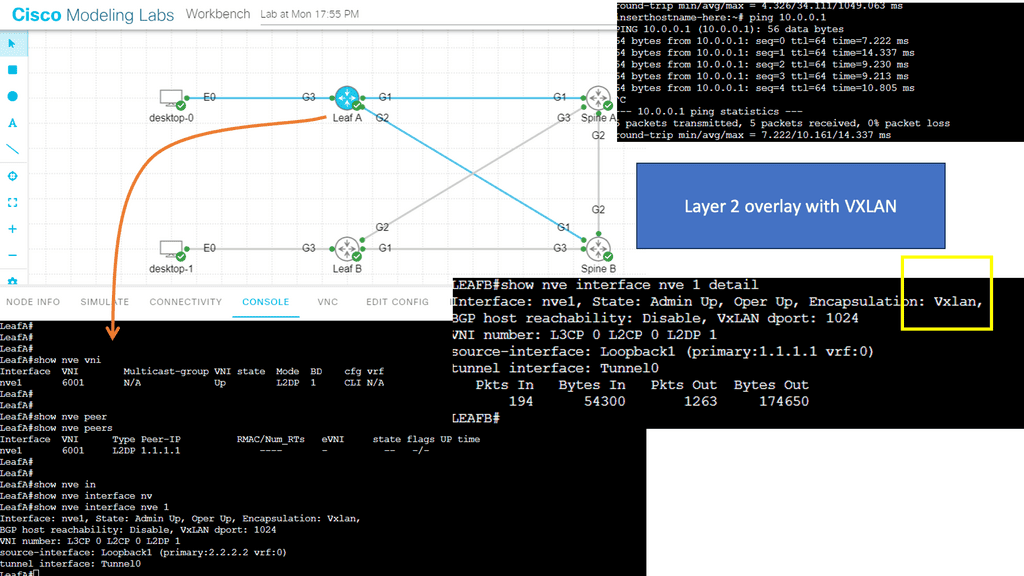

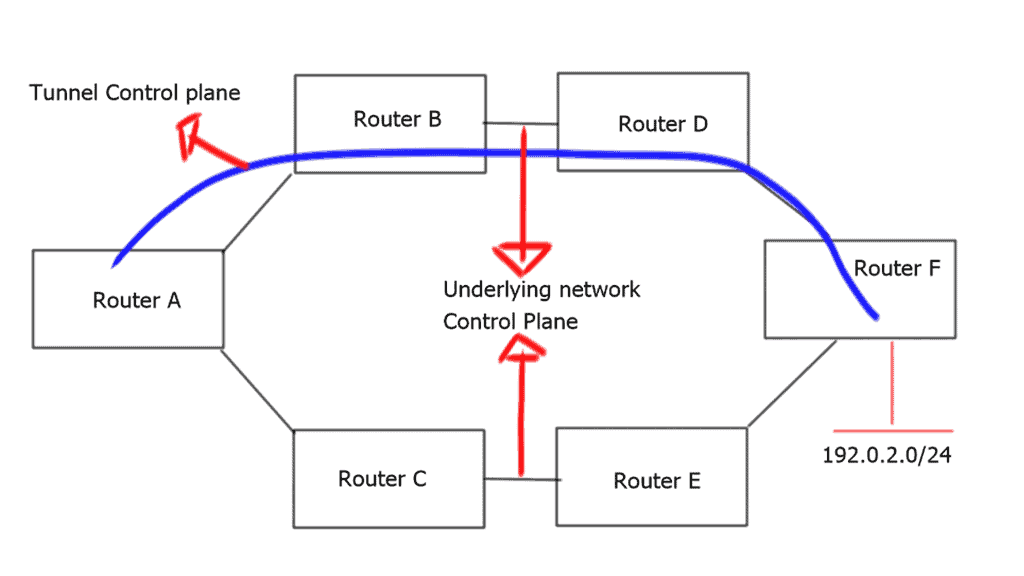

In the enterprise, you can have network overlays (such as VXLAN) that allow the virtualization of segments. We need network services like firewalls and load balancers to do the same thing. Netscaler offers a scale-in service that allows a single platform to become multiple platforms. It’s not a software partition; it’s a hardware partition.

CPU, crypto, and network resources are 100% isolated. This allows individual instances to be managed without affecting others. If you experience a big traffic spike on one load balancer, it does not affect the other load-balancing instances on that device.

Every application or application owner can have a dedicated ADC. This approach lets you meet the application requirement without worrying about contention or overrun from other application configurations. In addition, it enables you to run several independent Netscaler instances on a single 2RU appliance. Every application owner appears to have a dedicated ADC, and from the network view, each application is running on its own appliance.

Behind the scenes, Netscaler consolidated all this down to a single device. Netscaler took the MPX platform and added multiple VPX instances to it to create the SDX product. When you spin up a VPX on the SDX, you allocate isolated resources such as CPU and disk space.
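The allocation idea can be sketched as a simple resource ledger: each instance receives a dedicated slice, and a request that exceeds what is left is refused rather than shared. This is a conceptual model with hypothetical names and numbers, not the SDX provisioning API.

```python
class Appliance:
    """Toy model of one physical appliance hosting isolated instances."""

    def __init__(self, cpu_cores, disk_gb):
        self.free_cpu = cpu_cores
        self.free_disk = disk_gb
        self.instances = {}

    def provision(self, name, cpu_cores, disk_gb):
        """Dedicate resources to a new instance, or refuse if they don't fit."""
        if cpu_cores > self.free_cpu or disk_gb > self.free_disk:
            raise ValueError(f"not enough free resources for {name}")
        self.free_cpu -= cpu_cores
        self.free_disk -= disk_gb
        self.instances[name] = {"cpu_cores": cpu_cores, "disk_gb": disk_gb}


appliance = Appliance(cpu_cores=8, disk_gb=100)
appliance.provision("crm-adc", cpu_cores=2, disk_gb=20)
appliance.provision("web-adc", cpu_cores=4, disk_gb=40)
print(appliance.free_cpu, appliance.free_disk)
```

Because resources are dedicated rather than pooled, a spike on one instance in this model cannot take cores away from the other.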

Scale-up: pay as you grow

Scale-up is a software license key upgrade that increases performance and offers customers more flexibility. For example, if you buy an MPX, you are not locked into specific performance metrics of that box. With a license upgrade, you can double its throughput, packets per second, connections per second, and Secure Sockets Layer (SSL) transactions per second.

Netscaler and Software-defined networking (SDN)

When we discuss SDN, we usually focus on Layer 2 and Layer 3 networks and what it takes to separate the control and data planes. However, Layer 4 to Layer 7 solutions also need to be integrated into the SDN network. Netscaler is developing centralized control capabilities for integrating Layer 4 to Layer 7 solutions into SDN networks.

So, how can SDN directly benefit the application at Layer 7? As applications become more prominent, there must be ways to auto-deploy them. Storage and computing have been automated for some time now. However, the network is more complex, so it is harder to virtualize. This is where SDN comes into play: it aims to abstract away much of the complexity of managing networks.

In an era where applications are businesses’ backbone, ensuring optimal performance, scalability, and security is crucial. Application Delivery Networks play a vital role in achieving these objectives. From enhancing performance and scalability to providing robust security and global load balancing, ADNs offer a comprehensive solution for businesses seeking to optimize application delivery. By leveraging ADNs, companies can deliver seamless experiences to their users, gain a competitive edge, and drive growth in today’s digital landscape.

Summary: Application Delivery Networks

In today’s digital age, where speed and efficiency are paramount, businesses and users constantly seek ways to optimize their online experiences. One technology that has emerged as a game-changer in this realm is Application Delivery Networks (ADNs). ADNs are revolutionizing web performance by improving speed, reliability, and security. In this blog post, we explored the critical aspects of ADNs and their role in enhancing web performance.

Understanding Application Delivery Networks

ADNs, which are closely related to Content Delivery Networks (CDNs), are distributed networks of servers strategically placed worldwide. These servers act as intermediaries between users and web servers, optimizing web content delivery. By caching and storing website data closer to the end-user, ADNs reduce latency and minimize the distance data packets travel.

Accelerating Web Performance

One of the primary benefits of ADNs is their ability to accelerate web performance. With traditional web hosting, a user’s request goes directly to the origin server, which may be geographically distant. This can result in slower loading times. ADNs, on the other hand, leverage their distributed server infrastructure to serve content from the server nearest to the user. By minimizing the distance and network hops, ADNs significantly improve page load times, providing a seamless browsing experience.

Enhancing Reliability and Scalability

Another advantage of ADNs is their ability to enhance reliability and scalability. ADNs employ load balancing, failover mechanisms, and intelligent routing to ensure the high availability of web content. In case of server failures or traffic spikes, ADNs automatically redirect requests to alternate servers, preventing downtime and maintaining a smooth user experience.

Strengthening Security

In addition to performance benefits, ADNs offer robust security features. ADNs can protect websites from DDoS attacks, malicious bots, and other security threats by acting as a shield between users and origin servers. With their widespread server infrastructure and advanced security measures, ADNs provide an additional layer of defense, ensuring the integrity and availability of web content.

Conclusion:

Application Delivery Networks have emerged as a vital component in optimizing web performance. By leveraging their distributed server infrastructure, ADNs enhance speed, reliability, and security, making the browsing experience faster and more secure for users worldwide. As businesses strive to deliver exceptional online experiences, investing in ADNs has become essential.