Correlate Disparate Data Points

In today's data-driven world, the ability to extract meaningful insights from diverse data sets is crucial. Correlating disparate data points allows us to uncover hidden connections and gain a deeper understanding of complex phenomena. In this blog post, we will explore effective strategies and techniques to correlate disparate data points, unlocking a wealth of valuable information.

To begin our journey, let's establish a clear understanding of what disparate data points entail. Disparate data points refer to distinct pieces of information originating from different sources, often seemingly unrelated. These data points may vary in nature, such as numerical, textual, or categorical data, posing a challenge when attempting to find connections.

One way to correlate disparate data points is by identifying common factors that may link them together. By carefully examining the characteristics or attributes of the data points, patterns and similarities can emerge. These common factors act as the bridge that connects seemingly unrelated data, offering valuable insights into their underlying relationships.

Advanced analytics techniques provide powerful tools for correlating disparate data points. Techniques such as regression analysis, cluster analysis, and network analysis enable us to uncover intricate connections and dependencies within complex data sets. By harnessing the capabilities of machine learning algorithms, these techniques can reveal hidden patterns and correlations that human analysis alone may overlook.

Data visualization serves as a vital component in correlating disparate data points effectively. Through the use of charts, graphs, and interactive visualizations, complex data sets can be transformed into intuitive representations. Visualizing the connections between disparate data points enhances our ability to grasp relationships, identify outliers, and detect trends, ultimately leading to more informed decision-making.

In conclusion, the ability to correlate disparate data points is a valuable skill in leveraging the vast amount of information available to us. By defining disparate data points, identifying common factors, utilizing advanced analytics techniques, and integrating data visualization, we can unlock hidden connections and gain deeper insights. As we continue to navigate the era of big data, mastering the art of correlating disparate data points will undoubtedly become increasingly essential.

Matt Conran

Highlights: Correlate Disparate Data Points

### Understanding the Importance of Data Correlation

In today’s data-driven world, the ability to correlate disparate data points has become an invaluable skill. Organizations are inundated with vast amounts of information from various sources, and the challenge lies in extracting meaningful insights from this data deluge. Correlating these data points not only helps in identifying patterns but also aids in making informed decisions that drive business success.

### Tools and Techniques for Effective Data Correlation

To effectively correlate disparate data points, it’s essential to leverage the right tools and techniques. Data visualization tools like Tableau and Power BI can help in identifying patterns by representing data in graphical formats. Statistical methods, such as regression analysis and correlation coefficients, are crucial for understanding relationships between variables. Additionally, machine learning algorithms can uncover hidden patterns that are not immediately apparent through traditional methods.
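As a minimal sketch of the correlation coefficient mentioned above, the following computes Pearson's r using only the Python standard library. The metric names and sample values (`latency_ms`, `cpu_percent`) are hypothetical and chosen purely for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances (unnormalized; the 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical samples taken at the same five points in time.
latency_ms = [120, 135, 150, 180, 210]
cpu_percent = [30, 35, 42, 55, 70]

r = pearson(latency_ms, cpu_percent)
print(f"r = {r:.3f}")
```

A value of r close to 1 or -1 suggests a strong linear relationship, while a value near 0 suggests none. Correlation on its own does not establish that one metric causes the other.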

### Real-World Applications of Data Correlation

The ability to connect seemingly unrelated data points has applications across various industries. In healthcare, correlating patient data with treatment outcomes can lead to more effective care plans. In finance, analyzing market trends alongside economic indicators can aid in predicting stock movements. Retailers can enhance customer experience by correlating purchase history with seasonal trends to offer personalized recommendations. The possibilities are endless, and the impact can be transformative.

### Challenges in Correlating Disparate Data Points

While the benefits are clear, correlating disparate data points comes with its own set of challenges. Data quality and consistency are paramount, as inaccurate data can lead to misleading conclusions. Additionally, the sheer volume of data can be overwhelming, necessitating robust data management strategies. Privacy concerns also need to be addressed, particularly when dealing with sensitive information. Overcoming these challenges requires a combination of technological solutions and strategic planning.

Defining Disparate Data Points

To begin our journey, let’s first clearly understand what we mean by “disparate data points.” In data analysis, disparate data points refer to individual pieces of information that appear unrelated or disconnected at first glance. These data points could come from different sources, possess varying formats, or have diverse contexts.

One primary approach to correlating disparate data points is to identify common attributes. By thoroughly examining the data sets, we can search for shared characteristics, such as common variables, timestamps, or unique identifiers. These common attributes act as the foundation for establishing potential connections.
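To illustrate how a shared identifier can link two otherwise unrelated data sets, here is a small sketch of an inner join in plain Python. The record sets, the `customer_id` field, and the `join_on_key` helper are all hypothetical names invented for this example.

```python
# Two hypothetical record sets from different sources that share "customer_id".
crm_records = [
    {"customer_id": "C1", "segment": "enterprise"},
    {"customer_id": "C2", "segment": "smb"},
    {"customer_id": "C3", "segment": "smb"},
]
support_tickets = [
    {"customer_id": "C1", "ticket": "T-100"},
    {"customer_id": "C1", "ticket": "T-101"},
    {"customer_id": "C3", "ticket": "T-102"},
    {"customer_id": "C9", "ticket": "T-103"},  # no matching CRM record
]


def join_on_key(left, right, key):
    """Inner join of two lists of dicts on a shared key."""
    # Index the left side by key so each right-side lookup is cheap.
    index = {}
    for row in left:
        index.setdefault(row[key], []).append(row)
    joined = []
    for row in right:
        for match in index.get(row[key], []):
            joined.append({**match, **row})
    return joined


linked = join_on_key(crm_records, support_tickets, "customer_id")
for row in linked:
    print(row)
```

Rows without a counterpart on the other side (here, customer `C2` and ticket `T-103`) are dropped by an inner join. Whether to keep them depends on the question being asked of the data.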

Considerations:

Utilizing Data Visualization Techniques: Visualizing data is a powerful tool when it comes to correlating disparate data points. By representing data in graphical forms like charts, graphs, or heatmaps, we can easily spot patterns, trends, or anomalies that might not be apparent in raw data. Leveraging advanced visualization techniques, such as network graphs or scatter plots, can further aid in identifying interconnections.

Applying Machine Learning and AI Algorithms: In recent years, machine learning and artificial intelligence algorithms have revolutionized the field of data analysis. These algorithms identify complex relationships and make predictions by leveraging vast amounts of data. We can discover hidden correlations and gain valuable predictive insights by training models on disparate data points.

Combining Data Sources and Integration: In some cases, correlating disparate data points requires integrating multiple data sources. This integration process involves merging data sets from different origins, standardizing formats, and resolving inconsistencies. Combining diverse data sources can create a unified view that enables more comprehensive analysis and correlation.
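The integration step described above can be sketched as follows, assuming three hypothetical log sources that record timestamps in different formats. Each format gets its own parser, and the records are merged into a single timeline in UTC. The source names, sample records, and the assumption that the day-first format is already in UTC are illustrative only.

```python
from datetime import datetime, timezone


def from_epoch(value):
    """Parse seconds since the Unix epoch."""
    return datetime.fromtimestamp(value, tz=timezone.utc)


def from_iso(value):
    """Parse an ISO 8601 string that carries a UTC offset."""
    return datetime.fromisoformat(value).astimezone(timezone.utc)


def from_day_first(value):
    """Parse 'DD/MM/YYYY HH:MM:SS', assumed here to already be in UTC."""
    parsed = datetime.strptime(value, "%d/%m/%Y %H:%M:%S")
    return parsed.replace(tzinfo=timezone.utc)


def unify(sources):
    """Merge (name, parser, records) triples into one time-ordered list."""
    events = []
    for name, parse, records in sources:
        for rec in records:
            events.append(
                {"time": parse(rec["ts"]), "source": name, "msg": rec["msg"]}
            )
    return sorted(events, key=lambda e: e["time"])


# Hypothetical records from three silos.
network_log = [{"ts": 1709283600, "msg": "link flap on sw-1"}]
app_log = [{"ts": "2024-03-01T10:00:05+01:00", "msg": "HTTP 503 from checkout"}]
db_log = [{"ts": "01/03/2024 09:00:09", "msg": "connection pool exhausted"}]

unified = unify([
    ("network", from_epoch, network_log),
    ("application", from_iso, app_log),
    ("database", from_day_first, db_log),
])
for event in unified:
    print(event["time"].isoformat(), event["source"], event["msg"])
```

Once every record shares one time representation, events that looked unrelated in their own logs can be read side by side in the order they happened.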

**The Required Monitoring Solution**

Digital transformation intensifies the contact between businesses, customers, and prospects. Although it expands workflow agility, it also introduces a significant level of complexity, as it requires a more agile information technology (IT) architecture and increased data correlation. This reduces network and application visibility while creating a substantial volume of data and data points that require monitoring. A monitoring solution is needed to correlate these disparate data points.

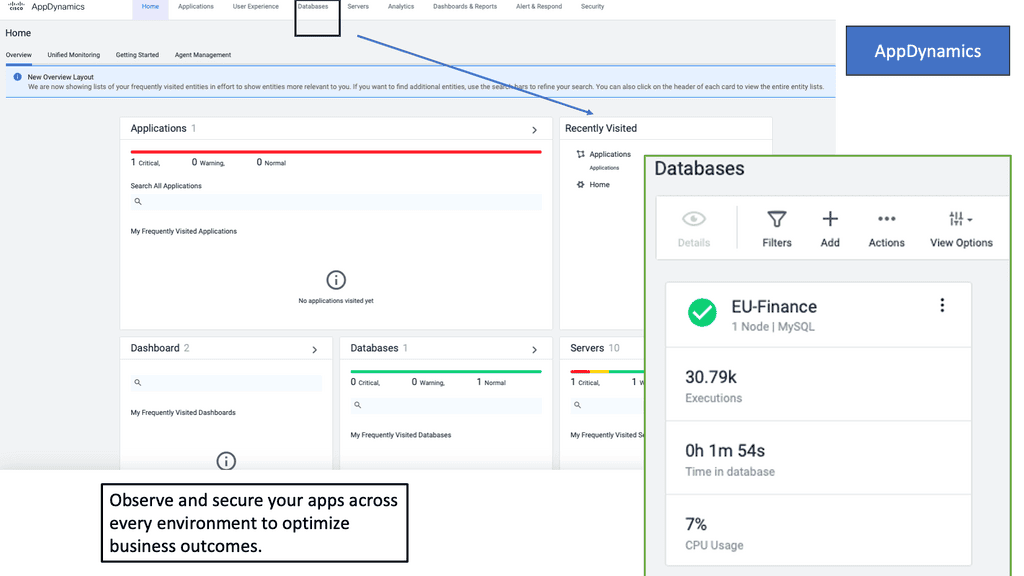

Example Product: Cisco AppDynamics

### Real-Time Monitoring and Analytics

One of the standout features of Cisco AppDynamics is its ability to provide real-time monitoring and analytics. This means you can get instant insights into your application’s performance, identify bottlenecks, and take immediate action to resolve issues. With its intuitive dashboard, you can easily visualize data and make informed decisions to enhance your application’s performance.

### End-to-End Visibility

Cisco AppDynamics offers end-to-end visibility into your application’s performance. This feature allows you to track every transaction from the end-user to the back-end system. By understanding how each component of your application interacts, you can pinpoint the root cause of performance issues and optimize each layer for better performance.

### Automatic Discovery and Mapping

Another powerful feature of Cisco AppDynamics is its automatic discovery and mapping capabilities. The tool automatically discovers your application’s architecture and maps out all the dependencies. This helps you understand the complex relationships between different components and ensures you have a clear picture of your application’s infrastructure.

### Machine Learning and AI-Powered Insights

Cisco AppDynamics leverages machine learning and AI to provide predictive insights. By analyzing historical data, the tool can predict potential performance issues before they impact your users. This proactive approach allows you to address problems before they become critical, ensuring a seamless user experience.
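The general idea of learning from historical data to flag abnormal behavior can be illustrated with a simple rolling baseline. This is a generic sketch and is not a description of how Cisco AppDynamics works internally. The function name, the window size, the threshold, and the sample response times are all assumptions made for the example.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate from the mean of the preceding
    `window` samples by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            continue  # flat baseline: the z-score is undefined, so skip
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical response times in milliseconds, with one obvious spike.
response_ms = [100, 102, 98, 101, 99, 100, 103, 250, 101, 100]
print(find_anomalies(response_ms))
```

A fixed threshold on a short window is crude. Production tools typically account for seasonality and trends, which this sketch deliberately ignores.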

Before you proceed, you may find the following posts helpful:

- Ansible Tower

- Network Stretch

- IPFIX Big Data

- Microservices Observability

- Software Defined Internet Exchange

Correlate Disparate Data Points

Data observability:

In recent years, data has transformed almost everything we do, starting as a strategic asset and evolving into the core of the strategy. However, managing data quality is the most critical barrier for organizations scaling their data strategies, because issues need to be identified and remediated appropriately. Therefore, we need an approach to quickly detect, troubleshoot, and prevent a wide range of data issues. Data observability is that approach: a set of best practices that enable data teams to gain greater visibility into data and its usage.

Identifying Disparate Data Points:

Disparate data points refer to information that appears unrelated or disconnected at first glance. They can be derived from multiple sources, such as customer behavior, market trends, social media interactions, or environmental factors. The challenge lies in recognizing the potential relationships between these seemingly unrelated data points and understanding the value they can bring when combined.

Unveiling Hidden Patterns:

Correlating disparate data points reveals hidden patterns that would otherwise remain unnoticed. For example, in the retail industry, correlating sales data with weather patterns may help identify the impact of weather conditions on consumer behavior. Similarly, correlating customer feedback with product features can provide insights into areas for improvement or potential new product ideas.

Benefits in Various Fields:

The ability to correlate disparate data points has significant implications across different domains. Analyzing patient data alongside environmental factors in healthcare can help identify potential triggers for certain diseases or conditions. In finance, correlating market data with social media sentiment can provide valuable insights for investment decisions. In transportation, correlating traffic data with weather conditions can optimize route planning and improve efficiency.

Tools and Techniques:

Advanced data analysis techniques and tools are essential to correlate disparate data points effectively. Machine learning algorithms, data visualization tools, and statistical models can help identify correlations and patterns within complex datasets. Data integration and cleaning processes are crucial in ensuring accurate and reliable results.

Challenges and Considerations:

Correlating disparate data points is not without its challenges. Combining data from different sources often involves data quality issues, inconsistencies, and compatibility challenges. Additionally, ethical considerations regarding data privacy and security must be considered when working with sensitive information.

Getting Started: Correlate Disparate Data Points

Many businesses feel overwhelmed by the amount of data they’re collecting and don’t know what to do with it. The digital world swells the volume of data a business has access to and the amount of correlation that data requires. Apart from the impact on network and server resources, staff are also taxed by their attempts to manually analyze the data while resolving the root cause of an application or network performance problem. Furthermore, IT teams operate in silos, making it difficult to process data from all the IT domains, which severely limits business velocity.

Data Correlation: Technology Transformation

Conventional systems, while easy to troubleshoot and manage, do not meet today’s requirements, which has led to introducing an array of new technologies. The technological transformation umbrella includes virtualization, hybrid cloud, hyper-convergence, and containers.

While technically remarkable, introducing these technologies posed an array of operationally complex monitoring tasks, increased the volume of data, and required the correlation of disparate data points. Today’s infrastructures comprise complex technologies and architectures.

They entail a variety of sophisticated control planes consisting of next-generation routing and new principles such as software-defined networking (SDN), network function virtualization (NFV), service chaining, and virtualization solutions.

Virtualization and service chaining introduce new layers of complexity that don’t follow the traditional monitoring rules. Service chaining does not adhere to the standard packet forwarding paradigms, while virtualization hides layers of valuable information.

Micro-segmentation changes the security paradigm, while virtual machine (VM) mobility introduces north-to-south and east-to-west traffic trombones. The VM on which the application sits now has mobility requirements and may move instantly to different on-premise data center topology types or externally to the hybrid cloud.

The hybrid cloud dissolves the traditional network perimeter and triggers disparate data points in multiple locations. Containers and microservices introduce a new wave of application complexity and data volume. Individual microservices require cross-communication, potentially located in geographically dispersed data centers.

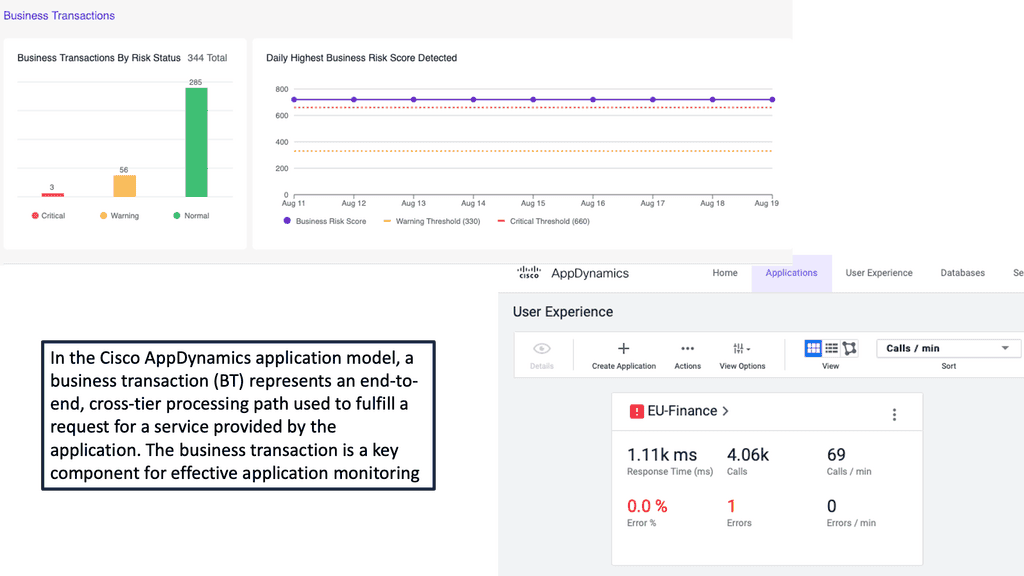

All these new technologies increase the number of data points and the volume of data by an order of magnitude. Therefore, an IT organization must process millions of data points to correlate business transactions, such as invoices and orders, with the underlying infrastructure.

Growing Data Points & Volumes

The need to correlate disparate data points

As part of the digital transformation, organizations are launching more applications. More applications require additional infrastructure, which always snowballs, increasing the number of data points to monitor.

Breaking up a monolithic system into smaller, fine-grained microservices adds complexity when monitoring the system in production. With a monolithic application, we have well-known and prominent investigation starting points.

But the world of microservices introduces multiple data points to monitor, and it’s harder to pinpoint latency or other performance-related problems. Human capacity hasn’t changed: a human can correlate at most 100 data points per hour. The actual challenge surfaces because these data points are monitored in silos.

Containers are deployed so that software runs more reliably when moved from one computing environment to another. They are often used to increase business agility. However, the increase in agility comes at a high cost: container environments generate 18x more data than traditional environments. Conventional systems may have a manageable set of data points, while a full-fledged container architecture could have millions.

The amount of data to be correlated to support digital transformation far exceeds human capabilities. It’s just too much for the human brain to handle. Traditional monitoring methods are not prepared to meet the demands of what is known as “big data.” This is why some businesses use the big data analytics software from Kyligence, which uses an AI-augmented engine to manage and optimize the data, allowing businesses to see their most valuable data, which helps them make decisions.

While data volumes grow to an unprecedented level, visibility is decreasing due to the complexity of the new application style and the underlying infrastructure. All this is compounded by ineffective troubleshooting and team collaboration.

Ineffective Troubleshooting and Team Collaboration

The application rides on various complex infrastructures and, at some stage, requires troubleshooting. Troubleshooting should be a science, but most departments use the manual method. This causes challenges with cross-team collaboration during an application troubleshooting event among multiple data center segments—network, storage, database, and application.

IT workflows are complex, and a single response/request query will touch all supporting infrastructure elements: routers, servers, storage, database, etc. For example, an application request may traverse the web front ends in one segment to be processed by database and storage modules on different segments. This may require firewalling or load-balancing services in various on and off-premise data centers.

IT departments will never have a single team overseeing all areas of the network, server, storage, database, and other infrastructure modules. The technical skill sets required are far too broad for any individual to handle efficiently.

Multiple technical teams are often distributed across different locations, time zones, and cultures to support various technical skill levels. Troubleshooting workflows between teams should be automated, but they are not, because monitoring and troubleshooting are carried out in silos, completely lacking any data point correlation. The natural reaction is to add more people, which only adds fuel to the fire.

An efficient monitoring solution is a winning formula.

Collaboration is increasingly lacking because silo boundaries don’t even allow teams to look at each other’s environments. By design, silos lead engineers to blame each other, as collaboration is not built into the way different technical teams communicate.

Engineers say bluntly, “It’s not my problem; it’s not my environment.” In reality, no one knows how to drill down and pinpoint the root cause. Mean Time to Innocence becomes the de facto working practice when the application faces downtime. It’s all about how you can save yourself. Compounding application complexity and the lack of efficient collaboration and troubleshooting science create a bleak picture.

How to Win the Race with Growing Data Points and Data Volumes?

How do we resolve this mess and ensure the application meets the service level agreement (SLA) and operates at peak performance levels? The first thing you need to do is collect the data—not just from one domain but from all domains simultaneously. Data must be collected from various data points from all infrastructure modules, no matter how complicated.

Once the data is collected, application flows are detected, and the application path is computed in real-time. The data is extracted from all data center points and correlated to determine the exact path and time. The path visually presents the correct application route and over what devices the application is traversing.

For example, the application path can instantly show application A flowing over a particular switch, router, firewall, load balancer, web frontend, and database server.
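A simplified sketch of computing such an application path is shown below. It assumes each device reports when it saw a given flow and that device clocks are synchronized, which is a strong assumption in practice. The record layout, the `flow` and `seen_ms` fields, and the device names are hypothetical.

```python
from collections import defaultdict

# Hypothetical per-device observations, arriving in no particular order.
observations = [
    {"flow": "app-A", "device": "firewall-1", "seen_ms": 12},
    {"flow": "app-A", "device": "switch-1", "seen_ms": 3},
    {"flow": "app-B", "device": "switch-2", "seen_ms": 4},
    {"flow": "app-A", "device": "db-server-1", "seen_ms": 40},
    {"flow": "app-A", "device": "router-1", "seen_ms": 7},
    {"flow": "app-A", "device": "load-balancer-1", "seen_ms": 18},
    {"flow": "app-A", "device": "web-frontend-1", "seen_ms": 25},
    {"flow": "app-B", "device": "web-frontend-2", "seen_ms": 15},
]


def application_paths(records):
    """Group observations by flow and order each group by time seen."""
    by_flow = defaultdict(list)
    for rec in records:
        by_flow[rec["flow"]].append(rec)
    paths = {}
    for flow, recs in by_flow.items():
        ordered = sorted(recs, key=lambda r: r["seen_ms"])
        paths[flow] = [r["device"] for r in ordered]
    return paths


paths = application_paths(observations)
print(" -> ".join(paths["app-A"]))
```

Real environments need a sturdier flow identifier than a label, for example a combination of addresses, ports, and protocol, and some way to cope with clock skew between devices.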

**It’s An Application World**

The application path defines what infrastructure components are being used and will change dynamically in today’s environment. The application that rides over the infrastructure uses every element in the data center, including interconnects to the cloud and other off-premise physical or virtual locations.

Customers are well informed about products and services, as they have all the information at their fingertips. This makes it harder for applications to deliver excellent results. Having the right objectives and key results (OKRs) is essential to comprehending the business’s top priorities and working towards them. You can review some examples of OKRs by profit to learn more about this topic.

That said, it is essential to note that an issue with critical application performance can happen in any compartment or domain on which the application depends. In a world that monitors everything but monitors in a silo, it’s difficult to understand the cause of the application problem quickly. The majority of time is spent isolating and identifying rather than fixing the problem.

Imagine a monitoring solution that helps customers select the best coffee shop to order a cup from. The customer has a variety of coffee shops to choose from, and there are several lanes in each. One lane could be blocked due to a spillage, while another could be slow because of a cashier in training. Wouldn’t it be great to have all this information upfront, before leaving your house?

Economic Value:

Time is money in two ways: the first is the direct cost, and the second is the damage to the company brand caused by poor application performance. Each device requires several essential data points to monitor. These data points contribute to determining the overall health of the infrastructure.

Fifteen data points aren’t too bad to monitor, but what about a million data points? These points must be observed and correlated across teams to reach a conclusion about application performance. Unfortunately, the traditional approach of monitoring in silos carries a high time cost.

With traditional monitoring methods, an engineer facing application downtime has to work by process of elimination, and answers are not easily accessible. That time has a cost. Given the amount of data today, on average, it takes 4 hours to repair an outage, and an outage costs $300K.

If revenue is lost, the cost to the enterprise, on average, is $5.6M. How much will it take, and what price will a company incur if the amount of data increases 18x? A recent report states that only 21% of organizations can successfully troubleshoot within the first hour. That’s an expensive hour that could have been saved with the proper monitoring solution.

There is real economic value in applying the correct monitoring solution to the problem and adequately correlating between silos. What if a solution does all the correlation? The time value is now shortened because, algorithmically, the system is carrying out the heavy-duty manual work for you.
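One simple form of algorithmic correlation across silos is to group alerts from different domains that occur close together in time. The sketch below is a minimal illustration under that assumption. The `Alert` tuple, the 60-second window, and the sample alerts are hypothetical and not taken from any particular product.

```python
from collections import namedtuple

Alert = namedtuple("Alert", "time_s domain message")


def group_by_window(alerts, window_s=60):
    """Group alerts into incidents. An alert joins the current incident if it
    occurs within `window_s` seconds of the previous alert in that incident."""
    incidents = []
    for alert in sorted(alerts, key=lambda a: a.time_s):
        if incidents and alert.time_s - incidents[-1][-1].time_s <= window_s:
            incidents[-1].append(alert)
        else:
            incidents.append([alert])
    return incidents


# Hypothetical alerts raised independently by four different teams' tools.
alerts = [
    Alert(1000, "network", "packet loss on uplink"),
    Alert(1020, "database", "query latency high"),
    Alert(1045, "application", "checkout errors"),
    Alert(5000, "storage", "disk 80% full"),
]

incidents = group_by_window(alerts)
for incident in incidents:
    domains = sorted({a.domain for a in incident})
    print(f"{len(incident)} alert(s) across {domains}")
```

Proximity in time is only a hint that alerts share a cause. It gives engineers from different teams a common starting point rather than a verdict.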

Summary: Correlate Disparate Data Points

In today’s data-driven world, connecting seemingly unrelated data points is a valuable skill. Whether you’re an analyst, researcher, or simply curious, understanding how to correlate disparate data points can unlock valuable insights and uncover hidden patterns. In this blog post, we will explore the concept of correlating disparate data points and discuss strategies to make these connections effectively.

Defining Disparate Data Points

Before we delve into correlation, let’s establish what we mean by “disparate data points.” Disparate data points refer to distinct pieces of information that, at first glance, may seem unrelated or belong to unrelated datasets. These data points could be numerical values, textual information, or visual representations. The challenge lies in finding meaningful connections between them.

The Power of Context

Context is key when it comes to correlating disparate data points. Understanding the broader context in which the data points exist can provide valuable clues for correlation. By examining the surrounding circumstances, timeframes, or relevant events, we can start to piece together potential relationships between seemingly unrelated data points. Contextual information acts as a bridge, helping us make sense of the puzzle.

Utilizing Data Visualization Techniques

Data visualization techniques offer a powerful way to identify patterns and correlations among disparate data points. We can quickly identify trends and outliers by representing data visually through charts, graphs, or maps. Visualizing the data allows us to spot potential correlations that might have gone unnoticed. Furthermore, interactive visualizations enable us to explore the data dynamically and engagingly, facilitating a deeper understanding of the relationships between disparate data points.

Leveraging Advanced Analytical Tools

In today’s technological landscape, advanced analytical tools and algorithms can significantly aid in correlating disparate data points. Machine learning algorithms, for instance, can automatically detect patterns and correlations in large datasets, even when the connections are not immediately apparent. These tools can save time and effort, enabling analysts to focus on interpreting the results and gaining valuable insights.

Conclusion:

Correlating disparate data points is a skill that can unlock a wealth of knowledge and provide a deeper understanding of complex systems. We can uncover hidden connections and gain valuable insights by embracing the power of context, utilizing data visualization techniques, and leveraging advanced analytical tools. So, next time you come across seemingly unrelated data points, remember to explore the context, visualize the data, and tap into the power of advanced analytics. Happy correlating!
