Network Configuration Automation

In today's fast-paced digital landscape, efficient network configuration automation has become a cornerstone for organizations striving to enhance their operational productivity. Automating network configuration processes not only saves time and effort but also minimizes human error and ensures consistent network performance. In this blog post, we will explore the key benefits and considerations of network configuration automation, along with best practices to implement it effectively.

Network configuration automation refers to the practice of automating the deployment, management, and monitoring of network devices and related configurations. It streamlines the repetitive and time-consuming tasks involved in configuring network devices, such as routers, switches, and firewalls. By utilizing automation tools and frameworks, organizations can achieve greater agility, scalability, and accuracy in their network infrastructure.

Automating network configuration brings numerous advantages to organizations. Firstly, it significantly reduces the risk of human errors that can lead to network downtime or security vulnerabilities. Automation ensures consistency across network devices, eliminating configuration discrepancies.

Secondly, it enhances operational efficiency by reducing manual efforts and standardizing configuration processes, allowing IT teams to focus on more strategic initiatives. Lastly, network configuration automation facilitates faster troubleshooting and enables rapid changes to adapt to dynamic network requirements.

Comprehensive Network Inventory: Begin by creating a detailed inventory of network devices, including their models, firmware versions, and current configurations. This inventory will serve as a foundation for automation workflows.

Define Configuration Standards: Establish clear and standardized configuration templates that align with industry best practices. These templates should include essential parameters, such as IP addresses, routing protocols, and security policies.

Utilize Automation Tools: Choose a robust automation tool or framework that suits your organization's requirements. Evaluate features like device compatibility, scalability, and ease of integration with existing network management systems.

Test and Validate: Before deploying automated configurations in a production environment, thoroughly test and validate them in a controlled lab or staging environment. This step helps identify potential issues or conflicts.

While network configuration automation offers substantial benefits, it is essential to consider potential challenges. Organizations must ensure proper security measures are in place to protect automation tools and the integrity of network configurations. Additionally, regular monitoring and auditing of automated processes are crucial to detect any anomalies or unauthorized changes.

Network configuration automation serves as a catalyst for operational efficiency and reliability in modern network infrastructures. By embracing automation tools, defining robust processes, and adhering to best practices, organizations can streamline their network configuration workflows, reduce errors, and improve overall network performance.

With the right approach, network configuration automation becomes a strategic enabler for organizations seeking to stay competitive in today's digital landscape.

Matt Conran

Highlights: Network Configuration Automation

**The Rise of Automation in Networking**

Automation in networking refers to the use of software and technologies to automate the configuration, management, testing, deployment, and operation of network devices. This approach is gaining traction as it addresses some of the most pressing challenges in networking today, such as the need for speed, accuracy, and scalability. By automating routine tasks, network administrators can focus on more strategic initiatives, resulting in more efficient and effective networks.

**Benefits of Networking Automation**

One of the most significant benefits of automation in networking is its ability to enhance efficiency. Automated systems can perform repetitive tasks quickly and accurately, reducing the likelihood of human error. This increased precision leads to improved network performance and reliability. Additionally, automation allows for faster deployment of network changes and updates, enabling organizations to respond swiftly to evolving business needs and technological advancements.

**Overcoming Challenges in Automation**

While the advantages of networking automation are clear, the journey to full automation is not without obstacles. One of the primary challenges is the complexity of integrating automation tools with existing network infrastructures. Organizations must also address potential security issues, as automated systems can be vulnerable to cyber threats if not properly managed. Moreover, there is a need for skilled personnel who can oversee and maintain these automated systems, highlighting the importance of ongoing training and development.

**The Future of Networking: What Lies Ahead?**

As automation continues to gain ground, the future of networking looks promising. Emerging technologies such as artificial intelligence and machine learning are expected to further enhance automation capabilities, leading to more intelligent and adaptive networks. These advancements will enable networks to self-optimize and self-heal, minimizing downtime and maximizing performance. As a result, businesses can expect to see increased efficiency, reduced operational costs, and improved customer satisfaction.

Introducing Network Configuration Automation

1. In today’s fast-paced digital landscape, efficient network configuration management is crucial for businesses to thrive. Manual network configuration tasks can be time-consuming, error-prone, and hinder productivity. This is where network configuration automation comes into play, revolutionizing the way networks are managed and maintained.

2. Network configuration automation refers to the use of tools and scripts to automatically configure network devices such as routers, switches, and firewalls. This process eliminates the need for manual configuration, which can be time-consuming and prone to human error. By automating repetitive tasks, organizations can ensure consistency and accuracy across their network infrastructure.

3. Automating network configuration processes offers a plethora of advantages. Firstly, it significantly reduces human errors that often occur during manual configurations. With automation, consistency and accuracy are ensured, leading to enhanced network reliability. Additionally, network configuration automation saves time and resources, allowing IT teams to focus on more strategic tasks rather than mundane and repetitive configurations.

4. Implementing network configuration automation involves selecting the right tools and technologies for your organization. Popular options include Ansible, Puppet, and Chef, each offering unique features and capabilities. It’s important to assess your specific needs and environment before choosing a solution. Once implemented, automation scripts can be created and customized to meet your organization’s unique requirements, ensuring seamless integration into existing workflows.

**Application Changes**

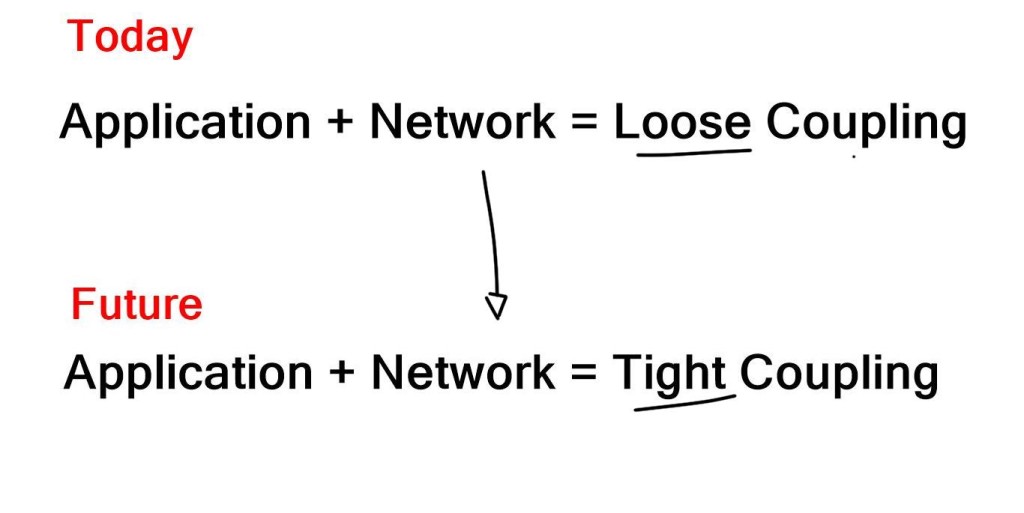

Applications are deployed differently today than they were 10-15 years ago, but the network has not kept pace with those changes. The provisioning of network policies and their corresponding configurations is not tightly coupled to the application.

Instead, the two are usually loosely coupled, and network changes are reactive. For example, analyzing firewall rules and producing a network assessment is nearly impossible with older security devices, which drives the need for network configuration automation.

Right Tools & Strategies

To successfully implement network configuration automation, businesses need to adopt the right tools and strategies. Robust automation platforms, such as Ansible or Puppet, can streamline the process by providing intuitive interfaces and extensive libraries of pre-built configurations. IT teams should also establish clear configuration standards and templates to ensure consistency across the network.

While network configuration automation offers numerous benefits, it’s not without its challenges. One common obstacle is the initial investment required to implement automation tools and train IT staff. However, the long-term cost savings and increased efficiency typically outweigh the initial costs. Additionally, businesses must carefully plan and test automation workflows to avoid unintended consequences or disruptions to network operations.

Deterministic outcomes

An enterprise organization’s change review involves examining upcoming network changes, their impact on external systems, and their rollback plans. When humans use the CLI to make changes, typing the wrong command can have catastrophic results. Think about a team of three, four, five, or fifty engineers: depending on the engineer, the same change can be made in a variety of ways. Whether they use a GUI or a CLI, the chance of error during change control remains.

A team has a better chance of achieving deterministic outcomes when it uses proven and tested network automation to make changes. Automated changes behave more predictably than manual ones, which increases the chance of getting the change right the first time. This matters when, for example, a new VLAN is added or a new customer is onboarded and multiple network changes are required.

Furthermore, deterministic outcomes lower operating expenses (OpEx), because network changes require less manual labor and network operations become more efficient (e.g., automating time-consuming tasks such as updating a network device’s operating system). With less time spent on operations, network engineers can focus on more strategic projects and on improving processes.

Device Provisioning

An easy and fast way to get started with network automation is to automate the creation and pushing of device configuration files. Two steps are involved in this process: creating the configuration file and pushing it to the device.

To automate configuration files (or configuration data in general), the input parameters (configuration parameters) must first be decoupled from the vendor-proprietary syntax (CLI). Separate files will be created for configuration templates, VLANs, domains, interfaces, routing, etc.
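The decoupling described above can be sketched in a few lines. This is a minimal illustration, not a production tool: the VLAN data, the IOS-like template text, and the function name `render_vlans` are all assumptions made for the example, and Python's standard-library `string.Template` stands in for a fuller templating engine such as Jinja.

```python
from string import Template

# Hypothetical, vendor-neutral input data (kept apart from any CLI syntax).
vlans = [
    {"id": 10, "name": "users"},
    {"id": 20, "name": "voice"},
]

# Hypothetical template in an IOS-like syntax; swap it to target another platform.
VLAN_TEMPLATE = Template("vlan $id\n name $name")


def render_vlans(vlan_data):
    """Render one configuration stanza per VLAN and join them into a file body."""
    return "\n".join(VLAN_TEMPLATE.substitute(v) for v in vlan_data)


config_text = render_vlans(vlans)
print(config_text)
```

Because the data and the template live apart, adding a VLAN means editing the data only, and targeting a different platform means editing the template only.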

Data Collection and Enrichment

Through SNMP, monitoring tools typically poll management information bases (MIBs) for data. The data returned may be more than you need, or not enough. Take polling interface statistics as an example: what if you only need interface resets, not CRC errors, jumbo frames, or output errors?

The show interface command may return every counter when you only want one of them. Moreover, what if you want to see interface resets correlated with Cisco Discovery Protocol (CDP) or LLDP neighbors right now rather than at some later point? This is where network automation plays its role.

Network automation gives you more control: you can customize what you collect, when you collect it, how it is formatted, and how it is used afterward. Automating collection helps you get the most from your data.
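As a rough sketch of that kind of control, the snippet below keeps only the interface-reset counter and pairs it with a neighbor name. The sample output text, the neighbor table, and both function names are assumptions for illustration; real `show interface` output varies by platform and would need its own parsing rules.

```python
import re

# Hypothetical excerpt of "show interface" output; real output differs by platform.
SHOW_INTERFACE = """\
GigabitEthernet0/1 is up, line protocol is up
  5 minute input rate 1000 bits/sec, 2 packets/sec
  0 input errors, 0 CRC, 0 frame
  3 interface resets
GigabitEthernet0/2 is up, line protocol is up
  0 input errors, 12 CRC, 0 frame
  0 interface resets
"""

# Hypothetical neighbor table, as it might be collected from CDP or LLDP.
NEIGHBORS = {"GigabitEthernet0/1": "dist-sw-01", "GigabitEthernet0/2": "dist-sw-02"}


def interface_resets(output):
    """Return {interface: reset_count}, ignoring every other counter."""
    resets = {}
    current = None
    for line in output.splitlines():
        header = re.match(r"^(\S+) is ", line)
        if header:
            current = header.group(1)
            continue
        counter = re.search(r"(\d+) interface resets", line)
        if counter and current:
            resets[current] = int(counter.group(1))
    return resets


def resets_with_neighbors(output, neighbors):
    """Pair each interface that has reset at least once with its neighbor."""
    return [
        (name, count, neighbors.get(name, "unknown"))
        for name, count in interface_resets(output).items()
        if count > 0
    ]


report = resets_with_neighbors(SHOW_INTERFACE, NEIGHBORS)
print(report)
```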

Migrations

Migrating from one platform to another is never easy. The platforms may come from the same vendor or from different vendors. Vendors may provide a script or tool that helps with migrations, but you can also use automation to build configuration templates for each type of network device and operating system. It then becomes possible to generate a configuration file for every vendor from a single, standard data set (a common data model).

If you use vendor-proprietary extensions, you must account for those as well. The advantage of building such a tool yourself rather than relying on a vendor is scope: a vendor must account for every feature of the device, while an organization needs only a limited subset. Vendors also have little incentive here; they are concerned with their own equipment, not with making it easier for you, the network operator, to manage a multivendor environment.
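To make the common data model idea concrete, here is a small sketch in which one interface description is rendered into two different syntaxes. The data model fields, the two "vendor" formats, and all function names are invented for illustration and do not correspond to any specific product.

```python
# Hypothetical common data model: one description of intent for every platform.
INTERFACE = {"name": "eth1", "description": "uplink", "ipv4": "192.0.2.1", "prefix": 24}


def prefix_to_mask(prefix):
    """Convert a prefix length such as 24 into a dotted mask such as 255.255.255.0."""
    bits = (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF
    return ".".join(str((bits >> shift) & 0xFF) for shift in (24, 16, 8, 0))


def render_vendor_a(intf):
    """Illustrative 'vendor A' syntax that expects a dotted subnet mask."""
    return (
        f"interface {intf['name']}\n"
        f" description {intf['description']}\n"
        f" ip address {intf['ipv4']} {prefix_to_mask(intf['prefix'])}"
    )


def render_vendor_b(intf):
    """Illustrative 'vendor B' syntax that expects CIDR notation."""
    return (
        f"set interfaces {intf['name']} description {intf['description']}\n"
        f"set interfaces {intf['name']} address {intf['ipv4']}/{intf['prefix']}"
    )


RENDERERS = {"vendor_a": render_vendor_a, "vendor_b": render_vendor_b}

# The same data set produces a configuration for each target platform.
configs = {vendor: render(INTERFACE) for vendor, render in RENDERERS.items()}
```

During a migration, only a new renderer has to be written; the data describing the network stays the same.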

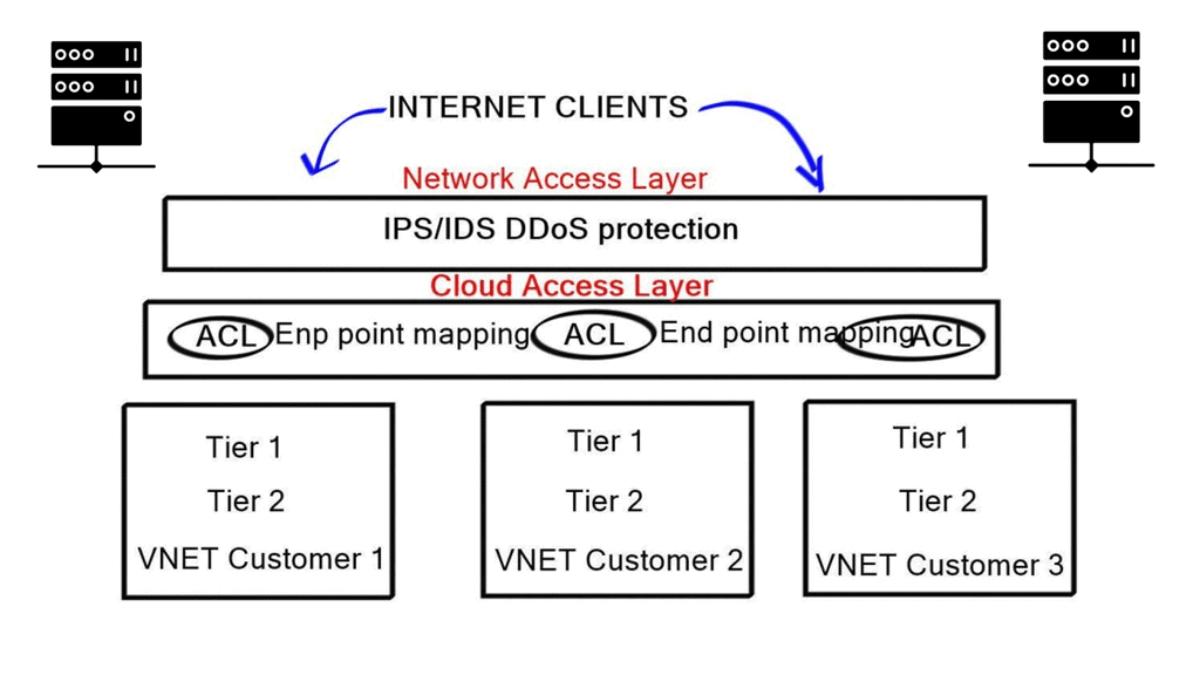

Configuration Management

Configuration management covers deploying, pushing, and managing the configuration state of devices. It spans everything from interface descriptions to the configuration of ToR switches, firewalls, load balancers, and the advanced security infrastructure needed to deploy a three-tier application.

As the read-only forms of automation above show, you don’t have to start by pushing configurations. Pushing them becomes worthwhile if you spend countless hours making the same change across many routers or switches.

Network Configuration Automation

One of the easiest and quickest ways to get started with network automation is to automate the creation of the device configuration files used for initial device provisioning and push them to network devices. You can also gather a lot of information with automation. Network devices hold an enormous amount of static and ephemeral data, and using open-source tools or building your own gives you access to it.

Examples of this type of data include entries in the BGP table, OSPF adjacencies, active neighbors, interface statistics, specific counters and resets, and even counters from application-specific integrated circuits (ASICs) themselves on newer platforms.

Guide with Ansible Core

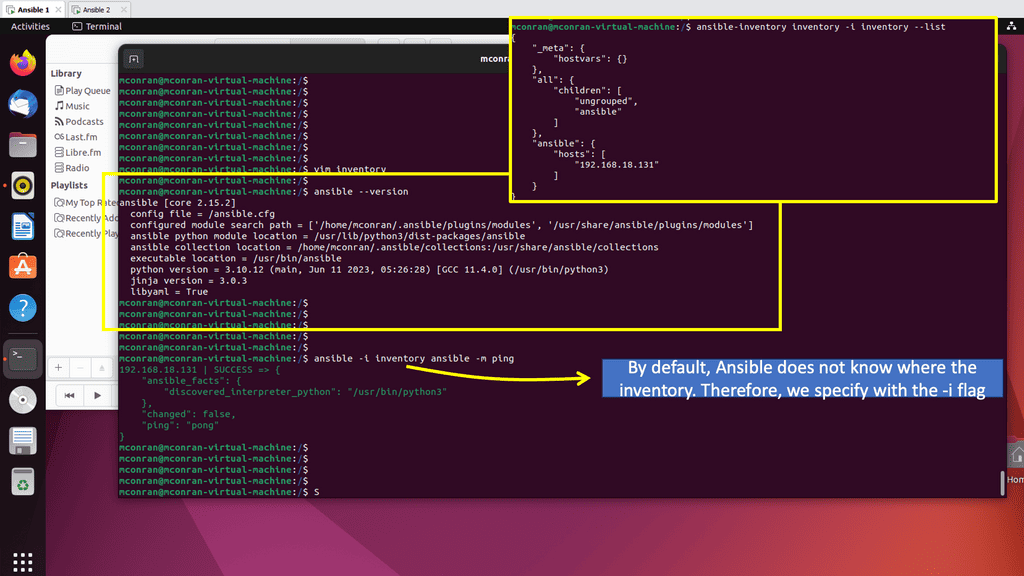

In this example, Ansible is installed and a managed host is already prepared. The managed host needs SSH enabled and a user with admin privileges. Ansible finds managed hosts by reading the inventory file. The inventory file is also a good place to define variables that keep site-specific information out of playbooks; these are set in the host vars section.

Remember that Ansible requires Python. Here we are running Python 3 with Jinja version 3.0.3, which is used for templating. You can pass instructions to Ansible managed hosts with playbooks and ad hoc commands. Here, I’m using an ad hoc command, which calls the command module by default, and testing connectivity with a ping.

**Benefits of Network Configuration Automation**

1. Time and Resource Efficiency: Organizations can free up their IT staff to focus on more strategic initiatives by automating repetitive and time-consuming network configuration tasks. This results in increased productivity and efficiency across the organization.

2. Enhanced Accuracy and Consistency: Manual configuration processes are prone to human error, leading to misconfigurations and network downtime. Network configuration automation reduces these risks by applying configurations consistently and accurately, lowering the chances of costly errors.

3. Rapid Network Deployment: Network administrators can quickly deploy network configurations across multiple devices simultaneously with automation tools. This accelerates network deployment and enables organizations to respond faster to changing business needs.

4. Improved Security and Compliance: Network configuration automation enhances security by enforcing standardized configurations and ensuring compliance with industry regulations. Automated security protocols can be applied consistently across the network, reducing vulnerabilities and enhancing overall network protection.

5. Simplified Network Management: Automation tools provide a centralized platform for managing network configurations, making it easier to monitor, troubleshoot, and maintain network devices. This simplifies network management and reduces the complexity associated with manual configuration processes.

**Implementing Network Configuration Automation**

To implement network configuration automation, organizations need to consider the following steps:

1. Assess Network Requirements: Understand the specific network requirements, including device types, network protocols, and security policies.

2. Select an Automation Tool: Evaluate different automation tools available on the market and choose the one that best suits the organization’s needs and network infrastructure.

3. Create Configuration Templates: Develop standardized configuration templates that can be easily applied to network devices. These templates should include best practices, security policies, and network-specific configurations.

4. Test and Validate: Before deploying automated configurations, thoroughly test and validate them in a controlled environment to ensure their effectiveness and compatibility with the existing network infrastructure.

5. Monitor and Maintain: Regularly monitor and maintain the automated network configurations to identify and resolve any issues or security vulnerabilities that may arise.
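The test, validate, and monitor steps above can start very simply. The sketch below checks a device configuration against a list of required and forbidden lines; the specific lines, the sample configuration, and the function name `check_compliance` are assumptions chosen for illustration, and a real standard would be defined by your own organization.

```python
# Hypothetical standards: lines every device must carry, and lines none may carry.
REQUIRED_LINES = {"service password-encryption", "ntp server 192.0.2.10"}
FORBIDDEN_LINES = {"ip http server"}


def check_compliance(config_text):
    """Return (missing, forbidden) sets of lines for one device configuration."""
    lines = {line.strip() for line in config_text.splitlines() if line.strip()}
    missing = REQUIRED_LINES - lines
    forbidden = FORBIDDEN_LINES & lines
    return missing, forbidden


# Hypothetical candidate configuration to validate before deployment.
candidate = """\
hostname edge-01
service password-encryption
ip http server
"""

missing, forbidden = check_compliance(candidate)
print("missing:", missing)
print("forbidden:", forbidden)
```

The same check can run in a lab before deployment and on a schedule afterward to catch drift.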

The Need to Automate Network Configuration

Firewalls always seem to carry hundreds, if not thousands, of outdated rules for application services that are no longer required. Another example is unused VLANs left configured on access ports, posing a security risk. The problem lies in the process: how we change and provision the network is not tied to the application, and it is not automated. Inconsistent configurations tend to grow because human interaction is required to tidy things up, and people move on and change roles.

You cannot guarantee that the person who creates a firewall rule will be the engineer who deletes it once the corresponding application is decommissioned or changed. And if you don’t have a rigorous change control process, deprecated configurations will sit idle on active nodes.
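One way to tie rules back to applications is to record an owning application with every rule, so cleanup no longer depends on who remembers what. The following is a minimal sketch under that assumption; the rule records, the `app` field, and the list of active applications are all hypothetical.

```python
# Hypothetical rule records; the "app" field ties each rule to its application.
RULES = [
    {"id": 1, "app": "billing", "action": "permit tcp any host 192.0.2.20 eq 443"},
    {"id": 2, "app": "legacy-crm", "action": "permit tcp any host 192.0.2.30 eq 8080"},
    {"id": 3, "app": "billing", "action": "permit tcp any host 192.0.2.21 eq 5432"},
]

# Hypothetical set of applications that are still in service.
ACTIVE_APPS = {"billing"}


def stale_rules(rules, active_apps):
    """Return rules whose owning application has been decommissioned."""
    return [rule for rule in rules if rule["app"] not in active_apps]


to_remove = stale_rules(RULES, ACTIVE_APPS)
for rule in to_remove:
    print(f"rule {rule['id']} belongs to retired app {rule['app']}")
```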

A key point: The use of Ansible variables in an Ansible architecture.

For configuration management, you could opt for Red Hat Ansible. In the Ansible architecture, playbooks contain tasks that call modules to run against the target hosts listed in the inventory. Plugins add further functionality, and Ansible variables allow flexible playbook development. Ansible Core is the CLI-based version of automation, and Ansible Tower is the platform.

For enterprise-wide security, a platform-based approach to the Ansible architecture is recommended. Combining the platform with Ansible variables makes for a flexible automation journey: a single playbook, with site-specific information moved into variables, can run against several inventories that map to your different environments, such as Dev, Staging, and Production.
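The idea of one definition running against several inventories can be illustrated outside Ansible. The sketch below is plain Python, not Ansible syntax: the environment names follow the text, while the variable names, addresses, and baseline lines are assumptions for the example.

```python
from string import Template

# Hypothetical per-environment variables, playing the role of inventory variables.
INVENTORIES = {
    "dev": {"ntp_server": "192.0.2.10", "syslog_host": "192.0.2.50"},
    "staging": {"ntp_server": "198.51.100.10", "syslog_host": "198.51.100.50"},
    "production": {"ntp_server": "203.0.113.10", "syslog_host": "203.0.113.50"},
}

# One shared definition with no site-specific values, like a single playbook.
BASELINE = Template("ntp server $ntp_server\nlogging host $syslog_host")

# The same definition is rendered once per environment.
rendered = {env: BASELINE.substitute(vars_) for env, vars_ in INVENTORIES.items()}

for env, text in rendered.items():
    print(f"--- {env} ---\n{text}")
```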

The network is critical for business continuity, resulting in real uptime pressure. Operational uptime is directly tied to the success of the business. That pressure results in a manual culture, and manual work is slow. The actual bottleneck is this manual culture for network provisioning and operation.

Virtualization – Beginning the change

Virtualization vendors are changing the manual approach. Consider basic MAC address learning on a traditional switch. The switch examines the source MAC address of each incoming Ethernet frame. If the address is already known, nothing needs to be done; if it is not, the switch adds the MAC address to its table and records the port on which the frame arrived. The switch thus maintains a MAC-to-port mapping. The table is continually maintained, with MAC addresses added and removed via timers and flushing.
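The learning process can be modeled as a small table with an aging timer. This is a toy sketch, not a switch implementation: the class name, the 300-second default, and the choice to refresh the timestamp on every frame are assumptions, and time is passed in explicitly so the behavior is easy to follow.

```python
class LearningSwitch:
    """Toy model of source-MAC learning with an aging timer."""

    def __init__(self, max_age=300):
        self.max_age = max_age      # seconds before an idle entry is removed
        self.table = {}             # MAC address -> (port, last_seen)

    def receive(self, src_mac, port, now):
        """Learn or refresh the source MAC against the ingress port."""
        self.table[src_mac] = (port, now)

    def lookup(self, dst_mac):
        """Return the egress port, or None when the frame must be flooded."""
        entry = self.table.get(dst_mac)
        return entry[0] if entry else None

    def age_out(self, now):
        """Drop entries that have not been seen within max_age seconds."""
        self.table = {
            mac: (port, seen)
            for mac, (port, seen) in self.table.items()
            if now - seen <= self.max_age
        }


switch = LearningSwitch(max_age=300)
switch.receive("aa:aa:aa:aa:aa:01", port=1, now=0)
switch.receive("aa:aa:aa:aa:aa:02", port=2, now=200)
known_port = switch.lookup("aa:aa:aa:aa:aa:01")
switch.age_out(now=400)  # the first entry is now 400 seconds old and is removed
```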

The virtual switch

The virtual switch operates differently. Whenever a VM spins up and a vNIC attaches to the virtual switch, the hypervisor programs the virtual switch with everything it needs to forward that VM's traffic. There is no MAC learning. When you spin down the VM, the hypervisor does not need to wait for a timer.

It knows the source is no longer there; as a result, it no longer needs to hold that state. Less state in a network is a good thing. The critical point is that the provisioning of the application or virtual machine is tightly coupled with the provisioning of network resources. Tightly coupling applications to network provisioning leaves less stale state to clean up later, that is, less “garbage collection.”
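By contrast with a learning switch, the hypervisor-driven model needs no timers at all. The sketch below is a toy illustration; the class and method names are assumptions and do not reflect any particular hypervisor's API.

```python
class VirtualSwitch:
    """Toy model of a hypervisor-programmed forwarding table (no learning)."""

    def __init__(self):
        self.table = {}  # MAC address -> virtual port

    def attach_vnic(self, mac, port):
        """Called at VM power-on: state is installed before any frame is sent."""
        self.table[mac] = port

    def detach_vnic(self, mac):
        """Called at VM power-off: state is removed at once, with no timer."""
        self.table.pop(mac, None)


vswitch = VirtualSwitch()
vswitch.attach_vnic("00:50:56:00:00:01", port=7)
port_while_running = vswitch.table.get("00:50:56:00:00:01")
vswitch.detach_vnic("00:50:56:00:00:01")
```

The forwarding state exists exactly as long as the VM does, which is the tight coupling the text describes.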

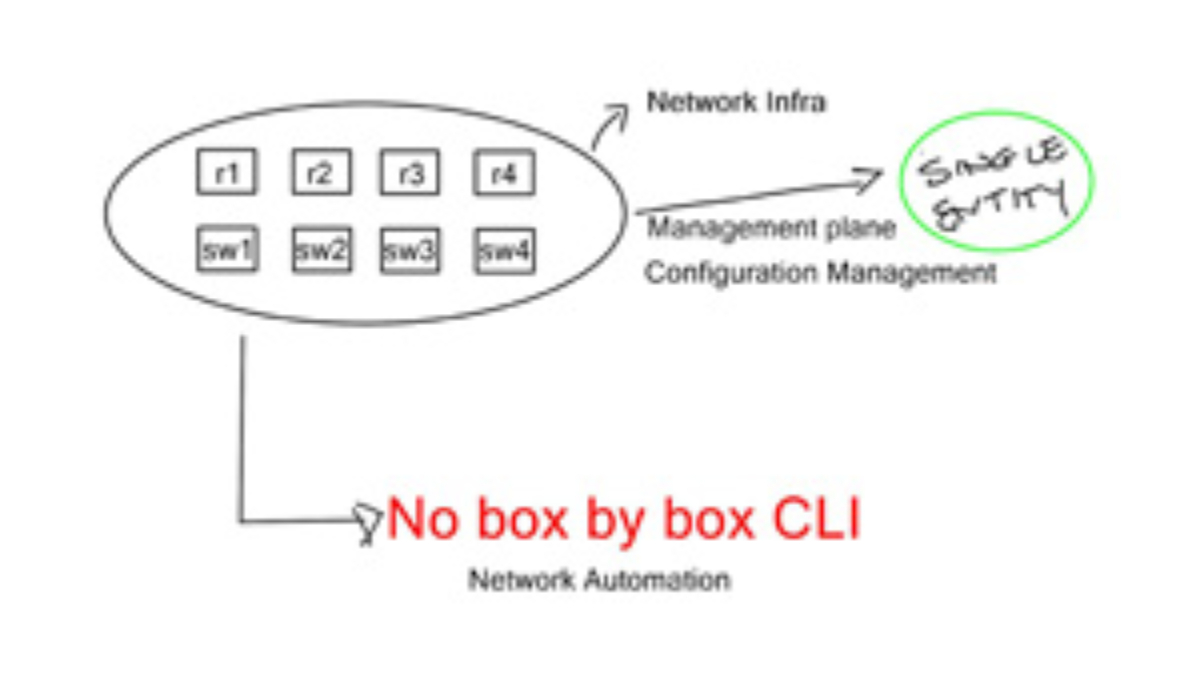

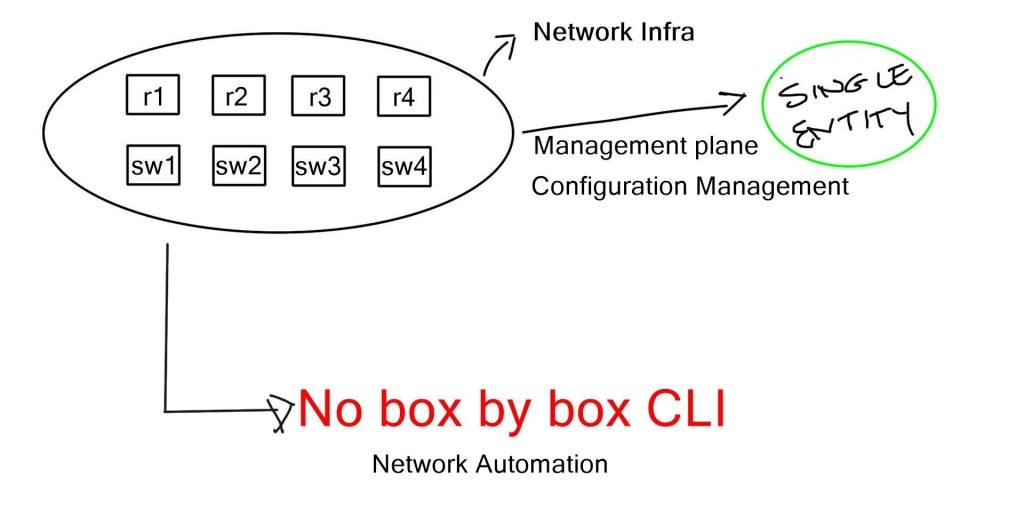

Box mentality

Once the high-level and low-level designs (HLD/LLD) are complete and you move to the configuration stage, the implementation-specific details are handled per box. The commands are defined on individual boxes and are vendor-specific. This works functionally, and it’s how the Internet was built, but it lacks agility and proper configuration management. Many repetitive tasks with a box mentality destroy your ability to scale.

Businesses are mainly concerned with agility and continuity, but you cannot have these two things with manual provisioning. You must look at your network as a system, not as individual boxes. When you look at applications and how they scale, the current box-by-box implementation method does not scale or keep pace with the applications. The solution is to move to network configuration automation and automated interaction.

Network Configuration Automation and Automate Network Configuration

We must move out of a manual approach and into an automated system. Focus initially on low-hanging fruit and easy wins. What takes engineers the longest to do? Do VLAN and subnet allocation sheets ring a bell? We should size according to demand and not care about the type of VLAN or the internal subnet allocation.

Microsoft Azure is a good example. It does not care which private addresses it assigns to internal systems; it automates the IP allocation and gateway assignment so you can communicate locally. Designing optimum networks to last and scale is not good enough anymore. The network must evolve and be programmed to keep up with app placement. The configuration approach needs to change, and we should move to proper configuration management and automation.
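Automated allocation can replace the subnet spreadsheet with a few lines of code. The sketch below is an assumption-laden illustration, not a description of how any cloud provider works internally: the pool, the /24 subnet size, the tenant names, and the convention that the first usable host becomes the gateway are all choices made for the example.

```python
import ipaddress


class SubnetAllocator:
    """Hand out equal-sized subnets from a private pool on demand."""

    def __init__(self, pool, new_prefix):
        self._subnets = ipaddress.ip_network(pool).subnets(new_prefix=new_prefix)
        self.assigned = {}  # tenant name -> (subnet, gateway)

    def allocate(self, tenant):
        """Assign the next free subnet; the first usable host is the gateway."""
        if tenant not in self.assigned:
            subnet = next(self._subnets)  # raises StopIteration when the pool is empty
            gateway = next(iter(subnet.hosts()))
            self.assigned[tenant] = (subnet, gateway)
        return self.assigned[tenant]


allocator = SubnetAllocator("10.0.0.0/16", new_prefix=24)
web_subnet, web_gateway = allocator.allocate("web")
db_subnet, db_gateway = allocator.allocate("db")
print(web_subnet, web_gateway)
print(db_subnet, db_gateway)
```

Asking for the same tenant twice returns the same subnet, so the allocator can be called safely from repeated automation runs.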

Ansible is a popular tool of choice. As previously mentioned, we have Ansible Tower as a platform, and for CLI-based automation, we have Ansible Core, which supports variable substitution with Ansible variables.

SDN: A companion to network automation?

One benefit of Software-Defined Networking (SDN) is that it lets you view your network holistically, with a central viewpoint. Network configuration automation is not SDN, and SDN is not network automation. They work side by side and complement each other. SDN lets you work with abstractions and hides the detail from those who do not need to see it.

The application owners do not care about VLANs. Application designers should also not care about local IP allocations if they have designed the application correctly. Centralization is also a goal for SDN. Centralization with SDN is different from control-plane centralization. Central SDN controller devices should not fully control the control plane.

SDN companies have learned this and now allow network nodes to handle some control-plane operations.

Programming network: Automate network configuration

You don’t need to be a programmer, but you should start thinking like one. Learning to program will make you better equipped to deal with network changes. Programming networks is a diagonal step from what you are doing now, offering an environment to run code and ways to test code before you roll it out.

The CLI is currently the most dangerous approach to device configuration; you can even lock yourself out of a device. Programming adds a safety net. It’s more of a mental shift. Stop jumping to the CLI and THINK FIRST. Break the task down and create workflows. Workflows are then mapped to an automation platform.

A key point: TCL and EXPECT

TCL (Tool Command Language) is a scripting language created in 1988 at UC Berkeley. It aims to connect shell scripts and Unix commands. EXPECT is a TCL extension written by Don Libes. It automates Telnet, SSH, and serial sessions to perform many repetitive tasks.

EXPECT’s main drawbacks are that it is not secure and that it is synchronous only. Login credentials for a device appear in plain text in the EXPECT script, and you cannot encrypt that data in the code. In addition, it operates sequentially: you send a command and wait for the output. It does not send, send, send and then wait to receive; it follows a send-and-wait, send-and-wait methodology.

A key point: SNMP has failed | NETCONF begins

SNMP is used for fault handling, monitoring equipment, and retrieving performance data, but very few use SNMP to set configurations. More often than not, there is no one-to-one translation between a CLI configuration operation and an SNMP “SET,” and that correlation is hard to establish. As a result, not many people use SNMP for device configuration and management.

CLI scripting was the primary approach to automating network configuration changes before NETCONF. Unfortunately, CLI scripting has several limitations, including a lack of transaction management, no structured error management, and the ever-changing structure and syntax of commands, making scripts fragile and costly to maintain. Even the use of autocorrelation scripts won’t be able to fix it.

People make mistakes, and ultimately, people are bad at stuff. It’s the nature of the beast. Human interaction with the network is a major cause of network outages, and a person who is not logging in and working at the CLI is less likely to make a costly mistake.

NETCONF & Tail-F

NETCONF (Network Configuration Protocol) is a network management protocol that uses XML-based data encoding for both configuration data and protocol messages. It offers secure transport and is asynchronous, so it is not sequential like TCL and EXPECT, which makes NETCONF more efficient. It also allows configuration data to be separated from non-configuration (state) data.
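To show what an XML-encoded NETCONF message looks like, the sketch below builds an `<edit-config>` request with Python's standard library. The base namespace comes from the NETCONF specification (RFC 6241); the VLAN payload and its `urn:example:vlans` namespace are hypothetical, since real devices expect elements from their own YANG models, and the candidate datastore is an optional capability that a given device may not support. Nothing is sent anywhere; the code only constructs the message.

```python
import xml.etree.ElementTree as ET

# Base NETCONF namespace from RFC 6241.
NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"


def build_edit_config(message_id, payload):
    """Build an <edit-config> RPC that targets the candidate datastore."""
    ET.register_namespace("", NETCONF_NS)
    rpc = ET.Element(f"{{{NETCONF_NS}}}rpc", {"message-id": str(message_id)})
    edit = ET.SubElement(rpc, f"{{{NETCONF_NS}}}edit-config")
    target = ET.SubElement(edit, f"{{{NETCONF_NS}}}target")
    ET.SubElement(target, f"{{{NETCONF_NS}}}candidate")
    config = ET.SubElement(edit, f"{{{NETCONF_NS}}}config")
    config.append(payload)
    return ET.tostring(rpc, encoding="unicode")


# Hypothetical payload: real devices expect elements from their own YANG models.
vlan = ET.Element("{urn:example:vlans}vlan")
ET.SubElement(vlan, "{urn:example:vlans}id").text = "10"

rpc_xml = build_edit_config(101, vlan)
print(rpc_xml)
```

In practice a NETCONF client library would handle the session and framing; the point here is only that the request is structured data rather than screen-scraped CLI text.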

SNMP makes backup and restore complex because it is unclear which fields are needed for a restore. Also, because of SNMP’s binary nature, it isn’t easy to compare configurations from one device to another. NETCONF is much better at this.
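Once configurations are available as text, comparing two devices is straightforward. The sketch below uses Python's standard `difflib` module; the two configurations and the function name `config_diff` are assumptions for illustration.

```python
import difflib

# Hypothetical text configurations retrieved from two devices.
device_a = ["hostname edge-01", "ntp server 192.0.2.10", "snmp-server community public"]
device_b = ["hostname edge-02", "ntp server 192.0.2.10"]


def config_diff(left, right, left_name="device_a", right_name="device_b"):
    """Return a unified diff of two configurations given as lists of lines."""
    return list(
        difflib.unified_diff(left, right, left_name, right_name, lineterm="")
    )


diff_lines = config_diff(device_a, device_b)
print("\n".join(diff_lines))
```

Lines prefixed with `-` exist only on the first device and lines prefixed with `+` only on the second, which makes drift between supposedly identical devices easy to spot.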

A final note: Transaction-based approach

NETCONF offers a transaction-based approach. A transaction is a set of configuration changes, not a sequence. SNMP for configuration requires everything to be in the correct sequence/order. But with a transaction, you throw in everything, and the device figures out how to roll it out.
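The difference between a set of changes and a sequence can be sketched with a candidate copy that is validated as a whole before it replaces the running state. This is a conceptual model only, not NETCONF itself: the class name, the dictionary-based configuration, and the validation rule are assumptions made for the example.

```python
import copy


class ConfigTransaction:
    """Apply a set of changes to a copy and commit only if all of it validates."""

    def __init__(self, running):
        self.running = running
        self.candidate = copy.deepcopy(running)

    def set(self, key, value):
        """Stage one change on the candidate; the running config is untouched."""
        self.candidate[key] = value

    def commit(self, validate):
        """Swap in the candidate if valid; otherwise discard it (rollback)."""
        if not validate(self.candidate):
            self.candidate = copy.deepcopy(self.running)
            return False
        self.running.clear()
        self.running.update(self.candidate)
        return True


def vlans_exist(config):
    """Hypothetical rule: every access port must reference a defined VLAN."""
    return all(vlan in config["vlans"] for vlan in config["ports"].values())


running = {"vlans": {10}, "ports": {"eth1": 10}}

# Order does not matter: the port change is staged before the VLAN it needs.
good = ConfigTransaction(running)
good.set("ports", {"eth1": 10, "eth2": 20})
good.set("vlans", {10, 20})
committed = good.commit(vlans_exist)

# This set of changes references VLAN 30, which is never defined, so it is rejected.
bad = ConfigTransaction(running)
bad.set("ports", {"eth1": 10, "eth3": 30})
rejected = not bad.commit(vlans_exist)
```

Because validation happens on the complete set, a partially applied change never reaches the running configuration.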

What matters is that operators can write service-level applications that activate service-level changes without making the application aware of the full sequence of changes that must be completed before the network can serve application requests and responses. Check out Tail-F (now part of Cisco), which offers a family of NETCONF-enabled products.

Closing Points on Network Configuration Automation

Automation in networking refers to the use of software and tools to execute network management tasks with minimal human intervention. This includes configuration, management, testing, deployment, and operation of physical and virtual devices within a network. The primary goal of automation is to improve efficiency, reduce human error, and enable network administrators to focus on strategic tasks rather than mundane, repetitive ones.

The integration of automation into networking brings numerous advantages. Firstly, it significantly enhances operational efficiency by automating routine tasks, allowing network teams to allocate their time and resources more effectively. Secondly, it reduces the risk of human errors, which are often the cause of network outages and security breaches. Thirdly, automation supports scalability, enabling networks to grow and adapt without the need for extensive manual configuration. Lastly, it provides real-time insights and analytics, empowering businesses to make data-driven decisions.

Despite its benefits, the implementation of automation in networking is not without challenges. One of the primary obstacles is the complexity of integrating automation tools with existing network infrastructure. Organizations may also face resistance from IT staff who are wary of changing established processes. Additionally, there is a need for ongoing training and upskilling to ensure that teams can effectively manage and optimize automated systems. Addressing these challenges requires careful planning, investment, and a commitment to change management.

As technology continues to evolve, the future of networking automation looks promising. Advances in artificial intelligence and machine learning are expected to further enhance automation capabilities, leading to smarter, more adaptive networks. The rise of the Internet of Things (IoT) and 5G technologies will also drive demand for automated solutions to manage the increased complexity and volume of network traffic. As businesses continue to embrace digital transformation, automation will become an indispensable component of modern networking strategies.

Summary: Network Configuration Automation

Network configuration is crucial in ensuring seamless connectivity and efficient data flow in today’s fast-paced technological landscape. However, the manual configuration of networks can be time-consuming, prone to errors, and hinder scalability. This is where network configuration automation comes into play, revolutionizing how networks are managed and optimized. In this blog post, we explored the benefits, implementation techniques, and best practices of network configuration automation.

Understanding Network Configuration Automation

Network configuration automation involves leveraging software and tools to automate the configuration and management of network devices. Reducing human intervention cuts down on manual errors, enhances agility, and enables effective network management at scale.

Benefits of Network Configuration Automation

Automating network configuration brings a plethora of advantages to organizations. Firstly, it significantly reduces human errors, ensuring accurate and consistent device configurations. Secondly, it enhances efficiency by saving time and effort spent on manual configurations. Additionally, automation allows for faster deployment of network changes, improving overall network agility and responsiveness.

Implementation Techniques for Network Configuration Automation

Implementing network configuration automation requires a structured approach. It involves:

1. Inventory and Device Discovery: Create an inventory of network devices and establish a comprehensive understanding of the existing network infrastructure.

2. Configuration Templates and Version Control: Develop standardized configuration templates that can be easily applied to multiple devices. Implement version control mechanisms to track and manage configuration changes effectively.

3. Orchestration and Automation Tools: Leverage network automation tools that provide scripting, scheduling, and deployment automation features. Tools like Ansible, Chef, and Puppet offer potent capabilities to streamline network configuration.

Best Practices for Network Configuration Automation

To ensure a successful implementation of network configuration automation, consider the following best practices:

1. Test and Verify: Before deployment, thoroughly test and verify automation scripts and templates to ensure they align with the desired network configuration and functionality.

2. Security and Compliance: Incorporate security measures and compliance standards into automation processes to protect network assets and ensure adherence to industry regulations.

3. Documentation and Change Management: Maintain detailed documentation of configurations and changes made through automation. Implement a change management process to track modifications and facilitate troubleshooting.

Conclusion

Network configuration automation is a game-changer in network management. By embracing automation, organizations can reduce errors, enhance efficiency, and improve overall network performance. Whether deploying standardized configurations or orchestrating complex network changes, automation streamlines processes, allowing IT teams to focus on strategic initiatives and innovation.