**Understanding Zero Trust Networking**

In today’s digital landscape, where cyber threats are ever-evolving, traditional security models are often inadequate. Enter Zero Trust Networking, a revolutionary approach that challenges the “trust but verify” mindset. Instead, Zero Trust operates on a “never trust, always verify” principle. This model assumes that threats can originate both outside and inside the network, leading to a more robust security posture. By scrutinizing every access request and continuously validating user permissions, Zero Trust Networking aims to protect organizations from data breaches and unauthorized access.

**Key Components of Zero Trust**

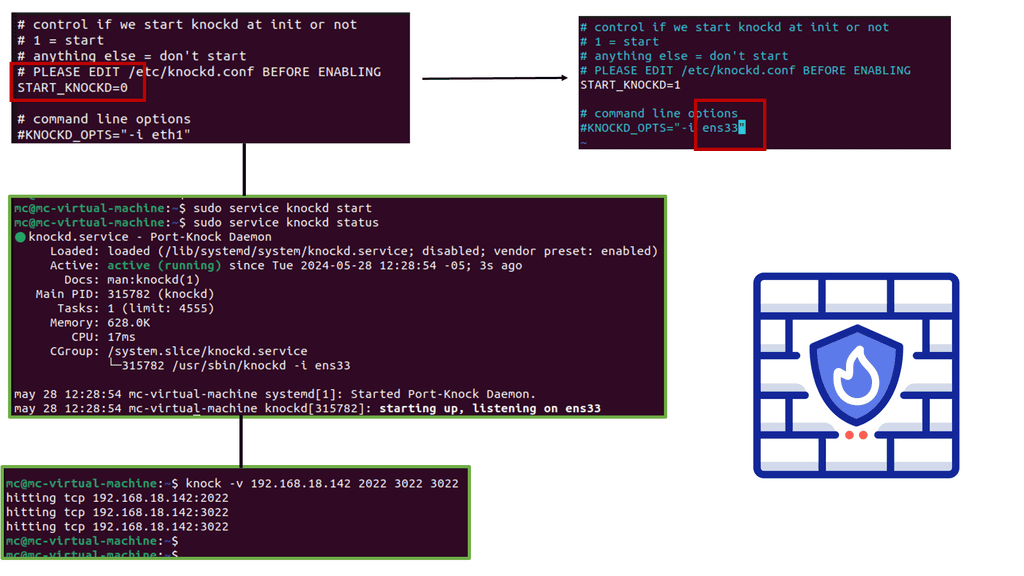

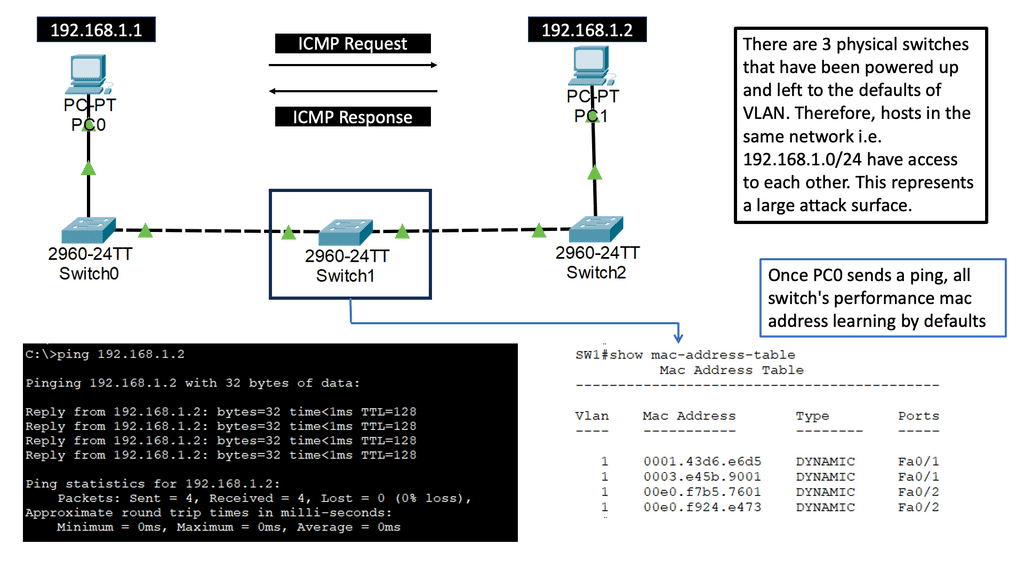

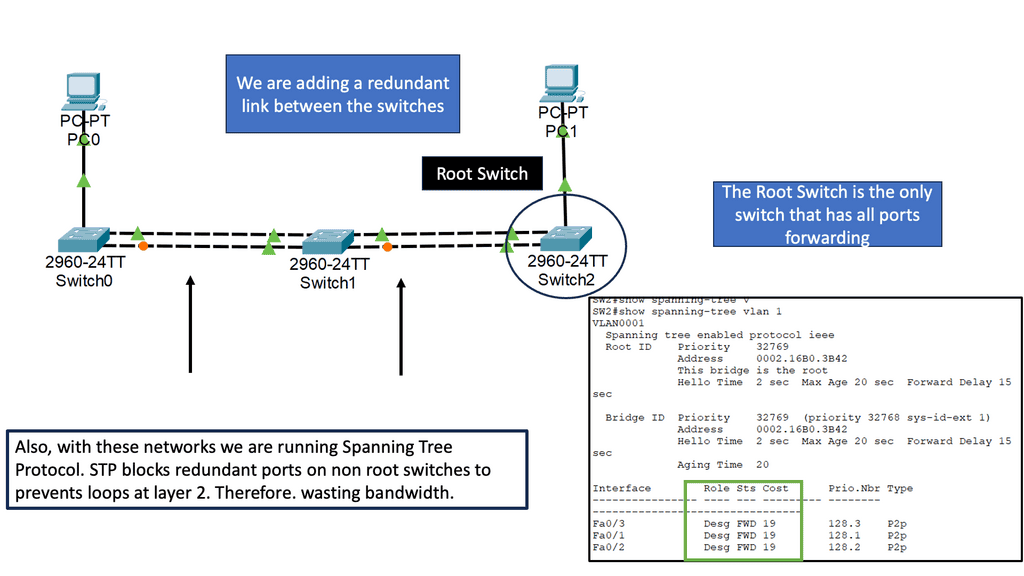

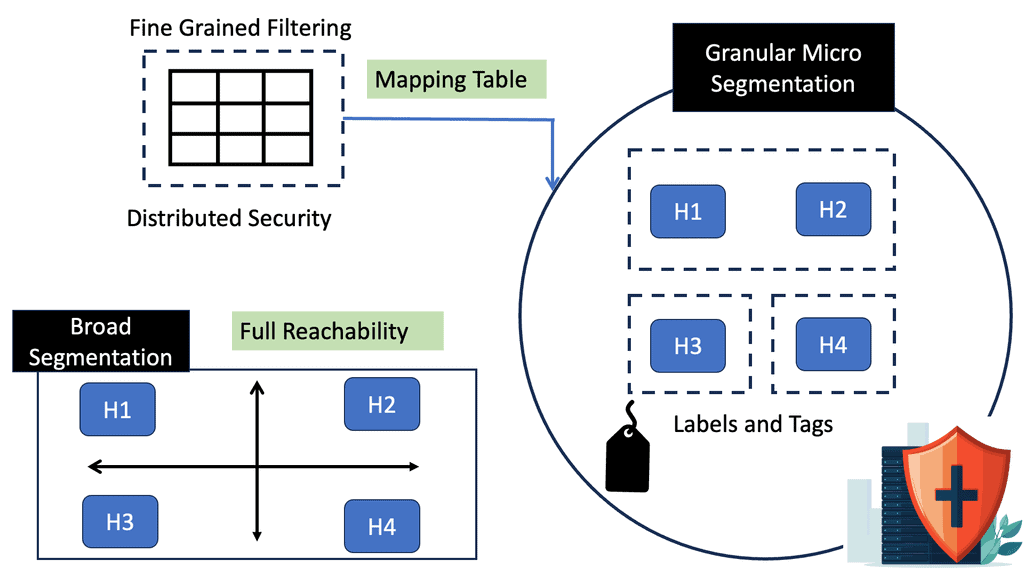

Implementing a Zero Trust Network involves several key components. First, identity verification becomes paramount. Every user and device must be authenticated and authorized before accessing any resource. This can be achieved through strong multi-factor authentication mechanisms. Secondly, micro-segmentation plays a critical role in limiting lateral movement within the network. By dividing the network into smaller, isolated segments, Zero Trust ensures that even if one segment is compromised, the threat is contained. Finally, continuous monitoring and analytics are essential. By keeping a watchful eye on user behavior and network activity, anomalies can be detected and addressed swiftly.

**Benefits of Adopting Zero Trust**

Adopting a Zero Trust model offers numerous benefits for organizations. One of the most significant advantages is the enhanced security posture it provides. By reducing the attack surface and limiting access to only what is necessary, organizations can significantly decrease the likelihood of a breach. Moreover, Zero Trust enables compliance with stringent regulatory requirements by ensuring that data access is strictly controlled and monitored. Additionally, with the rise of remote work and cloud-based services, Zero Trust offers a flexible and scalable security solution that adapts to changing business needs.

**Challenges in Implementing Zero Trust**

Despite its advantages, transitioning to a Zero Trust Network is not without challenges. Organizations may face resistance from employees accustomed to traditional access models. The initial setup and configuration of Zero Trust can also be complex and resource-intensive. Furthermore, maintaining continuous visibility and control over every device and user can strain IT resources. However, these challenges can be mitigated by gradually implementing Zero Trust principles, starting with high-risk areas, and leveraging automation and advanced analytics.

Understanding Zero Trust Networking

Zero-trust networking is a security model that challenges the traditional perimeter-based approach. It operates on the principle of “never trust, always verify.” Every user, device, or application trying to access a network is treated as potentially malicious until proven otherwise. Zero-trust networking aims to reduce the attack surface and prevent lateral movement within a network by eliminating implicit trust.

Several components are crucial to implementing zero-trust networking effectively. These include:

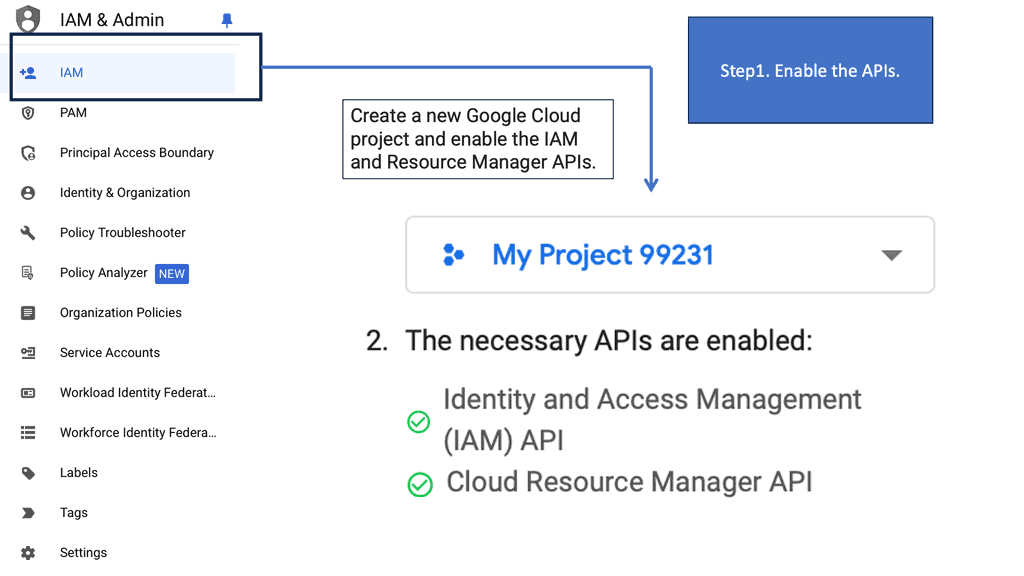

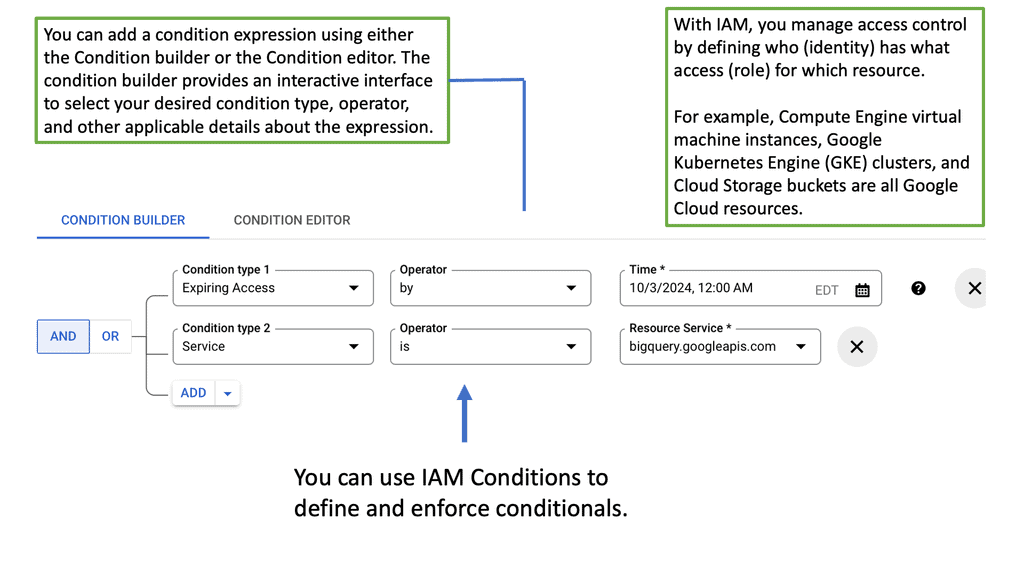

1. Identity and Access Management (IAM): IAM solutions play a vital role in zero-trust networking by ensuring that only authenticated and authorized individuals can access specific resources. Multi-factor authentication, role-based access control, and continuous monitoring are critical features of IAM in a zero-trust architecture.

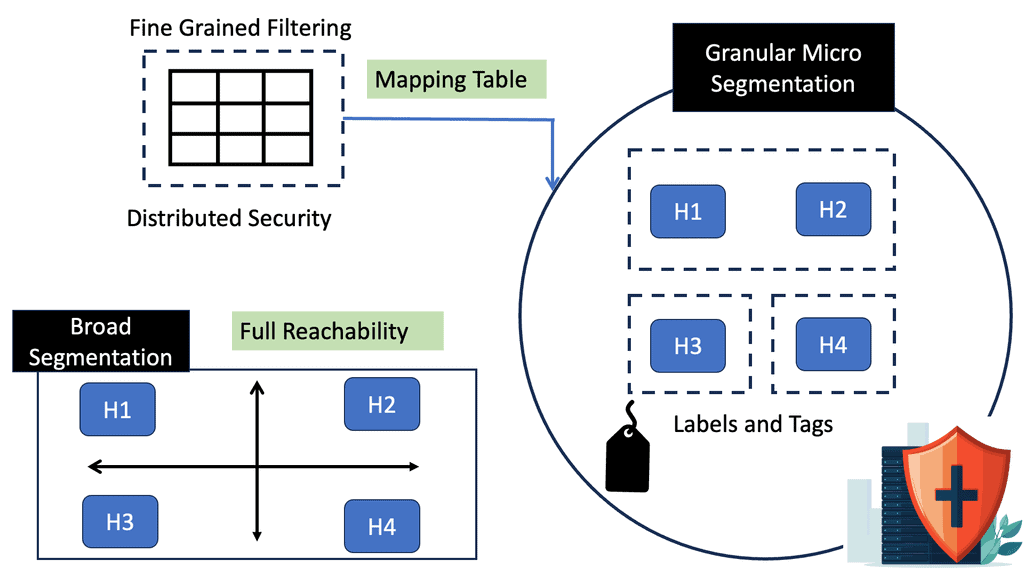

2. Microsegmentation: Microsegmentation divides a network into smaller, isolated segments, enhancing security by limiting lateral movement. Each segment has its own security policies and controls, preventing unauthorized access and reducing the potential impact of a breach.
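The idea of per-segment policy can be sketched in a few lines of Python. This is a minimal illustration, not a real enforcement engine: the segment names, address ranges, and allowed flows are all invented for the example, and a flow is permitted only when an explicit rule matches (default deny).

```python
import ipaddress

# Hypothetical segments and allow rules, invented for illustration only.
SEGMENTS = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "app": ipaddress.ip_network("10.0.2.0/24"),
    "db": ipaddress.ip_network("10.0.3.0/24"),
}

# Each rule is (source segment, destination segment, destination port).
ALLOWED_FLOWS = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}


def segment_of(ip):
    """Return the name of the segment containing ip, or None."""
    address = ipaddress.ip_address(ip)
    for name, network in SEGMENTS.items():
        if address in network:
            return name
    return None


def is_flow_allowed(src_ip, dst_ip, dst_port):
    """Default deny: a flow passes only if an explicit rule matches."""
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    return (src, dst, dst_port) in ALLOWED_FLOWS


print(is_flow_allowed("10.0.1.10", "10.0.2.20", 8080))  # web -> app: True
print(is_flow_allowed("10.0.1.10", "10.0.3.30", 5432))  # web -> db: False
```

Because the web segment has no rule that reaches the database segment, a compromised web host cannot talk to the database directly, which is the lateral-movement containment described above.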

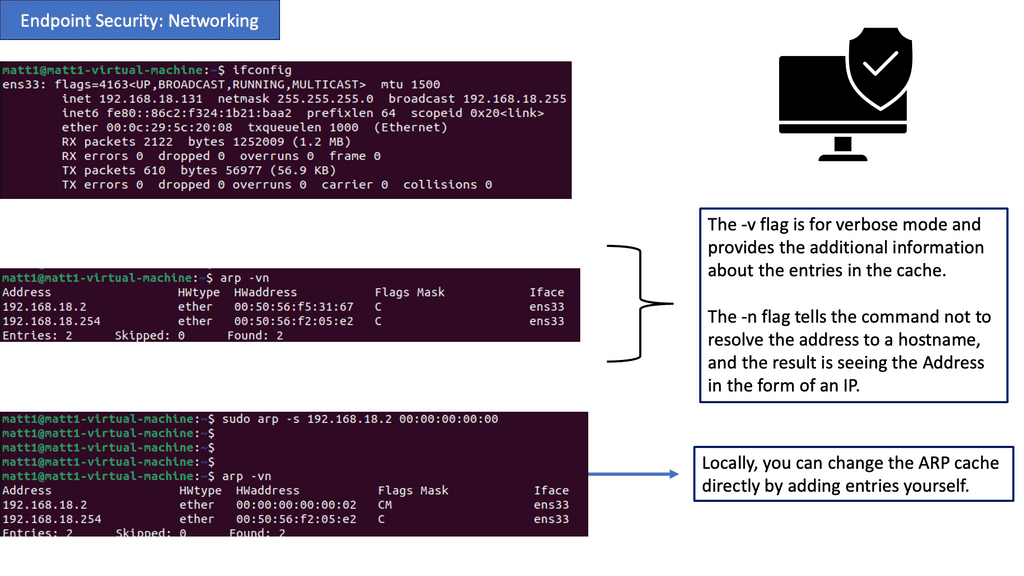

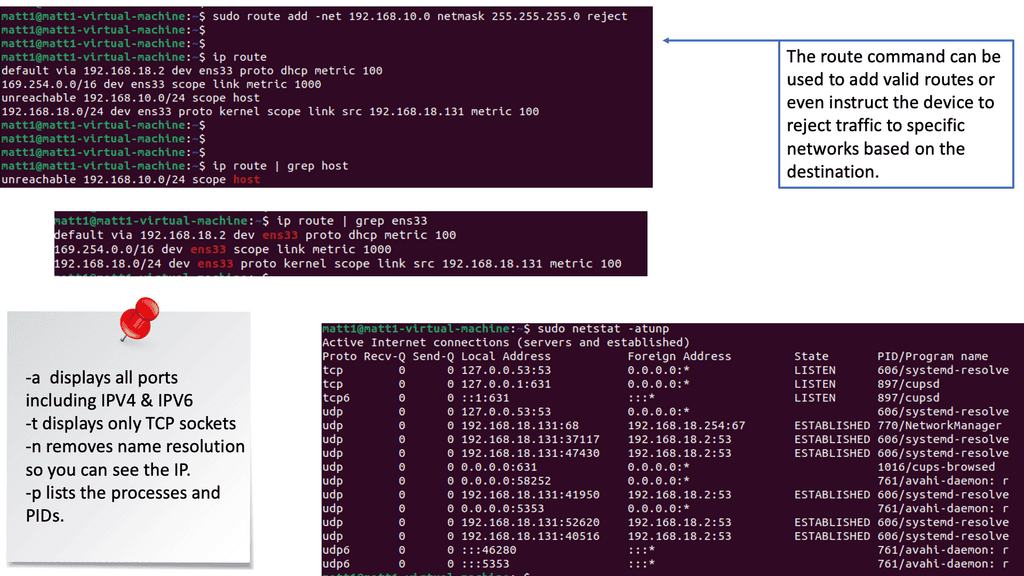

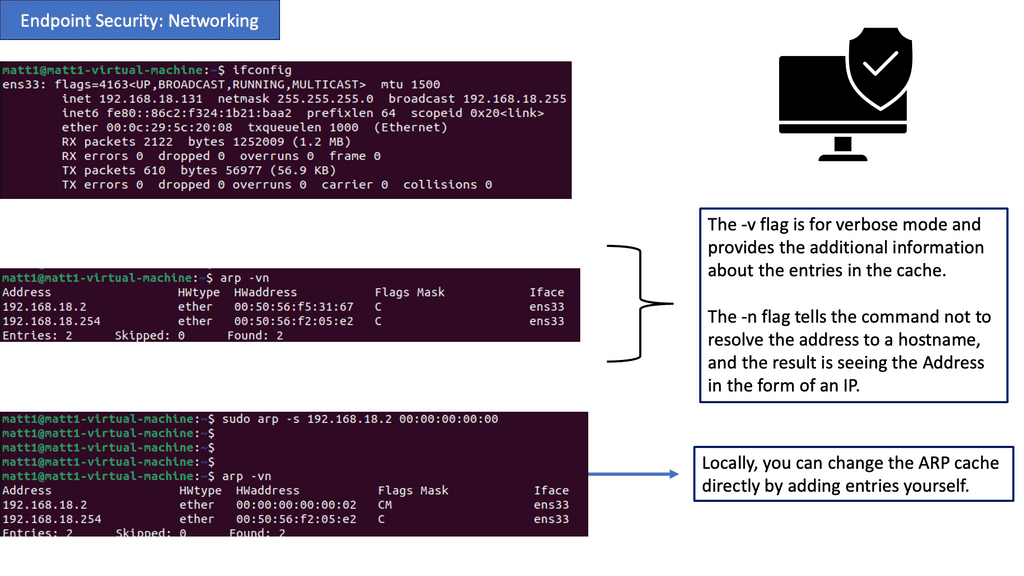

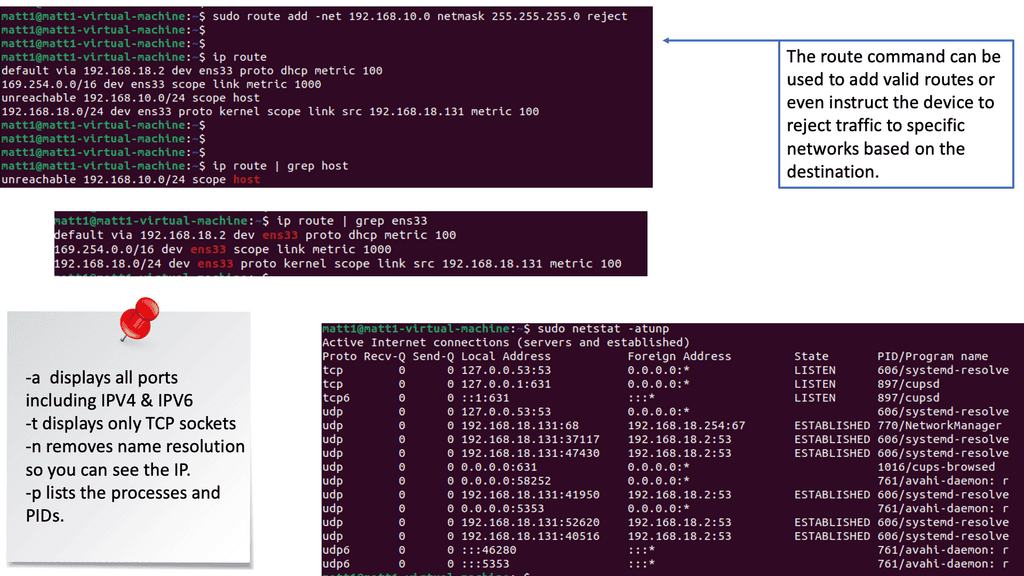

Endpoint Security: Networking

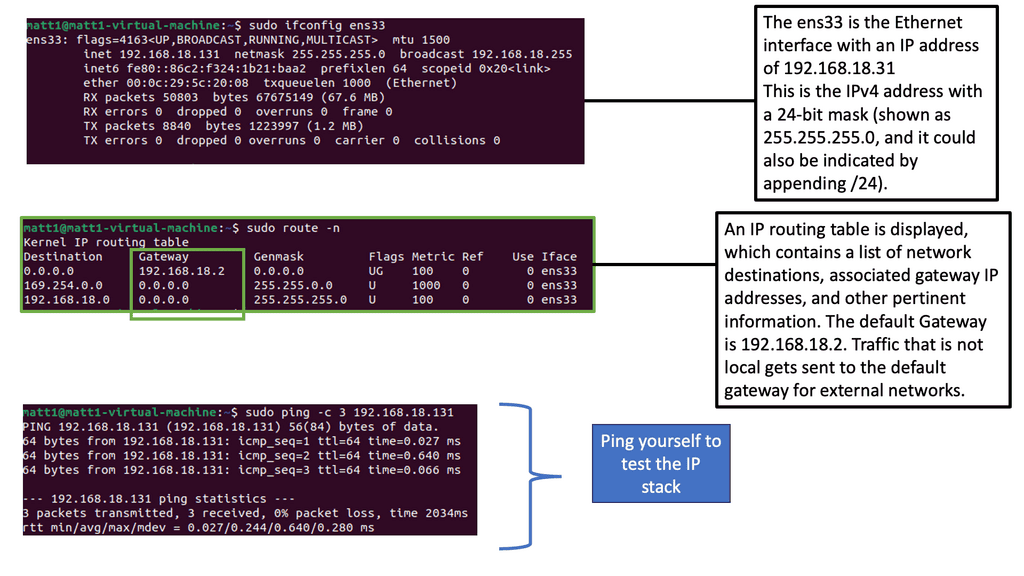

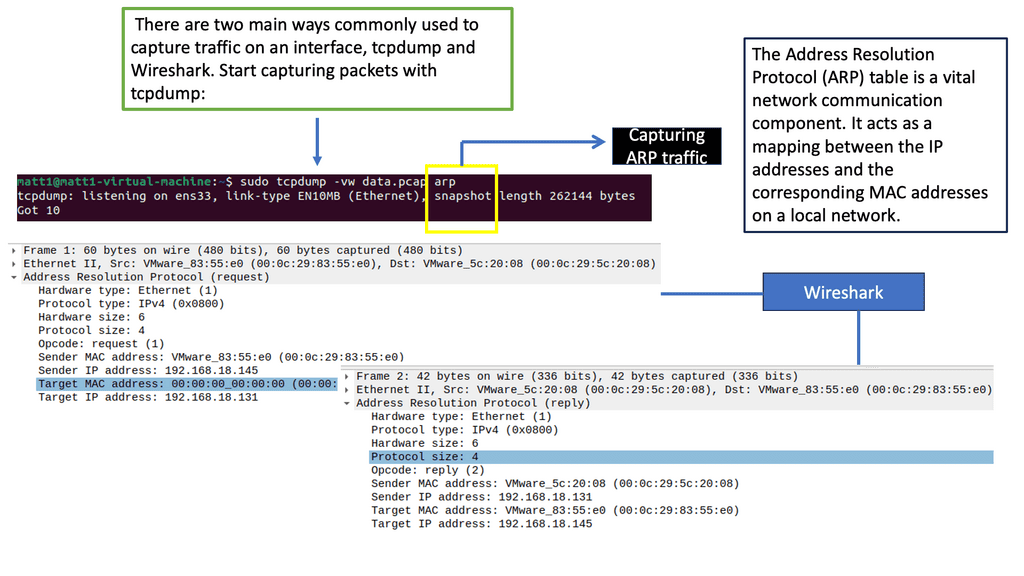

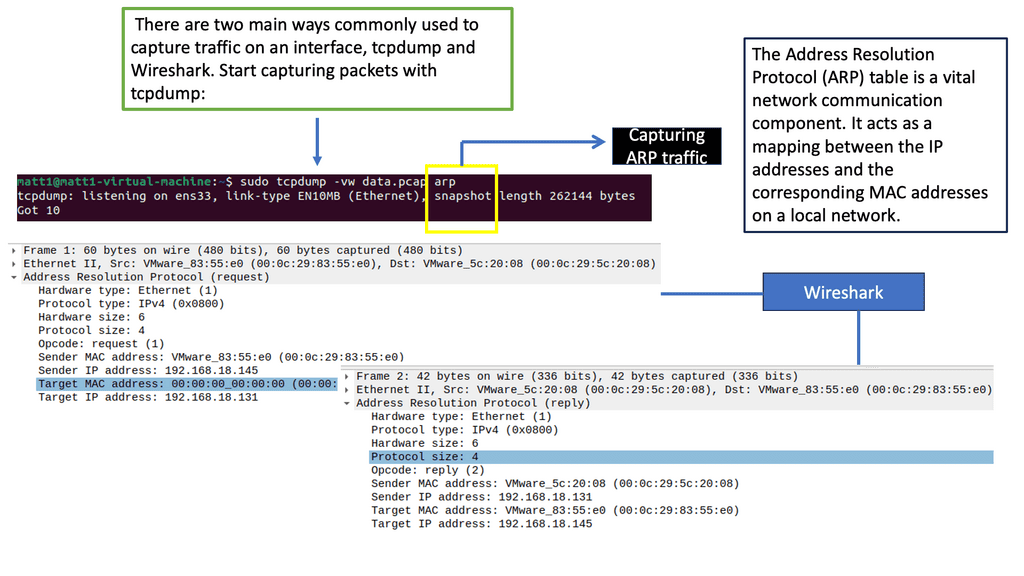

Understanding ARP (Address Resolution Protocol)

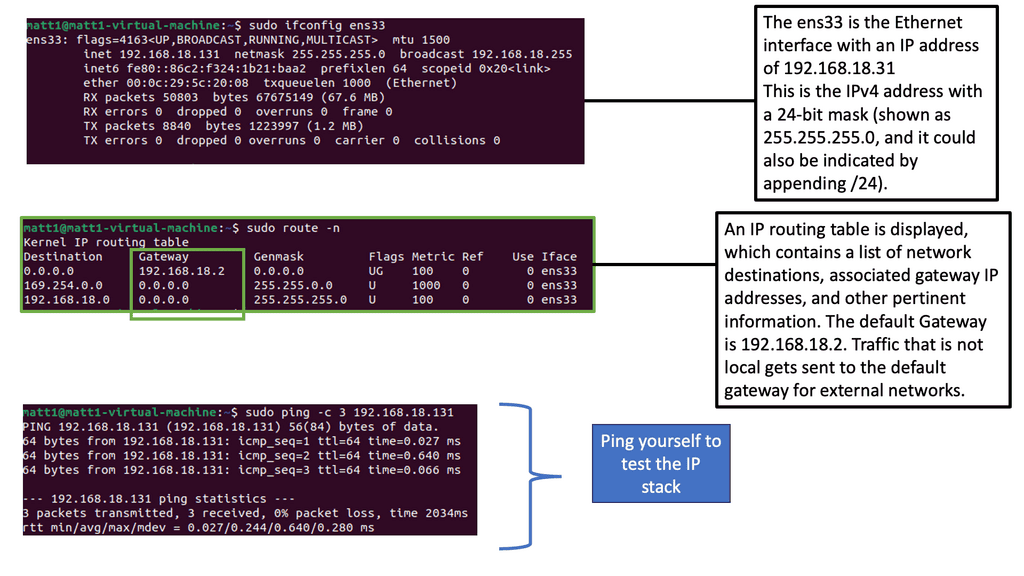

– ARP plays a vital role in establishing communication between devices within a network. It resolves IP addresses into MAC addresses, facilitating data transmission. Network administrators can identify potential spoofing attempts or unauthorized entities trying to gain access by examining ARP tables. Understanding ARP’s inner workings is crucial for implementing effective endpoint security measures.

– Route tables are at the core of network routing decisions. They determine the path that data packets take while traveling across networks. Administrators can ensure that data flows securely and efficiently by carefully configuring and monitoring route tables. We will explore techniques to secure route tables, including access control lists (ACLs) and route summarization.
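As a rough illustration of examining an ARP table for spoofing, the sketch below looks for one MAC address that answers for several IP addresses, which can be a sign of ARP spoofing (though it can also be legitimate, for example on a router with several addresses). It assumes input shaped like the output of `arp -a` on Linux; the exact format varies by operating system, and the sample entries are made up.

```python
import re
from collections import defaultdict

# Matches entries such as: ? (192.168.1.1) at aa:bb:cc:dd:ee:01 [ether] on eth0
# The line format is an assumption; adjust the pattern for your platform.
ENTRY = re.compile(
    r"\((?P<ip>\d{1,3}(?:\.\d{1,3}){3})\)\s+at\s+"
    r"(?P<mac>[0-9a-fA-F]{2}(?::[0-9a-fA-F]{2}){5})"
)


def find_shared_macs(arp_output):
    """Return {mac: [ips]} for every MAC that maps to more than one IP."""
    ips_by_mac = defaultdict(set)
    for match in ENTRY.finditer(arp_output):
        ips_by_mac[match["mac"].lower()].add(match["ip"])
    return {
        mac: sorted(ips) for mac, ips in ips_by_mac.items() if len(ips) > 1
    }


# Made-up sample data for the example.
SAMPLE_ARP = """\
? (192.168.1.1) at aa:bb:cc:dd:ee:01 [ether] on eth0
? (192.168.1.20) at aa:bb:cc:dd:ee:02 [ether] on eth0
? (192.168.1.50) at AA:BB:CC:DD:EE:01 [ether] on eth0
"""

for mac, ips in find_shared_macs(SAMPLE_ARP).items():
    print(f"MAC {mac} answers for multiple IPs: {', '.join(ips)}")
```

A hit is a prompt for investigation rather than proof of an attack, especially when one of the affected addresses is the default gateway.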

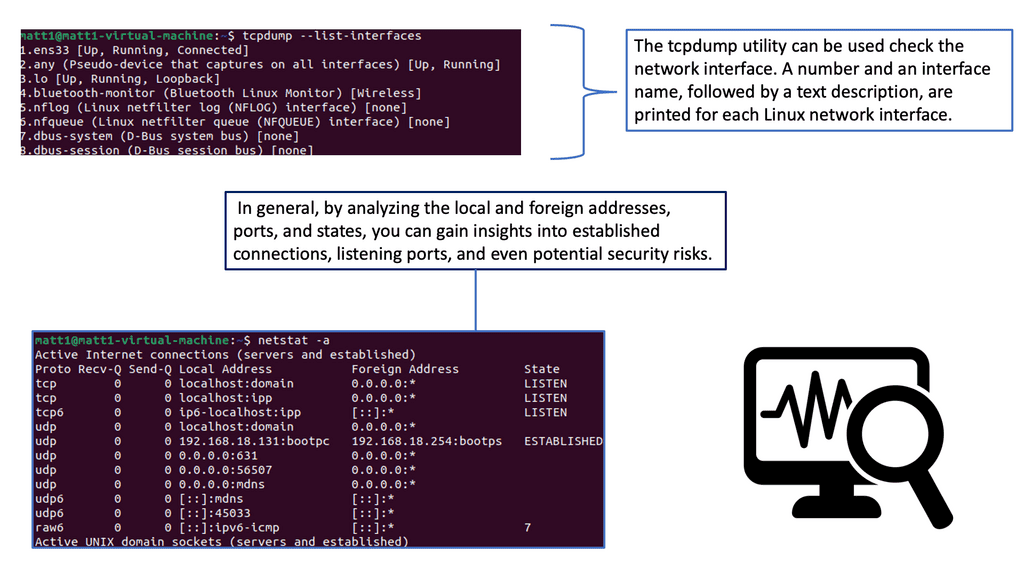

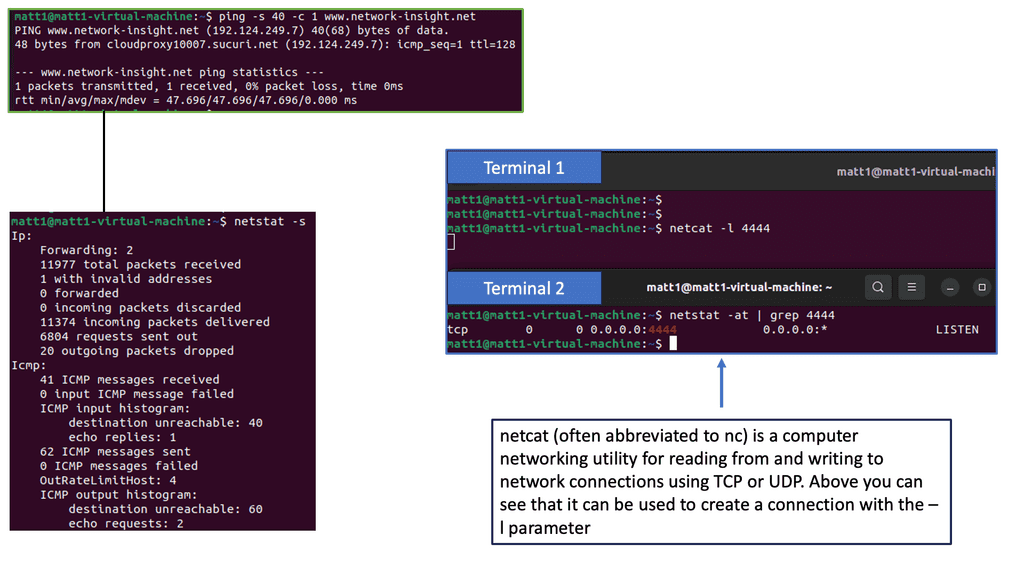

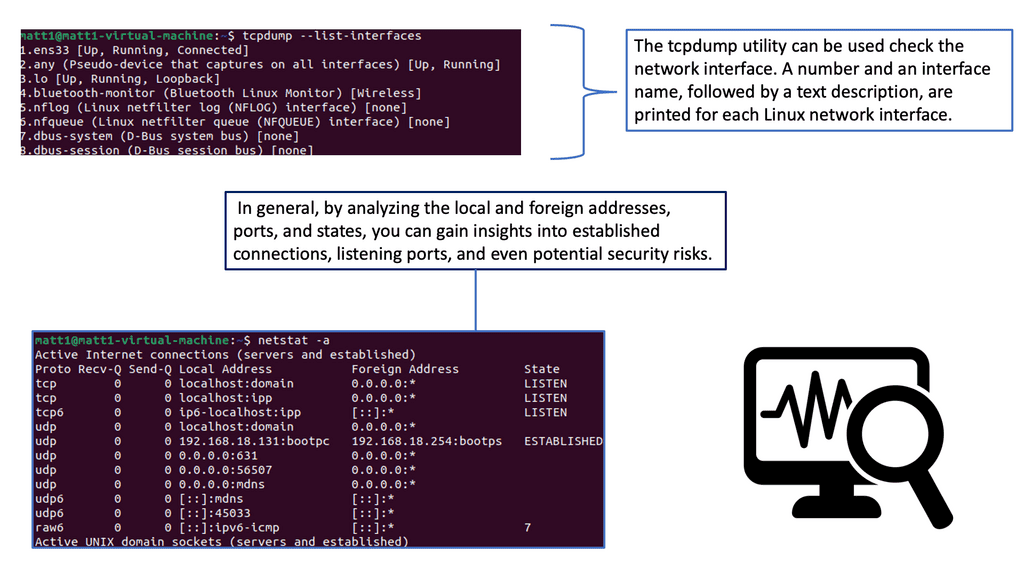

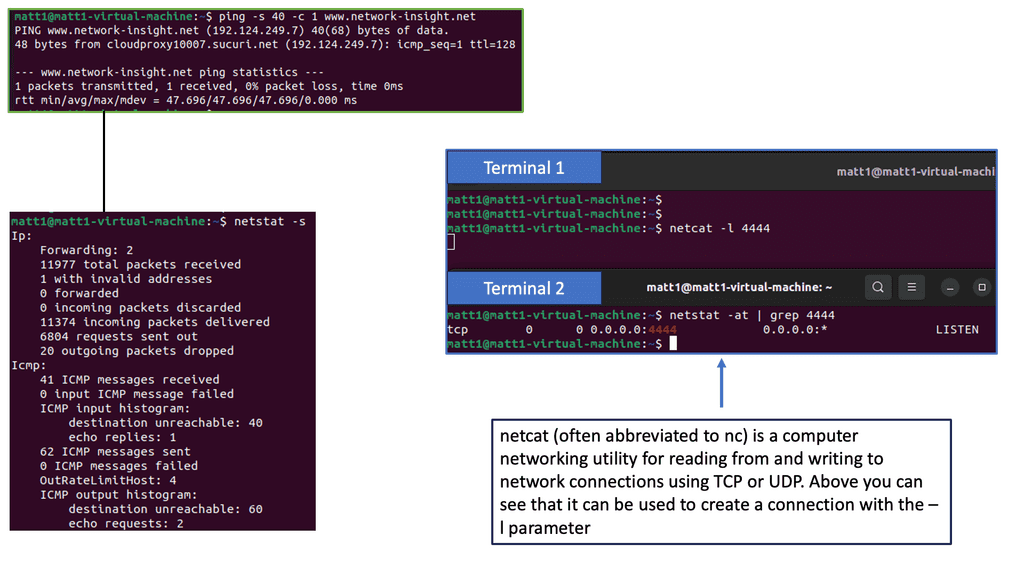

– Netstat, short for “network statistics,” is a powerful command-line tool that provides valuable insights into network connections and interface statistics. It enables administrators to monitor active connections, detect suspicious activities, and identify potential security breaches. We will uncover various netstat commands and their practical applications in enhancing endpoint security.
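One way to put netstat output to work is to count established TCP connections per remote address and review the busiest or least familiar peers. The sketch below assumes text shaped like the output of `netstat -tn` on Linux (six whitespace-separated columns, IPv4 addresses); the sample data is invented, and other platforms format the output differently.

```python
from collections import Counter


def established_by_remote(netstat_output):
    """Count ESTABLISHED TCP connections per remote IP address."""
    counts = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Expected columns: proto, recv-q, send-q, local, foreign, state.
        if len(fields) < 6 or not fields[0].startswith("tcp"):
            continue  # skips headers and non-TCP lines
        if fields[5] != "ESTABLISHED":
            continue
        remote_ip = fields[4].rsplit(":", 1)[0]  # drop the port
        counts[remote_ip] += 1
    return counts


# Made-up sample data for the example.
SAMPLE_NETSTAT = """\
Active Internet connections (w/o servers)
Proto Recv-Q Send-Q Local Address           Foreign Address         State
tcp        0      0 10.0.0.5:22             203.0.113.7:51234       ESTABLISHED
tcp        0      0 10.0.0.5:22             203.0.113.7:51240       ESTABLISHED
tcp        0      0 10.0.0.5:443            198.51.100.4:40110      TIME_WAIT
tcp        0      0 10.0.0.5:443            198.51.100.9:40112      ESTABLISHED
"""

for remote, count in established_by_remote(SAMPLE_NETSTAT).most_common():
    print(f"{remote}: {count} established connection(s)")
```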

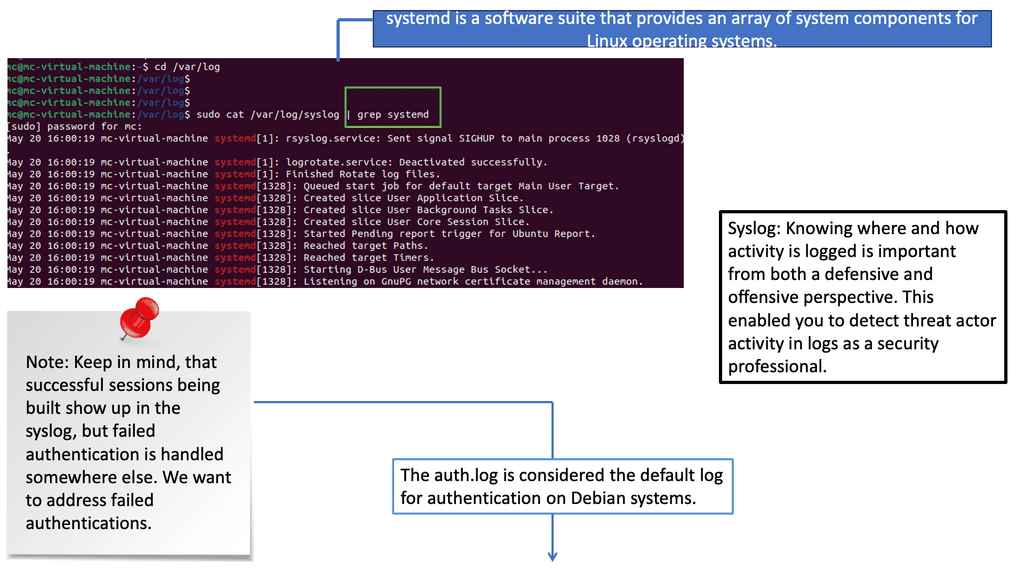

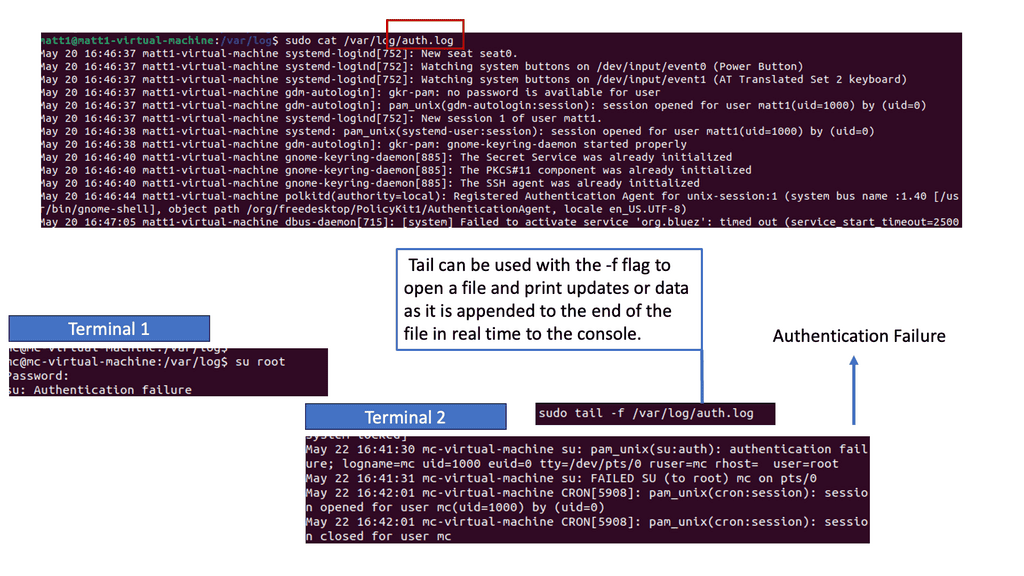

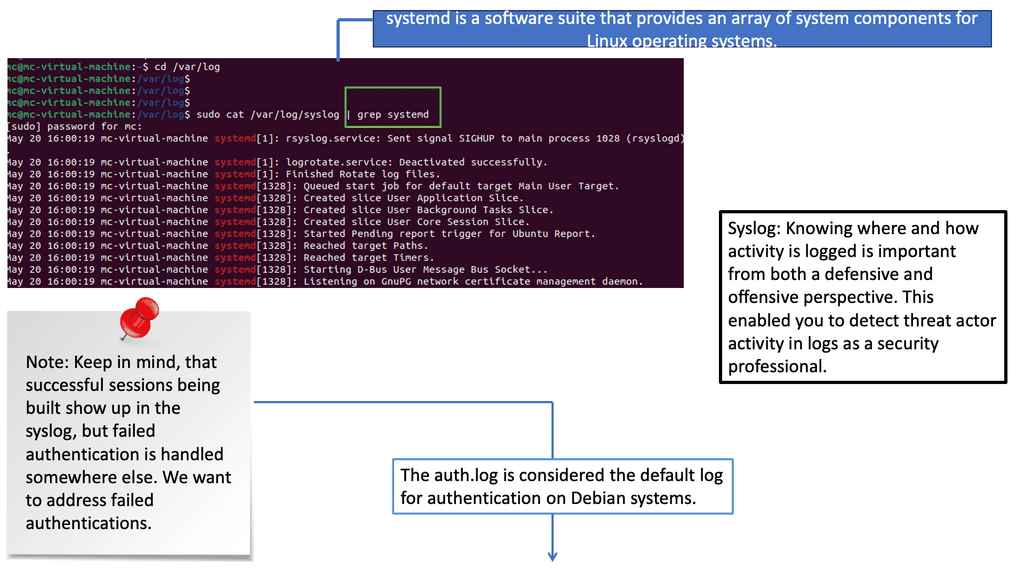

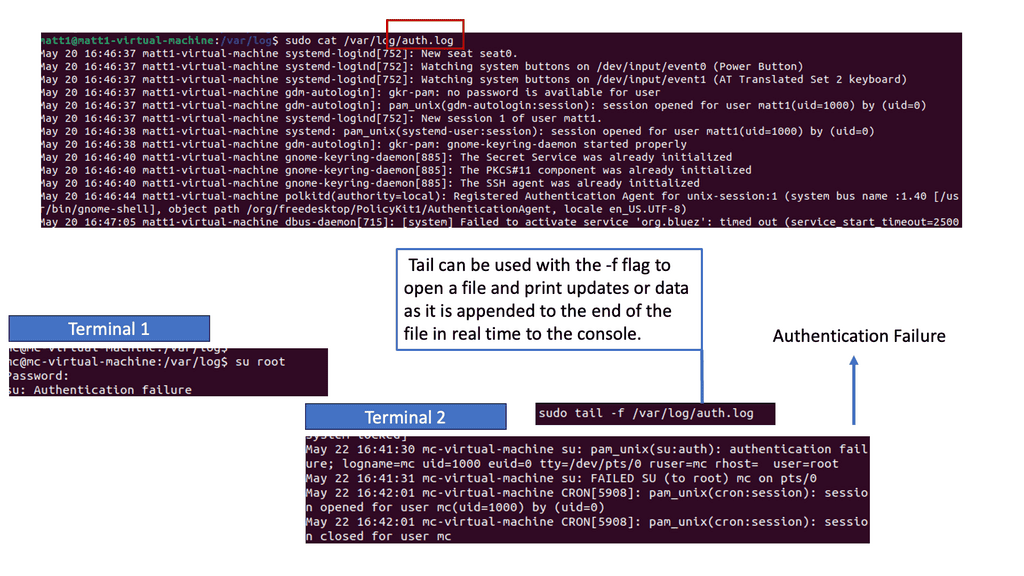

Example: Detecting Authentication Failures in Logs

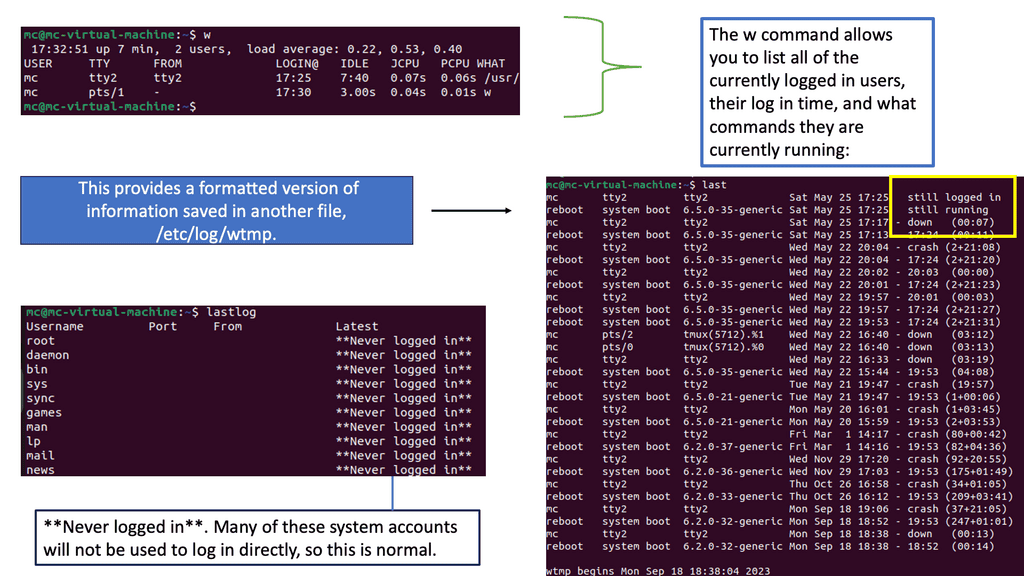

Understanding Syslog

– Syslog, a standard protocol for message logging, provides a centralized mechanism to collect and store log data. It is a repository of vital information, capturing events from various systems and devices. By analyzing syslog entries, security analysts can gain insights into network activities, system anomalies, and potential security incidents. Understanding the structure and content of syslog messages is crucial for practical log analysis.

– Auth.log, a log file specific to Unix-like systems, records authentication-related events such as user logins, failed login attempts, and privilege escalations. This log file is a goldmine for detecting unauthorized access attempts, brute-force attacks, and suspicious user activities. Familiarizing oneself with the format and patterns within auth.log entries can significantly enhance the ability to identify potential security breaches.
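A small script can surface brute-force patterns in auth.log. The sketch below assumes OpenSSH-style "Failed password" lines; the sample entries and the threshold of three failures are arbitrary choices for illustration, and a real deployment would read the log file (commonly `/var/log/auth.log` on Debian-based systems) instead of an inline string.

```python
import re
from collections import Counter

# Matches OpenSSH failure lines, with or without the "invalid user" prefix.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+)"
)


def failed_logins_by_ip(log_text):
    """Count failed password attempts per source IP address."""
    counts = Counter()
    for match in FAILED_LOGIN.finditer(log_text):
        counts[match["ip"]] += 1
    return counts


def suspicious_sources(log_text, threshold=3):
    """Return {ip: count} for sources at or above the failure threshold."""
    counts = failed_logins_by_ip(log_text)
    return {ip: n for ip, n in counts.items() if n >= threshold}


# Made-up sample data for the example.
SAMPLE_LOG = """\
Jan 10 12:00:01 host sshd[1234]: Failed password for invalid user admin from 203.0.113.7 port 51234 ssh2
Jan 10 12:00:03 host sshd[1234]: Failed password for root from 203.0.113.7 port 51235 ssh2
Jan 10 12:00:05 host sshd[1234]: Failed password for root from 203.0.113.7 port 51236 ssh2
Jan 10 12:01:10 host sshd[1301]: Failed password for alice from 198.51.100.4 port 50022 ssh2
Jan 10 12:01:20 host sshd[1301]: Accepted password for alice from 198.51.100.4 port 50023 ssh2
"""

for ip, attempts in suspicious_sources(SAMPLE_LOG).items():
    print(f"{ip}: {attempts} failed logins")
```

Counting per source address is only a starting point; distributed attacks spread attempts across many addresses, so grouping by target user is a useful complement.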

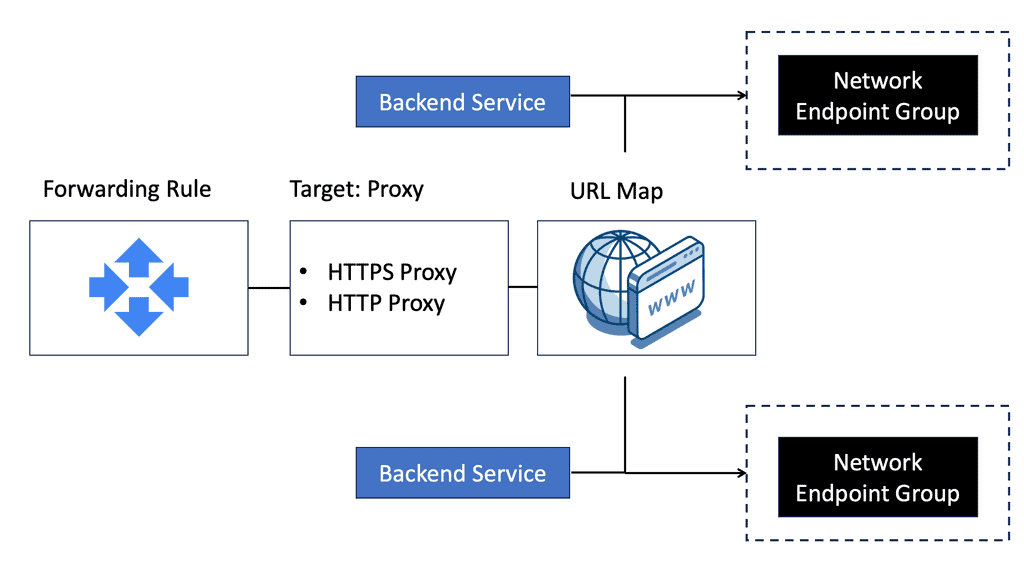

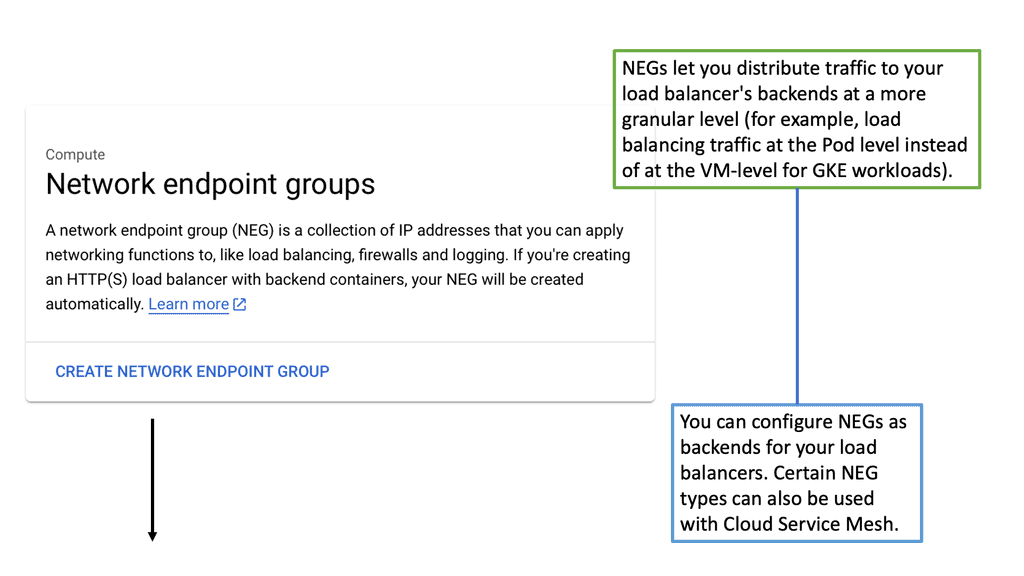

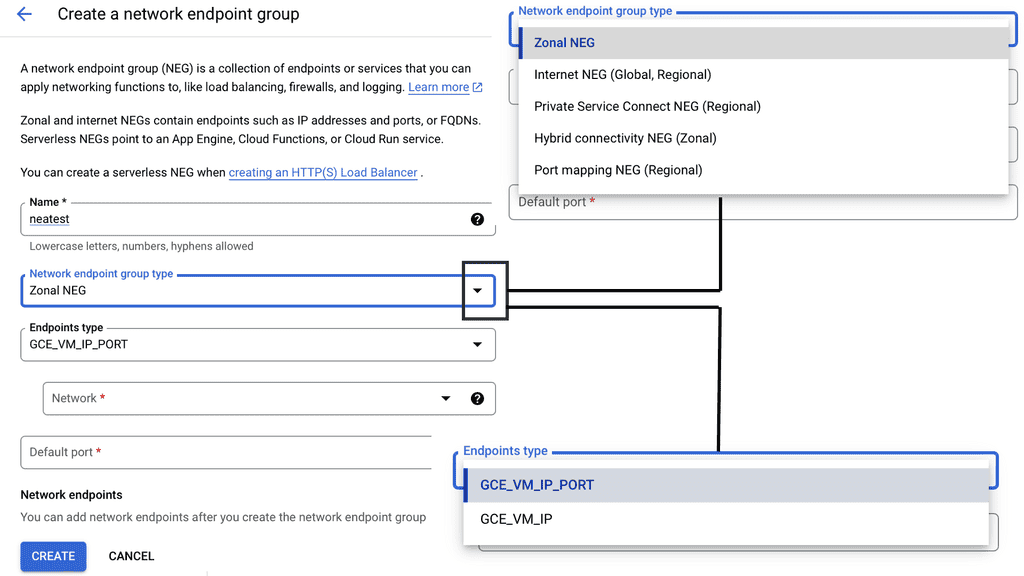

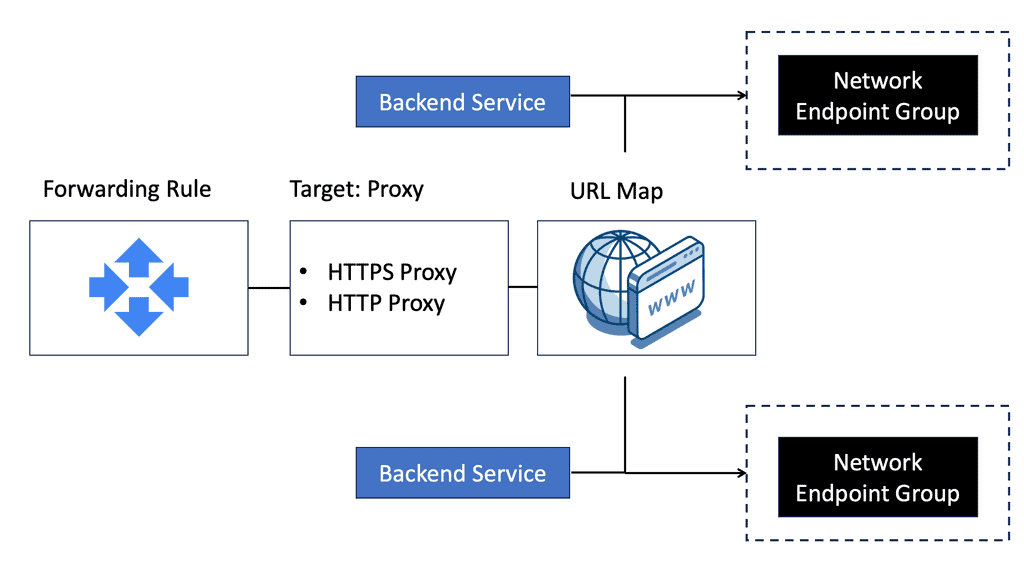

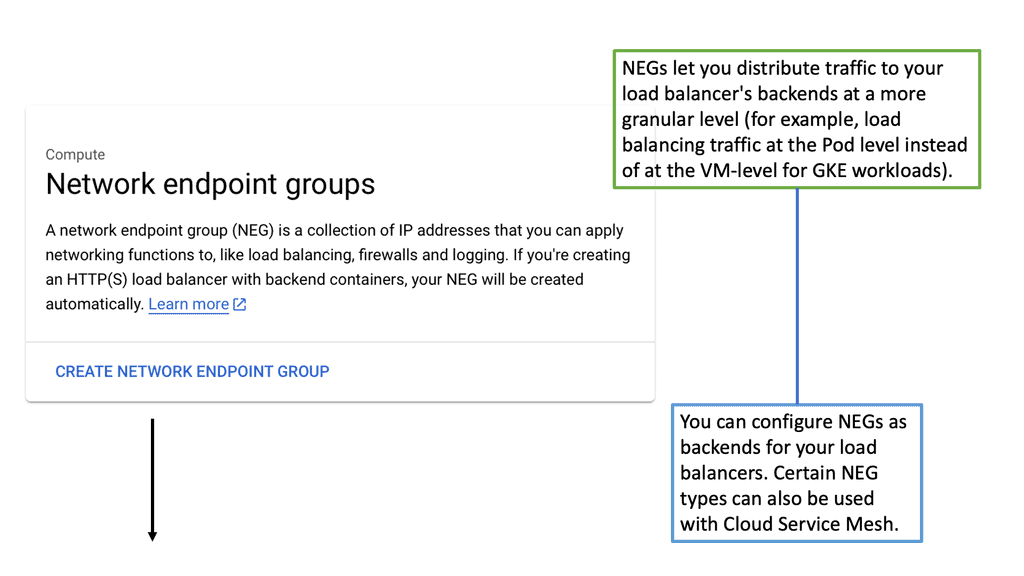

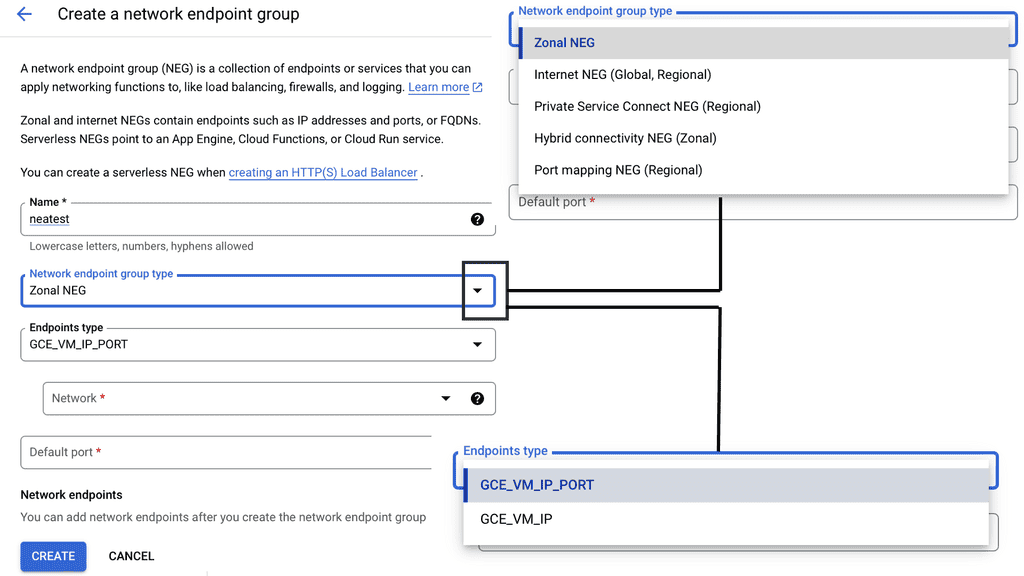

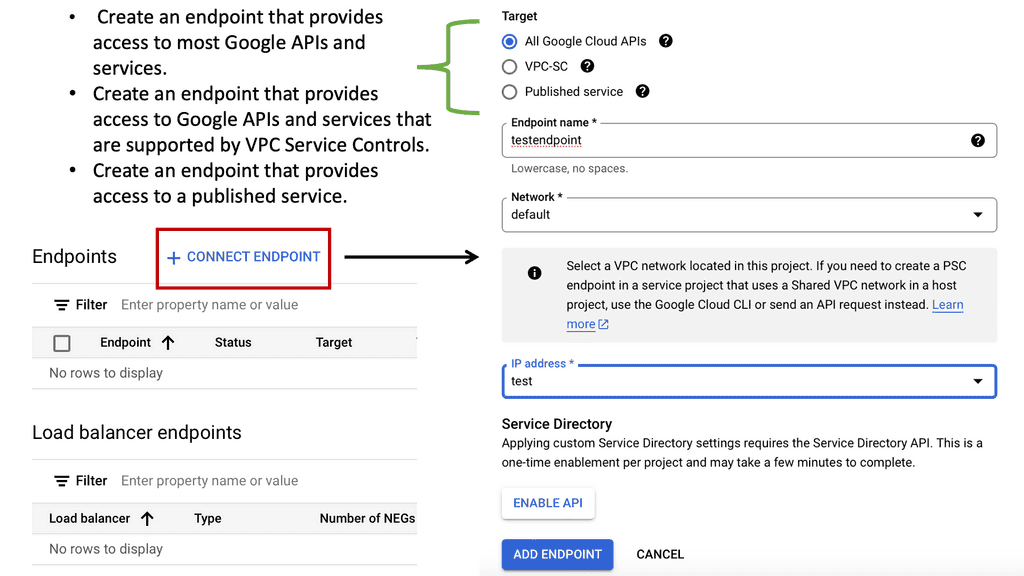

Example Technology: Network Endpoint Groups

**Understanding Network Endpoint Groups**

A Network Endpoint Group (NEG) is a collection of network endpoints within Google Cloud, each representing an IP address and, optionally, a port. This concept allows you to define how traffic should be distributed across different services, whether they are hosted on Google Cloud or externally. NEGs enable better load balancing, seamless integration with Google Cloud services, and the ability to connect with legacy systems or third-party services outside your direct cloud environment.

**Benefits of Using Network Endpoint Groups**

The adoption of NEGs offers multiple benefits:

1. **Scalability**: NEGs provide a scalable solution to manage large volumes of traffic efficiently. You can dynamically add or remove endpoints as demand fluctuates, ensuring optimal performance and cost-effectiveness.

2. **Flexibility**: With NEGs, you have the flexibility to direct traffic to different types of endpoints, including Google Cloud VMs, serverless applications, and external services. This flexibility supports a wide range of application architectures.

3. **Enhanced Load Balancing**: NEGs work seamlessly with Google Cloud Load Balancing, allowing for sophisticated traffic management. You can configure traffic policies that suit your specific needs, ensuring reliability and performance.

**Implementing Network Endpoint Groups in Your Infrastructure**

Implementing NEGs is straightforward with Google Cloud’s intuitive interface. Begin by defining your endpoints, which could include Google Compute Engine instances, Google Kubernetes Engine pods, or even external endpoints. Next, configure your load balancer to direct traffic to your NEGs. This setup ensures that your applications benefit from consistent performance and availability, regardless of where your endpoints are located.

**Best Practices for Managing Network Endpoint Groups**

To maximize the effectiveness of NEGs, consider the following best practices:

– **Regularly Monitor and Update**: Keep a close eye on endpoint performance and update your NEGs as your infrastructure evolves. This proactive approach helps maintain optimal resource utilization.

– **Security Considerations**: Implement proper security measures, including network policies and firewalls, to protect your endpoints from potential threats.

– **Integration with CI/CD Pipelines**: Integrating NEGs with your continuous integration and continuous deployment pipelines ensures that your network configurations evolve alongside your application code, reducing manual overhead and potential errors.

Transitioning to a zero-trust networking model requires careful planning and execution. Here are a few strategies to consider:

1. Comprehensive Network Assessment: Begin by thoroughly assessing your existing network infrastructure, identifying vulnerabilities and areas that need improvement.

2. Phased Approach: Implementing zero-trust networking across an entire network can be challenging. Consider adopting a phased approach, starting with critical assets and gradually expanding to cover the whole network.

3. User Education: Educate users about the principles and benefits of zero-trust networking. Emphasize the importance of strong authentication, safe browsing habits, and adherence to security policies.

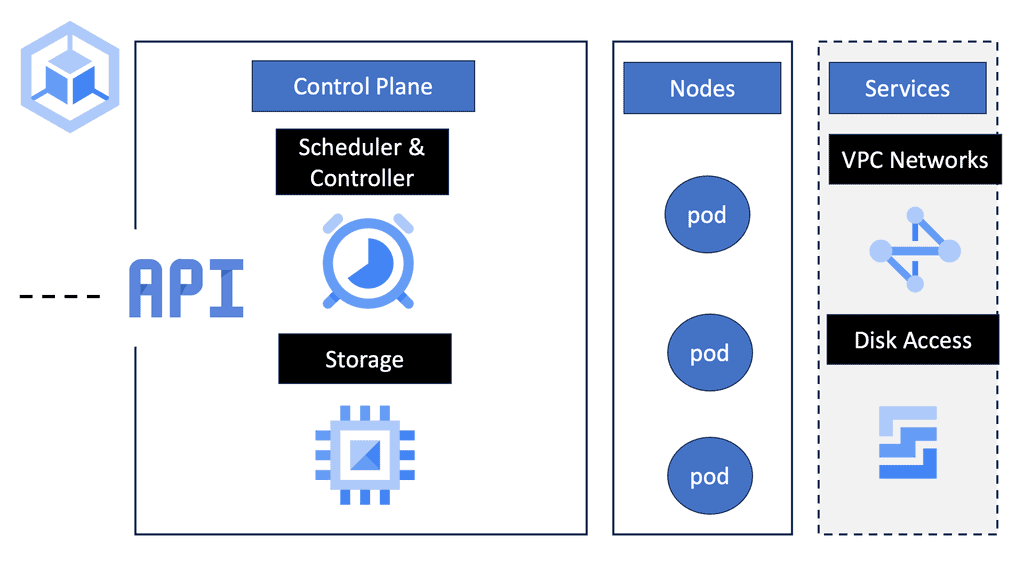

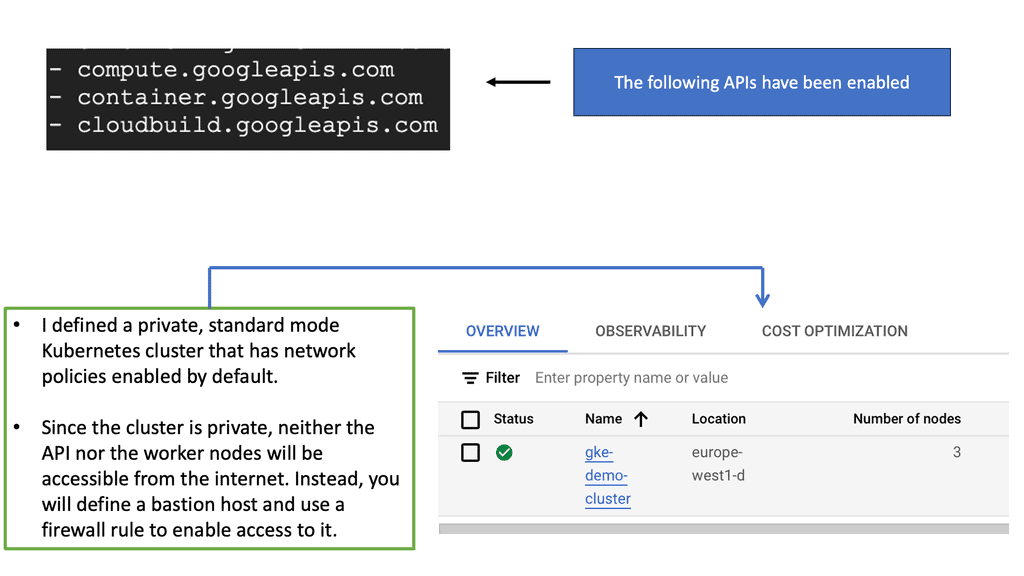

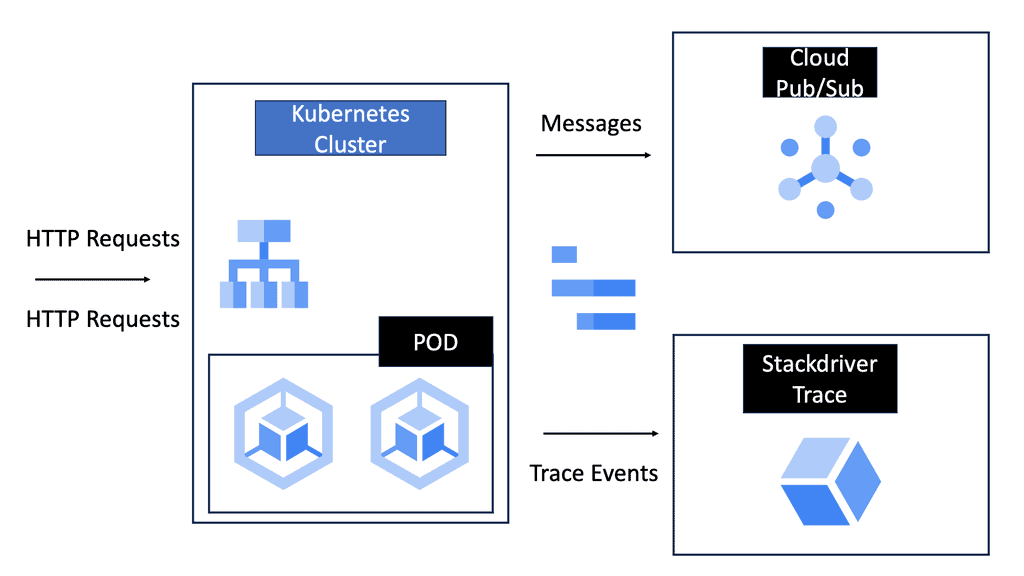

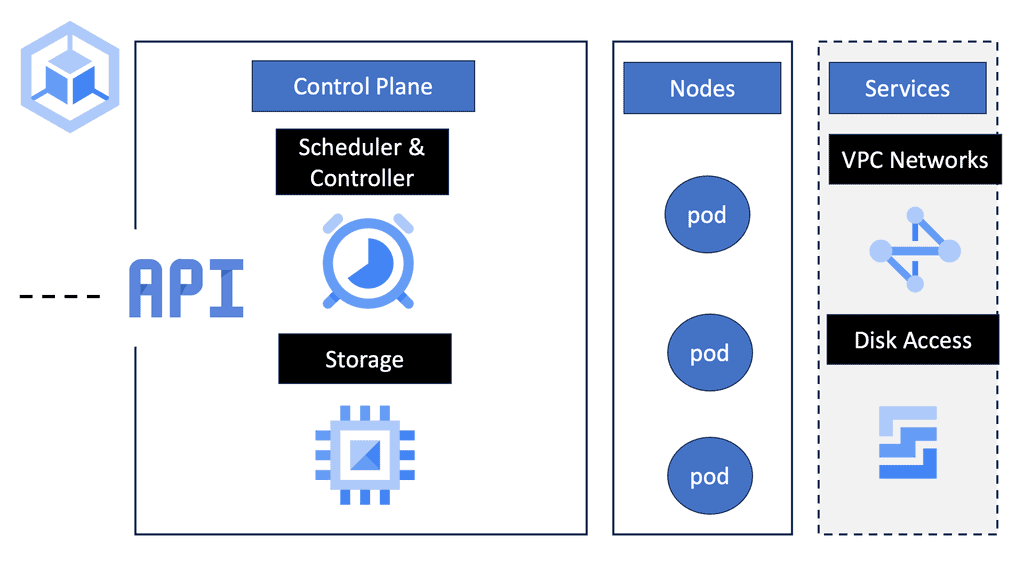

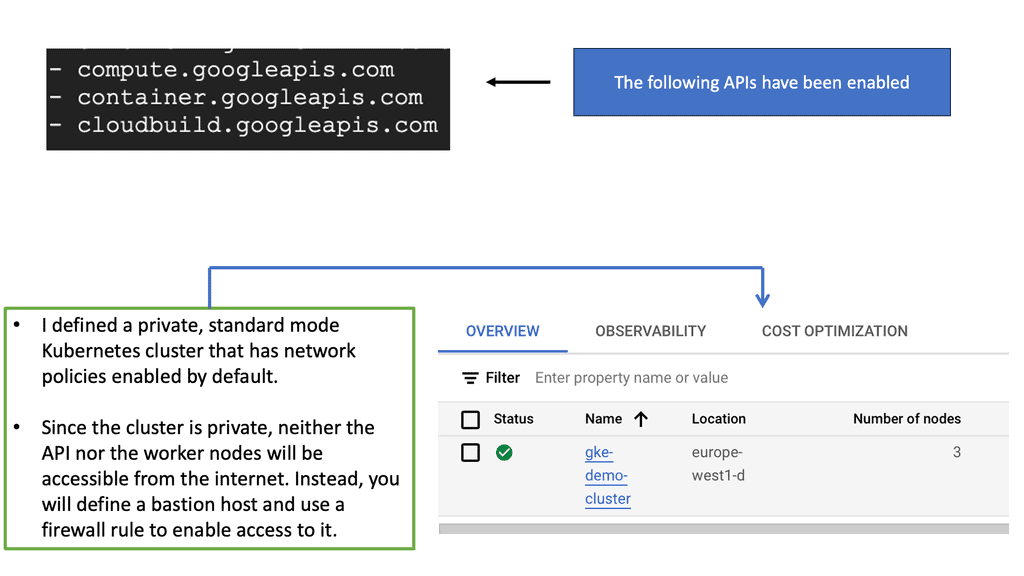

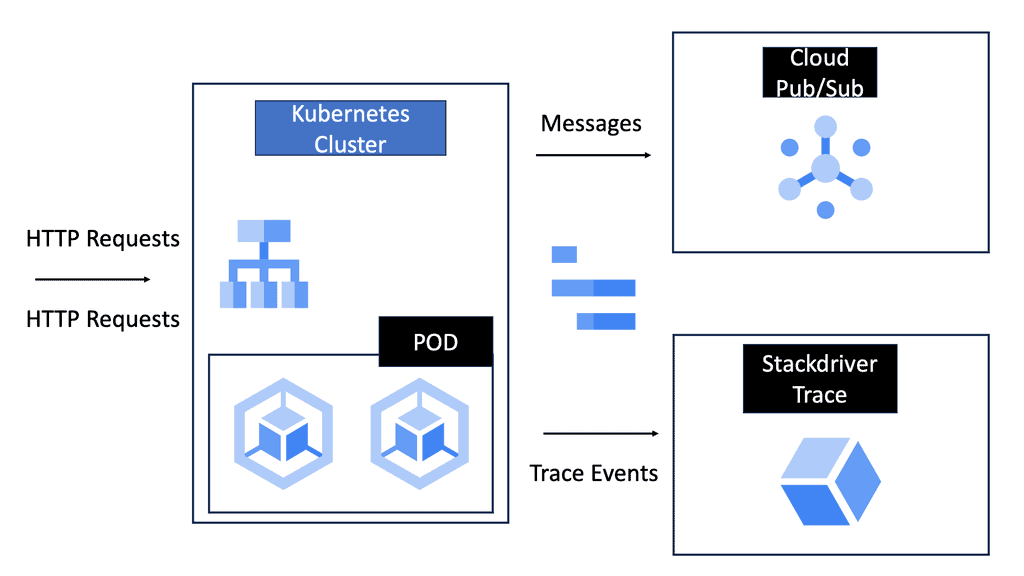

Google Cloud – GKE Network Policy

Google Kubernetes Engine (GKE) offers a robust platform for deploying, managing, and scaling containerized applications. One of the essential tools at your disposal is Network Policy. This feature allows you to define how groups of pods communicate with each other and other network endpoints. Understanding and implementing Network Policies is a crucial step towards achieving zero trust networking within your Kubernetes environment.

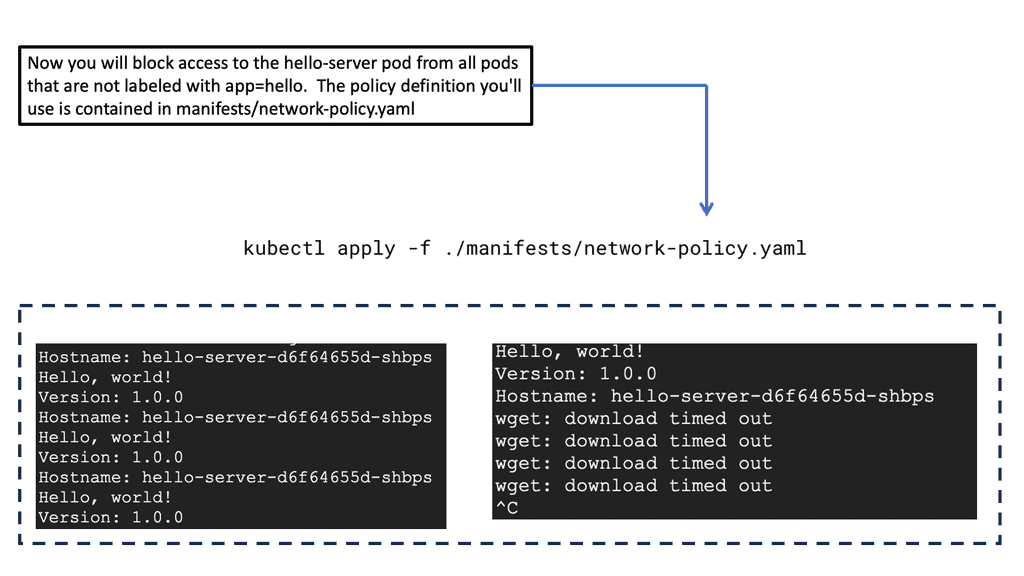

## The Basics of Network Policies

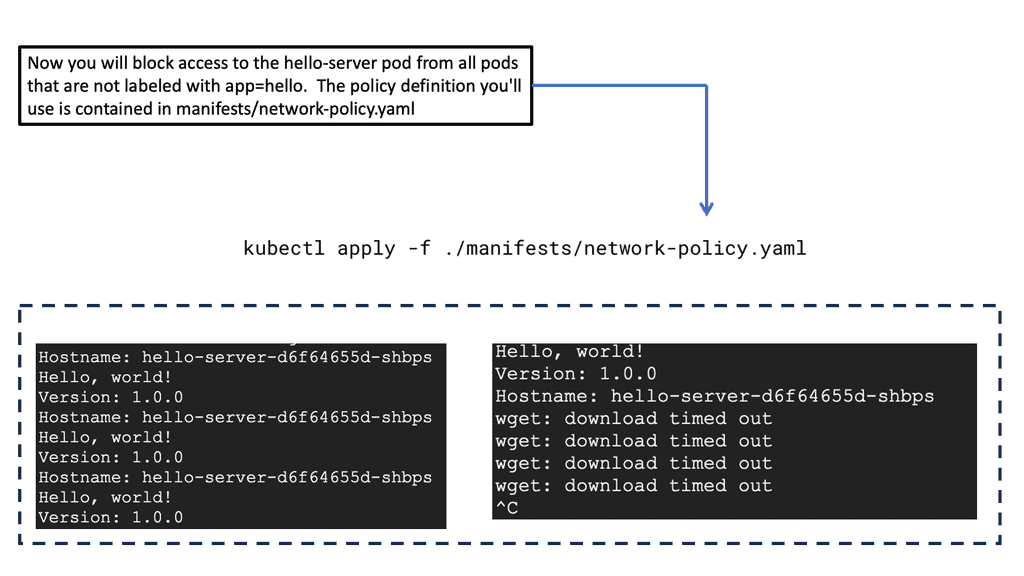

Network Policies in GKE are essentially rules that define the allowed connections to and from pods. These policies are based on the Kubernetes NetworkPolicy API and provide fine-grained control over the communication within a Kubernetes cluster. By default, all pods in GKE can communicate with each other without restrictions. However, as your applications grow in complexity, this open communication model can become a security liability. Network Policies allow you to enforce restrictions, enabling you to specify which pods can communicate with each other, thereby reducing the attack surface.

## Implementing Zero Trust Networking

Zero trust networking is a security concept that assumes no implicit trust, and everything must be verified before gaining access. Implementing Network Policies in GKE is a core component of adopting a zero trust approach. By default, zero trust networking assumes that threats could originate from both outside and inside the network. With Network Policies, you can enforce strict access controls, ensuring that only the necessary pods and services can communicate, effectively minimizing the potential for lateral movement in the event of a breach.

## Best Practices for Network Policies

When designing Network Policies, it’s crucial to adhere to best practices to ensure both security and performance. Start by defining a default-deny policy, which blocks all traffic, and then create specific allow rules for necessary communications. Regularly review and update these policies to accommodate changes in your applications and infrastructure. Utilize namespaces effectively to segment different environments (e.g., development, staging, production) and apply specific policies to each, ensuring that only essential communications are permitted within and across these boundaries.
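The default-deny-then-allow pattern can be sketched by building the manifests as Python dictionaries and printing them as JSON, which `kubectl apply -f` accepts alongside YAML. The field names follow the Kubernetes `networking.k8s.io/v1` NetworkPolicy API; the namespace, policy names, labels, and port are placeholders chosen for this example.

```python
import json


def default_deny_policy(namespace):
    """Select every pod in the namespace and allow no traffic either way."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches all pods
            "policyTypes": ["Ingress", "Egress"],
        },
    }


def allow_ingress_policy(namespace, name, target_labels, source_labels, port):
    """Allow TCP ingress to target pods from source pods on a single port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": source_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Placeholder namespace and labels, for illustration only.
policies = [
    default_deny_policy("production"),
    allow_ingress_policy(
        "production", "allow-web-to-api", {"app": "api"}, {"app": "web"}, 8080
    ),
]

for policy in policies:
    print(json.dumps(policy, indent=2))
```

Two caveats apply: network policy enforcement has to be enabled on the cluster for these objects to take effect, and a default deny on egress also blocks DNS lookups until an explicit rule allows them.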

## Monitoring and Troubleshooting

Implementing Network Policies is not a set-and-forget task. Continuous monitoring is essential to ensure that policies are functioning correctly and that no unauthorized traffic is allowed. GKE provides tools and integrations to help you monitor network traffic and troubleshoot any connectivity issues that arise. Consider using logging and monitoring solutions like Google Cloud’s Operations Suite to gain insights into your network traffic and policy enforcement, allowing you to identify and respond to potential issues promptly.

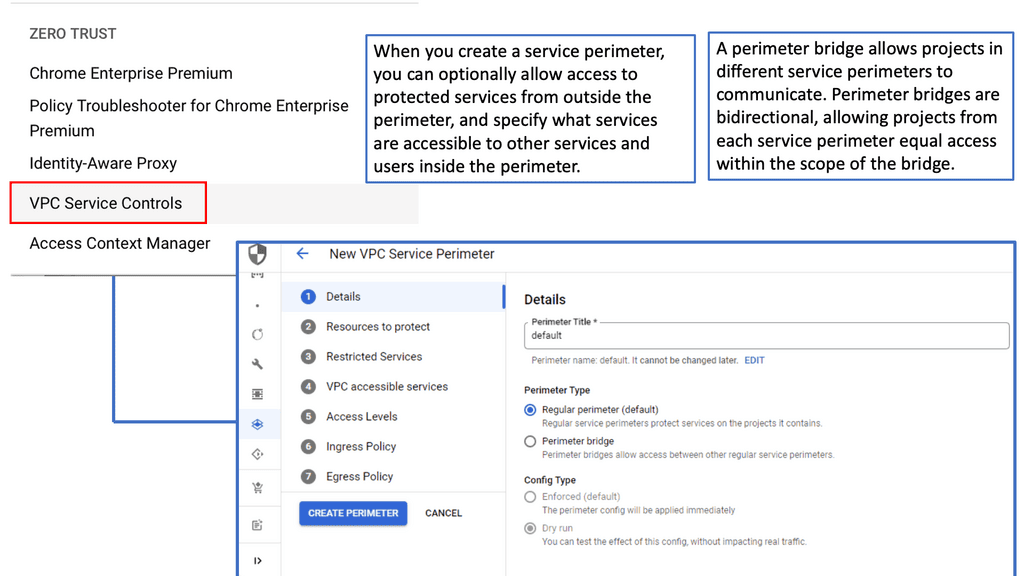

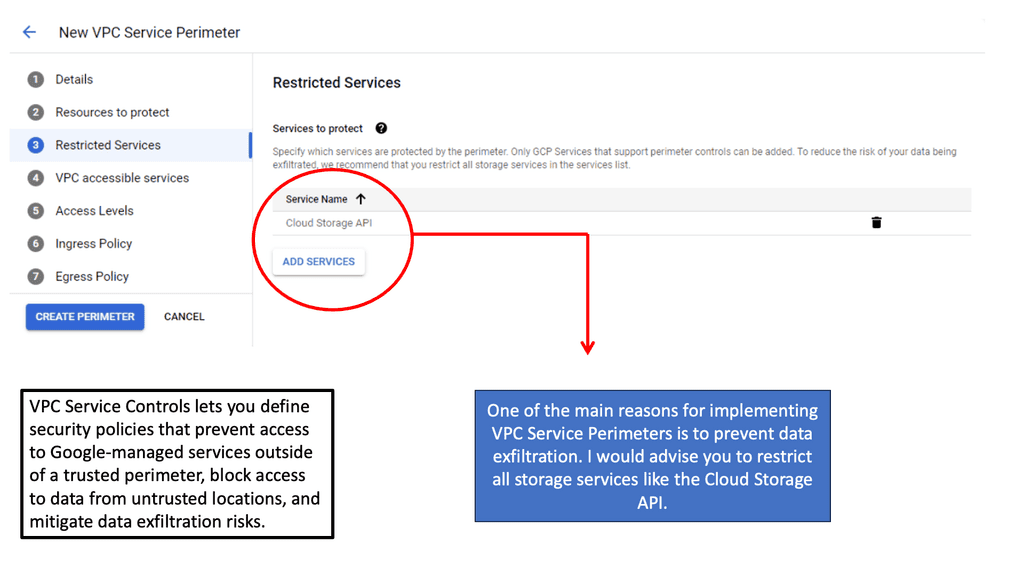

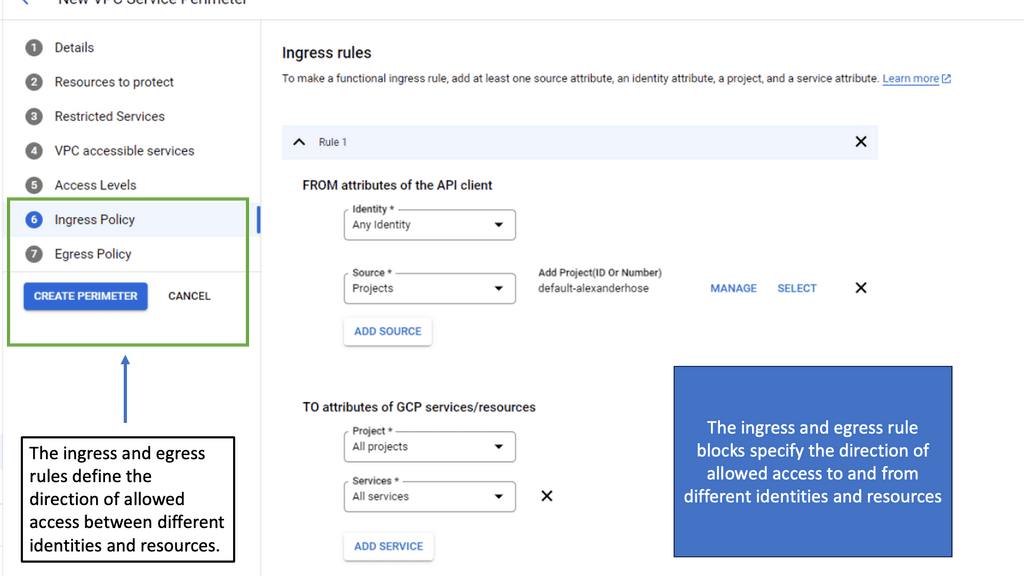

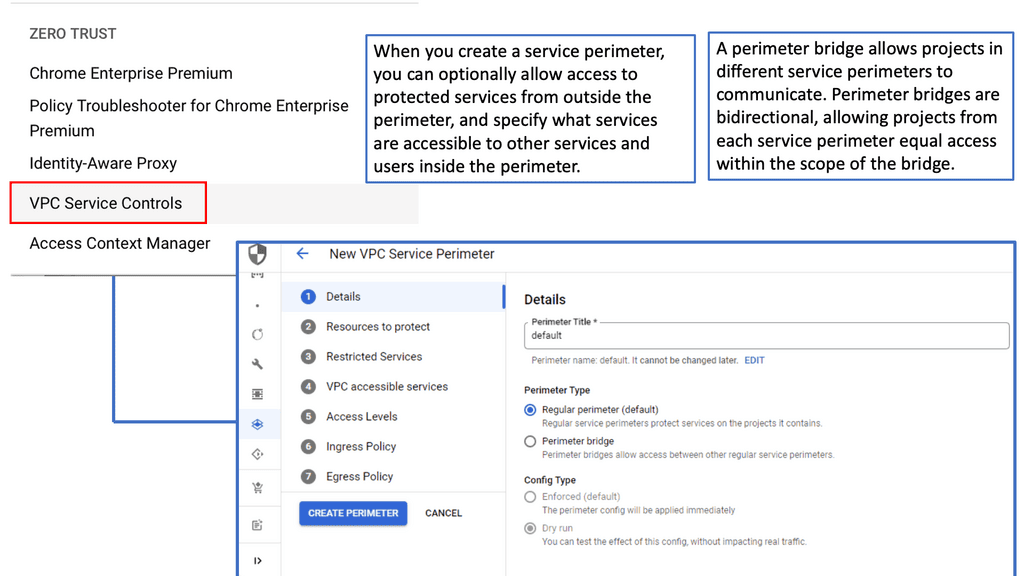

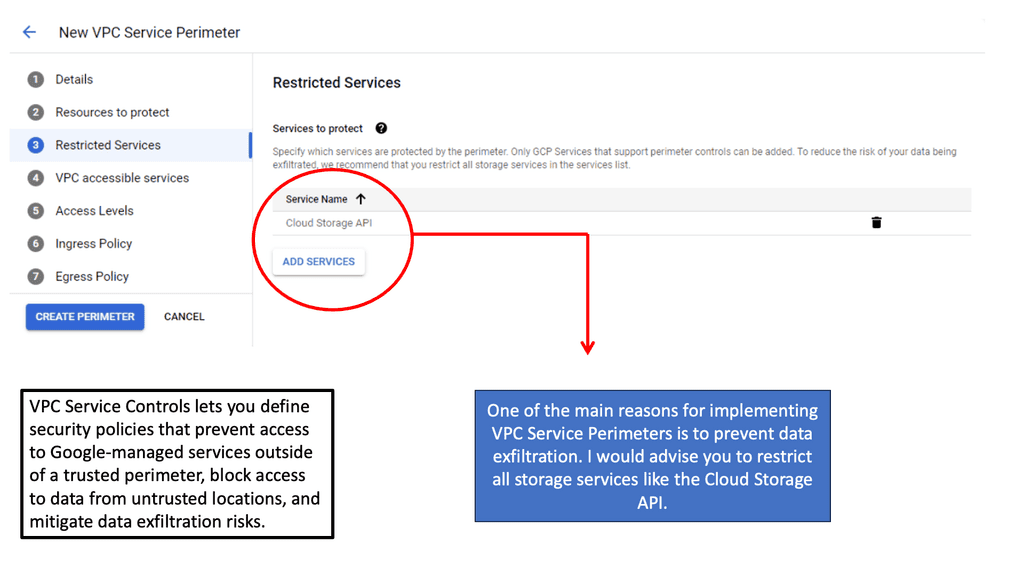

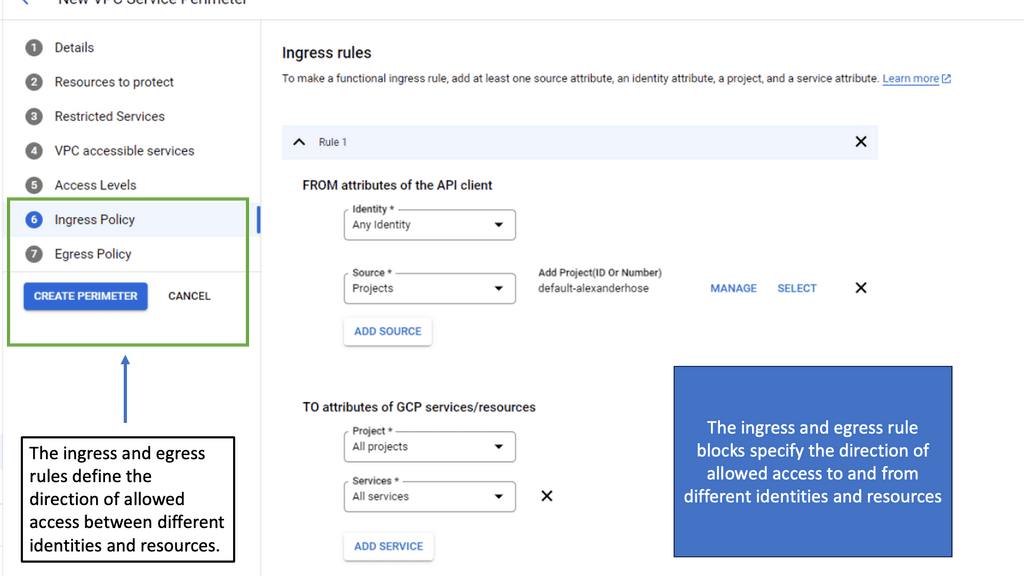

Google's VPC Service Controls

**The Role of Zero Trust Network Design**

VPC Service Controls align perfectly with the principles of a zero trust network design, an approach that assumes threats could originate from inside or outside the network. This design necessitates strict verification processes for every access request. VPC Service Controls help enforce these principles by allowing you to define and enforce security perimeters around your Google Cloud resources, such as APIs and services. This ensures that only authorized requests can access sensitive data, even if they originate from within the network.

**Implementing VPC Service Controls on Google Cloud**

Implementing VPC Service Controls is a strategic move for organizations leveraging Google Cloud services. By setting up service perimeters, you can protect a wide range of Google Cloud services, including Cloud Storage, BigQuery, and Cloud Pub/Sub. These perimeters act as virtual barriers, preventing unauthorized transfers of data across the defined boundaries. Additionally, VPC Service Controls offer features like Access Levels and Access Context Manager to fine-tune access policies based on contextual attributes, such as user identity and device security status.

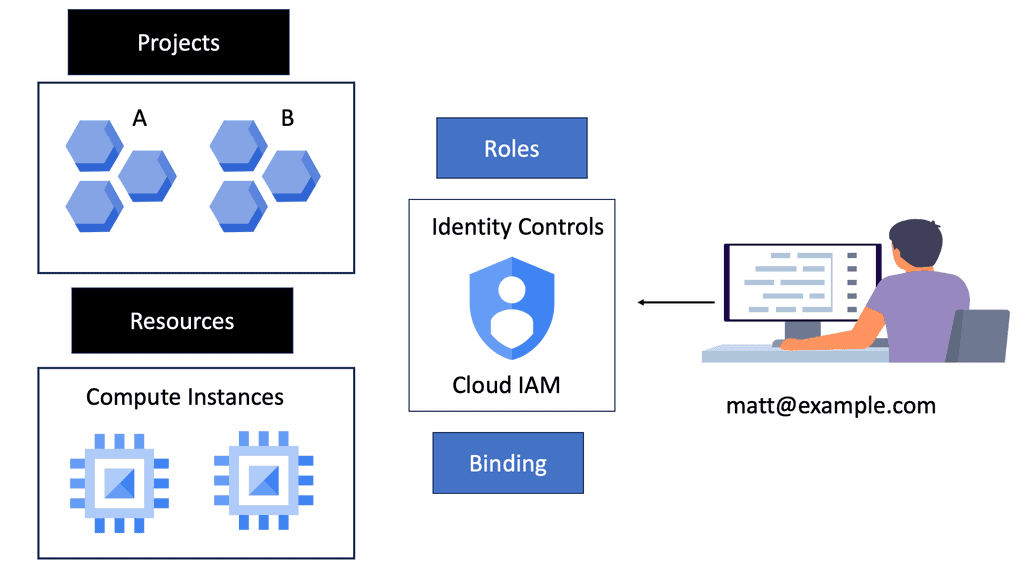

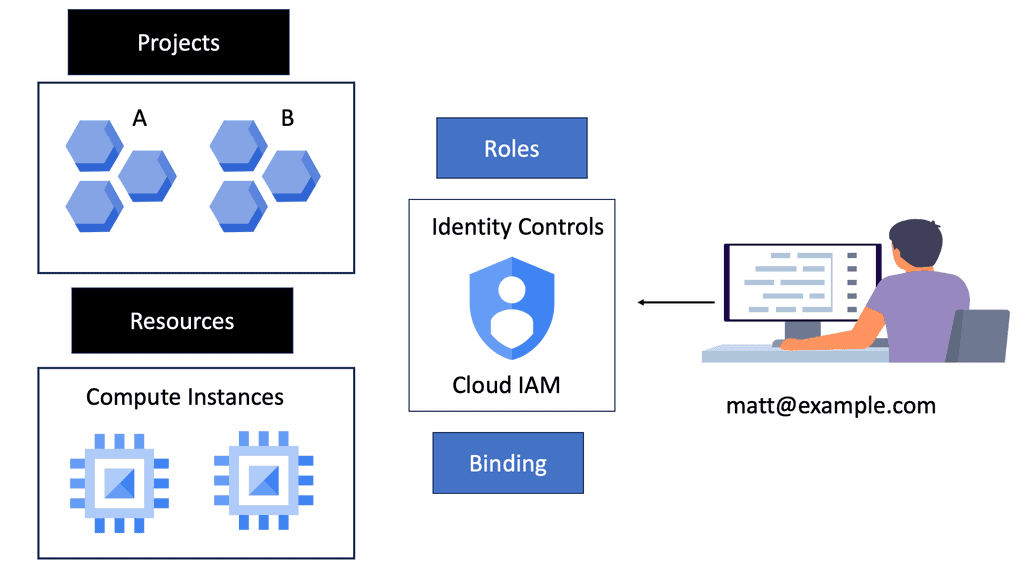

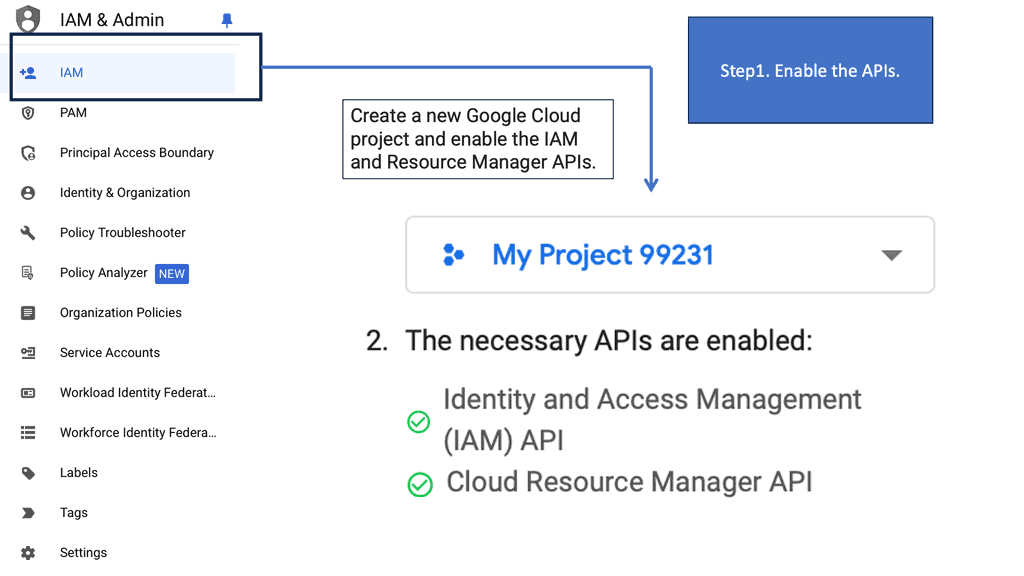

Zero Trust with IAM

**Understanding Google Cloud IAM**

Google Cloud IAM is a critical security component that allows organizations to manage who has access to specific resources within their cloud infrastructure. It provides a centralized system for defining roles and permissions, ensuring that only authorized users can perform certain actions. By adhering to the principle of least privilege, IAM helps minimize potential security risks by limiting access to only what is necessary for each user.

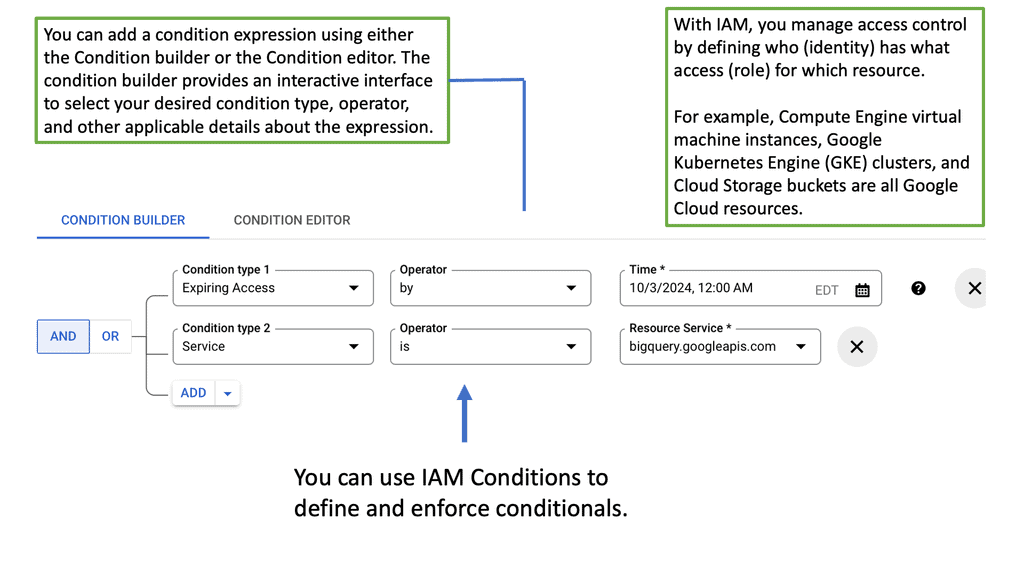

**Implementing Zero Trust with Google Cloud**

Zero trust is a security model that assumes threats could be both inside and outside the network, thus requiring strict verification for every user and device attempting to access resources. Google Cloud IAM plays a pivotal role in realizing a zero trust architecture by providing granular control over user access. By leveraging IAM policies, organizations can enforce multi-factor authentication, continuous monitoring, and strict access controls to ensure that every access request is verified before granting permissions.

**Key Features of Google Cloud IAM**

Google Cloud IAM offers a range of features designed to enhance security and simplify management:

– **Role-Based Access Control (RBAC):** Allows administrators to assign specific roles to users, defining what actions they can perform on which resources.

– **Custom Roles:** Provides the flexibility to create roles tailored to the specific needs of your organization, offering more precise control over permissions.

– **Audit Logging:** Facilitates the tracking of user activity and access patterns, helping in identifying potential security threats and ensuring compliance with regulatory requirements.
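To make the least-privilege idea concrete, the sketch below scans an IAM policy for grants that are usually broader than necessary. It assumes the policy is available as a dictionary with a `bindings` list of `role` and `members` entries, which is the shape Google Cloud IAM policies use when exported as JSON; the sample policy, the member names, and the choice of which roles count as "broad" are assumptions made for the example.

```python
# Basic roles that grant wide access across a project.
BROAD_ROLES = {"roles/owner", "roles/editor"}
# Special member identifiers that open a binding beyond the organization.
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def find_broad_grants(policy):
    """Return (member, role, reason) tuples for grants worth reviewing."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding["role"]
        for member in binding.get("members", []):
            if role in BROAD_ROLES:
                findings.append((member, role, "basic role grants wide access"))
            if member in PUBLIC_MEMBERS:
                findings.append((member, role, "binding is open to the public"))
    return findings


# Made-up policy for the example.
SAMPLE_POLICY = {
    "bindings": [
        {"role": "roles/editor", "members": ["user:dev@example.com"]},
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
        {
            "role": "roles/bigquery.dataViewer",
            "members": ["group:analysts@example.com"],
        },
    ]
}

for member, role, reason in find_broad_grants(SAMPLE_POLICY):
    print(f"{member} has {role}: {reason}")
```

Findings like these are candidates for replacement with narrower predefined or custom roles; the script itself does not decide whether a grant is justified.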

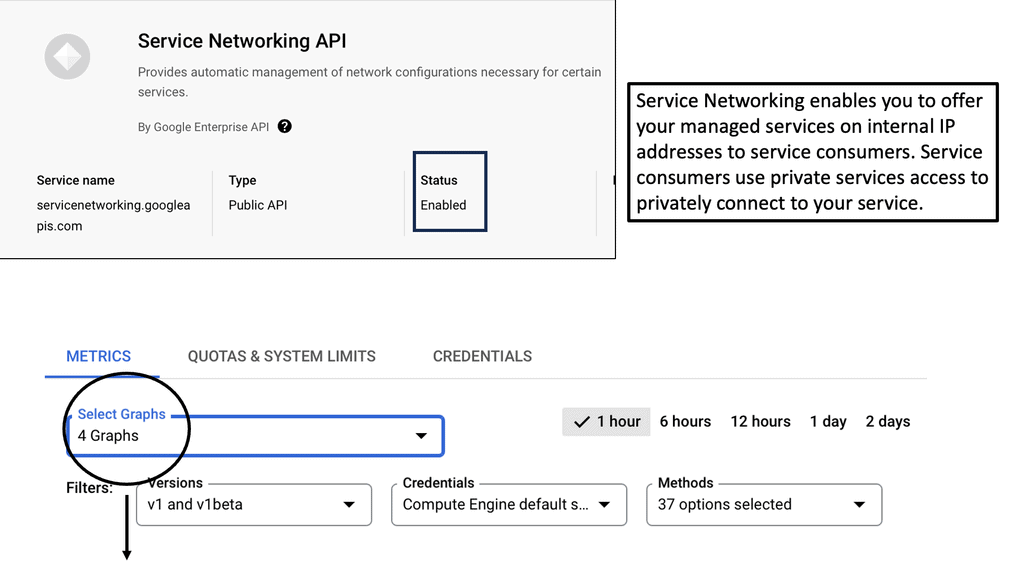

API Service Networking

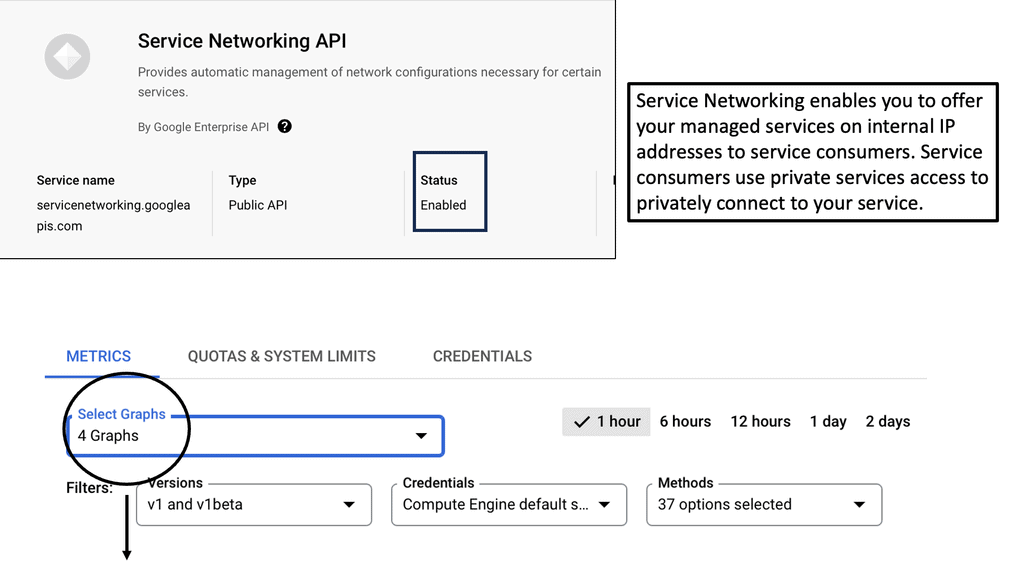

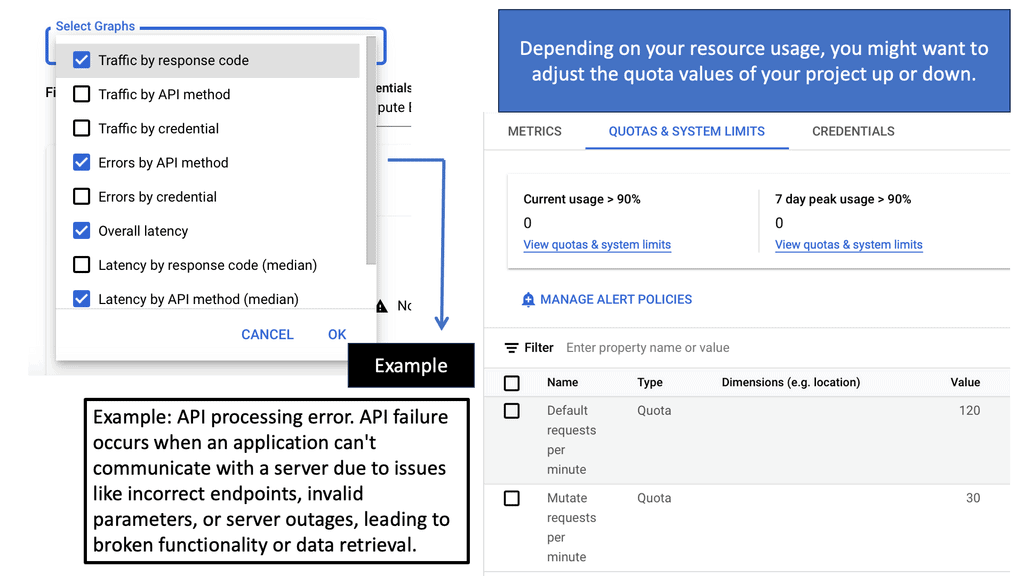

**The Role of Google Cloud in Service Networking**

Google Cloud has emerged as a leader in providing robust service networking solutions that leverage its global infrastructure. With tools like Google Cloud’s Service Networking API, businesses can establish secure connections between their various services, whether they’re hosted on Google Cloud, on-premises, or even in other cloud environments. This capability is crucial for organizations looking to build scalable, resilient, and efficient architectures. By utilizing Google Cloud’s networking solutions, businesses can ensure their services are interconnected in a way that maximizes performance and minimizes latency.

**Embracing Zero Trust Architecture**

Incorporating a Zero Trust security model is becoming a standard practice for organizations aiming to enhance their cybersecurity posture. Zero Trust operates on the principle that no entity, whether inside or outside the network, should be automatically trusted. This approach aligns perfectly with Service Networking APIs, which can enforce stringent access controls, authentication, and encryption for all service communications. By adopting a Zero Trust framework, businesses can mitigate risks associated with data breaches and unauthorized access, ensuring their service interactions are as secure as possible.

**Advantages of Service Networking APIs**

Service Networking APIs offer numerous advantages for businesses navigating the complexities of modern IT environments. They provide the flexibility to connect services across hybrid and multi-cloud setups, ensuring that data and applications remain accessible regardless of their physical location. Additionally, these APIs streamline the process of managing network configurations, reducing the overhead associated with manual network management tasks. Furthermore, by facilitating secure and efficient connections, Service Networking APIs enable businesses to focus on innovation rather than infrastructure challenges.

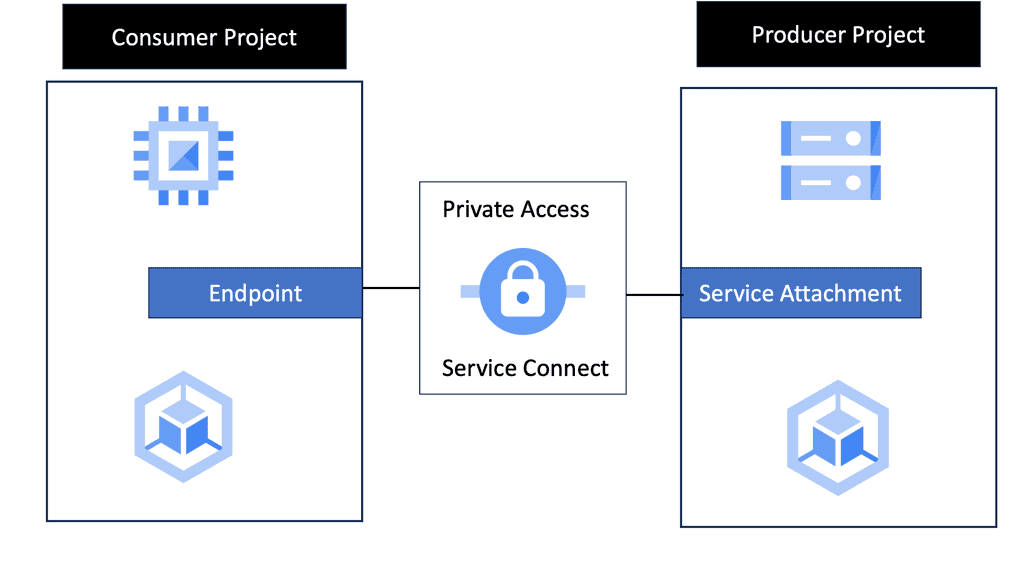

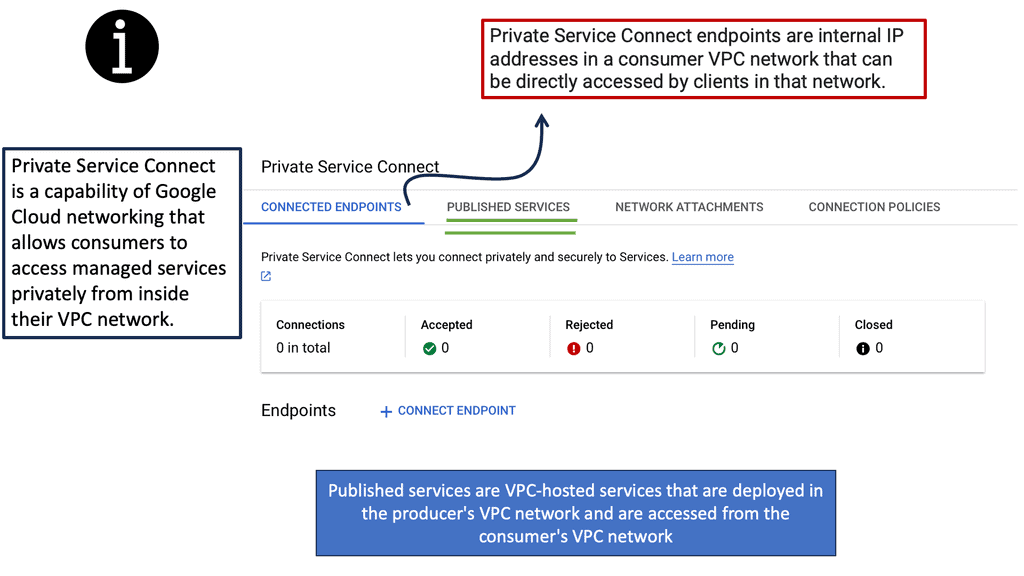

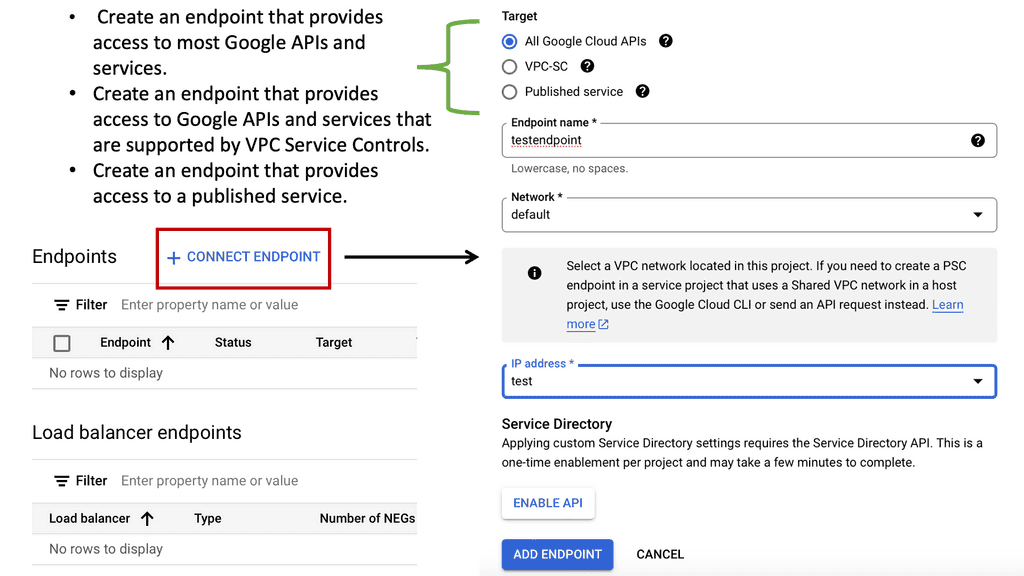

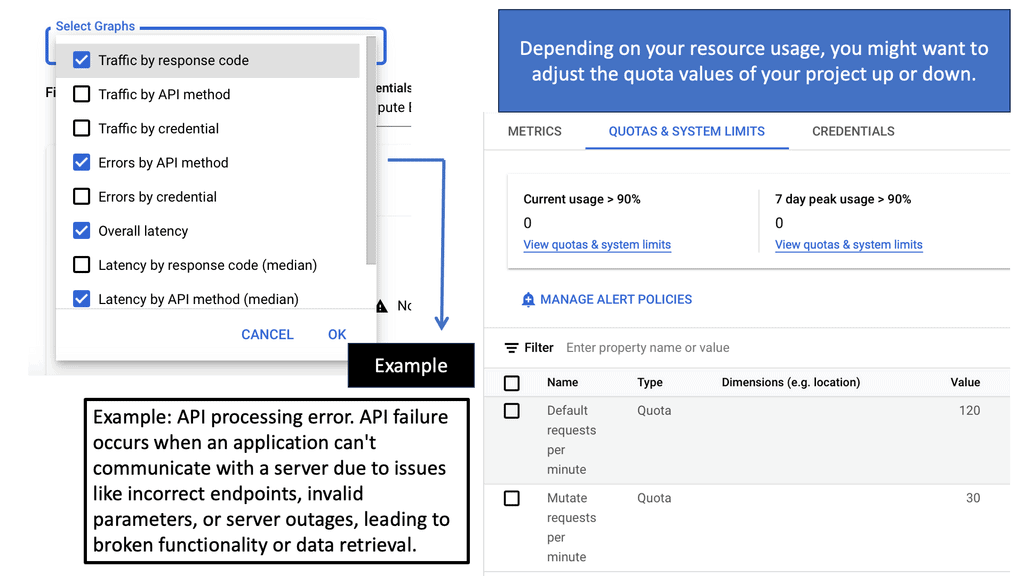

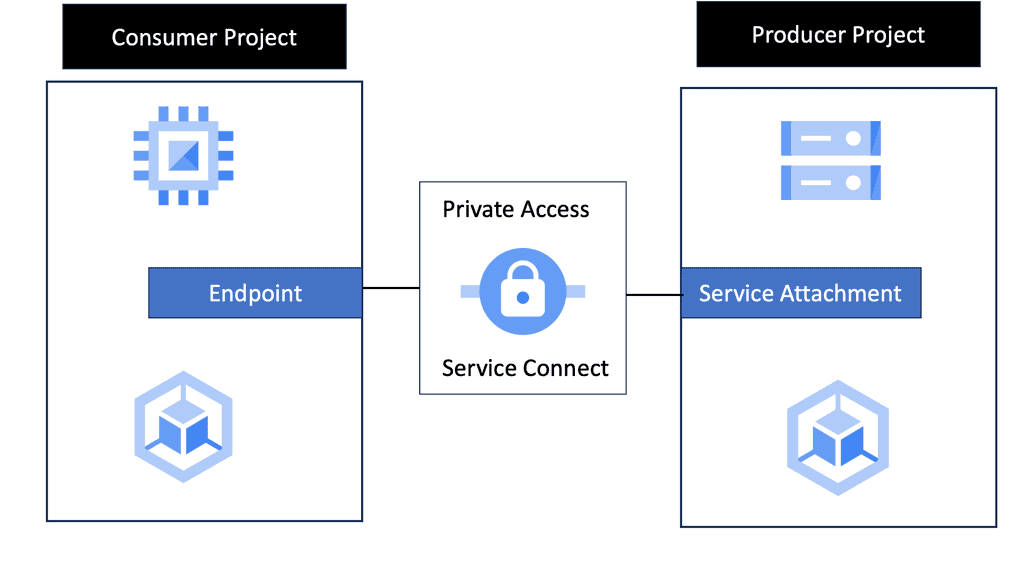

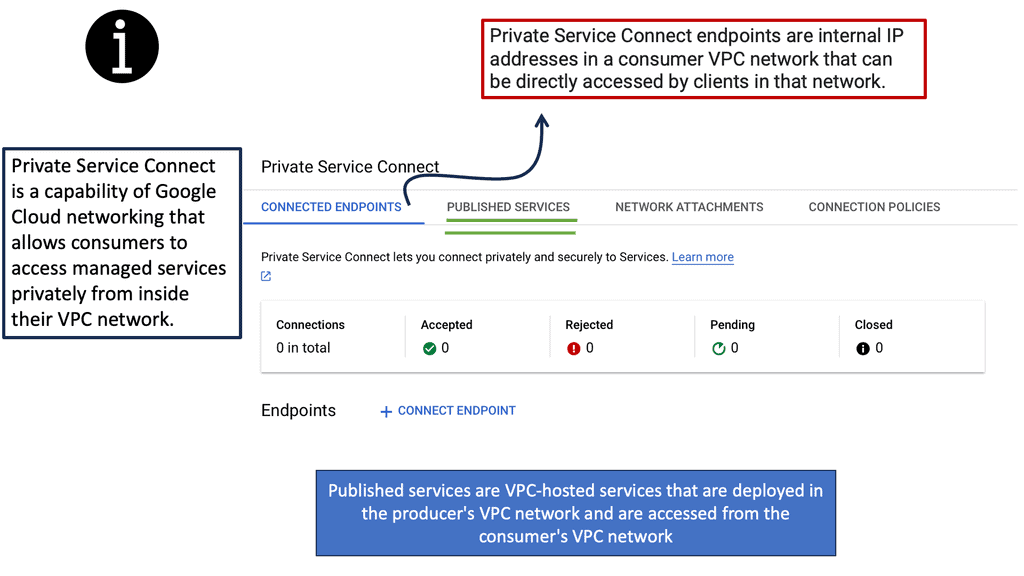

Zero Trust with Private Service Connect

**Understanding Google Cloud’s Private Service Connect**

At its core, Private Service Connect (PSC) is designed to simplify service connectivity by allowing you to create private and secure connections to Google services and third-party services. This eliminates the need for public IPs while ensuring that your data remains within Google’s protected network. By utilizing PSC, businesses can achieve seamless connectivity without compromising on security, a crucial aspect of modern cloud infrastructure.

**The Role of Private Service Connect in Zero Trust**

Zero trust is a security model centered around the principle of “never trust, always verify.” It assumes that threats could be both external and internal, and hence, every access request should be verified. PSC plays a critical role in this model by providing a secure pathway for services to communicate without exposing them to the public internet. By integrating PSC, organizations can ensure that their cloud-native applications follow zero-trust principles, thereby minimizing risks and enhancing data protection.

**Benefits of Adopting Private Service Connect**

Implementing Private Service Connect offers several advantages:

1. **Enhanced Security**: By eliminating the need for public endpoints, PSC reduces the attack surface, making your services less vulnerable to threats.

2. **Improved Performance**: With direct and private connectivity, data travels through optimized paths within Google’s network, reducing latency and increasing reliability.

3. **Simplicity and Scalability**: PSC simplifies the network architecture by removing the complexities associated with managing public IPs and firewalls, making it easier to scale services as needed.

Network Connectivity Center

### The Importance of Zero Trust Network Design

Zero Trust is a security model that requires strict verification for every person and device trying to access resources on a private network, regardless of whether they are inside or outside the network perimeter. This approach significantly reduces the risk of data breaches and unauthorized access. Implementing a Zero Trust Network Design with Google Network Connectivity Center (NCC) helps ensure that all network traffic is continuously monitored and verified, enhancing overall security.

### How NCC Enhances Zero Trust Security

Google Network Connectivity Center provides several features that align with the principles of Zero Trust:

1. **Centralized Management:** NCC offers a single pane of glass for managing all network connections, making it easier to enforce security policies consistently across the entire network.

2. **Granular Access Controls:** With NCC, organizations can implement fine-grained access controls, ensuring that only authorized users and devices can access specific network resources.

3. **Integrated Security Tools:** NCC integrates with Google Cloud’s suite of security tools, such as Identity-Aware Proxy (IAP) and Cloud Armor, to provide comprehensive protection against threats.

### Real-World Applications of NCC

Organizations across various industries can benefit from the capabilities of Google Network Connectivity Center. For example:

– **Financial Services:** A bank can use NCC to securely connect its branch offices and data centers, ensuring that sensitive financial data is protected at all times.

– **Healthcare:** A hospital can leverage NCC to manage its network of medical devices and patient records, maintaining strict access controls to comply with regulatory requirements.

– **Retail:** A retail chain can utilize NCC to connect its stores and warehouses, optimizing network performance while safeguarding customer data.

Zero Trust with Cloud Service Mesh

What is a Cloud Service Mesh?

A Cloud Service Mesh is essentially a dedicated infrastructure layer that manages communication between the microservices of an application. It abstracts the complexity of managing service-to-service communications, offering features like load balancing, service discovery, and traffic management. The mesh operates transparently to the application, meaning developers can focus on writing code without worrying about the underlying network infrastructure. With built-in observability, it provides deep insights into how services interact, helping to identify and resolve issues swiftly.

#### Advantages of Implementing a Service Mesh

1. **Enhanced Security with Zero Trust Network**: A Service Mesh can significantly bolster security by implementing a Zero Trust Network model. This means that no service is trusted by default, and strict verification processes are enforced for each interaction. It ensures that communications are encrypted and authenticated, reducing the risk of unauthorized access and data breaches.

2. **Improved Resilience and Reliability**: By offering features like automatic retries, circuit breaking, and failover, a Service Mesh ensures that services remain resilient and reliable. It helps in maintaining the performance and availability of applications even in the face of network failures or high traffic volumes.

3. **Simplified Operations and Management**: Managing a microservices architecture can be overwhelming due to the sheer number of services involved. A Service Mesh simplifies operations by providing a centralized control plane, where policies can be defined and enforced consistently across all services. This reduces the operational overhead and makes it easier to manage and scale applications.

#### Real-World Applications of Cloud Service Mesh

Several industries are reaping the benefits of implementing a Cloud Service Mesh. In the financial sector, where security and compliance are paramount, a Service Mesh ensures that sensitive data is protected through robust encryption and authentication mechanisms. In e-commerce, it enhances the customer experience by ensuring that applications remain responsive and available even during peak traffic periods. Healthcare organizations use Service Meshes to secure sensitive patient data and ensure compliance with regulations like HIPAA.

#### Key Considerations for Adoption

While the benefits of a Cloud Service Mesh are evident, there are several factors to consider before adoption. Organizations need to assess their existing infrastructure and determine whether it is compatible with a Service Mesh. They should also consider the learning curve associated with adopting new technologies and ensure that their teams are adequately trained. Additionally, it’s crucial to evaluate the cost implications and ensure that the benefits outweigh the investment required.

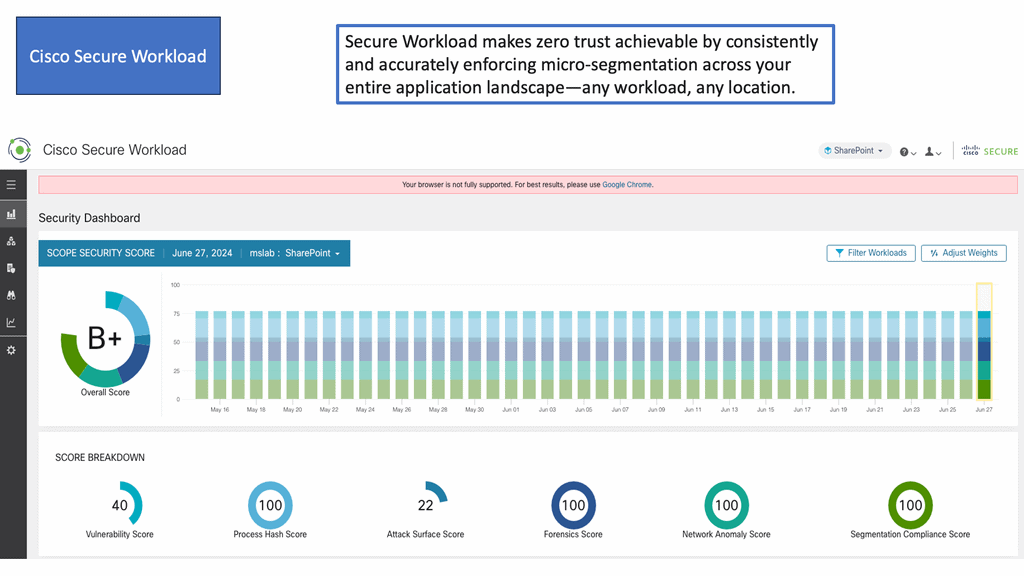

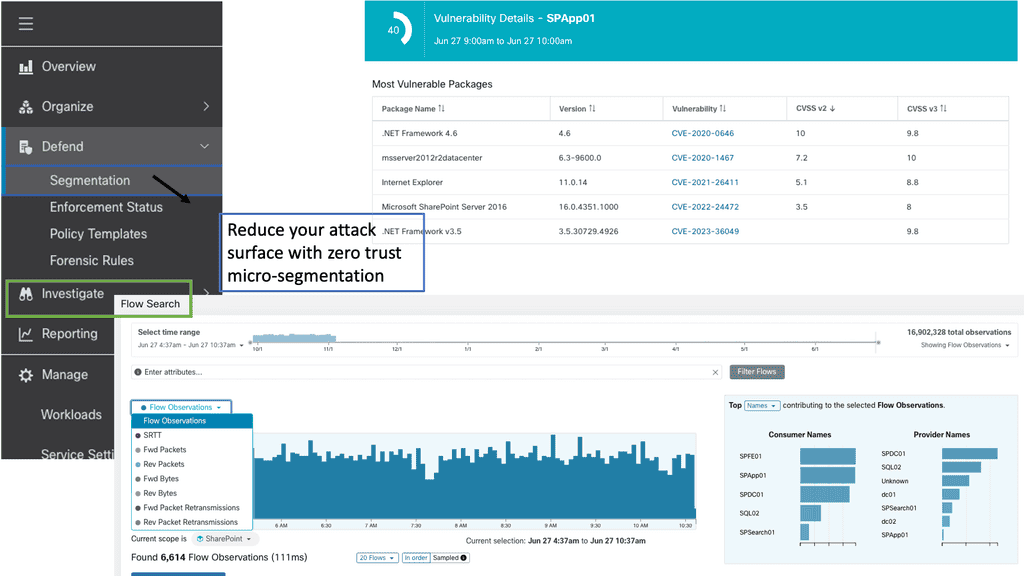

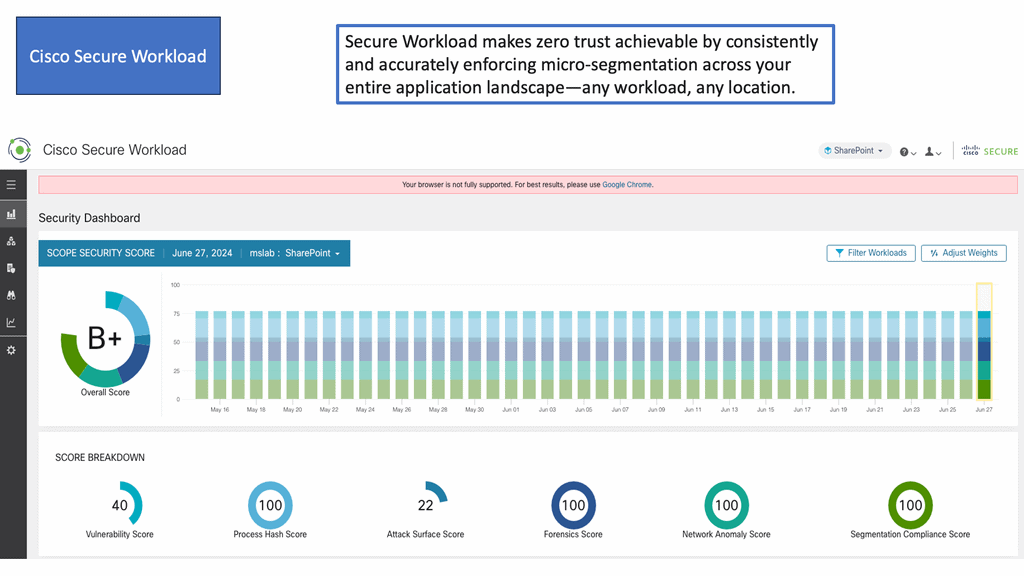

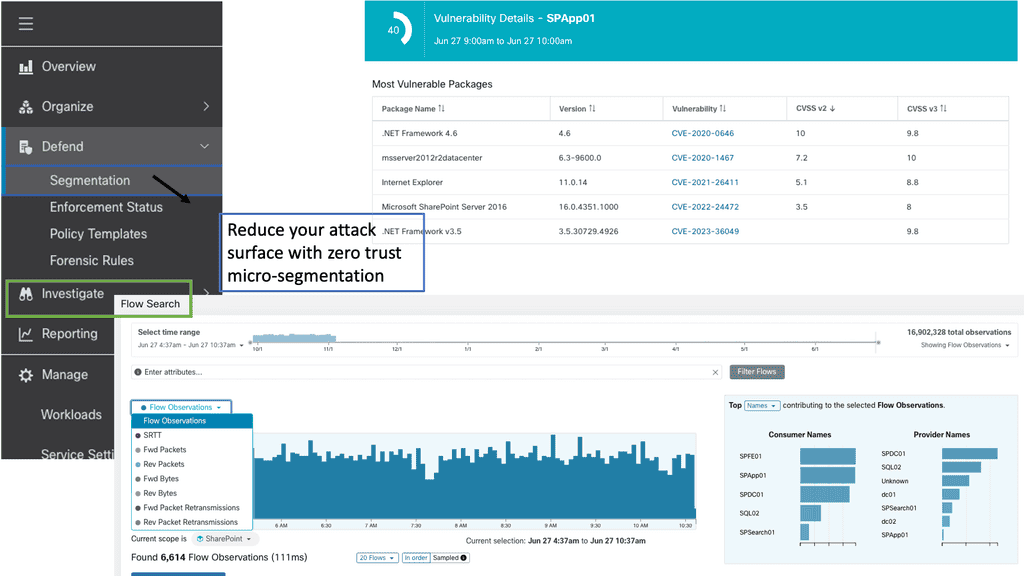

Example Product: Cisco Secure Workload

### What is Cisco Secure Workload?

Cisco Secure Workload, formerly known as Cisco Tetration, is a security solution that provides visibility and micro-segmentation for applications across your entire IT environment. It leverages machine learning and advanced analytics to monitor and protect workloads in real-time, ensuring that potential threats are identified and mitigated before they can cause harm.

### Key Features of Cisco Secure Workload

1. **Comprehensive Visibility**: Cisco Secure Workload offers unparalleled visibility into your workloads, providing insights into application dependencies, communication patterns, and potential vulnerabilities. This holistic view is crucial for understanding and securing your IT environment.

2. **Micro-Segmentation**: By implementing micro-segmentation, Cisco Secure Workload allows you to create granular security policies that isolate workloads, minimizing the attack surface and preventing lateral movement by malicious actors.

3. **Real-Time Threat Detection**: Utilizing advanced machine learning algorithms, Cisco Secure Workload continuously monitors your environment for suspicious activity, ensuring that threats are detected and addressed in real-time.

4. **Automation and Orchestration**: With automation features, Cisco Secure Workload simplifies the process of applying and managing security policies, reducing the administrative burden on your IT team while enhancing overall security posture.

### Benefits of Implementing Cisco Secure Workload

– **Enhanced Security**: By providing comprehensive visibility and micro-segmentation, Cisco Secure Workload significantly enhances the security of your IT environment, reducing the risk of breaches and data loss.

– **Improved Compliance**: Cisco Secure Workload helps organizations meet regulatory requirements by ensuring that security policies are consistently applied and monitored across all workloads.

– **Operational Efficiency**: The automation and orchestration features of Cisco Secure Workload streamline security management, freeing up valuable time and resources for your IT team to focus on other critical tasks.

– **Scalability**: Whether you have a small business or a large enterprise, Cisco Secure Workload scales to meet the needs of your organization, providing consistent protection as your IT environment grows and evolves.

### Practical Applications of Cisco Secure Workload

Cisco Secure Workload is versatile and can be applied across various industries and use cases. For example, in the financial sector, it can protect sensitive customer data and ensure compliance with stringent regulations. In healthcare, it can safeguard patient information and support secure communication between medical devices. No matter the industry, Cisco Secure Workload offers a robust solution for securing critical workloads and data.

**Challenges to Consider**

While zero-trust networking offers numerous benefits, implementing it poses its own challenges. Organizations may face difficulties redesigning their existing network architectures, ensuring compatibility with legacy systems, and managing the complexity associated with granular access controls. However, these challenges can be overcome with proper planning, collaboration, and the right tools.

One of the main challenges customers face right now is that their environments are changing. They are moving to cloud and containerized environments, which raises many security questions from an access control perspective, especially in a hybrid infrastructure where traditional data centers with legacy systems are combined with highly scalable systems.

An effective security posture is all about having a common way to enforce a policy-based control and contextual access policy around user and service access.

When organizations transition into these new environments, they must use multiple tool sets, which are not very contextual in their operations. For example, you may have Amazon Web Services (AWS) security groups defining IP address ranges that can gain access to a particular virtual private cloud (VPC).

This isn’t granular, and it has no associated identity or device recognition capability. Also, developers in these environments are massively entitled, and we struggle with how to control their access.
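The contrast between an address-range rule and a contextual policy can be sketched as follows. Everything here is hypothetical: the resource name, the group, the address range, and the three signals (group membership, device compliance, MFA) stand in for whatever an organization's identity and device tooling actually reports.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    groups: frozenset
    source_ip: str
    device_compliant: bool
    mfa_passed: bool
    resource: str


# Address-range style rule: anything inside the range gets in.
ALLOWED_RANGE = ipaddress.ip_network("10.20.0.0/16")

# Contextual policy: which group may reach which resource (invented names).
POLICY = {"payments-db": "payments-engineers"}


def ip_range_allows(request):
    """Network-only check, similar in spirit to an IP-range rule."""
    return ipaddress.ip_address(request.source_ip) in ALLOWED_RANGE


def contextual_allows(request):
    """Identity, device posture, and MFA must all check out."""
    required_group = POLICY.get(request.resource)
    if required_group is None:
        return False  # unknown resources are denied by default
    return (
        required_group in request.groups
        and request.device_compliant
        and request.mfa_passed
    )


request = AccessRequest(
    user="contractor",
    groups=frozenset({"contractors"}),
    source_ip="10.20.5.9",
    device_compliant=False,
    mfa_passed=True,
    resource="payments-db",
)

print(ip_range_allows(request))    # True: the address is inside the range
print(contextual_allows(request))  # False: wrong group, non-compliant device
```

The same request passes the network-only check and fails the contextual one, which is the gap the paragraph above describes.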

Example Technology: What is Network Monitoring?

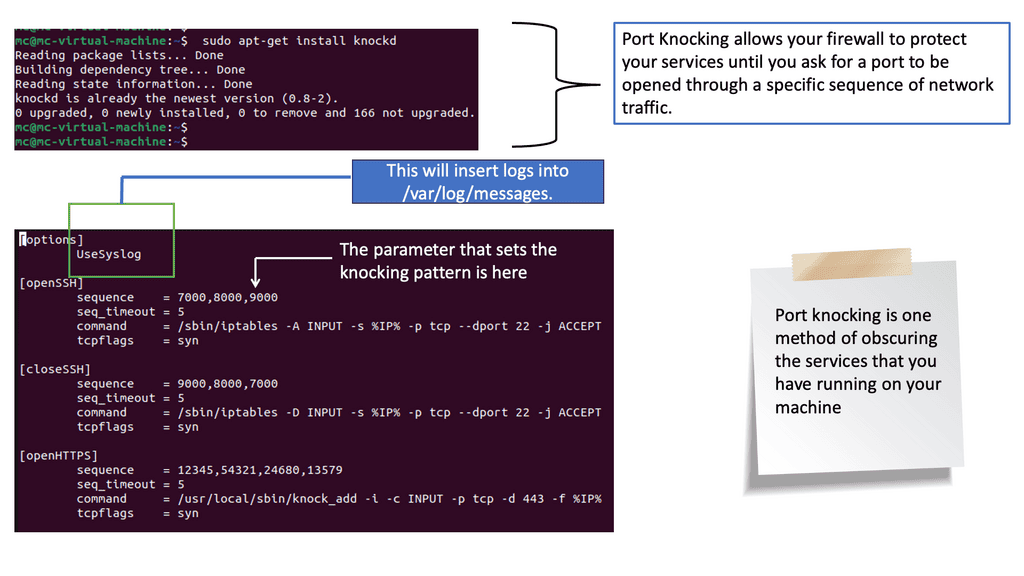

Network monitoring involves observing and analyzing computer networks for performance, security, and availability. It consists of tracking network components such as routers, switches, servers, and applications to ensure they function optimally. Administrators can identify potential issues, troubleshoot problems, and prevent downtime by actively monitoring network traffic.

Network monitoring tools provide insights into network traffic patterns, allowing administrators to identify potential security breaches, malware attacks, or unauthorized access attempts. By monitoring network activity, administrators can implement robust security measures and quickly respond to any threats, ensuring the integrity and safety of their systems.

All network flows MUST be authenticated before being processed

Whenever a zero-trust network receives a packet, the packet is considered suspicious. Packets must be rigorously inspected before the data they carry can be processed. Strong authentication is the primary method for accomplishing this.

Authentication is required for network data to be trusted. It is possibly the most critical component of a zero-trust network. In the absence of it, we must trust the network.
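A minimal sketch of "authenticate before processing" is shown below, using an HMAC tag computed with a shared secret. This is only one possible mechanism and is chosen here because it fits in a few lines of standard-library Python; the key and messages are placeholders, and the sketch leaves out key distribution and replay protection, which real protocols such as TLS or IPsec handle.

```python
import hashlib
import hmac


def sign(key: bytes, payload: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(key: bytes, payload: bytes, tag: bytes) -> bool:
    """Constant-time comparison of the expected and received tags."""
    return hmac.compare_digest(sign(key, payload), tag)


def process(key: bytes, payload: bytes, tag: bytes) -> str:
    """Refuse to touch the payload unless it authenticates."""
    if not verify(key, payload, tag):
        raise PermissionError("unauthenticated flow rejected")
    return payload.decode("utf-8")


# Placeholder secret and message for the example.
SHARED_KEY = b"example-shared-secret"
message = b"GET /orders/42"
tag = sign(SHARED_KEY, message)

print(process(SHARED_KEY, message, tag))

try:
    process(SHARED_KEY, b"GET /orders/43", tag)  # tampered payload
except PermissionError as error:
    print(error)
```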

All network flows SHOULD be encrypted before transmission

It is trivial to compromise a network link that is physically accessible to unsafe actors. Bad actors can also infiltrate physical networks digitally and passively probe them for valuable data.

When data is encrypted, the attack surface is reduced to the device’s application and physical security, that is, to the trustworthiness of the device itself.

The application-layer endpoints MUST perform authentication and encryption

Application-layer endpoints must communicate securely to establish zero-trust networks, since trusting network links threatens system security. When middleware components handle upstream network communications (for example, VPN concentrators or load balancers that terminate TLS), they can expose these communications to physical and virtual threats. To achieve zero trust, every endpoint at the application layer must implement encryption and authentication.
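One way an application endpoint can take on both duties itself is mutual TLS, where the server encrypts the connection and also requires a certificate from the client. The sketch below builds such a server-side context with Python's standard `ssl` module. The file paths are hypothetical parameters that the caller would supply; they default to `None` here so the example runs without any certificate files.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, client_ca_file=None):
    """Create a TLS server context that demands a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocols
    context.verify_mode = ssl.CERT_REQUIRED  # the client must authenticate too
    if cert_file is not None:
        # The server's own identity (hypothetical paths supplied by the caller).
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file is not None:
        # The CA used to validate client certificates.
        context.load_verify_locations(cafile=client_ca_file)
    return context


context = build_mtls_server_context()
print(context.verify_mode == ssl.CERT_REQUIRED)
print(context.minimum_version == ssl.TLSVersion.TLSv1_2)
```

Terminating TLS in the application process, rather than at a load balancer in front of it, keeps the last network hop encrypted and authenticated, which is the point the paragraph above makes.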

**The Role of Segmentation**

Security consultants carrying out audits will see a common theme. There will always be a remediation element; the default line is that you need to segment. There will always be the need for user and micro-segmentation of high-value infrastructure in sections of the networks. Micro-segmentation is hard without Zero Trust Network Design and Zero Trust Security Strategy.

User-centric: Zero Trust Networking (ZTN) is a dynamic and user-centric method of microsegmentation for zero trust networks, which is needed for high-value infrastructure that can’t be moved, such as an AS/400. You can’t just pop an AS/400 in the cloud and expect everything to be OK. Recently, we have seen a rapid increase in the use of SASE (secure access service edge). Zero Trust SASE combines network and security functions, including zero trust networking, but delivers them from the cloud.

Example: Identifying and Mapping Networks

To troubleshoot the network effectively, you can use a range of tools. Some are built into the operating system, while others must be downloaded and run. Depending on your experience, you may choose a top-down or a bottom-up approach.

For background information, you may find the following posts helpful:

- Technology Insight for Microsegmentation