WAN Design Considerations

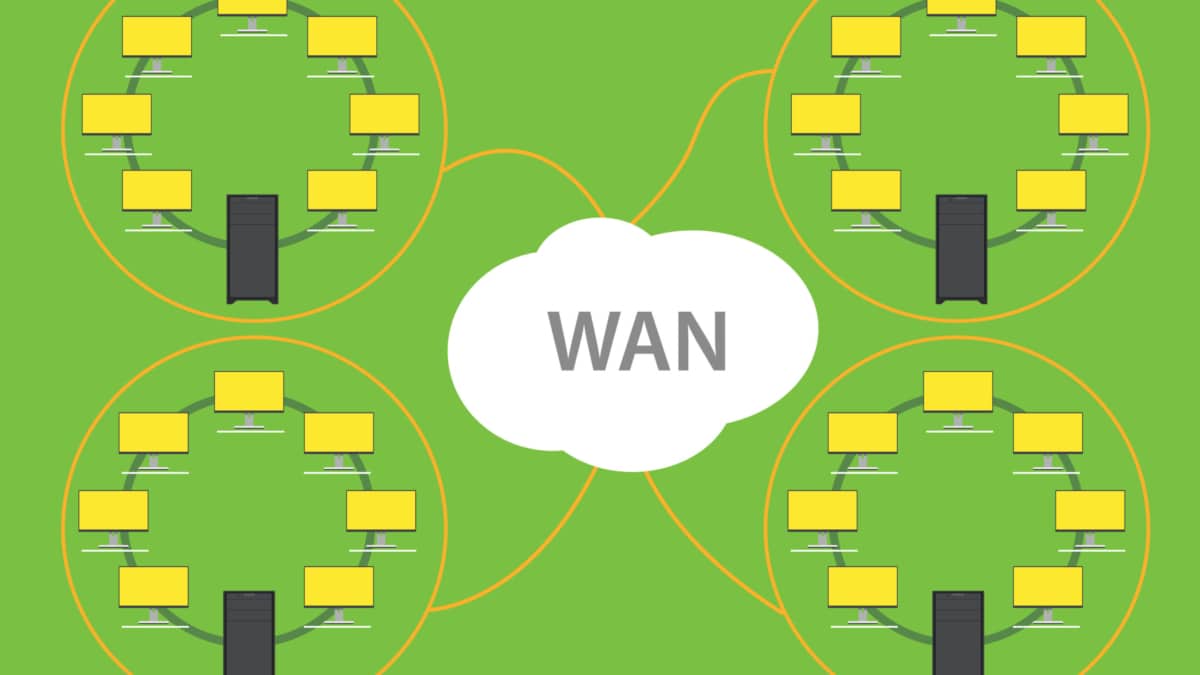

In today's interconnected world, Wide Area Network (WAN) design plays a crucial role in ensuring seamless communication and data transfer between geographically dispersed locations. This blog post explores the key considerations and best practices for designing a robust and efficient WAN infrastructure. WAN design involves carefully planning and implementing the network architecture to meet specific business requirements. It encompasses factors such as bandwidth, scalability, security, and redundancy. By understanding the foundations of WAN design, organizations can lay a solid framework for their network infrastructure.

Bandwidth Requirements: One of the primary considerations in WAN design is determining the required bandwidth. Analyzing the organization's needs and usage patterns helps establish the baseline for bandwidth capacity. Factors such as the number of users, types of applications, and data transfer volumes should all be evaluated to ensure the WAN can handle the expected traffic without bottlenecks or congestion.

Network Topology: Choosing the right network topology is crucial for a well-designed WAN. Common topologies include hub-and-spoke, full mesh, and partial mesh. Each has its advantages and trade-offs. The decision should be based on factors such as cost, scalability, redundancy, and the organization's specific needs. Evaluating the pros and cons of each topology ensures an optimal design that aligns with the business objectives.

Security Considerations: In an era of increasing cyber threats, incorporating robust security measures is paramount. WAN design should include encryption protocols, firewalls, intrusion detection systems, and secure remote access mechanisms. By implementing multiple layers of security, organizations can safeguard their sensitive data and prevent unauthorized access or breaches.

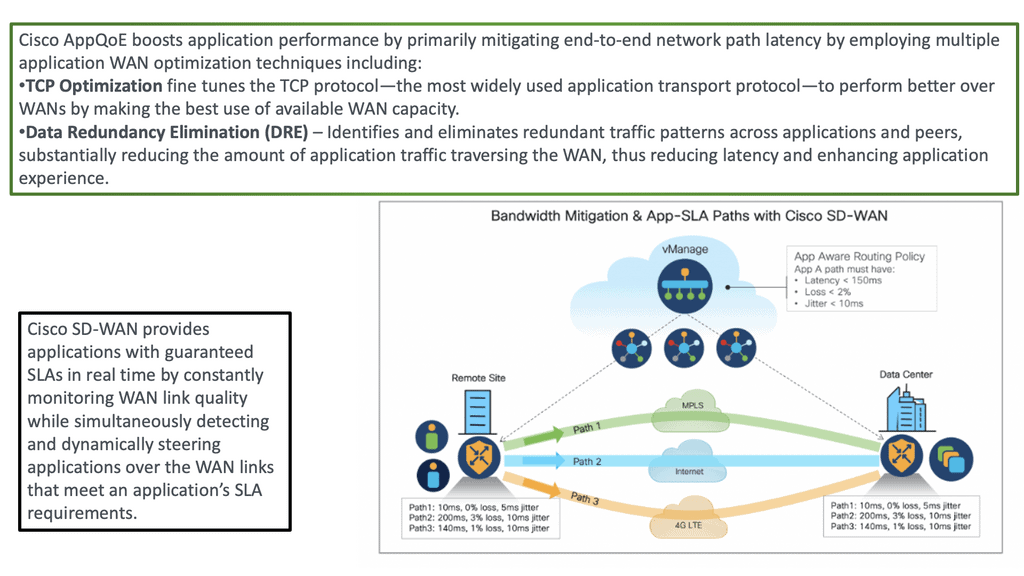

Quality of Service (QoS) Prioritization: To ensure critical applications receive the necessary resources, implementing Quality of Service (QoS) prioritization is essential. QoS mechanisms allow for traffic classification and prioritization based on predefined rules. By assigning higher priority to real-time applications like VoIP or video conferencing, organizations can mitigate latency and ensure optimal performance for time-sensitive operations.
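To make classification and prioritization concrete, here is a minimal sketch of a strict-priority queue. The traffic classes (`voice`, `video`, `data`), their ranking, and the `StrictPriorityQueue` helper are illustrative assumptions rather than any vendor's implementation. Real devices usually classify on packet markings such as DSCP and pair priority queuing with bandwidth limits so that lower classes are not starved.

```python
import heapq
from itertools import count

# Hypothetical class ranking: a lower number is served first.
PRIORITY = {"voice": 0, "video": 1, "data": 2}


class StrictPriorityQueue:
    """Serve the highest-priority packet first, FIFO within a class."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker that preserves arrival order

    def enqueue(self, traffic_class, packet):
        rank = PRIORITY[traffic_class]
        heapq.heappush(self._heap, (rank, next(self._seq), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    q = StrictPriorityQueue()
    q.enqueue("data", "backup-chunk")
    q.enqueue("voice", "rtp-1")
    q.enqueue("video", "frame-1")
    q.enqueue("voice", "rtp-2")
    while len(q):
        print(q.dequeue())
```

In this sketch the two voice packets leave the queue first even though the data packet arrived earlier, which is the behavior that keeps latency low for real-time traffic.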

Redundancy and Failover: Unplanned outages can severely impact business continuity, making redundancy and failover strategies vital in WAN design. Employing redundant links, diverse carriers, and failover mechanisms helps minimize downtime and ensures uninterrupted connectivity. Redundancy at both the hardware and connectivity levels is crucial to maintain seamless operations and minimize the risk of single points of failure.

Matt Conran

Highlights: WAN Design Considerations

Designing The WAN

**Section 1: Assessing Network Requirements**

Before embarking on WAN design, it’s crucial to conduct a comprehensive assessment of the network requirements. This involves understanding the specific needs of the business, such as bandwidth demands, data transfer volumes, and anticipated growth. Identifying the types of applications that will run over the WAN, including voice, video, and data applications, helps in determining the appropriate technologies and infrastructure needed to support them.

**Section 2: Choosing the Right WAN Technology**

Selecting the appropriate WAN technology is a critical step in the design process. Options range from traditional leased lines and MPLS to more modern solutions such as SD-WAN. Each technology comes with its own set of advantages and trade-offs. For instance, while MPLS offers reliable performance, SD-WAN provides greater flexibility and cost savings through the use of internet links. Decision-makers must weigh these factors against their specific requirements and budget constraints.

**Section 3: Ensuring Network Security and Resilience**

Security is a paramount concern in WAN design. Implementing robust security measures, such as encryption, firewalls, and intrusion detection systems, helps protect sensitive data as it traverses the network. Additionally, designing for resilience involves incorporating redundancy and failover mechanisms to maintain connectivity in case of link failures or network disruptions. This ensures minimal downtime and uninterrupted access for users.

**Section 4: Optimizing Network Performance**

Performance optimization is a key consideration in WAN design. Techniques such as traffic shaping, Quality of Service (QoS), and bandwidth management can be employed to prioritize critical applications and ensure smooth operation. Regular monitoring and analysis of network performance metrics allow for proactive adjustments and troubleshooting, ultimately improving user experience and overall efficiency.

Key WAN Design Considerations

A: – Bandwidth Requirements:

One of the primary considerations in WAN design is determining the bandwidth requirements for each location. The bandwidth needed will depend on the number of users, applications used, and data transfer volume. Accurately assessing these requirements is essential to avoid bottlenecks and ensure reliable connectivity.

Several key factors influence the bandwidth requirements of a WAN. Understanding these variables is essential for optimizing network performance and ensuring smooth data transmission. Some factors include the number of users, types of applications being used, data transfer volume, and the geographical spread of the network.

Calculating the precise bandwidth requirements for a WAN can be a complex task. However, some general formulas and guidelines can help determine the approximate bandwidth needed. These calculations consider user activity, application requirements, and expected data traffic.
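As a rough illustration of such a guideline, the sketch below estimates peak bandwidth for one site from the number of users, an average per-user rate, a concurrency factor, and a headroom margin. The function name, the default concurrency of 50%, and the 25% headroom are assumptions chosen for the example, not established constants; measured traffic should replace them wherever it is available.

```python
def estimate_site_bandwidth_mbps(users, per_user_kbps, concurrency=0.5, headroom=0.25):
    """Rough peak-bandwidth estimate for one site, in Mbps.

    users          -- number of users at the site
    per_user_kbps  -- assumed average demand per active user, in kbps
    concurrency    -- assumed fraction of users active at the same time (0-1]
    headroom       -- assumed extra margin for growth and bursts (0.25 = 25%)
    """
    if users < 0 or per_user_kbps < 0:
        raise ValueError("users and per_user_kbps must be non-negative")
    if not 0 < concurrency <= 1:
        raise ValueError("concurrency must be in (0, 1]")
    active_kbps = users * concurrency * per_user_kbps
    return active_kbps * (1 + headroom) / 1000


if __name__ == "__main__":
    # 200 users, 500 kbps each, half active at once, 25% headroom
    print(estimate_site_bandwidth_mbps(200, 500))
```

With these example inputs the estimate is 62.5 Mbps, so a 100 Mbps circuit would leave room for growth while a 50 Mbps circuit would not.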

B: – Network Topology:

Choosing the correct network topology is crucial for a well-designed WAN. Several options include point-to-point, hub-and-spoke, and full-mesh topologies. Each has advantages and disadvantages, and the choice should be based on cost, scalability, and the organization’s specific needs.
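The cost and scalability trade-off between these topologies can be seen by counting the links each one needs. The helper below is a small sketch with a hypothetical function name; it assumes one link per site pair and ignores redundant or backup circuits.

```python
def link_count(topology, sites):
    """Number of links needed to connect `sites` locations."""
    if sites < 2:
        raise ValueError("at least two sites are required")
    if topology == "point-to-point":
        if sites != 2:
            raise ValueError("point-to-point connects exactly two sites")
        return 1
    if topology == "hub-and-spoke":
        return sites - 1                 # every spoke connects to the hub
    if topology == "full-mesh":
        return sites * (sites - 1) // 2  # every site pair is connected
    raise ValueError(f"unknown topology: {topology}")


if __name__ == "__main__":
    for n in (5, 10, 50):
        print(n, link_count("hub-and-spoke", n), link_count("full-mesh", n))
```

Hub-and-spoke grows linearly with the number of sites, while full mesh grows quadratically, which is why full mesh is usually reserved for a small number of critical sites.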

With the advent of cloud computing, increased reliance on real-time applications, and the need for enhanced security, modern WAN network topologies have emerged to address the changing requirements of businesses. Some of the contemporary topologies include:

- Hybrid WAN Topology

- Software-defined WAN (SD-WAN) Topology

- Meshed Hybrid WAN Topology

These modern topologies leverage technologies like virtualization, software-defined networking, and intelligent routing to provide greater flexibility, agility, and cost-effectiveness.

Securing the WAN

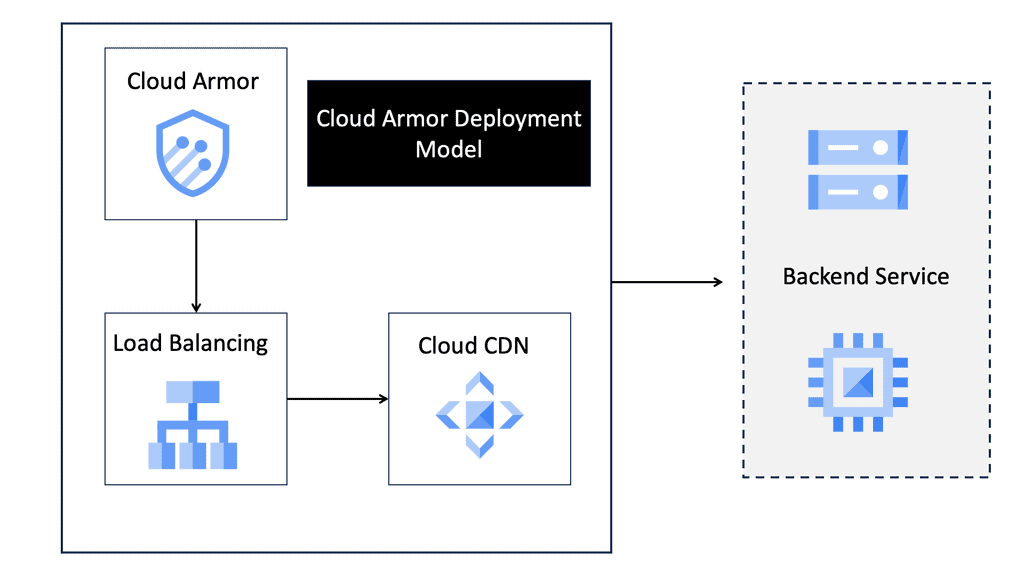

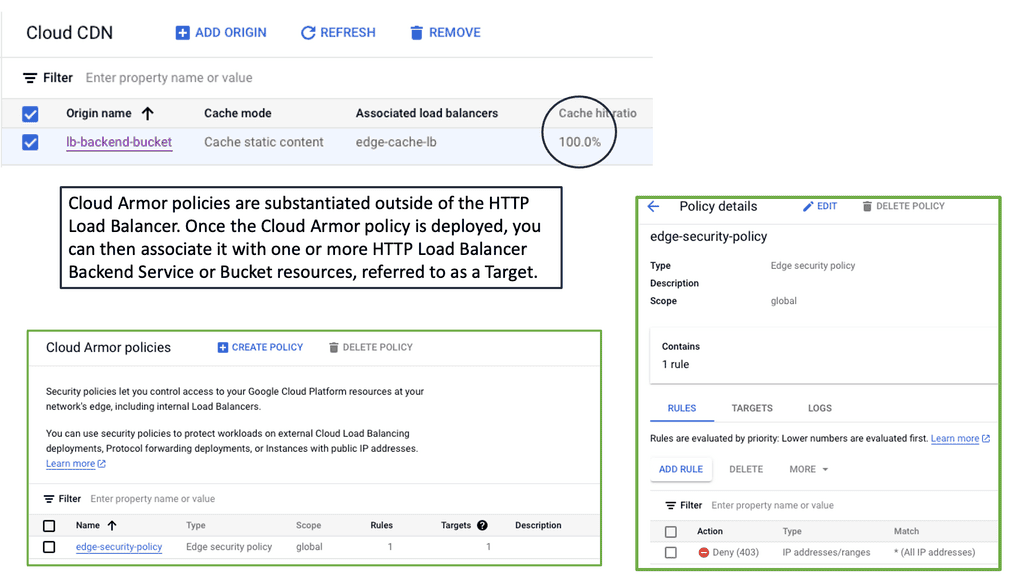

### What is Cloud Armor?

Cloud Armor is a comprehensive security solution provided by Google Cloud Platform (GCP). It offers advanced protection against various cyber threats, including Distributed Denial of Service (DDoS) attacks, SQL injections, and cross-site scripting (XSS). By leveraging Cloud Armor, businesses can create custom security policies that are enforced at the edge of their network, ensuring that threats are mitigated before they can reach critical infrastructure.

### Key Features of Cloud Armor

#### DDoS Protection

One of the standout features of Cloud Armor is its ability to defend against DDoS attacks. By utilizing Google’s global infrastructure, Cloud Armor can absorb and mitigate large-scale attacks, ensuring that your services remain available even under heavy traffic.

#### Custom Security Policies

Cloud Armor allows you to create tailor-made security policies based on your specific needs. Whether you need to block certain IP addresses, enforce rate limiting, or inspect HTTP requests for malicious patterns, Cloud Armor provides the flexibility to configure rules that align with your security requirements.

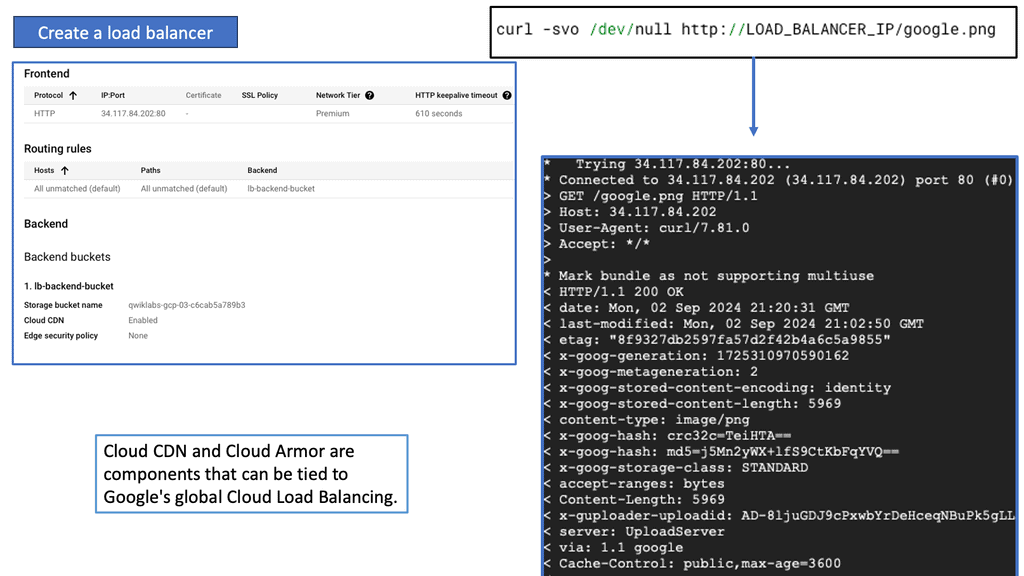

#### Integration with Google Cloud Services

Seamlessly integrated with other Google Cloud services, Cloud Armor provides a unified security approach. It works in conjunction with Google Cloud Load Balancing, enabling you to apply security policies at the network edge, thus enhancing protection and performance.

### Implementing Edge Security Policies

#### Defining Your Security Needs

Before you can implement effective edge security policies, it’s crucial to understand your specific security needs. Conduct a thorough assessment of your infrastructure to identify potential vulnerabilities and determine which types of threats are most relevant to your organization.

#### Creating and Applying Policies

Once you have a clear understanding of your security requirements, you can begin creating custom policies in Cloud Armor. Use the intuitive interface to define rules that target specific threats, and apply these policies to your Google Cloud Load Balancers to ensure they are enforced at the network edge.

#### Monitoring and Adjusting Policies

Security is an ongoing process, and it’s essential to continually monitor the effectiveness of your policies. Cloud Armor provides detailed logs and metrics that allow you to track the performance of your security rules. Use this data to make informed adjustments, ensuring that your policies remain effective against emerging threats.

### Benefits of Using Cloud Armor

#### Enhanced Security

By implementing Cloud Armor, you can significantly enhance the security of your cloud infrastructure. Its advanced threat detection and mitigation capabilities ensure that your applications and data remain protected against a wide range of cyber attacks.

#### Scalability

Cloud Armor leverages Google’s global network, providing scalable protection that can handle even the largest DDoS attacks. This ensures that your services remain available and performant, regardless of the scale of the attack.

#### Cost-Effective

Compared to traditional on-premise security solutions, Cloud Armor offers a cost-effective alternative. By utilizing a cloud-based approach, you can reduce the need for expensive hardware and maintenance, while still benefiting from cutting-edge security features.

**Network Connectivity Center (NCC)**

### Unified Connectivity Management

One of the standout features of Google NCC is its ability to unify connectivity management. Traditionally, managing a network involved juggling multiple tools and interfaces. NCC consolidates these tasks into a single, user-friendly platform. This unified approach simplifies network operations, making it easier for IT teams to monitor, manage, and troubleshoot their networks.

### Enhanced Security and Compliance

Security is a top priority in any data center. NCC offers robust security features, ensuring that data remains protected from potential threats. The platform integrates seamlessly with Google Cloud’s security protocols, providing end-to-end encryption and compliance with industry standards. This ensures that your network not only performs efficiently but also adheres to the highest security standards.

### Scalability and Flexibility

As businesses grow, so do their networking needs. NCC’s scalable architecture allows organizations to expand their network without compromising performance. Whether you’re managing a small data center or a global network, NCC provides the flexibility to scale operations seamlessly. This adaptability ensures that your network infrastructure can grow alongside your business.

### Advanced Monitoring and Analytics

Effective network management relies heavily on monitoring and analytics. NCC provides advanced tools to monitor network performance in real-time. These tools offer insights into traffic patterns, potential bottlenecks, and overall network health. By leveraging these analytics, IT teams can make informed decisions to optimize network performance and ensure uninterrupted service.

### Integration with Hybrid and Multi-Cloud Environments

In today’s interconnected world, many organizations operate in hybrid and multi-cloud environments. NCC excels in providing seamless integration across these diverse environments. It offers connectivity solutions that bridge on-premises data centers with cloud-based services, ensuring a cohesive network infrastructure. This integration is key to maintaining operational efficiency in a multi-cloud strategy.

**Connecting to the WAN Edge**

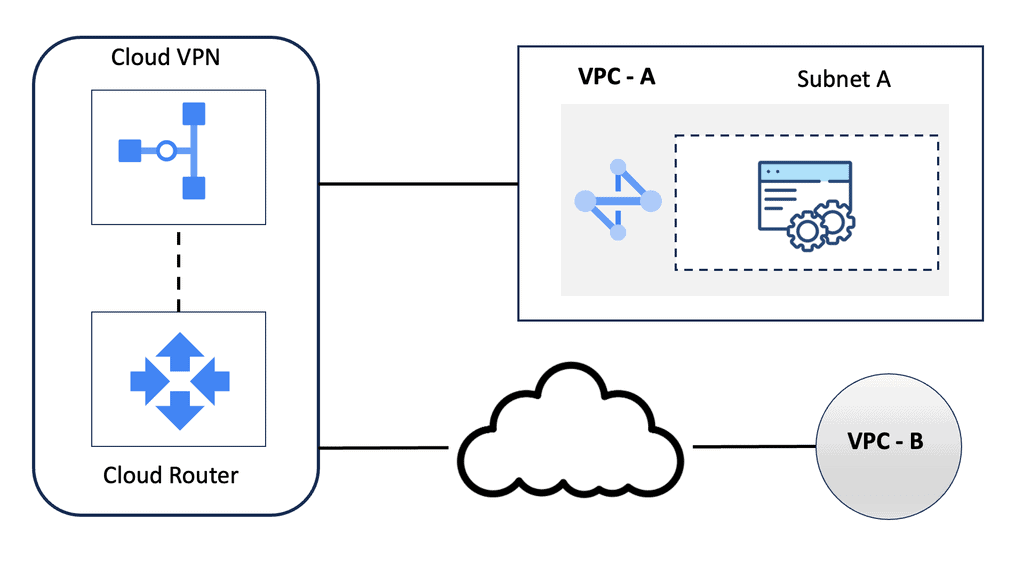

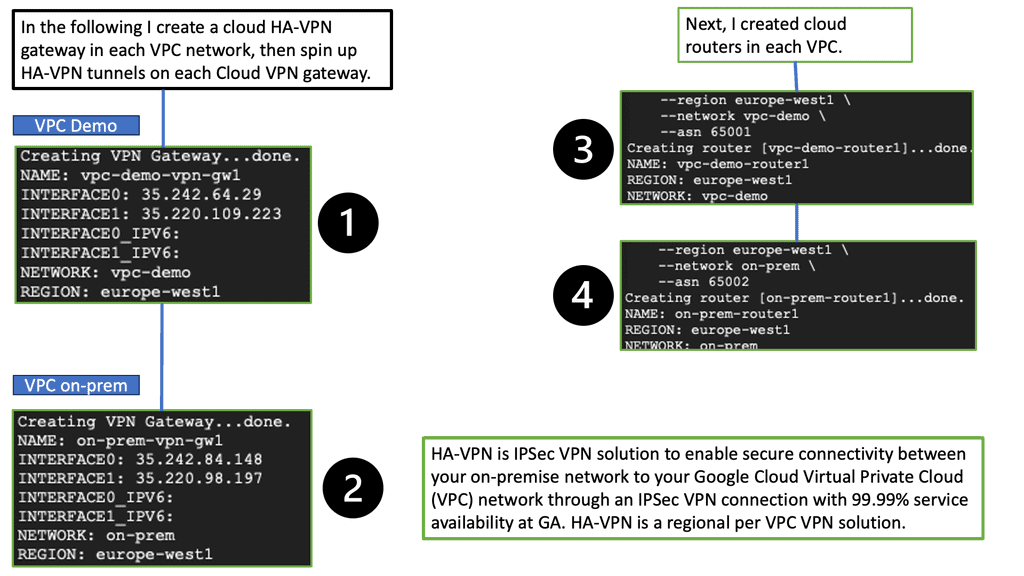

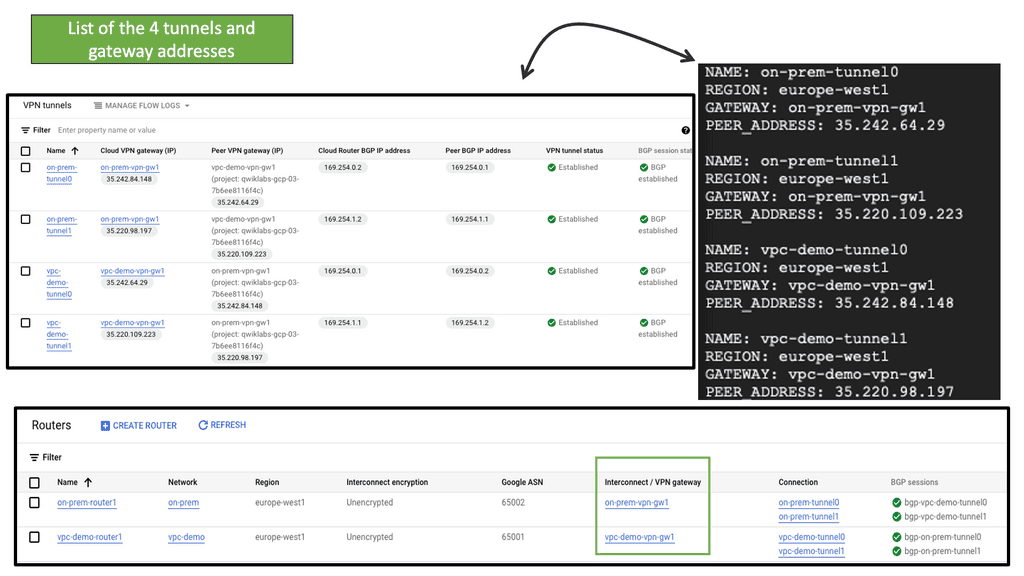

**What is Google Cloud HA VPN?**

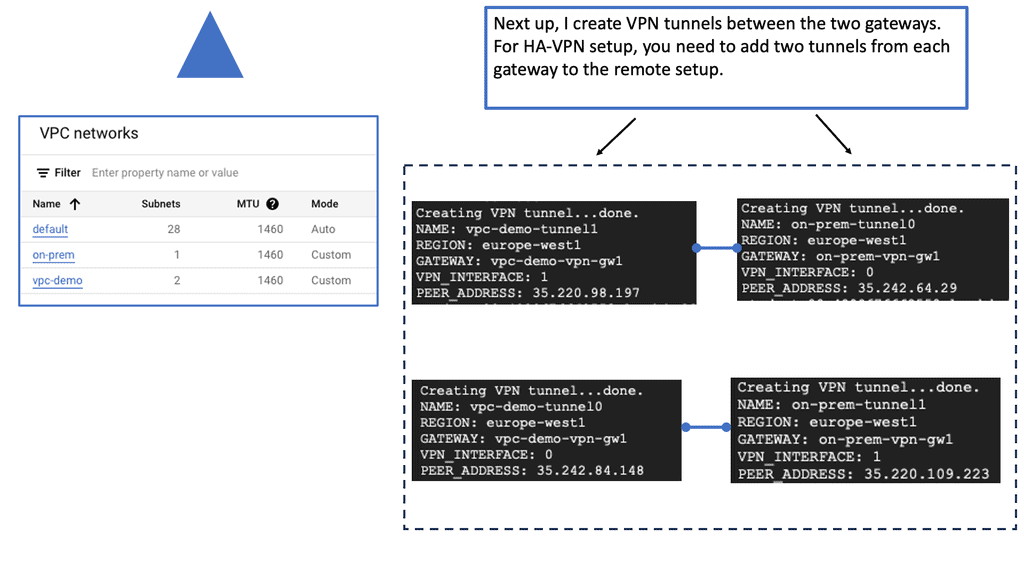

Google Cloud HA VPN is a managed service designed to provide a high-availability connection between your on-premises network and your Google Cloud Virtual Private Cloud (VPC). Unlike the standard VPN (named Classic VPN in Google Cloud), which offers a single tunnel configuration, HA VPN provides a dual-tunnel setup, ensuring that your network remains connected even if one tunnel fails. This dual-tunnel configuration is key to achieving higher availability and reliability.

**Key Features and Benefits**

1. **High Availability**: The dual-tunnel setup ensures that there is always a backup connection, minimizing downtime and ensuring continuous connectivity.

2. **Scalability**: HA VPN allows you to scale your network connections efficiently, accommodating the growing needs of your business.

3. **Enhanced Security**: With advanced encryption and security protocols, HA VPN ensures that your data remains protected during transit.

4. **Ease of Management**: Google Cloud’s user-friendly interface and comprehensive documentation make it easy to set up and manage HA VPN connections.

**How Does HA VPN Work?**

Google Cloud HA VPN works by creating two tunnels between your on-premises network and your VPC. Each tunnel terminates on a separate interface of the HA VPN gateway, and each interface has its own external IP address, enhancing redundancy. If one tunnel experiences issues or goes down, traffic is automatically rerouted through the second tunnel, ensuring uninterrupted connectivity. This automatic failover capability is a significant advantage for businesses that cannot afford any downtime.

**HA VPN vs. Standard VPN**

While both HA VPN and standard VPN provide secure connections between your on-premises network and Google Cloud, there are some critical differences:

1. **Redundancy**: HA VPN offers dual tunnels for redundancy, whereas the standard VPN typically provides a single tunnel.

2. **Availability**: The dual-tunnel setup in HA VPN ensures higher availability compared to the standard VPN.

3. **Scalability and Flexibility**: HA VPN is more scalable and flexible, making it suitable for enterprises with dynamic and growing connectivity needs.

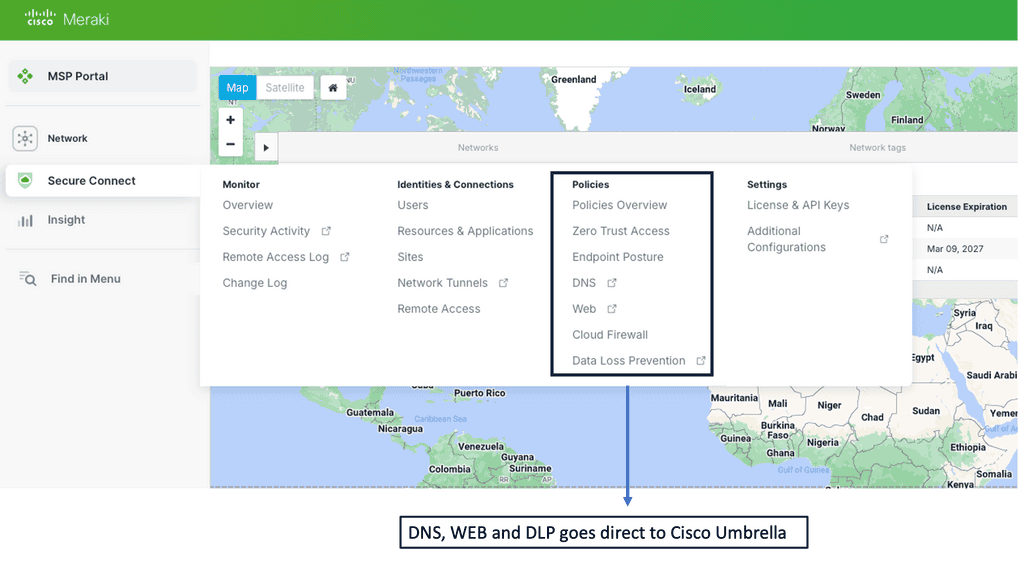

Example Product: SD-WAN with Cisco Meraki

### What is Cisco Meraki?

Cisco Meraki is a suite of cloud-managed networking products that include wireless, switching, security, enterprise mobility management (EMM), and security cameras, all centrally managed from the web. This centralized management allows for ease of deployment, monitoring, and troubleshooting, making it an ideal solution for businesses looking to maintain a secure and efficient network infrastructure.

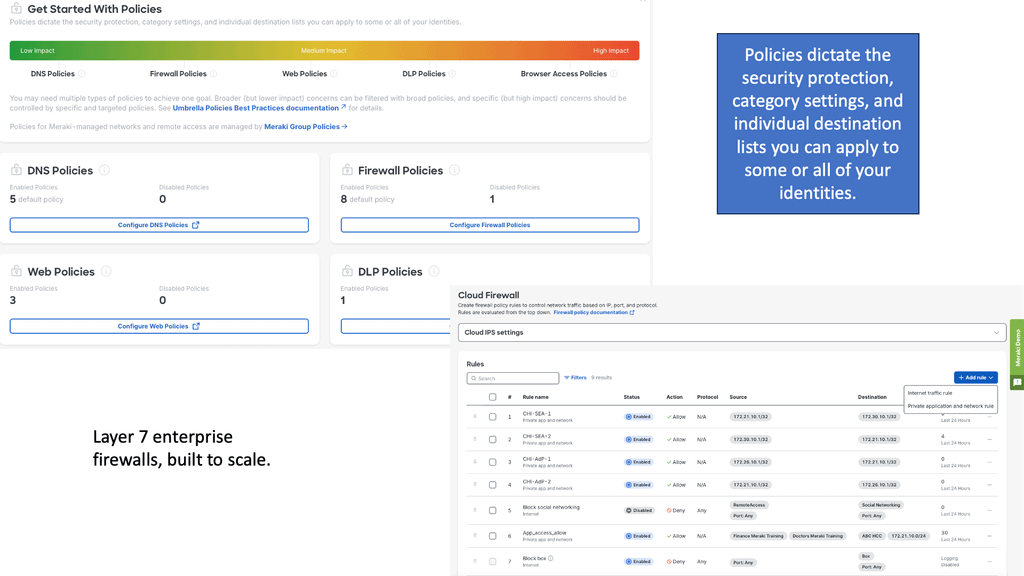

### Advanced Security Features

One of the standout aspects of Cisco Meraki is its focus on security. From built-in firewalls to advanced malware protection, Meraki offers a plethora of features designed to keep your network safe. Here are some key security features:

- **Next-Generation Firewall:** Meraki’s firewall capabilities include Layer 7 application visibility and control, allowing you to identify and manage applications, not just ports or IP addresses.

- **Intrusion Detection and Prevention (IDS/IPS):** Meraki’s IDS/IPS system identifies and responds to potential threats in real-time, ensuring your network remains secure.

- **Content Filtering:** With Meraki, you can block inappropriate content and restrict access to harmful websites, making your network safer for all users.

Cisco Meraki’s cloud-based dashboard is a game-changer for IT administrators. The intuitive interface allows you to manage multiple sites from a single pane of glass, reducing complexity and increasing efficiency. Features like automated firmware updates and centralized policy management make the day-to-day management of your network a breeze.

### Scalability and Flexibility

One of the key benefits of Cisco Meraki is its scalability. Whether you have one location or hundreds, Meraki’s cloud-based management allows you to easily scale your network as your business grows. The flexibility of Meraki’s product line means you can tailor your network to meet the specific needs of your organization, whether that involves high-density Wi-Fi deployments, secure remote access, or advanced security monitoring.

### Real-World Applications

Cisco Meraki is used across various industries to enhance security and improve efficiency. For example, educational institutions use Meraki to provide secure, high-performance Wi-Fi to students and staff, while retail businesses leverage Meraki’s analytics to optimize store operations and customer experiences. The versatility of Meraki’s solutions makes it applicable to a wide range of use cases.

Google Cloud Data Centers

Understanding Network Tiers

Network tiers form the foundation of network infrastructure, dictating how data flows within a cloud environment. In Google Cloud, there are two primary network tiers: Premium Tier and Standard Tier. Each tier offers distinct benefits and cost structures, making it crucial to comprehend their differences and use cases.

With its emphasis on performance and reliability, the Premium Tier is designed to deliver unparalleled network connectivity across the globe. Leveraging Google’s extensive global network infrastructure, this tier ensures low-latency connections and high throughput, making it ideal for latency-sensitive applications and global-scale businesses.

While the Premium Tier excels in global connectivity, the Standard Tier offers a balance between cost and performance. It leverages peering relationships with major internet service providers (ISPs) to provide cost-effective network connectivity. This tier is well-suited for workloads that don’t require the extensive global reach of the Premium Tier, allowing businesses to optimize network spending without compromising on performance.

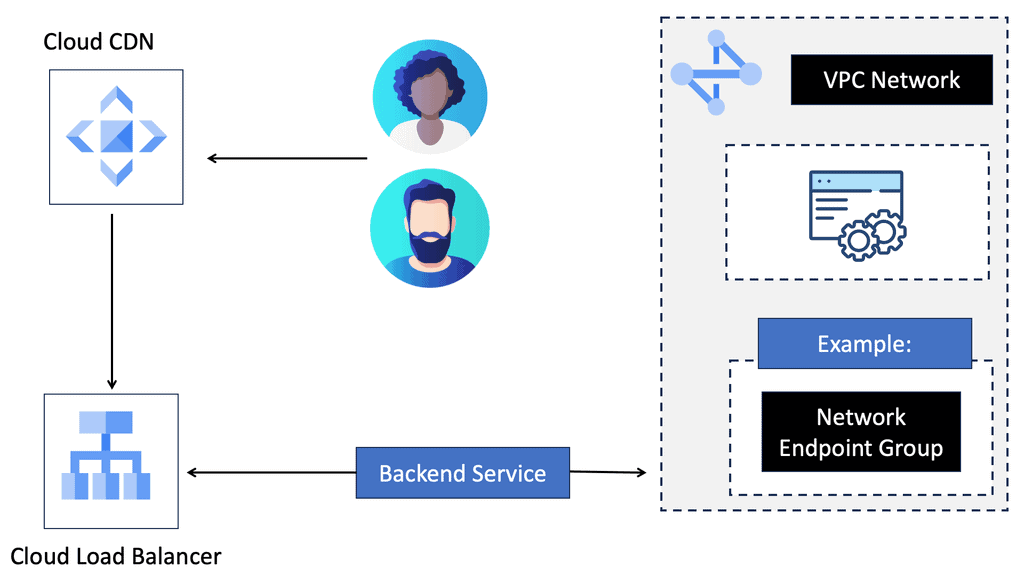

Understanding VPC Networking

VPC Networking forms the foundation of any cloud infrastructure, enabling secure communication and resource isolation. In Google Cloud, a VPC is a virtual network that allows users to define and manage their own private space within the cloud environment. It provides a secure and scalable environment for deploying applications and services.

Google Cloud VPC offers a plethora of powerful features that enhance network management and security. From customizable IP addressing to robust firewall rules, VPC empowers users with granular control over their network configuration. Furthermore, the integration with other Google Cloud services, such as Cloud Load Balancing and Cloud VPN, opens up a world of possibilities for building highly available and resilient architectures.

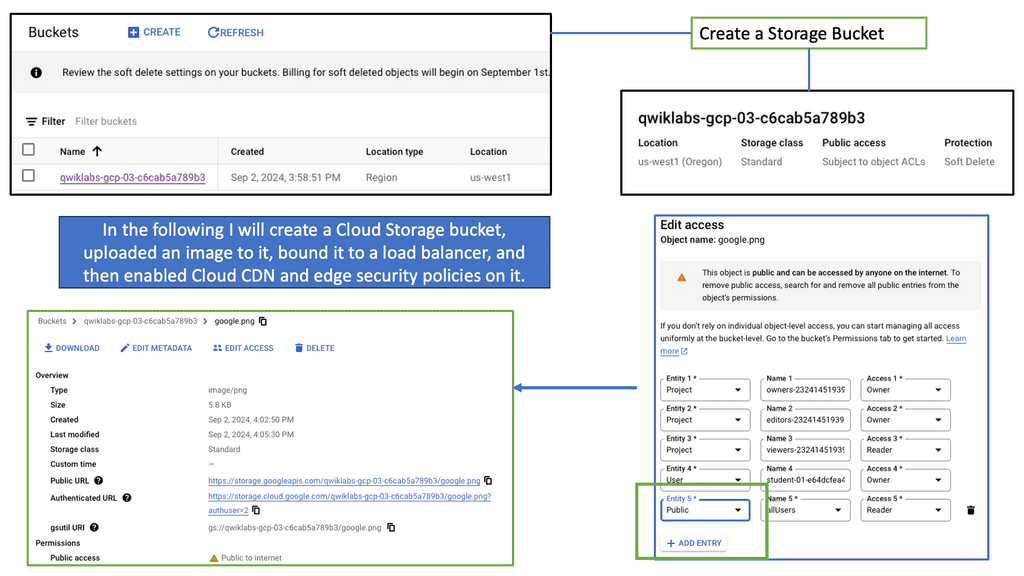

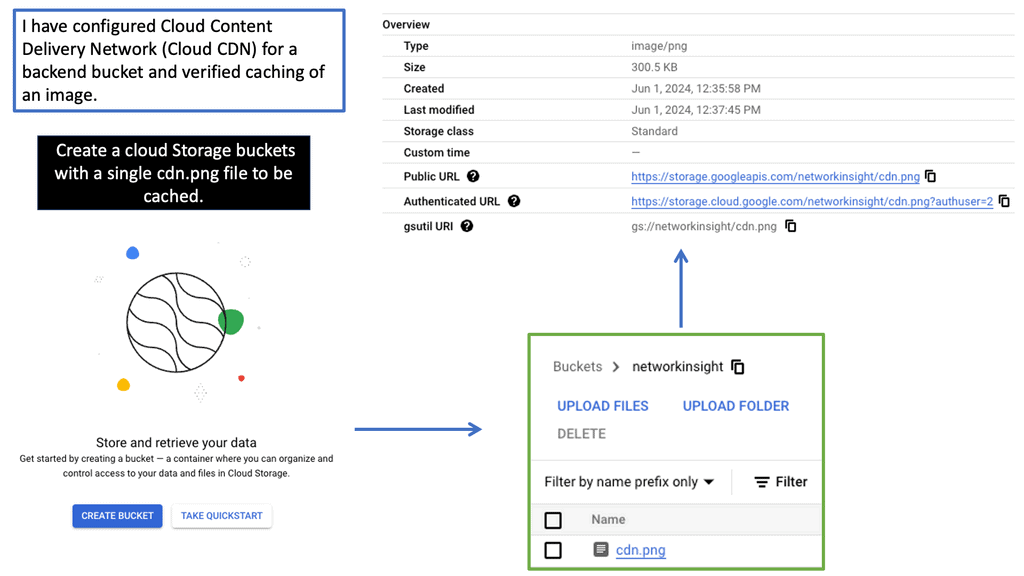

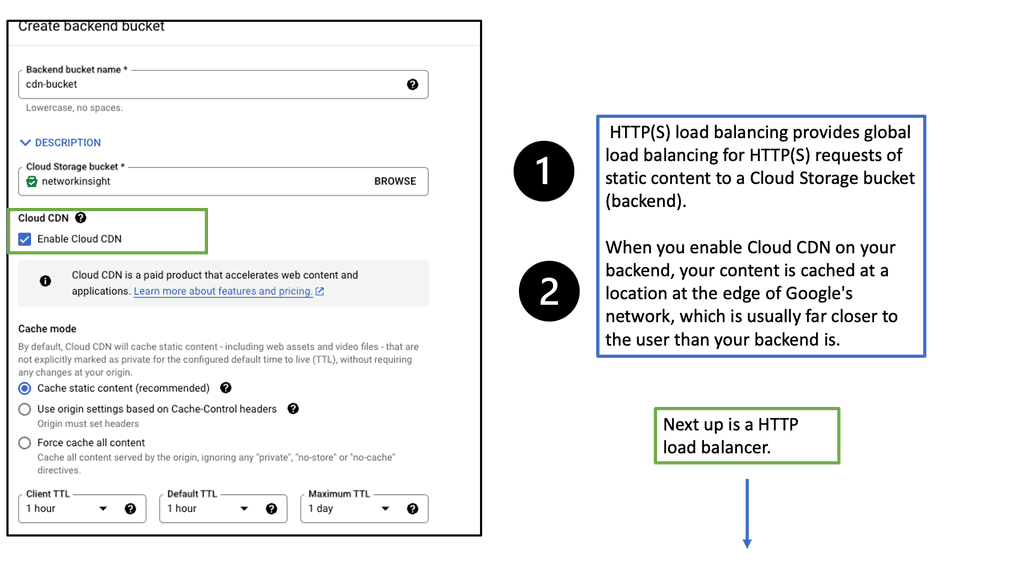

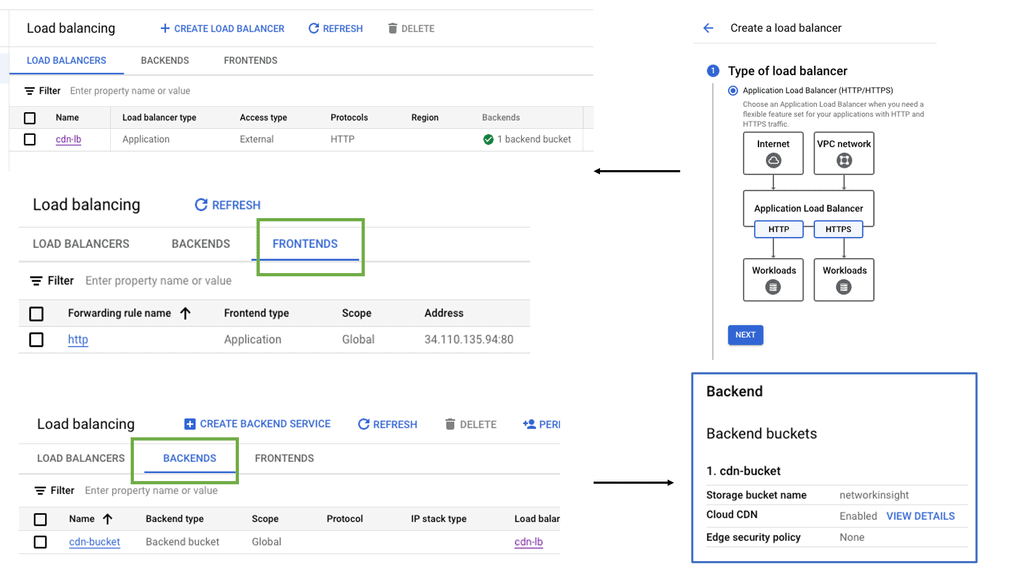

Understanding Cloud CDN

Cloud CDN is a robust content delivery network provided by Google Cloud. It is designed to deliver high-performance, low-latency content to users worldwide. By caching website content in strategic edge locations, Cloud CDN reduces the distance between users and your website’s servers, resulting in faster delivery times and reduced network congestion.

One of Cloud CDN’s primary advantages is its ability to accelerate load times for your website’s content. By caching static assets such as images, CSS files, and JavaScript libraries, Cloud CDN serves these files directly from its edge locations. This means that subsequent requests for the same content can be fulfilled much faster, as it is already stored closer to the user.

With Cloud CDN, your website gains global scalability. As your content is distributed across multiple edge locations worldwide, users from different geographical regions can access it quickly and efficiently. Cloud CDN automatically routes requests to the nearest edge location, ensuring that users experience minimal latency and optimal performance.

Traffic spikes can challenge websites, leading to slower load times and potential downtime. However, Cloud CDN is specifically built to handle high volumes of traffic. By caching your website’s content and distributing it across numerous edge locations, Cloud CDN can effectively manage sudden surges in traffic, ensuring that your website remains responsive and available to users.

WAN Design Considerations: Optimal Performance

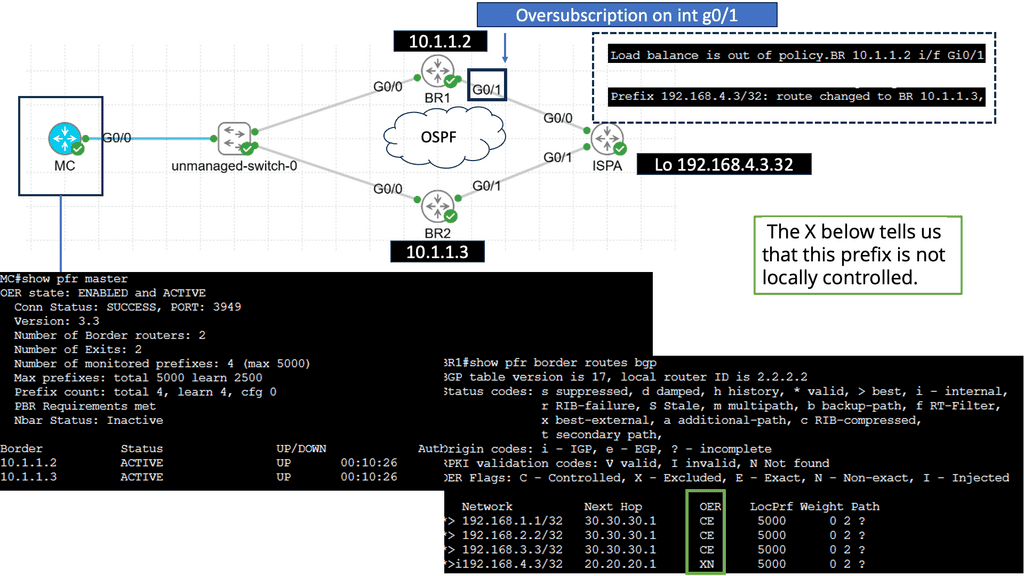

What is Performance-Based Routing?

Performance-based routing is a dynamic technique that intelligently directs network traffic based on real-time performance metrics. Unlike traditional static routing, which relies on pre-configured paths, performance-based routing considers various factors, such as latency, bandwidth, and packet loss, to determine the optimal path for data transmission. By constantly monitoring network conditions, performance-based routing ensures traffic is routed through the most efficient path, enhancing performance and improving user experience.
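The selection logic can be sketched as a scoring function over measured path metrics. Everything here is an assumption made for illustration: the `PathMetrics` fields, the weights, and the linear score are not taken from any particular product, which would typically use per-application thresholds and probing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PathMetrics:
    name: str
    latency_ms: float
    loss_pct: float
    available_mbps: float


def path_score(path, w_latency=1.0, w_loss=50.0, w_bandwidth=0.1):
    """Lower is better: penalize latency and loss, reward spare bandwidth."""
    return (w_latency * path.latency_ms
            + w_loss * path.loss_pct
            - w_bandwidth * path.available_mbps)


def best_path(paths):
    """Pick the path with the lowest score from the current measurements."""
    return min(paths, key=path_score)


if __name__ == "__main__":
    healthy = [PathMetrics("mpls", 40, 0.0, 50),
               PathMetrics("internet", 25, 1.0, 200)]
    print(best_path(healthy).name)

    # The same paths after the MPLS link starts dropping packets.
    degraded = [PathMetrics("mpls", 40, 2.0, 50),
                PathMetrics("internet", 25, 1.0, 200)]
    print(best_path(degraded).name)
```

Re-running `best_path` on each new set of measurements is what makes the routing decision follow real-time conditions rather than a fixed configuration.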

Enhanced Network Performance: Performance-based routing can significantly improve network performance by automatically adapting to changing network conditions. By dynamically selecting the best path for data transmission, it minimizes latency, reduces packet loss, and maximizes available bandwidth. This leads to faster data transfer, quicker response times, and a smoother user experience.

Increased Reliability and Redundancy: One of the key advantages of performance-based routing is its ability to provide increased reliability and redundancy. By continuously monitoring network performance, it can quickly identify issues such as network congestion or link failures. In such cases, it can dynamically reroute traffic through alternate paths, ensuring uninterrupted connectivity and minimizing downtime.

Cost Optimization: Performance-based routing also offers cost optimization benefits. Intelligently distributing traffic across multiple paths enables better utilization of available network resources. This can result in reduced bandwidth costs and improved use of existing network infrastructure. Additionally, businesses can use their network budget more efficiently by avoiding congested or expensive links.

- Bandwidth Allocation

To lay a strong foundation for an efficient WAN, careful bandwidth allocation is imperative. Determining the bandwidth requirements of different applications and network segments is crucial to avoid congestion and bottlenecks. By prioritizing critical traffic and implementing Quality of Service (QoS) techniques, organizations can ensure that bandwidth is allocated appropriately, guaranteeing optimal performance.

- Network Topology

The choice of network topology greatly influences WAN performance. Whether it’s a hub-and-spoke, full mesh, or hybrid topology, understanding the unique requirements of the organization is essential. Each topology has its pros and cons, impacting factors such as scalability, latency, and fault tolerance. Assessing the specific needs of the network and aligning them with the appropriate topology is vital for achieving high-performance WAN connectivity.

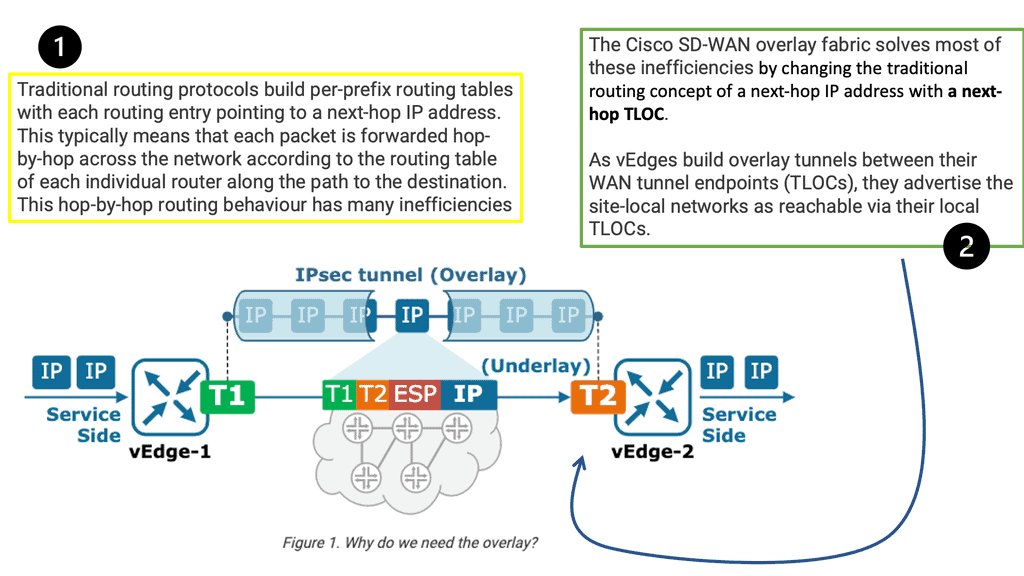

- Traffic Routing and Optimization

Efficient traffic routing and optimization mechanisms play a pivotal role in enhancing WAN performance. Implementing intelligent routing protocols, such as Border Gateway Protocol (BGP) or Open Shortest Path First (OSPF), ensures efficient data flow across the WAN. Additionally, employing traffic optimization techniques like WAN optimization controllers and caching mechanisms can significantly reduce latency and enhance overall network performance.

Understanding TCP Performance Parameters

TCP performance parameters govern the behavior of TCP connections. These parameters include congestion control algorithms, window size, Maximum Segment Size (MSS), and more. Each plays a crucial role in determining the efficiency and reliability of data transmission.

Congestion Control Algorithms: Congestion control algorithms, such as Reno, Cubic, and BBR, regulate the amount of data sent over a network to avoid congestion. They dynamically adjust the sending rate based on network conditions, ensuring fair sharing of network resources and preventing congestion collapse.
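The "dynamically adjust the sending rate" idea can be illustrated with the additive-increase, multiplicative-decrease (AIMD) pattern that Reno-style congestion avoidance follows. This is a deliberately simplified sketch: it omits slow start, fast recovery, and timeouts, and the function name is hypothetical. Cubic and BBR use different growth rules.

```python
def aimd_trace(initial_cwnd, loss_events, min_cwnd=1.0):
    """Return the congestion window (in segments) after each round trip.

    loss_events -- iterable of booleans, True if loss was detected in that RTT
    """
    cwnd = float(initial_cwnd)
    trace = []
    for lost in loss_events:
        if lost:
            cwnd = max(cwnd / 2, min_cwnd)  # multiplicative decrease
        else:
            cwnd += 1.0                     # additive increase
        trace.append(cwnd)
    return trace


if __name__ == "__main__":
    print(aimd_trace(10, [False, False, True, False]))
```

The window creeps up while the path is clean and halves as soon as loss signals congestion, which is what produces the familiar sawtooth pattern.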

Window Size and Maximum Segment Size (MSS): The window size represents the amount of data that can be sent without receiving an acknowledgment from the receiver. A larger window size allows for faster data transmission but also increases the chances of congestion. On the other hand, the Maximum Segment Size (MSS) defines the maximum amount of data that can be sent in a single TCP segment. Optimizing these parameters can significantly improve network performance.
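The effect of window size on a WAN link can be estimated with two standard relationships: a single TCP connection cannot exceed roughly one window per round trip, and the window needed to fill a link is its bandwidth-delay product. The function names below are hypothetical, and the sketch ignores loss and protocol overhead.

```python
def max_throughput_mbps(window_bytes, rtt_ms):
    """Upper bound on single-connection throughput: one window per RTT."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_second = window_bytes * 8 / (rtt_ms / 1000)
    return bits_per_second / 1_000_000


def bdp_bytes(bandwidth_mbps, rtt_ms):
    """Bandwidth-delay product: window needed to keep the link full."""
    return bandwidth_mbps * 1_000_000 / 8 * (rtt_ms / 1000)


if __name__ == "__main__":
    # A 65,535-byte window over a 100 ms WAN path
    print(round(max_throughput_mbps(65_535, 100), 2), "Mbps")
    # Window needed to fill a 100 Mbps link at the same RTT
    print(round(bdp_bytes(100, 100)), "bytes")
```

With a 65,535-byte window and a 100 ms round trip, one connection tops out at about 5.24 Mbps regardless of link speed, while filling a 100 Mbps link at that latency needs a window of about 1.25 MB.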

Selective Acknowledgment (SACK): Selective Acknowledgment (SACK) is a TCP extension that allows the receiver to acknowledge non-contiguous data blocks, reducing retransmissions and improving efficiency. Because the receiver reports exactly which blocks have arrived, the sender can retransmit only the missing segments, which enhances TCP’s ability to recover from packet loss.

Understanding TCP MSS

In simple terms, TCP MSS refers to the maximum amount of data that can be sent in a single TCP segment. It is a parameter that each endpoint advertises during the TCP handshake process and is typically derived from the underlying network’s Maximum Transmission Unit (MTU). Understanding the concept of TCP MSS is essential as it directly affects network performance and can have implications for various applications.

The significance of TCP MSS lies in its ability to prevent data packet fragmentation during transmission. By adhering to the maximum segment size, TCP ensures that packets are not divided into smaller fragments, reducing overhead and potential delays in reassembling them at the receiving end. This improves network efficiency and minimizes the chances of packet loss or retransmissions.

TCP MSS directly impacts network communications, especially when traversing networks with varying MTUs. Fragmentation may occur when a packet carrying a full-sized TCP segment crosses a link whose MTU is smaller than the packet, for example inside a tunnel that adds extra headers. This can lead to increased latency, reduced throughput, and performance degradation. It is crucial to optimize TCP MSS to avoid such scenarios and maintain smooth network communications.

Optimizing TCP MSS involves ensuring that it is appropriately set to accommodate the underlying network’s MTU. This can be achieved by adjusting the end hosts’ MSS value or leveraging Path MTU Discovery (PMTUD) techniques to determine the optimal MSS for a given network path dynamically. By optimizing TCP MSS, network administrators can enhance performance, minimize fragmentation, and improve overall user experience.
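The relationship between MTU and MSS is simple arithmetic: the MSS is what remains of the MTU after the IP and TCP headers, and after any tunnel headers on the path. The sketch below assumes headers without options (20 bytes for IPv4, 40 for IPv6, 20 for TCP) and a basic GRE-over-IPv4 tunnel that adds 24 bytes. The function name is hypothetical, and encrypted tunnels add further, variable overhead that is not modeled here.

```python
IPV4_HEADER = 20   # bytes, no options
IPV6_HEADER = 40   # bytes, no extension headers
TCP_HEADER = 20    # bytes, no options
GRE_OVER_IPV4 = 24 # bytes: 20-byte outer IPv4 header + 4-byte basic GRE header


def tcp_mss(mtu, tunnel_overhead=0, ip_header=IPV4_HEADER, tcp_header=TCP_HEADER):
    """Largest TCP payload that fits in one unfragmented packet."""
    mss = mtu - tunnel_overhead - ip_header - tcp_header
    if mss <= 0:
        raise ValueError("MTU too small for the given headers")
    return mss


if __name__ == "__main__":
    print(tcp_mss(1500))                                 # plain Ethernet, IPv4
    print(tcp_mss(1500, ip_header=IPV6_HEADER))          # plain Ethernet, IPv6
    print(tcp_mss(1500, tunnel_overhead=GRE_OVER_IPV4))  # inside a GRE tunnel
```

On a standard 1500-byte Ethernet MTU this gives an MSS of 1460 bytes for IPv4, and 1436 bytes once a basic GRE tunnel is in the path, which is why tunnel endpoints often need the MSS lowered.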

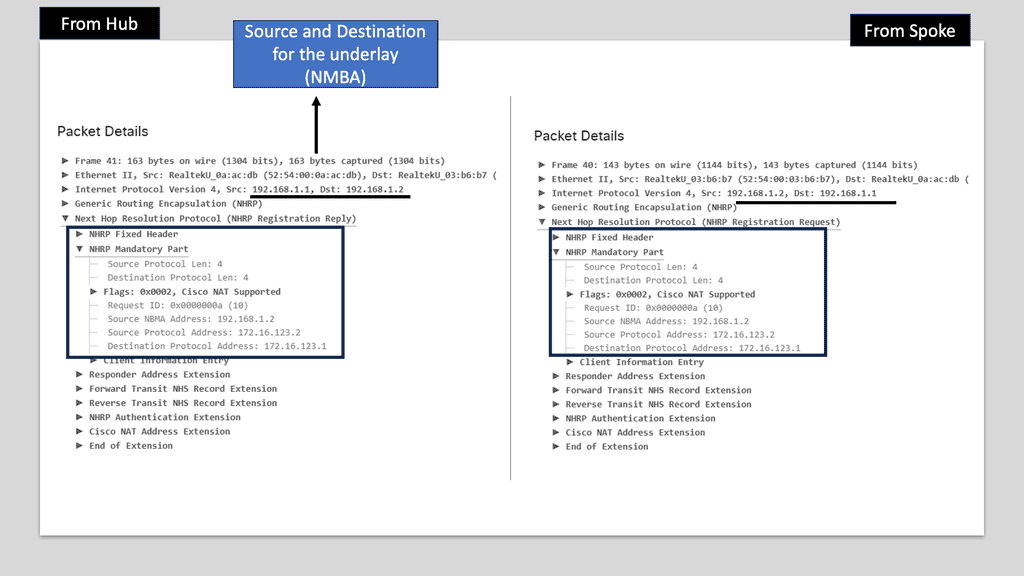

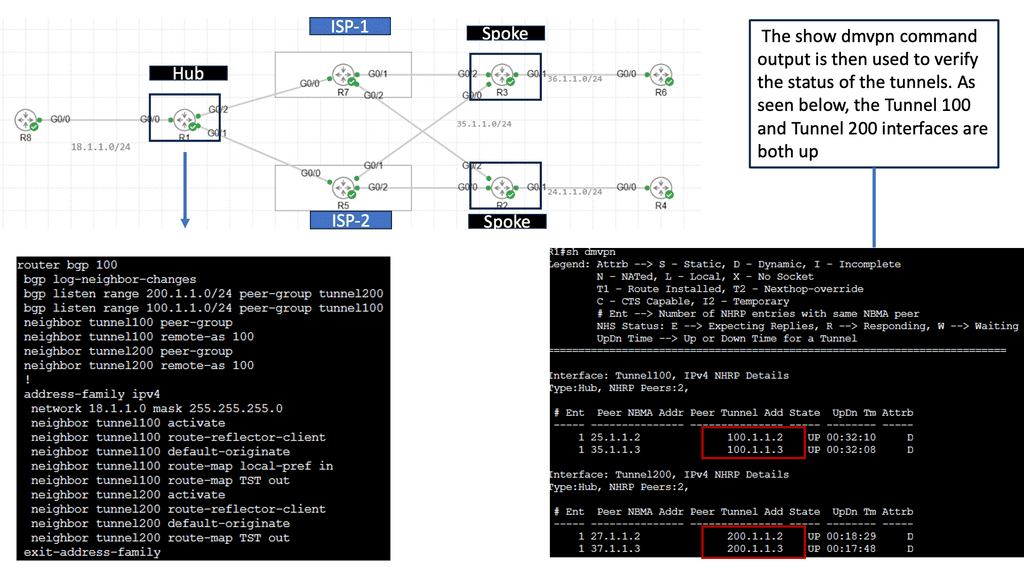

DMVPN: At the WAN Edge

Understanding DMVPN:

DMVPN (Dynamic Multipoint VPN) is a tunneling technique that allows for the creation of scalable and dynamic virtual private networks over the Internet. Unlike traditional VPN solutions, which rely on statically configured point-to-point connections, DMVPN utilizes a hub-and-spoke architecture, offering flexibility and ease of deployment. By leveraging multipoint GRE (mGRE) tunnels, DMVPN enables secure communication between remote sites, making it an ideal choice for organizations with geographically dispersed branches.

Benefits of DMVPN:

Enhanced Scalability: With DMVPN, network administrators can easily add or remove remote sites without complex manual configurations. This scalability allows businesses to adapt swiftly to changing requirements and effortlessly expand their network infrastructure.

Cost Efficiency: DMVPN uses existing internet connections to eliminate the need for expensive dedicated lines. This cost-effective approach ensures organizations can optimize their network budget without compromising security or performance.

Simplified Management: DMVPN simplifies network management by centralizing the configuration and control of VPN connections at the hub site. With routing protocols such as EIGRP or OSPF, network administrators can efficiently manage and monitor the entire network from a single location, ensuring seamless connectivity and minimizing downtime.

Security Considerations

While DMVPN provides a secure communication channel, proper security measures must be implemented to protect sensitive data. Encryption protocols such as IPsec can add an additional layer of security to VPN tunnels, safeguarding against potential threats.

Bandwidth Optimization

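DMVPN employs NHRP (Next Hop Resolution Protocol) and IP multicast to optimize bandwidth utilization. NHRP lets a spoke learn the public address of another spoke so that traffic between them can take a direct tunnel instead of transiting the hub. These technologies help reduce unnecessary traffic and improve network performance, especially in bandwidth-constrained environments.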

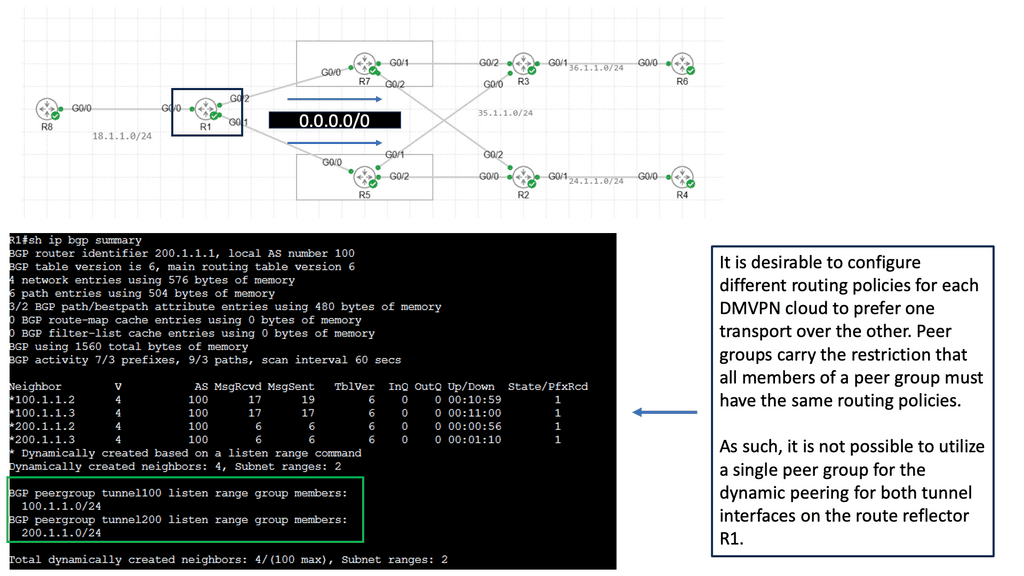

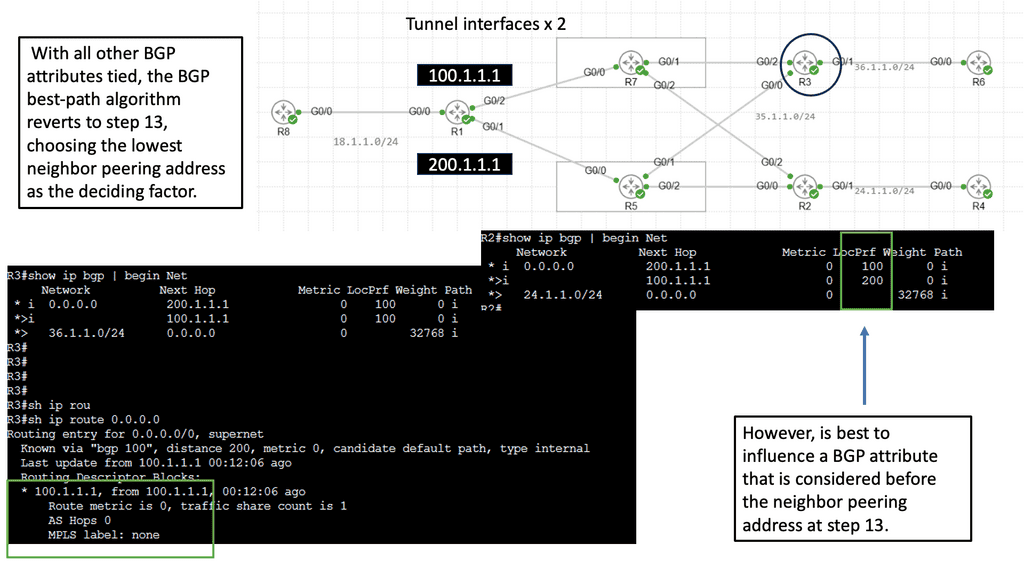

WAN Use Case: Exploring Single Hub Dual Cloud

Single hub dual cloud is an advanced variation of DMVPN that enhances network reliability and redundancy. It involves the deployment of two separate DMVPN clouds (independent tunnel overlays rather than public cloud providers), each typically carried over its own internet service provider (ISP) and both terminating on a single hub. This setup ensures that even if one cloud or ISP experiences downtime, the network remains operational, maintaining seamless connectivity.

a) Enhanced Redundancy: Using two independent cloud infrastructures, single hub dual cloud provides built-in redundancy, minimizing the risk of service disruptions. This redundancy ensures that critical applications and services remain accessible even in the event of a cloud or ISP failure.

b) Improved Performance: Utilizing multiple clouds allows for load balancing and traffic optimization, improving network performance. A single-hub dual cloud distributes network traffic across the two clouds, preventing congestion and bottlenecks.

c) Simplified Maintenance: With a single hub dual cloud, network administrators can perform maintenance tasks on one cloud while the other remains operational. This ensures minimal downtime and allows for seamless updates and upgrades.

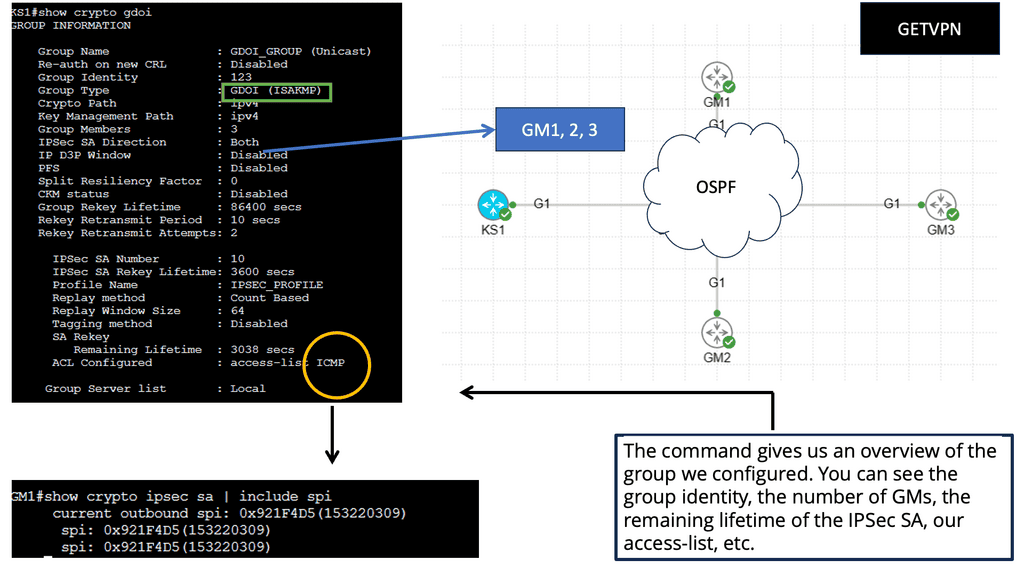

Understanding GETVPN

GETVPN (Group Encrypted Transport VPN), at its core, is a key-based encryption technology that provides secure and scalable communication within a network. Unlike traditional VPNs that rely on point-to-point tunnels, GETVPN is tunnel-less: it operates at the network layer, preserving the original IP header while encrypting and authenticating both unicast and multicast traffic. By using a common encryption key shared across the group, GETVPN ensures confidentiality, integrity, and authentication for all group members.

GETVPN offers several key benefits that make it an attractive choice for organizations. Firstly, it enables secure communication over any IP network, making it versatile and adaptable to various infrastructures. Secondly, it provides end-to-end encryption, ensuring that data remains protected from unauthorized access throughout its journey. Additionally, GETVPN offers simplified key management, reducing the administrative burden and enhancing scalability.

Implementation and Deployment

Implementing GETVPN requires careful planning and configuration. Organizations must designate a Key Server (KS) responsible for managing key distribution and group membership. Group Members (GMs) receive the encryption keys from the KS and can decrypt the multicast traffic. By following best practices and considering network topology, organizations can deploy GETVPN effectively and seamlessly.

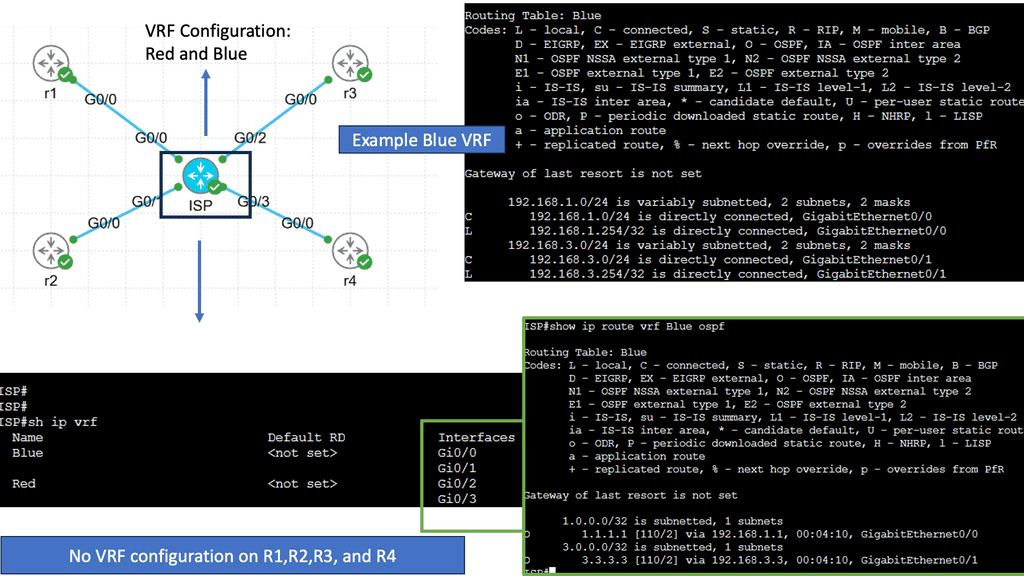

Example WAN Technology: VRFs

Understanding Virtual Routing and Forwarding

Virtual Routing and Forwarding (VRF) is best described as isolating routing and forwarding tables, creating independent routing instances within a shared network infrastructure. Each VRF functions as a separate virtual router, with its own routing table, routing protocols, and forwarding decisions. This segmentation enhances network security, scalability, and flexibility.
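The isolation can be pictured as one routing table per VRF name, with every lookup confined to a single table. The `VrfRouter` class, the VRF names, and the addresses below (drawn from private and documentation ranges) are all hypothetical; this is a sketch of the concept rather than how a router implements it.

```python
import ipaddress


class VrfRouter:
    """One independent routing table per VRF, with longest-prefix match."""

    def __init__(self):
        self._tables = {}  # VRF name -> list of (network, next_hop)

    def add_route(self, vrf, prefix, next_hop):
        network = ipaddress.ip_network(prefix)
        self._tables.setdefault(vrf, []).append((network, next_hop))

    def lookup(self, vrf, destination):
        """Return the next hop for `destination` within `vrf`, or None."""
        address = ipaddress.ip_address(destination)
        matches = [(net, hop) for net, hop in self._tables.get(vrf, [])
                   if address in net]
        if not matches:
            return None
        # The most specific (longest) matching prefix wins.
        return max(matches, key=lambda m: m[0].prefixlen)[1]


if __name__ == "__main__":
    router = VrfRouter()
    # Both customers use the same private prefix without conflict.
    router.add_route("CUSTOMER_A", "10.0.0.0/24", "192.0.2.1")
    router.add_route("CUSTOMER_B", "10.0.0.0/24", "198.51.100.1")
    print(router.lookup("CUSTOMER_A", "10.0.0.5"))
    print(router.lookup("CUSTOMER_B", "10.0.0.5"))
```

Because each lookup names its VRF, overlapping address space in different VRFs never collides, which is the property that lets one physical device serve several isolated tenants.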

While VRF brings numerous benefits, it is crucial to consider certain factors when implementing it. Firstly, careful planning and design are essential to ensure proper VRF segmentation and avoid potential overlap or conflicts. Secondly, adequate network resources must be allocated to support the increased routing and forwarding tables associated with multiple VRFs. Lastly, thorough testing and validation are necessary to guarantee the desired functionality and performance of the VRF implementation.

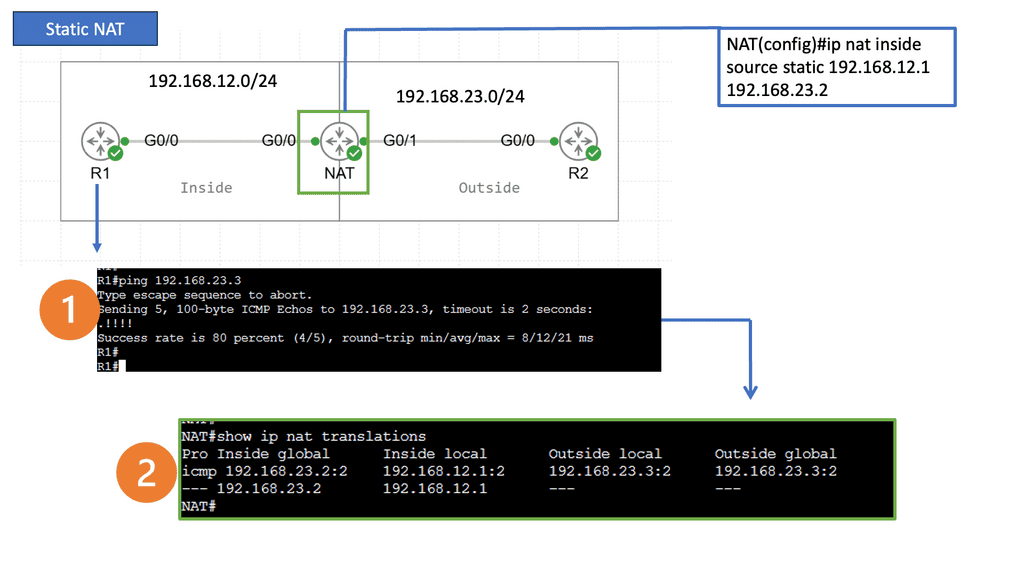

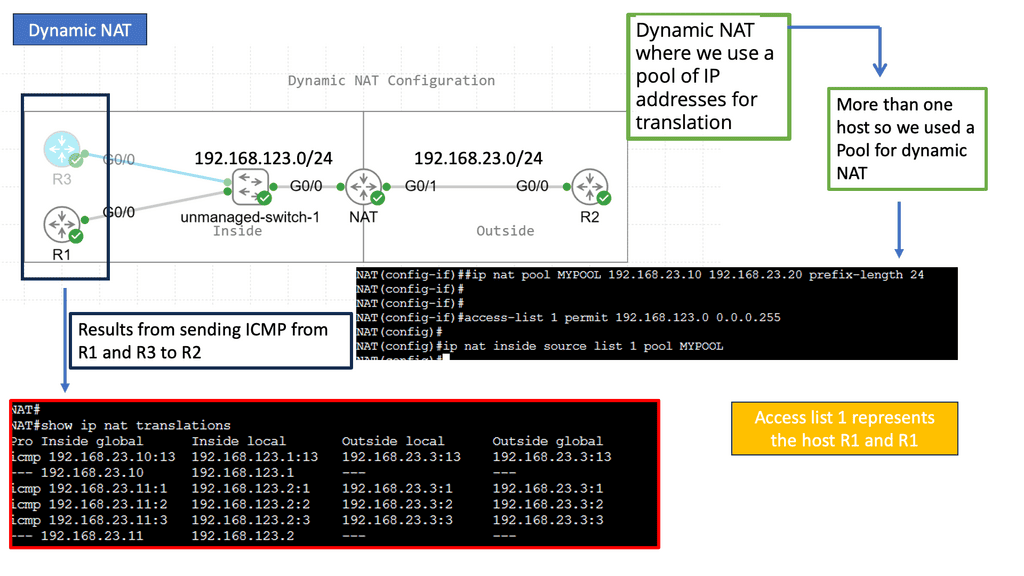

Understanding Network Address Translation

NAT bridges private and public networks, enabling private IP addresses to communicate with the Internet. It involves translating IP addresses and ports, ensuring seamless data transfer across different networks. Let’s explore the fundamental concepts behind NAT and its significance in networking.

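There are several types of NAT, each with unique characteristics and applications. The most common types are Static NAT, which maps one private address to one fixed public address; Dynamic NAT, which maps private addresses to public addresses drawn from a pool; and Port Address Translation (PAT), which lets many private addresses share a single public address by assigning each session a distinct port. Understanding these variations clarifies their specific use cases and advantages.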
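To make the address-and-port translation concrete, here is a minimal Python sketch of a PAT table. The class name `PatTable`, the starting port, and all addresses are hypothetical, and real devices also track protocol, timeouts, and port exhaustion, which this sketch ignores.

```python
import itertools


class PatTable:
    """Toy Port Address Translation: many private hosts share one public IP."""

    def __init__(self, public_ip, first_port=20000):
        self.public_ip = public_ip
        self._next_port = itertools.count(first_port)
        self._outbound = {}  # (private_ip, private_port) -> public_port
        self._inbound = {}   # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip, private_port):
        """Map a private socket to the shared public IP and a unique port."""
        key = (private_ip, private_port)
        if key not in self._outbound:
            public_port = next(self._next_port)
            self._outbound[key] = public_port
            self._inbound[public_port] = key
        return self.public_ip, self._outbound[key]

    def translate_inbound(self, public_port):
        """Map a reply arriving on a public port back to the private socket."""
        return self._inbound.get(public_port)


pat = PatTable("203.0.113.10")
first = pat.translate_outbound("192.168.1.10", 51000)
second = pat.translate_outbound("192.168.1.11", 51000)
reply_target = pat.translate_inbound(second[1])
```

Two hosts using the same private source port end up on different public ports, so replies can be returned to the right host.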

NAT offers numerous benefits for organizations and individuals alike. From conserving limited public IP addresses to enhancing network security, NAT plays a pivotal role in modern networking infrastructure. We will discuss these advantages in detail, showcasing how NAT has become integral to our connected world.

While Network Address Translation presents many advantages, it also has specific challenges. One such challenge is the potential impact on specific network protocols and applications that rely on untouched IP addresses. We will explore these considerations and discuss strategies to mitigate any possible issues that may arise.

WAN Design Considerations

Redundancy and High Availability:

Redundancy and high availability are vital considerations in WAN design to ensure uninterrupted connectivity. Implementing redundant links, multiple paths, and failover mechanisms can help mitigate the impact of network failures or outages. Redundancy also plays a crucial role in load balancing and optimizing network performance.

Diverse Connection Paths

One of the primary components of WAN redundancy is the establishment of diverse connection paths. This involves utilizing multiple carriers or network providers offering different physical transmission routes. By having diverse connection paths, businesses can reduce the risk of a complete network outage caused by a single point of failure.

Automatic Failover Mechanisms

Another crucial component is the implementation of automatic failover mechanisms. These mechanisms monitor the primary connection and instantly switch to the redundant connection if any issues or failures are detected. Automatic failover ensures minimal downtime and enables seamless transition without manual intervention.
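A failover monitor of this kind can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a vendor mechanism: the function name `run_failover` and both thresholds are hypothetical, and the thresholds are added so that a single lost probe does not cause the links to flap.

```python
def run_failover(probes, fail_threshold=3, recover_threshold=5):
    """Replay primary-link probe results and return the active link after each probe.

    probes: iterable of booleans, True when the primary link answered the probe.
    The link fails over to "backup" after `fail_threshold` consecutive failures
    and returns to "primary" after `recover_threshold` consecutive successes.
    """
    active = "primary"
    consecutive_failures = 0
    consecutive_successes = 0
    history = []
    for ok in probes:
        if ok:
            consecutive_successes += 1
            consecutive_failures = 0
        else:
            consecutive_failures += 1
            consecutive_successes = 0
        if active == "primary" and consecutive_failures >= fail_threshold:
            active = "backup"
        elif active == "backup" and consecutive_successes >= recover_threshold:
            active = "primary"
        history.append(active)
    return history


probe_log = [True, False, False, False, True, True, True, True, True]
link_history = run_failover(probe_log)
```

A lower `fail_threshold` switches over faster but reacts to transient loss; the right values depend on the probe interval and how much downtime the applications tolerate.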

Redundant Hardware and Equipment

Businesses must invest in redundant hardware and equipment to achieve adequate WAN redundancy. This includes redundant routers, switches, and other network devices. By having duplicate hardware, businesses can ensure that a failure in one device does not disrupt the entire network. Redundant hardware also facilitates faster recovery and minimizes the impact of failures.

Load Balancing and Traffic Optimization

WAN redundancy provides failover capabilities and enables load balancing and traffic optimization. Load balancing distributes network traffic across multiple connections, maximizing bandwidth utilization and preventing congestion. Traffic optimization algorithms intelligently route data through the most efficient paths, ensuring optimal performance and minimizing latency.

Example: DMVPN Single Hub Dual Cloud

Exploring the Single Hub Architecture

The single hub architecture in DMVPN involves establishing a central hub location that acts as a focal point for all site-to-site VPN connections. This hub is a central routing device, allowing seamless communication between various remote sites. By consolidating the VPN traffic at a single location, network administrators gain better control and visibility over the entire network.

In DMVPN terminology, a "cloud" is a DMVPN overlay (an mGRE tunnel network) rather than a cloud service provider. In a single hub dual cloud design, the one hub router terminates two separate DMVPN clouds, typically over different transports or service providers, and each spoke builds a tunnel into both. This gives every spoke two independent paths to the hub, which improves resilience and allows traffic to be distributed across the two clouds, although the hub itself remains a single point of failure.

Implementing DMVPN with a single hub and dual clouds brings several benefits to organizations. It keeps network management simple, reduces operational costs compared with a dual hub design, and still provides path redundancy. However, it is crucial to consider factors such as security, bandwidth requirements, and transport provider diversity when designing and implementing this architecture.

WAN Design Considerations: Ensuring Security Characteristics

A secure WAN is the foundation of a resilient and protected network environment. It shields data, applications, and resources from unauthorized access, ensuring confidentiality, integrity, and availability. By comprehending the significance of WAN security, organizations can make informed decisions to fortify their networks.

- Encryption and Data Protection

Encryption plays a pivotal role in safeguarding data transmitted across a WAN. Implementing robust encryption protocols, such as IPsec or SSL/TLS, can shield sensitive information from interception or tampering. Additionally, employing data protection mechanisms like data loss prevention (DLP) tools and access controls adds an extra layer of security.

- Access Control and User Authentication

Controlling access to the WAN is essential to prevent unauthorized entry and potential security breaches. Implementing strong user authentication mechanisms, such as two-factor authentication (2FA) or multi-factor authentication (MFA), ensures that only authorized individuals can access the network. Furthermore, incorporating granular access control policies helps restrict network access based on user roles and privileges.

- Network Segmentation and Firewall Placement

Proper network segmentation is crucial to limit the potential impact of security incidents. Dividing the network into secure segments or Virtual Local Area Networks (VLANs) helps contain breaches and restricts lateral movement. Additionally, strategically placing firewalls at entry and exit points of the WAN provides an added layer of protection by inspecting and filtering network traffic.

- Monitoring and Threat Detection

Continuous monitoring of the WAN is paramount to identify potential security threats and respond swiftly. Implementing robust intrusion detection and prevention systems (IDS/IPS) enables real-time threat detection, while network traffic analysis tools provide insights into anomalous behavior. By promptly detecting and mitigating security incidents, organizations can ensure the integrity of their WAN.

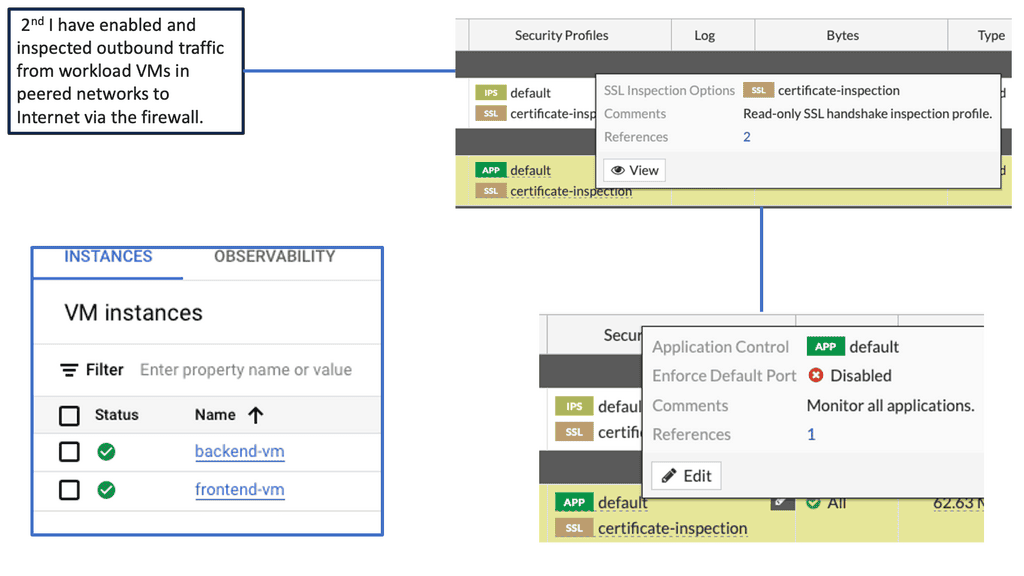

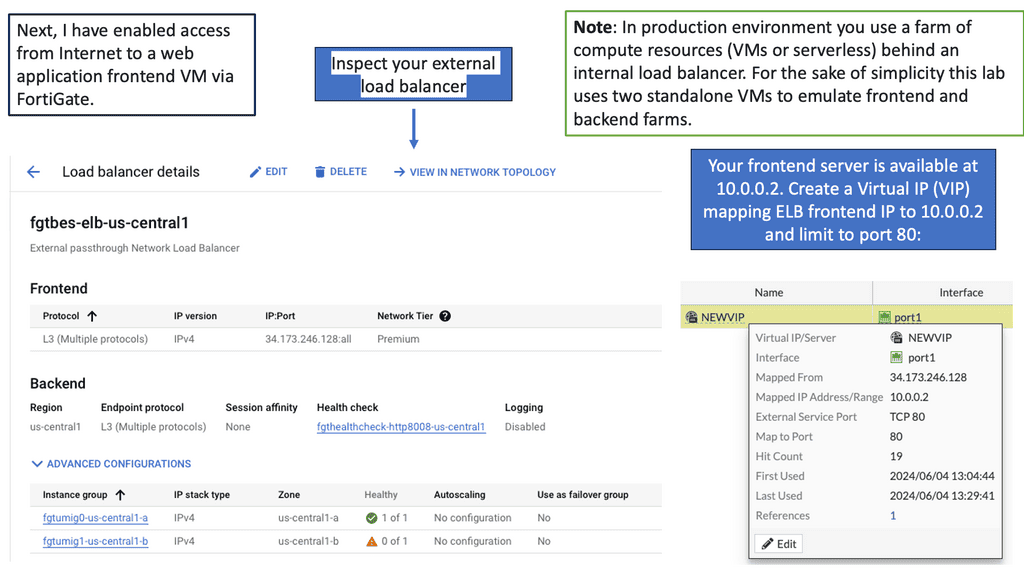

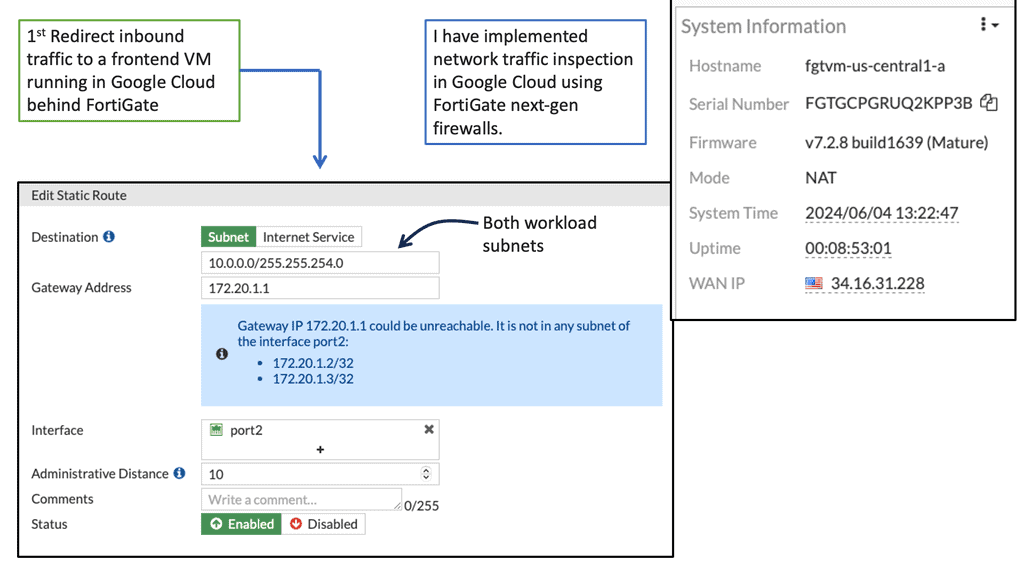

Google Cloud Security

Understanding Google Compute Resources

Google Compute Engine (GCE) is a cloud-based infrastructure service that enables businesses to deploy and run virtual machines (VMs) on Google’s infrastructure. GCE offers scalability, flexibility, and reliability, making it an ideal choice for various workloads. However, with the increasing number of cyber attacks targeting cloud infrastructure, it is crucial to implement robust security measures to protect these resources.

The Power of FortiGate

FortiGate is a next-generation firewall (NGFW) solution offered by Fortinet, a leading provider of cybersecurity solutions. It is designed to deliver advanced threat protection, high-performance inspection, and granular visibility across networks. FortiGate brings a wide range of security features to the table, including intrusion prevention, antivirus, application control, web filtering, and more.

Enhanced Threat Prevention: FortiGate provides advanced threat intelligence and real-time protection against known and emerging threats. Its advanced security features, such as sandboxing and behavior-based analysis, ensure that malicious activities are detected and prevented before they can cause damage.

High Performance: FortiGate offers high-speed inspection and low latency, ensuring that security doesn’t compromise the performance of Google Compute resources. With FortiGate, organizations can achieve optimal security without sacrificing speed or productivity.

Simplified Management: FortiGate allows centralized management of security policies and configurations, making it easier to monitor and control security across Google Compute environments. Its intuitive user interface and robust management tools simplify the task of managing security policies and responding to threats.

WAN – Quality of Service (QoS):

Different types of traffic, such as voice, video, and data, coexist in a WAN. Implementing quality of service (QoS) mechanisms allows network resources to be prioritized and allocated based on the specific requirements of each traffic type. This ensures critical applications receive the bandwidth and low latency they need to perform optimally.

Identifying QoS Requirements

Every organization has unique requirements regarding WAN QoS. It is essential to identify these requirements to tailor the QoS implementation accordingly. Key factors include application sensitivity, traffic volume, and network topology. By thoroughly analyzing these factors, organizations can determine the appropriate QoS policies and configurations that align with their needs.

Bandwidth Allocation and Traffic Prioritization

Bandwidth allocation plays a vital role in QoS implementation. Different applications have varying bandwidth requirements, and allocating bandwidth based on priority is essential. By categorizing traffic into different classes and assigning appropriate priorities, organizations can ensure that critical applications receive sufficient bandwidth while non-essential traffic is regulated to prevent congestion.

QoS Mechanisms for Latency and Packet Loss

Latency and packet loss can significantly impact application performance in WAN environments. To mitigate these issues, QoS mechanisms such as traffic shaping, traffic policing, and queuing techniques come into play. Traffic shaping buffers traffic that exceeds a predefined rate and sends it later, smoothing bursts at the cost of added delay. Traffic policing, on the other hand, enforces the rate by dropping or re-marking excess traffic rather than queuing it. Proper queuing techniques ensure that real-time and mission-critical traffic is prioritized, minimizing latency and packet loss.
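The difference between policing and shaping can be sketched with a token bucket, a common way to model a rate limit. The following Python is a simplified illustration: the class name `TokenBucket` and the rate and burst values are hypothetical, timestamps are passed in explicitly and assumed to be non-decreasing, and `shape_delay` only computes a waiting time without queuing anything.

```python
class TokenBucket:
    """Toy token bucket used to contrast policing with shaping."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate = rate_bps / 8.0        # refill rate in bytes per second
        self.capacity = float(burst_bytes)
        self.tokens = float(burst_bytes)  # bucket starts full
        self.last = 0.0                   # time of the last refill, in seconds

    def _refill(self, now):
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now

    def police(self, now, size):
        """Policing: forward the packet if it conforms, otherwise drop it."""
        self._refill(now)
        if self.tokens >= size:
            self.tokens -= size
            return True
        return False

    def shape_delay(self, now, size):
        """Shaping: seconds the packet would wait in a queue until it conforms."""
        self._refill(now)
        deficit = size - self.tokens
        return max(0.0, deficit / self.rate)


bucket = TokenBucket(rate_bps=8000, burst_bytes=1500)  # 1000 bytes per second
first_packet = bucket.police(0.0, 1500)    # conforms, uses the whole burst
second_packet = bucket.police(0.0, 1500)   # exceeds the rate, would be dropped
wait_seconds = bucket.shape_delay(0.0, 1500)
later_packet = bucket.police(1.5, 1500)    # bucket has refilled by now
```

A policer would discard the second packet, while a shaper would hold it for `wait_seconds` and then send it, which is why shaping trades loss for delay.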

Network Monitoring and Optimization

Implementing QoS is not a one-time task; it requires continuous monitoring and optimization. Network monitoring tools provide valuable insights into traffic patterns, performance bottlenecks, and QoS effectiveness. With this data, organizations can fine-tune their QoS configurations, adjust bandwidth allocation, and optimize traffic management to meet evolving requirements.

WAN Design Considerations

Defining the WAN edge

Wide Area Network (WAN) edge is a term used to describe the outermost part of a wide area network. It is the point at which the network connects to the public Internet or to private networks, such as a local area network (LAN). The WAN edge typically comprises customer premises equipment (CPE) such as routers, firewalls, and other types of hardware. This hardware connects to other networks, such as the Internet, and provides a secure connection.

The WAN edge also includes software such as network management systems, which help maintain and monitor the network. Common network solutions at the WAN edge are SD-WAN and DMVPN. This post addresses an SD-WAN design guide; DMVPN and its phases, including DMVPN Phase 3, are covered in separate posts.

An Enterprise WAN edge consists of several functional blocks, including Enterprise WAN Edge Distribution and Aggregation. The WAN Edge Distribution provides connectivity to the core network and acts as an integration point for any edge service, such as IPS and application optimization. The WAN Edge Aggregation is a line of defense that performs aggregation and VPN termination. The following post focuses on integrating IPS into the WAN Edge Distribution functional block.

Back to Basics with the WAN Edge

Concept of the wide-area network (WAN)

A WAN connects your offices, data centers, applications, and storage. It is called a wide-area network because it extends beyond a single building or large campus to connect numerous locations across a geographic area. Because a WAN spans long distances, its data transmission speeds are typically lower than those of a local network. The WAN also connects you to the outside world, so integrated security is an integral part of the infrastructure. You could say the WAN is the first line of defense.

Topologies of WAN (Wide Area Network)

- Firstly, we have a point-to-point topology. A point-to-point topology utilizes a point-to-point circuit between two endpoints.

- We also have a hub-and-spoke topology.

- Full mesh topology.

- Finally, a dual-homed topology.
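One practical way to compare these topologies is to count the links each one needs as the number of sites grows. The short Python sketch below does this for the point-to-point, hub-and-spoke, and full mesh cases; the function name `links_required` is hypothetical, and dual-homed designs are left out because the link count depends on how many upstream connections each site has.

```python
def links_required(sites, topology):
    """Return the number of WAN links needed to connect `sites` locations."""
    if sites < 2:
        raise ValueError("at least two sites are needed")
    if topology == "point-to-point":
        if sites != 2:
            raise ValueError("a point-to-point circuit joins exactly two sites")
        return 1
    if topology == "hub-and-spoke":
        return sites - 1                 # one link from each spoke to the hub
    if topology == "full-mesh":
        return sites * (sites - 1) // 2  # one link for every pair of sites
    raise ValueError(f"unknown topology: {topology}")


hub_links = links_required(10, "hub-and-spoke")
mesh_links = links_required(10, "full-mesh")
```

For ten sites, hub-and-spoke needs 9 links while a full mesh needs 45, which is why full mesh is usually reserved for a small number of critical sites.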

Concept of SD-WAN

SD-WAN (Software-Defined Wide Area Network) technology enables businesses to create a secure, reliable, and cost-effective WAN connection. SD-WAN can provide enterprises with various benefits, including increased security, improved performance, and cost savings. SD-WAN builds secure tunnels over the public internet, reducing the need for expensive networking hardware and services. Instead, SD-WAN relies on software to direct traffic flows and establish secure site connections. This allows businesses to optimize network traffic and save money on their infrastructure.

SD-WAN Design Guide

An SD-WAN design guide is a practice that focuses on designing and implementing software-defined wide-area network (SD-WAN) solutions. SD-WAN Design requires a thorough understanding of the underlying network architecture, traffic patterns, and applications. It also requires an understanding of how the different components of the network interact and how that interaction affects application performance.

To successfully design an SD-WAN solution, an organization must first determine the business goals and objectives for the network. This will help define the network’s requirements, such as bandwidth, latency, reliability, and security. The next step is determining the network topology: the network structure and how the components connect.

Once the topology is determined, the organization must decide on the hardware and software components to use in the network. This includes selecting suitable routers, switches, firewalls, and SD-WAN controllers. The hardware must also be configured correctly to ensure optimal performance.

Once the components are selected and configured, the organization can design the SD-WAN solution. This involves creating virtual overlays, which are the connections between the different parts of the network. The organization must also develop policies and rules to govern the network traffic.

Key WAN Design Considerations

- Dynamic multi-pathing. Being able to load-balance traffic over multiple WAN links isn’t new.

- Policy. There is a broad movement to implement a policy-based approach to all aspects of IT, including networking.

- Visibility.

- Integration. The ability to integrate security services such as the IPS.

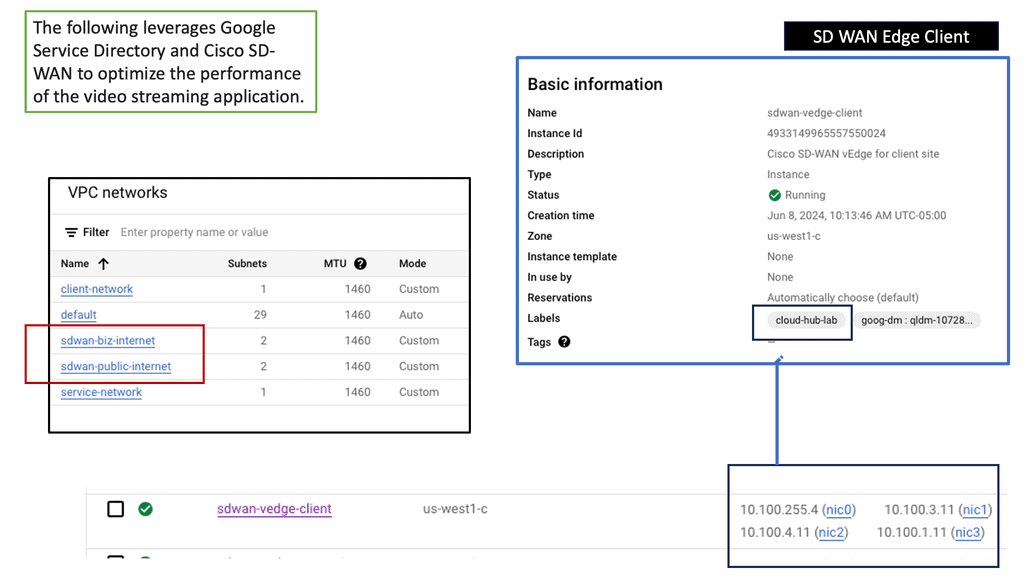

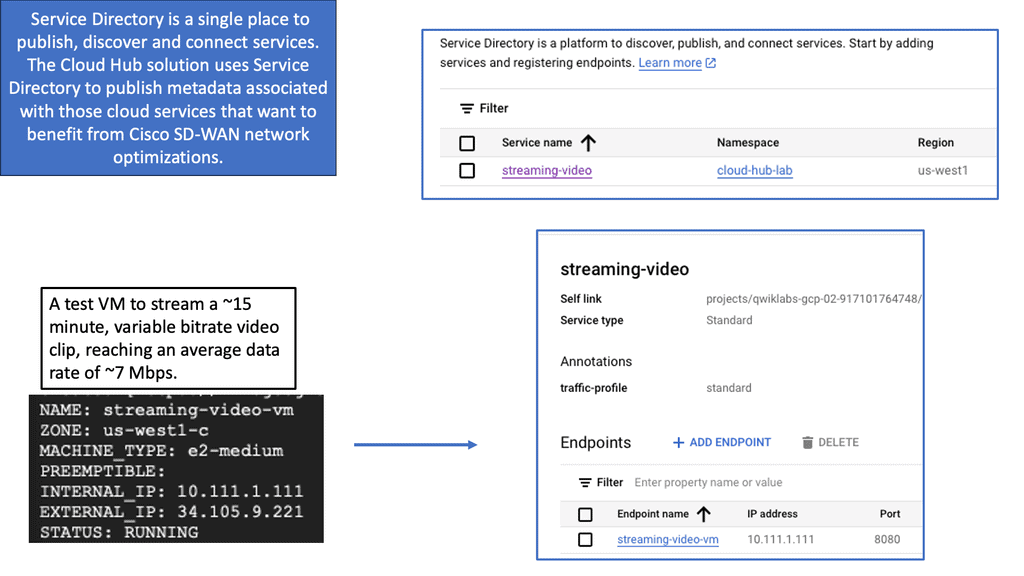

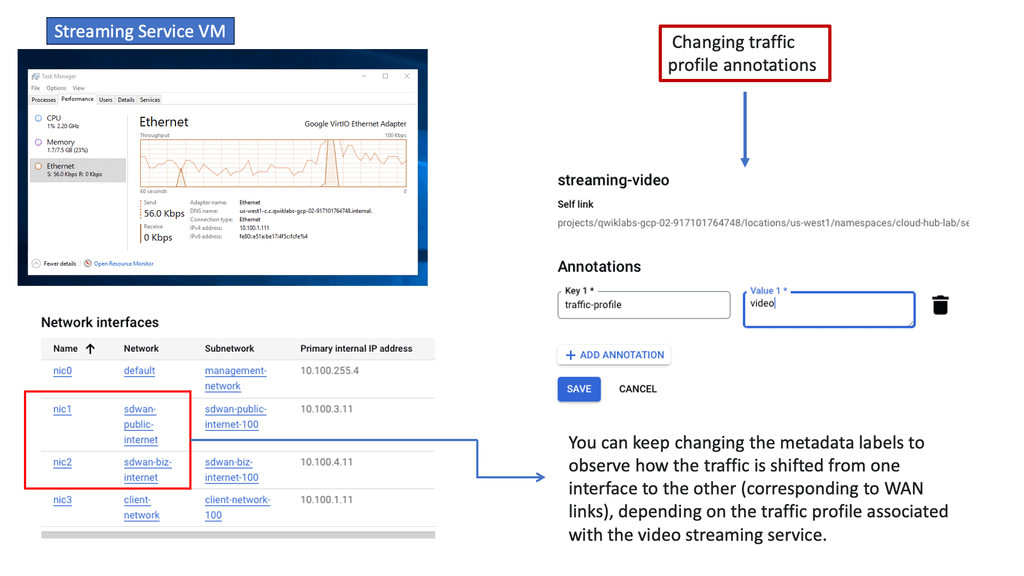

Google SD-WAN Cloud Hub

SD-WAN Cloud Hub takes the potential of SD-WAN and Google Cloud to the next level. By combining the agility and intelligence of SD-WAN with the scalability and reliability of Google Cloud, organizations can achieve optimized connectivity and seamless integration with cloud-based services.

WAN Security – Intrusion Prevention System

An IPS uses signature-based detection, anomaly-based detection, and protocol analysis to detect malicious activities. Signature-based detection involves comparing the network traffic against a known list of malicious activities. In contrast, anomaly-based detection identifies activities that deviate from the expected behavior of the network. Finally, protocol analysis detects malicious activities by analyzing the network protocol and the packets exchanged.
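The two detection styles can be contrasted with a deliberately simple Python sketch. Nothing here reflects a real IPS engine: the signature names and byte patterns, the function names, the baseline figures, and the three-standard-deviation threshold are all hypothetical and chosen only for illustration.

```python
import statistics

# Hypothetical signatures: name -> byte pattern treated as known-bad.
SIGNATURES = {
    "sql-injection": b"' OR 1=1",
    "path-traversal": b"../../",
}


def signature_match(payload):
    """Signature-based detection: report every known pattern found in the payload."""
    return [name for name, pattern in SIGNATURES.items() if pattern in payload]


def is_anomalous(baseline, observed, k=3.0):
    """Anomaly-based detection: flag values far above the learned baseline."""
    mean = statistics.mean(baseline)
    spread = statistics.pstdev(baseline)
    return observed > mean + k * spread


hits = signature_match(b"GET /?id=1' OR 1=1--")
clean = signature_match(b"GET /index.html")

requests_per_minute = [100, 110, 90, 105, 95]  # hypothetical learned baseline
spike = is_anomalous(requests_per_minute, 500)
normal = is_anomalous(requests_per_minute, 115)
```

Signature matching only catches what is already on the list, whereas the anomaly check can flag unfamiliar behavior but depends on how representative the baseline is.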

An IPS includes network access control, virtual patching, and application control. Network access control restricts access to the network by blocking malicious connections and allowing only trusted relationships. Virtual patching detects any vulnerability in the system and provides a temporary fix until the patch is released. Finally, application control restricts the applications users can access to ensure that only authorized applications are used.

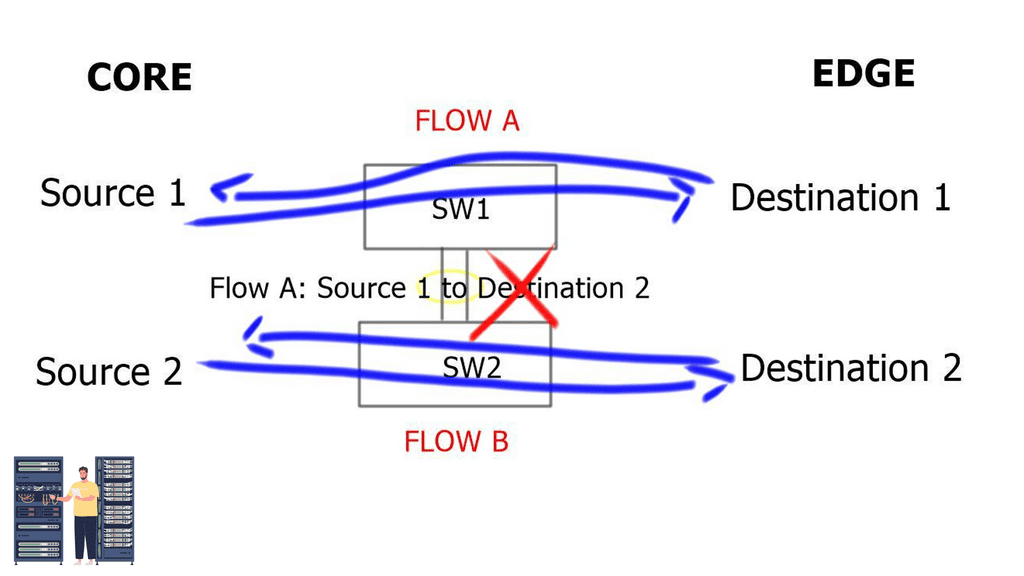

The following design guide illustrates EtherChannel Load Balancing (ECLB) for Intrusion Prevention System (IPS) high availability and traffic symmetry through Interior Gateway Protocol (IGP) metric manipulation. Symmetric traffic ensures the IPS sees both directions of every flow. With asymmetrical data paths, the IPS can lose visibility into traffic flows.

IPS key points

- Two VLANs on each switch logically insert the IPS into the data path. VLANs 9 and 11 are the outside VLANs that face the Wide Area Network (WAN), and VLANs 10 and 12 are the inside VLANs that face the protected core.

- VLAN pairing on each IPS bridges traffic back to the switch across its VLANs.

- EtherChannel Load Balancing (ECLB) splits flows over different physical paths to and from the Intrusion Prevention System (IPS). Load balancing per flow is recommended rather than per packet.

- ECLB performs a hash on the flow’s source and destination IP address to determine what physical port a flow should take. It’s a form of load splitting as opposed to load balancing.

- State is not shared between IPS sensors. If a sensor goes down, its TCP flows are reset and forced through a different IPS appliance.

- Layer 3 routed point-to-point links are implemented between the switches and the ASR edge routers. Interior Gateway Protocol (IGP) path costs are manipulated to influence traffic to and from each ASR, which ensures traffic symmetry.

- With OSPF deployed as the IGP, costs are manipulated per interface to influence traffic flow. OSPF calculates cost in the outbound direction. With EIGRP selected as the IGP, paths are chosen based on minimum path bandwidth and cumulative delay.

- All interfaces between WAN distribution and WAN edge, including the outside VLANs (9 and 11), are placed in a Virtual Routing and Forwarding (VRF) instance. VRFs force all traffic between the WAN edge and the internal core via an IPS device.
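The IGP metrics mentioned in the key points above can be worked through numerically. The Python sketch below assumes Cisco defaults that the source does not state: an OSPF reference bandwidth of 100 Mbps, and the classic EIGRP composite metric with default K values, where only minimum bandwidth and cumulative delay contribute. The function names are hypothetical.

```python
def ospf_cost(interface_bw_kbps, reference_bw_kbps=100_000):
    """OSPF interface cost: reference bandwidth / interface bandwidth, minimum 1."""
    return max(1, reference_bw_kbps // interface_bw_kbps)


def eigrp_classic_metric(min_bw_kbps, total_delay_usec):
    """Classic EIGRP metric with default K values.

    metric = 256 * (10^7 / minimum path bandwidth in kbps
                    + cumulative delay in tens of microseconds)
    """
    return 256 * (10_000_000 // min_bw_kbps + total_delay_usec // 10)


t1_cost = ospf_cost(1544)                 # T1 serial link
fast_ethernet_cost = ospf_cost(100_000)   # 100 Mbps link
gigabit_cost = ospf_cost(1_000_000)       # clamps to 1 with the default reference

t1_metric = eigrp_classic_metric(1544, 20_000)
t1_plus_fe_metric = eigrp_classic_metric(1544, 20_100)
```

Raising the OSPF cost on one uplink, or the configured delay on one EIGRP interface, makes that path less preferred, which is the lever used to steer traffic through a chosen ASR in both directions.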

WAN Edge Considerations with the IPS

A recommended design centralizes the IPS in a hub-and-spoke topology where all branch office traffic is forced through the WAN edge. In addition, a distributed IPS model should be used if local branch sites use split tunneling for local internet access.

The IPS should receive unmodified and clear text traffic. To ensure this, integrate the IPS inside the WAN edge after any VPN termination or application optimization techniques. When using route manipulation to provide traffic symmetry, a single path (via one ASR) should have sufficient bandwidth to accommodate the total traffic capacity of both links.

ECLB performs hashing on the source and destination address, not the flow’s bandwidth. If there are high traffic volumes between a single source and destination, all traffic passes through a single IPS.
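The hashing behavior can be sketched in Python. Real switches use platform-specific hash algorithms, so the XOR-and-modulo function below is only an assumption chosen because it is simple and symmetric; the function name `select_port` and the addresses are hypothetical.

```python
import ipaddress


def select_port(src_ip, dst_ip, num_ports):
    """Pick an EtherChannel member port from the source and destination addresses.

    XOR is symmetric, so both directions of a flow land on the same port,
    and therefore on the same IPS sensor.
    """
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    return (src ^ dst) % num_ports


forward = select_port("10.1.1.10", "10.2.2.20", 2)
reverse = select_port("10.2.2.20", "10.1.1.10", 2)

# Every packet between the same pair of hosts maps to one port,
# regardless of how much bandwidth that pair consumes.
ports_used = {select_port("10.1.1.10", "10.2.2.20", 2) for _ in range(1000)}
```

Because the hash ignores traffic volume, one heavy source and destination pair stays pinned to a single port, which matches the caveat above.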

Closing Points: WAN Design Considerations

Before diving into the technical details, it’s vital to understand the specific requirements of your network. Consider the number of users, the types of applications they’ll be accessing, and the amount of data transfer involved. Different applications, such as VoIP, video conferencing, and data-heavy software, require varying levels of bandwidth and latency. By mapping out these needs, you can tailor your WAN design to provide optimal performance.

The technology you choose to implement your WAN plays a significant role in its efficiency. Traditional options like MPLS (Multiprotocol Label Switching) offer reliability and quality of service, but newer technologies such as SD-WAN (Software-Defined Wide Area Network) provide greater flexibility and cost savings. SD-WAN allows for centralized control, making it easier to manage traffic and adjust to changing demands. Evaluate the pros and cons of each option to determine the best fit for your organization.

Security is a paramount concern in WAN design. With data traversing various networks, implementing robust security measures is essential. Consider integrating features like encryption, firewalls, and intrusion detection systems. Additionally, adopt a zero-trust approach by authenticating every device and user accessing the network. Regularly update your security protocols to defend against evolving threats.

Optimizing bandwidth and managing latency are critical for ensuring smooth network performance. Implement traffic prioritization to ensure that mission-critical applications receive the necessary resources. Consider employing WAN optimization techniques, such as data compression and caching, to enhance speed and reduce latency. Regularly monitor network performance to identify and rectify any bottlenecks.

Summary: WAN Design Considerations

In today’s interconnected world, a well-designed Wide Area Network (WAN) is essential for businesses to ensure seamless communication, data transfer, and collaboration across multiple locations. Building an efficient WAN involves considering various factors that impact network performance, security, scalability, and cost-effectiveness. In this blog post, we delved into the critical considerations for WAN design, providing insights and guidance for constructing a robust network infrastructure.

Bandwidth Requirements

When designing a WAN, understanding the bandwidth requirements is crucial. Analyzing the volume of data traffic, the types of applications being used, and the number of users accessing the network are essential factors to consider. Organizations can ensure optimal network performance and prevent potential bottlenecks by accurately assessing bandwidth needs.

Network Topology

Choosing the correct network topology is another critical aspect of WAN design. Whether it’s a star, mesh, ring, or hybrid topology, each has its advantages and disadvantages. Factors such as scalability, redundancy, and ease of management must be considered to determine the most suitable topology for the organization’s specific requirements.

Security Measures

Securing the WAN infrastructure is paramount to protect sensitive data and prevent unauthorized access. Implementing robust encryption protocols, firewalls, intrusion detection systems, and virtual private networks (VPNs) is vital. Additionally, regular security audits, access controls, and employee training on security best practices are essential to maintain a secure WAN environment.

Quality of Service (QoS)

Maintaining consistent and reliable network performance is crucial for organizations relying on real-time applications such as VoIP, video conferencing, or cloud-based services. Implementing Quality of Service (QoS) mechanisms enables prioritization of critical traffic, ensuring a smooth and uninterrupted user experience. Properly configuring QoS policies helps allocate bandwidth effectively and manage network congestion.

Conclusion:

Designing a robust WAN requires a comprehensive understanding of an organization’s unique requirements, considering factors such as bandwidth requirements, network topology, security measures, and Quality of Service (QoS). By carefully evaluating these considerations, organizations can build a resilient and high-performing WAN infrastructure that supports their business objectives and facilitates seamless communication and collaboration across multiple locations.
