Full Proxy

In the vast realm of computer networks, the concept of full proxy stands tall as a powerful tool that enhances security and optimizes performance. Understanding its intricacies and potential benefits can empower network administrators and users alike. In this blog post, we will delve into the world of full proxy, exploring its key features, advantages, and real-world applications.

Full proxy is a network architecture approach that involves intercepting and processing all network traffic between clients and servers. Unlike other methods that only handle specific protocols or applications, full proxy examines and analyzes every packet passing through, regardless of the protocol or application used. This comprehensive inspection allows for enhanced security measures and advanced traffic management capabilities.

Enhanced Security: By inspecting each packet, full proxy enables deep content inspection, allowing for the detection and prevention of various threats, such as malware, viruses, and intrusion attempts. It acts as a robust barrier safeguarding the network from potential vulnerabilities.

Advanced Traffic Management: Full proxy provides granular control over network traffic, allowing administrators to prioritize, shape, and optimize data flows. This capability enhances network performance by ensuring critical applications receive the necessary bandwidth while mitigating bottlenecks and congestion.

Application Layer Filtering: Full proxy possesses the ability to filter traffic at the application layer, enabling fine-grained control over the types of content that can pass through the network. This feature is particularly useful in environments where specific protocols or applications need to be regulated or restricted.

Enterprise Networks: Full proxy finds extensive use in large-scale enterprise networks, where security and performance are paramount. It enables organizations to establish robust defenses against cyber threats while optimizing the flow of data across their infrastructures.

Web Filtering and Content Control: Educational institutions and public networks often leverage full proxy solutions to implement web filtering and content control measures. By examining the content of web pages and applications, full proxy allows administrators to enforce policies and ensure compliance with acceptable usage guidelines.

Full proxy represents a powerful network architecture approach that offers enhanced security measures, advanced traffic management capabilities, and application layer filtering. Its real-world applications span across enterprise networks, educational institutions, and public environments. Embracing full proxy can empower organizations and individuals to establish resilient network infrastructures while fostering a safer and more efficient digital environment.

Matt Conran

Highlights: Full Proxy

Understanding Full Proxy

Full Proxy refers to a web architecture that utilizes a proxy server to handle and process all client requests. Unlike traditional architectures where the client directly communicates with the server, Full Proxy acts as an intermediary, intercepting and forwarding requests on behalf of the client. This approach provides several advantages, including enhanced security, improved performance, and better control over web traffic.

At its core, a full proxy server acts as an intermediary between a user’s device and the internet. Unlike a simple proxy, which merely forwards requests, a full proxy server fully terminates and re-establishes connections on behalf of both the client and the server. This complete control over the communication process allows for enhanced security, better performance, and more robust content filtering.

Full Proxy Key Points:

a: – Enhanced Security: One of the primary reasons for the growing popularity of Full Proxy is its ability to bolster security measures. By acting as a gatekeeper between the client and the server, Full Proxy can inspect and filter incoming requests, effectively mitigating potential threats such as DDoS attacks, SQL injections, and cross-site scripting. Furthermore, Full Proxy can enforce strict authentication and authorization protocols, ensuring that only legitimate traffic reaches the server.

b: – Performance: Another significant advantage of Full Proxy is its impact on performance. With Full Proxy architecture, the proxy server can cache frequently requested resources, reducing the load on the server and significantly improving response times. Additionally, Full Proxy can employ compression techniques, minimizing the amount of data transmitted between the client and server, resulting in faster page loads and a smoother user experience.

c: – Control and Load Balancing: Full Proxy also offers granular control over web traffic. By intelligently routing requests, it allows for load balancing across multiple servers, ensuring optimal resource utilization and preventing bottlenecks. Additionally, Full Proxy can prioritize certain types of traffic, allocate bandwidth, and implement traffic shaping mechanisms, enabling administrators to manage network resources effectively.

d: – Comprehensive Content Filtering: Full proxies can enforce strict content filtering policies, making them ideal for organizations that need to regulate internet usage and block access to inappropriate or harmful content.

**Full Proxy Mode**

Full proxy mode describes a proxy server that acts as an intermediary between a user and a destination server. The proxy acts as a gateway, handling all requests and responses on behalf of the user. Full proxy mode aims to provide added security, privacy, and performance by relaying traffic between two or more locations.

In full proxy mode, the proxy server takes on the client role, initiating requests and receiving responses from the destination server. All requests are made on behalf of the user, and the proxy server handles the entire process and provides the user with the response. This provides the user with an added layer of security, as the proxy server can authenticate the user before allowing them access to the destination server.

**Increase in Privacy**

The full proxy mode also increases privacy, as the proxy server is the only point of contact between the user and the destination server. All requests sent from the user are relayed through the proxy server, ensuring that the user’s identity remains hidden. Additionally, the full proxy mode can improve performance by caching commonly requested content, reducing lag times, and improving the user experience.

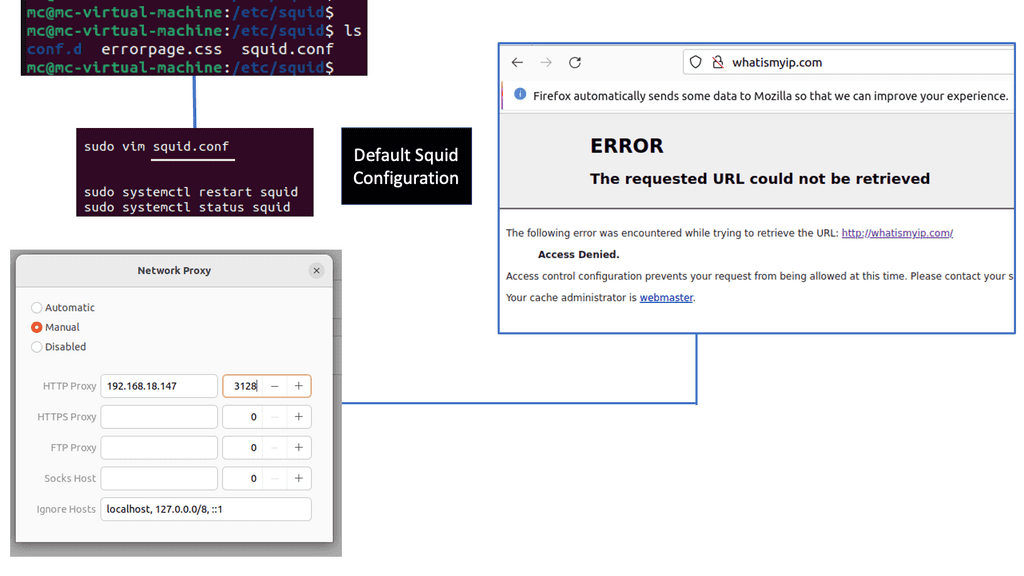

Example Caching Proxy: What is Squid Proxy?

Squid Proxy is a widely-used caching proxy server that acts as an intermediary between clients and servers. It acts as a buffer, storing frequently accessed web pages and files locally, thereby reducing bandwidth usage and improving response times. Whether you’re an individual user or an organization, Squid Proxy can be a game-changer in optimizing your internet connectivity.

Features and Benefits of Squid Proxy

Squid Proxy offers a plethora of features that make it a valuable tool in the world of networking. From caching web content to controlling access and providing security, it offers a comprehensive package. Some key benefits of using Squid Proxy include:

1. Bandwidth Optimization: By caching frequently accessed web pages, Squid Proxy reduces the need to fetch content from the source server, resulting in significant bandwidth savings.

2. Faster Browsing Experience: With cached content readily available, users experience faster page load times and smoother browsing sessions.

3. Access Control: Squid Proxy allows administrators to implement granular access control policies, restricting or allowing access to specific websites or content based on customizable rules.

4. Security: Squid Proxy acts as a shield between clients and servers, providing an additional layer of security by filtering out malicious content, blocking potentially harmful websites, and protecting against various web-based attacks.
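The caching behavior in the list above can be illustrated with a small Python sketch. This is not how Squid is implemented; it is a minimal model, assuming a hypothetical `fetch` callable that stands in for the origin server and a fixed time-to-live per entry.

```python
import time
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Serve repeated requests from a local cache until the entry expires."""

    def __init__(self, fetch: Callable[[str], bytes], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch      # hypothetical origin fetcher (assumption)
        self._ttl = ttl_seconds
        self._clock = clock      # injectable clock so expiry can be tested
        self._store: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and entry[0] > now:
            # Fresh copy in the cache: no request goes to the origin.
            self.hits += 1
            return entry[1]
        # Missing or expired: fetch from the origin and remember the result.
        self.misses += 1
        body = self._fetch(url)
        self._store[url] = (now + self._ttl, body)
        return body
```

Every cache hit is a request the origin never sees, which is where the bandwidth savings and faster page loads come from.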

The Benefits of Full Proxy

– Enhanced Security: By acting as a middleman, Full Proxy provides an additional layer of security by inspecting and filtering incoming and outgoing traffic. This helps protect users from malicious attacks and unauthorized access to sensitive information.

– Performance Optimization: Full Proxy optimizes web performance through techniques such as caching and compression. Storing frequently accessed content and reducing the transmitted data size significantly improves response times and reduces bandwidth consumption.

– Content Filtering and Control: With Full Proxy, administrators can enforce content filtering policies, restricting access to certain websites or types of content. This feature is handy in educational institutions, corporate environments, or any setting where internet usage needs to be regulated.

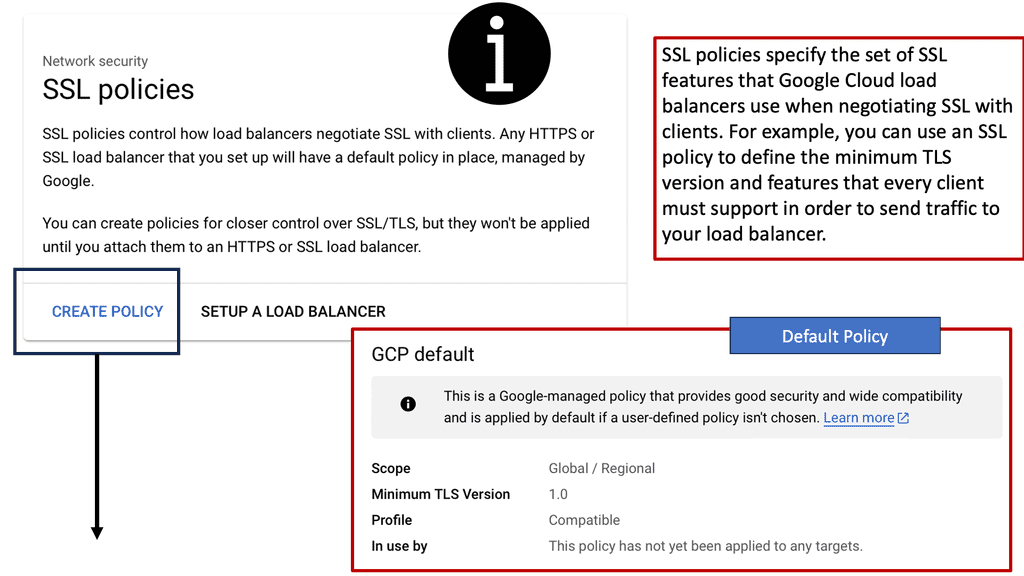

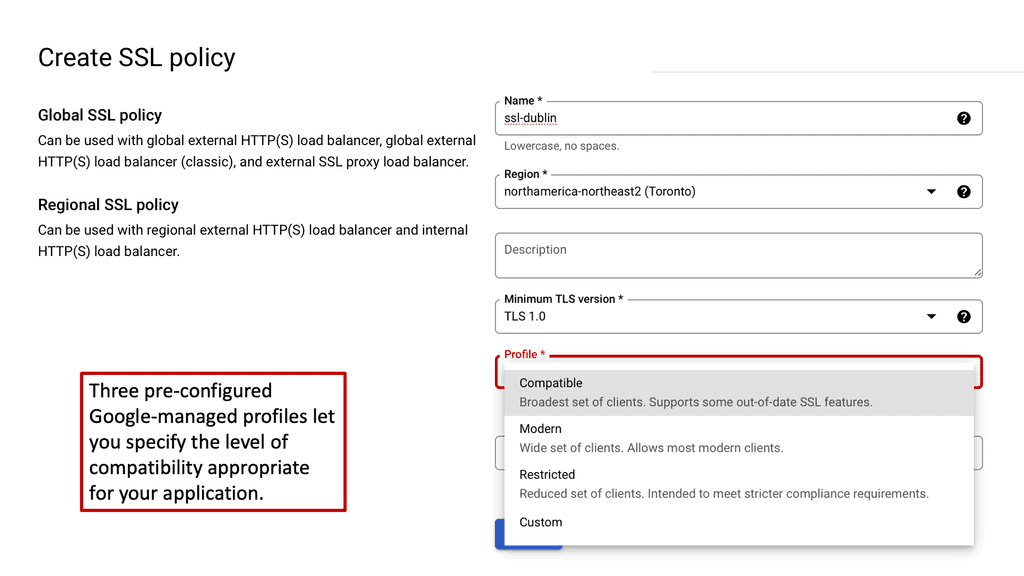

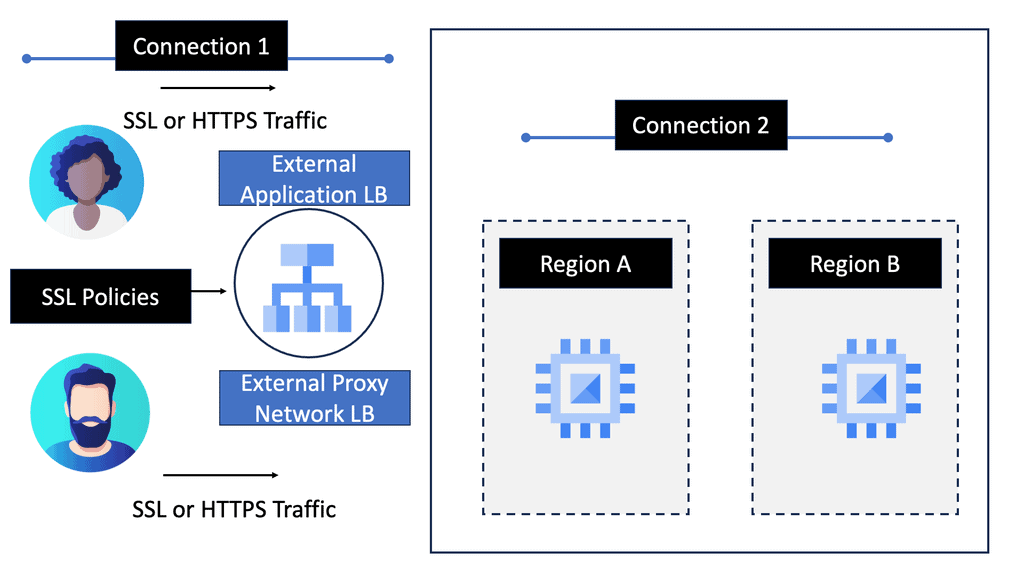

Example of SSL Policies with Google Cloud

**The Importance of SSL Policies**

Implementing robust SSL policies is crucial for several reasons. Firstly, they help in maintaining data integrity by preventing data from being tampered with during transmission. Secondly, SSL policies ensure data confidentiality by encrypting information, making it accessible only to the intended recipient. Lastly, they enhance user trust; customers are more likely to engage with websites and applications that visibly prioritize their security.

**Implementing SSL Policies on Google Cloud**

Google Cloud offers comprehensive tools and features for managing SSL policies. By leveraging Google Cloud Load Balancing, businesses can configure SSL policies to enforce specific security standards. This includes setting a minimum TLS version and selecting a profile that determines which cipher suites are allowed. Google Cloud’s integration with Cloud Armor provides additional layers of security, allowing businesses to create a robust defense against potential threats.
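To make the idea of an SSL policy concrete, here is a rough analogy using Python's standard `ssl` module rather than Google Cloud itself. The function name and the default cipher string are assumptions chosen for illustration; the sketch only shows what "enforce a minimum TLS version and restrict cipher suites" means on a TLS-terminating endpoint.

```python
import ssl


def build_server_context(min_version: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2,
                         ciphers: str = "ECDHE+AESGCM") -> ssl.SSLContext:
    """Return a server-side TLS context with a version floor and a cipher filter."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse handshakes that negotiate anything older than min_version.
    ctx.minimum_version = min_version
    # Restrict TLS 1.2 and earlier cipher suites (OpenSSL cipher-list syntax).
    ctx.set_ciphers(ciphers)
    return ctx
```

A real deployment would also load a certificate and key into the context before accepting connections.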

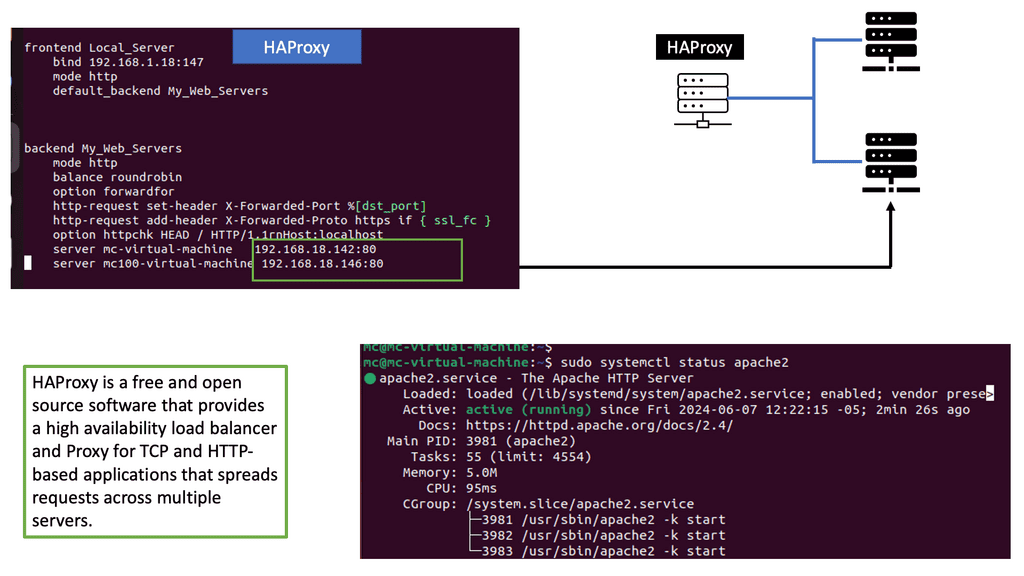

Example Reverse Proxy: Load Balancing with HAProxy

Understanding HAProxy

HAProxy, which stands for High Availability Proxy, is an open-source software that serves as a load balancer and reverse proxy. It acts as an intermediary between clients and servers, distributing incoming requests across multiple backend servers to optimize performance and ensure fault tolerance.

One of the primary features of HAProxy is its robust load balancing capabilities. It intelligently distributes traffic among multiple backend servers based on predefined algorithms such as round-robin, least connections, or source IP hashing. This allows for efficient utilization of resources and prevents any single server from becoming overwhelmed.
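The three algorithms named above can be sketched in a few lines of Python. This is an illustrative model, not HAProxy's implementation; the class and function names are made up for the example.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Pick the server with the fewest active connections."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def pick(self) -> str:
        # Ties go to the server listed first.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


def source_hash(servers: List[str], client_ip: str) -> str:
    """Map a client IP to the same server on every call."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Round-robin ignores server state, least connections reacts to it, and source hashing trades even distribution for stickiness to one backend per client.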

HAProxy goes beyond simple load balancing by providing advanced traffic management features. It supports session persistence, SSL termination, and content switching, enabling organizations to handle complex scenarios seamlessly. With HAProxy, you can prioritize certain types of traffic, apply access controls, and even perform content-based routing.

Full Proxy – Improving TCP Performance

**Enhancing TCP Performance with Full Proxy**

One of the significant advantages of using a full proxy is its ability to improve TCP performance. TCP, or Transmission Control Protocol, is responsible for ensuring reliable data transmission across networks.

Full proxies can optimize TCP performance by managing the connection lifecycle, reducing latency, and improving throughput. They achieve this by implementing techniques like TCP multiplexing, where multiple TCP connections are consolidated into a single connection to reduce overhead and improve efficiency.

Additionally, full proxies can adjust TCP window sizes, manage congestion, and provide dynamic load balancing, all of which contribute to a smoother and more efficient network experience.

**The Dynamics of TCP Performance**

Transmission Control Protocol (TCP) is fundamental to internet communications, ensuring data packets are delivered accurately and reliably. However, TCP’s performance can often be hindered by latency, packet loss, and congestion. This is where a full proxy comes into play, offering a solution to optimize TCP performance by managing connections more efficiently and applying advanced traffic management techniques.

**Optimizing TCP with Full Proxy: Techniques and Benefits**

Full proxy improves TCP performance through several impactful techniques:

1. **Connection Multiplexing:** By consolidating multiple client requests into fewer server connections, full proxy reduces server load and optimizes resource utilization, leading to faster response times.

2. **TCP Offloading:** Full proxy can offload TCP processing tasks from the server, freeing up server resources for other critical tasks and improving overall performance.

3. **Traffic Shaping and Prioritization:** By analyzing and prioritizing traffic, full proxy ensures that critical data is transmitted with minimal delay, enhancing user experience and application performance.

These techniques not only boost TCP performance but also contribute to a more resilient and adaptive network infrastructure.
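Connection multiplexing, the first technique in the list, can be modeled as a pool of reusable server-side connections. The sketch below is a simplification with assumed names: integers stand in for real TCP connections, and requests are handled one at a time.

```python
from collections import deque
from typing import Deque, List


class ServerConnectionPool:
    """Reuse a small set of server-side connections for many client requests."""

    def __init__(self, max_connections: int) -> None:
        self._max = max_connections
        self._idle: Deque[int] = deque()
        self.opened = 0  # number of server-side connections ever created

    def acquire(self) -> int:
        if self._idle:
            return self._idle.popleft()  # reuse an existing connection
        if self.opened >= self._max:
            raise RuntimeError("pool exhausted; a real proxy would queue the request")
        self.opened += 1
        return self.opened  # stand-in identifier for a real TCP connection

    def release(self, conn_id: int) -> None:
        self._idle.append(conn_id)


def handle_requests(pool: ServerConnectionPool, requests: List[str]) -> List[str]:
    """Send each client request over a pooled server-side connection."""
    responses = []
    for request in requests:
        conn = pool.acquire()
        responses.append(f"conn{conn}:{request}")
        pool.release(conn)
    return responses
```

Three sequential client requests end up sharing one server-side connection, so the server pays for one TCP setup instead of three.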

A key Point: Full Proxy and Load Balancing

Full Proxy plays a crucial role in load balancing, distributing incoming network traffic across multiple servers to ensure optimal resource utilization and prevent server overload. This results in improved performance, scalability, and high availability.

One of the standout benefits of using a full proxy in load balancing is its ability to provide enhanced security. By fully terminating client connections, the full proxy can inspect traffic for potential threats and apply security measures before forwarding requests to the server.

Additionally, full proxy load balancers can offer improved fault tolerance. In the event of a server failure, the full proxy can seamlessly redirect traffic to healthy servers without interrupting the user experience. This resilience is crucial for maintaining service availability in today’s always-on digital landscape.

Knowledge Check: Reverse Proxy vs Full Proxy

## What is a Reverse Proxy?

A reverse proxy is a server that sits in front of web servers and forwards client requests to the appropriate backend server. This setup is used to help distribute the load, improve performance, and enhance security. Reverse proxies can hide the identity and characteristics of the backend servers, making it harder for attackers to target them directly. Additionally, they can provide SSL encryption, caching, and load balancing, making them a versatile tool in managing web traffic.

### What is a Full Proxy?

A full proxy, on the other hand, provides more comprehensive control over the traffic between the client and server. Unlike a proxy that simply forwards requests, a full proxy creates two separate connections: one between the client and the proxy, and another between the proxy and the destination server. This means the full proxy can inspect, filter, and even modify data as it passes through, offering enhanced levels of security and customization. Full proxies are often used in environments where data integrity and security are paramount.

### Key Differences Between Reverse and Full Proxies

The primary difference between a reverse proxy and a full proxy lies in their level of interaction with the traffic. "Reverse proxy" describes where the proxy sits, in front of the servers, and a simple one may do little more than forward requests to a backend. "Full proxy" describes how connections are handled: the proxy terminates the client connection and establishes a new one to the server. The two are not mutually exclusive, since a reverse proxy can itself operate as a full proxy. Handling connections this way allows more extensive security features, such as data leak prevention and deep content inspection. However, this added functionality can also introduce complexity and latency.

### Use Cases: When to Choose Which?

Choosing between a reverse proxy and a full proxy depends largely on your specific needs. If your primary goal is to distribute traffic, provide basic security, and improve performance with minimal configuration, a reverse proxy might be sufficient. However, if your requirements include detailed traffic analysis, robust security protocols, and the ability to modify data in transit, a full proxy is likely the better choice.

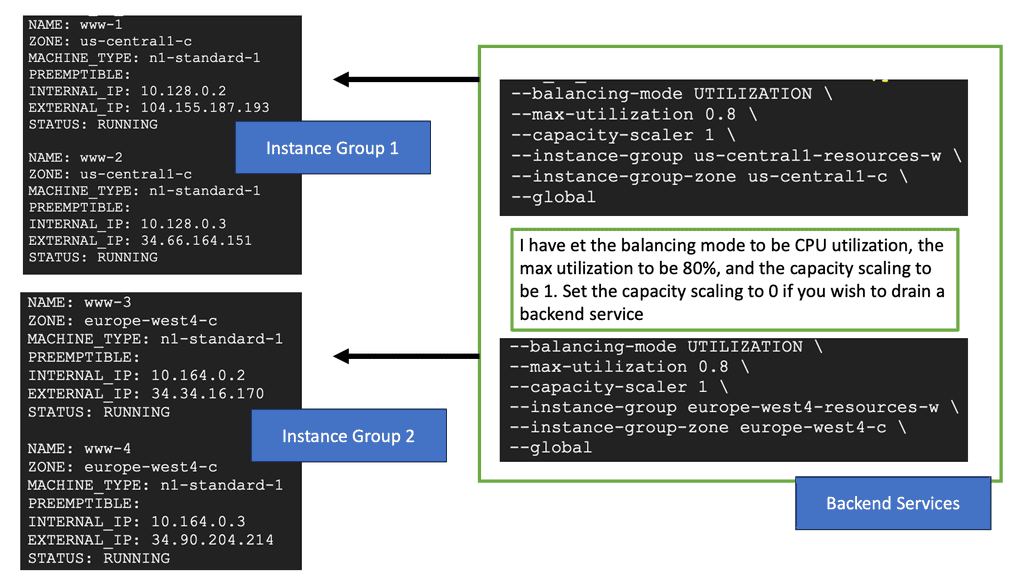

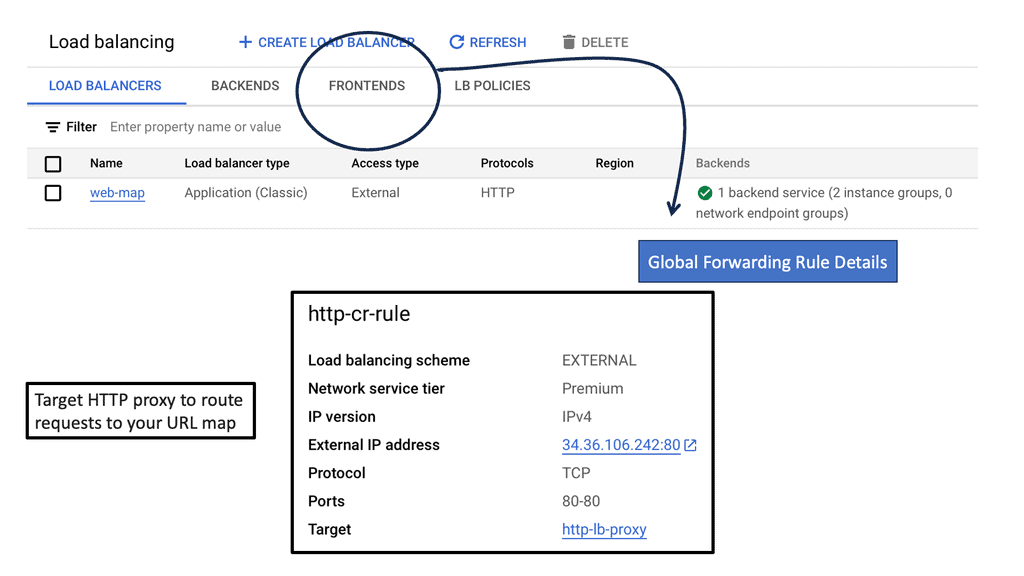

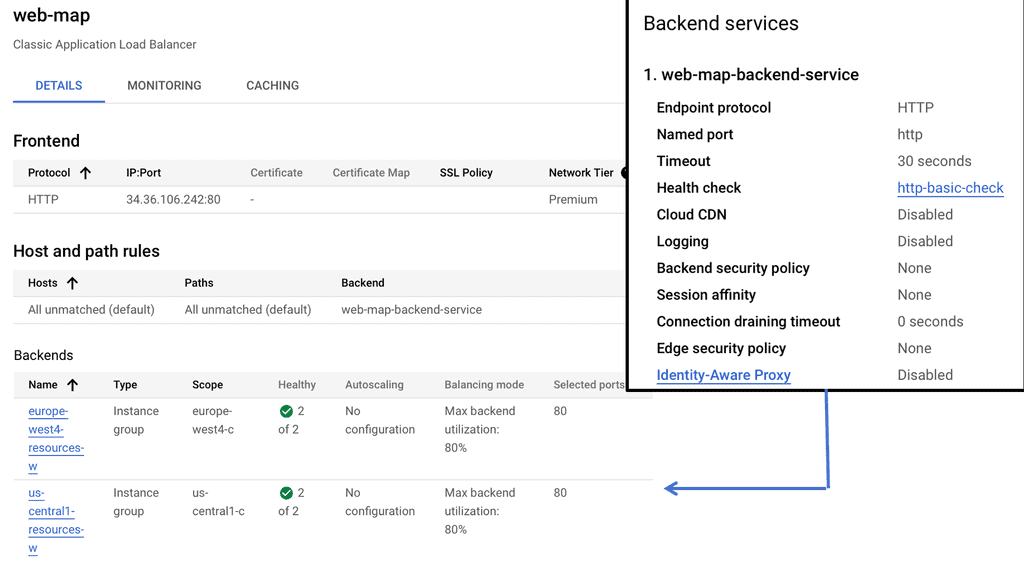

Example: Load Balancing in Google Cloud

### The Power of Google Cloud’s Global Network

Google Cloud’s global network is one of its most significant advantages when it comes to cross-region load balancing. With data centers spread across the world, Google Cloud offers a truly global reach that ensures your applications are always available, regardless of where your users are located. This section explores how Google Cloud’s infrastructure supports seamless load balancing across regions, providing businesses with a reliable and scalable solution.

### Setting Up Your Load Balancer

Implementing a cross-region HTTP load balancer on Google Cloud may seem daunting, but with the right guidance, it can be a straightforward process. This section provides a step-by-step guide on setting up a load balancer, from selecting the appropriate configuration to deploying it within your Google Cloud environment. Key considerations, such as choosing between internal and external load balancing, are also discussed to help you make informed decisions.

### Optimizing Performance and Security

Once your load balancer is up and running, the next step is optimizing its performance and ensuring the security of your applications. Google Cloud offers a range of tools and best practices for fine-tuning your load balancer’s performance. This section delves into techniques such as auto-scaling, health checks, and SSL offloading, providing insights into how you can maximize the efficiency and security of your load-balanced applications.

**Advanced TCP Optimization Techniques**

TCP performance parameters are settings that govern the behavior of the TCP protocol stack. These parameters can be adjusted to adapt TCP’s behavior based on specific network conditions and requirements. By understanding these parameters, network administrators and engineers can optimize TCP’s performance to achieve better throughput, reduced latency, and improved overall network efficiency.

Full Proxy leverages several advanced TCP optimization techniques to enhance performance. These techniques include:

Congestion Control Algorithms: One key aspect of TCP performance parameters is the choice of congestion control algorithm. Congestion control algorithms, such as Reno, Cubic, and BBR, regulate the rate at which TCP sends data packets based on the network’s congestion level. Each algorithm has its own characteristics and strengths, and selecting the appropriate one can significantly impact network performance.

Window Size and Scaling: Another critical TCP performance parameter is the window size, which determines the amount of data that can be sent before receiving an acknowledgment. By adjusting the window size and enabling window scaling, TCP can better utilize network bandwidth and minimize latency. Understanding the relationship between window size, round-trip time, and bandwidth is crucial for optimizing TCP performance.

Selective Acknowledgment (SACK): The SACK option is a TCP performance parameter that enables the receiver to inform the sender about the missing or out-of-order packets. By utilizing SACK, TCP can recover from packet loss more efficiently and reduce the need for retransmissions. Implementing SACK can greatly enhance TCP’s reliability and overall throughput in networks prone to packet loss or congestion.
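The relationship between window size, round-trip time, and bandwidth mentioned above is the bandwidth-delay product. The following Python sketch works through the arithmetic; the function names are illustrative, and the cap of 14 on the scale shift follows the TCP window scale option.

```python
def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_seconds: float) -> float:
    """Bytes that must be in flight to keep a link of this speed and RTT full."""
    return bandwidth_bps * rtt_seconds / 8


def max_throughput_bps(window_bytes: float, rtt_seconds: float) -> float:
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 / rtt_seconds


def window_scale_shift(window_bytes: int) -> int:
    """Smallest shift so that a 16-bit window field can express window_bytes."""
    shift = 0
    while (65535 << shift) < window_bytes and shift < 14:
        shift += 1
    return shift
```

For a 100 Mbit/s path with an 80 ms round-trip time, the bandwidth-delay product is about 1,000,000 bytes, far more than an unscaled 65,535-byte window can cover, which is why window scaling matters on long, fast paths.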

What is TCP MSS?

TCP MSS refers to the maximum amount of data that can be encapsulated within a single TCP segment. It represents the largest payload size that can be transmitted without fragmentation. Understanding TCP MSS ensures efficient data transmission and avoids unnecessary overhead.

The determination of TCP MSS involves negotiation between the communicating devices during the TCP handshake process. The MSS value is typically based on the underlying network’s Maximum Transmission Unit (MTU), which represents the largest size of data that can be transmitted in a single network packet.
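The link between MTU and MSS is simple arithmetic: subtract the IP and TCP headers from the MTU. The sketch below assumes minimum header sizes with no IP or TCP options, and the helper names are made up for the example.

```python
IPV4_HEADER_BYTES = 20  # minimum IPv4 header, no options
IPV6_HEADER_BYTES = 40  # fixed IPv6 header
TCP_HEADER_BYTES = 20   # minimum TCP header, no options


def mss_for_mtu(mtu: int, ipv6: bool = False) -> int:
    """Largest TCP payload that fits in one packet of the given MTU."""
    ip_header = IPV6_HEADER_BYTES if ipv6 else IPV4_HEADER_BYTES
    return mtu - ip_header - TCP_HEADER_BYTES


def effective_mss(local_mtu: int, peer_advertised_mss: int, ipv6: bool = False) -> int:
    """A sender is limited by both its own path MTU and the MSS its peer advertised."""
    return min(mss_for_mtu(local_mtu, ipv6), peer_advertised_mss)


def segments_needed(payload_bytes: int, mss: int) -> int:
    """Number of segments required to carry payload_bytes (ceiling division)."""
    return -(-payload_bytes // mss)
```

With a standard 1500-byte Ethernet MTU this gives an MSS of 1460 bytes over IPv4 and 1440 bytes over IPv6.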

TCP MSS has a direct impact on network communications performance and efficiency. By optimizing the MSS value, we can minimize the number of segments and reduce overhead, leading to improved throughput and reduced latency. This is particularly crucial in scenarios where bandwidth is limited or network congestion is a common occurrence.

To achieve optimal network performance, it is important to carefully tune the TCP MSS value. This can be done at various network layers, such as at the operating system level or within specific applications. By adjusting the MSS value, we can ensure efficient utilization of network resources and mitigate potential bottlenecks.

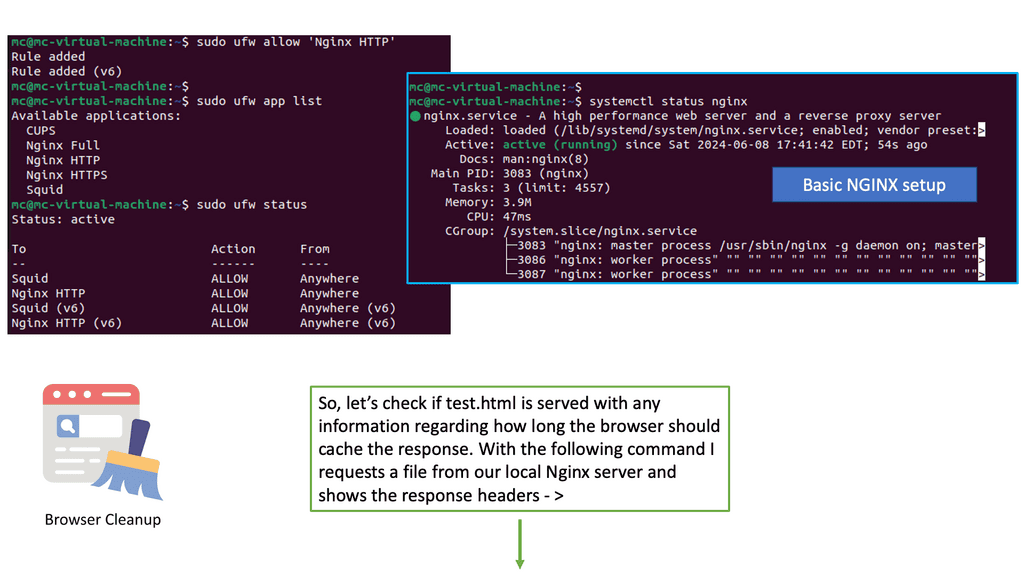

Knowledge Check: Understanding Browser Caching

Browser caching is a mechanism that allows web browsers to store static resources locally, such as images, CSS files, and JavaScript files. When a user revisits a website, the browser can retrieve these cached resources instead of making new requests to the server. This significantly reduces page load times and minimizes server load.

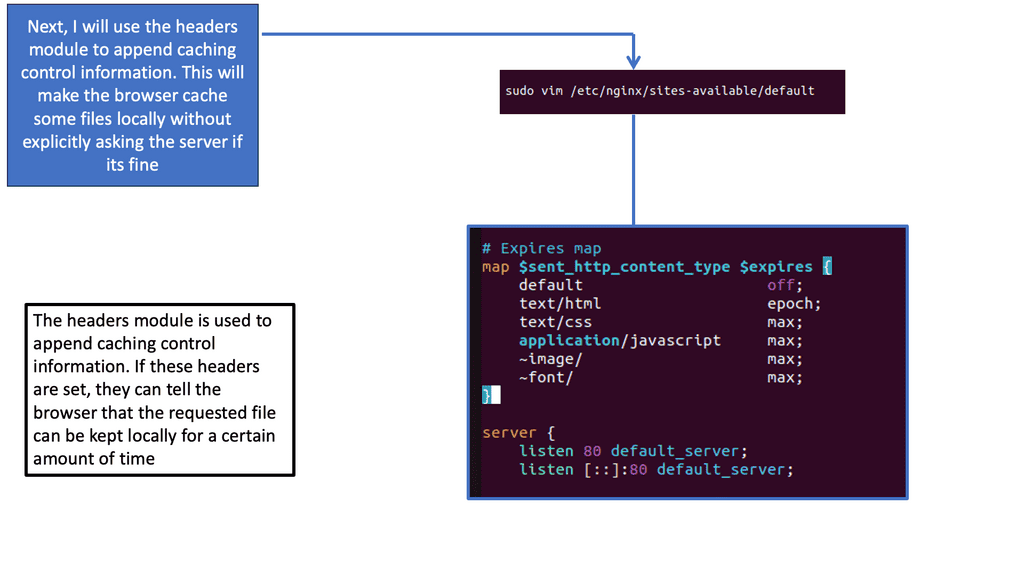

Nginx, a high-performance web server, provides the header module, which allows us to manipulate HTTP headers and control browser caching behavior. By configuring the appropriate headers, we can instruct the browser to cache specific resources and define cache expiration rules.

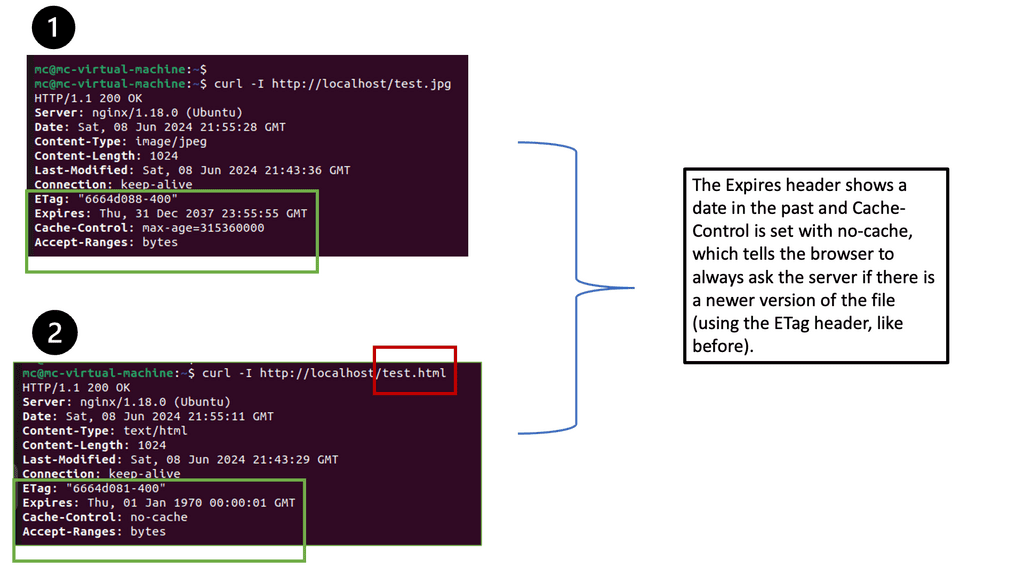

Cache-Control Headers & ETag Headers

One crucial aspect of browser caching is setting the Cache-Control header. This header specifies the caching directives that the browser should follow. With Nginx’s header module, we can fine-tune the Cache-Control header for different types of resources, such as images, CSS files, and JavaScript files. By setting appropriate max-age values, we can control how long the browser should cache these resources.

In addition to Cache-Control, Nginx can emit ETag headers for static resources. This is controlled by the etag directive, which belongs to the core HTTP module rather than the header module. ETags are identifiers assigned to each version of a resource. With ETags in place, the browser can make conditional requests: it sends the ETag it holds, and the server returns the full resource only if it has changed. This further optimizes browser caching by reducing unnecessary network traffic.
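The interplay between Cache-Control and ETag can be sketched from the server's point of view. The Python below is not Nginx configuration; it is a minimal model with assumed function names, showing how a conditional request with If-None-Match turns into a 304 response with no body.

```python
import hashlib
from typing import Dict, Tuple


def make_etag(body: bytes) -> str:
    """Derive a quoted validator from the content (one of many possible schemes)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, request_headers: Dict[str, str],
            max_age: int = 3600) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a cacheable static resource."""
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": f"public, max-age={max_age}"}
    if request_headers.get("If-None-Match") == etag:
        # The browser's copy is still current: send headers only.
        return 304, headers, b""
    return 200, headers, body
```

While max-age has not elapsed the browser does not contact the server at all; once it has, the ETag lets the browser revalidate cheaply instead of downloading the resource again.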


Full Proxy

– The term ‘proxy’ is a contraction of the Middle English word procuracy, a legal term meaning to act on behalf of another. For example, you may have heard of a proxy vote. You submit your choice, and someone else casts the ballot on your behalf.

– In networking and web traffic, a proxy is a device or server that acts on behalf of other devices. Proxies are hardware or software solutions that sit between the client and the server and do something to requests and, sometimes, to responses.

– A proxy server sits between the client requesting a web document and the target server. In its most straightforward form, it facilitates communication between the sending client and the receiving target server without modifying requests or replies.

– When a client initiates a request for a resource from the target server, such as a webpage or document, the proxy server intercepts the connection. It represents itself as a client to the target server, requesting the resource on our behalf. If a reply is received, the proxy server returns it to us, giving the impression that we have communicated with the target server.

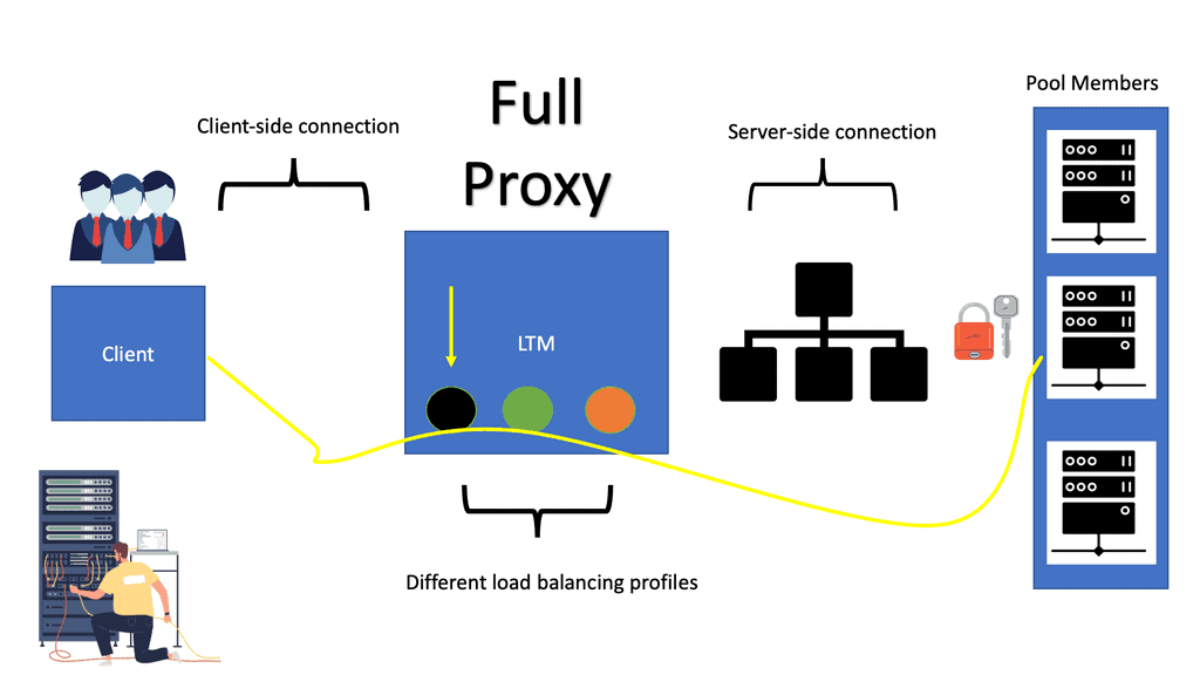

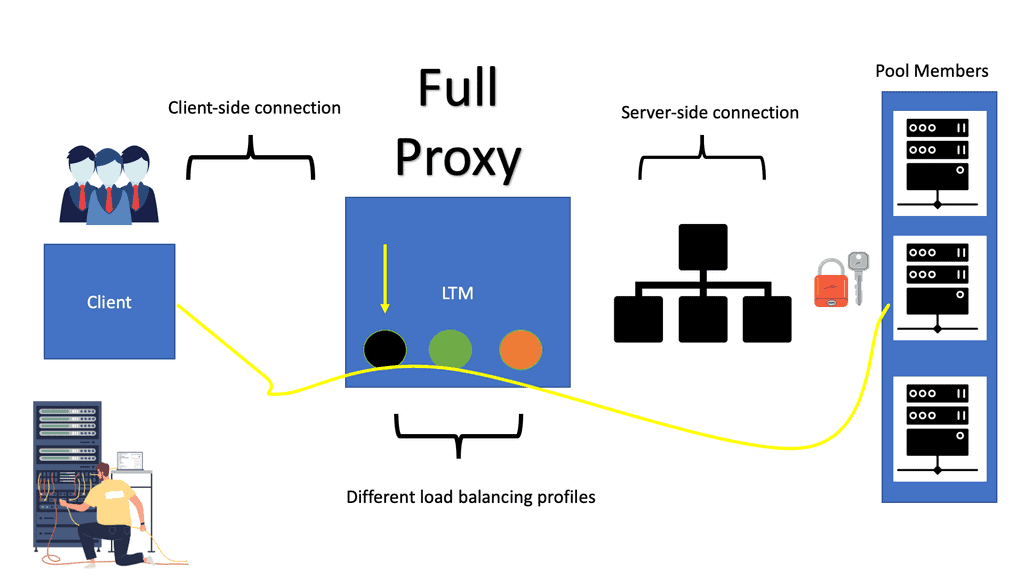

Example Product: Local Traffic Manager

Local Traffic Manager (LTM) is part of a suite of BIG-IP products that add intelligence to connections by intercepting, analyzing, and redirecting traffic. Its architecture is based on full proxy mode, meaning the LTM load balancer completely understands the connection, enabling it to be both an endpoint and an originator of client- and server-side connections.

All proxies, full or standard, act as gateways from one network to another. They sit between two entities and mediate connections. What distinguishes the F5 full proxy architecture is how it handles flows. So, the main difference in the full proxy vs. half proxy debate is how connections are handled.

- Enhancing Web Performance:

One critical advantage of Full Proxy is its ability to enhance web performance. By employing techniques like caching and compression, Full Proxy servers can significantly reduce the load on origin servers and improve the overall response time for clients. Caching frequently accessed content at the proxy level reduces latency and bandwidth consumption, resulting in a faster and more efficient web experience.

- Load Balancing:

Full Proxy also provides load balancing capabilities, distributing incoming requests across multiple servers to ensure optimal resource utilization. By intelligently distributing the load, Full Proxy helps prevent server overload, improving scalability and reliability. This is especially crucial for high-traffic websites or applications with many concurrent users.

- Security and Protection:

In the age of increasing cyber threats, Full Proxy plays a vital role in safeguarding sensitive data and protecting web applications. Acting as a gatekeeper, Full Proxy can inspect, filter, and block malicious traffic, protecting servers from distributed denial-of-service (DDoS) attacks, SQL injections, and other standard web vulnerabilities. Additionally, Full Proxy can enforce SSL encryption, ensuring secure data transmission between clients and servers.

- Granular Control and Flexibility:

Full Proxy offers organizations granular control over web traffic, allowing them to define access policies and implement content filtering rules. This enables administrators to regulate access to specific websites, control bandwidth usage, and monitor user activity. By providing a centralized control point, Full Proxy empowers organizations to enforce security measures and maintain compliance with data protection regulations.

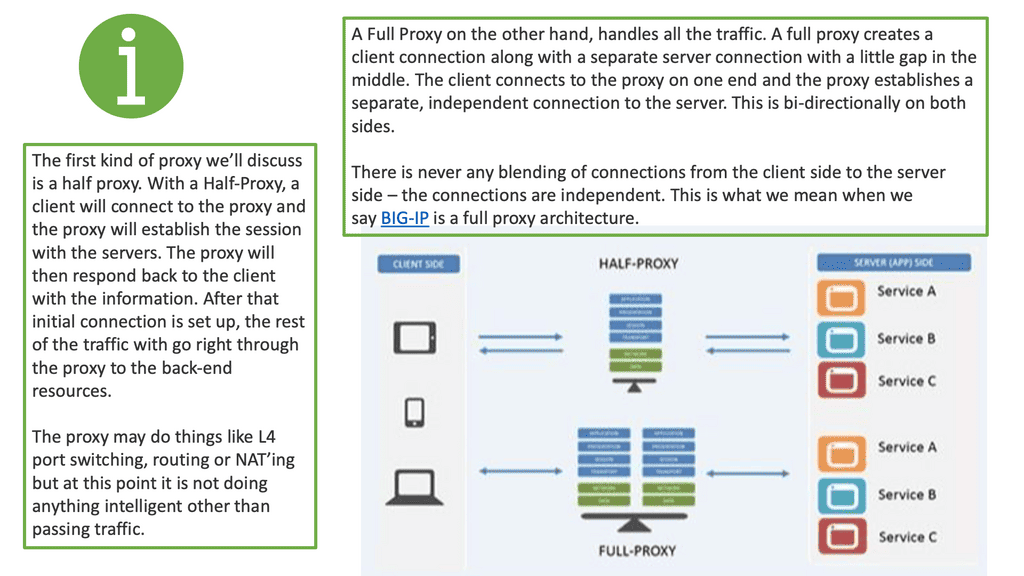

Full Proxy vs Half Proxy

When considering a full proxy vs. a half proxy, the half-proxy sets up a call, and the client and server do their thing. Half-proxies are known to be suitable for Direct Server Return (DSR). You’ll have the initial setup for streaming protocols, but instead of going through the proxy for the rest of the connections, the server will bypass the proxy and go straight to the client.

This is so you don’t waste resources on the proxy for something that can be done directly from server to client. A full proxy, on the other hand, handles all the traffic. It creates a client connection and a separate server connection with a little gap in the middle.

The full proxy intelligence is in that gap. A half proxy primarily handles client-side traffic on the way in during a request and then steps aside. With a full proxy, you can manipulate, inspect, drop, and do what you need to the traffic on both sides and in both directions. Whether it is a request or a response, you can manipulate traffic on the client-side request, the server-side request, the server-side response, or the client-side response. So you get a lot more power with a full proxy than you would with a half proxy.
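The four points at which a full proxy can touch traffic can be modeled in Python. This is a conceptual sketch only: strings stand in for network traffic, a callable stands in for the backend server, and all names are hypothetical.

```python
from typing import Callable, List

Hook = Callable[[str], str]


class FullProxyModel:
    """Model the four interception points of a full proxy."""

    def __init__(self, server: Callable[[str], str]) -> None:
        self._server = server
        self.client_request_hooks: List[Hook] = []   # request, client-side connection
        self.server_request_hooks: List[Hook] = []   # request, server-side connection
        self.server_response_hooks: List[Hook] = []  # response, server-side connection
        self.client_response_hooks: List[Hook] = []  # response, client-side connection

    @staticmethod
    def _apply(hooks: List[Hook], message: str) -> str:
        for hook in hooks:
            message = hook(message)
        return message

    def handle(self, request: str) -> str:
        # The client-side connection ends here; the proxy owns the request.
        request = self._apply(self.client_request_hooks, request)
        # A separate server-side connection carries the (possibly rewritten) request.
        request = self._apply(self.server_request_hooks, request)
        response = self._server(request)
        response = self._apply(self.server_response_hooks, response)
        return self._apply(self.client_response_hooks, response)
```

A half proxy would only have the first of these four hook lists; the other three exist because the full proxy stays in the path for the whole exchange.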

Highlighting F5 full proxy architecture

A full proxy architecture offers much more granularity than a half proxy by implementing dual network stacks for client and server connections, creating two separate entities with two different session tables: one on the client side and another on the server side. The BIG-IP LTM load balancer manages the two sessions independently.

The connections between the client and the LTM are different and independent of the connections between the LTM and the backend server, as you will notice from the diagram below. Again, there is a client-side connection and a server-side connection. Each connection has its own TCP behaviors and optimizations.

Different profiles for different types of clients

Generally, client connections take longer paths and are exposed to higher latency than server-side connections. A full proxy addresses this by applying different profiles and properties to the server and client connections, allowing more advanced traffic management. Traffic flow through a standard proxy is end-to-end, so the proxy usually cannot optimize for both connections simultaneously.

F5 full proxy architecture: Default BIG-IP traffic processing

Clients send a request to the Virtual IP address that represents the backend pool members. Once a load-balancing decision is made, a second connection is opened to the pool member. We now have two connections: one on the client side and one on the server side. The source IP address is still that of the original sending client, but the destination IP address changes to the pool member, which is known as destination-based NAT. The response is the reverse.

On the way back, the source address is the pool member and the destination is the original client. The LTM translates the source address from the pool member back to the Virtual Server IP address. This process requires that all traffic passes through the LTM so that the translation can be undone.

Response traffic must flow back through the LTM load balancer to ensure the translation can be undone. For this to happen, servers (pool members) use LTM as their Default Gateway. Any off-net traffic flows through the LTM. What happens if requests come through the BIG-IP, but the response goes through a different default gateway?

A key point: Source address translation (SNAT)

If the response bypasses the LTM, the source address will be that of the responding pool member, but the sending client does not have a connection with the pool member; it has a connection to the VIP located on the LTM. In addition to destination address translation, the LTM can perform source address translation (SNAT). This forces the response back to the LTM, where the translations are undone. It is common to use the Auto Map source address selection feature, in which the BIG-IP selects one of its own self IP addresses as the source address for the SNAT.
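The address rewriting described in this section can be traced with a small Python model. All addresses are made-up documentation values, and the functions are a simplification: a real BIG-IP tracks ports and connection state as well.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


VIP = "203.0.113.10"       # hypothetical virtual server address
SELF_IP = "10.0.0.1"       # hypothetical proxy address on the server-side network
POOL_MEMBER = "10.0.0.21"  # hypothetical backend chosen by load balancing


def to_server(packet: Packet, snat: bool) -> Packet:
    """Destination NAT always; source NAT only when SNAT is enabled."""
    translated = replace(packet, dst=POOL_MEMBER)
    if snat:
        # The server now sees the proxy as the source, so its reply must return here.
        translated = replace(translated, src=SELF_IP)
    return translated


def to_client(reply: Packet, client_ip: str) -> Packet:
    """Undo both translations so the client sees a reply from the VIP."""
    if reply.src != POOL_MEMBER:
        raise ValueError("reply did not come from the selected pool member")
    return replace(reply, src=VIP, dst=client_ip)
```

Without SNAT the pool member replies to the client's own address, so the reply only reaches the proxy if the proxy is the server's default gateway. With SNAT the reply is addressed to the proxy itself.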

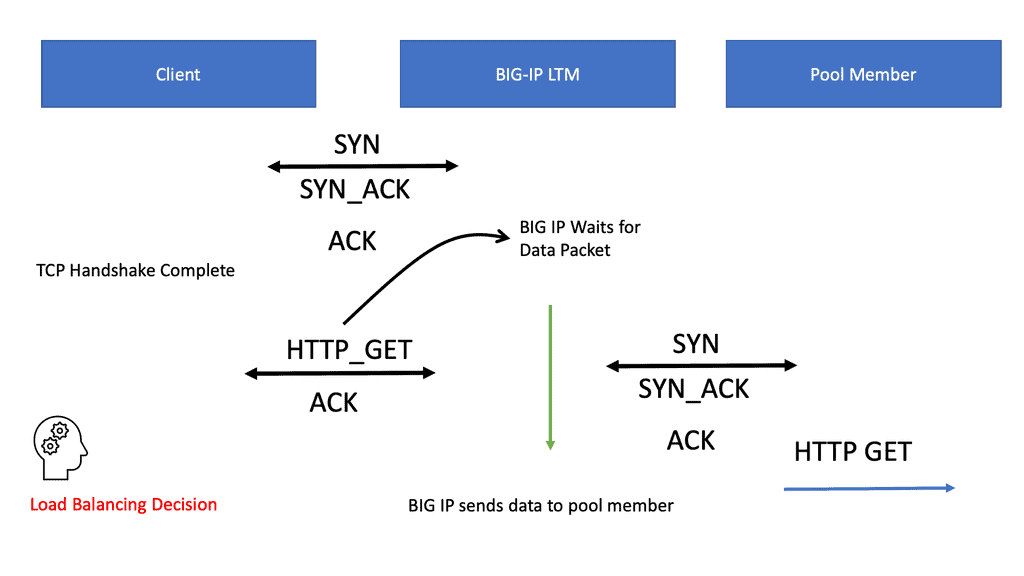

F5 full proxy architecture and virtual server types

Virtual servers have independent packet handling techniques that vary by type. The following are examples of some of the available virtual servers: standard virtual server with Layer 7 functionality, Performance Layer 4 Virtual Server, Performance HTTP virtual server, Forwarding Layer 2 virtual server, Forwarding IP virtual server, Reject virtual server, Stateless, DHCP Relay, and Message Routing. The example below displays the TCP connection setup for a Virtual server with Layer 7 functionality.

LTM forwards the HTTP GET requests to the Pool member

When the client-to-LTM handshake is complete, the LTM waits for the initial HTTP request (HTTP_GET) before making a load-balancing decision. It then completes a full TCP handshake with the pool member, but this time the LTM is the client in the TCP session; for the client connection, the LTM was the server. The BIG-IP waits for the initial traffic flow before setting up the load-balanced connection to mitigate DoS attacks and preserve resources.

As discussed, virtual server types differ in their packet handling. For example, with the Performance Layer 4 virtual server, the client sends its initial SYN to the LTM, and the LTM makes the load-balancing decision and passes the SYN to the pool member without completing the full TCP handshake itself.

Load balancing and health monitoring

The client addresses its request to the destination IP address in the IPv4 or IPv6 header. However, a single destination could be overwhelmed by a large number of requests. Therefore, the LTM distributes client requests (based on a load balancing method) to multiple servers instead of to the single specified destination IP address. The load balancing method determines the pattern or metric used to distribute traffic.

These methods are categorized as either Static or Dynamic. Dynamic load balancing considers real-time events and includes least connections, fastest, observed, predictive, etc. Static load balancing includes both round-robin and ratio-based systems. Round-robin-based load balancing works well if servers are equal (homogeneous), but what if you have nonhomogeneous servers?

Ratio load balancing

In this case, Ratio load balancing can distribute traffic unevenly based on predefined ratios. For example, Ratio 3 is assigned to servers 1 and 2, and Ratio 1 is assigned to server 3. With this configuration, for every connection assigned to server 3, servers 1 and 2 each receive three. Initially, distribution starts as a round-robin, but subsequent flows are differentiated based on the ratios.
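One way to picture the 3:3:1 example is a schedule that begins like a round-robin and then drops servers once their ratio is used up for the current cycle. The Python below is a minimal sketch under that assumption; it is not the BIG-IP implementation, and `ratio_schedule` and the server names are hypothetical.

```python
from collections import Counter
from itertools import islice


def ratio_schedule(ratios: dict):
    """Yield server names forever, honoring the configured ratios."""
    max_ratio = max(ratios.values())
    while True:
        # Pass 0 includes every server (plain round-robin); later passes
        # include only servers whose ratio has not been used up yet.
        for pass_no in range(max_ratio):
            for server, ratio in ratios.items():
                if ratio > pass_no:
                    yield server


ratios = {"server1": 3, "server2": 3, "server3": 1}
first_cycle = list(islice(ratio_schedule(ratios), 7))
counts = Counter(first_cycle)
```

Over one full cycle of seven connections, `counts` shows three each for server1 and server2 and one for server3, and the first three picks are a plain round-robin across all three servers.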

A feature known as priority-based member activation allows you to configure pool members into priority groups. Traffic goes to the highest-priority group as long as enough of its members are available. For example, you group the two high-spec servers (server 1 and server 2) in a high-priority group and a low-spec server (server 3) in a low-priority group. The low-spec server will not be used unless there is a failure in the high-priority group.
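The selection logic can be sketched as follows. This is an illustration only: it assumes a higher number means a more preferred group and that a lower group is activated when fewer than `min_active` members are available above it. The names `Member` and `active_members` are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Member:
    name: str
    priority: int   # assumption for this sketch: higher number = preferred group
    up: bool = True


def active_members(members, min_active=1):
    """Return the members that should receive traffic right now."""
    selected = []
    # Walk the groups from most to least preferred and stop as soon as
    # enough available members have been collected.
    for priority in sorted({m.priority for m in members}, reverse=True):
        selected += [m.name for m in members if m.priority == priority and m.up]
        if len(selected) >= min_active:
            break
    return selected


pool = [Member("server1", 2), Member("server2", 2), Member("server3", 1)]
normal = active_members(pool)

pool[0].up = False
pool[1].up = False
after_failure = active_members(pool)
```

While both high-spec servers are up, only they are selected. Once both are marked down, the low-spec server takes over.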

F5 full proxy architecture: Health and performance monitors

Health and performance monitors are associated with a pool to determine if servers are operational and can receive traffic. The type of health monitor used depends on the type of traffic you want to monitor. There are several predefined monitors, and you can customize your own. For example, the FTP monitor attempts to download a specified file to the /var/tmp directory, and the check is successful if the file is retrieved.
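At its core, a content-based monitor is a probe plus a pass/fail rule. The sketch below shows that idea in Python for an HTTP-style check that looks for an expected string in the response. The fetch function is injected so the example runs without a network, and all names (`http_check`, `healthy_fetch`, `broken_fetch`, the path) are hypothetical rather than part of any F5 API.

```python
from typing import Callable


def http_check(fetch: Callable[[str], str], path: str, receive: str) -> bool:
    """Return True when the fetched body contains the expected string."""
    try:
        return receive in fetch(path)
    except OSError:
        # Connection refused, timeout, and similar errors count as a failed check.
        return False


def healthy_fetch(path: str) -> str:
    """Stand-in for a server that answers normally."""
    return "<html>status: OK</html>"


def broken_fetch(path: str) -> str:
    """Stand-in for a server that refuses connections."""
    raise ConnectionRefusedError("connection refused")


status = {
    "server1": http_check(healthy_fetch, "/health", "status: OK"),
    "server2": http_check(broken_fetch, "/health", "status: OK"),
}
```

Here `status` marks server1 as available and server2 as unavailable. A real monitor would run on an interval and typically require several failures within a timeout before marking a member down.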

Some HTTP monitors permit the inclusion of a username and password to retrieve a page on the website. You also have LDAP, MySQL, ICMP, HTTPS, NTP, Oracle, POP3, RADIUS, RPC, and many others.

iRules allow you to manage traffic based on business logic. For example, you can direct customers to the correct server based on the language preference set in their browsers. An iRule can inspect the Accept-Language header and select the right pool of application servers based on the value specified in the header.
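Real iRules are written in F5's Tcl-based language, so the Python below is only a sketch of the decision logic such a rule would carry out. The pool names and the `select_pool` and `preferred_language` helpers are assumptions made for the example.

```python
def preferred_language(accept_language: str) -> str:
    """Return the primary language tag with the highest q-value, or ''."""
    best_lang, best_q = "", -1.0
    for item in accept_language.split(","):
        parts = item.strip().split(";")
        lang = parts[0].strip().lower()
        if not lang:
            continue
        q = 1.0                              # q defaults to 1.0 when omitted
        for param in parts[1:]:
            param = param.strip()
            if param.startswith("q="):
                try:
                    q = float(param[2:])
                except ValueError:
                    q = 0.0
        if q > best_q:
            best_lang, best_q = lang.split("-")[0], q
    return best_lang


# Hypothetical pool names.
POOLS = {"de": "pool_german", "fr": "pool_french"}
DEFAULT_POOL = "pool_english"


def select_pool(headers: dict) -> str:
    """Pick a server pool from the request's Accept-Language header."""
    lang = preferred_language(headers.get("Accept-Language", ""))
    return POOLS.get(lang, DEFAULT_POOL)


german = select_pool({"Accept-Language": "de-DE,de;q=0.9,en;q=0.8"})
fallback = select_pool({})
```

A browser that prefers German is sent to the German pool, while a request with no Accept-Language header, or with a language that has no dedicated pool, falls back to the default pool.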

Increase backend server performance

It is computationally more expensive to set up a new connection than to receive requests over an existing open connection. That is why HTTP keepalives were invented and made standard in HTTP/1.1. LTM has a OneConnect feature that works with HTTP keepalives to make existing server-side connections available for reuse by multiple clients, not just a single client. Fewer open connections means lower resource consumption per server.

When the LTM receives the HTTP request from the client, it makes the load-balancing decision before OneConnect is considered. If there are no open and idle server-side connections, the BIG-IP creates a new TCP connection to the server. When the server responds with the HTTP response, the connection is left open on the BIG-IP for reuse. The connection is held in a table called the connection reuse pool.

New requests from other clients can reuse an open, idle connection without setting up a new TCP connection. The source mask on the OneConnect profile determines which clients can reuse open and idle server-side connections. When SNAT is used, the source address is translated before the OneConnect profile is applied.
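The role of the source mask becomes clearer with a toy model of the connection reuse pool. The class below is a rough sketch, not how BIG-IP stores connections: it assumes idle connections are keyed by the masked client address plus the chosen server, and the `ReusePool` name, its methods, and the addresses are hypothetical.

```python
import ipaddress
from collections import defaultdict


class ReusePool:
    """Toy model of a server-side connection reuse pool."""

    def __init__(self, source_mask: str):
        self.mask = int(ipaddress.IPv4Address(source_mask))
        self.idle = defaultdict(list)   # (masked source, server) -> idle connections
        self.opened = 0                 # number of new TCP connections created

    def _key(self, client_ip: str, server: str):
        masked = int(ipaddress.IPv4Address(client_ip)) & self.mask
        return (masked, server)

    def acquire(self, client_ip: str, server: str):
        """Return (connection, reused) for a server that was already chosen."""
        idle = self.idle[self._key(client_ip, server)]
        if idle:
            return idle.pop(), True
        self.opened += 1
        return f"conn-{self.opened}", False

    def release(self, client_ip: str, server: str, conn: str) -> None:
        """Park the connection as idle once the response has been sent."""
        self.idle[self._key(client_ip, server)].append(conn)


# Mask 0.0.0.0: any client may reuse any idle connection to the same server.
shared = ReusePool("0.0.0.0")
conn_a, reused_a = shared.acquire("192.0.2.10", "10.0.0.20")
shared.release("192.0.2.10", "10.0.0.20", conn_a)
conn_b, reused_b = shared.acquire("198.51.100.7", "10.0.0.20")

# Mask 255.255.255.255: only the same client address may reuse a connection.
strict = ReusePool("255.255.255.255")
conn_c, _ = strict.acquire("192.0.2.10", "10.0.0.20")
strict.release("192.0.2.10", "10.0.0.20", conn_c)
conn_d, reused_d = strict.acquire("198.51.100.7", "10.0.0.20")
```

With the all-zeros mask, the second client picks up the first client's idle connection and only one server-side connection is ever opened. With the all-ones mask, the second client gets a fresh connection because its address does not match.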

Closing Points on Full Proxy

To appreciate the advantages of full proxy, it’s essential to understand how it differs from a traditional proxy setup. A traditional proxy server forwards requests from clients to servers and vice versa, acting as a conduit without altering the data. In contrast, a full proxy terminates the client connection and establishes a separate connection with the server. This distinction allows full proxies to inspect and modify requests and responses, offering enhanced security, optimization, and control over traffic.

Load balancing is a critical component of network management, ensuring that no single server becomes overwhelmed with requests. Full proxy architecture excels in this area by providing intelligent traffic distribution. It can analyze incoming requests, evaluate server health, and distribute workloads accordingly. This dynamic management not only improves server efficiency but also enhances user experience by reducing latency and preventing server downtime.

Another significant advantage of full proxy is its ability to bolster network security. By terminating the client connection, full proxies can inspect incoming traffic for malicious content before it reaches the server. This inspection enables the implementation of robust security measures such as SSL/TLS encryption, DDoS protection, and web application firewalls. Consequently, businesses can safeguard sensitive data and maintain compliance with industry regulations.

Full proxies offer a suite of tools to optimize network performance beyond load balancing. Features like caching, compression, and content filtering can be implemented to improve data flow and reduce unnecessary network strain. By caching frequently requested content, full proxies reduce the load on backend servers, accelerating response times and enhancing overall efficiency.

Summary: Full Proxy

In today’s digital age, connectivity is the lifeblood of our society. The Internet has become an indispensable tool for communication, information sharing, and business transactions. However, numerous barriers still hinder universal access to the vast realm of online resources. One promising solution that has emerged in recent years is the concept of full proxy networks. In this blog post, we delved into the world of full proxy networks, exploring their potential to revolutionize internet accessibility.

Understanding Full Proxy Networks

Full proxy networks, or reverse proxy networks, are systems designed to enhance internet accessibility for users. Unlike traditional networks that rely on direct connections between users and online resources, full proxy networks act as intermediaries between the user and the internet. They intercept user requests and fetch the requested content on their behalf, optimizing the delivery process and bypassing potential obstacles.

Overcoming Geographical Restrictions

One of the primary benefits of full proxy networks is their ability to overcome geographical restrictions imposed by content providers. With these networks, users can access websites and online services that are typically inaccessible due to regional limitations. By routing traffic through proxy servers located in different regions, full proxy networks enable users to bypass geo-blocking and enjoy unrestricted access to online content.

Enhanced Security and Privacy

Another significant advantage of full proxy networks is their ability to enhance security and privacy. By acting as intermediaries, these networks add an extra layer of protection between users and online resources. The proxy servers can mask users’ IP addresses, making it harder for malicious actors to track their online activities. Additionally, full proxy networks can encrypt data transmissions, safeguarding sensitive information from potential threats.

Accelerating Internet Performance

In addition to improving accessibility and security, full proxy networks can significantly enhance internet performance. By caching and optimizing content delivery, these networks can reduce latency and speed up web page loading times. Users get faster and more responsive browsing, especially for frequently accessed websites. Moreover, full proxy networks can alleviate bandwidth constraints during peak usage periods, ensuring a seamless online experience for users.

Conclusion:

Full proxy networks offer a promising solution to the challenges of internet accessibility. By bypassing geographical restrictions, enhancing security and privacy, and accelerating internet performance, these networks can unlock a new era of online accessibility for users worldwide. As technology continues to evolve, full proxy networks are poised to play a crucial role in bridging the digital divide and creating a more inclusive internet landscape.