Web Application Security

Hello, I created a tailored package for ACUNETIX. We split a number of standard blogs into smaller ones for SEO. There are lots of ways to improve web application security, so we covered quite a lot of bases in the package.

“So why is there a need for true multi-cloud capacity? The upsurge of the latest applications demands multi-cloud scenarios. Firstly, organizations require application portability amongst multiple cloud providers. Application uptime is a necessity, and I.T organizations cannot rely on a single cloud provider to host their critical applications. Secondly, there is lock-in: I.T organizations don’t want to have their applications locked into specific cloud frameworks. Hardware vendors have been doing this since the beginning of time, locking you into their specific life cycles. Being locked into one cloud provider means you cannot easily move your application to another.

Thirdly, cost is one of the dominant factors. Clouds are not a cheap resource and the pricing models vary among providers, even for the same instance size and type. With a multi-cloud strategy in place, you are in a much better position to negotiate the price.”

“The World Wide Web (WWW) has transformed from serving simple static content to serving the dynamic world of today. This transformation has fundamentally changed the way we communicate and do business. Now, however, we are experiencing another wave of innovation. The cloud is becoming an even more diverse technology than it used to be. It has entered its second decade of existence, which is shaping and driving a new world of cloud computing and application security. After all, it aims to overtake traditional I.T by offering an on-demand elastic environment. It largely affects how organizations operate and has become a critical component for new technologies.

The new shift in cloud technologies is the move to ‘multi-cloud designs’, which is a big game-changer for application security. Undoubtedly, multi-cloud will become a necessity in the future, but unfortunately, at this time, it is far from a simple move. The fact is that not many have started their multi-cloud journey. As a result, there are few lessons learned to draw on, which can expose your application stack to security risks. One option is to hire a professional web application company to develop and maintain the security of your new application within the cloud for you and your business. Opting for this method means having a dedicated IT specialist company on hand should anything go awry.

Reference architecture guides are a great starting point; however, there are many unknowns when it comes to multi-cloud environments. To take advantage of these technologies, you need to move with application safety in mind. Applications don’t care what cloud technology they sit in. What matters is that they are operational and hardened with appropriate security.”

“In the early 2000s, we had simple shell scripts created to take down a single web page. Usually, one attack signature was used from a single source IP address. This was known as a classical bot-based attack, which was effective in taking down a single web page. However, this type of threat needed a human to launch every single attack. For example, if you wanted to bring ten web applications to a halt, you would need to hit “enter” on the keyboard ten times.

We then started to encounter simple scripts written with loops. Under this improved attack, instead of hitting the keyboard every time they wanted to bring down a web page, the bad actor would simply add a loop to the script. The attack still used only one source IP address and was known as the classical denial of service (DoS) attack.

Thus, the cat-and-mouse game continued between web application developers and bad actors. Patches were quickly released. If you patched the web application and web servers in time, and as long as a good design was in place, you could prevent these types of known attacks.”
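Because the looped attack described above still comes from one source IP address, a per-source request limit is one simple way to blunt it. The following is a minimal sketch in Python, not a production defence: the class name `SourceRateLimiter`, its parameters, and the thresholds are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict, deque


class SourceRateLimiter:
    """Sliding-window request limit per source IP (illustrative names only)."""

    def __init__(self, max_requests: int = 100, window_seconds: float = 10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Maps each source IP to the timestamps of its accepted requests.
        self._hits = defaultdict(deque)

    def allow(self, source_ip: str, now: float) -> bool:
        """Return True if the request is accepted, False if it is throttled."""
        hits = self._hits[source_ip]
        # Drop timestamps that have fallen out of the sliding window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

A caller would pass the client address and a monotonic timestamp for every request and reject the request when `allow` returns False. A limit like this only helps against a single-source loop; it does nothing once the traffic is spread across many addresses.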

“The speed at which cybersecurity has evolved over the last decade has taken everyone by surprise. Different types of threats and methods of attack have been surfacing consistently, hitting web applications at an alarming rate. Unfortunately, the foundations of web application design were not laid with security in mind. Therefore, dispersed designs and web servers continue to pose challenges to security professionals.

If the correct security measures are not in place, the well-known threats that have been around for years will cause application downtime and data breaches. The prime concern here is that if security professionals are unable to protect themselves against today’s web application attacks, how will they fortify against the unknown threats of tomorrow?

The challenges that we see today are compounded by the use of Artificial Intelligence (AI) by cybercriminals. Cybercriminals already have an extensive arsenal at their disposal, but to make matters worse, they can now combine their existing toolkits with the unknown power of AI.”

“The cloud API management plane is one of the most significant differences between traditional computing and cloud computing. It offers an interface, which is often public, to connect the cloud assets. In the past, we followed the box-by-box configuration mentality, where we configured physical hardware strung together by wires. Now, however, our infrastructure is controlled with application programming interface (API) calls.

The abstraction of virtualization is aided by the use of APIs, which are the underlying communication methods for assets within a cloud. As a result of this shift in management paradigm, compromising the management plane is like gaining unfiltered access to your data center, unless proper security controls at the application level are in place.”

“As we delve deeper into the digital world of communication, the privacy impact of personal data changes the way we examine security. As organizations join this world, the normal methods that were employed to protect data have become obsolete. This forces security professionals to shift their thinking from protecting the infrastructure to protecting the actual data. Also, the scale at which we are engaged in digital business makes traditional security tools outdated. Security teams must be equipped with real-time visibility to understand what’s happening all the way up at the web application layer. It is a constant challenge to map all the connections we are building and the personal data that is spreading everywhere. This challenge must be addressed not just from the technical standpoint but also from the legal and legislative context.

With the arrival of the new General Data Protection Regulation (GDPR) legislation, security professionals must become data-centric. As a result, they can no longer rely on traditional practices to monitor and protect data, along with the web applications that act as a front door to the user’s personal data. GDPR is the beginning of wisdom when it comes to data governance and has more far-reaching implications than one might think. It has been predicted that by the end of 2018, more than 50% of the organizations affected by GDPR will not be in full compliance with its requirements.”

“Cloud computing is the technology that equips organizations to build products and services for both internal and external usage. It is one of the exceptional shifts in the I.T industry that many of us are likely to witness in our lifetimes. However, to align both business and operational goals, cloud security issues must be addressed by governance and not just treated as a technical issue. Essentially, the cloud combines resources such as central processing units (CPUs), memory, and hard drives and places them into a virtualized pool. Consumers of the cloud can access the virtualized pool of resources and allocate them according to their requirements. Upon completion of the task, the assets are released back into the pool for future use.

Cloud computing represents a shift from a server-based to a service-based approach, ultimately offering significant gains to businesses. However, these gains are often eroded when the business’s valuable assets, such as web applications, become vulnerable to the plethora of cloud security threats, which are like a fly in the ointment.”
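The pooling model described above (resources combined into a shared pool, allocated on demand, and released when the task completes) can be sketched in a few lines of Python. This is a toy model under stated assumptions: `ResourcePool`, `Allocation`, and the CPU and memory fields are hypothetical names, and no real cloud provider API is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    """Resources handed to one consumer (hypothetical structure)."""
    cpus: int
    memory_gb: int


@dataclass
class ResourcePool:
    """Free capacity remaining in a virtualized pool (toy model)."""
    cpus: int
    memory_gb: int

    def allocate(self, cpus: int, memory_gb: int) -> Allocation:
        # Refuse nonsensical requests and requests the pool cannot satisfy.
        if cpus < 0 or memory_gb < 0:
            raise ValueError("requested amounts must be non-negative")
        if cpus > self.cpus or memory_gb > self.memory_gb:
            raise RuntimeError("insufficient capacity in pool")
        self.cpus -= cpus
        self.memory_gb -= memory_gb
        return Allocation(cpus, memory_gb)

    def release(self, allocation: Allocation) -> None:
        # Return the resources to the pool for future use.
        self.cpus += allocation.cpus
        self.memory_gb += allocation.memory_gb
```

A consumer would call `allocate` at the start of a task and `release` with the returned `Allocation` once the task is done, which mirrors the on-demand, elastic behaviour the text describes.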

“Firewall Designs & the Evolving Security Paradigm

The firewall has weathered a number of design changes. Initially, we started with a single chunky physical firewall and prayed that it wouldn’t fail. We then moved to a variety of firewall design models such as active-active and active-backup mode. The active-active design really isn’t a true active-active due to certain limitations. The active-backup design, however, leaves one device, which is possibly quite expensive, sitting idle, waiting to take over in the event of a primary firewall failure.

We now have the ability to put firewalls in containers. At the same time, some vendors claim that they can cluster up to eight firewalls, creating one big active firewall. While these introductions are technically remarkable, they are complex as well. Anything complex in security is certainly a volatile place to dock a critical business application.”

“Introduction

Internet Protocol (IP) networks provide services to customers and businesses across the globe. Practically everything and everyone is connected in some form or another. As a result, the stability and security of the network and the services that ride on top of IP are of paramount importance for successful service delivery. The connected world banks on IP networks, and as the reliance mushrooms, so does the level of network and web application attacks. Although the new technologies may offer services that simplify life and help businesses function more efficiently, in certain scenarios they change the security paradigms, which introduces a great deal of complexity.

Mixing complexity with security is like stirring water into oil, which eventually results in a crash. We operate in a world where we need multiple layers of security and updated security paradigms in order to meet the latest application requirements. The significant questions to ponder here are: can we trust the new security paradigms? Are we comfortable withdrawing from the traditional security model of well-defined component tiers? How does the security paradigm appear from a security auditor’s perspective?”

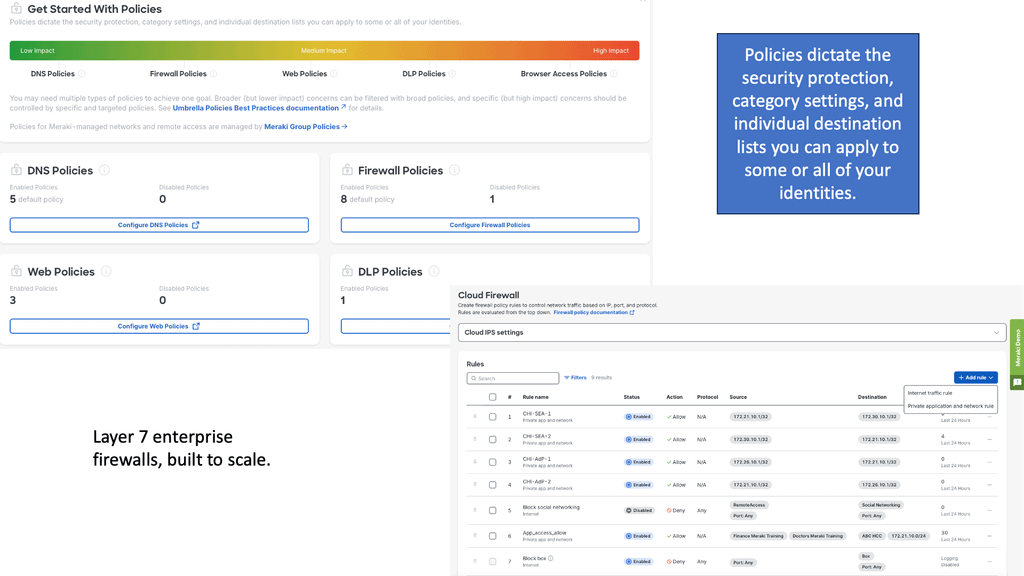

“Part One in this two-part series looked at the evolution of network architecture and how it affects security. Here we will take a deeper look at the security tools needed to deal with these changes.

The Firewall is not enough

Firewalls in three-tier or leaf and spine designs are not lacking features; that is not the actual problem. They are feature-rich. The problem is the management of Firewall policies, which can leave the door wide open. This might invite a bad actor to infiltrate the network and move laterally throughout it, searching for valuable assets served up on a silver platter. The central Firewall is often referred to as a “holy cow” as it contains so many configured policies that no one knows what they are used for. Have you ever heard of the 20-year-old computer that can be pinged, but no one knows where it is or whether it has had any security patches in the last decade?

A poorly configured Firewall, no matter how feature-rich it is, poses exactly the same threat as a 20-year-old unpatched computer. It is nothing less than a fly in the ointment. Over the years, the physical Firewall will have had many different security administrators. Security professionals change jobs every couple of years, and each year the number of configured policies on the Firewall increases. When the security administrator leaves his or her post, the Firewall policy stays configured but is often undocumented. Yet the rule may not even be active anymore. Therefore, we are left with central security devices holding thousands of rules that no one fully understands but that still sit there like deadwood.”
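One way to start clearing that deadwood is a periodic audit that flags rules with no documentation or no recent traffic. The sketch below is in Python and is purely illustrative: the `FirewallRule` fields, the `audit_rules` function, and the one-year idle threshold are assumptions, not the export format or API of any real firewall product.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class FirewallRule:
    """Hypothetical rule record, e.g. parsed from a policy export."""
    name: str
    description: str
    last_hit: Optional[date]  # None means the rule has never matched traffic


def audit_rules(rules: list[FirewallRule], today: date,
                max_idle_days: int = 365) -> dict[str, list[str]]:
    """Map each suspicious rule name to the reasons it was flagged."""
    cutoff = today - timedelta(days=max_idle_days)
    findings: dict[str, list[str]] = {}
    for rule in rules:
        reasons = []
        if not rule.description.strip():
            reasons.append("undocumented")
        if rule.last_hit is None:
            reasons.append("never matched")
        elif rule.last_hit < cutoff:
            reasons.append("idle")
        if reasons:
            findings[rule.name] = reasons
    return findings
```

The output is a review list, not a delete list: a flagged rule still needs an owner to confirm it is safe to remove.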

“The History of Network Architecture

The goal of any network and its underlying infrastructure is simple. It is to securely transport the end user’s traffic to support an application of some kind, without any packet drops that may trigger application performance problems. A key point to consider here is that the metrics used to achieve this goal and the design of the underlying infrastructure come in many different forms. Therefore, it is crucial to tread carefully and fortify the many types of web applications under an umbrella of hardened security. Network design has evolved over the last 10 years to support the new web application types and the ever-changing connectivity models such as remote workers and Bring Your Own Device (BYOD).”

“Part 1 in this series looked at Online Security and the flawed protocols it rests upon. Online Security is complex and its underlying fabric was built without security in mind. Here we shall explore aspects of Application Security Testing. We live in a world of complicated application architecture compounded by poor visibility, leaving the door wide open for compromise.

Web Applications Are Complex

The application has transformed from a single-server design to a multi-tiered architecture, which has opened Pandora’s Box.

To complicate application security testing further, multiple tiers have both firewalling and load balancing between them, implemented with either virtualized or physical appliances. Containers and microservices introduce an entirely new wave of application complexity. Individual microservices require cross-communication, yet may be located in geographically dispersed data centers.”

“A plethora of valuable solutions now run on web-based applications. One could argue that web applications are at the forefront of the world. More importantly, we must equip them with appropriate online security tools to barricade against the rising web vulnerabilities. With the right toolset at hand, any website can absorb known and unknown attacks. Today the average volume of encrypted internet traffic is greater than the average volume of unencrypted traffic. Hypertext Transfer Protocol Secure (HTTPS) is good but it’s not invulnerable. We see evidence of its shortcomings in the Heartbleed Bug, where the compromise of secret keys was made possible. Users may assume that because they see HTTPS in the web browser, the website is secure.”