Data Center Failover

In today's digital age, data centers play a vital role in storing and managing vast amounts of critical information. However, even the most advanced data centers are not immune to failures. This is where data center failover comes into play. This blog post will explore what data center failover is, why it is crucial, and how it ensures uninterrupted business operations.

Data center failover refers to seamlessly switching from a primary data center to a secondary one in case of a failure or outage. It is a critical component of disaster recovery and business continuity planning. Organizations can minimize downtime, maintain service availability, and prevent data loss by having a failover mechanism.

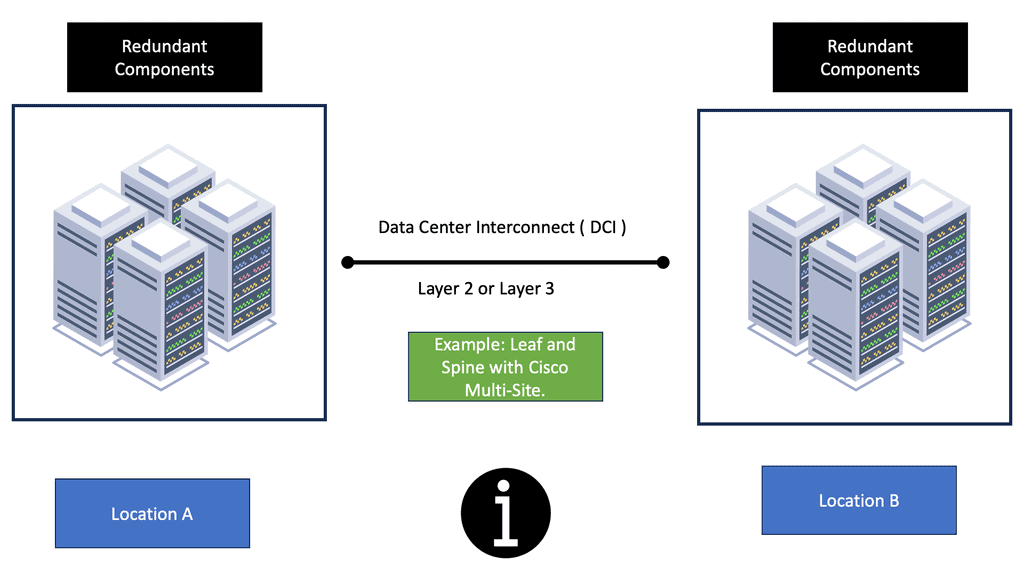

To achieve effective failover capabilities, redundancy measures are essential. This includes redundant power supplies, network connections, storage systems, and servers. By eliminating single points of failure, organizations can ensure that if one component fails, another can seamlessly take over.

Virtualization technologies, such as virtual machines and containers, play a vital role in data center failover. By encapsulating entire systems and applications, virtualization enables easy migration from one server or data center to another, ensuring minimal disruption during failover events.

Proactive monitoring and timely detection of potential issues are paramount in data center failover. Implementing comprehensive monitoring tools that track performance metrics, system health, and network connectivity allows IT teams to detect anomalies early on and take necessary actions to prevent failures.

Regular failover testing is crucial to validate the effectiveness of failover mechanisms and identify any potential gaps or bottlenecks. By simulating real-world scenarios, organizations can refine their failover strategies, improve recovery times, and ensure the readiness of their backup systems.

Matt Conran

Highlights: Data Center Failover

### Understanding High Availability in Data Centers

In our increasingly digital world, data centers are the backbone of countless services and applications. High availability is a crucial aspect of data center operations, ensuring that these services remain accessible without interruption. But what exactly does high availability mean? In the context of data centers, it refers to the systems and protocols in place to guarantee that services are continuously operational, with minimal downtime. This is achieved through redundancy, failover mechanisms, and robust infrastructure design.

### Key Components of High Availability

To achieve high availability, data centers rely on several critical components. Firstly, redundancy is essential; this involves having duplicate systems and components ready to take over in case of a failure. Load balancing is another vital feature, distributing workloads across multiple servers to prevent any single point of failure. Additionally, disaster recovery plans are indispensable, providing a roadmap for restoring services in the event of a major disruption. By integrating these components, data centers can maintain service continuity and reliability.

### The Role of Monitoring and Maintenance

Continuous monitoring and proactive maintenance are pivotal in sustaining high availability in data centers. Monitoring tools track the performance and health of data center infrastructure, providing real-time alerts for any anomalies. Regular maintenance ensures that all systems are running optimally and helps prevent potential failures. This proactive approach not only minimizes downtime but also extends the lifespan of the data center’s equipment. By prioritizing monitoring and maintenance, data centers can swiftly address issues before they escalate.

### Challenges in Achieving High Availability

Despite the benefits, achieving high availability in data centers is not without its challenges. One significant hurdle is the cost associated with implementing redundant systems and sophisticated monitoring tools. Additionally, managing the complexity of diverse systems and ensuring seamless integration can be daunting. Data centers must also navigate evolving security threats and technological advancements to maintain their high availability standards. Addressing these challenges requires strategic planning and investment in cutting-edge technologies.

Database Redundancy

**Understanding High Availability: What It Means for Your Database**

High availability refers to a system’s ability to remain operational and accessible for the maximum possible time. In the context of data centers, this means your database should be able to withstand failures and still provide continuous service. Achieving this involves implementing redundancy, failover mechanisms, and load balancing to mitigate the risk of downtime and ensure that your data remains safeguarded.

**Key Strategies for Achieving High Availability**

1. **Redundancy and Load Balancing**: Implement redundant systems and components to eliminate single points of failure. Load balancing ensures that traffic is evenly distributed across servers, minimizing the risk of any one server becoming overwhelmed.

2. **Regular Backups and Disaster Recovery Planning**: Regular backups are a fundamental part of a high availability strategy. Pair this with a robust disaster recovery plan to ensure that, in the event of a failure, data can be restored quickly and operations can resume with minimal disruption.

3. **Cluster Configurations and Failover Systems**: Use cluster configurations to link multiple servers together, allowing them to act as a unified system. Failover systems automatically switch to a standby server if the primary one fails, thereby ensuring continuous availability.
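As a rough illustration of how load balancing and redundancy work together, the sketch below rotates requests across a pool of servers and skips any server marked unhealthy. The class name `RoundRobinBalancer` and the backend names are hypothetical, and a production load balancer would add health probes, weighting, and connection draining.

```python
class RoundRobinBalancer:
    """Rotate across backends, skipping any that are marked down."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._next = 0  # index of the next backend to try

    def mark_down(self, backend):
        self._healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self._backends:
            self._healthy.add(backend)

    def pick(self):
        # Try each backend at most once per call.
        for _ in range(len(self._backends)):
            candidate = self._backends[self._next]
            self._next = (self._next + 1) % len(self._backends)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
before_failure = [lb.pick() for _ in range(3)]
lb.mark_down("app-2")  # simulate a server failure
after_failure = [lb.pick() for _ in range(4)]
print(before_failure)  # ['app-1', 'app-2', 'app-3']
print(after_failure)   # ['app-1', 'app-3', 'app-1', 'app-3']
```

Because the pool has more than one member, losing `app-2` only shrinks capacity; traffic keeps flowing to the remaining servers.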

**Challenges in Maintaining High Availability**

Despite best efforts, maintaining high availability comes with its challenges. These can include the cost of additional infrastructure, the complexity of managing redundant systems, and the potential for human error during maintenance tasks. It’s crucial to anticipate these challenges and prepare accordingly to ensure a seamless high availability strategy.

Creating Redundancy with Network Virtualization

In network virtualization, multiple physical networks are consolidated and operated as single or numerous independent networks by combining their resources. A virtual network is created to deploy and manage network services, while the hardware-based physical network only forwards packets. Network virtualization abstracts network resources traditionally delivered as hardware into software.

Overlay Network Protocols: Abstracting the data center

Modern virtualized data center fabrics must meet specific requirements to accelerate application deployment and support DevOps. To support multitenancy on shared physical infrastructure, fabrics must support scaling of forwarding tables, network segments, extended Layer 2 segments, virtual device mobility, forwarding path optimization, and virtualized networks. To meet these requirements, overlay network protocols such as NVGRE, Cisco OTV, and VXLAN are used. Let’s define underlay and overlay to better understand the various overlay protocols.

The underlay network is the physical infrastructure on which an overlay network is built. It is responsible for delivering packets across the network. Physical underlay networks provide unicast IP connectivity between any physical devices (servers, storage devices, routers, switches) in data center environments. However, technology limitations make underlay networks on their own less scalable.

With network overlays, applications that demand specific network topologies can be deployed without modifying the underlying network. Overlays are virtual networks of interconnected nodes sharing a physical network. Multiple overlay networks can coexist simultaneously.
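To make the overlay idea concrete, here is a minimal sketch of VXLAN-style encapsulation: the original Layer 2 frame is prefixed with an 8-byte VXLAN header carrying a 24-bit segment ID (the VNI), as laid out in RFC 7348. The function names are hypothetical, and the outer UDP, IP, and Ethernet headers that the underlay actually routes on are omitted for brevity.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix the tenant's frame with a VXLAN header."""
    return vxlan_header(vni) + inner_frame


def decapsulate(packet: bytes):
    """Return (vni, inner_frame) from a VXLAN payload."""
    if len(packet) < 8:
        raise ValueError("packet too short for a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8, packet[8:]


frame = b"tenant-a-ethernet-frame"  # placeholder bytes, not a real frame
packet = encapsulate(5000, frame)
print(packet[:8].hex())      # 0800000000138800
print(decapsulate(packet))   # (5000, b'tenant-a-ethernet-frame')
```

Two tenants can use identical inner addresses because the VNI keeps their segments apart, while the underlay only ever sees the outer headers.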

**Note: Blog Series**

This blog is part of a series on the tale of active-active data centers and data center failover. The first blog focuses on the GTM Load Balancer and introduces failover challenges. This post mainly addresses database challenges; the third addresses data center failure, focusing on the challenges and best practices for storage; the final post will focus on ingress and egress traffic flows.

Understanding VPC Peering

VPC Peering is a networking connection that allows different Virtual Private Clouds (VPCs) to communicate with each other using private IP addresses. It eliminates the need for complex VPN setups or public internet exposure, ensuring secure and efficient data transfer. Within Google Cloud, VPC Peering offers numerous advantages for organizations seeking to optimize their network architecture.

VPC Peering in Google Cloud brings a multitude of benefits. Firstly, it enables seamless communication between VPCs, regardless of their geographical location. This means that resources in different VPCs can communicate as if they were part of the same network, fostering collaboration and efficient data exchange. Additionally, VPC Peering helps reduce network costs by eliminating the need for additional VPN tunnels or dedicated interconnects.

Database Management Series

A Database Management System (DBMS) is a software application that interacts with users and other applications. It sits between the application and storage tiers, behind other elements known as “middleware,” and connects software components. Not all environments use databases; some store data in files, such as MS Excel spreadsheets. Also, data processing is not always done via query languages. For example, Hadoop has a framework to access data stored in files. Popular DBMSs include MySQL, Oracle, PostgreSQL, Sybase, and IBM DB2. Database storage differs depending on the needs of a system.

Data Center Failover

Why is Data Center Failover Crucial?

1. Minimizing Downtime: Downtime can have severe consequences for businesses, leading to revenue loss, decreased productivity, and damaged customer trust. Failover mechanisms enable organizations to reduce downtime by quickly redirecting traffic and operations to a secondary data center.

2. Ensuring High Availability: Providing uninterrupted services is crucial for businesses, especially those operating in sectors where downtime can have severe implications, such as finance, healthcare, and e-commerce. Failover mechanisms ensure high availability by swiftly transferring operations to a secondary data center, minimizing service disruptions.

3. Preventing Data Loss: Data loss can be catastrophic for businesses, leading to financial and reputational damage. By implementing failover systems, organizations can replicate and synchronize data across multiple data centers, ensuring that in the event of a failure, data remains intact and accessible.

How Does Data Center Failover Work?

Data center failover involves several components and processes that work together to ensure smooth transitions during an outage:

1. Redundant Infrastructure: Failover mechanisms rely on redundant hardware, power systems, networking equipment, and storage devices. Redundancy ensures that if one component fails, another can seamlessly take over to maintain operations.

2. Automatic Detection: Monitoring systems constantly monitor the health and performance of the primary data center. In the event of a failure, these systems automatically detect the issue and trigger the failover process.

3. Traffic Redirection: Failover mechanisms redirect traffic from the primary data center to the secondary one. This redirection can be achieved through DNS changes, load balancers, or routing protocols. The goal is to ensure that users and applications experience minimal disruption during the transition.

4. Data Replication and Synchronization: Data replication and synchronization are crucial in data center failover. By replicating data across multiple data centers in real-time, organizations can ensure that data is readily available in case of a failover. Synchronization ensures that data remains consistent across all data centers.
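The detection and redirection steps above can be sketched as a small monitor that counts consecutive failed health checks and switches the active site once a threshold is crossed. This is a simplified illustration: the class name, site names, and the threshold of three are assumptions, and the `probe` callable stands in for whatever health check (HTTP, ICMP, application-level) an environment uses.

```python
from typing import Callable


class FailoverMonitor:
    """Switch from primary to secondary after repeated failed health checks."""

    def __init__(self, primary: str, secondary: str,
                 probe: Callable[[str], bool], failure_threshold: int = 3):
        self.primary = primary
        self.secondary = secondary
        self.probe = probe                       # returns True when the site is healthy
        self.failure_threshold = failure_threshold
        self.active = primary
        self._failures = 0                       # consecutive failed checks

    def check_once(self) -> str:
        """Run one health check and return the site that should receive traffic."""
        if self.active != self.primary:
            # Already failed over; failing back is left as a manual decision here.
            return self.active
        if self.probe(self.primary):
            self._failures = 0
        else:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self.active = self.secondary
        return self.active


# Simulated probe results: two healthy checks, then the primary goes down.
results = iter([True, True, False, False, False])
monitor = FailoverMonitor("dc-primary", "dc-secondary", probe=lambda site: next(results))
history = [monitor.check_once() for _ in range(5)]
print(history)
# ['dc-primary', 'dc-primary', 'dc-primary', 'dc-primary', 'dc-secondary']
```

Requiring several consecutive failures avoids failing over on a single dropped probe, at the cost of a slightly longer detection time. In practice, the value returned by `check_once` would drive a DNS update, a load balancer pool change, or a routing change.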

What does a DBMS provide for applications?

It provides a means to access massive amounts of persistent data. Databases handle terabytes of data every day, usually far more than can fit into the memory of a standard system. The size of the data and the number of connections mean that the database’s performance directly affects application performance. Databases carry out thousands of complex queries per second over terabytes of data.

The data is often persistent, meaning the data on the database system outlives the period of application execution. The data does not go away; it stays in place after the program stops running. Many users or applications access data concurrently, and measures are in place so concurrent users do not overwrite each other’s data. These measures are known as concurrency controls. They ensure correct results for simultaneous operations. Concurrency control does not mean exclusive access to the database. The control occurs on individual data items, allowing many users to access the database at the same time as long as they are accessing different data items.
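The idea of controlling access per data item rather than per database can be illustrated with a toy in-memory store that keeps one lock per key. This is only an analogy under stated assumptions: the `ItemStore` class is hypothetical, and a real DBMS uses far more sophisticated mechanisms than simple per-key locks.

```python
import threading


class ItemStore:
    """Toy store with one lock per data item instead of one global lock."""

    def __init__(self):
        self._values = {}
        self._locks = {}
        self._registry_guard = threading.Lock()  # protects the lock table itself

    def _lock_for(self, key):
        with self._registry_guard:
            return self._locks.setdefault(key, threading.Lock())

    def add(self, key, amount):
        # Only writers to the same key wait on each other.
        with self._lock_for(key):
            self._values[key] = self._values.get(key, 0) + amount

    def get(self, key):
        with self._lock_for(key):
            return self._values.get(key, 0)


store = ItemStore()


def worker(key):
    for _ in range(1000):
        store.add(key, 1)


keys = ["stock:widget"] * 4 + ["stock:gadget"] * 4
threads = [threading.Thread(target=worker, args=(key,)) for key in keys]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(store.get("stock:widget"), store.get("stock:gadget"))  # 4000 4000
```

Threads updating `stock:widget` never block threads updating `stock:gadget`, yet no update to either item is lost.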

Data Center Failover and Database Concepts

The Data Model refers to how the data is stored in the database. Several options exist. The relational data model stores data as a set of records. XML stores data as a hierarchical structure of labeled values. Finally, in a graph model, all data is represented by nodes.

The Schema sets up the structure of the database. You have to structure the data before you build the application. The database designers establish the Schema for the database. All data is stored within the Schema.

The Schema doesn’t change much, but data changes quickly and constantly. The Data Definition Language (DDL) sets up the Schema. It’s a standard set of commands that define different data structures. Once the Schema is set up and the data is loaded, you can start querying and modifying the data. This is done with the Data Manipulation Language (DML). DML statements are used to retrieve and work with data.

The SQL query language

The SQL query language is a standardized language based on relational algebra. It is a programming language used to manage data in a relational database and is supported by all major relational database systems. A SQL query is passed to the database’s query optimizer, which determines the optimum way to execute it on the database.

The language has two parts: the Data Definition Language (DDL) and the Data Manipulation Language (DML). DDL creates and drops tables, and DML (already mentioned) is used to query and modify the database with Select, Insert, Delete, and Update statements. The Select statement is the most commonly used and performs the database query.
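The split between DDL and DML can be seen in a short example using Python's built-in `sqlite3` module with an in-memory database. The `orders` table and its columns are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# DDL: define the schema.
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " item TEXT NOT NULL,"
    " qty INTEGER NOT NULL)"
)

# DML: insert, update, delete, and query the data.
conn.execute("INSERT INTO orders (item, qty) VALUES (?, ?)", ("widget", 2))
conn.execute("INSERT INTO orders (item, qty) VALUES (?, ?)", ("gadget", 1))
conn.execute("UPDATE orders SET qty = ? WHERE item = ?", (5, "widget"))
conn.execute("DELETE FROM orders WHERE item = ?", ("gadget",))

rows = conn.execute("SELECT item, qty FROM orders ORDER BY id").fetchall()
print(rows)  # [('widget', 5)]

# DDL again: remove the table.
conn.execute("DROP TABLE orders")
conn.close()
```

The schema statements run once, while the data statements run constantly, which matches the observation that the schema changes rarely and the data changes all the time.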

Database Challenges

Database design and failover appetite are business decisions rather than purely technical ones. First, the company must decide on acceptable values for RTO (recovery time objective) and RPO (recovery point objective). How accurate does your data need to be, and how long can a client application remain in a read-only state? There are three main options: a) distributed databases with two-phase commit, b) log shipping, and c) read-only and read-write copies with synchronous replication.

With distributed databases and a two-phase commit, you have multiple synchronized copies of the database. It’s very complex, and latency can be a real problem affecting application performance. Many people don’t use this and go for log shipping instead. Log shipping maintains a separate copy of the database on the standby server.

With log shipping, there are two copies of a single database, a primary and a secondary, either on different computers or on the same computer as separate instances. Only one copy is available at any given time. Any changes to the primary database are logged and propagated to the other copy.

Some environments have a 3rd instance, known as a monitor server. A monitor server records history, checks status, and tracks details of log shipping. A drawback to log shipping is that it has a non-zero RPO. It may be that a transaction was written just before the failure and, as a result, will be lost. Therefore, log shipping cannot guarantee zero data loss. An enhancement to log shipping is read-only and read-write copies of the database with synchronous replication between the two. With this method, there is no data loss, and it’s not as complicated as distributed databases with two-phase commit.
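The non-zero RPO of log shipping can be shown with a small calculation. The sketch below assumes logs are shipped at fixed intervals and that each shipment carries every commit made up to that instant; the function name, the 60-second interval, and the commit timestamps are all made up for illustration.

```python
def lost_transactions(commit_times, ship_interval, failure_time):
    """Return the commits that were never shipped before the primary failed.

    Assumes shipments happen at t = ship_interval, 2 * ship_interval, ...
    and that each shipment includes all commits up to that moment.
    """
    last_shipment = (failure_time // ship_interval) * ship_interval
    return [t for t in commit_times if last_shipment < t <= failure_time]


commits = [5, 20, 58, 61, 95, 118]  # seconds since start (illustrative data)
lost = lost_transactions(commits, ship_interval=60, failure_time=119)
print(lost)  # [61, 95, 118]
```

In this model, the worst-case data loss is everything committed within one shipping interval. With synchronous replication, a commit is only acknowledged once the second copy has it, so the equivalent list is empty.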

Data Center Failover Solution

If you have a transaction database and all the data is in one data center, you will have a problem with latency between the write database and the database client when you start the VM in the other DC. There is not much you can do about latency except shorten the link. Some believe WAN optimization will decrease latency, but many solutions will add it.

How well the application is written will determine how badly the VM is affected. With very poorly written applications, a few milliseconds of added latency can destroy performance. How quickly can you send SQL queries across the WAN link? How many queries per transaction does the application perform? Poorly written applications use transactions encompassing many queries.
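A quick back-of-the-envelope calculation shows why queries per transaction matter so much. The sketch assumes each query is a sequential round trip to the database; the round-trip times and query counts are illustrative numbers, not measurements.

```python
def transaction_network_time_ms(queries_per_txn: int, rtt_ms: float) -> float:
    """Time one transaction spends waiting on the network.

    Assumes queries run one after another, each costing one round trip.
    """
    return queries_per_txn * rtt_ms


# A chatty application: 200 queries per transaction.
same_dc = transaction_network_time_ms(200, 0.5)    # database in the same data center
across_wan = transaction_network_time_ms(200, 10.0)  # database across a WAN link
print(same_dc, across_wan)  # 100.0 2000.0

# A leaner application: 5 queries per transaction over the same WAN link.
lean_across_wan = transaction_network_time_ms(5, 10.0)
print(lean_across_wan)  # 50.0
```

Under these assumptions, moving the chatty application away from its database turns a 100 ms transaction into a 2-second one, while the leaner application barely notices the same link.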

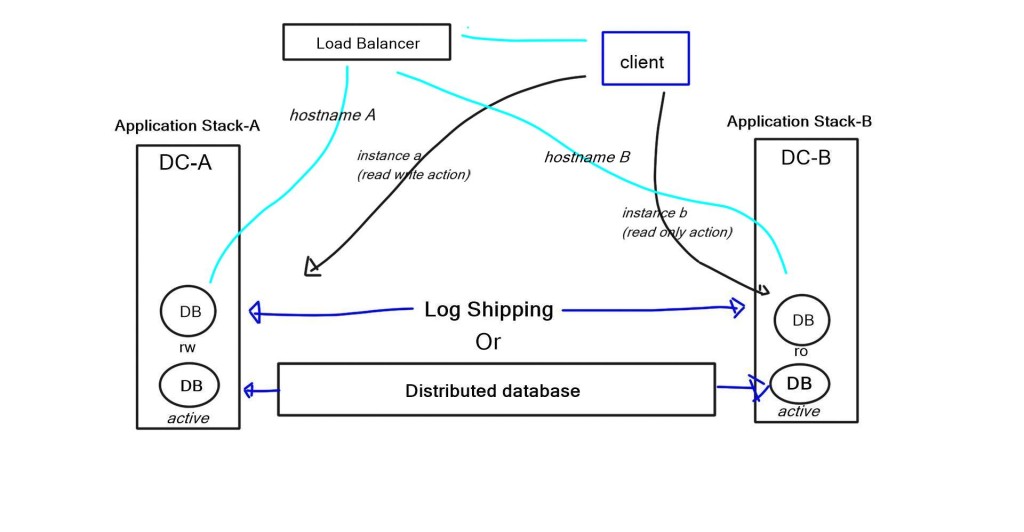

Multiple application stacks

A better approach would be to use multiple application stacks in different data centers. Load balancing can then be used to forward traffic to each instance. It is better to have several application stacks (known as swim lanes) that are entirely independent. Multiple instances of the same application allow you to take one instance offline without affecting the others.

On the database side, a better approach is to have a single read-write database server and ship its changes to the read-only database server. In the example of two application stacks, one stack is read-only and eventually consistent, and the other is read-write. So if the client needs to make a change, for example, to submit an order, how do you do this from the read-only data center? There are several ways to do this.

One way is in the client software. The application knows which transactions are writes, uses a different hostname for them, and redirects those requests to the read-write database. The hostname can be used with a load balancer to direct queries to the correct database. Another method is to give applications two database instances: read-only and read-write. Every transaction then knows whether it is read-only or read-write and uses the appropriate database instance. For example, purchasing would use the read-write instance, and browsing products would use the read-only instance.
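The second method can be sketched as a lookup from the kind of action to the database instance it should use. The hostnames, action names, and the choice to treat unknown actions as writes are all assumptions made for this example.

```python
from enum import Enum


class Intent(Enum):
    READ = "read"
    WRITE = "write"


# Hypothetical hostnames: a local read-only copy and the remote read-write primary.
DB_HOSTS = {
    Intent.READ: "db-ro.local-dc.example",
    Intent.WRITE: "db-rw.primary-dc.example",
}

# Each application action declares whether it reads or writes.
ACTION_INTENT = {
    "browse_products": Intent.READ,
    "view_cart": Intent.READ,
    "submit_order": Intent.WRITE,
}


def host_for(action: str) -> str:
    """Pick the database host for an action; unknown actions go to read-write."""
    intent = ACTION_INTENT.get(action, Intent.WRITE)
    return DB_HOSTS[intent]


print(host_for("browse_products"))  # db-ro.local-dc.example
print(host_for("submit_order"))     # db-rw.primary-dc.example
```

Defaulting unknown actions to the read-write instance trades some latency for safety, since sending a write to a read-only copy would fail.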

Most things we do are eventually consistent at the user-facing level. If you buy something online, even an item in the shopping cart is not guaranteed until you select the buy button. Orders that cannot be fulfilled are handled manually as exceptions, typically by sending the user an email.

Closing Points: Data Center Failover Databases

Database failover refers to the process of automatically switching to a standby database server when the primary server fails. This mechanism is designed to ensure that there is no interruption in service, allowing applications to continue to function without any noticeable downtime. In a data center, where multiple databases might be running simultaneously, having a robust failover strategy is essential for maintaining high availability.

A comprehensive failover system typically consists of several key components. Firstly, there is the primary database server, which handles the regular data processing and transactions. Secondly, one or more standby servers are in place, usually kept in sync with the primary server through regular updates. Monitoring tools are also critical, as they detect failures and trigger the failover process. Finally, a failover mechanism ensures a smooth transition, redirecting workload from the failed server to a standby server with minimal disruption.

Implementing an effective failover strategy involves several steps. Data centers must first assess their specific needs and determine the appropriate level of redundancy. Options range from simple active-passive setups, where a standby server takes over in case of failure, to more complex active-active configurations, which allow multiple servers to share the load and provide redundancy. Regular testing and validation of the failover process are essential to ensure reliability. Additionally, choosing the right database technology that supports failover, such as cloud-based solutions or traditional on-premise systems, is crucial.

While database failover offers numerous benefits, it also presents certain challenges. Ensuring data consistency and preventing data loss during failover is a primary concern. Network latency and bandwidth can impact the speed of failover, especially in geographically distributed data centers. Organizations must also consider the cost implications of maintaining redundant systems and infrastructure. Careful planning and ongoing monitoring are vital to address these challenges effectively.

Summary: Data Center Failover

In today’s technology-driven world, data centers play a crucial role in ensuring the smooth operation of businesses. However, even the most robust data centers are susceptible to failures and disruptions. That’s where data center failover comes into play – a critical strategy that allows businesses to maintain uninterrupted operations and protect their valuable data. In this blog post, we explored the concept of data center failover, its importance, and the critical considerations for implementing a failover plan.

Understanding Data Center Failover

Data center failover refers to the ability of a secondary data center to seamlessly take over operations in the event of a primary data center failure. It is a proactive approach that ensures minimal downtime and guarantees business continuity. Organizations can mitigate the risks associated with data center outages by replicating critical data and applications to a secondary site.

Key Components of a Failover Plan

A well-designed failover plan involves several crucial components. Firstly, organizations must identify the most critical systems and data that require failover protection. This includes mission-critical applications, customer databases, and transactional systems. Secondly, the failover plan should encompass robust data replication mechanisms to ensure real-time synchronization between the primary and secondary data centers. Additionally, organizations must establish clear failover triggers and define the roles and responsibilities of the IT team during failover events.

Implementing a Failover Strategy

Implementing a failover strategy requires careful planning and execution. Organizations must invest in reliable hardware infrastructure, including redundant servers, storage systems, and networking equipment. Furthermore, the failover process should be thoroughly tested to identify potential vulnerabilities or gaps in the plan. Regular drills and simulations can help organizations fine-tune their failover procedures and ensure a seamless transition during a real outage.

Monitoring and Maintenance

Once a failover strategy is in place, continuous monitoring and maintenance are essential to guarantee its effectiveness. Proactive monitoring tools should be employed to detect any issues that could impact the failover process. Regular maintenance activities, such as software updates and hardware inspections, should be conducted to keep the failover infrastructure in optimal condition.

Conclusion:

In today’s fast-paced business environment, where downtime can translate into significant financial losses and reputational damage, data center failover has become a lifeline for business continuity. By understanding the concept of failover, implementing a comprehensive failover plan, and continuously monitoring and maintaining the infrastructure, organizations can safeguard their operations and ensure uninterrupted access to critical resources.