Virtual Switch

In today's digital age, where connectivity is paramount, network administrators constantly seek ways to optimize network performance and streamline management processes. The virtual switch is a crucial component that plays a significant role in achieving these goals. In this blog post, we will delve into virtual switches, exploring their benefits, features, and how they contribute to creating efficient and robust network infrastructures.

A virtual switch, also known as a vSwitch, is a software-based network switch that operates within a virtualized environment. It connects virtual machines (VMs) to each other and to the host's physical network interfaces, directing traffic between the VMs and the physical network. By emulating the functions of a traditional physical switch, a virtual switch enables efficient and flexible network management in virtualized environments.

Enhanced Network Virtualization: Virtual switches provide the foundation for network virtualization, allowing organizations to create multiple virtual networks within a single physical network infrastructure. This enables better resource utilization, improved security, and simplified network management.

Simplified Network Configuration: Unlike physical switches, which are typically configured box by box, virtual switches can be managed and configured through software interfaces. This flexibility allows for dynamic allocation of network resources, making it easier to adapt to changing network requirements.

Improved Network Security: Virtual switches offer advanced security features, such as virtual LAN (VLAN) segmentation and access control lists (ACLs). These features help isolate and secure network traffic, reducing the risk of unauthorized access and potential security breaches.

Scalability and Flexibility: Virtual switches provide greater scalability and flexibility compared to their physical counterparts. With virtual switches, organizations can quickly add or remove virtual ports, adapt to changing network demands, and dynamically allocate network resources as needed.

Cost Efficiency: Virtual switches reduce the need for additional switching hardware, resulting in cost savings for organizations. By leveraging existing server infrastructure, they reduce both the capital and operational expenses associated with physical switches, although each host still needs physical uplinks to the network.

Conclusion: The virtual switch has emerged as a game-changer in the world of connectivity. Its ability to enhance network virtualization, simplify network configuration, and improve security makes it a valuable tool for organizations across various industries. As our reliance on virtualized environments continues to grow, the virtual switch will undoubtedly play a pivotal role in shaping the future of connectivity.

Matt Conran

Highlights: Virtual Switch

Enter the Hypervisor

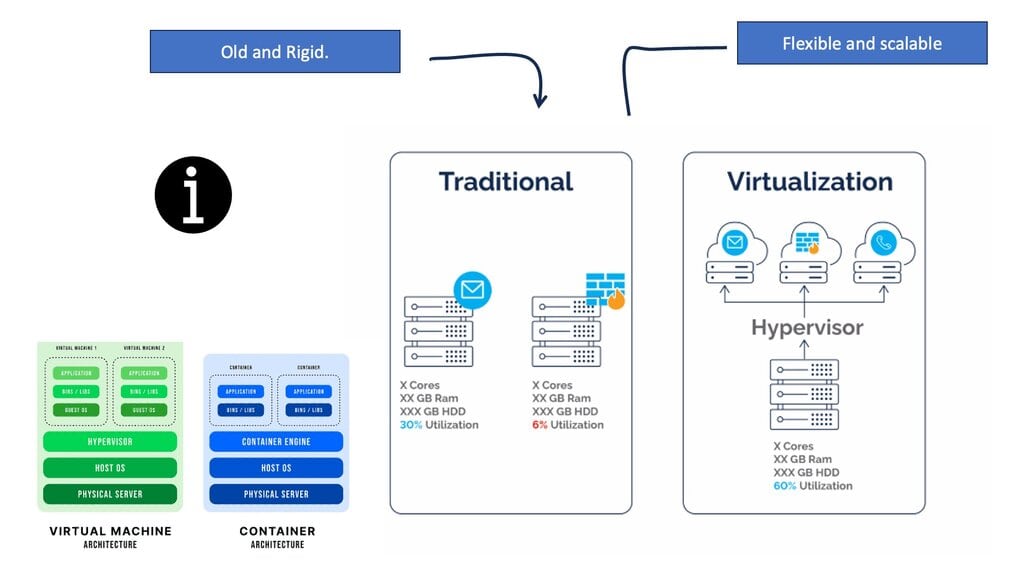

Servers use hypervisors to separate the operating system from the underlying hardware resources. With a hypervisor, the operating system reaches the server's drivers and resources through the hypervisor rather than directly.

It’s a fair question: adding a software layer might seem likely to reduce efficiency, so how can a hypervisor make a server more efficient?

In short, a hypervisor provides a virtual connection to a server’s resources for a virtual machine’s operating system. The significant part is that it can do this for many VMs running on the same server, regardless of whether they use different operating systems or applications.

Virtual machines and hypervisors allow us to consolidate servers on a very large scale. Most servers run at only 5% to 10% of their capacity. By combining multiple applications onto one server while keeping CPU usage below 80%, a company may save 6x to 15x in physical server costs. There are other efficiencies, too: a 10x reduction in server numbers dramatically reduces cooling and power costs.
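The consolidation figures above come down to simple arithmetic. The sketch below is a back-of-the-envelope estimate under loudly stated assumptions: CPU is the only constraint, every server has the same average utilization, and hypervisor overhead, memory, and I/O are ignored. The function names are hypothetical. Treat the result as an optimistic ceiling rather than a prediction.

```python
import math


def consolidation_ratio(avg_util_pct: int, target_util_pct: int = 80) -> int:
    """Upper bound on how many lightly loaded servers fit onto one host.

    Assumes CPU is the only constraint and ignores hypervisor overhead,
    memory, and I/O, so real-world ratios will be lower.
    """
    if not 0 < avg_util_pct <= target_util_pct:
        raise ValueError("average utilization must be > 0 and <= the target")
    # Integer percentages avoid floating-point surprises in the division.
    return target_util_pct // avg_util_pct


def hosts_needed(server_count: int, avg_util_pct: int, target_util_pct: int = 80) -> int:
    """Number of virtualization hosts needed to absorb `server_count` servers."""
    ratio = consolidation_ratio(avg_util_pct, target_util_pct)
    return math.ceil(server_count / ratio)


for util in (5, 10):
    print(f"{util}% utilization: up to {consolidation_ratio(util)}:1, "
          f"100 servers -> {hosts_needed(100, util)} hosts")
```

With an 80% ceiling, 5% utilization gives 16:1 and 10% gives 8:1; for 100 servers, that is 7 and 13 hosts respectively.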

Another significant benefit of virtualization is the ability to migrate the servers of an entire data center within a few hours without disrupting service. Moving a data center without virtualization would almost certainly cause widespread service disruption over weeks or months. Virtualization also improves disaster recovery capabilities and reduces provisioning times for new applications.

Virtualizing the network

VMs supporting applications or services need physical switching and routing to communicate with data center clients over a WAN link or the Internet. Data centers also require security and load balancing. The virtual switch in the hypervisor is the first switch that traffic meets when it leaves a VM, followed by the physical switches (ToRs or EoRs). When traffic leaves the hypervisor, it enters the physical network, which is not designed to track the rapid changes in the state of the VMs connected to it.

Creating logical networks of virtual machines on top of the physical network solves this problem. As with most network virtualization, VXLAN does this through encapsulation. Whereas the 12-bit VLAN ID limits any given physical network to 4096 VLANs, VXLAN can create around 16 million logical networks. This scale makes VXLAN essential for large data centers and clouds.
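The difference in scale comes straight from field widths: the 802.1Q VLAN ID is 12 bits, while the VXLAN Network Identifier (VNI) is 24 bits. The following sketch packs and parses the 8-byte VXLAN header laid out in RFC 7348 (a flags byte with the I bit set, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits). It builds only the VXLAN header; the outer Ethernet, IP, and UDP headers that a real tunnel endpoint adds are out of scope, and the function names are illustrative.

```python
import struct

VLAN_ID_BITS = 12     # 802.1Q VLAN ID field width
VNI_BITS = 24         # VXLAN Network Identifier field width (RFC 7348)
VXLAN_FLAG_I = 0x08   # "I" flag: the VNI field is valid

# "!B3xI": network byte order; 1 flags byte, 3 reserved bytes,
# then a 32-bit word holding the VNI in its top 24 bits (low 8 bits reserved).
_VXLAN_FORMAT = "!B3xI"


def build_vxlan_header(vni: int) -> bytes:
    if not 0 <= vni < 2 ** VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack(_VXLAN_FORMAT, VXLAN_FLAG_I, vni << 8)


def parse_vni(header: bytes) -> int:
    flags, word = struct.unpack(_VXLAN_FORMAT, header[:8])
    if not flags & VXLAN_FLAG_I:
        raise ValueError("I flag not set; VNI is not valid")
    return word >> 8


print("VLAN IDs:", 2 ** VLAN_ID_BITS)   # 4096
print("VXLAN VNIs:", 2 ** VNI_BITS)     # 16777216
header = build_vxlan_header(5000)
print(header.hex(), parse_vni(header))
```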

The Role of Virtual Switching

Virtual switching is not carried out by a standard physical switch. Instead, a distributed virtual switch sits close to the workloads and connects to a ToR switch, which is the first-hop physical device from the virtual switch. In a VMware virtualized environment, a single host runs multiple virtual machines (VMs) on the ESXi hypervisor’s kernel, the VMkernel.

A physical host usually does not have enough network cards to dedicate a physical NIC to every VM. There are exceptions, such as Cisco VM-FEX, but we generally have more virtual machines than physical network cards. We therefore need a network that lets VMs communicate out of an uplink or with each other internally.

Traffic Boundaries

Implementing a Layer 2 switch within the ESXi hosts allows traffic between VMs in the same VLAN to be switched locally. Traffic that crosses VLAN boundaries is passed to a security or routing device northbound of the virtual switch.

There are possibilities for micro-segmentation, VM NIC firewalls, and stateful inspection firewalls, but we will deal with them in a later article. Essentially, the virtual switch aggregates traffic from multiple VMs across a set of links and delivers frames between VMs based on their Media Access Control (MAC) addresses, all of which falls under the umbrella of virtual switching with a distributed virtual switch.
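The forwarding decision described above can be modelled in a few lines. This is a simplified sketch, not VMware’s implementation: it assumes the virtual switch already knows the MAC address and VLAN of every local VM port (so it does no learning), it ignores broadcast and multicast, and it uses a hypothetical port name, uplink, for the path toward the ToR switch. Frames for a local VM in the same VLAN are switched inside the host; everything else, including traffic addressed to the default gateway for inter-VLAN routing, goes northbound.

```python
from __future__ import annotations

from dataclasses import dataclass

UPLINK = "uplink"  # hypothetical name for the port toward the ToR switch


@dataclass(frozen=True)
class Frame:
    vlan: int
    src_mac: str
    dst_mac: str


class HostSwitch:
    """Simplified per-host virtual switch with statically known VM ports."""

    def __init__(self) -> None:
        # (VLAN, MAC) -> local VM port; the hypervisor knows its own VMs' MACs.
        self.local_ports: dict[tuple[int, str], str] = {}

    def attach_vm(self, port: str, vlan: int, mac: str) -> None:
        self.local_ports[(vlan, mac.lower())] = port

    def forward(self, frame: Frame) -> str:
        # Destination is a local VM in the same VLAN: switch locally.
        port = self.local_ports.get((frame.vlan, frame.dst_mac.lower()))
        if port is not None:
            return port
        # Remote VM or default gateway (inter-VLAN traffic): send northbound.
        return UPLINK


sw = HostSwitch()
sw.attach_vm("vm-blue-1", 10, "00:50:56:00:00:01")
sw.attach_vm("vm-blue-2", 10, "00:50:56:00:00:02")
print(sw.forward(Frame(10, "00:50:56:00:00:01", "00:50:56:00:00:02")))  # vm-blue-2
print(sw.forward(Frame(10, "00:50:56:00:00:01", "00:00:5e:00:01:0a")))  # uplink
```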

Related: You may find the following posts helpful as background:

- Distributed Firewalls

- VMware NSX Security

- Nest Hypervisors

- Layer-3 Data Center

- Overlay Virtual Networks

- WAN SDN

- Hyperscale Networking


Back to Basics: Virtual Switch with VMware

VMware Virtual Switch

The virtual network delivers networking for virtual machines running on hosts such as ESXi in the VMware world. Just as physical switches are the essential component of a physical network, the virtual switch is the essential component of a virtual network. A virtual switch is a software-based switch built into the ESXi kernel (VMkernel) that delivers networking for the virtual environment.

For example, traffic to and from virtual machines passes through one of the virtual switches in the VMkernel. The virtual switch connects virtual machines so they can communicate with each other, whether they run on the same host or on different hosts. A virtual switch works at Layer 2 of the OSI model.

Benefits of Virtual Switches:

1. Enhanced Network Performance: Virtual switches enable administrators to allocate network resources dynamically, optimizing performance based on workload demands. Virtual switches reduce network congestion by efficiently managing bandwidth and prioritizing traffic, resulting in faster and more reliable data transmission.

2. Simplified Network Management: Virtual switches, particularly distributed ones, provide central management and configuration, so administrators no longer need to configure each switch individually. This streamlines network management processes, reducing complexity and saving valuable time for administrators.

3. Improved Security and Isolation: Virtual switches offer advanced security features like VLANs (Virtual Local Area Networks) and access control lists (ACLs). These features enhance network security by isolating traffic and preventing unauthorized access to sensitive data. Additionally, virtual switches allow administrators to implement network policies and monitor traffic, enabling efficient network segmentation and analysis.

Features of Virtual Switches:

1. VLAN Support: Virtual switches support VLANs, allowing administrators to logically divide a physical network into multiple virtual networks. This enables better network segmentation, improved security, and more efficient use of network resources.

2. Quality of Service (QoS): Virtual switches provide QoS capabilities, allowing administrators to prioritize specific types of network traffic. By assigning higher priority to critical applications such as VoIP (Voice over Internet Protocol) or video conferencing, they help keep latency low for the traffic that needs it most.

3. Traffic Monitoring and Analysis: Virtual switches offer built-in traffic monitoring and analysis tools. Administrators can monitor network traffic in real-time, identify bottlenecks, and gain valuable insights into network performance. This enables proactive troubleshooting and optimization of network resources.
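VLAN membership and QoS priority travel in the same 4-byte IEEE 802.1Q tag: a 16-bit TPID of 0x8100 followed by a tag control field holding a 3-bit Priority Code Point (PCP), a 1-bit Drop Eligible Indicator (DEI), and the 12-bit VLAN ID (IDs 0 and 4095 are reserved, leaving 4094 usable). The sketch below packs and parses that tag; the function names are illustrative, and using PCP 5 for voice is a common convention rather than a rule.

```python
from __future__ import annotations

import struct

TPID_8021Q = 0x8100


def build_dot1q_tag(vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Return the 4-byte 802.1Q tag inserted after the source MAC address."""
    if not 0 <= vid <= 0xFFF:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= pcp <= 7:
        raise ValueError("PCP must fit in 3 bits")
    if dei not in (0, 1):
        raise ValueError("DEI is a single bit")
    tci = (pcp << 13) | (dei << 12) | vid  # PCP | DEI | VID
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_dot1q_tag(tag: bytes) -> tuple[int, int, int]:
    """Return (vid, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag[:4])
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag: TPID {tpid:#06x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 1


voice_tag = build_dot1q_tag(vid=100, pcp=5)  # e.g. a VoIP VLAN with high priority
print(voice_tag.hex())                     # 8100a064
print(parse_dot1q_tag(voice_tag))          # (100, 5, 0)
```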

1st Lab Guide: Virtual Switching and Open vSwitch

Open vSwitch in Linux

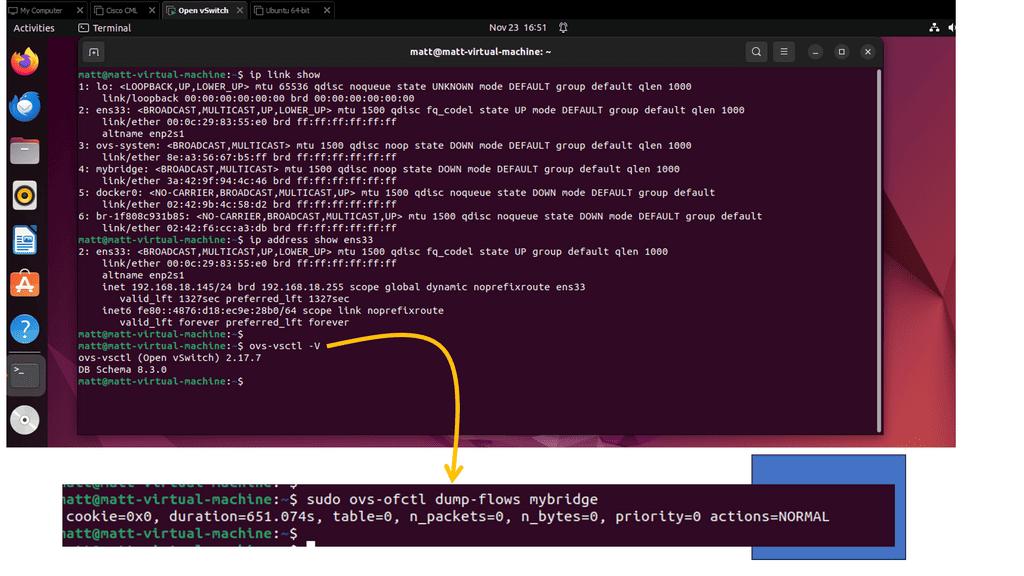

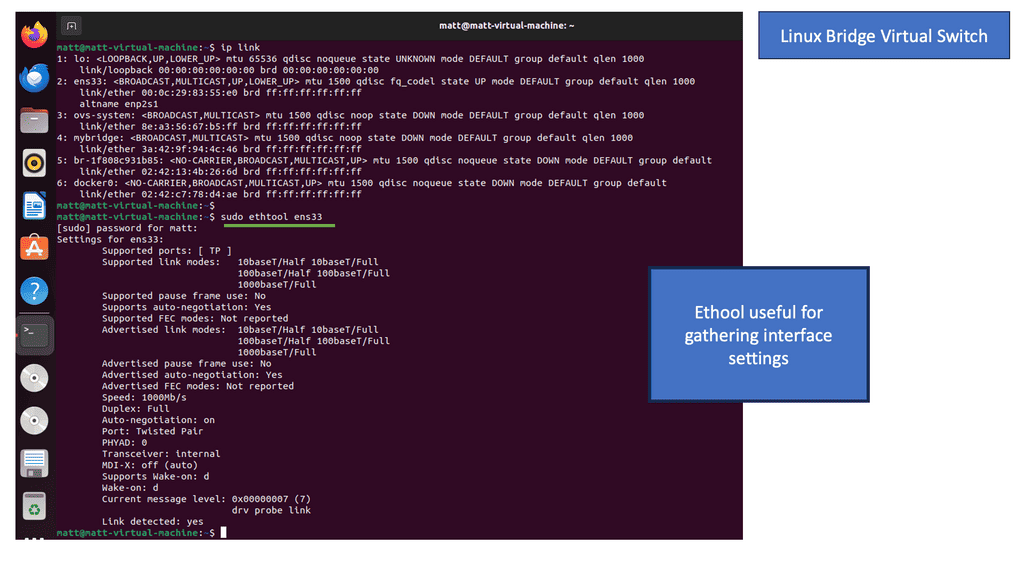

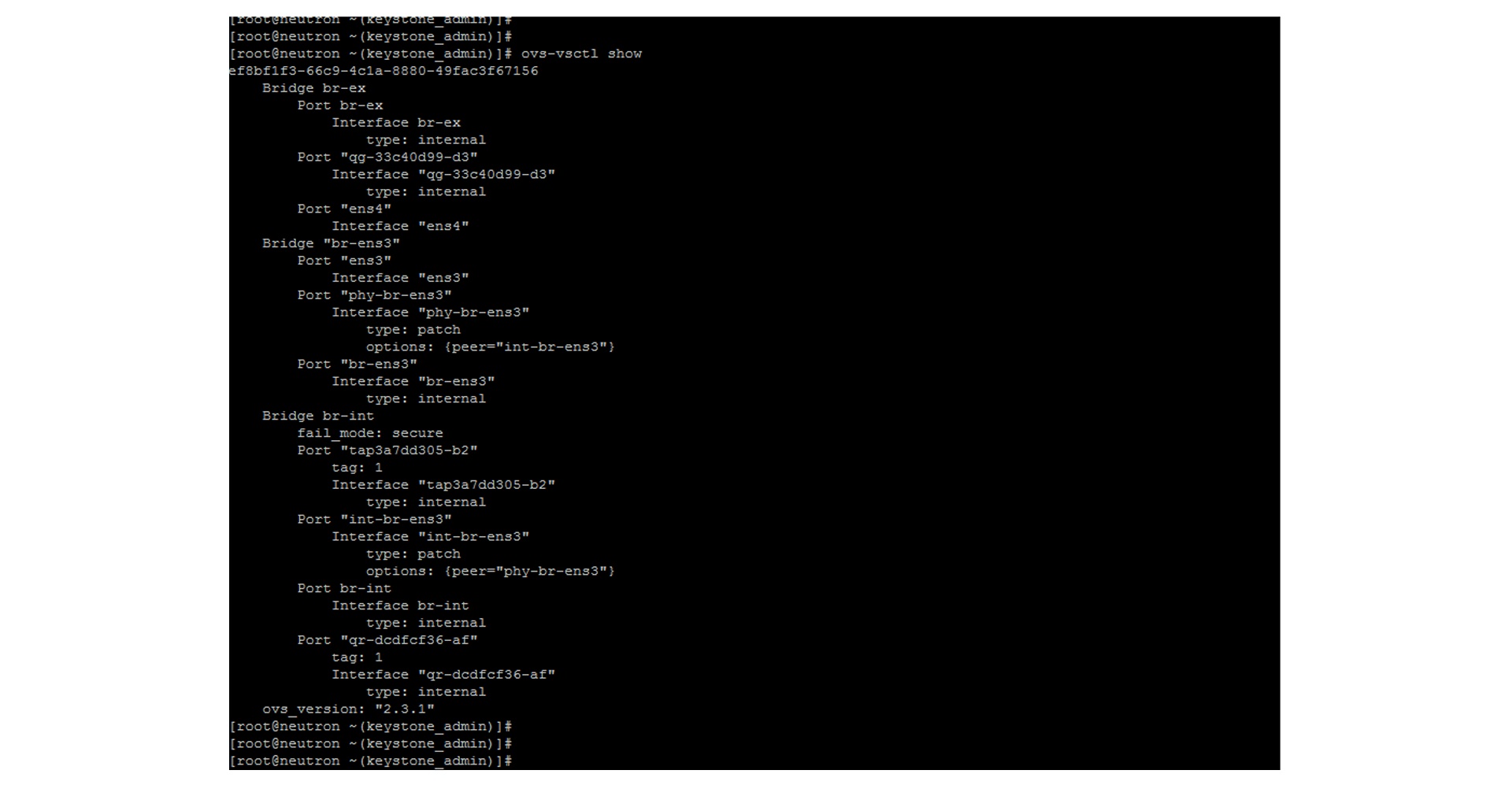

This guide addresses virtual switching with Open vSwitch (OVS). OVS is a multi-layer virtual switch that can perform basic Layer 2 and Layer 3 functions. I have OVS running on an Ubuntu host. Let’s first look at the setup and network configuration. I’m in a virtualized environment, and we have an uplink interface, ens33, to the outside world. You can also see that OVS is already installed, with a bridge called my bridge set up.

Note:

OVS acts like a standard switch and carries out the same switching logic you would find in, for example, a physical Catalyst switch. LAN switches receive Ethernet frames and then make a switching decision: either forward the frame out of some other ports or ignore it. To accomplish this primary mission, switches perform three actions:

- Decide whether to forward a frame or filter (not forward) it, based on the destination MAC address.

- Learn MAC addresses by examining the source MAC address of each frame the switch receives, in preparation for forwarding.

- Create a loop-free Layer 2 environment with other switches by using Spanning Tree Protocol (STP), so that only one copy of each frame is forwarded to the destination.
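The first two actions, forward/filter and learn, fit in a short sketch. This is a teaching model under stated assumptions: it has no MAC aging, no VLANs, and no STP (the third action), and the class and port names are hypothetical.

```python
class LearningSwitch:
    """Minimal transparent-bridge logic: learn, then forward, filter, or flood."""

    BROADCAST = "ff:ff:ff:ff:ff:ff"

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the set of ports the frame should be sent out of."""
        # Learn: the source MAC is reachable via the ingress port.
        self.mac_table[src_mac.lower()] = in_port

        dst = dst_mac.lower()
        out_port = self.mac_table.get(dst)
        if dst == self.BROADCAST or out_port is None:
            # Broadcast or unknown unicast: flood to every other port.
            return self.ports - {in_port}
        if out_port == in_port:
            # Destination is on the ingress segment: filter (do not forward).
            return set()
        # Known unicast: forward out of the single learned port.
        return {out_port}


sw = LearningSwitch(["p1", "p2", "p3"])
print(sw.receive("p1", "aa:aa:aa:aa:aa:01", "aa:aa:aa:aa:aa:02"))  # flooded to p2 and p3
print(sw.receive("p2", "aa:aa:aa:aa:aa:02", "aa:aa:aa:aa:aa:01"))  # {'p1'}
```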

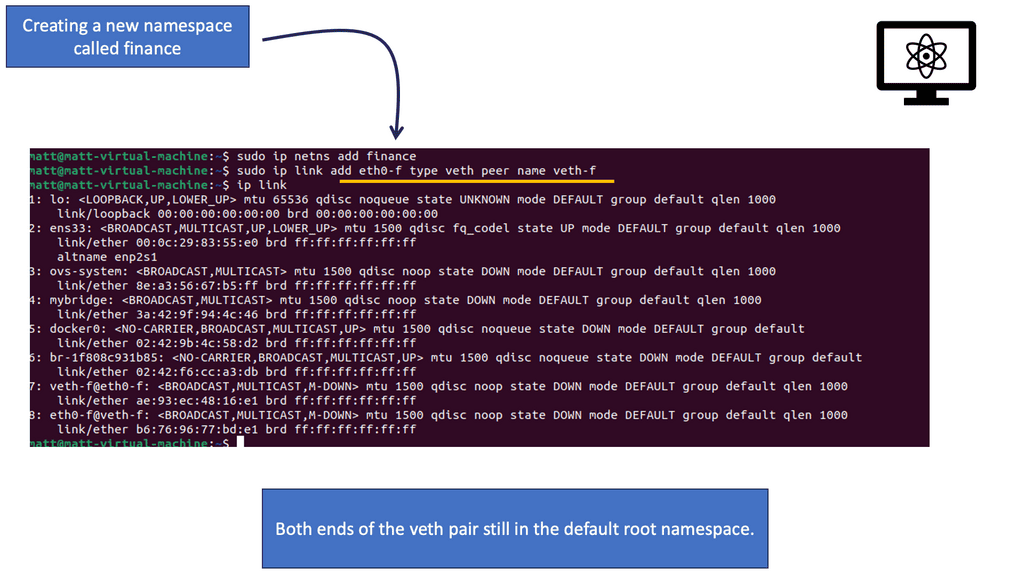

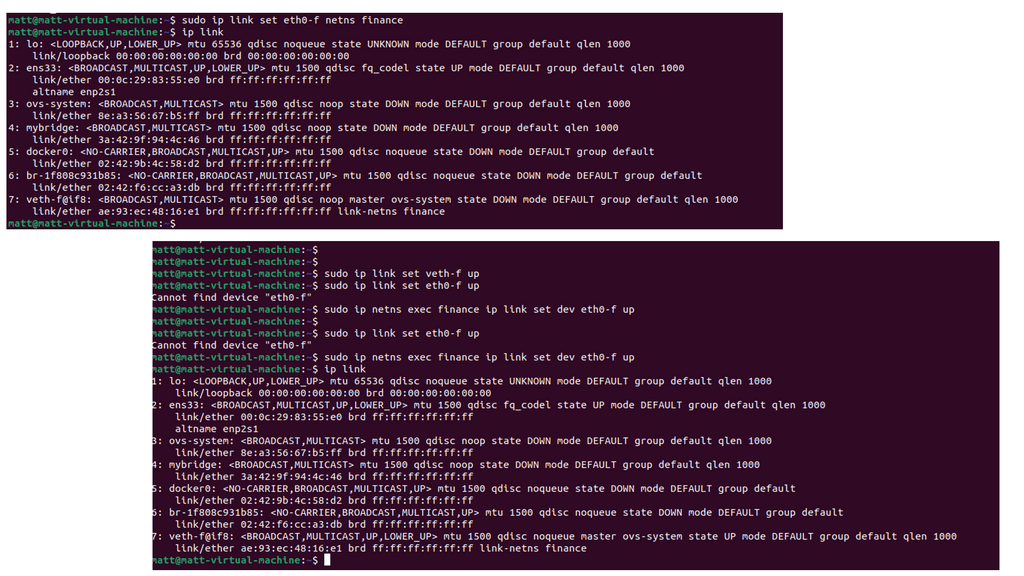

Linux uses namespaces, which are the underlying technology of containers. Note that we added a new namespace called finance. We will also create a new veth pair. A veth pair is like a virtual cable: what goes in one end must come out the other. Think of a veth pair as the virtual equivalent of a standard physical cable.

Remember, when we create a new namespace, we need to move one end of the veth pair into that namespace. In this setup, we have configured only one namespace, finance, in addition to the default root namespace. By default, the OVS switch is in the root namespace, and it can be used to connect disparate namespaces. OVS can also use VXLAN to extend Layer 2 over IP.
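The namespace and veth steps above can be scripted. The sketch below only builds the command lines (iproute2’s ip and Open vSwitch’s ovs-vsctl) and prints them; it runs them only if you pass dry_run=False, which needs root and an existing OVS bridge. The bridge name br0, the interface names veth-fin and veth-fin-br, and the address 10.0.0.1/24 are hypothetical placeholders, not the values used in the lab screenshots.

```python
from __future__ import annotations

import shlex
import subprocess


def namespace_to_ovs_commands(bridge: str, namespace: str, ip_cidr: str,
                              ns_if: str, br_if: str) -> list[list[str]]:
    """Commands that connect a new network namespace to an OVS bridge."""
    return [
        ["ip", "netns", "add", namespace],
        # A veth pair is a virtual cable: ns_if <-> br_if.
        ["ip", "link", "add", ns_if, "type", "veth", "peer", "name", br_if],
        # Move one end into the namespace; the other stays in the root namespace.
        ["ip", "link", "set", ns_if, "netns", namespace],
        # Plug the root-namespace end into the OVS bridge.
        ["ovs-vsctl", "add-port", bridge, br_if],
        ["ip", "link", "set", br_if, "up"],
        # Address and enable the interface inside the namespace.
        ["ip", "netns", "exec", namespace, "ip", "addr", "add", ip_cidr, "dev", ns_if],
        ["ip", "netns", "exec", namespace, "ip", "link", "set", ns_if, "up"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


run(namespace_to_ovs_commands("br0", "finance", "10.0.0.1/24",
                              ns_if="veth-fin", br_if="veth-fin-br"))
```

Printing first and executing only on request keeps the sketch safe to try on a workstation before applying it to a lab host.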

Virtual Switch: Three Distinct Types

To enable virtual switching in a VMware environment, there are three types of virtual switch: a) a standalone virtual switch, b) a distributed virtual switch, and c) a third-party distributed switch, such as the Cisco Nexus 1000v. These virtual switches have ports, and the hypervisor presents what looks like a NIC to every VM. Each VM is isolated and sees only its virtual Ethernet adapter, so even if you change the physical cards in the server, the VM does not care, as it never sees the physical hardware.

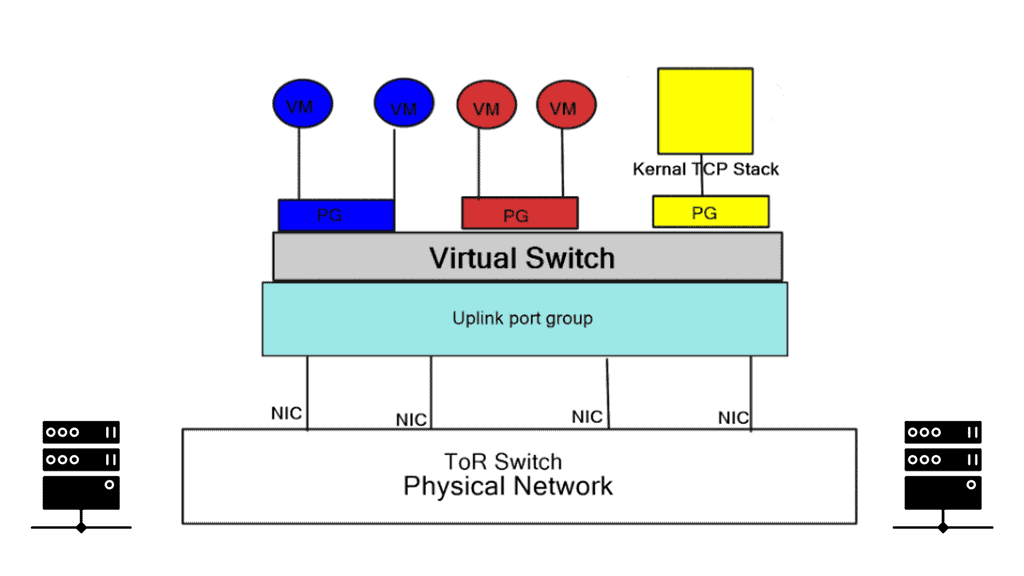

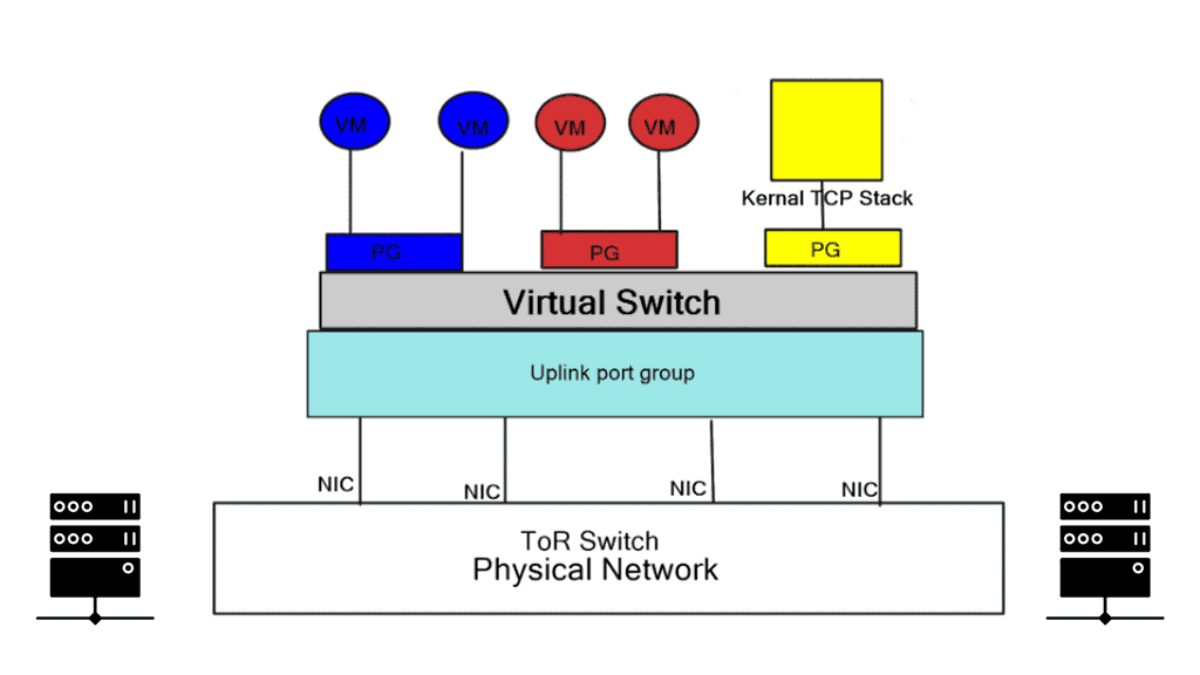

The diagram displays a virtualized environment with two sets of VMs, blue and red, attached to corresponding Port Groups. Port Groups are nothing special; they are simply management groups based on configuration templates. VMs in different Port Groups can communicate freely as long as those Port Groups are in the same VLAN. The virtual NIC is a software construct emulated by the hypervisor.

Virtual switching and the virtual switch

The standalone virtual switch lacks advanced features but gains in performance. It is not feature-rich: it supports standard VLANs, and its control plane is limited to protocols such as CDP. Each ESXi host has an independent switch with its own data and control planes, so every switch is a separate management entity.

The distributed virtual switch (vDS) is purely a management entity that minimizes the configuration burden of the standalone switch. It’s a template you configure in vCenter and apply to individual hosts, letting you view the entire network infrastructure as one object in vCenter. The port and network statistics assigned to a VM move with it when the VM moves.

Virtual distributed switch

The vDS is simply a management template; each ESXi host has its own control and data plane, with its own MAC table and forwarding rules. The local host proxy switch performs packet forwarding and runs control plane protocols. One major vDS drawback is that if vCenter goes down, you cannot change anything on the local hosts.

As a best design practice, most engineers use the standard standalone switch for management traffic and the vDS for VM traffic on the same host. Each virtual switch (standard and distributed) must have its own uplinks. For redundancy, you need at least two uplinks per switch, so a host already needs four uplinks, usually operating at 10 Gbps.

- VMware-based software switches don’t follow standard 802.1D bridge forwarding or run Spanning Tree Protocol (STP). Instead, they use special tricks to prevent forwarding loops, such as Reverse Path Forwarding (RPF) checks on the source MAC address.
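The loop-avoidance idea can be sketched as two checks. This is a simplified model, not VMware’s code, under the assumption that the switch knows the MAC address of every local VM: a frame arriving on an uplink with a local VM’s source MAC must be our own frame coming back (the RPF-style check), so it is dropped, and frames are never bridged from one uplink to another. The class name and the vmnic-style port names are illustrative.

```python
class UplinkLoopGuard:
    """Loop avoidance without STP, for a switch that knows its VMs' MACs."""

    def __init__(self, uplinks, local_vm_macs):
        self.uplinks = set(uplinks)
        self.local_vm_macs = {mac.lower() for mac in local_vm_macs}

    def accept_from_uplink(self, src_mac):
        # RPF-style check: a local VM's MAC must never arrive from the
        # physical network; if it does, the frame has looped back.
        return src_mac.lower() not in self.local_vm_macs

    def egress_ports(self, in_port, candidate_ports):
        # Never send a frame back out of its ingress port.
        out = set(candidate_ports) - {in_port}
        # Never forward uplink-to-uplink: the vSwitch is not a transit bridge.
        if in_port in self.uplinks:
            out -= self.uplinks
        return out


guard = UplinkLoopGuard(["vmnic0", "vmnic1"], ["00:50:56:00:00:01"])
print(guard.accept_from_uplink("00:50:56:00:00:01"))  # False: drop the looped frame
print(guard.egress_ports("vmnic0", ["vmnic0", "vmnic1", "vm1"]))  # {'vm1'}
```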

2nd Lab Guide: Virtual Switch and the Linux Bridge

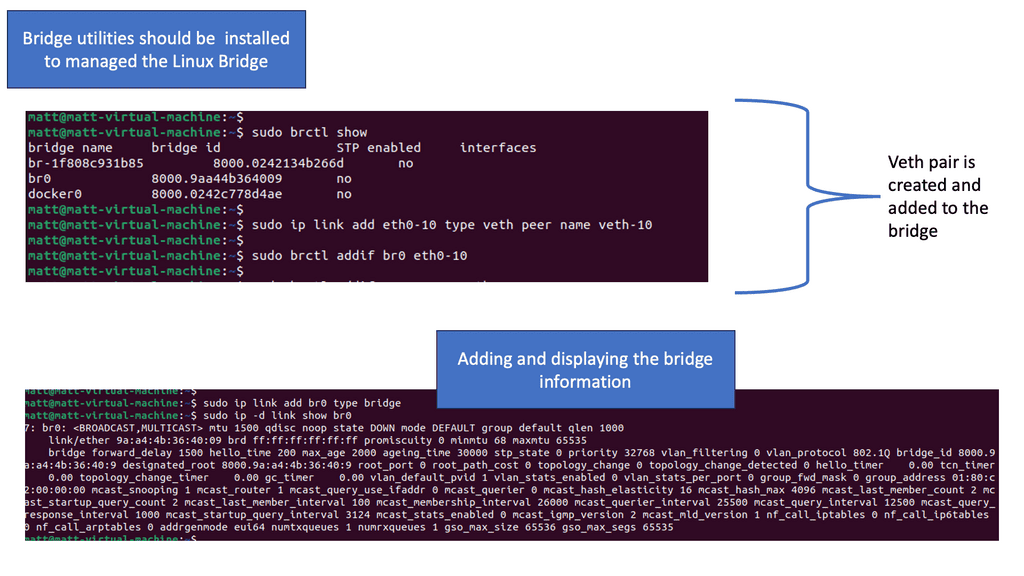

The Linux Bridge is a virtual device that connects multiple network interfaces, allowing them to operate as a single network segment. As in the Open vSwitch lab, this setup uses veth pairs.

Note:

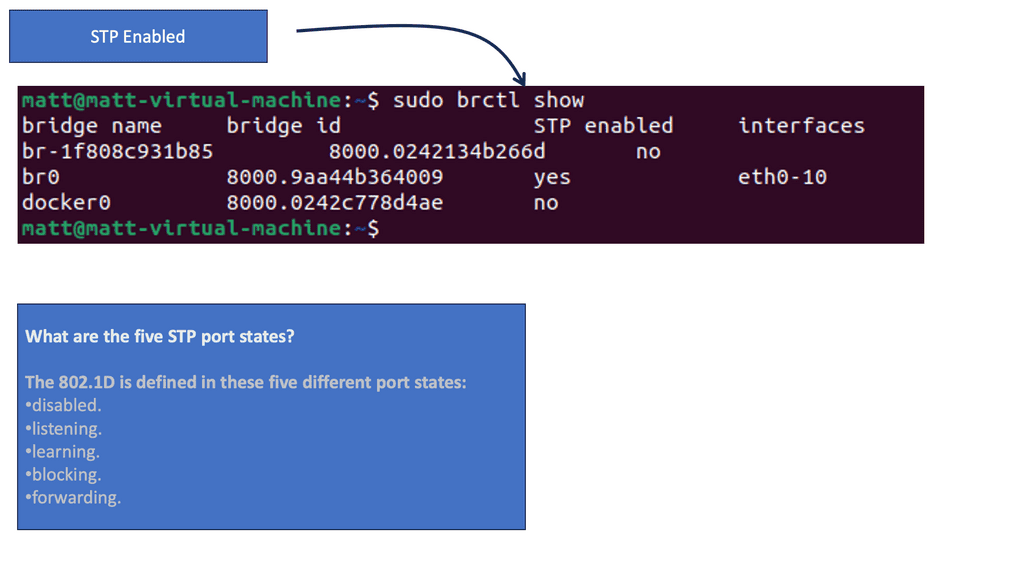

– The Linux Bridge supports STP, which prevents network loops and ensures efficient packet forwarding.

– The Linux Bridge can handle Virtual LANs (VLANs) and allows for the creation of VLAN-aware bridges.

– Configuring VLANs with the Linux Bridge enables network segmentation and improved traffic management.

Linux Bridge Utilities:

Linux Bridge Utilities, or brctl, can create and manage software-based network bridges in Linux. These utilities connect multiple network interfaces at the data link layer, creating a virtual bridge that facilitates communication between different network segments.

The Linux Bridge, like a physical switch, can run STP to prevent loops; as noted above, VMware’s software switches do not.
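A VLAN-aware Linux bridge with STP enabled can be built with iproute2’s ip and bridge commands; on systems that still ship bridge-utils, brctl addbr, brctl addif, and brctl stp cover the bridge, port, and STP steps but not per-port VLANs. The sketch below only generates and prints the commands. The bridge name br0, the veth interface names, and the VLAN IDs are hypothetical, and the interfaces are assumed to exist already.

```python
from __future__ import annotations

import shlex


def linux_bridge_commands(bridge: str, members: dict[str, int]) -> list[list[str]]:
    """Commands for a VLAN-aware, STP-enabled bridge; members maps interface -> access VLAN."""
    cmds = [
        ["ip", "link", "add", "name", bridge, "type", "bridge",
         "stp_state", "1", "vlan_filtering", "1"],
        ["ip", "link", "set", bridge, "up"],
    ]
    for ifname, vid in members.items():
        cmds.append(["ip", "link", "set", ifname, "master", bridge])
        # Make the port an untagged (access) member of its VLAN.
        cmds.append(["bridge", "vlan", "add", "dev", ifname, "vid", str(vid),
                     "pvid", "untagged"])
        if vid != 1:
            # Ports join the default VLAN 1 when attached; remove it to isolate the port.
            cmds.append(["bridge", "vlan", "del", "dev", ifname, "vid", "1"])
        cmds.append(["ip", "link", "set", ifname, "up"])
    return cmds


for cmd in linux_bridge_commands("br0", {"veth-a": 10, "veth-b": 20}):
    print(shlex.join(cmd))
```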

The Cisco Nexus 1000v

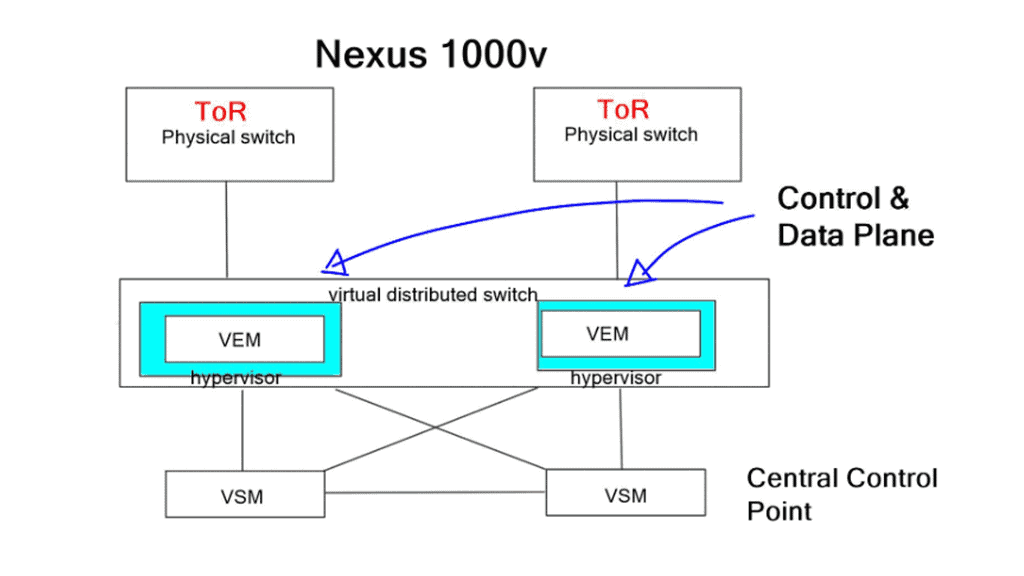

Third-party virtual switches may also be plugged in, and the Nexus 1000v is the most popular. It consists of a control plane, the Virtual Supervisor Module (VSM), and distributed data plane objects, the Virtual Ethernet Modules (VEMs). Cisco initially ran all control plane protocols, including LACP and IGMP snooping, on the VSM. This severely limited scalability, so control plane protocols are now distributed locally to the VEMs.

It’s a feature-rich software switch that supports VXLAN. You can also use the TCP established keyword in ACLs, which VMware’s switches do not offer. Some of these products are free; others require an Enterprise Plus license. If you want a free, feature-rich, standards-based switching product, use Open vSwitch, which is licensed under Apache 2.0.

Open vSwitch

What is OVS? Open vSwitch is similar to the VMware virtual switch and the Cisco Nexus 1000v. It operates as a soft switch within the hypervisor or as the control stack for switching silicon. For example, you can flash your device with OpenWrt and install the Open vSwitch package from the OpenWrt repository. Open vSwitch itself is standards-based.

The following output displays the ports on an Open vSwitch; as you can see, several bridges are present. These bridges forward packets between hosts. OVS has a great feature set for a free switch, including VXLAN, STT, Layer 4 hashing, and OpenFlow.

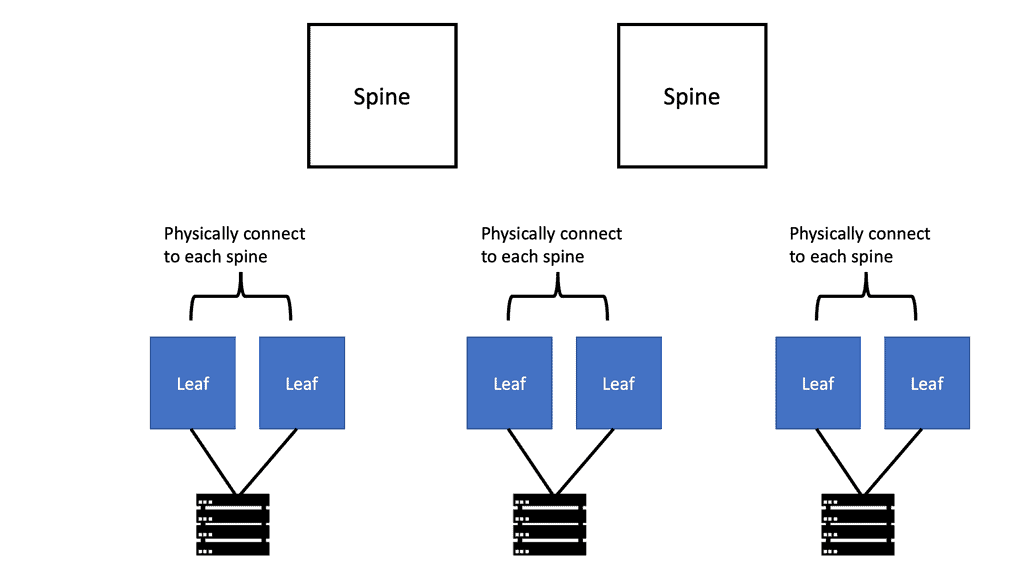
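OpenFlow support means OVS can forward according to a table of prioritized match/action entries, for example one installed with `ovs-ofctl add-flow br0 "priority=100,tcp,tp_dst=23,actions=drop"`. The sketch below is a toy model of that lookup, not OVS’s classifier: the highest-priority entry whose fields all match wins, fields left out of a match are wildcards, and a packet that matches nothing is dropped, as OpenFlow 1.3 specifies when no table-miss entry exists. Representing packets as plain dictionaries keyed by ovs-ofctl-style field names is an assumption for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FlowEntry:
    priority: int
    match: dict  # field name -> required value; omitted fields are wildcards
    actions: list
    n_packets: int = 0


@dataclass
class FlowTable:
    entries: list = field(default_factory=list)

    def add_flow(self, priority: int, actions: list, **match) -> None:
        self.entries.append(FlowEntry(priority, match, actions))
        # Highest priority first; equal priorities keep insertion order here,
        # although OpenFlow leaves overlapping equal-priority matches undefined.
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: dict) -> list:
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                entry.n_packets += 1
                return entry.actions
        return []  # no match and no table-miss entry: drop


table = FlowTable()
table.add_flow(0, ["NORMAL"])  # table-miss style entry: behave like a normal switch
table.add_flow(100, ["drop"], dl_type=0x0800, nw_proto=6, tp_dst=23)  # block Telnet

print(table.lookup({"dl_type": 0x0800, "nw_proto": 6, "tp_dst": 23}))  # ['drop']
print(table.lookup({"dl_type": 0x0800, "nw_proto": 6, "tp_dst": 80}))  # ['NORMAL']
```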

Virtual Switching: Integration with the ToR Switch

The virtual switch needs to connect to a ToR switch. Even though the virtual switch is a logical construct, there must be a physical connection between the host running it and the ToR switch, preferably a redundant one for high availability. However, what happens when a VM on the virtual switch needs to move?

Challenges occur when VMs must move, resulting in enormous VLAN sprawl: because a VM may land on any host, all VLANs are configured on all uplinks to the ToR switches, creating one big switch. What can be done to reduce this requirement? The best case would be a solution that synchronizes the virtual and physical worlds, so that any change in the virtual world is automatically provisioned in the physical world.

Ideally, the list of VLANs configured on each server-facing port would be adjusted dynamically as VMs move around the network. For example, if VM-A moves from location A to location B, we want its VLAN removed from location A (unless another VM there still needs it) and added to location B. Automatic VLAN synchronization reduces broadcast flooding to servers, lowering CPU utilization on each physical node.
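The pruning behaviour described above boils down to reference counting: a VLAN stays on a server-facing port only while at least one VM behind that port uses it. The sketch below is a hypothetical model of that bookkeeping, not any vendor’s implementation; the VM and port names are made up.

```python
class VlanTracker:
    """Track which VLANs each ToR server-facing port needs, based on VM placement."""

    def __init__(self):
        self._location = {}  # VM name -> (port, vlan)
        self._users = {}     # (port, vlan) -> set of VM names

    def vlans_on(self, port):
        return {vlan for (p, vlan) in self._users if p == port}

    def place(self, vm, port, vlan):
        """Record a VM's (new) location; moving a VM removes its old location first."""
        self.remove(vm)
        self._location[vm] = (port, vlan)
        self._users.setdefault((port, vlan), set()).add(vm)

    def remove(self, vm):
        key = self._location.pop(vm, None)
        if key is None:
            return
        self._users[key].discard(vm)
        if not self._users[key]:
            # The last VM using this VLAN left the port: prune the VLAN from it.
            del self._users[key]


tracker = VlanTracker()
tracker.place("VM-A", "Ethernet1", 10)
tracker.place("VM-B", "Ethernet1", 20)
tracker.place("VM-A", "Ethernet2", 10)  # VM-A moves from location A to location B
print(tracker.vlans_on("Ethernet1"), tracker.vlans_on("Ethernet2"))  # {20} {10}
```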

Virtual switch: The different vendors

Arista, Force10, and Brocade have VMware networking solutions on their ToR switches. Arista’s solution is VMtracer, which is natively integrated with EOS and works across all of Arista’s data center switches. VMtracer gives you better visibility into and control over VMs. It sends and receives CDP or LLDP packets to extract VM information, including the VLAN numbering per server port.

When a VM moves, VMtracer can remove its VLAN from the old ports and add it to the new ports. Juniper and NEC use their network management systems to keep track of VMs and update the list of VLANs accordingly.

Cisco uses VM-FEX and a new feature called VM Tracker, which is available on NX-OS. VM Tracker interacts with the vCenter SOAP API: it works with vCenter to identify the VLAN requirements of each VM and to track its movements from one ESXi host to another. It relies on Cisco Discovery Protocol (CDP) information and does not support Link Layer Discovery Protocol (LLDP).

Edge Virtual Bridging (EVB)

An IEEE standard way to solve this is Edge Virtual Bridging (EVB), which Juniper supports, as do HP ToR switches. VMware virtual switches do not currently support it, so to implement EVB in a VMware virtualized environment, you must replace the VMware virtual switch with an HP or Juniper one. EVB uses VLANs or Q-in-Q tagging between the hypervisor and the physical switch, and it introduces the VSI Discovery and Configuration Protocol (VDP) to associate each Virtual Station Interface (VSI) with the physical switch.

VDP runs between the virtual switch in the hypervisor and the adjacent physical switch, enabling the hypervisor to request information from the physical switch (for example, when a VM moves). EVB work followed two paths: a) 802.1Qbg and b) 802.1Qbh. 802.1Qbg is also called VEPA (Virtual Ethernet Port Aggregator), and 802.1Qbh is based on VN-Tag, which Cisco products support. The two efforts ran in parallel, both attempting to provide consistent control for VMs.

Limit core flooding

To reduce flooding in the network core, you need a protocol between the switches that lets them exchange information about which VLANs are in use. Cisco uses VTP, which must be designed carefully. There is a standard Layer 2 messaging protocol called Multiple VLAN Registration Protocol (MVRP), but many vendors do not implement it.

MVRP automates the creation and deactivation of VLANs by allowing switches to register and de-register VLAN identifiers. Unlike VTP, it does not use a client-server model. Instead, MVRP advertises VLAN information over 802.1Q trunks to connected switches that have MVRP enabled on the same interface. The neighboring switch receives the MVRP information and builds a dynamic VLAN table. MVRP is supported on Juniper Networks MX Series routers and EX Series switches.
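The registration idea behind MVRP can be modelled as a per-trunk set of registered VLANs. This is a deliberately simplified sketch: real MVRP, defined in IEEE 802.1Q on top of the Multiple Registration Protocol, uses applicant and registrar state machines with join and leave timers, none of which appear here. The class and the Junos-style port names are illustrative.

```python
class MvrpTrunks:
    """Simplified view of MVRP registrations on a switch's 802.1Q trunks."""

    def __init__(self, trunk_ports):
        # Trunk port -> VLANs the neighbor on that trunk has registered.
        self.registered = {port: set() for port in trunk_ports}

    def join(self, port, vlan):
        # Neighbor declared interest in this VLAN (it has members behind it).
        self.registered[port].add(vlan)

    def leave(self, port, vlan):
        # Neighbor withdrew the VLAN: stop sending that VLAN's traffic there.
        self.registered[port].discard(vlan)

    def flood_ports(self, in_port, vlan):
        """Trunks that should carry broadcast/unknown traffic for `vlan`."""
        return {port for port, vlans in self.registered.items()
                if port != in_port and vlan in vlans}


sw = MvrpTrunks(["ge-0/0/1", "ge-0/0/2", "ge-0/0/3"])
sw.join("ge-0/0/2", 100)
sw.join("ge-0/0/3", 200)
print(sw.flood_ports("ge-0/0/1", 100))  # {'ge-0/0/2'}
sw.leave("ge-0/0/2", 100)
print(sw.flood_ports("ge-0/0/1", 100))  # set()
```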

Virtual switches have become indispensable components in modern network infrastructures. Their ability to enhance network performance, simplify management processes, and provide advanced security features makes them essential for organizations of all sizes. By leveraging the benefits and features of virtual switches, network administrators can create robust and efficient networks, enabling seamless connectivity and optimizing overall network performance.

Summary: Virtual Switch

In today’s digital age, virtual switching has emerged as a transformative technology, revolutionizing how we connect and communicate. From data centers to networking infrastructure, virtual switching has paved the way for unprecedented flexibility and efficiency. This blog post delved into virtual switching, exploring its benefits, working principles, and potential applications.

Understanding Virtual Switching

Virtual switching is the process of emulating network switches using software. Unlike traditional physical switches, virtual switches exist purely in the virtual realm, allowing for dynamic configuration and management. By abstracting the hardware layer, virtual switching enables greater scalability, agility, and cost-effectiveness.

How Virtual Switching Works

Virtual switches operate within hypervisors or virtualization platforms, acting as intermediaries between virtual machines (VMs) and the physical network infrastructure. Many leverage software-defined networking (SDN) principles to steer network traffic, applying policies and forwarding packets between VMs or to external networks. Through these forwarding rules and protocols, virtual switches aim to ensure efficient data transmission and network security.

Benefits and Applications of Virtual Switching

Enhanced Flexibility:

Virtual switching liberates organizations from the constraints of physical hardware. It allows for seamless migration of VMs across hosts, enabling load balancing, resource optimization, and high availability. This flexibility empowers businesses to adapt and scale their networks with ease.

Improved Efficiency:

The dynamic nature of virtual switches streamlines network management and reduces operational complexity. Administrators can configure and provision virtual networks on demand, eliminating the need for manual hardware reconfiguration and resulting in significant time and cost savings.

Network Virtualization:

Virtual switching forms the foundation of network virtualization, enabling the creation of virtual networks, overlays, and logical partitions. By abstracting the network layer, organizations can achieve multi-tenancy, isolate traffic, and enhance security. This technology has found applications in cloud computing, software-defined data centers, and network function virtualization.

Challenges and Considerations

While virtual switching offers numerous advantages, there are also some drawbacks. Factors such as network latency, scalability, and compatibility with existing infrastructure must be carefully evaluated. Additionally, security measures, such as implementing virtual firewalls and access controls, become crucial in protecting virtual networks from potential threats.

Conclusion:

In conclusion, virtual switching has ushered in a new era of connectivity, transforming the networking landscape. Its ability to provide flexibility, efficiency, and network virtualization has made it a vital component of modern IT infrastructure. As technology continues to evolve, virtual switching will play a pivotal role in shaping the future of networking.
