Redundant Links with Virtual PortChannels

In the world of networking, efficiency and reliability are paramount. As data centers expand and organizations strive for seamless connectivity, Virtual PortChannels (vPCs) have emerged as a powerful solution. This blog post aims to demystify vPCs and provide a comprehensive understanding of their benefits, functionality, and implementation considerations.

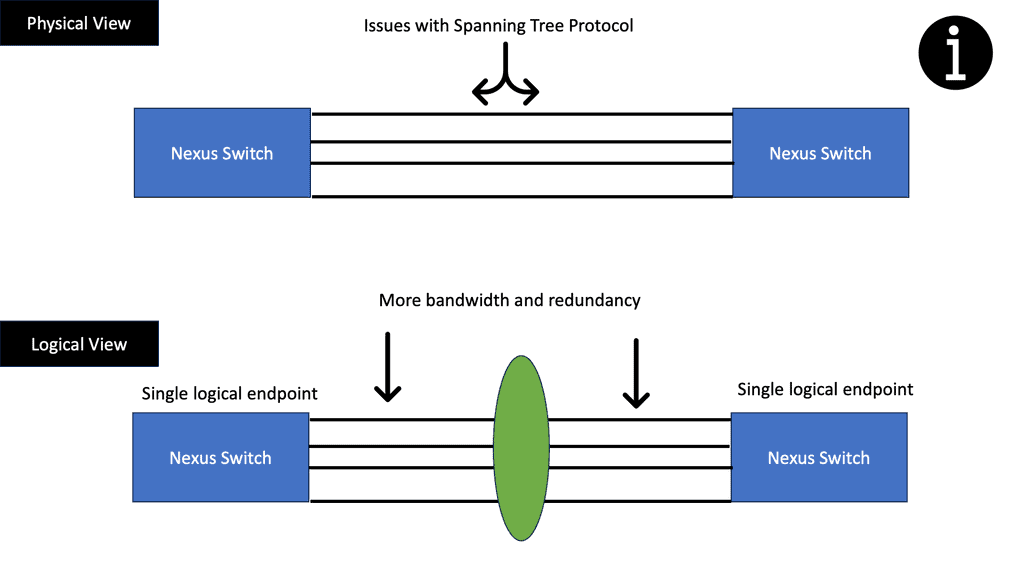

Virtual PortChannels, also known as vPCs, are a technology designed to enhance network scalability and resiliency. By combining multiple physical links into a single logical interface, vPCs allow for increased bandwidth and redundancy, ensuring uninterrupted connectivity and load balancing across network switches.

Redundant links refer to the practice of having multiple physical connections between network devices. This approach mitigates the risks of single points of failure and ensures uninterrupted network connectivity. However, managing redundant links can be complex and resource-intensive.

Virtual PortChannel (vPC) is a technology developed by Cisco Systems that revolutionizes how redundant links are deployed and managed. It allows the creation of a logical link aggregation group (LAG) by bundling multiple physical links, terminating on two different switches, into a single logical interface. This logical interface acts as a single point of attachment for downstream devices, simplifying the network topology.

1. Enhanced Redundancy: By bundling multiple physical links into a vPC, network administrators can achieve higher levels of redundancy. In the event of a link failure, traffic seamlessly fails over to the remaining active links, ensuring uninterrupted connectivity.

2. Improved Bandwidth Utilization: vPC enables load balancing across multiple physical links, maximizing the available bandwidth. This intelligent distribution of traffic prevents link congestion and optimizes network performance.

3. Simplified Network Design: Traditional redundant link configurations often rely on complex Spanning Tree Protocol (STP) configurations to avoid loops. With vPC, STP no longer needs to block redundant links (it remains only as a fallback safeguard), simplifying the network design and reducing potential points of failure.

4. Hardware and Software Requirements: Implementing vPC requires compatible hardware and software. Network administrators must ensure that their devices support vPC functionality and that the necessary licenses are in place.

5. Configuration Best Practices: Proper configuration is crucial for the successful deployment of vPC. Network administrators should follow best practices provided by the equipment manufacturer and ensure consistency across all devices in the vPC domain.

Real-World Use Cases

- Data Centers: vPC is widely used in data center environments to provide high availability and optimal network performance. It allows for seamless migration of virtual machines (VMs) across physical hosts without losing network connectivity.

- Campus Networks: Large campus networks can leverage vPC to enhance redundancy and simplify network management. By aggregating multiple uplinks from access switches, vPC provides a resilient and scalable network infrastructure.

Matt Conran

Highlights: Redundant Links with Virtual PortChannels

Port Channels and vPCs

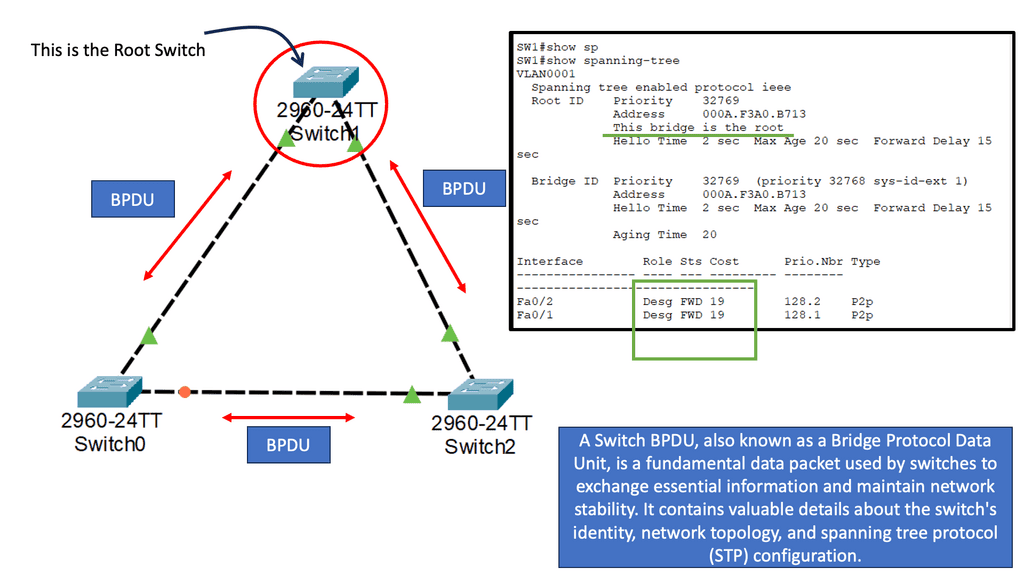

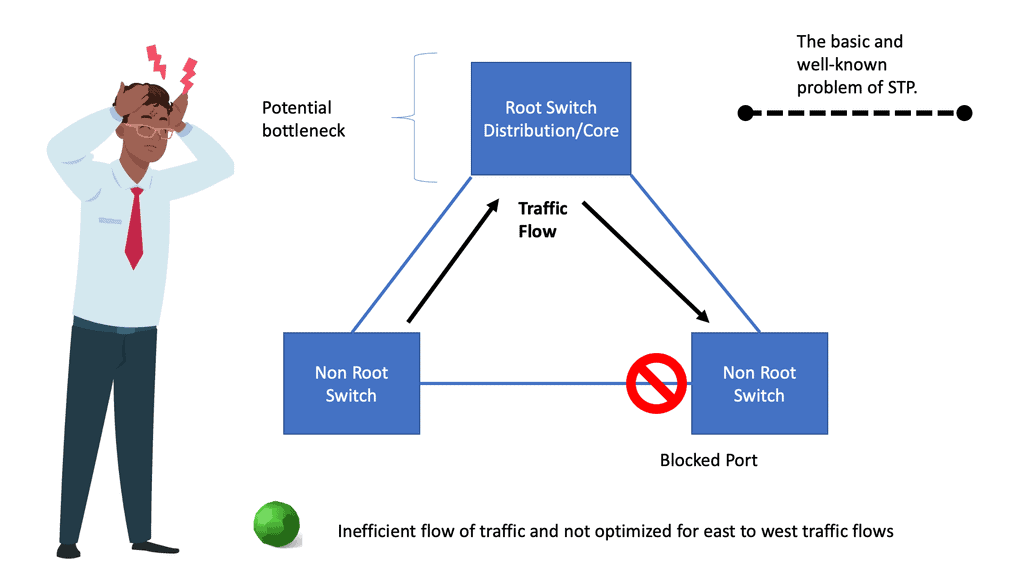

During the early days of Layer 2 Ethernet networks, Spanning Tree Protocol (STP) was used to limit the devastating effects of a topology loop. Even though there may be many connections in a network, STP has one suboptimal principle: only one active path is allowed between two devices.

There are two problems with a single active path: first, half (or more) of the system’s bandwidth is unavailable to data traffic; second, if the active link fails, the network experiences multiple seconds of systemwide data loss while it re-evaluates the new “best” path for Layer 2 forwarding.

One of the significant drawbacks of Spanning Tree is its use of blocking ports. While they are essential to prevent loops, blocked ports essentially go unused, which wastes bandwidth and decreases overall network throughput.

Load Balancing

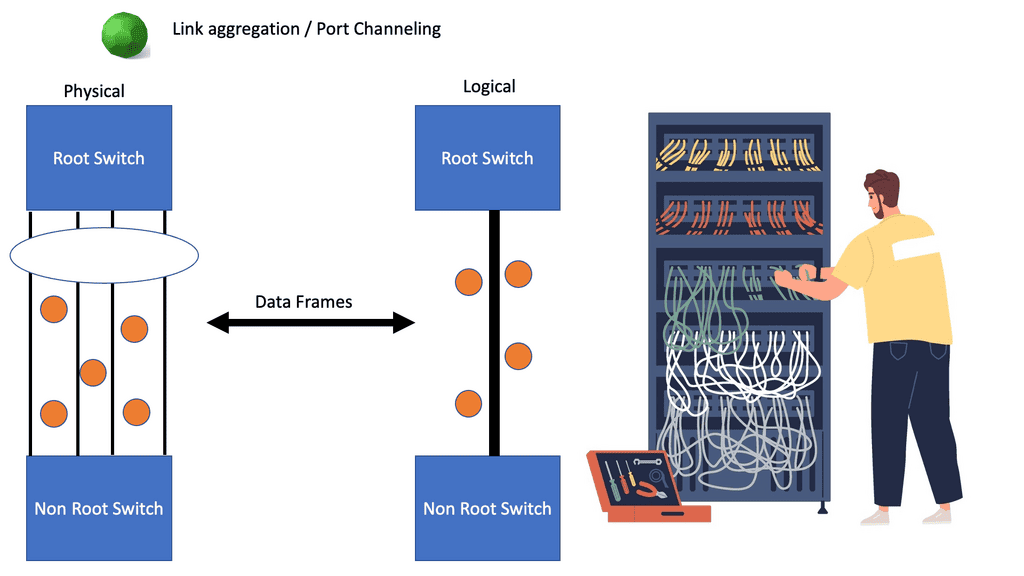

Furthermore, in a robust network with STP loop management, there is no efficient dynamic way to utilize all the available bandwidth. Enhanced Layer 2 Ethernet networks have been developed through the use of port channels and virtual port channels (vPCs). Port Channel technology allows forwarding traffic between two participating devices using a load-balancing algorithm to balance traffic across multiple inter-switch links (ISLs).

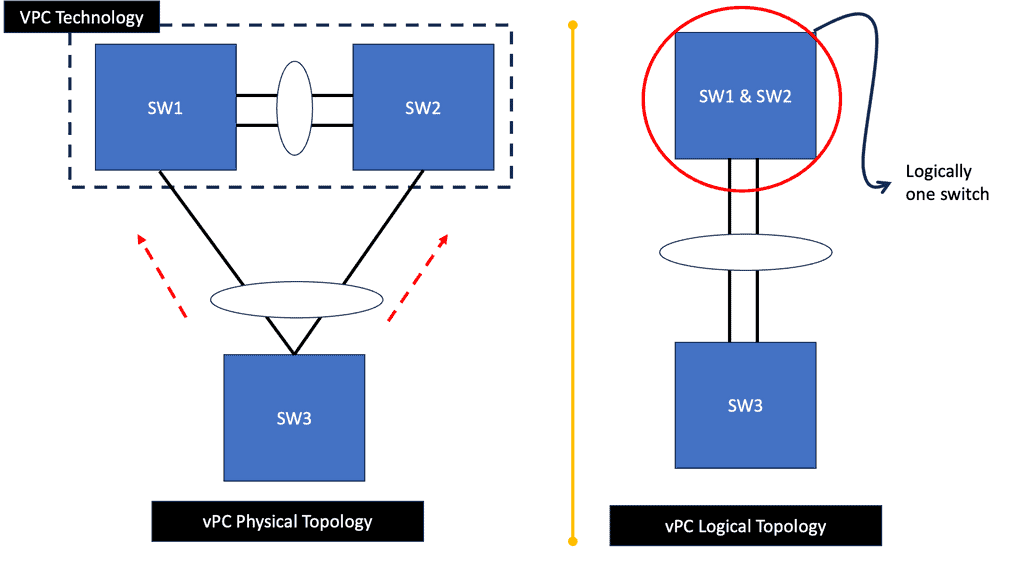

By bundling the links together as one logical link, the loop problem is also managed. Multi-device port channels can be formed using vPC technology: devices attached to a vPC peer pair through a port channel see the two switches as a single logical endpoint, even though the switches remain separate devices. By combining hardware redundancy with port-channel loop management, the vPC environment provides multiple benefits.

Example: Cisco ACI

These technologies are used extensively in Cisco ACI. A virtual port channel (vPC) allows links physically connected to two different ACI leaf nodes to appear as a single port channel to a third device (e.g., a network switch, server, or any other networking device that supports link aggregation). First, let us start with the basics.

Spanning Tree Challenges

Traditional Spanning Tree challenges network designers because it blocks redundant links. The drawbacks of STP (Spanning Tree Protocol) prove extremely expensive in data centers when multiple redundant links are used for mission-critical applications: in a dual-homed design, blocking one of the two uplinks essentially wastes 50% of the capacity.

Port channels let you scale bandwidth: the bundled links appear as one to higher-level protocols, so all member ports forward or block together for a particular VLAN. Aim to design the links in a data center as EtherChannels wherever possible, as this optimizes both bandwidth and reliability.

EtherChannel Technology

Network administrators connect multiple physical Ethernet links between devices to achieve more bandwidth and redundancy. The Spanning Tree Protocol blocks these links, so we need EtherChannel Technology. EtherChannel technology combines several physical links between switches into one logical connection to provide high-speed links and redundancy without being blocked by the Spanning Tree Protocol.

Port Channel and vPC

Port Channel technology forwards traffic between two participating devices using a load-balancing algorithm. Multiple devices can form a virtual port channel (vPC). A third device can see two Cisco Nexus 7000 or 9000 Series devices as a single port channel using a virtual port channel (vPC). Third devices can be switches, servers, or other networking devices that support port channels.

vPC provides Layer 2 multipathing, creating redundancy and increasing bandwidth by enabling multiple parallel paths between nodes and load-balancing traffic across them. Only Layer 2 port channels can be used in a vPC, and they are configured either with LACP or statically, with no protocol.

vPC provides the following technical benefits:

- A single device can share a port channel between two upstream devices

- Spanning Tree Protocol (STP) blocked ports are removed

- Makes sure there are no loops in the topology

- Uplink bandwidth is utilized to the fullest extent possible

- When either a device or a link fails, the system quickly converges

- Resilience at the link level is ensured

- Ensures a high level of availability

Implementation of vPC topologies

vPC supports the following topologies:

- Dual-uplink Layer 2 access: Using a Cisco Nexus 9000 Series switch, an access switch is dual-homed to a pair of distribution switches.

- Dual-homing: This topology connects a server to two switches.

- Topologies supported by FEX: FEX supports various vPC topologies using Cisco Nexus 7000 and 9000 Series switches.

Related: For additional background, you may find the following posts helpful:

- Data Center Fabric

- Optimal Layer 3 Forwarding

- Data Center Failure

- Active Active Data Center Design

- Network Overlays

- Dead Peer Detection


Back to Basics: Redundant links

STP breaks loops in a network, but it does so on one suboptimal principle: only one active path is allowed from one device to another, regardless of how many connections exist in the network. In addition, STP loop management offers no efficient dynamic mechanism for using all the available bandwidth.

Port Channel Technology

So, to overcome these challenges, enhancements to Layer 2 Ethernet networks were made in the shape of port channel and virtual port channel (vPC) technologies. Port Channel technology permits multiple links between two participating devices to forward traffic using a load-balancing algorithm while managing the loop problem by bundling the links as one logical link.

vPC Technology

Then we have the vPC technology. The vPC technology permits multiple devices to create a port channel. In vPC, a pair of switches acting as a vPC peer endpoint looks like a single logical entity to port channel–attached devices; the two devices that serve as the logical port-channel endpoint are still two different and separate devices.

High Availability: Link and Device.

You need to identify the level of high availability you want to achieve in enterprise branch offices. Then, you can meet your high availability requirements with the appropriate device level and link redundancy.

Link-level redundancy requires two links running as active/active or active/backup, so that traffic forwarding recovers if one link fails. Any failure on an access link should therefore not result in a loss of connectivity. To qualify, a branch office must have at least two upstream links, either to a private network or to the Internet.

Device-level redundancy is another level of high availability, ensuring that a backup device can take over if the active device fails. Device and link redundancy are typically deployed together, because a device failure also takes down the links attached to it. With this strategy, a single device failure should not cause a loss of connectivity between branch offices and data centers.

High Availability and Designs

High-availability designs combine link and device redundancy between branches and data centers to ensure business-critical connectivity. Each branch is dual-homed to two data centers, so if one data center fails completely, traffic can be redirected to the backup data center.

It should be possible to reroute traffic within 30 seconds whenever a failure (link, device, or data center) occurs. Packets can be lost during this period, but sessions are maintained as long as user applications can tolerate these failover times. In a branch office with redundant devices, established sessions should not be dropped if the failed device was forwarding traffic.

vPC vs Port Channel

Servers can be attached to the access switches with port channels, the redundant uplinks from the access layer can be link-aggregated, and the core links can also be bundled. Most switches support 8 ports in a bundle, while Nexus platforms support up to 16 or 32 ports.

Build each port channel from ports on different line cards in each redundant switch. This prevents the failure of a single line card from taking down the entire channel, giving redundancy at both the logical and physical layers.

Link Aggregation and Port Channels

Link aggregation (EtherChannel and IEEE 802.3ad) was developed to address that limitation when two redundant Ethernet switches were connected through multiple uplinks. However, it did not address the challenges of data center environments, such as deploying link aggregation in triangular topologies or terminating links on different switches.

Traditional LAG (link aggregation) has limitations because the standard only allows aggregated links to terminate on a single switch. Technologies such as virtual Port Channel (vPC) and Virtual Switching System (VSS) were implemented to overcome this limitation.

Key Points: Port Channels

In summary, a port channel aggregates multiple physical interfaces into one logical interface. On some platforms, you can bundle up to 32 individual redundant links. The port channel load balances traffic across the member links and remains operational as long as at least one member interface is operational. Finally, before we move on to vPC vs port channel, note that you can create either Layer 2 or Layer 3 port channels; as expected, however, you cannot combine Layer 2 and Layer 3 interfaces in the same port channel.

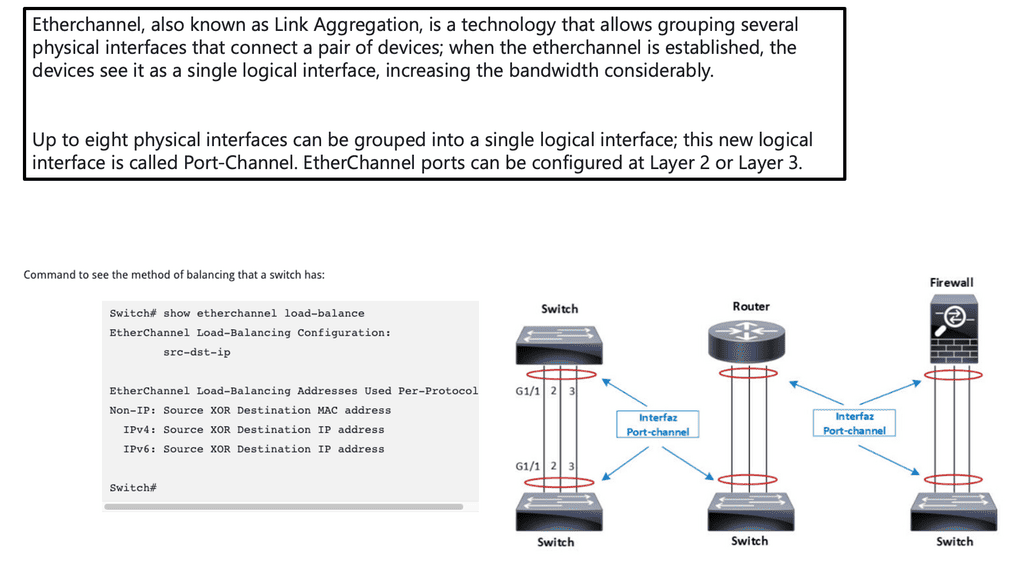

Port-channel load balancing

Using a hashing function, frames are distributed between the physical interfaces that make up the port channel. Depending on the method used for load balancing, this hash will differ. Based on the hash result, the physical port to be used for transmission is determined.

A hashing operation can be performed on MAC or IP addresses based on the source address, destination address, or both (some methods also use the port number). Depending on the switch model and software version, the default load-balancing method can be Layer 2, 3, or 4, and it applies globally to all port channels. Here are a few methods for load balancing EtherChannels:

- src-ip : Source IP address

- dst-ip : Destination IP address

- src-dst-ip : Source and destination IP address

- src-mac : Source MAC address

- dst-mac : Destination MAC address

- src-dst-mac : Source and destination MAC address

- src-port : Source port number

- dst-port : Destination port number

- src-dst-port : Source and destination port number
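The list above names the inputs to the hash, not the hash itself; Cisco's hashing algorithms are platform-specific and are not reproduced here. As a minimal sketch, assuming a simple XOR-and-modulo hash (a common textbook simplification, not the switch's actual function), the Python below shows how the chosen method decides which member link a flow uses. The function name `select_member` is hypothetical.

```python
import ipaddress


def select_member(src_ip: str, dst_ip: str, num_links: int,
                  method: str = "src-dst-ip") -> int:
    """Pick a member link index for a flow (simplified XOR/modulo model)."""
    src = int(ipaddress.ip_address(src_ip))
    dst = int(ipaddress.ip_address(dst_ip))
    if method == "src-ip":
        key = src
    elif method == "dst-ip":
        key = dst
    elif method == "src-dst-ip":
        key = src ^ dst  # mixing both addresses spreads flows more evenly
    else:
        raise ValueError(f"unsupported method: {method}")
    return key % num_links


if __name__ == "__main__":
    # Twenty clients talking to one server over a hypothetical 4-link bundle.
    clients = [f"10.0.0.{i}" for i in range(1, 21)]
    for method in ("dst-ip", "src-dst-ip"):
        links = {select_member(c, "10.0.1.1", 4, method) for c in clients}
        print(method, sorted(links))
```

With `dst-ip`, every flow to the single server hashes to the same member link because the key never changes, while `src-dst-ip` spreads the same flows across all four links.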

Starting the Debate: vPC vs Port Channel

vPC (Virtual Port-Channel) is Cisco's implementation of multi-chassis EtherChannel (MEC) on Nexus switches: it lets you configure a port channel across two redundant switches, using interfaces on both. We now have redundancy at both the link and the switch layer, forming a triangular design. With a standard port channel, by contrast, all links must terminate on the same switch, so no channel can span two redundant switches.

With Virtual PortChannels (vPCs), links from two Cisco switches appear to a third, downstream device as coming from one device and as part of a single PortChannel. The third device can be a switch, a server, or any other networking device that supports IEEE 802.3ad PortChannels. Both standard port channels and vPCs can use the Link Aggregation Control Protocol (LACP).

LACP negotiation and redundant switches

As part of the IEEE 802.3ad standard, the Link Aggregation Control Protocol (LACP) was created to negotiate the channel, and using it is recommended when building a bundle. LACP ports operate in either active or passive mode: in active mode, the port actively initiates negotiation, whereas in passive mode it only responds and never initiates LACP negotiation.

You can form channels between active and passive ports or between two active ports, but not between two passive ports. If the correct modes are not configured on each side of the channel, the port channel will not negotiate and remains down.
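The mode rules above, together with the static "on" mode discussed below, can be captured in a small truth function. This is a minimal sketch of the negotiation outcome only; the function name `channel_forms` is hypothetical, and real switches can behave in subtler ways when an "on" port faces an LACP port (for example, one side bundling while the other does not).

```python
VALID_MODES = {"active", "passive", "on"}


def channel_forms(mode_a: str, mode_b: str) -> bool:
    """Return True if two ends with these modes bring up a working bundle."""
    modes = {mode_a.lower(), mode_b.lower()}
    if not modes <= VALID_MODES:
        raise ValueError(f"unknown mode in {sorted(modes)}")
    if "on" in modes:
        # Static mode sends no LACPDUs, so it only pairs with another static end.
        return modes == {"on"}
    # With LACP, at least one side must initiate negotiation.
    return "active" in modes
```

For example, `channel_forms("active", "passive")` is true, while two passive ends never exchange LACPDUs and so never form a channel.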

The following diagram depicts the logical and physical aspects of a vPC. This applies not only to Cisco vPC but to all aggregation technologies: we have several physical redundant links (four in our case) that appear as one link from a logical perspective.

In either case, LACP can be used as the control plane to negotiate the channel. You may ask: is LACP mandatory for vPC? No. We can use mode “on” to bring up the port channel without negotiation or checks, which turns off LACP and any other control protocol. Is LACP recommended for vPC, then? Yes.

As with a normal port channel, it is always advisable to run a control protocol on a vPC port channel, because LACP adds intelligence in the background, such as detecting misconnected or misconfigured member links. The main difference between vPC and port channels is that a vPC can terminate on two different switches, creating a triangular design.

Building triangles for better redundancy

The inability to build triangles with link aggregation can be mitigated by deploying either the Nexus technology known as virtual Port Channels (vPCs) or the Catalyst technology known as Virtual Switching System (VSS). Both allow a LAG to terminate on two separate switches, resulting in a triangular design. In addition, they group two physical redundant switches so that they appear as a single logical switch to any downstream device (switch or server).

Load Balancing Functions

When a Layer 2 frame is forwarded to a PortChannel, a hash function determines which physical link carries it. The load-balancing methods available on Nexus switches are granular and include the following:

| Nexus switches | Load-balancing method |
| --- | --- |
| Option 1 | Destination IP address |
| Option 2 | Destination MAC address |
| Option 3 | Destination TCP and UDP port number |
| Option 4 | Source and destination IP address |
| Option 5 | Source and destination MAC address |
| Option 6 | Source and destination TCP and UDP port numbers |
| Option 7 | Source IP address |
| Option 8 | Source MAC address |
| Option 9 | Source TCP and UDP port number |

Redundant Links: Detect polarized links

Monitoring the traffic distribution over each physical link is essential to detect polarized links. Polarization occurs when some links attract more traffic than others, resulting in heavy utilization of some redundant links and low utilization of others. Therefore, before choosing a load-balancing method, analyze the traffic flows from source to destination and determine whether they are concentrated on a few addresses or evenly spread. For example, I would not use the source IP address method to load balance traffic from a firewall performing Network Address Translation (NAT) toward a single device: after translation, every flow carries the same source address and hashes to the same link.
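To make the NAT example concrete, here is a minimal sketch that measures how flows spread across member links and flags polarization. It assumes the same simplified XOR-and-modulo hash as a stand-in for the real (platform-specific) hash, counts flows rather than bytes, and uses a hypothetical tolerance of 50% around an even split; the function names are illustrative only.

```python
import ipaddress
from collections import Counter


def link_shares(flows, num_links, method):
    """Fraction of flows hashed to each member link (simplified XOR/modulo hash)."""
    if method not in ("src-ip", "src-dst-ip"):
        raise ValueError(f"unsupported method: {method}")

    def key(flow):
        src, dst = (int(ipaddress.ip_address(addr)) for addr in flow)
        return src if method == "src-ip" else src ^ dst

    counts = Counter(key(f) % num_links for f in flows)
    return [counts[i] / len(flows) for i in range(num_links)]


def is_polarized(shares, tolerance=0.5):
    """Flag a bundle whose busiest or idlest link strays too far from an even split."""
    even = 1 / len(shares)
    return any(abs(s - even) > tolerance * even for s in shares)


if __name__ == "__main__":
    # After NAT, every flow leaves the firewall with the same source address.
    nat_flows = [("203.0.113.10", f"198.51.100.{i}") for i in range(1, 65)]
    for method in ("src-ip", "src-dst-ip"):
        shares = link_shares(nat_flows, 4, method)
        print(method, shares, "polarized" if is_polarized(shares) else "balanced")
```

With `src-ip`, all 64 translated flows land on one link; adding the destination to the hash key spreads them evenly across the bundle.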

Routing Protocols

For routing convergence, keep in mind that routing protocols see the channel as one link. Suppose you have 8 x 10 Gbps ports in one bundle with an OSPF cost of 10: if one member fails, OSPF still marks the link with the same metric, even though the bundle has lost 10 Gbps of capacity. Routing protocols don’t dynamically change metrics because of a member link failure.
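The arithmetic behind this example can be written out as a tiny model. It is a minimal sketch assuming a statically configured OSPF cost, as in the example above; the `Bundle` class is hypothetical and only tracks capacity, to show that the metric and the real capacity drift apart as members fail.

```python
from dataclasses import dataclass


@dataclass
class Bundle:
    members_up: int
    member_gbps: int
    ospf_cost: int  # static cost, as in the example above

    def fail_member(self) -> None:
        # Losing a member shrinks capacity; nothing here touches the cost.
        self.members_up -= 1

    @property
    def capacity_gbps(self) -> int:
        return self.members_up * self.member_gbps


if __name__ == "__main__":
    bundle = Bundle(members_up=8, member_gbps=10, ospf_cost=10)
    for _ in range(3):
        bundle.fail_member()
        print(f"{bundle.capacity_gbps} Gbps available, OSPF cost still {bundle.ospf_cost}")
```

Routing therefore keeps steering the same share of traffic at a bundle that may now have far less capacity than the metric implies.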

Virtual PortChannels Benefits

vPC and VSS offer the following benefits:

- Improved convergence after a link or device failure.

- Elimination of STP-blocked ports (STP remains only as a fallback).

- Independent control planes (vPC only; not with VSS).

- Increased bandwidth, since all redundant links are combined into one from the perspective of STP.

What is vPC?

vPC is Cisco's multi-chassis EtherChannel (MEC) feature on Nexus switches: it allows you to configure a port channel across two switches (the vPC peers). vPC is similar to Virtual Switching System (VSS) on the Catalyst 6500. However, VSS merges the two switches into one logical switch with a single control plane that handles both management and configuration, whereas vPC keeps two independent switches, each managed and configured separately. It is important to remember this: you must create and permit your VLANs on both Nexus switches.
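Because each peer is configured separately, a quick offline comparison of the VLAN lists on the two peers catches the most common mismatch. This is a minimal sketch assuming you have already collected each peer's VLAN IDs into a set (NX-OS also runs its own vPC consistency checks); `vlan_mismatch` is a hypothetical helper, not a Cisco tool.

```python
def vlan_mismatch(peer_a: set, peer_b: set) -> dict:
    """Report VLAN IDs present on one vPC peer but missing on the other."""
    return {
        "missing_on_b": sorted(peer_a - peer_b),
        "missing_on_a": sorted(peer_b - peer_a),
    }


if __name__ == "__main__":
    # Hypothetical VLAN lists gathered from each Nexus peer.
    print(vlan_mismatch({10, 20, 30}, {10, 30, 40}))
```

An empty result on both sides means the VLAN definitions line up; anything else should be fixed on the peer where it is missing.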

Comparing vPC and VSS

vPC and VSS are similar technologies, but the Nexus vPC feature has dual control planes. It supports In-Service Software Upgrade (ISSU), which allows you to upgrade one of the two switches without causing a service interruption. Because the control plane runs independently on each vPC peer, the failure of one peer does not bring down the other.

With VSS, there is a single active control plane, so a failure of the active peer affects the entire logical system. It is also worth noting that vPC still falls back to STP; reliance on STP can only be circumvented entirely by using Cisco FabricPath or TRILL. VSS is available on Catalyst platforms, whereas vPC is solely a Nexus technology.

vPC Terminology:

- vPC Peer – a vPC switch, one of a pair.

- vPC member port – one of the ports that form a vPC.

- vPC – the combined port channel between the vPC peers and the downstream device.

- vPC peer-link – the link used to synchronize state between vPC peer devices; it must be 10GbE or faster. The vPC-related control plane communications occur over this link, and any Ethernet frames it carries receive special treatment to avoid loops on the vPC member ports.

- vPC peer keepalive link – the keepalive link between the vPC peer devices. Using the mgmt0 interface in the management VRF is recommended; if mgmt0 is unavailable, use a dedicated routed interface in a separate VRF.

- vPC VLAN – one of the VLANs carried over the vPC peer link and used to communicate through the vPC with a peer device.

- non-vPC VLAN – an STP VLAN that is not carried over the peer link.

- CFS – Cisco Fabric Services, the protocol used for state synchronization and configuration validation between vPC peer devices.

Within a vPC domain, each peer is assigned a primary or secondary role; by default, when role priorities are equal, the switch with the lowest system MAC address becomes the primary peer. The domain ID identifies the pair of redundant switches and is used to generate a shared MAC address that serves as the logical switch's bridge ID in STP communication.
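The election rule can be expressed as a two-part comparison: lower role priority first, then lower system MAC address as the tie-breaker. This is a minimal sketch of the initial election only; the `VpcPeer` class and `elect_primary` function are hypothetical, and the example priorities and MAC addresses are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VpcPeer:
    name: str
    role_priority: int
    system_mac: str  # e.g. "00:00:00:00:00:01"


def mac_to_int(mac: str) -> int:
    """Convert a colon- or dot-separated MAC address to an integer."""
    return int(mac.replace(":", "").replace(".", ""), 16)


def elect_primary(a: VpcPeer, b: VpcPeer) -> VpcPeer:
    """Lower role priority wins; on a tie, the lower system MAC wins."""
    return min(a, b, key=lambda p: (p.role_priority, mac_to_int(p.system_mac)))


if __name__ == "__main__":
    n1 = VpcPeer("n9k-1", 100, "00:00:00:00:00:02")
    n2 = VpcPeer("n9k-2", 100, "00:00:00:00:00:01")
    print(elect_primary(n1, n2).name)  # equal priorities: lower MAC wins
```

Setting the role priority explicitly, as the best practices below recommend, makes the outcome deterministic instead of depending on hardware MAC addresses. Note that the model ignores later events: the vPC role is not preemptive, so a recovered switch does not automatically reclaim the primary role.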

Virtual PortChannels Best Practices

Below are the best practices to consider for implementation:

- Manually define which vPC switch is primary and which is secondary. The switch with the lower role priority value becomes primary, so assign the lower value to the preferred switch.

- For the vPC peer link, form a Layer 2 port channel from ports on different 10GE modules of the Nexus switch, with the ports in dedicated mode.

- Likewise, build the vPC peer keepalive link on ports from different 10GE modules of the Nexus switch, in a non-default VRF.

- Enable Bridge Assurance (BA) on the vPC peer-link interface (enabled by default).

- Enable UDLD aggressive mode on the vPC peer-link interface.

- On the primary vPC switch, configure the STP root bridge for each VLAN, the active HSRP router, and the PIM DR. Likewise, on the secondary vPC switch, configure the secondary STP root bridge and the standby HSRP router. The Layer 2 and Layer 3 topologies should match.
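The last practice, keeping the Layer 2 and Layer 3 roles aligned on the primary, lends itself to a simple audit. This is a minimal sketch assuming you have collected, per VLAN, which switch holds each role; the `misaligned_roles` function, the role names, and the switch names are hypothetical.

```python
def misaligned_roles(primary: str, roles_by_vlan: dict) -> list:
    """List (vlan, role, owner) entries whose owner is not the vPC primary.

    roles_by_vlan maps a VLAN ID to the switch holding each role, e.g.
    {10: {"stp_root": "n9k-1", "hsrp_active": "n9k-1", "pim_dr": "n9k-2"}}.
    """
    issues = []
    for vlan in sorted(roles_by_vlan):
        for role, owner in sorted(roles_by_vlan[vlan].items()):
            if owner != primary:
                issues.append((vlan, role, owner))
    return issues


if __name__ == "__main__":
    roles = {
        10: {"stp_root": "n9k-1", "hsrp_active": "n9k-1", "pim_dr": "n9k-2"},
        20: {"stp_root": "n9k-1", "hsrp_active": "n9k-1", "pim_dr": "n9k-1"},
    }
    print(misaligned_roles("n9k-1", roles))
```

Any entry in the result is a VLAN where a Layer 2 or Layer 3 role sits on the secondary peer, so the two topologies no longer match.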

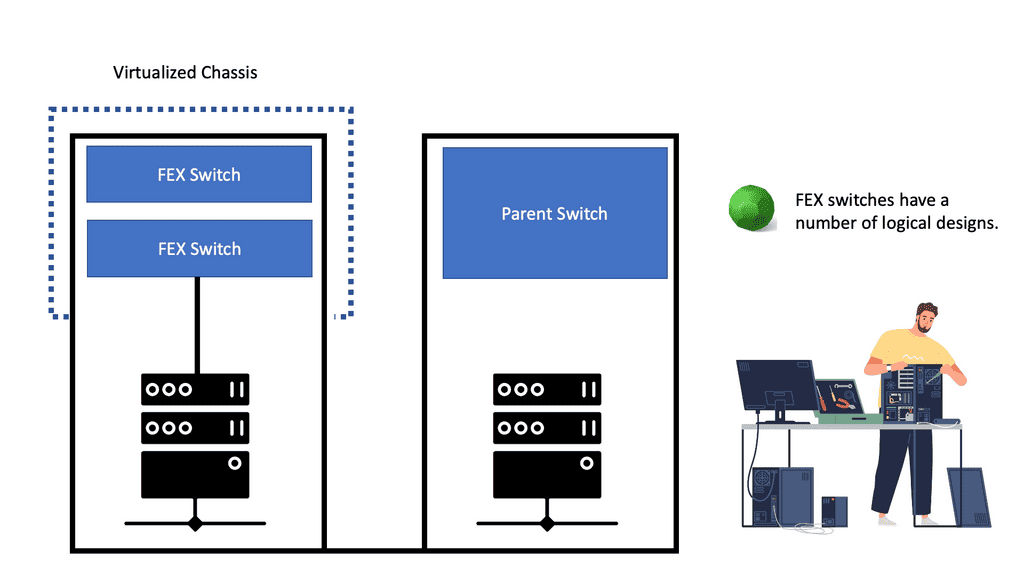

Introducing Fabric Extenders

If you want to add even more redundancy with vPC, use it with a Fabric Extender. Fabric Extenders act as a remote line card to a parent switch and can be used with vPC in three forms. The first is known as host vPC and is a vPC southbound from the FEX to the server; the second is a vPC northbound from the FEX to the parent switch, sometimes called a Fabric vPC; and the third is both a southbound and northbound vPC from the FEX which is known as Enhanced vPC.

Virtual PortChannels, the single connection

- Datacenter interconnect

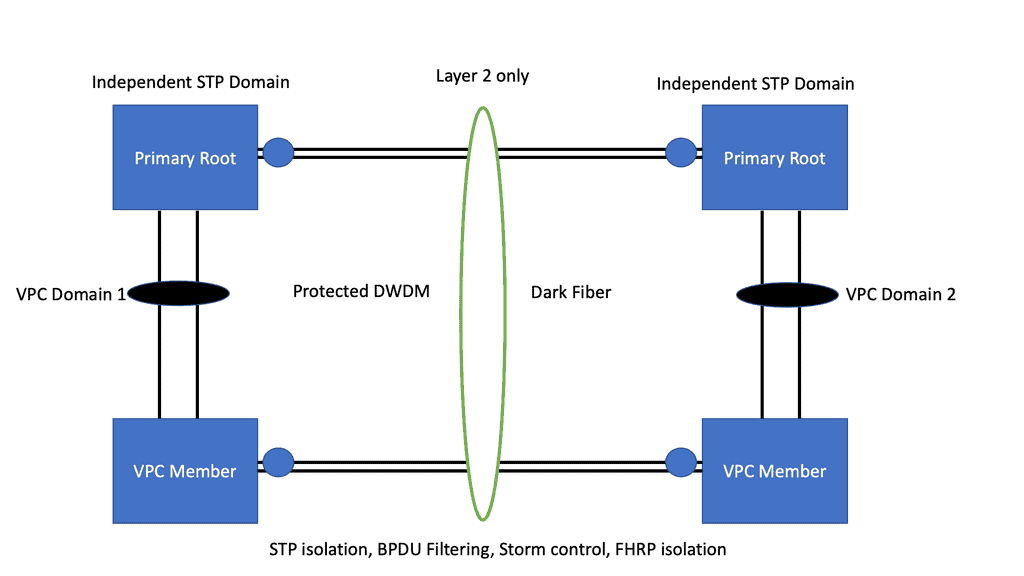

Because vPC presents a single connection to higher-level protocols such as STP or OSPF, it can be used as a Layer 2 extension for a DCI (data center interconnect), but only over short distances using dark fiber or protected DWDM. vPC best practices still apply: use a different vPC domain at each site, and carry Layer 3 communication between vPC peers on dedicated routed links.

- OTV or VPLS

If you connect more than two data centers with a full mesh topology, the best DCI mechanism would be Overlay Transport Virtualization (OTV) or VPLS ( Ethernet-based point-to-multipoint Layer 2 VPN ). vPC can work with two or more data centers, but you must design the topology as a hub-and-spoke.

Any spoke-to-spoke communication must then flow through the hub. Whether you connect two data centers back to back or two or more in a hub-and-spoke design, the Layer 2 boundary and STP isolation can be achieved with bridge protocol data unit (BPDU) filtering on the DCI links. BPDU filtering stops BPDUs from being transmitted on a link, essentially turning off STP on the DCI links. If you have Cisco ACI, you can extend with the Multi-Pod or Multi-Site designs.

- A key point: Loop prevention

vPC has a built-in loop prevention mechanism: a frame received through the peer link is never forwarded to a vPC member port. Under normal operation, a vPC peer switch should never learn MAC addresses over the peer link, which is mainly used for flooding, multicast, broadcast, and control plane traffic.

This is because the LAG is terminated on two peer switches, and you don’t want to send traffic received from a single downstream device back down to the same downstream device, resulting in a loop. However, this rule does not apply to:

- Non-vPC interface ( orphan port ) and

- vPC member ports whose vPC is active only on the receiving peer (because all its member ports on the other peer have failed).

Note: An orphan port is a port to a downstream device that is connected to only one peer.
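The rule and its two exceptions reduce to a small decision function. This is a minimal sketch of the forwarding decision only, for a frame that arrived on the peer link; the function name and the port-kind labels ("orphan", "vpc-member", "peer-link") are hypothetical, and real switches implement this in hardware.

```python
def may_forward_from_peer_link(egress_kind: str,
                               vpc_up_on_sending_peer: bool = True) -> bool:
    """Decide whether a frame received over the peer link may leave on a port."""
    if egress_kind == "orphan":
        # Single-homed device: the sending peer has no path to it.
        return True
    if egress_kind == "vpc-member":
        # The sending peer already delivered via its own member of this vPC,
        # unless all of that vPC's members on the sending peer are down.
        return not vpc_up_on_sending_peer
    if egress_kind == "peer-link":
        # Never send the frame back where it came from.
        return False
    raise ValueError(f"unknown port kind: {egress_kind}")
```

For instance, `may_forward_from_peer_link("vpc-member")` returns False under normal operation, which is exactly what stops a downstream device from receiving its own traffic twice.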

Redundant Switches: vPC peer link usage

As mentioned, the vPC peer link should not be used for end-host reachability under normal operation. However, if all members of a vPC fail on one peer, the peer link forwards frames to the vPC's remaining member ports on the other peer. This is why Cisco recommends building the peer link from 10G links with enough capacity to carry that traffic.

The peer keepalive link is also mandatory and acts as a heartbeat, carrying UDP datagrams between the peers. It helps avoid a dual-active (split-brain) scenario in which both peers are active simultaneously. If the peer link fails but heartbeats still arrive, the secondary peer suspends its vPC member ports; if no heartbeat is received within a configurable timeout either, the secondary peer assumes the primary role and all its member ports remain active.

However, an orphan port connected to only one peer can lead to undesirable behavior. For example, if the vPC peer link fails, orphan ports remain active on the secondary peer even though they are now isolated from the rest of the network. In this case, it is recommended to configure a non-vPC trunk between the peer switches.
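The secondary peer's reaction depends on the combined state of the peer link and the keepalive. This is a minimal sketch of that decision table, assuming simple up/down inputs and ignoring timers and other NX-OS refinements; `secondary_action` and its return strings are hypothetical.

```python
def secondary_action(peer_link_up: bool, keepalive_up: bool) -> str:
    """What the secondary vPC peer does for a given peer-link/keepalive state."""
    if peer_link_up:
        # Keepalive loss alone changes nothing: peers still sync over the peer link.
        return "forward normally"
    if keepalive_up:
        # Primary is alive but unreachable over the peer link: avoid dual-active.
        return "suspend vPC member ports"
    # No peer link and no heartbeat: assume the primary is gone.
    return "become primary, keep member ports active"
```

The keepalive matters most in the middle case: without it, the secondary could not tell a peer-link failure from a dead primary, and both switches might keep forwarding as primaries.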

Benefits of Virtual PortChannels:

1. Enhanced Network Performance: vPCs distribute traffic across multiple physical links, increasing overall bandwidth and reducing congestion. This improves network performance, especially in environments with high data transfer requirements.

2. Improved Redundancy and High Availability: By utilizing vPCs, organizations can eliminate single points of failure and achieve network resiliency. If a link or switch fails, traffic can seamlessly failover to the remaining active links, ensuring uninterrupted connectivity.

3. Simplified Network Management: vPCs simplify network management by treating multiple physical links as a single logical entity. This unified approach allows for more straightforward configuration, troubleshooting, and maintenance, reducing operational complexity and potential errors.

4. Scalability: As organizations grow and their network requirements evolve, vPCs offer a scalable solution. Additional member links and downstream devices can be added to the vPC domain, expanding network capacity without disrupting ongoing operations.

Implementing Virtual PortChannels:

Implementing vPCs requires careful planning and consideration. Here are some key factors to keep in mind:

1. Compatible Network Equipment: Ensure the network switches and devices used support vPC technology. Consult the manufacturer’s documentation to verify compatibility and recommended configurations.

2. vPC Peer Link: Establishing a peer link between the vPC-enabled switches is crucial for synchronization and control plane communication. This link should have sufficient bandwidth and redundancy to support the vPC domain.

3. vPC Member Ports: Determine which physical links will be part of each vPC and configure them as vPC member ports. Spread these ports across both vPC peer switches to ensure redundancy and minimize single points of failure.

4. vPC Keepalive Link: To monitor the health of vPC peers, a dedicated keepalive link is required. This link should be separate from the vPC peer link and have sufficient bandwidth to exchange keepalive messages.

Virtual PortChannels have revolutionized network connectivity, offering increased performance, redundancy, and scalability. By aggregating multiple physical links into a single logical interface, vPCs simplify network management and ensure uninterrupted connectivity.

While implementing vPCs requires careful planning and consideration, their benefits make them a valuable addition to any modern data center or network infrastructure. Remember, understanding the fundamentals and best practices of vPCs is essential for successfully implementing this technology and maximizing its benefits.

Summary: Redundant Links with Virtual PortChannels

In today’s fast-paced digital world, network reliability and performance are paramount. With the increasing demand for seamless connectivity, businesses seek innovative solutions to enhance their network infrastructure. One such solution that has gained significant traction is the implementation of redundant links with Virtual PortChannel (vPC). In this blog post, we explored the concept of redundant links and delved into the benefits and considerations of utilizing vPC technology.

Understanding Redundant Links

As the name suggests, redundant links are duplicate connections that provide failover capabilities in case of network failures. By establishing multiple links between network devices, organizations can ensure uninterrupted connectivity and minimize the risk of downtime. By distributing traffic across multiple paths, redundant links not only enhance network reliability but also improve overall network performance.

Exploring Virtual PortChannel (vPC) Technology

Virtual PortChannel (vPC) is a technology that aggregates multiple physical links into a single logical link. By bundling these links, vPC provides increased bandwidth, load balancing, and redundancy. This technology enables network devices to form a virtual port channel that presents itself as a single port to connected devices. With vPC, organizations can achieve high availability and scalability while simplifying network configuration and management.

Benefits of Redundant Links with vPC

1. Enhanced Network Availability: Redundant links with vPC ensure network availability by providing alternate paths in case of link failures. This redundancy eliminates single points of failure and minimizes the impact of network disruptions.

2. Improved Load Balancing: vPC technology optimizes network performance and prevents bottlenecks by distributing traffic across multiple links. This load-balancing capability results in the efficient utilization of network resources and an improved user experience.

3. Simplified Network Management: vPC technology simplifies network configuration and management. By logically consolidating multiple physical links, administrators can streamline their network setup, reducing complexity and potential human errors.

Considerations for Implementing Redundant Links with vPC

While the benefits of redundant links with vPC are significant, it’s essential to consider a few key factors before implementation. Factors such as network topology, hardware compatibility, and proper configuration must be thoroughly evaluated to ensure a successful deployment.

Conclusion:

In conclusion, redundant links with Virtual PortChannel (vPC) present a powerful solution for organizations aiming to enhance network reliability and performance. By combining the advantages of redundant links and virtualization, businesses can achieve high availability, improved load balancing, and simplified network management. With careful planning and consideration, implementing redundant links with vPC can pave the way for a robust and resilient network infrastructure.
