Azure ExpressRoute

In today's ever-evolving digital landscape, businesses are increasingly relying on cloud services for their infrastructure and data needs. Azure ExpressRoute, a dedicated network connection provided by Microsoft, offers a reliable and secure solution for organizations seeking direct access to Azure services. In this blog post, we will dive into the world of Azure ExpressRoute, exploring its benefits, implementation, and use cases.

Azure ExpressRoute is a private connection that allows businesses to establish a dedicated link between their on-premises network and Microsoft Azure. Unlike a regular internet connection, ExpressRoute offers higher security, lower latency, and increased reliability. By bypassing the public internet, organizations can experience enhanced performance and better control over their data.

Enhanced Performance: With ExpressRoute, businesses can achieve lower latency and higher bandwidth, resulting in faster and more responsive access to Azure services. This is especially critical for applications that require real-time data processing or heavy workloads.

Improved Security: ExpressRoute provides a private connection to Azure that does not traverse the public internet, reducing exposure to data breaches and unauthorized access. By using private connections, businesses keep tighter control over their data and can more easily maintain compliance with industry regulations.

Hybrid Cloud Integration: Azure ExpressRoute enables seamless integration between on-premises infrastructure and Azure services. This allows organizations to extend their existing network resources to the cloud, creating a hybrid environment that offers flexibility and scalability.

Provider Selection: Businesses can choose from a range of ExpressRoute providers, including major telecommunications companies and internet service providers. It is essential to evaluate factors such as coverage, pricing, and support when selecting a provider that aligns with specific requirements.

Connection Types: Azure ExpressRoute providers offer two connection types: Layer 2 (Ethernet) and Layer 3 (IPVPN). With Layer 2, you configure and manage BGP routing to Microsoft yourself, which gives you more control over routing and traffic management. With Layer 3, the provider manages routing for you, and Azure appears as another site on your WAN. Understanding the differences between these connection types is crucial for successful implementation.
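However the routing responsibility is split, an ExpressRoute peering is built from two BGP sessions, one on a primary link and one on a secondary link. The snippet below is a minimal sketch, assuming Microsoft's documented private-peering addressing convention (a /29 divided into two /30 link subnets, with the first usable address of each /30 on your router and the second on Microsoft's). The function name and the example prefix are hypothetical; check the current ExpressRoute routing requirements before relying on it.

```python
import ipaddress


def peering_addresses(block: str) -> list[dict[str, str]]:
    """Split a /29 into primary and secondary /30 link subnets.

    Assumed convention: first usable address -> customer router,
    second usable address -> Microsoft router.
    """
    network = ipaddress.ip_network(block)
    if network.prefixlen != 29:
        raise ValueError("expected a /29 block")
    links = []
    for name, subnet in zip(("primary", "secondary"), network.subnets(new_prefix=30)):
        customer, microsoft = list(subnet.hosts())  # a /30 has exactly two usable hosts
        links.append({
            "link": name,
            "subnet": str(subnet),
            "customer": str(customer),
            "microsoft": str(microsoft),
        })
    return links


# Example prefix is illustrative only.
for link in peering_addresses("192.168.100.128/29"):
    print(link)
```

Keeping both links in one /29 makes it easy to see that the primary and secondary sessions are a pair; losing either one should leave the other carrying traffic.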

Global Enterprises: Large organizations with geographically dispersed offices can leverage Azure ExpressRoute to establish a private, high-speed connection to Azure services. This ensures consistent performance and secure data transmission across multiple locations.

Data-Intensive Applications: Industries dealing with massive data volumes, such as finance, healthcare, and research, can benefit from ExpressRoute's dedicated bandwidth. By bypassing the public internet, these organizations can achieve faster data transfers and real-time analytics.

Compliance and Security Requirements: Businesses operating in highly regulated industries, such as banking or government sectors, can use Azure ExpressRoute to help meet stringent compliance requirements. The private connection helps protect data privacy and integrity and supports adherence to industry-specific regulations.

Conclusion: Azure ExpressRoute opens up a world of possibilities for businesses seeking a secure, high-performance connection to the cloud. By leveraging dedicated network links, organizations can unlock the full potential of Azure services while maintaining control over their data and ensuring compliance. Whether it's enhancing performance, improving security, or enabling hybrid cloud integration, ExpressRoute proves to be a valuable asset in today's digital landscape.

Matt Conran

Highlights: Azure ExpressRoute

Azure Networking

Using Azure Networking, you can connect your on-premises data center to the cloud using fully managed and scalable networking services. Azure networking services allow you to build a secure virtual network infrastructure, manage your applications’ network traffic, and protect them from DDoS attacks. In addition to enabling secure remote access to internal resources within your organization, Azure network resources can also be used to monitor and secure your network connectivity globally.

Connectivity with Azure Networking Services

With Azure, complex network architectures can be supported by robust, fully managed, and dynamic network infrastructure. A hybrid network solution combines on-premises and cloud infrastructure, letting you publish network services for public access while keeping application networks secure.

Azure Virtual Network

Azure Virtual Networks (Azure VNets) are the fundamental building block for networks within the Azure infrastructure. They are used to manage Azure resources and to connect them securely to external networks, both public (the Internet) and on-premises.

Azure VNet goes beyond traditional on-premises networks. In addition to isolation, high availability, and scalability, it helps secure your Azure resources by letting you administer, filter, and route traffic based on your preferences.

Peering between Azure VNets

Peering between Azure Virtual Networks (VNets) allows you to connect several virtual networks. Traffic between VMs in peered virtual networks travels privately over Microsoft's backbone infrastructure, so resources in the two networks can share data and communicate directly.

Azure supports two types of peering: VNet peering, which connects virtual networks within the same Azure region, and global VNet peering, which connects virtual networks across Azure regions.
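The distinction between the two peering types, plus the general rule that peered VNets must not have overlapping address spaces, can be checked before deployment. The snippet below is a minimal sketch of such a pre-flight check; the function names and example regions and prefixes are hypothetical, and it does not call any Azure API.

```python
import ipaddress


def peering_type(region_a: str, region_b: str) -> str:
    """Same region -> VNet peering; different regions -> global VNet peering."""
    if region_a.strip().lower() == region_b.strip().lower():
        return "VNet peering"
    return "global VNet peering"


def can_peer(space_a: list[str], space_b: list[str]) -> bool:
    """Return False if any address prefix in one VNet overlaps the other."""
    nets_a = [ipaddress.ip_network(p) for p in space_a]
    nets_b = [ipaddress.ip_network(p) for p in space_b]
    return not any(a.overlaps(b) for a in nets_a for b in nets_b)


# Illustrative values only.
print(peering_type("westeurope", "northeurope"))
print(can_peer(["10.1.0.0/16"], ["10.2.0.0/16"]))
print(can_peer(["10.1.0.0/16"], ["10.1.128.0/24"]))
```

Planning non-overlapping address spaces up front matters because re-addressing a VNet after workloads are deployed is far more disruptive than choosing prefixes carefully at design time.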

A virtual wide area network powered by Azure

Azure Virtual WAN is a managed networking service, built on the Azure global network, that brings networking, security, and routing features together. Connectivity options include site-to-site VPN, point-to-site (remote user) VPN, and ExpressRoute.

For users working from home or other remote locations, Virtual WAN provides remote user connectivity to the Internet and to Azure resources. Organizations can also use Azure Virtual WAN when moving existing infrastructure or data centers from on-premises to Microsoft Azure.

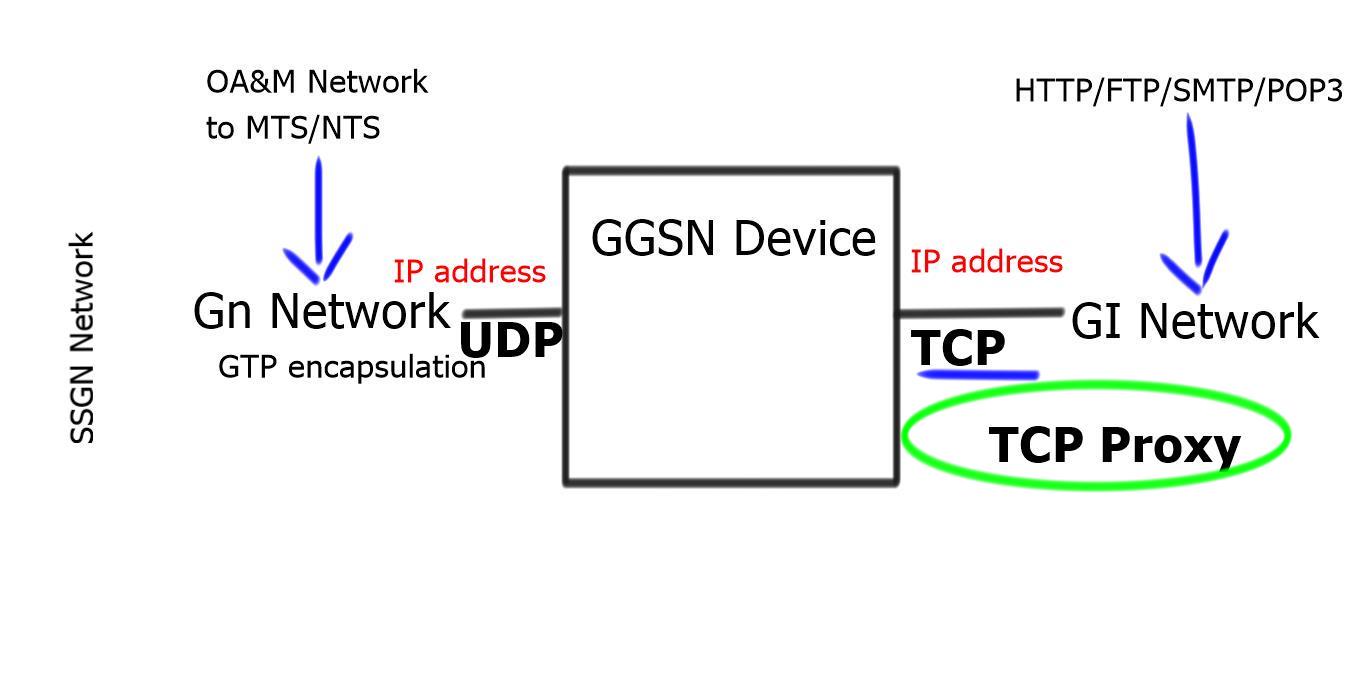

ExpressRoute Azure

Using Azure ExpressRoute, you can extend on-premises networks into Microsoft's cloud infrastructure over a private connection. For example, you can connect through an IP VPN network that provides Layer 3 connectivity, which attaches Azure to your own WAN or on-premises data center.

Because the connection is private, ExpressRoute traffic does not go over the public internet. Compared to typical internet connections, ExpressRoute connections offer more reliability, faster speeds, more consistent latency, and higher security.

Connecting to the Cloud

The following post details Azure ExpressRoute and AWS Direct Connect. We will address Azure ExpressRoute redundancy and compare it to the Barracuda product, which uses a different tunneling method from Azure ExpressRoute. There is increasing talk about the cloud, what it can do for business, and how you connect to it. Any cloud can be reached either over the untrusted Internet or through a private, direct connection.

Direct Connectivity

For direct connectivity, AWS has a product known as AWS Direct Connect, and Microsoft has a competing product known as Azure ExpressRoute. Both have the same end goal: connectivity between cloud and on-premises endpoints that does not go over the Internet. At the time of writing, however, Microsoft's ExpressRoute offers more flexibility in terms of geographical connectivity.

You may find the following posts helpful for background:

- Load Balancer Scaling

- IDS IPS Azure

- Low Latency Network Design

- Data Center Performance

- Baseline Engineering

- WAN SDN

- Technology Insight for Microsegmentation

- SDP VPN

Back to basics with Azure VPN gateway

When you deploy an Azure VPN gateway with its default settings, two gateway instances are configured in an active-standby configuration. The standby instance provides partial redundancy but is not highly available, because it might take a few minutes for the second instance to come online and reconnect to the VPN destination.

Even at this lower level of redundancy, you can choose whether the VPN gateway is regionally redundant or zone-redundant. If you use a Basic public IP address, the gateway can only be regionally redundant. If you require a zone-redundant configuration, use a Standard public IP address with the VPN gateway.
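The public IP rule described here can be captured as a small planning check. This is a minimal sketch that encodes only the Basic versus Standard public IP rule stated in this section; the function name is hypothetical, and it does not validate gateway SKUs or any other deployment prerequisite.

```python
def plan_vpn_gateway(public_ip_sku: str, want_zone_redundant: bool) -> str:
    """Return the redundancy mode a VPN gateway can use for a public IP SKU.

    Encodes only this rule: Basic -> regional only;
    Standard -> regional or zone-redundant.
    """
    sku = public_ip_sku.strip().lower()
    if sku not in ("basic", "standard"):
        raise ValueError(f"unknown public IP SKU: {public_ip_sku!r}")
    if want_zone_redundant and sku == "basic":
        raise ValueError("zone redundancy requires a Standard public IP address")
    return "zone-redundant" if want_zone_redundant else "regional"


print(plan_vpn_gateway("Standard", want_zone_redundant=True))
print(plan_vpn_gateway("Basic", want_zone_redundant=False))
```

Failing loudly on an impossible combination is the point: it is cheaper to catch a Basic IP in a design review than after the gateway is built.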

Benefits of Azure ExpressRoute:

1. Enhanced Performance: ExpressRoute offers predictable network performance with low latency and high bandwidth, allowing organizations to meet their demanding application requirements. By bypassing the public internet, organizations can reduce network congestion and improve overall application performance.

2. Improved Security: ExpressRoute provides a private connection, so traffic between the organization's network and Azure does not cross the public internet. This reduces the risks associated with transmitting data over the public internet, such as data breaches and unauthorized access. Furthermore, ExpressRoute supports network isolation, giving organizations tighter control over their data.

3. Reliability and Availability: Azure ExpressRoute offers a Service Level Agreement (SLA) that guarantees a high level of availability, with uptime percentages ranging from 99.9% to 99.99%. This ensures organizations can rely on a stable connection to Azure services, minimizing downtime and maximizing productivity.

4. Cost Optimization: ExpressRoute helps organizations optimize costs by reducing data transfer costs and providing more predictable pricing models. With dedicated connectivity, businesses can avoid unpredictable network costs associated with public internet connections.
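To put availability percentages like 99.9% and 99.99% in concrete terms, the sketch below converts an SLA percentage into the maximum downtime it allows over a 30-day month. It is plain arithmetic under that assumed period; how Microsoft actually measures downtime and credits is defined in the SLA documents themselves, not here.

```python
def max_downtime_minutes(sla_percent: float, period_minutes: float = 30 * 24 * 60) -> float:
    """Maximum downtime permitted by an availability SLA over a period.

    Default period: a 30-day month (43,200 minutes).
    """
    return period_minutes * (1 - sla_percent / 100)


for sla in (99.9, 99.99):
    print(f"{sla}% -> {max_downtime_minutes(sla):.1f} minutes per 30-day month")
```

Each extra "nine" cuts the allowed downtime by a factor of ten, from roughly 43 minutes to roughly 4 minutes a month, which is why the higher availability figures usually require redundant designs rather than a single connection.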

Use Cases of Azure ExpressRoute:

1. Hybrid Cloud Connectivity: Organizations with a hybrid cloud infrastructure, combining on-premises resources with cloud services, can use ExpressRoute to establish a secure connection between their environments. This enables smooth data transfer, application migration, and hybrid identity management.

2. Data-Intensive Workloads: ExpressRoute is particularly beneficial for organizations dealing with large volumes of data or running data-intensive workloads. By leveraging the high-bandwidth connection, organizations can transfer data quickly and efficiently, ensuring optimal performance for analytics, machine learning, and other data-driven processes.

3. Compliance and Data Sovereignty: Industries with strict compliance requirements, such as finance, healthcare, and government sectors, can benefit from ExpressRoute's ability to keep their traffic off the public internet. This helps with compliance with data protection regulations and supports data sovereignty, addressing data privacy and residency concerns.

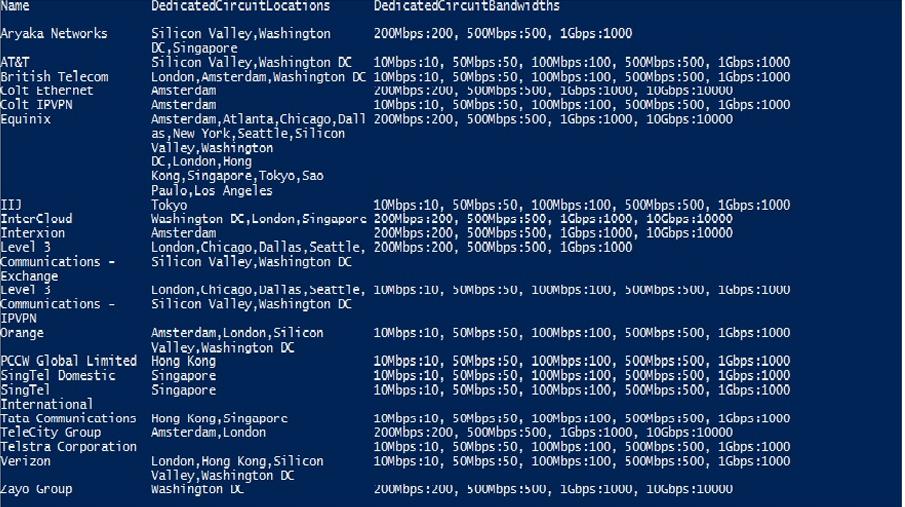

The following table lists ExpressRoute locations:

Azure ExpressRoute and Encryption

Azure ExpressRoute does not encrypt traffic by default. For this reason, you should investigate Barracuda's cloud security product set. It offers secure transmission and automatic path failover via redundant, secure tunnels to complete an end-to-end cloud solution. Other third-party security products are available in Azure but are not as mature as Barracuda's product set.

Internet Performance

Connecting to the Azure public cloud over the Internet may be cheap, but it has drawbacks in security, uptime, latency, packet loss, and jitter. The latency, jitter, and packet loss associated with the Internet often degrade application performance. This is primarily a concern if you support hybrid applications that require real-time communication with on-premises back ends.

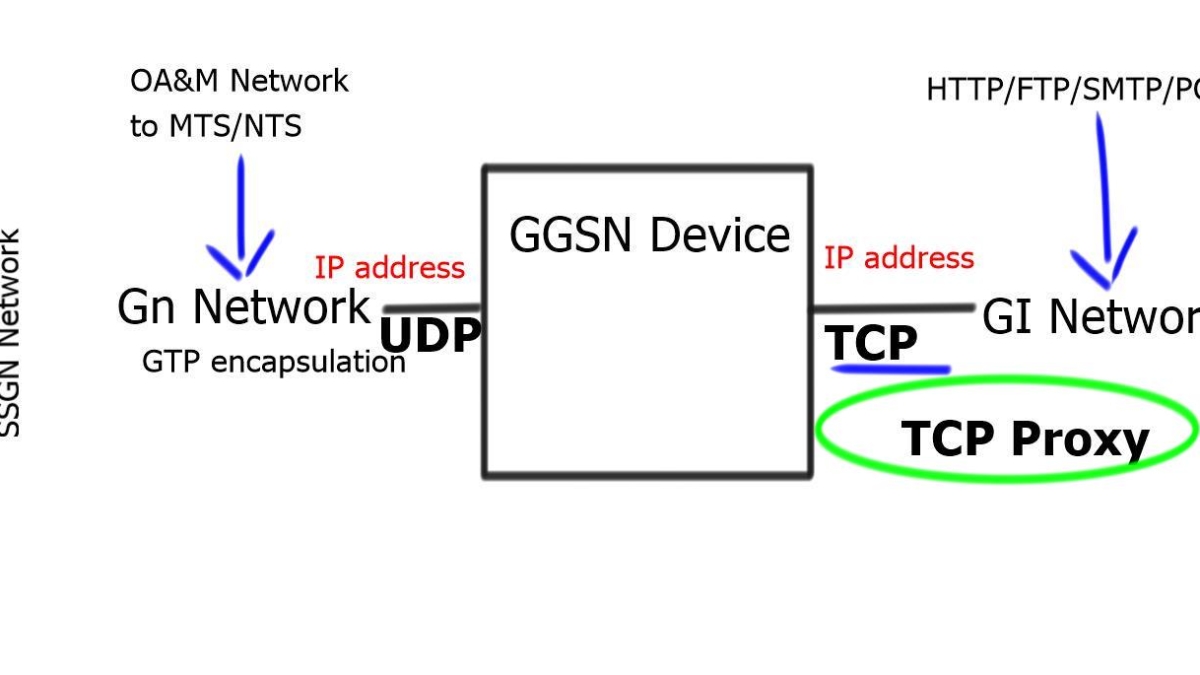

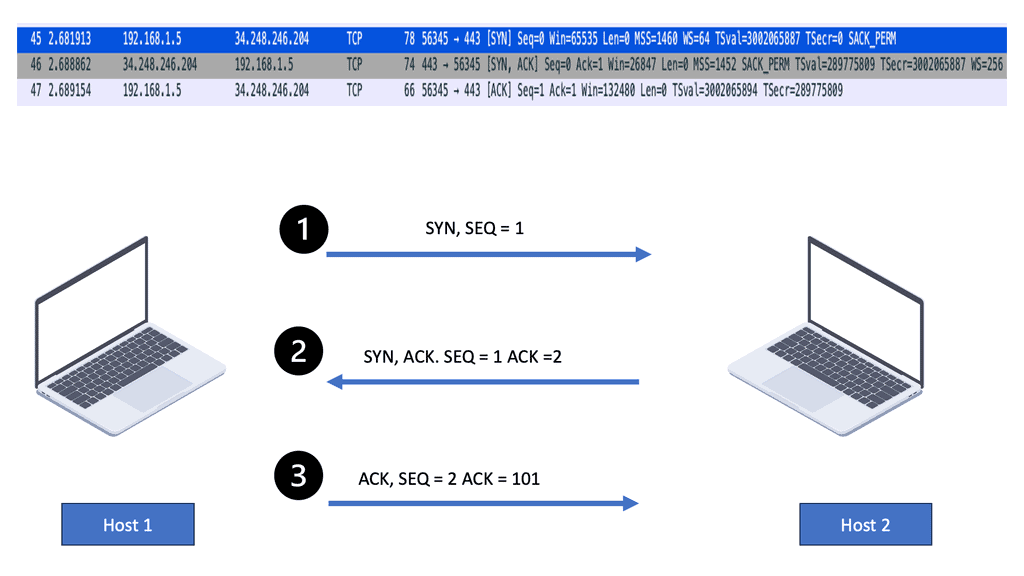

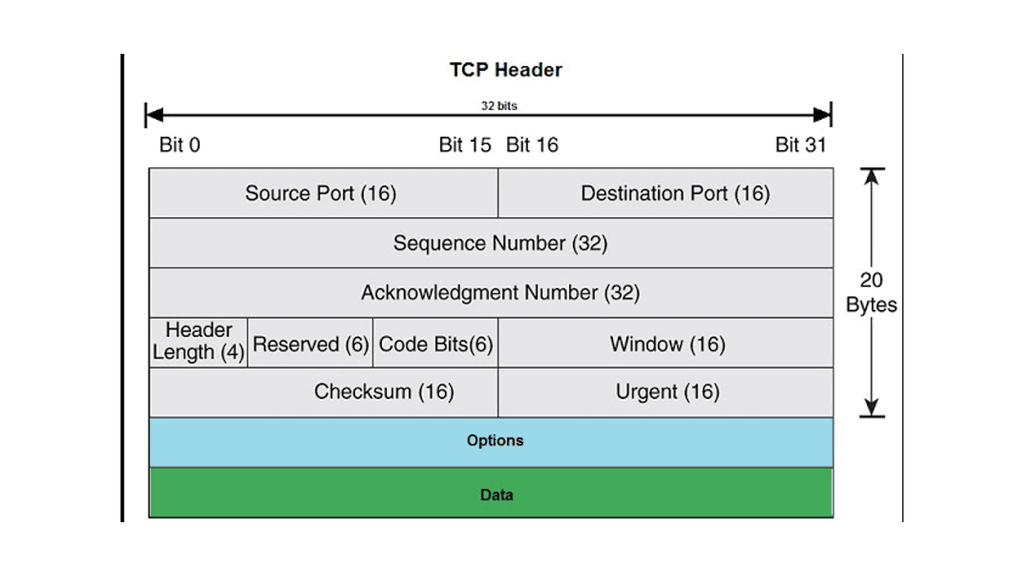

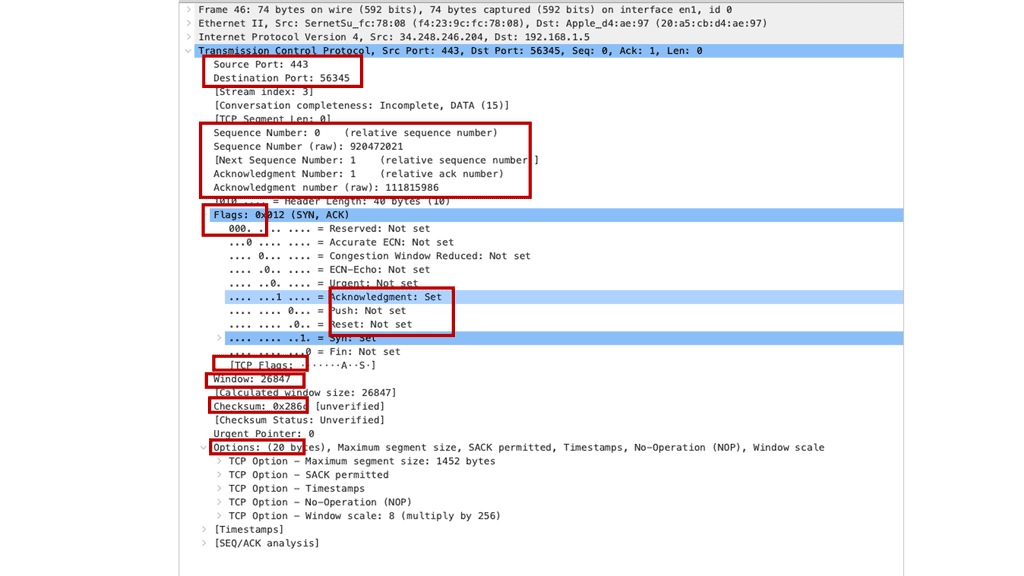

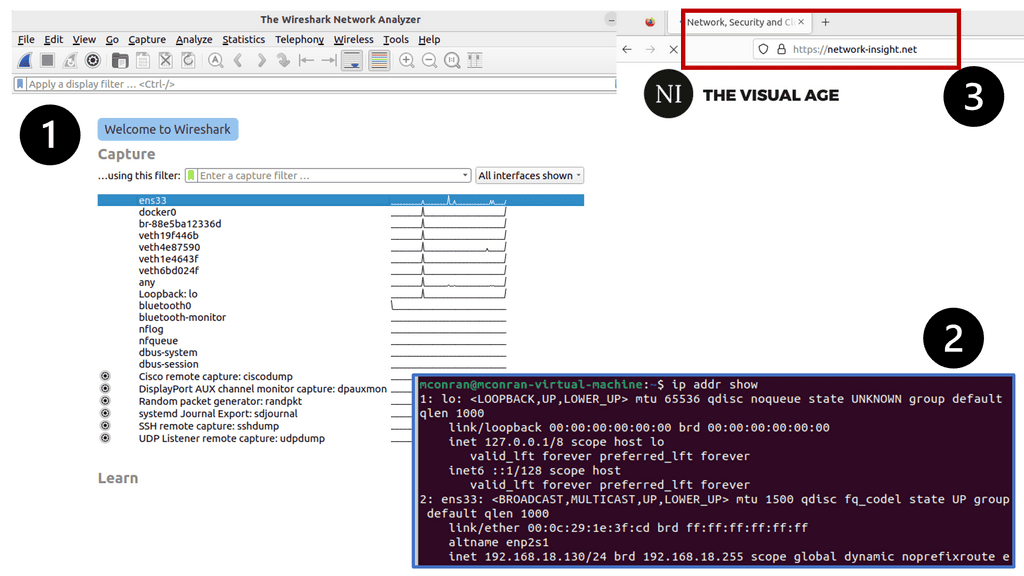

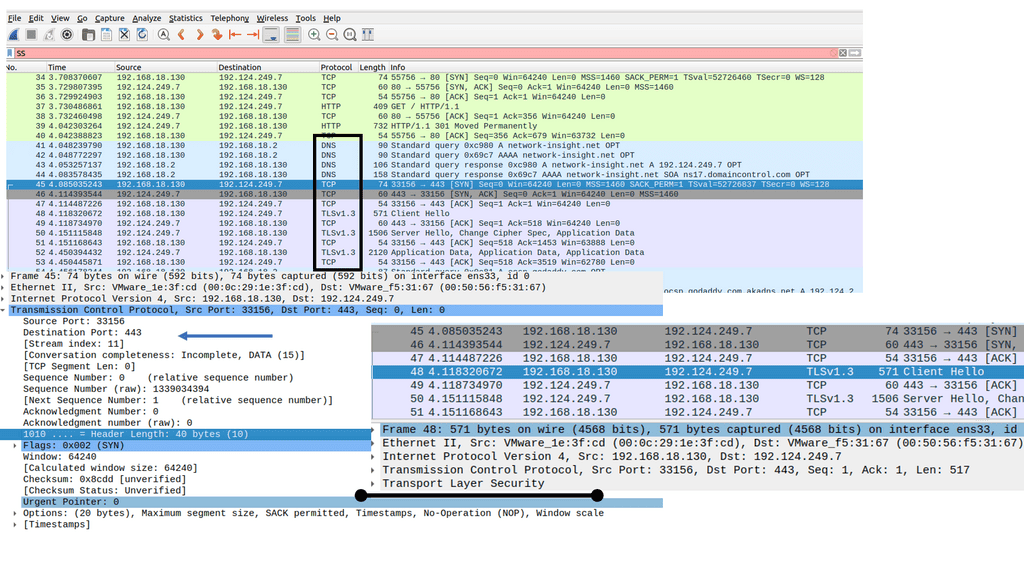

Transport network performance directly impacts application performance. Businesses now face new challenges when accessing applications in the cloud over the Internet, and round-trip time (RTT) is a big concern. TCP spends RTTs just setting up: one RTT for the three-way handshake and another for the request, so the client waits about two RTTs before it receives the first data byte.
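The two-RTT cost described above scales directly with the path RTT, and chatty hybrid applications multiply it. The sketch below is a rough model under stated assumptions: it ignores TLS, DNS, packet loss, and retransmissions, and the RTT values are hypothetical placeholders rather than measurements of any real Internet or ExpressRoute path.

```python
def time_to_first_byte_ms(rtt_ms: float, server_ms: float = 0.0) -> float:
    """Rough time to first byte on a new TCP connection.

    One RTT for the three-way handshake plus one RTT for the request and
    the first byte of the response, plus server processing time.
    """
    handshake_rtts = 1
    request_rtts = 1
    return (handshake_rtts + request_rtts) * rtt_ms + server_ms


def sequential_calls_ms(calls: int, rtt_ms: float) -> float:
    """Network wait for `calls` back-to-back requests on an open connection."""
    return calls * rtt_ms


# Hypothetical RTTs for illustration only; measure your own paths.
for label, rtt in (("internet", 80.0), ("private circuit", 20.0)):
    print(f"{label}: TTFB ~{time_to_first_byte_ms(rtt):.0f} ms, "
          f"50 sequential calls ~{sequential_calls_ms(50, rtt):.0f} ms")
```

The model is deliberately crude, but it shows why RTT rather than raw bandwidth often dominates the experience of a hybrid application that makes many small round trips to an on-premises back end.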

Large client-side cookies may also add delay if the request no longer fits in the first data packet. A transport network with good RTT is essential for application performance: you need to move packets as quickly as possible and support the concept that “every packet counts.”

- The Internet does not provide this or offer any guaranteed Service Level Agreement (SLA) for individual traffic classes.
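To see when a large request starts costing extra round trips, the sketch below models idealized TCP slow start: the sender may transmit an initial window of segments in the first round trip and doubles the window each RTT. The MSS of 1460 bytes and the initial window of 10 segments are common defaults assumed here, not values from this post; real stacks grow the window per ACK and are also limited by the receiver window and loss.

```python
import math


def extra_rtts_for_request(request_bytes: int, mss: int = 1460, initial_window: int = 10) -> int:
    """RTTs beyond the first needed to send a request under idealized slow start."""
    segments = math.ceil(request_bytes / mss)
    rounds, sent, cwnd = 0, 0, initial_window
    while sent < segments:
        sent += cwnd   # one flight of segments per round trip
        cwnd *= 2      # idealized doubling each RTT
        rounds += 1
    return max(rounds - 1, 0)


# Request sizes (headers plus cookies) are illustrative.
for size in (1_000, 20_000):
    for window in (10, 4):
        print(f"{size} bytes, initial window {window}: "
              f"+{extra_rtts_for_request(size, initial_window=window)} RTT")
```

Each extra round trip is paid at the full path RTT, so the same oversized request costs more on a high-latency Internet path than on a low-latency private one.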

The Azure solution – Azure ExpressRoute & Telecity Cloud-IX

With Microsoft Azure ExpressRoute, you get your own private connection to Azure with a guaranteed SLA. It acts like a natural extension of your data center, offering lower latency, higher throughput, and better reliability than the Internet. You can now build applications spanning on-premises infrastructure and the Azure cloud without compromising performance. ExpressRoute bypasses the Internet and lets you connect your on-premises data center to your cloud data center via third-party MPLS networks.

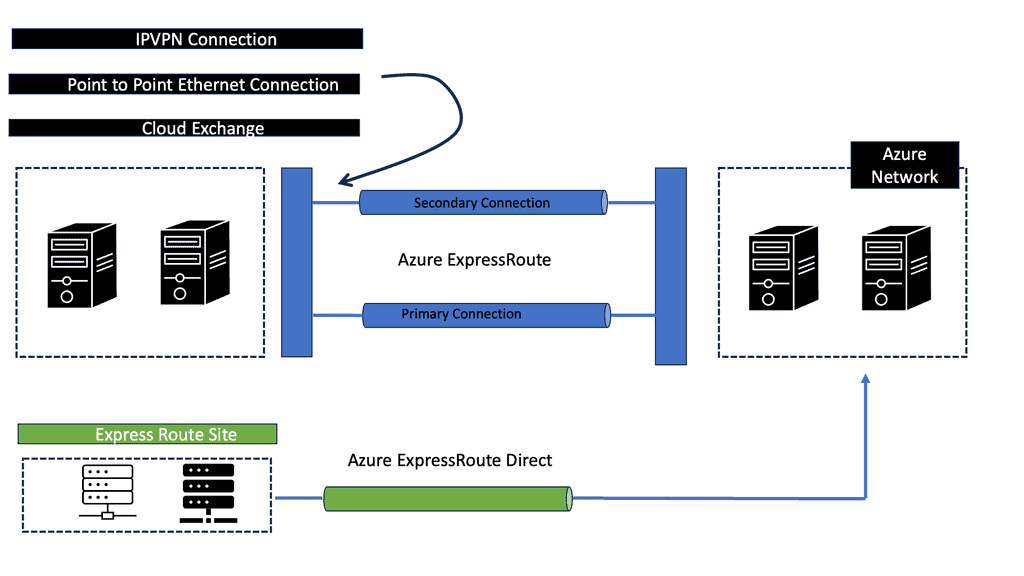

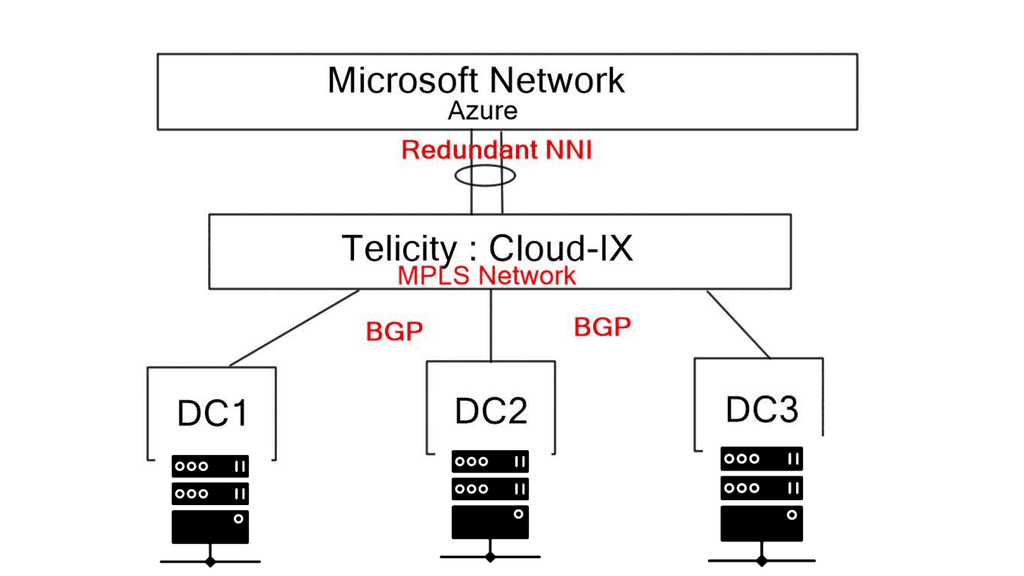

There are two ways to establish your private connection to Azure with ExpressRoute: through an Exchange Provider or through a Network Service Provider. Choose the Exchange Provider method if you want to co-locate equipment. Companies like Telecity offer a “bridging product” that enables direct connectivity from your WAN to Azure via their MPLS network. Even though Telecity is an exchange provider, its network offerings work like those of a network service provider. Its bridging product, called Cloud-IX, makes the Azure cloud look like another terrestrial data center.

Cloud-IX is a neutral cloud ecosystem that allows enterprises to establish private connections to cloud service providers, not just Azure. The Telecity Cloud-IX network already has redundant NNI peering to Microsoft data centers, so you only set up your peering connections to Cloud-IX, using BGP or static routes. You don't peer directly with Azure; Telecity and Cloud-IX take care of transport security and redundancy. Cloud-IX is likely an MPLS network that uses route targets (RTs) and route distinguishers (RDs) to separate and distinguish customer traffic.
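Since the internals of Cloud-IX are only guessed at here, the sketch below illustrates the generic MPLS L3VPN idea behind RDs and RTs (as in BGP/MPLS IP VPNs), not Telecity's actual implementation. The RD and RT values, class, and function names are all hypothetical. The RD makes two customers' identical prefixes distinct in the provider's table, and the RT decides which customer VRF imports which routes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VpnRoute:
    rd: str                         # route distinguisher, e.g. "65000:1"
    prefix: str                     # customer IPv4 prefix
    route_targets: frozenset[str]   # export RTs attached to the route

    @property
    def vpn_key(self) -> str:
        # RD + prefix keeps overlapping customer prefixes unique in the provider table.
        return f"{self.rd}:{self.prefix}"


def import_into_vrf(routes: list[VpnRoute], import_rts: set[str]) -> list[VpnRoute]:
    """A VRF imports only routes carrying at least one of its import RTs."""
    return [r for r in routes if r.route_targets & import_rts]


# Two customers advertising the same private prefix (hypothetical values).
routes = [
    VpnRoute("65000:1", "10.0.0.0/16", frozenset({"65000:100"})),  # customer A
    VpnRoute("65000:2", "10.0.0.0/16", frozenset({"65000:200"})),  # customer B
]

print(sorted(r.vpn_key for r in routes))
print([r.rd for r in import_into_vrf(routes, {"65000:100"})])
```

The separation is logical, not physical: both customers' routes live on the same provider routers, which is why the later security discussion recommends adding your own protection on top.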

Azure ExpressRoute Redundancy

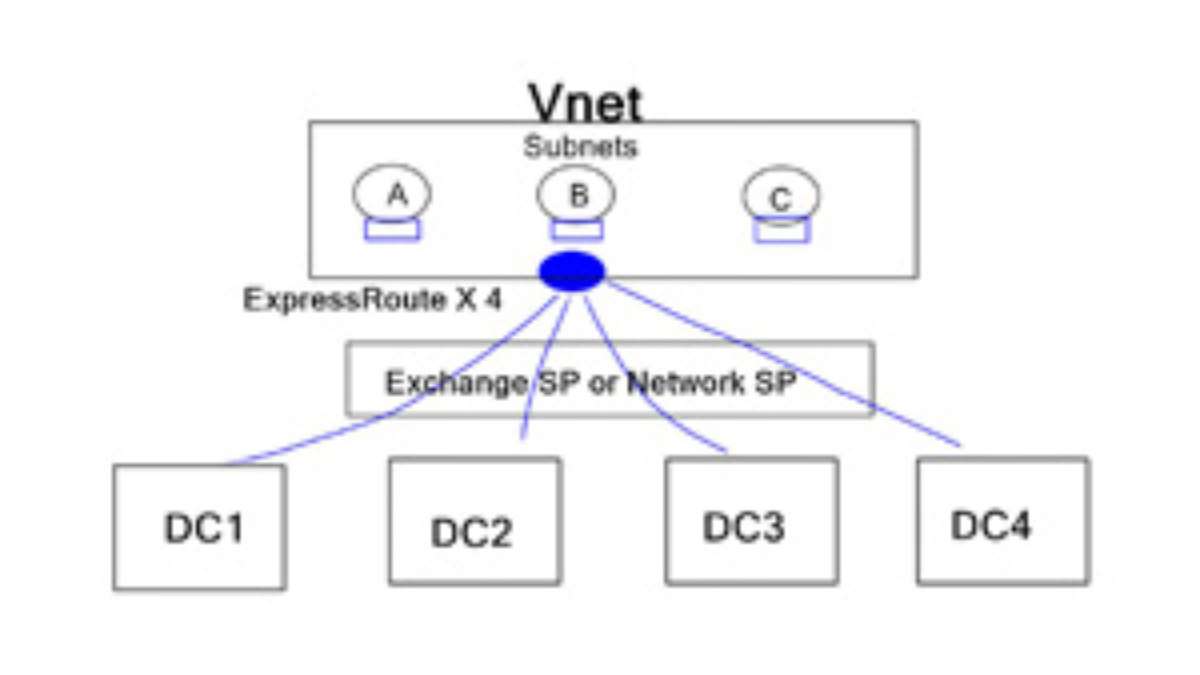

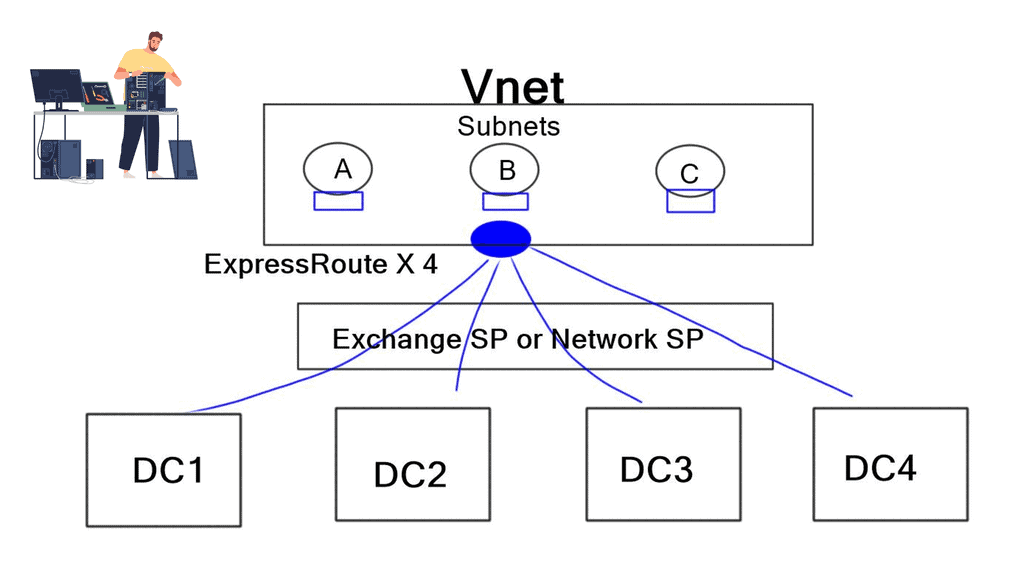

The introduction of VNets

Layer 3 overlays called VNets (cloud boundaries/subnets) can now be associated with up to four ExpressRoute circuits. This enables a proper active-active data center design with path diversity and resilient connectivity. This is great for designers, as it means we can build true geo-resilience into ExpressRoute designs by creating two ExpressRoute “dedicated circuits” and associating each virtual network with both.

This builds end-to-end resilience into the Azure ExpressRoute configuration and removes geographic single points of failure (SPOFs). ExpressRoute connections are created between the Exchange Service Provider or Network Service Provider and the Microsoft cloud. The connectivity between customers' on-premises locations and the service provider is provisioned independently of ExpressRoute. Microsoft only peers with service providers.
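The benefit of associating a VNet with two dedicated circuits can be estimated with the standard formula for redundant components in parallel: the combined path is down only when every circuit is down. The sketch below assumes failures are independent, which is the optimistic case; circuits sharing a provider, building, or conduit fail together more often. The 99.9% figure is illustrative only.

```python
def parallel_availability(*availabilities: float) -> float:
    """Availability when any one of N independent paths is sufficient.

    A = 1 - product(1 - a_i), assuming independent failures.
    """
    if not availabilities:
        raise ValueError("need at least one path")
    unavailable = 1.0
    for a in availabilities:
        unavailable *= (1.0 - a)
    return 1.0 - unavailable


single = 0.999                                   # illustrative per-circuit availability
dual = parallel_availability(single, single)
print(f"one circuit: {single:.6f}, two circuits: {dual:.6f}")
```

The gain only holds if the circuits really are diverse, which is why the geographic separation described above matters as much as the second circuit itself.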

Barracuda NG Firewall & Azure ExpressRoute

Barracuda NG Firewall adds protection to Microsoft ExpressRoute. The NG is installed at both ends of the connection and offers traffic access control, security features, low latency, and automatic path failover using TINA, Barracuda's proprietary transport protocol.

Traffic Access Control: From the IP layer to the application layer, the NG Firewall gives you complete visibility into traffic flows in and out of ExpressRoute.

With visibility, you get better control of the traffic. The NG Firewall also lets you log what servers are doing outbound. This matters if a server in Azure is compromised: you want to know what the attacker is doing outbound from it. Analytics let you contain that activity or log it. When you are attacked, you need to know what traffic the attacker generates and whether they are pivoting to other servers.

There have been security concerns about the number of administrative domains that ExpressRoute spans. Because your traffic is only logically separated on physical routers shared with other customers, you should implement your own security measures. The NG encrypts traffic end to end between the two endpoints. This encryption can be customized to your requirements; for example, the transport may be TCP, UDP, or hybrid, and you have complete control over the keys and algorithms.

Preserve low latency

Preserve low latency for applications that require a high quality of service. The NG can apply quality of service (QoS) based on ports and applications, giving business-critical applications better service. It also optimizes traffic by automatically sending bulk traffic over the Internet and keeping critical traffic on the low-latency path.
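The split between critical and bulk traffic can be pictured as a two-step policy: classify each flow by application or port, then map the class to a transport. The sketch below is a generic illustration, not Barracuda's configuration model; the application names, ports, class names, and the choice to keep unclassified traffic on the private path are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    app: str
    dst_port: int


# Hypothetical policy tables; adjust to your own applications.
CRITICAL_APPS = {"erp", "voip", "sql"}
CRITICAL_PORTS = {1433, 5060}          # e.g. SQL Server, SIP
BULK_APPS = {"backup", "software-update"}

# Class -> path mapping. Keeping unclassified traffic on the private
# path is an assumption, not something the text specifies.
PATH_FOR_CLASS = {"critical": "expressroute", "bulk": "internet", "default": "expressroute"}


def classify(flow: Flow) -> str:
    """Critical rules win over bulk rules; everything else is default."""
    if flow.app in CRITICAL_APPS or flow.dst_port in CRITICAL_PORTS:
        return "critical"
    if flow.app in BULK_APPS:
        return "bulk"
    return "default"


def choose_path(flow: Flow) -> str:
    return PATH_FOR_CLASS[classify(flow)]


for f in (Flow("voip", 5060), Flow("backup", 10000), Flow("web", 443)):
    print(f.app, "->", choose_path(f))
```

Separating classification from path selection keeps the policy readable: changing where bulk traffic goes is a one-line edit to the mapping rather than a rewrite of the matching rules.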

Automatic transport link failover with TINA: Upon MPLS link failure, the NG can automatically switch to an Internet-based transport and continue to pass traffic to the Azure gateway. It automatically creates a secure tunnel over the Internet without packet drops, offering a graceful failover to an Internet VPN. Multiple links can also be active-active, which makes the WAN edge resemble an SD-WAN edge with a transport-agnostic failover approach.
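At its simplest, transport failover means sending traffic on the most preferred healthy link and falling back when that link fails. The sketch below shows only that priority-based selection; it is not TINA and does not model health probing, tunnel setup, or the in-flight packet handling that a product needs for a hitless failover. The link names and priority values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transport:
    name: str
    priority: int   # lower value = preferred
    healthy: bool


def active_transport(transports: list[Transport]) -> Optional[Transport]:
    """Pick the healthy transport with the best (lowest) priority, or None."""
    candidates = [t for t in transports if t.healthy]
    return min(candidates, key=lambda t: t.priority, default=None)


links = [
    Transport("mpls", priority=10, healthy=True),
    Transport("internet-vpn", priority=20, healthy=True),
]
print(active_transport(links).name)   # MPLS preferred while it is up

links[0].healthy = False              # simulate an MPLS failure
print(active_transport(links).name)   # traffic moves to the Internet VPN
```

In an active-active design, both links would carry traffic at the same time and the priority would decide which flows move when one link fails, rather than which single link is in use.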

TINA is SSL-based rather than IPsec-based, and it runs over TCP, UDP, or ESP. Because Azure supports only TCP and UDP, TINA can run across the Microsoft fabric using its TCP or UDP transports.

Summary: Azure ExpressRoute

In today’s rapidly evolving digital landscape, businesses seek ways to enhance cloud connectivity for seamless data transfer and improved security. One such solution is Azure ExpressRoute, a private and dedicated network connection to Microsoft Azure. In this blog post, we delved into the various benefits of Azure ExpressRoute and how it can revolutionize your cloud experience.

Understanding Azure ExpressRoute

Azure ExpressRoute is a service that allows organizations to establish a private and dedicated connection to Azure, bypassing the public internet. This direct pathway provides a more reliable, more secure, lower-latency connection for data and application traffic.

Enhanced Security and Data Privacy

With Azure ExpressRoute, organizations can significantly enhance security by keeping their data off the public internet. A private connection reduces the exposure of sensitive information to internet-borne threats and supports data privacy and compliance with industry regulations.

Improved Performance and Reliability

The dedicated nature of Azure ExpressRoute ensures a high-performance connection with consistent network latency and minimal packet loss. By bypassing the public internet, organizations can achieve faster data transfer speeds, reduced latency, and enhanced user experience.

Hybrid Cloud Enablement

Azure ExpressRoute enables seamless integration between on-premises infrastructure and the Azure cloud environment. This makes it an ideal solution for organizations adopting a hybrid cloud strategy, allowing them to leverage the benefits of both environments without compromising on security or performance.

Flexible Network Architecture

Azure ExpressRoute offers flexibility in network architecture, allowing organizations to choose from multiple connectivity options. Whether establishing a direct connection from their data center or utilizing a colocation facility, organizations can design a network setup that best suits their requirements.

Conclusion:

Azure ExpressRoute provides businesses with a direct and dedicated pathway to the cloud, offering enhanced security, improved performance, and flexibility in network architecture. By leveraging Azure ExpressRoute, organizations can unlock the full potential of their cloud infrastructure and accelerate their digital transformation journey.